Reproducibility (the ability to recompute results) and replicability (the chance that independent experimenters will achieve a consistent result) are two foundational characteristics of successful scientific research. Consistent findings from independent investigators are the primary means by which scientific evidence accumulates for or against a hypothesis. Yet researchers have recently faced a crisis of confidence, driven by worries that too few studies are reproducible or replicable. To maintain the integrity of scientific research and the public’s trust in science, the scientific community must ensure reproducibility and replicability by adopting a more preventative approach: one that greatly expands data analysis education and routinely uses software tools shown to improve reproducibility and replicability.

We define reproducibility as the ability to recompute data analytic results given an observed dataset and knowledge of the data analysis pipeline. The replicability of a study is the chance that an independent experiment targeting the same scientific question will produce a consistent result (1). Concerns among scientists about both have gained significant traction recently, due in part to a statistical argument suggesting that most published scientific results may be false positives (2). At the same time, there have been some very public failures of reproducibility across disciplines ranging from cancer genomics (3) to economics (4), and the data for many publications have not been made publicly available, raising doubts about the quality of the underlying analyses. Popular press articles have questioned the reproducibility of all scientific research (5), and the US Congress has convened hearings focused on the transparency of scientific research (6). As a result, much of the scientific enterprise has been called into question, putting funding and hard-won scientific truths at risk.
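To make the definition concrete, here is a minimal sketch, assuming Python and a toy analysis. The function names `analysis_pipeline` and `fingerprint`, the fixed seed, and the eight data values are hypothetical illustrations, not taken from any study cited here. The point is only that, given the same observed data and full knowledge of the pipeline (including its random seed), anyone can recompute exactly the same result.

```python
import hashlib
import json
import random
import statistics


def analysis_pipeline(data, seed=2015, n_boot=1000):
    """Hypothetical pipeline: sample mean plus a percentile bootstrap 95% interval."""
    rng = random.Random(seed)  # fixed seed makes the resampling step recomputable
    boot_means = []
    for _ in range(n_boot):
        resample = [rng.choice(data) for _ in data]  # sample with replacement
        boot_means.append(statistics.fmean(resample))
    boot_means.sort()
    # Integer arithmetic avoids floating-point surprises in the percentile indices.
    lower = boot_means[n_boot * 25 // 1000]
    upper = boot_means[n_boot * 975 // 1000 - 1]
    return {"mean": statistics.fmean(data), "ci95": [lower, upper]}


def fingerprint(result):
    """SHA-256 hash of the serialized result, so two runs can be compared exactly."""
    return hashlib.sha256(json.dumps(result, sort_keys=True).encode()).hexdigest()


observed = [4.1, 3.8, 5.2, 4.7, 3.9, 4.4, 5.0, 4.2]  # illustrative data only

first = analysis_pipeline(observed)
second = analysis_pipeline(observed)  # an independent recomputation
print(fingerprint(first) == fingerprint(second))
```

Note that this only demonstrates reproducibility in the narrow sense defined above; it says nothing about whether the bootstrap interval is an appropriate analysis for the scientific question.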

From a computational perspective, there are three major components to a reproducible and replicable study: (i) the raw data from the experiment are available, (ii) the statistical code and documentation to reproduce the analysis are available, and (iii) the data analysis itself is correct. Recent cultural shifts in genomics and other areas have had a positive impact on data and code availability. Journals are starting to require data availability as a condition for publication (7), and centralized databases such as the National Center for Biotechnology Information's Gene Expression Omnibus provide repositories for data generated by publicly funded scientific experiments. New computational tools such as knitr, IPython Notebook, LONI, and Galaxy (8) have simplified the process of distributing reproducible data analyses.

Unfortunately, the mere reproducibility of computational results is insufficient to address the replication crisis, because even a reproducible analysis can suffer from many problems, such as confounding from omitted variables, poor study design, or missing data, that threaten the validity and useful interpretation of the results. Although improving the reproducibility of research may increase the rate at which flawed analyses are uncovered, as recent high-profile examples have demonstrated (4), it does not stop problematic research from being conducted in the first place.
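The gap between "reproducible" and "correct" can be illustrated with a small simulation. This is a minimal sketch under a made-up data-generating process (an assumption, not an analysis from this article): a variable `z` drives both `x` and `y`, while `x` has no effect on `y` at all. An analysis that omits `z` is perfectly reproducible, returning identical numbers on every run with the same seed, yet it estimates a slope near 1 instead of the true value of 0. Adjusting for `z`, here via Frisch-Waugh-Lovell residualization, recovers an estimate near 0. All function names are hypothetical.

```python
import random


def ols_slope(x, y):
    """Least-squares slope of y on x (single predictor, with intercept)."""
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def residualize(v, z):
    """Residuals of v after regressing it on z, removing z's linear effect."""
    b = ols_slope(z, v)
    mz = sum(z) / len(z)
    mv = sum(v) / len(v)
    return [vi - mv - b * (zi - mz) for vi, zi in zip(v, z)]


def simulate_and_fit(seed=1, n=10_000):
    rng = random.Random(seed)  # seeded, so every run is exactly reproducible
    z = [rng.gauss(0, 1) for _ in range(n)]     # confounder
    x = [zi + rng.gauss(0, 1) for zi in z]      # "exposure" driven by z
    y = [2 * zi + rng.gauss(0, 1) for zi in z]  # outcome driven by z only
    naive = ols_slope(x, y)  # omits z: biased toward 1
    # Frisch-Waugh-Lovell: slope of residualized y on residualized x
    # equals the coefficient on x in a regression that also includes z.
    adjusted = ols_slope(residualize(x, z), residualize(y, z))
    return naive, adjusted


naive, adjusted = simulate_and_fit()
print(f"naive slope: {naive:.2f}, adjusted slope: {adjusted:.2f}")
```

Rerunning `simulate_and_fit()` gives the same two numbers every time, so a referee could reproduce the naive analysis perfectly and still be misled by it; only knowledge of the study design reveals that `z` must be included.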

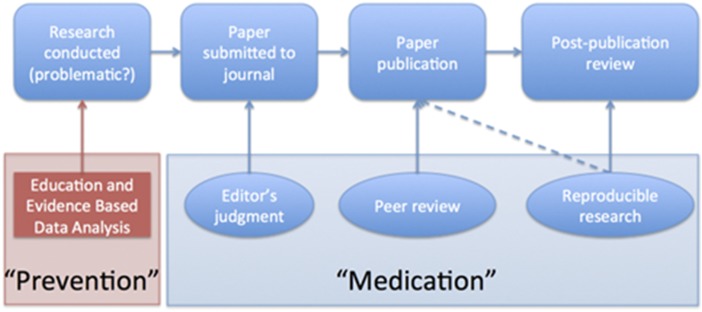

The key question we want to answer when seeing the results of any scientific study is “Can I trust this data analysis?” If we think of problematic data analysis as a disease, reproducibility speeds diagnosis and treatment in the form of screening and rejection of poor data analyses by referees, editors, and other scientists in the community (Fig. 1).

Fig. 1. Peer review and editor evaluation help treat poor data analysis. Education and evidence-based data analysis can be thought of as preventative measures.

This medication approach to research quality relies on peer reviewers and editors to make this diagnosis consistently, which is a tall order. Editors and peer reviewers at medical and scientific journals often lack the training and time to perform a proper evaluation of a data analysis. The problem is compounded because datasets and data analyses are becoming increasingly complex, the rate of submissions to journals continues to rise (9), and statisticians face growing demands to serve as referees. These pressures have reduced the efficacy of peer review in identifying and correcting potential false discoveries in the medical literature. Crucially, the medication approach does not address the problem at its source.

We suggest that the replication crisis needs to be considered from the perspective of primary prevention. If we can prevent problematic data analyses from being conducted, we can substantially reduce the community's burden of evaluating an increasingly heterogeneous and complex population of studies and research findings. The best way to prevent poor data analysis in the scientific literature is to (i) increase the number of trained data analysts in the scientific community and (ii) identify statistical software and tools that can be shown to improve the reproducibility and replicability of studies.

How can we dramatically scale up data science education in the short term? One approach we have taken is through massive open online courses (MOOCs). The Johns Hopkins Data Science Specialization (jhudatascience.org) is a sequence of nine courses covering the full spectrum of data science skills, from formulating quantitative questions to cleaning data, performing statistical analysis, and producing reproducible reports. Thus far, we have enrolled more than 1.5 million students in this Specialization. A complementary approach is crowd-sourced short courses, such as Data Carpentry and Software Carpentry (software-carpentry.org), which address the intense demand for data science knowledge on a smaller scale.

However, simply increasing data analytic literacy comes at a cost. Most scientists in these programs will receive basic to moderate training in data analysis, which risks producing analysts with enough skill to perform a data analysis but not enough knowledge to avoid mistakes.

To improve the global robustness of scientific data analysis, we must couple education efforts with the identification of data analytic strategies that are most reproducible and replicable in the hands of basic or intermediate data analysts. Statisticians must bring their long history of developing rigorous methods to bear on the area of data science.

A fundamental component of scaling up data science education is performing empirical studies to identify the statistical methods, analysis protocols, and software that lead to increased replicability and reproducibility in the hands of users with basic knowledge. We call this approach evidence-based data analysis. Just as evidence-based medicine applies the scientific method to the practice of medicine, evidence-based data analysis applies the scientific method to the practice of data analysis. Combining massive-scale education with evidence-based data analysis lets us rapidly test data analytic practices in the population most at risk of data analytic mistakes (10).

In much the same way that epidemiologist John Snow ended a London cholera epidemic by removing a pump handle to make contaminated water unavailable, we have an opportunity to attack the crisis of scientific reproducibility at its source. Dramatic increases in data science education, coupled with robust evidence-based data analysis practices, have the potential to prevent problems with reproducibility and replication before they can cause permanent damage to the credibility of science.

Footnotes

Any opinions, findings, conclusions, or recommendations expressed in this work are those of the authors and do not necessarily reflect the views of the National Academy of Sciences.

References

- 1. Peng RD. Reproducible research in computational science. Science. 2011;334(6060):1226–1227.

- 2. Ioannidis JP. Why most published research findings are false. PLoS Med. 2005;2(8):e124. doi: 10.1371/journal.pmed.0020124.

- 3. Baggerly KA, Coombes KR. Deriving chemosensitivity from cell lines: Forensic bioinformatics and reproducible research in high-throughput biology. Ann Appl Stat. 2009;3(4):1309–1334.

- 4. Herndon T, Ash M, Pollin R. Does high public debt consistently stifle economic growth? A critique of Reinhart and Rogoff. Camb J Econ. 2014;38(2):257–279.

- 5. Marcus G, Davis E. 2014. Eight (no, nine!) problems with big data. Available at www.nytimes.com/2014/04/07/opinion/eight-no-nine-problems-with-big-data.html?_r=0. Accessed November 19, 2014.

- 6. Alberts B, Stodden V, Young S, Choudhury S. 2013. Testimony on scientific integrity & transparency. Available at www.science.house.gov/hearing/subcommittee-research-scientific-integrity-transparency. Accessed November 19, 2014.

- 7. Bloom T. 2014. PLOS’s new data policy: Part two. Available at blogs.plos.org/everyone/2014/02/24/plos-new-data-policy-public-access-data-2/. Accessed November 19, 2014.

- 8. Goecks J, Nekrutenko A, Taylor J; Galaxy Team. Galaxy: A comprehensive approach for supporting accessible, reproducible, and transparent computational research in the life sciences. Genome Biol. 2010;11(8):R86. doi: 10.1186/gb-2010-11-8-r86.

- 9. Jager LR, Leek JT. An estimate of the science-wise false discovery rate and application to the top medical literature. Biostatistics. 2014;15(1):1–12. doi: 10.1093/biostatistics/kxt007.

- 10. Fisher A, Anderson GB, Peng R, Leek J. A randomized trial in a massive online open course shows people don’t know what a statistically significant relationship looks like, but they can learn. PeerJ. 2014;2:e589. doi: 10.7717/peerj.589.