Abstract

As technology advances, robots and virtual agents will be introduced into the home and healthcare settings to assist individuals, both young and old, with everyday living tasks. Understanding how users recognize an agent’s social cues is therefore imperative, especially in social interactions. Facial expression, in particular, is one of the most common non-verbal cues used to display and communicate emotion in on-screen agents (Cassell, Sullivan, Prevost, & Churchill, 2000). Age is important to consider because age-related differences in emotion recognition of human facial expression have been supported (Ruffman et al., 2008), with older adults showing a deficit for recognition of negative facial expressions. Previous work has shown that younger adults can effectively recognize facial emotions displayed by agents (Bartneck & Reichenbach, 2005; Courgeon et al., 2009; 2011; Breazeal, 2003); however, little research has compared in depth younger and older adults’ ability to label a virtual agent’s facial emotions, an important consideration because social agents will be required to interact with users of varying ages. If such age-related differences exist for recognition of virtual agent facial expressions, we aim to understand if those age-related differences are influenced by the intensity of the emotion, dynamic formation of emotion (i.e., a neutral expression developing into an expression of emotion through motion), or the type of virtual character differing by human-likeness. Study 1 investigated the relationship between age-related differences, the implication of dynamic formation of emotion, and the role of emotion intensity in emotion recognition of the facial expressions of a virtual agent (iCat). Study 2 examined age-related differences in recognition expressed by three types of virtual characters differing by human-likeness (non-humanoid iCat, synthetic human, and human). 
Study 2 also investigated the role of configural and featural processing as a possible explanation for age-related differences in emotion recognition. First, our findings show age-related differences in the recognition of emotions expressed by a virtual agent, with older adults showing lower recognition for the emotions of anger, disgust, fear, happiness, sadness, and neutral. These age-related differences might be explained by older adults having difficulty discriminating similarity in configural arrangement of facial features for certain emotions; for example, older adults often mislabeled fear as the similar emotion of surprise. Second, our results did not provide evidence for the dynamic formation improving emotion recognition; but, in general, the intensity of the emotion improved recognition. Lastly, we learned that emotion recognition, for older and younger adults, differed by character type, from best to worst: human, synthetic human, and then iCat. Our findings provide guidance for design, as well as the development of a framework of age-related differences in emotion recognition.

Keywords: Older adults, Younger adults, Aging, Virtual agents, Emotion recognition, Emotion expression

1. INTRODUCTION

People have been fascinated with the concept of intelligent agents for decades. Science fiction machines, such as Rosie from The Jetsons or C3PO from Star Wars, are idealized representations of advanced forms of technology coexisting with humans. Recent research and technology advancements have shed light on the possibility of intelligent machines becoming a part of everyday living and socially interacting with human users. As such, understanding fluid and natural social interactions should not be limited to only the study of human-human interaction. Social interaction is also involved when humans are interacting with an agent, such as a robot or animated software agent (e.g., virtual agent).

The label “agent” is widely used and there is no agreed-upon definition. Robots and virtual agents can both be broadly categorized as agents; however, the terms are distinct. A robot is a physical computational agent (Murphy, 2000; Sheridan, 1992). A virtual agent does not have physical properties; rather, it is embodied as a computerized 2D or 3D software representation (Russell & Norvig, 2003). Whether an agent is robotic or virtual, it can be broadly defined as a hardware or software computational system that may have autonomous, proactive, reactive, and social ability (Wooldridge & Jennings, 1995). The social ability of the agent may be further defined as social interaction with either other agents or people.

It is generally accepted that people are willing to apply social characteristics to technology. Humans have been shown to apply social characteristics to computers, even though the users admit that they believe these technologies do not possess actual human-like emotions, characteristics, or “selves” (Nass, Steuer, Henriksen, & Dryer, 1994). Humans have been shown to exhibit social behaviors toward computers mindlessly (Nass & Moon, 2000), as well as to treat computers as teammates with personalities, similar to human-human interaction (Nass, Fogg, & Moon, 1996; Nass, Moon, Fogg, & Reeves, 1995).

How are social cues communicated? Facial expressions are one of the most important media for humans to communicate emotional state (Collier, 1985), and a critical component in successful social interaction. Similarly, facial expression is one of the most common non-verbal cues used to display emotion in onscreen agents (Cassell, Sullivan, Prevost, & Churchill, 2000). Humans learn and remember hundreds (if not thousands) of faces throughout a lifetime. Face processing may be special for humans and primates due to the social importance placed on facial expressions. Emotional facial expressions may be defined as configurations of facial features that represent discrete states recognizable across cultures and norms (Ekman & Friesen, 1975). These discrete states, as proposed by Ekman and Friesen, are often referred to as ‘basic emotions.’ Research on emotions has evolved over the decades, with the exact number and definition of basic emotions debated. In later research Ekman considered as many as 28 emotions as having some or all of the criteria for being considered basic (Ekman & Cordaro, 2011). Nonetheless, six of these emotions (anger, disgust, fear, happiness, sadness, and surprise) have been studied in detail (Ekman & Friesen, 2003; Calder et al. 2003; Sullivan & Ruffman, 2004). Internationally standardized photograph sets (Beaupre & Hess, 2005; Ekman & Friesen, 1978) make it possible to compare results across studies for this set of emotions.

An agent may make use of facial expressions to facilitate social interaction, communication, and express emotional state, requiring the user to interpret its facial expressions. To facilitate social interaction, a virtual agent will need to demonstrate emotional facial expression effectively to depict its intended message. Emotion is thought to create a sense of believability by allowing the viewer to assume that a social agent is capable of caring about its surroundings (Bates, 1994) and creating a more enjoyable interaction (Bartneck, 2003). The ability of an agent to express emotion may play a role in the development of intelligent technology. Picard (1997) stressed that emotion is a critical component and active part of intelligence. More specifically, Picard stated that “computers do not need affective abilities for the fanciful goal of becoming humanoids; they need them for a meeker and more practical goal: to function with intelligence and sensitivity toward humans” (p. 247).

Social cues, such as emotional facial expression, are not only critical in creating intelligent agents that are sensitive and reactive toward humans, but will also affect the way in which people respond to the agent. The impetus of this research was to investigate how basic emotions may be displayed by virtual agents that are appropriately interpreted by humans.

1.2 Emotion Expression of Virtual and Robotic Agents

Many agents have been developed to express emotion across a variety of applications. Virtual agents, such as eBay’s chatbots, ‘Louise’ and ‘Emma,’ have been used to provide users with instructional assistance when using a web-based user interface. Video game users both young and old engage in virtual worlds, interacting with other agents or avatars in a social manner. Previous research has shown that participants’ recognition of facial emotion of robotic characters and virtual agents is similar (Bartneck, Reichenbach, & Breemen, 2004), and commercial robot toys, such as Tiger Electronics and Hasbro’s Furby, have been designed to express emotive behavior and social cues.

The development of agents with social capabilities affords future applications in social environments, requiring collaborative interaction with humans (Breazeal, 2002; Breazeal et al., 2004). In fact, a growing trend in intelligent agent research is addressing the development of socially engaging agents that may serve the role of assistants in home or healthcare settings (Broekens, Heerink, & Rosendal, 2009; Dautenhahn, Woods, Kaouri, Walters, Koay, & Werry, 2005). Assistive agents are expected to interact with users of all ages; however, the development of assistive intelligent technology has the promise of increasing the quality of life for older adults in particular. Previous research suggests that older adults are willing to consider having robotic agents in their homes (Ezer, 2008; Ezer, Fisk, & Rogers, 2009). For example, one such home/healthcare robot, known as Pearl, included a reminder system, telecommunication system, surveillance system, the ability to provide social interaction, and a range of movements to complete daily household tasks (Davenport, 2005).

Many agent applications may require some level of social interaction with the user. The development of social agents has a long academic history, with a number of computational systems developed to generate agent facial emotion. Some of these generation systems (e.g., Breazeal, 2003; Fabri, Moore, & Hobbs, 2004) have been modeled from psychological-based models of emotion facial expression, such as FACS (Ekman & Friesen, 1978) or the circumplex model of affect (Russell, 1980). Another approach has focused on making use of animation principles (e.g., exaggeration, slow in/out, arcs, timing; Lasseter, 1987) to depict emotional facial expression (e.g., Becker-Asano & Wachsmuth, 2010) and other non-verbal social cues (Takayama, Dooley, & Ju, 2011). Emotion generation systems are generally developed with the emphasis of creating “believable” agent emotive expression.

Although some previous research has incorporated the generation of agent facial expression based on the human emotion expression literature (i.e., how humans demonstrate emotion), the design of virtual agents can be further informed by the literature on human emotion recognition (i.e., how humans recognize and label emotion). Can agents be designed to express facial expression effectively? Studies that have investigated humans’ recognition of agent emotional facial expressions have suggested that the agent faces are recognized categorically (Bartneck & Reichenbach, 2005; Bartneck, Reichenbach, & Breemen, 2004), and can be labeled effectively with a limited number of facial cues (Fabri, Moore, & Hobbs, 2004). As previously mentioned, emotion recognition is integral in humans’ everyday activities; however, humans’ ability to recognize agent expression likely depends on many factors. For example, wrinkles, facial angle, and gaze are all design factors known to affect recognition and perceptions of expressivity for virtual agents (Courgeon, Buisine, & Martin, 2009; Courgeon, Clavel, Tan, & Martin, 2011; Lance & Marsella, 2010). Given that assistive agents are likely to interact with a range of users, other factors such as the user’s age, perceptual capability, and intensity of the agent emotion must be considered as well.

1.3 Understanding Human Facial Expressions of Emotion

How humans interpret other humans’ facial expressions may provide some insight into how they might recognize agent facial expressions. Due to the importance of emotion recognition in social interaction, it is not surprising that considerable research has been conducted investigating how accurately people recognize human expression of the six basic emotions. The literature suggests that emotion recognition is largely dependent on the following four factors: age, facial features, motion, and intensity.

Age-Related Differences in Emotion Recognition

Age brings about many changes such as cognitive, perceptual, and physical declines. Such declines can potentially be eased or mitigated by socially assistive agents and technological interventions (Beer et al., 2001; Broadbent et al., 2009). However, an open question remains: how well can older adults recognize emotions of agents? It is easy to imagine social virtual and robotic agents assisting older adults with household tasks, therapy, shopping, and gaming. The social effectiveness of these agents will depend on whether the older adult recognizes the expressions the agent is displaying.

The literature suggests that the ability to recognize emotional facial expressions differs across adulthood, with many studies investigating differences between younger adults, typically aged 18–30, and older adults, typically aged 65+ years old. Isaacowitz et al. (2007) summarized the research in this area conducted within the past fifteen years. Their summary (via tabulating the percentages of studies resulting in significant age group differences) found that of the reviewed studies, 83% showed an age-related decrement for the identification of anger, 71% for sadness, and 55% for fear. No consistent differences were found between age groups for the facial expressions of happiness, surprise, and disgust. These trends in age group differences were also reported in a recent meta-analysis (Ruffman, Henry, Livingstone, & Phillips, 2008).

Older and younger adults’ differences in labeling emotional facial expressions appear to be relatively independent of cognitive changes that occur with age (Keightley et al., 2006; Sullivan & Ruffman, 2004). Theories of emotional-motivational changes with age posit that shifts in emotional goals and strategies occur across adulthood. One such motivational explanation, the socioemotional selectivity theory, suggests that time horizons influence goals (Carstensen, Isaacowitz, & Charles, 1999), resulting in many outcomes including a positivity effect. That is, as older adults near the end of life, their goals shift so that they are biased to attend to and remember positive emotional information compared to negative information (Carstensen & Mikels, 2005; Mather & Carstensen, 2003; 2005).

Other related motivational accounts suggest that emotional-motivational changes are a result of compensatory strategies to adapt to age-related declining resources (e.g., Labouvie-Vief, 2003) or emotional-regulation strategies actually becoming less effortful with age (e.g., Scheibe & Blanchard-Fields, 2009). The positivity effect has been suggested as a possible explanation for age-related decrements in emotion recognition (Ruffman et al., 2008; Williams et al., 2006) for negative emotions such as anger, fear, and sadness; however, the effect does not explain why older adults have been shown to sometimes recognize disgust just as well, if not better, than younger adults (Calder et al., 2003).

Note that the literature generally indicates that older adults show a deficit in recognition of negative emotions. However, in one study where the cognitive demands of the task were minimized, age-related differences in recognizing anger and sadness were reduced (Mienaltowski et al., 2013).

Configural and Featural Processing of Human Facial Expressions of Emotion

Early studies have provided evidence that faces are processed holistically, meaning that faces are more easily recognized when presented as the whole face rather than as isolated parts/features (Tanaka & Farah, 1993; Neath & Itier, 2014). Additional work by Farah, Tanaka, & Drain (1995) showed that inverted faces were harder to recognize than inverted objects, suggesting that the spatial relations of features are important in face recognition. Similarly, the recognition of facial emotions is influenced by both configural and featural processing of human facial expressions (McKelvie, 1995). The arrangement of the features of the face (e.g., mouth, eyebrows, eyelids) influences both processing of the face holistically and by its individual features to some degree (McKelvie, 1995). As such, facial features may be a critical factor in processing an emotional facial expression (see Frischen, Eastwood, & Smilek, 2008 for summary).

Despite the support for holistic processing of faces, some posit that age-related differences in emotion recognition may be explained by the way in which older and younger adults perceive or attend to the individual facial components (e.g., facial features) that convey an expression. In other words, some suggest age-related differences in emotion recognition might be attributed to biological changes in the perceptual systems and visuospatial ability involved in processing facial features (Calder et al., 2003; Phillips, MacLean, & Allen, 2002; Ruffman et al., 2008; Suzuki, Hoshino, Shigemasu, & Kawamura, 2006). For example, older adults may focus their attention on mouth regions of the face, rather than eye regions (Sullivan, Ruffman, & Hutton, 2007). One explanation for this bias is that the mouth is less threatening than the eyes; because negative facial emotions are generally assumed to be more distinguishable according to changes in the eyes, attending to mouth regions may result in less accurate identification of negative emotion (Calder, Young, Keane, & Dean, 2000).

Intensity and Motion of Human Facial Expressions of Emotion

While most of the research discussed thus far relates to studies investigating emotion recognition of static facial expressions, it is important to note that in everyday interactions emotions are depicted as a dynamic formation, with faces transitioning between emotions and various intensities of emotions through motion. Although emotional facial expressions vary in intensity in day-to-day living, they are usually subtle (Ekman, 2003). Investigating people’s recognition of facial emotion across all intensities (i.e., subtle to intense) provides a better understanding of how people process and interpret emotional facial expression in everyday interactions. For example, understanding at what level of intensity a facial emotion should be expressed to support accurate recognition is an important factor to consider for both human-human and human-agent interaction. For both virtual agents (Bartneck & Reichenbach, 2005; Bartneck, Reichenbach, & Breemen, 2004) and human faces (Etcoff & Magee, 1992), recognition of emotional facial expressions improves as the intensity of the emotion increases, but in a curvilinear fashion. Thus the relationship between recognition and intensity is not a 1:1 ratio. Typically once a facial emotion reaches some threshold, recognition reaches a ceiling, suggesting a categorical perception of facial expressions (Bartneck & Reichenbach, 2005; Etcoff & Magee, 1992). However, it is unclear how intensity of facial expressions displayed by a virtual agent may influence age-related differences in emotion recognition, and whether the threshold for emotion recognition differs across age groups.

Not only are emotional facial expressions displayed at varying intensities, but in everyday interaction the expression of emotion also usually changes dynamically, both in intensity and from one emotion to another. Thus, the formation of an emotional facial expression may contain information beyond that available in static pictures (Bould, Morris, & Wink, 2008). Seeing facial emotions in motion (i.e., dynamic formation) may facilitate recognition of facial expressions at less intense levels. Bould and Morris (2008) found that younger adults had higher recognition of emotions when viewing the dynamic formation of emotions as opposed to seeing a single static picture or several static pictures in sequence (multi-static condition). The multi-static condition contained the same number of frames as the dynamic condition but had a masker between each frame to remove the perception of motion. Their findings, as well as others, suggest some aspect of motion aids emotion recognition more than viewing the same number of static frames in sequence (Ambadar et al., 2005; Bould & Morris, 2008). To date, research investigating older adults’ recognition of dynamic emotions is largely lacking.

Adding motion information may help individuals, even older adults, to recognize emotional facial expressions at more subtle intensities. In particular, older adults have been shown in laboratory studies to have difficulty identifying anger, sadness, and fear from static pictures of human faces (Ruffman et al., 2008). Showing the dynamic formation of facial emotion is more reflective of emotion expression formation in daily life, which older adults are more familiar with than the static faces in the laboratory. Motion could provide some additional information potentially making facial emotions less ambiguous.

1.4 Goal of Current Research

As outlined in the literature review, emotional facial expression is a critical component in successful social interaction, and thus will be critical in designing agents with social ability. Although research has been conducted investigating the role of social cues in human-agent interaction (Bartneck & Reichenbach, 2005; Becker-Asano & Wachsmuth, 2010; Breazeal, 2003; Fabri, Moore, & Hobbs, 2004), little research has compared in-depth younger and older adults’ ability to label the facial emotion displayed by a social agent, an important consideration because socially assistive agents will be required to interact with users of varying ages. Compared to previous works, the goal of the current research is to understand age-related differences in emotion recognition; we believe it is critical to understand how people of all ages interpret social cues the agent is displaying, particularly facial expression of emotion. Overall, age-related differences in emotional recognition of human facial expressions have been supported (Ruffman et al., 2008). If such age-related differences exist for recognition of virtual agent facial expressions, we aim to understand if those age-related differences are related to the intensity of the emotion, dynamic formation of emotion, or the character type.

The literature suggests that emotion expression is an important component of communication in all cultures, particularly human recognition of the six basic emotions (Ekman & Friesen, 1975; 2003). Although we recognize that humans express and recognize hundreds of expressions, we focus on six basic emotions that have been examined previously in detail; they also represent emotions for which age-related differences have been most prevalently found.

If in fact people do apply social characteristics to technology in the same way in which they do to other humans (e.g., Nass, Fogg, & Moon, 1996; Nass & Moon, 2000), it is unknown whether certain variables (i.e., intensity, dynamic formation, and character type) affect the recognition of facial expressions displayed by a virtual agent, and, importantly, to what degree the effects of these variables differ across age groups. Specifically, to better understand age-related differences in facial emotion recognition, our research aimed to:

Assess age-related differences in emotion recognition of virtual agent emotional facial expressions. Furthermore, we assessed misattributions older and younger adults made when identifying agent emotions to better understand the nature of their perceptions. [Study 1 and Study 2]

Consider the implication of dynamic formation of emotional facial expressions (i.e., motion), with further consideration of possible age-related differences in emotion recognition. [Study 1]

Examine the role of facial emotion intensity in emotion recognition, with emotions ranging from subtle to extreme, and whether emotions of varying intensity increase/decrease age-related differences. [Study 1]

Investigate age-related differences in emotion recognition of facial expressions displayed by a variety of agent characters. That is, the agent characters ranged in human-like features and facial arrangement, so we may better understand the role of configural and featural processing in emotion recognition. [Study 2]

The research presented here is part of a large research project, and preliminary results have been published (Beer, Fisk, & Rogers 2009; 2010; Smarr, Fisk, & Rogers, 2011). The current report differs from previous publications by:

Including additional data (i.e., intensity in Study 1)

Describing our methodology in detail regarding the creation of emotional facial expressions based on Ekman and Friesen’s (1975; 2003) six basic emotions (i.e., Study 1 and Study 2)

Reporting additional analyses to investigate misattributions participants made when labeling facial emotions (i.e., Study 2)

Reporting additional analyses to investigate feature similarity as an explanation for misattributions of labeling facial emotions (i.e., Study 2)

Synthesizing the results from both Study 1 and Study 2 to move toward an understanding of the theoretical underpinnings of emotion recognition

Discussing the implications our data have for the design of virtual agents to be used by older and younger adults

In sum, our results contribute to both a better understanding of emotion recognition, as well as provide design guidelines for developers to consider when creating virtual agents for younger and older adults.

2. STUDY ONE

As previously discussed, there is substantial evidence for age-related deficits in labeling emotional facial expressions displayed by humans (see Ruffman et al., 2008, for a review). Most experiments (14 of 17) included in Ruffman et al.’s (2008) meta-analysis used static facial expressions. Do age-related deficits still exist if people can see the dynamic formation of the emotion (i.e., a neutral expression developing into an expression of emotion through motion)? This question matters because, in day-to-day interaction, emotions are depicted not as static facial expressions, but rather as a dynamic formation of faces transitioning between various intensities of emotions.

The recognition of facial emotions is influenced by both configural and featural processing of human facial expressions (McKelvie, 1995). The arrangement of the features of the face (e.g., mouth, eyebrows, and eyelids) influences both processing of the face holistically and by its individual features to some degree (McKelvie, 1995).

Most research has used static photographs such as Ekman and Friesen’s (1976) pictures of human facial stimuli. However, little research has focused on manipulating the dynamic formation of emotional facial expressions and how that influences older adults’ recognition of emotions. Static pictures may not be representative of information used to determine emotion during interactions in daily life because they are devoid of motion and often depict highly intense facial expressions (Carroll & Russell, 1997). Although emotional facial expressions vary in intensity in day-to-day living, they are usually subtle (Ekman, 2003) and seeing facial emotions in motion may facilitate recognition of facial expressions at less intense levels.

Previous research with human and synthetic human faces has provided evidence of a dynamic advantage, or better recognition of emotion when viewing a face in motion versus a static picture. The dynamic advantage has been found for younger and middle-aged adults (Ambadar, Schooler, & Cohn, 2005; Bould & Morris, 2008; Bould, Morris, & Wink, 2008; Wehrle, Kaiser, Schmidt, & Scherer, 2000). However, this dynamic advantage was attenuated with expressions of higher intensity (Bould & Morris, 2008).

Unfortunately, the previous studies (e.g., Ambadar, Schooler, & Cohn, 2005; Wehrle et al., 2000) demonstrating a dynamic advantage for younger and middle-aged adults confounded dynamic display with total presentation time. Hence, it is unclear if the dynamic advantage observed was due to facial emotion development relative to static information or simply to more time to view the emotional facial expression. More specifically, Ambadar and colleagues (2005) displayed dynamic formation of emotion, then allowed participants to view the final frame (displayed as a static image) until they responded. Therefore, the dynamic “advantage” may be due, in part, to an additive effect of the dynamic display followed by a static display of emotion with unlimited duration. In the current study, we removed the confound with total presentation time and specifically examined the benefit of dynamic presentation of facial emotion. As illustrated in Figure 1, the dynamic and static conditions in the present research had relatively equal presentation times.

FIGURE 1.

Comparison of dynamic and static conditions used in the current study and in Ambadar et al. (2005).

The current study was carefully designed to assess the effects of dynamic formation of emotional facial expression and expression intensity on emotion recognition for younger and older adults. It extends the investigation of the dynamic advantage found with human faces to that of virtual agents as a possible explanation for age-related differences in emotion recognition. Specifically, we addressed whether motion per se influenced emotion recognition of a virtual agent’s facial expression and if so, whether the effect(s) interacted with age and across a range of emotion intensities.

To address these questions, the Philips Virtual iCat was used because its facial features are able to demonstrate facial expression via manipulation of individual facial feature placement (e.g., eyes, eyebrows; Bartneck, 2003; Bartneck & Reichenbach, 2005; Beer, Fisk, & Rogers, 2009). Younger and older adults were randomly assigned to one of two motion conditions. In the dynamic condition, participants viewed the virtual agent’s dynamic formation of an emotional expression from neutral to one of five levels of intensity. In the static condition, participants viewed the static picture of an expression. The emotional expressions were anger, fear, disgust, happiness, sadness, and surprise. Each emotion expression was presented at five different emotion intensities (20%, 40%, 60%, 80%, and 100%). At the end of each stimulus presentation, participants selected which facial expression (anger, fear, disgust, happiness, sadness, surprise, or neutral) they thought the virtual agent displayed. A large number of trials was used to get a stable estimate of participants’ emotion interpretations across the conditions.
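The stimulus design described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the software used in the study: the function names `build_trials` and `assign_condition`, the repetition count, and the random seed are hypothetical. The text specifies only the six emotions, the five intensity levels, the seven response options, and random assignment to one of two motion conditions.

```python
import itertools
import random

# Design factors taken from the description in the text.
EMOTIONS = ["anger", "fear", "disgust", "happiness", "sadness", "surprise"]
INTENSITIES = [20, 40, 60, 80, 100]  # percent of full expression
RESPONSES = EMOTIONS + ["neutral"]   # seven response options
CONDITIONS = ["dynamic", "static"]   # between-participants motion factor


def build_trials(repetitions, rng):
    """Cross emotion with intensity, repeat each cell, and shuffle the order.

    `repetitions` is a hypothetical parameter; the text says only that a
    large number of trials was used.
    """
    cells = list(itertools.product(EMOTIONS, INTENSITIES))
    trials = cells * repetitions
    rng.shuffle(trials)
    return trials


def assign_condition(rng):
    """Randomly assign one participant to a motion condition."""
    return rng.choice(CONDITIONS)


rng = random.Random(0)  # fixed seed so the sketch is reproducible
trials = build_trials(repetitions=2, rng=rng)
condition = assign_condition(rng)
```

With two repetitions the sketch yields 60 trials covering 30 unique emotion-by-intensity cells. Simple random assignment of this kind does not guarantee equal group sizes; a blocked scheme would be needed for that.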

2.1 Participants

Thirty-one younger adults aged 18–26 years old participated in this study (M = 19.87, SD = 1.93; 14 males). Twenty-nine older adults aged 65–85 years old participated as well (M = 73.97, SD = 4.28; 16 males). The younger adults were recruited from the Georgia Institute of Technology undergraduate population, and received credit for participation as a course requirement. The older adults were community-dwelling Atlanta-area residents, and were recruited from the Human Factors and Aging Laboratory participant database. The older adults received monetary compensation of $25 for their participation in this study. All participants had 20/40 or better visual acuity for near and far vision (corrected or uncorrected). All participants reported their race/ethnicity. Older adults reported themselves as 83% Caucasian, and 17% minority. For the younger adults, 42% reported themselves as Caucasian, and 58% as minority. All participants spoke fluent English.

Due to recruitment logistics, all the older adult participants had experience with the virtual iCat; this experience was one year or more prior to participating in the present study. The younger adults, however, did not have prior experience with the agent. Two measures were taken to ensure experience did not influence the emotion recognition data. First, the older adult data from this study were compared to the emotion recognition data in a previous study that provided no feedback on performance (Beer, Fisk, & Rogers, 2010). The older adults’ patterns of emotion recognition were not statistically different (F(1, 41) = 0.39, p = .54, ηp2 = .01) between the two studies. Second, both younger and older adults participated in multiple practice phases to alleviate any novelty effect the agent may have had on emotion recognition. The practice phases included participants familiarizing themselves with the response keys, the virtual agent, and the experimental procedure.

To assess cognitive ability, all participants completed six ability tests: Benton Facial Discrimination Test-short form (Levin, Hamsher & Benton, 1975), choice reaction time test (locally developed), Digit Symbol Substitution (Wechsler, 1997), Digit Symbol Substitution Recall (Rogers, 1991), Reverse Digit Span (Wechsler, 1997), and Shipley Vocabulary (Shipley, 1986). Participants also completed the Snellen eye chart (Snellen, 1868). Table 1 depicts the means and standard deviations for the ability tests within each age group. No significant differences were found between motion conditions for participants’ performance on ability tests (ps > .27), so data were combined for Table 1. Younger adults’ self-reported health was better than the older adults’ self-reported health (F(1, 56) = 4.57, p = .04, ηp2 = .08; Table 1). Finally, the older adults were highly educated, with 79% reporting having some college or higher. Note that the Benton Facial Recognition ability test yielded no significant differences between older and younger adults, indicating that participants in both age groups had similar facial discrimination ability. These data were consistent with past research (Czaja, Charness, Fisk, Hertzog, Nair, Rogers, & Sharit, 2006; Benton, Eslinger, & Damasio, 1981) and all participants’ ability scores were within the expected range for their age group.

TABLE 1.

Younger and older adult scores on ability tests.

| Ability test | Younger adults M | Younger adults SD | Older adults M | Older adults SD | F value |
|---|---|---|---|---|---|
| Benton Facial Recognitionᵃ | 48.16 | 2.83 | 46.90 | 3.64 | 2.18 |
| Choice Reaction Timeᵇ | 320.58 | 39.70 | 449.21 | 117.81 | 33.14* |
| Digit-Symbol Substitutionᶜ | 77.39 | 11.14 | 52.21 | 11.65 | 72.87* |
| Reverse Digit Spanᵈ | 9.19 | 2.56 | 7.03 | 2.29 | 11.36* |
| Shipley Vocabularyᵉ | 29.94 | 3.65 | 35.00 | 2.94 | 34.06* |

*p < .05.

ᵃ Facial discrimination (Levin, Hamsher & Benton, 1975); score was total number correct converted to 54 point scale.

ᵇ Response time (locally developed); determined by 45 trial test in ms for both hands.

ᶜ Perceptual speed (Wechsler, 1997); score was total number correct of 100 items.

ᵈ Memory span (Wechsler, 1997); score was total correct for the 14 sets of digits presented.

ᵉ Semantic knowledge (Shipley, 1986); score was the total number correct from 40.

2.2 Apparatus/Materials

Philips’ Open Platform for Personal Robotics (OPPR) software was used to create each expression by manipulating individual servos of the Virtual iCat’s face. E-Prime was used to develop a software program to display the expressions to participants (Psychology Software Tools, Pittsburgh, PA). Dell Optiplex 760 computers with 508 mm monitors displaying 1280 × 1024 pixels in 32 bit color were used to run the software program. All textual instructions on the computer screen were displayed in 18 point font size. The software program displayed the stimuli as 631 × 636 pixels in size. Participants were seated approximately 635 mm from the computer monitor. The stimuli were 187.96 mm in width by 189.23 mm in height subtending a visual angle of approximately 17 degrees.
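The reported visual angle can be checked from the stimulus size and viewing distance given above. The following is a minimal sketch, assuming the stimulus is centered and viewed head-on; the function name `visual_angle_deg` is illustrative and not part of the study's software.

```python
import math


def visual_angle_deg(size_mm: float, distance_mm: float) -> float:
    """Visual angle (in degrees) subtended by an object of the given size
    when viewed head-on from the given distance."""
    return math.degrees(2 * math.atan(size_mm / (2 * distance_mm)))


# Stimulus dimensions and viewing distance as reported in the text.
width_angle = visual_angle_deg(187.96, 635)
height_angle = visual_angle_deg(189.23, 635)

# Both values come out close to the reported "approximately 17 degrees".
print(f"width: {width_angle:.2f} deg, height: {height_angle:.2f} deg")
```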

A QWERTY keyboard was used for participants to indicate their responses by pressing a key. The participant pressed one of seven keys on the keyboard’s number row (`, 2, 4, 6, 8, 0, =) that corresponded to and were labeled with anger, fear, disgust, happiness, sadness, surprise, and neutral. The participant’s response and response time (RT) in milliseconds were recorded.

Virtual Agent

The virtual agent face used in this study was the Philips iCat robot. We chose this platform for a number of reasons. First, our goal was to investigate feature-based facial expressions; thus, the iCat provided a character that was less complex than a realistic simulation of a human. Second, the iCat does not pose a risk of participants encountering the uncanny valley (Mori, 1970). Originally proposed for robots, the uncanny valley hypothesis assumes that when an agent is almost human looking, a person’s liking of or familiarity with it decreases. We postulate that this negative reaction might apply to virtual agents as well. Finally, we chose the iCat due to the evidence (e.g., Bartneck & Reichenbach, 2005; Bartneck, Reichenbach, & Breeman, 2004) suggesting it is a platform suitable for studying emotionally expressive agents.

The iCat is equipped with 13 servo motors, 11 of which control different features of the face. The virtual agent used was an animated replica of the iCat robot, capable of creating the same facial expressions with the same level of control. The virtual agent’s emotions were created using Philips’s OPPR software. OPPR consists of a Robot Animation Editor for creating the animations, providing control over each individual servo motor. Specifically, the iCat specifies the following 11 facial control parameters: (1) left eye gaze; (2) right eye gaze; (3) left eyebrow; (4) right eyebrow; (5) left upper eye lid; (6) right upper eye lid; (7) right upper lip; (8) left upper lip; (9) right lower lip; (10) left lower lip; and (11) head tilt.

Using the OPPR software, we developed the six basic facial expressions of emotion: anger, disgust, fear, happiness, sadness, and surprise. Similar to the methodology used by Fabri, Moore, and Hobbs (2004), the virtual agent’s emotions were based on Ekman and Friesen’s (1975; 2003) qualitative descriptions of universally recognized emotive facial expressions (see Table 2). The qualitative descriptions are related to or based on the Facial Action Coding System (FACS; Ekman & Friesen, 1978), which draws on highly detailed anatomical studies of human faces and decomposes each facial expression into specific facial muscle movements. Although the iCat is not designed to emulate each facial muscle movement, control over the facial features (i.e., eyelids, lips, eyebrows) achieves a visual effect similar to the result of muscle activity in the face.

TABLE 2.

Qualitative descriptions of virtual iCat facial expressions.

| Expression | Qualitative description |
|---|---|
| Anger | Brows are lowered and drawn together. Upper lid is tense and may be lowered by the action of the brow. Lips are pressed firmly together with corners straight or down. |
| Disgust | The upper lip may be raised. The lower lip may also be raised and pushed up to the upper lip, or is lowered and slightly protruding. The brow is lowered, and upper eyelids are also lowered. |
| Fear | The brows are raised and drawn together. Upper eyelid raised (exposing sclera). Open mouth, but lips are either tense and drawn back, or stretched and drawn back. |
| Happiness | Corners of lips are drawn back and up (lips may or may not be parted). Eyes are not tense. Brows may be neutral. |
| Sadness | Inner corners of eyebrows are raised and drawn together. Eyes are slightly cast down. The corners of the lips are down. |
| Surprise | The brows are raised, so that they are curved and high. Eyelids are open so as to expose the white of the eye (sclera). Upper and lower lips are parted. |
| Neutral | Lips together, but not pressing. Eyes forward staring. Not tensing eyelids. |

Note: Qualitative descriptions adopted from Ekman and Friesen (1975; 2003). The descriptions only include the facial features available on the iCat.

All facial expressions were displayed in color with five intensities per emotion (5 intensities x 6 emotions = 30 expressions). The different intensities (for example see Figure 2) were fashioned by linear interpolation of the iCat’s servo positions in 20% increments from neutral (0%; no expression of emotion) to extreme emotion (100%; e.g., the saddest face the Virtual iCat can display). A previous study used 10% increments in an iCat’s emotion intensity, but found that participants could not discriminate a difference in intensity for such small steps (Bartneck et al., 2004).
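The intensity manipulation described above amounts to a linear blend between the neutral pose and the full-intensity pose of each control parameter. The sketch below illustrates the idea under stated assumptions: the parameter names and numeric positions are hypothetical placeholders (the actual OPPR servo values are not reported), and `interpolate_pose` is an illustrative helper, not an OPPR function.

```python
def interpolate_pose(neutral, extreme, intensity):
    """Linearly blend each control parameter between the neutral pose
    (intensity 0.0) and the full-intensity pose (intensity 1.0)."""
    if not 0.0 <= intensity <= 1.0:
        raise ValueError("intensity must lie between 0.0 and 1.0")
    return {
        name: neutral[name] + intensity * (extreme[name] - neutral[name])
        for name in neutral
    }


# Hypothetical positions for a few of the 11 facial control parameters.
NEUTRAL = {"left_eyebrow": 0.0, "right_eyebrow": 0.0, "left_lower_lip": 0.0}
SAD_100 = {"left_eyebrow": -40.0, "right_eyebrow": -40.0, "left_lower_lip": 25.0}

# The five intensity levels used in the study (20% steps).
INTENSITIES = [0.2, 0.4, 0.6, 0.8, 1.0]
sad_poses = {level: interpolate_pose(NEUTRAL, SAD_100, level) for level in INTENSITIES}

for level, pose in sad_poses.items():
    print(f"{int(level * 100)}%: {pose}")
```

Because the blend is linear, each 20% step moves every parameter by the same fraction of its full range, so equal intensity steps correspond to equal geometric steps rather than to equal perceptual steps.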

FIGURE 2.

The iCat displaying the emotion happiness at varying intensities: 20%, 40%, 60%, 80%, 100%

Dynamic Condition

For a given trial in the dynamic condition, a participant was shown a 3.25 second video of the Virtual iCat transitioning from neutral to one of the 30 expressions (e.g., neutral to 80% happy). The first three seconds of the video showed the iCat transitioning and the last 0.25 seconds of the video showed the final expression.

Because each video showed the formation of an emotive expression from a neutral expression, the lack of change in a video of the iCat transitioning from neutral to neutral would have been obvious. Thus, there were no neutral videos. However, neutral was a response that participants could choose because the lower intensities of the emotive facial expressions could be perceived as lacking emotion, or neutral.

Static Condition

In the static condition, participants saw a still picture of the emotive facial expression (30 expressions total) for 3.25 seconds instead of a video displaying the dynamic formation of the expression.

Ability Tests and Questionnaires

In addition to the abilities tests previously mentioned, participants completed a demographic and health questionnaire (adopted from Czaja et al., 2006). It collected data such as information on age, education, current health status, and medication regimen.

2.3 Design

Age (younger and older adults) was a grouping variable. There were three manipulated variables: (1) Motion Condition (dynamic or static) as a between-subjects variable; (2) Emotion Expression (anger, fear, disgust, happiness, sadness, and surprise) as a within-subjects variable; and (3) Expression Intensity (20%, 40%, 60%, 80%, and 100%) as a within-subjects variable. The dependent variable was a match of the participant’s response with the intended emotion from which a mean proportion match score was derived.

2.4 Procedure

After providing informed consent, the participant completed the demographic and health questionnaire and the aforementioned ability tests. Participants were offered a short break before beginning practice.

Practice was divided into three parts. First, to familiarize the participants with the response keys, a word representing an emotion (e.g., “disgust”) was displayed and they were required to press the correspondingly labeled key. Participants were able to practice correctly matching the text label of all emotion responses used in the study and their corresponding response keys. A given practice trial terminated when the label and response key were correctly matched. Each emotion word, including neutral, was displayed six times for a total of 42 practice trials.

Second, to help participants get accustomed to the Virtual iCat’s appearance, they were shown a static picture of it displaying a neutral expression. Participants had as much time as they needed to examine the picture. The second part of practice terminated when the participant pressed the “enter” key.

The last practice task allowed participants to become familiar with the sequencing of an experimental trial. Depending on random assignment, a participant saw either dynamic videos or static pictures of the Virtual iCat for 3.25 seconds. The participant had up to 27 seconds to respond by pressing the labeled key that matched the facial expression he or she perceived from the Virtual iCat’s face. Participants were instructed that their interpretation of the facial expression was more important than the time it takes for them to make a response. The experimenter guided each participant through two practice trials (neutral to 100% happy, neutral to 40% happy).

The experimental trials began after a participant finished the three practice tasks. Each participant completed 120 trials. The trials were presented to each participant in four blocks of 30 randomly permuted trials such that no more than two of the same emotion were presented consecutively.

Each trial began with the participant pressing the spacebar key which was followed immediately by an orientation cross (+) centered on the monitor for one second and either a dynamic or static display of an iCat expression for 3.25 seconds (depending on the participant’s condition assignment). Key responses were not valid or registered while an expression was on the screen. The expression disappeared after 3.25 seconds and the participant was immediately prompted to select an emotion by the question, “Which Emotion?” The participant had up to 27 seconds to make a response using the keys labeled with the emotion he or she thought the iCat was displaying. Once a response was made or 27 seconds elapsed without a response, the trial terminated. A screen prompting the participant to press the spacebar to begin the next trial was shown. All 30 facial intensity expressions were represented in each block of trials. Participants were offered a break at the mid-way point during a block (after 15 trials) and between blocks (after 30 trials). After completing all the experimental trials, participants were debriefed and compensated.
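The trial ordering described above (four blocks, each containing all 30 emotion-by-intensity expressions, with no more than two consecutive trials of the same emotion) can be generated in several ways. The sketch below uses simple rejection sampling: shuffle a block and keep it only if the constraint holds. This is an assumption about one workable approach, not the procedure implemented in E-Prime, and it applies the constraint within blocks only.

```python
import random
from itertools import product

EMOTIONS = ["anger", "fear", "disgust", "happiness", "sadness", "surprise"]
INTENSITIES = [20, 40, 60, 80, 100]


def max_run_length(trials):
    """Length of the longest run of consecutive trials sharing an emotion."""
    longest, run, previous = 0, 0, None
    for emotion, _intensity in trials:
        run = run + 1 if emotion == previous else 1
        longest = max(longest, run)
        previous = emotion
    return longest


def make_block(rng, max_run=2):
    """Shuffle the 30 expressions until no emotion repeats more than max_run times in a row."""
    trials = list(product(EMOTIONS, INTENSITIES))
    while True:
        rng.shuffle(trials)
        if max_run_length(trials) <= max_run:
            return trials


def make_session(seed=None, n_blocks=4):
    """Build the full session: n_blocks independently permuted blocks."""
    rng = random.Random(seed)
    return [make_block(rng) for _ in range(n_blocks)]


session = make_session(seed=1)
print(sum(len(block) for block in session))  # 120 trials in total
```

Rejection sampling is adequate here because a sizable share of random permutations already satisfy the constraint, so only a few reshuffles are typically needed per block.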

2.5 Results

In this section, mean proportion match is reported as a way to assess participants’ emotion recognition. The mean proportion match for each of the 30 facial expressions was calculated by averaging a participant’s match of his or her response to the intended emotion across the four times a facial expression was presented. Unless otherwise noted, alpha was set at .05 for all statistical tests, and all t-tests were two-tailed. Huynh-Feldt corrections were applied to F-tests with a repeated measures factor to adjust for violations of the sphericity assumption. Bonferroni corrections were used where appropriate to control for Type I error.
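To make the dependent measure concrete, the sketch below computes proportion match for one participant from a list of trials. The data layout (tuples of intended emotion, intensity, and response) and the function name are assumptions made for illustration, and a missing response (`None`, e.g., a timeout) is treated here as a non-match, which the text does not specify.

```python
from collections import defaultdict


def proportion_match(trials):
    """Return {(intended_emotion, intensity): proportion of matching responses}.

    `trials` is an iterable of (intended_emotion, intensity, response) tuples
    for a single participant.
    """
    counts = defaultdict(lambda: [0, 0])  # [matches, presentations]
    for intended, intensity, response in trials:
        cell = counts[(intended, intensity)]
        cell[0] += int(response == intended)
        cell[1] += 1
    return {key: matches / shown for key, (matches, shown) in counts.items()}


# Made-up responses for one expression shown four times (once per block).
example_trials = [
    ("sadness", 40, "sadness"),
    ("sadness", 40, "neutral"),
    ("sadness", 40, "sadness"),
    ("sadness", 40, "sadness"),
]
scores = proportion_match(example_trials)
print(scores[("sadness", 40)])  # 0.75
```

With four presentations per expression, a participant's score for any single expression can only take the values 0, .25, .50, .75, or 1.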

Omnibus Analysis of Emotion Recognition

The effects of age, motion condition, emotion expression, and expression intensity on emotion recognition were analyzed via a 2 (age) × 2 (motion condition) × 6 (emotion expression) × 5 (expression intensity) mixed-design analysis of variance (ANOVA). The dependent variable was proportion match. All main effects and interactions were significant (p < .05) except the four-way interaction (F(17.26, 966.59) = 0.72, p = .78, ηp2 = .01), the age x motion condition x emotion expression interaction (F(4.81, 269.47) = 0.88, p = .49, ηp2 = .02), and the age x motion condition interaction (F(1, 56) = 2.58, p = .11, ηp2 = .04). Detailed below are the analyses further exploring the age-related interactive effects.
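For readers who wish to relate the reported effect sizes to the test statistics, partial eta squared can be recovered from an F value and its degrees of freedom using the standard identity ηp2 = (F × df_effect) / (F × df_effect + df_error). The sketch below applies it to the three non-significant effects reported above; the function name is illustrative.

```python
def partial_eta_squared(f_value: float, df_effect: float, df_error: float) -> float:
    """Partial eta squared implied by an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (label, F, df_effect, df_error) taken from the omnibus analysis.
reported = [
    ("four-way interaction", 0.72, 17.26, 966.59),
    ("age x motion x emotion", 0.88, 4.81, 269.47),
    ("age x motion", 2.58, 1, 56),
]

for label, f_value, df_effect, df_error in reported:
    value = partial_eta_squared(f_value, df_effect, df_error)
    print(f"{label}: {value:.2f}")
```

The identity also holds with Huynh-Feldt corrected degrees of freedom, because the correction scales both degrees of freedom by the same factor and leaves their ratio unchanged.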

Motion, Intensity, and Age-related Differences Recognizing Emotion

The interaction among age, motion condition, and expression intensity for emotion recognition (F(3.35, 187.74) = 3.19, p = .02, ηp2 = .05) was investigated further by performing separate motion condition x expression intensity ANOVAs for younger adults and older adults.

Younger adults had lower proportion match at lower expression intensities (i.e., 20% and 40%) than at higher intensities (i.e., 60%, 80%, and 100%). Ten Bonferroni corrected (α = .005) paired t-tests were conducted to further examine the main effect of expression intensity (F(4, 116) = 156.99, p < .001, ηp2 = .84). That is, proportion match was compared for each pair of expression intensities (e.g., 20% vs. 40%, 60% vs. 100%). Proportion match was statistically lower for 20% and 40% expression intensities compared to higher expression intensities (i.e., 60%, 80%, and 100%). Emotion recognition did not statistically differ among higher expression intensities (i.e., 60%, 80%, and 100%).
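The number of pairwise comparisons and the corrected alpha follow directly from the five intensity levels. The short sketch below enumerates the pairs and applies the Bonferroni adjustment to a familywise alpha of .05; the helper name is illustrative.

```python
from itertools import combinations

INTENSITIES = [20, 40, 60, 80, 100]


def bonferroni_alpha(family_alpha: float, n_tests: int) -> float:
    """Per-test alpha that keeps the familywise error rate at family_alpha."""
    return family_alpha / n_tests


# Every unordered pair of intensity levels (e.g., 20% vs. 40%, 60% vs. 100%).
pairs = list(combinations(INTENSITIES, 2))
alpha_per_test = bonferroni_alpha(0.05, len(pairs))

print(len(pairs))  # 10
print(f"corrected alpha = {alpha_per_test:.3f}")
```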

Younger adults had similar levels of proportion match in the dynamic and static conditions. Neither the main effect of motion condition (F(1, 29) = 0.40, p = .53, ηp2 = .01) nor the expression intensity x motion condition interaction (F(4, 116) = 0.36, p = .84, ηp2 = .01) was significant.

Similar to younger adults, older adults had lower proportion match at lower expression intensities than higher intensities. A main effect of expression intensity was found (F(3.03, 81.67) = 48.92, p < .001, ηp2 = .64), suggesting that older adults’ proportion match varied among the levels of intensity. Ten Bonferroni corrected paired t-tests were conducted to examine the main effect of expression intensity. Proportion match for older adults was lower for 20% and 40% expression intensities than higher expression intensities. Expression intensities of 60%, 80% and 100% did not differ statistically from one another in proportion match.

A main effect of motion condition (F(1, 27) = 5.08, p = .03, ηp2 = .16) was found, with older adults’ proportion match lower in the dynamic condition than in the static condition.

In both motion conditions, older adults had significantly lower proportion match for lower expression intensities (i.e., 20% and 40%) than for higher intensities (i.e., 60%, 80%, and 100%). There was a motion condition x expression intensity interaction (F(3.03, 81.67) = 56.17, p = .001, ηp2 = .19) (Figure 3), suggesting that older adult emotion recognition depends on both motion condition and expression intensity. A simple main effects analysis was used to explore this interaction further. First, independent t-tests compared proportion match in the dynamic condition against that in the static condition at each expression intensity. None of the Bonferroni corrected independent t-tests were statistically significant, suggesting that older adults’ proportion match was similar between the dynamic and static conditions for each expression intensity. Second, a set of 10 paired t-tests compared all possible pairs of expression intensity for each motion condition separately. Older adults had lower proportion match for lower expression intensities (e.g., 20% and 40%) than for higher intensities (e.g., 60%, 80%, and 100%) in both motion conditions.

FIGURE 3.

Older adults’ emotion recognition in the dynamic and static conditions for five different expression intensities.

In sum, older adults’ proportion match depended on both motion condition and expression intensity whereas younger adults depended only on expression intensity. Older adults in the static condition had higher proportion match than those in the dynamic condition. Both age groups were worse at labeling emotion at lower expression intensities than higher intensities.

Intensity and Emotion: Understanding Age-Related Differences in Emotion Recognition

The interaction among age, emotion expression, and expression intensity for emotion recognition (F(17.26, 966.59) = 3.51, p < .001, ηp2 = .06) was investigated further by performing separate age x expression intensity ANOVAs for each emotion expression.

Older adults had lower proportion match than younger adults for anger, disgust, fear, happiness, and sadness (Table 3). Older adults were no worse than younger adults at labeling surprise.

TABLE 3.

Main effects of age and intensity for recognizing the six basic emotion expressions.

| Expression | Age: F | Age: ηp2 | Expression intensity: F | Expression intensity: ηp2 |
|---|---|---|---|---|
| Anger | 77.50** | 0.72 | 40.38** | 0.41 |
| Disgust | 17.76** | 0.23 | 30.26** | 0.34 |
| Fear | 19.54** | 0.25 | 29.86** | 0.34 |
| Happy | 17.86** | 0.24 | 24.13** | 0.29 |
| Sad | 35.13** | 0.38 | 86.88** | 0.60 |
| Surprise | 0.57 | 0.01 | 9.12** | 0.14 |

**p < .001.

Collapsed across age groups, emotion recognition was lower for lower expression intensities than for higher intensities. There was also a main effect of expression intensity for all six emotion expressions respectively (Table 3). To further examine the main effect of expression intensity, 10 Bonferroni corrected dependent t-tests compared all expression intensities for each emotion. Proportion match was lower for lower expression intensities (e.g., 20% and 40%) than for higher expression intensities (e.g., 60%, 80%, and 100%).

Interaction effects of expression intensity and emotion

There were age x expression intensity interactions for the emotions of anger (F(4, 232) = 26.13, p < .001, ηp2 = .31) and sadness (F(3.68, 213.52) = 9.12, p = .03, ηp2 = .05) (Figure 4). The age x expression intensity interaction was not significant (ps > .27) for disgust, fear, happiness, or surprise (Figure 4). To further examine the interactions, separate simple main effects analyses were conducted for anger and sadness. The simple main effects analysis consisted of two steps. First, an independent t-test compared proportion match of younger adults and older adults for each expression intensity. Second, a set of 10 paired t-tests compared all possible pairs of expression intensity for each age group separately.

FIGURE 4.

Younger and older adults’ proportion match for five intensities of anger, disgust, fear, happiness, sadness, and surprise.

Anger

Older adults had lower proportion match than younger adults for all intensities of anger greater than 20% (ps < .001), whereas both age groups had similar proportion match for 20% intensity of anger (t(45.59) = 0.91, p = .37; Figure 4). These results were revealed by five Bonferroni corrected independent t-tests (α = .01) comparing proportion match for younger versus older adults at each intensity of anger.

Younger adults had lower proportion match for lower expression intensities of anger than for higher intensities (Figure 4), as revealed by 10 Bonferroni corrected paired t-tests comparing all possible pairs of expression intensity for anger. Younger adults’ proportion match was lower (p < .0025) for lower intensities (i.e., 20% and 40%) of anger than for higher intensities (i.e., 60%, 80%, and 100%).

Older adults, unlike younger adults, did not improve their emotion recognition as the intensity of anger increased (Figure 4). That is, older adults’ emotion recognition did not differ (p > .0025) among any of the intensities for anger (means ranged from .05 to .16), as revealed by 10 Bonferroni corrected (α = .0025) paired t-tests comparing all possible pairs of intensity for anger.

Sadness

Similar to the pattern seen for anger, younger adults had higher proportion match than older adults for all intensities of sadness above 40% (ps < .001) whereas both age groups had similar proportion match for 20% intensity of sadness (t(49.81) = 2.14, p = .04; Figure 4). These results were revealed from Bonferroni corrected independent t-tests (α = 0.01) comparing proportion match for younger versus older adults at each intensity of sadness.

Similar to younger adults’ recognition of different intensities of anger, younger and older adults had lower emotion recognition for lower expression intensities of sadness than for higher intensities (Figure 4). Ten Bonferroni corrected paired t-tests comparing all possible pairs of expression intensity for sadness were conducted for younger adults and older adults separately (α = .0025). Younger adults and older adults each had statistically lower (p < .0025) proportion match for lower expression intensities (i.e., 20% and 40%) than for higher intensities (i.e., 60%, 80%, and 100%).

Summary of Age-Related Differences in Emotion Recognition

Consistent with previous research (Ruffman et al., 2008), Study 1 found age-related deficits in recognizing the emotions anger, fear, happiness, and sadness. In contrast to previous findings (Ruffman et al., 2008), an age-related deficit in recognizing disgust was found. There was no age-related difference in recognizing surprise. However, this is not unexpected, because age-related decrements in recognizing surprise are found less often and when decrements are found, they are substantially smaller in magnitude than when recognizing other emotions (e.g., anger, fear, happiness, and sadness) (Ruffman et al., 2008).

Both age groups had lower proportion match for lower expression intensities (e.g., 20% and 40%) than higher intensities (e.g., 60%, 80%, and 100%) for all emotions with the exception of anger. Older adults’ proportion match was similar for anger regardless of expression intensity. For anger and sadness, older adults had significantly lower proportion match than younger adults at all expression intensities except 20%. This suggests that 20% expression intensity was less recognizable as the intended emotion.

2.6 Discussion of Study One

Study 1 was designed to address three aspects of emotion recognition of a virtual agent: (1) age-related differences, (2) dynamic formation of emotion, and (3) intensity of the emotion.

Regarding age-related differences, the findings suggest that the younger and older adults did not recognize emotions displayed by a virtual agent in the same way. Younger adults had higher proportion match than older adults for anger, disgust, fear, happiness, and sadness. These data suggest that age-related differences in emotion recognition of facial expressions, found for recognition of human faces (Ruffman et al., 2008), are present for virtual agents as well.

Previous research with human and synthetic human faces has provided evidence of a dynamic advantage, or better recognition of emotion when viewing a face in motion versus a static picture (Ambadar et al., 2005; Bould & Morris, 2008; Bould, Morris, & Wink, 2008; Wehrle et al., 2000). However, all of these previous studies confounded display type with display duration. The current study unconfounded those variables, thus enabling a more direct comparison of dynamic vs. static presentation. At least for recognition of facial emotion portrayed by a virtual agent, whether the emotion was presented dynamically or statically did not affect emotion recognition for younger adults. It did affect older adults’ emotion recognition, but in a negative way; older adults had worse emotion recognition in the dynamic condition than in the static condition. Therefore, our data suggest that the dynamic advantage found in previous studies (Ambadar et al., 2005; Bould & Morris, 2008; Bould, Morris, & Wink, 2008; Wehrle et al., 2000) may be the result of an extended display of the dynamic condition’s last frame (i.e., static presentation of the emotion until the participant makes a response). Because display duration was equated across conditions, our findings support the conclusion that dynamic presentation is not advantageous for older adults. Rather, a static display of the emotion appears to be the better option for accurate emotion recognition.

Finally, both age groups demonstrated lower emotion recognition for low expression intensities than for high expression intensities. Similar to previous research (Bartneck & Reichenbach, 2005), the findings suggest a curvilinear relationship of intensity and emotion recognition, with younger and older adults’ recognition of most emotions increasing as the intensity increases. For all emotional facial expressions except anger, the younger and older adults’ emotion recognition was higher for high intensities (60%, 80%, 100%) than for low intensities. This positive effect of intensity on emotion recognition was not evident for the facial emotion of anger for older adults. For this emotion, older adults demonstrated low proportion match across all intensities.

3. STUDY TWO

The results of Study 1 suggested that age-related differences in emotion recognition of facial expressions extend to those expressions displayed by a virtual agent. Additionally, Study 1 suggested generally that as intensity of the emotional facial expression increased, so did emotion recognition in a curvilinear relationship (replication of Bartneck & Reichenbach, 2005). However, Study 1 did not find support for a dynamic advantage for older adults. To meet our overarching goal of understanding how older adults recognize and label emotional facial expressions, Study 2 included a number of important considerations. First, because the dynamic advantage was not supported, Study 2 was designed to investigate Study 1’s significant finding of age-related differences in emotion recognition of static faces in more detail. Second, we recognize that there are many types of virtual agents, of varying human-likeness (e.g., Becker-Asano & Ishiguro, 2011; Courgeon, Buisine, & Martin, 2009; Courgeon, Clavel, Tan, & Martin, 2011). Thus we explored whether Study 1’s results of age-related differences in emotion recognition were specific to the iCat, or also observed for other agent types. For this study, three on-screen characters were compared. The facial expressions depicted by each character were chosen or created based upon similar criteria for emotion generation. To assess emotion recognition across a variety of character types, the three agents represented a range of human-likeness. In this study, we used a non-humanoid virtual agent (the iCat from Study 1), and added two more characters of increasing human-likeness: photographs of human and synthetic human faces.

Photographs of human faces were used in this study to allow the comparison of a human character to other on-screen characters of less human-likeness. Accordingly, a non-humanoid virtual agent was used to represent a character less human-like on the continuum (i.e., it was catlike in appearance). Additionally, the precise placement of facial features permitted the calculation of similarity between any two emotion feature arrangements.

A synthetic human character (Wilson et al., 2002; Goren & Wilson, 2006) was used because fundamental visual differences existed between the virtual agent and the photographs of human faces. For example, the virtual agent demonstrated emotional facial expressions by only using geometric positioning of facial features such as the eyebrows, mouth, and eyelids. The agent was incapable of demonstrating texture-based transformations, such as wrinkling of the nose, which were present in the photographs of human faces. Similar to the virtual agent, the synthetic face displayed emotion using only facial feature geometric cues, yet its overall appearance was more humanoid.

Because dynamic formation of emotional facial expression did not improve emotion recognition (i.e., Study 1), static pictures of these characters were presented to participants demonstrating four basic emotional facial expressions (anger, fear, happiness, and sadness) and neutral. These four emotions (plus neutral) were chosen because they have the most consistent age-related differences in the literature (Isaacowitz et al., 2007; Ruffman et al., 2008) and showed age-related differences in Study 1. Study 1 also showed an age-related difference for the facial emotion of disgust; however, disgust was not included in Study 2 because this particular emotion was not available in the synthetic human stimuli (Goren & Wilson, 2006). Participants responded by selecting an emotion they thought was displayed by the face. Using this method, the goals of the study were to investigate age-related differences in emotion recognition of the three characters (human, synthetic human, and virtual agent) and to compare emotion recognition of these three characters to one another.

Finally, a major goal of Study 2 was to investigate possible explanations for age-related differences in emotion recognition, particularly the possibility of age-related differences in processing of facial features. Thus, additional analyses were conducted to assess misattributions, or mislabels, that participants made, as well as to assess the relationship between emotion recognition and facial feature configuration of the iCat (i.e., older and younger adults’ ability to discriminate between facial emotions of similar configuration).

3.1 Participants

Forty-two younger adults between the ages of 18 and 28 (M = 19.74, SD = 1.43, equal number of males and females) and forty-two older adults between the ages of 65 and 85 (M = 72.48, SD = 4.69, equal number of males and females) participated in this study. Participants were recruited and compensated in the same manner as in Study 1. All participants were screened to have visual acuity of 20/40 or better for far and near vision (corrected or uncorrected). The older adults were highly educated, with 86% reporting some college education or higher. All participants reported their race/ethnicity. Of the older adults, 83% reported themselves as Caucasian and 17% as minority. Of the younger adults, 57% reported themselves as Caucasian and 43% as minority. All participants spoke fluent English.

All participants completed the same five ability tests as in Study 1; the results are presented in Table 4. Age differences (p < .05) on the ability tests followed the same patterns as in Study 1: Younger adults were faster on choice response time and provided more correct answers for digit-symbol substitution, digit-symbol recall, and reverse digit span. Older adults performed better than younger adults on the vocabulary test. No difference between age groups was found for the Benton Facial Recognition task. These data were consistent with Study 1 as well as past research (Czaja, Charness, Fisk, Hertzog, Nair, Rogers, & Sharit, 2006; Benton, Eslinger, & Damasio, 1981), and all participants’ ability scores were within the expected range for their age group. Additionally, both younger (M = 3.83, SD = 0.94) and older adults (M = 3.71, SD = 0.84) rated themselves as having good health (1 = poor, 5 = excellent).

TABLE 4.

Younger and older adult scores on ability tests.

| Ability test | Younger Adults M | Younger Adults SD | Older Adults M | Older Adults SD | t value |
|---|---|---|---|---|---|
| Benton Facial Recognition<sup>a</sup> | 48.26 | 2.95 | 47.71 | 3.62 | 0.76 |
| Choice Reaction Time<sup>b</sup> | 319.98 | 51.72 | 418.10 | 86.92 | −6.29* |
| Digit-Symbol Substitution<sup>c</sup> | 74.52 | 11.06 | 51.36 | 11.58 | 9.38* |
| Reverse Digit Span<sup>d</sup> | 10.83 | 2.33 | 9.12 | 2.41 | 3.32* |
| Shipley Vocabulary<sup>e</sup> | 31.93 | 3.25 | 35.60 | 3.88 | −4.69* |

\* p < .05.

<sup>a</sup> Facial discrimination (Levin, Hamsher & Benton, 1975); score was total number correct converted to a 54-point scale.

<sup>b</sup> Response time (locally developed); determined by a 45-trial test, in ms, for both hands.

<sup>c</sup> Perceptual speed (Wechsler, 1997); score was total number correct of 100 items.

<sup>d</sup> Memory span (Wechsler, 1997); score was total correct for the 14 sets of digits presented.

<sup>e</sup> Semantic knowledge (Shipley, 1986); score was the total number correct from 40.
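As a reader's check on the t values in Table 4, the following minimal sketch recomputes an independent-samples t statistic from the reported means, standard deviations, and group sizes (42 per group). It assumes a pooled-variance test, which the source does not state; with equal group sizes the pooled and unpooled statistics coincide. The function name `t_from_summary` is hypothetical. Because the table entries are rounded, recomputed values can differ from the reported ones by about 0.01.

```python
import math


def t_from_summary(m1, sd1, n1, m2, sd2, n2):
    """Pooled-variance independent-samples t statistic from summary statistics."""
    # Pooled variance weights each group's variance by its degrees of freedom.
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    # Standard error of the difference between the two group means.
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se


# Rows of Table 4: (younger M, younger SD, older M, older SD).
table4 = {
    "Benton Facial Recognition": (48.26, 2.95, 47.71, 3.62),
    "Choice Reaction Time": (319.98, 51.72, 418.10, 86.92),
    "Digit-Symbol Substitution": (74.52, 11.06, 51.36, 11.58),
    "Reverse Digit Span": (10.83, 2.33, 9.12, 2.41),
    "Shipley Vocabulary": (31.93, 3.25, 35.60, 3.88),
}

for test_name, (m_y, sd_y, m_o, sd_o) in table4.items():
    t = t_from_summary(m_y, sd_y, 42, m_o, sd_o, 42)
    print(f"{test_name}: t(82) = {t:.2f}")
```

A positive t indicates a higher mean for younger adults; for Choice Reaction Time, a higher mean is a slower response.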

3.2 Apparatus/Materials

All apparatus and materials were the same as in Study 1 except where noted. Female faces were chosen for the synthetic and human characters because participants anecdotally reported that the iCat looked female. Thus, all stimuli were female to control for gender.

Virtual Agent Face

As in Study 1, the virtual agent face used in this study was the Philips Virtual iCat robot.

Synthetic Human Face

The synthetic faces were obtained from a database of digitized grayscale male and female photographs. Wilson and colleagues created these faces using MATLAB to digitize 37 points on the face (for a full description, see Wilson et al., 2002; Goren & Wilson, 2006). Goren and Wilson (2006) morphed the faces to express emotion according to Ekman and Friesen’s (1975) qualitative descriptions of facial expressions. In developing these pictures, changes to the eyebrows, eyelid position, and closed mouth were made, so that wrinkles, hair and skin textures, color, and luminance were removed (Goren & Wilson, 2006). For this study, the mean female synthetic face was selected (Wilson et al., 2002), creating a generic female eye, nose, and mouth template.

Human Face

The human faces were obtained from the Montreal Set of Facial Displays of Emotion (MSFDE; Beaupre & Hess, 2005). In this set, each expression was created using a directed facial action task and was Facial Action Coding System (FACS; Ekman & Friesen, 1978) coded to assure identical expressions across actors. For this study, photographs of female faces were used. The same female actor was chosen for each of the emotions and was selected according to visual similarity to the female synthetic face.

Standardization of Character Type and Emotion

The stimuli were displayed for a longer duration than in Study 1 (up to 20 s compared to 3.25 s) and in most previous work (<150 ms in Frank & Ekman, 1997; Schweinberger et al., 2003) to ensure that both older and younger participants had ample time to process the face stimuli and determine what emotion, if any, was conveyed.

All characters were shown in black and white, and only a frontal view of the face was displayed. The statistical distribution of black/white color for all pictures was balanced using the GNU Image Manipulation Program 2.6 (GIMP). The iCat is more often depicted as female (e.g., Meerbeek, Hoonhout, Bingley, & Terken, 2006). Therefore, the average female synthetic human face and a female face from the MSFDE were used.

The emotions anger, fear, happiness, sadness, and neutral were used. These emotions were chosen based on data from Study 1, as well as from a previous study (Beer, Fisk & Rogers, 2008) demonstrating age-related differences in emotion recognition of the virtual agent’s facial expressions of anger, fear, happiness, and neutral. Preliminary testing indicated that displaying the faces at medium emotion intensity ensured that recognition of the emotions was not at ceiling. Emotions depicted by the MSFDE human faces were displayed at medium emotion intensity (60%). The facial emotions displayed on the synthetic human and virtual agent were also displayed at medium (60%) intensity. Sixty percent emotion intensity was created by calculated geometric placement of facial features between neutral and 100% emotion. See Figure 5 for examples of the characters and emotions.

FIGURE 5.

Five emotions for each character type.
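The 60% intensity stimuli described above were created by placing facial features between their neutral and 100% emotion positions. The exact calculation is not given here, so the following is only a minimal sketch that assumes simple linear interpolation of 2D feature coordinates. The function name, feature names, and coordinates are all hypothetical and do not come from the iCat or synthetic face software.

```python
def interpolate_features(neutral, full, intensity=0.6):
    """Place each feature point between its neutral and full-emotion position.

    neutral, full: dicts mapping a feature name to an (x, y) coordinate.
    intensity: 0.0 returns the neutral face, 1.0 the 100% expression.
    """
    if not 0.0 <= intensity <= 1.0:
        raise ValueError("intensity must be between 0 and 1")
    return {
        name: tuple(n + intensity * (f - n) for n, f in zip(neutral[name], full[name]))
        for name in neutral
    }


# Hypothetical coordinates (arbitrary units) for two feature points.
neutral_face = {"left_brow_inner": (40.0, 30.0), "mouth_corner_left": (45.0, 80.0)}
full_anger = {"left_brow_inner": (42.0, 36.0), "mouth_corner_left": (46.0, 84.0)}

medium_anger = interpolate_features(neutral_face, full_anger, intensity=0.6)
for feature, (x, y) in medium_anger.items():
    print(f"{feature}: ({x:.1f}, {y:.1f})")
```

Under this assumption, each point sits 60% of the way along the straight line from its neutral position to its full-emotion position.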

Ability Tests and Questionnaires

Participants in Studies 1 and 2 completed the same ability tests and the same demographics and health questionnaire.

3.3 Design

Within-participant variables were character type (virtual agent, synthetic face, and human face) and emotion type (anger, fear, happiness, sadness, and neutral). Age (older and younger adults) was a grouping variable. The dependent variable was proportion match (emotion recognition), defined as the mean proportion of participant responses matching the emotion the character was designed to display.

3.4 Procedure

The procedure was the same in Studies 1 and 2 except where noted.

The experimental session followed completion of practice, which followed the same general format as in Study 1. Character type presentation was blocked (i.e., each block of trials consisted of a single character type). Presentation order of the character types (human face, virtual agent, synthetic face) was counterbalanced across participants using a partial Latin square, and the emotions (anger, fear, happiness, sadness, and neutral) within each block were permuted with the constraint that each emotion occurred three times, with no more than two presentations of the same emotion in a row. There were nine blocks of 15 trials each, three blocks per character type (i.e., 45 trials per character type), for a total of 135 trials. Breaks were offered between blocks.
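The blocking constraints described above can be illustrated with a short sketch. It assumes rejection sampling (reshuffling until the run-length constraint is met) and a cyclic rotation for the character orders; neither detail is specified in the procedure, and the function names are hypothetical.

```python
import random

EMOTIONS = ["anger", "fear", "happiness", "sadness", "neutral"]
CHARACTERS = ["human face", "virtual agent", "synthetic face"]


def max_run(seq):
    """Length of the longest run of identical consecutive items."""
    longest = run = 1
    for prev, cur in zip(seq, seq[1:]):
        run = run + 1 if cur == prev else 1
        longest = max(longest, run)
    return longest


def make_block(rng, repeats=3, max_repeat=2):
    """One 15-trial block: each emotion three times, at most two in a row."""
    trials = EMOTIONS * repeats
    while True:
        rng.shuffle(trials)
        if max_run(trials) <= max_repeat:
            return list(trials)


def rotated_orders(items):
    """Cyclic rotations: each item appears once in each serial position."""
    return [items[i:] + items[:i] for i in range(len(items))]


rng = random.Random(2024)  # fixed seed only so the sketch is repeatable
block = make_block(rng)
print(len(block), "trials in one block")
for order in rotated_orders(CHARACTERS):
    print(" -> ".join(order))
```

Nine such blocks of 15 trials give the 135 trials reported, with each emotion shown nine times per character type.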

Each experimental trial was similar to those in Study 1 with the following exceptions: (1) only static pictures of the human face, synthetic human face, and virtual iCat were shown; (2) when prompted with “Which Emotion?”, participants had up to 20 seconds to make a response, and the picture remained on the screen until the participant responded; (3) only medium intensity (60%) of the emotions was displayed; (4) only four emotion types and neutral were used for this experiment; however, participants selected from the six basic emotions and neutral on the keyboard, which allowed for the assessment of misattributions associated with each emotion used in previous research (Ekman & Friesen, 1975).

3.5 Results

Mean proportion match is reported in this section as the measure of participants’ emotion recognition. The mean proportion match for each facial expression was computed by averaging the matches between a participant’s responses and the intended emotion across the nine times the facial expression was presented. Unless otherwise noted, alpha was set at .05 for all statistical tests, and all t-tests were two-tailed. Huynh-Feldt corrections were applied to F-tests with a repeated measures factor to adjust for violations of the sphericity assumption. Bonferroni corrections were used where appropriate to control for Type I error.
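The proportion match measure described above can be sketched as follows. The response data are invented purely for illustration, and the function name `proportion_match` is hypothetical.

```python
def proportion_match(responses, intended):
    """Proportion of responses that match the emotion the face was designed to show."""
    if not responses:
        raise ValueError("at least one response is required")
    return sum(response == intended for response in responses) / len(responses)


# Invented example: one participant's nine responses to the iCat's fear expression.
fear_responses = [
    "fear", "surprise", "fear", "surprise", "surprise",
    "fear", "sadness", "fear", "surprise",
]

score = proportion_match(fear_responses, intended="fear")
print(f"proportion match for fear: {score:.2f}")
```

Keeping the non-matching labels, rather than only a correct/incorrect flag, is what makes the later analysis of misattributions possible.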

Emotion Recognition as a Function of Emotion, Character, and Age

To determine whether emotion, character type, or age affected participants’ emotion recognition, an Emotion (5) x Character Type (3) x Age Group (2) ANOVA was conducted.

A main effect of emotion was found (F(2.91, 238.32) = 195.91, p < .001, ηp² = .71), suggesting that for older and younger adults combined, recognition varied by emotion (see Table 5). The main effect of Age Group was also significant (F(1, 82) = 55.13, p < .001, ηp² = .40), with younger adults (M = .82, SD = .10) having higher proportion match than older adults (M = .66, SD = .10).

TABLE 5.

Main effect of emotion

| Emotion | Marginal Mean | Standard Deviation |
|---|---|---|
| Happiness | 0.94 | 0.08 |
| Neutral | 0.90 | 0.13 |
| Sadness | 0.86 | 0.02 |
| Anger | 0.60 | 0.17 |
| Fear | 0.41 | 0.23 |

There was also a main effect of Character Type (F(2, 164) = 86.91, p < .001, ηp² = .52), suggesting that overall emotion recognition differed between characters. Each participant’s proportion match for each character was aggregated across emotion. Paired-samples t-tests were then conducted, comparing emotion recognition for each character type. The human face demonstrated the highest overall proportion match (M = .82, SD = .14) and differed (t(83) = 4.11, p < .001) from the synthetic human face (M = .76, SD = .16). The virtual agent (M = .64, SD = .14) showed the lowest proportion match and differed from the synthetic face (t(83) = −12.71, p < .001) and the human face (t(83) = −9.24, p < .001). Mean proportion match for each character is shown in Figure 6.

FIGURE 6.

Mean proportion match and standard error for each character type.

The main effects of Emotion, Character Type, and Age Group were qualified by two interactions. The Emotion x Age Group interaction was significant (F(2.91, 238.32) = 6.68, p < .001, ηp² = .08), as was the Character Type x Emotion interaction (F(5.60, 459.03) = 52.09, p < .001, ηp² = .39). These interactions are explored in detail in the next section. The Character Type x Age Group interaction (F(2, 164) = 0.65, p = .52) and the Emotion x Character Type x Age Group interaction (F(8, 656) = 1.93, p = .08) failed to reach statistical significance.
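The partial eta squared values reported with the F tests above can be related to the F statistics through the standard identity ηp² = (F × df_effect) / (F × df_effect + df_error). The sketch below applies that identity to the reported values; it is a reader's check, not the authors' analysis code, and the function name is hypothetical. Because the reported F values and corrected degrees of freedom are rounded, a recomputed value can differ from the reported one in the second decimal place.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    ss_ratio = f_value * df_effect  # equals SS_effect / MS_error
    return ss_ratio / (ss_ratio + df_error)


# (label, F, df_effect, df_error) as reported in the text.
reported = [
    ("Emotion", 195.91, 2.91, 238.32),
    ("Age Group", 55.13, 1, 82),
    ("Emotion x Age Group", 6.68, 2.91, 238.32),
    ("Character Type x Emotion", 52.09, 5.60, 459.03),
]

for label, f_value, df1, df2 in reported:
    print(f"{label}: partial eta squared = {partial_eta_squared(f_value, df1, df2):.2f}")
```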

Age-Related Differences in Emotion Recognition

To further investigate how emotion recognition differed as a function of emotion and age, separate Emotion x Age ANOVAs were conducted for each character type. The results are shown in Table 6. For each character type, a main effect of emotion was found, suggesting that proportion match depended on the type of emotion displayed. Furthermore, younger adults had higher overall proportion match for all characters, and the Emotion x Age interactions suggest that these age-related differences depended on the emotion displayed.

TABLE 6.

Emotion recognition as a function of emotion and age.

| Character | Emotion F | Emotion ηp² | Age F | Age ηp² | Emotion x Age F | Emotion x Age ηp² |
|---|---|---|---|---|---|---|
| Human | 67.99*** | .45 | 4.08* | .05 | 32.17*** | .28 |
| Synthetic Human | 62.99*** | .43 | 38.13*** | .32 | 4.86** | .06 |
| Virtual Agent | 220.69*** | .73 | 40.47*** | .33 | 3.22* | .04 |

\* p < .05, \*\* p < .01, \*\*\* p < .001

The data shown in Table 6 suggest that age-related differences occurred across all characters and depended on the emotion shown. To better understand these findings, the following sections describe further analyses conducted for each individual character.

Human Face

Proportion match for each human emotion is shown in Figure 7. For the human face, the emotion of fear revealed low proportion match for both the older and younger adults. To investigate which human emotions resulted in age-related differences, independent-samples t-tests were conducted. Consistent with previous emotion recognition research on human faces (e.g., Ruffman, Henry, Livingstone, & Phillips, 2008), the Bonferroni-corrected t-tests (criterion p < .01) revealed age-related differences in emotion recognition for the emotions anger (t(82) = 3.59, p = .001), fear (t(82) = 3.11, p = .003), and sadness (t(82) = 4.04, p < .001). Younger adults showed a higher proportion match for labeling these three emotions. The human face also revealed an age-related difference for the emotion neutral (t(82) = 3.42, p = .001), again with younger adults showing higher proportion match.

FIGURE 7.

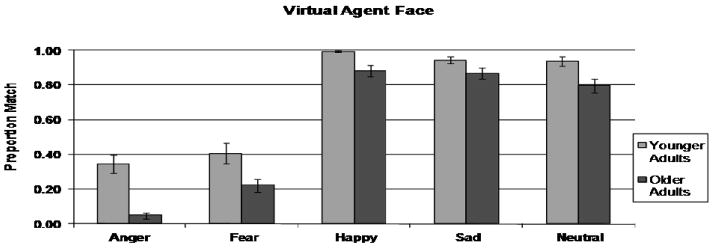

Younger and older adult emotion recognition for the human face (error bars indicate standard error).