Abstract

A wearable surgical navigation system is developed for intraoperative imaging of surgical margins in cancer resection surgery. The system consists of an excitation light source, a monochromatic CCD camera, a host computer, and a wearable headset unit in either of the following two modes: head-mounted display (HMD) and Google glass. In the HMD mode, a CMOS camera is installed on a personal cinema system to capture the surgical scene in real time and transmit the image to the host computer through a USB port. In the Google glass mode, a wireless connection is established between the glass and the host computer for image acquisition and data transport tasks. A software program is written in Python to call OpenCV functions for image calibration, co-registration, fusion, and display with augmented reality. The imaging performance of the surgical navigation system is characterized in a tumor simulating phantom. Image-guided surgical resection is demonstrated in an ex vivo tissue model. Surgical margins identified by the wearable navigation system coincide with those acquired by a standard small animal imaging system, indicating the technical feasibility of intraoperative surgical margin detection. The proposed surgical navigation system combines the sensitivity and specificity of a fluorescence imaging system and the mobility of a wearable goggle. It can potentially be used by a surgeon to identify residual tumor foci and reduce the risk of recurrent disease without interfering with the regular resection procedure.

Keywords: Surgical resection margin, fluorescence imaging, Google glass, surgical navigation, head mounted display, augmented reality

Introduction

Despite recent therapeutic advances, surgery remains a primary treatment option for many diseases such as cancer. Accurate assessment of surgical resection margins and early recognition of occult disease in a cancer resection surgery may reduce recurrence rates and improve long-term outcomes. However, many existing medical imaging modalities focus primarily on preoperative imaging of tissue malignancies and fail to provide surgeons with the real-time, disease-specific information that is critical for decision-making in an operating room. Therefore, it is important to develop surgical navigation tools for real-time detection of the surgical resection margins and accurate assessment of occult disease. The clinical significance of intraoperative surgical navigation can be exemplified by the inherent problems in breast-conserving surgery (BCS). BCS is a common method for treating early stage breast cancer (1). However, 30% to 60% of patients who undergo BCS require a re-excision procedure that delays the postoperative adjuvant therapies, increases patient stress, and yields suboptimal cosmetic outcomes (2). The high re-excision rate is partially due to the failure to obtain clean margins, which can subsequently lead to increased local recurrence rates (3). The current clinical gold standard for surgical margin assessment is histopathology analysis of biopsy specimens. However, the conventional histopathology analysis procedure is time-consuming and costly, and it cannot readily provide real-time imaging feedback in the operating room. In addition, this procedure evaluates only a small fraction of the resected surgical specimen and therefore may miss residual cancer cells owing to specimen sampling errors. In some instances, residual tumor foci that go unrecognized during BCS may develop into recurrent disease (4). 
In this regard, developing an intraoperative surgical navigation system may help the surgeon to identify the residual tumor foci and reduce the risk of recurrent diseases without interfering with the regular surgical procedures.

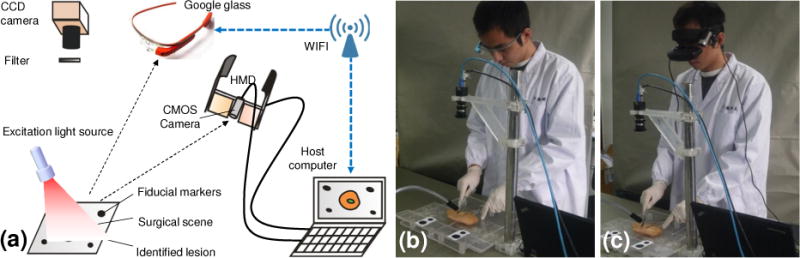

In the past, optical imaging has been explored for surgical margin assessment in various medical conditions such as tumor delineation (5–7), lymph node mapping (8; 9), organ viability evaluation (10), tissue flap vascular perfusion assessment during plastic surgery (11), necrotic boundary identification during wound debridement (12; 13), breast tumor characterization (14), chronic wound imaging (15), and detection of iatrogenic bile duct injuries during cholecystectomy (16). However, these imaging systems are not wearable devices. Therefore, they cannot provide a natural environment to facilitate intraoperative visual perception and navigation by the surgeon. Recently, fluorescence goggle systems have been developed for intraoperative surgical margin assessment (17; 18). These goggle systems integrate excitation light source, fluorescence filter, camera, and display in a wearable headset, allowing for intraoperative evaluation of surgical margins in a natural mode of visual perception. However, clinical usability of these goggle systems is suboptimal, owing to several design limitations. First of all, these goggle navigation systems integrate multiple opto-electrical components in a bulky headset that is inconvenient to wear or take off. The headset significantly reduces the surgeon’s manipulability, blocks the surgeon’s vision, and may even cause iatrogenic injury owing to visual fatigue or distraction. Second, current efforts to reduce the headset weight, such as replacing a CCD camera with a miniature CMOS camera, may sacrifice imaging sensitivity and dynamic range required for fluorescence detection of cancerous margin. Finally, using a head-mounted light source to provide fluorescence excitation may result in poor illumination conditions vulnerable to many imaging defects, such as insufficient light intensity for fluorescence imaging in the specific region of interest, non-uniform illumination, motion artifact, and specular reflection. 
In order to overcome these limitations, we propose a wearable surgical navigation system that combines the high sensitivity of a stationary fluorescence imaging system and the high mobility of a wearable goggle device. The concept of this wearable surgical navigation system is illustrated in Figure 1. As the surgical site is illuminated by a handheld excitation light source, fluorescence emission from the disease is collected by a stationary CCD camera through a long pass filter. The resultant fluorescence image of the surgical margin is transmitted to a host computer. In the meantime, a miniature CMOS camera installed on the wearable headset unit monitors the surgical site and transmits the surgical scene images to the host computer. The surgical scene images are fused with the surgical margin images and sent back to the wearable headset unit for display. The wearable surgical navigation system is implemented in two operation modes. In the first mode, a Google glass device captures the surgical scene images and communicates with the host computer wirelessly. In the second mode, a miniature camera installed on a head-mounted display (HMD) device captures the surgical scene and transmits the image data to the host computer through a USB port. Co-registration between the surgical margin and the surgical scene images is achieved using four fiducial markers placed within the surgical scene.

Figure 1.

(a) Schematic diagram of the surgical navigation system in two operation modes: Google glass mode and HMD mode. (b) Surgical navigation in the Google glass mode. (c) Surgical navigation in the HMD mode.

Materials and Methods

Hardware design for the surgical navigation system

The major hardware components of the proposed surgical navigation system include a MV-VEM033SM monochromatic CCD camera (Microvision, Xian, China) for fluorescence imaging, a FU780AD5-GC12 laser diode (FuZhe Corp., Shenzhen, China) for excitation illumination at 780 nm, and a wearable headset unit comprising either a HMD device or a Google glass. In the HMD mode, an ANC HD1080P CMOS camera (Aoni, Shenzhen, China) is installed on a PCS personal cinema system (Headplay, Inc., Los Angeles, CA) to capture the surgical scene images. In the Google glass mode, the built-in CMOS camera in the Google glass (Google Labs, Mountain View, CA) captures the surgical scene images. The 780 nm excitation light is delivered to the surgical site by an optical fiber. A beam expander further expands the illumination area up to 25 cm². To leave adequate space for surgical operation and ensure a sufficient level of fluorescence emission without irreversible photobleaching or tissue thermal damage, the excitation light source is placed 7 cm away from the surgical site, with its fluence rate controlled between 8 mW/cm² and 50 mW/cm². The CCD camera and the CMOS camera are used to acquire the fluorescence and the surgical scene images, respectively, at a resolution of 640×480 pixels and a frame rate of 30 frames per second (fps). The CCD camera uses a 1/4-inch monochrome CCD sensor with a dynamic range of 10 bits. An FBH800-10 800 nm long pass filter (Thorlabs Inc., Newton, NJ) is mounted in front of the CCD camera to collect fluorescence emission in the near infrared wavelength range. The CCD camera is installed 40 cm above the surgical site. A desktop computer is used to synchronize all the image acquisition, processing, transfer, and display tasks.

Navigation strategy for the surgical navigation system

A multi-step co-registration strategy is implemented for seamless fusion of the tumor margin images with the surgical scene. To facilitate adequate co-registration between the fluorescence images acquired by the stationary CCD camera and the surgical scene images acquired by the wearable headset unit, four black fiducial markers are placed around the designated surgical site. Before navigation, the surgical site is imaged by the CCD camera with and without a fluorescence filter so that the coordinates for fluorescence imaging can be calibrated with respect to these fiducial markers. During navigation, the fluorescence and the surgical scene images are concurrently transmitted to the host computer for further processing.

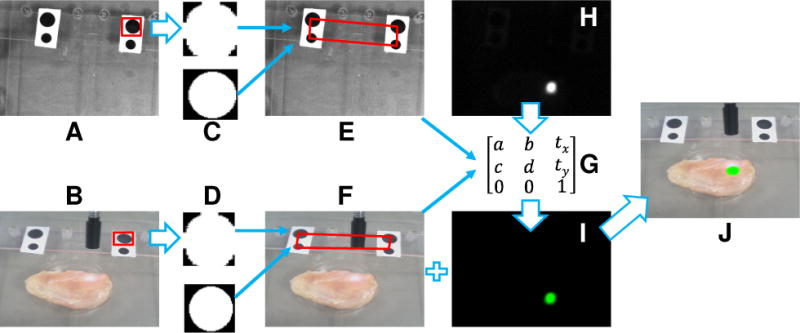

For both the HMD and the Google glass navigation systems, the image acquisition and processing tasks are carried out by a custom program implemented in the Python programming language that calls OpenCV functions. A find and locate specific blob method (FLSBM), as illustrated in Figure 2, determines the coordinates of the tumor margin and the surgical scene by the following steps: (1) find the connected block areas in the image; (2) enclose each block in a rectangular box with an inscribed ellipse; (3) generate a normalized circle for each elliptical area through rotation and resizing; (4) segment each block area into a 50 × 50 normalized picture; (5) subtract a standard circular picture from the normalized picture in (4) to obtain the different pixel number (DPN); (6) define a DPN threshold to classify each block as a marker or a non-marker area. The above steps are repeated for each of the connected block areas until all four fiducial markers are identified and sorted to form a quadrangle. The four vertices of the quadrangle define the specific perspective, whose coordinates can be obtained by geometric calculation. After that, an OpenCV function, getPerspectiveTransform, is used to compute the matrix that transforms the perspective of the stationary fluorescence camera to that of the wearable headset unit (i.e., the surgeon’s perspective). The transformed fluorescence image is then co-registered and fused with the surgical scene image. The fused image is then transmitted back to the wearable headset unit for display. For surgical navigation performed in the Google glass mode, augmented reality is achieved by seamless registration of the Google glass display with the surrounding surgical scene perceived by the surgeon in reality.
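Steps (4) to (6) of the FLSBM, together with the sorting of the four markers into a quadrangle, can be illustrated with a minimal pure-Python sketch. It assumes that steps (1) to (3) have already produced a binary 50 × 50 patch for each candidate block; in an OpenCV pipeline these steps might rely on calls such as `cv2.findContours`, `cv2.fitEllipse`, and `cv2.warpAffine`, although the text does not name them. The function names, the threshold value of 150, and the angle-based ordering are illustrative assumptions, not the authors' implementation.

```python
import math

N = 50  # side length of the normalized picture, as in step (4)


def standard_circle(n=N):
    """Binary n x n picture of a filled circle inscribed in the square."""
    c = (n - 1) / 2.0
    r = n / 2.0
    return [[1 if (x - c) ** 2 + (y - c) ** 2 <= r * r else 0
             for x in range(n)] for y in range(n)]


def different_pixel_number(patch, reference):
    """Step (5): count the pixels where patch and reference disagree (DPN)."""
    return sum(p != q
               for row_p, row_q in zip(patch, reference)
               for p, q in zip(row_p, row_q))


def is_marker(patch, reference, dpn_threshold=150):
    """Step (6): accept the block as a marker if its DPN is small enough.

    The threshold of 150 pixels is a hypothetical value chosen for this sketch.
    """
    return different_pixel_number(patch, reference) <= dpn_threshold


def order_quadrangle(centers):
    """Sort marker centers by angle around their centroid.

    This gives a consistent vertex order for the quadrangle; in image
    coordinates (y pointing down) the order is clockwise on screen.
    """
    cx = sum(x for x, _ in centers) / len(centers)
    cy = sum(y for _, y in centers) / len(centers)
    return sorted(centers, key=lambda p: math.atan2(p[1] - cy, p[0] - cx))
```

A consistent vertex order matters because the perspective transform pairs the i-th vertex seen by the stationary camera with the i-th vertex seen by the headset camera.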

Figure 2.

Flow chart for navigation system calibration and image co-registration. A: image of the surgical scene acquired by the stationary CCD camera. B: image of the tumor-simulating tissue acquired by the wearable headset. C and D: fiducial markers are identified by FLSBM algorithm. E and F: four vertices of the fiducial marker quadrangle are calculated for C and D respectively. G: a transform matrix is generated from E and F. H: fluorescence image acquired by the stationary CCD camera. I: the transformed fluorescence image after the transform matrix G is applied to H. J: image fusion between the surgical scene image F and the transformed fluorescence image I.
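The transform matrix in panel G can be understood as the 3 × 3 perspective (homography) matrix determined by four point correspondences, which is what OpenCV's `getPerspectiveTransform` returns. The sketch below solves the same eight-unknown linear system in pure Python so that the arithmetic is visible. The helper names and the sample coordinates are hypothetical, and a real pipeline would more likely call `cv2.getPerspectiveTransform` followed by `cv2.warpPerspective` on the whole fluorescence image.

```python
def solve_linear(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate marker layout (collinear points?)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [vr - factor * vc for vr, vc in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def perspective_matrix(src, dst):
    """3x3 matrix mapping four src points onto four dst points."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h11 x + h12 y + h13) / (h31 x + h32 y + 1), and likewise for v
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def apply_perspective(h, point):
    """Map one (x, y) point through the matrix, including the projective divide."""
    x, y = point
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


# Hypothetical marker vertices seen by the stationary camera (E) and the headset (F).
ccd_vertices = [(0, 0), (100, 0), (100, 100), (0, 100)]
headset_vertices = [(12, 8), (95, 15), (90, 110), (5, 98)]
G = perspective_matrix(ccd_vertices, headset_vertices)
```

Applying `G` to every pixel coordinate of the fluorescence image H yields the transformed image I, which can then be overlaid on the surgical scene image to form J.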

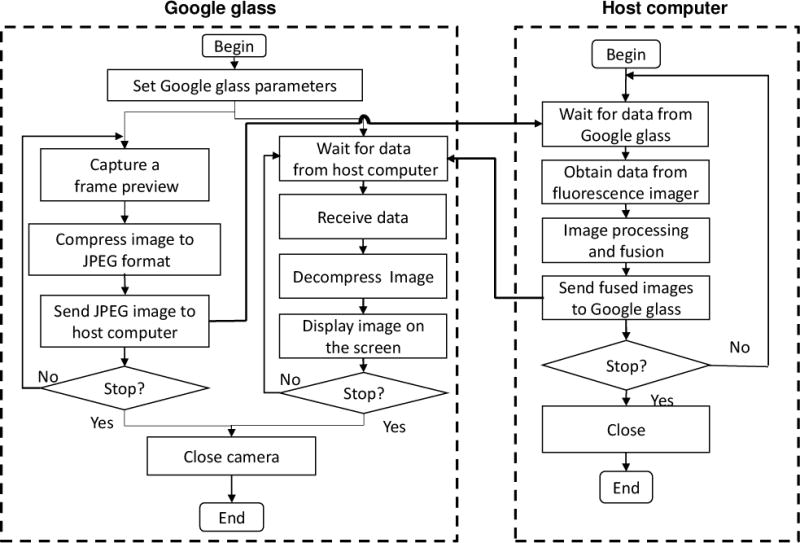

Real-time display of the fused image on the HMD device is relatively easy since the HMD device is connected to the host computer via a USB port and serves as an external monitor. In comparison, data transport between the Google glass and the host computer requires more programming effort. In general, a Google glass communicates with a host computer in one of three ways: Bluetooth, Wi-Fi, or a USB port. Considering that USB communication requires a physical cable and Bluetooth communication has speed and distance limitations, we choose to use a Wi-Fi connection for data transport between the Google glass and the host computer. Figure 3 shows the flow chart for the Wi-Fi communication protocols on both the Google glass side and the host computer side. First, a wireless network connection is established between the Google glass and the host following the transmission control protocol (TCP) (19). The host keeps broadcasting synchronization (SYN) packets that contain its IP address and target a dedicated port (Port A) agreed upon in advance with the Google glass. The Google glass monitors the incoming packets at Port A. Upon receiving a SYN packet, the Google glass obtains the IP address of the host and immediately sends an acknowledgment (ACK) packet, which includes the IP address of the Google glass, to Port B of the host. When the host receives the ACK packet and extracts the IP address, it sends an ACK packet back to the Google glass to confirm the successful establishment of the network connection between the host and the Google glass. It is worth noting that this network connection is not disturbed by neighboring wireless devices, since these devices do not respond to the SYN packets in the same way as the glass and the host. Once the network connection is established, the TCP/IP protocol is used for communication between the glass and the host. 
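The discovery exchange described above can be traced with a small in-memory simulation. This is only a sketch of the message sequence under stated assumptions: no real sockets are opened, the port numbers and IP addresses are hypothetical, the class and function names are invented for illustration, and the final confirmation is assumed to arrive at Port A of the glass because the text does not say which port receives it.

```python
PORT_A = 50000  # hypothetical port monitored by the glass
PORT_B = 50001  # hypothetical port monitored by the host


def make_packet(kind, sender_ip):
    """A packet is modeled as a dict carrying its kind and the sender's IP."""
    return {"kind": kind, "ip": sender_ip}


class Host:
    def __init__(self, ip):
        self.ip = ip
        self.glass_ip = None

    def broadcast(self):
        """The host keeps broadcasting SYN packets aimed at Port A."""
        return PORT_A, make_packet("SYN", self.ip)

    def on_packet(self, port, packet):
        """An ACK on Port B reveals the glass IP and triggers a confirmation."""
        if port == PORT_B and packet["kind"] == "ACK":
            self.glass_ip = packet["ip"]
            return PORT_A, make_packet("ACK", self.ip)
        return None


class Glass:
    def __init__(self, ip):
        self.ip = ip
        self.host_ip = None
        self.connected = False

    def on_packet(self, port, packet):
        """Handle a packet arriving at the glass; return (port, reply) or None."""
        if port != PORT_A:
            return None  # anything outside the agreed port is ignored
        if packet["kind"] == "SYN" and self.host_ip is None:
            self.host_ip = packet["ip"]
            return PORT_B, make_packet("ACK", self.ip)
        if packet["kind"] == "ACK" and packet["ip"] == self.host_ip:
            self.connected = True
        return None


def run_handshake(host, glass):
    """Play the SYN, ACK, ACK sequence once and report whether it completed."""
    reply = glass.on_packet(*host.broadcast())
    if reply is None:
        return False
    confirm = host.on_packet(*reply)
    if confirm is None:
        return False
    glass.on_packet(*confirm)
    return glass.connected
```

For example, `run_handshake(Host("192.168.1.10"), Glass("192.168.1.23"))` completes the exchange, whereas a device that does not listen on the agreed port never replies and therefore never joins the connection.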
The communication functionality of the Google glass is realized by two concurrent threads: the receiving thread and the sending thread. In the sending thread, a camera entity responsible for capturing live images is declared. The captured preview frame is first compressed by the JPEG image compression algorithm and then transmitted to the host through the sending socket. The JPEG format is chosen because of its high compression ratio (up to 15:1) and good image quality after reconstruction. In the receiving thread, the Google glass keeps receiving packets from the host through a receiving socket. After the Google glass receives all the data for a whole JPEG-compressed image, it decompresses and displays the image immediately. The above data transport functions are implemented by Android programming (20).
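Because TCP delivers a byte stream rather than discrete messages, the receiver needs some way to know when "all the data for the whole JPEG compressed image" has arrived. The text does not describe the framing that was used; one common choice, assumed here purely for illustration, is to prefix each compressed frame with a 4-byte length. The sketch is written in Python for the host side and works on any file-like stream (for example, the object returned by `socket.makefile("rwb")`); the function names are hypothetical.

```python
import io
import struct

# Assumed framing: a 4-byte big-endian unsigned length followed by the JPEG bytes.
HEADER = struct.Struct("!I")


def pack_frame(jpeg_bytes):
    """Prefix one compressed frame with its length."""
    return HEADER.pack(len(jpeg_bytes)) + jpeg_bytes


def read_exact(stream, n):
    """Read exactly n bytes, looping because a stream may return short reads."""
    chunks = []
    remaining = n
    while remaining > 0:
        chunk = stream.read(remaining)
        if not chunk:
            raise EOFError("stream closed before the frame was complete")
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks)


def read_frame(stream):
    """Read one length-prefixed frame and return its payload."""
    (length,) = HEADER.unpack(read_exact(stream, HEADER.size))
    return read_exact(stream, length)


# Two frames written back to back are recovered one at a time.
demo_stream = io.BytesIO(pack_frame(b"\xff\xd8first\xff\xd9") +
                         pack_frame(b"\xff\xd8second\xff\xd9"))
first = read_frame(demo_stream)
second = read_frame(demo_stream)
```

Only after `read_frame` returns would the receiving thread hand the payload to a JPEG decoder and update the display.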

Figure 3.

Program flow chart for image acquisition, processing, transport, and display on the Google glass side and the host computer side of the surgical navigation system.

Performance validation and optimization for surgical navigation

Multiple tumor simulating phantoms are developed for validation and optimization of the wearable surgical navigation technique. Indocyanine Green (ICG) is mixed with the phantom materials to simulate fluorescence emission from the tumor simulators. ICG is a cyanine dye that has been approved by the US Food and Drug Administration (FDA) for fluorescence imaging in many medical diagnostic applications such as ophthalmic angiography and hepatic photometry (21). Depending on its solvent environment and concentration, the absorption peak of ICG varies between 600 nm and 900 nm, and its emission peak varies between 750 nm and 950 nm. In aqueous solution at low concentration, ICG has an absorption peak at around 780 nm and an emission peak at around 820 nm. Accordingly, a 780 nm laser diode and an 800 nm long pass filter are used in the surgical navigation setup.

To prepare the tumor simulators, 2.1 g of agar-agar, 0.28 g of titanium dioxide (TiO2), and 4.9 ml of glycerol are mixed with distilled water to make a 70 ml volume. The mixture is heated to 95°C for approximately 30 minutes on a magnetic stirrer at a high power setting and then cooled down. When the mixture temperature drops to 65°C, 0.008 mg of ICG is dissolved in distilled water and added to the mixture to make a total volume of 70 ml. The mixture is then maintained at 65°C with continuous stirring to prevent sedimentation of TiO2. By injecting this tumor simulating mixture into agar-agar gel and chicken tissue, respectively, we have developed both a solid phantom model and an ex vivo tissue model for design optimization of the surgical navigation system and feasibility testing of the image-guided tumor resection technique.
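For readers who wish to scale the recipe, the quantities above can be converted into concentrations. The short calculation below is a worked example rather than part of the original protocol; it takes the final volume as 70 ml and assumes a molar mass of 774.96 g/mol for ICG, a value that is not stated in the text.

```python
TOTAL_VOLUME_ML = 70.0
ICG_MOLAR_MASS_G_PER_MOL = 774.96  # assumed molar mass of ICG, not given in the text


def percent_w_v(mass_g, volume_ml=TOTAL_VOLUME_ML):
    """Weight/volume percentage: grams per 100 ml."""
    return 100.0 * mass_g / volume_ml


def percent_v_v(part_ml, volume_ml=TOTAL_VOLUME_ML):
    """Volume/volume percentage."""
    return 100.0 * part_ml / volume_ml


def micromolar(mass_mg, molar_mass_g_per_mol, volume_ml=TOTAL_VOLUME_ML):
    """Molar concentration in micromoles per liter."""
    moles = mass_mg * 1e-3 / molar_mass_g_per_mol
    liters = volume_ml * 1e-3
    return moles / liters * 1e6


agar_pct = percent_w_v(2.1)          # about 3 % w/v agar-agar
tio2_pct = percent_w_v(0.28)         # about 0.4 % w/v TiO2
glycerol_pct = percent_v_v(4.9)      # about 7 % v/v glycerol
icg_ug_per_ml = 0.008 * 1000.0 / TOTAL_VOLUME_ML               # about 0.114 ug/ml
icg_um = micromolar(0.008, ICG_MOLAR_MASS_G_PER_MOL)           # about 0.147 uM
```

Under these assumptions the phantom contains roughly 3% agar-agar, 0.4% TiO2, 7% glycerol, and ICG at approximately 0.15 µM.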

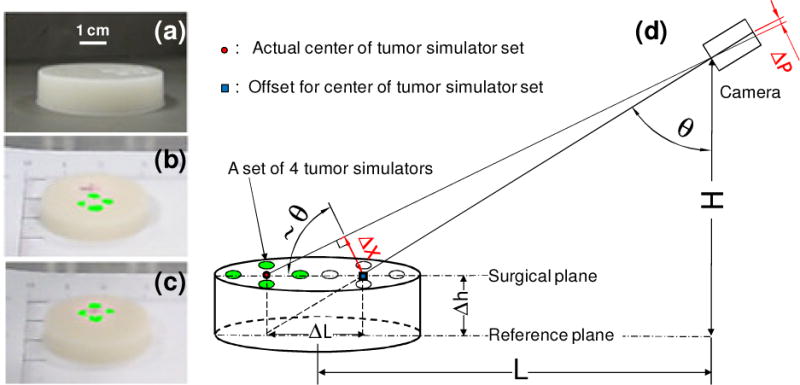

A cylindrical agar-agar gel phantom 1 cm thick is used to study the co-registration accuracy between the fluorescence image and the surgical scene image, as shown in Figure 4a. The tumor simulating material is injected into four cavities on one side of the agar-agar gel phantom, simulating a set of four solid tumors. The fluorescence image of the tumor margin and the surgical scene image are co-registered and fused following the algorithm described above. As shown in Figure 4b, a large positional offset exists for the fused fluorescence image, indicating a major co-registration error between the projected center of the tumor set and the actual center. This co-registration error is primarily caused by the mismatch between the surgical plane and the reference plane. As illustrated in Figure 4d, the offset of the projected tumor image from its actual position ΔL is a function of the surgical plane height Δh, the camera perspective angle θ, the camera height H, and the camera horizontal distance L:

Figure 4.

Correction of the co-registration error between the fluorescence image of the tumor margin and the surgical scene image. (a) Photo of agar-agar gel phantom with four embedded tumor simulators. (b) Without correction, the co-registration error between the fluorescence image (green) and surgical scene image is significant. (c) With height correction, the center of the projected tumor simulator set matches the actual position.

The resultant deviation of the tumor set center Δx can be approximated as:

The corresponding co-registration error in the imaging plane of the camera is therefore:

Where f is the focal length of the camera.

Since L, H, and f are known experimental parameters, the above co-registration error can be corrected as long as the height of the surgical plane is given. In the case of surgical navigation in a three dimensional (3D) scene, surface profiles and geometric features of the surgical cavity can be reconstructed by optical topographic methods such as multiview imaging and structured illumination (22). The reconstructed surface profiles will define the fiducial marker coordinates with respect to other biologic features and correct the co-registration error. Figure 4c shows the accurately co-registered fluorescence image of the tumor simulator set after the compensation factor Δp is calculated and applied.
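The dependence of the offset on the surgical plane height can be illustrated with a generic similar-triangles model. The sketch below is not the authors' formula: it assumes an ideal pinhole camera looking straight down from height H onto the reference plane, with a point raised by Δh at horizontal distance L from the point directly below the camera. The function names and the sample values of L and f are hypothetical; H = 40 cm and Δh = 1 cm are borrowed from the hardware description and the phantom thickness.

```python
def ground_offset(delta_h, cam_height, horiz_dist):
    """Offset on the reference plane of a point raised by delta_h.

    The ray from the camera through the raised point meets the reference
    plane at horiz_dist * H / (H - delta_h), so the apparent shift is
    horiz_dist * delta_h / (H - delta_h). All lengths share one unit.
    """
    if not 0 <= delta_h < cam_height:
        raise ValueError("delta_h must satisfy 0 <= delta_h < camera height")
    return horiz_dist * delta_h / (cam_height - delta_h)


def image_offset(delta_h, cam_height, horiz_dist, focal_length):
    """Corresponding shift in the image plane of the downward-looking camera."""
    return focal_length * ground_offset(delta_h, cam_height, horiz_dist) / cam_height


# H = 40 cm and delta_h = 1 cm come from the text; L = 10 cm and f = 0.8 cm are made up.
shift_on_plane_cm = ground_offset(1.0, 40.0, 10.0)
shift_in_image_cm = image_offset(1.0, 40.0, 10.0, 0.8)
```

In this simplified model the offset vanishes when Δh = 0 and grows with both the plane height and the horizontal distance, which is consistent with the observation that the error can be compensated once the height of the surgical plane is known.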

Results

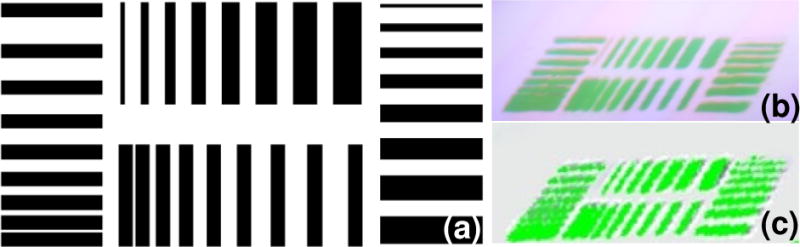

The achievable spatial resolutions of the surgical navigation system in the Google glass mode and the HMD mode are compared using a test pattern as shown in Figure 5a. The size of the test pattern is 71 mm × 36 mm, consisting of horizontal and vertical stripes with thicknesses from 0.5 mm to 4 mm, separated by 0.5 mm to 4 mm. Quantitative evaluation of the achievable spatial resolution by the human eye through the navigation system is difficult. Therefore, we use a DMC-GF5XGK camera (Panasonic, Japan) to record the test patterns displayed on the Google glass and the HMD device so that their relative spatial resolutions can be semi-quantitatively evaluated. According to Figure 5b, the Google glass is able to differentiate the features smaller than 0.5 mm, with a vertical separation greater than 1.5 mm and a horizontal separation greater than 1 mm. According to Figure 5c, the HMD device is able to differentiate the features smaller than 0.5 mm, with a vertical separation greater than 2 mm and a horizontal separation greater than 1.5 mm. Therefore, under the same illumination condition and the same imaging system configuration, a Google glass is able to differentiate more details than a HMD device.

Figure 5.

Relative comparison of the achievable spatial resolutions in the Google glass and the HMD navigation modes. (a) The test pattern is 71 mm × 36 mm, consisting of horizontal and vertical stripes with thicknesses from 0.5 mm to 4 mm and the separation distances from 0.5 mm to 4 mm. (b) Test pattern image acquired by the Google glass. (c) Test pattern image acquired by the HMD device.

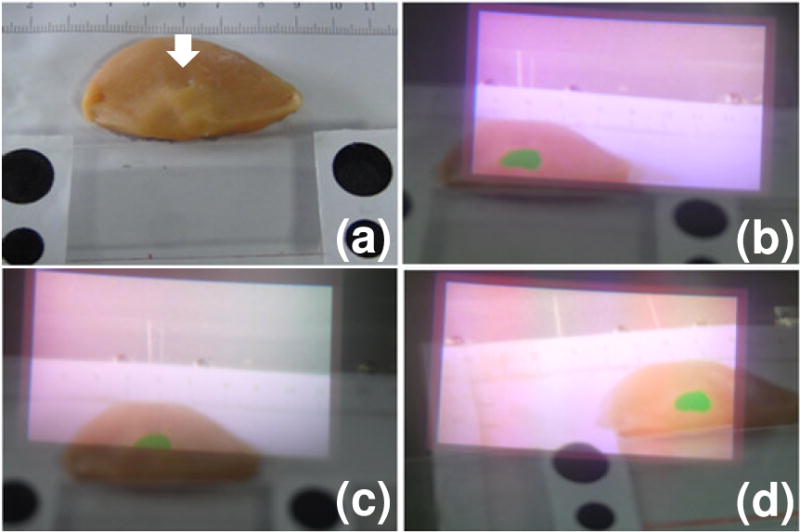

An ex vivo tumor simulating tissue model, as shown in Figure 6a, is used to evaluate the navigation system performance and demonstrate the image-guided tumor resection procedure. The tissue model is made by subcutaneous injection of the tumor simulating material into fresh chicken breast tissue obtained from a local grocery store. Within a few minutes after injection, the liquid mixture cools down to form a solid tumor with the designated geometric feature. Augmented reality of the Google glass navigation system is demonstrated by changing the field of view at different positions of the surgical scene and capturing the Google glass display as well as the surrounding surgical scene with the DMC-GF5XGK camera. According to Figures 6b–6d, the Google glass display seamlessly matches the surrounding surgical scene during navigation. In Figure 6c in particular, only the portion of the surgical margin that falls within the Google glass display is highlighted, whereas the margin remains invisible in the surrounding surgical scene. This indicates that the Google glass navigation system is able to identify the surgical margin in a natural mode of visual perception and with minimal distraction from the routine surgical procedure.

Figure 6.

Augmented reality of the surgical margin image. (a) The ex vivo tumor simulating model with tumor simulating material injected at the location of the white arrow. (b–d) Google glass display and the surrounding surgical scene as the glass moves to different locations.

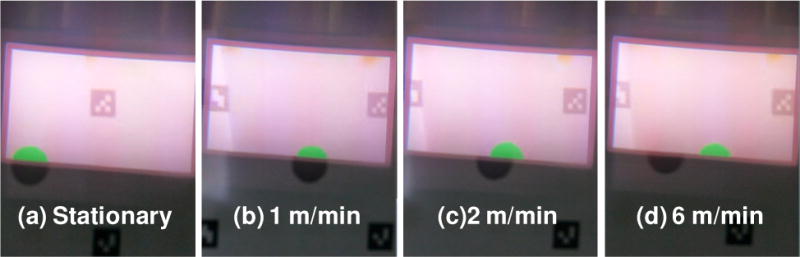

Considering that a surgeon will make frequent motions during a surgical procedure, it is important to optimize the navigation system configuration in order to obtain high quality images of the resection margin without significant “lagging” effect. The motion-induced image lagging is evaluated quantitatively in an experimental setup where the motion of a circular tumor simulator placed on a translational linear stage is tracked by the Google glass navigation system. The navigation system is operated at an overall imaging speed of 6 fps. The velocity of the linear stage is controlled from 0 to 6 meters per minute (m/min). An additional camera is placed behind the Google glass to capture the glass display and the background scene at an imaging speed of 30 fps. The position of the linear stage is adjusted carefully so that half of the circular tumor simulator is shown in the glass display. Figure 7 shows the images of the Google glass display and the background scene at different translational velocities. According to the figure, the navigation system is able to track the surgical scene without significant lagging at a translational velocity of less than 1 m/min. However, significant lagging effect will occur when the surgeon’s motion velocity is greater than 1 m/min.
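A rough sense of why lag becomes visible above 1 m/min comes from the distance the scene travels between two consecutive displayed frames. The calculation below is a back-of-the-envelope sketch; it considers only the frame interval and ignores any additional processing or transmission delay, and the function name is hypothetical.

```python
def displacement_per_frame_mm(velocity_m_per_min, frames_per_second):
    """Distance the scene moves between consecutive displayed frames, in mm."""
    mm_per_second = velocity_m_per_min * 1000.0 / 60.0
    return mm_per_second / frames_per_second


shift_1_m_min = displacement_per_frame_mm(1.0, 6.0)   # about 2.8 mm per frame
shift_2_m_min = displacement_per_frame_mm(2.0, 6.0)   # about 5.6 mm per frame
shift_6_m_min = displacement_per_frame_mm(6.0, 6.0)   # about 16.7 mm per frame
```

At 6 fps, a relative velocity of 1 m/min corresponds to a shift of about 2.8 mm between frames, while 6 m/min corresponds to about 16.7 mm.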

Figure 7.

Experimental results that simulate the effect of the surgeon’s motion on the imaging lag of the surgical navigation system. (a): No motion exists between the navigation system and the surgical scene. (b)–(d): The navigation system moves with respect to the surgical scene at a speed of 1 m/min, 2 m/min, and 6 m/min, respectively.

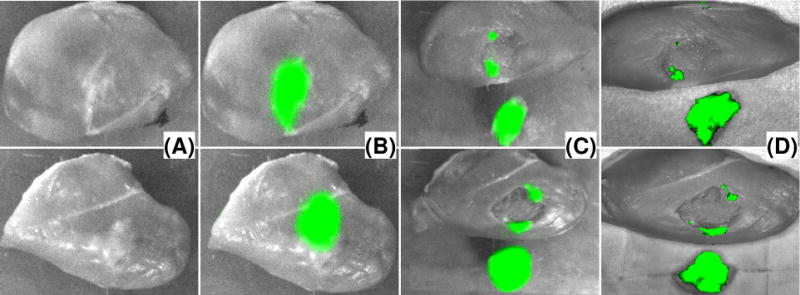

Image-guided tumor resection surgeries are carried out in the ex vivo tissue model using the wearable surgical navigation system in both the Google glass mode and the HMD mode. Before resection, the surgical scene is imaged by the stationary CCD camera with and without the fluorescence filter. The acquired surgical margin image is transformed, fused with the surgical scene image, and projected to the wearable headset unit for resection guidance. After resection, the surgical cavity is re-examined by the surgical navigation system to identify any residual lesion and guide the re-resection process. At the end of each experiment, the fused image of the resected tissue specimen and the surgical cavity is compared with that acquired by an IVIS Lumina III small animal imaging system (Perkin Elmer, Waltham, MA). Figure 8 shows the surgical scenes without fluorescence imaging (A), surgical margins with fluorescence image fusion (B), surgical cavity and resected tissue specimen images after resection (C), and IVIS images of the same tissue specimens (D). The images are acquired by the wearable surgical navigation system in the Google glass and the HMD modes, respectively. These experimental results suggest that our wearable surgical navigation system is able to detect the tumor margin with sensitivity and specificity comparable to a commercial fluorescence imaging system.

Figure 8.

Image-guided tumor resection surgery on an ex vivo tumor-simulating model. The top row shows images for Google glass guided surgery. The bottom row shows images for HMD guided surgery. (A) Photographic images of the surgical field without fluorescence filter. (B) Fluorescence images of the surgical margins before resection. (C) Fluorescence images of the surgical margins and the resected tissue samples after resection. (D) IVIS image of the resected tissue sample and the surgical cavity.

Discussion

We have developed a wearable surgical navigation system that combines the accuracy of a standard fluorescence imaging system and the mobility of a wearable goggle. The surgical navigation system can be operated in both a Google glass mode and a HMD mode. The performance characteristics of these two operation modes are compared in Table 1. According to the table, Google glass navigation has several advantages over HMD and is suitable for image-guided surgery in clinical settings.

Table 1.

Performance comparison between HMD guided surgical navigation and Google glass guided surgical navigation.

| Navigation mode | Headset | Clinical mobility | Interference to surgery | Accuracy | Speed |
|---|---|---|---|---|---|
| HMD surgical navigation | Heavy (>250 g), hard to wear | Poor, requires USB cable connection | Blocks regular vision, causes distraction and fatigue | Comparable to standard fluorescence imaging | Fast (~30 fps), direct image projection |
| Google glass surgical navigation | Light (~36 g), easy to wear | Good, wireless connection without cable | Minimal | Comparable to standard fluorescence imaging | Slow (6–10 fps), requires wireless data transport |

With the current hardware and software configurations, the Google glass surgical navigation system is able to achieve an overall imaging speed of up to 10 fps. Considering that the human visual system is able to process 10 to 12 separate images per second, this imaging speed is able to generate nearly smooth images of the surgical scene. In order to capture the field of view of the surgeon in real time, we also have to limit the surgeon’s locomotion velocity. According to the simulated experimental results, our surgical navigation system is able to track the surgical scene without significant lagging effect if the relative motion between the glass and the surgical scene is kept below 1 m/min. Further improvement in hardware design, communication protocol, and image processing algorithm is required in order to improve the intraoperative guidance in clinical surgery.

The wearable surgical navigation system described in this paper can be potentially used to improve the clinical outcome for cancer resection surgery. Possible applications include: (1) identifying otherwise missed tumor foci; (2) determining the resection margin status for both resected tissue specimens and within the surgical cavity; (3) guiding the re-resection of additional tumor tissues if positive surgical margins or additional tumor foci are identified; and (4) directing the sampling of suspicious tissues for histopathologic analysis. In addition to cancer surgery, the wearable navigation technique can be used in many other clinical procedures such as wound debridement, organ transplantation, and plastic surgery. The achievable imaging depth of the wearable navigation system is affected by multiple parameters such as tissue type, chromophore concentration, and working wavelength. In general, fluorescence imaging in the near infrared wavelength range may achieve deep penetration in biologic tissue of low absorption. Therefore, an ideal clinical application of the system includes surgical navigation tasks in superficial tissue, such as sentinel lymph node biopsy. The system is not suitable for surgical guidance in thick tissue or tissue of high absorbance, such as liver tumor resection. We have previously demonstrated that fluorescence emission of a simulated bile duct structure embedded 7 mm deep in a tissue simulating phantom can be imaged using ICG-loaded microballoons in combination with near infrared light (16). Further increase of the imaging depth for the wearable navigation system requires spatial guidance provided by other imaging modalities (23; 24).

Further engineering optimization and validation work is required for successful translation of the proposed navigation system from the benchtop to the bedside. For example, the navigation system needs to be integrated with a topographic imaging module so that 3D profiles of the surgical cavity can be obtained in real time for improved co-registration accuracy. In the current experimental setup, four fiducial markers are placed within the field of view of the wearable navigation system for appropriate image co-registration. For clinical implementation in the future, the fiducial markers will be placed on the patient and the surgeon, and a set of stereo cameras will be used to track the location of the surgical scene relative to the wearable goggle. Furthermore, our current surgical navigation software grabs only the images within the designated window for further processing and display tasks. Therefore, it is technically feasible to integrate the wearable surgical navigation system with other modalities, such as magnetic resonance imaging, positron emission tomography, computed tomography, and ultrasound, for intraoperative surgical guidance in a clinical setting.

Acknowledgments

This project was partially supported by the National Cancer Institute (R21CA15977) and the Fundamental Research Funds for the Central Universities. The authors are grateful to Ms. Chuangsheng Yin at University of Science and Technology of China for help with manuscript preparation and to Drs. Edward Martin, Stephen Povoski, Michael Tweedle, and Alper Yilmaz at The Ohio State University for their technical and clinical help and suggestions.

References

- 1.Weber WP, Engelberger S, Viehl CT, Zanetti-Dallenbach R, Kuster S, et al. Accuracy of frozen section analysis versus specimen radiography during breast-conserving surgery for nonpalpable lesions. World Journal of Surgery. 2008;32:2599–606. doi: 10.1007/s00268-008-9757-8. [DOI] [PubMed] [Google Scholar]

- 2.Waljee JF, Hu ES, Newman LA, Alderman AK. Predictors of re-excision among women undergoing breast-conserving surgery for cancer. Ann Surg Oncol. 2008;15:1297–303. doi: 10.1245/s10434-007-9777-x. [DOI] [PubMed] [Google Scholar]

- 3. Horst KC, Smitt MC, Goffinet DR, Carlson RW. Predictors of local recurrence after breast-conservation therapy. Clin Breast Cancer. 2005;5:425–38. doi: 10.3816/cbc.2005.n.001.

- 4. Holland R, Veling SH, Mravunac M, Hendriks JH. Histologic multifocality of Tis, T1–2 breast carcinomas. Implications for clinical trials of breast-conserving surgery. Cancer. 1985;56:979–90. doi: 10.1002/1097-0142(19850901)56:5<979::aid-cncr2820560502>3.0.co;2-n.

- 5. Kuroiwa T, Kajimoto Y, Ohta T. Development of a fluorescein operative microscope for use during malignant glioma surgery: a technical note and preliminary report. Surg Neurol. 1998;50:41–8. doi: 10.1016/s0090-3019(98)00055-x. discussion 8–9.

- 6. Haglund MM, Hochman DW, Spence AM, Berger MS. Enhanced optical imaging of rat gliomas and tumor margins. Neurosurgery. 1994;35:930–40. doi: 10.1227/00006123-199411000-00019. discussion 40–1.

- 7. De Grand AM, Frangioni JV. An operational near-infrared fluorescence imaging system prototype for large animal surgery. Technol Cancer Res Treat. 2003;2:553–62. doi: 10.1177/153303460300200607.

- 8. Soltesz EG, Kim S, Laurence RG, DeGrand AM, Parungo CP, et al. Intraoperative sentinel lymph node mapping of the lung using near-infrared fluorescent quantum dots. Ann Thorac Surg. 2005;79:269–77. doi: 10.1016/j.athoracsur.2004.06.055. discussion –77.

- 9. Tanaka E, Choi HS, Fujii H, Bawendi MG, Frangioni JV. Image-guided oncologic surgery using invisible light: completed pre-clinical development for sentinel lymph node mapping. Ann Surg Oncol. 2006;13:1671–81. doi: 10.1245/s10434-006-9194-6.

- 10. Kubota K, Kita J, Shimoda M, Rokkaku K, Kato M, et al. Intraoperative assessment of reconstructed vessels in living-donor liver transplantation, using a novel fluorescence imaging technique. J Hepatobiliary Pancreat Surg. 2006;13:100–4. doi: 10.1007/s00534-005-1014-z.

- 11. Mothes H, Donicke T, Friedel R, Simon M, Markgraf E, Bach O. Indocyanine-green fluorescence video angiography used clinically to evaluate tissue perfusion in microsurgery. J Trauma. 2004;57:1018–24. doi: 10.1097/01.ta.0000123041.47008.70.

- 12. Brem H, Stojadinovic O, Diegelmann RF, Entero H, Lee B, et al. Molecular markers in patients with chronic wounds to guide surgical debridement. Mol Med. 2007;13:30–9. doi: 10.2119/2006-00054.Brem.

- 13. Shah SA, Bachrach N, Spear SJ, Letbetter DS, Stone RA, et al. Cutaneous wound analysis using hyperspectral imaging. Biotechniques. 2003;34:408–13. doi: 10.2144/03342pf01.

- 14. Xu RX, Ewing J, El-Dahdah H, Wang B, Povoski SP. Design and benchtop validation of a handheld integrated dynamic breast imaging system for noninvasive characterization of suspicious breast lesions. Technol Cancer Res Treat. 2008;7:471–82. doi: 10.1177/153303460800700609.

- 15. Xu RX, Huang K, Qin R, Huang J, Xu JS, et al. Dual-mode imaging of cutaneous tissue oxygenation and vascular thermal reactivity. Journal of Visualized Experiments. 2010. doi: 10.3791/2095.

- 16. Mitra K, Melvin J, Chang S, Park K, Yilmaz A, et al. Indocyanine-green-loaded microballoons for biliary imaging in cholecystectomy. J Biomed Opt. 2012;17:116025. doi: 10.1117/1.JBO.17.11.116025.

- 17. Liu Y, Njuguna R, Matthews T, Akers WJ, Sudlow GP, et al. Near-infrared fluorescence goggle system with complementary metal-oxide-semiconductor imaging sensor and see-through display. J Biomed Opt. 2013;18:101303. doi: 10.1117/1.JBO.18.10.101303.

- 18. Martin JEW, Xu R, Sun D, Povoski SP, Heremans JP, et al. Fluorescence Detection System. 09/30763 US Patent. 2009.

- 19. Kurose JF, Ross KW. Computer Networking: A Top-Down Approach. Pearson Education, Inc; 2007.

- 20. Mednieks Z, Dornin L, Meike GB, Nakamura M. Programming Android. O’Reilly Media; 2011.

- 21. Desmettre T, Devoisselle JM, Mordon S. Fluorescence properties and metabolic features of indocyanine green (ICG) as related to angiography. Surv Ophthalmol. 2000;45:15–27. doi: 10.1016/s0039-6257(00)00123-5.

- 22. Liu P, Zhang S, Xu RX. 3D topography of biologic tissue by multiview imaging and structured light illumination. Proceedings of SPIE. 2014;8935-0H:1–8.

- 23. Zhu Q, Kurtzman SH, Hegde P, Tannenbaum S, Kane M, et al. Utilizing optical tomography with ultrasound localization to image heterogeneous hemoglobin distribution in large breast cancers. Neoplasia. 2005;7:263–70. doi: 10.1593/neo.04526.

- 24. Ntziachristos V, Yodh AG, Schnall MD, Chance B. MRI-guided diffuse optical spectroscopy of malignant and benign breast lesions. Neoplasia. 2002;4:347–54. doi: 10.1038/sj.neo.7900244.