Abstract

To investigate the effect of preprocessing techniques, including contrast enhancement and illumination correction, on retinal image quality, a comparative study was carried out. We studied and implemented several illumination correction and contrast enhancement techniques on color retinal images to find the best technique for optimum image enhancement. To compare and choose the best illumination correction technique, we analyzed the corrected red and green components of color retinal images statistically and visually. The two contrast enhancement techniques were analyzed using a vessel segmentation algorithm by calculating its sensitivity and specificity. The statistical evaluation of the illumination correction techniques was carried out by calculating coefficients of variation. The dividing method using a median filter to estimate background illumination showed the lowest coefficients of variation in the red component. After the dividing method, the quotient and homomorphic filtering methods gave good results based on their low coefficients of variation. Contrast limited adaptive histogram equalization (CLAHE) increased the sensitivity of the vessel segmentation algorithm by up to 5% at the same level of accuracy, and it achieved higher sensitivity than the polynomial transformation operator as a contrast enhancement technique for vessel segmentation. Three techniques, the dividing method using a median filter to estimate the background, the quotient-based method, and homomorphic filtering, were found to be effective illumination correction techniques based on a statistical evaluation. Applying a local contrast enhancement technique such as CLAHE to fundus images showed good potential for enhancing vasculature segmentation.

Keywords: Computer assisted, diabetic retinopathy, diagnostic imaging, illumination correction, image analysis, image processing, retinal image, vessel segmentation

INTRODUCTION

Digital retinal images are used to diagnose many retinal diseases, such as diabetic retinopathy (microaneurysms, hemorrhages, ischemic changes), age-related macular degeneration (macular edema, cystoid macular edema), atherosclerosis, and glaucoma.[1] These diseases are among the significant causes of blindness among adults worldwide. Therefore, early diagnosis and detection of these diseases are indispensable, and computer-aided tools for this purpose can be very helpful.[2]

Good retinal image quality is essential for accurate detection and diagnosis, whether manual or automatic. However, studies show that approximately 12% of retinal images cannot be analyzed clinically because of inadequate quality.[3,4] Nonuniform illumination and poor contrast caused by the anatomy of the eye fundus (its three-dimensional concave shape), opaque media, the wide-angle optics of fundus cameras, insufficient pupil size, the geometry of the sensor array, and eye movement are major causes of low quality in retinal images.[4] Therefore, improving image quality can play an essential role in retinal image analysis.[5]

Image processing, image analysis, and computer vision techniques are growing steadily in all fields of medical imaging, especially in modern ophthalmology.[6] Preprocessing stages are applied to increase retinal image quality. The main purpose of preprocessing is to improve the diagnostic usefulness of fundus images, both for visual assessment and for computer-aided segmentation. One criterion for retinal image quality is whether the dark blood vessels can be easily distinguished from the background.[7] In two different studies, blood vessel detection and the length of visible small vessels around the fovea were used to assess image quality.[7,8]

Various techniques have been used to preprocess retinal images. In recent studies, histogram specification was used as the first preprocessing step in retinal image segmentation.[9,10,11] Additionally, contrast limited adaptive histogram equalization (CLAHE) was applied to retinal images to enhance local contrast.[10,12,13] A recent study used CLAHE to improve contrast and correct the nonuniform background in order to extract the boundary of the optic disk.[14] In another study, a modified quotient-based approach and a nonuniform illumination model were applied to correct nonuniform illumination in fundus fluorescein angiograms.[15] The optic disk has also been segmented in retinal images by means of color mathematical morphology and active contours after an image enhancement step, and color remapping has been performed after single-channel enhancement as a preprocessing technique.[16] One study compared several contrast enhancement and illumination equalization techniques for vascular segmentation in retinal images, evaluating their performance by the area under the receiver operating characteristic curve. Adaptive histogram equalization was found to be the most effective technique and improved vessel segmentation in the retina.[17]

Considering the importance of the preprocessing stage in retinal image processing, we carried out a comparative study of several illumination correction techniques, including dividing techniques, homomorphic filtering, and a quotient-based method. We also applied CLAHE and a polynomial transformation operator for contrast enhancement of retinal images.[3,10,13,15,18,19,20] We assessed the techniques quantitatively and compared them to choose the most suitable preprocessing techniques for further work.

MATERIALS AND METHODS

Image Data

We implemented the preprocessing techniques on two databases. 1. The clinical database consisted of twenty digital retinal images obtained with a Topcon TRC-50 IX Mydriatic Retinal Camera at the university eye clinics in Tabriz, Iran. The images were 500 × 752 pixels with 24-bit color and were stored as TIFF files. 2. The publicly available DRIVE database consists of 40 images captured with a Canon CR5 camera at a 45° FOV and digitized at 24 bits with a spatial resolution of 565 × 584 pixels. Manual vessel segmentations are available for this database.[21]

Preprocessing Techniques

Several techniques have been used in different studies to correct uneven illumination and improve contrast. We used common techniques introduced in papers on the preprocessing of retinal images; they are explained in detail below.

Noise reduction technique

Noise in all images was reduced with an adaptive noise-removal filter before the illumination correction and contrast enhancement techniques were applied. This filter operates on the local mean and variance around each pixel.[19] The filtered value of each pixel of the image g(x, y) is given by:

$$\hat{f}(x, y) = g(x, y) - \frac{\upsilon^{2}}{\sigma^{2}}\left[g(x, y) - \mu\right] \qquad (1)$$

where μ and σ² are the local mean and variance around each pixel, respectively:
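
$$\mu = \frac{1}{NM}\sum_{(s,t)\in S_{ab}} g(s, t) \qquad (2)$$

$$\sigma^{2} = \frac{1}{NM}\sum_{(s,t)\in S_{ab}} g^{2}(s, t) - \mu^{2} \qquad (3)$$
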
and υ², the noise variance in g(x, y), is estimated by averaging all the local variance estimates in the image. Sab is the N-by-M local neighborhood of each pixel. We chose a 3 × 3 window for Sab, since this window size has been found to give good results for noise reduction;[22,23] larger windows blur the image and reduce detail.
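The filter described above can be sketched in NumPy as follows. This is a minimal illustration, not the authors' MATLAB code: it assumes the usual pixelwise adaptive Wiener form, filtered value = g − (υ²/σ²)(g − μ), with the ratio capped at 1 where the local variance falls below the noise estimate, and it assumes reflected image borders, which the text does not specify. The function names `local_mean_var` and `adaptive_noise_filter` are hypothetical.

```python
import numpy as np


def local_mean_var(g, size=3):
    """Local mean and variance over a size x size window; borders are reflected (assumption)."""
    pad = size // 2
    padded = np.pad(g, pad, mode="reflect")
    windows = np.lib.stride_tricks.sliding_window_view(padded, (size, size))
    mu = windows.mean(axis=(-2, -1))
    sigma2 = (windows ** 2).mean(axis=(-2, -1)) - mu ** 2
    return mu, sigma2


def adaptive_noise_filter(g, size=3):
    """Pixelwise adaptive filter: f = g - (noise_var / local_var) * (g - local_mean)."""
    g = np.asarray(g, dtype=float)
    mu, sigma2 = local_mean_var(g, size)
    noise_var = sigma2.mean()  # upsilon^2: average of all local variance estimates
    # Cap the ratio at 1: where local variance < noise variance, the pixel becomes the local mean.
    # The tiny floor avoids 0/0 on perfectly flat images.
    denom = np.maximum(np.maximum(sigma2, noise_var), 1e-12)
    return g - (noise_var / denom) * (g - mu)
```

MATLAB's `wiener2` follows the same local-mean/local-variance scheme and, when no noise power is supplied, also estimates it as the mean of the local variances, so it is a plausible (but unconfirmed) counterpart of the filter used here.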

Illumination correction

The following illumination correction techniques were used in this study:

Dividing methods

An image f(x, y) is modeled as the product of two components, the illumination i(x, y) and the reflectance r(x, y):[19]

$$f(x, y) = i(x, y)\, r(x, y) \qquad (4)$$

The nature of i(x, y) is determined by the illumination source and is generally characterized by gradual, nonuniform spatial variation, whereas the reflectance component r(x, y) is determined by the characteristics of the imaged objects and varies abruptly at the edges of anatomical features. In the dividing method, the original image is divided by an estimate of the illumination pattern as follows:

$$r(x, y) = \frac{f(x, y)}{i(x, y)} \qquad (5)$$

To estimate the illumination pattern i(x, y), the following methods were used.

In the first method, each component of the RGB image f(x, y) was filtered with a 25 × 25 median filter, a window larger than the largest features present in the image, to estimate the illumination pattern.[24,25,26,27]
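A minimal sketch of this dividing method, applied to one color component at a time, is shown below. It assumes the channel is a 2-D array, reflects the borders before filtering (an assumption), and adds a small `eps` to avoid division by zero; `median_filter` and `divide_by_median_background` are hypothetical names. The median is computed row by row so that a 25 × 25 window does not require a full copy of every neighborhood in memory.

```python
import numpy as np


def median_filter(img, size=25):
    """size x size median filter with reflected borders, computed one row at a time."""
    pad = size // 2
    padded = np.pad(np.asarray(img, dtype=float), pad, mode="reflect")
    windows = np.lib.stride_tricks.sliding_window_view(padded, (size, size))
    out = np.empty(windows.shape[:2])
    for row in range(windows.shape[0]):  # row-wise to keep memory use small
        out[row] = np.median(windows[row], axis=(-2, -1))
    return out


def divide_by_median_background(channel, size=25, eps=1e-6):
    """Estimate i(x, y) with a large median filter, then return f(x, y) / i(x, y)."""
    f = np.asarray(channel, dtype=float)
    illumination = median_filter(f, size)
    return f / (illumination + eps)
```

Because thin structures such as vessels occupy only a small fraction of each 25 × 25 window, the median follows the slowly varying background, and dividing by it leaves vessels as values below 1. In practice, `scipy.ndimage.median_filter(channel, size=25)` gives the same kind of background estimate much faster; the NumPy-only version keeps the sketch dependency-free.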

In the second method, the illumination of the fundus camera itself was modeled. In a preliminary study, an image of a sheet of white paper captured with the fundus camera showed a bright center and a dim periphery, so we modeled the camera illumination with a two-dimensional Gaussian distribution in the vertical and horizontal directions. To obtain the illumination function of our camera, intensity profiles were computed along the vertical and horizontal directions of the image, and the parameters of the Gaussian model were obtained by fitting them to the Gaussian function of Eq. (6):
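
$$G(x, y) = A \exp\left[-\left(\frac{(x - x_0)^2}{2\sigma_x^2} + \frac{(y - y_0)^2}{2\sigma_y^2}\right)\right] \qquad (6)$$
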
In this equation, x0 and y0 are the coordinates of the peak of the Gaussian distribution, A is the amplitude (adjusted intensity of the image), and σx and σy are the standard deviations in x and y; we estimated σx = σy = 0.8R, where R is the row size of the image. Because the illumination of retinal images follows a Gaussian pattern, we set A = 1 in Eq. (6) in order to keep the average intensity of the reflectance images constant. The resulting function G(x, y) was used as the illumination function in Eq. (5).
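The Gaussian model can be illustrated with two small functions: one fits a 1-D Gaussian to an intensity profile (for example, the middle row or column of the white-paper image) by fitting a parabola to the logarithm of the profile, and one evaluates G(x, y) on the image grid with A = 1. The log-parabola fit is a substitute chosen for brevity, since the text does not state which fitting procedure was used, and the function names are hypothetical.

```python
import numpy as np


def fit_gaussian_profile(profile):
    """Fit a 1-D Gaussian to a positive, peaked profile via a parabola fit to its logarithm."""
    x = np.arange(len(profile), dtype=float)
    # log(A exp(-(x - x0)^2 / (2 s^2))) = c2 x^2 + c1 x + c0 with c2 = -1 / (2 s^2)
    c2, c1, _ = np.polyfit(x, np.log(profile), 2)
    sigma = np.sqrt(-1.0 / (2.0 * c2))  # requires c2 < 0, i.e. a peaked profile
    x0 = -c1 / (2.0 * c2)
    return x0, sigma


def gaussian_illumination(shape, x0, y0, sigma_x, sigma_y, amplitude=1.0):
    """G(x, y) = A exp(-[(x - x0)^2 / (2 sx^2) + (y - y0)^2 / (2 sy^2)]) on a rows x cols grid."""
    rows, cols = shape
    y, x = np.mgrid[0:rows, 0:cols].astype(float)
    return amplitude * np.exp(-((x - x0) ** 2 / (2 * sigma_x ** 2)
                                + (y - y0) ** 2 / (2 * sigma_y ** 2)))
```

With x0, y0, σx and σy in hand (the paper itself fixes σx = σy = 0.8R), the corrected component is the channel divided element-wise by G, as in the dividing method.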

In the third method, we used the mathematical model of the camera function for illumination correction introduced by Cree et al.[28] to estimate the background function as the illumination pattern. For this purpose, 50 evenly distributed control points (excluding the fovea, the central macular region, and any other parts of the retinal image containing features or pathologies) were chosen on the red component of three selected retinal images of left eyes. A fifth-degree two-dimensional polynomial model was chosen to provide a good fit to the control points. The background intensity was calculated at these points, and the model was constrained to fit each point. This leads to a system of 50 linear equations that must be solved for the coefficients ai in Eq. (7); the system can be solved with the singular value decomposition technique. The fifth-degree two-dimensional polynomial is given by:

$$P(x, y) = a_0 + a_1 x + a_2 y + a_3 x^2 + a_4 x y + a_5 y^2 + \cdots + a_{20} y^5 \qquad (7)$$

The illumination pattern of each eye was calculated by averaging the solved polynomials of the three selected sample images, for both right and left eyes.
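The control-point fit can be sketched as an ordinary least-squares problem: each of the 50 control points contributes one linear equation in the 21 coefficients of a full fifth-degree polynomial in x and y, and `np.linalg.lstsq` solves the overdetermined system through a singular value decomposition. Coordinates are scaled to [0, 1) before forming the monomials; this is an assumption added here, because raw pixel coordinates raised to the fifth power make the system badly conditioned. The function names and the ordering of the monomials are hypothetical.

```python
import numpy as np


def poly_terms(x, y, degree=5):
    """All monomials x^m y^n with m + n <= degree, stacked on the last axis (21 for degree 5)."""
    cols = [x ** m * y ** n for m in range(degree + 1) for n in range(degree + 1 - m)]
    return np.stack(cols, axis=-1)


def fit_background_polynomial(xs, ys, values, shape, degree=5):
    """Least-squares coefficients from control points (x, y, background intensity)."""
    rows, cols = shape
    A = poly_terms(np.asarray(xs, float) / cols, np.asarray(ys, float) / rows, degree)
    coeffs, *_ = np.linalg.lstsq(A, np.asarray(values, float), rcond=None)  # SVD-based solver
    return coeffs


def evaluate_polynomial(coeffs, shape, degree=5):
    """Evaluate the fitted background polynomial on the full rows x cols image grid."""
    rows, cols = shape
    y, x = np.mgrid[0:rows, 0:cols].astype(float)
    return poly_terms(x / cols, y / rows, degree) @ coeffs
```

Averaging the coefficient vectors obtained from the three sample images and evaluating the average with `evaluate_polynomial` gives one illumination pattern per eye.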

The two illumination patterns described above, one obtained from the camera illumination function (the Gaussian model) and one from the intensity of the patient's retinal features (the polynomial model), were also combined to correct the nonuniform illumination of retinal images. The aim was an illumination function that accounts for both sources: the illumination of the camera and the surface of the retina. Therefore, the Gaussian function of the camera illumination was multiplied by the polynomial function to estimate the illumination pattern of left and right retinal images. The resulting equations represent the estimated illumination from both the camera function and the retinal surface.

Homomorphic filtering

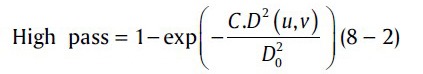

The illumination and reflectance components are associated with the low and high frequencies, respectively, of the Fourier transform of the logarithm of the image.[19] In color retinal images, the illumination component varies slowly in space, whereas the reflectance component varies abruptly because of vessels and other features. The homomorphic filter in the frequency domain, with its cutoff locus at distance D0, is given by:[19]
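
$$H(u, v) = (\gamma_H - \gamma_L)\left[1 - \exp\left(-C\,\frac{D^2(u, v)}{D_0^2}\right)\right] + \gamma_L \qquad (8)$$
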
In this equation, C controls the sharpness of the slope of the filter function as it transitions between γL and γH, and D(u, v) is the distance from a point (u, v) to the center of the Fourier transform, given by:

$$D(u, v) = \left[(u - M/2)^2 + (v - N/2)^2\right]^{1/2} \qquad (9)$$

where (M/2, N/2) is the center of the frequency coordinates.

To correct the uneven illumination, the RGB retinal images were converted to hue-saturation-intensity (HSI) space and the homomorphic filter was applied to the intensity component. To obtain the optimum parameters of Eq. (8), the simple low-pass form of the filter, Eq. (8–1), was first applied to the image to obtain its illumination component, and the optimum values of C and D0 were chosen.

$$H_{LP}(u, v) = \exp\left(-C\,\frac{D^2(u, v)}{D_0^2}\right) \qquad \text{(8–1)}$$

The corresponding high-pass filter was then obtained by subtracting this low-pass filter from one, which is equivalent to Eq. (8) with γL and γH set to 0 and 1, respectively.

We experimentally determined the parameters of the equation to be C = 0.1 and D0² = 150. An important advantage of the HSI color model is that it decouples intensity and color information.[19] The corrected HSI image was converted back to RGB space.
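The homomorphic filter can be sketched with NumPy's FFT routines: take the logarithm of the intensity component, shift the spectrum so the zero frequency sits at the center, multiply by H(u, v) = (γH − γL)[1 − exp(−C D²/D0²)] + γL, invert, and exponentiate. The defaults follow the values reported above (C = 0.1, D0² = 150, γL = 0, γH = 1). The RGB-to-HSI conversion is not shown, and the small offset `eps` added before the logarithm is an assumption; the function name is hypothetical.

```python
import numpy as np


def homomorphic_filter(intensity, c=0.1, d0_sq=150.0, gamma_l=0.0, gamma_h=1.0, eps=1e-6):
    """Homomorphic filtering of a 2-D intensity image with a Gaussian-shaped high-emphasis filter."""
    rows, cols = intensity.shape
    log_img = np.log(np.asarray(intensity, dtype=float) + eps)
    spectrum = np.fft.fftshift(np.fft.fft2(log_img))  # zero frequency moved to the center
    # Squared distance from the spectrum center; fftshift puts zero frequency at (rows//2, cols//2).
    u = np.arange(rows)[:, None] - rows // 2
    v = np.arange(cols)[None, :] - cols // 2
    d_sq = u ** 2 + v ** 2
    h = (gamma_h - gamma_l) * (1.0 - np.exp(-c * d_sq / d0_sq)) + gamma_l
    filtered = np.fft.ifft2(np.fft.ifftshift(h * spectrum)).real
    return np.exp(filtered)
```

For even image sizes the center (M // 2, N // 2) used here coincides with (M/2, N/2). Because γL = 0 removes the zero-frequency term, the output has a geometric mean of 1 and usually needs rescaling to the original intensity range before conversion back to RGB.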

Quotient method

A modified quotient-based approach[15] was used to model the nonuniform illumination as a multiplicative degradation function, described by Eq. (10).

where I(x, y) is the input image, I0(x, y) is the corrected image, l0 is the ideal illumination value, and Is(x, y) is a smoothed image produced from the original image using a median filter with a large window.[15]

We applied this function to the intensity component of HSI space, and the modified HSI image was converted to RGB space. To obtain Is(x, y), the intensity component was smoothed by a median filter with a 25 × 25 window. This size was chosen based on previous work[24,25,26,27] and our experience with different window sizes. The ideal illumination l0 was set to the mean value of the intensity component.
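A sketch of the quotient correction on the intensity component follows. The code assumes that Eq. (10) has the multiplicative form I = I0 · Is / l0, which gives I0 = l0 · I / Is; this form should be checked against reference [15] before use. The 25 × 25 median filter and l0 = mean intensity follow the description above, while the function names, the reflected borders and `eps` are assumptions.

```python
import numpy as np


def median_filter(img, size=25):
    """size x size median filter with reflected borders, computed one row at a time."""
    pad = size // 2
    padded = np.pad(np.asarray(img, dtype=float), pad, mode="reflect")
    windows = np.lib.stride_tricks.sliding_window_view(padded, (size, size))
    out = np.empty(windows.shape[:2])
    for row in range(windows.shape[0]):
        out[row] = np.median(windows[row], axis=(-2, -1))
    return out


def quotient_correction(intensity, size=25, eps=1e-6):
    """Assumed quotient model: I0 = l0 * I / Is, with Is a median-smoothed copy of I."""
    I = np.asarray(intensity, dtype=float)
    smoothed = median_filter(I, size)  # Is(x, y): background estimate
    l0 = I.mean()                      # ideal illumination: mean of the intensity component
    return l0 * I / (smoothed + eps)
```

Compared with plain division by the background, multiplying by l0 keeps the corrected intensity on roughly the original scale, which simplifies the conversion back to RGB.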

Techniques for contrast enhancement

Vision science defines contrast as the perceived brightness or color variation within an image.[29] This study investigated two contrast enhancement techniques, as follows:

CLAHE is a histogram processing technique that operates on small regions of the image rather than on the entire image.[19] It enhances image contrast significantly better than regular histogram equalization.[17,30] We applied CLAHE with 8 × 8 neighborhood windowing and a histogram clipping limit of 0.8%.
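The step that distinguishes CLAHE from ordinary histogram equalization, clipping the histogram and redistributing the excess, can be sketched for a single 8-bit tile as follows. This is only the per-tile mapping: a full CLAHE implementation computes one such mapping for each tile (8 × 8 tiles here) and bilinearly interpolates between the mappings of neighboring tiles. The clip limit is expressed as a fraction of the tile's pixel count, which is an assumption; implementations normalize the clip limit differently, so a value such as 0.8% does not transfer directly between them. The function name is hypothetical.

```python
import numpy as np


def clipped_equalization_lut(tile, clip_fraction=0.01, n_bins=256):
    """Contrast-limited equalization lookup table for one uint8 tile.

    The histogram is clipped at clip_fraction * (pixels in tile), the clipped excess is
    spread evenly over all bins, and the normalized cumulative histogram is the mapping.
    """
    hist = np.bincount(tile.ravel(), minlength=n_bins).astype(float)
    limit = max(clip_fraction * tile.size, 1.0)
    excess = np.sum(np.maximum(hist - limit, 0.0))
    hist = np.minimum(hist, limit) + excess / n_bins  # single redistribution pass
    cdf = np.cumsum(hist)
    return np.round((n_bins - 1) * cdf / cdf[-1]).astype(np.uint8)
```

A tile is remapped with `lut[tile]`. For whole images, library implementations such as `skimage.exposure.equalize_adapthist` or OpenCV's `cv2.createCLAHE(clipLimit=..., tileGridSize=(8, 8))` handle the tiling and interpolation. This simplified version redistributes the excess once, so a few bins can end up slightly above the limit.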

Walter et al.[18] defined a polynomial gray-level transformation operator for global polynomial contrast enhancement and applied it locally to the green component of retinal images, as given in Eq. (11):
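
$$T(t) = \begin{cases} \dfrac{1}{2}\,\dfrac{u_{\max} - u_{\min}}{\left(\mu_W(x) - t_{\min}\right)^{r}}\,\left(t - t_{\min}\right)^{r} + u_{\min}, & t \le \mu_W(x) \\[2ex] -\dfrac{1}{2}\,\dfrac{u_{\max} - u_{\min}}{\left(\mu_W(x) - t_{\max}\right)^{r}}\,\left(t - t_{\max}\right)^{r} + u_{\max}, & t > \mu_W(x) \end{cases} \qquad (11)$$
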
where (tmax, tmin) and (umax, umin) are the maximum and minimum intensities of the input and output images, respectively, t = f(x) is the intensity of the input image at pixel x, μW(x) is the local mean of f within a window W centered at pixel x, and the parameter r determines the degree of contrast enhancement. The window size was chosen to be 25 × 25 pixels and r was set to 2, following the previous study.[18] In two other studies, this local contrast enhancement method was applied to the intensity channel of retinal images.[31,32] Both contrast enhancement techniques were applied to all images, on both the green and intensity components.
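A sketch of the local polynomial operator is given below in an equivalent ratio form: below the local mean, an intensity t is mapped to u_min + 0.5(u_max − u_min)((t − t_min)/(μW − t_min))^r, and above it to u_max − 0.5(u_max − u_min)((t_max − t)/(t_max − μW))^r, so a pixel equal to its local mean goes to the middle of the output range. This follows the operator as commonly stated for Walter et al.[18] but should be checked against that reference; the box-filter local mean (computed with cumulative sums), reflected borders, default output range [0, 255] and function names are assumptions.

```python
import numpy as np


def box_mean(img, size=25):
    """Mean over a size x size window (odd size) via an integral image; borders reflected."""
    pad = size // 2
    p = np.pad(img, pad, mode="reflect")
    c = np.pad(p.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))  # c[a, b] = sum of p[:a, :b]
    s = c[size:, size:] - c[:-size, size:] - c[size:, :-size] + c[:-size, :-size]
    return s / size ** 2


def walter_operator(f, size=25, r=2, u_min=0.0, u_max=255.0, eps=1e-9):
    """Local polynomial contrast stretch around the windowed mean mu_W(x)."""
    f = np.asarray(f, dtype=float)
    t_min, t_max = f.min(), f.max()
    mu = box_mean(f, size)
    half = 0.5 * (u_max - u_min)
    out = np.empty_like(f)
    low = f <= mu
    high = ~low
    # Below the local mean: stretch from t_min (-> u_min) up to the output midpoint.
    out[low] = u_min + half * ((f[low] - t_min) / (mu[low] - t_min + eps)) ** r
    # Above the local mean: stretch from the midpoint up to t_max (-> u_max).
    out[high] = u_max - half * ((t_max - f[high]) / (t_max - mu[high] + eps)) ** r
    return out
```

With r = 2 the mapping is flat near the extremes and steep near the local mean, which is what raises the local contrast of vessels relative to their surroundings.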

Statistical and Visual Evaluation

The studied techniques were implemented in MATLAB R2010a software. The performance of illumination correction techniques on the clinical database was evaluated by statistical analysis and subjective visual assessment.

To compare the illumination correction techniques statistically and determine which one is most effective at producing uniformly illuminated images, statistical evaluations were carried out on the red and green components. The blue component was excluded because it carries little useful information in retinal images.[33]

Based on our previous study, six regions were used as an appropriate number for evaluating illumination uniformity.[34] For this evaluation, six cells around the fovea, excluding vessels, were selected on each image. The cell size was chosen to be at least twice the expected maximum diameter of retinal vessels,[35] and the optimum cell size of 31 × 31 pixels was chosen to include the variations in illumination.[34]

The standard deviation in each cell was calculated and divided by the mean intensity of that cell to compensate for intensity differences between cells; that is, the coefficient of variation (CV) was calculated for each cell. The mean of the CVs in each image indicates the variation of illumination:[36] a small mean CV indicates low illumination variation in the image, and vice versa.
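The uniformity measure reduces to a few lines: for each 31 × 31 cell, divide the standard deviation by the mean, then average the six CVs. The cell centers must be chosen beforehand (around the fovea, avoiding vessels), so here they are passed in as a list of hypothetical (row, column) pairs. The population standard deviation (NumPy's default, `ddof=0`) is an assumption, since the text does not say which estimator was used, and the function name is hypothetical.

```python
import numpy as np


def mean_cell_cv(channel, centers, cell=31):
    """Mean coefficient of variation (std / mean) over square cells centered at `centers`."""
    half = cell // 2
    img = np.asarray(channel, dtype=float)
    cvs = []
    for row, col in centers:
        if not (half <= row < img.shape[0] - half and half <= col < img.shape[1] - half):
            raise ValueError("cell extends past the image border")
        patch = img[row - half:row + half + 1, col - half:col + half + 1]
        cvs.append(patch.std() / patch.mean())
    return float(np.mean(cvs))
```

Dividing by the cell mean is what makes CVs comparable between a bright red component and a darker green one, which matters when the two are compared directly.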

To choose the best contrast enhancement technique, we applied a vessel segmentation algorithm[36] to the retinal images before and after contrast enhancement. The green component of RGB space was used for vessel segmentation.[21]

The accuracy, sensitivity, and specificity of the automated vessel segmentation with respect to the available manual vessel segmentation[21] were computed on a pixel-by-pixel basis. The detection accuracy was defined as the ratio of the total number of correctly classified pixels to the number of pixels inside the FOV.[36]
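The pixelwise evaluation can be sketched as follows, assuming binary arrays for the automated result, the manual segmentation and the FOV mask. Sensitivity is TP / (TP + FN), specificity is TN / (TN + FP), and accuracy is the fraction of FOV pixels classified correctly, as defined above; the function name and dictionary keys are hypothetical.

```python
import numpy as np


def segmentation_scores(predicted, manual, fov):
    """Pixelwise sensitivity, specificity and accuracy restricted to the FOV mask."""
    mask = np.asarray(fov, dtype=bool)
    pred = np.asarray(predicted, dtype=bool)[mask]
    truth = np.asarray(manual, dtype=bool)[mask]
    tp = np.sum(pred & truth)    # vessel pixels found
    tn = np.sum(~pred & ~truth)  # background pixels correctly rejected
    fp = np.sum(pred & ~truth)
    fn = np.sum(~pred & truth)
    return {
        "sensitivity": float(tp / (tp + fn)),
        "specificity": float(tn / (tn + fp)),
        "accuracy": float((tp + tn) / truth.size),
    }
```

Because background pixels far outnumber vessel pixels, accuracy stays high even when many vessels are missed, which is why sensitivity is the more informative figure when comparing enhancement methods.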

In addition, the performance of the techniques was evaluated visually, based on the clarity of features and the diagnosis of abnormalities in diabetic retinopathy images. For this purpose, the twenty color images from the clinical database (the first database) were corrected with the preprocessing techniques, and the corrected and original images were shown to three expert ophthalmologists, who assessed them and filled in an assessment form. They were asked to assess the images for visual appearance, vessels and details, exudates, and diagnosis, and to grade each corrected and original image as weak, medium, or excellent, scoring it from 1 to 3. The average scores of the techniques were calculated and categorized as weak (mean score 1–1.4), medium (mean score 1.5–2.4), or good (mean score 2.5–3).

RESULTS

The results of applying the preprocessing techniques to a sample retinal image are shown in Figure 1.

Figure 1.

A sample retinal image processed with the preprocessing techniques. (a) Original, (b) M1R*, (c) M1G, (d) M2, (e) M3, (f) M4, (g) M5 on intensity component of HSI, (h) M6, (i) M7 on intensity component of HSI, (j) M8. *M1R – Divided by smoothed red component, M1G – Divided by smoothed green component, M2 – Divided by Gaussian function, M3 – Divided by fifth-degree two-dimensional polynomial, M4 – Divided by Gaussian function and fifth-degree two-dimensional polynomial, M5 – Homomorphic filtering, M6 – Quotient-based method, M7 – Contrast limited adaptive histogram equalization, M8 – Contrast enhancement by polynomial transformation operator

The mean CVs of the illumination correction techniques on the red and green components are shown in Figure 2. Three illumination correction methods, the dividing method using the median filter, homomorphic filtering, and the quotient-based method, had lower mean CVs than the other three techniques for each component. Furthermore, all mean CVs for the red component were smaller than those for the green component.

Figure 2.

Box plots of the mean coefficient of variation: (a) on the red component, (b) on the green component

The results of visual assessment by ophthalmologists are summarized in Table 1.

Table 1.

The results of the visual assessment of preprocessed color images by the ophthalmologists. * levels of classification

According to the visual assessment, the images corrected by the dividing method using the Gaussian function to estimate the illumination pattern of the camera light, as well as those using the fifth-degree two-dimensional polynomial to estimate the background illumination, were placed in the excellent category. The images corrected by the homomorphic and quotient-based methods, and by division by the median-smoothed image, were categorized as medium. However, the images corrected by the contrast enhancement techniques were classified in the weak category [Table 1].

The contrast enhancement techniques were also analyzed by calculating the sensitivity, specificity, and accuracy of the vessel segmentation algorithm on retinal images of the DRIVE database. The vessel segmentation results for a sample image before and after contrast enhancement with the above methods are shown in Figure 3. Table 2 gives the mean sensitivity, specificity, and accuracy of the vessel segmentation before and after contrast enhancement on the DRIVE images. Applying CLAHE to the green component of RGB space and to the I component of HSI space gave higher sensitivity than the original images and the images enhanced by the polynomial method [Table 2].

Figure 3.

(a) The green component of the original image. (b) Manual segmentation (gold standard). Vessel segmentation results: (c) on the original image, (d) after applying CLAHE to the intensity component, (e) after applying CLAHE to the green component, and (f) after applying the technique suggested by Walter et al. to the green component.

Table 2.

Mean value of sensitivity, specificity, and accuracy of the vessel segmentation using the contrast enhancement techniques on retinal images of DRIVE database

DISCUSSION

This work investigated and compared eight preprocessing techniques aimed at correcting the uneven illumination and low contrast that appear in retinal images. Based on the statistical evaluation, the dividing method using a median filter on the red component for background estimation corrected illumination well, giving lower CVs than the original images [Figure 2]. The higher mean CV of the green component compared with the red component could be due to the higher mean intensity of the red component of retinal images, since the CV is the standard deviation divided by the mean intensity. Figure 2 also shows that background estimation with the third method, M3, did not produce a significant difference in the CVs of the corrected images compared with the original images. This might be due to differences in the backgrounds of retinal images caused by variations in anatomical features and retinal pigmentation among individuals. Therefore, as presented in the study of Cree et al.,[28] individual background estimation for each retinal image is suggested.

According to our results, three techniques, the dividing method using a median filter to estimate the background (M1), the quotient-based method (M6), and homomorphic filtering (M5), were the most effective correction techniques among those we investigated. They reduced part of the variation in background illumination, as indicated by their low mean CVs [Figure 2]. However, the resulting images received lower grades in the subjective visual evaluation by ophthalmologists, because some features, such as the macula, were eliminated in the images corrected by these methods. Therefore, these methods are not recommended for extracting retinal structures based on texture features.

The visual assessment of the corrected color images [Table 1] showed that background estimation based on the Gaussian distribution (M2) and on the fifth-degree two-dimensional polynomial (M3) has good potential compared with the other techniques. Our expert clinicians preferred these methods because they did not change the usual appearance of the images.

Although the visual assessment showed that the contrast enhancement methods performed poorly in producing a suitable appearance of retinal images, they could increase the sensitivity of vessel extraction by enhancing the vessels. Using CLAHE increased the sensitivity of the vasculature segmentation algorithm by up to 5% with the same level of accuracy [Table 2].

The results of this study are in good agreement with the study of Youssif et al.,[17] who found that local contrast enhancement based on adaptive histogram equalization noticeably improved vascular segmentation. However, it is worth mentioning that this group used different preprocessing techniques. Thus, local histogram equalization could be an efficient technique for enhancing contrast.

In the study of Salvatelli et al.,[37] several preprocessing methods were applied to retinal images of the STARE database. The performance of the preprocessing methods was evaluated with two different exudate segmentation algorithms (local fractal dimension and fuzzy c-means). They achieved significant results by combining homomorphic filtering for illumination correction with morphological filtering for contrast enhancement. Our results also agree with Salvatelli's findings for homomorphic filtering, which corrected the illumination effectively in our study.

Finally, three techniques, the dividing method using a median filter, the quotient-based method, and homomorphic filtering, may be used as effective illumination correction techniques for retinal images. The most effective technique on the red component was the dividing method using a median filter to estimate the background, while the quotient method was the most effective on the green component. Applying local contrast enhancement to fundus images showed good potential for enhancing vasculature segmentation, and with CLAHE as a preprocessing method a high level of vessel detection was achievable. For automatic detection of retinal features in color images, CLAHE is recommended for contrast enhancement. In addition, the dividing method for illumination correction reduced the intensity differences between images. Our results showed that the preprocessing methods improved image quality more for automatic feature detection than for human viewing.

There is a wide variety of image preprocessing methods in the literature. However, only a few studies have compared preprocessing methods for fundus images, which limited our ability to compare our results with other studies.

In future work, we plan to expand the data set and use other segmentation algorithms, such as exudate segmentation, to determine which preprocessing techniques are useful for computer segmentation of retinal lesions.

BIOGRAPHIES

Seyed Hossein Rasta joined the academic board of Tabriz University of Medical Sciences (TUMS) as a lecturer in Medical Biophysics in 1996. He was then awarded a PhD scholarship by TUMS, studied Medical Physics and Bioengineering at the University of Aberdeen, and graduated in 2008. He was then appointed assistant professor of Medical Physics at TUMS, and he has been head of Medical Bioengineering since 2014. He collaborates with the University of Aberdeen as an honorary postdoctoral researcher. He works on developing medical imaging methods and medical image/signal processing.

E-mail: s.h.rasta@abdn.acc.uk

Mahsa Eisazadeh Partovi received her B.S. degree in Physics from the University of Tabriz and her M.S. degree in Medical Physics from Tabriz University of Medical Sciences in 2013. She is now a medical physicist in the Radiotherapy Department of Tabriz International Hospital. Her research interests are medical imaging and image processing.

E-mail: mahsa.e.partovi@gmail.com

Hadi Seyedarabi received the B.S. degree from the University of Tabriz, Iran, in 1993, the M.S. degree from K.N.T. University of Technology, Tehran, Iran, in 1996, and the Ph.D. degree from the University of Tabriz, Iran, in 2006, all in Electrical Engineering. He is currently an associate professor in the Faculty of Electrical and Computer Engineering, University of Tabriz, Tabriz, Iran. His research interests are image processing, computer vision, human-computer interaction, facial expression recognition, and facial animation.

E-mail: seyedarabi@yahoo.com

Alireza Javadzadeh, professor of ophthalmology, received the M.D. degree from Tabriz University of Medical Sciences in 1987. He completed the Ophthalmology Residency and Vitreoretinal Fellowship at Nikookari Eye Hospital of Tabriz University of Medical Sciences and Hazrat Rasoul Akram Hospital of Iran University of Medical Sciences in 1994 and 1998, respectively. Since 1994, he has been a faculty member at Tabriz University of Medical Sciences, and he was head of ophthalmology for five years.

E-mail: javadzadehalireza@yahoo.com

ACKNOWLEDGEMENTS

This study was a part of Ms. Mahsa E Partovi's postgraduate thesis and was supported by a grant from Tabriz University of Medical Sciences. The authors wish to thank Dr. Toka Banaee and Dr. Yashar Amizadeh for invaluable comments.

Footnotes

Source of Support: Tabriz University of Medical Sciences

Conflict of Interest: None declared

REFERENCES

- 1.Patton N, Aslam TM, MacGillivray T, Deary IJ, Dhillon B, Eikelboom RH, et al. Retinal image analysis: Concepts, applications and potential. Prog Retin Eye Res. 2006;25:99–127. doi: 10.1016/j.preteyeres.2005.07.001. [DOI] [PubMed] [Google Scholar]

- 2.Sopharak A, Uyyanonvara B, Barman S. Automatic exudate detection for diabetic retinopathy screening. Scienceasia. 2009;35:80–8. doi: 10.3390/s90302148. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Fleming AD, Philip S, Goatman KA, Olson JA, Sharp PF. Automated assessment of diabetic retinal image quality based on clarity and field definition. Invest Ophthalmol Vis Sci. 2006;47:1120–5. doi: 10.1167/iovs.05-1155. [DOI] [PubMed] [Google Scholar]

- 4.Teng T, Lefley M, Claremont D. Progress towards automated diabetic ocular screening: A review of image analysis and intelligent systems for diabetic retinopathy. Med Biol Eng Comput. 2002;40:2–13. doi: 10.1007/BF02347689. [DOI] [PubMed] [Google Scholar]

- 5.Nirmala SR, Nath MK, Dandapat S. Retinal image analysis: A review. Int J Comput Commun Technol (Spec Issue) 2011;2:11–4. [Google Scholar]

- 6.European Conference on Screening for Diabetic Retinopathy in Europe. 2006. May, [Last accessed on 2007 Mar 19]. Available from: http://www.drscreening2005.org.uk/conference_report.doc .

- 7.Wen YH, Smith AB, Morris AB. New Zealand: Proceedings of Image and vision Computing; 2007. Automated Assessment of Diabetic Retinal Image Quality Based on Blood Vessel Detection; pp. 132–6. [Google Scholar]

- 8.Philip S, Fleming AD, Goatman KA, Fonseca S, McNamee P, Scotland GS, et al. The efficacy of automated “disease/no disease” grading for diabetic retinopathy in a systematic screening programme. Br J Ophthalmol. 2007;91:1512–7. doi: 10.1136/bjo.2007.119453. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Mengko TR, Handayani A, Valindria VV, Hadi S, Sovani I. Image Processing in Retinal Angiography: Extracting Angiographical Features without the Requirement of Contrast Agents. 2009:451–4. [Google Scholar]

- 10.Sriranjini R, Devaki M. Detection of exudates in retinal images based on computational intelligence approach. Int J Comput Sci Netw Secur. 2013;13:86–9. [Google Scholar]

- 11.Wisaeng KH, Pothiruk E. Automatic detection of retinal exudates using a support vector machine. Appl Med Inform. 2013;32:33–42. [Google Scholar]

- 12.Sopharak A, Uyyanonvara B, Barman S, Williamson TH. Automatic detection of diabetic retinopathy exudates from non-dilated retinal images using mathematical morphology methods. Comput Med Imaging Graph. 2008;32:720–7. doi: 10.1016/j.compmedimag.2008.08.009. [DOI] [PubMed] [Google Scholar]

- 13.Sharma A, Nimabrte N, Dhanvijay S. Localization of optic disc in retinal images by using an efficient k-means clustering algorithm. Int J Ind Electron Electr Engineering. 2014;2:14–7.

- 14.Esmaeili M, Rabbani H, Dehnavi MA. Automatic optic disk boundary extraction by the use of curvelet transform and deformable variational level set model. Pattern Recognit. 2012;47:2832–42.

- 15.Sivaswamy J, Agarwal A, Chawla M, Rani A, Das T. Extraction of capillary non-perfusion from fundus fluorescein angiogram. Commun Comput Inf Sci. 2009;25:176–88.

- 16.Marrugo AG, Millan MS. Retinal image analysis: Preprocessing and feature extraction. J Phys. 2011;274:1–8.

- 17.Youssif AA, Ghalwash AZ, Ghoneim AS. Proc. Cairo International Biomedical Engineering Conference; 2006. Comparative Study of Contrast Enhancement and Illumination Equalization Methods for Retinal Vasculature Segmentation; pp. 1–5.

- 18.Walter T, Massin P, Erginay A, Ordonez R, Jeulin C, Klein JC. Automatic detection of microaneurysms in color fundus images. Med Image Anal. 2007;11:555–66. doi: 10.1016/j.media.2007.05.001.

- 19.Gonzalez RC, Woods RE. 3rd ed. USA: Prentice Hall; 2007. Digital Image Processing.

- 20.Ramaswamy G, Lombardo M, Devaney N. Registration of adaptive optics corrected retinal nerve fiber layer (RNFL) images. Biomed Opt Express. 2014;5:1941–51. doi: 10.1364/BOE.5.001941.

- 21.Staal J, Abràmoff MD, Niemeijer M, Viergever MA, van Ginneken B. Ridge-based vessel segmentation in color images of the retina. IEEE Trans Med Imaging. 2004;23:501–9. doi: 10.1109/TMI.2004.825627.

- 22.Dhivyaa CD, Nithya K, Saranya M. Automatic detection of diabetic retinopathy from color fundus retinal images. Int J Recent Innov Trends in Comput Commun. 2014;2:533–6.

- 23.Somasundaram K, Sharma A. Effect of window size and weight of median filters in removing impulse noise. In: Thangavel K, Balasubramaniam P, editors. Computing and Mathematical Modeling. New Delhi, India: 2006. pp. 121–7.

- 24.Fleming AD, Philip S, Goatman KA, Williams GJ, Olson JA, Sharp PF. Automated detection of exudates for diabetic retinopathy screening. Phys Med Biol. 2007;52:7385–96. doi: 10.1088/0031-9155/52/24/012.

- 25.Streeter L, Cree MJ. Image and Vision Computing NZ; 2003. Microaneurysm Detection in Colour Fundus Images; pp. 280–5.

- 26.Laliberté F, Gagnon L, Sheng Y. Registration and fusion of retinal images – An evaluation study. IEEE Trans Med Imaging. 2003;22:661–73. doi: 10.1109/TMI.2003.812263.

- 27.Yang G, Gagnon L, Wang S, Boucher MC. Algorithm for detecting micro-aneurysms in low-resolution color retinal images. Ottawa. 2001:265–71.

- 28.Cree MJ, Olson JA, McHardy KC, Sharp PF, Forrester JV. The preprocessing of retinal images for the detection of fluorescein leakage. Phys Med Biol. 1999;44:293–308. doi: 10.1088/0031-9155/44/1/021.

- 29.Wade NJ. Image, eye, and retina (invited review). J Opt Soc Am A Opt Image Sci Vis. 2007;24:1229–49. doi: 10.1364/josaa.24.001229.

- 30.Joshi S, Karule PT. Retinal blood vessel segmentation. Int J Eng Innov Technol. 2012;1:175–8.

- 31.Usher D, Dumskyj M, Himaga M, Williamson TH, Nussey S, Boyce J. Automated detection of diabetic retinopathy in digital retinal images: A tool for diabetic retinopathy screening. Diabet Med. 2004;21:84–90. doi: 10.1046/j.1464-5491.2003.01085.x.

- 32.Sinthanayothin C, Kongbunkiat V, Phoojaruenchanachain S, Singlavanija A. Proceedings of the 3rd International Symposium on Image and Signal Processing and Analysis 2014; 2003. Automated screening system for diabetic retinopathy; pp. 915–20.

- 33.Bernardes R, Serranho P, Lobo C. Digital ocular fundus imaging: A review. Ophthalmologica. 2011;226:161–81. doi: 10.1159/000329597.

- 34.Rasta SH, Manivannan A, Sharp PF. Spectral imaging technique for retinal perfusion detection using confocal scanning laser ophthalmoscopy. J Biomed Opt. 2012;17:116005. doi: 10.1117/1.JBO.17.11.116005.

- 35.Rasta SH, Manivannan A, Sharp PF. Spectroscopic imaging of the retinal vessels using a new dual-wavelength. Proc SPIE. 2009;736805:1–11.

- 36.Zhang B, Zhang L, Zhang L, Karray F. Retinal vessel extraction by matched filter with first-order derivative of Gaussian. Comput Biol Med. 2010;40:438–45. doi: 10.1016/j.compbiomed.2010.02.008.

- 37.Salvatelli A, Bizai G, Barbosa G, Drozdowicz B, Delrieux C. A comparative analysis of pre-processing techniques in colour retinal images. J Phys. 2007;90:1–7.