Abstract

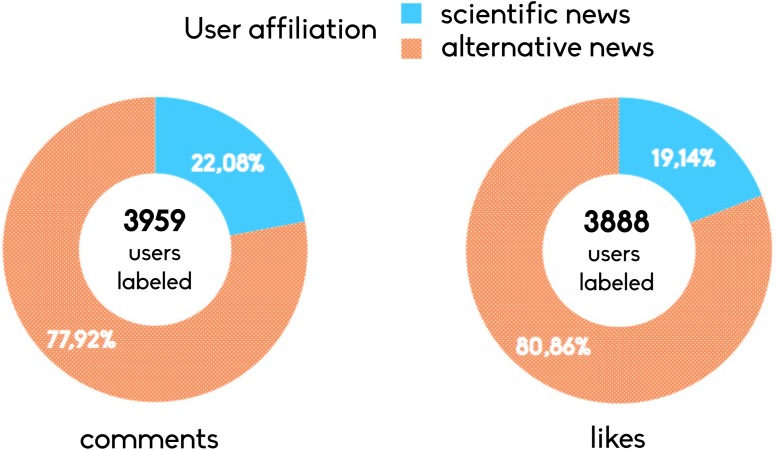

The wide availability of user-provided content on online social media facilitates the aggregation of people around shared beliefs, interests, worldviews and narratives. In spite of the enthusiastic rhetoric about so-called collective intelligence, unsubstantiated rumors and conspiracy theories (e.g., chemtrails, reptilians or the Illuminati) are pervasive in online social networks (OSN). In this work we study, on a sample of 1.2 million individuals, how information related to very distinct narratives (i.e., mainstream scientific news and conspiracy news) is consumed and how it shapes communities on Facebook. Our results show that polarized communities emerge around distinct types of content and that usual consumers of conspiracy news are more focused and self-contained on their specific content. To test potential biases induced by continued exposure to unsubstantiated rumors on users’ content selection, we conclude our analysis by measuring how users respond to 4,709 troll posts, i.e., parodistic and sarcastic imitations of conspiracy theories. We find that 77.92% of likes and 80.86% of comments are from users usually interacting with conspiracy stories.

Introduction

The World Wide Web has changed the dynamics of information transmission as well as the agenda-setting process [1]. Relevant facts, in particular when related to socially relevant issues, mingle with half-truths and untruths to create informational blends [2, 3]. In such a scenario, as pointed out by [4], individuals can be uninformed or misinformed, and corrections are not effective against the diffusion and formation of biased beliefs. In particular, in [5] online debunking campaigns have been shown to create a reinforcement effect in usual consumers of conspiracy stories. In this work, we address users’ consumption patterns of information using very distinct types of content, i.e., mainstream scientific news and conspiracy news. The former diffuse scientific knowledge, and their sources are easy to access. The latter aim at diffusing what is neglected by manipulated mainstream media. Specifically, conspiracy theses tend to reduce the complexity of reality by explaining significant social or political aspects as plots conceived by powerful individuals or organizations. Since these kinds of arguments can sometimes involve the rejection of science, alternative explanations are invoked to replace the scientific evidence. For instance, people who reject the link between HIV and AIDS generally believe that AIDS was created by the U.S. Government to control the African American population [6]. The spread of misinformation in such a context might be particularly difficult to detect and correct because of social reinforcement, i.e., people are more likely to trust information that is in some way consistent with their system of beliefs [7–17]. The growth of knowledge fostered by an interconnected world, together with the unprecedented acceleration of scientific progress, has exposed society to an increasing level of complexity in explaining reality and its phenomena.
Indeed, a paradigm shift in the production and consumption of content has occurred, greatly increasing the volume as well as the heterogeneity of the information available to users. Everyone on the Web can produce, access and diffuse content, actively participating in the creation, diffusion and reinforcement of different narratives. Such a large heterogeneity of information has fostered the aggregation of people around common interests, worldviews and narratives.

Narratives grounded on conspiracy theories tend to reduce the complexity of reality and are able to contain the uncertainty they generate [18–20]. They are able to create a climate of disengagement from mainstream society and from officially recommended practices [21], e.g. vaccinations, diet, etc. Despite the enthusiastic rhetoric about collective intelligence [22, 23], the role of socio-technical systems in enforcing informed debates and their effects on public opinion still remain unclear. However, the World Economic Forum listed massive digital misinformation as one of the main risks for modern society [24].

A multitude of mechanisms animates the flow and acceptance of false rumors, which in turn create false beliefs that are rarely corrected once adopted by an individual [8, 10, 25, 26]. The process of acceptance of a claim (whether documented or not) may be altered by normative social influence or by its coherence with the system of beliefs of the individual [27, 28]. A large body of literature addresses the study of social dynamics on socio-technical systems, from social contagion up to social reinforcement [12–15, 17, 29–41].

Recently, [42, 43] showed that online unsubstantiated rumors (such as the link between vaccines and autism, global warming induced by chem-trails, or the secret alien government) and mainstream information (such as scientific news and updates) reverberate in a comparable way. The pervasiveness of unreliable content might lead users to confuse unsubstantiated stories with their satirical counterparts, e.g. the presence of sildenafil citratum (the active ingredient of Viagra™) [44] in chem-trails or the anti-hypnotic effects of lemons (more than 45,000 shares on Facebook) [45, 46]. In fact, there are very distinct groups, namely trolls, that build Facebook pages as caricatural versions of conspiracy news. Their activities range from controversial comments and satirical posts mimicking conspiracy news sources to the fabrication of purely fictitious statements that are heavily unrealistic and sarcastic. Not infrequently, these memes became viral and were used as evidence in online debates by political activists [47].

In this work we target the consumption patterns of users with respect to very distinct types of information. Focusing on the Italian context, and helped by pages very active in debunking unsubstantiated rumors (see the Acknowledgments section), we build an atlas of scientific and conspiracy information sources on Facebook. Our dataset contains 271,296 posts created by 73 Facebook pages. Pages are classified, according to the kind of information disseminated and their self-description, into conspiracy news (alternative explanations of reality aiming at diffusing content neglected by mainstream information) and scientific news. For further details about the data collection and the dataset refer to the Methods section. Note that we do not claim that conspiracy information is necessarily false. Our focus is on how communities formed around different information and narratives interact and consume their preferred information.

In the analysis, we account for user interactions with pages’ public posts, i.e. likes, shares, and comments. Each of these actions has a particular meaning [48–50]. A like stands for positive feedback to the post; a share expresses the will to increase the visibility of a given piece of information; and a comment is the way in which online collective debates take form around the topic promoted by posts. Comments may contain negative or positive feedback with respect to the post. Our analysis starts with an outline of information consumption patterns and the community structure of pages according to their common users. We label polarized users, i.e. users whose like activity (positive feedback) is almost exclusively (95%) on the pages of one category, and find similar interaction patterns in the two communities with respect to preferred content. According to the literature on opinion dynamics [37], in particular work related to the Bounded Confidence Model (BCM) [51], in which two individuals are able to influence each other only if the distance between their opinions is below a given threshold, users consuming different and opposite information tend to aggregate into isolated clusters (polarization). Moreover, we measure their commenting activity on the opposite category, finding that polarized users of conspiracy news are more focused on posts of their community and more oriented toward the diffusion of their content, i.e. they are more prone to like and share posts from conspiracy pages. On the other hand, usual consumers of scientific news are less committed to diffusion and more prone to comment on conspiracy pages. Finally, we test the response of polarized users to 4,709 satirical and nonsensical versions of conspiracy stories, finding that, out of 3,888 users labeled on likes and 3,959 on comments, most are usual consumers of conspiracy stories (80.86% of likes and 77.92% of comments).
Our findings, consistent with [52–54], indicate that the relationship between beliefs in conspiracy theories and the need for cognitive closure (i.e. the attitude of conspiracists to avoid profound scrutiny of evidence for a given matter of fact) is the driving factor for the diffusion of false claims.

Results and discussion

In this work we address the driving forces behind the popularity of content on online social media. To do this, we start our analysis by characterizing users’ interaction patterns with respect to different kinds of content. Then, we label typical users according to the kind of information they are usually exposed to and test their tolerance with respect to information that we know to be false, since it is a parodistic imitation of conspiracy stories containing fictitious and heavily unrealistic statements.

Consumption patterns on science and conspiracy news

Our analysis starts by looking at how Facebook users interact with content from pages of conspiracy and mainstream scientific news. Fig. 1 shows the empirical complementary cumulative distribution function (CCDF) for likes (intended as positive feedback to the post), comments (a measure of the activity of online collective debates), and shares (intended as the will to increase the visibility of a given piece of information) for all posts produced by the different categories of pages. Distributions of likes, comments, and shares on both categories are heavy–tailed.

Fig 1. Users Activity.

Empirical complementary cumulative distribution function (CCDF) of users’ activity (likes, comments and shares) for posts grouped by page category. The distributions indicate heavy–tailed consumption patterns for the various pages.

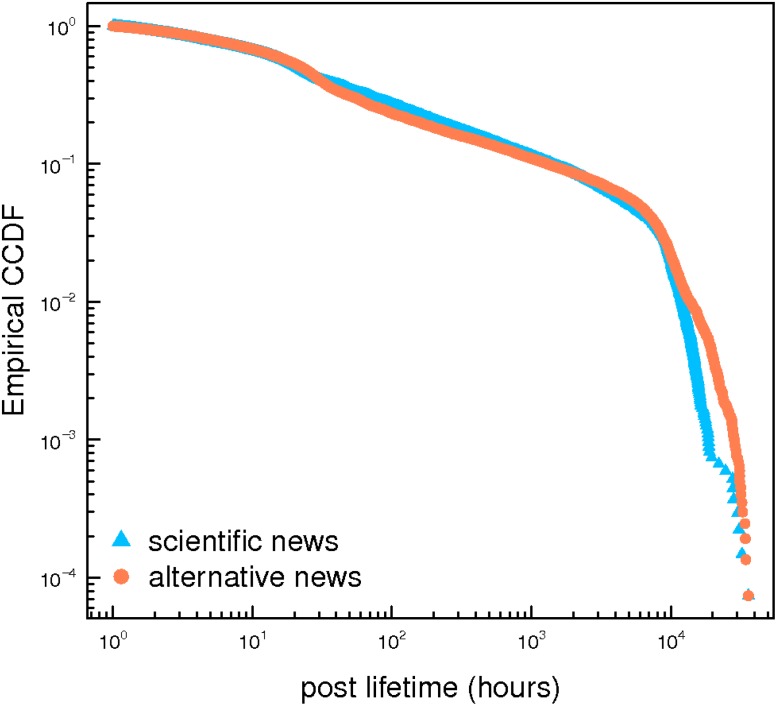

A post draws attention to a given topic; a discussion may then evolve in the form of comments. To further investigate users’ consumption patterns, we zoom in at the level of comments. Such a measure is a good approximation of users’ attention with respect to the information reported by the post. In Fig. 2 we show the CCDF of the posts’ lifetime, i.e. the temporal distance between the first and the last comment for each post from the two categories of pages. Very distinct kinds of content have a comparable lifetime.

Fig 2. Post lifetime.

Empirical complementary cumulative distribution function (CCDF), grouped by page category, of the temporal distance between the first and last comment to each post. The life time of posts in both categories is similar.
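The lifetime measure used for Fig. 2 can be illustrated with a minimal sketch. It assumes that each post comes with a list of comment timestamps; the post identifiers, the timestamps and the helper name `post_lifetime_hours` are hypothetical, and the choice of hours as the unit is ours rather than something stated in the text.

```python
from datetime import datetime


def post_lifetime_hours(comment_times):
    """Hours between the first and the last comment; None if there are no comments."""
    if not comment_times:
        return None
    span = max(comment_times) - min(comment_times)
    return span.total_seconds() / 3600.0


# Hypothetical comment timestamps for two posts (illustrative data only).
comments_by_post = {
    "post_a": [
        datetime(2013, 5, 1, 10, 0),
        datetime(2013, 5, 1, 16, 30),
        datetime(2013, 5, 2, 10, 0),
    ],
    "post_b": [datetime(2013, 6, 3, 9, 0)],
}

lifetimes = {pid: post_lifetime_hours(ts) for pid, ts in comments_by_post.items()}
for pid, hours in lifetimes.items():
    print(pid, hours)
```

A post with a single comment gets a lifetime of zero under this definition, so such posts cannot appear on a logarithmic axis and would need separate handling before plotting.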

To account for the distinctive features of the consumption patterns related to different content, we focus on the correlation between pairs of users’ interactions with posts. Likes, shares and comments have different meanings from a user’s viewpoint. Most of the time, a like stands for positive feedback to the post; a share expresses the will to increase the visibility of a given piece of information; and a comment is the way in which online collective debates take form and may contain negative or positive feedback with respect to the post. Note that cases in which these actions are motivated by irony are impossible to detect. In order to compute the correlation among different actions, we use the Pearson coefficient, i.e., the covariance of two variables (in this case pairs of actions) divided by the product of their standard deviations. In Table 1 we show the Pearson correlation for pairs of user actions on posts (likes, comments and shares). As an example, a high correlation coefficient for Comments/Shares indicates that posts with more comments are likely to be shared more, and vice versa.
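As an illustration of the coefficient just defined, the following sketch computes the Pearson correlation between two per-post action counts. The counts are toy values chosen for readability, not data from the study, and the function name `pearson` is our own.

```python
from math import sqrt


def pearson(xs, ys):
    """Covariance of xs and ys divided by the product of their standard deviations."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # The 1/n factors of the covariance and of the two standard deviations cancel.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    dev_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    dev_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if dev_x == 0 or dev_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (dev_x * dev_y)


# Toy per-post counts (hypothetical): one entry per post.
likes = [10, 20, 30, 40]
shares = [1, 2, 3, 4]
comments = [4, 3, 2, 1]

r_likes_shares = pearson(likes, shares)
r_likes_comments = pearson(likes, comments)
print(r_likes_shares, r_likes_comments)
```

In the toy data, shares grow linearly with likes and comments fall linearly, so the two coefficients sit at the extremes of the range from -1 to 1; the values in Table 1 lie in between.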

Table 1. Users Actions.

Correlation (Pearson coefficient) between pairs of actions on each post in scientific and conspiracy news. Posts from conspiracy pages are more likely to be both liked and shared by users, indicating a stronger commitment to diffusion.

| | Likes/Comments | Likes/Shares | Comments/Shares |
|---|---|---|---|
| Science | 0.523 | 0.218 | 0.522 |
| Conspiracy | 0.639 | 0.816 | 0.658 |

Correlation values for posts of conspiracy news are higher than those for science news. They receive more likes and shares, indicating a preference of conspiracy users to promote the content they like. This finding is consistent with [52–54], which state that conspiracists have a need for cognitive closure, i.e. they are more likely to interact with conspiracy-based theories and have a lower trust in other information sources. Qualitatively different information is consumed in a comparable way. However, zooming in on the combination of actions, we find that users of conspiracy pages are more prone to both share and like a post. This latter result indicates a higher level of commitment of consumers of conspiracy news. They are more oriented to the diffusion of conspiracy-related topics that are, according to their system of beliefs, neglected by mainstream media and scientific news and consequently very difficult to verify. Such a diffusion-oriented pattern raises interesting questions about the pervasiveness of unsubstantiated rumors in online social media.

Information-based communities

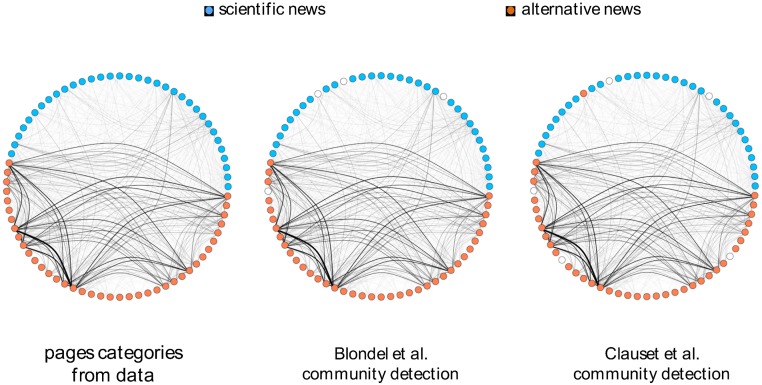

The classification of pages into science and conspiracy related content is grounded on their self-description and on the kind of content they promote (see the Methods section for further details and the list of pages). We want to understand whether users’ engagement across very distinct content shapes different communities around that content. We apply a network-based approach aimed at measuring distinctive connectivity patterns of these information-based communities, i.e., users consuming information belonging to the same narrative. In particular, we build a bipartite network of pages and users and project it onto pages, i.e., two pages are connected if a user liked a post from both of them. In Fig. 3 we show the membership of pages (orange for conspiracy and azure for science). In the first panel, memberships are given according to our categorization of pages (for further details refer to the Methods section). The second panel shows the page network with membership given by applying the multi-level modularity optimization algorithm [55]. In the third panel, membership is obtained by applying an algorithm that looks for the maximum modularity score [56].

Fig 3. Page Network.

The membership of 73 pages as a) identified by means of their self-description, b) by applying the multi-level modularity optimization algorithm, and c) by looking at the maximum modularity score. Community detection algorithms based on modularity are good discriminants for community partitioning.

These findings indicate that connectivity patterns, in particular the modularity, differ between the two categories of pages. Since we are considering users’ likes on the pages’ posts, this aspect points to a higher mobility of users across pages of the conspiracy category.

Polarized users and their interaction patterns

In this section we focus on users’ engagement across the different contents. We label users by means of a simple thresholding algorithm that accounts for the percentage of likes on one or the other category. The choice of the like as a discriminant is grounded on the fact that such an action generally stands for positive feedback to a post [50]. We consider a user to be polarized in a community when the share of his/her likes on one category (scientific or conspiracy news), relative to his/her total like activity, is higher than 95% (for further details about the algorithm refer to the Methods section). We identify 255,225 polarized users of scientific pages (76.79% of the users who interacted with scientific pages) and 790,899 polarized users of conspiracy pages (91.53% of the users who interacted with conspiracy pages in terms of liking). Users’ activity across pages is thus highly polarized. According to the literature on opinion dynamics [37], in particular work related to the Bounded Confidence Model (BCM) [51], in which two nodes are able to influence each other only if the distance between their opinions is below a given threshold, users consuming different and opposite information tend to form polarized clusters. The same holds if we look at the commenting activity of polarized users inside and outside their community. In particular, users polarized on conspiracy news tend to interact mostly within their community, both in terms of comments (99.08%) and likes. Users polarized on science tend to comment slightly more outside their community (90.29% of their comments remain within it). Results are summarized in Table 2.

Table 2. Activity of polarized users.

Number of classified users for each category and their commenting activity on the category in which they are classified and on the opposite category. Users polarized on conspiracy pages tend to interact especially in their community both in terms of comments and likes. Users polarized in science are more active elsewhere.

| | Users classified | Users classified (%) | Comments on their category | Comments on the opposite category | Comments on both categories |
|---|---|---|---|---|---|
| Science News | 255,225 | 76.79 | 126,454 | 13,603 | 140,057 |
| Conspiracy News | 790,899 | 91.53 | 642,229 | 5,954 | 648,183 |

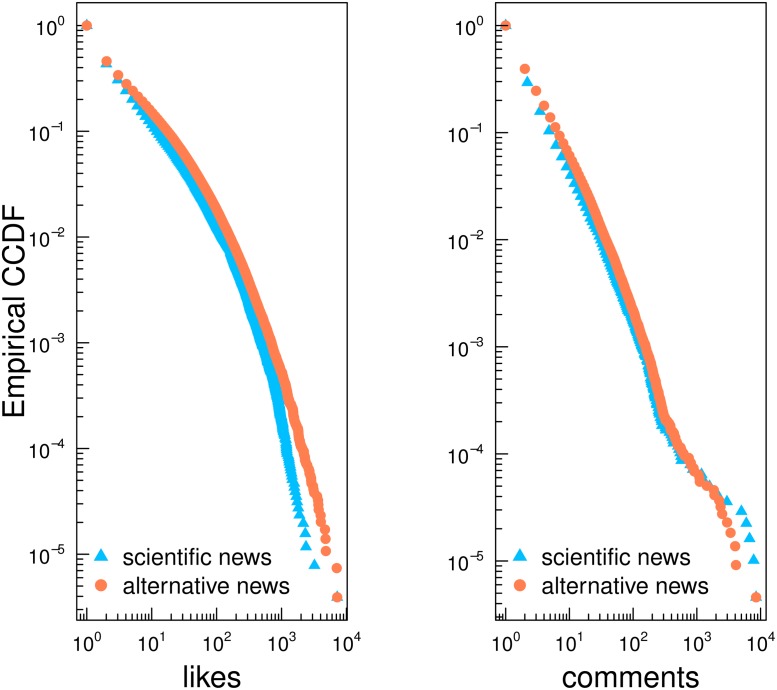

Fig. 4 shows the CCDF for likes and comments of polarized users. Despite the profoundly different nature of the content, consumption patterns are nearly the same in terms of both likes and comments. This finding indicates that very engaged users of different, clustered communities formed around different kinds of narratives consume their preferred information in a similar way.

Fig 4. Consumption patterns of polarized users.

Empirical complementary cumulative distribution function (CCDF) for likes and comments of polarized users.

As a further investigation, we focus on the posts where polarized users of both communities commented. Hence, we select the set of posts on which at least one polarized user of each of the two communities has commented. We find polarized users of both communities debating on 7,751 posts (1,991 from science news and 5,760 from conspiracy news). The posts at the interface, where the two communities discuss, are mainly on the conspiracy side. As shown in Fig. 5, polarized users of scientific news made 13,603 comments on posts published by conspiracy news (9.71% of their total commenting activity), whereas polarized users of conspiracy news commented on scientific posts only 5,954 times (0.92% of their total commenting activity, i.e. roughly ten times less than polarized users of scientific news).
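The two percentages quoted above follow directly from the comment counts reported in Table 2. The short check below recomputes them; the variable and function names are ours, while the counts are those of Table 2.

```python
# Comment counts of polarized users, taken from Table 2.
science_own, science_opposite = 126_454, 13_603
conspiracy_own, conspiracy_opposite = 642_229, 5_954


def share_outside(own, opposite):
    """Percentage of a group's comments made on the opposite category."""
    return 100.0 * opposite / (own + opposite)


science_pct = share_outside(science_own, science_opposite)
conspiracy_pct = share_outside(conspiracy_own, conspiracy_opposite)
ratio = science_pct / conspiracy_pct

print(round(science_pct, 2), round(conspiracy_pct, 2), round(ratio, 1))
```

The ratio between the two shares is slightly above ten, which is the basis for the "roughly ten times" statement.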

Fig 5. Activity and communities.

Posts on which at least one member of each of the two communities has commented. The number of posts is 7,751 (1,991 from scientific news and 5,760 from conspiracy news). Here we show the commenting activity of polarized users on the two categories.

Response to false information

On online social networks, users discover and share information with their friends, and through cascades of reshares information might reach a large number of individuals. An interesting and popular case is that of Senator Cirenga’s law [57, 58], which proposed to fund policy makers with 134 billion euros (10% of the Italian GDP) in case of defeat in the political competition. This was an intentional joke with an explicit mention of its satirical nature. The case of Senator Cirenga became popular among online political activists and was used as an argument in political debates [47].

Our analysis showed that users tend to aggregate around preferred content, shaping well-defined groups with similar information consumption patterns. Our hypothesis is that exposure to unsubstantiated claims (which are pervasive in online social media) might affect user selection criteria by increasing the attitude to interact with false information. Therefore, in this section we test how polarized users usually exposed to distinct narratives, one that can be verified (science news) and one that by definition is almost impossible to check, interact with posts that are deliberately false.

To do this we collected a set of troll posts, i.e. paradoxical imitations of conspiracy information sources. These posts are clearly unsubstantiated claims, like the undisclosed news that infinite energy has finally been discovered, or that a new lamp made of actinides (e.g. plutonium and uranium) might solve problems of energy gathering with less impact on the environment, or that chemical analysis revealed that chem-trails contain sildenafil citratum (the active ingredient of Viagra™). Fig. 6 shows how polarized users of both categories interact with troll posts in terms of comments and likes. We find that polarized users of conspiracy pages are more active in liking and commenting on intentionally false claims.

Fig 6. Polarized users on false information.

Percentage of comments and likes on intentional false memes posted by a satirical page from polarized users of the two categories.

Conclusions

Recently, [42, 43] showed that unsubstantiated claims reverberate for a timespan comparable to that of more verified information and that usual consumers of conspiracy theories are more prone to interact with them. Conspiracy theories find on the internet a natural medium for their diffusion and, not infrequently, trigger collective counter-conspirational actions [59, 60]. Narratives grounded on conspiracy theories tend to reduce the complexity of reality and are able to contain the uncertainty they generate [18–20]. In this work we studied how users interact with information related to different (opposite) narratives on Facebook. Through a thresholding algorithm we label polarized users on the two categories of pages, identifying well-shaped communities. In particular, we measure the commenting activity of polarized users on the opposite category, finding that polarized users of conspiracy news are more focused on posts of their community and that their attention is more oriented to diffusing conspiracy content. On the other hand, polarized users of scientific news are less committed to diffusion and more prone to comment on conspiracy pages. A possible explanation for such behavior is that the former want to diffuse what is neglected by mainstream thinking, whereas the latter aim at inhibiting the diffusion of conspiracy news and the proliferation of narratives based on unsubstantiated claims. Finally, we test how polarized users of both categories responded to the inoculation of 4,709 false claims produced by a parodistic page, finding polarized users of conspiracy pages to be the most active.

These results are consistent with the findings of [52–54], which indicate the existence of a relationship between beliefs in conspiracy theories and the need for cognitive closure. Those who use a more heuristic approach when evaluating evidence to form their opinions are more likely to end up with an account more consistent with their existing system of beliefs. However, anti-conspiracy theorists may not only reject evidence that points toward a conspiracy theory account, but also spend cognitive resources seeking out evidence to debunk conspiracy theories even when these are satirical imitations of false claims. These results open new possibilities for understanding the popularity of information in online social media beyond simple structural metrics. Furthermore, we show that where unsubstantiated rumors are pervasive, false rumors might easily proliferate. The next envisioned step for our research is to look at the reactions of users to different kinds of information according to a more detailed classification of content.

Methods

Ethics Statement

The entire data collection process has been carried out exclusively through the Facebook Graph API [61], which is publicly available, and for the analysis (according to the specification settings of the API) we used only publicly available data (users with privacy restrictions are not included in the dataset). The pages from which we downloaded data are public Facebook entities (they can be accessed by anyone). User content contributing to such pages is also public unless the user’s privacy settings specify otherwise, in which case it is not available to us.

Data collection

In this study we address the effect of the usual exposure to diverse verifiable content on the diffusion of false rumors. We identified two main categories of pages: conspiracy news (i.e. pages promoting content neglected by mainstream media) and science news. We defined the space of our investigation with the help of Facebook groups very active in debunking conspiracy theses (Protesi di Protesi di Complotto, Che vuol dire reale, La menzogna diventa verita e passa alla storia). We categorized pages according to their content and their self-description.

Concerning conspiracy news, their self-description often claims the mission to inform people about topics neglected by mainstream media. Pages like Scienza di Confine, Lo Sai or CoscienzaSveglia promote heterogeneous content ranging from aliens, chemtrails and geocentrism up to the causal relation between vaccinations and homosexuality. We do not focus on the truth value of their information but rather on the possibility of verifying their claims. Conversely, science news pages (e.g. Scientificast, Italia unita per la scienza) are active in diffusing posts about the most recent scientific advances. The selection of the sources has been iterated several times and verified by all the authors. To our knowledge, the final dataset is the complete set of all scientific and conspiracist information sources active in the Italian Facebook scenario. In addition, we identify two pages posting satirical news with the aim of mocking the usual rumors circulating online by adding satirical content.

The pages from which we downloaded data are public Facebook entities (can be accessed by virtually anyone). The resulting dataset is composed of 73 public pages divided in scientific and conspiracist news for which we downloaded all the posts (and their respective users interactions) over a timespan of 4 years (2010 to 2014).

The exact breakdown of the data is presented in Table 3. The first category includes all pages diffusing conspiracy information, i.e. pages which disseminate controversial information, most often lacking supporting evidence and sometimes contradicting the official news (conspiracy theories). The second category is that of scientific dissemination, including scientific institutions and scientific press whose main mission is to diffuse scientific knowledge.

Table 3. Breakdown of Facebook dataset.

The number of pages, posts, likes, comments, likers, and commenters for conspiracy and science news.

| | Total | Science News | Conspiracy News |
|---|---|---|---|
| Pages | 73 | 34 | 39 |
| Posts | 271,296 | 62,705 | 208,591 |
| Likes | 9,164,781 | 2,505,399 | 6,659,382 |
| Comments | 1,017,509 | 180,918 | 836,591 |
| Likers | 1,196,404 | 332,357 | 864,047 |
| Commenters | 279,972 | 53,438 | 226,534 |

Preliminaries and Definitions

Statistical Tools. To characterize random variables, a main tool is the probability distribution function (PDF) $f(x)$, which gives the probability that a random variable $X$ assumes a value in the interval $[a, b]$, i.e. $P(a \le X \le b) = \int_a^b f(x)\,dx$. The cumulative distribution function (CDF) is another important tool giving the probability that a random variable $X$ is less than or equal to a given value $x$, i.e. $F(x) = P(X \le x) = \int_{-\infty}^{x} f(y)\,dy$. In social sciences, an often occurring probability distribution function is Pareto’s law $f(x) \sim x^{-\gamma}$, which is characterized by power-law tails, i.e. by the occurrence of rare but relevant events. In fact, while $f(x) \to 0$ for $x \to \infty$ (i.e. high values of a random variable $X$ are rare), the total probability of rare events is given by $C(x) = P(X > x) = \int_x^{\infty} f(y)\,dy$, where $x$ is a sufficiently large value. Notice that $C(x)$ is the complement of the CDF (CCDF), where complement indicates that $C(x) = 1 - F(x)$. Hence, in order to better visualize the behavior of empirical heavy–tailed distributions, we resort to log–log plots of the CCDF.
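A minimal sketch of how an empirical CCDF might be computed from a sample is given below. It follows the definition of the CCDF as one minus the CDF, estimating it as the fraction of observations strictly greater than each observed value. The sample values and the function name `empirical_ccdf` are illustrative assumptions.

```python
from bisect import bisect_right


def empirical_ccdf(values):
    """Return (x, C(x)) pairs, where C(x) is the fraction of observations > x."""
    data = sorted(values)
    n = len(data)
    distinct = sorted(set(data))
    # bisect_right counts the observations <= x, i.e. n * F(x).
    return [(x, (n - bisect_right(data, x)) / n) for x in distinct]


# Hypothetical number of likes per post (illustrative only).
likes_per_post = [1, 1, 2, 3, 3, 3, 10, 250]

ccdf = empirical_ccdf(likes_per_post)
for x, c in ccdf:
    print(x, c)
```

The largest observation always has an empirical CCDF of zero, so that point has to be dropped (or the estimator changed to use "greater than or equal") before drawing a log–log plot.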

Bipartite Networks and Community Detection. We consider a bipartite network whose nodes are users and whose affiliations are the Facebook pages. A comment on a given piece of information posted by a page determines a link between a user and a page. More formally, a bipartite graph is a triple $\mathcal{G} = (A, B, E)$ where $A = \{a_i \mid i = 1 \dots n_A\}$ and $B = \{b_j \mid j = 1 \dots n_B\}$ are two disjoint sets of vertices, and $E \subseteq A \times B$ is the set of edges, i.e. edges exist only between vertices of the two different sets $A$ and $B$. The bipartite graph $\mathcal{G}$ is described by the matrix $M$ defined as

$$M_{ij} = \begin{cases} 1 & \text{if an edge exists between } a_i \text{ and } b_j, \\ 0 & \text{otherwise.} \end{cases}$$

For our analysis we use the co-occurrence matrices $C^A = M M^T$ and $C^B = M^T M$ that count, respectively, the number of common neighbors between two vertices of $A$ or $B$. $C^A$ is the weighted adjacency matrix of the co-occurrence graph with vertices on $A$. Each non-zero element of $C^A$ corresponds to an edge among vertices $a_i$ and $a_j$ with weight $C^A_{ij}$. To test the community partitioning we use two well known community detection algorithms based on modularity [55, 56]. The former algorithm is based on multi-level modularity optimization. Initially, each vertex is assigned to a community on its own. In every step, vertices are re-assigned to communities in a local, greedy way. Nodes are moved to the community in which they achieve the highest modularity. By contrast, the latter algorithm looks for the maximum modularity score by considering all possible community structures in the network. We apply both algorithms to the bipartite projection on pages.
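The projection onto pages can be sketched without building the matrices explicitly. The example below assumes, purely for illustration, that users play the role of set A and pages the role of set B, so that the page co-occurrence weights are the off-diagonal entries of the product of the transposed matrix with the matrix itself. User and page identifiers are hypothetical.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical bipartite edges: each user maps to the set of pages they interacted with.
user_pages = {
    "u1": {"page_a", "page_b"},
    "u2": {"page_a", "page_b", "page_c"},
    "u3": {"page_c"},
}


def page_cooccurrence(user_pages):
    """Weight of each page pair = number of users linked to both pages."""
    weights = defaultdict(int)
    for pages in user_pages.values():
        # Each user contributes 1 to every unordered pair of their pages.
        for p, q in combinations(sorted(pages), 2):
            weights[(p, q)] += 1
    return dict(weights)


weights = page_cooccurrence(user_pages)
for pair, w in sorted(weights.items()):
    print(pair, w)
```

Iterating over users rather than multiplying dense matrices keeps memory proportional to the number of observed page pairs, which matters when there are far more users than pages. The resulting weighted edge list is the kind of input a modularity-based community detection routine would take.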

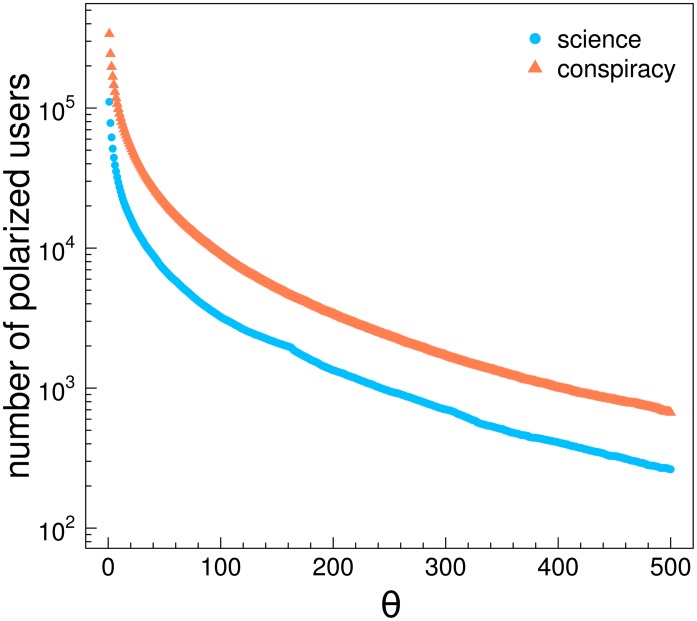

Labeling algorithm. The labeling algorithm can be described as a thresholding strategy on the total number of a user’s likes. Consider the total number of likes $L_u$ of a user $u$ on posts $P$ in both categories $S$ and $C$. Let $l_s$ and $l_c$ denote the number of likes of user $u$ on $P_s$ and $P_c$, the posts from scientific and conspiracy pages respectively. Then the like activity of a user on one category is expressed as the fraction $l_s / L_u$ or $l_c / L_u$. Fixing a threshold $\theta$ we can discriminate users with enough activity on one category. More precisely, the condition for a user to be labeled as a polarized user in one category can be described as $l_s / L_u > \theta \,\vee\, l_c / L_u > \theta$. In Fig. 7 we show the number of polarized users as a function of $\theta$. Both curves decrease with a comparable rate.
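The thresholding rule can be written down in a few lines. This is a sketch under stated assumptions: the function name `label_user`, the returned labels and the handling of users with no likes are ours, and the strict comparison follows the "higher than 95%" wording used in the Results section.

```python
def label_user(likes_science, likes_conspiracy, theta=0.95):
    """Return 'science', 'conspiracy', or None for a user who is not polarized."""
    total = likes_science + likes_conspiracy
    if total == 0:
        # A user with no likes cannot be assigned to either category.
        return None
    if likes_science / total > theta:
        return "science"
    if likes_conspiracy / total > theta:
        return "conspiracy"
    return None


# Hypothetical like counts: (likes on science posts, likes on conspiracy posts).
examples = {"u1": (20, 0), "u2": (1, 99), "u3": (6, 4), "u4": (0, 0)}
labels = {user: label_user(ls, lc) for user, (ls, lc) in examples.items()}
print(labels)
```

For any threshold above one half the two conditions are mutually exclusive, so the order in which they are checked does not affect the result.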

Fig 7. Polarized users and activity.

Number of polarized users as a function of the thresholding value θ on the two categories.

List of pages

This section lists the pages of our dataset. Table 4 lists the scientific news pages and Table 5 the conspiracy pages.

Table 4. Scientific news sources.

List of Facebook pages diffusing main stream scientific news and their url.

Table 5. Conspiracy news sources.

List of Facebook pages diffusing conspiracy news and their url.

Acknowledgments

Special thanks go to Delia Mocanu, “Protesi di Protesi di Complotto”, “Che vuol dire reale”, “La menzogna diventa verita e passa alla storia”, “Simply Humans”, “Semplicemente me”, Salvatore Previti, Brian Keegan, Dino Ballerini, Elio Gabalo, Sandro Forgione and “The rooster on the trash” for their precious suggestions and discussions.

Data Availability

The entire data collection process has been carried out exclusively through the Facebook Graph API which is publicly available.

Funding Statement

Funding for this work was provided by EU FET project MULTIPLEX nr. 317532 and SIMPOL nr. 610704. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. McCombs ME, Shaw DL (1972) The Agenda-Setting Function of Mass Media. The Public Opinion Quarterly 36: 176–187. doi:10.1086/267990

- 2. Rojecki A, Meraz S (2014) Rumors and factitious informational blends: The role of the web in speculative politics. New Media and Society. doi:10.1177/1461444814535724

- 3. Allport G, Postman L (1946) An analysis of rumor. Public Opinion Quarterly 10: 501–517. doi:10.1086/265813

- 4. Kuklinski JH, Quirk PJ, Jerit J, Schwieder D, Rich RF (2000) Misinformation and the currency of democratic citizenship. The Journal of Politics 62: 790–816. doi:10.1111/0022-3816.00033

- 5. Bessi A, Caldarelli G, Del Vicario M, Scala A, Quattrociocchi W (2014) Social determinants of content selection in the age of (mis)information. In: Aiello L, McFarland D, editors, Social Informatics, Springer International Publishing, volume 8851 of Lecture Notes in Computer Science, pp. 259–268. doi:10.1007/978-3-319-13734-6_18

- 6. Sunstein CR, Vermeule A (2009) Conspiracy theories: Causes and cures. Journal of Political Philosophy 17: 202–227. doi:10.1111/j.1467-9760.2008.00325.x

- 7. McKelvey K, Menczer F (2013) Truthy: Enabling the study of online social networks. In: Proc. CSCW’13. URL http://arxiv.org/abs/1212.4565.

- 8. Meade M, Roediger H (2002) Explorations in the social contagion of memory. Memory & Cognition 30: 995–1009. doi:10.3758/BF03194318

- 9. Mann C, Stewart F (2000) Internet Communication and Qualitative Research: A Handbook for Researching Online (New Technologies for Social Research series). Sage Publications Ltd.

- 10. Garrett RK, Weeks BE (2013) The promise and peril of real-time corrections to political misperceptions. In: Proceedings of the 2013 conference on Computer supported cooperative work. New York, NY, USA: ACM, CSCW’13, pp. 1047–1058. URL http://doi.acm.org/10.1145/2441776.2441895

- 11. Buckingham Shum S, Aberer K, Schmidt A, Bishop S, Lukowicz P (2012) Towards a global participatory platform. The European Physical Journal Special Topics 214: 109–152. doi:10.1140/epjst/e2012-01690-3

- 12. Centola D (2010) The spread of behavior in an online social network experiment. Science 329: 1194–1197. doi:10.1126/science.1185231

- 13. Paolucci M, Eymann T, Jager W, Sabater-Mir J, Conte R, et al. (2009) Social Knowledge for e-Governance: Theory and Technology of Reputation. Roma: ISTC-CNR.

- 14. Quattrociocchi W, Conte R, Lodi E (2011) Opinions manipulation: Media, power and gossip. Advances in Complex Systems 14: 567–586. doi:10.1142/S0219525911003165

- 15. Quattrociocchi W, Paolucci M, Conte R (2009) On the effects of informational cheating on social evaluations: image and reputation through gossip. IJKL 5: 457–471. doi:10.1504/IJKL.2009.031509

- 16. Bekkers V, Beunders H, Edwards A, Moody R (2011) New media, micromobilization, and political agenda setting: Crossover effects in political mobilization and media usage. The Information Society 27: 209–219. doi:10.1080/01972243.2011.583812

- 17. Quattrociocchi W, Caldarelli G, Scala A (2014) Opinion dynamics on interacting networks: media competition and social influence. Scientific Reports 4. doi:10.1038/srep04938

- 18. Byford J (2011) Conspiracy Theories: A Critical Introduction. Palgrave Macmillan. URL http://books.google.it/books?id=vV-UhrQaoecC

- 19. Fine G, Campion-Vincent V, Heath C. Rumor Mills: The Social Impact of Rumor and Legend. Social Problems and Social Issues. Transaction Publishers. URL http://books.google.it/books?id=dADxBwgCF5MC

- 20. Hogg M, Blaylock D (2011) Extremism and the Psychology of Uncertainty. Blackwell/Claremont Applied Social Psychology Series. Wiley. URL http://books.google.it/books?id=GTgBQ3TPwpAC

- 21. Bauer M (1997) Resistance to New Technology: Nuclear Power, Information Technology and Biotechnology. Cambridge University Press. URL http://books.google.it/books?id=WqlRXkxS36cC

- 22. Surowiecki J (2005) The Wisdom of Crowds: Why the Many Are Smarter Than the Few. Abacus. URL http://books.google.it/books?id=\_EqBQgAACAAJ

- 23. Welinder P, Branson S, Belongie S, Perona P (2010) The multidimensional wisdom of crowds. In: NIPS. pp. 2424–2432.

- 24. Howell L (2013) Digital wildfires in a hyperconnected world. In: Report 2013. World Economic Forum.

- 25. Koriat A, Goldsmith M, Pansky A (2000) Toward a psychology of memory accuracy. Annu Rev Psychol 51: 481–537. doi:10.1146/annurev.psych.51.1.481

- 26. Ayers M, Reder L (1998) A theoretical review of the misinformation effect: Predictions from an activation-based memory model. Psychonomic Bulletin & Review 5: 1–21. doi:10.3758/BF03209454

- 27. Zhu B, Chen C, Loftus EF, Lin C, He Q, et al. (2010) Individual differences in false memory from misinformation: Personality characteristics and their interactions with cognitive abilities. Personality and Individual Differences 48: 889–894. doi:10.1016/j.paid.2010.02.016

- 28. Frenda SJ, Nichols RM, Loftus EF (2011) Current Issues and Advances in Misinformation Research. Current Directions in Psychological Science 20: 20–23. doi:10.1177/0963721410396620

- 29. Onnela JP, Reed-Tsochas F (2010) Spontaneous emergence of social influence in online systems. Proceedings of the National Academy of Sciences 107: 18375–18380. doi:10.1073/pnas.0914572107

- 30. Ugander J, Backstrom L, Marlow C, Kleinberg J (2012) Structural diversity in social contagion. Proceedings of the National Academy of Sciences.

- 31. Lewis K, Gonzalez M, Kaufman J (2012) Social selection and peer influence in an online social network. Proceedings of the National Academy of Sciences 109: 68–72. doi:10.1073/pnas.1109739109

- 32. Mocanu D, Baronchelli A, Gonçalves B, Perra N, Zhang Q, et al. (2013) The twitter of babel: Mapping world languages through microblogging platforms. PLOS ONE 8: e61981. doi:10.1371/journal.pone.0061981

- 33. Adamic L, Glance N (2005) The political blogosphere and the 2004 u.s. election: Divided they blog. In: LinkKDD’05: Proceedings of the 3rd international workshop on Link discovery. pp. 36–43.

- 34. Kleinberg J (2013) Analysis of large-scale social and information networks. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences 371. doi:10.1098/rsta.2012.0378

- 35. Bond RM, Fariss CJ, Jones JJ, Kramer ADI, Marlow C, et al. (2012) A 61-million-person experiment in social influence and political mobilization. Nature 489: 295–298. doi:10.1038/nature11421

- 36. Borge-Holthoefer J, Rivero A, García I, Cauhé E, Ferrer A, et al. (2011) Structural and dynamical patterns on online social networks: The spanish may 15th movement as a case study. PLOS ONE 6: e23883. doi:10.1371/journal.pone.0023883

- 37. Castellano C, Fortunato S, Loreto V (2009) Statistical physics of social dynamics. Reviews of Modern Physics 81: 591+. doi:10.1103/RevModPhys.81.591

- 38. Ben-naim E, Krapivsky PL, Vazquez F, Redner S (2003) Unity and discord in opinion dynamics. Physica A. doi:10.1016/j.physa.2003.08.027

- 39. Friggeri A, Adamic L, Eckles D, Cheng J (2014) Rumor Cascades. AAAI Conference on Weblogs and Social Media (ICWSM).

- 40. Hannak A, Margolin D, Keegan B, Weber I (2014) Get Back! You Don’t Know Me Like That: The Social Mediation of Fact Checking Interventions in Twitter Conversations. In: Proceedings of the 8th International AAAI Conference on Weblogs and Social Media (ICWSM’14). Ann Arbor, MI.

- 41. Cheng J, Adamic L, Dow PA, Kleinberg JM, Leskovec J (2014) Can cascades be predicted? In: Proceedings of the 23rd International Conference on World Wide Web. Republic and Canton of Geneva, Switzerland: International World Wide Web Conferences Steering Committee, WWW’14, pp. 925–936. doi:10.1145/2566486.2567997

- 42. Mocanu D, Rossi L, Zhang Q, Karsai M, Quattrociocchi W (2014) Collective attention in the age of (mis)information. Computers in Human Behavior.

- 43. Bessi A, Scala A, Zhang Q, Rossi L, Quattrociocchi W (to appear) The economy of attention in the age of (mis)information. Journal of Trust Management.

- 44. simplyhumans (2014). Simply humans—benefic effect of lemons. Website. URL https://www.facebook.com/HumanlyHuman/photos/a.468232656606293.1073741826.466607330102159/494359530660272/?type=1&theater. Last checked: 31.07.2014.

- 45. simplyhumans (2014). Dieta personalizzata—benefit and effect of lemons. Website. URL https://www.facebook.com/dietapersonalizzata/photos/a.114020932014769.26792.113925262024336/627614477322076/?type=1&theater. Last checked: 31.07.2014.

- 46. simplyhumans (2014). Simply humans—benefic effect of lemons. Website. URL https://www.facebook.com/HumanlyHuman/photos/a.468232656606293.1073741826.466607330102159/494343470661878/?type=1&theater. Last checked: 31.07.2014.

- 47. Ambrosetti G (2013). I forconi: “il senato ha approvato una legge per i parlamentari in crisi”. chi non verrà rieletto, oltre alla buonuscita, si beccherà altri soldi. sarà vero? Website. URL http://www.linksicilia.it/2013/08/i-forconi-il-senato-ha-approvato-una-legge-per-i-parlamentari-in-crisi-chi-non-verra-rieletto-oltre-alla-buonuscita-si-becchera-altri-soldi-sara-vero/ Last checked: 19.01.2014.

- 48. Ellison NB, Steinfield C, Lampe C (2007) The benefits of facebook “friends:” social capital and college students’ use of online social network sites. Journal of Computer-Mediated Communication 12: 1143–1168. doi:10.1111/j.1083-6101.2007.00367.x

- 49. Joinson AN (2008) Looking at, looking up or keeping up with people?: Motives and use of facebook. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. New York, NY, USA: ACM, CHI’08, pp. 1027–1036. URL http://doi.acm.org/10.1145/1357054.1357213

- 50. Viswanath B, Mislove A, Cha M, Gummadi KP (2009) On the evolution of user interaction in facebook. In: Proceedings of the 2nd ACM Workshop on Online Social Networks. New York, NY, USA: ACM, WOSN’09, pp. 37–42. URL http://doi.acm.org/10.1145/1592665.1592675

- 51. Deffuant G, Neau D, Amblard F, Weisbuch G (2001) Mixing beliefs among interacting agents. Advances in Complex Systems 3: 87–98. doi:10.1142/S0219525900000078

- 52. Leman PJ, Cinnirella M (2013) Beliefs in conspiracy theories and the need for cognitive closure. URL http://www.frontiersin.org/Journal/10.3389/fpsyg.2013.00378/full.

- 53. Epstein S, Pacini R, Denes-Raj V, Heier H (1996) Individual differences in intuitive–experiential and analytical–rational thinking styles. Journal of Personality and Social Psychology 71: 390–405. doi:10.1037/0022-3514.71.2.390

- 54. Webster DM, Kruglanski AW (1994) Individual differences in need for cognitive closure. Journal of Personality and Social Psychology 67: 1049–1062. doi:10.1037/0022-3514.67.6.1049

- 55. Blondel VD, Guillaume JL, Lambiotte R, Lefebvre E (2008) Fast unfolding of community hierarchies in large networks. URL http://arxiv.org/abs/0803.0476.

- 56. Clauset A, Newman MEJ, Moore C (2004) Finding community structure in very large networks. Physical Review E: 1–6.

- 57. Slate (2014). Study explains why your stupid facebook friends are so gullible. URL http://www.slate.com/blogs/the_world_/2014/03/18/facebook_and_misinformation_study_explains_why_your_stupid_facebook_friends.html.

- 58. Global Polis (2014). Facebook, trolls, and italian politics. URL http://www.globalpolis.org/facebook-trolls-italian-politics/.

- 59. Atran S, Ginges J (2012) Religious and sacred imperatives in human conflict. Science 336: 855–857. doi:10.1126/science.1216902

- 60. Lewandowsky S, Cook J, Oberauer K, Marriott M (2013) Recursive fury: Conspiracist ideation in the blogosphere in response to research on conspiracist ideation. Frontiers in Psychology 4. doi:10.3389/fpsyg.2013.00073 [Retracted]

- 61. Facebook (2013). Using the graph api. Website. URL https://developers.facebook.com/docs/graph-api/using-graph-api/. Last checked: 19.01.2014.
