Abstract

Spatial hearing evolved independently in mammals and birds, and is thought to adapt to altered developmental input in different ways. We found, however, that ferrets possess multiple forms of plasticity that are expressed according to which spatial cues are available, suggesting that the basis for adaptation may be similar across species. Our results also provide insight into the way sound source location is represented by populations of cortical neurons.

Sound localization mechanisms are remarkably plastic early in life, as demonstrated by the effects of monaural deprivation on the development of spatial hearing1-6. Different forms of adaptation, however, have been demonstrated in different species7. Barn owls show a compensatory adjustment in neuronal sensitivity to the binaural cues that are altered by hearing loss3-5. In contrast, ferrets become more dependent on the unchanged spectral cues provided by the intact ear for horizontal localization6. These results imply that the basis for developmental plasticity may vary across species, which is consistent with evidence that sound localization evolved independently8 and differs in several respects9 between mammals and birds. However, if the auditory system can express multiple forms of plasticity, spatial processing in different species may be more similar than previously thought. Here we show that monaurally deprived ferrets can also adapt to abnormal binaural cues, a process comparable to that used by barn owls. The nature of this adaptation has important implications for the encoding of spatial information by neuronal populations in primary auditory cortex (A1).

We reared ferrets, each with an earplug in one ear. This alters the binaural cues that normally dominate how animals perceive the azimuth of a sound but preserves the monaural localization cues at the intact ear6. Because monaural cues require a comparison of input at different sound frequencies to provide reliable spatial information10, they cannot be used to localize narrowband sounds. If ferrets can adapt to unilateral hearing loss only by becoming more reliant on the intact monaural spectral cues, they should therefore be unable to localize narrowband stimuli.

We measured the ability of ferrets to judge the azimuth of high-frequency narrowband sounds. Like humans11 and other mammals12, ferrets rely primarily on interaural level differences (ILDs) to localize these sounds13. We observed clear differences in performance across groups, even for individual animals (Fig. 1a-c). Control ferrets performed this task accurately under normal hearing conditions (Fig. 1a,d), but made large errors when the left ear was occluded with an earplug (Fig. 1b,d). However, much smaller errors were made by monaurally deprived ferrets whose left ears had been plugged from hearing onset (juvenile-plugged ferrets) (Fig. 1c,d), suggesting that an adaptive change in ILD processing had occurred in these animals.

Figure 1.

Behavioral judgments of sound azimuth using narrowband high-frequency stimuli. (a)-(c) Joint distributions of stimulus and response, expressed as degrees (deg) azimuth, for a control ferret with normal hearing (a) and a control (b) and juvenile-plugged (JP) ferret (c) wearing an earplug in the left ear. Grayscale represents the number of trials (n) corresponding to each stimulus-response combination. (d) Mean unsigned error for control and earplugged ferrets, normalized so that 0 and 1 correspond to perfect and chance performance, respectively. Error bars show bootstrapped 95% confidence intervals. Controls wearing an earplug (n = 6 ferrets) made larger errors than normal hearing controls (n = 4; P < 0.001, bootstrap test). While wearing an earplug, juvenile-plugged ferrets (n = 2) made smaller errors than acutely plugged controls (P < 0.001, bootstrap test).

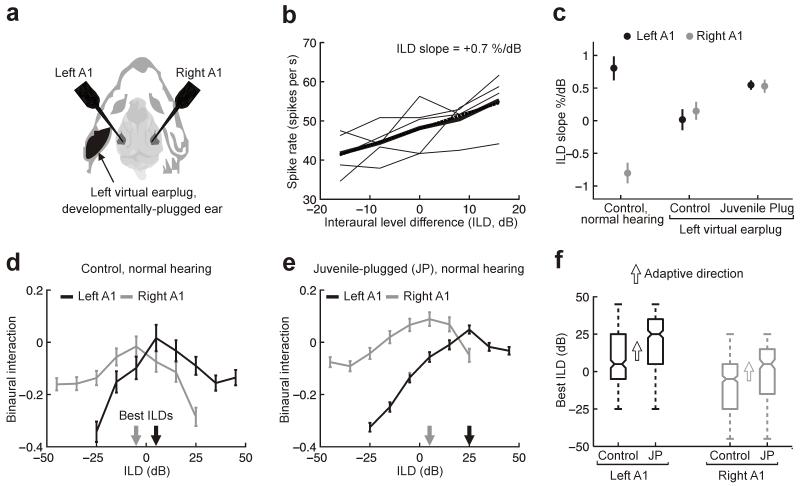

We tested this by performing bilateral extracellular recordings in A1 of juvenile-plugged and control ferrets (Fig. 2a). We measured ILD sensitivity by calculating the slope of each neuron’s firing rate-ILD function (Fig. 2b; Online Methods). A positive ILD indicates that the sound level was higher in the right ear (right-ear greater); thus, a positive ILD slope indicates that the neuron preferred right-ear greater stimuli.

Figure 2.

Adaptive processing of ILDs in A1. (a) Bilateral extracellular recordings were made under normal hearing conditions or with a virtual earplug in the left ear. (b) Spike rate versus ILD, for one unit (i.e., activity recorded on a single electrode), for different average binaural levels (thin lines) plus mean rate-ILD function (thick). Linear regression (dotted line) was used to quantify the slope of the mean rate-ILD function, which was then normalized (Online Methods). (c) ILD slopes (±95% confidence intervals) obtained for neurons recorded ipsilaterally (left A1; black symbols) or contralaterally (right A1; gray symbols) to the virtual earplug. In normal hearing controls, neurons in both hemispheres preferred stimuli whose levels were higher in the opposite ear (total n = 292 units; P < 0.001; bootstrap test). ILD sensitivity in controls declined when a virtual earplug was introduced (left hemisphere, n = 126 units, P < 0.001; bootstrap test; right hemisphere, n = 166 units, P < 0.001; bootstrap test). In contrast, ILD slopes in juvenile-plugged animals were steeper under plugged conditions (left hemisphere, n = 375 units, P < 0.001; right hemisphere, n = 209 units, P < 0.001; bootstrap test). (d) Mean binaural interaction (± s.e.m.) as a function of ILD across all units recorded from controls under normal hearing conditions. Data are plotted separately for left (n = 142 units, black) and right (n = 177 units, gray) A1. Best ILDs for each hemisphere are indicated by arrows. (e) Binaural interaction functions (mean ± s.e.m.) in juvenile-plugged ferrets under normal hearing conditions. (f) Unit best ILDs in each A1 of control and juvenile-plugged (JP) animals. Horizontal lines indicate medians (± interquartile range). Range (whiskers) and approximate 95% confidence intervals (tapers) are also shown. 
Arrows indicate the adaptive direction in which binaural interaction functions should shift, relative to controls, for JP animals to compensate for the hearing loss experienced during development. In juvenile-plugged ferrets, best ILDs shifted in the predicted direction in both left (n = 379 units; P < 0.001; bootstrap test) and right (n = 226 units; P < 0.001; bootstrap test) A1.

Neurons recorded in left and right A1s of seven control ferrets under normal hearing conditions had, on average, positive and negative ILD slopes, respectively, demonstrating that they preferred stimuli whose levels were higher in the opposite ear (Fig. 2c). When we used digital filtering to introduce a ‘virtual earplug’ to the left ear, ILD sensitivity in both hemispheres declined (Fig. 2c), indicated by ILD slopes that approached 0. In contrast, neurons recorded from seven juvenile-plugged ferrets in the presence of a virtual earplug had steeper ILD slopes, showing that they retained sensitivity to ILD. In these animals, however, neurons recorded from both hemispheres preferred right-ear-greater stimuli (slope > 0) (Fig. 2c), suggesting that juvenile plugging shifted ILD sensitivity toward the nonplugged ear.

We next measured the way inputs from the two ears interact in the absence of the virtual earplug by varying the sound level in the ipsilateral ear while keeping the contralateral level fixed. In controls, this revealed the presence of binaural suppression (Online Methods) at large positive and negative ILDs, resulting in mean binaural interaction functions that peaked (best ILDs) when the sound level was greater in the ear opposite the recording site (n = 319, Fig. 2d,f). We hypothesized that compensation for the attenuating effects of the earplug in the juvenile-plugged ferrets would be manifest as shifts in the best ILD (Supplementary Fig. 1). Although the changes observed were insufficient to fully compensate for the ~45 dB attenuation produced by the earplug, we found that the best ILD shifted in the predicted direction in both left (10 dB shift in median; n = 379; P < 0.001; bootstrap test) and right (20 dB shift in median; n = 226; P < 0.001; bootstrap test) A1 (Fig. 2e,f), confirming that adaptive changes in binaural processing had taken place.

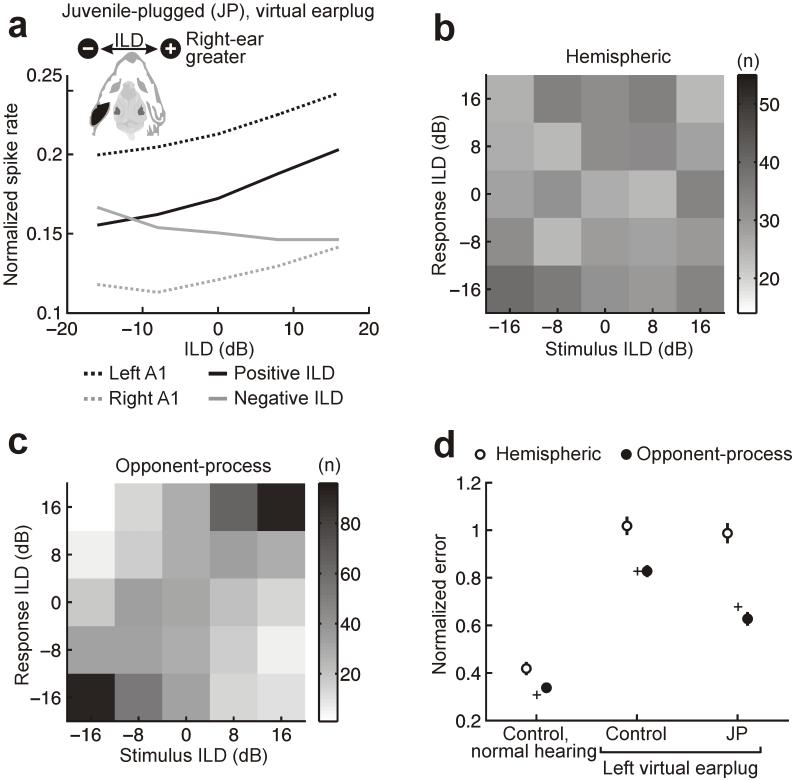

A popular model of sound localization in mammals is based on the relative activity of neuronal populations in each hemisphere, each with a preference for contralateral sound sources14,15. We therefore built a population decoder (Supplementary Fig. 2) that classifies ILDs on single trials using the difference in mean activity between the two hemispheres (‘hemispheric decoder’; Online Methods), and found that this decoder worked well for controls under normal hearing conditions (Fig. 3 and Supplementary Fig. 3). However, in the presence of a virtual earplug, neurons in each A1 of juvenile-plugged animals tended to prefer ILDs that favor the nonplugged right ear (Figs. 2c and 3a). Hemispheric differences in activity therefore remained relatively constant as a function of ILD (Fig. 3a), causing the hemispheric decoder to perform very poorly for juvenile-plugged animals (Fig. 3b and Supplementary Fig. 4).

Figure 3.

Population coding of ILDs in A1. (a) Population-averaged rate-ILD functions in juvenile-plugged animals with a virtual earplug in the left ear. Subpopulations of neurons were defined according to their hemispheric location (left A1, dotted black line; right A1, dotted gray line) or their preference for right-ear-greater (positive ILD, solid black line) or left-ear-greater (negative ILD, solid gray line) stimuli. The virtual earplug produced hemispheric differences in firing rate, but these differences did not vary with ILD. (b,c) Joint distribution of stimulus and response for hemispheric decoder (b) or opponent-process decoder (c) in juvenile-plugged ferrets. (d) Performance of the two decoders was quantified for each group of ferrets by measuring the mean unsigned error (± 95% confidence intervals), normalized so that scores of 0 and 1 denote perfect and chance performance, respectively. Data are shown for control and juvenile-plugged (JP) ferrets with a virtual earplug in the left ear and for controls under normal hearing conditions. For comparison purposes, mean unsigned errors in localization behavior (Fig. 1d) are shown (+). The opponent-process decoder performed much better than the hemispheric decoder, particularly under plugged conditions (P < 0.001; bootstrap test).

Within each hemisphere of the juvenile-plugged ferrets, however, we observed some neurons (left A1, 71/375, 18.9%; right A1, 43/209, 20.6%) that preferred left-ear greater stimuli. We therefore created a second population decoder (‘opponent-process decoder’) that classified ILDs using the difference in mean activity between neurons that preferred right-ear greater and left-ear greater stimuli, irrespective of the hemisphere in which neurons were recorded. The opponent-process decoder performed much better than the hemispheric decoder (P < 0.001; bootstrap test, Fig. 3c,d). This representation of ILD was robust to changes in both population size and stimulus-independent interneuronal correlation, and remained when the analysis was restricted to neurons recorded from a single hemisphere (Supplementary Fig. 5) or when the decoder was required to first learn the ILD preferences of different neurons (Supplementary Figs. 6 and 7).

Previous studies have indicated that intrahemispheric opponent processing16 and population codes that exploit tuning-curve heterogeneity17-19 might have an important role in spatial hearing. Our results show that this may be even more important after unilateral hearing loss, as a decoder that compares activity between neurons with opposing ILD preferences provided a close match to the animals’ behavioral performance (Fig. 3d). Despite differences in the cortical representation of ILD, the opponent-process decoder also provided a better match to the behavioral performance of controls, particularly in the presence of the virtual earplug (Fig. 3d). Thus, some form of opponent processing may be a crucial aspect of representing auditory space, particularly in A1, irrespective of the animals’ experience.

Our results show that ferrets can localize high-frequency sounds accurately using abnormal binaural cues and that a concomitant remodeling of ILD representations in auditory cortex may underlie this process. Because ferrets also adapt to unilateral hearing loss, both during development6 and in adult life20, by becoming more dependent on the unchanged spectral cues provided by the intact ear, these data show that different processes can contribute to the developmental plasticity of spatial hearing within the same species. If spectral cues provide sufficiently precise information about object location, abnormal binaural cues may be ignored or given very little weight. Conversely, if, as in this study, spectral cues are compromised or unavailable, the auditory system may rely more on the abnormal binaural cues to localize sounds accurately. Our finding that the brain can utilize different strategies to adapt to altered inputs therefore highlights the remarkable flexibility of auditory spatial processing.

Online Methods

General

Twenty-three ferrets, both male and female, were used in this study, of which seven were raised with an earplug, whilst the remaining animals were raised normally. All sample sizes were chosen based on previous behavioral and neurophysiological studies in our lab. Animals were assigned to different experimental groups by technicians who were blind to the purposes of the study. Portions of the data were also collected by individuals who were unaware of either the project aims or the experimental group. However, group allocation was necessarily known to the lead investigator throughout the study. All behavioral and neurophysiological testing was performed in animals that were at least 5 months old, some of whom had been tested previously on a broadband sound localization task. All procedures were performed under licenses granted by the UK Home Office and met with ethical standards approved by the University of Oxford. General details concerning animal welfare and behavioral testing have been described previously21. Briefly, ferrets were housed either individually or in small groups (< 8) within environmentally enriched laboratory cages. Each period of behavioral testing lasted for up to 14 days, during which drinking water was provided through correct performance of the behavioral task and via additional supplements provided at the end of each day. Dry food was made available at all times, and free access to water was given between testing periods.

An earplug (EAR Classic) was first fitted in the left ear of the juvenile-plugged ferrets between postnatal day 25 (P25) and P29, shortly after the age of hearing onset in this species22, and was thereafter monitored routinely and replaced as necessary. All details relating to earplugging have been described previously6. Briefly, earplugs were replaced either with or without sedation (Domitor, 0.1 mg per kilogram of body weight (mg/kg); Pfizer). To secure the earplug in place, the concha of the external ear was additionally filled with an ear mold impression compound (Otoform-K2, Dreve Otoplastik). The status of both ears was checked routinely using otoscopy and tympanometry, with any accumulation of cerumen removed under sedation. Earplugs were periodically removed so that normal hearing was experienced ~20% of the time, amounting to a total of 3 d in any 15-d period, with periods of nonocclusion spread evenly throughout the light-dark cycle, which varied seasonally to replicate natural changes in day length. This intermittent hearing-loss paradigm is the same as that used in our previous study in which adaptive reweighting of spatial cues was demonstrated6, and it was used because (i) previous studies have reported maladaptive changes in neuronal ILD sensitivity in mammals raised with a continuous unilateral hearing loss2,23 and (ii) we wanted to more closely replicate the type of fluctuating hearing loss that is prevalent among children with otitis media with effusion24. This procedure was repeated continuously until the conclusion of the experiments. Earplugs were kept in place for at least 4 d before terminal electrophysiological experiments, and were only removed immediately before surgery.

Behavioral testing

Behavioral testing was performed during the hours of daylight in a sound-proof chamber, within which was situated a custom-built circular mesh enclosure with a diameter of 1.25 m. Details of this setup have been described elsewhere6. Animals initiated each trial by standing on a platform located in the middle of the testing chamber and licking a spout positioned at the front of this platform for a variable delay, following which a stimulus was presented from one of 12 loudspeakers (FRS 8; Visaton) equally spaced around the periphery of the enclosure. Animals registered their response by approaching the location of one of the loudspeakers. Behavioral responses and trial initiation were monitored using infrared detectors, with experimental contingencies controlled using Matlab (The Mathworks). Correct responses were rewarded with water delivered via spouts situated beneath each loudspeaker. Animals were trained from ~P150 onwards to approach the location of sounds in order to receive a water reward.

All stimuli were 200 ms in duration, generated with a sampling rate of 97.6 kHz, and had 10 ms cosine ramps applied to the onset and offset. Stimuli were either broadband (0.5-30 kHz) or narrowband (1/6th of an octave wide with a center frequency of 15 kHz) bursts of noise, with different stimulus types randomly interleaved across trials. On the majority (~75%) of trials, broadband noise stimuli were presented, the level of which varied between 56 and 84 dB SPL in increments of 7 dB. For these stimuli, and these stimuli alone, incorrect responses were followed by correction trials on which the same stimulus was presented. Persistent failure to respond accurately to correction trials was followed by ‘easy trials’ on which the stimulus was repeated continuously until the animal made a response. The addition of broadband stimuli, which are easier to localize since all available cues are present, was necessary because some animals found it very difficult to localize narrowband stimuli whilst wearing an earplug, performing very close to chance. The provision of broadband stimuli, and corresponding easy trials, therefore helped keep animals motivated to perform the sound localization task accurately.
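As an illustration only, the broadband and narrowband noise bursts described above could be synthesized along the following lines in Python with NumPy. This is not the original Matlab code. It assumes flat-magnitude, random-phase noise built in the frequency domain, raised-cosine (cos²) ramps, and a 1/6-octave band centered geometrically on 15 kHz. The function names `band_edges` and `noise_burst` are hypothetical, and calibration to dB SPL (which depends on the loudspeakers) is omitted.

```python
import numpy as np


def band_edges(center_hz, fraction_octave):
    """Lower and upper edges of a band `fraction_octave` octaves wide, centered geometrically."""
    half_width = 2.0 ** (fraction_octave / 2.0)
    return center_hz / half_width, center_hz * half_width


def noise_burst(f_lo_hz, f_hi_hz, fs=97_600.0, dur_s=0.2, ramp_s=0.01, rng=None):
    """Band-limited noise burst with raised-cosine onset/offset ramps, peak-normalized to 1."""
    rng = np.random.default_rng(rng)
    n = int(round(dur_s * fs))
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    band = (freqs >= f_lo_hz) & (freqs <= f_hi_hz)
    # Flat magnitude with random phase inside the band, zero everywhere else
    spectrum = np.zeros(freqs.size, dtype=complex)
    spectrum[band] = np.exp(2j * np.pi * rng.random(int(band.sum())))
    x = np.fft.irfft(spectrum, n=n)
    # Raised-cosine ramp rising from 0 to 1 over ramp_s, mirrored at the offset
    n_ramp = int(round(ramp_s * fs))
    ramp = 0.5 * (1.0 - np.cos(np.pi * np.arange(n_ramp) / n_ramp))
    x[:n_ramp] *= ramp
    x[n - n_ramp:] *= ramp[::-1]
    return x / np.max(np.abs(x))


# Usage: narrowband (1/6 octave around 15 kHz) and broadband (0.5-30 kHz) bursts
lo, hi = band_edges(15_000.0, 1 / 6)  # about 14.16 to 15.89 kHz
narrowband = noise_burst(lo, hi, rng=0)
broadband = noise_burst(500.0, 30_000.0, rng=1)
```

Synthesizing in the frequency domain keeps the spectrum exactly flat within the band; the short ramps spread only a small amount of energy just outside the band edges.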

In most test sessions, narrowband stimuli were presented at 84 dB SPL. In some sessions, however, narrowband stimuli were also presented at 56 dB SPL. This was done primarily to determine whether plugged animals were relying on monaural level cues available to the nonplugged ear to guide behavioral responses. If this was the case, changes in sound level would be expected to produce shifts in response bias, which can be measured by the mean signed error. Although controls wearing an earplug showed shifts in the mean signed error when sound level was changed (P < 0.05; post-hoc test; interaction between group and sound level, P = 0.039; ANOVA), implying some reliance on monaural level cues available to the intact ear, this was not the case in juvenile-plugged ferrets wearing an earplug or normally-reared animals tested under normal hearing conditions (P > 0.05; post-hoc test).

Behavioral analyses

For analysis purposes, stimulus and response locations in the front and rear hemifields were collapsed. This provided a measure of performance that is unaffected by front-back errors, which are unavoidable when localizing short-duration narrowband stimuli, and which are thought to primarily reflect a failure in spectral, rather than binaural, processing. We then calculated the discrepancy between the lateral position of the stimulus and that of the response to generate estimates of behavioral error for each trial. The mean unsigned error, which measures error magnitude, was then calculated to determine the ability of animals to judge the lateral position of narrowband sounds. When testing controls wearing an earplug, we wanted to measure performance in animals that were relatively naïve to the earplug. For this reason, each of these animals was tested in only a few sessions, which meant that very few narrowband trials were obtained for each individual. It was therefore necessary to pool the data across animals before applying bootstrap procedures to the data obtained on individual trials.
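The front-back collapsing and error measures described here (together with the mean signed error used above to detect response bias) can be sketched in Python with NumPy as follows. The speaker layout (`SPEAKERS_DEG`, 0° straight ahead, positive angles to the right) and the definition of chance as uniformly random responses across all 12 speakers are assumptions for illustration; the exact chance normalization used in the study is not specified in the text.

```python
import numpy as np

# Hypothetical layout: 12 speakers every 30 deg, 0 deg = straight ahead, positive = right
SPEAKERS_DEG = np.arange(0, 360, 30)


def lateral_angle(azimuth_deg):
    """Fold rear locations onto the front: 150 deg -> 30 deg, 210 deg -> -30 deg."""
    return np.degrees(np.arcsin(np.sin(np.radians(azimuth_deg))))


def localization_errors(stim_deg, resp_deg, speakers_deg=SPEAKERS_DEG):
    """Return (mean signed error, normalized mean unsigned error) on lateral angles."""
    stim = lateral_angle(np.asarray(stim_deg, dtype=float))
    resp = lateral_angle(np.asarray(resp_deg, dtype=float))
    signed = resp - stim  # positive = response displaced to the right
    # Chance: responses drawn uniformly from all speakers, independent of the stimulus
    spk = lateral_angle(np.asarray(speakers_deg, dtype=float))
    chance = np.mean(np.abs(spk[None, :] - stim[:, None]))
    return signed.mean(), np.mean(np.abs(signed)) / chance


# A front-back confusion (30 deg stimulus, 150 deg response) counts as zero error
print(localization_errors([30, 90, 300], [150, 90, 240]))
```

Because the folding maps each rear speaker onto its mirror image in front, front-back confusions contribute nothing to either error measure.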

Neurophysiological recordings

Ferrets were anesthetized for recordings to provide the stability needed for presenting large stimulus sets via earphones a sufficient number of times to obtain reliable estimates of ILD sensitivity. This also meant that we could eliminate changes in arousal level or attentional modulation as factors contributing to any differences observed between the control and juvenile-plugged ferrets or between the normal hearing and virtual earplug conditions.

Procedures used for obtaining neurophysiological recordings have been described in detail previously6. Briefly, animals were anesthetized with Domitor and ketamine hydrochloride (Ketaset; Fort Dodge Animal Health), and given a subcutaneous dose of atropine sulfate (0.01 mg/kg; C-Vet Veterinary Products). Anesthesia was maintained using a continuous intravenous infusion of Domitor (22 μg/kg/hr) and ketamine (5 mg/kg/hr) in 0.9% saline supplemented with 5% glucose and dexamethasone (0.04 mg/kg/hr Dexadreson; Intervet UK). Animals were intubated and artificially ventilated with oxygen. End-tidal CO2 and heart rate were monitored and body temperature was maintained at ~38.5°C.

The animal was placed in a stereotaxic frame fitted with blunt ear bars, and the skull was exposed. A stainless steel bar was subsequently attached to the caudal midline of the skull and secured in place using a combination of bone screws and dental cement. The temporal muscles were then removed and bilateral craniotomies were made over the auditory cortex. The overlying dura was removed, and silicone oil was applied to the cortical surface. In each hemisphere, a single-shank silicon probe (Neuronexus Technologies) with 16 recording sites spread over a length of 1.5 mm was lowered into A1. The position of the probe was confirmed by measuring frequency response areas, which were used to derive the characteristic tonotopic gradients that delineate cortical fields in the ferret25.

Neural signals were band-pass filtered (500 Hz – 3 kHz), amplified, and digitized (25 kHz) using TDT System 3 processors (Tucker-Davis Technologies). Stimuli were generated in Matlab, amplified (TDT headphone amplifier) and presented via earphones (Panasonic, RP-HV298) that were situated at the entrance to each ear canal. Action potentials were extracted in Brainware (Tucker-Davis Technologies) from a mixture of single units and small multi-unit clusters, which were pooled for subsequent analyses to increase statistical power. All further data analyses were carried out using Matlab.

Stimuli consisted of 200-ms bursts of spectrally flat broadband (0.5–30 kHz) noise, generated at a sampling rate of 97.6 kHz, with 10-ms cosine ramps applied to the onset and offset. Average binaural level (ABL) was varied between 62 and 78 dB SPL in 4-dB increments, and interaural level differences (ILDs) were varied between −16 and +16 dB (before any effects of a virtual earplug) in 8-dB increments. Specific ILDs were created by adjusting the sound level in opposite directions in the two ears, so the sound level presented to a given ear varied between 54 and 86 dB SPL. ILD stimuli were presented either under normal hearing conditions or with a virtual earplug in the left ear. Virtual earplugs were created by applying an additional filter to the signal presented to the left ear, which delayed the input by 110 μs and attenuated it by 15–45 dB (varying as a function of frequency, as shown in ref. 6), similar to the effects of a real earplug26. Each unique stimulus was repeated 30 times, and different acoustical conditions were presented in blocks.
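A minimal Python/NumPy sketch of the ABL/ILD stimulus grid and a virtual-earplug filter is given below. It assumes that each ILD is split symmetrically between the ears (left = ABL − ILD/2, right = ABL + ILD/2), which reproduces the stated 54–86 dB SPL range. The filter is applied in the frequency domain as a gain plus a pure (circular) delay. The actual attenuation curve from ref. 6 is not reproduced here, so `hypothetical_attenuation` is a placeholder that merely spans 15–45 dB. All function names are illustrative.

```python
import numpy as np

ABLS_DB = np.arange(62, 79, 4)   # 62, 66, 70, 74, 78 dB SPL
ILDS_DB = np.arange(-16, 17, 8)  # -16, -8, 0, 8, 16 dB; positive = right ear louder


def ear_levels(abl_db, ild_db):
    """Split an ABL/ILD pair symmetrically into (left, right) ear levels in dB SPL."""
    return abl_db - ild_db / 2.0, abl_db + ild_db / 2.0


def virtual_earplug(signal, fs, attenuation_db, delay_s=110e-6):
    """Apply a frequency-dependent attenuation and a pure delay via the FFT.

    attenuation_db: callable mapping frequency (Hz) to attenuation (dB).
    The delay is circular, so the input should end with some silent padding.
    """
    n = len(signal)
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    gain = 10.0 ** (-attenuation_db(freqs) / 20.0)
    phase = np.exp(-2j * np.pi * freqs * delay_s)  # fractional-sample delay
    return np.fft.irfft(np.fft.rfft(signal) * gain * phase, n=n)


def hypothetical_attenuation(freqs_hz):
    """Placeholder: 15 dB at 0.5 kHz rising linearly in log frequency to 45 dB at 30 kHz."""
    f = np.clip(freqs_hz, 500.0, 30_000.0)
    return np.interp(np.log2(f), [np.log2(500.0), np.log2(30_000.0)], [15.0, 45.0])


levels = [ear_levels(a, i) for a in ABLS_DB for i in ILDS_DB]
print(min(min(lv) for lv in levels), max(max(lv) for lv in levels))  # 54.0 86.0
```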

Neurophysiological analyses

Spike rates were calculated for each trial within a 75-ms window spanning the onset response of each acoustically driven unit. These were then used to assess the ILD sensitivity of individual units as well as neuronal populations. For each unit, ILD slope was estimated by calculating the mean spike rate as a function of ILD. The slope of this mean rate-ILD function was then quantified using linear regression and, as in previous studies of ILD coding27,28, expressed as a percentage of the unit's maximal response ($r_{\max}$). The equation defining this procedure was therefore as follows:

$$\text{ILD slope} = \frac{100\,(r'_{\max} - r'_{\min})}{r_{\max}\,(\mathrm{ILD}_{\max} - \mathrm{ILD}_{\min})}$$

where $r'_{\max}$ and $r'_{\min}$ represent the maximum and minimum values of the linear fit and $\mathrm{ILD}_{\max}$ and $\mathrm{ILD}_{\min}$ denote the maximum and minimum ILD values presented. Positive ILDs corresponded to stimuli that were more intense in the right ear (right-ear greater). Positive slope values were therefore assigned to units that responded more strongly to right-ear-greater ILDs, and negative slope values to units that preferred left-ear-greater ILDs.
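The slope normalization described in this paragraph can be sketched in Python with NumPy as below: the range of the linear fit is divided by $r_{\max}$ and by the ILD range, multiplied by 100, and given the sign of the regression slope. The caller supplies `r_max`; here it is taken as the peak of the mean rate-ILD function, which is an assumption, since the text specifies only the unit's "maximal response". The function name is hypothetical.

```python
import numpy as np


def ild_slope(ilds_db, mean_rates, r_max):
    """Normalized ILD slope (% of r_max per dB) from a linear fit to the mean rate-ILD function.

    The magnitude is the range of the fitted line divided by r_max and by the ILD range;
    the sign comes from the regression slope (positive = prefers right-ear-greater ILDs).
    """
    ilds_db = np.asarray(ilds_db, dtype=float)
    b, a = np.polyfit(ilds_db, np.asarray(mean_rates, dtype=float), 1)  # slope, intercept
    fit = a + b * ilds_db
    magnitude = 100.0 * (fit.max() - fit.min()) / (r_max * (ilds_db.max() - ilds_db.min()))
    return np.sign(b) * magnitude


# Hypothetical unit whose rate rises by 10 spikes/s per 8-dB ILD step
rates = [10, 20, 30, 40, 50]
print(ild_slope([-16, -8, 0, 8, 16], rates, r_max=max(rates)))  # 2.5
```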

Binaural interaction (β) was calculated, for each unit and ILD, as follows:

$$\beta = \frac{r_{\mathrm{ILD}} - (r_{\mathrm{Ipsi}} + r_{\mathrm{Contra}})}{r_{\max}}$$

where $r_{\mathrm{ILD}}$ represents the spike rate elicited by a particular ILD, $r_{\mathrm{Ipsi}}$ and $r_{\mathrm{Contra}}$ indicate the spike rates elicited by equivalent monaural stimulation of the ears ipsilateral and contralateral to the recording site, respectively (i.e., presenting the same left- and right-ear inputs separately), and $r_{\max}$ denotes the maximum spike rate exhibited by a particular unit. Negative binaural interaction values therefore reflected sublinear summation of monaural inputs, indicating suppressive binaural interaction, whereas positive values indicated supralinear summation and therefore binaural facilitation. Under normal hearing conditions, similar mean firing rates were observed for both hemispheres in juvenile-plugged ferrets (n = 379, n = 226; P = 0.38; bootstrap test) and controls (n = 177, n = 142; P = 0.12; bootstrap test).
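For illustration, binaural interaction and best ILD can be computed as in the Python/NumPy sketch below. It assumes that β is the binaural rate minus the summed monaural rates, scaled by $r_{\max}$, which is the form implied by the definitions and by the statement that negative values reflect sublinear summation. The example rates are invented, and the function names are hypothetical.

```python
import numpy as np


def binaural_interaction(r_ild, r_ipsi, r_contra, r_max):
    """beta = (binaural rate - sum of monaural rates) / r_max; < 0 suppression, > 0 facilitation."""
    r_ild, r_ipsi, r_contra = (np.asarray(v, dtype=float) for v in (r_ild, r_ipsi, r_contra))
    return (r_ild - (r_ipsi + r_contra)) / r_max


def best_ild(ilds_db, beta):
    """ILD at which the binaural interaction function peaks."""
    return np.asarray(ilds_db)[np.argmax(beta)]


# Invented rates for one unit: suppression at large |ILD|, facilitation near 0 dB
ilds = np.array([-16, -8, 0, 8, 16])
beta = binaural_interaction([5, 12, 20, 14, 6], [4, 5, 6, 7, 9], [9, 8, 7, 6, 5], r_max=20.0)
print(beta, best_ild(ilds, beta))  # best ILD = 0
```

A shift in best ILD across a population (as in Fig. 2f) would then be summarized by comparing the medians of these per-unit values between groups.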

Population decoders classified responses by comparing the mean activity between two distinct sub-populations of units in a manner similar to that described previously16. In all cases, population decoders were implemented using custom-written Matlab code. Sub-populations of units were defined either on the basis of the hemisphere in which they were recorded (‘hemispheric decoder’) or whether they preferred right-ear greater ILDs or left-ear greater ILDs (‘opponent-process decoder’) (Supplementary Fig. 2). For the opponent-process decoder, analyses were applied to the data obtained from either one or both hemispheres. In all cases, spike rates were normalized for each unit by dividing by the maximum single-trial response exhibited by that unit across trials. This normalization step effectively equalized the weight given to each unit. Without it, units with low firing rates would have contributed very little to the decoder’s decision, even if those units were very sensitive to ILDs. Although the precise details of this normalization procedure had very little impact on decoder performance, we opted for the procedure reported here because it produced marginally smaller errors (across all groups of animals) than alternative approaches. In the training phase, a population-averaged rate-ILD function was computed by calculating, for each ILD, the mean normalized spike rate across all units belonging to each sub-population. In doing so, data were averaged across different trials and ABLs.
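The sub-population definitions, per-unit normalization and training-phase averaging described above might look like this in Python with NumPy. This is a sketch rather than the original Matlab code: the `'L'`/`'R'` hemisphere labels, the array layout (units × trials) and the assignment of zero-slope units to the left-ear-preferring group are assumptions, and all function names are hypothetical.

```python
import numpy as np


def subpopulation_masks(hemisphere, ild_slopes, kind):
    """Boolean masks (group_a, group_b) for the hemispheric or opponent-process decoder."""
    hemisphere = np.asarray(hemisphere)
    ild_slopes = np.asarray(ild_slopes, dtype=float)
    if kind == "hemispheric":
        a = hemisphere == "L"  # left A1 versus right A1
    elif kind == "opponent":
        a = ild_slopes > 0     # prefers right-ear-greater ILDs (zero slopes fall in group B)
    else:
        raise ValueError(f"unknown decoder type: {kind}")
    return a, ~a


def normalize_rates(rates):
    """Divide each unit's single-trial rates (units x trials) by its max single-trial rate."""
    rates = np.asarray(rates, dtype=float)
    peak = rates.max(axis=1, keepdims=True)
    return rates / np.where(peak > 0, peak, 1.0)


def population_rate_ild(norm_rates, ilds, mask):
    """Mean normalized rate over units in `mask` and over all trials (and ABLs) at each ILD."""
    norm_rates, ilds, mask = np.asarray(norm_rates), np.asarray(ilds), np.asarray(mask)
    levels = np.unique(ilds)
    return levels, np.array([norm_rates[mask][:, ilds == u].mean() for u in levels])
```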

The difference between these population-averaged rate-ILD functions was then used, during the test phase, to infer stimulus ILD from single-trial population responses as both ILD and ABL were varied. This was done by calculating, for a single trial, the difference in mean activity between the two neuronal sub-populations, comparing this value with the difference between population-averaged rate-ILD functions, and identifying the ILD that was associated with the most similar population-averaged response. In all cases, data were cross-validated using a leave-one-out procedure. Decoder performance was quantified by calculating the discrepancy between the stimulus ILD and the ILD-classification given by the decoder on each trial. The mean unsigned error across trials was then calculated and normalized so that values of zero and one respectively indicated perfect and chance performance.
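A compact Python/NumPy sketch of the test phase with leave-one-out cross-validation follows. It assumes a pseudo-population layout in which trial j carries the same stimulus ILD for every unit, even though units were recorded at different times. Because averaging is linear, the mean of the single-trial differences at a given ILD equals the difference between the two population-averaged rate-ILD functions, so the template can be built directly from the single-trial differences. As in the text, the per-unit normalization uses all trials. Chance is taken here to be uniform guessing among the presented ILDs (an assumption), and the function names are hypothetical.

```python
import numpy as np


def decode_loo(rates, ilds, in_a):
    """Leave-one-out decoder comparing mean activity of subpopulation A with that of B.

    rates: (n_units, n_trials) single-trial rates; ilds: stimulus ILD for each trial.
    in_a: boolean mask of units in subpopulation A (the rest form subpopulation B).
    """
    rates = np.asarray(rates, dtype=float)
    ilds = np.asarray(ilds)
    in_a = np.asarray(in_a, dtype=bool)
    peak = rates.max(axis=1, keepdims=True)
    norm = rates / np.where(peak > 0, peak, 1.0)  # per-unit max single-trial normalization
    # Single-trial decision variable: mean activity of A minus mean activity of B
    diff = norm[in_a].mean(axis=0) - norm[~in_a].mean(axis=0)
    levels = np.unique(ilds)
    decoded = np.empty_like(ilds)
    for t in range(ilds.size):
        train = np.arange(ilds.size) != t  # hold out trial t
        template = np.array([diff[train & (ilds == u)].mean() for u in levels])
        decoded[t] = levels[np.argmin(np.abs(template - diff[t]))]  # most similar ILD
    return decoded


def normalized_error(decoded, ilds):
    """Mean unsigned error scaled so that 0 = perfect and 1 = uniform random guessing."""
    ilds = np.asarray(ilds, dtype=float)
    levels = np.unique(ilds)
    chance = np.mean(np.abs(levels[None, :] - ilds[:, None]))
    return np.mean(np.abs(np.asarray(decoded, dtype=float) - ilds)) / chance
```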

Because different numbers of units were recorded for different subpopulation types and groups of animals, which would be expected to affect decoder performance, we ran the population analysis using a fixed number of units chosen at random from the total number recorded for each subpopulation type in a particular group. We then repeated this sampling procedure 1,000 times and averaged performance across repetitions to generate a mean estimate of decoder performance for each group. To assess the relationship between decoder performance and the number of units used, this analysis was repeated for different population sizes (Supplementary Fig. 5). For formal comparisons between different decoder types (Fig. 3), we elected to use a population size of 200 units. Although this choice was to a certain extent arbitrary, it was guided by the fact that performance at this population size lies on the asymptotic portion of the exponential functions fitted to decoder performance as a function of population size. In other words, performance does not improve substantially as population size is increased further. On the other hand, smaller population sizes enable better estimates of the variability produced by sampling different units.
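The repeated random subsampling could be organized as in the Python/NumPy sketch below. The text does not state whether units were drawn with or without replacement, or whether the fixed size applies per subpopulation or to all units of a group; this sketch assumes `size` units drawn without replacement from all units of a group on each repetition. `score_fn` is a hypothetical hook that would run a decoder on the chosen units and return its normalized error.

```python
import numpy as np


def subsampled_performance(n_units, size, score_fn, n_reps=1000, rng=None):
    """Mean and SD of score_fn over n_reps random draws of `size` units (no replacement)."""
    rng = np.random.default_rng(rng)
    if size > n_units:
        raise ValueError("population size exceeds the number of recorded units")
    scores = np.array(
        [score_fn(rng.choice(n_units, size=size, replace=False)) for _ in range(n_reps)]
    )
    return scores.mean(), scores.std(ddof=1)


# Toy score: mean unit index of each draw (a real score_fn would decode with these units)
print(subsampled_performance(292, 200, lambda idx: idx.mean(), n_reps=100, rng=0))
```

Repeating the call over a range of `size` values gives the performance-versus-population-size curve to which an asymptotic function can then be fitted.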

Averaging the activity of many neurons can improve decoder performance because the activity of individual neurons is noisy. When this noise is independently distributed for each neuron, it can be cancelled out by averaging. However, noise that is shared by many neurons recorded simultaneously does not cancel when averaged, which can limit decoder performance29. This type of correlated noise can be produced in neuronal populations by common fluctuations in background activity that are independent of the stimulus. We investigated the effects of these noise correlations by measuring decoder performance while the order of stimulus presentation was randomized independently (‘shuffled’) for each simultaneously recorded unit, a procedure that removes stimulus-independent correlations between units30. Performance was then compared with that of decoders applied to the unshuffled data (Supplementary Fig. 5).
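Shuffling of this kind is usually done by permuting each unit's trials independently within each stimulus condition, so that tuning is preserved while shared trial-to-trial fluctuations are destroyed. A Python/NumPy sketch on synthetic data is given below. The function names, the residual-based `noise_correlation` measure and the simulated "shared" fluctuation are illustrative assumptions, not the study's data or code.

```python
import numpy as np


def shuffle_within_stimulus(rates, stim, rng=None):
    """Independently permute each unit's trials within every stimulus condition.

    rates: (n_units, n_trials); stim: condition label per trial (e.g. an ILD/ABL index).
    """
    rng = np.random.default_rng(rng)
    rates = np.asarray(rates, dtype=float)
    stim = np.asarray(stim)
    out = rates.copy()
    for s in np.unique(stim):
        idx = np.flatnonzero(stim == s)
        for u in range(rates.shape[0]):
            out[u, idx] = rates[u, rng.permutation(idx)]
    return out


def noise_correlation(x, y, stim):
    """Correlation of residuals after removing each unit's mean response per condition."""
    stim = np.asarray(stim)
    rx, ry = np.array(x, dtype=float), np.array(y, dtype=float)
    for s in np.unique(stim):
        m = stim == s
        rx[m] -= rx[m].mean()
        ry[m] -= ry[m].mean()
    return np.corrcoef(rx, ry)[0, 1]


rng = np.random.default_rng(0)
stim = np.repeat(np.arange(5), 200)
shared = rng.standard_normal(stim.size)  # stimulus-independent common fluctuation
rates = np.vstack([10 + 2 * stim + shared, 20 - stim + shared])
print(noise_correlation(*rates, stim))  # close to 1 before shuffling
print(noise_correlation(*shuffle_within_stimulus(rates, stim, rng=1), stim))  # near 0
```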

It is important to note that, whereas our unshuffled data contain noise correlations in the activity of simultaneously recorded units, noise correlations are necessarily absent for units recorded at different times. Our unshuffled data can therefore be understood as a set of separately recorded neuronal ensembles, with noise correlations existing within but not between these ensembles. Our data therefore most likely underestimate the impact of noise correlations, so real neural performance may be slightly worse than that obtained by applying our decoders to unshuffled data. Previous work, however, has shown that noise correlations diminish as the spatial separation of neurons increases31. It is therefore likely that noise correlations in the brain exist within, but to a much lesser extent between, local neuronal ensembles.

In real neural systems, the readout of neural activity is also likely to be corrupted by noise intrinsic to the readout process. To simulate this, and thereby estimate the robustness of the population representations, we added a small amount of normally distributed random noise to the decision-making process described above. With small amounts of added noise, performance changed very little from that observed in its absence.
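One way to inject readout noise is to perturb each single-trial decision value with Gaussian noise before matching it to the nearest template, as in the Python/NumPy sketch below. The noise level `sigma`, the template values and the function name are hypothetical; the text specifies only "a small amount" of normally distributed noise.

```python
import numpy as np


def classify_with_readout_noise(decision, template, levels, sigma, rng=None):
    """Nearest-template classification after adding N(0, sigma^2) noise to each decision value."""
    rng = np.random.default_rng(rng)
    decision = np.asarray(decision, dtype=float)
    noisy = decision + rng.normal(0.0, sigma, size=decision.shape)
    nearest = np.argmin(np.abs(np.asarray(template)[None, :] - noisy[:, None]), axis=1)
    return np.asarray(levels)[nearest]


# Hypothetical templates spaced 0.2 apart; noise with sigma = 0.02 rarely changes the result
levels = np.array([-16, -8, 0, 8, 16])
template = np.array([-0.4, -0.2, 0.0, 0.2, 0.4])
decision = np.repeat(template, 100)
truth = np.repeat(levels, 100)
accuracy = np.mean(classify_with_readout_noise(decision, template, levels, 0.02, rng=0) == truth)
print(accuracy)
```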

Statistical analyses

Confidence intervals at the 95% level were estimated empirically for different measures using 10,000 bootstrapped samples, each obtained by resampling with replacement from the original data. These samples were used to construct bootstrapped distributions of the desired measure, from which confidence intervals were derived32. A bootstrap procedure was also used to assess the significance of group differences. First, the difference between two groups was measured using an appropriate statistic (for example, difference in means, t-statistic, or rank-sum statistic). The data from the two groups were then pooled and resampled with replacement to produce two new samples, and the difference between these samples was measured using the same statistic as before. This procedure was repeated 10,000 times, providing an empirical estimate of the distribution expected for the statistic of interest under the null hypothesis. This bootstrapped distribution was then used to derive a P value for the difference observed in the original sample. In all cases, two-sided tests of significance were used. Bootstrap tests were used because they make fewer distributional assumptions about the data, but conventional parametric and non-parametric statistical tests were also performed and produced results very similar to those obtained by bootstrapping.
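The two bootstrap procedures can be sketched in Python with NumPy. This is a minimal sketch under stated assumptions: the confidence interval uses the simple percentile method (one of several intervals described by Davison and Hinkley32; the text above does not say which was used); the resampled groups have the same sizes as the original groups; the two-sided P value counts null statistics whose absolute value is at least as large as the observed one, which assumes a statistic centered on zero under the null (such as a difference in means); and the add-one correction in the P value is a common convention rather than something stated above.

```python
import numpy as np

def bootstrap_ci(data, statistic, n_boot=10_000, level=0.95, rng=None):
    """Percentile bootstrap confidence interval for statistic(data)."""
    rng = np.random.default_rng() if rng is None else rng
    data = np.asarray(data)
    # Resample with replacement from the original data n_boot times
    boots = np.array([statistic(rng.choice(data, size=data.size, replace=True))
                      for _ in range(n_boot)])
    alpha = 1.0 - level
    return np.quantile(boots, [alpha / 2, 1 - alpha / 2])

def bootstrap_test(a, b, statistic, n_boot=10_000, rng=None):
    """Two-sided bootstrap test of a group difference, resampling from pooled data."""
    rng = np.random.default_rng() if rng is None else rng
    a, b = np.asarray(a), np.asarray(b)
    observed = statistic(a, b)
    pooled = np.concatenate([a, b])  # pooling imposes the null of no group difference
    null = np.empty(n_boot)
    for i in range(n_boot):
        # Two new samples of the original group sizes, drawn with replacement
        a_star = rng.choice(pooled, size=a.size, replace=True)
        b_star = rng.choice(pooled, size=b.size, replace=True)
        null[i] = statistic(a_star, b_star)
    # Fraction of null statistics at least as extreme as observed (add-one correction)
    p = (np.sum(np.abs(null) >= abs(observed)) + 1) / (n_boot + 1)
    return observed, p

# Usage with simulated data (hypothetical 1 SD difference between groups)
rng = np.random.default_rng(3)
group1 = rng.normal(0.0, 1.0, 40)
group2 = rng.normal(1.0, 1.0, 40)
ci = bootstrap_ci(group1, np.mean, rng=rng)
obs, p = bootstrap_test(group1, group2, lambda x, y: x.mean() - y.mean(), rng=rng)
print(f"95% CI of group1 mean: [{ci[0]:.2f}, {ci[1]:.2f}]; difference = {obs:.2f}, P = {p:.4f}")
```

For a rank-sum statistic, which is not centered on zero under the null, the P value would instead be computed relative to the center of the bootstrapped null distribution.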

Code availability

MATLAB (MathWorks) was used to control behavioral testing, create stimuli for neurophysiological testing, and perform all analyses. In all cases, MATLAB version 7.0.4 or R2010a was used. Portions of the code for which MathWorks does not own the copyright can be made available on request. A Supplementary Methods Checklist is available.

Supplementary Material

Acknowledgements

This work was supported by the Wellcome Trust through a Principal Research Fellowship (WT076508AIA) to A.J.K. and by a Newton Abraham Studentship to P.K. We are grateful to Sue Spires, Dan Kumpik, Fernando Nodal, and Victoria Bajo for their contributions to behavioral testing, to Rob Campbell for helpful discussion, and to Ben Willmore and Gwenaëlle Douaud for commenting on the manuscript.

References

- 1. Polley DB, Thompson JH, Guo W. Nat Commun. 2013;4:2547. doi: 10.1038/ncomms3547.

- 2. Popescu MV, Polley DB. Neuron. 2010;65:718–731. doi: 10.1016/j.neuron.2010.02.019.

- 3. Knudsen EI, Knudsen PF. Science. 1985;230:545–548. doi: 10.1126/science.4048948.

- 4. Knudsen EI, Knudsen PF, Esterly SD. J Neurosci. 1984;4:1012–1020. doi: 10.1523/JNEUROSCI.04-04-01012.1984.

- 5. Gold JI, Knudsen EI. J Neurosci. 2000;20:862–877. doi: 10.1523/JNEUROSCI.20-02-00862.2000.

- 6. Keating P, Dahmen JC, King AJ. Curr Biol. 2013;23:1291–1299. doi: 10.1016/j.cub.2013.05.045.

- 7. Keating P, King AJ. Front Syst Neurosci. 2013;7:123. doi: 10.3389/fnsys.2013.00123.

- 8. Grothe B, Pecka M, McAlpine D. Physiol Rev. 2010;90:983–1012. doi: 10.1152/physrev.00026.2009.

- 9. McAlpine D, Grothe B. Trends Neurosci. 2003;26:347–350. doi: 10.1016/S0166-2236(03)00140-1.

- 10. Wightman FL, Kistler DJ. J Acoust Soc Am. 1997;101:1050–1063. doi: 10.1121/1.418029.

- 11. Middlebrooks JC, Green DM. Annu Rev Psychol. 1991;42:135–159. doi: 10.1146/annurev.ps.42.020191.001031.

- 12. Heffner HE, Heffner RS. Audition. In: Davis SF, editor. Handbook of Research Methods in Experimental Psychology. Blackwell; Oxford: 2003. pp. 413–440.

- 13. Keating P, Nodal FR, King AJ. Eur J Neurosci. 2014;39:197–206. doi: 10.1111/ejn.12402.

- 14. Lesica NA, Lingner A, Grothe B. J Neurosci. 2010;30:11696–11702. doi: 10.1523/JNEUROSCI.0846-10.2010.

- 15. McAlpine D, Jiang D, Palmer AR. Nat Neurosci. 2001;4:396–401. doi: 10.1038/86049.

- 16. Stecker GC, Harrington IA, Middlebrooks JC. PLoS Biol. 2005;3:e78. doi: 10.1371/journal.pbio.0030078.

- 17. Day ML, Delgutte B. J Neurosci. 2013;33:15837–15847. doi: 10.1523/JNEUROSCI.2034-13.2013.

- 18. Goodman DF, Benichoux V, Brette R. eLife. 2013;2:e01312. doi: 10.7554/eLife.01312.

- 19. Miller LM, Recanzone GH. Proc Natl Acad Sci USA. 2009;106:5931–5935. doi: 10.1073/pnas.0901023106.

- 20. Kacelnik O, Nodal FR, Parsons CH, King AJ. PLoS Biol. 2006;4:e71. doi: 10.1371/journal.pbio.0040071.

- 21. Nodal FR, Bajo VM, Parsons CH, Schnupp JW, King AJ. Neuroscience. 2008;154:397–408. doi: 10.1016/j.neuroscience.2007.12.022.

- 22. Moore DR, Hine JE. Brain Res Dev Brain Res. 1992;66:229–235. doi: 10.1016/0165-3806(92)90084-a.

- 23. Silverman MS, Clopton BM. J Neurophysiol. 1977;40:1266–1274. doi: 10.1152/jn.1977.40.6.1266.

- 24. Hogan SC, Stratford KJ, Moore DR. BMJ. 1997;314:350–353. doi: 10.1136/bmj.314.7077.350.

- 25. Bizley JK, Nodal FR, Nelken I, King AJ. Cereb Cortex. 2005;15:1637–1653. doi: 10.1093/cercor/bhi042.

- 26. Moore DR, Hutchings ME, King AJ, Kowalchuk NE. J Neurosci. 1989;9:1213–1222. doi: 10.1523/JNEUROSCI.09-04-01213.1989.

- 27. Mogdans J, Knudsen EI. Hear Res. 1994;74:148–164. doi: 10.1016/0378-5955(94)90183-x.

- 28. Dahmen JC, Keating P, Nodal FR, Schulz AL, King AJ. Neuron. 2010;66:937–948. doi: 10.1016/j.neuron.2010.05.018.

- 29. Zohary E, Shadlen MN, Newsome WT. Nature. 1994;370:140–143. doi: 10.1038/370140a0.

- 30. Bizley JK, Walker KM, King AJ, Schnupp JW. J Neurosci. 2010;30:5078–5091. doi: 10.1523/JNEUROSCI.5475-09.2010.

- 31. Rothschild G, Nelken I, Mizrahi A. Nat Neurosci. 2010;13:353–360. doi: 10.1038/nn.2484.

- 32. Davison AC, Hinkley DV. Bootstrap Methods and Their Application. Cambridge University Press; Cambridge: 1997.
