Abstract

Background

Redundant training and feedback are crucial for successful acquisition of skills in simulation trainings. It is still unclear how or how much feedback should best be delivered to maximize its effect, and how learners’ activity and feedback are optimally blended. This prospective randomized study aimed to determine the influence of high- versus low-frequency expert feedback on the learning curve of students’ clinical procedural skill acquisition.

Methods

N = 47 medical students were trained to insert a nasogastric tube in a mannequin, including structured feedback in the initial instruction phase at the beginning of the training (T1), and either additional repetitive feedback after each of their five subsequent repetitions (high-frequency feedback group, HFF group; N = 23) or additional feedback on just one occasion, after the fifth repetition only (low-frequency feedback group, LFF group; N = 24). We assessed a) task-specific clinical skill performance and b) global procedural performance (five items of the Integrated Procedural Performance Instrument, IPPI) on the basis of expert-rated videotapes at the beginning of the training (T1) and during the final, sixth trial (T2).

Results

The two study groups did not differ regarding their baseline data. The calculated ANOVA for task-specific clinical skill performance with the between-subject factor ‘Group’ (HFF vs. LFF) and the within-subject factor ‘Time’ (T1 vs. T2) turned out not to be significant (p = .147). An exploratory post-hoc analysis revealed a trend towards a superior performance of HFF compared to LFF after the training (T2; p = .093), whereas the groups did not differ at the beginning (T1; p = .851). The smoothness of the procedure, assessed as global procedural performance, was superior in HFF compared to LFF after the training (T2; p < .004), whereas the groups did not differ at the beginning (T1; p = .941).

Conclusion

Deliberate practice with both high- and low-frequency intermittent feedback results in a strong improvement of students’ early procedural skill acquisition. High-frequency intermittent feedback, however, results in even better and smoother performance. We discuss the potential role of the cognitive workload on the results. We advocate a thoughtful allocation of tutor resources to future skills training.

Keywords: Cognitive workload, Procedural skills acquisition, Expert feedback, Learning curve, Nasogastric tube, Proficiency level, Skills training, Undergraduate medical education, Simulation

Background

Skills lab facilities provide an effective and safe learning environment for undergraduate medical students to acquire clinical technical skills. Skills lab training leads to improved knowledge, skills, and behaviors when compared to standard clinical training or no training, with a moderate general effect for patient-related outcomes [1]. Skills lab training enables trainees to perform procedural skills faster, more accurately and more professionally on patients in terms of both technical and communicational aspects as compared to standard clinical training [2]. Furthermore, skills lab training leads to superior objective structured clinical examination (OSCE) results, both in longitudinal [3] and prospective controlled designs [4] even for long-time follow-up [5]. With regard to potential transfer, skills lab training sessions provide a better preparation for clinical clerkships [6] and result in a higher number of procedural skills being performed at bedside on wards [7]. A prerequisite for such transfer is to exercise great care in designing training models and scenarios in order to prove their validity with regard to the real clinical setting.

Four factors are described to enhance the learning of motor skills: observational practice, the learner’s focus of attention, feedback, and self-controlled practice [8]. Accordingly, in their Best Evidence Medical Education (BEME) guide, Issenberg et al. [9] identified similar factors leading to a maximum benefit of simulation-based medical education. The most relevant factors were a) repetitive, active and standardized educational experiences, to prevent trainees from being passive bystanders, b) educational feedback, and c) embedding the training in the standard curriculum. The majority of relevant articles found in their review agree on these three factors, and a plethora of data is available on the latter two. The fact that repetition and trainees’ activity per se are important factors to promote long-term retention is also unquestioned, both for low-complexity skills [10] and high-complexity skills [11].

Regarding learning curves in simulation-based education and motor-skills training, a dose-response relationship is assumed to exist, with a rising number of repetitions resulting in an increasingly superior performance until a performance plateau is reached [12,13]. So far, learning curves in skills lab training have been examined in different study populations for differing skills in various settings. For example, undergraduate students were shown to reach a plateau in endoscopic sinus surgery simulation or simulated peripheral venous cannulation after 5 to 10 trials [14,15], with fewer trials required as subjects progressed in their medical training. In a postgraduate clinical setting of anesthesia first-year residents, a rapid improvement of success for anesthesia procedures was observed during the first 20 attempts, leading to a success rate between 65 and 85% [12]. The model of Peyton seems to add an additional advantage in very early skill acquisition [5,16], serving as a springboard which enhances the benefit from subsequent repetitive practice. However, it remains unclear how subsequent practice should be optimally timed and designed.

Although most studies show feedback to be crucial for learning, many issues of how best to deliver feedback remain a matter of debate: how much feedback is required to attain a maximum benefit from repetitive skills training or to reach a proficiency level in the early acquisition of procedural skills; the ideal frequency, or mode of delivery of repetitive feedback; and how repetitions and feedback are optimally blended. Moreover, it is unclear whether repeating the feedback necessarily substantially improves performance at all [17-21].

Therefore, our randomized prospective study was designed to determine the influence of high- versus low-frequency expert feedback on the learning curve of students’ clinical procedural skill acquisition. Our hypothesis was that repetitive practice is beneficial for reaching a proficiency level, with an additional benefit when intermittent feedback is given at a higher frequency as opposed to intermittent feedback at a lower frequency.

Methods

Study design

The presented randomized prospective study investigated the influence of repetitive expert feedback in skills training on the learning curve of students in the early acquisition of procedural skills. Nasogastric tube placement was selected as the clinical task as this skill represents a pivotal routine procedure in internal medicine. If it is not performed accurately, severe complications may occur, resulting in considerable costs [22,23], and it is therefore an integral part of undergraduate skills training curricula [24,25]. The study was conducted over a period of two and a half weeks alongside the regular curriculum at our faculty.

Participants

Trainees were recruited via advertisements among medical students in their first or second year of medical training. A total of N = 50 participants volunteered to participate. Only right-handed individuals were eligible for inclusion in order to standardize the camera position and facilitate raters’ assessment by not needing to change perspectives. Written consent was provided by all participants and data from all participants were treated anonymously. The trainees were informed that the purpose of the study was to assess their skill performance, but no further details were provided. All participants received a minor financial compensation for their study participation. Ethical principles according to the World Medical Association Declaration of Helsinki Ethical Principles for Medical Research Involving Human Subjects of 2008 were adhered to. Ethics approval was granted by the ethics committee of the University of Heidelberg (Nr. S-211/2009). Students with previous experience in inserting nasogastric tubes were excluded from the study. Refusal to participate had no impact on the subsequent evaluations or other assessments in the curriculum.

Assessment prior to the training (T0)

To control for potential confounding variables, each study participant provided data on their age, sex, handedness, previous clinical experience regarding clerkships, qualification as a paramedic or nurse, as well as a general self-efficacy rating using the General Self-Efficacy Scale and individual learning styles using the Kolb Learning Style Inventory (KLSI) in order to prove that conditions in the two groups were equal.

General self-efficacy scale

This questionnaire consists of ten positively worded items rated on a four-point Likert scale (4 = “I agree” to 1 = “I disagree”). It assesses perceived self-efficacy in the event of adversity and stressful life events [26].

Kolb Learning Style Inventory (KLSI)

The Kolb Learning Style Inventory (KLSI) of 2005 [27] showed an even distribution of learning styles as a potential confounder in skill acquisition, although its immediate effect is unclear [28]. On the other hand, well-organized and strategic learning styles assessed with other inventories (which may be compared to the steps reflective observation and abstract conceptualization of the KLSI) have been shown to provide a benefit for students' later performance [29,30]. Additionally, learning settings should accommodate individual learning styles to maximize individual learning achievement [31], as learning styles not only differ but may shift across cognitive and motor settings [32].

Assignment to study groups

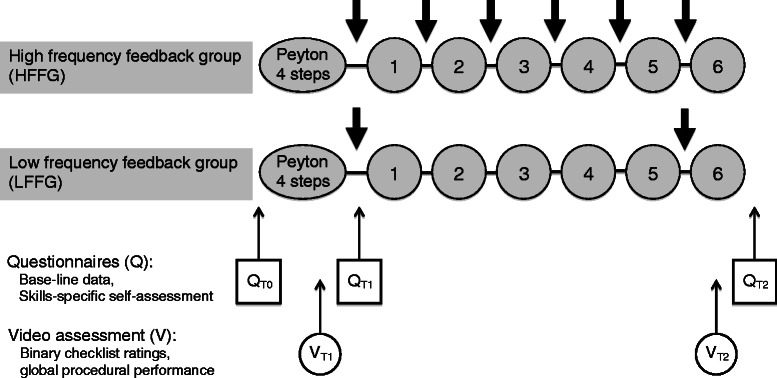

N = 50 participants were randomly assigned to one of the two study groups, one receiving high-frequency feedback (high-frequency feedback group, HFF group; N = 25) after each of the five repetitive practice trials before the final, sixth repetition, and one receiving low-frequency feedback only twice, i.e. after the first independent skill performance and just before the final, sixth repetition (low-frequency feedback group, LFF group; N = 25; for details see “skill training session” below; see Figure 1). After participants had been included in the study, three students opted not to participate without stating reasons or due to illness, resulting in a dropout of N = 2 in the HFF group and N = 1 in the LFF group. Thus, the final sample consisted of N = 23 in the HFF group and N = 24 in the LFF group.
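The allocation described above can be illustrated with a minimal sketch. The text does not state which randomization procedure was used, so the simple shuffle-and-split below, the function name `assign_groups`, the participant numbering, and the seed are all assumptions made for illustration only.

```python
import random


def assign_groups(participant_ids, seed=None):
    """Shuffle participants and split them into two equally sized groups.

    Hypothetical helper: a simple shuffle-and-split, not necessarily the
    procedure used in the study.
    """
    rng = random.Random(seed)
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"HFF": sorted(shuffled[:half]), "LFF": sorted(shuffled[half:])}


# 50 volunteers, numbered 1..50 for illustration; the seed is arbitrary.
groups = assign_groups(range(1, 51), seed=2009)
print(len(groups["HFF"]), len(groups["LFF"]))
```

Fixing the seed makes the allocation reproducible, which helps when the assignment list has to be audited later.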

Figure 1.

Study design. The study employed a randomized controlled design: high-frequency feedback group (HFF group, N = 23) and low-frequency feedback group (LFF group, N = 24). T0 assessment before training, T1 assessment after step 4 of Peyton and T2 assessment after the final repetition. Q assessment via questionnaire, V video assessment. The numbers indicate the six successive repetitions of inserting a nasogastric tube; the thick arrows indicate feedback given by the tutor. The assessment at T0 included questionnaires assessing a) general self-efficacy rating, b) the Kolb Learning Style Inventory (KLSI), and c) skill-specific self-efficacy ratings (QT0). The assessments at T1 and T2 included questionnaires assessing c) skill-specific self-efficacy ratings (QT1 and QT2), and d) objective video ratings of participants’ performances regarding clinical performance (binary checklists) and overall procedural performance (global rating; VT1 and VT2). In addition, the assessment at T2 included questionnaires assessing e) acceptance, f) subjective skill-related demands during skill performance, g) value of feedback (QT2).

Skill training session

The skill training was conducted with a student-teacher ratio of 1:1 in analogy to previous studies [24,33] and under consideration of previously published checklists for nasogastric tube placement [24,34]. Training sessions were structured as follows: Both groups received a short case history and role-play directives (i.e. including talking to the mannequin as if it were a real patient), both of which have been previously shown to enhance perceived realism in the training as well as the patient-physician communication [24,33]. Both groups were then instructed to insert a nasogastric tube in a mannequin using the four steps of Peyton’s Four-Step Approach [16,35] and subsequently performed six further repetitions of inserting a nasogastric tube. Both groups received structured feedback from the skills lab trainers [9,36] after the first independent performance of nasogastric tube placement (step 4 of Peyton’s four-step approach; T1). However, the HFF group received performance-related feedback after each of their following five repetitions, whereas the LFF group received further feedback once, after the fifth repetition only. Finally, both groups performed a final, sixth repetition of nasogastric tube placement (T2; see Figure 1). The feedback was always given immediately after the respective repetition of the skill, as proximate feedback enhances its effectiveness [37]. Feedback was positively worded and aimed at inducing an external focus, i.e. aiming at the movement effect to facilitate automaticity in motor control and promote movement efficiency (for detailed reviews, see [8,38]).

Skills lab teachers

The HFF and LFF groups were both trained by four certified skills lab peer teachers, all of whom had at least one year of experience as skills lab trainers. Two tutors were male (both aged 22 years), and two were female (aged 21 and 22 years). The four tutors were randomly assigned to the students in the study groups. As previously shown, trained medical students as peer teachers deliver training and feedback on a par with that of faculty staff in skills training [39-41]. All trainers were blinded to the study design and received an introductory course including training in nasogastric tube placement and delivering feedback prior to the study.

Assessment of training

Assessment of the skills lab training encompassed a) acceptance ratings including value of tutor’s feedback, b) subjective skill-related demands during skill performance (cognitive workload), c) skill-specific self-efficacy ratings related to nasogastric tube placement competencies, and d) objective video expert ratings of participants’ performances by blinded independent assessors (N = 2).

Acceptance ratings and value of trainer’s feedback

For the evaluation of acceptance of the training session and the tutor’s feedback, participants completed a questionnaire with five positively worded items rated on a six-point Likert scale (6 = fully agree; 1 = completely disagree) after completing the training (after T2, see Figure 1). For the specific pre- and post-evaluation of the value of the trainer’s feedback, the participants completed an additional questionnaire with ratings on a six-point Likert scale (6 = fully agree; 1 = completely disagree) after step 4 of Peyton’s four-step approach but before beginning the repetitions (T1, see Figure 1; 5 items) and after the final, sixth repetition (T2; 12 items).

Cognitive workload/skill-related demands

We assessed the perceived overall cognitive workload using the National Aeronautics and Space Administration Task Load Index (NASA-TLX) [42] as a score of six subscales: mental, physical, and temporal demands, as well as own performance, effort and frustration. Assessment took place after step 4 of Peyton’s four-step approach (T1) and after the final, sixth repetition (T2), with ratings on Likert scales from 5 (very low demands) to 100 (very high demands), resulting in a sum score between 0 (very low demands) and 100 (very high demands).
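As an illustration of how the six subscale ratings might be combined into one overall score, the sketch below assumes an unweighted mean (often called raw TLX). The text does not state whether subscale weighting was applied, so the averaging rule, the accepted range of 0 to 100, the function name `raw_tlx`, and the example ratings are assumptions.

```python
SUBSCALES = (
    "mental", "physical", "temporal", "performance", "effort", "frustration",
)


def raw_tlx(ratings):
    """Return the unweighted mean of the six NASA-TLX subscale ratings.

    Assumption: each rating lies between 0 and 100 and all subscales
    contribute equally (no pairwise weighting).
    """
    missing = [name for name in SUBSCALES if name not in ratings]
    if missing:
        raise ValueError(f"missing subscales: {missing}")
    for name in SUBSCALES:
        if not 0 <= ratings[name] <= 100:
            raise ValueError(f"rating out of range for {name}: {ratings[name]}")
    return sum(ratings[name] for name in SUBSCALES) / len(SUBSCALES)


# Hypothetical ratings of one participant.
example = {
    "mental": 60, "physical": 40, "temporal": 50,
    "performance": 45, "effort": 55, "frustration": 50,
}
print(raw_tlx(example))  # 50.0
```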

Skill-specific self-efficacy ratings

Skill-specific self-efficacy ratings related to nasogastric tube placement competencies were assessed as in previous studies [33], with five items referring to a) knowledge of the anatomical structures required to insert a nasogastric tube, b) knowledge of the materials required to insert a nasogastric tube, c) knowledge of the steps involved in inserting a nasogastric tube, d) competence in inserting a nasogastric tube in a mannequin, and e) competence in inserting a nasogastric tube in a patient (6 = fully agree; 1 = completely disagree). Skill-specific self-efficacy ratings were assessed prior to the training (T0) as well as after step 4 of Peyton’s four-step approach (T1) and after the final, sixth repetition (T2).

Independent video assessment of performance

Participants’ performance in step 4 of Peyton’s four-step approach (T1) and in the final, sixth repetition (T2) were videotaped in both the HFF group and the LFF group using high-resolution digital cameras with optical zoom to capture all of the details required for a precise evaluation. The videos were digitally processed and were independently rated by two clinically experienced and trained video assessors who were blinded to both the aim and design of the study as well as the assignment of participants to the study groups. Raters were provided with binary checklists and global rating forms. The binary checklist consisted of 16 items reflecting the procedural steps of inserting a nasogastric tube [24,34,43]. Regarding binary checklists, video raters were asked to indicate whether single procedural steps were performed correctly or incorrectly. A global rating form, which was based on the Integrated Procedural Performance Instrument (IPPI) proposed by Kneebone et al. for the assessment of procedural skills in a clinical context, was also used [44]. The IPPI was designed to evaluate global professional and confident performance of clinical technical skills. Items of the IPPI considered relevant for the procedure were selected (items 4, 5, 9, 10, 11; six-point Likert scale; 6 = very good to 1 = unsatisfactory).
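The two rating instruments translate into simple scores: the 16-item binary checklist is reported as a percentage of the maximum achievable points, and the global rating as the mean of the five selected IPPI items. The sketch below shows this arithmetic for one rated video; the function names and the example ratings are hypothetical.

```python
CHECKLIST_ITEMS = 16   # procedural steps on the binary checklist
IPPI_ITEMS = 5         # selected IPPI items (4, 5, 9, 10, 11)


def checklist_percent(steps_correct):
    """Percentage of checklist steps rated as performed correctly."""
    if len(steps_correct) != CHECKLIST_ITEMS:
        raise ValueError("expected one rating per checklist item")
    return 100.0 * sum(bool(step) for step in steps_correct) / CHECKLIST_ITEMS


def global_rating(item_scores):
    """Mean of the selected IPPI items (6 = very good, 1 = unsatisfactory)."""
    if len(item_scores) != IPPI_ITEMS:
        raise ValueError("expected one score per selected IPPI item")
    if any(not 1 <= score <= 6 for score in item_scores):
        raise ValueError("scores must lie between 1 and 6")
    return sum(item_scores) / IPPI_ITEMS


# Hypothetical video: 15 of 16 steps correct, global items mostly "very good".
steps = [True] * 15 + [False]
print(checklist_percent(steps))        # 93.75
print(global_rating([6, 6, 5, 6, 6]))  # 5.8
```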

Statistical analysis

The primary endpoint was global procedural performance. Secondary endpoints were task-specific clinical skill performance, skill-specific self-efficacy ratings, and pre- and post-assessment of trainer’s feedback (T1 and T2). Data are presented as means and standard deviations. Continuous data serving sample description were compared using Student’s t-test (assuming equal variances), whereas ordinal data were assessed using the Mann-Whitney U test (M-W-U-Test). Differences in group characteristics pertaining to sex, previous education in a medical profession, and completed medical electives were compared using chi-square tests. For repeated measures, ordinal data (acceptance ratings, cognitive workload assessed with the NASA-TLX, skill-specific self-efficacy ratings, and global skills performance assessed with the IPPI) were analyzed using the Wilcoxon signed-rank test or the Friedman test where appropriate. Group comparisons at T1 and at T2 were calculated using M-W-U-Tests. For interval data (task-specific clinical skills performance reflected in binary checklist ratings), an ANOVA with the between-subject factor ‘Group’ (HFF vs. LFF) and the within-subject factor ‘Time’ (T1 vs. T2) was conducted. LSD post-hoc tests were used where appropriate. A p-value < .05 was considered statistically significant. Inter-rater reliability for the two video assessors was calculated using Pearson’s correlation. The software package STATISTICA 8, 2007 (Statsoft, Inc., Tulsa, OK) was used for statistical analysis.
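To make the between-group comparison of ordinal ratings more concrete, the following sketch computes the Mann-Whitney U statistic with tie-averaged ranks. It is an illustration only: the analyses reported here were run in STATISTICA, the sketch does not derive a p-value, and the function names and example ratings are hypothetical.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of the run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_u(group_a, group_b):
    """Return the smaller of the two Mann-Whitney U statistics."""
    n_a, n_b = len(group_a), len(group_b)
    ranks = average_ranks(list(group_a) + list(group_b))
    rank_sum_a = sum(ranks[:n_a])
    u_a = rank_sum_a - n_a * (n_a + 1) / 2
    u_b = n_a * n_b - u_a
    return min(u_a, u_b)


# Hypothetical six-point Likert ratings for two small groups.
hff = [6, 6, 5, 6]
lff = [5, 4, 6, 5]
print(mann_whitney_u(hff, lff))  # 3.5
```

A small U relative to the product of the group sizes indicates little overlap between the two rating distributions; turning it into a p-value requires exact tables or a normal approximation with tie correction.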

Results

Participants

There were no statistically significant differences between the two groups with regard to age, sex, completed education in a medical profession, or completed medical electives and general self-efficacy rating prior to skills training, as well as learning styles (see Table 1), with a distribution of subscales of learning styles as described previously [28].

Table 1.

Group characteristics of the study groups

| Group characteristics | High-frequency feedback group (HFF group) N = 23 | Low-frequency feedback group (LFF group) N = 24 | p-value |
|---|---|---|---|
| Age (years) | 21.00 ± 2.94 | 20.62 ± 1.74 | .596¹ |
| Sex (m/f) | 12 (52.17%)/11 (47.82%) | 12 (50.00%)/12 (50.00%) | .882² |
| General self-efficacy rating | 30.83 ± 3.42 | 30.42 ± 4.15 | .842¹ |
| Education in a medical profession | 3 (13.04%) | 1 (4.16%) | .276² |
| Medical electives | 20 (86.95%) | 21 (87.50%) | .955² |
| Kolb learning style inventory | | | |
| Concrete experience (feeling) | 24.61 ± 7.65 | 24.79 ± 5.85 | .927¹ |
| Reflective observation (watching) | 30.30 ± 6.88 | 29.88 ± 5.54 | .815¹ |
| Abstract conceptualization (thinking) | 31.34 ± 8.61 | 32.37 ± 7.43 | .663¹ |
| Active experimentation (doing) | 33.30 ± 7.55 | 32.95 ± 5.28 | .856¹ |

¹ t-test.

² χ² test.

Group characteristics of the high-frequency feedback group (HFF group, N = 23) and the low-frequency feedback group (LFF group, N = 24) are depicted regarding:

• age (age; mean ± standard deviation; Student’s t-test, p-values).

• sex (male/female; N and %, chi-square test, p-values).

• general self-efficacy rating prior to skills training (score of 10 items using Likert-scale ratings; 4 = I fully agree; 1 = I completely disagree; mean ± standard deviation; M-W-U-Test, p-values).

• completed education as paramedic, medical secretary, nurse, or occupational therapist (N, % and chi-square test, p-values).

• completed electives in surgery, internal medicine, pediatrics, or psychiatry (N, % and chi-square test, p-values).

Acceptance of training (rated at T2) and assessment of trainer’s feedback (T1 and T2)

Participants of both study groups confirmed a high training acceptance after the skills training session. Participants rated the training session as realistic (HFF group 4.66 ± .98, LFF group 4.34 ± 1.13, p = .285) and the tutor’s feedback as objective (HFF group 5.96 ± .21, LFF group 5.96 ± .20, p = .992), motivating (HFF group 5.74 ± .45, LFF group 5.67 ± .76, p = .858), supportive (HFF group 5.96 ± .21, LFF group 5.92 ± .28, p = .825), and courteous (HFF group 6.0 ± .0, LFF group 6.0 ± .0, p = .992), with a positive effect on learning success (HFF group 6.0 ± .0, LFF group 5.92 ± .28, p = .635; all ratings are given as means of Likert scale ratings from 6 = fully agree to 1 = completely disagree).

In the pre- and post-assessment, both groups assessed trainers’ feedback as very valuable after step 4 of Peyton’s four-step approach (T1) as well as after the final, sixth repetition (T2), with no significant difference between the study groups (see Table 2). Both groups assessed trainers’ feedback as more valuable after the final, sixth repetition (T2) compared to after step 4 of Peyton’s four-step approach (T1) (HFF p = .004; LFF p = .018).

Table 2.

Pre- and post-assessment of trainer’s feedback (T1 and T2)

| | High-frequency feedback group (HFF group) N = 23 | Low-frequency feedback group (LFF group) N = 24 | p-value¹ |
|---|---|---|---|
| After Peyton’s step 4 (T1) | | | |
| Item 1 The tutor’s feedback was comprehensible. | 5.83 ± .38 | 5.84 ± .38 | .931 |
| Item 2 I could easily follow the tutor’s instructions. | 5.39 ± .66 | 5.17 ± .96 | .077 |
| Item 3 The feedback was helpful for improving skills. | 5.78 ± .42 | 5.79 ± .51 | .382 |
| Item 4 The tutor was attentive and concentrated. | 5.83 ± .39 | 5.83 ± .38 | .931 |
| Item 5 The tutor seemed competent during feedback. | 5.83 ± .39 | 5.87 ± .34 | .518 |
| Mean Items 1-5 | 5.73 ± .31 | 5.70 ± .46 | .876 |
| After the final, sixth repetition (T2) | | | |
| Item 1 The tutor’s feedback was comprehensible. | 5.96 ± .21 | 6.00 ± .00 | 1.000 |
| Item 2 I could easily follow the tutor’s instructions. | 5.83 ± .39 | 5.58 ± .50 | .224 |
| Item 3 The feedback was helpful for improving skills. | 5.91 ± .29 | 5.96 ± .20 | .108 |
| Item 4 The tutor was attentive and concentrated. | 5.87 ± .34 | 5.92 ± .28 | .351 |
| Item 5 The tutor seemed competent during feedback. | 6.00 ± .00 | 5.92 ± .28 | 1.000 |
| Mean Items 1-5 | 5.91 ± .17 | 5.88 ± .19 | .341 |
| p-value² (T1 vs. T2) | .004 | .018 | |

¹ Mann-Whitney U test.

² Wilcoxon signed-rank test.

Perceived value of the feedback between the two groups after Peyton’s step 4 (T1), and after the final, 6th repetition (T2; mean and standard deviation of six-point Likert scales from 6 = fully agree to 1 = completely disagree; M-W-U-Test, p-values).

Workload/skill-related demands perceived at T1 and T2

Both groups rated the overall skill-related demands as high, with no differences between the HFF and LFF group after step 4 of Peyton’s four-step approach (T1; HFF group 50.78 ± 12.0%; LFF group 47.15 ± 17.3%; p = .395; mean of scores from 0 = very low to 100 = very high using the National Aeronautics and Space Administration Task Load Index, NASA-TLX). After the final, sixth repetition (T2), both groups rated the task as less demanding compared to T1 (HFF group T1 50.78 ± 12.02, T2 40.51 ± 18.74, p = .003; LFF group T1 47.15 ± 17.35, T2 35.97 ± 16.58, p < .001), with no differences emerging between the HFF group and the LFF group at T2 (HFF 40.51 ± 18.74; LFF 35.97 ± 16.58; p = .407).

Skill-specific self-efficacy ratings (T0, T1 and T2)

Self-efficacy ratings related to competencies regarding nasogastric tube placement improved substantially over the course of the training (HFF p < .001; LFF p < .001), but there was no significant difference between the study groups prior to the training (T0), after step 4 of Peyton’s four-step approach (T1) or after the final, sixth repetition (T2; see Table 3).

Table 3.

Skill-specific self-efficacy ratings

| | High-frequency feedback group (HFF group) N = 23 | Low-frequency feedback group (LFF group) N = 24 | MWU p-value¹ |
|---|---|---|---|
| Prior to the training (T0) | 2.35 ± .71 | 2.33 ± .64 | .640 |
| After Peyton’s step 4 (T1) | 4.70 ± .59 | 4.86 ± .74 | .296 |
| After the final, sixth repetition (T2) | 5.27 ± .39 | 5.37 ± .40 | .872 |
| p-value² | <.001 | <.001 | |

¹ Mann-Whitney U test.

² Friedman test.

Self-efficacy ratings relating to five competencies in inserting a nasogastric tube between the two groups before the training (T0), after Peyton’s step 4 (T1), and after the final, 6th repetition (T2; mean and standard deviation of six-point Likert scales from 6 = fully agree to 1 = completely disagree; M-W-U-Test, p-values).

Independent video assessment: task-specific clinical skill performance by expert binary checklist rating (T1, T2)

For expert binary checklist ratings, an ANOVA with the between-subject factor ‘Group’ (HFF vs. LFF) and the within-subject factor ‘Time’ (T1 vs. T2) was conducted. The calculated ANOVA was not statistically significant for any effect (main effects and interaction; p = .147). Nevertheless, an exploratory post-hoc analysis was performed according to our predefined hypotheses. As expected, during step 4 of Peyton’s four-step approach (T1), no difference was found between the HFF group and the LFF group in the total score for specific clinical skill performance (p = .851; see Table 4). At T2, both groups scored higher on binary checklist ratings compared to T1 (p < .001), with the HFF group showing higher scores at T2, although not significantly (p = .093; see Table 4).

Table 4.

Task-specific clinical skill performance and global procedural performance

| | High-frequency feedback group (HFF group) N = 23 | Low-frequency feedback group (LFF group) N = 24 | p-value |
|---|---|---|---|
| Task-specific clinical skill performance (binary checklists) | | | |
| Peyton’s step 4 (T1) | 91.06 ± 7.48 | 91.42 ± 9.14 | .851¹ |
| Final, 6th repetition (T2) | 99.22 ± 2.25 | 96.04 ± 4.96 | .093¹ |
| p-value¹ | <.001 | <.001 | |
| Global procedural performance (global rating) | | | |
| Peyton’s step 4 (T1) | 5.31 ± 0.50 | 5.30 ± .64 | .941² |
| Final, 6th repetition (T2) | 5.95 ± 0.07 | 5.65 ± .48 | <.004² |
| p-value³ | <.001 | .002 | |

¹ LSD post-hoc tests.

² Mann-Whitney U test.

³ Wilcoxon signed-rank test (T1 vs. T2).

Performance ratings of the two groups in Peyton’s step 4 (T1) and in the final, 6th repetition (T2) in task-specific clinical skill performance (binary checklist rating as mean score in percent of maximum achievable points and standard deviation; checklist of 16 items with a minimum of 0 and a maximum of 16 points; ANOVA, p-values) and global procedural performance (global performance rating as mean score of global rating scales ± standard deviation; six-point Likert scale from 6 = very good to 1 = unsatisfactory; M-W-U-Test, p-values).

Independent video assessment: global procedural performance rating (T1, T2)

Global ratings of procedural performance assessed with the Integrated Procedural Performance Instrument (IPPI) also revealed no difference at the beginning of the training during step 4 of Peyton’s four-step approach (T1; p = .941). At T2, both groups scored higher in their global procedural performance compared to T1 (HFF group p < .001; LFF group p = .002). In addition, after the final, sixth repetition (T2), the HFF group achieved better scores than the LFF group (p = .004; see Table 4).

Inter-rater reliability for independent video raters

Standardized inter-rater reliability for independent video raters was .79 for the assessment of step 4 of Peyton’s four-step approach, and .75 for the evaluation of the final, sixth repetition when using binary checklist ratings, and .76 for the assessment of step 4 of Peyton’s four-step approach, and .81 for the evaluation of the final, sixth repetition when using global performance ratings.
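For readers who want to reproduce this kind of agreement measure, the sketch below computes Pearson's correlation coefficient between the scores of two raters. The function name and the example scores are hypothetical; they are not the study data.

```python
import math


def pearson_r(x, y):
    """Pearson's correlation coefficient between two equally long score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length with at least 2 values")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / math.sqrt(ss_x * ss_y)


# Hypothetical checklist totals given by two raters to the same five videos.
rater_1 = [14, 15, 16, 13, 16]
rater_2 = [15, 15, 16, 12, 16]
print(round(pearson_r(rater_1, rater_2), 2))
```

Note that a correlation coefficient captures how consistently two raters rank the performances, not whether they assign identical absolute scores.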

Discussion

In this randomized prospective study, we assessed the influence of frequency of expert feedback during redundant practice in the early acquisition of students’ clinical procedural skills. Both high- and low-frequency intermittent feedback resulted in a significant improvement of students’ clinical procedural skill performance as primary endpoint, in line with earlier findings [1,2,4,45]. High-frequency intermittent feedback, however, proved to result in an even better procedural performance compared to low-frequency intermittent feedback. Regarding the exploratory analyses of task-specific performance (as reflected by our binary checklist ratings), both groups benefited from the training but with no significant difference between the two groups. A limitation of our results is that high scores in skill performance were already found during the very first independent performance, which we attribute to the success of Peyton’s four-step framework of deconstruction and learners’ comprehension before actually performing the skill.

Regarding the investigated cohort, there was no difference between the two study groups prior to the experiment with respect to age, sex, prior medical education, number and field of chosen electives, general and skill-specific self-efficacy ratings relating to nasogastric tube placement, or individual learning styles. Skill-specific self-efficacy ratings increased from pre- to post-training in both groups, with no significant difference between the groups. Although the correlation between self-efficacy (reflecting a modification of physicians’ perception, motivation, and activity [46]) and superior objective performance measures is called into doubt in the literature [47], previously published research implies that higher self-efficacy in skills training results in more rigorous demands by the students for supervision during the performance of clinical skills at bedside on patient wards [46-48]. Our results strengthen the findings that, irrespective of the frequency of intermittent feedback, repetitive deliberate practice represents one of the most important factors to enhance self-efficacy.

A closer look at the learning curve (which depends on the complexity of the motor skill) shows that both groups’ performance started from the same level at the beginning of the training, with relatively high scores after the first four-step Peyton training, even before the first feedback (90%, see Table 4), and reached a proficiency level of 95% after five consecutive repetitions. Thus, we assume a medium degree of complexity compared to other settings [49-52].

The trainers’ feedback was aimed at aspects of the trainees’ performance that were better than average. The trainees’ very high rating of this feedback, together with their improved performance, is in line with previous findings suggesting both informational and motivational influences of such feedback (for a comprehensive review, see [8]). As the two groups rated the value of trainers’ feedback equally high, we attribute the effects observed in the study to the difference in feedback frequency. Notably, more feedback, more frequent feedback, or even concurrent feedback may not necessarily have a positive impact [19] and may even degrade learning [53]. In our study, we were able to show that intermittent feedback, at both low and high frequency, supports trainees’ performance in a complex motor skill.

This increase in performance among both study groups in terms of smoothness of the procedure (as reflected by our global rating scores) was more pronounced after a higher frequency of intermittent feedback. This is in contrast to other findings: receiving no feedback in a surgical simulator setting led to greater instrument smoothness than receiving feedback, even though, as expected, more mistakes occurred [21]. Overly intense feedback during the early stages of skill acquisition may even hinder learning [20]. The observation that reducing the frequency of performance-related feedback enhances motor skill learning has been described previously [54] but has not yet been satisfactorily explained. In our study, high-frequency intermittent feedback affected the secureness and smoothness of the procedure more than the accurate performance of the single sub-steps (task-specific clinical skill performance).

Limitations

Both groups already achieved high scores in their nasogastric skill performance during the very first independent performance, which could be attributed to the success of Peyton’s four-step framework of deconstruction and the learners’ comprehension before actually performing the skill. This leaves only a small scope for improvement; of note, however, there was still a significant effect for both groups. Notably, Peyton’s four-step framework does not include any scheduled specific feedback. In line with previous findings, our undergraduates reached mastery after their sixth repetition. For more experienced members of the medical community, i.e., graduates, reduced or delayed feedback as well as a more complex instruction might be more successful [19].

We measured training performance during practice and during the early stages of skills learning; at present, a final conclusion regarding retention and transfer of this performance to future practice is hard to draw. Our work group has addressed this apparent gap in the literature previously; our setting seems to provide more potential for retention than traditional bedside teaching [2,5].

Conclusion

In conclusion, the optimal benefit from feedback seems to be a question of timing and dosing. Regarding the pros and cons of frequency or timing of feedback, we were able to show that both high- and low-frequency intermittent positive feedback leave sufficient time for the learner for self-controlled practice as compared to continuous concurrent (= permanent) feedback [8]. Our intermittent feedback had a positive impact on skill-specific self-efficacy and on the learning curve of students’ clinical procedural skill acquisition, with a moderate reduction of cognitive workload over the training. In contrast, continuous concurrent (= permanent) feedback during skill acquisition may degrade the learning of skills: “It may be better to wait” [55] for a trainee to finish performing a defined sequence. In this sense, we conclude that both low-frequency and high-frequency positive feedback leave sufficient resources for the learner’s cognitive processing, with high-frequency positive feedback potentially being more demanding. Apparently, however, this still leaves sufficient resources for this group to take advantage of their additional intermittent feedback in order to achieve mastery.

Acknowledgments

We acknowledge financial support by Deutsche Forschungsgemeinschaft and Ruprecht-Karls-Universität Heidelberg within the funding programme Open Access Publishing.

Footnotes

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All authors substantially contributed to the conception and planning of the study as well as to the drafting of the manuscript. All authors read and approved the final manuscript. CN, JM, and HMB were responsible for conception and design of the study. JM undertook the video documentation and blinding for rating; blinded video rating was performed by HMB, MK, and PW. BB, JM, and CN organized the training sessions and served as tutors for instruction and feedback. CN and JM organized the assessment. CN performed the statistical analysis. CN, HMB, JJ, PW, and WH contributed to interpretation of data. CN, HMB, and JM drafted the manuscript; CN, HMB, JJ, JM, and WH wrote the manuscript.

Contributor Information

Hans Martin Bosse, Email: hansmartin.bosse@med.uni-duesseldorf.de.

Jonathan Mohr, Email: jonathan-mohr@gmx.com.

Beate Buss, Email: beate.buss@web.de.

Markus Krautter, Email: markus.krautter@med.uni-heidelberg.de.

Peter Weyrich, Email: pweyrich@hotmail.com.

Wolfgang Herzog, Email: wolfgang.herzog@med.uni-heidelberg.de.

Jana Jünger, Email: jana.juenger@med.uni-heidelberg.de.

Christoph Nikendei, Email: christoph.nikendei@med.uni-heidelberg.de.

References

- 1. Lynagh M, Burton R, Sanson-Fisher R. A systematic review of medical skills laboratory training: where to from here? Med Educ. 2007;41:879–887. doi: 10.1111/j.1365-2923.2007.02821.x.

- 2. Lund F, Schultz JH, Maatouk I, Krautter M, Moltner A, Werner A, et al. Effectiveness of IV cannulation skills laboratory training and its transfer into clinical practice: a randomized, controlled trial. PLoS One. 2012;7:e32831. doi: 10.1371/journal.pone.0032831.

- 3. Bradley P, Bligh J. One year’s experience with a clinical skills resource centre. Med Educ. 1999;33:114–120. doi: 10.1046/j.1365-2923.1999.00351.x.

- 4. Jünger J, Schäfer S, Roth C, Schellberg D, Friedman Ben-David M, Nikendei C. Effects of basic clinical skills training on objective structured clinical examination performance. Med Educ. 2005;39:1015–1020. doi: 10.1111/j.1365-2929.2005.02266.x.

- 5. Herrmann-Werner A, Nikendei C, Keifenheim K, Bosse HM, Lund F, Wagner R, et al. “Best practice” skills lab training vs. a “see one, do one” approach in undergraduate medical education: an RCT on students’ long-term ability to perform procedural clinical skills. PLoS One. 2013;8:e76354. doi: 10.1371/journal.pone.0076354.

- 6. Remmen R, Scherpbier A, van der Vleuten C, Denekens J, Derese A, Hermann I, et al. Effectiveness of basic clinical skills training programmes: a cross-sectional comparison of four medical schools. Med Educ. 2001;35:121–128. doi: 10.1046/j.1365-2923.2001.00835.x.

- 7. Remmen R, Derese A, Scherpbier A, Denekens J, Hermann I, van der Vleuten C, et al. Can medical schools rely on clerkships to train students in basic clinical skills? Med Educ. 1999;33:600–605. doi: 10.1046/j.1365-2923.1999.00467.x.

- 8. Wulf G, Shea C, Lewthwaite R. Motor skill learning and performance: a review of influential factors. Med Educ. 2010;44:75–84. doi: 10.1111/j.1365-2923.2009.03421.x.

- 9. Issenberg S, McGaghie WC, Petrusa ER, Lee Gordon D, Scalese RJ. Features and uses of high-fidelity medical simulations that lead to effective learning: a BEME systematic review. Med Teach. 2005;27:10–28. doi: 10.1080/01421590500046924.

- 10. Lammers RL. Learning and retention rates after training in posterior epistaxis management. Acad Emerg Med. 2008;15:1181–1189. doi: 10.1111/j.1553-2712.2008.00220.x.

- 11. Ericsson KA. An expert-performance perspective of research on medical expertise: the study of clinical performance. Med Educ. 2007;41:1124–1130. doi: 10.1111/j.1365-2923.2007.02946.x.

- 12. Konrad C, Schüpfer G, Wietlisbach M, Gerber H. Learning manual skills in anesthesiology: is there a recommended number of cases for anesthetic procedures? Anesth Analg. 1998;86:635–639. doi: 10.1213/00000539-199803000-00037.

- 13. McGaghie WC, Issenberg SB, Petrusa ER, Scalese RJ. Effect of practice on standardised learning outcomes in simulation-based medical education. Med Educ. 2006;40:792–797. doi: 10.1111/j.1365-2929.2006.02528.x.

- 14. Loukas C, Nikiteas N, Kanakis M, Moutsatsos A, Leandros E, Georgiou E. A virtual reality simulation curriculum for intravenous cannulation training. Acad Emerg Med. 2010;17:1142–1145. doi: 10.1111/j.1553-2712.2010.00876.x.

- 15. Uribe JI, Ralph WM, Jr, Glaser AY, Fried MP. Learning curves, acquisition, and retention of skills trained with the endoscopic sinus surgery simulator. Am J Rhinol. 2004;18:87–92.

- 16. Peyton JWR. Teaching & Learning in Medical Practice. Rickmansworth: Manticore Europe; 1998.

- 17. Auerbach M, Kessler D, Foltin JC. Repetitive pediatric simulation resuscitation training. Pediatr Emerg Care. 2011;27:29–31. doi: 10.1097/PEC.0b013e3182043f3b.

- 18. Kruglikova I, Grantcharov TP, Drewes AM, Funch-Jensen P. The impact of constructive feedback on training in gastrointestinal endoscopy using high-fidelity Virtual-Reality simulation: a randomised controlled trial. Gut. 2010;59:181–185. doi: 10.1136/gut.2009.191825.

- 19. Vickers JN, Livingston LF, Umeris-Bohnert S, Holden D. Decision training: the effects of complex instruction, variable practice and reduced delayed feedback on the acquisition and transfer of a motor skill. J Sports Sci. 1999;17:357–367. doi: 10.1080/026404199365876.

- 20. Stefanidis D, Korndorffer JR, Jr, Heniford BT, Scott DJ. Limited feedback and video tutorials optimize learning and resource utilization during laparoscopic simulator training. Surgery. 2007;142:202–206. doi: 10.1016/j.surg.2007.03.009.

- 21. Boyle E, Al-Akash M, Gallagher AG, Traynor O, Hill AD, Neary PC. Optimising surgical training: use of feedback to reduce errors during a simulated surgical procedure. Postgrad Med J. 2011;87:524–528. doi: 10.1136/pgmj.2010.109363.

- 22. Metheny NA, Meert KL, Clouse RE. Complications related to feeding tube placement. Curr Opin Gastroenterol. 2007;23:178–182. doi: 10.1097/MOG.0b013e3280287a0f.

- 23. De Aguilar-Nascimento JE, Kudsk KA. Clinical costs of feeding tube placement. J Parenter Enteral Nutr. 2007;31:269–273. doi: 10.1177/0148607107031004269.

- 24. Nikendei C, Zeuch A, Dieckmann P, Roth C, Schafer S, Volkl M, et al. Role-playing for more realistic technical skills training. Med Teach. 2005;27:122–126. doi: 10.1080/01421590400019484.

- 25. Nikendei C, Schilling T, Nawroth P, Hensel M, Ho AD, Schwenger V, et al. Integrated skills laboratory concept for undergraduate training in internal medicine. Dtsch Med Wochenschr. 2005;130:1133–1138. doi: 10.1055/s-2005-866799.

- 26. Schwarzer R, Jerusalem M. Generalized Self-Efficacy Scale. In: Weinman J, Wright S, Johnston M, editors. Measures in Health Psychology: A User’s Portfolio. Causal and Control Beliefs. Windsor, UK: NFER-NELSON; 1995. pp. 35–37.

- 27. Kolb AY. The Kolb Learning Style Inventory–Version 3.1 2005 Technical Specifications. 2005.

- 28. Lynch TG, Woelfl NN, Steele DJ, Hanssen CS. Learning style influences student examination performance. Am J Surg. 1998;176:62–66. doi: 10.1016/S0002-9610(98)00107-X.

- 29. Martin IG, Stark P, Jolly B. Benefiting from clinical experience: the influence of learning style and clinical experience on performance in an undergraduate objective structured clinical examination. Med Educ. 2000;34:530–534. doi: 10.1046/j.1365-2923.2000.00489.x.

- 30. McManus IC, Richards P, Winder BC, Sproston KA. Clinical experience, performance in final examinations, and learning style in medical students: prospective study. BMJ. 1998;316:345–350. doi: 10.1136/bmj.316.7128.345.

- 31. Coker CA. Consistency of learning styles of undergraduate athletic training students in the traditional classroom versus the clinical setting. J Athl Train. 2000;35:441–444.

- 32. Coker CA. Learning style consistency across cognitive and motor settings. Percept Mot Skills. 1995;81(3 Pt 1):1023–1026. doi: 10.2466/pms.1995.81.3.1023.

- 33. Nikendei C, Kraus B, Schrauth M, Weyrich P, Zipfel S, Herzog W, et al. Integration of role-playing into technical skills training: a randomized controlled trial. Med Teach. 2007;29:956–960. doi: 10.1080/01421590701601543.

- 34. Nikendei C. Legen einer Magensonde. In: Jünger J, Nikendei C, editors. Kompetenzzentrum für Prüfungen in der Medizin OSCE Prüfungsvorbereitung Innere Medizin. Stuttgart: Thieme; 2005. pp. 40–141.

- 35. Krautter M, Weyrich P, Schultz JH, Buss SJ, Maatouk I, Junger J, et al. Effects of Peyton’s four-step approach on objective performance measures in technical skills training: a controlled trial. Teach Learn Med. 2011;23:244–250. doi: 10.1080/10401334.2011.586917.

- 36. Veloski J, Boex JR, Grasberger MJ, Evans A, Wolfson DB. Systematic review of the literature on assessment, feedback and physicians’ clinical performance: BEME Guide No. 7. Med Teach. 2006;28:117–128. doi: 10.1080/01421590600622665.

- 37. Parmar S, Delaney CP. The role of proximate feedback in skills training. Surgeon. 2011;9(Suppl 1):S26–S27. doi: 10.1016/j.surge.2010.11.006.

- 38. Ende J. Feedback in clinical medical education. JAMA. 1983;250:777–781. doi: 10.1001/jama.1983.03340060055026.

- 39. Weyrich P, Celebi N, Schrauth M, Moltner A, Lammerding-Koppel M, Nikendei C. Peer-assisted versus faculty staff-led skills laboratory training: a randomised controlled trial. Med Educ. 2009;43:113–120. doi: 10.1111/j.1365-2923.2008.03252.x.

- 40. Tolsgaard MG, Gustafsson A, Rasmussen MB, Hoiby P, Muller CG, Ringsted C. Student teachers can be as good as associate professors in teaching clinical skills. Med Teach. 2007;29:553–557. doi: 10.1080/01421590701682550.

- 41. Weyrich P, Schrauth M, Kraus B, Habermehl D, Netzhammer N, Zipfel S, et al. Undergraduate technical skills training guided by student tutors–analysis of tutors’ attitudes, tutees’ acceptance and learning progress in an innovative teaching model. BMC Med Educ. 2008;8:18. doi: 10.1186/1472-6920-8-18.

- 42. Hart SG. Proceedings of the Human Factors and Ergonomics Society Annual Meeting. Volume 50. 2006. NASA-Task Load Index (NASA-TLX); 20 Years Later; pp. 904–908.

- 43. Regehr G, MacRae H, Reznick RK, Szalay D. Comparing the psychometric properties of checklists and global rating scales for assessing performance on an OSCE-format examination. Acad Med. 1998;73:993–997. doi: 10.1097/00001888-199809000-00020.

- 44. Kneebone R, Bello F, Nestel D, Yadollahi F, Darzi A. Training and assessment of procedural skills in context using an Integrated Procedural Performance Instrument (IPPI). Stud Health Technol Inform. 2007;125:229–231.

- 45. Cook DA, Hatala R, Brydges R, Zendejas B, Szostek JH, Wang AT, et al. Technology-enhanced simulation for health professions education: a systematic review and meta-analysis. JAMA. 2011;306:978–988. doi: 10.1001/jama.2011.1234.

- 46. Bandura A. Perceived self-efficacy in cognitive development and functioning. Educ Psychol. 1993;28:117–148. doi: 10.1207/s15326985ep2802_3.

- 47. Davis DA, Mazmanian PE, Fordis M, Van Harrison R, Thorpe KE, Perrier L. Accuracy of physician self-assessment compared with observed measures of competence: a systematic review. JAMA. 2006;296:1094–1102. doi: 10.1001/jama.296.9.1094.

- 48. Chen W, Liao S, Tsai C, Huang C, Lin C, Tsai C. Clinical skills in final-year medical students: the relationship between self-reported confidence and direct observation by faculty or residents. Ann Acad Med Singapore. 2008;37:3–8.

- 49. Young A, Miller JP, Azarow K. Establishing learning curves for surgical residents using Cumulative Summation (CUSUM) analysis. Curr Surg. 2005;62:330–334. doi: 10.1016/j.cursur.2004.09.016.

- 50. Van Rij AM, McDonald JR, Pettigrew RA, Putterill MJ, Reddy CK, Wright JJ. Cusum as an aid to early assessment of the surgical trainee. Br J Surg. 1995;82:1500–1503. doi: 10.1002/bjs.1800821117.

- 51. Schlup MM, Williams SM, Barbezat GO. ERCP: a review of technical competency and workload in a small unit. Gastrointest Endosc. 1997;46:48–52. doi: 10.1016/S0016-5107(97)70209-8.

- 52. Sutton RM, Niles D, Meaney PA, Aplenc R, French B, Abella BS, et al. Low-dose, high-frequency CPR training improves skill retention of in-hospital pediatric providers. Pediatrics. 2011;128:e145–e151. doi: 10.1542/peds.2010-2105.

- 53. Schmidt RA, Wulf G. Continuous concurrent feedback degrades skill learning: implications for training and simulation. Hum Factors. 1997;39:509–525. doi: 10.1518/001872097778667979.

- 54. Winstein CJ, Schmidt RA. Reduced frequency of knowledge of results enhances motor skill learning. J Exp Psychol Learn Mem Cogn. 1990;16:677. doi: 10.1037/0278-7393.16.4.677.

- 55. Walsh CM, Ling SC, Wang CS, Carnahan H. Concurrent versus terminal feedback: it may be better to wait. Acad Med. 2009;84(10 Suppl):S54–S57. doi: 10.1097/ACM.0b013e3181b38daf.