Abstract

Traditionally, the neural encoding of vestibular information is studied by applying either passive rotations or translations in isolation. However, natural vestibular stimuli are typically more complex. During everyday life, our self-motion is generally not restricted to one dimension, but rather comprises both rotational and translational motion that will simultaneously stimulate receptors in the semicircular canals and otoliths. In addition, natural self-motion is the result of self-generated and externally generated movements. However, to date, it remains unknown how information about rotational and translational components of self-motion is integrated by vestibular pathways during active and/or passive motion. Accordingly, here, we compared the responses of neurons at the first central stage of vestibular processing to rotation, translation, and combined motion. Recordings were made in alert macaques from neurons in the vestibular nuclei involved in postural control and self-motion perception. In response to passive stimulation, neurons did not combine canal and otolith afferent information linearly. Instead, inputs were subadditively integrated with a weighting that was frequency dependent. Although canal inputs were more heavily weighted at low frequencies, the weighting of otolith input increased with frequency. In response to active stimulation, neuronal modulation was significantly attenuated (∼70%) relative to passive stimulation for rotations and translations and even more profoundly attenuated for combined motion due to subadditive input integration. Together, these findings provide insights into neural computations underlying the integration of semicircular canal and otolith inputs required for accurate posture and motor control, as well as perceptual stability, during everyday life.

Keywords: head motion, rotation, sensory integration, translation, vestibular nuclei, voluntary

Introduction

As we navigate through our environment, our head moves in a complex 6D trajectory (3 rotational, 3 translational; Carriot et al., 2014). Importantly, the spatiotemporal complexity of natural head movements considerably exceeds that of conventional stimuli used in the laboratory. The angular and linear components of this motion are sensed by separate sensors (i.e., semicircular canals and otoliths, respectively) and then coded by distinct afferent fibers in the peripheral vestibular system. However, at the next stage of processing, semicircular canal and otolith afferents converge onto single neurons in the vestibular nuclei. This convergence is critical for the accurate encoding of head motion by these central neurons to ensure gaze and postural stability through the vestibulo-ocular and vestibulo-spinal reflexes, as well as accurate perception and motor coordination (for review, see Cullen, 2012).

The encoding of angular and linear motion by vestibular neurons has traditionally been studied by applying each stimulus separately. A major limitation of this approach is that one cannot determine how inputs from the semicircular canals and otoliths are combined when both are stimulated simultaneously, even though this is the most common situation in everyday life. Dickman and Angelaki (2002) reported that the responses of vestibular nuclei neurons to passively applied combined motion cannot be predicted from the linear addition of their responses to each component. However, it is not yet understood how neurons combine these inputs. There is evidence that the brain does not have independent access to separate estimates of angular and linear movements. For example, during combined passive stimulation, subjects perceive angular motion more accurately than linear motion (Ivanenko et al., 1997; MacNeilage et al., 2010). Moreover, the responses of vestibular nuclei neurons are markedly suppressed during active compared with passive motion when the motion stimulates a single vestibular sensor (canals: Roy and Cullen, 2001a, 2004; otoliths: Carriot et al., 2013). However, to date, neuronal responses have not been studied during more complex active motion.

To investigate how vestibular nuclei neurons integrate inputs from semicircular canal and otolith afferents, we recorded from single neurons in the vestibular nuclei during rotational motion, translational motion, and combined motion applied across the physiologically relevant frequency range. We found that neuronal responses to combined motion were consistently subadditive: they were less than predicted by the sum of a given neuron's sensitivities to rotation and translation. In response to passively applied motion, responses were described by a frequency-dependent weighted sum of canal and otolith inputs in which rotational weights decreased and translational weights increased with increasing frequency. During active motion, neuronal responses to rotation and translation alone were markedly suppressed relative to the passive condition (consistent with Roy and Cullen, 2001a; Carriot et al., 2013) and, importantly, were correspondingly subadditive in the combined case. Therefore, our findings describe for the first time how semicircular canal and otolith inputs are integrated by vestibular pathways. We speculate that the frequency dependency of this early computation constrains our perception of rotations versus translations during combined motion.

Materials and Methods

Three male rhesus monkeys (Macaca mulatta) were prepared for chronic extracellular recording using aseptic surgical techniques. All experimental protocols were approved by the McGill University Animal Care Committee and were in compliance with the guidelines of the Canadian Council on Animal Care.

Surgical procedures

Anesthesia protocols and surgical procedures have been described previously by Roy and Cullen (2001a). Briefly, under surgical levels of isoflurane (2–3% initially and 0.8–1.5% for maintenance), an eye coil was implanted behind the conjunctiva and a dental acrylic implant was fastened to the animal's skull using stainless steel screws. The implant held in place a stainless steel post used to restrain the animal's head and a stainless steel recording chamber to access the medial vestibular nucleus. Animals were given 2 weeks to recover from the surgery before any experiments were performed.

Data acquisition

During experiments, monkeys sat comfortably in a primate chair fixed to a linear sled mounted on a rotational servomotor, which allowed us to apply translation along the naso-occipital or interaural axes in the horizontal plane and rotation about the earth-vertical axis. Extracellular single-unit activity was recorded using tungsten microelectrodes (Frederick-Haer), and gaze and head angular positions were measured using a magnetic search coil technique described previously (Brooks and Cullen, 2009). Head and body yaw rotational velocities were measured using gyroscopes (Watson). Linear head and body accelerations were measured in 3D using linear accelerometers (ADXL330Z; Analog Devices). Gyroscopes and 3D linear accelerometers were firmly attached to the animal's head post and chair frame. The unit activity, gaze, head, and body signals from each experimental session were recorded on digital audiotape for later playback. During playback, each unit's isolation was carefully evaluated and action potentials were discriminated using a windowing circuit (BAK Electronics). Gaze, head, and body signals were low-pass filtered at 250 Hz (8-pole Bessel filter) and sampled at 1 kHz. All apparatus and data displays were controlled online with a UNIX-based real-time data-acquisition system (REX; Hayes et al., 1982).

Behavioral paradigms

Vestibular-only neuron characterization.

Monkeys were trained to visually follow a target (HeNe laser) projected, via a system of two galvanometer-controlled mirrors, onto a cylindrical screen located 60 cm away. The location of the vestibular nucleus was confirmed relative to that of the abducens nucleus, a structure easily identified based on its stereotypical discharge patterns during eye movements (Cullen and McCrea, 1993; Sylvestre and Cullen, 1999). Once localized, recordings were made from single vestibular nuclei neurons during an initial battery of paradigms designed to identify neurons that responded in a manner consistent with previous characterizations of a subclass of neurons termed vestibular-only (VO) neurons, which are sensitive to passive vestibular stimulation (rotation: Scudder and Fuchs, 1992; translation: Dickman and Angelaki, 2002; Carriot et al., 2013) but are insensitive to eye movements. First, each neuron's sensitivity to passively applied linear acceleration was confirmed by translating the monkey (head and body together in space) on the sled along the naso-occipital or interaural axes (1 Hz, ±0.2 g, where g = 9.81 m/s²) in complete darkness. If a neuron responded to translation along at least one axis, we next tested its sensitivity to angular velocity by rotating the monkey in yaw using the vestibular servomotor (1 Hz, 40°/s peak velocity). Finally, we confirmed that neurons were insensitive to eye movements by recording their activity during ocular fixation, saccades (±30°), and smooth pursuit (0.5 Hz, 40°/s peak velocity). Only cells that did not exhibit any eye velocity or eye position sensitivity were further studied with the stimuli outlined below.

Externally generated motion.

Neuronal sensitivities were next characterized more extensively in three different paradigms. First, the monkey was periodically rotated (±40°/s) about the yaw axis (diagram in Fig. 1A, top row) at six frequencies: 0.5, 1, 2, 3, 4, and 5 Hz. Second, passive translations (0.2 g) were applied along the naso-occipital and interaural axes of the linear sled (diagrams in Fig. 1B,C, top row) at the same six frequencies. Finally, at these frequencies, the monkey was periodically moved with combined translational and rotational motion such that the movement path followed a curved trajectory (black arrow in Fig. 3B, inset). Specifically, while the monkey's head was periodically translated along the naso-occipital axis (blue arrows in Fig. 3B, inset), the axis of translation was concurrently rotated (green arrow in Fig. 3B, inset). The resultant combined movements had a typical peak angular velocity of 40°/s, a peak naso-occipital acceleration of 0.25 g, and a peak interaural acceleration of 0.1 g.

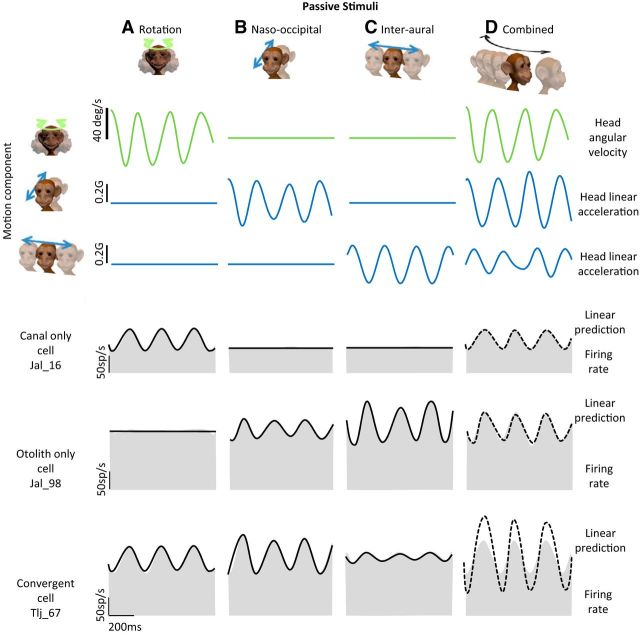

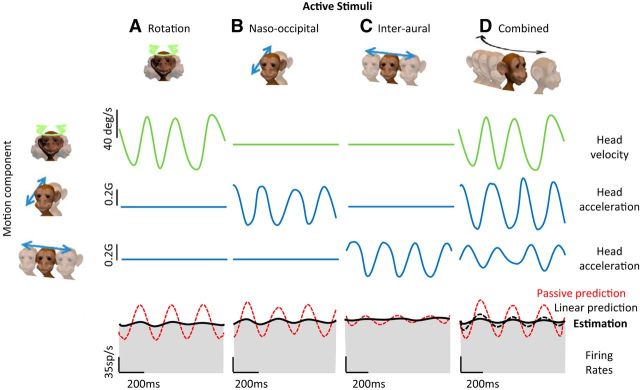

Figure 1.

Response characteristics of example VO neurons. A–D, Activity of three example neurons (canal-only cell, unit Jal_16; otolith-only cell, unit Jal_98; convergent cell, unit Tlj_67) during passive yaw rotation (A), passive naso-occipital translation (B), passive interaural translation (C), and combined movements comprising rotation and translation such that the head trajectory followed a curved path (D). The three top rows show the stimulus time courses. The three bottom rows show the cells' responses to these stimuli. An estimate of the firing rate based on the response sensitivity and phase obtained from Equations 2 and 3 (rotation) and Equations 4 and 5 (translation) (solid black trace) is superimposed on the actual firing rate (shaded gray, obtained by Kaiser filtering the spike trains; see Materials and Methods). Note that because canal-only and otolith-only neurons did not respond during translation and rotation, respectively, the average firing rate was used as the estimate of the firing rate in these conditions. The dashed line in D represents, for each neuron, a prediction of the firing rate based on a linear summation model that sums the two estimates of firing rate corresponding to the rotational and translational components of the curved-path stimuli. Note that for the illustrations here and in Figures 4 and 5, traces representing stimuli were filtered with the same Kaiser filter used to obtain the firing rate.

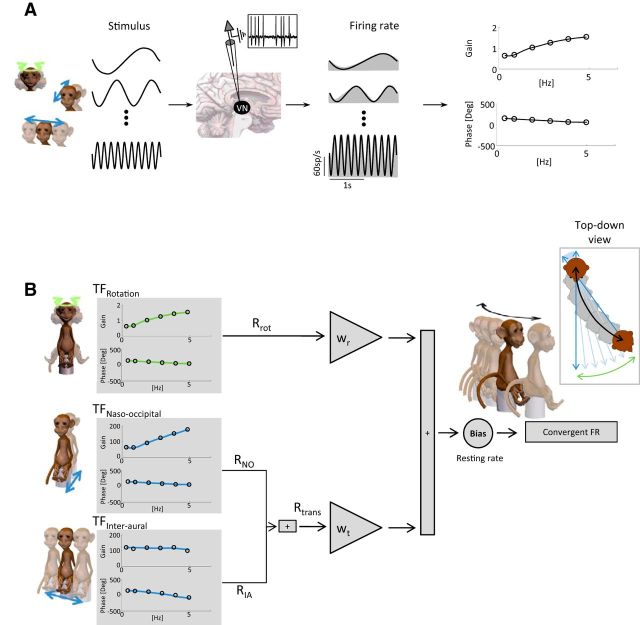

Figure 3.

Description of the weighted summation model. A, Stimuli were applied at different frequencies (left), and corresponding responses were recorded from VO neurons (yaw rotation responses are shown in the middle). For each stimulus frequency, the neuronal sensitivity and phase (right, circles) were determined to construct the Bode plot. Subsequently, a transfer function best describing these values was estimated (right, black lines). B, These transfer functions (gray boxes) were used to estimate the Rrot, RNO, and RIA components of the neuronal response. The naso-occipital and interaural components were then combined to obtain the Rtrans component of the response. wr and wt were systematically varied, and the pair giving the best fit to the cell's firing rate during the combined stimuli was retained. Inset, Top-down view of head displacement during the combined motion.

Self-generated motion.

After a neuron had been characterized during the three passive paradigms described above, we slowly and carefully released the rotational brake to let the monkey generate head rotations. Monkeys were trained to generate voluntary rotational head movements to track a presented food target. Once a given neuron's response had been fully characterized during voluntary rotations, we reengaged the rotational brake and released the brake of the linear sled, thereby allowing the monkey to make voluntary head translations along the naso-occipital or interaural axes. Finally, both translational and rotational brakes were released at the same time, allowing the monkey to produce combined movements comprising both translation and rotation. Monkeys were trained to generate movements with trajectories similar to those of the passive stimuli. Specifically, these self-generated head movements had predominant frequencies between 3 and 4.5 Hz (average 3.5 ± 0.8 Hz) and were characterized by an average peak angular velocity of 35 ± 6°/s, an average peak naso-occipital acceleration of 0.24 ± 0.08 g, and an average peak interaural acceleration of 0.07 ± 0.02 g, which were comparable to those of the passively applied stimuli (p > 0.05 for both peak velocity and accelerations).

Analysis of neuronal discharges

Data were imported into the MATLAB (The MathWorks) programming environment for analysis. Rotational head velocity and translational head acceleration signals were digitally low-pass filtered at 15 Hz. Estimates of the time-dependent firing rate were obtained by low-pass filtering the spike train using a Kaiser window with a cutoff frequency 1 Hz above the stimulus frequency (Cherif et al., 2008). The resting discharge of each unit was determined from ∼10 s of unit activity collected while the animal was stationary with its head restrained. To verify that a neuron was unresponsive to eye position and/or velocity, periods of steady fixation and saccade-free smooth pursuit were analyzed using a multiple regression analysis (Roy and Cullen, 1998, 2001b).
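A minimal sketch of the firing-rate estimate described above, in Python with NumPy rather than MATLAB: spikes are binned at the 1-kHz sampling rate and convolved with a Kaiser-windowed sinc low-pass filter whose cutoff sits 1 Hz above the stimulus frequency. The filter length (`numtaps`) and Kaiser shape parameter (`beta`) are illustrative assumptions, not values reported here or by Cherif et al. (2008), and `kaiser_firing_rate` is a hypothetical helper name.

```python
import numpy as np

def kaiser_firing_rate(spike_times, duration, stim_freq, fs=1000.0,
                       numtaps=1001, beta=8.0):
    """Smooth a spike train into a firing-rate estimate (sp/s).

    spike_times   : spike times in seconds
    stim_freq     : stimulus frequency (Hz); the cutoff is stim_freq + 1 Hz
    numtaps, beta : filter length (odd) and Kaiser shape (illustrative values)
    """
    n = int(round(duration * fs))
    train = np.zeros(n)
    idx = np.floor(np.asarray(spike_times) * fs).astype(int)
    idx = idx[(idx >= 0) & (idx < n)]
    np.add.at(train, idx, fs)            # each spike -> fs sp/s in its 1-sample bin

    cutoff = stim_freq + 1.0             # Hz
    m = np.arange(numtaps) - (numtaps - 1) / 2.0
    h = 2.0 * cutoff / fs * np.sinc(2.0 * cutoff / fs * m)   # ideal low-pass (sinc)
    h *= np.kaiser(numtaps, beta)        # Kaiser taper
    h /= h.sum()                         # unity gain at 0 Hz
    # Symmetric, centered kernel (odd numtaps): no phase shift relative to the spikes
    return np.convolve(train, h, mode="same")
```

Because the kernel is symmetric and centered, the estimated rate is not delayed relative to the stimulus, which matters when phases are later computed from it.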

A least-squares regression analysis was then used to estimate each unit's response characteristics during rotation or translation as follows:

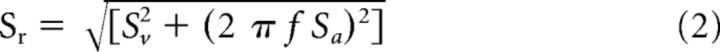

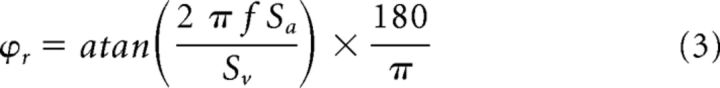

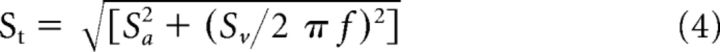

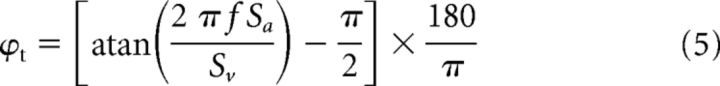

where f̂r is the estimated firing rate, Sv and Sa are coefficients representing sensitivities to head velocity and acceleration, respectively, b is a bias term representing the resting discharge, and Ḣ(t) and Ḧ(t) are head velocity and head acceleration, respectively. To evaluate the model's ability to estimate neuronal firing rate, the variance-accounted-for (VAF) was then computed as VAF = 1 − [var(f̂r − fr)/var(fr)], where fr represents the actual firing rate and a VAF of 1 indicates a perfect fit to the data (Cullen et al., 1996). Note that the VAF in such a linear model is equivalent to the square of the correlation coefficient (R²). Only data for which the firing rate was >10 sp/s were included in the optimization to avoid fitting a given neuron's response during epochs in which the neuron was driven into cutoff. The coefficients in Equation 1 were then used to determine each cell's head velocity sensitivity [Sr (sp/s)/(°/s)] and phase with respect to head velocity [ϕr (deg)] using the following equations (Sadeghi et al., 2007b; Sadeghi et al., 2009):

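$$S_r = \sqrt{S_v^2 + (2\pi f\, S_a)^2} \qquad (2)$$

$$\phi_r = \tan^{-1}\!\left(\frac{2\pi f\, S_a}{S_v}\right) \qquad (3)$$

where f is the stimulus frequency (Hz) and ϕr is expressed in degrees.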

To quantify each unit's response to translation, the same equation (Equation 1) was used. Each neuron's head acceleration sensitivity [St (sp/s)/G] and phase with respect to head acceleration [ϕt (deg)] were then computed from the fitted coefficients (Equations 4 and 5).

A neuron was judged to be unresponsive to a given stimulus (rotation or translation) if it was not significantly modulated by that stimulus, as quantified by an exclusion criterion of VAF < 0.1.
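The fitting steps above (Equation 1, the VAF, the sensitivity and phase, and the VAF < 0.1 exclusion) can be sketched as follows for a rotational record. This is a sketch under stated assumptions, not the authors' code: head acceleration is obtained by numerically differentiating head velocity with `np.gradient`, Equations 2 and 3 are assumed to take the standard form Sr = √(Sv² + (2πf·Sa)²) and ϕr = tan⁻¹(2πf·Sa/Sv), and `np.arctan2` is used, which equals that arctangent whenever Sv > 0. The function name and returned field names are hypothetical.

```python
import numpy as np

def fit_rotation_response(fr, head_vel, fs, stim_freq, min_rate=10.0):
    """Fit fr_hat = b + Sv*Hdot + Sa*Hddot (Equation 1) by least squares.

    fr        : firing rate (sp/s), same length as head_vel
    head_vel  : head velocity (deg/s) sampled at fs (Hz)
    stim_freq : stimulus frequency f (Hz) used for Sr and phi_r
    """
    fr = np.asarray(fr, dtype=float)
    head_vel = np.asarray(head_vel, dtype=float)
    head_acc = np.gradient(head_vel, 1.0 / fs)      # numerical derivative (deg/s^2)
    keep = fr > min_rate                            # drop epochs near cutoff
    X = np.column_stack([np.ones(keep.sum()), head_vel[keep], head_acc[keep]])
    coef, *_ = np.linalg.lstsq(X, fr[keep], rcond=None)
    b, sv, sa = coef
    fr_hat = X @ coef
    vaf = 1.0 - np.var(fr_hat - fr[keep]) / np.var(fr[keep])
    w = 2.0 * np.pi * stim_freq
    return {
        "b": b, "Sv": sv, "Sa": sa, "VAF": vaf,
        "Sr": np.hypot(sv, w * sa),                    # (sp/s)/(deg/s)
        "phi_r": np.degrees(np.arctan2(w * sa, sv)),   # deg re head velocity
        "responsive": vaf >= 0.1,                      # exclusion criterion
    }
```

For a 1-Hz rotation, a neuron with Sv = 0.5 and Sa = 0.01 would yield Sr ≈ 0.50 (sp/s)/(°/s) and a phase lead of about 7° relative to head velocity.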

We next characterized responses of central vestibular neurons during combined movements. Our rationale was that (1) canal and otolith afferent responses to rotational and linear motion are well described by linear transfer functions (for review, see Goldberg, 2000), which predict increasing gain as a function of frequency, and (2) afferent-target neurons in the vestibular nuclei display dynamic responses to rotations or translations applied alone that are similar to those of semicircular canal and otolith afferents, respectively (Massot et al., 2012; Carriot et al., 2013). Accordingly, we first assessed whether simple linear addition could explain responses to combined stimulation by predicting the modulation with a simple “linear summation model” (Alvarado et al., 2007) that sums the two estimates of firing rate due to the rotational and translational components of the self-motion. This model effectively adds the canal- and otolith-driven components of the neuronal response. To characterize each unit's response to the combined stimuli, Bode plots of the sensitivities (Sr and St) and phases (ϕr, ϕt) were used to estimate the best transfer functions for rotation as well as translation (naso-occipital and interaural), respectively (Fig. 3A). Using these transfer functions, we first estimated the rotational (Rrot), naso-occipital (RNO), and interaural (RIA) components of the neuronal response. The naso-occipital and interaural components were then combined to obtain the translational (Rtrans) component of the response.
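One way to generate the Rrot, RNO, and RIA components from fitted transfer functions, and their linear sum, is to filter each recorded stimulus through its transfer function in the frequency domain. The sketch below makes several assumptions not stated in the text: transfer functions are passed as Python callables H(s), filtering uses NumPy's real FFT (so the record is treated as periodic and edge effects are ignored), Rtrans is taken as the sum RNO + RIA, and the resting discharge b is added once. The function names are hypothetical.

```python
import numpy as np

def apply_transfer_function(stimulus, fs, H):
    """Filter a stimulus through a linear transfer function H(s), s = j*2*pi*f.

    Uses the real FFT, which treats the record as periodic; edge effects
    are ignored (assumption).
    """
    stimulus = np.asarray(stimulus, dtype=float)
    n = stimulus.size
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)           # Hz
    spectrum = np.fft.rfft(stimulus)
    return np.fft.irfft(H(2j * np.pi * freqs) * spectrum, n=n)

def linear_summation_prediction(b, rot_vel, acc_no, acc_ia, fs, H_rot, H_no, H_ia):
    """Linear prediction b + Rrot + Rtrans, with Rtrans = RNO + RIA (assumption)."""
    r_rot = apply_transfer_function(rot_vel, fs, H_rot)        # canal-driven part
    r_trans = (apply_transfer_function(acc_no, fs, H_no)       # otolith-driven parts
               + apply_transfer_function(acc_ia, fs, H_ia))
    return b + r_rot + r_trans, r_rot, r_trans
```

As a usage example, H_rot(s) = 0.5 + 0.01·s applied to head velocity reproduces Equation 1 with Sv = 0.5 and Sa = 0.01, since multiplying by s in the frequency domain differentiates velocity into acceleration.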

As detailed in the Results, the linear summation model typically overestimated neuronal responses (termed the “linear prediction” in Fig. 1). Accordingly, we next characterized neuronal responses using a “weighted summation model” in which we estimated the weights for rotation (wr) and translation (wt) that gave rise to the best fit to the firing rate (f̂r) of the cell during the combined stimuli (Fig. 3B) as follows:

$$\hat{fr}(t) = b + w_r\, R_{rot}(t) + w_t\, R_{trans}(t)$$

Note that this model contrasts with the simple linear summation model because the latter assumes that wr = 1 and wt = 0 for canal-only neurons, whereas wr = 0 and wt = 1 for otolith-only neurons. Similarly, for convergent cells, the linear summation model assumes equal weights of 1 for both wr and wt.
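The weight search for the weighted summation model can be sketched as a grid search over (wr, wt) that minimizes the squared error between the measured firing rate and b + wr·Rrot + wt·Rtrans. The search range (0 to 1.5 in steps of 0.01), the treatment of the resting discharge b as a fixed offset, and the helper name `fit_weights` are assumptions. Because the squared error is quadratic in the two weights, it is expanded once in terms of dot products so that the whole grid is evaluated without an explicit loop.

```python
import numpy as np

def fit_weights(fr, b, r_rot, r_trans, grid=None):
    """Grid search for (wr, wt) minimizing sum((fr - b - wr*r_rot - wt*r_trans)**2)."""
    if grid is None:
        grid = np.linspace(0.0, 1.5, 151)      # hypothetical range, step 0.01
    e = np.asarray(fr, dtype=float) - b        # modulation to be explained
    r_rot = np.asarray(r_rot, dtype=float)
    r_trans = np.asarray(r_trans, dtype=float)
    # Dot products needed to expand the squared error as a quadratic in (wr, wt)
    s_ee, s_er, s_et = e @ e, e @ r_rot, e @ r_trans
    s_rr, s_rt, s_tt = r_rot @ r_rot, r_rot @ r_trans, r_trans @ r_trans
    wr, wt = np.meshgrid(grid, grid, indexing="ij")
    sse = (s_ee - 2 * wr * s_er - 2 * wt * s_et
           + wr**2 * s_rr + 2 * wr * wt * s_rt + wt**2 * s_tt)
    i, j = np.unravel_index(np.argmin(sse), sse.shape)
    return grid[i], grid[j]
```

Since the error surface is a quadratic bowl, ordinary least squares would return the same optimum up to the grid resolution; the grid simply mirrors the “systematically varied” procedure. Restricting the grid for wt (or wr) to zero gives the single-parameter fits used for canal-only (or otolith-only) cells.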

Results are reported (and plotted) as means ± SEM and the level of statistical significance was set at p < 0.05 using Student's t tests.

Results

Single unit recordings were made from a distinct population of vestibular nuclei neurons, termed VO neurons, which are responsive to passive vestibular stimulation but exhibit no sensitivity to eye movement (Scudder and Fuchs, 1992; Cullen and McCrea, 1993; McCrea et al., 1999). Notably, these neurons receive vestibular afferent input and their outputs mediate postural reflexes as well as perception of self-motion and spatial orientation (for review, see Cullen, 2012). Recordings were made from VO neurons (n = 52) while head-restrained monkeys were passively moved—head and body together—relative to space. Based on their responses to rotational or translational stimuli, neurons were classified into three distinct groups: (1) canal-only neurons responded only to rotation (n = 12), (2) otolith-only neurons responded only to translation (n = 13), and (3) convergent (i.e., canal + otolith) neurons responded to both translation and yaw rotation (n = 27).

Figure 1, A–C, shows the responses of three example neurons, namely a canal-only, an otolith-only, and a convergent neuron, to yaw rotation (Fig. 1A), naso-occipital translation (Fig. 1B), and interaural translation (Fig. 1C). By definition, the canal-only cell was strongly modulated by rotational stimulation [sensitivity: 0.88 (sp/s)/(°/s)] but not by translation. In contrast, the otolith-only cell did not respond to rotational stimuli but responded to translations along both the naso-occipital and interaural axes [naso-occipital: 130 (sp/s)/G; interaural: 250 (sp/s)/G]. Finally, the convergent cell responded to both rotation and translation, with stronger responses for naso-occipital than interaural translations [rotation: 0.97 (sp/s)/(°/s); naso-occipital: 209 (sp/s)/G; interaural: 42 (sp/s)/G]. Note that, overall, the average neuronal sensitivities during naso-occipital and interaural translations were comparable (p > 0.05) for the populations of otolith-only [176 ± 5 vs 188 ± 4 (sp/s)/G] and convergent cells [205 ± 4 vs 231 ± 3 (sp/s)/G]. Moreover, the rotational and translational sensitivities of convergent neurons [rotation: 0.78 ± 0.06 (sp/s)/(°/s); naso-occipital translation: 205 ± 4 (sp/s)/G; interaural translation: 231 ± 3 (sp/s)/G] were not significantly different from those of canal-only [0.79 ± 0.09 (sp/s)/(°/s)] and otolith-only [naso-occipital: 176 ± 5 (sp/s)/G; interaural: 188 ± 4 (sp/s)/G] neurons, respectively (p > 0.1).

Responses during externally applied simultaneous rotation and translation

During natural everyday activities, our semicircular canal and otolith sensors are generally activated simultaneously. Before this study, it was unclear how central vestibular neurons combine these incoming sensory inputs from the periphery. Theoretically, this integration could be additive, subadditive, or superadditive, since each of these scenarios has been observed in other sensory systems (for review, see Stein and Stanford, 2008). Interestingly, based on perceptual and eye motion responses, Zupan et al. (2002) proposed a “sensory weighting model” in which semicircular canal and otolith inputs are subadditively integrated with visual input. Consistent with this proposal, it has been reported that cortical neurons in area MSTd subadditively integrate otolith and visual inputs during linear self-motion (for review, see Angelaki et al., 2009). Here, to directly investigate the integration of semicircular canal and otolith inputs at the first stage of central vestibular processing, we quantified the responses of vestibular nuclei neurons during combined rotational/linear self-motion. Figure 1D illustrates the example neurons' responses during such combined movements, in which the applied head motion followed a periodic, curved trajectory (Fig. 3B, inset). The prediction of the linear addition model, based on each neuron's sensitivity to pure rotation and pure translation, is superimposed (dashed lines). Note that, during the combined stimulus, the firing rates of both the example canal-only and otolith-only neurons were well predicted by their responses to rotation and translation alone, respectively (VAF = 0.77 and 0.75, respectively). However, the firing rate of the convergent neuron was not well predicted by the sum of its responses to rotation and translation applied alone; instead, the linear addition model overestimated the firing rate of the convergent neuron (dashed line in Fig. 1D, bottom).

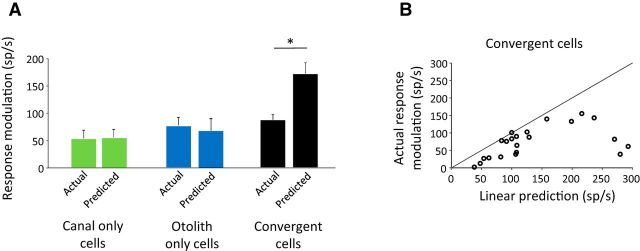

Across our population of convergent neurons, the depth of modulation (i.e., peak-to-peak modulation) of the actual firing rate was 49% lower (p < 0.001) than that predicted by the linear addition model (Fig. 2A, black bars; 87 ± 9.9 vs 172 ± 19.9 sp/s for actual vs predicted firing rate). In contrast, the actual depth of modulation for canal-only and otolith-only neurons was not significantly different from that predicted from their responses to rotation and translation delivered alone, respectively (Fig. 2A, green and blue bars). Figure 2B further illustrates that convergent neuron responses are subadditive during combined stimulation across the population: on a cell-by-cell basis, the modulation of all but one convergent neuron was lower than that predicted by the linear sum of its responses to rotation and translation applied alone.
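As a check on the population numbers above, the depth of modulation is the peak-to-peak range of a firing-rate trace, and the relative shortfall of the actual versus predicted modulation follows directly from the two reported means. The helper names are hypothetical; note also that a ratio of population means need not equal the mean of per-cell ratios.

```python
import numpy as np

def modulation_depth(rate):
    """Peak-to-peak modulation (sp/s) of a firing-rate trace."""
    rate = np.asarray(rate, dtype=float)
    return float(rate.max() - rate.min())

def percent_reduction(actual, predicted):
    """Percentage by which the actual modulation falls below the prediction."""
    return 100.0 * (1.0 - actual / predicted)

# Population means for convergent neurons (actual vs linear prediction, sp/s)
print(round(percent_reduction(87.0, 172.0), 1))  # → 49.4
```

The result, about 49%, matches the reduction reported for the population.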

Figure 2.

Performance of the linear addition model in predicting VO neurons' modulation in response to passive stimuli. A, Average modulation depth measured from the actual firing rate and from the prediction of the firing rate based on a linear summation model for the three distinct groups of VO neurons. B, Comparison of the depth of modulation between the actual firing rate and a prediction of the firing rate based on a linear summation model for the population of convergent neurons during combined passive movements.

As noted above, our primary goal was to understand how vestibular nuclei neurons integrate inputs from semicircular canal and otolith afferents during self-motion. Accordingly, we next investigated whether the integration of canal and otolith inputs varied as a function of stimulation frequency. To this end, we recorded neuronal responses during either rotation or translation alone across the physiologically relevant frequency range of 0.5–5 Hz (Fig. 3A). We computed neuronal response sensitivities and phases for each frequency of stimulation and then fit transfer functions to characterize the rotational and translational response dynamics of each neuron (Fig. 3B). In addition, neuronal responses were recorded during combined rotation and translation across this same frequency range, and the weights for the rotation and translation transfer functions (wr and wt, respectively) that gave rise to the best estimate of firing rate were computed.

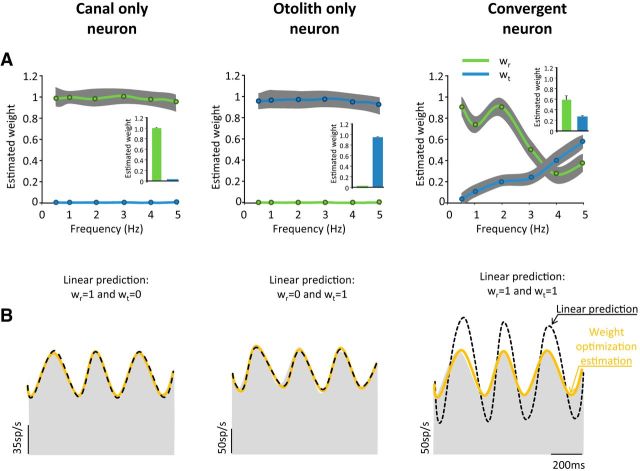

The neuronal weights are shown for our populations of canal-only, otolith-only, and convergent cells in Figure 4. To estimate the response of canal-only neurons, wt was set to zero while wr was varied to obtain the optimal fit to the neuronal response. A complementary procedure was performed for otolith-only cells by setting wr to zero and varying wt. For these two groups of neurons, the optimized weight for the single free parameter was constant and not significantly different from 1 (p > 0.5) across the entire frequency range (canal-only cells, 1.01 ± 0.06; otolith-only cells, 0.96 ± 0.06). We next estimated the response of convergent neurons by allowing both weights (wr and wt) to vary freely. If these neurons linearly added canal and otolith inputs, both estimated weights should be 1. However, in striking contrast to the weights estimated for the canal-only and otolith-only neurons, wr and wt were both lower than 1 over the entire frequency range (Fig. 4, right). Therefore, unlike canal-only and otolith-only neurons, convergent neurons subadditively integrate canal and otolith inputs. On average across the frequency range tested, wt was significantly lower than wr (0.24 ± 0.02 vs 0.56 ± 0.1, respectively; p = 0.001; Fig. 4A, right, inset). Specifically, wt was significantly lower than wr at lower frequencies (up to 3 Hz; p = 0.01), but the two were comparable at frequencies >3 Hz (p > 0.05; Fig. 4A, right) because wr decreased and wt increased with stimulus frequency. Figure 4B shows the linear predictions (dashed lines) and best fits (orange lines) for the three example neurons during 5 Hz stimulation. The example canal-only and otolith-only neurons were well fit by models with wr = 1 and wt = 1, respectively (Fig. 4B, left and center, dashed lines), whereas the convergent cell's response was overestimated by a model with both weights set to 1 (Fig. 4B, right, dashed line). This discrepancy is consistent with the subadditive integration of canal and otolith inputs that we observed for convergent neurons.

Figure 4.

Performance of the weighted summation model during combined stimulation. A, Population-averaged weights for rotation (green) and translation (blue) across frequencies. The weights of the canal-only and otolith-only cells did not vary with frequency. In contrast, the weights for the convergent cells changed with frequency: wr decreased and wt increased with frequency. On average, convergent neurons had significantly lower wt than wr (right, inset: 0.24 ± 0.02 vs 0.56 ± 0.1, respectively; p = 0.001). B, Linear predictions (dashed line) and weight optimization estimates (orange line) for the responses of the three cells presented in Figure 1 during combined stimuli. For the example canal-only and otolith-only neurons, the linear prediction and the weight optimization did not differ. However, for the convergent neuron, although the weighted summation model fit the firing rate well, the linear prediction overestimated the response of the cell.

Together, the findings of our experiments in which combined motion was passively applied show that VO neurons do not linearly combine rotational and translational inputs, but rather that this integration is subadditive.

Responses during self-generated simultaneous rotation and translation

In everyday life, vestibular stimulation is often the result of self-generated motion comprising angular and linear components (Carriot et al., 2014). Accordingly, we next investigated how convergent vestibular nuclei neurons integrate inputs from semicircular canal and otolith afferents during self-generated motion. Once a given neuron's passive vestibular sensitivities in response to yaw rotation, translation, and combined stimuli had been fully characterized in the head-restrained condition described above (i.e., Fig. 1), we released the head restraint to allow the monkey to voluntarily rotate its head about the vertical axis, translate its head along both axes (naso-occipital and interaural), and produce rotation and translation at the same time such that the head followed a curved trajectory similar to that of the passive stimulation (i.e., combined motion). During the transition between head-restrained and head-unrestrained conditions, the waveform of each neuron's action potential was carefully monitored to ensure that the cell remained undamaged and well isolated (see Materials and Methods). The majority of convergent VO neurons remained sufficiently well isolated (n = 22/27) during voluntary movements (i.e., rotation, translation, and combined movements).

Compared with their modulation in response to either passive rotation or translation alone, neuronal responses to comparable self-generated movements were suppressed. Overall, the reduction in vestibular sensitivity observed in the active condition was consistent with prior characterizations showing an ∼70% decrease in the neuronal modulation during both types of active movements (rotation: Roy and Cullen, 2001a; translation: Carriot et al., 2013). Figure 5, A–C, shows examples of the reduced modulation during active movements for the same example convergent neuron shown in Figure 1. Although this neuron responded robustly to passive rotations, naso-occipital translations, and interaural translations, it was relatively unresponsive to comparable actively generated movement in each of these three dimensions (compare gray-shaded firing rates and red dashed lines).

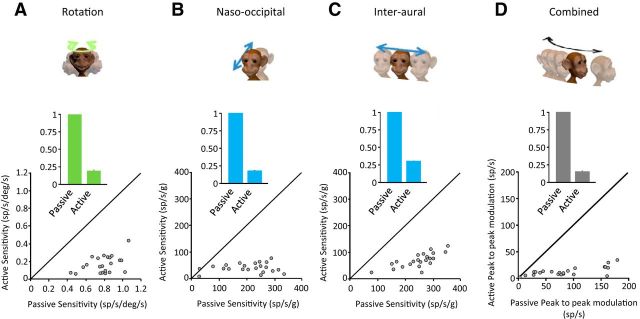

Figure 5.

VO neurons' responses during voluntary movements. A–C, Activity of the same example convergent neuron presented in Figure 1 (unit Tlj_67) during self-generated rotation (A), naso-occipital head translation (B), and interaural head translation (C). Superimposed on the firing rate traces are response predictions based on the neuron's sensitivity to passive stimuli (red dashed lines) and the best fits (solid black trace) provided by the linear model (Eq. 2, 3 for rotation; Eq. 4, 5 for translation). D, Activity of the same example convergent neuron during combined stimuli (i.e., curved path trajectory). The estimation (solid black trace) is the best fit to the neuronal response; the red dashed trace represents the prediction computed based on the Bode plot and weights obtained during passive stimulation (i.e., passive prediction). The black dashed line represents a prediction of the firing rate based on a linear summation model that sums the two estimates of firing rates corresponding to the rotational and translational components of the curved path stimuli during voluntary motion (i.e., linear prediction).

We next quantified responses during combined active motion to determine how convergent neurons integrate simultaneous semicircular canal and otolith afferent input. Figure 5D shows the response of our example convergent neuron to self-generated combined motion. Again, this neuron was typical in that its response was significantly suppressed compared with its response to similar passive motion (cf. shaded firing rates in Figs. 5D and 1D, bottom row). We quantified this attenuation by first obtaining the best estimate of the neuronal response during active movement (Fig. 5D, solid black line) and then computing a prediction of firing rate using a weighted summation model based on the transfer functions and weights obtained during passive stimulation (Fig. 5D, red dashed line). If the response characteristics remained unchanged during active combined motion (relative to the passive condition), this prediction would be expected to match the actual firing rate. However, responses during voluntary movement were strongly attenuated (cf. red dashed and solid black lines superimposed on the firing rate in Fig. 5D).

Across our population of neurons, responses during active head motion were significantly attenuated relative to those predicted by sensitivities to passive motion (78.5 ± 4.1%, 81.9 ± 3.9%, 69.9 ± 3.2%, and 85.2 ± 2.1% for rotations, naso-occipital translations, interaural translations, and combined motion, respectively). This attenuation is summarized for each motion condition in the histograms shown in Figure 6 (insets). Comparison on a cell-by-cell basis revealed that individual convergent neurons displayed consistently lower modulation than predicted by their sensitivities to comparable passive motion. Accordingly, the response of a given convergent neuron was significantly reduced during active motion regardless of whether the motion was purely rotational, purely translational, or a combination of both.
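The attenuation values above express how far each neuron's active-motion sensitivity falls below the sensitivity predicted from passive motion. A minimal sketch of that arithmetic follows, assuming attenuation is computed per cell as 100 × (1 − active / passive-predicted) and summarized as mean ± SEM. The exact formula used in the study and the sensitivity values below are illustrative assumptions, not data from this study.

```python
import numpy as np


def attenuation_percent(active_sens, passive_pred_sens):
    """Per-cell percent reduction of active sensitivity relative to the
    sensitivity predicted from passive motion (assumed definition)."""
    active = np.asarray(active_sens, dtype=float)
    passive = np.asarray(passive_pred_sens, dtype=float)
    return 100.0 * (1.0 - active / passive)


def mean_sem(values):
    """Population mean and standard error of the mean (SEM)."""
    values = np.asarray(values, dtype=float)
    return values.mean(), values.std(ddof=1) / np.sqrt(values.size)


# Hypothetical sensitivities (arbitrary units) for four illustrative cells.
passive_pred = [1.0, 0.8, 1.2, 0.5]
active = [0.2, 0.2, 0.3, 0.1]

att = attenuation_percent(active, passive_pred)
mean_att, sem_att = mean_sem(att)
print(f"attenuation: {mean_att:.1f} ± {sem_att:.1f}%")
```

Computing attenuation per cell before averaging mirrors the cell-by-cell comparison described in the text, rather than comparing population-mean sensitivities.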

Figure 6.

Population summary of the convergent neurons' responses during active versus passive motion. A–D, Comparison of neuronal sensitivity during active versus passive rotation, translation, and combined stimulation. Note that all data points fall below the unity line, demonstrating a marked reduction in the sensitivity of convergent neurons during active motion. Insets, Mean attenuation of neuronal sensitivity during rotation (green), translation (blue), and combined motion (gray).

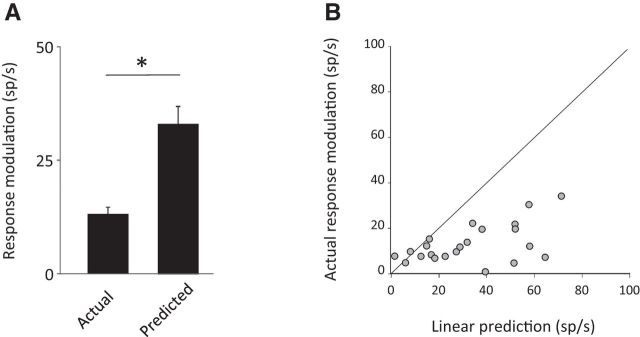

Upon comparing the four plots shown in Figure 6, we hypothesized that neuronal responses are most dramatically suppressed in the combined motion condition, during which canal and otolith afferents are simultaneously activated. To test this possibility, we computed a prediction of each neuron's response to active combined motion based on its sensitivities to active rotation and active translation alone. Consistent with this hypothesis, the linear prediction overestimated the response of the example neuron during active combined motion (Fig. 5D; cf. black dashed line and shaded firing rate). Overall, for the population of convergent neurons, the modulation depth of the actual firing rate was lower than that predicted by a linear summation model (13 ± 1.6 vs 33 ± 3.9 sp/s for actual vs predicted firing rate; Fig. 7A). Furthermore, comparison on a cell-by-cell basis (Fig. 7B) revealed that the majority of convergent neurons were subadditive in the active condition. Taken together, our results establish that vestibular nuclei neurons subadditively integrate inputs from semicircular canal and otolith afferents during self-motion. Specifically, we showed that the integration of self-motion signals is subadditive regardless of whether head movements are self-generated or externally generated, and that the selective encoding of externally applied versus actively generated motion is a general principle governing the responses of all types of VO neurons (i.e., canal-only, otolith-only, and convergent) during individual rotations or translations and during combined movements.
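The linear summation test described above can be sketched as follows. This sketch makes several assumptions. The rotational and translational components are modeled as sinusoids at a single frequency with hypothetical amplitudes, phases, and resting rate. Modulation depth is taken as half the peak-to-peak excursion of the predicted firing rate. The study's actual estimation procedure is not reproduced here. The final line applies the same comparison to the population means reported above (13 vs 33 sp/s).

```python
import numpy as np


def modulation_depth(rate):
    """Half the peak-to-peak excursion of a firing-rate trace (sp/s)."""
    rate = np.asarray(rate, dtype=float)
    return 0.5 * (rate.max() - rate.min())


def linear_prediction(baseline, rot_component, trans_component):
    """Linear summation model: resting rate plus the response components
    estimated from active rotation and active translation alone."""
    return baseline + np.asarray(rot_component) + np.asarray(trans_component)


def is_subadditive(actual_depth, predicted_depth):
    """True when the measured modulation falls short of the linear sum."""
    return actual_depth < predicted_depth


# Hypothetical 1-Hz components (sp/s) sampled at 1 kHz for 2 s.
t = np.linspace(0.0, 2.0, 2001)
rot = 20.0 * np.sin(2 * np.pi * t)
trans = 15.0 * np.sin(2 * np.pi * t + np.pi / 4)
predicted = linear_prediction(60.0, rot, trans)
predicted_depth = modulation_depth(predicted)

# Population means reported in the text: actual 13 sp/s, predicted 33 sp/s.
print(is_subadditive(13.0, 33.0), round(13.0 / 33.0, 2))
```

The two components need not be in phase. For that reason, the depth of the linear prediction equals the magnitude of their phasor sum (about 32.4 sp/s here) rather than the sum of their amplitudes (35 sp/s).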

Figure 7.

Subadditive integration of vestibular inputs during voluntary self-motion. A, Average modulation depth of the actual firing rate and of a prediction of the firing rate based on a linear summation model for convergent VO neurons during combined active movements. B, Cell-by-cell comparison of the modulation depth of the actual firing rate and of the linear summation prediction for the population of convergent neurons during combined active movements.

Discussion

In this study, we addressed the question of how the brain integrates semicircular canal and otolith information during complex self-motion that simultaneously stimulates both sensory organs. We found that neurons at the first central stage of vestibular processing subadditively integrate these inputs during both active and passive motion. Notably, a given neuron's response to combined motion was described by a weighted sum of its responses to passive rotation and translation. In addition, this weighting was frequency dependent: whereas canal inputs were more heavily weighted at low frequencies, the weighting of otolith inputs increased with stimulus frequency. We suggest that these findings provide important new insight into the neural computations underlying the integration of semicircular canal and otolith inputs required for postural control and accurate motor responses, as well as into the brain's strategy for estimating self-motion.
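The weighted-sum description can be made concrete with ordinary least squares. The sketch below rests on assumptions made for illustration only. The rotational and translational predictors are taken to be the neuron's passive responses to each component at one stimulus frequency. The combined-motion rate is modeled as a baseline plus w_rot and w_trans times those predictors. The synthetic data, the weights 0.6 and 0.4, and the function name `fit_weights` are hypothetical. Repeating the fit at each stimulus frequency would yield weight-versus-frequency curves analogous to those described above. The study's own model equations are defined elsewhere in the paper and are not reproduced here.

```python
import numpy as np


def fit_weights(rot_pred, trans_pred, combined_rate):
    """Least-squares fit of combined_rate ≈ b + w_rot*rot_pred + w_trans*trans_pred.

    Returns (b, w_rot, w_trans). The two predictors must not be collinear
    (e.g., identical in phase and shape), or the weights are not identifiable.
    """
    X = np.column_stack([np.ones_like(rot_pred), rot_pred, trans_pred])
    coef, *_ = np.linalg.lstsq(X, np.asarray(combined_rate, dtype=float), rcond=None)
    return tuple(coef)


# Synthetic check at one hypothetical stimulus frequency (1 Hz, 4 s at 1 kHz).
rng = np.random.default_rng(0)
t = np.linspace(0.0, 4.0, 4001)
rot_pred = 20.0 * np.sin(2 * np.pi * t)                # passive rotation response
trans_pred = 15.0 * np.sin(2 * np.pi * t + np.pi / 3)  # passive translation response
combined = 60.0 + 0.6 * rot_pred + 0.4 * trans_pred + rng.normal(0.0, 2.0, t.size)

b, w_rot, w_trans = fit_weights(rot_pred, trans_pred, combined)
print(f"b={b:.1f} sp/s, w_rot={w_rot:.2f}, w_trans={w_trans:.2f}")
```

Weights below 1 mean the combined response is smaller than the linear sum of the component responses. That is the signature of subadditive integration.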

Canal-otolith sensory integration is subadditive

During everyday activities, our vestibular sensors are typically activated by complex multidimensional motion that simultaneously stimulates both the canals and otoliths. Despite similarities in the anatomical and physiological properties of their hair cells, the canals and otoliths are distinct sensory organs that detect different sensory modalities (i.e., angular versus linear motion, respectively). Notably, when referenced to the center of mass, linear momentum is mechanically independent of angular motion. Nevertheless, cross-modal integration of angular and linear self-motion signals is the rule rather than the exception at the first central stage of vestibular processing. Therefore, knowing how single neurons integrate incoming information about head motion from these two modalities is fundamental to understanding how the vestibular system encodes self-motion in everyday life.

Previous behavioral studies generally considered the convergence of canal and otolith signals in the context of how the brain resolves the tilt/translation ambiguity (Glasauer and Merfeld, 1997; Angelaki et al., 1999; Merfeld et al., 1999; Bos and Bles, 2002; Zupan et al., 2002; Green and Angelaki, 2003, 2004; Laurens and Angelaki, 2011). However, our knowledge of the actual neuronal computations that underlie the processing of complex self-motion has been limited. The majority of neurons in the vestibular nuclei, as well as in interconnected regions of the cerebellum such as the rostral fastigial nucleus, receive convergent canal and otolith inputs (Kasper et al., 1988; Tomlinson et al., 1996; Siebold et al., 1999, 2001; Angelaki et al., 2004; Yakushin et al., 2006; for review, see Uchino et al., 2005). Yet neuronal responses are typically characterized during pure rotations or translations (Scudder and Fuchs, 1992; Cullen and McCrea, 1993; McCrea et al., 1999; Roy and Cullen, 2001a; Carriot et al., 2013). The few studies that have addressed how vestibular nuclei neurons integrate canal and otolith input (Dickman and Angelaki, 2002; McArthur et al., 2011) found that these inputs are not linearly summed, but they did not develop models of integration based on independent responses to each modality. Here, our findings establish that the integration of canal and otolith inputs is subadditive and that the underlying mechanism is characterized by frequency-dependent (nonlinear) weighting of both modalities. We speculate that one benefit of the observed subadditive integration is that it expands the dynamic linear range of vestibular neurons, preventing firing rate saturation or rectification in response to high-amplitude natural head movements (Schneider et al., 2013; Carriot et al., 2014). This result builds on other recent work demonstrating a static boosting nonlinearity in the input–output relationship of these neurons for low-frequency rotation when presented concurrently with high-frequency rotation (Massot et al., 2012).
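The dynamic-range argument can be illustrated with a toy rectifying neuron whose firing rate cannot fall below 0 sp/s. All values below are hypothetical and chosen only to show the effect: the resting rate, the drive amplitudes, and the uniform 0.6 weight standing in for subadditive integration. An upper saturation limit would behave analogously.

```python
import numpy as np


def firing_rate(drive, baseline):
    """Rectifying output: the rate is baseline + drive, floored at 0 sp/s."""
    return np.maximum(0.0, baseline + drive)


def fraction_rectified(rate):
    """Fraction of samples clipped at 0 sp/s."""
    return float(np.mean(rate == 0.0))


t = np.linspace(0.0, 1.0, 1001)
baseline = 40.0                        # hypothetical resting rate (sp/s)
rot = 30.0 * np.sin(2 * np.pi * t)     # hypothetical rotational drive (sp/s)
trans = 25.0 * np.sin(2 * np.pi * t)   # hypothetical translational drive (sp/s)

linear = firing_rate(rot + trans, baseline)               # peak drive 55 sp/s > 40
subadditive = firing_rate(0.6 * (rot + trans), baseline)  # peak drive 33 sp/s < 40

print(fraction_rectified(linear), fraction_rectified(subadditive))
```

With linear summation, roughly a quarter of each cycle is clipped at zero, so the output no longer tracks the stimulus. With the down-weighted (subadditive) drive, the rate stays within the neuron's linear range.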

Subadditive integration that approximates a weighted linear sum of multiple inputs has been reported in other sensory areas. These include the ventral intraparietal cortex (Avillac et al., 2007; visual and tactile), the superior colliculus (Frens and Van Opstal, 1998; Perrault et al., 2005; visual and auditory), the dorsal division of the medial superior temporal area (MSTd; Gu et al., 2008; Morgan et al., 2008; visual and vestibular), and the temporal association cortex (Dahl et al., 2009; visual and auditory). Most notably, during passive motion, cortical neurons in area MSTd subadditively integrate otolith and visual information (for review, see Angelaki et al., 2009). The investigators speculated that this subadditive integration reflects a neural network characterized by divisive normalization, in which the weight assigned to each input changes with cue reliability. In the present study, input weights changed with the frequency of passive stimulation: rotational weights decreased, whereas translational weights increased, with increasing frequency. Interestingly, and consistent with our findings, prior psychophysical experiments using low-frequency (<1 Hz) self-motion (Ivanenko et al., 1997; MacNeilage et al., 2010) reported that subjects perceived angular displacement more accurately than linear displacement. We therefore predict that subjects may perceive linear motion more accurately than rotational motion when experiencing higher-frequency head motion comprising both rotation and translation.
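A one-line toy model shows why divisive normalization tends to produce subadditive responses. If the output is the input drive divided by a term that itself grows with that drive, then the response to two inputs together is smaller than the sum of the responses to each alone. The hyperbolic-ratio form, `r_max`, `sigma`, and the drive values are illustrative assumptions. This is not the network model proposed for MSTd, and it omits the reliability-dependent weighting described there.

```python
def normalized_response(drive, r_max=100.0, sigma=50.0):
    """Toy divisive normalization (hyperbolic ratio): the drive is divided
    by sigma plus that same drive, so the output saturates toward r_max."""
    return r_max * drive / (sigma + drive)


drive_a, drive_b = 40.0, 30.0  # hypothetical drives from two inputs
r_a = normalized_response(drive_a)
r_b = normalized_response(drive_b)
r_ab = normalized_response(drive_a + drive_b)  # both inputs present

additivity_index = r_ab / (r_a + r_b)  # < 1 indicates subadditive integration
print(f"{r_a:.1f} + {r_b:.1f} vs combined {r_ab:.1f}; index {additivity_index:.2f}")
```

The combined response still exceeds either single-input response, so the second input adds information. It simply adds less than its stand-alone response.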

Functional implications of input cancellation: postural control and self-motion perception

The vestibular nuclei neurons that were the focus of the present study receive direct input from vestibular afferents. In turn, they project both to the spinal cord, mediating vestibulo-spinal reflex pathways, and to the thalamus, contributing to self-motion perception (for review, see Cullen, 2011). During voluntary movements, it is theoretically advantageous to suppress the responses generated by vestibulo-spinal reflex pathways, because these reflexes would produce stabilizing motor commands that would effectively oppose the voluntary movement. Indeed, VO neurons are markedly less responsive to active than to passive motion when head motion is restricted so as to stimulate a single vestibular modality (canals: Roy and Cullen, 2001a, 2004; otoliths: Carriot et al., 2013). Moreover, this suppression of responses to active motion arises at the level of the vestibular nuclei, because both canal (Cullen and Minor, 2002; Sadeghi et al., 2007a) and otolith (Jamali et al., 2009) afferents similarly encode active and passive rotations and translations, respectively. Evidence to date suggests that the suppression of actively generated vestibular responses is mediated by a mechanism involving the cerebellum (Brooks and Cullen, 2009). This mechanism compares the actual activation of proprioceptors with a motor-generated expectation (for review, see Cullen, 2011, 2012). Our present results extend these findings: comparable response suppression for active angular and linear head motion indicates that the underlying mechanism of neuronal response attenuation is not modality specific.
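The comparator idea can be summarized as a toy decision rule: vestibular drive is attenuated only when the proprioceptive feedback actually produced matches the feedback expected from the motor command. The function name and tolerance are hypothetical. The residual gain of 0.3 is also hypothetical, chosen only to mirror the ∼70% attenuation reported for single-modality active motion. This is a caricature of the comparison described above, not a model of the underlying cerebellar circuitry.

```python
def vo_output(vestibular_drive, proprio_actual, proprio_expected,
              tolerance=0.1, residual_gain=0.3):
    """Hypothetical reafference-cancellation rule.

    If actual proprioceptive feedback matches the motor-generated
    expectation (within `tolerance`), the motion is treated as
    self-generated and the vestibular drive is scaled by `residual_gain`;
    otherwise the drive passes through unchanged.
    """
    if abs(proprio_actual - proprio_expected) <= tolerance:
        return residual_gain * vestibular_drive
    return vestibular_drive


# Active head turn: the motor command predicts the neck feedback that occurs.
active = vo_output(50.0, proprio_actual=1.0, proprio_expected=1.0)
# Passive head-on-body rotation: neck feedback occurs with no motor command to predict it.
passive = vo_output(50.0, proprio_actual=1.0, proprio_expected=0.0)
print(active, passive)
```

In this sketch the same neck feedback yields full vestibular responses when it is unexpected. Suppression therefore depends on the match between actual and expected feedback, not on proprioceptive activation itself.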

In response to active combined motion, we further found that the responses of convergent neurons are even more attenuated than during active rotations or translations alone. We note that, because our motion platform applied only horizontal rotations and translations, some neurons classified as canal-only or otolith-only might have proven to be convergent had vertical motion been applied. Because our head motion during daily life comprises both rotation and translation in 6D (Carriot et al., 2014), we speculate that, as a population, these neurons respond only minimally to our own active self-motion (i.e., vestibular reafference) but are robustly modulated by unexpected multidimensional motion. This pattern would be consistent with their role in mediating reflexes that maintain posture and balance.

The marked attenuation of neuronal responses to vestibular reafference has important implications for self-motion perception (e.g., heading perception and tilt/translation discrimination). During everyday life, most of our movements are self-generated. As noted above, the neurons characterized in this study are the likely source of vestibular input, via the thalamus, to the cortical areas responsible for self-motion perception (for review, see Angelaki and Cullen, 2008). Accordingly, one might expect a profound reduction in the modulation of central vestibular neurons to impair our perception of self-motion, or our ability to distinguish tilt from translation, during self-generated movements. However, during active motion in the absence of vision, subjects not only perceive head movements but can also distinguish between tilt and translation (Mittelstaedt and Glasauer, 1991). Because distinguishing tilt from translation requires the integration of canal and otolith information, it had been speculated that the attenuation of vestibular responses during voluntary movements might be specific to nonconvergent cells (Dickman and Angelaki, 2002). However, our results show that the cancellation of vestibular reafference is a general process that affects all VO neurons, regardless of whether they receive input from the canals, the otoliths, or both.

If the modulation of convergent neurons is suppressed during active motion, how does the brain distinguish between active tilt and translation and compute an internal estimate of self-motion? One possibility is that the brain relies solely on the residual vestibular information available from the small subset of neurons that show less attenuation during active motion (n = 3/22, <30% attenuation). A more likely possibility is that, during active self-motion, the brain integrates head motion information derived from other sources (e.g., proprioceptive and/or motor efference copy signals; Blouin et al., 1998a, 1998b, 1999). Indeed, there is accumulating evidence that these extravestibular inputs play a critical role in our ability to estimate head orientation in the absence of visual input, for example, when moving in the dark or without salient visual landmarks (Jürgens and Becker, 2006; Frissen et al., 2011). Further experiments will be required to establish how vestibular-sensitive neurons at subsequent stages of processing (i.e., thalamocortical pathways) encode the rotational and translational components of natural self-motion. Such experiments will also be needed to determine whether these areas distinguish actively generated from passive head movements.
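One textbook way to formalize combining a weak residual vestibular estimate with extravestibular cues is reliability-weighted (inverse-variance) fusion of independent Gaussian estimates. This is the kind of Bayesian cue combination invoked in work such as Jürgens and Becker (2006). The sketch below is illustrative only: the cue values, variances, and function name are hypothetical, and nothing here is a model fitted in this study.

```python
import numpy as np


def fuse(estimates, variances):
    """Inverse-variance (reliability-weighted) fusion of independent Gaussian
    cues. Returns the fused estimate, its variance, and the cue weights."""
    est = np.asarray(estimates, dtype=float)
    precision = 1.0 / np.asarray(variances, dtype=float)
    weights = precision / precision.sum()
    return float(weights @ est), float(1.0 / precision.sum()), weights


# Hypothetical head-rotation estimates (deg) and variances (deg^2):
# attenuated vestibular signal, neck proprioception, motor efference copy.
cues = [28.0, 30.0, 31.0]
variances = [25.0, 4.0, 9.0]
fused, fused_var, weights = fuse(cues, variances)
print(f"fused = {fused:.1f} deg, variance = {fused_var:.2f} deg^2, weights = {np.round(weights, 2)}")
```

The least reliable cue (here, the attenuated vestibular signal) receives the smallest weight, and the fused variance is lower than that of any single cue.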

Footnotes

This work was supported by Canadian Institutes of Health Research, the National Institutes of Health (Grant DC2390), and Fonds de Recherche du Québec–Nature et Technologies. We thank Alexis Dale, Diana Mitchell, Annie Kwan, Adam Schneider, and Amy Wong for critically reading the manuscript and S. Nuara and W. Kucharski for excellent technical assistance.

The authors declare no competing financial interests.

References

- Alvarado JC, Vaughan JW, Stanford TR, Stein BE. Multisensory versus unisensory integration: contrasting modes in the superior colliculus. J Neurophysiol. 2007;97:3193–3205. doi: 10.1152/jn.00018.2007.

- Angelaki DE, Cullen KE. Vestibular system: the many facets of a multimodal sense. Annu Rev Neurosci. 2008;31:125–150. doi: 10.1146/annurev.neuro.31.060407.125555.

- Angelaki DE, McHenry MQ, Dickman JD, Newlands SD, Hess BJ. Computation of inertial motion: neural strategies to resolve ambiguous otolith information. J Neurosci. 1999;19:316–327. doi: 10.1523/JNEUROSCI.19-01-00316.1999.

- Angelaki DE, Shaikh AG, Green AM, Dickman JD. Neurons compute internal models of the physical laws of motion. Nature. 2004;430:560–564. doi: 10.1038/nature02754.

- Angelaki DE, Klier EM, Snyder LH. A vestibular sensation: probabilistic approaches to spatial perception. Neuron. 2009;64:448–461. doi: 10.1016/j.neuron.2009.11.010.

- Avillac M, Ben Hamed S, Duhamel JR. Multisensory integration in the ventral intraparietal area of the macaque monkey. J Neurosci. 2007;27:1922–1932. doi: 10.1523/JNEUROSCI.2646-06.2007.

- Blouin J, Okada T, Wolsley C, Bronstein A. Encoding target-trunk relative position: cervical versus vestibular contribution. Exp Brain Res. 1998a;122:101–107. doi: 10.1007/s002210050496.

- Blouin J, Labrousse L, Simoneau M, Vercher JL, Gauthier GM. Updating visual space during passive and voluntary head-in-space movements. Exp Brain Res. 1998b;122:93–100. doi: 10.1007/s002210050495.

- Blouin J, Amade N, Vercher JL, Gauthier GM. Opposing resistance to the head movement does not affect space perception during head rotation. In: Becker W, Deubel, Mergner T, editors. Current oculomotor research: physiological and psychological aspects. New York: Plenum; 1999. pp. 193–201.

- Bos JE, Bles W. Theoretical considerations on canal-otolith interaction and an observer model. Biol Cybern. 2002;86:191–207. doi: 10.1007/s00422-001-0289-7.

- Brooks JX, Cullen KE. Multimodal integration in rostral fastigial nucleus provides an estimate of body movement. J Neurosci. 2009;29:10499–10511. doi: 10.1523/JNEUROSCI.1937-09.2009.

- Carriot J, Brooks JX, Cullen KE. Multimodal integration of self-motion cues in the vestibular system: active versus passive translations. J Neurosci. 2013;33:19555–19566. doi: 10.1523/JNEUROSCI.3051-13.2013.

- Carriot J, Jamali M, Chacron MJ, Cullen KE. Statistics of the vestibular input experienced during natural self-motion: implications for neural processing. J Neurosci. 2014;34:8347–8357. doi: 10.1523/JNEUROSCI.0692-14.2014.

- Cherif S, Cullen KE, Galiana HL. An improved method for the estimation of firing rate dynamics using an optimal digital filter. J Neurosci Methods. 2008;173:165–181. doi: 10.1016/j.jneumeth.2008.05.021.

- Cullen KE. The neural encoding of self-motion. Curr Opin Neurobiol. 2011;21:587–595. doi: 10.1016/j.conb.2011.05.022.

- Cullen KE. The vestibular system: multimodal integration and encoding of self-motion for motor control. Trends Neurosci. 2012;35:185–196. doi: 10.1016/j.tins.2011.12.001.

- Cullen KE, McCrea RA. Firing behavior of brain stem neurons during voluntary cancellation of the horizontal vestibuloocular reflex. I. Secondary vestibular neurons. J Neurophysiol. 1993;70:828–843. doi: 10.1152/jn.1993.70.2.828.

- Cullen KE, Minor LB. Semicircular canal afferents similarly encode active and passive head-on-body rotations: implications for the role of vestibular efference. J Neurosci. 2002;22:RC226. doi: 10.1523/JNEUROSCI.22-11-j0002.2002.

- Cullen KE, Rey CG, Guitton D, Galiana HL. The use of system identification techniques in the analysis of oculomotor burst neuron spike train dynamics. J Comput Neurosci. 1996;3:347–368. doi: 10.1007/BF00161093.

- Dahl CD, Logothetis NK, Kayser C. Spatial organization of multisensory responses in temporal association cortex. J Neurosci. 2009;29:11924–11932. doi: 10.1523/JNEUROSCI.3437-09.2009.

- Dickman JD, Angelaki DE. Vestibular convergence patterns in vestibular nuclei neurons of alert primates. J Neurophysiol. 2002;88:3518–3533. doi: 10.1152/jn.00518.2002.

- Frens MA, Van Opstal AJ. Visual-auditory interactions modulate saccade-related activity in monkey superior colliculus. Brain Res Bull. 1998;46:211–224. doi: 10.1016/S0361-9230(98)00007-0.

- Frissen I, Campos JL, Souman JL, Ernst MO. Integration of vestibular and proprioceptive signals for spatial updating. Exp Brain Res. 2011;212:163–176. doi: 10.1007/s00221-011-2717-9.

- Glasauer S, Merfeld DM. Modeling three-dimensional responses during complex motion stimulation. In: Fetter M, Haslwanter T, Misslisch H, Tweed D, editors. Three-dimensional kinematics of eye, head and limb movements. Amsterdam: Harwood Academic; 1997. pp. 387–398.

- Goldberg JM. Afferent diversity and the organization of central vestibular pathways. Exp Brain Res. 2000;130:277–297. doi: 10.1007/s002210050033.

- Green AM, Angelaki DE. Resolution of sensory ambiguities for gaze stabilization requires a second neural integrator. J Neurosci. 2003;23:9265–9275. doi: 10.1523/JNEUROSCI.23-28-09265.2003.

- Green AM, Angelaki DE. An integrative neural network for detecting inertial motion and head orientation. J Neurophysiol. 2004;92:905–925. doi: 10.1152/jn.01234.2003.

- Gu Y, Angelaki DE, Deangelis GC. Neural correlates of multisensory cue integration in macaque MSTd. Nat Neurosci. 2008;11:1201–1210. doi: 10.1038/nn.2191.

- Hayes AV, Richmond BJ, Optican LM. A UNIX-based multiple process system for real-time data acquisition and control. WESCON Conf Proc. 1982;2:1–10.

- Ivanenko YP, Grasso R, Israël I, Berthoz A. The contribution of otoliths and semicircular canals to the perception of two-dimensional passive whole-body motion in humans. J Physiol. 1997;502:223–233. doi: 10.1111/j.1469-7793.1997.223bl.x.

- Jamali M, Sadeghi SG, Cullen KE. Response of vestibular nerve afferents innervating utricle and saccule during passive and active translations. J Neurophysiol. 2009;101:141–149. doi: 10.1152/jn.91066.2008.

- Jürgens R, Becker W. Perception of angular displacement without landmarks: evidence for Bayesian fusion of vestibular, optokinetic, podokinesthetic, and cognitive information. Exp Brain Res. 2006;174:528–543. doi: 10.1007/s00221-006-0486-7.

- Kasper J, Schor RH, Wilson VJ. Response of vestibular neurons to head rotations in vertical planes. I. Response to vestibular stimulation. J Neurophysiol. 1988;60:1753–1764. doi: 10.1152/jn.1988.60.5.1753.

- Laurens J, Angelaki DE. The functional significance of velocity storage and its dependence on gravity. Exp Brain Res. 2011;210:407–422. doi: 10.1007/s00221-011-2568-4.

- MacNeilage PR, Turner AH, Angelaki DE. Canal-otolith interactions and detection thresholds of linear and angular components during curved-path self-motion. J Neurophysiol. 2010;104:765–773. doi: 10.1152/jn.01067.2009.

- Massot C, Schneider AD, Chacron MJ, Cullen KE. The vestibular system implements a linear-nonlinear transformation in order to encode self-motion. PLoS Biol. 2012;10:e1001365. doi: 10.1371/journal.pbio.1001365.

- McArthur KL, Zakir M, Haque A, Dickman JD. Spatial and temporal characteristics of vestibular convergence. Neuroscience. 2011;192:361–371. doi: 10.1016/j.neuroscience.2011.06.070.

- McCrea RA, Gdowski GT, Boyle R, Belton T. Firing behavior of vestibular neurons during active and passive head movements: vestibulo-spinal and other non-eye-movement related neurons. J Neurophysiol. 1999;82:416–428. doi: 10.1152/jn.1999.82.1.416.

- Merfeld DM, Zupan L, Peterka RJ. Humans use internal models to estimate gravity and linear acceleration. Nature. 1999;398:615–618. doi: 10.1038/19303.

- Mittelstaedt ML, Glasauer S. Idiothetic navigation in gerbils and humans. Zool Jahrb Allg Zool. 1991;95:427–435.

- Morgan ML, Deangelis GC, Angelaki DE. Multisensory integration in macaque visual cortex depends on cue reliability. Neuron. 2008;59:662–673. doi: 10.1016/j.neuron.2008.06.024.

- Perrault TJ, Jr, Vaughan JW, Stein BE, Wallace MT. Superior colliculus neurons use distinct operational modes in the integration of multisensory stimuli. J Neurophysiol. 2005;93:2575–2586. doi: 10.1152/jn.00926.2004.

- Roy JE, Cullen KE. A neural correlate for vestibulo-ocular reflex suppression during voluntary eye-head gaze shifts. Nat Neurosci. 1998;1:404–410. doi: 10.1038/1619.

- Roy JE, Cullen KE. Selective processing of vestibular reafference during self-generated head motion. J Neurosci. 2001a;21:2131–2142. doi: 10.1523/JNEUROSCI.21-06-02131.2001.

- Roy JE, Cullen KE. Passive activation of neck proprioceptive inputs does not influence the discharge patterns of vestibular nuclei neurons. Ann N Y Acad Sci. 2001b;942:486–489. doi: 10.1111/j.1749-6632.2001.tb03776.x.

- Roy JE, Cullen KE. Dissociating self-generated from passively applied head motion: neural mechanisms in the vestibular nuclei. J Neurosci. 2004;24:2102–2111. doi: 10.1523/JNEUROSCI.3988-03.2004.

- Sadeghi SG, Minor LB, Cullen KE. Response of vestibular-nerve afferents to active and passive rotations under normal conditions and after unilateral labyrinthectomy. J Neurophysiol. 2007a;97:1503–1514. doi: 10.1152/jn.00829.2006.

- Sadeghi SG, Chacron MJ, Taylor MC, Cullen KE. Neural variability, detection thresholds, and information transmission in the vestibular system. J Neurosci. 2007b;27:771–781. doi: 10.1523/JNEUROSCI.4690-06.2007.

- Sadeghi SG, Goldberg JM, Minor LB, Cullen KE. Effects of canal plugging on the vestibuloocular reflex and vestibular nerve discharge during passive and active head rotations. J Neurophysiol. 2009;102:2693–2703. doi: 10.1152/jn.00710.2009.

- Schneider AD, Carriot J, Chacron MJ, Cullen KE. Statistics of natural vestibular stimuli in monkey: implications for coding. Soc Neurosci Abstr. 2013;43:265.04.

- Scudder CA, Fuchs AF. Physiological and behavioral identification of vestibular nucleus neurons mediating the horizontal vestibuloocular reflex in trained rhesus monkeys. J Neurophysiol. 1992;68:244–264. doi: 10.1152/jn.1992.68.1.244.

- Siebold C, Kleine JF, Glonti L, Tchelidze T, Büttner U. Fastigial nucleus activity during different frequencies and orientations of vertical vestibular stimulation in the monkey. J Neurophysiol. 1999;82:34–41. doi: 10.1152/jn.1999.82.1.34.

- Siebold C, Anagnostou E, Glasauer S, Glonti L, Kleine JF, Tchelidze T, Büttner U. Canal-otolith interaction in the fastigial nucleus of the alert monkey. Exp Brain Res. 2001;136:169–178. doi: 10.1007/s002210000575.

- Stein BE, Stanford TR. Multisensory integration: current issues from the perspective of the single neuron. Nat Rev Neurosci. 2008;9:255–266. doi: 10.1038/nrn2331.

- Sylvestre PA, Cullen KE. Quantitative analysis of abducens neuron discharge dynamics during saccadic and slow eye movements. J Neurophysiol. 1999;82:2612–2632. doi: 10.1152/jn.1999.82.5.2612.

- Tomlinson RD, McConville KM, Na EQ. Behavior of cells without eye movement sensitivity in the vestibular nuclei during combined rotational and translational stimuli. J Vestib Res. 1996;6:145–158. doi: 10.1016/0957-4271(95)02039-X.

- Uchino Y, Sasaki M, Sato H, Bai R, Kawamoto E. Otolith and canal integration on single vestibular neurons in cats. Exp Brain Res. 2005;164:271–285. doi: 10.1007/s00221-005-2341-7.

- Yakushin SB, Raphan T, Cohen B. Spatial properties of central vestibular neurons. J Neurophysiol. 2006;95:464–478. doi: 10.1152/jn.00459.2005.

- Zupan LH, Merfeld DM, Darlot C. Using sensory weighting to model the influence of canal, otolith and visual cues on spatial orientation and eye movements. Biol Cybern. 2002;86:209–230. doi: 10.1007/s00422-001-0290-1.