Abstract

Neurons responsive to visual stimulation have now been described in the auditory cortex of various species, but their functions are largely unknown. Here we investigate the auditory and visual spatial sensitivity of neurons recorded in 5 different primary and non-primary auditory cortical areas of the ferret. We quantified the spatial tuning of neurons by measuring the responses to stimuli presented across a range of azimuthal positions and calculating the mutual information (MI) between the neural responses and the location of the stimuli that elicited them. MI estimates of spatial tuning were calculated for unisensory visual, unisensory auditory and for spatially and temporally coincident auditory-visual stimulation. The majority of visually responsive units conveyed significant information about light-source location, whereas, over a corresponding region of space, acoustically responsive units generally transmitted less information about sound-source location. Spatial sensitivity for visual, auditory and bisensory stimulation was highest in the anterior dorsal field, the auditory area previously shown to be innervated by a region of extrastriate visual cortex thought to be concerned primarily with spatial processing, whereas the posterior pseudosylvian field and posterior suprasylvian field, whose principal visual input arises from cortical areas that appear to be part of the ‘what’ processing stream, conveyed less information about stimulus location. In some neurons, pairing visual and auditory stimuli led to an increase in the spatial information available relative to the most effective unisensory stimulus, whereas, in a smaller subpopulation, combined stimulation decreased the spatial MI. These data suggest that visual inputs to auditory cortex can enhance spatial processing in the presence of multisensory cues and could therefore potentially underlie visual influences on auditory localization.

Keywords: Multisensory, Ferret, Single-unit recording, Virtual acoustic space, Mutual information, Sound localization

1. Introduction

A number of studies have now described the presence of neurons in auditory cortex whose responses are modulated by a visual stimulus, but the functions of these inputs remain elusive. Sensitivity to visual stimulation has been demonstrated in the auditory cortex of human (Calvert et al., 1999, Foxe et al., 2000, Foxe et al., 2002, Giard and Peronnet, 1999, Molholm et al., 2004, Molholm et al., 2002 and Murray et al., 2005) and non-human (Brosch et al., 2005, Ghazanfar et al., 2005, Kayser et al., 2007, Schroeder and Foxe, 2002 and Schroeder et al., 2001) primates, ferrets (Bizley et al., 2007) and rats (Wallace et al., 2004). Visual activity is most commonly observed in non-primary auditory cortex, but neurons whose responses are modulated by light are also found in the primary fields (Bizley et al., 2007). Visual stimulation can either facilitate or suppress the response to a concurrent auditory stimulus (Bizley et al., 2007 and Ghazanfar et al., 2005).

The origin and function of visual activity within auditory cortex are widely debated. It has been suggested that multisensory inputs might serve to prime auditory cortex, acting to enhance the response to a subsequent auditory stimulus (Schroeder and Foxe, 2005). Somatosensory inputs have been shown to reset the phase of ongoing neural oscillations in primary auditory cortex (A1) (Lakatos et al., 2007), supporting the idea that non-auditory inputs might function to reset ongoing auditory cortex activity (Schroeder and Foxe, 2005). A second hypothesis is that the superior spatial resolution of the somatosensory and visual systems might be used to increase spatial sensitivity in the auditory system, perhaps contributing to the enhanced localization abilities that are observed with multisensory versus unisensory stimuli (Welch and Warren, 1986 and Stein et al., 1988).

Understanding the origin of multisensory inputs to auditory cortex should help to elucidate their function. The majority of auditory–visual interactions in monkey auditory cortex have been shown to be face specific, which was interpreted as evidence for integration being a result of feedback from the superior temporal sulcus (Ghazanfar et al., 2005). However, visual inputs to auditory cortex arise from a number of sources; in addition to inputs from multisensory association areas there are direct connections from both primary and non-primary visual cortex, as well as from multisensory thalamic nuclei (Bizley et al., 2007, Budinger et al., 2006, Budinger et al., 2007, Falchier et al., 2002, Hackett et al., 2007 and Rockland and Ojima, 2003).

The pattern of anatomical connectivity recently identified from visual cortex to auditory cortex in the ferret raises intriguing predictions as to the functional properties of visual–auditory interaction in different fields of the auditory cortex in this species. Bizley et al. (2007) observed sparse direct connections from V1 to A1 and found that non-primary auditory cortical areas are more heavily innervated by extrastriate visual areas. Those on the anterior bank of the ectosylvian gyrus are innervated predominantly by area SSY, thought to be the ferret homologue of the motion processing area MT (Philipp et al., 2005), whereas those on the posterior bank of the gyrus receive larger inputs from area 20, thought to be part of the “what” processing stream (Manger et al., 2004). This suggests that the visual responses recorded in different auditory cortical fields should vary in the amount of spatial information they convey according to the source of these projections.

In order to examine further the role of visual activity in auditory cortex, we mapped spatial receptive fields in different auditory cortical fields of the ferret using acoustic, visual, or combined stimulation. We analyzed these data by estimating the mutual information (MI) between the stimuli and the responses, which enabled us to take into account information in spike timing in addition to overall spike count. Our results show that visual inputs to auditory cortex do indeed increase the information about stimulus location in the responses of the neurons and that the extent to which they do so varies in a predictable fashion according to the source of the inputs from extrastriate visual cortex.

2. Results

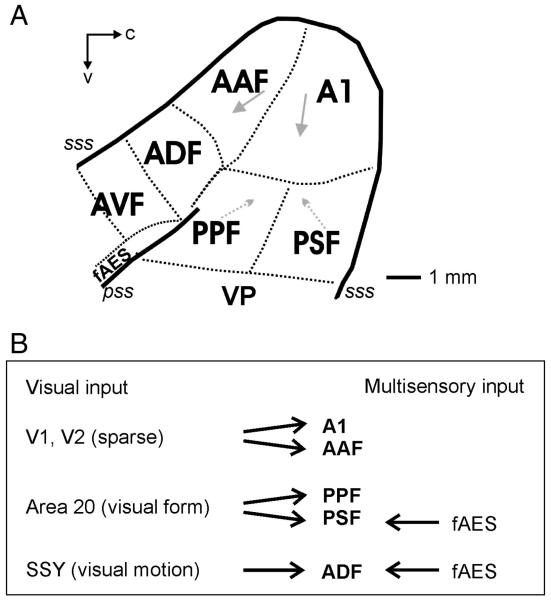

The extracellular responses of 305 single units were recorded from 5 auditory fields in 5 ferrets. Fig. 1 illustrates the location of all the auditory fields that have so far been identified in this species. Units were first classified on the basis of their responses to global light flashes presented from an LED positioned close to the contralateral eye and to broadband noise bursts presented to the contralateral ear. Thirty-six percent of the units (n = 111/305) were classified as bisensory (defined as showing a significant alteration in spike firing to both auditory and visual stimuli presented in isolation), 24% (n = 74/305) responded only to visual stimulation in isolation and the remainder were classified as unisensory auditory (39%, n = 120/305). Table 1 shows the distribution of each response type by cortical field. Pure tone stimuli were used for mapping frequency-response areas in order to help determine the cortical area in which the recordings were made, but all auditory spatial tuning estimates were carried out with broadband noise bursts. Fig. 1A illustrates the location of each of the auditory cortical fields in the ferret and Fig. 1B summarizes the main potential cortical sources and types of visual information to each area.

Fig. 1.

(A) Schematic showing the organization of auditory cortex in the ferret. The primary fields, A1 and AAF, are tonotopically organized (arrows indicate a high-to-low characteristic frequency gradient). Two posterior fields, PPF and PSF, are also tonotopically organized. A1, primary auditory cortex; AAF, anterior auditory field; PPF, posterior pseudosylvian field; PSF, posterior suprasylvian field; VP, ventral posterior; ADF, anterior dorsal field; AVF, anterior ventral field; fAES, anterior ectosylvian sulcal field; pss, pseudosylvian sulcus; sss, suprasylvian sulcus; c, caudal; v, ventral. (B) Summary of visual inputs from visual and multisensory areas into each of the 5 auditory fields recorded from in this study.

Table 1.

Different response categories (unisensory visual, unisensory auditory, and bisensory, defined on the basis of a significant alteration in spike firing to visual and/or auditory stimuli) for the 305 units recorded in the 5 different auditory cortical fields

| Cortical field | Auditory (%) | Visual (%) | Bisensory (%) | Number of units |
|---|---|---|---|---|
| A1 | 67.6 | 0.0 | 32.4 | 34 |
| AAF | 52.9 | 26.5 | 20.6 | 68 |
| PPF | 52.7 | 33.8 | 13.5 | 74 |
| PSF | 32.8 | 4.9 | 62.3 | 61 |
| ADF | 7.0 | 39.4 | 53.5 | 71 |

We have previously shown in a small subpopulation of neurons that at least some auditory cortical neurons exhibit spatially modulated visual sensitivity (Bizley et al., 2007). Here we systematically explored the spatial sensitivity of the visual responses in a much larger population of units while also examining their auditory spatial tuning and the effects of combined visual–auditory stimulation. This was achieved by presenting sounds in virtual acoustic space (VAS) and visual stimuli from an array of 8 LEDs located 100 cm from the animal at locations ranging from 5° (ipsilateral), in 15° intervals, to − 100° (contralateral) to the cortex from which the recordings were made.
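The stimulus geometry described above can be written out explicitly. The short sketch below lists the 8 LED azimuths implied by a 5° start, 15° steps and a −100° end point, using the sign convention in the text (positive ipsilateral, negative contralateral). It also computes the upper bound on spatial MI for 8 equally likely locations, which is a general property of mutual information rather than a figure reported in the study. The variable names are illustrative only.

```python
import math

# Values taken from the text: 8 LEDs, from 5 deg (ipsilateral) to -100 deg
# (contralateral) in 15 deg steps. Variable names are illustrative.
first_azimuth_deg = 5
step_deg = -15
n_leds = 8

led_azimuths = [first_azimuth_deg + i * step_deg for i in range(n_leds)]
print(led_azimuths)  # [5, -10, -25, -40, -55, -70, -85, -100]

# With equally probable locations, the MI between location and response
# cannot exceed the entropy of the location variable, log2(number of locations).
max_spatial_mi_bits = math.log2(n_leds)
print(max_spatial_mi_bits)  # 3.0
```

This bound gives a scale for the MI values reported below: a unit transmitting about 2 bits carries roughly two-thirds of the information needed to identify one of the 8 locations without error.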

Spatial sensitivity of each unit to the different stimuli was determined by measuring the MI, quantified in bits, between the stimulus location and the neuronal response. At least 30 repetitions of each combination of spatial location and stimulus modality (auditory, visual or bisensory) were presented in a pseudorandomly interleaved order. MI estimates were then obtained for each stimulus modality separately, so that the number of bits represents a measure of the spatial modulation of the neuronal response for a given stimulus modality. This enabled the relative amounts of spatial information provided by each to be compared. The MI values obtained were assessed for significance by using a bootstrapping procedure, as described in the Experimental procedures.
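As a rough illustration of this kind of analysis, the sketch below computes a plug-in (histogram) MI estimate from spike counts alone and compares it with a null distribution obtained by shuffling responses across locations. It is not the estimator used in the study: the analysis reported here also took spike timing into account, and the actual significance procedure is the one given in the Experimental procedures. The function names, the 95th-percentile criterion, the number of shuffles and the simulated unit are all assumptions made for the example.

```python
import math
import random
from collections import Counter


def mutual_information(locations, responses):
    """Plug-in MI estimate (bits) between paired location labels and discrete responses."""
    n = len(locations)
    joint = Counter(zip(locations, responses))
    n_loc = Counter(locations)
    n_resp = Counter(responses)
    mi = 0.0
    for (loc, resp), count in joint.items():
        # p(s, r) * log2( p(s, r) / (p(s) * p(r)) ), written with raw counts
        mi += (count / n) * math.log2(count * n / (n_loc[loc] * n_resp[resp]))
    return mi


def shuffle_threshold(locations, responses, n_shuffles=1000, percentile=95, seed=0):
    """Percentile of MI values obtained after shuffling responses across locations.

    Assumption: a simple permutation null, standing in for the study's bootstrap.
    """
    rng = random.Random(seed)
    shuffled = list(responses)
    null = []
    for _ in range(n_shuffles):
        rng.shuffle(shuffled)
        null.append(mutual_information(locations, shuffled))
    null.sort()
    return null[min(len(null) - 1, int(len(null) * percentile / 100))]


# Hypothetical unit: 8 azimuths, 30 repetitions each, with a spike count that
# grows toward contralateral (negative) azimuths plus Gaussian noise.
azimuths = [5, -10, -25, -40, -55, -70, -85, -100]
locations = [az for az in azimuths for _ in range(30)]
rng = random.Random(1)
responses = [max(0, round(-az / 25 + rng.gauss(0, 1))) for az in locations]

mi = mutual_information(locations, responses)
threshold = shuffle_threshold(locations, responses, n_shuffles=200)
print(f"MI = {mi:.2f} bits, shuffle threshold = {threshold:.2f} bits")
print("spatially informative" if mi > threshold else "not significant")
```

A shuffled-label threshold is useful because plug-in estimates are biased upward for finite numbers of trials, so even an untuned unit yields a small positive MI that should not be read as spatial sensitivity.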

2.1. Spatial sensitivity to visual stimuli

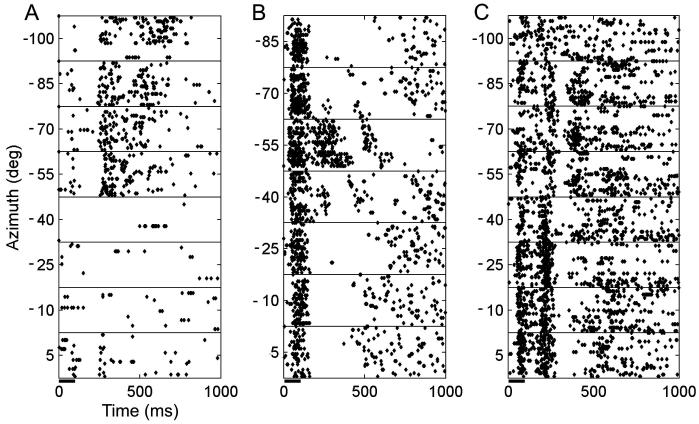

Seventy-one percent (132/185) of units whose responses were modulated by a unisensory visual stimulus contained significant spatial information. The latencies of these responses differed somewhat between different cortical fields and were consistent with those reported for a larger sample of units in Bizley et al. (2007). Example raster plots for 3 units whose responses were modulated by LED location are shown in Fig. 2. The proportion of units whose responses were significantly modulated by the unisensory visual stimulus and transmitted significant information about LED location varied between cortical fields; neurons in the anterior auditory field (AAF) and the anterior dorsal field (ADF) were most likely to be spatially sensitive with 72% (n = 32) and 75% (n = 66), transmitting significant amounts of information about LED location, respectively. In A1, the posterior pseudosylvian field (PPF) and the posterior suprasylvian field (PSF), significant spatial sensitivity was observed in 63% (n = 11), 64% (n = 35) and 62% (n = 41) of visually responsive units, respectively. Fig. 3A plots the distribution of MI values obtained for visually sensitive neurons in each cortical field. In addition to having the largest proportion of spatially sensitive visual units, ADF neurons transmitted significantly more information than those in the other cortical fields (Kruskal–Wallis test, p = 0.0001).
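The unit counts quoted in this paragraph can be cross-checked against Table 1. The sketch below recovers the number of visually responsive units in each field by summing the unisensory visual and bisensory percentages and multiplying by the field's unit total. Because the table percentages are rounded to one decimal place, the recovered counts are rounded to the nearest integer. The dictionary layout and variable names are illustrative.

```python
# Values copied from Table 1: field -> (visual %, bisensory %, number of units)
table1 = {
    "A1": (0.0, 32.4, 34),
    "AAF": (26.5, 20.6, 68),
    "PPF": (33.8, 13.5, 74),
    "PSF": (4.9, 62.3, 61),
    "ADF": (39.4, 53.5, 71),
}

# Visually responsive = unisensory visual + bisensory units in each field.
visually_responsive = {
    field: round((visual + bisensory) / 100 * n_units)
    for field, (visual, bisensory, n_units) in table1.items()
}
total_visual = sum(visually_responsive.values())

# 132 spatially informative units, as stated in the text.
spatially_informative = 132
percent_informative = round(100 * spatially_informative / total_visual)

print(visually_responsive)  # {'A1': 11, 'AAF': 32, 'PPF': 35, 'PSF': 41, 'ADF': 66}
print(total_visual, percent_informative)  # 185 71
```

The recovered counts match the n values given for each field, which confirms that those values refer to the number of visually responsive units in the field rather than to the number of spatially sensitive units.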

Fig. 2.

(A–C) Raster plots showing the responses of three units recorded in fields PPF, AAF and ADF, respectively, to light flashes from an LED (100 ms duration) presented at a range of azimuthal locations (at a fixed elevation of + 5°). All three units transmitted significant information about LED location in their response (A, 1.54 bits; B, 2.07 bits; C, 1.59 bits).

Fig. 3.

(A–C) Boxplots displaying the amount of information (in bits) transmitted by units in each of the five cortical fields about LED location (A), sound-source location (B) or the location of a combined auditory–visual stimulus (C). Only units for which there was a significant unisensory visual or auditory response are plotted in A and B, respectively, whereas C shows the multisensory MI values for all units recorded, irrespective of their response to unisensory stimulation. The total number of units in each group is shown at the top of each plot. The boxplots show the median (horizontal bar), inter-quartile range (boxes), spread of data (tails) and outliers (cross symbols). The notch indicates the distribution of data about the median. There were significant differences in the distribution of MI values in different cortical fields (Kruskal–Wallis test; LED location, p = 0.0001; auditory location, p = 0.0035; bisensory stimulus location, p < 0.0001). Significant post-hoc pair-wise differences (Tukey–Kramer test, p < 0.05) between individual cortical fields are shown by the lines above each boxplot. (D–F) Voronoi tessellations plotting the spatial information, in bits, on the surface of auditory cortex for every unit recorded. Data from 5 animals have been collapsed onto a single auditory cortex. Each polygon represents a single unit. Where multiple recordings were made at different active sites on the same electrode, recordings are arranged in a circle about the point from which they were recorded. The deepest unit is shown on the right hand side and progressively more superficial units are arranged moving clockwise from this point. Units that conveyed very small amounts of spatial information are plotted in red and the most informative neurons are plotted in yellow.

Fig. 3D shows the distribution across auditory cortex of MI values between visual stimulus location and the responses of these units. Data from the 5 animals were collapsed onto a single auditory cortex, with the location of each unit represented by a single polygon on the cortical surface (see Fig. 1A for the typical location of each cortical field). Where multiple recordings were made at a single point (i.e. when units were recorded on multiple recording sites at different depths on the same electrode), recordings are arranged in a circle, with the deepest unit shown at the right hand side and progressively more superficial recordings plotted in a clockwise direction within the circle. The size of the polygon reflects the density of sampling in that area, while its color indicates the amount of spatial information conveyed in bits (with visually unresponsive units represented by open polygons). This figure clearly demonstrates that most information about the LED direction was conveyed by units located on the anterior bank of the ectosylvian gyrus, i.e. in ADF and AAF.

2.2. Spatial sensitivity to auditory stimuli

In order to compare the auditory and visual responses directly, sensitivity to VAS stimuli was tested over the same range of locations, i.e. from 5° to − 100° azimuth, provided by the LED array. Over this relatively limited region of space, only 23% of the units transmitted significant information about sound-source location and the MI values were generally lower than those obtained with visual stimuli (compare Figs. 3A and B). The distribution across auditory cortex of MI values between auditory stimulus location and response is shown in Figs. 3B and E. As with the visual responses, significantly higher values were found for units recorded in ADF (Fig. 3B; yellow polygons in Fig. 3E), suggesting that spatial sensitivity is greater in this field than in other cortical areas.

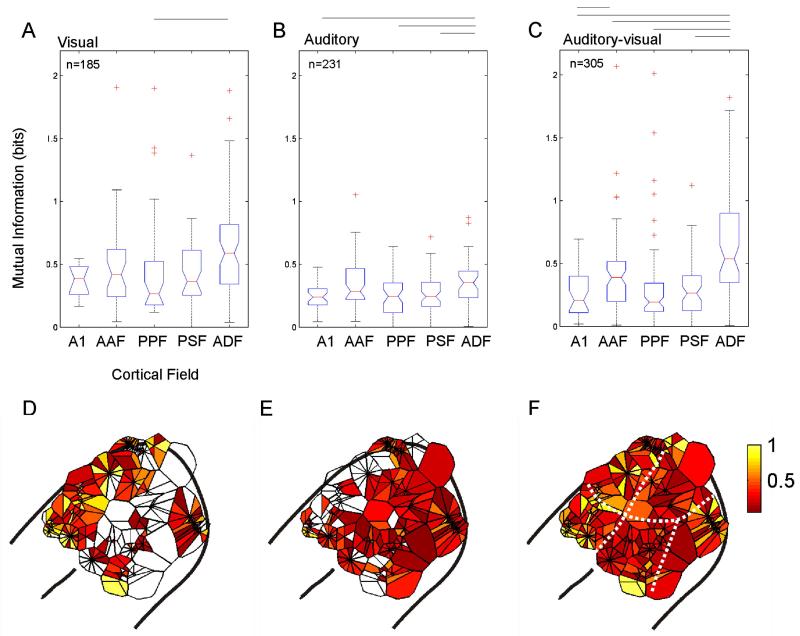

Given the restricted range of azimuths tested relative to the size of the spatial receptive fields reported in previous studies of ferret auditory cortex (Mrsic-Flogel et al., 2005) and the fact that a relatively high sound level was used, this paucity of auditory spatial sensitivity is perhaps not surprising. A subset (n = 93) of units was also tested with a more extensive range of virtual sound directions, from 95° to − 100° azimuth. Whereas only 15% (n = 14) of this subset were found to transmit a significant amount of spatial information when the estimation was based on the restricted range of locations (5° to − 100° azimuth), this increased to 52% (n = 48) over the larger range. These units included all those whose responses were spatially informative across the smaller range of sound directions.

Fig. 4 shows the responses of three units to noise bursts presented at different virtual sound directions. The units shown in Figs. 4A and C exhibited a contralateral preference, whereas the unit in Fig. 4B fired most when sounds were presented from in front of the animal. A comparison of the distribution across cortical areas of units showing significant auditory spatial tuning over the 95° to − 100° range of azimuths revealed that roughly one half of the units recorded in A1 (10/20), AAF (9/20) and PPF (9/17) were spatially tuned, with slightly more in PSF (12/16) and fewer in ADF (7/20). The distribution of MI values obtained in each cortical field is shown in Fig. 4D for all units for which azimuth response profiles were measured over the larger range of stimulus locations and in Fig. 4E for only those units that exhibited significant spatial tuning. There were significant differences between the values obtained in each field (Kruskal–Wallis, p = 0.010), with units in A1, PSF and ADF being more informative than those in AAF and PPF.

Fig. 4.

(A–C) Raster plots showing the responses of three units to broadband noise bursts (100 ms duration) presented in virtual acoustic space at a range of azimuthal locations (at a fixed elevation of + 5°). All three units transmitted significant information about sound-source location in their responses (0.42, 0.20, and 0.45 bits, respectively). Units A and B were recorded in A1, and unit C in ADF. (D and E) Distribution of MI values obtained from all units tested with this wider range of azimuths (D, n = 93) and from only those units whose responses were significantly spatially modulated (E, n = 48).

2.3. Combined auditory–visual stimulation can modulate the spatial information conveyed by auditory cortical neurons

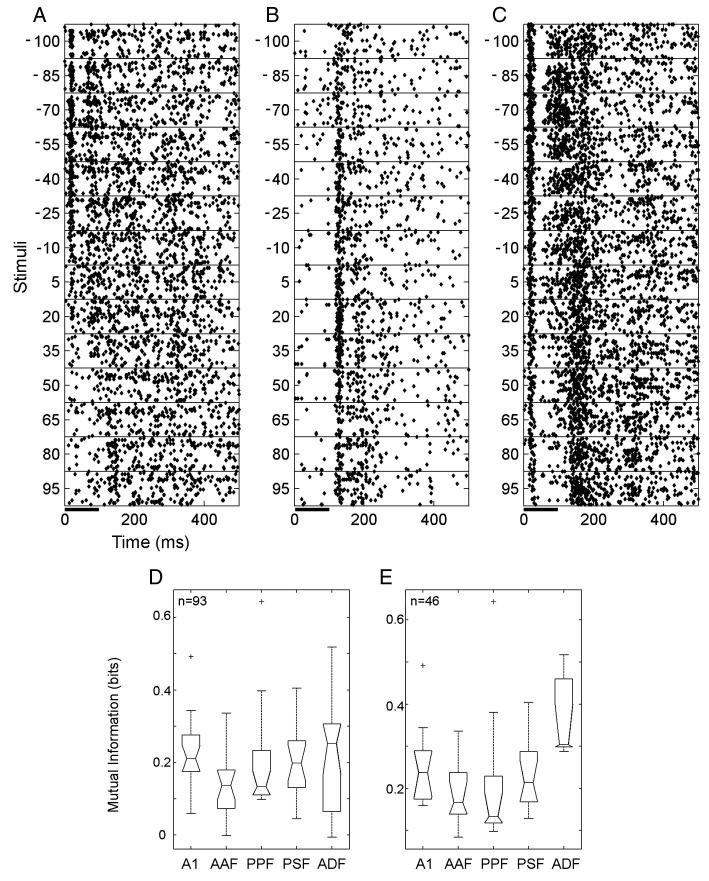

To examine whether the presence of a visual stimulus can alter the spatial information conveyed by auditory cortical neurons, visual and auditory stimuli were presented simultaneously from corresponding spatial locations. This was done for all units irrespective of their responses to unisensory stimulation. In order to quantify the types of interaction observed, we asked whether the amount of spatial information conveyed by combined auditory–visual stimulation was significantly different from the unisensory condition that was the more spatially sensitive. This was achieved using a bootstrapping procedure that first randomized the responses between the auditory and bisensory stimuli and recalculated the MI 1000 times, and then repeated this by randomizing trials between the visual and bisensory stimuli. If the MI for the bisensory condition was greater than both the 90th percentile values, estimated by bootstrapping, then there was said to be a significant positive interaction, i.e. combined visual–auditory stimulation increased the amount of spatial information in the response. However, if unisensory stimulation resulted in a value that was higher than the 90th percentile of the bootstrapped values, then combined stimulation was said to negatively interact, indicating that there was a significant decrease in the amount of spatial information available when bisensory stimulation was used. Forty-seven percent of units did not show a significant interaction, whereas combined stimulation increased the amount of information about stimulus location in 35% of units and decreased it in the remaining 18%.
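The classification rule described above can be sketched as follows. This is one possible reading of the procedure, not the study's implementation: responses are reduced to spike counts, the MI is a plug-in estimate, and "randomizing between conditions" is implemented by picking, for each trial slot at a given location, either the unisensory or the bisensory response at random. All function and variable names are hypothetical.

```python
import math
import random
from collections import Counter


def mutual_information(locations, responses):
    """Plug-in MI estimate (bits) between paired location labels and discrete responses."""
    n = len(locations)
    joint = Counter(zip(locations, responses))
    n_loc, n_resp = Counter(locations), Counter(responses)
    return sum((c / n) * math.log2(c * n / (n_loc[s] * n_resp[r]))
               for (s, r), c in joint.items())


def null_percentile(locs, uni, bis, n_boot=1000, pct=90, seed=0):
    """Percentile of MI after mixing unisensory and bisensory trials.

    Assumption: trial i of both conditions was presented at locs[i], so mixing
    preserves the location labels while scrambling the modality labels.
    """
    rng = random.Random(seed)
    null = []
    for _ in range(n_boot):
        mixed = [u if rng.random() < 0.5 else b for u, b in zip(uni, bis)]
        null.append(mutual_information(locs, mixed))
    null.sort()
    return null[min(len(null) - 1, int(len(null) * pct / 100))]


def classify_interaction(locs, aud, vis, bis, n_boot=1000, seed=0):
    """Return 'positive', 'negative' or 'none' for one unit."""
    mi_a, mi_v, mi_b = (mutual_information(locs, r) for r in (aud, vis, bis))
    thr_a = null_percentile(locs, aud, bis, n_boot, seed=seed)
    thr_v = null_percentile(locs, vis, bis, n_boot, seed=seed + 1)
    # Positive: bisensory MI exceeds both 90th-percentile values.
    if mi_b > thr_a and mi_b > thr_v:
        return "positive"
    # Negative: the more informative unisensory MI exceeds its 90th percentile.
    best_uni, thr_best = (mi_a, thr_a) if mi_a >= mi_v else (mi_v, thr_v)
    if best_uni > thr_best and best_uni > mi_b:
        return "negative"
    return "none"


# Toy unit: two locations, 30 trials each; untuned alone, tuned when combined.
locs = [0] * 30 + [1] * 30
aud = [1] * 60
vis = [0] * 60
bis = [0] * 30 + [2] * 30
print(classify_interaction(locs, aud, vis, bis, n_boot=200))  # positive
```

Mixing trials between conditions asks whether the bisensory MI could plausibly have arisen from a unit that responds identically in the two conditions, which is why the comparison is made against a percentile of the mixed distribution and not against the raw unisensory MI.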

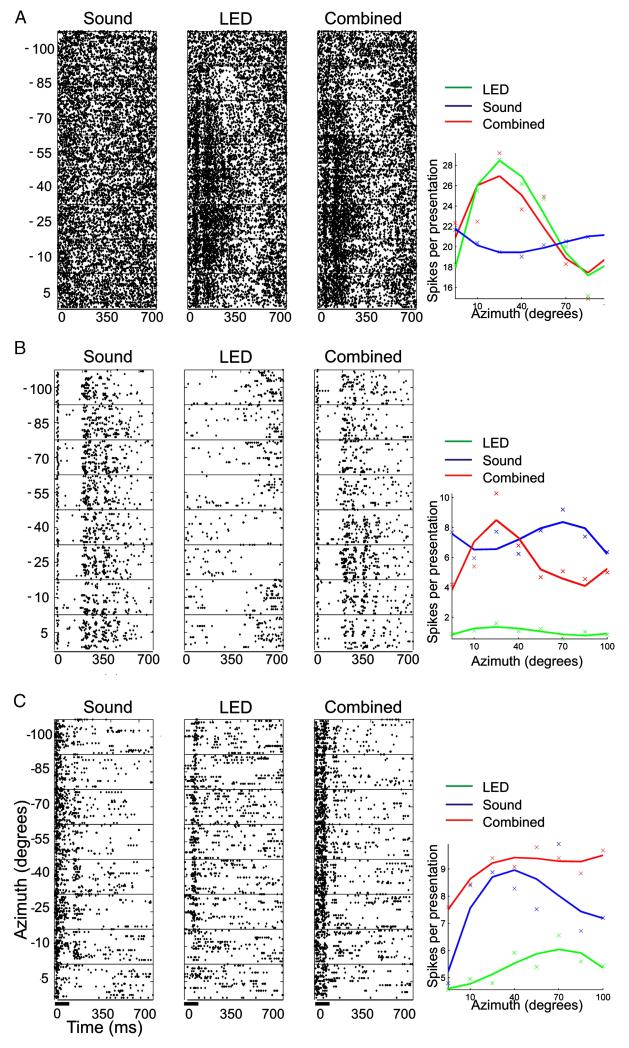

Fig. 5 shows the responses of 3 example units. The unit in Fig. 5A showed no crossmodal interaction with combined stimulation; it was sensitive only to visual stimuli and showed robust spatial tuning that was uninfluenced by a simultaneously presented acoustic stimulus. This is indicated by the similarity between the raster plots and the azimuth-spike-rate functions for visual and bisensory stimulation. Fig. 5B shows a unit that was classified as showing a positive interaction in response to bisensory stimulation. The auditory response did not contain significant information about the direction of the sound source (note the similarity in the raster plots for each azimuth angle). There was some indication that visual stimuli suppressed the spontaneous firing of this unit, although not in a spatially dependent fashion. However, when combined with sound this suppressive effect of the visual stimulus altered the shape of the auditory response profile, increasing the extent to which the spike rate was modulated by stimulus direction. As a result, this unit now transmitted significant spatial information. Finally, Fig. 5C shows an example of a unit that was classified as displaying a negative crossmodal interaction. This unit was responsive to both unisensory visual and auditory stimuli and, in both cases, there was a weak, but significant, modulation of the response with spatial location. However, when the stimuli were presented in combination, a much more robust spiking response was elicited and the unit was strongly driven at previously ineffective locations. As a result, the degree to which the unit’s response was modulated by stimulus location was reduced to such an extent that it no longer contained significant spatial information.

Fig. 5.

Examples of responses to auditory, visual and combined auditory–visual stimulation. For each unit, the response to the three stimulus types presented at different azimuths is shown by the raster plots and by the spike rate functions (plotted over a 300 ms window and fitted with a cubic polynomial). (A) Unisensory visual unit that was spatially tuned and unaffected by simultaneously presented sound. Spatial MI values obtained for this unit were 0.45, 1.9 and 2.01 bits for acoustic, visual and bisensory stimulation, respectively. (B) Acoustically responsive unit that responded in a consistent fashion across the range of azimuths tested. Unisensory visual stimulation suppressed the spontaneous activity of this unit and, when combined with acoustic stimulation, resulted in a spatially tuned response. Spatial MI values obtained for this unit were 0.31, 0.29 and 0.38 bits for acoustic, visual and bisensory stimulation, respectively. (C) Bisensory unit showing broad spatial tuning to both auditory and visual unisensory stimulation. Pairing auditory and visual stimulation produced a more robust spiking response in this unit, which was modulated much less by spatial location. Spatial MI values obtained for this unit were 0.49, 0.51 and 0.45 bits for acoustic, visual and bisensory stimulation, respectively.

2.4. Visual stimulation enhances auditory spatial tuning

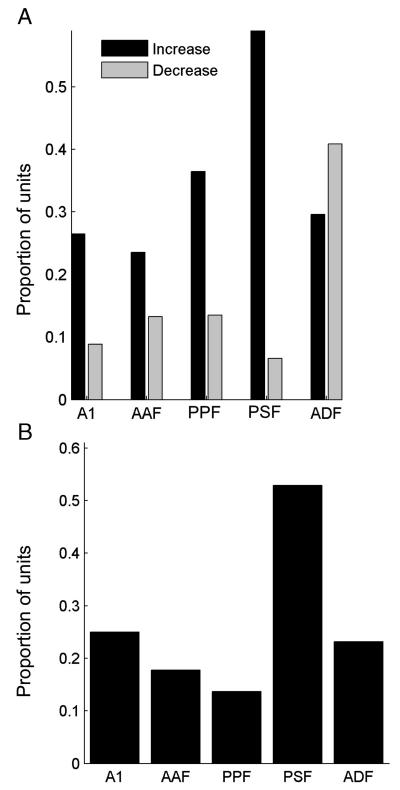

Fig. 6A shows the proportion of units in each cortical field that exhibited either positive (black) or negative (gray) visual–auditory interactions. In nearly all areas, the majority of units showed positive interactions, indicating that combined visual–auditory stimulation increased the amount of spatial information in the response compared to that elicited by the most effective unisensory stimulus. In order to assess the overall effects of the visual stimuli on auditory spatial sensitivity, we also determined the proportion of units in each cortical area whose responses transmitted more information about stimulus location when spatially aligned visual and auditory stimuli were presented together compared to the responses to sound alone (Fig. 6B). Around 10–20% of auditory units in all cortical areas showed enhanced spatial tuning in the presence of bisensory stimuli, with the exception of PSF, where this figure rose to ~ 50%.

Fig. 6.

(A) Bar graph showing the proportion of units in each cortical field where a significant crossmodal interaction was observed. The proportion of units in which combined auditory–visual stimulation resulted in a significant increase or decrease in spatial information, relative to the most spatially informative unisensory response, is shown by the black and gray bars, respectively. (B) Bar graph plotting the proportion of units within each cortical field in which the addition of a spatially and temporally congruent visual stimulus led to an increase in spatial information compared to that estimated from the response to sound alone.

The amount of spatial information available in the unit responses recorded in each of the five cortical fields to combined auditory–visual stimulation is shown in Fig. 3C, while Fig. 3F plots these values for each unit recorded on the surface of the cortex. This illustrates that neurons in auditory cortex tend to transmit most information about stimulus location when bisensory stimuli are present, as indicated by the greater proportion of polygons that are yellow in color. This is evident when compared to unisensory visual stimulation (Fig. 3D), but is most marked when compared to unisensory auditory stimulation (Fig. 3E). This increase in MI was seen in all auditory cortical fields, but, as with both auditory and visual stimuli presented in isolation, the highest multisensory MI values were associated with units in ADF, suggesting that this is the region of ferret auditory cortex that is most concerned with spatial processing.

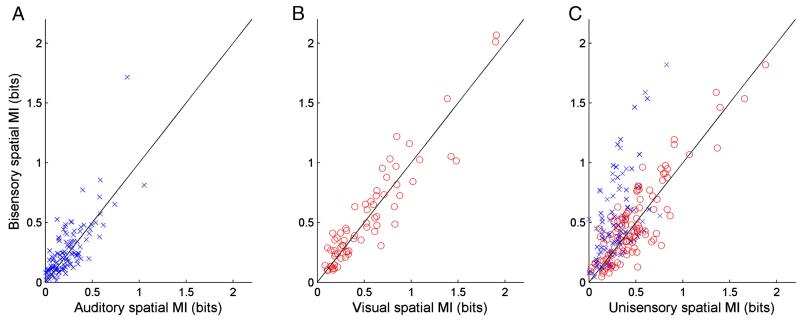

The amount of spatial information available in the responses of units, combined across all 5 cortical fields, classified as unisensory auditory, unisensory visual and bisensory is compared for unisensory and bisensory stimulation in Fig. 7. In the case of the unisensory auditory (Fig. 7A) and unisensory visual (Fig. 7B) units, the addition of the second stimulus modality could result in either an increase or decrease in transmitted information. Fig. 7C plots the change in MI in the bisensory stimulus relative to either the unisensory auditory (blue crosses) or visual (red circles) stimulus. The largest increases in MI were observed when the visual stimulus was added to spatially congruent sounds in the bisensory condition, suggesting that they make the greatest contribution to the enhanced spatial processing observed in the auditory cortex in the presence of multisensory cues.

Fig. 7.

Scatter plots showing the amount of spatial information available from unisensory stimulation (blue crosses, auditory; red circles, visual) against that obtained with bisensory stimulation for units classified as unisensory auditory (A), unisensory visual (B) or bisensory (C).

3. Discussion

We have demonstrated that neurons within auditory cortex frequently transmit significant amounts of information about the location of a visual stimulus. Moreover, for some neurons spatially and temporally congruent visual stimuli increased the amount of spatial information available from the neural response when compared to acoustic stimulation alone. These data suggest that visual inputs into auditory cortex can enhance the spatial processing capacity of auditory cortical neurons.

As in our previous study (Bizley et al., 2007), visual stimuli were found to drive or modulate the activity of some units in all auditory cortical areas from which recordings were made. However, the incidence of these visual responses and their influence on the spatial sensitivity of the neurons varied across auditory cortex. Neurons in ADF, on the anterior bank of the ectosylvian gyrus, were most likely to convey significant information about light-source location, and tended to have significantly higher MI values than the responses of units recorded in other cortical areas. ADF neurons also generally transmitted more information about sound-source location, suggesting that this region of ferret auditory cortex might be particularly involved in processing spatial information. Combined auditory–visual stimulation revealed evidence for multisensory interactions in a large proportion of ADF units, about half of which transmitted significantly more spatial information relative to the most informative unisensory stimulus. These findings are illustrated in Figs. 3D–F, which show that spatial sensitivity for auditory, visual and bisensory stimulation is highest at the ventral extreme of ADF, close to the boundary with the anterior ventral field (AVF) and the anterior ectosylvian sulcal field (fAES), both of which are known to be multisensory areas (Bizley et al., 2007, Manger et al., 2005 and Ramsay and Meredith, 2004). The fields on the anterior bank of the ectosylvian gyrus are heavily innervated by area SSY (Bizley et al., 2007), located in the caudal bank of the suprasylvian sulcus, and thought to be the ferret homologue of primate visual motion processing area MT (Philipp et al., 2005). Although multisensory thalamic nuclei are also a potential source of visual input to the auditory cortex (Budinger et al., 2007 and Hackett et al., 2007), the concordance between the functional characteristics of visual responses in ADF and the visual cortical areas that innervate the anterior bank supports the idea that these responses are cortical in origin.

It is striking that a far higher proportion of units recorded in PSF exhibited crossmodal spatial interactions and conveyed more information about stimulus location when visual and auditory stimuli were combined than those recorded in other cortical fields (Fig. 6). While revealing a marked influence of visual stimuli on the spatial sensitivity of neurons in this part of the cortex, the MI values obtained for all stimulus conditions, including bisensory stimulation, were far lower than those estimated for ADF neurons and comparable to those in the other fields. However, it is clear from Fig. 3F that some PSF units, particularly near the caudal edge of the gyrus, are relatively informative about stimulus location. PSF, like PPF, is innervated by area 20 (Bizley et al., 2007), which is thought to be part of the visual “what” processing stream (Manger et al., 2004). Although the significance of clusters of spatially informative neurons in this part of auditory cortex is not immediately clear, PSF is, unlike PPF, reciprocally connected with fAES (J. K. Bizley, F. R. Nodal, V. M. Bajo and A. J. King, unpublished observation), which may explain the relatively high incidence of multisensory interactions that are found there.

Thus, while the present results support the general distinction between cortical areas involved in visual localization and identification, it seems likely that this distinction is not complete and that there is an overlap in function between them. Indeed, it has been shown that neurons in macaque V4 are, under certain circumstances, directionally selective (Tolias et al., 2005). The influence of visual inputs on the spatial sensitivity of many neurons in PSF may therefore reflect the particularly broad auditory spatial tuning of these neurons or the presence of limited visual spatial sensitivity, arising either within the ferret’s equivalent of the ventral visual processing stream or from areas, including fAES, that are more concerned with spatial processing.

In this study, visual and auditory stimuli were presented from a range of spatially matched locations. Studies of multisensory integration in the superior colliculus have shown that spatially congruent stimuli tend to result in response enhancement, whereas spatially discordant stimuli give rise to suppressive interactions (e.g. King and Palmer, 1985, Meredith and Stein, 1983, Meredith and Stein, 1986 and Wallace et al., 1996). It is generally assumed that these changes in firing rate contribute to the relative accuracy with which animals orient toward unisensory and bisensory stimuli (Stein et al., 1988), an assumption supported by the behavioral deficits observed following collicular lesions (Burnett et al., 2004). By estimating the MI between the stimuli and the spike trains, we were able to show at the level of individual neurons that combining visual and auditory stimuli from the same direction in space does indeed, in many cases, increase the information transmitted about stimulus location.

Other studies have shown that bisensory stimulation can suppress the responses of cortical neurons (e.g. Bizley et al., 2007, Dehner et al., 2004 and Ghazanfar et al., 2005). The direction of the change in evoked firing rate does not, however, necessarily reveal the functional significance of the multisensory interaction. For example, we observed cases (e.g. Fig. 5C) in which spatially congruent visual and auditory stimuli resulted in stronger but less spatially sensitive responses compared to those evoked by unisensory stimulation. In this case, multisensory facilitation of the spike firing rate was observed across a broad range of azimuths, degrading the information about stimulus location in the response of the neuron. In other cases (Fig. 5B), suppressive interactions between the visual and auditory inputs sharpened the spatial receptive field, which conveyed significantly more spatial information than the responses to unisensory stimulation. These findings stress the importance of adopting objective measures, like estimating the MI between the stimulus and response, which takes into account both the number and the timing of the evoked spikes, when attempting to assess the neuronal consequences of multisensory convergence and integration.

The range of azimuthal locations tested in this study was restricted to locations that fell just within the ferret’s visual field, which extends from ~25° ipsilateral to ~130° contralateral. Auditory spatial receptive fields of cortical neurons are often larger than this, and such neurons can respond to sounds presented across the full 360° of azimuth. Nevertheless, the responses of these neurons typically vary within this large receptive field (Benedek et al., 1996, Eordegh et al., 2005, Middlebrooks and Pettigrew, 1981, Mrsic-Flogel et al., 2005, Stecker et al., 2005 and Xu et al., 1998). This study extends this concept by showing that congruent visual stimuli can also alter spatial tuning within the auditory receptive field. However, it is important to note that we have not explored the effects of combining visual stimuli with sounds presented over the full azimuthal extent of each auditory receptive field. Moreover, sensitivity to sound-source elevation is also commonly observed in auditory cortical neurons (Mrsic-Flogel et al., 2005 and Xu et al., 1998) and was not examined here. Further studies exploring a greater range of stimulus locations are therefore required for a full understanding of spatial integration of auditory-visual cues in auditory cortex.

The ferret is increasingly being used as a model species for auditory processing. An important question is to what degree findings in this species can be related to those in other species. The cat has been extensively used for studying auditory cortex, and it is now possible to draw homologies between certain auditory fields in these two species. A1 is well conserved across mammalian species, and AAF appears to be equivalent in cat and ferret (Kowalski et al., 1995, Imaizumi et al., 2004 and Bizley et al., 2005). The posterior fields, PPF and PSF, in the ferret share certain similarities with the posterior auditory field (PAF) in the cat, both being tonotopically organized and containing neurons with relatively long response latencies and rich discharge patterns (Phillips and Orman, 1984, Stecker et al., 2003 and Bizley et al., 2005). It remains to be seen, however, whether either of these areas exhibits responses that are particularly suited for spatial analysis, as is the case in cat PAF (Stecker et al., 2003 and Malhotra and Lomber, 2007). Like the cat’s secondary auditory field, A2 (Schreiner and Cynader, 1984), ferret ADF lacks tonotopic organization and contains neurons with broad frequency-response areas (Bizley et al., 2005), while the high incidence of multisensory convergence in ferret AVF suggests that this area is likely to be near or actually homologous to cat fAES (Benedek et al., 1996, Manger et al., 2005, Ramsay and Meredith, 2004 and Wallace et al., 1992).

Although multisensory convergence is widespread in the cortex of a range of species, there is little clear-cut evidence for the functional role played by the interactions observed in specific cortical areas. It has, however, recently been shown that somatosensory inputs can modulate the phase of oscillatory activity in monkey auditory cortex, potentially amplifying the response to auditory signals produced by the same source (Lakatos et al., 2007). Activity in auditory cortex is also modulated by mouth movements, but not by moving circles, in both monkeys (Ghazanfar et al., 2005) and humans (Pekkola et al., 2005), suggesting an involvement in audiovisual communication. Similarly, within the spatial domain, previous studies have proposed that auditory cortex might play a key role in the ventriloquism illusion (Recanzone, 1998 and Woods and Recanzone, 2004). By showing that auditory spatial receptive fields can be shaped by a simultaneously presented visual stimulus, our data reveal a possible neural substrate for vision-induced improvements in sound localization. By the same token, because more information is typically conveyed by the visual responses, it might be expected that conditioning with spatially offset sound and light would reshape auditory spatial receptive fields in ways that could underlie the visual capture of sound-source location.

4. Experimental procedures

4.1. Animal preparation

All animal procedures were approved by the local ethical review committee and performed under licence from the UK Home Office in accordance with the Animals (Scientific Procedures) Act 1986. The electrophysiological results presented in this study were obtained from six adult pigmented ferrets (Mustela putorius), which also contributed data to other studies in our laboratory. All animals received regular otoscopic examinations prior to the experiment to ensure that both ears were clean and disease free.

Anesthesia was induced by a single dose of a mixture of medetomidine (Domitor; 0.022 mg/kg/h; Pfizer, Sandwich, UK) and ketamine (Ketaset; 5 mg/kg/h; Fort Dodge Animal Health, Southampton, UK). The left radial vein was cannulated and a continuous infusion (5 ml/h) of a mixture of medetomidine and ketamine in physiological saline containing 5% glucose was provided throughout the experiment. The ferret also received a single, subcutaneous dose of 0.06 mg/kg/h atropine sulphate (C-Vet Veterinary Products, Leyland, UK) and 12-hourly subcutaneous doses of 0.5 mg/kg dexamethasone (Dexadreson; Intervet UK Ltd., Milton Keynes, UK) in order to reduce the risk of bronchial secretions and cerebral oedema, respectively. The ferret was intubated and placed on a ventilator. Body temperature, end-tidal CO2 and the electrocardiogram (ECG) were monitored throughout the experiment.

The animal was placed in a stereotaxic frame and the temporal muscles on both sides were retracted to expose the dorsal and lateral parts of the skull. A metal bar was cemented and screwed onto the right side of the skull, holding the head without further need of a stereotaxic frame. The right pupil was protected with a zero-refractive power contact lens. On the left side, the temporal muscle was largely removed to gain access to the auditory cortex, which is bounded by the suprasylvian sulcus (Fig. 1) (Kelly et al., 1986). The suprasylvian and pseudosylvian sulci were exposed by a craniotomy. The overlying dura was removed and the cortex covered with silicone oil. The animal was then transferred to an anechoic chamber (IAC Ltd, Winchester, UK).

Eye position measurements were taken from 3 animals (5 eyes) approximately 7 h after anesthesia was induced by back-reflecting the location of the optic disc using a reversible ophthalmoscope fitted with a corner-cube prism. The mean ± SD azimuth values were 33.4 ± 3.5°. In one animal (one eye), the values obtained from repeated measurements taken over a 48-hour period were 33.6 ± 2.0° azimuth, indicating that eye position remained constant.

4.2. Stimuli

Acoustic stimuli were generated using TDT system 3 hardware (Tucker-Davis Technologies, Alachua, FL). Panasonic headphone drivers (RPHV297, Panasonic, Bracknell, UK) were used. Closed-field calibrations were performed using a 1/8-inch condenser microphone (Brüel and Kjær, Naerum, Denmark), placed at the end of a model ferret ear canal, to create an inverse filter that ensured the driver produced a flat (< ±5 dB) output up to 25 kHz.

Pure tone stimuli were used to obtain frequency-response areas, both to characterize individual units and to determine tonotopic gradients in order to identify in which cortical field any given recording was made. The tone frequencies used ranged, in 1/3-octave steps, from 200 Hz to 24 kHz. Intensity levels were varied between 10 and 80 dB SPL in 10 dB increments. Each frequency-level combination was presented pseudorandomly ≥ 3 times at a rate of once per second. Broadband noise bursts (40 Hz–30 kHz bandwidth and cosine ramped with a 10 ms rise/fall time), generated afresh on every trial, were used as a search stimulus in order to establish whether each unit was acoustically responsive.
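The gating applied to the noise bursts can be sketched as follows. This is a minimal illustration, not the authors' stimulus code: the function name, the 100 ms burst duration and the 100 kHz sample rate are assumptions made here for the example, and band-limiting to 40 Hz–30 kHz is omitted. Only the 10 ms raised-cosine rise/fall and the use of freshly generated noise on each call follow the description above.

```python
import math
import random


def cosine_ramped_noise(duration_ms=100.0, ramp_ms=10.0, fs_hz=100_000, seed=None):
    """Return Gaussian noise samples gated with raised-cosine onset/offset ramps.

    duration_ms and fs_hz are illustrative assumptions; ramp_ms follows the
    10 ms rise/fall time given in the text. No band-pass filtering is applied.
    """
    rng = random.Random(seed)
    n = int(round(duration_ms * fs_hz / 1000))
    n_ramp = int(round(ramp_ms * fs_hz / 1000))
    if 2 * n_ramp > n:
        raise ValueError("ramps are longer than the burst")
    # Fresh noise on every call (seed=None), mirroring 'generated afresh on every trial'.
    samples = [rng.gauss(0.0, 1.0) for _ in range(n)]
    for i in range(n_ramp):
        # Raised-cosine gain: 0 at the first sample, approaching 1 at the end of the ramp.
        gain = 0.5 * (1.0 - math.cos(math.pi * i / n_ramp))
        samples[i] *= gain            # onset ramp
        samples[n - 1 - i] *= gain    # mirrored offset ramp
    return samples
```

Passing a fixed `seed` makes a burst reproducible for testing; leaving it as `None` gives a new noise token each time.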

Virtual acoustic space (VAS) stimuli were used to vary the location of the closed-field noise bursts. A series of measurements, including head size, sex, body weight and pinna size, were taken from each ferret in order to select the best match from our extensive library of ferret head-related transfer functions (HRTFs). It has previously been shown that individual differences in the acoustical cues can be significantly reduced by frequency scaling the HRTFs according to differences in body size (Xu and Middlebrooks, 2000 and Schnupp et al., 2003). Basing the stimuli on measurements from animals that most closely matched the ones used in the present study should therefore improve the fidelity of the VAS and avoid the need for making individual acoustic measurements. The visual stimuli were presented from an array of 8 LEDs, positioned 1 m from the animal and at 15° intervals from 5° ipsilateral of the midline (denoted as 5° azimuth) to 100° contralateral to the midline (−100° azimuth). Broadband noise was presented in VAS from these same positions. Acoustic and visual stimuli were presented alone and in combination, pseudorandomly, at a rate of 1 presentation per second.
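The stimulus set described above can be enumerated with a short sketch. The function names, condition labels and the `repeats` parameter are hypothetical and introduced only for illustration; the number of repetitions per condition is not stated in this section. The azimuth values follow from the stated 15° spacing between 5° and −100°.

```python
import random


def led_azimuths(first_deg=5, last_deg=-100, step_deg=15):
    """Azimuths (degrees) of the stimulus positions; negative values are contralateral."""
    return list(range(first_deg, last_deg - 1, -step_deg))


def trial_order(azimuths, conditions=("auditory", "visual", "bisensory"),
                repeats=1, seed=None):
    """Return a shuffled list of (condition, azimuth) trials.

    'repeats' is an assumed parameter; each condition/azimuth pair appears
    'repeats' times before shuffling.
    """
    rng = random.Random(seed)
    trials = [(c, az) for c in conditions for az in azimuths] * repeats
    rng.shuffle(trials)
    return trials
```

With the default arguments, `led_azimuths()` gives the eight positions 5, −10, −25, −40, −55, −70, −85 and −100°, so one pass through all three stimulus conditions contains 24 trials.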

4.3. Data acquisition

Recordings were made with silicon probe electrodes (Neuronexus Technologies, Ann Arbor, MI). We used probes with either 16 or 32 active sites, in a 1 × 16, 2 × 16 or 4 × 8 configuration (number of shanks × number of positions on each shank). Multiple shanks were separated by 200 μm and active sites were separated vertically by 150 μm. The electrodes were positioned so that they entered the cortex orthogonal to the surface of the ectosylvian gyrus. Recordings were made in five of the seven identified acoustically responsive areas: the tonotopically organized primary fields A1 and AAF, located on the middle ectosylvian gyrus; PPF and PSF, which are tonotopically organized areas on the posterior bank, and ADF, a non-tonotopic area located on the anterior bank of the ectosylvian sulcus. In each animal sufficient penetrations were made to visualize the tonotopic organization of auditory cortex. While there is a degree of inter-animal variability in the location of different auditory fields within the ectosylvian gyrus, the auditory cortex in the ferret is clearly separated from surrounding visual and somatosensory areas by the suprasylvian sulcus. The five cortical areas are readily distinguished from each other on the basis of the tonotopic gradients and their position with respect to the suprasylvian sulcus. Thus, there is a clear low frequency reversal at the ventral edge of the primary fields and a second separating the two posterior fields, which are also tonotopically ordered. ADF can be distinguished on the basis that it lies ventral to the low frequency edge of AAF. Typically, ADF neurons have very broad frequency-response areas and have higher thresholds for pure tones than neurons in the primary and posterior fields. Neurons in ADF can be distinguished from those in AVF as the latter are not well driven by pure tones. Neurons in AAF and ADF have shorter response latencies than those in A1 and the posterior fields, respectively. Moreover, neurons recorded in PSF and PPF tend to exhibit more sustained firing.

The neuronal recordings were bandpass filtered (500 Hz–5 kHz), amplified (up to 20,000×) and digitized at 25 kHz. Data acquisition and stimulus generation were performed using BrainWare (Tucker-Davis Technologies).

4.4. Data analysis

Spike sorting was performed offline. Single units were isolated from the digitized signal by manually clustering data according to spike features such as amplitude, width and area. We also inspected auto-correlation histograms and only cases in which the inter-spike-interval histograms revealed a clear refractory period were classed as single units.

We have previously shown that MI estimates are a robust and sensitive method of quantifying multisensory interactions (Bizley et al., 2007). Briefly, the stimulus S and neural response R were treated as random variables and the MI between them, I(S;R), measured in bits, was calculated as a function of their joint probability p(s, r) and defined as:

$$I(S;R) = \sum_{s,r} p(s,r)\,\log_2 \frac{p(s,r)}{p(s)\,p(r)}$$

where p(s) and p(r) are the marginal distributions (Cover and Thomas, 1991). The MI is zero if the two variables are independent (that is, p(s, r) = p(s)p(r) for every r and s) and is positive otherwise.
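The standard definition of I(S;R) in terms of the joint and marginal distributions can be illustrated with a plug-in estimate computed from paired observations. This is a minimal sketch under the assumption that probabilities are estimated as relative frequencies; the function name is hypothetical and no bias correction is applied.

```python
from collections import Counter
from math import log2


def mutual_information(stimuli, responses):
    """Plug-in estimate of I(S;R), in bits, from paired stimulus/response labels."""
    n = len(stimuli)
    if n == 0 or n != len(responses):
        raise ValueError("need two non-empty sequences of equal length")
    joint = Counter(zip(stimuli, responses))   # counts of each (s, r) pair
    n_s = Counter(stimuli)                     # counts of each stimulus
    n_r = Counter(responses)                   # counts of each response
    mi = 0.0
    for (s, r), c in joint.items():
        # p(s,r) * log2[p(s,r) / (p(s) p(r))], with each p estimated as count / n
        mi += (c / n) * log2(c * n / (n_s[s] * n_r[r]))
    return mi
```

For example, two equally likely stimuli that always evoke different responses give 1 bit, whereas responses that are distributed identically for both stimuli give 0 bits.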

The degree to which a neuron was spatially informative was estimated for the bisensory, unisensory visual and unisensory auditory responses separately using the following method. Neural responses were divided into n time bins of length t. For each time bin the spike count was used in order to calculate the MI between stimulus location and the spike count in that bin. This was repeated for all time bins over a response duration of 600 ms. The MI was then summed across all time bins to give the final value. This procedure was repeated for time bins of length 20, 35, 100, 300 and 600 ms. In order to estimate whether the amount of information calculated (in bits) was “significant”, a bootstrapping procedure was performed whereby within each time bin responses were randomly assigned to spatial position and the MI recalculated. This was repeated 1000 times and, if the actual value exceeded the 90th percentile of the bootstrapped values, the response was considered to be significantly spatially informative. The MI value used for subsequent analysis was the highest significant value obtained across the bin lengths tested. MI estimates can be subject to significant positive sampling bias (Nelken et al., 2005 and Rolls and Treves, 1998). Standard bias correction procedures were used (Treves and Panzeri, 1995) and the MI values reported were all bias-corrected.
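The binning and resampling steps described above might be sketched as follows. This is an illustrative reconstruction, not the analysis code used in the study: all function names are hypothetical, the trailing partial bin is dropped when the bin length does not divide 600 ms (an assumption, since the handling is not stated), the percentile is taken by simple indexing into the sorted null values, and the bias correction is omitted.

```python
import random
from collections import Counter
from math import log2


def mi_bits(stimuli, responses):
    """Plug-in mutual information (bits) between two paired label sequences."""
    n = len(stimuli)
    joint, n_s, n_r = Counter(zip(stimuli, responses)), Counter(stimuli), Counter(responses)
    return sum((c / n) * log2(c * n / (n_s[s] * n_r[r])) for (s, r), c in joint.items())


def binned_counts(spike_times_ms, bin_ms, duration_ms=600):
    """Spike counts in consecutive bins; any trailing partial bin is dropped (assumption)."""
    n_bins = duration_ms // bin_ms
    counts = [0] * n_bins
    for t in spike_times_ms:
        if 0 <= t < n_bins * bin_ms:
            counts[int(t // bin_ms)] += 1
    return counts


def summed_mi(locations, counts_per_trial, rng=None):
    """Sum per-bin MI across bins; if rng is given, shuffle locations within each bin."""
    total = 0.0
    for b in range(len(counts_per_trial[0])):
        labels = list(locations)
        if rng is not None:
            rng.shuffle(labels)  # randomly reassign responses to spatial positions
        total += mi_bits(labels, [counts[b] for counts in counts_per_trial])
    return total


def shuffle_test(locations, trials, bin_ms, n_shuffles=1000, percentile=90, seed=0):
    """Return (observed MI, null threshold, significant?) for one bin length."""
    rng = random.Random(seed)
    counts = [binned_counts(t, bin_ms) for t in trials]
    observed = summed_mi(locations, counts)
    null = sorted(summed_mi(locations, counts, rng) for _ in range(n_shuffles))
    threshold = null[min(n_shuffles - 1, int(n_shuffles * percentile / 100))]
    return observed, threshold, observed > threshold
```

Here `locations` holds one stimulus position per trial and `trials` holds the corresponding lists of spike times in milliseconds. Calling `shuffle_test` once per bin length (20, 35, 100, 300 and 600 ms) and keeping the largest significant value would mirror the selection step described in the text.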

Calculating MI values has two advantages. First, it yields a value, in bits, that is itself readily interpretable: the number of stimuli (in this case spatial locations) that are discriminable is $2^{\mathrm{MI}}$, with MI expressed in bits. Second, the MI values obtained in different stimulus conditions (i.e. unisensory visual, unisensory auditory and bisensory stimulation) can be used to make quantitative comparisons of information transmission across those conditions.
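As a worked illustration of this relationship, the values below are chosen purely for the example and are not taken from the recorded data. With 8 stimulus locations, the MI cannot exceed the stimulus entropy, which is at most 3 bits.

```latex
% Illustrative values only; not results from this study.
\begin{align*}
I(S;R) \le H(S) &\le \log_2 8 = 3\ \text{bits}\\
\mathrm{MI} = 1\ \text{bit} &\;\Rightarrow\; 2^{1} = 2\ \text{discriminable locations}\\
\mathrm{MI} = 1.5\ \text{bits} &\;\Rightarrow\; 2^{1.5} \approx 2.83\ \text{discriminable locations}\\
\mathrm{MI} = 3\ \text{bits} &\;\Rightarrow\; 2^{3} = 8\ \text{discriminable locations}
\end{align*}
```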

In order to compare the MI values in different auditory cortical fields, composite auditory maps were created by pooling data from the 5 animals. Each electrode penetration was first assigned to a cortical field on the basis of the frequency-response areas and tonotopic organization recorded in that animal, together with photographs documenting the location of each recording site with respect to sulcal landmarks on the cortical surface. These data were then placed onto a single auditory cortex using the frequency organization revealed by the intrinsic optical imaging study of Nelken et al. (2004), with the individual frequency maps overlaid to create the composite views shown in Fig. 3.

Acknowledgments

We are grateful to Kerry Walker, Fernando Nodal and Jan Schnupp for their assistance with data collection and to the Wellcome Trust (Principal Research Fellowship to A. J. King) and BBSRC (grant BB/D009758/1 to J. Schnupp, A. J. King and J. K. Bizley) for the financial support.

Abbreviations

- A1: primary auditory cortex
- AAF: anterior auditory field
- ADF: anterior dorsal field
- AVF: anterior ventral field
- MI: mutual information
- PPF: posterior pseudosylvian field
- PSF: posterior suprasylvian field

References

- Benedek G, Fischer-Szatmari L, Kovacs G, Perenyi J, Katoh YY. Visual, somatosensory and auditory modality properties along the feline suprageniculate-anterior ectosylvian sulcus/insular pathway. Prog. Brain Res. 1996;112:325–334. doi: 10.1016/s0079-6123(08)63339-7.

- Bizley JK, Nodal FR, Nelken I, King AJ. Functional organization of ferret auditory cortex. Cereb. Cortex. 2005;15:1637–1653. doi: 10.1093/cercor/bhi042.

- Bizley JK, Nodal FR, Bajo VM, Nelken I, King AJ. Physiological and anatomical evidence for multisensory interactions in auditory cortex. Cereb. Cortex. 2007;17:2172–2189. doi: 10.1093/cercor/bhl128.

- Brosch M, Selezneva E, Scheich H. Nonauditory events of a behavioral procedure activate auditory cortex of highly trained monkeys. J. Neurosci. 2005;25:6797–6806. doi: 10.1523/JNEUROSCI.1571-05.2005.

- Budinger E, Heil P, Hess A, Scheich H. Multisensory processing via early cortical stages: connections of the primary auditory cortical field with other sensory systems. Neuroscience. 2006;143:1065–1083. doi: 10.1016/j.neuroscience.2006.08.035.

- Budinger E, Laszcz A, Lison H, Scheich H, Ohl FW. Non-sensory cortical and subcortical connections of the primary auditory cortex in Mongolian gerbils: bottom-up and top-down processing of neuronal information via field AI. Brain Res. 2007 Aug 22; doi: 10.1016/j.brainres.2007.07.084. Electronic publication ahead of print.

- Burnett LR, Stein BE, Chaponis D, Wallace MT. Superior colliculus lesions preferentially disrupt multisensory orientation. Neuroscience. 2004;124:535–547. doi: 10.1016/j.neuroscience.2003.12.026.

- Calvert GA, Brammer MJ, Bullmore ET, Campbell R, Iversen SD, David AS. Response amplification in sensory-specific cortices during crossmodal binding. Neuroreport. 1999;10:2619–2623. doi: 10.1097/00001756-199908200-00033.

- Cover TM, Thomas JA. Elements of Information Theory. Wiley & Sons; 1991.

- Dehner LR, Keniston LP, Clemo HR, Meredith MA. Cross-modal circuitry between auditory and somatosensory areas of the cat anterior ectosylvian sulcal cortex: a ‘new’ inhibitory form of multisensory convergence. Cereb. Cortex. 2004;14:387–403. doi: 10.1093/cercor/bhg135.

- Eordegh G, Nagy A, Berenyi A, Benedek G. Processing of spatial visual information along the pathway between the suprageniculate nucleus and the anterior ectosylvian cortex. Brain Res. Bull. 2005;67:281–289. doi: 10.1016/j.brainresbull.2005.06.036.

- Falchier A, Clavagnier S, Barone P, Kennedy H. Anatomical evidence of multimodal integration in primate striate cortex. J. Neurosci. 2002;22:5749–5759. doi: 10.1523/JNEUROSCI.22-13-05749.2002.

- Foxe JJ, Morocz IA, Murray MM, Higgins BA, Javitt DC, Schroeder CE. Multisensory auditory-somatosensory interactions in early cortical processing revealed by high-density electrical mapping. Brain Res. Cogn. Brain Res. 2000;10:77–83. doi: 10.1016/s0926-6410(00)00024-0.

- Foxe JJ, Wylie GR, Martinez A, Schroeder CE, Javitt DC, Guilfoyle D, Ritter W, Murray MM. Auditory–somatosensory multisensory processing in auditory association cortex: an fMRI study. J. Neurophysiol. 2002;88:540–543. doi: 10.1152/jn.2002.88.1.540.

- Ghazanfar AA, Maier JX, Hoffman KL, Logothetis NK. Multisensory integration of dynamic faces and voices in rhesus monkey auditory cortex. J. Neurosci. 2005;25:5004–5012. doi: 10.1523/JNEUROSCI.0799-05.2005.

- Giard MH, Peronnet F. Auditory–visual integration during multimodal object recognition in humans: a behavioral and electrophysiological study. J. Cogn. Neurosci. 1999;11:473–490. doi: 10.1162/089892999563544.

- Hackett TA, De La Mothe LA, Ulbert I, Karmos G, Smiley J, Schroeder CE. Multisensory convergence in auditory cortex, II. Thalamocortical connections of the caudal superior temporal plane. J. Comp. Neurol. 2007;502:924–952. doi: 10.1002/cne.21326.

- Imaizumi K, Priebe NJ, Crum PA, Bedenbaugh PH, Cheung SW, Schreiner CE. Modular functional organization of cat anterior auditory field. J. Neurophysiol. 2004;92:444–457. doi: 10.1152/jn.01173.2003.

- Kayser C, Petkov CI, Augath M, Logothetis NK. Functional imaging reveals visual modulation of specific fields in auditory cortex. J. Neurosci. 2007;27:1824–1835. doi: 10.1523/JNEUROSCI.4737-06.2007.

- Kelly JB, Judge PW, Phillips DP. Representation of the cochlea in primary auditory cortex of the ferret (Mustela putorius). Hearing Res. 1986;24:111–115. doi: 10.1016/0378-5955(86)90054-7.

- King AJ, Palmer AR. Integration of visual and auditory information in bimodal neurones in the guinea-pig superior colliculus. Exp. Brain Res. 1985;60:492–500. doi: 10.1007/BF00236934.

- Kowalski N, Versnel H, Shamma SA. Comparison of responses in the anterior and primary auditory fields of the ferret cortex. J. Neurophysiol. 1995;73:1513–1523. doi: 10.1152/jn.1995.73.4.1513.

- Lakatos P, Chen CM, O’Connell MN, Mills A, Schroeder CE. Neuronal oscillations and multisensory interaction in primary auditory cortex. Neuron. 2007;53:279–292. doi: 10.1016/j.neuron.2006.12.011.

- Malhotra S, Lomber SG. Sound localization during homotopic and heterotopic bilateral cooling deactivation of primary and nonprimary auditory cortical areas in the cat. J. Neurophysiol. 2007;97:26–43. doi: 10.1152/jn.00720.2006.

- Manger PR, Engler G, Moll CK, Engel AK. The anterior ectosylvian visual area of the ferret: a homologue for an enigmatic visual cortical area of the cat? Eur. J. Neurosci. 2005;22:706–714. doi: 10.1111/j.1460-9568.2005.04246.x.

- Manger PR, Nakamura H, Valentiniene S, Innocenti GM. Visual areas in the lateral temporal cortex of the ferret (Mustela putorius). Cereb. Cortex. 2004;14:676–689. doi: 10.1093/cercor/bhh028.

- Meredith MA, Stein BE. Interactions among converging sensory inputs in the superior colliculus. Science. 1983;221:389–391. doi: 10.1126/science.6867718.

- Meredith MA, Stein BE. Spatial factors determine the activity of multisensory neurons in cat superior colliculus. Brain Res. 1986;365:350–354. doi: 10.1016/0006-8993(86)91648-3.

- Middlebrooks JC, Pettigrew JD. Functional classes of neurons in primary auditory cortex of the cat distinguished by sensitivity to sound location. J. Neurosci. 1981;1:107–120. doi: 10.1523/JNEUROSCI.01-01-00107.1981.

- Molholm S, Ritter W, Murray MM, Javitt DC, Schroeder CE, Foxe JJ. Multisensory auditory–visual interactions during early sensory processing in humans: a high-density electrical mapping study. Brain Res. Cogn. Brain Res. 2002;14:115–128. doi: 10.1016/s0926-6410(02)00066-6.

- Molholm S, Ritter W, Javitt DC, Foxe JJ. Multisensory visual–auditory object recognition in humans: a high-density electrical mapping study. Cereb. Cortex. 2004;14:452–465. doi: 10.1093/cercor/bhh007.

- Mrsic-Flogel TD, King AJ, Schnupp JWH. Encoding of virtual acoustic space stimuli by neurons in ferret primary auditory cortex. J. Neurophysiol. 2005;93:3489–3503. doi: 10.1152/jn.00748.2004.

- Murray MM, Molholm S, Michel CM, Heslenfeld DJ, Ritter W, Javitt DC, Schroeder CE, Foxe JJ. Grabbing your ear: rapid auditory–somatosensory multisensory interactions in low-level sensory cortices are not constrained by stimulus alignment. Cereb. Cortex. 2005;15:963–974. doi: 10.1093/cercor/bhh197.

- Nelken I, Bizley JK, Nodal FR, Ahmed B, Schnupp JWH, King AJ. Large-scale organization of ferret auditory cortex revealed using continuous acquisition of intrinsic optical signals. J. Neurophysiol. 2004;92:2574–2588. doi: 10.1152/jn.00276.2004.

- Nelken I, Chechik G, Mrsic-Flogel TD, King AJ, Schnupp JWH. Encoding stimulus information by spike numbers and mean response time in primary auditory cortex. J. Comput. Neurosci. 2005;19:199–221. doi: 10.1007/s10827-005-1739-3.

- Pekkola J, Ojanen V, Autti T, Jääskeläinen IP, Möttönen R, Tarkiainen A, Sams M. Primary auditory cortex activation by visual speech: an fMRI study at 3T. Neuroreport. 2005;16:125–128. doi: 10.1097/00001756-200502080-00010.

- Philipp R, Distler C, Hoffmann KP. A motion-sensitive area in ferret extrastriate visual cortex: an analysis in pigmented and albino animals. Cereb. Cortex. 2006;16:779–790. doi: 10.1093/cercor/bhj022.

- Phillips DP, Orman SS. Responses of single neurons in posterior field of cat auditory cortex to tonal stimulation. J. Neurophysiol. 1984;51:147–163. doi: 10.1152/jn.1984.51.1.147.

- Ramsay AM, Meredith MA. Multiple sensory afferents to ferret pseudosylvian sulcal cortex. Neuroreport. 2004;15:461–465. doi: 10.1097/00001756-200403010-00016.

- Recanzone GH. Rapidly induced auditory plasticity: the ventriloquism aftereffect. Proc. Natl. Acad. Sci. U. S. A. 1998;95:869–875. doi: 10.1073/pnas.95.3.869.

- Rockland KS, Ojima H. Multisensory convergence in calcarine visual areas in macaque monkey. Int. J. Psychophysiol. 2003;50:19–26. doi: 10.1016/s0167-8760(03)00121-1.

- Rolls ET, Treves A. Neural Networks and Brain Function. Oxford University Press; Oxford: 1998.

- Schnupp JWH, Booth J, King AJ. Modeling individual differences in ferret external ear transfer functions. J. Acoust. Soc. Am. 2003;113:2021–2030. doi: 10.1121/1.1547460.

- Schreiner CE, Cynader MS. Basic functional organization of second auditory cortical field (AII) of the cat. J. Neurophysiol. 1984;51:1284–1305. doi: 10.1152/jn.1984.51.6.1284.

- Schroeder CE, Foxe JJ. The timing and laminar profile of converging inputs to multisensory areas of the macaque neocortex. Brain Res. Cogn. Brain Res. 2002;14:187–198. doi: 10.1016/s0926-6410(02)00073-3.

- Schroeder CE, Foxe J. Multisensory contributions to low-level, ‘unisensory’ processing. Curr. Opin. Neurobiol. 2005;15:454–458. doi: 10.1016/j.conb.2005.06.008.

- Schroeder CE, Lindsley RW, Specht C, Marcovici A, Smiley JF, Javitt DC. Somatosensory input to auditory association cortex in the macaque monkey. J. Neurophysiol. 2001;85:1322–1327. doi: 10.1152/jn.2001.85.3.1322.

- Stecker GC, Mickey BJ, Macpherson EA, Middlebrooks JC. Spatial sensitivity in field PAF of cat auditory cortex. J. Neurophysiol. 2003;89:2889–2903. doi: 10.1152/jn.00980.2002.

- Stecker GC, Harrington IA, Macpherson EA, Middlebrooks JC. Spatial sensitivity in the dorsal zone (area DZ) of cat auditory cortex. J. Neurophysiol. 2005;94:1267–1280. doi: 10.1152/jn.00104.2005.

- Stein BE, Huneycutt WS, Meredith MA. Neurons and behavior: the same rules of multisensory integration apply. Brain Res. 1988;448:355–358. doi: 10.1016/0006-8993(88)91276-0.

- Tolias AS, Keliris GA, Smirnakis SM, Logothetis NK. Neurons in macaque area V4 acquire directional tuning after adaptation to motion stimuli. Nat. Neurosci. 2005;8:591–593. doi: 10.1038/nn1446.

- Treves A, Panzeri S. The upward bias in measures of information derived from limited data samples. Neural Comput. 1995;7:399–407.

- Wallace MT, Meredith MA, Stein BE. Integration of multiple sensory modalities in cat cortex. Exp. Brain Res. 1992;91:484–488. doi: 10.1007/BF00227844.

- Wallace MT, Wilkinson LK, Stein BE. Representation and integration of multiple sensory inputs in primate superior colliculus. J. Neurophysiol. 1996;76:1246–1266. doi: 10.1152/jn.1996.76.2.1246.

- Wallace MT, Ramachandran R, Stein BE. A revised view of sensory cortical parcellation. Proc. Natl. Acad. Sci. U. S. A. 2004;101:2167–2172. doi: 10.1073/pnas.0305697101.

- Welch RB, Warren DH. Intersensory interactions. In: Boff KR, Kaufman L, Thomas JP, editors. Handbook of Perception and Human Performance. Vol. 1: Sensory Processes and Perception. Wiley; New York: 1986. pp. 1–36.

- Woods TM, Recanzone GH. Visually induced plasticity of auditory spatial perception in macaques. Curr. Biol. 2004;14:1559–1564. doi: 10.1016/j.cub.2004.08.059.

- Xu L, Middlebrooks JC. Individual differences in external-ear transfer functions of cats. J. Acoust. Soc. Am. 2000;107:1451–1459. doi: 10.1121/1.428432.

- Xu L, Furukawa S, Middlebrooks JC. Sensitivity to sound-source elevation in nontonotopic auditory cortex. J. Neurophysiol. 1998;80:882–894. doi: 10.1152/jn.1998.80.2.882.