Abstract

Objective

Royall and colleagues identified a latent dementia phenotype, “δ”, reflecting the “cognitive correlates of functional status.” We sought to cross-validate and extend these findings in a large clinical case series of adults with and without dementia.

Method

A confirmatory factor analysis (CFA) model for δ was fit to National Alzheimer’s Coordinating Center data (n=26,068). Factor scores derived from δ were compared to the Clinical Dementia Rating Sum of Boxes (CDR-SB) and to clinically diagnosed dementia. A longitudinal parallel-process growth model compared changes in δ with changes in CDR-SB over six annual evaluations.

Results

The CFA model fit well; CFI=0.971, RMSEA=0.070. Factor scores derived from δ discriminated between demented and non-demented participants with an area under the curve of .96. The growth model also fit well, CFI=0.969, RMSEA=0.032.

Conclusions

The δ construct represents a novel approach to measuring dementia-related changes and has potential to improve cognitive assessment of neurodegenerative diseases.

Keywords: Dementia; Activities of Daily Living; Longitudinal Studies; Factor Analysis, Statistical; Validation Studies

In 2010, an estimated 4.7% of people aged 60+ had dementia (Sosa-Ortiz, Acosta-Castillo, & Prince, 2012), leading to a global economic burden estimated at $604 billion (Wimo, Jönsson, Bond, Prince, & Winblad, 2013). The greatest predictor of dementia is increasing age (Plassman et al., 2007); as the number of older adults in the population continues to grow (Sosa-Ortiz, Acosta-Castillo, & Prince, 2012), early identification of neurodegenerative disease is increasingly essential. The rise in dementia prevalence creates the need for parallel improvements in treatment and palliative care. Research investment in the early characterization and treatment of dementia is an important public health goal.

One challenge related to the early detection of dementia continues to be the identification of subtle cognitive changes in what is considered its “pre-clinical” phase. These subtle cognitive deficits may occur years before a clinical diagnosis is made, as biomarker evidence suggests that Alzheimer’s disease (AD) and related pathologies accumulate decades before clinical diagnosis (Albert et al., 2011; Howieson et al., 2008; Sperling et al., 2011). Detection of the earliest and most subtle cognitive changes should coincide with detection of the earliest and most subtle functional changes, which affect an individual’s ability to perform daily tasks in the natural environment (Chaytor & Schmitter-Edgecombe, 2003).

A promising area for additional research in this field involves the latent dementia construct referred to as “δ” by Royall and colleagues (Royall & Palmer, 2012; Royall, Palmer, & O’Bryant, 2012), which may represent a recent advancement toward the goal of detecting early and subtle cognitive changes and concomitant functional decline. This construct, which is derived from Spearman’s general intelligence factor g (Spearman, 1904), represents a further subdivision of Spearman’s g into the two independent factors g’ (g prime) and δ. Royall et al. describe the δ construct, the focus of the current investigation, as representing the “cognitive correlates of functional status” (Royall et al., 2007; Royall & Palmer, 2012); as such, g’ reflects the component of one’s general cognitive ability that is separate from the functional decline caused by neurodegenerative disease. The δ and g’ constructs differ only slightly. Though both are thought to represent the latent variables underlying cognitive task performance, only δ is theorized to underlie one’s ability to function independently and perform activities of daily living (ADL). According to Royall and colleagues, g’ explains the largest proportion of the variance in cognitive test results, but δ most strongly correlates with dementia severity (Royall & Palmer, 2012).

Most approaches to cognitive assessment of dementia focus on observed scores, which contain measurement error. In contrast, the latent variable modeling approach taken by Royall, which utilizes confirmatory factor analysis (CFA), can be used to quantify dementia severity in a way that is subject to estimation error but is free of measurement error (McArdle, 2009; Weston & Gore, 2006). Latent variable models that fit the data well can be interpreted as providing evidence for a latent trait underlying observed test scores. The model proposed by Royall and colleagues should not be dependent upon the use of specific cognitive tests to measure the construct of dementia, which has a categorical latent structure (Gavett & Stern, 2012).

If the model proposed by Royall and colleagues provides a valid representation of the latent dementia phenotype, then δ represents an important construct that could advance neurodegenerative disease research, especially as it pertains to the use of cognitive tests to aid in the detection of the earliest co-occurring changes in cognition and ADLs (Albert et al., 2011; Howieson et al., 2008; Sperling et al., 2011). To ensure that the δ construct is invariant to sample and assessment methods (i.e., cognitive test battery), Royall and colleagues’ model for δ requires validation in other samples and with different cognitive tests. The hypothesized model, which treats δ and g’ as independent constructs, has been validated in demented individuals (Royall, Palmer, & O’Bryant, 2012), cognitively healthy adults (Royall & Palmer, 2012) and in an ethnically diverse sample (Royall & Palmer, 2013). The goal of the current study is to further cross-validate the δ construct in a national clinical case series of older adult participants from the National Alzheimer’s Coordinating Center (NACC) Uniform Data Set (UDS) (Beekly, Ramos, & Lee, 2007; Morris et al., 2006; Weintraub et al., 2009), both cross-sectionally and longitudinally.

The UDS, maintained by NACC, contains longitudinal assessment data that reflect both the cognitive and functional changes that occur in healthy aging and as a result of AD, mild cognitive impairment (MCI), and other neurodegenerative diseases (Morris et al., 2006). As such, the UDS provides a unique opportunity for cross-validation of the δ construct through use of its large, diverse, and cognitively heterogeneous sample. In addition, the UDS includes a different battery of neuropsychological and functional tests than Royall’s initial δ validations (Royall & Palmer, 2012; Royall & Palmer, 2013; Royall, Palmer, & O’Bryant, 2012) and maintains approximately annual data relevant to ADLs and multiple cognitive domains, including attention, speed of processing, executive function, episodic memory, language, and behavioral symptoms (Weintraub et al., 2009).

In validating the δ construct cross-sectionally, we hypothesize that the Royall et al. (Royall & Palmer, 2012; Royall, Palmer, & O’Bryant, 2012) model, where δ represents the latent variable underlying both cognitive and functional status and g’ represents the latent variable underlying the component of cognitive ability not related to functional decline, will fit the baseline data from the NACC UDS well. In cross-validating the δ construct longitudinally, we hypothesize that participants’ latent dementia status, as measured by δ on an approximately annual basis, will change in conjunction with participants’ latent dementia status as measured approximately annually by the Clinical Dementia Rating Sum of Boxes (CDR-SB), one of the most commonly used methods of rating an individual’s cognitive and functional status in the context of a neurodegenerative disease (O’Bryant et al., 2010).

Method

We obtained archival data for this study through a request to NACC. The NACC database contains data from 34 past and present Alzheimer’s Disease Centers (ADC). Initial (baseline) and follow-up visit packets completed between September 2005 and August 2013 were used in the current analyses.

Participants

The total sample size of all initial participant visits was 29,004; we excluded participants whose primary language was not English (n = 2,398) and another 538 participants under the age of 50, leaving 26,068 baseline visits for analysis. From these data, we extracted the relevant cognitive variables, described in the Materials section below. We included all participants, with and without cognitive impairment, for whom data were available on the cognitive variables shown below. This inclusivity was preferred for two reasons. First, as discussed by Delis et al. (2003), latent variable analysis that does not include trait heterogeneity of the constructs being measured may produce misleading results (e.g., the factor structure for immediate and delayed memory may vary depending on whether healthy controls or patients with AD are studied). Second, because we sought to cross-validate the δ latent dementia phenotype, which is theorized to be a continuous trait, we sought to include individuals across the entire continuum of cognitive health.

Of those participants in the sample who were clinically diagnosed with dementia (n = 9,748; 37.4%), 6,335 (65.0%) received a primary diagnosis of probable AD, 953 (9.8%) possible AD, 1,047 (10.7%) frontotemporal dementia and its variants (e.g., primary progressive aphasia), 523 (5.4%) dementia with Lewy bodies, 183 (1.9%) probable or possible vascular dementia, and 707 (7.3%) other causes (e.g., medical illness, brain injury, Parkinson’s disease, alcohol abuse). A total of 5,431 (20.8%) participants were diagnosed with MCI. Of these, 2,081 (38.3%) were diagnosed with the amnestic single domain subtype, 2,271 (41.8%) with the amnestic multiple domain subtype, 676 (12.4%) with the non-amnestic single domain subtype, and 403 (7.4%) with the non-amnestic multiple domain subtype. There were 9,766 participants (37.5%) classified as cognitively normal.

Materials

The Mini-Mental State Examination (MMSE; Folstein, Folstein, & McHugh, 1975) is a 30-point global screening measure used in the assessment of neurodegenerative disease.

Semantic fluency (animals and vegetables) tests involve rapid verbal generativity of words belonging to a specific semantic category.

The Boston Naming Test (BNT 30-item version; Kaplan, Goodglass, & Weintraub, 1983; Goodglass, Kaplan, & Barresi, 2001) is a test of visual confrontation naming. This test, which uses the odd items from the 60-item BNT, measures naming ability.

Logical Memory, Immediate and Delayed (LM-I and LM-D, respectively; Wechsler, 1987) provide a measurement of contextual memory for stories, in both immediate and delayed (20-30 min) free recall formats.

Digit Span Forward and Backward (DS-F and DS-B; Wechsler, 1981) are used to measure auditory attention span and working memory. Participants are read a series of digits that progressively increase in span length and must repeat the digit sequence in either forward (DS-F) or reverse (DS-B) order.

Digit Symbol Coding (Wechsler, 1981) requires participants to fill in empty boxes with symbols below the numbers 1 through 9, based on a matching key. The number of correctly drawn matches completed in 90 seconds is used to measure visuomotor and graphomotor speed.

Trail Making Test Parts A and B (TMT-A and TMT-B; Reitan & Wolfson, 1993) involve rapid graphomotor sequencing of numbers (Part A) or numbers and letters (Part B) that have been pseudorandomly scattered across a page. TMT-A provides a measure of visual attention and processing speed, whereas TMT-B measures those abilities plus cognitive flexibility and maintenance of mental set.

The Functional Activities Questionnaire (FAQ; Pfeffer, Kurosaki, Harrah, Chance, & Filos, 1982) is an informant-report measure of a participant’s ability to be independent in completing instrumental activities of daily living (IADLs). The FAQ is commonly used in dementia evaluations due to its reliability, validity, sensitivity, and specificity (Juva et al., 1997; Olazarán, Mouronte, & Bermejo, 2005; Teng, Becker, Woo, Cummings, & Lu, 2010).

Procedure

We sought to validate the latent construct δ using both cross-sectional and longitudinal methods. We began by replicating Royall’s latent variable model for g’ and δ at the baseline visit and then generated factor scores for each participant based on the factor loadings obtained from the model. We examined the degree to which these factor scores predicted clinical outcomes both in cross-section (baseline visit) and over time.

For the TMT-A, TMT-B, and FAQ tests, we recoded the variables to match the direction of all other variables (higher scores being indicative of better performance). All three scores were recoded by subtracting the observed score from the maximum score (150 for TMT-A, 300 for TMT-B, and 30 for FAQ, respectively).
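The recoding step can be illustrated with a minimal sketch. The maximum scores come from the text; the function name `reverse_code` and the range check are illustrative assumptions, not part of the original analysis scripts.

```python
# Maximum scores used for reverse-coding, as stated in the text.
MAX_SCORES = {"TMT-A": 150, "TMT-B": 300, "FAQ": 30}


def reverse_code(test_name, observed):
    """Return (maximum - observed) so that higher values mean better performance.

    Hypothetical helper: the range check is an added safeguard, not something
    described in the source.
    """
    max_score = MAX_SCORES[test_name]
    if not 0 <= observed <= max_score:
        raise ValueError(f"{test_name} score {observed} is outside 0-{max_score}")
    return max_score - observed


# A TMT-A completion time of 40 seconds becomes 110 after recoding;
# an FAQ score of 0 (fully independent) becomes the best possible value, 30.
print(reverse_code("TMT-A", 40))  # 110
print(reverse_code("FAQ", 0))     # 30
```

After this transformation, all 12 indicators point in the same direction, which keeps the signs of the factor loadings comparable.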

For cross-sectional validation, we first excluded participants with missing data on any of the cognitive variables of interest (n = 12,030), leaving 14,038 cases for the initial cross-sectional validation procedures. We randomly divided this sample into a training set and a validation set of equal size (each n = 7,019). We fit the CFA model (Figure 1) to the training set and then used the parameter estimates derived from the training set to examine the model’s fit to the validation set. Cross-sectional validation procedures for δ are described in the Data Analysis section below.
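A random split into equal halves can be sketched as follows. This only illustrates the idea: the seed value, the function name `split_half`, and the use of integer case identifiers are assumptions, and the source does not describe how the randomization was implemented.

```python
import random


def split_half(case_ids, seed=2013):
    """Shuffle case identifiers and split them into two equal-sized halves.

    The seed is a hypothetical value chosen only to make the sketch reproducible.
    """
    ids = list(case_ids)
    rng = random.Random(seed)
    rng.shuffle(ids)
    half = len(ids) // 2
    return ids[:half], ids[half:]


# 14,038 complete cases yield two non-overlapping sets of 7,019 each.
training, validation = split_half(range(14038))
print(len(training), len(validation))  # 7019 7019
```

The model would then be estimated on `training` only, and its parameter estimates held fixed when evaluating fit in `validation`.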

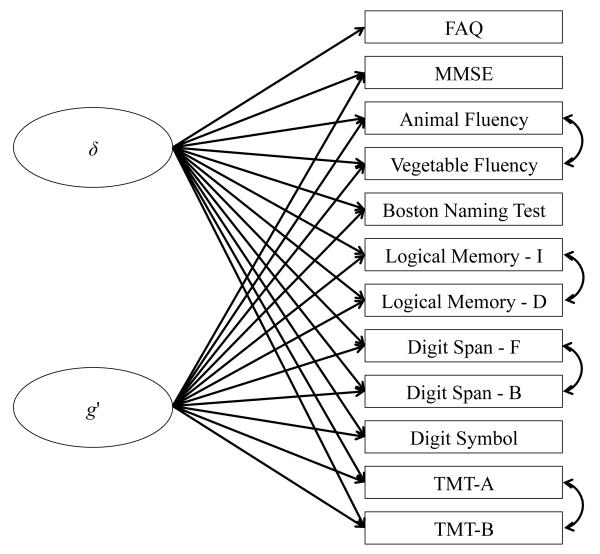

Figure 1.

Measurement model proposed by Royall et al. [9] and validated in the current study using confirmatory factor analysis. δ is the latent variable representing the dementia phenotype and g’ is the latent variable representing the portion of general cognitive ability that is independent of dementia status. These latent variables are modeled as uncorrelated factors. FAQ = Functional Activities Questionnaire; MMSE = Mini-Mental State Examination; Logical Memory - I = Immediate Recall of Logical Memory Story A; Logical Memory - D = Delayed Recall of Logical Memory Story A; Digit Span - F = Forward Digit Span; Digit Span - B = Backward Digit Span; TMT-A = Trail Making Test part A; TMT-B = Trail Making Test part B.

For longitudinal validation, we used all available data from participants with at least one visit (baseline), with one exception. We excluded any participant who did not have an FAQ score available at a given visit, because the derivation of δ depends on one or more variables measuring ADLs. For all approximately annual visits (up to a maximum of six visits), we derived factor scores for δ based on the factor loadings obtained during the cross-sectional analyses. Longitudinal analysis was based on a sample of 18,297 participants that included 11,211 women and 7,086 men, with an average age at baseline of 73.39 (SD = 9.66). Additional demographic characteristics of the longitudinal sample are provided in Table 1. Across the six visits, the sample included 18,297 visits at Time 1, 12,439 visits at Time 2, 8,728 visits at Time 3, 6,179 visits at Time 4, 4,230 visits at Time 5, and 2,783 visits at Time 6. Of this sample, a total of 8,691 (47.5%) participants were clinically diagnosed with dementia during at least one visit.

Table 1.

Demographic Characteristics of the Cross-Sectional and Longitudinal Samples.

| Characteristic | Total Sample (CS) | Training Sample (CS) | Validation Sample (CS) | Longitudinal Sampleᵃ |
|---|---|---|---|---|
| n | 14,038 | 7,019 | 7,019 | 18,297 |
| Age, years; M (SD) | 73.09 (9.35) | 73.05 (9.36) | 73.14 (9.34) | 73.39 (9.66) |
| Education, years; M (SD) | 15.78 (6.24) | 15.75 (5.96) | 15.82 (6.51) | 15.71 (7.08) |
| Sex, Female; n (%) | 8,743 (62.3%) | 4,390 (62.5%) | 4,353 (62.0%) | 11,211 (61.3%) |
| Race, Caucasian; n (%) | 11,525 (82.1%) | 5,778 (82.3%) | 5,747 (81.9%) | 15,067 (82.3%) |
| Ethnicity, Hispanic; n (%) | 324 (2.3%) | 167 (2.4%) | 157 (2.2%) | 414 (2.3%) |
| Dementia, n (%) | 2,960 (21.1%) | 1,500 (21.4%) | 1,460 (20.8%) | 6,126 (33.5%) |
| Probable AD, n (%) | 1,968 (14.0%) | 971 (13.8%) | 997 (14.2%) | 3,878 (21.2%) |
| Possible AD, n (%) | 297 (2.1%) | 177 (2.5%) | 120 (1.7%) | 600 (3.3%) |
| MCI, n (%) | 2,991 (21.3%) | 1,532 (21.8%) | 1,459 (20.8%) | 3,443 (18.8%) |
| Cognitively Healthy, n (%) | 7,364 (52.5%) | 3,651 (52.0%) | 3,713 (52.9%) | 7,939 (43.4%) |

Note. CS = cross-sectional; n = sample size; M = mean; SD = standard deviation; MCI = mild cognitive impairment; AD = Alzheimer’s disease.

ᵃ Data from baseline visit.

Data analysis

All analyses, with the exception of the latent variable modeling, were performed in R version 3.0.2 (R Core Team, 2013). The work of Royall and colleagues (2012) was used to generate a hypothesized CFA model that included the 12 observed test scores described above (see Figure 1). This two-factor model included the latent constructs g’ and δ. The g’ construct is measured by all of the above variables with the exception of the FAQ. In contrast, the δ construct is measured by all of the above variables, including the FAQ. We hypothesized the presence of method effects between several pairs of variables: animal and vegetable fluency, LM-I and LM-D, DS-F and DS-B, and TMT-A and TMT-B. In other words, we assumed that scores on these four pairs of variables would covary for reasons other than a person’s standing on the g’ and δ constructs because of methodological similarities between the pairs of tests. Therefore, we added residual correlation terms to the hypothesized model in order to account for these method effects (Figure 1).
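As a compact restatement, the hypothesized measurement structure can be written out in equation form. The notation ($x_{ij}$, $\nu_j$, $\lambda_j$, $\varepsilon_{ij}$) is assumed here for illustration and is not taken from Royall and colleagues:

$$
x_{ij} = \nu_j + \lambda_j^{(\delta)}\,\delta_i + \lambda_j^{(g')}\,g'_i + \varepsilon_{ij}, \qquad j = 1, \dots, 12,
$$

$$
\lambda_{\mathrm{FAQ}}^{(g')} = 0, \qquad \operatorname{Cov}(\delta, g') = 0 .
$$

Here $x_{ij}$ is participant $i$’s score on indicator $j$, $\nu_j$ is an indicator intercept, and the two $\lambda_j$ terms are the loadings on δ and g’. The residuals $\varepsilon_{ij}$ are treated as mutually uncorrelated except within the four method-effect pairs (animal and vegetable fluency, LM-I and LM-D, DS-F and DS-B, TMT-A and TMT-B), whose residual covariances are freely estimated.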

Model fit was estimated on the training sample using a robust maximum likelihood estimator in Mplus version 6.11 (Muthén & Muthén, 1998-2010). We fixed the variance of the latent variables to 1.0 to scale the resulting factor scores as z-scores (M = 0.0, SD = 1.0). The final parameter estimates from the CFA model fit to the training data were then used to assess the model’s fit to the validation sample.

After the identification of the appropriate factor loadings for each parameter in the model, we estimated latent δ factor scores (M = 0.0, SD = 1.0) for each participant and validated these factor scores against two external criteria: the CDR-SB (O’Bryant et al., 2010) and the absence or presence of a clinical diagnosis of dementia as made by either a single clinician or by consensus diagnosis at each ADC and reported in the UDS data. We used Spearman’s ρ to evaluate the strength of the association between δ factor scores and CDR-SB. Using the OptimalCutpoints package in R, we employed receiver operating characteristic (ROC) curve analysis to determine the ability of the δ factor scores to discriminate between individuals with and without a clinical diagnosis of dementia. Based on ROC curve analysis, we identified a cutoff score that maximizes diagnostic efficiency (Feinstein, 1975; Greiner, Pfeiffer, & Smith, 2000).
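The logic of the ROC analysis can be sketched in plain Python. This is not the OptimalCutpoints implementation: it assumes that "diagnostic efficiency" means the overall proportion of correct classifications, that lower δ scores indicate dementia, and it uses a small invented data set purely for illustration.

```python
def auc_lower_is_positive(scores, has_dementia):
    """AUC as the probability that a dementia case scores below a non-case.

    Ties between a case and a non-case count as half a concordant pair.
    """
    cases = [s for s, d in zip(scores, has_dementia) if d]
    controls = [s for s, d in zip(scores, has_dementia) if not d]
    concordant = 0.0
    for c in cases:
        for k in controls:
            if c < k:
                concordant += 1.0
            elif c == k:
                concordant += 0.5
    return concordant / (len(cases) * len(controls))


def best_cutoff(scores, has_dementia):
    """Return (cutoff, accuracy) for the cutoff with the highest overall accuracy.

    A participant is classified as having dementia when score <= cutoff.
    When several cutoffs tie, the lowest one is kept.
    """
    best = (None, -1.0)
    for cutoff in sorted(set(scores)):
        correct = sum((s <= cutoff) == bool(d) for s, d in zip(scores, has_dementia))
        accuracy = correct / len(scores)
        if accuracy > best[1]:
            best = (cutoff, accuracy)
    return best


# Invented toy data (z-scaled factor scores; 1 = clinical dementia diagnosis).
toy_scores = [-2.1, -1.4, -0.9, -0.3, 0.1, 0.4, 0.8, 1.2]
toy_dementia = [1, 1, 1, 0, 1, 0, 0, 0]

print(auc_lower_is_positive(toy_scores, toy_dementia))  # 0.9375
print(best_cutoff(toy_scores, toy_dementia))            # (-0.9, 0.875)
```

The pairwise AUC calculation is quadratic in sample size and is shown only because it makes the definition transparent; a rank-based formula would be preferable for samples as large as the one analyzed here.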

For additional cross-sectional validation, we compared the fit of Royall’s model (containing both g’ and δ) to a similar model containing only one factor, Spearman’s g, from which g’ and δ are derived. Based on this single-factor model of Spearman’s g, we also generated factor scores and compared them with the factor scores derived from Royall’s δ. In particular, we hypothesized that FAQ and CDR-SB scores would be more strongly associated with δ factor scores than with Spearman’s g factor scores.

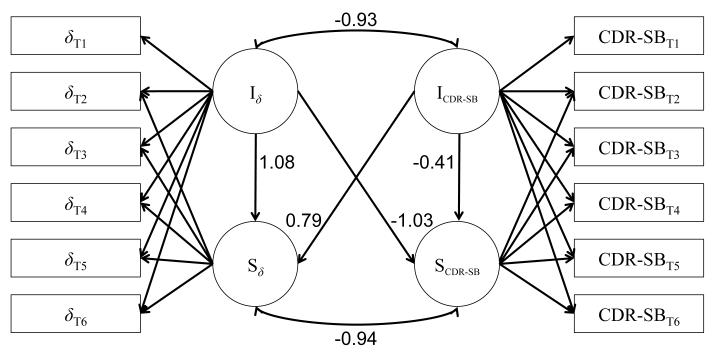

For longitudinal validation, we used the factor scores for δ in a parallel process growth model, shown in Figure 2, which compared the latent intercepts and slopes for δ factor scores with those derived from the CDR-SB.

Figure 2.

Parallel process growth model examining the relationship between latent intercepts (I) and slopes (S) for both δ, the latent variable representing the dementia phenotype, and the Clinical Dementia Rating Scale Sum of Boxes (CDR-SB) across six approximately annual evaluations (T1 - T6). Numbers associated with each path represent standardized parameter estimates. δT1 = observed factor score for δ at time 1; CDR-SBT1 = observed CDR-SB score at time 1; Iδ = latent intercept of δ; Sδ = latent slope of δ; ICDR-SB = latent intercept of CDR-SB; SCDR-SB = latent slope of CDR-SB.
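For readers less familiar with this type of model, a linear parallel process growth model can be sketched in equation form. The notation is assumed for illustration, and the time scores $\lambda_t = t - 1$ are a conventional choice for equally spaced visits that the source does not state explicitly:

$$
\delta_{it} = I_{\delta,i} + \lambda_t\,S_{\delta,i} + e_{it}, \qquad
\mathrm{CDR\text{-}SB}_{it} = I_{\mathrm{CDR},i} + \lambda_t\,S_{\mathrm{CDR},i} + u_{it}, \qquad t = 1, \dots, 6 .
$$

Each participant $i$ has a latent intercept and a latent slope for each process. The "parallel process" part of the model lies in the relationships estimated among the four growth factors $I_{\delta}$, $S_{\delta}$, $I_{\mathrm{CDR}}$, and $S_{\mathrm{CDR}}$, as depicted in Figure 2.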

For both cross-sectional and longitudinal validation, we evaluated model fit using the comparative fit index (CFI), Tucker-Lewis Index (TLI), root mean square error of approximation (RMSEA) and its 90% confidence intervals, and the standardized root mean square residual (SRMR). CFI and TLI values of 0.95 and above are suggestive of good fit, as are RMSEA values of 0.06 and lower and SRMR values of 0.08 and lower (Hu & Bentler, 1999).
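The cutoffs above can be applied mechanically, as in the following sketch. The function name `check_fit` and the example values are hypothetical; only the thresholds are taken from the text.

```python
# Conventional cutoffs cited in the text (Hu & Bentler, 1999).
# ">=" means higher is better; "<=" means lower is better.
CUTOFFS = {
    "CFI": (">=", 0.95),
    "TLI": (">=", 0.95),
    "RMSEA": ("<=", 0.06),
    "SRMR": ("<=", 0.08),
}


def check_fit(indices):
    """Return a dict mapping each fit index to True if it meets its cutoff."""
    results = {}
    for name, value in indices.items():
        direction, cutoff = CUTOFFS[name]
        results[name] = value >= cutoff if direction == ">=" else value <= cutoff
    return results


# Hypothetical fit indices, invented for illustration only.
example = {"CFI": 0.96, "TLI": 0.94, "RMSEA": 0.05, "SRMR": 0.09}
print(check_fit(example))
# {'CFI': True, 'TLI': False, 'RMSEA': True, 'SRMR': False}
```

These thresholds are guidelines rather than strict tests, so a model that misses one cutoff while meeting the others is usually judged on the overall pattern of indices.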

Results

Cross-sectional validation

The demographic characteristics of the training sample and the validation sample are provided in Table 1. The fit of the CFA model to the training sample was generally good: CFI = 0.971, TLI = 0.952, RMSEA = 0.070 (90% CI [0.067, 0.073]), SRMR = 0.025. The standardized parameter estimates obtained from the model fit to the training data are presented in Table 2. This model held up to cross-validation in the validation sample, yielding model fit indices that were essentially equivalent or slightly superior to those found in the training sample: CFI = 0.972, TLI = 0.980, RMSEA = 0.045 (90% CI [0.043, 0.047]), SRMR = 0.060.

Table 2.

Standardized Parameter Estimates for the Cross-sectional CFA Model Depicted in Figure 1

| Variable | δ: β | δ: SEE | δ: β/SEE | δ: p | g’: β | g’: SEE | g’: β/SEE | g’: p |
|---|---|---|---|---|---|---|---|---|
| MMSE | 0.87 | 0.007 | 123.94 | < .001 | −0.00 | 0.019 | −0.20 | 0.832 |
| Animal Fluency | 0.68 | 0.011 | 64.85 | < .001 | 0.20 | 0.026 | 7.57 | < .001 |
| Vegetable Fluency | 0.68 | 0.009 | 79.38 | < .001 | 0.14 | 0.021 | 6.74 | < .001 |
| BNT | 0.69 | 0.012 | 56.93 | < .001 | 0.08 | 0.032 | 2.42 | < .001 |
| LM-I | 0.75 | 0.007 | 105.65 | < .001 | 0.04 | 0.023 | 1.55 | 0.299 |
| LM-D | 0.73 | 0.007 | 104.39 | < .001 | 0.03 | 0.022 | 1.54 | 0.294 |
| DS-F | 0.39 | 0.013 | 29.06 | < .001 | 0.12 | 0.026 | 4.50 | < .001 |
| DS-B | 0.50 | 0.012 | 42.96 | < .001 | 0.21 | 0.024 | 8.94 | < .001 |
| Digit Symbol | 0.68 | 0.013 | 51.06 | < .001 | 0.58 | 0.020 | 28.24 | < .001 |
| TMT-A | 0.60 | 0.015 | 40.25 | < .001 | 0.43 | 0.025 | 17.07 | < .001 |
| TMT-B | 0.74 | 0.012 | 60.69 | < .001 | 0.41 | 0.028 | 14.67 | < .001 |
| FAQ | 0.77 | 0.009 | 85.43 | < .001 | -- | -- | -- | -- |

Note. β = standardized parameter estimate; SEE = standard error of the estimate; MMSE = Mini-Mental State Examination; BNT = Boston Naming Test; LM-I = Immediate Recall of Logical Memory; LM-D = Delayed Recall of Logical Memory; DS-F = Digit Span Forward; DS-B = Digit Span Backward; TMT-A = Trail Making Test part A; TMT-B = Trail Making Test part B; FAQ = Functional Activities Questionnaire.

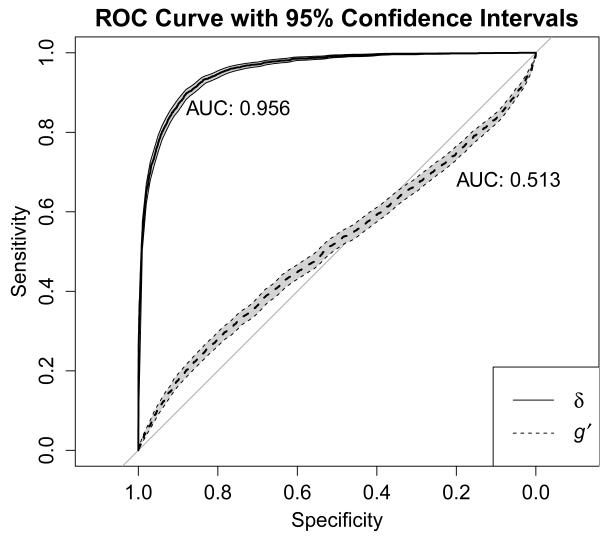

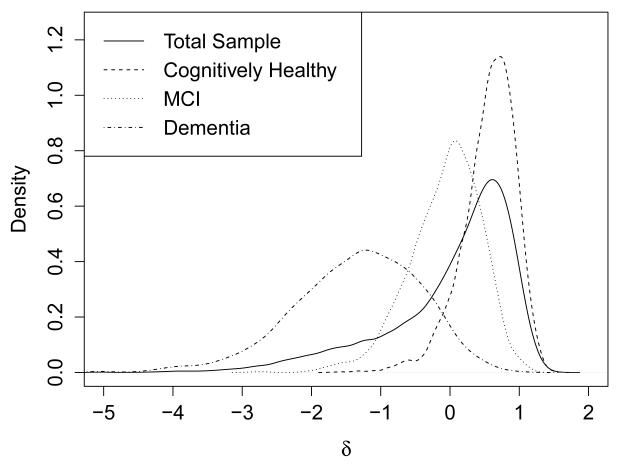

In the full sample, the average factor score for δ in participants with a clinical diagnosis of dementia was −1.34 (SD = 0.91), whereas in individuals without a diagnosis of dementia, the average δ factor score was 0.37 (SD = 0.52), which was a statistically significant difference, t (df = 3484.12) = −97.87, p < .001, d = −2.75, 95% CI [−2.80, −2.70]. The δ factor scores derived from this model demonstrated a strong negative correlation with CDR-SB scores (ρ = −.74, p < .001). In contrast, the correlation between g’ and CDR-SB was much smaller (ρ = −.07, p < .001). ROC curve analysis (Figure 3) revealed that using δ factor scores to differentiate between all-cause dementia and no dementia (including MCI) produced an estimated area under the curve (AUC) of .96, 95% CI [.95, .96], which was markedly superior to that produced by g’ (AUC = .51, 95% CI [.50, .53]). The factor score cutoff that maximized diagnostic efficiency was δ = −0.765, which yielded a sensitivity of .967 (95% CI [.964, .970]) and a specificity of .719 (95% CI [.702, .735]) for discriminating between those with all-cause dementia and without dementia (including MCI). Using the base rate of dementia in the current sample (21.09%), the above sensitivity and specificity values were associated with positive and negative predictive values of .928 (95% CI [.923, .933]) and .854 (95% CI [.840, .867]), respectively. The distribution of δ factor scores in the full sample, as well as the same distribution broken down by participants diagnosed as cognitively normal, with dementia, and with MCI, is shown in Figure 4.

Figure 3.

Cross-sectional (at baseline) receiver operating characteristic curve with 95% confidence intervals (shaded in gray) demonstrating the ability of factor scores based on δ and g’ to discriminate between participants with and without clinically-diagnosed dementia.

Figure 4.

Cross-sectional (at baseline) distribution of δ factor scores in the total sample and in three different diagnostic groups: the cognitively healthy group, the group diagnosed with mild cognitive impairment (MCI), and the group diagnosed with dementia. The x-axis represents factor scores for δ, scaled as a z-score (M = 0, SD = 1).

Using the same data, a single-factor model for Spearman’s g (CFI = 0.954, TLI = 0.937, RMSEA = 0.083 [90% CI 0.080, 0.086], SRMR = 0.034) did not fit the data as well as Royall’s model. Factor scores for δ were more strongly related to FAQ and CDR-SB scores than were factor scores for g’ or Spearman’s g (Table 3). An ordinal logistic regression using both g’ and δ factor scores to predict CDR-SB scores revealed that δ factor scores (Odds Ratio [OR] = 0.069, 95% CI [0.066, 0.073]) were stronger predictors of CDR-SB than g’ factor scores (OR = 1.34, 95% CI [1.29, 1.40]). Similarly, Spearman’s g factor scores (OR = 0.138, 95% CI [0.131, 0.144]) were weaker predictors of CDR-SB rating than δ factor scores.

Table 3.

Proportion of variance in FAQ and CDR-SB test scores accounted for by latent variable factor scores for δ, g’, and Spearman’s g.

| Latent variable | FAQ | CDR-SB |
|---|---|---|
| δ | 65.0% | 66.3% |
| g’ | 0.00% | 0.00% |
| Spearman’s g | 46.9% | 50.5% |

Note. FAQ = Functional Activities Questionnaire; CDR-SB = Clinical Dementia Rating Sum of Boxes.

Longitudinal validation

Individual growth curve models for δ and CDR-SB were first tested to ensure that change in each variable could be modeled with latent intercepts and (linear) slopes. The growth models fit well for both δ (CFI = 0.969, TLI = 0.971, RMSEA = 0.031 (90% CI [0.028, 0.034]), SRMR = 0.058) and CDR-SB (CFI = 0.967, TLI = 0.969, RMSEA = 0.037 (90% CI [0.034, 0.040]), SRMR = 0.056) individually. We then combined the two growth curve models into a parallel process growth model, which also fit the data well, CFI = 0.969, TLI = 0.968, RMSEA = 0.032 (90% CI [0.030, 0.033]), SRMR = 0.065. Standardized parameter estimates taken from the parallel process growth model are shown in Figure 2.

Discussion

The current investigation sought to cross-validate and extend the Royall et al. (2012) model for δ, a variable representing the latent dementia phenotype, both cross-sectionally and longitudinally in a large national clinical case series of older adults. The results of this study provide strong support for this model, suggesting that the latent variable δ is invariant not only to sample, but also to the cognitive and functional assessment measures used as indicators of dementia.

Prior to this study, the δ construct had been validated by Royall’s group in samples that included participants with AD, cognitively healthy participants, and an ethnically diverse sample (i.e., Mexican-Americans) using a variety of different measures of cognition and ADLs (Royall & Palmer, 2012; Royall & Palmer, 2013; Royall, Palmer, & O’Bryant, 2012). In the current study, we used the FAQ as the functional indicator for δ and a different (although partially overlapping) battery of tests as indicators of cognitive status related to δ and g’. Ours is the first known study performed outside of Royall’s group to provide converging evidence for the contention that δ is invariant to sample as well as to the cognitive and functional tests used in its construction. Furthermore, the sample used in the current study included participants not only with dementia due to AD but also with dementia due to a number of other neurodegenerative diseases (e.g., frontotemporal dementia, dementia with Lewy bodies, vascular dementia). This heterogeneity in dementia etiology suggests that Royall’s model for δ is not specific to AD.

Our results also provide converging evidence that δ is an excellent marker of clinical dementia. Factor scores based on δ correlate highly with the CDR-SB, both cross-sectionally and longitudinally, and discriminate between individuals with and without clinically diagnosed dementia. Royall et al. (2012; 2013) reported strong correlations between δ factor scores and CDR-SB scores, similar to the correlation of ρ = −.74 that we report here. We also found that the use of δ factor scores to identify clinically diagnosed dementia was associated with an AUC of .96 (95% CI [.95, .96]), which is similar to the AUCs of .942, .96, and .93 reported by Royall et al. (2012; 2013) and reflects an excellent ability to correctly classify individuals with and without dementia. Similarly, Royall, Palmer, & O’Bryant (2012) reported an AUC for g’ of .79; our results (AUCg’ = .51, 95% CI [.50, .53]) also support the claim that δ is superior to g’ for use in identifying clinical dementia. Our analyses identified a δ factor score of −0.765 as the optimal cutpoint for differentiating between all-cause clinical dementia and cases without dementia (including MCI). This cutoff score was associated with a positive predictive power of .928 (95% CI [.923, .933]) and a negative predictive power of .854 (95% CI [.840, .867]). Readers are reminded, however, that positive and negative predictive power are dependent upon the base rate of the condition, which was 21.09% in this study’s ROC curve analysis. In the general population of older adults ages 60+, the base rate of dementia is estimated to be 4.7% (Sosa-Ortiz, Acosta-Castillo, & Prince, 2012), which yields positive and negative predictive power estimates of .145 and .998, respectively. Along those same lines, different cutoff scores for δ will also affect classification accuracy, with higher cutoffs producing better sensitivity (and negative predictive power) at the expense of specificity (and positive predictive power), and vice versa (e.g., Gavett et al., 2012; Glaros & Kline, 1988).
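The dependence of predictive power on base rate follows directly from Bayes’ theorem and can be checked with a short sketch. The function name `predictive_values` is illustrative. The calculation treats dementia as the positive class and takes the reported sensitivity (.967) and specificity (.719) at face value.

```python
def predictive_values(sensitivity, specificity, base_rate):
    """Return (positive predictive value, negative predictive value).

    Works with expected proportions of true/false positives and negatives
    in a population where the condition has the given base rate.
    """
    true_pos = sensitivity * base_rate
    false_neg = (1 - sensitivity) * base_rate
    true_neg = specificity * (1 - base_rate)
    false_pos = (1 - specificity) * (1 - base_rate)
    ppv = true_pos / (true_pos + false_pos)
    npv = true_neg / (true_neg + false_neg)
    return ppv, npv


# General-population base rate of 4.7% among adults aged 60+.
ppv, npv = predictive_values(0.967, 0.719, 0.047)
print(round(ppv, 3), round(npv, 3))  # 0.145 0.998
```

At a 4.7% base rate, most positive classifications are false positives even with high sensitivity, whereas a negative classification is almost always correct.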

An examination of the parameter estimates produced by the model under investigation reveals that all of the cognitive tests, as well as the FAQ, are useful indicators of δ (Table 2). In contrast, the MMSE and the two Logical Memory subtests (immediate and delayed recall) did not produce significant loadings on the g’ factor. This pattern of results suggests that the MMSE and Logical Memory subtests are distinct from other cognitive tests in the UDS, in that their contributions are largely made as indicators of dementia severity and not as indicators of global cognitive ability. These findings are consistent with other data suggesting that, because of its substantial ceiling effects, the MMSE is most informative for individuals with moderate cognitive impairment (Mungas & Reed, 2000; Pendlebury et al., 2012), and also that episodic memory is one of the most sensitive markers of Alzheimer’s disease and other dementias (Butters et al., 1987; Rabin et al., 2009).

The cross-sectional factor scores estimated from δ showed a clear pattern amongst cognitively healthy participants, those with clinically diagnosed MCI, and those with clinically diagnosed dementia (Figure 4). Of note is the fact that the participants with a clinical diagnosis of MCI have δ factor scores that are in an intermediate position between the factor scores in the cognitively healthy and dementia groups. Given that δ is theorized to represent the cognitive correlates of functional status, and that a diagnosis of MCI hinges upon a distinction between “significant” and non-significant functional changes (Albert et al., 2011; Winblad et al., 2004), it is no surprise to see such a distribution amongst the MCI factor scores. Because there is no operational definition of functional impairment that objectively distinguishes dementia from MCI, the ADCs in NACC are likely to have used slightly different thresholds for identifying functional impairment across each center. Royall et al. (2007) have discussed the relative advantages of IADLs over basic ADLs in identifying dementia; it should be noted that the FAQ, which was used as the functional indicator of δ in the current study, addresses only IADLs and not basic ADLs. Further research into δ, including how it is constructed (e.g., using measures of IADLs, basic ADLs, or both) and its ability to predict incident dementia, may help establish an objective threshold that distinguishes neuropathologically healthy people from those with neurodegenerative disease, thus decreasing the importance of the MCI diagnosis as a marker of pre-clinical dementia. Future research that adds biomarker data as indicators of the δ factor may be advantageous as well.

Longitudinal analyses used latent intercepts and slopes to model concomitant changes in δ and the CDR-SB across six approximately annual visits. This parallel-process growth model tested the hypothesis that the latent process driving changes in CDR-SB scores occurs in conjunction with the latent process driving changes in δ factor scores, and that the latent intercepts and slopes directly affect one another. Because dementia-causing neuropathological changes are believed to produce clinical changes in both CDR-SB scores and δ factor scores, the current results support the validity of δ as an indicator of the co-occurring cognitive and functional decline that is characteristic of dementia. These longitudinal results are similar to past findings indicating that δ is strongly related to three-year changes on a number of cognitive outcome variables (Royall & Palmer, 2012).
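
A parallel-process growth model of this kind can be sketched in equations as follows. The notation (η for growth factors, ε for residuals, Ψ for their covariance matrix) is introduced here for illustration, and linear time coding across the six visits is an assumption rather than a detail confirmed by the text above.

```latex
% i indexes participants; t = 0, ..., 5 indexes the six approximately annual visits
% Linear time scores are an assumption made for this sketch
\begin{align}
\delta_{it} &= \eta^{\delta}_{0i} + t\,\eta^{\delta}_{1i} + \epsilon^{\delta}_{it}, \\
\mathrm{CDR}_{it} &= \eta^{C}_{0i} + t\,\eta^{C}_{1i} + \epsilon^{C}_{it}, \\
\left(\eta^{\delta}_{0i},\ \eta^{\delta}_{1i},\ \eta^{C}_{0i},\ \eta^{C}_{1i}\right)^{\top}
  &\sim \left(\boldsymbol{\alpha},\ \boldsymbol{\Psi}\right).
\end{align}
```

Here the intercepts (η₀) capture each participant’s starting level and the slopes (η₁) capture annual change. The off-diagonal elements of Ψ express how the two processes relate; because the model is described as having intercepts and slopes that directly affect one another, some of these relations may instead be parameterized as regressions among the growth factors.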

The following study limitations should be noted. The results of the ROC curve analysis may be biased by the fact that the cognitive and functional tests used to construct δ were the same tests available to ADC researchers when applying clinical diagnostic criteria to identify participants with dementia. Although the participants’ standing on the δ construct was not known to ADC researchers at the time of clinical diagnosis, this lack of independence may create some criterion contamination. However, because the same limitation applies to the g’ factor scores, the superiority of the δ factor scores (AUC = .96) over the g’ factor scores (AUC = .51) in dementia classification suggests a clear advantage of partitioning Spearman’s g into the components of cognitive functioning that are (δ) and are not (g’) related to functional status. An additional limitation is that complete data were not available for all participants across all six approximately annual time points, either because of attrition or because, at the time this research was conducted, some participants had not been enrolled in the UDS for six years. Attrition is likely to produce missing data patterns that are not random but instead vary systematically with participants’ standing on the δ construct.
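
The AUC values compared above have a simple interpretation: the probability that a randomly chosen participant with dementia has a more impaired score than a randomly chosen participant without dementia. The sketch below illustrates that computation on simulated data only. The function name `auc_mann_whitney`, the group sizes, the group means, and the convention that higher scores mean greater impairment are all assumptions made for illustration; none of the numbers are taken from the NACC data or from the analysis code used in this study.

```python
import random


def auc_mann_whitney(scores_pos, scores_neg):
    """Return the AUC as P(pos > neg) + 0.5 * P(pos == neg).

    Equivalent to the Mann-Whitney U statistic divided by the number
    of (positive, negative) pairs.
    """
    if not scores_pos or not scores_neg:
        raise ValueError("both groups must contain at least one score")
    wins = 0.0
    for p in scores_pos:
        for n in scores_neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))


# Simulated (hypothetical) factor scores; higher = more impaired by assumption.
rng = random.Random(0)
n_per_group = 500

# A "delta-like" score whose group means are well separated.
delta_dementia = [rng.gauss(2.5, 1.0) for _ in range(n_per_group)]
delta_healthy = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]

# A "g'-like" score with identical distributions in both groups.
gprime_dementia = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
gprime_healthy = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]

auc_delta_like = auc_mann_whitney(delta_dementia, delta_healthy)
auc_gprime_like = auc_mann_whitney(gprime_dementia, gprime_healthy)

print(f"delta-like AUC: {auc_delta_like:.2f}")
print(f"g'-like AUC:    {auc_gprime_like:.2f}")
```

An AUC near .50 means the score orders the two groups no better than chance, which is why a value such as .51 indicates essentially no discrimination while a value such as .96 indicates near-complete separation.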

Because dementia is primarily a disease of the elderly, it is often difficult to determine whether early subtle cognitive changes are the result of a neurodegenerative disease or a reflection of normal aging. The construct δ, with its emphasis on the joint changes that occur in both cognitive skills and IADLs, may provide a novel target for future approaches to detecting the earliest signs of neurodegenerative disease via neuropsychological assessment of the elderly. As the current results demonstrate, δ can be applied to the longitudinal measurement of cognitive change, which suggests that it has considerable potential as an outcome measure in studies seeking to understand how variables such as remote traumatic brain injury affect trajectories of co-occurring cognitive and functional change in elderly individuals. Additionally, because it jointly measures cognitive and functional capacity, δ may be especially useful as an outcome variable for randomized clinical trials investigating neurodegenerative disease treatments. Future research is needed to better characterize the longitudinal changes in δ across various populations, both with and without neurodegenerative disease, and to determine the utility of δ in predicting conversion to dementia.

Acknowledgments

The NACC database is funded by NIA Grant U01 AG016976.

Footnotes

The authors have no conflicts of interest to disclose.

References

- Albert MS, DeKosky ST, Dickson D, Dubois B, Feldman HH, Fox NC, Phelps CH. The diagnosis of mild cognitive impairment due to Alzheimer’s disease: recommendations from the National Institute on Aging-Alzheimer’s Association workgroups on diagnostic guidelines for Alzheimer’s disease. Alzheimer’s & Dementia. 2011;7:270–279. doi: 10.1016/j.jalz.2011.03.008.

- Beekly D, Ramos E, Lee W. The National Alzheimer’s Coordinating Center (NACC) database: The uniform data set. Alzheimer Disease & Associated Disorders. 2007;21:249–258. doi: 10.1097/WAD.0b013e318142774e.

- Butters N, Granholm E, Salmon DP, Grant I, Wolfe J. Episodic and semantic memory: a comparison of amnesic and demented patients. Journal of Clinical and Experimental Neuropsychology. 1987;9:479–497. doi: 10.1080/01688638708410764.

- Chaytor N, Schmitter-Edgecombe M. The ecological validity of neuropsychological tests: A review of the literature on everyday cognitive skills. Neuropsychology Review. 2003;13:181–197. doi: 10.1023/b:nerv.0000009483.91468.fb.

- Delis DC, Jacobson M, Bondi MW, Hamilton JM, Salmon DP. The myth of testing construct validity using factor analysis or correlations with normal or mixed clinical populations: Lessons from memory assessment. Journal of the International Neuropsychological Society. 2003;9:936–946. doi: 10.1017/S1355617703960139.

- Feinstein SH. The accuracy of diver sound localization by pointing. Undersea Biomedical Research. 1975;2:173–184.

- Folstein MF, Folstein SE, McHugh PR. “Mini-mental state”. A practical method for grading the cognitive state of patients for the clinician. Journal of Psychiatric Research. 1975;12:189–198. doi: 10.1016/0022-3956(75)90026-6.

- Gavett BE, Lou KR, Daneshvar DH, Green RC, Jefferson AL, Stern RA. Diagnostic accuracy statistics for seven Neuropsychological Assessment Battery (NAB) test variables in the diagnosis of Alzheimer’s disease. Applied Neuropsychology: Adult. 2012;19:108–115. doi: 10.1080/09084282.2011.643947.

- Gavett BE, Stern RA. Dementia has a categorical, not dimensional, latent structure. Psychology and Aging. 2012;27:791–797. doi: 10.1037/a0027687.

- Glaros AG, Kline RB. Understanding the accuracy of tests with cutting scores: The sensitivity, specificity, and predictive value model. Journal of Clinical Psychology. 1988;44:1013–1023. doi: 10.1002/1097-4679(198811)44:6<1013::aid-jclp2270440627>3.0.co;2-z.

- Goodglass H, Kaplan E, Barresi B. The assessment of aphasia and related disorders. Lippincott Williams & Wilkins; Philadelphia: 2001.

- Greiner M, Pfeiffer D, Smith RD. Principles and practical application of the receiver-operating characteristic analysis for diagnostic tests. Preventive Veterinary Medicine. 2000;45:23–41. doi: 10.1016/s0167-5877(00)00115-x.

- Howieson DB, Carlson NE, Moore MM, Wasserman D, Abendroth CD, Payne-Murphy J, Kaye JA. Trajectory of mild cognitive impairment onset. Journal of the International Neuropsychological Society. 2008;14:192–198. doi: 10.1017/S1355617708080375.

- Hu L, Bentler PM. Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling. 1999;6:1–55.

- Juva K, Mäkelä M, Erkinjuntti T, Sulkava R, Ylikoski R, Valvanne J, Tilvis R. Functional assessment scales in detecting dementia. Age and Ageing. 1997;26:393–400. doi: 10.1093/ageing/26.5.393.

- Kaplan E, Goodglass H, Weintraub S. The Boston Naming Test. 2nd Ed. Lea & Febiger; Philadelphia: 1983.

- McArdle JJ. Latent variable modeling of differences and changes with longitudinal data. Annual Review of Psychology. 2009;60:577–605. doi: 10.1146/annurev.psych.60.110707.163612.

- Morris JC, Weintraub S, Chui HC, Cummings J, Decarli C, Ferris S, Kukull WA. The Uniform Data Set (UDS): Clinical and cognitive variables and descriptive data from Alzheimer disease centers. Alzheimer Disease and Associated Disorders. 2006;20:210–216. doi: 10.1097/01.wad.0000213865.09806.92.

- Mungas D, Reed BR. Application of item response theory for development of a global functioning measure of dementia with linear measurement properties. Statistics in Medicine. 2000;19:1631–1644. doi: 10.1002/(sici)1097-0258(20000615/30)19:11/12<1631::aid-sim451>3.0.co;2-p.

- Muthén L, Muthén B. Mplus User’s Guide. Muthén & Muthén; Los Angeles: 1998-2010.

- O’Bryant SE, Lacritz LH, Hall J, Waring SC, Chan W, Khodr ZG, Cullum CM. Validation of the new interpretive guidelines for the Clinical Dementia Rating Scale sum of boxes score in the National Alzheimer’s Coordinating Center database. Archives of Neurology. 2010;67:746–749. doi: 10.1001/archneurol.2010.115.

- Olazarán J, Mouronte P, Bermejo F. Clinical validity of two scales of instrumental activities in Alzheimer’s disease. Neurologia. 2005;20:395–401.

- Pendlebury ST, Marwick A, De Jager CA, Zamboni G, Wilcock GK, Rothwell PM. Differences in cognitive profile between TIA, stroke and elderly memory research subjects: A comparison of the MMSE and MoCA. Cerebrovascular Diseases. 2012;34:48–54. doi: 10.1159/000338905.

- Pfeffer RI, Kurosaki TT, Harrah CH, Chance JM, Filos S. Measurement of functional activities in older adults in the community. Journal of Gerontology. 1982;37:323–329. doi: 10.1093/geronj/37.3.323.

- Plassman BL, Langa KM, Fisher GG, Heeringa SG, Weir DR, Ofstedal MB. Prevalence of dementia in the United States: The aging, demographics, and memory study. Neuroepidemiology. 2007;29:125–132. doi: 10.1159/000109998.

- R Core Team. R: A language and environment for statistical computing. Version 3.0.2. R Foundation for Statistical Computing; Vienna, Austria: 2013. [Software] Available from http://www.R-project.org/

- Rabin LA, Paré N, Saykin AJ, Brown MJ, Wishart HA, Flashman LA, Santulli RB. Differential memory test sensitivity for diagnosing amnestic mild cognitive impairment and predicting conversion to Alzheimer’s disease. Aging, Neuropsychology, and Cognition. 2009;16:357–376. doi: 10.1080/13825580902825220.

- Reitan R, Wolfson D. The Halstead-Reitan neuropsychological test battery: Theory and clinical applications. Neuropsychology Press; Tucson, AZ: 1993.

- Royall DR, Lauterbach EC, Kaufer D, Malloy P, Coburn KL, Black KJ, The Committee on Research of the American Neuropsychiatric Association. The cognitive correlates of functional status: A review from the Committee on Research of the American Neuropsychiatric Association. Journal of Neuropsychiatry and Clinical Neurosciences. 2007;19:249–265. doi: 10.1176/jnp.2007.19.3.249.

- Royall DR, Palmer RF, O’Bryant SE. Validation of a latent variable representing the dementing process. Journal of Alzheimer’s Disease. 2012;30:639–649. doi: 10.3233/JAD-2012-120055.

- Royall DR, Palmer RF. Getting past “g”: Testing a new model of dementing processes in dementia. Journal of Neuropsychiatry and Clinical Neurosciences. 2012;24:37–46. doi: 10.1176/appi.neuropsych.11040078.

- Royall DR, Palmer RF. Validation of a latent construct for dementia case-finding in Mexican-Americans. Journal of Alzheimer’s Disease. 2013;37:89–97. doi: 10.3233/JAD-130353.

- Sosa-Ortiz AL, Acosta-Castillo I, Prince MJ. Epidemiology of dementias and Alzheimer’s disease. Archives of Medical Research. 2012;43:600–608. doi: 10.1016/j.arcmed.2012.11.003.

- Spearman CE. ‘General intelligence’, objectively determined and measured. American Journal of Psychology. 1904;15:201–293.

- Sperling RA, Aisen PS, Beckett LA, Bennett DA, Craft S, Fagan AM, Phelps CH. Toward defining the preclinical stages of Alzheimer’s disease: recommendations from the National Institute on Aging-Alzheimer’s Association workgroups on diagnostic guidelines for Alzheimer’s disease. Alzheimer’s & Dementia. 2011;7:280–292. doi: 10.1016/j.jalz.2011.03.003.

- Teng E, Becker BW, Woo E, Cummings JL, Lu PH. Subtle deficits in instrumental activities of daily living in subtypes of mild cognitive impairment. Dementia & Geriatric Cognitive Disorders. 2010;30:189–197. doi: 10.1159/000313540.

- Wechsler D. Wechsler Adult Intelligence Scale-Revised. Psychological Corporation; San Antonio: 1981.

- Wechsler D. WMS-R: Wechsler Memory Scale-Revised. Psychological Corporation; San Antonio: 1987.

- Weintraub S, Salmon D, Mercaldo N, Ferris S, Graff-Radford NR, Chui H, Morris JC. The Alzheimer’s Disease Centers’ Uniform Data Set (UDS): The neuropsychologic test battery. Alzheimer Disease and Associated Disorders. 2009;23:91–101. doi: 10.1097/WAD.0b013e318191c7dd.

- Weston R, Gore PA. A brief guide to structural equation modeling. The Counseling Psychologist. 2006;34:719–751.

- Wimo A, Jönsson L, Bond J, Prince M, Winblad B. The worldwide economic impact of dementia 2010. Alzheimer’s & Dementia. 2013;9:1–11. doi: 10.1016/j.jalz.2012.11.006.

- Winblad B, Palmer K, Kivipelto M, Jelic V, Fratiglioni L, Wahlund LO, Petersen RC. Mild cognitive impairment – beyond controversies, towards a consensus: Report of the International Working Group on Mild Cognitive Impairment. Journal of Internal Medicine. 2004;256:240–246. doi: 10.1111/j.1365-2796.2004.01380.x.