Abstract

Careful investigation of the form of animal signals can offer novel insights into their function. Here, we deconstruct the face patterns of a tribe of primates, the guenons (Cercopithecini), and examine the information that is potentially available in the perceptual dimensions of their multicomponent displays. Using standardized colour-calibrated images of guenon faces, we measure variation in appearance both within and between species. Overall face pattern was quantified using the computer vision ‘eigenface’ technique, and eyebrow and nose-spot focal traits were described using computational image segmentation and shape analysis. Discriminant function analyses established whether these perceptual dimensions could be used to reliably classify species identity, individual identity, age and sex, and, if so, identify the dimensions that carry this information. Across the 12 species studied, we found that both overall face pattern and focal trait differences could be used to categorize species and individuals reliably, whereas correct classification of age category and sex was not possible. This pattern makes sense, as guenons often form mixed-species groups in which familiar conspecifics develop complex differentiated social relationships but where the presence of heterospecifics creates hybridization risk. Our approach should be broadly applicable to the investigation of visual signal function across the animal kingdom.

Keywords: visual signalling, species recognition, individual recognition, multicomponent signals, guenon, face recognition

1. Introduction

Animal and plant signals frequently have complex forms that contain information in multiple components either within or across sensory modalities. Understanding the form of signals can provide important clues to signal function and evolution [1], but the very complexity of multicomponent signals creates considerable technical challenges for empirical studies [2–4]. One common approach for investigating signal form is to assess the variation within and between signal components through cue isolation experiments, where single parameters of the signal are manipulated and responses observed [5]. However, this approach does not examine the full range of natural variation in signal components [6], and investigating the responses of a wide range of potential receivers (e.g. potential mates, competitors and kin) would be extremely time-consuming.

In this study, we take a complementary approach by examining the classification performance of a machine learning algorithm to establish whether there are differences in signal components between species, sexes, age groups and individuals that could be reliably distinguished by a model receiver. The presence of consistent differences that are able to separate different categories reliably can be highly informative in determining the selection pressures that have driven signal evolution, and the function of signal components [1]. For example, if the colour of a signal affords reliable discrimination between conspecific individuals that encounter each other, this suggests that selective pressures on individual discrimination may have been involved in its evolution [7,8]. Similarly, if there are conspicuous and reliable differences between males and females, this suggests a potentially sexually selected trait that functions in reproductive decision-making [9]. Understanding the basis of variation in signal appearance can also explain why signals are multicomponent: information redundancy is indicated if two components can both be used to reliably predict the same attribute, whereas signal components that predict different attributes may be communicating multiple messages [10].

A first objective in analysing the design of multicomponent signals is separating and measuring the constituent components. In this study, we focus on multicomponent signals in the visual modality. Typically, studies of visual multicomponent signalling consider a component to be a spatially and/or temporally isolated patch of contrasting coloration, with a multicomponent signal comprising two or more such patches [11]. In other frameworks, a single patch of colour can be considered a potentially multicomponent signal [12]. Our approach is to consider both single and multiple patches of colour as potentially multicomponent signals by using a definition based on appearance to the receiver(s), where signal components are the perceptual dimensions of signal appearance. These could potentially include the size, shape, texture, number, colour, brightness, contrast, and relative and absolute spatial and/or temporal location of a signal. Under this formulation, a visual signal is multicomponent if information is communicated by more than one perceptual dimension.

The subjects of our study are the visual signals displayed on the faces of guenons (tribe: Cercopithecini), a group of 25–36 Old World primate species [13–15]. The faces of guenons are renowned for their remarkably varied appearances (figure 1) [17]. Such a variety of visual signals exhibited in social species with trichromatic colour vision and advanced cognitive capabilities makes the Cercopithecini an ideal group in which to study signal appearance. The primary hypothesis for the functional significance of guenon face patterns is that they communicate conspecific status [17,18] to promote reproductive isolation [19]. In support of this, we have previously shown that guenon face patterns are more distinctive and more recognizable with increasing degrees of range overlap between species [16].

Figure 1.

Examples of inter- and intraspecific variation of face patterns in five species of guenon. For each species, four different individuals are shown, two males and two females. For example images of the guenon species not shown see [16]. All photographs taken by W. Allen. (Online version in colour.)

Our present aim is to find out what design elements enable guenon face patterns to work as species recognition signals and whether they are also potentially informative about characteristics other than species identity. To be effective, species recognition signals should differ significantly in appearance between sympatric species but maintain similarity within species because stabilizing selection for easily identifiable signals should reduce phenotypic variation [20]. However, because multicomponent signals can vary on several non-redundant visual dimensions, it is possible that information additional to conspecific status is contained within face patterns [21]. Specifically, we hypothesize that guenon face patterns may also be informative about the individual identity, sex and age of the sender. Two general types of guenon society have been identified. Miopithecus talapoin, Allenopithecus nigroviridis and Chlorocebus aethiops generally form multimale multifemale social groups [22]. For Erythrocebus patas and the forest guenons in the genus Cercopithecus, the typical system is female philopatry within small groups containing a single adult male [23,24]. Both types of system often undergo reorganization during the breeding season as males undertake temporary invasions of female groups [22]. Invading males are often unknown to females, who are sexually receptive towards them, leading to intense male–male competition for mates [25]. Together, these features of guenon social and mating systems would lead to situations where there might be an expected benefit to (i) having a signal that can rapidly communicate individual identity within a social group [8], (ii) signalling whether an unfamiliar individual is a potential mate (i.e. a sexually mature individual of the opposite sex), and (iii) signalling quality or status, especially for males. 
Table 1 outlines the different predictions each of these hypotheses makes regarding the appearance of a signal and its variability between groups.

Table 1.

Summary of the potential functions of guenon face pattern coloration and the different predictions each hypothesis makes for the appearance and variability of visual signals within and between species. Adapted from [9].

| signal function | description | predicted appearance within species | predicted appearance between species |
|---|---|---|---|
| individual identity | recognition and discrimination of familiar individuals | continuous variation on multiple dimensions resulting from negative frequency-dependent selection for rare phenotypes | no prediction |
| species identity | recognition and discrimination of con- and heterospecifics | consistent appearances resulting from stabilizing selection for phenotypes that match receivers' species recognition templates | continuous variation on multiple dimensions resulting from disruptive selection for unique species appearances that aid discrimination |
| intraspecific mate selection | identification of potential mates and competitors | discrete variation on a single dimension between age and sex classes | no prediction |
| quality | determination of the fitness of potential mates and competitors | continuous variation on a single dimension that varies within age and sex classes | no prediction |

We tested these predictions using permuted discriminant function analysis (pDFA) as a simple machine learning algorithm that attempts to classify groups on the basis of signal component trait scores. We used classification performance to evaluate whether there is potential information in either overall face pattern appearance or (separately) the appearance of focal traits (nose-spots and eyebrow patches) that could be used to determine individual, age or sex category membership reliably in different guenon species. This strategy is regularly used with great success in acoustic communication studies [26–28], but to our knowledge has not yet been applied to visual signals. Key to the success of this strategy is the decomposition of a multicomponent signal into its constituent perceptual dimensions. Previously, this has been a challenging task for complex visual signals [4]. Here, we demonstrate two approaches to achieving this. First, we use the ‘eigenface’ technique, which was initially developed for human face recognition systems [29,30], to describe overall face pattern in a manner consistent with how primates represent face information [31]. The second approach uses an image segmentation algorithm inspired by mammalian cortical vision to define focal traits, which are then assessed using biologically inspired measures of segment colour, shape, size and brightness.

2. Material and methods

(a). Image data collection

We took digital images of Cercopithecini primates held in captivity in US and UK zoos and at the CERCOPAN wildlife sanctuary in Nigeria [16] (see Acknowledgements), using a calibrated camera set-up that enabled estimation of guenon photoreceptor catches and subject illumination (electronic supplementary material). We used a Canon T2i with Canon EF-S lenses (focal lengths of 18–55 mm, 55–250 mm or 75–300 mm) and a circular polarizing filter to reduce specular reflections off hair and glass. We collected at least three in-focus images of individual subjects from each species in a frontal body position (within 10° of ideal) under indirect light (i.e. overcast conditions outdoors or diffuse artificial light indoors). Only one photo was used from each series of images taken while the subject remained in the same position, and we photographed each individual at multiple time points (normally over the course of a day) to help ensure that replicate photographs were independent. In this way, subsequent classification would be based on invariant features of each individual's appearance rather than on its appearance at a limited point in time and space.

Our analyses included 541 images of 110 individuals from 12 Cercopithecini species that we collected as part of a larger published dataset [16]: A. nigroviridis (5 males, 3 females, 1 juvenile), Cercopithecus ascanius (5, 7, 0), C. diana and C. roloway (3, 4, 2), C. mitis (1, 2, 0), C. mona (7, 6, 3), C. neglectus (2, 4, 0), C. nictitans (7, 12, 1), C. petaurista (3, 4, 0), C. wolfi (3, 4, 0), C. sclateri (2, 4, 2), E. patas (2, 2, 0), M. talapoin (3, 2, 0). None of these species has been split in either of the two most recent taxonomies [13,14], though we combined data for the former subspecies C. diana and C. roloway on the basis of their observed visual similarity and former subspecies status. For each individual photographed, we recorded individual identity, sex (male or female) and age class (juvenile, 1–5 years; young adult, 6–10 years; old adult, 11+ years). In sex and age classification analyses, we excluded juveniles and analysed images of adults only.

(b). Image processing

We modelled the retinal responses of a guenon receiver to the visual scene recorded by the camera using standard camera calibration and image transformation techniques to transform the camera's colour space to guenon LMS (long, medium and short wavelength photoreceptor) colour space and normalize for differences in lighting [32,33] (electronic supplementary material). Normalizing for lighting conditions is an essential step for ensuring that classifications are based on invariant features of a subject's appearance rather than local lighting conditions. Next, we segmented out each individual's head from the background using a semi-automated outlining program in MATLAB (electronic supplementary material), and standardized the cropped faces for size, orientation and position by selecting the outside corner of each eye as two landmarks and transforming the image using bilinear interpolation so that these points had fixed coordinates. Images were then all trimmed to the same size (297 × 392 pixels). From the set of aligned standardized faces of each individual, we calculated the mean face of each individual (mean LMS responses at each pixel coordinate), then from each species' set of individual mean faces, we calculated the species mean face (weighted to account for variation in the relative number of males and females) and the guenon mean face from the set of species mean faces.
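The two-landmark standardization and the hierarchical mean faces can be sketched in NumPy as follows. This is a minimal illustration, not the authors' MATLAB program: it assumes a single-channel image (each LMS channel would be warped separately), eye-corner landmarks given as (x, y) pixel coordinates, and hypothetical target coordinates. Two point correspondences fully determine a similarity transform (scale, rotation and translation), and each output pixel is filled by bilinear interpolation from the source image. The sex weighting, which averages male and female individual means separately before combining them, is one plausible reading of the weighting described above and is an assumption.

```python
import numpy as np

def similarity_from_two_points(src, dst):
    """Return (A, t) with A @ p + t mapping both source landmarks onto the targets."""
    src, dst = np.asarray(src, float), np.asarray(dst, float)
    # Treat 2D points as complex numbers: z -> s*z + c is scale + rotation + shift.
    zs = src[:, 0] + 1j * src[:, 1]
    zd = dst[:, 0] + 1j * dst[:, 1]
    s = (zd[1] - zd[0]) / (zs[1] - zs[0])
    c = zd[0] - s * zs[0]
    A = np.array([[s.real, -s.imag], [s.imag, s.real]])
    return A, np.array([c.real, c.imag])

def warp_bilinear(img, A, t, out_shape):
    """Resample a 2D image so that source point p lands at A @ p + t in the output."""
    h, w = out_shape
    ys, xs = np.mgrid[0:h, 0:w]
    out_pts = np.vstack([xs.ravel(), ys.ravel()]).astype(float)
    x, y = np.linalg.solve(A, out_pts - t[:, None])  # inverse-map every output pixel
    x0, y0 = np.floor(x).astype(int), np.floor(y).astype(int)
    fx, fy = x - x0, y - y0
    H, W = img.shape

    def sample(yy, xx):
        # Source pixels outside the image are treated as 0 (background).
        inside = (xx >= 0) & (xx < W) & (yy >= 0) & (yy < H)
        vals = np.zeros(x.shape)
        vals[inside] = img[yy[inside], xx[inside]]
        return vals

    out = (sample(y0, x0) * (1 - fx) * (1 - fy) + sample(y0, x0 + 1) * fx * (1 - fy)
           + sample(y0 + 1, x0) * (1 - fx) * fy + sample(y0 + 1, x0 + 1) * fx * fy)
    return out.reshape(h, w)

def species_mean_face(faces_by_individual, sex_by_individual):
    """Mean face per individual, then per sex, then the mean of the sex means."""
    by_sex = {}
    for ind_id, faces in faces_by_individual.items():
        by_sex.setdefault(sex_by_individual[ind_id], []).append(np.mean(faces, axis=0))
    return np.mean([np.mean(means, axis=0) for means in by_sex.values()], axis=0)
```

With one male and two females, for example, the male mean and the female mean each contribute half of the species mean, rather than the male contributing a third.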

(c). Measuring overall face pattern: guenon eigenface analysis

We calculated interspecific guenon eigenfaces from the species mean faces and intraspecific eigenfaces from the mean faces of the individuals within each species. The eigenface technique uses principal components analysis (PCA) on image vectors to establish the dimensions of overall face pattern variation (electronic supplementary material). We obtained inter- and intraspecific eigenface scores for each original image by projecting it into inter- and intraspecific eigenface space, respectively. The position of species and individuals within each face space describes their overall appearance, and the relationship of their appearance to that of other species in the tribe and to other individuals of the same species.
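A minimal NumPy sketch of the eigenface step, assuming the aligned faces are arrays of identical shape (height, width, LMS channels). PCA is computed here by singular value decomposition of the mean-centred image vectors; keeping five components is an assumption chosen to match the eigenface 1–5 predictors reported in the results, and with n mean faces at most n − 1 components carry any variance.

```python
import numpy as np

def fit_eigenfaces(mean_faces, n_components=5):
    """PCA on vectorized faces; mean_faces has shape (n_faces, height, width, channels)."""
    X = np.asarray(mean_faces, float).reshape(len(mean_faces), -1)
    centre = X.mean(axis=0)
    # Rows of Vt are orthonormal principal axes (the "eigenfaces") of the centred data.
    _, svals, Vt = np.linalg.svd(X - centre, full_matrices=False)
    return centre, Vt[:n_components], svals[:n_components]

def eigenface_scores(images, centre, eigenfaces):
    """Project images (same shape as the training faces) into eigenface space."""
    X = np.asarray(images, float).reshape(len(images), -1)
    return (X - centre) @ eigenfaces.T
```

For interspecific scores, the model would be fitted on the species mean faces and every image projected into it; for intraspecific scores, a separate model would be fitted on each species' individual mean faces and only that species' images projected.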

(d). Measuring focal patches: eyebrow and nose-spot patch segmentation

For the analysis of focal traits, we selected all individuals from the four species subjectively judged to have nose-spots (C. ascanius, C. nictitans, C. petaurista, C. sclateri; n = 47) and from the four species judged to have eyebrow patches (C. diana, C. mona, C. neglectus, C. wolfi; n = 38). We segmented these facial regions into their constituent parts using a pulse-coupled neural network (PCNN) [34], a class of image segmentation algorithm based on mammalian early vision (electronic supplementary material). Each neuron in the network corresponds to a pixel in the image and receives input based on the pixel's colour as well as input from neighbouring neurons. Inputs accumulate until a threshold is reached and the neuron fires, pulsing output to neighbouring neurons. Over a number of iterations, the process produces a map of neuron firing times in which spatially contiguous regions fire together, enabling image segmentation [35]. Each nose-spot or eyebrow patch was defined as the largest segment in the relevant facial region that contained only one subregion in this map (figure 2).
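A simplified PCNN can be sketched as below. This is a generic formulation for illustration, not the authors' implementation: the input is assumed to be a single intensity channel scaled to [0, 1] (the model described above uses pixel colour), all parameter values are hypothetical, and the "accumulation until threshold" is expressed, as in many PCNN variants, by a dynamic threshold that decays each iteration and jumps after a pulse. The linking term from neighbours that fired on the previous iteration pulls similar adjacent pixels into the same firing wave. Only the firing-time map is produced; choosing the nose-spot or eyebrow segment from it is not shown.

```python
import numpy as np

def neighbour_sum(Y):
    """Sum over the 8 neighbours of each pixel, with zero padding at the border."""
    h, w = Y.shape
    P = np.pad(Y, 1)
    total = np.zeros((h, w))
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            if dy or dx:
                total += P[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]
    return total

def pcnn_firing_times(S, n_iter=50, beta=0.2, alpha_L=1.0, alpha_E=0.2,
                      V_L=1.0, V_E=20.0):
    """Return the iteration at which each pixel first fires (-1 if it never fires).

    S: 2D stimulus (intensity in [0, 1]); all parameter values are hypothetical.
    """
    S = np.asarray(S, float)
    L = np.zeros_like(S)            # linking input from neighbours
    E = np.ones_like(S)             # dynamic threshold
    Y = np.zeros_like(S)            # pulse output of the previous iteration
    first = np.full(S.shape, -1)
    for n in range(n_iter):
        L = np.exp(-alpha_L) * L + V_L * neighbour_sum(Y)
        U = S * (1.0 + beta * L)    # feeding (= stimulus) modulated by linking
        Y = (U > E).astype(float)   # fire where activity exceeds the threshold
        E = np.exp(-alpha_E) * E + V_E * Y  # threshold decays, jumps after a pulse
        first[(Y > 0) & (first < 0)] = n
    return first
```

Bright, homogeneous regions fire in an early wave and darker regions later, so grouping contiguous pixels with equal (or near-equal) firing times gives candidate segments.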

Figure 2.

The image segmentation process. On the left is an example of a C. petaurista male image in LMS colour space that has been cropped and standardized for size, position and orientation. On the right is the PCNN neuron firing time map with the selected region outlined in green. The region selected is the largest nose-spot segment that contains only one subregion. (Online version in colour.)

PCNNs are clearly a simplification of mammalian early vision. However, because we do not know how guenons segment face patterns, we cannot tell whether human hand segmentations would be more or less similar to guenon segmentation than PCNN segmentations are. A generalized model therefore provides a representation of an object's outline with several advantages: the algorithm is fast, automatic, resistant to noise and replicable, and it requires neither a training set nor a user-specified set of features to detect.

(e). Measuring the perceptual dimensions of eyebrow and nose-spot patches

After selecting the nose-spot or eyebrow region, we recorded the colour of the region as the mean LMS value of all pixels. From this we calculated luminance as (L + M)/2 and the response of red-green (R/G) and blue-yellow (B/Y) colour opponency channels (R/G = (L − M)/(L + M), B/Y = (((L + M)/2) − S)/(((L + M)/2) + S)) that constitute the input to the colour vision systems of Old World primates [36]. The size of the region was recorded as the total number of pixels in the region.
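The colour, brightness and size measures above can be written as a short function. It assumes the patch pixels have already been converted to guenon LMS responses and are supplied as an (n_pixels, 3) array; as described in the text, the opponency channels are computed from the mean LMS values of the region.

```python
import numpy as np

def patch_colour_metrics(lms_pixels):
    """lms_pixels: (n_pixels, 3) array of L, M, S responses for one segmented patch."""
    L, M, S = np.asarray(lms_pixels, float).mean(axis=0)  # mean LMS colour of the region
    lum = (L + M) / 2.0                                   # luminance
    rg = (L - M) / (L + M)                                # red-green opponency
    by = (lum - S) / (lum + S)                            # blue-yellow opponency
    return {"luminance": lum, "R/G": rg, "B/Y": by, "area_px": len(lms_pixels)}
```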

We analysed shape using elliptical Fourier analysis (EFA; electronic supplementary material) [37,38], a well-established method for analysing complex biological shapes that lack easily described homologous landmarks. The shape outline is decomposed into a series of mathematically defined ellipses (called harmonics) of varying sizes, eccentricities and orientations that describe increasingly fine shape details. EFA has previously been used successfully to classify biological shapes, for example to determine the species identity of corals [39]. We found that we needed to extract 16 harmonics (each with four coefficients) to achieve a close match to the segmented shapes. The dimensionality of the shape descriptors was then reduced using PCA [40]. PCAs were conducted both on the whole dataset, to find the axes of interspecific shape variation, and separately on the data for each species, to find compact descriptors of intraspecific variation in shape.
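A minimal NumPy sketch of elliptic Fourier descriptors in the standard Kuhl–Giardina formulation, which treats the outline as a closed polygon parametrized by arc length. The contour is assumed to be an ordered (K, 2) array of outline points, such as a boundary traced around a PCNN segment. Whether the authors normalized the coefficients for size, rotation or starting point is not stated here, so no normalization is applied.

```python
import numpy as np

def elliptic_fourier_descriptors(contour, n_harmonics=16):
    """Kuhl-Giardina elliptic Fourier coefficients (a_n, b_n, c_n, d_n) of a closed outline.

    contour: (K, 2) array of ordered (x, y) outline points; the last point is
    joined back to the first. Returns an (n_harmonics, 4) array.
    """
    pts = np.asarray(contour, float)
    d = np.diff(np.vstack([pts, pts[:1]]), axis=0)   # edge vectors, closing the loop
    dt = np.hypot(d[:, 0], d[:, 1])                  # edge lengths
    d, dt = d[dt > 0], dt[dt > 0]                    # drop duplicate consecutive points
    t = np.concatenate([[0.0], np.cumsum(dt)])       # cumulative arc length
    T = t[-1]                                        # perimeter
    phi = 2.0 * np.pi * t / T
    coeffs = np.empty((n_harmonics, 4))
    for n in range(1, n_harmonics + 1):
        const = T / (2.0 * n * n * np.pi ** 2)
        dcos = np.cos(n * phi[1:]) - np.cos(n * phi[:-1])
        dsin = np.sin(n * phi[1:]) - np.sin(n * phi[:-1])
        coeffs[n - 1] = const * np.array([
            np.sum(d[:, 0] / dt * dcos),   # a_n
            np.sum(d[:, 0] / dt * dsin),   # b_n
            np.sum(d[:, 1] / dt * dcos),   # c_n
            np.sum(d[:, 1] / dt * dsin),   # d_n
        ])
    return coeffs
```

Flattening the result with `coeffs.ravel()` gives 64 shape variables (16 harmonics × 4 coefficients) per patch; stacking these across patches and applying PCA would give shape components of the kind used as predictors. For a circle traced counterclockwise from (r, 0), the first harmonic is close to a_1 = d_1 = r with b_1 = c_1 = 0, and higher harmonics are essentially zero.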

(f). Classification analyses

Data for classification analyses (Dryad doi:10.5061/dryad.4rb0r) consisted of the four response variables associated with each image (species identity, individual identity, sex and age class) and a set of potential predictor variables as follows. All images had overall face pattern descriptors, represented by both inter- and intraspecific eigenface scores. For those images that possessed one or both of the focal traits, we had as another set of predictors the shape of the focal patch (represented by between one and five shape components), the colour of the focal patch (mean R/G and B/Y), the brightness of the focal patch (mean luminance) and the relative size of the focal patch (mean number of pixels).

The basic analysis strategy was to conduct a series of single-predictor DFAs to establish whether each predictor variable could reliably classify cases for each of the categorical response variables. For each response variable, we then examined two DFA models with multiple predictors: the first contained the three 'top' predictors that performed best in single-predictor models (regardless of whether their individual classification performance was significant), and the second included all predictors that performed significantly above chance. To meet the requirement that the number of predictors be smaller than the minimum number of cases in each class, and to increase the likelihood that our results generalize to the population as a whole, we conducted DFAs only when there were at least five cases in each class. For species identity, this meant that at least five individuals had to be sampled from each species; for sex classification, at least five males and five females; and for individual identity, at least five photos of each individual. Owing to this, we were unable to include M. talapoin in individual identity classification, or E. patas and C. mitis in species identity classification from eigenfaces.

Because we analysed more than one photo per individual in the species, sex and age classification analyses, the samples in each class are not independent: individual identity is an additional factor nested within the species, age or sex factor. This violates an assumption of traditional DFA and can lead to incorrect results. To overcome this, we employed permuted DFA [41], a technique that uses a nested random selection of samples, and random assignment of samples to classes, to calculate a null distribution of the classification performance expected by chance (electronic supplementary material). The cross-validated classification accuracy of the observed data can then be compared with this distribution to assess whether performance is significantly above chance. For consistency, we also used this test to analyse individual identity classifications. Analyses were carried out in R with the MASS library loaded [42,43], using a script written by R. Mundry (MPI for Evolutionary Anthropology, Leipzig, Germany). Performance was judged significant if the cross-validated classification accuracy of the observed data was higher than that of 95% of the randomized datasets (p < 0.05). We evaluated classification performance on the basis of (i) the significance of single- and multipredictor pDFA models, (ii) the percentage above chance of cross-validated classification performance and (iii) whether strong classification performance was achieved with single or multiple predictors.
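The logic of a permuted DFA for a nested design can be sketched in NumPy as below. This is a simplified illustration, not R. Mundry's script: it uses a plain equal-prior linear discriminant analysis, trains on a fixed number of randomly chosen cases per individual (a hypothetical default of two) and tests on the remaining cases, and builds the null distribution by shuffling class labels among individuals, so that all photos of one individual always share a label. The mean of the null distribution corresponds to a "% chance" value. It applies to species, sex or age classes; for individual identity, labels would instead have to be permuted at the level of cases.

```python
import numpy as np

def lda_fit(X, y):
    """Linear discriminant analysis with pooled within-class covariance, equal priors."""
    classes = np.unique(y)
    means = np.array([X[y == c].mean(axis=0) for c in classes])
    resid = X - means[np.searchsorted(classes, y)]
    cov = resid.T @ resid / (len(X) - len(classes))
    return classes, means, np.linalg.pinv(cov)

def lda_predict(model, X):
    classes, means, icov = model
    # Linear discriminant score for class k: x' S^-1 m_k - 0.5 m_k' S^-1 m_k.
    scores = X @ icov @ means.T - 0.5 * np.sum(means @ icov * means, axis=1)
    return classes[np.argmax(scores, axis=1)]

def cv_accuracy(X, cls, ind, rng, n_train_per_ind=2, n_rand=20):
    """Mean test accuracy over random nested splits (equal training cases per individual)."""
    accs = []
    for _ in range(n_rand):
        train = np.zeros(len(X), dtype=bool)
        for i in np.unique(ind):
            idx = np.flatnonzero(ind == i)
            train[rng.choice(idx, size=min(n_train_per_ind, len(idx)), replace=False)] = True
        model = lda_fit(X[train], cls[train])
        accs.append(np.mean(lda_predict(model, X[~train]) == cls[~train]))
    return float(np.mean(accs))

def pdfa(X, cls, ind, n_perm=100, seed=0, **kw):
    """Return (observed accuracy, mean null accuracy, permutation p-value)."""
    rng = np.random.default_rng(seed)
    X, cls, ind = np.asarray(X, float), np.asarray(cls), np.asarray(ind)
    observed = cv_accuracy(X, cls, ind, rng, **kw)
    ids = np.unique(ind)
    ind_cls = np.array([cls[ind == i][0] for i in ids])  # one class label per individual
    null = np.empty(n_perm)
    for k in range(n_perm):
        shuffled = dict(zip(ids, rng.permutation(ind_cls)))  # permute labels among individuals
        perm_cls = np.array([shuffled[i] for i in ind])
        null[k] = cv_accuracy(X, perm_cls, ind, rng, **kw)
    # Common convention: count the observed value as one member of the null set.
    p = (1 + np.sum(null >= observed)) / (n_perm + 1)
    return observed, float(null.mean()), p
```

Permuting whole individuals, rather than single photos, keeps the non-independence of repeat photos in the null distribution, which is the problem the nested design is meant to address.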

3. Results

(a). Species classification

Species identity was classified with a high degree of reliability on the basis of the eigenface scores, the nose-spots and, to a lesser extent, the eyebrow patches (table 2). For the 10 species with at least five individuals sampled, single-predictor models for overall face pattern showed that each eigenface dimension alone could classify species identity significantly above chance (between 146% and 247% above chance; electronic supplementary material, table S1), but this performance was much lower than when all predictors were included (443%). For nose-spots, shape PCs 3 and 5, both colour dimensions (R/G and B/Y) and area each performed significantly above chance (between 68% and 119%); however, this was also considerably lower than when all significant predictors were included (172%). Single-predictor classification of species identity from eyebrow patches was significantly above chance for both colour dimensions (R/G and B/Y), but not for the other dimensions.

Table 2.

Summary of species identity classification performance on the basis of either the perceptual dimensions of overall face pattern (eigenface scores), nose-spot appearance or eyebrow patch appearance. The pDFA models include all predictors that resulted in significant single-predictor models. Percentage correct is the mean percentage of cross-validated cases classified correctly after 100 test iterations. The percentage chance column shows the mean correct classification performance of 1000 random nested permutations of the test data.

| signal | predictors | % correct | % chance | % above chance | p-value |
|---|---|---|---|---|---|
| eigenfaces (10 species, 99 individuals, 490 cases) | eigenface 1–5 | 81.47 | 15.02 | 442.61 | <0.001 |
| nose-spots (4 species, 47 individuals, 230 cases) | R/G, B/Y, area, shape PCs 3 & 5 | 88.65 | 32.58 | 172.09 | <0.001 |
| eyebrow patches (4 species, 38 individuals, 188 cases) | R/G, B/Y | 64.84 | 28.03 | 130.87 | <0.001 |
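The "% above chance" values are consistent with the relative improvement of observed accuracy over the chance level, 100 × (% correct − % chance) / % chance. Applied to the tabulated means, this reproduces the reported values to within a fraction of a percentage point (e.g. about 442.4 versus 442.61 for eigenfaces); the small differences presumably arise because the reported figures were averaged over test iterations rather than computed from the averaged accuracies.

```python
def percent_above_chance(pct_correct, pct_chance):
    """Relative improvement of observed accuracy over the permutation-based chance level."""
    return 100.0 * (pct_correct - pct_chance) / pct_chance

# Eigenface row of table 2: 81.47% correct versus 15.02% chance.
print(round(percent_above_chance(81.47, 15.02), 1))
```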

(b). Sex classification

In the species for which we had a sample of at least five adult males and five adult females, we found no evidence of reliable differences that could be used to determine sex on the basis of eigenface scores (three species), nose-spots (one species) or eyebrow patches (one species; electronic supplementary material, tables S2–S4).

(c). Age group classification

There was no evidence that the age group of individuals could be classified reliably. In the study species with at least five individuals in both the young adult and old adult categories, no single predictor could classify age category significantly above chance on the basis of overall face pattern (two species), nose-spots (one species) or eyebrow patches (one species; electronic supplementary material, tables S5–S7). Even when suites of the top predictors (up to three traits that performed above chance in single-predictor models, but not significantly so) were used, the best-performing model could discriminate C. nictitans age classes only 28% above chance (p = 0.08).

(d). Individual classification

Individual identity classification from eigenfaces was possible in 10 of the 11 species tested (table 3). Classification was highly reliable; on average, individuals were classified correctly 70.89% of the time, 310.43% above chance. Performance was particularly impressive for A. nigroviridis (figure 3; 95.20% of cross-validated cases correctly assigned, chance 14.17%, p < 0.001) and C. wolfi (100% of cross-validated cases correctly assigned, chance 15.60%, p < 0.001). From the perceptual dimensions of nose-spots and eyebrow patches, significant individual identity classification models were found for all study species (table 3).

Table 3.

Summary of individual identity classification performance showing pDFA models that include all predictors that produced significant single-predictor models (see electronic supplementary material, tables S8–S10, for single-predictor models). Numbers in brackets after the species name denote the number of individuals and the number of cases in the sample, respectively.

| species | predictors | % correct | % chance | % above chance | p-value |
|---|---|---|---|---|---|
| overall face pattern | | | | | |
| A. nigroviridis (7, 45) | eigenface 1–5 | 95.2 | 14.17 | 572.07 | <0.001 |
| C. ascanius (7, 36) | eigenface 1 & 2 | 47.50 | 8.40 | 465.64 | 0.003 |
| C. diana (4, 36) | eigenface 4 | 71.83 | 25.41 | 182.75 | 0.027 |
| C. mitis (3, 30) | eigenface 1 | 92.83 | 32.71 | 167.46 | 0.011 |
| C. mona (13, 62) | eigenface 1 & 5 | 35.67 | 8.28 | 330.64 | <0.001 |
| C. neglectus (4, 22) | eigenface 3–5 | 75 | 23.88 | 214.14 | 0.047 |
| C. nictitans (11, 60) | eigenface 1, 3 & 5 | 31.31 | 6.51 | 60.83 | <0.001 |
| C. petaurista (4, 27) | eigenface 1 & 2 | 88.14 | 24.53 | 259.31 | <0.001 |
| C. sclateri (6, 39) | eigenface 1, 3 & 4 | 71.44 | 17.40 | 310.49 | <0.001 |
| C. wolfi (6, 34) | eigenface 1–3 | 100 | 15.60 | 541.03 | <0.001 |
| E. patas (3, 17) | none qualified | | | | |
| nose-spots | | | | | |
| C. ascanius (7, 36) | R/G | 34.25 | 8.26 | 314.69 | 0.016 |
| C. nictitans (11, 60) | area, shape PC 5 | 39.40 | 9.30 | 225.30 | 0.035 |
| C. petaurista (4, 27) | shape PCs 2 & 4 | 85.57 | 25.09 | 241.12 | 0.004 |
| C. sclateri (5, 30) | R/G, B/Y | 63.60 | 20.68 | 207.49 | 0.011 |
| eyebrow patches | | | | | |
| C. diana (4, 36) | luminance | 89 | 24.46 | 263.92 | 0.002 |
| C. mona (12, 64) | B/Y | 23.19 | 7.54 | 207.70 | 0.026 |
| C. neglectus (4, 22) | R/G, B/Y, area | 60 | 24.26 | 147.32 | 0.044 |
| C. wolfi (6, 34) | R/G, luminance | 63 | 16.38 | 284.66 | 0.014 |

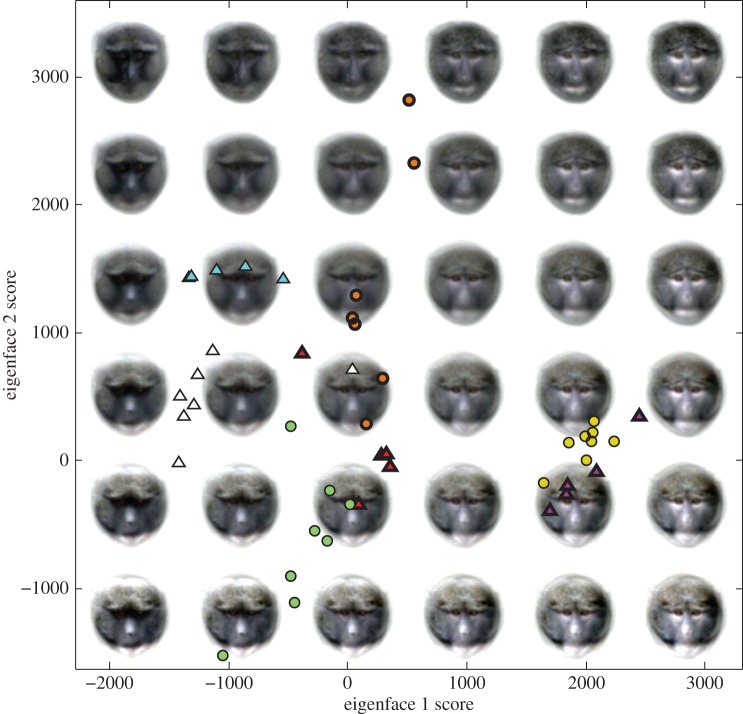

Figure 3.

How the first two eigenface dimensions were together able to classify individuals of A. nigroviridis with 95.20% cross-validated accuracy (chance = 14.17%). Differently coloured symbols represent different individuals (n = 7). Repeat photographs of each individual are generally clustered together, enabling accurate classification. Eigenface 1 corresponds closely with skin colour and eigenface 2 relates to fur colour. Triangle symbols show males and circles show females. Thick symbol outlines show older adults and thin outlines show younger adults. Note that although we did not have a sufficient sample size to run classification analyses on A. nigroviridis age and sex, males and females and younger and older adults overlap in this space, so individuals cannot easily be separated into these categories on the basis of these two eigenface dimensions. (Online version in colour.)

The perceptual dimensions included in models varied between species: none of the components contributed to individual identification in all of the studied species, and every type of component was included in the final discriminant model for more than one species. Focal trait models that included only one perceptual dimension generally performed relatively poorly compared with the multi-trait models (electronic supplementary material, tables S8–S10). The typical pattern resembled that of the species classification results: some individuals were identifiable on one dimension, for example by having a distinctively light or dark nose-spot, whereas other individuals were poorly separated on this dimension (but perhaps distinctive on another), which explains the higher performance when all predictors were included.

4. Discussion

We took a novel machine learning approach to investigating the potential information content of complex multicomponent visual signals, and found that this offered new insight into the types of selection pressures that are likely to have led to the extraordinary diversity in guenon face pattern appearance. Deconstructing and measuring the detailed form of 12 species' signals and investigating whether their perceptual dimensions could reliably predict basic biological information showed that species and individual identity could be consistently established correctly for the large majority of guenon species, while age and sex could not be determined in the analysed species.

Eigenface scores, nose-spots and eyebrow patches could all be used to classify species identity reliably. This is consistent with our previous finding [16] that guenons have evolved face patterns to have distinctive appearances from the species that they are sympatric with, and our conclusion that this is in order to promote reproductive isolation by functioning as a species recognition signal that reduces the likelihood of hybridization [17,18,44].

For 9/10 species, individual identity classification performance from intraspecific eigenfaces was comparable to that for determining species identity from interspecific eigenfaces. The reliability of individual identity classification afforded by interindividual variation in guenon face pattern appearance strongly suggests that both overall face patterning and the appearance of focal traits have another function in supporting individual recognition [8], despite the difficulty human observers might have in distinguishing individual guenons from one another. As highly social species with complex social relationships framed at the level of the individual, guenons could gain several benefits by being able to track the individual identity of peers visually, including stabilization of dominance hierarchies, increased altruism from familiar individuals, improved mating success, improved group defence and a reduction in the frequency or intensity of agonistic interactions [8,45]. Confirming that guenons use face patterns as a signal of individual identity, and rejecting the hypothesis that interindividual variation in face patterns is a potential cue that is nevertheless not used in individual discrimination [46], will require experimental work with guenon receivers. Comparative evidence from other primates, however, suggests that such experiments are likely to confirm the former and reject the latter. Interindividual discrimination on the basis of visual information is relatively widespread (reviewed in [8]), especially in species with complex social systems [47]. Our own experience suggests that in humans face appearance has a key role in recognizing individuals, and chimpanzees and macaques have both been shown to perform individual recognition on the basis of face appearance [48].
Individual recognition using face patterns has also been shown in ungulates [49] and invertebrates: queens of the paper wasp Polistes fuscatus, which, like guenons, form linear female dominance hierarchies, also use variable face patterns to signal individual identity and dominance to conspecific females [50,51]. We also know that guenons can recognize individual identity from other sensory modalities. For example, vervet monkeys (Ch. pygerythrus) use acoustic information to discriminate between individuals' calls and can integrate identity information with other knowledge when making social decisions [52]. Variability in call structure suggests that other guenon species also use interindividual differences in calls to determine the identity of the sender [53].

If face appearance is used for individual recognition in guenons, a further question is whether intraspecific variation in guenon face patterns is maintained by selection to support interindividual discrimination, or whether it is an incidental cue. Signalling traits involved in individual recognition should vary on multiple dimensions and be highly variable within each dimension, so that a sufficient number of individuals can be reliably discriminated (table 1) [7,8]. Phenotypes that have evolved to support identity signalling should therefore be under negative frequency-dependent selection, in which rare phenotypes are more successful [54,55] because they make for more distinctive faces. The evidence collected in our study was consistent with variation on multiple dimensions [9]: more than one eigenface was a significant predictor of individual identity in 8/11 species, more than one nose-spot dimension was a predictor in 3/4 species and more than one eyebrow patch dimension predicted individual identity in 3/4 species. It is also possible that each eigenface dimension encodes several correlated signal components, and that eyebrow patches and nose-spots are just two of many face pattern traits whose perceptual dimensions contribute to encoding individual identity. Furthermore, in accordance with the predictions of the individual identity signalling hypothesis (table 1), eigenface scores and the perceptual dimensions of focal patches showed continuous distributions and sometimes considerable variability (figure 3). The expected degree of variation in traits will also depend on the ability of receivers to discriminate: less able receivers will require senders to have more varied appearances than more able receivers will. The lighting environment, through its influence on signal transmission, will also modify the level of variability in appearance that receivers require for discrimination [56].
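To make the logic of negative frequency-dependent selection concrete, the sketch below iterates a toy replicator model in which rarer face phenotypes are fitter. It is a minimal illustration under stated assumptions, not a model from this study: the fitness function w_i = 1 + s(1 − p_i), the selection strength s and the starting frequencies are all hypothetical. It shows the qualitative prediction in the text, that such selection pushes phenotype frequencies towards evenness and so maintains variation.

```python
# Minimal sketch of negative frequency-dependent selection (NFDS) on discrete
# face phenotypes. ASSUMPTION: the fitness function below is a hypothetical toy
# model, not one fitted to guenon data. Rarer phenotypes are assumed to be more
# distinctive and therefore to gain a larger recognition benefit.

def nfds_step(freqs, s=0.5):
    """Advance phenotype frequencies by one generation.

    Relative fitness of phenotype i is w_i = 1 + s * (1 - p_i), so fitness
    falls as a phenotype becomes common. New frequencies follow discrete
    replicator dynamics: p_i' = p_i * w_i / mean fitness.
    """
    weights = [1.0 + s * (1.0 - p) for p in freqs]
    mean_w = sum(p * w for p, w in zip(freqs, weights))
    return [p * w / mean_w for p, w in zip(freqs, weights)]


def simulate(freqs, generations=200, s=0.5):
    """Iterate nfds_step for a fixed number of generations."""
    for _ in range(generations):
        freqs = nfds_step(freqs, s)
    return freqs


start = [0.70, 0.20, 0.08, 0.02]  # one common phenotype, three rarer ones
end = simulate(start)
print([round(p, 3) for p in end])  # frequencies converge towards 0.25 each
```

Under this toy fitness function the only interior equilibrium is equal frequencies; a real identity signal would involve continuous, multidimensional phenotypes and receiver discrimination limits, which this sketch deliberately omits.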

Our evidence that guenon face patterns support both species and individual identity signalling has interesting implications for how evolution shapes the design of signals whose functions have conflicting requirements. Species recognition signals are expected to be created and maintained by disruptive selection on signal phenotypes between populations, leading to distinctive species-specific signals [57]. However, they should also undergo stabilizing selection within populations to facilitate the matching of individuals to a species template, which would work against the production of the variation required for individual recognition. Given the evidence that interspecific variation in face patterns is maintained by the requirements of discrimination between sympatric heterospecific guenons [16], guenon face patterns are likely to reflect a compromise between these opposing pressures. Such a trade-off may be made possible by specialized cognitive architecture for face processing, like that identified in humans, chimpanzees and perhaps other non-human primates [58–60], which allows finer differences in appearance to be discriminated when making individual identity judgements between conspecifics. Even without specific adaptations for face processing, expertise at face processing and a sufficiently large perceptual face-space may allow for both species-specificity and interindividual distinctiveness [61]. Greater sensitivity to differences in the appearance of conspecific than of heterospecific faces is likely to be key to supporting this dual role [62], allowing gross features to code species identity and finer features to encode individual identity.

Although we did not have sufficient sample sizes to attempt classification of the age and sex of most species, for those that we did study, classification performance was poor (see also figure 3). This suggests that guenons do not directly signal quality or status to potential mates and competitors with their face patterns (table 1). The lack of direct cues to sex or age is perhaps not surprising given the evidence we present that reliable individual recognition is possible from face patterns. Individual identification would allow age and sex information, as well as information on quality or status, to be learnt in other ways and associated with the corresponding face pattern identity template. Signals of age, sex or quality are more likely to be needed when communicating with unfamiliar individuals because prior experience, learning and memory are not required for them to function effectively; rather, a signal can be compared with a template that may be either learned or innate [63].
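Whether a classification rate counts as "poor" is easiest to judge against the rate expected by chance. The sketch below is one simple way to do that, assuming hypothetical counts and treating every classified image as an independent trial: it computes a one-sided binomial tail probability. It is not the test used in this study. Mundry & Sommer [41] discuss why non-independent data, such as several images of the same animal, can inflate apparent discriminant function performance, and describe a permutation-based alternative that is more appropriate for data structured like ours.

```python
from math import comb


def binomial_tail(correct, total, chance):
    """One-sided P(X >= correct) for X ~ Binomial(total, chance).

    ASSUMES each classified image is an independent trial, which is rarely
    true when several images come from the same individual.
    """
    return sum(
        comb(total, k) * chance**k * (1 - chance) ** (total - k)
        for k in range(correct, total + 1)
    )


# Hypothetical example: 12 of 20 images assigned to the correct sex,
# with chance = 1/2 for a two-class problem.
p = binomial_tail(12, 20, 0.5)
print(f"P(>= 12 correct by chance) = {p:.3f}")  # about 0.252
```

In this hypothetical case, 60% correct classification would be quite likely to arise by chance alone, which is the sense in which a rate above the nominal chance level can still be "poor".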

In most cases, the messages of both species and individual identity were carried by several of the signal components we measured, suggesting that guenon face patterns contain both redundant and multiple messages. The increase in classification performance when multiple components were combined indicates that multicomponent designs would enhance receiver responses relative to any single-component design [10]. Three main factors are likely to promote multicomponent signalling. First, multiple components that contain some redundancy will be more resistant to environmental noise [10]. For example, the information content of a colour-based signal falls sharply as light levels drop at dusk, whereas shape information degrades much less. Conversely, shape information may be difficult to retrieve from an atypical viewing angle, whereas colour information is less affected. Second, signalling on more than one dimension increases the overall span of phenotypic face-space, allowing more perceptually salient variation and greater distinctiveness among more units, whether species or individuals. Third, multicomponent signalling may have cognitive effects that improve detection, learning and memory of a multicomponent signal compared with a unicomponent signal of equivalent perceptual distinctiveness [2]. This study did not explore how visual species and individual identity signals or cues interact with species and individual identity signals in other modalities [52,64]. Multimodal communication is extremely common in primates, and comparing the similarities and differences between signalling modalities would be informative.
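The second factor, that extra dimensions enlarge face-space and so let more units be told apart, can be illustrated with a toy classifier. The sketch below is a hypothetical example, not the discriminant function analysis used in this study: it uses a simple nearest-centroid rule on made-up data in which four classes (A–D) differ pairwise on two components. Each component alone separates only two groups of classes, so single-component accuracy is capped at 50%, while the two components together separate all four.

```python
import math

# HYPOTHETICAL class centroids on two perceptual components, e.g.
# component 0 = a colour score and component 1 = a shape score.
CENTROIDS = {
    "A": (0.0, 0.0),
    "B": (0.0, 1.0),
    "C": (1.0, 0.0),
    "D": (1.0, 1.0),
}

# HYPOTHETICAL new observations: (true label, measured components).
TEST = [
    ("A", (0.05, 0.10)),
    ("B", (-0.10, 0.90)),
    ("C", (0.95, -0.05)),
    ("D", (1.10, 0.95)),
]


def classify(x, dims):
    """Nearest-centroid label using only the component indices in dims."""
    def dist(label):
        c = CENTROIDS[label]
        return math.sqrt(sum((x[d] - c[d]) ** 2 for d in dims))
    return min(CENTROIDS, key=dist)  # exact ties go to the first label listed


def accuracy(dims):
    """Proportion of TEST observations assigned to their true class."""
    hits = sum(classify(x, dims) == label for label, x in TEST)
    return hits / len(TEST)


for dims in [(0,), (1,), (0, 1)]:
    print(dims, accuracy(dims))
```

A nearest-centroid rule is used here only because it is short and transparent; the same point, that information spread across components is lost when any one is dropped, applies to linear discriminant functions.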

In summary, we present a simple machine learning approach that is straightforward to implement but provides a powerful tool for understanding the potential information content of visual signals. We combined this with perceptually inspired decompositions of overall face pattern and of focal trait appearance that are also simple to implement and broadly applicable. For example, the eigenface technique might be applied to describing primate facial expressions [31] or lepidopteran wing colour variation, and the focal trait analysis could be applied to photographs of any colour patch. We showed that for the large majority of guenon species studied, face patterns probably serve a dual function of communicating species and individual identity. Interpreting differences between groups in terms of classification accuracy is intuitive and gives insight into how groups occupy and divide the perceptual space.
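For readers who want to try the eigenface idea on their own images, the sketch below shows the core computation in the sense of Turk & Pentland [29] and Kirby & Sirovich [30]: a principal component analysis of pixel values, obtained here with NumPy's singular value decomposition. It is a minimal sketch, not the authors' pipeline. It assumes images that are already aligned, cropped and colour-standardized into a single array, and the random "faces" in the usage lines are placeholders for real data.

```python
import numpy as np


def eigenfaces(images, n_components):
    """Decompose a stack of aligned face images into eigenfaces.

    images: array of shape (n_images, height, width); ASSUMED to be already
    aligned, cropped and colour-standardized (e.g. one luminance channel).
    Returns the mean face, the first n_components eigenfaces (as flattened
    rows), each image's scores on those eigenfaces, and the proportion of
    total variance each retained eigenface explains.
    """
    n = images.shape[0]
    X = images.reshape(n, -1).astype(float)  # one row of pixels per image
    mean_face = X.mean(axis=0)
    Xc = X - mean_face  # centre every image on the average face
    # Rows of Vt are orthonormal principal axes of pixel space ("eigenfaces"),
    # ordered by the variance they explain (singular values S, descending).
    _, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    components = Vt[:n_components]
    scores = Xc @ components.T  # projection of each image onto each eigenface
    explained = S[:n_components] ** 2 / np.sum(S ** 2)
    return mean_face, components, scores, explained


# Placeholder data: six random 8x8 "faces" standing in for real images.
rng = np.random.default_rng(0)
faces = rng.random((6, 8, 8))
mean_face, comps, scores, explained = eigenfaces(faces, 3)
print(scores.shape, explained.round(3))
```

The resulting `scores` matrix (one row per image, one column per eigenface) is the kind of input a discriminant function analysis would then use to classify species or individuals.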

Supplementary Material

Acknowledgements

We would like to thank Tim Caro, Innes Cuthill, Michael Sheehan and an anonymous reviewer for providing helpful comments on previous versions of the manuscript, Auralius Manurung for providing Elliptic Fourier functions for MATLAB, and Audubon Zoo, Bronx Zoo, CERCOPAN (Nigeria), Cleveland Metroparks Zoo, Edinburgh Zoo, Erie Zoo, Fort Wayne Children's Zoo, Gulf Breeze Zoo, Houston Zoo, Jackson Zoological Park, Louisiana Purchase Zoo, Little Rock Zoo, Los Angeles Zoo, Oakland Zoo, Paignton Zoo, Pittsburgh Zoo, Potawatomi Zoo, San Diego Zoo, Twycross Zoo and Zoo of Acadiana for their support with data collection.

Data accessibility

All data used for the classification analyses have been uploaded to Dryad at doi:10.5061/dryad.4rb0r.

Funding statement

The work was funded by intramural funds from New York University to J.P.H.

References

1. Marler P. 1961. The logical analysis of animal communication. J. Theor. Biol. 1, 295–317. (doi:10.1016/0022-5193(61)90032-7)

2. Hebets EA, Papaj DR. 2005. Complex signal function: developing a framework of testable hypotheses. Behav. Ecol. Sociobiol. 57, 197–214. (doi:10.1007/s00265-004-0865-7)

3. Endler JA, Mielke PW. 2005. Comparing entire colour patterns as birds see them. Biol. J. Linn. Soc. 86, 405–431. (doi:10.1111/j.1095-8312.2005.00540.x)

4. Allen WL, Higham JP. 2013. Analyzing visual signals as visual scenes. Am. J. Primatol. 75, 664–682. (doi:10.1002/ajp.22129)

5. De Luna AG, Hödl W, Amézquita A. 2010. Colour, size and movement as visual subcomponents in multimodal communication by the frog Allobates femoralis. Anim. Behav. 79, 739–745. (doi:10.1016/j.anbehav.2009.12.031)

6. Smith CL, Evans CS. 2013. A new heuristic for capturing the complexity of multimodal signals. Behav. Ecol. Sociobiol. 67, 1389–1398. (doi:10.1007/s00265-013-1490-0)

7. Beecher MD. 1989. Signalling systems for individual recognition: an information theory approach. Anim. Behav. 38, 248–261. (doi:10.1016/S0003-3472(89)80087-9)

8. Tibbetts EA, Dale J. 2007. Individual recognition: it is good to be different. Trends Ecol. Evol. 22, 529–537. (doi:10.1016/j.tree.2007.09.001)

9. Dale J. 2006. Intraspecific variation in coloration. In Bird coloration, vol. 2: function and evolution (eds Hill GE, McGraw KJ), pp. 36–86. Cambridge, MA: Harvard University Press.

10. Partan SR, Marler P. 2005. Issues in the classification of multimodal communication signals. Am. Nat. 166, 231–245. (doi:10.1086/431246)

11. Moller A, Pomiankowski A. 1993. Why have birds got multiple sexual ornaments? Behav. Ecol. Sociobiol. 32, 167–176. (doi:10.1007/BF00173774)

12. Grether GF, Kolluru GR, Nersissian K. 2004. Individual colour patches as multicomponent signals. Biol. Rev. 79, 583–610. (doi:10.1017/S1464793103006390)

13. Grubb P, Butynski TM, Oates JF, Bearder SK, Disotell TR, Groves CP, Struhsaker TT. 2003. Assessment of the diversity of African primates. Int. J. Primatol. 24, 1301–1357. (doi:10.1023/B:IJOP.0000005994.86792.b9)

14. Groves CP. 2005. Order primates. In Mammal species of the world: a taxonomic and geographic reference (eds Wilson DE, Reeder DM), pp. 111–184. Baltimore, MD: Johns Hopkins University Press.

15. Hart JA, et al. 2012. Lesula: a new species of Cercopithecus monkey endemic to the Democratic Republic of Congo and implications for conservation of Congo's central basin. PLoS ONE 7, e44271. (doi:10.1371/journal.pone.0044271)

16. Allen WL, Stevens M, Higham JP. 2014. Character displacement of Cercopithecini primate visual signals. Nat. Commun. 5, 4266. (doi:10.1038/ncomms5266)

17. Kingdon J. 1980. The role of visual signals and face patterns in African forest monkeys (guenons) of the genus Cercopithecus. Trans. Zool. Soc. Lond. 35, 425–475. (doi:10.1111/j.1096-3642.1980.tb00062.x)

18. Kingdon J. 1988. What are face patterns and do they contribute to reproductive isolation in guenons? In A primate radiation: evolutionary biology of the African guenons (eds Gautier-Hion A, Bourliere F, Gautier JP, Kingdon J), pp. 227–245. New York, NY: Cambridge University Press.

19. Coyne J, Orr H. 2004. Speciation. Sunderland, MA: Sinauer Associates.

20. McPeek MA, Symes LB, Zong DM, McPeek CL. 2011. Species recognition and patterns of population variation in the reproductive structures of a damselfly genus. Evolution 65, 419–428. (doi:10.1111/j.1558-5646.2010.01138.x)

21. Pfennig KS. 1998. The evolution of mate choice and the potential for conflict between species and mate-quality recognition. Proc. R. Soc. Lond. B 265, 1743–1748. (doi:10.1098/rspb.1998.0497)

22. Cords M. 2000. The number of males in guenon groups. In Primate males: causes and consequences of variation in group composition (ed. Kappeler PM), pp. 84–96. Cambridge, UK: Cambridge University Press.

23. Carlson AA, Isbell LA. 2001. Causes and consequences of single-male and multimale mating in free-ranging patas monkeys, Erythrocebus patas. Anim. Behav. 62, 1047–1058. (doi:10.1006/anbe.2001.1849)

24. Cords M. 2012. The 30-year blues: what we know and don't know about life history, group size, and group fission of blue monkeys in the Kakamega Forest, Kenya. In Long-term field studies of primates (eds Kappeler PM, Watts DP), pp. 289–311. Berlin, Germany: Springer.

25. Chism J, Rogers W. 1997. Male competition, mating success and female choice in a seasonally breeding primate (Erythrocebus patas). Ethology 103, 109–126. (doi:10.1111/j.1439-0310.1997.tb00011.x)

26. Hafner GW. 1979. Signature information in the song of the humpback whale. J. Acoust. Soc. Am. 66, 1. (doi:10.1121/1.383072)

27. Blumstein DT, Munos O. 2005. Individual, age and sex-specific information is contained in yellow-bellied marmot alarm calls. Anim. Behav. 69, 353–361. (doi:10.1016/j.anbehav.2004.10.001)

28. Cheng J, Xie B, Lin C, Ji L. 2012. A comparative study in birds: call-type-independent species and individual recognition using four machine-learning methods and two acoustic features. Bioacoustics 21, 157–171. (doi:10.1080/09524622.2012.669664)

29. Turk M, Pentland A. 1991. Eigenfaces for recognition. J. Cogn. Neurosci. 3, 71–86. (doi:10.1162/jocn.1991.3.1.71)

30. Kirby M, Sirovich L. 1990. Application of the Karhunen-Loeve procedure for the characterization of human faces. IEEE Trans. Pattern Anal. Mach. Intell. 12, 103–108. (doi:10.1109/34.41390)

31. Tsao DY, Livingstone MS. 2008. Mechanisms of face perception. Annu. Rev. Neurosci. 31, 411–437. (doi:10.1146/annurev.neuro.30.051606.094238)

32. Stevens M, Párraga CA, Cuthill IC, Partridge JC, Troscianko TS. 2007. Using digital photography to study animal coloration. Biol. J. Linn. Soc. 90, 211–237. (doi:10.1111/j.1095-8312.2007.00725.x)

33. Stevens M, Stoddard MC, Higham JP. 2009. Studying primate color: towards visual system-dependent methods. Int. J. Primatol. 30, 893–917. (doi:10.1007/s10764-009-9356-z)

34. Eckhorn R, Reitboeck HJ, Arndt M, Dicke P. 1990. Feature linking via synchronization among distributed assemblies: simulations of results from cat visual cortex. Neural Comput. 2, 293–307. (doi:10.1162/neco.1990.2.3.293)

35. Lindblad T, Kinser J. 2005. Image processing using pulse-coupled neural networks. New York, NY: Springer.

36. Mollon J. 1989. ‘Tho’ she kneel'd in that place where they grew…’ The uses and origins of primate colour vision. J. Exp. Biol. 146, 21–38.

37. Kuhl F, Giardina C. 1982. Elliptic Fourier features of a closed contour. Comput. Graph. Image Process. 18, 236–258. (doi:10.1016/0146-664X(82)90034-X)

38. McLellan T, Endler JA. 1998. The relative success of some methods for measuring and describing the shape of complex objects. Syst. Biol. 47, 264–281. (doi:10.1080/106351598260914)

39. Carlo JM, Barbeitos MS, Lasker HR. 2011. Quantifying complex shapes: elliptical Fourier analysis of octocoral sclerites. Biol. Bull. 220, 224–237.

40. Rohlf FJ, Archie JW. 1984. A comparison of Fourier methods for the description of wing shape in mosquitoes (Diptera: Culicidae). Syst. Zool. 33, 302. (doi:10.2307/2413076)

41. Mundry R, Sommer C. 2007. Discriminant function analysis with nonindependent data: consequences and an alternative. Anim. Behav. 74, 965–976. (doi:10.1016/j.anbehav.2006.12.028)

42. Venables WN, Ripley BD. 2002. Modern applied statistics with S, 4th edn. New York, NY: Springer.

43. R Development Core Team. 2013. R: a language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing.

44. Santana SE, Alfaro JL, Noonan A, Alfaro ME. 2013. Adaptive response to sociality and ecology drives the diversification of facial colour patterns in catarrhines. Nat. Commun. 4, 2765. (doi:10.1038/ncomms3765)

45. Barnard CJ, Burk T. 1979. Dominance hierarchies and the evolution of ‘individual recognition’. J. Theor. Biol. 81, 65–73. (doi:10.1016/0022-5193(79)90081-X)

46. Schibler F, Manser MB. 2007. The irrelevance of individual discrimination in meerkat alarm calls. Anim. Behav. 74, 1259–1268. (doi:10.1016/j.anbehav.2007.02.026)

47. Dale J, Lank DB, Reeve HK. 2001. Signaling individual identity versus quality: a model and case studies with ruffs, queleas, and house finches. Am. Nat. 158, 75–86. (doi:10.1086/320861)

48. Parr LA, Winslow JT, Hopkins WD, de Waal FBM. 2000. Recognizing facial cues: individual discrimination by chimpanzees (Pan troglodytes) and rhesus monkeys (Macaca mulatta). J. Comp. Psychol. 114, 47–60. (doi:10.1037/0735-7036.114.1.47)

49. Kendrick KM, Atkins K, Hinton MR, Heavens P, Keverne B. 1996. Are faces special for sheep? Evidence from facial and object discrimination learning tests showing effects of inversion and social familiarity. Behav. Process. 38, 19–35. (doi:10.1016/0376-6357(96)00006-X)

50. Tibbetts EA, Sheehan MJ. 2013. Individual recognition and the evolution of learning and memory in Polistes paper wasps. In Handbook of invertebrate learning and memory (eds Menzel R, Benjamin P), pp. 561–570. Waltham, MA: Academic Press.

51. Tibbetts EA. 2002. Visual signals of individual identity in the wasp Polistes fuscatus. Proc. R. Soc. Lond. B 269, 1423–1428. (doi:10.1098/rspb.2002.2031)

52. Cheney DL, Seyfarth RM. 1988. Assessment of meaning and the detection of unreliable signals by vervet monkeys. Anim. Behav. 36, 477–486. (doi:10.1016/S0003-3472(88)80018-6)

53. Price T, Arnold K, Zuberbühler K, Semple S. 2009. Pyow but not hack calls of the male putty-nosed monkey (Cercopithecus nictitans) convey information about caller identity. Behaviour 146, 871–888. (doi:10.1163/156853908X396610)

54. Sheehan MJ, Tibbetts EA. 2009. Evolution of identity signals: frequency-dependent benefits of distinctive phenotypes used for individual recognition. Evolution 63, 3106–3113. (doi:10.1111/j.1558-5646.2009.00833.x)

55. Sheehan MJ, Nachman MW. 2014. Morphological and population genomic evidence that human faces have evolved to signal individual identity. Nat. Commun. 5, 4800. (doi:10.1038/ncomms5800)

56. Endler JA. 1993. The color of light in forests and its implications. Ecol. Monogr. 63, 2–27. (doi:10.2307/2937121)

57. Pfennig KS, Pfennig DW. 2009. Character displacement: ecological and reproductive responses to a common evolutionary problem. Q. Rev. Biol. 84, 253–276. (doi:10.1086/605079)

58. Tate AJ, Fischer H, Leigh AE, Kendrick KM. 2006. Behavioural and neurophysiological evidence for face identity and face emotion processing in animals. Phil. Trans. R. Soc. B 361, 2155–2172. (doi:10.1098/rstb.2006.1937)

59. Leopold DA, Rhodes G. 2010. A comparative view of face perception. J. Comp. Psychol. 124, 233–251. (doi:10.1037/a0019460)

60. Parr LA, Heintz M, Pradhan G. 2008. Rhesus monkeys (Macaca mulatta) lack expertise in face processing. J. Comp. Psychol. 122, 390–402. (doi:10.1037/0735-7036.122.4.390)

61. McKone E, Kanwisher N, Duchaine BC. 2007. Can generic expertise explain special processing for faces? Trends Cogn. Sci. 11, 8–15. (doi:10.1016/j.tics.2006.11.002)

62. Dufour V, Pascalis O, Petit O. 2006. Face processing limitation to own species in primates: a comparative study in brown capuchins, Tonkean macaques and humans. Behav. Process. 73, 107–113. (doi:10.1016/j.beproc.2006.04.006)

63. Bergman TJ, Sheehan MJ. 2013. Social knowledge and signals in primates. Am. J. Primatol. 75, 683–694. (doi:10.1002/ajp.22103)

64. Partan SR. 2013. Ten unanswered questions in multimodal communication. Behav. Ecol. Sociobiol. 67, 1523–1539. (doi:10.1007/s00265-013-1565-y)
