Abstract

The eyes convey a wealth of information in social interactions. This information is analyzed by multiple brain networks, which we identified using functional magnetic resonance imaging (fMRI). Subjects attempted to detect a particular directional cue provided either by gaze changes on an image of a face or by an arrow presented alone or by an arrow superimposed on the face. Another control condition was included in which the eyes moved without providing meaningful directional information. Activation of the superior temporal sulcus accompanied extracting directional information from gaze relative to directional information from an arrow and relative to eye motion without relevant directional information. Such selectivity for gaze processing was not observed in face-responsive fusiform regions. Brain activations were also investigated while subjects viewed the same face but attempted to detect when the eyes gazed directly at them. Most notably, amygdala activation was greater during periods when direct gaze never occurred than during periods when direct gaze occurred on 40% of the trials. In summary, our results suggest that increases in neural processing in the amygdala facilitate the analysis of gaze cues when a person is actively monitoring for emotional gaze events, whereas increases in neural processing in the superior temporal sulcus support the analysis of gaze cues that provide socially meaningful spatial information.

Keywords: Superior temporal sulcus (STS), Amygdala, fMRI, Social cognition, Emotion, Face perception, Joint attention, Theory of mind, Autism, Schizophrenia

1. Introduction

The eyes move not only in the service of visual perception but also to support communication by indicating direction of attention, intention, or emotion [7]. Infants stare longer at the eyes than other facial features [39] and they spontaneously follow someone else’s gaze as early as 10 weeks of age [28]. Likewise, adults tend to automatically shift their attention in the direction of another person’s gaze [20], and when this happens, the outcome is that both people are attending to the same thing. This phenomenon of joint attention has been shown to facilitate the development of language and social cognition in children, and to particularly facilitate theory of mind skills—the understanding of another person’s mental state [5,38,41]. In addition, developmental delays in gaze-following have been shown to predict a later diagnosis of autism [4], so the infant’s behavioral response to gaze cues has become an important developmental marker.

Direct eye contact—when two people gaze directly at one another—is also an important aspect of gaze behavior [35]. Perceiving the direct gaze of another person, unlike averted gaze, indicates that the direction of attention is focused on the viewer. Perceiving eye contact directs and fixes attention on the observed face [23] and in visual search paradigms, it is detected faster than averted gaze [52]. Eye contact has been shown to increase physiological response in social interactions [43], and the amount and quality of eye contact are considered important indicators of social and emotional functioning [35]. Poor eye contact is a specific diagnostic feature of autism [3] and a key component of the negative symptom syndrome in schizophrenia [2]. Thus, investigating neural mechanisms of gaze may provide key insights for understanding neurobiological factors that mediate social development, social interactions, and, ultimately, how dysfunctions in these mechanisms might be related to symptoms observed in disorders such as autism and schizophrenia.

There is mounting evidence to suggest that specific regions of the temporal lobe, such as the fusiform gyrus, superior temporal sulcus (STS), and the amygdala, are involved in gaze processing [1,26,32,33,46,47,54]. Gaze is usually perceived in the context of a face, and faces are known to activate both the fusiform gyrus and the STS [1,32,46]. However, the fusiform gyrus responds more to whole faces and the STS responds more to facial features, particularly the eyes. Face identity judgments produce relatively more fusiform gyrus activity whereas gaze direction judgments of the same visual stimuli produce relatively more STS activity [26,27]. Furthermore, lesions in the region of the fusiform gyrus can produce prosopagnosia, the inability to recognize familiar faces [16,19,31,57]. Deficits in gaze direction discrimination are generally not found after fusiform damage but are found after STS damage [11].

Despite this evidence implicating the STS region in gaze perception, the exact nature of its contribution remains unresolved. Given that the STS responds to various kinds of biological motion, Haxby and colleagues [26] proposed that STS activity to gaze reflects processing of eyes as one of several movable facial features that is useful in social communication; in contrast, fusiform activity reflects processing of invariant facial features that are most useful for discerning personal identity. STS activation to eye and mouth movements [47] is consistent with this hypothesis, as is STS activation to passive viewing of averted and direct gaze [54] given that movable facial features have implied motion even when viewed as static pictures [37].

Another hypothesis about the STS, derived from neuronal recordings of STS activity in monkeys, emphasizes that this region processes cues about the direction of attention of others [44,45]. STS cells show varying activity to pictures of different head orientations and gaze directions but show maximum firing when head and gaze are oriented in the same direction. Perrett and colleagues interpreted these data as evidence that the cells respond to the direction of attention of the observed individual [44,45]. This idea has been especially influential because it relates to joint attention and the associated deficits in autism.

However, people automatically shift their own attention in response to the directional information in gaze [20], so it is difficult to separate perception of direction of attention of another from perception of the directional information inherent in that stimulus. Thus an alternative hypothesis is that the STS responds to any type of directional cue. This notion is supported by research showing activity in the STS and adjacent areas to directional attention cues that are not biological [34]. In addition, lesions in the posterior portion of the STS, e.g. the temporal parietal junction (TPJ), can compromise spatial attention skills [40]. Furthermore, during passive viewing of averted gaze [23], activity in the STS region correlates with activity in the intraparietal sulcus (IPS)—a brain area that has been consistently implicated in neural networks of spatial attention [14].

To obtain information about specific visual analyses taking place in STS, we designed an experiment to determine whether differential STS activity would be elicited by repetitive eye motion vs. eye motion providing relevant directional information vs. directional information from a nonfacial source. Similar STS responses to all types of eye motion would implicate a basic visual motion function, whereas preferential response to cues to direction of attention would implicate analyses more closely tied to the attentional relevance of the stimuli.

The amygdala is also centrally involved in gaze processing. Patients with bilateral amygdala damage experience difficulty identifying gaze direction [56]. Amygdala activation measured with positron emission tomography (PET) has been reported during passive viewing of both direct and averted gaze [54] and during active detection of eye contact (bilateral amygdala) and averted gaze (left amygdala) [33]. These results illustrate that the amygdala is involved in monitoring gaze and suggest that the right amygdala is instrumental in the perception of direct gaze. However, it is still unclear from these studies whether the amygdala is responding to the presence of direct gaze or to the process of monitoring for its appearance. Prior fMRI gaze studies were not able to contribute to these ideas because the region of the amygdala was not scanned [27,47].

We investigated brain activations associated with gaze processing in two experiments. Although emotional facial expression was not of primary interest, we used happy and angry expressions in different blocks in both experiments. This design feature allowed us to minimize between-subject variability in spontaneous judgments of facial expression that tends to occur with neutral faces [18], and also allowed us to investigate effects of emotional expression on gaze processing.

In the first experiment we simulated the use of gaze as a cue to direction of attention. We directly tested whether regions such as STS would exhibit differential activation to gaze cues indicating direction of attention compared to nongaze cues providing directional information or to eye motion not providing directional information. In the primary condition, subjects viewed a face while the eyes of the face shifted in a fashion that implied eye motion, as if the individual was looking sequentially at different spatial locations. This Gaze task required that subjects discriminate whether or not the eyes gazed at a particular target location. Whereas prior fMRI experiments typically included only left and right gaze, we included ten different gaze positions such that fine-grained discriminations were required, thus approximating a more demanding and ecologically valid perceptual analysis of gaze cues. In control tasks an arrow, isolated or superimposed on a face, provided directional information instead of the eyes, or the eyes moved without providing relevant directional information (see Fig. 1 for examples of stimuli).

Fig. 1.

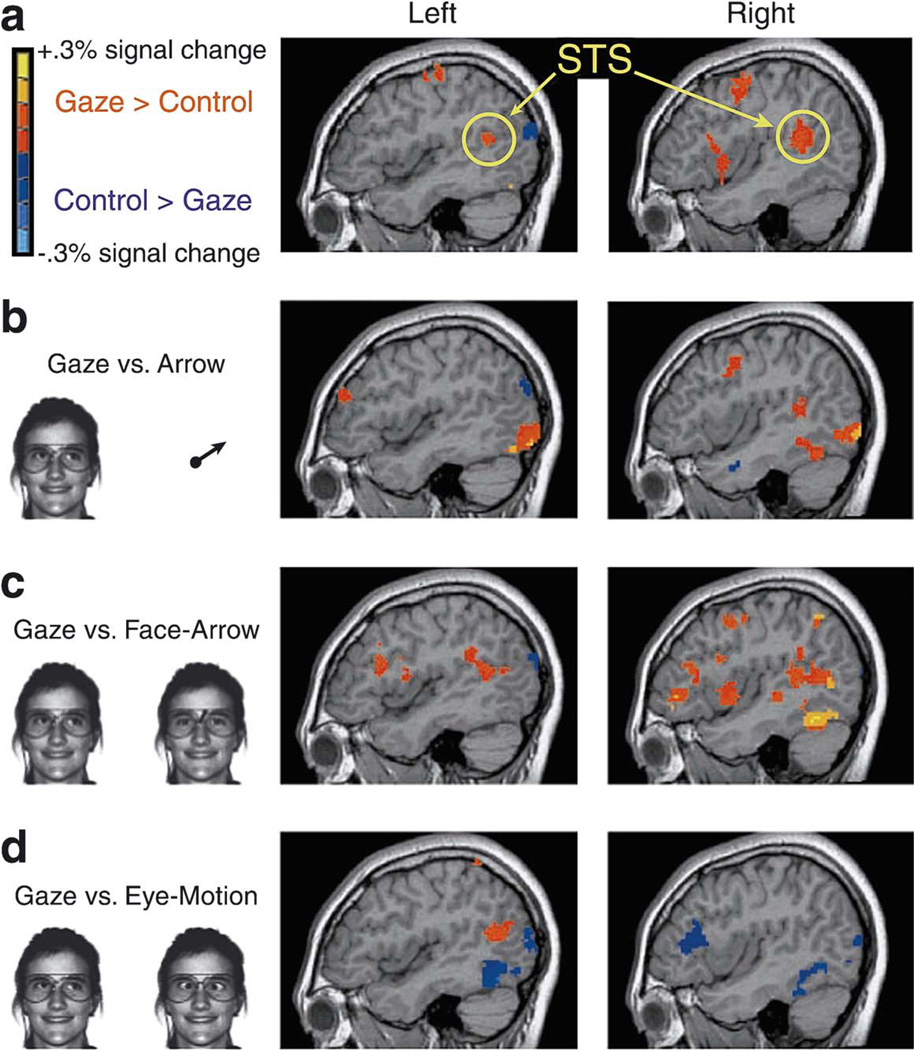

Average signal change across participants in Experiment 1, displayed on sagittal slices of a single subject’s structural MRI scan (x=±44). Only activations above threshold are shown, as indicated by the legend at the upper left. (a) Activations across all runs, collapsing across the different control conditions. (b) Activations for analyzing directional information from gaze versus directional information from an arrow (Gaze vs. Arrow). (c) Activations for analyzing directional information from gaze versus directional information from an arrow on a face (Gaze vs. Face-Arrow). (d) Activations for eye motion that provides directional information versus eye motion that does not (Gaze vs. Eye-Motion).

In a second experiment, we sought to determine whether amygdala activation was specifically associated with viewing direct gaze or with the act of monitoring gaze. Subjects identified direct gaze among direct and averted gaze trials, and trials were blocked so that direct gaze occurred on either 40% or 0% of the trials.

2. Materials and methods

2.1. Tasks

In the Gaze task, a face remained continuously on the screen and the eyes of the face looked to a certain spatial location for 300 ms and looked back to the viewer for 900 ms. The subject pressed one button when the eyes shifted to a target location and another button when the eyes shifted towards any nontarget location. All gaze cues were based on a clock template such that the target location assigned on each run corresponded to one quadrant of the clock (i.e. 1 o’clock and 2 o’clock for upper right quadrant, 4 o’clock and 5 o’clock for lower right quadrant, 7 o’clock and 8 o’clock for lower left quadrant, 10 o’clock and 11 o’clock for upper left quadrant). Eye positions for 3 o’clock and 9 o’clock always served as nontargets. Eye positions for 6 o’clock and 12 o’clock were not presented. Each eye position occurred equally often, but in a randomized order, such that target probability was 20%. In the Arrow task, a dot remained centrally on the screen and an arrow jutted out from the dot to indicate a spatial location on each trial. In the Face Arrow task, the arrow indicated spatial locations in the same manner but was superimposed in between the eyes of the face, which remained fixated forward. In the Eye Motion task, the eyes of the face moved inward toward the nose in a cross-eyed fashion on every trial. The target in this condition occurred when the eyes simultaneously changed to a slightly lighter shade of gray. The presentation rate (1 trial every 1200 ms) and the 1:4 ratio of targets to nontargets were the same in all tasks.
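The clock-template design of the Gaze task can be illustrated with a minimal sketch. It assumes 30 trials per block (as in the run structure of the experiments) and a simple shuffle for randomization; the names `POSITIONS`, `QUADRANTS`, and `make_block` are hypothetical and are not taken from the original stimulus software.

```python
import random

# Clock positions used as gaze cues; 6 and 12 o'clock were not presented.
POSITIONS = [1, 2, 3, 4, 5, 7, 8, 9, 10, 11]

# Two target positions per quadrant; 3 and 9 o'clock are always nontargets.
QUADRANTS = {
    "upper_right": (1, 2),
    "lower_right": (4, 5),
    "lower_left": (7, 8),
    "upper_left": (10, 11),
}


def make_block(target_quadrant, n_trials=30, seed=None):
    """Return a shuffled list of (clock_position, is_target) trials.

    Every position occurs equally often, so 2 of 10 positions being
    targets gives a 20% target probability (1:4 targets to nontargets).
    """
    if n_trials % len(POSITIONS) != 0:
        raise ValueError("n_trials must be a multiple of the number of positions")
    rng = random.Random(seed)
    targets = QUADRANTS[target_quadrant]
    positions = POSITIONS * (n_trials // len(POSITIONS))
    rng.shuffle(positions)  # assumed randomization scheme
    return [(p, p in targets) for p in positions]


block = make_block("upper_right", seed=0)
target_rate = sum(is_target for _, is_target in block) / len(block)
block_duration_s = len(block) * 1.2  # one trial every 1200 ms
```

With these assumptions, `target_rate` comes out at 0.2 and a 30-trial block lasts about 36 s, which matches the block length reported for both experiments.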

In Experiment 2, subjects were instructed to detect when the eyes of the stimulus face were looking directly at them. The stimuli in this task thus closely resembled those in the Gaze task, except that direct gaze was not used between gaze cues. After the eyes looked at a spatial location for 300 ms, the eyes closed for 900 ms. Also, trials with direct gaze were included along with the 10 averted eye positions corresponding to the clock face. Trials were blocked so that direct gaze occurred on either 0% or 40% of the trials.

The face was displayed with a static happy expression in half of the blocks and with a static angry expression in the other half of the blocks. The intensity of the two emotional expressions was roughly equivalent, based on ratings made by each subject using a 5-point scale. The emotional expression remained constant throughout each block of trials. The different eye positions of each trial were created by ‘cutting and pasting’ the eyes from another picture into the stimulus face. The glasses on the model ensured that the eyes were always placed in the same location.

Subjects practiced each task before entering the scanner. Practice stimuli for the Gaze task, Arrow task, and Face Arrow task were shown surrounded by a clock outline in order to define the spatial location of the targets. The clock numbers shown on the practice stimuli were not present in the main experiments, when subjects performed the tasks in the scanner. Subjects were instructed to keep their eyes fixated during the experiment.

In Experiment 1, Gaze was paired with each control condition in a different run (Gaze and Arrow; Gaze and Face Arrow; Gaze and Eye Motion), and there were two runs of each task pair. Experiment 2 consisted of two runs with alternating blocks of 0% and 40% direct gaze. For both experiments, each run consisted of eight alternating blocks of trials, and each block consisted of 30 trials and lasted 36 s. Run and task order were counterbalanced across subjects within each experiment. Experiment 1 preceded Experiment 2 for all subjects. The opposite order would have likely given the direct gaze cue undue relevance in Experiment 1, because direct gaze was meant to be a ‘neutral’ stimulus in Experiment 1, but was a ‘salient’ stimulus in Experiment 2.

The target detection tasks were designed to ensure that subjects would keep attention focused on relevant aspects of the stimuli. Behavioral results indicated that subjects attended to the stimuli in all conditions [mean percent accuracy (min–max): Gaze=64% (40–85), Face Arrow=78% (50–96), Arrow=83% (53–95), Eye Motion=64% (35–92), Direct Gaze=66% (22–93)]. Debriefing suggested that most of the errors were not due to failures to accurately discriminate target and nontarget events. Rather, many responses were made too late. In particular, the high proportion of nontargets encouraged a repetitive motor response that became habitual, such that extra time was needed to disengage from this habitual response. Many correct responses did not occur prior to the beginning of the next trial. Unfortunately, late responses were not registered and so those trials were scored as incorrect. Most importantly for present purposes, however, participants’ reports suggest that their attention was actively engaged in the tasks even if they were making errors, and that they were differentially attending to different stimulus dimensions according to the task requirements.

2.2. Imaging

We scanned 10 healthy, right-handed volunteers (six females and four males with a mean age of 24 years, S.D.=3) on a Siemens 1.5 Tesla Vision Scanner. The institutional review board of Northwestern University approved the protocol. Each subject gave informed consent and received monetary compensation for their participation. A vacuum bag assisted in keeping the subject’s head stationary throughout the scan. Subjects registered their task responses using a button box held in their right hand.

Images were projected onto a custom-designed, non-magnetic rear projection screen. Subjects viewed the screen, located approximately 54 in. away, via a mirror placed above their eyes. Functional T2* weighted images were acquired using echo-planar imaging with a 3000 ms TR, 40 ms TE, 90° flip angle, 240 mm FOV, a 64×64 pixel matrix for 24 contiguous 6-mm thick axial slices with a resulting voxel size of 3.75×3.75×6 mm. Each run lasted 303 s including 9 s of initial fixation (with a static stimulus face on the screen), eight alternating 36-s task blocks and 6 s of an ending fixation point. In all functional runs, the MR signal was allowed to achieve equilibrium over four initial scans that were excluded from the analysis. Thus data from each scanning run consisted of 97 images.
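The timing and geometry figures in this paragraph can be cross-checked with a few lines of arithmetic. The sketch below only restates the reported acquisition parameters; the variable names are illustrative.

```python
# Reported acquisition parameters
TR_S = 3                      # repetition time in seconds
FOV_MM = 240                  # field of view
MATRIX = 64                   # in-plane matrix size
SLICE_MM = 6                  # slice thickness

# Run structure: initial fixation, eight task blocks, ending fixation
fixation_start_s, n_blocks, block_s, fixation_end_s = 9, 8, 36, 6

run_s = fixation_start_s + n_blocks * block_s + fixation_end_s  # 303 s
n_volumes = run_s // TR_S                                       # 101 scans
n_analyzed = n_volumes - 4                                      # 97 images after discarding 4

in_plane_mm = FOV_MM / MATRIX                                   # 3.75 mm
voxel_volume_mm3 = in_plane_mm * in_plane_mm * SLICE_MM         # 84.375 mm^3
```

The run length (303 s), the 97 analyzed images, and the 3.75×3.75×6 mm voxel size all follow directly from the stated parameters.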

Anatomic images were acquired using a T1-weighted 3D FLASH sequence with a 22 ms TR, 5.6 ms TE, 25° flip angle, 240 mm FOV, and a 256×256 pixel matrix, with 1-mm thick axial slices.

2.3. Data analysis

MRI data were analyzed using AFNI software [15]. Images were co-registered through time using a three-dimensional registration algorithm. Within each run, voxels containing a signal change of greater than 10% in one repetition (3 s) were assumed to be contaminated by motion or other artifact and those voxels were eliminated from further analysis. Linear drift over the time course of each run was removed. Each slice was spatially smoothed using a Gaussian filter (full-width half-maximum (FWHM)=7.5 mm). Data from the 10 subjects were normalized using the Montreal Neurological Institute Autoreg program [13]. Functional runs were visually checked for artifact and motion contamination. The last run for two subjects was dropped from analyses due to extensive signal loss from motion contamination, such that only one run from each of these subjects was entered in the analysis for Experiment 2.

Brain areas exhibiting task-specific activity were identified by correlating the observed time course of each voxel against an idealized reference function derived from the alternating task blocks and adjusted to reflect the lag between neural activity and hemodynamic response. The signal change for each subject in each run was averaged in a random effects analysis to identify areas of significant activation across subjects for each task contrast. This group analysis was then thresholded to identify voxels that reached the minimum statistical requirement of t(9)=3.25, P<0.01 (uncorrected) occurring within a cluster of adjacent voxels at least 650 mm³ in volume (>41 voxels in the normalized data or approximately 8 voxels in the original anatomical space). All task comparisons and stated activations used this statistical threshold.
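The reference-function approach can be sketched as follows. This is not the AFNI implementation: the ±1 boxcar shape and the 6 s hemodynamic lag (`LAG_S`) are assumptions made for illustration, since the text does not state either, and the voxel time course is synthetic.

```python
import math

TR_S = 3.0
N_DISCARDED = 4     # initial scans excluded for signal equilibrium
N_VOLUMES = 97      # analyzed images per run
FIXATION_S = 9.0    # initial fixation before the first task block
BLOCK_S = 36.0
N_BLOCKS = 8
LAG_S = 6.0         # ASSUMED hemodynamic lag; not given in the text


def reference_function(lag_s=LAG_S):
    """Lag-shifted boxcar: +1 for blocks 1, 3, 5, 7; -1 for blocks 2, 4, 6, 8; 0 elsewhere."""
    ref = []
    for i in range(N_VOLUMES):
        # Time of this image relative to the lag-shifted onset of the first block
        t = (i + N_DISCARDED) * TR_S - FIXATION_S - lag_s
        block = int(t // BLOCK_S) if t >= 0 else -1
        if block < 0 or block >= N_BLOCKS:
            ref.append(0.0)
        else:
            ref.append(1.0 if block % 2 == 0 else -1.0)
    return ref


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


ref = reference_function()
# Synthetic voxel that follows the task perfectly (illustration only)
voxel = [100.0 + 2.0 * r for r in ref]
r_task = pearson_r(ref, voxel)

# Cluster-size threshold expressed in original-resolution voxels
min_cluster_voxels = math.ceil(650 / (3.75 * 3.75 * 6))
```

In the original acquisition space, a 650 mm³ cluster corresponds to about 7.7 voxels of 3.75×3.75×6 mm, which rounds up to the "approximately 8 voxels" quoted above.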

3. Results

3.1. Experiment 1

A comparison between the Gaze task and all three control conditions combined gave an overview of neural regions responsive to gaze cues that indicate the direction of another person’s attention. This combined contrast controls for visual processing of faces per se, for non-meaningful eye motion, and for generic cognitive demands of extracting directional information from a stimulus. The neural network for gaze processing isolated by this analysis included three key regions: the posterior portion of the STS bilaterally, corresponding to Brodmann’s areas 22 and 39 (BA 22,39), the same area previously identified in gaze studies [27,47]; a right prefrontal region centered in the frontal eye fields (BA 8,9); and a ventral prefrontal region centered in the inferior frontal gyrus (BA 44,45). These activations are shown in Fig. 1 and listed in Table 1. However, it is essential to also evaluate contrasts with each control condition individually.

Table 1.

Significant activations for Gaze vs. combined control conditions, using all runs in Experiment 1

| Contrast | Brain regions | Brodmann’s Area | Talairach x (mm) | Talairach y (mm) | Talairach z (mm) | Volume (mm³) |
|---|---|---|---|---|---|---|
| Gaze>Control | Frontal | | | | | |
| | Left Precentral Gyrus | 4 | −47 | −18 | 60 | 1297 |
| | Right Precentral Sulcus/Precentral Gyrus | 4, 6 | 46 | −1 | 52 | 3734 |
| | Bilateral Superior Frontal Gyrus | 8 | 4 | 23 | 44 | 2172 |
| | Right Inferior Frontal Gyrus | 44, 45 | 37 | 18 | 4 | 4016 |
| | Temporal | | | | | |
| | Bilateral Superior Temporal Sulcus | 22, 39 | | | | |
| | Right | | 50 | −45 | 16 | 6469 |
| | Left | | −49 | −61 | 16 | 1188 |
| | Parietal | | | | | |
| | Left Middle Cingulate | 24 | −19 | 19 | 25 | 703 |
| | Occipital | | | | | |
| | Bilateral Occipital Cortex | 17, 18 | | | | |
| | Right | | 21 | −97 | 3 | 7906 |
| | Left | | −21 | −94 | −6 | 12016 |
| Control>Gaze | Frontal | | | | | |
| | Left Superior Frontal Gyrus | 9 | −15 | 55 | 30 | 906 |
| | Temporal/Occipital | | | | | |
| | Bilateral Occipital Temporal Gyrus | 36 | | | | |
| | Right | | 28 | −43 | −17 | 1219 |
| | Left | | −30 | −48 | −17 | 922 |
| | Parietal | | | | | |
| | Bilateral Inferior Parietal Gyrus | 19 | | | | |
| | Right | | 32 | −85 | 22 | 984 |
| | Left | | −43 | −87 | 20 | 1062 |

When the Gaze condition was compared separately to each control condition, STS activation was also evident (Fig. 1 and Table 2). Relative to the control condition involving analysis of direction from an arrow (Gaze–Arrow), STS was more active on the right. Relative to the control condition in which the arrow was superimposed on the face (Gaze–Face Arrow), STS was more active bilaterally. Relative to the control condition involving eye motion that did not provide directional information (Gaze–Eye Motion), STS was more active on the left. Right fusiform gyrus and multiple areas of bilateral prefrontal cortex (PFC) were activated in the Gaze task compared to both arrow tasks. However, this pattern was reversed for the Eye Motion task such that both the fusiform gyrus and the dorsolateral prefrontal cortex (primarily BA 46) were more active in the Eye Motion task than in the Gaze task.

Table 2.

Significant activations for Gaze vs. each control condition separately in Experiment 1

| Contrast | Brain regions | Brodmann’s Area | Talairach x (mm) | Talairach y (mm) | Talairach z (mm) | Volume (mm³) |
|---|---|---|---|---|---|---|
| Gaze>Arrow | Frontal | | | | | |
| | Right Precentral Gyrus | 6 | 49 | 3 | 44 | 2156 |
| | Left Middle Frontal Gyrus | 10 | −41 | 50 | 19 | 1219 |
| | Right Inferior Frontal Gyrus | 46 | 44 | 20 | 16 | 578 (a) |
| | Temporal | | | | | |
| | Right Fusiform Gyrus | 20, 37 | 46 | −55 | −19 | 1188 |
| | Right Superior Temporal Sulcus | 22, 39 | 45 | −44 | 11 | 766 |
| | Occipital | | | | | |
| | Bilateral Occipital Cortex | 17, 18, 19 | | | | |
| | Right | | 25 | −94 | 1 | 20312 |
| | Left | | −23 | −94 | −5 | 26156 |
| Arrow>Gaze | Frontal | | | | | |
| | Left Superior Frontal Gyrus (Anterior) | 9 | −11 | 51 | 35 | 2984 |
| | Bilateral Superior Frontal Gyrus | 8 | 0 | 65 | 14 | 1234 |
| | Temporal | | | | | |
| | Right Middle Temporal Gyrus (Anterior) | 21 | 58 | −12 | −20 | 3547 |
| | Parietal | | | | | |
| | Left Inferior Parietal Gyrus | 39, 19 | −46 | −83 | 27 | 984 |
| | Occipital | | | | | |
| | Bilateral Precuneus | 30, 7, 19 | −3 | −71 | 10 | 19984 |
| | Left Cerebellum | | −34 | −85 | −37 | 656 |
| Gaze>Face Arrow | Frontal | | | | | |
| | Right Precentral Gyrus | 4, 6 | 49 | 4 | 46 | 2766 |
| | Bilateral Superior Frontal Gyrus | 6, 8 | 1 | 21 | 46 | 1000 |
| | Right Middle Frontal Gyrus | 46 | 48 | 33 | 17 | 2062 |
| | Left Middle Frontal Sulcus | 46 | −53 | 16 | 15 | 6547 |
| | Right Inferior Frontal Gyrus | | | | | |
| | Operculum | 44, 45 | 49 | 11 | 0 | 2766 |
| | Triangular | 10 | 45 | 43 | −5 | 2453 |
| | Anterior portion | 45, 46 | 30 | 21 | −3 | 1188 |
| | Right Inferior Frontal Sulcus | 44, 46 | 42 | 13 | 23 | 969 |
| | Temporal | | | | | |
| | Bilateral Superior Temporal Sulcus | 22 | | | | |
| | Right | | 54 | −51 | 11 | 16422 |
| | Left | | −53 | −55 | 15 | 5203 |
| | Right Middle Temporal Gyrus | 22 | 50 | −25 | −4 | 969 |
| | Right Fusiform Gyrus | 20, 37 | 45 | −57 | −20 | 2984 |
| | Parietal | | | | | |
| | Right Inferior Parietal Lobule | 7 | 47 | −57 | 50 | 750 |
| Face Arrow>Gaze | Parietal | | | | | |
| | Left Intraparietal Sulcus | 7 | −22 | −68 | 53 | 3141 |
| | Occipital | | | | | |
| | Bilateral Lateral Occipital Cortex | 39, 19 | | | | |
| | Right | | 36 | −89 | 18 | 1078 |
| | Left | | −38 | −91 | 19 | 1312 |
| Gaze>Eye Motion | Frontal | | | | | |
| | Right Precentral Gyrus | 6 | 22 | −8 | 51 | 828 |
| | Left Post Central Sulcus | 7 | −37 | −52 | 66 | 781 |
| | Left Inferior Frontal Sulcus | 44 | −34 | −10 | 28 | 703 |
| | Temporal | | | | | |
| | Left Superior Temporal Sulcus | 39 | −47 | −67 | 17 | 4875 |
| | Left Parahippocampal Gyrus | 28 | −13 | −33 | −17 | 1625 |
| | Right Parahippocampal Region | 28 | 19 | −22 | −22 | 1156 |
| | Parietal | | | | | |
| | Left Posterior Cingulate | 23 | −24 | −47 | 17 | 969 |
| | Occipital | | | | | |
| | Right Mid/Superior Occipital Cortex | 7 | 11 | −70 | 54 | 1766 |
| Eye Motion>Gaze | Frontal | | | | | |
| | Bilateral Middle Frontal Gyrus | 46 | | | | |
| | Right | | 47 | 32 | 11 | 3953 |
| | Left | | −47 | 29 | 9 | 531 (a) |
| | Temporal/Occipital | | | | | |
| | Bilateral Occipital Cortex–Fusiform Gyrus | 17, 20, 37 | | | | |
| | Right | | 36 | −73 | −2 | 8281 |
| | Left | | −38 | −77 | −4 | 12125 |

(a) This cluster is below volume threshold and is listed because of theoretical importance.

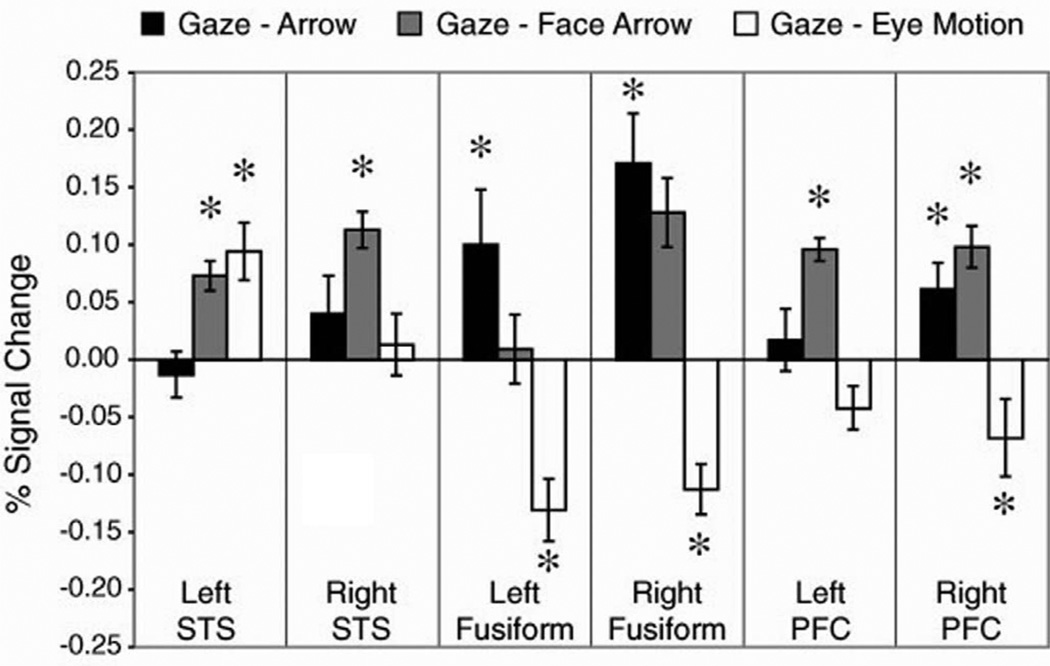

To further quantify STS, fusiform gyrus, and PFC activations, we performed a region-of-interest (ROI) analysis for these three regions. ROIs were defined by creating a union of all three contrasts. This procedure identified brain regions activated by either the Gaze task or the control condition in each contrast. The average signal change across participants was computed bilaterally for STS, fusiform gyrus, and PFC (BA46 and posterior BA10, extending in the left hemisphere to superior BA44, 45). Fig. 2 shows the amount of signal change for each region in each contrast. This single analysis confirms the pattern of activity seen in the whole-brain contrasts. A repeated-measures ANOVA showed a significant Region by Task interaction [F(4,36)=22, P<0.001], substantiating the different patterns. To further elucidate this interaction, we performed one-group t-tests for each task contrast in each region of interest. These effects all indicated greater responses in the Gaze condition than in the control condition, with the exception of the contrast with the Eye Motion condition, where the fusiform and PFC responses were larger in the Eye Motion condition. Specifically, significant activations for the Gaze task were shown in bilateral fusiform and right PFC for the Gaze–Arrow contrast; in bilateral STS, right fusiform, and bilateral PFC for the Gaze–Face Arrow contrast; and in left STS for the Gaze–Eye Motion contrast. There was significant activation for the Eye Motion condition in the Gaze–Eye Motion contrast in the bilateral fusiform and right PFC (see Fig. 2 for response magnitude).

Fig. 2.

Percent signal change in functionally defined regions of interest for each contrast in Experiment 1. Activations greater than zero at P<0.05 are denoted by *.

In addition, we examined the relationship of the hemodynamic response patterns in the six regions of interest. For this analysis, we correlated the time series for each pair of regions of interest within each subject, and then tested the strength of these correlations across all subjects. All regions of interest (STS, fusiform gyrus [FFA], and PFC bilaterally) were significantly correlated (t(9)>2.82, P<0.05), with the exception of the left FFA and the right PFC.
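One common way to carry out this kind of two-stage analysis is sketched below: correlate two ROI time series within each subject, then test the resulting coefficients against zero across subjects. The Fisher z-transform step is an assumption, since the text does not say how the correlations were combined, and the time series are synthetic placeholders rather than data from the study. The function names are hypothetical.

```python
import math
import statistics


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def group_correlation_t(series_a, series_b):
    """One-sample t-test (against zero) on per-subject ROI correlations.

    series_a and series_b each hold one time series per subject.
    Correlations are Fisher z-transformed first (ASSUMED step).
    Returns (t, degrees_of_freedom).
    """
    z = [math.atanh(pearson_r(a, b)) for a, b in zip(series_a, series_b)]
    n = len(z)
    t = statistics.mean(z) / (statistics.stdev(z) / math.sqrt(n))
    return t, n - 1


# Synthetic example: 10 subjects, 97 images each (illustration only)
N_SUBJECTS, N_IMAGES = 10, 97
roi_a = [[math.sin(0.2 * i + s) for i in range(N_IMAGES)] for s in range(N_SUBJECTS)]
roi_b = [
    [a + 0.5 * math.cos(0.7 * (s + 1) * i) for i, a in enumerate(series)]
    for s, series in enumerate(roi_a)
]

t_value, df = group_correlation_t(roi_a, roi_b)
```

With 10 subjects the test has 9 degrees of freedom, so `t_value` would be compared against the t(9) criterion quoted above.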

Whole brain results were also analyzed as a function of whether a happy or angry face appeared in the Gaze task. Angry faces produced more activation than happy faces in gaze processing regions, including the STS and prefrontal cortex, primarily in the right hemisphere (Table 3). The effect of expression was strongest in the Gaze task, but angry faces also elicited more activity in the control conditions (Face Arrow and Eye Motion).

Table 3.

Significant activations between happy and angry faces

| Condition and contrast | Brain regions | Brodmann’s Area | Talairach x (mm) | Talairach y (mm) | Talairach z (mm) | Volume (mm³) |
|---|---|---|---|---|---|---|
| GAZE: Angry>Happy | Frontal | | | | | |
| | Bilateral Middle Frontal Gyrus | | | | | |
| | Right Anterior Portion | 46 | 48 | 27 | 21 | 3266 |
| | Right Middle Portion | 8, 9 | 31 | 26 | 45 | 3172 |
| | Left Anterior Portion | 8, 9 | −47 | 15 | 38 | 1266 |
| | Left Middle Portion | 9 | −49 | 23 | 24 | 688 |
| | Left Middle Frontal Sulcus | | | | | |
| | Anterior Portion | 10, 46 | −47 | 39 | 2 | 5578 |
| | Middle/Inferior Portion | 44 | −40 | 5 | 26 | 1984 |
| | Temporal | | | | | |
| | Bilateral Superior Temporal Sulcus | 22, 39 | | | | 35641 (a) |
| | Right | | 42 | −57 | 26 | |
| | Left | | −35 | −67 | 31 | |
| | Bilateral Middle Temporal Sulcus | 21 | | | | |
| | Right | | 54 | −52 | −5 | 1578 |
| | Left | | −60 | −54 | −5 | 703 |
| | Occipital | | | | | |
| | Bilateral Precuneus | 7 | 1 | −67 | 42 | 35641 |
| | Bilateral Precuneus (Inferior) | 30 | 9 | −60 | 16 | 1969 |
| FACE ARROW: Angry>Happy | Frontal | | | | | |
| | Left Post Central Gyrus and Sulcus | 4, 6 | −34 | −43 | 60 | 859 |
| | Temporal | | | | | |
| | Left Posterior Lateral Sulcus | 40 | −38 | −36 | 16 | 719 |
| | Occipital | | | | | |
| | Right Occipital Lobe, Cuneus | 18, 19 | 15 | −90 | 32 | 812 |
| FACE ARROW: Happy>Angry | Right Medial Cerebellum | | 11 | −55 | −37 | 859 |
| EYE MOTION: Angry>Happy | Frontal | | | | | |
| | Right Middle Frontal Sulcus | 44, 45, 46 | 34 | 40 | 8 | 4047 |
| | Right Middle Frontal Gyrus | 46, 9 | 45 | 32 | 27 | 969 |
| | Left Middle Frontal Sulcus and Gyrus | 10 | −44 | 51 | 2 | 1375 |
| DIRECT GAZE: Angry>Happy | Frontal | | | | | |
| | Right Middle Cingulate | 23, 24 | 5 | −14 | 24 | 2562 |
| DIRECT GAZE: Happy>Angry | Frontal | | | | | |
| | Right Anterior Cingulate | 12, 32 | 6 | 47 | −7 | 3188 |

(a) This cluster extended from the STS up through the IPS bilaterally.

3.2. Experiment 2

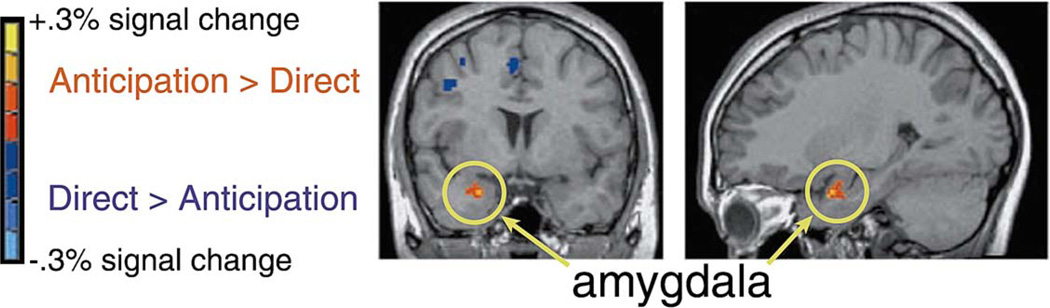

In the comparison between blocks in which the direct gaze target occurred on 40% vs. 0% of the trials, the right amygdala was more active during the 0% condition, when the unfulfilled anticipation of direct gaze was most prominent (Fig. 3). Additional activation in the left temporal lobe was lateral to the amygdala, centered in the inferior temporal gyrus. Significant activations for direct gaze blocks were concentrated in frontal cortex (Table 4).

Fig. 3.

Average signal change across participants for Experiment 2, showing the contrast between 0% and 40% Direct Gaze conditions, displayed on a coronal (y=4) and a sagittal (x=26) slice from a single subject’s structural MRI scan.

Table 4.

Significant activations in Experiment 2

| Contrast | Brain region | Brodmann’s area | Talairach x (mm) | Talairach y (mm) | Talairach z (mm) | Volume (mm³) |
|---|---|---|---|---|---|---|
| Anticipation>Direct | Frontal | | | | | |
| | Left Superior Frontal Gyrus | 9 | −19 | 43 | 49 | 1891 |
| | Temporal | | | | | |
| | Right Amygdala | | 28 | 3 | −27 | 672 |
| | Left Inferior Temporal Gyrus | 20, 21 | −48 | −7 | −31 | 672 |
| Direct>Anticipation | Frontal | | | | | |
| | Right Precentral Sulcus | 4, 6 | 39 | −4 | 52 | 2344 |
| | Left Post Central Gyrus | 2, 3, 5 | −50 | −33 | 55 | 1828 |
| | Bilateral Superior Frontal Gyrus | 6 | 1 | 8 | 49 | 1391 |
| | Parietal | | | | | |
| | Right Intraparietal Sulcus | 7 | 36 | −60 | 46 | 734 |
| | Left Cerebellum | | −40 | −53 | −37 | 953 |
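As a reading aid for Table 4, the following minimal Python sketch transcribes the table rows and checks them against the usual Talairach convention, in which negative x values lie in the left hemisphere. It is an illustration only and not part of the original analysis. The `Cluster` class, the `hemisphere` and `total_volume` helpers, and the 5 mm midline tolerance are all hypothetical names and values introduced here. The amygdala and cerebellum rows are assumed to have no Brodmann's area entry, so their four numbers are read as x, y, z, and volume.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cluster:
    """One activation cluster as listed in Table 4 (Brodmann's area omitted)."""
    contrast: str
    region: str
    x: int  # Talairach x (mm); negative values are left of the midline
    y: int  # Talairach y (mm)
    z: int  # Talairach z (mm)
    volume_mm3: int


# Rows transcribed from Table 4.
TABLE_4 = [
    Cluster("Anticipation>Direct", "Left Superior Frontal Gyrus", -19, 43, 49, 1891),
    Cluster("Anticipation>Direct", "Right Amygdala", 28, 3, -27, 672),
    Cluster("Anticipation>Direct", "Left Inferior Temporal Gyrus", -48, -7, -31, 672),
    Cluster("Direct>Anticipation", "Right Precentral Sulcus", 39, -4, 52, 2344),
    Cluster("Direct>Anticipation", "Left Post Central Gyrus", -50, -33, 55, 1828),
    Cluster("Direct>Anticipation", "Bilateral Superior Frontal Gyrus", 1, 8, 49, 1391),
    Cluster("Direct>Anticipation", "Right Intraparietal Sulcus", 36, -60, 46, 734),
    Cluster("Direct>Anticipation", "Left Cerebellum", -40, -53, -37, 953),
]


def hemisphere(x_mm: int, midline_tolerance_mm: int = 5) -> str:
    """Classify an x coordinate; the tolerance is an illustrative assumption."""
    if abs(x_mm) <= midline_tolerance_mm:
        return "Midline"
    return "Left" if x_mm < 0 else "Right"


def total_volume(contrast: str) -> int:
    """Sum the listed cluster volumes (mm^3) for one contrast."""
    return sum(c.volume_mm3 for c in TABLE_4 if c.contrast == contrast)


if __name__ == "__main__":
    for c in TABLE_4:
        print(f"{c.region:34s} x={c.x:4d} mm -> {hemisphere(c.x)}")
    for name in ("Anticipation>Direct", "Direct>Anticipation"):
        print(f"{name}: {total_volume(name)} mm^3 in listed clusters")
```

Every region labeled Left or Right in the table falls on the corresponding side of x = 0, and the bilateral superior frontal cluster sits within 1 mm of the midline. The summed volumes are descriptive only, since they depend on the cluster threshold used in the original analysis.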

4. Discussion

Patterns of activation revealed with fMRI in these two experiments showed that distinct contributions arise from brain networks in four regions thought to be involved in gaze processing. Face and gaze perception is accomplished via contributions from the STS, amygdala, fusiform gyrus, and PFC. Several insights into how gaze information is extracted can be gained by considering the distinct ways in which facial input is analyzed in these regions.

4.1. Gaze as a directional cue

4.1.1. STS

One key portion of the distributed networks for gaze processing is centered in the posterior STS region of the temporal lobe. The STS was more active when subjects analyzed direction from gaze than when they analyzed comparable directional information from an arrow, even when that arrow was superimposed on a face with the eyes clearly visible. Furthermore, the STS was more active in response to eye motion that indicated direction of attention than to eye motion that did not. The most likely functional role for STS activity is therefore in the analysis of meaningful eye motion that can indicate information of social importance, such as the intentions of another person or their direction of attention.

Our results address three hypotheses concerning the function of the STS, namely, that the STS is specifically responsive to moving or movable facial features [26], to the direction of attention of another person [44,45], or to any type of directional cue. Our findings support the hypothesis that STS responds to gaze cues that indicate the direction of attention of another individual. The finding that eye motion activated STS more when it provided directional information than when it did not implies that this region is sensitive not simply to motion cues but that it preferentially responds to certain types of eye motion. Eye motion that provided task-relevant information about spatial location was more meaningful. Subjects attended to moving eyes in both the Gaze and Eye Motion tasks. Therefore, STS activity cannot be described simply as a response to this movable facial feature. A satisfactory explanation for increased STS activity in the Gaze task relative to the Eye Motion task must go beyond hypotheses emphasizing either movable facial features or generic biological motion.

The hypothesis that STS is central to the analysis of gaze indicators of direction of attention does not rule out a broader role in analyzing various biological signals important for human social communication [9]. STS activity has been observed in response to several types of human movements, including hand and whole-body movements [8,24,25,48]. STS activity in these contexts may reflect the analysis of biological cues that provide meaningful social signals. In other words, STS may analyze meaningful biological motion, where ‘meaning’ depends on the social situation within which the movement occurs. This idea is supported by several studies comparing meaningful and non-meaningful human movements. When videos of American Sign Language were viewed, the STS was more active for viewers who understood the signals than for those who did not [42]. STS activation has also been reported in response to possible vs. impossible human movements [50] as well as meaningful vs. non-meaningful hand motions [17].

The extant data thus indicate that STS processing is concerned with more than just perceptual aspects of moving or movable body parts. Rather, networks in this brain region may analyze gaze and other movements to the extent that these cues meaningfully contribute to social communication. Our findings suggest that achieving joint attention, a pivotal skill in social cognition, is facilitated by the analysis of sensory cues in the STS. Furthermore, given that gaze provides a highly informative window into mental state [7,51], the STS could be part of a larger neural network mediating theory of mind [10].

4.1.2. Fusiform gyrus and dorsolateral PFC

Our findings demonstrated a different functional role for the fusiform gyrus. The pattern of fusiform activations across the three contrasts illustrates that this region, while engaged in face and gaze processing, is not specifically responsive to directional gaze information. Both the fusiform gyrus and the dorsolateral portion of the PFC (BA 46) were more active in the Eye Motion task than in the Gaze task (Fig. 1d). Given that both tasks required attention to the eyes, fusiform activity cannot simply be explained as a response to invariant aspects of a face. There are several reasons why the Eye Motion task may have provoked such strong fusiform activity.

One possibility is that fusiform and dorsolateral PFC are engaged in analyzing perceptual properties of faces. In the Eye Motion task, subjects attended to moving eyes while vigilant for a gray-scale color change of the pupil. Perceptual processing of these facial features could have provoked fusiform activity. Previous fMRI studies indicate that color perception provokes activity in inferior temporal regions that are adjacent to and partially overlapping with face sensitive areas [12]. Because subjects here were attending to color within the face, inferior temporal regions sensitive to both faces and color may have been active. The dorsolateral PFC has been shown to modulate activity in visual cortex [6], so the dorsolateral PFC activity in this task could reflect the monitoring and identification of perceptual changes in the face stimuli.

Another possibility is that fusiform and dorsolateral PFC responses may reflect stimulus novelty. Given that people seldom view eyes moving repetitively in a cross-eyed fashion, the novelty of this perceptual stimulus may have increased activity in the fusiform region and visual cortex generally. The PFC region activated in this task (BA 46) is likewise active in response to novel stimuli [36]. However, a novelty hypothesis cannot explain the prominent activity of both the fusiform gyrus and this PFC region for Gaze as compared to the Arrow and Face Arrow conditions.

The finding that the fusiform gyrus was more active when subjects were analyzing direction from gaze than when analyzing direction from an arrow, by itself or superimposed on a face, is consonant with other findings that the fusiform face-responsive area is more active during selective attention to faces than during selective attention to objects [26,31,32,46]. Our study adds to these data by showing that selective attention to a specific facial feature can also produce differential fusiform activation. Clearly, the fusiform response to a face is not uniform and automatic but rather is sensitive to attention [55]. The present data also support prior suggestions that this fusiform area is relatively less important for gaze perception than for facial perception in general.

4.1.3. Medial and ventral PFC

Medial and lateral portions of the superior frontal gyrus (BA 8,9), precentral gyrus (BA 4,6) and inferior frontal gyrus (BA 44, 45) were active in response to meaningful gaze cues while controlling for eye motion and nonbiological directional information (Table 1; Fig. 1a). These areas may facilitate understanding another person’s mental state. The superior frontal gyrus (BA 8,9) has been consistently activated in a variety of theory of mind tasks [22,49]. In addition, the precentral gyrus and inferior frontal gyrus have been identified as ‘mirror system’ areas [29], that is, brain regions that are active during both the observation and the execution of an action. This neural ‘mirroring’ may facilitate our understanding of another person’s behavior by simulating the observed action [48]. Because we did not specifically manipulate theory of mind processes in this experiment, it is unclear whether these regions are engaged in analyzing mental state. However, future studies aimed specifically at identifying mental state from gaze cues may be helpful in further identifying the contribution of medial and ventral PFC to gaze processing.

4.2. Emotion effects

Angry faces in the Gaze task elicited more activity in the STS region than did happy faces, and this activation extended from the STS dorsally through the intraparietal sulcus (IPS). Although this finding was not predicted, this influence of facial expression on STS activity adds support to our interpretation that the STS is responsive to gaze cues that are meaningful to social interaction. Gaze that provides information about the direction of attention of an angry person may, perhaps automatically, be more meaningful than that same information from a happy person [21,30]. Understanding where an angry person is attending and what they might be angry about has immediate relevance to one’s own ability to successfully navigate a potentially threatening or aversive environment. In support of this idea, the IPS activity may reflect recruitment of neural networks supporting spatial attention.

4.3. Direct gaze

The aim of Experiment 2 was to disentangle monitoring for the appearance of direct gaze from actual observation of direct gaze. During the direct gaze detection task, the amygdala was more active during blocks when direct gaze never occurred than it was during blocks when it occurred on 40% of the trials. These results are consistent with the idea that the amygdala is involved in monitoring gaze [33]. Moreover, amygdala activity may be heightened when a person is particularly vigilant for direct gaze.

Specifically, the intriguing pattern of amygdala activity revealed in the present design suggests that the right amygdala responds less to the experience of direct gaze per se than to circumstances in which one expects such social contact to happen momentarily. Sensory processing of gaze information is thus not the key factor eliciting amygdala activity. This view of the amygdala as important for sensory monitoring provides a reinterpretation of previous data showing amygdala activity to various kinds of gaze cues [54] and gaze tasks [33]. Our results support the general notion that the amygdala is part of a vigilance system that can facilitate the analysis of sensory input for emotionally or socially salient information [53].

Acknowledgements

This research was supported by an NIMH Predoctoral Fellowship F31-MH12982 (CIH). The authors would like to thank Rick Zinbarg, William Revelle, and Marcia Grabowecky for helpful suggestions on experimental design, Katherine Byrne for assistance with stimuli production, and Joseph Coulson for assistance with manuscript preparation.

References

- 1. Allison T, Puce A, McCarthy G. Social perception from visual cues: role of the STS region. Trends Cognit. Sci. 2000;4:267–278. doi: 10.1016/s1364-6613(00)01501-1.

- 2. Andreasen NC. Negative symptoms in schizophrenia. Definition and reliability. Arch. Gen. Psychiatry. 1982;39:784–788. doi: 10.1001/archpsyc.1982.04290070020005.

- 3. American Psychiatric Association (APA) Diagnostic and Statistical Manual of Mental Disorders. 4th Edition. Washington, DC: American Psychiatric Association; 1994.

- 4. Baird G, Charman T, Baron-Cohen S, Cox A, Swettenham J, Wheelwright S, Drew A. A screening instrument for autism at 18 months of age: a 6-year follow-up study. J. Am. Acad. Child Adolescent Psychiatry. 2000;39:694–702. doi: 10.1097/00004583-200006000-00007.

- 5. Baldwin D. Understanding the link between joint attention and language. In: Moore C, Dunham PJ, editors. Joint Attention: Its Origins and Role in Development. Hillsdale, NJ: Lawrence Erlbaum Associates; 1995.

- 6. Barcelo F, Suwazono S, Knight RT. Prefrontal modulation of visual processing in humans. Nat. Neurosci. 2000;3:399–403. doi: 10.1038/73975.

- 7. Baron-Cohen S. Mindblindness: An Essay On Autism and Theory of Mind. Cambridge, MA: MIT Press; 1995.

- 8. Bonda E, Petrides M, Ostry D, Evans A. Specific involvement of human parietal systems and the amygdala in the perception of biological motion. J. Neurosci. 1996;16:3737–3744. doi: 10.1523/JNEUROSCI.16-11-03737.1996.

- 9. Brothers L. The social brain: a project for integrating primate behaviour and neurophysiology in a new domain. Concep. Neurosci. 1990;1:27–51.

- 10. Calder AJ, Lawrence AD, Keane J, Scott SK, Owen AM, Christoffels I, Young AW. Reading the mind from eye gaze. Neuropsychologia. 2002;40:1129–1138. doi: 10.1016/s0028-3932(02)00008-8.

- 11. Campbell R, Heywood C, Cowey A, Regard M, Landis T. Sensitivity to eye gaze in prosopagnosia patients and monkeys with superior temporal sulcus ablation. Neuropsychologia. 1990;28:1123–1142. doi: 10.1016/0028-3932(90)90050-x.

- 12. Clark VP, Parasuraman R, Keil K, Kulansky R, Fannon S, Maisog JM, Ungerleider LG, Haxby JV. Selective attention to face identity and color studied with fMRI. Hum. Brain Mapping. 1997;5:293–297. doi: 10.1002/(SICI)1097-0193(1997)5:4<293::AID-HBM15>3.0.CO;2-F.

- 13. Collins DL, Neelin P, Peters TM, Evans AC. Automatic 3D inter-subject registration of MR volumetric data in standardized Talairach space. J. Comput. Assisted Tomogr. 1994;18:192–205.

- 14. Corbetta M. Frontoparietal cortical networks for directing attention and the eye to visual locations: identical, independent, or overlapping neural systems? Proc. Natl. Acad. Sci. 1998;95:831–838. doi: 10.1073/pnas.95.3.831.

- 15. Cox RW. AFNI: Software for analysis and visualization of functional magnetic resonance neuroimages. Comput. Biomed. Res. 1996;29:162–173. doi: 10.1006/cbmr.1996.0014.

- 16. De Renzi E, Faglioni P, Grossi D, Nichelli P. Apperceptive and associative forms of prosopagnosia. Cortex. 1991;27:213–221. doi: 10.1016/s0010-9452(13)80125-6.

- 17. Decety J, Grezes J, Costes N, Perani D, Jeannerod M, Procyk E, Grassi F, Fazio F. Brain activity during observation of actions. Influence of action content and subject’s strategy. Brain. 1997;120:1763–1777. doi: 10.1093/brain/120.10.1763.

- 18. Ekman P, Friesen WV. Pictures of Facial Affect. Palo Alto, CA: Consulting Psychologists Press; 1976.

- 19. Farah MJ, Wilson KD, Drain HM, Tanaka JR. The inverted face inversion effect in prosopagnosia: evidence for mandatory, face-specific perceptual mechanisms. Vis. Res. 1995;35:2089–2093. doi: 10.1016/0042-6989(94)00273-o.

- 20. Friesen CK, Kingstone A. The eyes have it! Reflexive orienting is triggered by nonpredictive gaze. Psychonom. Bull. Rev. 1998;5:490–495.

- 21. Frodi LM, Lamb ME, Leavitt LA, Donovan WL. Fathers’ and mothers’ responses to infants’ smiles and cries. Infant Behav. Dev. 1978;1:187–198.

- 22. Gallagher HL, Frith CD. Functional imaging of ‘theory of mind’. Trends Cognit. Sci. 2003;7:77–83. doi: 10.1016/s1364-6613(02)00025-6.

- 23. George N, Driver J, Dolan R. Seen gaze-direction modulates fusiform activity and its coupling with other brain areas during face processing. Neuroimage. 2001;13:1102–1112. doi: 10.1006/nimg.2001.0769.

- 24. Grezes J, Costes N, Decety J. The effects of learning and intention on the neural network involved in the perception of meaningless actions. Brain. 1999;122:1875–1887. doi: 10.1093/brain/122.10.1875.

- 25. Grossman E, Donnelley M, Price R, Pickens D, Morgan V, Neighbor G, Blake R. Brain areas involved in the perception of biological motion. J. Cognit. Neurosci. 2000;12:711–720. doi: 10.1162/089892900562417.

- 26. Haxby JV, Hoffman EA, Gobbini IM. The distributed human neural system for face perception. Trends Cognit. Sci. 2000;4:223–233. doi: 10.1016/s1364-6613(00)01482-0.

- 27. Hoffman EA, Haxby JV. Distinct representations of eye gaze and identity in the distributed human neural system for face perception. Nat. Neurosci. 2000;3:80–84. doi: 10.1038/71152.

- 28. Hood BM, Willen JD, Driver J. Adult’s eyes trigger shifts of visual attention in human infants. Psychol. Sci. 1998;9:131–134.

- 29. Iacoboni M, Woods RP, Brass M, Bekkering H, Mazziotta JC, Rizzolatti G. Cortical mechanisms of human imitation. Science. 1999;286:2526–2528. doi: 10.1126/science.286.5449.2526.

- 30. Kahneman D, Tversky A. Choices, values, and frames. Am. Psychol. 1984;39:341–350.

- 31. Kanwisher N. Domain specificity in face perception. Nat. Neurosci. 2000;3:759–763. doi: 10.1038/77664.

- 32. Kanwisher N, McDermott J, Chun MM. The fusiform face area: a module in human extrastriate cortex specialized for face perception. J. Neurosci. 1997;17:4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- 33. Kawashima R, Sugiura M, Kato T, Nakamura A, Hatano K, Ito K, Fukuda H, Kojima S, Nakamura K. The human amygdala plays an important role in gaze monitoring. A PET study. Brain. 1999;122:779–783. doi: 10.1093/brain/122.4.779.

- 34. Kim YH, Gitelman DR, Nobre AC, Parrish TB, LaBar KS, Mesulam MM. The large-scale neural network for spatial attention displays multifunctional overlap but differential asymmetry. Neuroimage. 1999;9:269–277. doi: 10.1006/nimg.1999.0408.

- 35. Kleinke CL. Gaze and eye contact: a research review. Psychol. Bull. 1986;100:78–100.

- 36. Knight RT. Decreased response to novel stimuli after prefrontal lesions in man. Electroencephalogr. Clin. Neurophysiol. 1984;59:9–20. doi: 10.1016/0168-5597(84)90016-9.

- 37. Kourtzi Z, Kanwisher N. Activation in human MT/MST by static images with implied motion. J. Cognit. Neurosci. 2000;12:48–55. doi: 10.1162/08989290051137594.

- 38. Loveland K, Landry S. Joint attention and language in autism and developmental language delays. J. Autism Dev. Disord. 1986;16:335–349. doi: 10.1007/BF01531663.

- 39. Maurer D. Infants perception of facedness. In: Field T, Fox N, editors. Social Perception of Infants. Norwood, NJ: Ablex; 1985.

- 40. Mesulam MM. Spatial attention and neglect: parietal, frontal and cingulate contributions to the mental representation and attentional targeting of salient extrapersonal events [published erratum appears in Philos Trans R Soc Lond B Biol Sci 1999 Dec 29;354(1352):2083] Philos. Trans. R. Soc. Lond. B: Biol. Sci. 1999;354:1325–1346. doi: 10.1098/rstb.1999.0482.

- 41. Mundy P, Crowson M. Joint attention and early social communication: Implications for research on intervention with autism. J. Autism Dev. Disord. 1997;27:653–676. doi: 10.1023/a:1025802832021.

- 42. Neville HJ, Bavelier D, Corina D, Rauschecker J, Karni A, Lalwani A, Braun A, Clark V, Jezzard P, Turner R. Cerebral organization for language in deaf and hearing subjects: biological constraints and effects of experience. Proc. Natl. Acad. Sci. USA. 1998;95:922–929. doi: 10.1073/pnas.95.3.922.

- 43. Nichols K, Champness B. Eye Gaze and GSR. J. Exp. Soc. Psychol. 1971;7:623–626.

- 44. Perrett DI, Hietanen JK, Oram MW, Benson PJ. Organization and functions of cells responsive to faces in the temporal cortex. Philos. Trans. R. Soc. Lond. B: Biol. Sci. 1992;335:23–30. doi: 10.1098/rstb.1992.0003.

- 45. Perrett DI, Smith PA, Potter DD, Mistlin AJ, Head AS, Milner AD, Jeeves MA. Visual cells in the temporal cortex sensitive to face view and gaze direction. Proc. R. Soc. Lond. B: Biol. Sci. 1985;223:293–317. doi: 10.1098/rspb.1985.0003.

- 46. Puce A, Allison T, Asgari M, Gore JC, McCarthy G. Differential sensitivity of human visual cortex to faces, letterstrings, and textures: a functional magnetic resonance imaging study. J. Neurosci. 1996;16:5205–5215. doi: 10.1523/JNEUROSCI.16-16-05205.1996.

- 47. Puce A, Allison T, Bentin S, Gore JC, McCarthy G. Temporal cortex activation in humans viewing eye and mouth movements. J. Neurosci. 1998;18:2188–2199. doi: 10.1523/JNEUROSCI.18-06-02188.1998.

- 48. Rizzolatti G, Fadiga L, Matelli M, Bettinardi V, Paulesu E, Perani D, Fazio F. Localization of grasp representations in humans by PET: 1. Observation versus execution. Exp. Brain Res. 1996;111:246–252. doi: 10.1007/BF00227301.

- 49. Siegal M, Varley R. Neural systems involved in ‘theory of mind’. Nat. Rev. Neurosci. 2002;3:463–471. doi: 10.1038/nrn844.

- 50. Stevens JA, Fonlupt P, Shiffrar M, Decety J. New aspects of motion perception: selective neural encoding of apparent human movements. Neuroreport. 2000;11:109–115. doi: 10.1097/00001756-200001170-00022.

- 51. Tomasello M. Joint attention as social cognition. In: Moore C, Dunham PJ, editors. Joint Attention: Its Origins and Role in Development. Hillsdale, NJ: Lawrence Erlbaum Associates; 1995. pp. 103–130.

- 52. Von Grunau M, Anston C. The detection of gaze direction: A stare in the crowd effect. Perception. 1995;24:1297–1313. doi: 10.1068/p241297.

- 53. Whalen P. Fear, vigilance and ambiguity: Initial neuroimaging studies of the human amygdala. Curr. Direct. Psychol. Sci. 1998;7:177–188.

- 54. Wicker B, Michel F, Henaff MA, Decety J. Brain regions involved in the perception of gaze: a PET study. Neuroimage. 1998;8:221–227. doi: 10.1006/nimg.1998.0357.

- 55. Wojciulik E, Kanwisher N, Driver J. Covert visual attention modulates face-specific activity in the human fusiform gyrus: fMRI study. J. Neurophysiol. 1998;79:1574–1578. doi: 10.1152/jn.1998.79.3.1574.

- 56. Young AW, Aggleton JP, Hellawell DJ, Johnson M, Broks P, Hanley R. Face processing impairments after amygdalotomy. Brain. 1995;118:15–24. doi: 10.1093/brain/118.1.15.

- 57. Young AW, Newcombe F, de Haan EH, Small M, Hay DC. Face perception after brain injury. Selective impairments affecting identity and expression. Brain. 1993;116:941–959. doi: 10.1093/brain/116.4.941.