Highlights

- Overlap between semantic control and action understanding revealed with fMRI.
- Overlap found in left inferior frontal and posterior middle temporal cortex.
- Peaks for action and difficulty were spatially identical in LIFG.
- Peaks for action and difficulty were distinct in occipital–temporal cortex.
- Difficult trials recruited additional ventral occipital–temporal areas.

Keywords: Semantic, Action, Control, Executive, fMRI

Abstract

Executive–semantic control and action understanding appear to recruit overlapping brain regions, but existing evidence from neuroimaging meta-analyses and neuropsychology lacks spatial precision; we therefore manipulated difficulty and feature type (visual vs. action) in a single fMRI study. Harder judgements recruited an executive–semantic network encompassing medial and inferior frontal regions (including LIFG) and posterior temporal cortex (including pMTG). These regions partially overlapped with brain areas involved in action but not visual judgements. In LIFG, the peak responses to action and difficulty were spatially identical across participants, whereas in posterior temporal cortex these responses overlapped but were spatially distinct. We propose that the co-activation of LIFG and pMTG allows the flexible retrieval of semantic information appropriate to the current context, which might be necessary both for semantic control and for understanding actions. Feature selection in difficult trials also recruited ventral occipital–temporal areas that are not implicated in action understanding.

1. Introduction

Our conceptual knowledge encompasses a large body of information but only particular aspects of concepts will be useful in any given context or task: as a consequence, executive control processes are engaged to guide conceptual processing in a context-dependent manner (Badre, Poldrack, Paré-Blagoev, Insler, & Wagner, 2005; Jefferies, 2013; Noonan, Jefferies, Corbett, & Lambon Ralph, 2010). We can match objects on the basis of specific features, even when these are not prominent aspects of the items, and this is crucial for intelligent behaviour – for example, when trying to pitch a tent, we can understand that a shoe has properties that make it suitable for banging pegs into the ground, even though these properties are not directly related to its dominant associations. Semantic control processes in left inferior frontal gyrus (LIFG) are thought to be critical for this selection of task-relevant attributes (Thompson-Schill, D’Esposito, Aguirre, & Farah, 1997) and the controlled retrieval of weak associations (Noonan, Jefferies, Visser, & Lambon Ralph, 2013; Wagner, Paré-Blagoev, Clark, & Poldrack, 2001). However, little is known about how control processes are deployed to focus neural activity on specific, task-relevant aspects of knowledge – and whether the same mechanisms are recruited for different types of features (e.g., action vs. visual properties).

Contemporary theories of semantic cognition agree that modality-specific sensory and motor areas, plus multi-modal regions capturing specific features, contribute to semantic representation (Meteyard, Rodriguez Cuadrado, Bahrami, & Vigliocco, 2012; Patterson, Nestor, & Rogers, 2007; Pobric, Jefferies, & Lambon Ralph, 2010; Pulvermüller, 2013). As a result, semantic judgements about manipulable objects are thought to draw on representations across the cortex, including inferior parietal, premotor and posterior middle temporal (pMTG) regions, which support motor and praxis features (Chouinard & Goodale, 2012; Liljeström et al., 2008; Pobric et al., 2010; Rueschemeyer, van Rooij, Lindemann, Willems, & Bekkering, 2010; Vitali et al., 2005; Watson, Cardillo, Ianni, & Chatterjee, 2013; Yee, Drucker, & Thompson-Schill, 2010; Zannino et al., 2010). Although some research suggests that sensory and motor regions are recruited rapidly and automatically following word presentation (Hauk & Pulvermüller, 2004; Shtyrov, Butorina, Nikolaeva, & Stroganova, 2014), recent neuroimaging studies have examined how activity within modality-specific areas might be modulated on the basis of task demands (Hoenig, Sim, Bochev, Herrnberger, & Kiefer, 2008; Rüeschemeyer, Brass, & Friederici, 2007; Tomasino & Rumiati, 2013). Action words (e.g., kick) and their semantic associates do not necessarily activate motor regions when presented in isolation; this response is seen more strongly for literal sentences (‘kick the ball’) in which the action properties are relevant to the task (Raposo, Moss, Stamatakis, & Tyler, 2009; Schuil, Smits, & Zwaan, 2013; van Dam, van Dijk, Bekkering, & Rueschemeyer, 2012). Such findings challenge the assumptions of strong ‘embodied’ accounts of semantic cognition, in which neural connections between distributed sensory and motor features are sufficient for conceptual representation. 
Furthermore, they raise questions about how semantic representations are applied in a controlled way, to suit the particular task or context.

In addition to the role of distributed visual and motor/praxis representations in object knowledge, some theories suggest these disparate features are drawn together in an amodal semantic ‘hub’ in the anterior temporal lobes (ATL; Patterson et al., 2007). This proposal remains controversial (Simmons & Martin, 2009), partly because fMRI is relatively insensitive to signals from ATL: although data from multiple methods, including patients with semantic dementia (Bozeat, Lambon Ralph, Patterson, Garrard, & Hodges, 2000), TMS (Ishibashi, Lambon Ralph, Saito, & Pobric, 2011; Pobric et al., 2010) and PET (Devlin et al., 2002), reveal a contribution of ATL to conceptual knowledge across modalities, magnetic susceptibility artefacts produce signal loss and distortion in this brain region (Visser, Jefferies, Embleton, & Lambon Ralph, 2012; Visser, Jefferies, & Lambon Ralph, 2010). Consequently, the fMRI literature does not uniformly emphasise a role for ATL and instead focuses on the contribution of pMTG to multimodal tool/action knowledge, with some recent studies suggesting that pMTG is a semantic hub for tool and action understanding (Martin, 2007; Martin, Kyle Simmons, Beauchamp, & Gotts, 2014; van Elk, van Schie, & Bekkering, 2014).

An alternative view about the contribution of pMTG to semantic cognition is provided by work on semantic control (for reviews, see Jefferies, 2013; Noonan et al., 2013). Although this research has largely focussed on the role of LIFG in selection and controlled semantic retrieval (Badre et al., 2005; Hoffman, Jefferies, & Lambon Ralph, 2010; Thompson-Schill, D’Esposito, Aguirre, & Farah, 1997; Wagner et al., 2001), a recent meta-analysis revealed that manipulations of the executive demands of semantic tasks activate a distributed cortical network, including left and right inferior frontal gyrus (LIFG; RIFG), medial PFC (pre-SMA), dorsal angular gyrus (dAG) bordering intraparietal sulcus (IPS) and, most notably, pMTG (Noonan et al., 2013). These sites all show greater activation during difficult tasks that tap less prominent aspects of meaning, or require strongly related distracters to be suppressed (Rodd, Johnsrude, & Davis, 2010; Wagner et al., 2001; Whitney, Kirk, O’Sullivan, Lambon Ralph, & Jefferies, 2011). Moreover, inhibitory TMS to LIFG and pMTG produces equivalent disruption of semantic tasks tapping controlled retrieval, but has no effect on semantic judgements to highly-associated word pairs, which rely largely on automatic spreading activation (Whitney et al., 2011). This network for semantic control overlaps with the “fronto-parietal control network” involved in cognitive control across domains – which includes inferior frontal sulcus, intraparietal sulcus and occipital–temporal regions (Duncan, 2010; Woolgar, Hampshire, Thompson, & Duncan, 2011; Yeo et al., 2011), although some sites appear to make a relatively restricted contribution to control processes important for semantic cognition, particularly anterior parts of LIFG and pMTG (Devlin, Matthews, & Rushworth, 2003; Noonan et al., 2013; Whitney, Jefferies, & Kircher, 2011; Whitney et al., 2011, 2012).

In summary, separate literatures on executive–semantic processing and action understanding have linked similar left hemisphere networks – encompassing IFG/premotor cortex, IPL and pMTG – with diverse aspects of semantic cognition (Noonan et al., 2010; Watson et al., 2013). Since these regions are associated with understanding actions, tools, verbs and events, it has been suggested they might represent motion, action, or praxis features (Chouinard & Goodale, 2010; Liljeström et al., 2008; Spunt & Lieberman, 2012; Watson et al., 2013). However, left IFG, pMTG, and dorsal IPL are also activated during semantic tasks with high executive demands, suggesting they might support controlled retrieval/selection processes that shape semantic processing to suit the current context (Noonan et al., 2013). Damage to this network in semantic aphasia (SA) produces difficulty controlling conceptual retrieval to suit the task or context, both in verbal tasks like picture naming and non-verbal tasks like object use (Jefferies & Lambon Ralph, 2006; Noonan et al., 2010). These deficits can be overcome through the provision of cues that reduce the need for internally-generated control (i.e., phonological cues for picture naming; photographs of the recipients of actions in object use; Corbett, Jefferies, Ehsan, & Lambon Ralph, 2009; Corbett, Jefferies, & Lambon Ralph, 2011), suggesting that damage to this network does not produce a loss of semantic information about words or actions, but instead poor control over conceptual retrieval. However, both neuropsychological studies and neuroimaging meta-analyses have poor spatial resolution, and thus it is not yet known whether semantic control and action understanding recruit adjacent (yet distinct) or overlapping regions in pMTG and LIFG.

We addressed this question in an fMRI study with a 2 × 2 design that (1) contrasted decisions about action and non-action (visual) features and (2) compared easy, low-control judgements, in which participants selected a globally semantically related item, with more difficult, high-control judgements, in which the target was related only via a specific feature. We predicted that the recruitment of sensory/motor regions would vary according to the feature, with more activity within visual areas for visual decisions (e.g., lateral occipital cortex, occipital pole), and within motor/praxis areas for action decisions (e.g., precentral gyrus; IPL; pMTG). Executive–semantic regions were expected to show stronger responses for more demanding judgements irrespective of the feature to be matched. Furthermore, we examined whether brain regions recruited during the retrieval of action knowledge would overlap with those implicated in semantic control, both in group analyses and at the single-subject level.

2. Method

2.1. Participants

Twenty right-handed, native English-speaking participants were recruited from the University of York, UK. All had normal or corrected-to-normal vision. Three participants were excluded from the final analysis because of head movement (>2 mm) or poor accuracy, leaving 17 participants in the analysis (mean age = 22.7 years, 10 females).

2.2. Study design

A fully-factorial 2 × 2 within-subjects design was used. The two factors were judgement type (action or visual form matching) and control demands (contrasting easy decisions about globally related items with difficult decisions based on specific features).

In action judgement trials, participants were asked to match the probe and target words on the basis of shared or similar action features involved in stereotypical use (e.g., selecting screwdriver for the probe key, because both involve a precise twisting action). In visual judgement trials, participants matched items on the basis of shared visual characteristics (e.g., screwdriver with pen, because both objects have a long, thin, rounded shape). We also contrasted ‘easy’ trials, in which the probe and target were taken from the same semantic category and shared either action or visual properties (e.g., kettle and jug share action properties and are both kitchen items), with ‘difficult’ trials, in which the probe and target were not semantically related and shared only an action or visual feature (e.g., kettle with hourglass, which share only a tipping action). Moreover, in the difficult trials there were globally related distracters that shared category membership with the probe but not the relevant feature (e.g., scales and toaster are categorically related to kettle but are not targets because they do not share its action features). In both types of trial, two response options were globally semantically related to the probe and two were not; the trial types differed in whether the target was among the related options. A complete list of probes and targets is provided in the supplementary materials (Table S1).

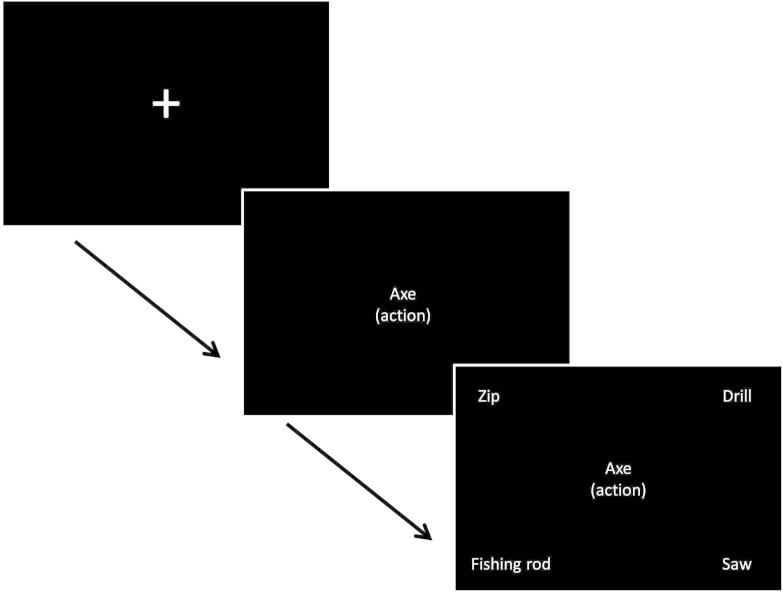

A four-alternative forced-choice paradigm was used: participants matched centrally presented probe words to one of four potential items, based on the type of association probed in that block. A reminder of the association being probed was present on every trial, in parentheses underneath the probe word. The experiment was organised into sixteen blocks, eight for each feature type (action or vision), with control demands randomised within each block. An instruction slide stating the relevant feature to be matched (action/vision) appeared for 1000 ms before each block. Blocks contained seven or nine events: blocks with seven events comprised six semantic decisions and one null event (a blank screen for 6000 ms), and blocks with nine events comprised seven trials and two null events. Probe words were presented for one second, after which the response options appeared and remained on the screen until the participant responded via a button press, for a maximum of 7.5 s. A jittered inter-trial interval of 4000–6000 ms separated all events (including null events), with 10–12 s of rest between blocks. Null events were combined with the rest between blocks to provide a baseline for analysis. Before the fMRI experiment, participants completed a practice session equivalent to one fMRI run. The trial format is illustrated in Fig. 1.
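The block structure above can be made concrete with a small scheduling sketch. If all 100 trials (Section 2.3) are spread over the sixteen blocks, the six-trial and seven-trial blocks must number 12 and 4 respectively (12 × 6 + 4 × 7 = 100). The function names and reaction times are hypothetical, and two details are assumptions rather than statements from the text: that the 7.5 s cap applies to the response-option screen only, and that the first event follows the instruction slide without a jittered gap. This is an illustration, not the original Presentation script.

```python
import random

PROBE_S = 1.0          # probe word shown alone (from the text)
MAX_RESPONSE_S = 7.5   # assumption: cap applies to the response-option screen
NULL_S = 6.0           # blank screen during a null event
INSTRUCTION_S = 1.0    # instruction slide before each block


def block_composition(n_blocks=16, n_trials=100, small=6, large=7):
    """Solve small*x + large*y == n_trials with x + y == n_blocks."""
    n_large = (n_trials - small * n_blocks) // (large - small)
    n_small = n_blocks - n_large
    if n_small < 0 or n_large < 0 or small * n_small + large * n_large != n_trials:
        raise ValueError("no valid block composition")
    return n_small, n_large


def block_schedule(n_trials, n_null, rts, rng, start=0.0):
    """Return (event, onset_s, duration_s) tuples for one block.

    `rts` holds one (hypothetical) reaction time in seconds per trial.
    """
    events = ["trial"] * n_trials + ["null"] * n_null
    rng.shuffle(events)  # trials and null events in random order
    schedule = [("instruction", start, INSTRUCTION_S)]
    t = start + INSTRUCTION_S
    rt_iter = iter(rts)
    for i, event in enumerate(events):
        if i > 0:
            t += rng.uniform(4.0, 6.0)  # jittered inter-trial interval
        if event == "trial":
            duration = PROBE_S + min(next(rt_iter), MAX_RESPONSE_S)
        else:
            duration = NULL_S
        schedule.append((event, t, duration))
        t += duration
    return schedule


rng = random.Random(1)
print(block_composition())  # (12, 4)
for event, onset, duration in block_schedule(6, 1, [2.1, 3.0, 2.5, 4.2, 1.9, 8.0], rng):
    print(f"{event:<11} onset={onset:6.2f}s duration={duration:.2f}s")
```

Rest periods of 10–12 s between blocks would be added by calling `block_schedule` with a later `start` for each block.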

Fig. 1.

An example of the trial format. Here, “axe” is the probe, and the target is “fishing rod” (both involve a chopping action). Given that axe and fishing rod are not globally related, this is a trial from the difficult, high control action feature condition.

2.3. Stimuli

Each condition had 25 targets (100 in total; see Table S1). In each easy condition, 25 semantically related items were used as distracters, combined with 50 unrelated distracter items. In each hard condition, 50 semantically related items were used as distracters, with the remaining 25 distracters consisting of semantically unrelated items. All of the words were concrete nouns denoting manipulable objects. Individual words were used a maximum of four times throughout the experiment. Target words were matched across conditions for frequency (CELEX database, Max Planck Institute for Psycholinguistics, 2001), number of letters, and imageability, with no significant differences between conditions. Frequency and letter length were obtained using the program N-watch (Davis, 2005). Details of imageability ratings, descriptive statistics and ANOVA results can be found in the supplementary materials (Tables S2 and S3).
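These distracter counts follow from the trial structure in Section 2.2: each trial offers four options, exactly two of which are globally related to the probe, and the target is one of the related options only in easy trials. The short check below (function name hypothetical) derives the per-condition totals from that rule.

```python
def distracter_totals(n_trials, target_related, n_options=4, n_related_options=2):
    """Return (related, unrelated) distracter counts for one condition.

    Each trial has `n_options` response options, of which
    `n_related_options` are globally related to the probe.
    """
    related_per_trial = n_related_options - (1 if target_related else 0)
    unrelated_per_trial = (n_options - 1) - related_per_trial
    return n_trials * related_per_trial, n_trials * unrelated_per_trial


# Easy trials: the target is one of the globally related items.
print(distracter_totals(25, target_related=True))   # (25, 50)
# Hard trials: the target shares only the probed feature.
print(distracter_totals(25, target_related=False))  # (50, 25)
```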

2.4. Data acquisition

Brain images were acquired using a 3T GE HDx Excite MRI scanner with an 8-channel head coil. High-resolution structural images (3D FSPGR) were obtained for every participant. Functional data were recorded from the whole brain using gradient-echo EPI (FOV: 192 × 192, matrix: 64 × 64, slice thickness: 4.5 mm, voxel size: 3 × 3 × 4.5 mm, flip angle: 90°, TR: 2000 ms, TE: 30 ms) with bottom-up sequential acquisition. Each session was split into two 14-min runs of 420 volumes each. Co-registration between structural and functional scans was improved using an intermediary scan (T1 FLAIR) with the same parameters as the functional scan. NBS Presentation version 14 (Neurobehavioral Systems Inc., 2012) was used to present stimuli and record responses (reaction time and accuracy) during fMRI. Stimuli were projected using a Dukane 8942 ImagePro 4500 Lumens LCD projector onto an in-bore screen subtending 45° × 30° of visual angle. Responses were collected using two Lumitouch two-button response boxes in a custom-built case, allowing all four buttons to be operated with the left hand.
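The acquisition parameters are internally consistent, as a quick calculation shows. Assuming the FOV is given in millimetres, a 192 mm FOV sampled on a 64 × 64 matrix yields 3 mm in-plane voxels, and 420 volumes at a TR of 2 s last 14 min. The helper names are illustrative.

```python
def inplane_voxel_mm(fov_mm, matrix):
    """In-plane voxel size: field of view divided by matrix size."""
    return fov_mm / matrix


def run_duration_min(n_volumes, tr_s):
    """Run length in minutes: number of volumes times repetition time."""
    return n_volumes * tr_s / 60.0


print(inplane_voxel_mm(192, 64))    # 3.0 (mm)
print(run_duration_min(420, 2.0))   # 14.0 (min)
print(2 * 420, "volumes per participant across the two runs")
```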

2.5. Data analysis

The analysis used an event-related design to examine the transient response to each trial. fMRI analysis was conducted using FSL 4.1.9 (Analysis Group, FMRIB, Oxford, UK; Jenkinson, Beckmann, Behrens, Woolrich, & Smith, 2012; Smith et al., 2004; Woolrich et al., 2009). First- and higher-level analyses were conducted using FEAT (fMRI Expert Analysis Tool). Pre-processing included McFLIRT motion correction (Jenkinson, Bannister, Brady, & Smith, 2002), skull-brain segmentation (Smith, 2002), slice timing correction, spatial smoothing using a Gaussian kernel of 5 mm FWHM, and high-pass temporal filtering (100 s). Time-series data were modelled using a general linear model (FILM; FMRIB Improved Linear Model), correcting for local autocorrelation (Woolrich, Ripley, Brady, & Smith, 2001). Each explanatory variable (EV) was entered as a boxcar function convolved with a gamma hemodynamic response function, using a variable epoch model (Grinband, Wager, Lindquist, Ferrera, & Hirsch, 2008): each epoch started at the onset of the probe word, and its duration was set by the response time on that trial. The following EVs were used: correct responses from each of the four conditions; rest (null events and the time between blocks, modelled separately because we were initially interested in potential instruction-driven differences between them, which were not observed); and errors. A temporal derivative was added to all EVs. Four contrasts were defined from the correct responses: each condition > rest (easy action, hard action, easy visual, hard visual).
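A variable epoch regressor of the kind described above can be sketched as follows: each trial contributes a boxcar that starts at probe onset and lasts for that trial's response time, and the boxcar is convolved with a gamma HRF and sampled once per TR. The gamma parameters (mean lag 6 s, SD 3 s, which we believe correspond to FEAT's default gamma), the 0.1 s sampling grid and the function names are assumptions; FEAT's own implementation differs in detail (for example, it also adds temporal derivatives and applies temporal filtering).

```python
import math
import numpy as np


def gamma_hrf(dt, mean_s=6.0, sd_s=3.0, length_s=30.0):
    """Gamma-shaped HRF sampled every `dt` s, normalised to unit sum."""
    shape = (mean_s / sd_s) ** 2   # k = (mean / sd)^2
    scale = sd_s ** 2 / mean_s     # theta = sd^2 / mean
    t = np.arange(0.0, length_s, dt)
    h = t ** (shape - 1) * np.exp(-t / scale) / (math.gamma(shape) * scale ** shape)
    return h / h.sum()


def variable_epoch_regressor(onsets, durations, n_vols, tr=2.0, dt=0.1):
    """Boxcar (onset = probe onset, duration = RT) convolved with the HRF."""
    n_fine = int(round(n_vols * tr / dt))
    box = np.zeros(n_fine)
    for onset, duration in zip(onsets, durations):
        start = int(round(onset / dt))
        stop = min(int(round((onset + duration) / dt)), n_fine)
        box[start:stop] = 1.0
    convolved = np.convolve(box, gamma_hrf(dt))[:n_fine]
    step = int(round(tr / dt))
    return convolved[::step]  # one value per acquired volume


# Two hypothetical trials: a fast (easy) and a slow (hard) decision.
fast = variable_epoch_regressor([10.0], [1.5], n_vols=30)
slow = variable_epoch_regressor([10.0], [5.0], n_vols=30)
print(fast.sum() < slow.sum())  # True: longer epochs predict more signal
```

The design choice matters for this study: because hard trials take longer, a variable epoch model lets the predicted response scale with time on task rather than treating every trial as an identical impulse.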

2.5.1. Whole brain group analysis

A first analysis examined the effect of feature type by comparing brain activity for action and visual decisions. Analysis of the complete behavioural data from the scanner revealed a small but significant difference in accuracy between the action and visual conditions. Therefore, the whole-brain analysis was conducted on a subset of 84 trials (21 trials per condition, using the same probe words across conditions). All trials involving four specific probe words were excluded from the conditions of interest, across all conditions and participants, and were instead entered as a covariate of no interest. The remaining trials were matched for psycholinguistic properties, accuracy and RT (see Tables S1, S2 and S3). Contrasts of each condition over rest were entered into a higher-level contrast of action decisions (hard action > rest + easy action > rest) vs. visual decisions (hard visual > rest + easy visual > rest), and vice versa. To control for multiple comparisons, cluster-based thresholding was applied to all analyses, with a voxel inclusion threshold of z = 2.3 and a cluster significance threshold of FWE p < .05. The minimum cluster size for significance at p = .05 was 615 contiguous voxels.
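Because every condition was modelled against the same rest baseline, the feature contrast amounts to weighting the four condition > rest estimates with +1 for the two action conditions and -1 for the two visual conditions, so that the baseline cancels. The sketch below shows this together with the difficulty contrast and the task × difficulty interaction. The condition ordering, dictionary keys and example values are illustrative; in FEAT these weights would be entered in the contrast matrix of the design rather than applied by hand.

```python
import numpy as np

# Order of the four condition > rest estimates (illustrative).
CONDITIONS = ("easy_action", "hard_action", "easy_visual", "hard_visual")

CONTRASTS = {
    "action > visual": np.array([1, 1, -1, -1]),
    "visual > action": np.array([-1, -1, 1, 1]),
    "hard > easy": np.array([-1, 1, -1, 1]),
    # Difficulty effect for visual minus difficulty effect for action.
    "task x difficulty": np.array([1, -1, -1, 1]),
}


def apply_contrasts(condition_vs_rest):
    """Weight per-condition (condition > rest) estimates by each contrast."""
    values = np.asarray(condition_vs_rest, dtype=float)
    return {name: float(weights @ values) for name, weights in CONTRASTS.items()}


# Hypothetical estimates in the order given by CONDITIONS.
print(apply_contrasts([1.0, 2.0, 0.5, 1.5]))
```

Each weight vector sums to zero, and the feature, difficulty and interaction vectors are mutually orthogonal, so the three effects are estimated independently in this balanced design.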

In a second analysis, we strengthened the difficulty manipulation to maximise sensitivity to this variable: for each participant, we selected the 60 of 100 trials with accurate responses that produced the fastest and slowest decisions. These trials were divided evenly between the action and visual conditions (15 easy action; 15 easy visual; 15 hard action; 15 hard visual). The fastest trials involved matching on global semantic similarity, while the slowest involved matching on a specific feature in the presence of globally related distracters. The same contrasts described above were repeated using these 60 trials, with a voxel inclusion threshold of z = 2.3 and a cluster significance threshold of p < .05. The minimum cluster size for significance at p = .05 was 561 contiguous voxels.
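The trial selection for this second analysis can be sketched as below, assuming (as the text implies) that the 15 fastest correct trials were taken from each easy condition and the 15 slowest correct trials from each hard condition, separately for each participant. The trial record format, function name and synthetic reaction times are hypothetical.

```python
def select_extreme_trials(trials, n_per_cell=15):
    """Keep the fastest correct easy trials and slowest correct hard trials.

    `trials` is a list of dicts with keys "feature" ("action"/"visual"),
    "difficulty" ("easy"/"hard"), "correct" (bool) and "rt" (seconds),
    for a single participant.
    """
    selected = []
    for feature in ("action", "visual"):
        for difficulty, slowest_first in (("easy", False), ("hard", True)):
            cell = [t for t in trials
                    if t["feature"] == feature
                    and t["difficulty"] == difficulty
                    and t["correct"]]
            if len(cell) < n_per_cell:
                raise ValueError(f"too few correct {difficulty} {feature} trials")
            cell.sort(key=lambda t: t["rt"], reverse=slowest_first)
            selected.extend(cell[:n_per_cell])
    return selected


# Synthetic participant: 25 trials per condition, every fifth one an error.
trials = [
    {"feature": f, "difficulty": d, "correct": i % 5 != 0,
     "rt": 1.0 + 0.1 * i + (3.0 if d == "hard" else 0.0)}
    for f in ("action", "visual") for d in ("easy", "hard") for i in range(25)
]
chosen = select_extreme_trials(trials)
print(len(chosen))  # 60
```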

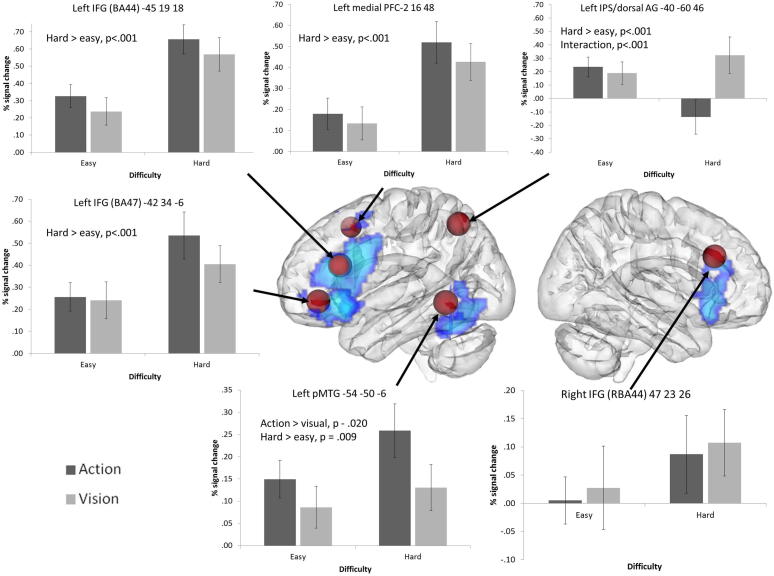

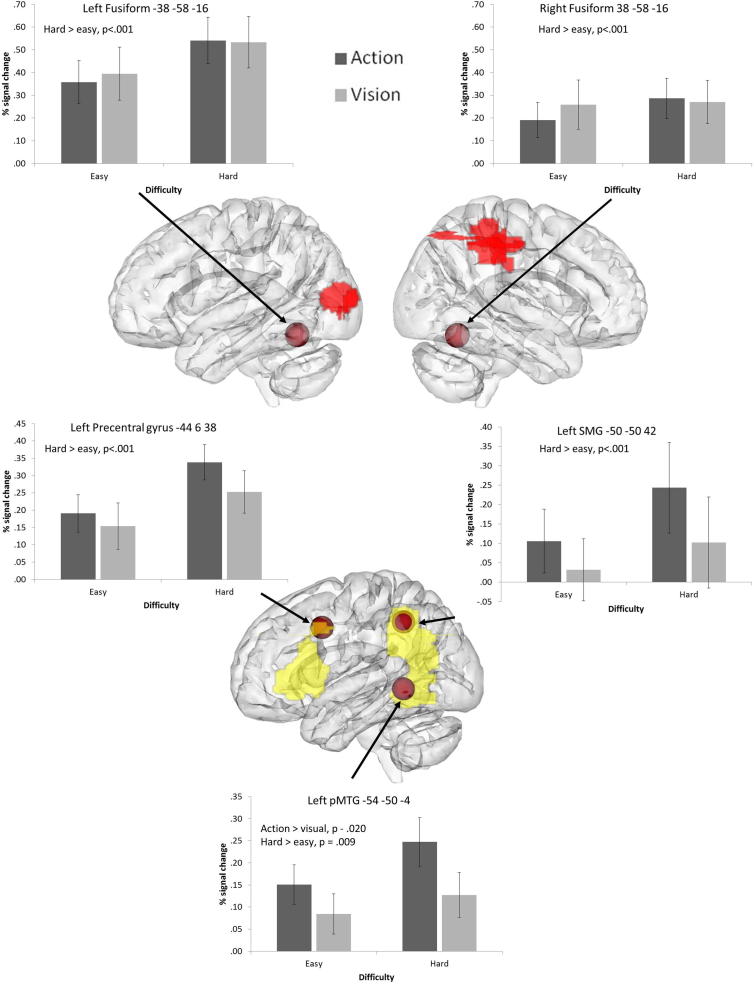

2.5.2. Regions of interest (ROI) group analysis

We examined 8 mm spherical ROIs centred on key coordinates taken from the literature. The coordinates used in this analysis are shown in Figs. 4 and 5. The FEATquery tool in FSL was used to extract unthresholded percentage signal change for each ROI and each of the four conditions, using the matched set of 84 items. The mean change across all voxels within each ROI was submitted to a 2 × 2 repeated-measures ANOVA to examine the effects of difficulty and task, and their interaction, at each location.

(i) The first set of ROIs focussed on regions implicated in executive–semantic control by a recent meta-analysis of neuroimaging studies (Noonan et al., 2013). This highlighted a distributed network involving left posterior and anterior IFG (corresponding to BA44, BA45, and BA47, respectively), right posterior IFG (RBA44), medial PFC (pre-SMA), pMTG and dAG/IPS.

(ii) In addition, we included peaks designed to localise additional brain responses involved in understanding actions. These were taken from a study that contrasted responses to action and object pictures (Liljeström et al., 2008) and from a meta-analysis investigating action concepts in the brain (Watson et al., 2013). In the Liljeström et al. (2008) study, the strongest action-selective peak was in left precentral gyrus, motivating the choice of this site as an ROI. We also examined the strongest peak in left IPL identified from the same contrast. Finally, we examined a pMTG site for actions identified in a recent meta-analysis (Watson et al., 2013).

(iii) We examined a left fusiform peak implicated in the retrieval of visual features (Thompson-Schill, Aguirre, D’Esposito, & Farah, 1999). This peak was transformed to the right hemisphere to investigate bilateral fusiform contributions to visual and action judgements.
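A spherical ROI of this kind can be built by converting every voxel index to millimetre coordinates with the image affine and keeping the voxels that lie within the sphere's radius of the peak. The sketch below assumes that the 8 mm figure is the sphere radius and uses a toy 2 mm grid; the function names are hypothetical, and it simply averages whatever map it is given, without reproducing how FEATquery derives percentage signal change.

```python
import numpy as np


def sphere_mask(shape, affine, center_mm, radius_mm=8.0):
    """Boolean mask of voxels whose centres lie within radius_mm of center_mm."""
    ijk = np.indices(shape).reshape(3, -1)                      # voxel indices
    homogeneous = np.vstack([ijk, np.ones((1, ijk.shape[1]))])
    xyz = (affine @ homogeneous)[:3]                            # voxel -> mm
    dist = np.linalg.norm(xyz - np.asarray(center_mm, float)[:, None], axis=0)
    return (dist <= radius_mm).reshape(shape)


def roi_mean(volume, mask):
    """Average a map (e.g. percent signal change) over the ROI voxels."""
    return float(volume[mask].mean())


# Toy 2 mm grid whose voxel (i, j, k) sits at (2i, 2j, 2k) mm.
affine = np.diag([2.0, 2.0, 2.0, 1.0])
mask = sphere_mask((20, 20, 20), affine, center_mm=(20.0, 20.0, 20.0))
print(mask.sum(), roi_mean(np.full((20, 20, 20), 0.5), mask))
```

With real data, the affine would come from the image header, and the peak coordinates would be in the same (e.g. MNI) space as the map being averaged.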

Fig. 4.

8 mm ROI spheres placed around peaks from an ALE meta-analysis examining executive–semantic demands (Noonan et al., 2013). To allow the location of the ROIs to be compared with the independent whole-brain contrast for hard > easy trials (in blue), they are displayed together on a glass brain using DV3D, with depth information characterised by transparency (Gouws, Woods, Millman, Morland, & Green, 2009). Graphs show the mean percentage signal change for each condition at each ROI; error bars represent the standard error of the mean. All significant effects are noted on each graph. The left hemisphere is shown on the left side of the image. ROI coordinates are given in MNI space.

Fig. 5.

8 mm ROI spheres placed around peaks from the literature implicated in action knowledge (Liljeström et al., 2008; Watson et al., 2013) and visual semantics (Thompson-Schill, Aguirre, D’Esposito, & Farah, 1999). Activation from the whole-brain contrast for action > visual trials is projected onto a glass brain in yellow, while the visual > action response is shown in red, using DV3D, with depth information characterised by transparency (Gouws et al., 2009). Graphs display the mean percentage signal change for each condition at each ROI; error bars represent the standard error of the mean. All significant effects are noted on each graph. ROI coordinates are given in MNI space.

2.5.3. Individual analysis

Overlap between the feature and difficulty contrasts in the whole-brain group analysis would be consistent with a shared functional system for the executive control of semantic processing and action understanding. However, these contrasts could still activate non-overlapping voxels in individual subjects, owing to variability in functional organisation and anatomy (Fedorenko, Duncan, & Kanwisher, 2013). We therefore repeated these analyses at the individual level, within two anatomical masks covering regions implicated in semantic/cognitive control (e.g., by the meta-analysis of Noonan et al., 2013): LIFG (including adjacent precentral gyrus) and left pMTG/ITG. Both masks were created using the Harvard-Oxford structural atlas (Desikan et al., 2006; Frazier et al., 2005; Goldstein et al., 2007; Makris et al., 2006) and transformed into each individual’s native brain space (supplementary materials, Figs. S1 and S2). Each voxel within these masks in each individual was classified as responding to (i) both the feature type (action > visual) and difficulty (hard > easy) contrasts; (ii) feature type only; (iii) difficulty only; or (iv) neither contrast. To do this, we used the Cluster command in FSL to extract the total number of contiguous voxels above threshold for the conjunction, feature type, and difficulty effects separately, and then subtracted these activated voxel counts from the total number of voxels in each mask for each participant. Following Fedorenko et al. (2013), we used an uncorrected voxel inclusion threshold of p < 0.05 (z = 1.96): we were not seeking to establish whether any voxels in the mask showed significant effects (which would require correction for multiple comparisons), but rather which voxels responding to one contrast also responded to the other.
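The four-way voxel classification can be expressed with boolean masks, as in the sketch below (function and key names hypothetical). Two simplifications are assumptions: it counts every suprathreshold voxel rather than extracting contiguous clusters with FSL's Cluster command, and it applies z > 1.96 as the inclusion criterion to both contrasts.

```python
import numpy as np


def classify_voxels(z_feature, z_difficulty, mask, z_thresh=1.96):
    """Count mask voxels responding to both contrasts, one, or neither."""
    feature = (np.asarray(z_feature) > z_thresh) & mask
    difficulty = (np.asarray(z_difficulty) > z_thresh) & mask
    return {
        "conjunction": int((feature & difficulty).sum()),
        "feature_only": int((feature & ~difficulty).sum()),
        "difficulty_only": int((difficulty & ~feature).sum()),
        "neither": int((mask & ~feature & ~difficulty).sum()),
    }


# Hypothetical z-maps for a 1-D "image" of six voxels, five inside the mask.
z_feat = np.array([3.1, 2.5, 0.4, 2.0, -1.0, 1.5])
z_diff = np.array([2.8, 0.3, 2.2, 2.4, 0.0, 3.0])
mask = np.array([True, True, True, True, True, False])
print(classify_voxels(z_feat, z_diff, mask))
```

The four counts always sum to the number of voxels in the mask, which mirrors the subtraction step described above.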
We also used MANOVA to establish whether there were any differences in the location of peak responses for the feature and difficulty contrasts within LIFG and posterior temporal cortex across individuals (Woo, Krishnan, & Wager, 2014), again using the Cluster command in FSL.
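If the MANOVA is structured with a single within-subject factor of two levels (feature vs. difficulty peak) and the x, y, z coordinates as dependent variables, it reduces to a paired Hotelling's T² test on the per-participant coordinate differences. The sketch below computes T² and its F approximation with NumPy. The function name and simulated peaks are illustrative, and a p-value could then be obtained from the F distribution with the returned degrees of freedom (e.g., with `scipy.stats.f.sf`).

```python
import numpy as np


def paired_hotelling_t2(peaks_a, peaks_b):
    """Paired Hotelling's T^2 on (n_subjects, 3) arrays of peak coordinates.

    Returns T^2, the equivalent F statistic and its (df1, df2).
    """
    d = np.asarray(peaks_a, float) - np.asarray(peaks_b, float)
    n, p = d.shape
    if n <= p:
        raise ValueError("need more participants than coordinate dimensions")
    d_bar = d.mean(axis=0)
    cov = np.cov(d, rowvar=False)                 # unbiased (n - 1) covariance
    t2 = float(n * d_bar @ np.linalg.solve(cov, d_bar))
    f_stat = (n - p) / (p * (n - 1)) * t2
    return t2, f_stat, (p, n - p)


rng = np.random.default_rng(0)
difficulty_peaks = rng.normal([-48.0, 20.0, 10.0], 4.0, size=(17, 3))  # hypothetical
same_site = difficulty_peaks + rng.normal(0.0, 2.0, size=(17, 3))
shifted = same_site + np.array([0.0, -12.0, 0.0])
print(paired_hotelling_t2(same_site, difficulty_peaks))
print(paired_hotelling_t2(shifted, difficulty_peaks))
```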

3. Results

3.1. Behavioural results

Descriptive statistics are provided in the supplementary materials (Table S4). A repeated-measures ANOVA on the set of 84 trials revealed significant main effects of difficulty for both reaction time and accuracy (RT: F(1, 16) = 61.70, p < .001, η² = 0.79; accuracy: F(1, 16) = 35.86, p < .001, η² = 0.69). Participants took longer and were less accurate in the hard conditions compared to the easy conditions, irrespective of feature type. There were no significant main effects of feature type (RT: F(1, 16) = 1.26, p = .28, η² = 0.07; accuracy: F(1, 16) = 1.21, p = .29, η² = 0.07) and no interactions (RT: F(1, 16) = 0.41, p = .53, η² = 0.03; accuracy: F(1, 16) = 0.10, p = .76, η² = 0.01).
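For an effect with one numerator degree of freedom, the effect sizes reported for the main effects above can be recovered from the F statistics as F × df₁ / (F × df₁ + df₂), the standard conversion for partial eta squared (e.g., 61.70 / (61.70 + 16) ≈ 0.79). A minimal check, with a hypothetical function name:

```python
def partial_eta_squared(f_stat, df_effect, df_error):
    """Partial eta squared from an F statistic and its degrees of freedom."""
    return f_stat * df_effect / (f_stat * df_effect + df_error)


# Main effects reported above, all with F(1, 16).
for label, f_stat in [("difficulty RT", 61.70), ("difficulty accuracy", 35.86),
                      ("feature RT", 1.26), ("feature accuracy", 1.21)]:
    print(f"{label}: {partial_eta_squared(f_stat, 1, 16):.2f}")
```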

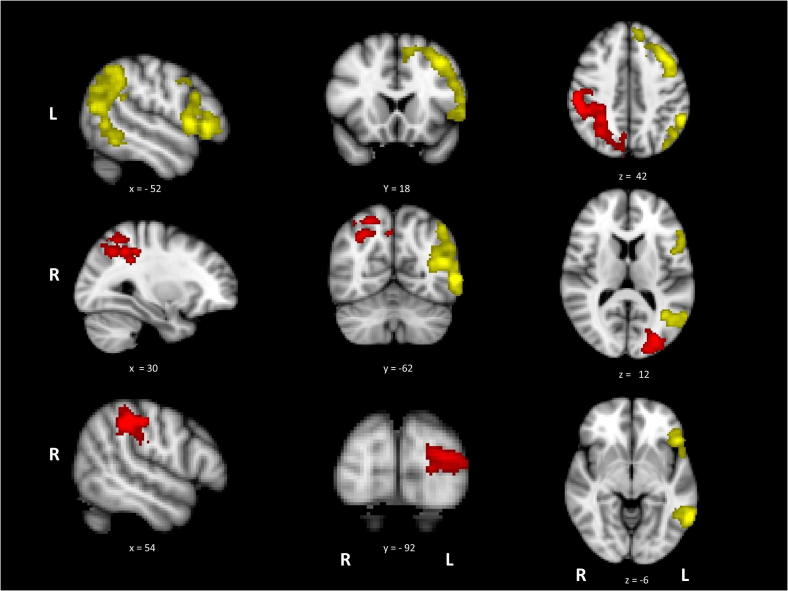

3.2. Whole brain analysis: Action vs. visual decisions

To examine differences between action and visual feature judgements, we directly contrasted the two tasks. Fig. 2 shows the activation maps for the contrasts of action > visual and visual > action judgements. Cluster maxima and sub-peaks are listed in the supplementary materials (Table S5), and the contrasts of action > rest and visual > rest are shown in supplementary materials S3 and S4. The action > visual contrast revealed large clusters in left hemisphere areas previously implicated in action processing and semantic cognition, including LIFG, premotor cortex, IPL and pMTG. The opposite contrast of visual over action judgements revealed bilateral areas involved in visual processing, including right supramarginal gyrus, left lateral occipital cortex (LO) and left occipital pole.

Fig. 2.

Activation maps for action > visual (yellow) and visual > action (red), presented on the MNI-152 standard brain with cluster correction applied (voxel inclusion threshold z = 2.3, cluster significance threshold p < .05). Image is presented using radiological convention (left hemisphere on the right-hand side).

3.3. Whole brain analysis: The effects of task difficulty

The activation map for the contrast of hard > easy decisions is shown in Fig. 3. Coordinates for cluster maxima and sub-peaks can be found in the supplementary materials (Table S6). Consistent with our predictions, the manipulation of difficulty for the semantic judgements produced activation in a distributed network associated with executive control of semantic decisions. The most extensive and strongest activity was in LIFG, but the network was bilateral, extending to RIFG, medial PFC/anterior cingulate/paracingulate and posterior temporal areas in left posterior ITG/MTG/fusiform gyrus.

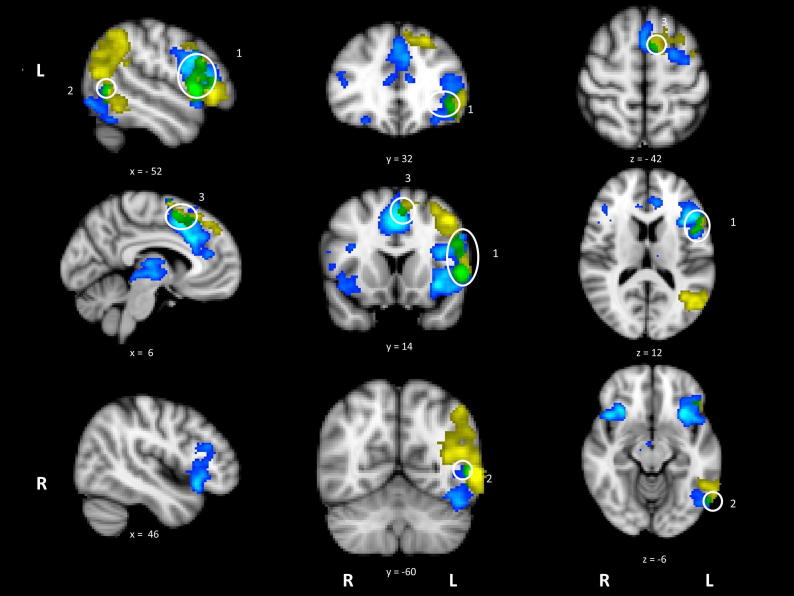

Fig. 3.

Activation maps for high difficulty > low difficulty (blue/light blue) and action > visual (yellow), with the overlap in green. White circles mark the overlap foci: [1] LIFG, [2] pMTG and [3] anterior cingulate. Data are presented on the MNI-152 standard brain with cluster correction applied (voxel inclusion threshold z = 2.3, cluster significance threshold p < .05). Image is presented using radiological convention (left hemisphere on the right-hand side).

Activation revealed by the hard > easy contrast partially overlapped with several regions also activated by action > visual judgements (see Fig. 3). These areas of overlap were found in LIFG, extending into left precentral gyrus and superior frontal gyrus (site 1), pMTG (site 2) and left paracingulate gyrus/medial PFC (site 3). In contrast, there was no overlap between the difficulty and visual > action contrasts. These findings suggest common brain regions are involved in action understanding and in dealing with the executive demands of semantic tasks.

We also tested for an interaction between task (action vs. visual) and difficulty in the whole-brain analysis; however, no such effects were found.

3.4. ROI analysis

Within each ROI, we extracted the mean percentage signal change for the four conditions (easy action, hard action, easy visual, and hard visual) for each participant and submitted the data to a 2 × 2 repeated-measures ANOVA, examining the factors of task (action vs. visual judgements) and difficulty (easy vs. hard). ANOVA results are shown in Table 1, while Figs. 4 and 5 display ROI locations on a rendered 3D brain, plus graphs displaying mean percentage signal change for each condition.
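Because both factors have two levels, each F(1, 16) in a 2 × 2 repeated-measures ANOVA equals the squared one-sample t statistic of the corresponding per-participant contrast score (for example, the hard conditions minus the easy conditions). The sketch below uses that equivalence with NumPy; the column order, function names and simulated signal changes are assumptions for illustration.

```python
import numpy as np


def one_sample_t(scores):
    """One-sample t statistic against zero (df = n - 1)."""
    scores = np.asarray(scores, float)
    return scores.mean() / (scores.std(ddof=1) / np.sqrt(len(scores)))


def rm_anova_2x2(psc):
    """F(1, n-1) for task, difficulty and interaction.

    `psc` has shape (n_subjects, 4) with columns ordered
    easy action, hard action, easy visual, hard visual.
    """
    ea, ha, ev, hv = np.asarray(psc, float).T
    scores = {
        "task": (ea + ha) - (ev + hv),
        "difficulty": (ha + hv) - (ea + ev),
        "interaction": (ha - ea) - (hv - ev),
    }
    return {name: float(one_sample_t(s) ** 2) for name, s in scores.items()}


rng = np.random.default_rng(1)
subject_level = rng.normal(0.3, 0.1, size=(17, 1))   # hypothetical offsets
effects = np.array([0.0, 0.2, 0.0, 0.2])             # difficulty effect only
psc = subject_level + effects + rng.normal(0.0, 0.05, size=(17, 4))
print(rm_anova_2x2(psc))
```

Subject-level offsets cancel in every contrast score, which is why the repeated-measures design removes between-participant differences in overall signal change.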

Table 1.

ANOVA results for the ROI analysis.

| Location | Task F | Task p | Task η² | Difficulty F | Difficulty p | Difficulty η² | Interaction F | Interaction p | Interaction η² |
|---|---|---|---|---|---|---|---|---|---|
| *Action ROIs* | | | | | | | | | |
| Left precentral gyrus | 2.01 | .175 | .120 | **10.13** | **.006** | **.380** | .693 | .417 | .040 |
| Left SMG | **5.81** | **.028** | **.270** | 4.22 | .057 | .210 | .805 | .383 | .050 |
| Left pMTG (Watson et al., 2013) | **6.18** | **.024** | **.280** | **9.22** | **.008** | **.360** | 1.10 | .309 | .070 |
| *Visual ROIs* | | | | | | | | | |
| Left fusiform gyrus | .265 | .614 | .010 | **33.68** | **<.001** | **.690** | 1.49 | .240 | .080 |
| Right fusiform gyrus | .537 | .474 | .030 | **4.85** | **.048** | **.220** | 3.35 | .086 | .170 |
| *Control ROIs* | | | | | | | | | |
| Left IFG (BA 44) | 2.42 | .139 | .132 | **53.58** | **<.001** | **.770** | .000 | .984 | .000 |
| Left IFG (BA 47) | 3.45 | .082 | .117 | **21.11** | **<.001** | **.559** | 1.59 | .225 | .091 |
| Left medial PFC | 1.59 | .226 | .090 | **46.97** | **<.001** | **.750** | .629 | .439 | .040 |
| Left IPS/dorsal AG | **12.80** | **.003** | **.450** | 3.02 | .101 | .160 | **19.06** | **<.001** | **.540** |
| Left pMTG (Noonan et al., 2013) | **6.71** | **.020** | **.300** | **8.69** | **.009** | **.350** | 1.59 | .226 | .090 |
| Right IFG (BA 44) | .191 | .668 | .012 | 3.12 | .096 | .163 | .001 | .976 | .000 |

Table reports results for 2 × 2 repeated measures ANOVAs examining the effects of task (visual vs. action feature selection) and difficulty (easy vs. hard) plus their interaction. All significant effects are reported in bold text.

3.4.1. Executive–semantic control peaks

The ROI analysis revealed significantly greater signal change for difficult vs. easy trials for all left hemisphere PFC/IFG sites (there were no significant effects in right BA44). In addition, left BA 47 showed a near-significant effect of task, reflecting somewhat greater signal change for action than visual trials. No other effects of task were observed and there were no significant interactions.

Left dorsal AG/IPS showed a highly significant interaction between task and difficulty. Difficult visual feature decisions increased recruitment of this site, whereas hard action decisions produced deactivation: hard action and hard visual trials differed significantly (t(16) = −5.32, uncorrected p < .001), while easy action and easy visual judgements did not.

Left pMTG displayed significant effects of task and difficulty, with a greater response for action trials compared to visual trials, and for difficult trials compared to easy trials, with no interaction.

3.4.2. Action peaks

Left precentral gyrus showed a significant effect of difficulty, with a stronger response to hard than easy judgements, but no effect of task and no interaction. Therefore, although this site has previously been implicated in action understanding, it is also involved in executive–semantic control, even when the task requires matching visual features.

Left SMG, a site implicated in hand praxis, showed a stronger response to action than visual trials. The main effect of difficulty did not reach significance (p = .057) and there was no interaction, suggesting that this site is recruited by action judgements irrespective of difficulty.

The pMTG peak from Watson et al. (2013) showed significant effects of both task and difficulty, with no interaction: signal change was greater for action than visual trials, and for hard than easy trials. This pattern mirrors that observed for the semantic control peak in pMTG from Noonan et al. (2013); indeed, these two ROIs, selected from different literatures, were spatially similar and partly overlapping.

3.4.3. Visual peaks

The fusiform gyrus showed significant effects of difficulty bilaterally, with stronger responses to hard than easy trials irrespective of task.

3.5. Individual overlap between contrasts examining task difficulty and action retrieval

3.5.1. LIFG

Fifteen participants (88%) showed responses to both feature type (action > visual) and difficulty (hard > easy). For these participants, we counted the number of voxels within the mask responding to (i) both contrasts, (ii) feature type only, (iii) difficulty only and (iv) neither contrast, to establish whether more voxels showed both effects than would be expected by chance (Supplementary materials, Table S7). Nine participants (60% of this subsample) showed a significant conjunction between the two contrasts when each contrast was thresholded at p = .05 (z = 1.96), i.e., more than zero overlapping voxels (mean cluster size = 47.6 voxels, s.d. = 60.6; mean MNI coordinates: −50, 22, 6, pars triangularis); however, the number of overlapping voxels varied considerably across participants. A loglinear analysis examined the frequencies of voxels responding to difficulty and feature type in the 15 participants who showed both effects, with participant identity included as an additional predictor. The final model retained all three effects and their interaction terms (for k = 3, χ²(14) = 369.7, p < .001), with a significant partial association between voxels responding to difficulty and those responding to feature type (χ²(1) = 55.9, p < .001). Follow-up chi-square analyses confirmed that, across participants, more voxels responded to both difficulty and feature type than would be expected by chance (χ²(1) = 9.19, p = .002, with continuity correction; see Table S7). In addition, a within-subjects MANOVA compared the coordinates of the peak responses for each contrast across participants (N = 15; descriptive statistics are provided in Table S8). The peaks associated with difficulty and action feature retrieval did not differ in location, F(3, 12) = 1.89, p = .19, consistent with overlapping responses.
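The voxel-counting step can be sketched as follows. Each voxel in a participant's mask is classified by whether its z value exceeds 1.96 for the feature-type contrast, the difficulty contrast, both or neither, and the resulting 2 × 2 table is tested with a Pearson chi-square that includes Yates' continuity correction. This is a minimal illustration assuming flat lists of per-voxel z values; the function names are hypothetical, the sketch does not reproduce the loglinear model with participant as a predictor, and it makes no claim about how counts were pooled across participants for Table S7.

```python
import math

Z_THRESHOLD = 1.96  # each contrast thresholded at p = .05, as in the text


def overlap_table(z_feature, z_difficulty, threshold=Z_THRESHOLD):
    """Cross-tabulate voxels: rows = feature-type effect yes/no, columns = difficulty effect yes/no."""
    if len(z_feature) != len(z_difficulty):
        raise ValueError("both z maps must cover the same voxels")
    counts = {(True, True): 0, (True, False): 0, (False, True): 0, (False, False): 0}
    for zf, zd in zip(z_feature, z_difficulty):
        counts[(zf > threshold, zd > threshold)] += 1
    return [
        [counts[(True, True)], counts[(True, False)]],    # both, feature type only
        [counts[(False, True)], counts[(False, False)]],  # difficulty only, neither
    ]


def yates_chi_square(table):
    """Pearson chi-square (df = 1) for a 2 x 2 table with Yates' continuity correction."""
    (a, b), (c, d) = table
    n = a + b + c + d
    rows = (a + b, c + d)
    cols = (a + c, b + d)
    if 0 in rows or 0 in cols:
        raise ValueError("every row and column needs at least one voxel")
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = rows[i] * cols[j] / n  # count expected if the two effects were independent
            chi2 += max(abs(observed - expected) - 0.5, 0.0) ** 2 / expected
    # For df = 1, P(chi-square > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


if __name__ == "__main__":
    # Toy z values for five hypothetical voxels (not data from this study).
    table = overlap_table([2.5, 2.0, 0.1, 3.0, 1.0], [2.1, 0.5, 2.2, 2.9, 0.0])
    print(table, yates_chi_square([[30, 10], [10, 50]]))
```

A significant result with more "both" voxels than expected indicates that the two contrasts co-occur in the same voxels more often than independence would predict; the correction makes the test slightly conservative for small counts.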

3.5.2. Posterior temporal cortex

Sixteen participants (94%) showed a response to both feature type and difficulty. Eight of these participants (50%) showed a significant conjunction between the two contrasts at p = .05 (mean cluster size = 42.1 voxels, s.d. = 57.1; mean MNI coordinates: −50, −60, 0, pMTG); however, as for LIFG, the number of overlapping voxels varied considerably across participants. The loglinear analysis used the model described above for LIFG. The final model retained all three effects and their interaction terms (for k = 3, χ²(15) = 1011.6, p < .001), with a significant partial association between voxels responding to difficulty and those responding to feature type (χ²(1) = 41.6, p < .001). Follow-up chi-square analyses confirmed that, across participants, significantly more voxels responded to both difficulty and feature type than would be expected by chance (χ²(1) = 209.6, p = .002, with continuity correction; see Table S7). A within-subjects MANOVA compared the coordinates of the peak responses for each contrast across participants (N = 16; descriptive statistics are provided in Table S8). Difficulty and action retrieval elicited overlapping yet spatially distinct peaks, F(3, 13) = 4.75, p = .02, with significant differences in the x (F(1, 15) = 8.83, p = .01) and z (F(1, 15) = 6.98, p = .02) dimensions: the peak for difficulty was more ventral and medial than the peak for action retrieval.
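With two repeated conditions and a three-dimensional outcome (x, y, z), a within-subjects MANOVA on peak coordinates is equivalent to a one-sample Hotelling's T² test on each participant's difference vector (difficulty peak minus action peak): T² = n·d̄ᵀS⁻¹d̄, converted to F = (n − p)·T² / (p(n − 1)) with (p, n − p) degrees of freedom. For p = 3 this gives F(3, 12) when N = 15 and F(3, 13) when N = 16, matching the degrees of freedom reported above. The sketch assumes one coordinate triplet per participant and contrast; the function names and toy coordinates are hypothetical, and a p-value could be obtained with `scipy.stats.f.sf(F, p, n - p)`.

```python
from statistics import mean


def solve(matrix, vector):
    """Solve matrix @ x = vector by Gauss-Jordan elimination with partial pivoting."""
    size = len(vector)
    aug = [[float(v) for v in row] + [float(b)] for row, b in zip(matrix, vector)]
    for col in range(size):
        pivot = max(range(col, size), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("covariance matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(size):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                for k in range(col, size + 1):
                    aug[r][k] -= factor * aug[col][k]
    return [aug[i][size] / aug[i][i] for i in range(size)]


def paired_hotelling_t2(peaks_a, peaks_b):
    """One-sample Hotelling's T^2 on paired peak-coordinate differences.

    Returns (T2, F, (df1, df2)) with F = (n - p) / (p * (n - 1)) * T2.
    """
    if len(peaks_a) != len(peaks_b):
        raise ValueError("need one peak per participant for each contrast")
    diffs = [[a - b for a, b in zip(pa, pb)] for pa, pb in zip(peaks_a, peaks_b)]
    n, p = len(diffs), len(diffs[0])
    if n <= p:
        raise ValueError("need more participants than coordinate dimensions")
    dbar = [mean(d[k] for d in diffs) for k in range(p)]  # mean difference vector
    # Sample covariance matrix of the difference vectors (denominator n - 1)
    cov = [
        [sum((d[i] - dbar[i]) * (d[j] - dbar[j]) for d in diffs) / (n - 1) for j in range(p)]
        for i in range(p)
    ]
    t2 = n * sum(m * x for m, x in zip(dbar, solve(cov, dbar)))
    f_value = (n - p) / (p * (n - 1)) * t2
    return t2, f_value, (p, n - p)
```

Follow-up univariate tests on each coordinate (as for the x and z effects above) correspond to paired t tests whose squares give F(1, n − 1).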

In summary, overlapping voxels responded to difficulty and to feature type (action > visual) in both LIFG and pMTG. Across individual participants, the locations of the peak responses to these two contrasts did not differ within LIFG but were spatially distinct in posterior MTG/ITG.

4. Discussion

Neuropsychological studies (Corbett, Jefferies, & Lambon Ralph, 2009; Corbett et al., 2011) and neuroimaging meta-analyses have identified apparently overlapping left-hemisphere sites that respond both to action knowledge (Watson et al., 2013) and to semantic tasks with high executive demands (Noonan et al., 2013), yet both methods have limited spatial precision. In the current study, group-level and single-subject analyses examined the extent to which brain regions implicated in difficult semantic judgements also responded when action, as opposed to visual, features had to be retrieved. We established that there is significant overlap between these contrasts in both LIFG and posterior temporal cortex (with peaks in pars triangularis and pMTG, respectively). However, while the responses to these contrasts in LIFG were spatially identical, the responses to difficulty and action retrieval in posterior temporal cortex were overlapping yet distinct. These findings suggest that there is a common distributed functional system for executive control over semantic processing and action understanding, involving both prefrontal and posterior temporal components; however, the data also point to differences in the roles and organisation of these regions.

First, the study revealed differential activation in modality-specific areas during action and visual feature judgements, which was flexibly driven by the task instructions. The retrieval of action features over visual features revealed an exclusively left-hemisphere network, including left inferior frontal and precentral cortex, inferior parietal lobule (IPL) and pMTG – regions linked to action processing and representation (Ghio & Tettamanti, 2010; Liljeström et al., 2008; Sasaki, Kochiyama, Sugiura, Tanabe, & Sadato, 2012; Watson et al., 2013; Yoon, Humphreys, Kumar, & Rotshtein, 2012). Left IPL has been implicated in the planning of tool use (Johnson-Frey, Newman-Norlund, & Grafton, 2005) and in tool-action observation and naming (Liljeström et al., 2008; Peeters et al., 2009), while pMTG is thought to be important for action, tool and event knowledge and responds across a variety of modalities (Chao, Haxby, & Martin, 1999; Liljeström et al., 2008; Noppeney, Price, Penny, & Friston, 2006). In contrast, visual > action decisions yielded bilateral activation in lateral occipital (LO) cortex implicated in object perception (Grill-Spector, Kourtzi, & Kanwisher, 2001; Grill-Spector et al., 1999), while right IPL and occipital pole were recruited during visual judgements (Liljeström et al., 2008). These findings confirm that participants were able to selectively focus their semantic processing for tools on task-relevant sensory and motor areas: activation was enhanced in sensory/motor areas relevant to the decision being performed (Schuil et al., 2013; van Dam, Rueschemeyer, Lindemann, & Bekkering, 2010).

An interesting question to emerge from these findings is how participants are able to focus attention on specific semantic features in a flexible way, depending on the task. There was little evidence that sites specifically implicated in processing visual and action features showed a selective response to task difficulty for those features. In fact, posterior fusiform cortex, associated in previous studies with visual-semantic processing (Thompson-Schill, Aguirre, D’Esposito, & Farah, 1999), showed an increased response when hard trials were contrasted with easy trials for both visual and action features; possibly reflecting increased use of visual imagery in both visual and action trials, and/or an increased response linked to word reading when the decision was hard. Instead, the goal-driven retrieval of both visual and action features in difficult trials recruited a network of regions implicated in controlled semantic processing (and, in many cases, other aspects of cognitive control), including LIFG, RIFG, medial PFC, pMTG and ventral temporal-occipital cortex. Trials in the ‘easy’ condition were relatively undemanding of executive–semantic processes, because the target items that shared the relevant action or visual feature were globally semantically related to the probe word. In contrast, for more difficult decisions, participants had to identify a target word on the basis of the task-relevant features and inhibit globally-related distracters that shared task-irrelevant features. This required the application of a varying ‘goal set’ to control the allocation of attention and to bias selection processes in a task-appropriate way.

Posterior MTG has been implicated in executive–semantic control, along with LIFG, by convergent lines of evidence. First, patients with semantic aphasia show deregulated semantic cognition in the absence of degraded semantic knowledge following either left prefrontal or left temporoparietal lesions (Jefferies & Lambon Ralph, 2006; Noonan et al., 2010); second, TMS to both LIFG and pMTG selectively disrupts semantic decisions that maximise controlled retrieval/selection, but not automatic aspects of semantic retrieval (Whitney et al., 2011); third, a recent meta-analysis of fMRI studies found that left IFG and pMTG were reliably activated across different manipulations of executive–semantic demands (Noonan et al., 2013). However, the proposal that pMTG helps to support executively demanding semantic decisions remains controversial, because differing theoretical perspectives ascribe alternative roles to pMTG, including the view that pMTG captures aspects of semantic representation linked to action/event/tool knowledge (Kellenbach, Brett, & Patterson, 2003; Kilner, 2011; Martin, 2007; Peelen, Romagno, & Caramazza, 2012; Romagno, Rota, Ricciardi, & Pietrini, 2012). Moreover, before this investigation, the roles of pMTG in action/event knowledge and in semantic control had always been examined in separate studies.

Given this controversy, perhaps the most significant finding to emerge from the current study was the overlap between the regions implicated in executive–semantic judgements and retrieving actions (as opposed to visual features). In the whole-brain analysis, areas of overlap were observed in left IFG/precentral gyrus, medial PFC (pre-SMA) and pMTG. Significant overlap was also confirmed for individual participants in LIFG and left posterior temporal lobe. In contrast, there were no areas of overlap between executive–semantic processing and the retrieval of visual features. To explain these findings, we tentatively suggest that action retrieval and executively-demanding semantic tasks may share some cognitive processes that are supported by the network revealed here. Representations of actions and events must be flexibly controlled to suit the context or task – for example, we can retrieve very different actions for the object ‘shoe’ if the task is to bang in tent pegs rather than fasten our laces. The action decisions in this experiment required participants to establish contexts in which the probe and target objects could be used in a similar way, and in many trials this would have involved linking actions to their recipients (e.g., easy action trials involved recognising that both a highlighter and a felt tip are drawn across a sheet of paper; hard action trials involved recognising that a similar action is made when drawing a match across the box). Arguably, the matching of visual features for tools in the easy condition did not involve retrieval of a spatiotemporal context to the same degree – e.g., when thinking about the shape of a “TV remote”, it is perhaps not necessary to think about the object interacting with other objects within its environment to see the shape similarity with “mobile phone”. 
However, for more difficult trials loading semantic control, even those involving visual decisions, there was a requirement to match items on a specific feature and disregard a globally-related distracter (e.g., “TV remote” with “soap bar” not “radio”): thus, activation within the semantic system had to be tailored to suit the context specified by the instructions within each block. Manipulations of semantic control demands generally have this quality: they require participants to retrieve specific associations and features which may be non-dominant but which are required for that trial or task (e.g., associations such as “slippery” and “mud” must be retrieved for the word “bank”, in the context of “river”). This might explain why action retrieval (in both easy and hard trials) and specific feature matching on harder trials (irrespective of feature type) recruited an LIFG-pMTG network. We propose that this network shows activation when semantic cognition is tailored in a flexible way to suit the context in which retrieval occurs. These sites may be involved in the creation and maintenance of a task set or semantic ‘context’ which facilitates the controlled and flexible retrieval of stored multimodal semantic information such that it is appropriate to ongoing goals. This proposal is compatible with Turken and Dronkers’s (2011) suggestion that interactions between ventral PFC and pMTG allow selected aspects of meaning to be sustained in short-term memory such that they can be integrated into the overall context.

Although we propose that the sites within this functional network are recruited together, and that controlled aspects of semantic cognition emerge from their interaction, it is also likely that they each make a unique contribution to our flexible retrieval of concepts. Indeed, there were some differences in their responses in the current study. ROIs in posterior LIFG and medial PFC demonstrated strong effects of control demands irrespective of the semantic feature to be retrieved. This pattern was observed not only for LIFG (within ROIs determined by the semantic control literature) but also in left premotor cortex (within an ROI associated with action understanding). Moreover, individual participants’ peak responses to contrasts examining difficulty and action retrieval were not spatially distinct in LIFG, suggesting that the same voxels were recruited in both action understanding and difficult feature selection. In contrast, in our pMTG ROI, there was a main effect of both difficulty and feature type – i.e., pMTG showed greater activity for hard relative to easy trials, and for action decisions compared with visual decisions. Individual participants’ peak responses to these contrasts were overlapping in pMTG yet spatially distinct within posterior temporal cortex, suggesting that LIFG co-activates with somewhat different neuronal populations during action retrieval and difficult feature selection. 
One possibility is that while LIFG and pMTG both contribute to shaping semantic retrieval in line with a semantic context (driving their engagement in both action understanding and difficult trials across feature types, according to the arguments above), posterior ITG is additionally recruited during difficult feature selection: resting-state functional connectivity analyses show coupling of this region with networks implicated in semantic control (Spreng, Stevens, Chamberlain, Gilmore, & Schacter, 2010; Yeo et al., 2011), and this site is commonly recruited across executively demanding tasks involving visual inputs (Duncan & Owen, 2000). This could pull the peak for the difficulty contrast in a ventral and medial direction relative to the peak for the action contrast in single-subject analyses, in line with our observations. Interestingly, our data therefore hint that there might be more than one response in posterior temporal cortex associated with semantic control: a region in pMTG within Yeo et al.’s (2011) ‘frontoparietal control system’, which might support the retrieval of contextually appropriate but non-dominant semantic information, and an adjacent region in ITG within the ‘dorsal attention network’, which might be recruited to resolve competition during feature selection more widely (Hindy, Altmann, Kalenik, & Thompson-Schill, 2012; Hindy, Solomon, Altmann, & Thompson-Schill, 2013), and which is also recruited when non-semantic tasks are executively demanding (e.g., Duncan, 2013).

Sites within left inferior parietal cortex are also variably implicated in knowledge of events and semantic associations, praxis for tools, and semantic control (Binder, Desai, Graves, & Conant, 2009; Humphries, Binder, Medler, & Liebenthal, 2007; Kim, 2011; Kim, Karunanayaka, Privitera, Holland, & Szaflarski, 2011; Noonan et al., 2013; Pobric et al., 2010; Wirth et al., 2011). However, a common area of activation across contrasts examining action retrieval and semantic control was not observed in this study, presumably because there are multiple regions within left IPL with different response profiles (Noonan et al., 2013; Seghier, Fagan, & Price, 2010). Anterior SMG/IPS is associated with action observation and tool praxis (Caspers, Zilles, Laird, & Eickhoff, 2010; Watson et al., 2013), while dorsal AG/IPS emerged as part of the semantic control network in the meta-analysis of Noonan et al. (2013). In contrast to both of these sites, more ventral/posterior aspects of AG show a stronger response to semantic than non-semantic tasks, particularly for concrete concepts (Binder, Westbury, McKiernan, Possing, & Medler, 2005; Wang, Conder, Blitzer, & Shinkareva, 2010), yet no effect of control demands (Noonan et al., 2013).

Dorsal AG/IPS, unlike other regions showing a response to semantic control demands, showed an increased response with difficulty for visual features, but task-related deactivation for hard action trials. This interaction between difficulty and task is a novel finding which speaks to the role of dorsal AG within and beyond semantic cognition. Broadly speaking, IPL has been proposed to play a crucial role in reflexive visual attention (Corbetta & Shulman, 2002; Konen, Kleiser, Wittsack, Bremmer, & Seitz, 2004; Nobre, Coull, Walsh, & Frith, 2003). Left IPL may therefore show deactivation when participants perform more demanding tasks which would be disrupted by allocating attention to changing visual inputs. In line with this proposal, we found that dorsal AG/IPS showed above baseline activation when attention to visual features was necessary to perform the task, particularly for harder judgements. In contrast, it showed deactivation when attention was directed towards non-visual features (e.g., actions), again, particularly when these decisions were hard. In short, this site might play an important role in allocating attention towards different types of features according to the task requirements, even when these features are internally represented and not present in the input.

In conclusion, we manipulated semantic control demands and the feature to be matched in the same experiment, revealing overlapping responses to semantic control demands and action knowledge in left IFG/precentral gyrus, medial PFC (pre-SMA) and pMTG at both the group and single-subject level. We also identified a distinct response to semantic selection demands, but not to action retrieval, in posterior ITG (pITG).

Acknowledgments

We would like to thank Pavel Gogolev and Yannan Liu for their contribution to experiment design, data acquisition and pre-processing, Silvia Gennari for her advice regarding data analysis and Hannah Thompson for her useful comments on a draft of this paper. The research was partially supported by BBSRC grant BB/J006963/1. Jefferies was supported by a grant from the European Research Council (283530 – SEMBIND).

Appendix A. Supplementary material

Supplementary material contains Tables S1–S8 and Figs. S1–S4.

References

- Badre D., Poldrack R.A., Paré-Blagoev E.J., Insler R.Z., Wagner A.D. Dissociable controlled retrieval and generalized selection mechanisms in ventrolateral prefrontal cortex. Neuron. 2005;47:907–918. doi: 10.1016/j.neuron.2005.07.023. [DOI] [PubMed] [Google Scholar]

- Binder J.R., Desai R.H., Graves W.W., Conant L.L. Where is the semantic system? A critical review and meta-analysis of 120 functional neuroimaging studies. Cerebral Cortex (New York, NY: 1991) 2009;19(12):2767–2796. doi: 10.1093/cercor/bhp055. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Binder J.R., Westbury C.F., McKiernan K.A., Possing E.T., Medler D.A. Distinct brain systems for processing concrete and abstract concepts. Journal of Cognitive Neuroscience. 2005;17(6):905–917. doi: 10.1162/0898929054021102. [DOI] [PubMed] [Google Scholar]

- Bozeat S., Lambon Ralph M.A., Patterson K., Garrard P., Hodges J.R. Non-verbal semantic impairment in semantic dementia. Neuropsychologia. 2000;38:1207–1215. doi: 10.1016/s0028-3932(00)00034-8. [DOI] [PubMed] [Google Scholar]

- Caspers S., Zilles K., Laird A.R., Eickhoff S.B. ALE meta-analysis of action observation and imitation in the human brain. NeuroImage. 2010;50:1148–1167. doi: 10.1016/j.neuroimage.2009.12.112. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chao, Haxby, Martin Attribute-based neural substrates in temporal cortex for perceiving and knowing about objects. Nature Neuroscience. 1999;2:913–919. doi: 10.1038/13217. [DOI] [PubMed] [Google Scholar]

- Chouinard P.A., Goodale M.A. Category-specific neural processing for naming pictures of animals and naming pictures of tools: An ALE meta-analysis. Neuropsychologia. 2010;48:409–418. doi: 10.1016/j.neuropsychologia.2009.09.032. [DOI] [PubMed] [Google Scholar]

- Chouinard P.A., Goodale M.A. FMRI-adaptation to highly-rendered color photographs of animals and manipulable artifacts during a classification task. NeuroImage. 2012;59(3):2941–2951. doi: 10.1016/j.neuroimage.2011.09.073. [DOI] [PubMed] [Google Scholar]

- Corbett F., Jefferies E., Ehsan S., Lambon Ralph M.A. Different impairments of semantic cognition in semantic dementia and semantic aphasia: Evidence from the non-verbal domain. Brain. 2009;132:2593–2608. doi: 10.1093/brain/awp146. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Corbett F., Jefferies E., Lambon Ralph M.A. Exploring multimodal semantic control impairments in semantic aphasia: Evidence from naturalistic object use. Neuropsychologia. 2009;47:2721–2731. doi: 10.1016/j.neuropsychologia.2009.05.020. [DOI] [PubMed] [Google Scholar]

- Corbett F., Jefferies E., Lambon Ralph M.A. Deregulated semantic cognition follows prefrontal and temporo-parietal damage: Evidence from the impact of task constraint on nonverbal object use. Journal of Cognitive Neuroscience. 2011;23(5):1125–1135. doi: 10.1162/jocn.2010.21539. [DOI] [PubMed] [Google Scholar]

- Corbetta M., Shulman G.L. Control of goal-directed and stimulus-driven attention in the brain. Nature Reviews Neuroscience. 2002;3(3):201–215. doi: 10.1038/nrn755. [DOI] [PubMed] [Google Scholar]

- Davis C.J. N-watch: A program for deriving neighborhood size and other psycholinguistic statistics. Behavior Research Methods. 2005;37(1):65–70. doi: 10.3758/bf03206399. [DOI] [PubMed] [Google Scholar]

- Desikan R.S., Segonne F., Fischl B., Quinn B.T., Dickerson B.C., Blacker D. An automated labeling system for subdividing the human cerebral cortex on MRI scans into gyral based regions of interest. Neuroimage. 2006;31(3):968–980. doi: 10.1016/j.neuroimage.2006.01.021. [DOI] [PubMed] [Google Scholar]

- Devlin J.T., Matthews P.M., Rushworth M.F.S. Semantic processing in the left inferior prefrontal cortex: A combined functional magnetic resonance imaging and transcranial magnetic stimulation study. Journal of Cognitive Neuroscience. 2003;15:71–84. doi: 10.1162/089892903321107837. [DOI] [PubMed] [Google Scholar]

- Devlin J.T., Russell R.P., Davis M.H., Price C.J., Moss H.E., Fadili M.J. Is there an anatomical basis for category-specificity? Semantic memory studies in PET and fMRI. Neuropsychologia. 2002;40:54–75. doi: 10.1016/s0028-3932(01)00066-5. [DOI] [PubMed] [Google Scholar]

- Duncan J. The multiple-demand (MD) system of the primate brain: Mental programs for intelligent behaviour. Trends in Cognitive Sciences. 2010;14(4):172–179. doi: 10.1016/j.tics.2010.01.004. [DOI] [PubMed] [Google Scholar]

- Duncan J. The Structure of Cognition: Attentional episodes in mind and brain. Neuron. 2013;80:35–50. doi: 10.1016/j.neuron.2013.09.015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Duncan J., Owen A.M. Common regions of the human frontal lobe recruited by diverse cognitive demands. Trends in Neurosciences. 2000;23(10):475–483. doi: 10.1016/s0166-2236(00)01633-7. [DOI] [PubMed] [Google Scholar]

- Fedorenko E., Duncan J., Kanwisher N. Broad domain generality in focal regions of frontal and parietal cortex. Proceedings of the National Academy of Sciences of the United States of America. 2013;110(41):16616–16621. doi: 10.1073/pnas.1315235110. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Frazier J.A., Chiu S., Breeze J.L., Makris N., Lange N., Kennedy D.N. Structural brain magnetic resonance imaging of limbic and thalamic volumes in pediatric bipolar disorder. American Journal of Psychiatry. 2005;162(7):1256–1265. doi: 10.1176/appi.ajp.162.7.1256. [DOI] [PubMed] [Google Scholar]

- Ghio M., Tettamanti M. Semantic domain-specific functional integration for action-related vs. abstract concepts. Brain and Language. 2010;112(3):223–232. doi: 10.1016/j.bandl.2008.11.002. [DOI] [PubMed] [Google Scholar]

- Goldstein J.M., Seidman L.J., Makris N., Ahern T., O’Brien L.M., Caviness V.S., Jr. Hypothalamic abnormalities in schizophrenia: Sex effects and genetic vulnerability. Biological Psychiatry. 2007;61(8):935–945. doi: 10.1016/j.biopsych.2006.06.027. [DOI] [PubMed] [Google Scholar]

- Gouws A., Woods W., Millman R., Morland A., Green G. DataViewer3D: An open-source, cross-platform multi-modal neuroimaging data visualization tool. Frontiers in Neuroinformatics. 2009;3:9. doi: 10.3389/neuro.11.009.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Grill-Spector K., Kourtzi Z., Kanwisher N. The lateral occipital complex and its role in object recognition. Vision Research. 2001;41:1409–1422. doi: 10.1016/s0042-6989(01)00073-6. [DOI] [PubMed] [Google Scholar]

- Grill-Spector K., Kushnir T., Edelman S., Avidan G., Itzchak Y., Malach R. Differential processing of objects under various viewing conditions in the human lateral occipital complex. Neuron. 1999;24:187–203. doi: 10.1016/s0896-6273(00)80832-6. [DOI] [PubMed] [Google Scholar]

- Grinband J., Wager T.D., Lindquist M., Ferrera V.P., Hirsch J. Detection of time-varying signals in event-related fMRI designs. NeuroImage. 2008;43(3):509–520. doi: 10.1016/j.neuroimage.2008.07.065. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hauk O., Pulvermüller F. Neurophysiological distinction of action words in the fronto-central cortex. Human Brain Mapping. 2004;21(3):191–201. doi: 10.1002/hbm.10157. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hindy N.C., Altmann G.T.M., Kalenik E., Thompson-Schill S.L. The effect of object state-changes on event processing: Do objects compete with themselves? The Journal of Neuroscience. The Official Journal of the Society for Neuroscience. 2012;32(17):5795–5803. doi: 10.1523/JNEUROSCI.6294-11.2012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hindy N.C., Solomon S.H., Altmann G.T.M., Thompson-Schill S.L. A cortical network for the encoding of object change. Cerebral Cortex (New York, NY: 1991) 2013 doi: 10.1093/cercor/bht275. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hoenig K., Sim E.-J., Bochev V., Herrnberger B., Kiefer M. Conceptual flexibility in the human brain: Dynamic recruitment of semantic maps from visual, motor, and motion-related areas. Journal of Cognitive Neuroscience. 2008;20(10):1799–1814. doi: 10.1162/jocn.2008.20123. [DOI] [PubMed] [Google Scholar]

- Hoffman P., Jefferies E., Lambon Ralph M.A. Ventrolateral prefrontal cortex plays an executive regulation role in comprehension of abstract words: Convergent neuropsychological and repetitive TMS evidence. The Journal of Neuroscience. The Official Journal of the Society for Neuroscience. 2010;30(46):15450–15456. doi: 10.1523/JNEUROSCI.3783-10.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Humphries C., Binder J.R., Medler D., Liebenthal E. Time course of semantic processes during sentence comprehension: An fMRI study. NeuroImage. 2007;36:924–932. doi: 10.1016/j.neuroimage.2007.03.059. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ishibashi R., Lambon Ralph M.A., Saito S., Pobric G. Different roles of lateral anterior temporal lobe and inferior parietal lobule in coding function and manipulation tool knowledge: Evidence from an rTMS study. Neuropsychologia. 2011;49(5):1128–1135. doi: 10.1016/j.neuropsychologia.2011.01.004. [DOI] [PubMed] [Google Scholar]

- Jefferies E. The neural basis of semantic cognition: Converging evidence from neuropsychology, neuroimaging and TMS. Cortex; A Journal Devoted to the Study of the Nervous System and Behavior. 2013;49(3):611–625. doi: 10.1016/j.cortex.2012.10.008. [DOI] [PubMed] [Google Scholar]

- Jefferies E., Lambon Ralph M.A. Semantic impairment in stroke aphasia versus semantic dementia: A case-series comparison. Brain: A Journal of Neurology. 2006;129(8):2132–2147. doi: 10.1093/brain/awl153. [DOI] [PubMed] [Google Scholar]

- Jenkinson M., Bannister P., Brady M., Smith S. Improved optimization for the robust and accurate linear registration and motion correction of brain images. NeuroImage. 2002;17(2):825–841. doi: 10.1016/s1053-8119(02)91132-8. [DOI] [PubMed] [Google Scholar]

- Jenkinson M., Beckmann C.F., Behrens T.E.J., Woolrich M.W., Smith S.M. FSL. NeuroImage. 2012;62(2):782–790. doi: 10.1016/j.neuroimage.2011.09.015. [DOI] [PubMed] [Google Scholar]

- Johnson-Frey S.H., Newman-Norlund R., Grafton S.T. A distributed left hemisphere network active during planning of everyday tool use skills. Cerebral Cortex (New York, NY: 1991) 2005;15(6):681–695. doi: 10.1093/cercor/bhh169. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kellenbach M.L., Brett M., Patterson K. Actions speak louder than functions: The importance of manipulability and action in tool representation. Journal of Cognitive Neuroscience. 2003;15:30–46. doi: 10.1162/089892903321107800. [DOI] [PubMed] [Google Scholar]

- Kilner J.M. More than one pathway to action understanding. Trends in Cognitive Sciences. 2011;15(8):352–357. doi: 10.1016/j.tics.2011.06.005.

- Kim H. Differential neural activity in the recognition of old versus new events: An activation likelihood estimation meta-analysis. Human Brain Mapping. 2011;34(4) doi: 10.1002/hbm.21474.

- Kim K.K., Karunanayaka P., Privitera M.D., Holland S.K., Szaflarski J.P. Semantic association investigated with functional MRI and independent component analysis. Epilepsy & Behavior: E&B. 2011;20(4):613–622. doi: 10.1016/j.yebeh.2010.11.010.

- Konen C.S., Kleiser R., Wittsack H.-J., Bremmer F., Seitz R.J. The encoding of saccadic eye movements within human posterior parietal cortex. NeuroImage. 2004;22(1):304–314. doi: 10.1016/j.neuroimage.2003.12.039.

- Liljeström M., Tarkiainen A., Parviainen T., Kujala J., Numminen J., Hiltunen J. Perceiving and naming actions and objects. NeuroImage. 2008;41(3):1132–1141. doi: 10.1016/j.neuroimage.2008.03.016.

- Makris N., Goldstein J.M., Kennedy D., Hodge S.M., Caviness V.S., Faraone S.V. Decreased volume of left and total anterior insular lobule in schizophrenia. Schizophrenia Research. 2006;83(2):155–171. doi: 10.1016/j.schres.2005.11.020.

- Martin A. The representation of object concepts in the brain. Annual Review of Psychology. 2007;58:25–45. doi: 10.1146/annurev.psych.57.102904.190143.

- Martin A., Simmons W.K., Beauchamp M.S., Gotts S.J. Is a single ‘hub’, with lots of spokes, an accurate description of the neural architecture of action semantics? Comment on “Action semantics: A unifying conceptual framework for the selective use of multimodal and modality-specific object knowledge” by van Elk, Van Schie and Bekkering. Physics of Life Reviews. 2014;11(2):261–262. doi: 10.1016/j.plrev.2014.01.002.

- Meteyard L., Rodriguez Cuadrado S., Bahrami B., Vigliocco G. Coming of age: A review of embodiment and the neuroscience of semantics. Cortex; A Journal Devoted to the Study of the Nervous System and Behavior. 2012;48(7):788–804. doi: 10.1016/j.cortex.2010.11.002.

- Nobre C., Coull J.T., Walsh V., Frith C.D. Brain activations during visual search: Contributions of search efficiency versus feature binding. NeuroImage. 2003;18:91–103. doi: 10.1006/nimg.2002.1329.

- Noonan K.A., Jefferies E., Corbett F., Lambon Ralph M.A. Elucidating the nature of deregulated semantic cognition in semantic aphasia: Evidence for the roles of prefrontal and temporo-parietal cortices. Journal of Cognitive Neuroscience. 2010;22(7):1597–1613. doi: 10.1162/jocn.2009.21289.

- Noonan K., Jefferies E., Visser M., Lambon Ralph M.A. Going beyond inferior prefrontal involvement in semantic control: Evidence for the additional contribution of dorsal angular gyrus and posterior middle temporal cortex. Journal of Cognitive Neuroscience. 2013;25(11):1824–1850. doi: 10.1162/jocn_a_00442.

- Noppeney U., Price C.J., Penny W.D., Friston K.J. Two distinct neural mechanisms for category-selective responses. Cerebral Cortex (New York, NY: 1991). 2006;16(3):437–445. doi: 10.1093/cercor/bhi123.

- Patterson K., Nestor P.J., Rogers T.T. Where do you know what you know? The representation of semantic knowledge in the human brain. Nature Reviews Neuroscience. 2007;8(12):976–987. doi: 10.1038/nrn2277.

- Peelen M.V., Romagno D., Caramazza A. Independent representations of verbs and actions in left lateral temporal cortex. Journal of Cognitive Neuroscience. 2012;24(10):2096–2107. doi: 10.1162/jocn_a_00257.

- Peeters R., Simone L., Nelissen K., Fabbri-Destro M., Vanduffel W., Rizzolatti G. The representation of tool use in humans and monkeys: Common and uniquely human features. The Journal of Neuroscience: The Official Journal of the Society for Neuroscience. 2009;29(37):11523–11539. doi: 10.1523/JNEUROSCI.2040-09.2009.

- Pobric G., Jefferies E., Lambon Ralph M.A. Category-specific versus category-general semantic impairment induced by transcranial magnetic stimulation. Current Biology. 2010;20(10):964–968. doi: 10.1016/j.cub.2010.03.070.

- Pulvermüller F. How neurons make meaning: Brain mechanisms for embodied and abstract-symbolic semantics. Trends in Cognitive Sciences. 2013;17(9) doi: 10.1016/j.tics.2013.06.004.

- Raposo A., Moss H.E., Stamatakis E.A., Tyler L.K. Modulation of motor and premotor cortices by actions, action words and action sentences. Neuropsychologia. 2009;47(2):388–396. doi: 10.1016/j.neuropsychologia.2008.09.017.

- Rodd J.M., Johnsrude I.S., Davis M.H. The role of domain-general frontal systems in language comprehension: Evidence from dual-task interference and semantic ambiguity. Brain and Language. 2010;115(3):182–188. doi: 10.1016/j.bandl.2010.07.005.

- Romagno D., Rota G., Ricciardi E., Pietrini P. Where the brain appreciates the final state of an event: The neural correlates of telicity. Brain and Language. 2012;123(1):68–74. doi: 10.1016/j.bandl.2012.06.003.

- Rüeschemeyer S.-A., Brass M., Friederici A.D. Comprehending prehending: Neural correlates of processing verbs with motor stems. Journal of Cognitive Neuroscience. 2007;19:855–865. doi: 10.1162/jocn.2007.19.5.855.

- Rueschemeyer S.-A., van Rooij D., Lindemann O., Willems R.M., Bekkering H. The function of words: Distinct neural correlates for words denoting differently manipulable objects. Journal of Cognitive Neuroscience. 2010;22(8):1844–1851. doi: 10.1162/jocn.2009.21310.

- Sasaki A.T., Kochiyama T., Sugiura M., Tanabe H.C., Sadato N. Neural networks for action representation: A functional magnetic-resonance imaging and dynamic causal modeling study. Frontiers in Human Neuroscience. 2012;6:236. doi: 10.3389/fnhum.2012.00236.

- Schuil K.D.I., Smits M., Zwaan R.A. Sentential context modulates the involvement of the motor cortex in action language processing: An fMRI study. Frontiers in Human Neuroscience. 2013;7:1–13. doi: 10.3389/fnhum.2013.00100.

- Seghier M.L., Fagan E., Price C.J. Functional subdivisions in the left angular gyrus where the semantic system meets and diverges from the default network. The Journal of Neuroscience: The Official Journal of the Society for Neuroscience. 2010;30(50):16809–16817. doi: 10.1523/JNEUROSCI.3377-10.2010.

- Shtyrov Y., Butorina A., Nikolaeva A., Stroganova T. Automatic ultrarapid activation and inhibition of cortical motor systems in spoken word comprehension. Proceedings of the National Academy of Sciences of the United States of America. 2014;111(18):E1918–E1923. doi: 10.1073/pnas.1323158111.

- Simmons W.K., Martin A. The anterior temporal lobes and the functional architecture of semantic memory. Journal of the International Neuropsychological Society: JINS. 2009;15(5):645–649. doi: 10.1017/S1355617709990348.

- Smith S.M. Fast robust automated brain extraction. Human Brain Mapping. 2002;17(3):143–155. doi: 10.1002/hbm.10062.

- Smith S.M., Jenkinson M., Woolrich M.W., Beckmann C.F., Behrens T.E.J., Johansen-Berg H. Advances in functional and structural MR image analysis and implementation as FSL. NeuroImage. 2004;23:S208–S219. doi: 10.1016/j.neuroimage.2004.07.051.

- Spreng R.N., Stevens W.D., Chamberlain J.P., Gilmore A.W., Schacter D.L. Default network activity, coupled with the frontoparietal control network, supports goal-directed cognition. NeuroImage. 2010;53(1):303–317. doi: 10.1016/j.neuroimage.2010.06.016.

- Spunt R.P., Lieberman M.D. Dissociating modality-specific and supramodal neural systems for action understanding. The Journal of Neuroscience: The Official Journal of the Society for Neuroscience. 2012;32(10):3575–3583. doi: 10.1523/JNEUROSCI.5715-11.2012.

- Thompson-Schill S.L., Aguirre G.K., D’Esposito M., Farah M.J. A neural basis for category and modality specificity of semantic knowledge. Neuropsychologia. 1999;37:671–676. doi: 10.1016/s0028-3932(98)00126-2.

- Thompson-Schill S.L., D’Esposito M., Aguirre G.K., Farah M.J. Role of left inferior prefrontal cortex in retrieval of semantic knowledge: A reevaluation. Proceedings of the National Academy of Sciences of the United States of America. 1997;94(26):14792–14797. doi: 10.1073/pnas.94.26.14792.

- Tomasino B., Rumiati R.I. At the mercy of strategies: The role of motor representations in language understanding. Frontiers in Psychology. 2013;4:27. doi: 10.3389/fpsyg.2013.00027.

- Turken A.U., Dronkers N.F. The neural architecture of the language comprehension network: Converging evidence from lesion and connectivity analyses. Frontiers in Systems Neuroscience. 2011;5:1–20. doi: 10.3389/fnsys.2011.00001.

- van Dam W.O., Rueschemeyer S.-A., Lindemann O., Bekkering H. Context effects in embodied lexical-semantic processing. Frontiers in Psychology. 2010;1:1–6. doi: 10.3389/fpsyg.2010.00150.

- van Dam W.O., van Dijk M., Bekkering H., Rueschemeyer S.-A. Flexibility in embodied lexical-semantic representations. Human Brain Mapping. 2012;33(10):2322–2333. doi: 10.1002/hbm.21365.

- van Elk M., van Schie H., Bekkering H. The scope and limits of action semantics: Reply to comments on ‘Action semantics: A unifying conceptual framework for the selective use of multimodal and modality-specific object knowledge’. Physics of Life Reviews. 2014;11(2):273–279. doi: 10.1016/j.plrev.2014.03.009.

- Visser M., Jefferies E., Embleton K.V., Lambon Ralph M.A. Both the middle temporal gyrus and the ventral anterior temporal area are crucial for multimodal semantic processing: Distortion-corrected fMRI evidence for a double gradient of information convergence in the temporal lobes. Journal of Cognitive Neuroscience. 2012;24(8):1766–1778. doi: 10.1162/jocn_a_00244.

- Visser M., Jefferies E., Lambon Ralph M.A. Semantic processing in the anterior temporal lobes: A meta-analysis of the functional neuroimaging literature. Journal of Cognitive Neuroscience. 2010;22(6):1083–1094. doi: 10.1162/jocn.2009.21309.

- Vitali P., Abutalebi J., Tettamanti M., Rowe J., Scifo P., Fazio F. Generating animal and tool names: An fMRI study of effective connectivity. Brain and Language. 2005;93(1):32–45. doi: 10.1016/j.bandl.2004.08.005.

- Wagner A.D., Paré-Blagoev E.J., Clark J., Poldrack R.A. Recovering meaning: Left prefrontal cortex guides controlled semantic retrieval. Neuron. 2001;31(2):329–338. doi: 10.1016/s0896-6273(01)00359-2.

- Wang J., Conder J., Blitzer D., Shinkareva S. Neural representation of abstract and concrete concepts: A meta-analysis of neuroimaging studies. Human Brain Mapping. 2010;31(10):1459–1468. doi: 10.1002/hbm.20950.

- Watson C.E., Cardillo E.R., Ianni G.R., Chatterjee A. Action concepts in the brain: An activation likelihood estimation meta-analysis. Journal of Cognitive Neuroscience. 2013;25(8):1191–1205. doi: 10.1162/jocn_a_00401.

- Whitney C., Jefferies E., Kircher T. Heterogeneity of the left temporal lobe in semantic representation and control: Priming multiple versus single meanings of ambiguous words. Cerebral Cortex (New York, NY: 1991). 2011;21(4):831–844. doi: 10.1093/cercor/bhq148.

- Whitney C., Kirk M., O’Sullivan J., Lambon Ralph M.A., Jefferies E. The neural organization of semantic control: TMS evidence for a distributed network in left inferior frontal and posterior middle temporal gyrus. Cerebral Cortex (New York, NY: 1991). 2011;21(5):1066–1075. doi: 10.1093/cercor/bhq180.

- Whitney C., Kirk M., O’Sullivan J., Lambon Ralph M.A., Jefferies E. Executive semantic processing is underpinned by a large-scale neural network: Revealing the contribution of left prefrontal, posterior temporal, and parietal cortex to controlled retrieval and selection using TMS. Journal of Cognitive Neuroscience. 2012;24(1):133–147. doi: 10.1162/jocn_a_00123.

- Wirth M., Jann K., Dierks T., Federspiel A., Wiest R., Horn H. Semantic memory involvement in the default mode network: A functional neuroimaging study using independent component analysis. NeuroImage. 2011;54(4):3057–3066. doi: 10.1016/j.neuroimage.2010.10.039.

- Woo C.-W., Krishnan A., Wager T.D. Cluster-extent based thresholding in fMRI analyses: Pitfalls and recommendations. NeuroImage. 2014;91:412–419. doi: 10.1016/j.neuroimage.2013.12.058.

- Woolgar A., Hampshire A., Thompson R., Duncan J. Adaptive coding of task-relevant information in human frontoparietal cortex. The Journal of Neuroscience: The Official Journal of the Society for Neuroscience. 2011;31(41):14592–14599. doi: 10.1523/JNEUROSCI.2616-11.2011.

- Woolrich M.W., Jbabdi S., Patenaude B., Chappell M., Makni S., Behrens T. Bayesian analysis of neuroimaging data in FSL. NeuroImage. 2009;45:S173–S186. doi: 10.1016/j.neuroimage.2008.10.055.