Abstract

The amygdala is thought to play a critical role in detecting salient stimuli. Several studies have taken ecological approaches to investigating such saliency and have argued for domain-specific effects in processing certain natural stimulus categories, in particular faces and animals. Consistent with this, neurons in the human amygdala have been found to respond strongly to faces and also to animals. However, whether the amygdala is necessary for such category-specific effects at the behavioral level remains untested. Here we tested four rare patients with bilateral amygdala lesions on an established change-detection protocol. Consistent with previously published studies, healthy controls showed reliably faster and more accurate detection of people and animals than of artifacts and plants. So did all four amygdala patients: there were no differences in phenomenal change blindness, in behavioral reaction time to detect changes or in eye-tracking measures. The findings provide decisive evidence against a critical participation of the amygdala in rapid initial processing of attention to animate stimuli, suggesting that the necessary neural substrates for this phenomenon arise either in other subcortical structures (such as the pulvinar) or within the cortex itself.

Keywords: change detection, amygdala, attention, eye-tracking

INTRODUCTION

The human amygdala clearly contributes to processing emotionally salient and socially relevant stimuli (Kling and Brothers, 1992, LeDoux, 1996, Adolphs, 2010). Although most studies have investigated stimuli that are salient because they are emotionally arousing (McGaugh, 2004) or involve reward-related valuation (Baxter and Murray, 2002, Paton et al., 2006), recent findings show that the amygdala processes salient stimuli even when there is no emotional component involved at all (Herry et al., 2007). Earlier notions that the amygdala specifically mediates fear processing have been replaced by recent accounts that it is involved in processing a broader spectrum of salient stimuli, such as biological values and rewards (Baxter and Murray, 2002), novel objects (Bagshaw et al., 1972), emotion-enhanced vividness (Todd et al., 2012), animate entities (Yang et al., 2012b), temporal unpredictability (Herry et al., 2007) and personal space (Kennedy et al., 2009). While some of these may involve fear processing, it has been argued that a more parsimonious explanation is that the amygdala instead acts as a detector of perceptual saliency and biological relevance (Sander et al., 2005, Adolphs, 2008).

One category of salient stimuli that has recently been investigated is animate (living) stimuli (New et al., 2007, Mormann et al., 2011). Subjects can rapidly detect animals in briefly presented novel natural scenes even when attentional resources are extremely limited (Li et al., 2002), suggesting that such detection may in fact be pre-attentive. Furthermore, images of animals and people are detected preferentially during change blindness tasks (New et al., 2007), an approach on which we capitalized here. The amygdala's role in such preferential detection also relates to a large literature of neuroimaging studies suggesting that amygdala activation to faces can be seen even under conditions of reduced attention or subliminal presentation (Morris et al., 1998, Whalen et al., 1998, Morris et al., 2001, Vuilleumier et al., 2001, Anderson et al., 2003, Jiang and He, 2006) [but see (Pessoa et al., 2006)]. Importantly, recent studies have shown that single neurons directly recorded in the human amygdala respond preferentially to images of animals (Mormann et al., 2011) as well as to images of faces (Rutishauser et al., 2011). This raises the question of whether the strong neuronal responses tuned to animals in the amygdala (Mormann et al., 2011) have a behavioral consequence, such as enhanced attention to animals (New et al., 2007). If so, we would expect reduced preferential detection of animals in patients with amygdala lesions.

Here we tested four rare patients with bilateral amygdala lesions on a flicker change-detection protocol (Grimes, 1996, Rensink et al., 1997) with concurrent eye tracking to test the amygdala's role in rapid detection of animate stimuli. We found that both healthy controls and all four amygdala patients showed reliably faster and more accurate detection of animals and people. Detailed eye-tracking analyses further corroborated the superior attentional processing of animals, people and faces, and again were equivalent in controls and amygdala patients.

METHODS

Subjects

We tested four rare patients, SM, AP, AM and BG, who have bilateral amygdala lesions due to Urbach–Wiethe disease (Hofer, 1973), a condition that caused complete bilateral destruction of the basolateral amygdala and variable lesions of the remaining amygdala while sparing the hippocampus and all neocortical structures (see Supplementary Figure S1 for magnetic resonance imaging anatomical scans and Supplementary Table S1 for neuropsychological data). AM and BG are monozygotic twins whose lesions and neuropsychology have been described in detail previously (Becker et al., 2012): both AM and BG have symmetrical complete damage of the basolateral amygdala with some sparing of the centromedial amygdala. SM and AP are two women who have also been described previously (Hampton et al., 2007, Buchanan et al., 2009): SM has complete bilateral amygdala lesions, whereas AP has symmetrical bilateral lesions encompassing ∼75% of the amygdala. Ten neurologically and psychiatrically healthy subjects were recruited as controls, matched in gender, age, intelligence quotient and education (Supplementary Table S1). Subjects gave written informed consent, and the experiments were approved by the Caltech institutional review board. All subjects had normal or corrected-to-normal visual acuity.

Stimuli and apparatus

We used a flicker change-detection task with natural scenes (Figure 1). Change targets were drawn from the following five categories: animals (32 images), artifacts (32 images), people (31 images), plants (29 images) and head directions (26 images). A subset of the images had been used in previous studies that showed reliably faster detection of animals and people (New et al., 2007, 2010). Targets were embedded in complex natural scenes that also contained items from non-target categories. The changes to the targets between alternating presentations of an image included both flips and disappearances. Construction and validity of the stimuli, stimulus properties and further control experiments using inverted stimuli have been discussed in previous studies (New et al., 2007, 2010).

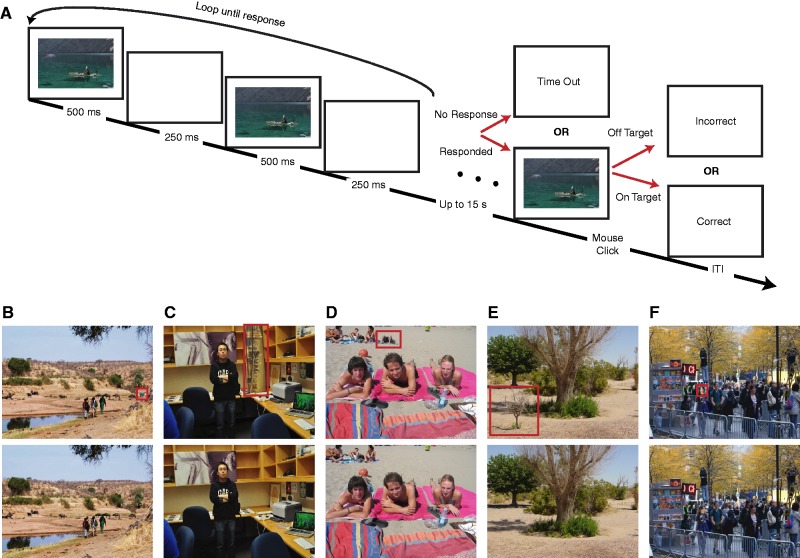

Fig. 1.

Task and sample stimuli. (A) Task structure and time-course. One target object either disappeared or changed its orientation between two alternating frames. These frames were separated by a blank frame. Note that the sizes of the stimuli are not to scale. Sample stimuli showing changes of (B) an animal, (C) artifact, (D) person, (E) plant and (F) head direction. The changes are labeled by a red box. Low-level saliency and eccentricity of the changes did not differ between categories, while plants were significantly larger in area, favoring easier detection.

We quantified low-level properties of all stimuli. Target categories did not differ in bottom-up local saliency around the target region as quantified by the Itti–Koch bottom-up model of attention (Itti et al., 1998, Itti and Koch, 2001) [one-way analysis of variance (ANOVA), P = 0.44; mean saliency was normalized to 1 within each image], nor in mean distance from the center of the image (P = 0.28). Plants subtended a larger area on the screen than the other categories (P < 0.05). In the subset of stimuli on which SM and her matched controls were tested, inanimate targets (artifacts and plants) were larger in area than animate targets (animals and people; P < 0.005) but did not differ from them in Itti–Koch saliency (P = 0.77) or distance to the center (P = 0.13). Overall, any low-level differences in area would have favored faster detection of inanimate stimuli, the opposite of the faster detection of animate stimuli that we observed. We also note that our key comparison is between amygdala patients and their matched controls, and these two groups always saw identical stimuli.
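The saliency control above can be illustrated with a minimal sketch. It is not the authors' MATLAB pipeline: it assumes a saliency map has already been computed for each image (for example with an Itti–Koch implementation, not shown), rescales that map so its mean over the whole image is 1, averages it inside a rectangular target ROI, and computes a one-way ANOVA F statistic across categories. The function names and the `(top, left, bottom, right)` ROI convention are hypothetical.

```python
def normalize_map(saliency):
    """Scale a 2-D saliency map (list of rows) so that its mean over the whole image is 1."""
    values = [v for row in saliency for v in row]
    image_mean = sum(values) / len(values)
    return [[v / image_mean for v in row] for row in saliency]


def roi_mean(saliency, roi):
    """Mean map value inside a rectangular ROI (top, left, bottom, right); bottom/right are exclusive."""
    top, left, bottom, right = roi
    vals = [saliency[r][c] for r in range(top, bottom) for c in range(left, right)]
    return sum(vals) / len(vals)


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA over independent groups."""
    all_vals = [v for g in groups for v in g]
    grand_mean = sum(all_vals) / len(all_vals)
    group_means = [sum(g) / len(g) for g in groups]
    # Between-group and within-group sums of squares.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, group_means) for v in g)
    df_between = len(groups) - 1
    df_within = len(all_vals) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Usage (hypothetical data layout): one ROI-mean saliency value per image, grouped by category.
# scores = {"animals": [...], "artifacts": [...], "people": [...], "plants": [...]}
# f_stat, dfb, dfw = one_way_anova_f(list(scores.values()))
```

A P-value for the returned F can then be read from the F distribution with `(df_between, df_within)` degrees of freedom, for instance with `scipy.stats.f.sf`.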

Subjects sat 65 cm from a liquid-crystal display (refresh rate 60 Hz, centrally presented stimuli subtending 14.9° × 11.2°). Stimuli were presented using MATLAB with Psychtoolbox 3 (Brainard, 1997) (http://psychtoolbox.org).
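To relate pixel measurements to visual angle at the 65 cm viewing distance, a conversion like the one below can be used. It is a sketch under stated assumptions: square pixels on the 23-inch, 1920 × 1080 display described under Eye tracking, with the stimuli assumed to be shown on that same display. The constants and function names are illustrative. Under these assumptions a 50-pixel offset subtends about 1.2°, which matches the circular ROI reported in the Results if its 50 pixels are read as the ROI radius.

```python
import math

VIEWING_DISTANCE_CM = 65.0       # from the Methods
SCREEN_DIAGONAL_IN = 23.0        # assumed: the 23-inch eye-tracker display
SCREEN_RES_PX = (1920, 1080)     # assumed: square pixels


def pixel_pitch_cm(diagonal_in=SCREEN_DIAGONAL_IN, res=SCREEN_RES_PX):
    """Physical width of one pixel in cm, assuming square pixels."""
    diag_px = math.hypot(*res)
    return diagonal_in * 2.54 / diag_px


def size_to_deg(size_cm, distance_cm=VIEWING_DISTANCE_CM):
    """Visual angle (deg) subtended by an extent centred on the line of sight."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


def deg_to_size_cm(angle_deg, distance_cm=VIEWING_DISTANCE_CM):
    """Inverse of size_to_deg: physical extent (cm) for a given visual angle."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)


def offset_to_deg(offset_cm, distance_cm=VIEWING_DISTANCE_CM):
    """Visual angle (deg) of an offset such as an ROI radius, viewed near the line of sight."""
    return math.degrees(math.atan(offset_cm / distance_cm))


# A 50-pixel radius on the assumed display subtends about 1.2 deg at 65 cm.
radius_deg = offset_to_deg(50 * pixel_pitch_cm())
# The 14.9 deg stimulus width expressed in pixels on the assumed display.
width_px = deg_to_size_cm(14.9) / pixel_pitch_cm()
```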

Task

In each trial, we presented a sequence of the original scene image (500 ms), a blank screen (250 ms), the altered scene with a changed target (500 ms) and a blank screen (250 ms). This sequence was repeated until subjects detected the changed target (Figure 1). Subjects were asked to press the space bar as quickly as possible on detecting the change. After detection, subjects used a mouse to click on the location of the change on the original scene image, which was followed by a feedback screen for 1 s (the word 'accurate' or 'inaccurate'). If subjects did not respond within 15 s (20 s for SM and her controls), the message 'Time Out' was displayed. The intertrial interval was jittered between 1 and 2 s. Scene and category order were fully randomized for each subject. Subjects practiced five trials (one per stimulus category) for initial familiarization.
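The flicker schedule above can be made concrete with a small timing sketch. It is an illustration only, not the Psychtoolbox code used in the study: it encodes one 1.5 s original/blank/altered/blank cycle, reports which frame is on screen at a given time, and counts complete cycles before a time-out. Drawing the intertrial interval from a uniform distribution is an assumption, since the source only says it was jittered between 1 and 2 s; all names are hypothetical.

```python
import random

# One flicker cycle as described in the Task section: (frame, duration in s).
FLICKER_CYCLE = [("original", 0.500), ("blank", 0.250), ("altered", 0.500), ("blank", 0.250)]


def cycle_duration(cycle=FLICKER_CYCLE):
    """Total duration (s) of one original/blank/altered/blank cycle."""
    return sum(duration for _, duration in cycle)


def frame_at(t, cycle=FLICKER_CYCLE):
    """Label of the frame on screen t seconds after trial onset."""
    t = t % cycle_duration(cycle)
    for label, duration in cycle:
        if t < duration:
            return label
        t -= duration
    return cycle[-1][0]  # guard against floating-point round-off at the cycle end


def n_full_cycles(timeout_s, cycle=FLICKER_CYCLE):
    """Number of complete cycles (each showing the altered scene once) before time-out."""
    return int(timeout_s // cycle_duration(cycle))


def jittered_iti(rng, low=1.0, high=2.0):
    """Intertrial interval in seconds; uniform jitter between low and high is assumed."""
    return rng.uniform(low, high)
```

With these durations, the 15 s time-out allows 10 complete cycles and the 20 s time-out used for SM and her controls allows 13.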

Patients AP, AM and BG and eight matched controls performed the task as described above. Patient SM and two matched controls performed the task with a subset of the stimuli (identical setup and stimuli to New et al. (2010), which did not contain the head direction change category).

Eye tracking

We tracked binocular eye positions using a Tobii TX300 system operating at 300 Hz with a 23 inch screen (screen resolution: 1920 × 1080). Fixations were detected using the Tobii Fixation Filter implemented in Tobii Studio (Olsson, 2007), which detects quick changes in the gaze point using a sliding window averaging method (velocity threshold was set to 35 pixels/sample and distance threshold was set to 35 pixels in our study).
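The Tobii Fixation Filter itself is part of Tobii Studio and is not reproduced here. As a rough illustration of the sliding-window idea it is described as using, the sketch below compares the mean gaze position in a window before and after each sample, treats jumps larger than the velocity threshold (35 pixels) as saccade boundaries, and merges consecutive fixations whose centroids lie within the distance threshold (35 pixels). The window length of three samples (10 ms at 300 Hz), the function names and the output format are assumptions, and the sketch omits details of the real filter such as peak selection and noise handling.

```python
from math import hypot


def _centroid(xs, ys, idx):
    """Mean gaze position over the sample indices in idx."""
    return sum(xs[j] for j in idx) / len(idx), sum(ys[j] for j in idx) / len(idx)


def detect_fixations(xs, ys, window=3, velocity_threshold=35.0, distance_threshold=35.0):
    """Return fixations as (first_sample, last_sample, x, y) tuples.

    xs, ys: gaze coordinates in pixels, one entry per eye-tracker sample.
    """
    n = len(xs)
    # 1. Flag samples where the mean position in the window after the sample differs
    #    from the mean position in the window before it by more than the threshold.
    boundary = [False] * n
    for i in range(window, n - window + 1):
        bx, by = _centroid(xs, ys, range(i - window, i))
        ax, ay = _centroid(xs, ys, range(i, i + window))
        if hypot(ax - bx, ay - by) > velocity_threshold:
            boundary[i] = True

    # 2. Runs of non-flagged samples become candidate fixations.
    segments, current = [], []
    for i in range(n):
        if boundary[i]:
            if current:
                segments.append(current)
                current = []
        else:
            current.append(i)
    if current:
        segments.append(current)

    # 3. Merge consecutive candidates whose centroids lie within the distance threshold.
    merged = []
    for seg in segments:
        if merged:
            px, py = _centroid(xs, ys, merged[-1])
            cx, cy = _centroid(xs, ys, seg)
            if hypot(cx - px, cy - py) <= distance_threshold:
                merged[-1] = merged[-1] + seg
                continue
        merged.append(seg)

    return [(seg[0], seg[-1], *_centroid(xs, ys, seg)) for seg in merged]
```

The merge step means that a brief artifact within an otherwise stable fixation does not split it into two fixations at the same location.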

Data analysis

Regions of interest (ROIs) were defined for each image pair as a rectangular area encompassing the target change region. Of 1818 trials, 1571 mouse clicks (86.4%) fell within these predefined ROIs (correct trials) and 111 clicks (6.11%) fell outside (incorrect trials); 136 trials (7.48%) were time-out trials. For all subsequent analyses, we analyzed only correct trials with reaction times (RTs) within ±2.5 s.d. of the mean; 61 correct trials (3.36% of all trials) were excluded by this RT criterion. There was no difference between amygdala patients and matched control subjects in the proportion of any of the above trial types (all t-tests, Ps > 0.05). We used MATLAB for t-tests and one-way ANOVAs, and R (R Foundation for Statistical Computing, Vienna, Austria) for repeated-measures ANOVAs.
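The ±2.5 s.d. RT criterion can be expressed as below. The sketch assumes that the mean and sample s.d. are computed over whatever set of correct-trial RTs is passed in (for example, one subject's trials); the source does not state the grouping, so that choice, like the function name, is an assumption.

```python
from statistics import mean, stdev


def trim_rts(rts, n_sd=2.5):
    """Keep RTs lying within n_sd sample standard deviations of the mean of rts."""
    m = mean(rts)
    s = stdev(rts)
    return [rt for rt in rts if abs(rt - m) <= n_sd * s]
```

One property worth keeping in mind: with the sample s.d., no value in a set of n RTs can lie more than (n − 1)/√n s.d. from the mean, so with fewer than nine RTs in a group the 2.5 s.d. criterion cannot exclude anything.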

RESULTS

Phenomenological change blindness and conscious detectability

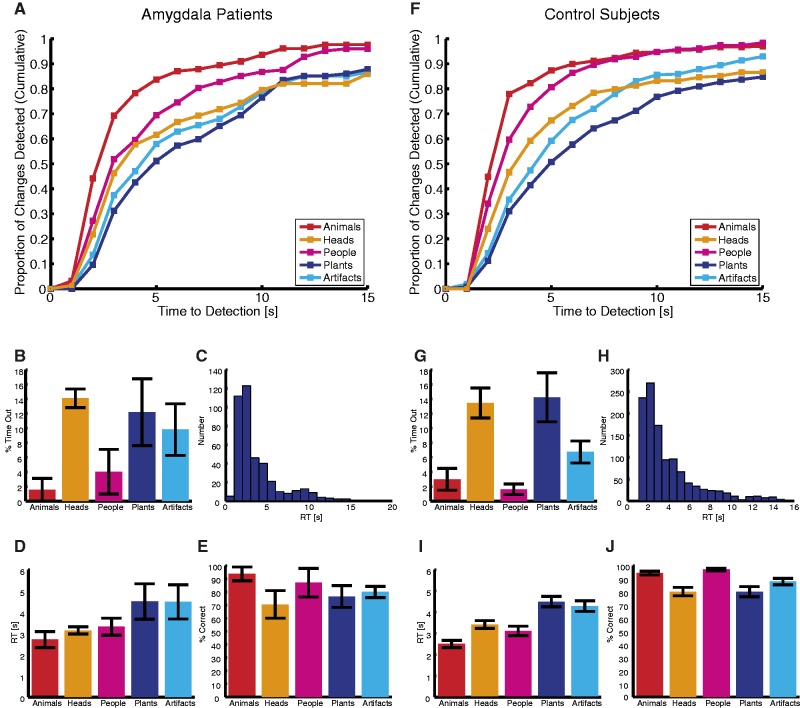

To obtain a systematic characterization of awareness of, and attention to, the change target, we first quantified phenomenological change blindness—the most severe case of change blindness in which the target change is missed entirely. The full time-course of change detection for each stimulus category is depicted in Figure 2A and F, which plots the cumulative proportion of changes detected as a function of time elapsed. Steeper slopes indicate faster change detection and higher asymptotes mean more changes eventually detected. For both amygdala patients and control subjects, the curves for animate targets rose more rapidly and reached higher asymptotes compared with inanimate targets. At any given time, a greater proportion of changes was detected for animate targets than inanimate ones. Both amygdala patients and control subjects were entirely change-blind more often for inanimate targets than for animate ones (time-out rates, Figure 2B and G; amygdala: 5.4 ± 4.8% for animate vs 11.0 ± 7.8% for inanimate; see Table 1 for statistics) and there was no significant difference between amygdala patients and controls.
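The cumulative detection curves and time-out rates described above can be sketched as follows. This is an illustration, not the authors' code: it assumes detection times are given in seconds per trial, with `None` marking a time-out trial that never counts as detected; how incorrect clicks are treated is left to the caller (they could also be passed as `None`). Function names are hypothetical.

```python
def cumulative_detection(rts, time_points):
    """Proportion of all trials in which the change was reported by each time point.

    rts: detection times in seconds, with None for trials that were never detected.
    """
    n = len(rts)
    return [sum(1 for rt in rts if rt is not None and rt <= t) / n for t in time_points]


def timeout_rate(rts):
    """Proportion of trials on which the change was never detected."""
    return sum(1 for rt in rts if rt is None) / len(rts)
```

Because undetected trials stay in the denominator, each curve's asymptote equals one minus the time-out rate, which is why higher asymptotes correspond to fewer changes missed entirely.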

Fig. 2.

Change detection is category-specific. Both amygdala lesion patients (A–E) (N = 4) and control subjects (F–J) (N = 10) showed advantageous change detection of animals, people and head directions over changes to plants and artifacts. (A and F) Graphs show proportion of changes detected as a function of time and semantic category. (B and G) Percentage of time-out for each category. (C and H) RT histogram across all trials. (D and I) Mean RT for each category. (E and J) Percentage of correct detection for each category. Error bars denote one s.e.m. across subjects.

Table 1.

ANOVA table

| Measure | Statistical test | Effect | F-statistic (d.f.) | P-value |
|---|---|---|---|---|
| Change blindness | 5 × 2 mixed-model ANOVA of target category × subject group (amygdala lesion vs control) | Main effect of target category | F(4,45) = 13.1 | **3.76 × 10⁻⁷** |
| | | Main effect of subject group | F(1,12) = 0.053 | 0.82 |
| | | Interaction | F(4,45) = 0.46 | 0.76 |
| | One-way repeated-measures ANOVA in amygdala lesion group | Main effect of category | F(4,11) = 2.68 | 0.088 |
| | One-way repeated-measures ANOVA in control group | Main effect of category | F(4,34) = 11.4 | **5.82 × 10⁻⁶** |
| Conscious detection | Mixed-model two-way ANOVA of target category × subject group | Main effect of category | F(4,36) = 21.1 | **5.11 × 10⁻⁹** |
| | | Main effect of group | F(1,9) = 0.045 | 0.84 |
| | | Interaction | F(4,36) = 0.079 | 0.99 |
| | One-way repeated-measures ANOVA in amygdala lesion group | Main effect of category | F(4,8) = 6.73 | **0.011** |
| | One-way repeated-measures ANOVA in control group | Main effect of category | F(4,28) = 14.8 | **1.29 × 10⁻⁶** |
| RT | Mixed-model two-way ANOVA of target category × subject group | Main effect of category | F(4,45) = 44.4 | **4.44 × 10⁻¹⁵** |
| | | Main effect of group | F(1,12) = 0.22 | 0.65 |
| | | Interaction | F(4,45) = 0.12 | 0.97 |
| | One-way repeated-measures ANOVA in amygdala lesion group | Main effect of category | F(4,11) = 7.57 | **0.0035** |
| | One-way repeated-measures ANOVA in control group | Main effect of category | F(4,34) = 39.7 | **2.26 × 10⁻¹²** |
| Number of fixations | Mixed-model two-way ANOVA of target category × subject group | Main effect of category | F(4,36) = 32.2 | **1.95 × 10⁻¹¹** |
| | | Main effect of group | F(1,9) = 0.15 | 0.71 |
| | | Interaction | F(4,36) = 1.45 | 0.24 |
| | One-way repeated-measures ANOVA in amygdala lesion group | Main effect of category | F(4,8) = 4.19 | **0.040** |
| | One-way repeated-measures ANOVA in control group | Main effect of category | F(4,28) = 31.6 | **5.22 × 10⁻¹⁰** |
| Hit rates | Mixed-model two-way ANOVA (subject group × category) | Main effect of target category | F(4,45) = 17.2 | **1.22 × 10⁻⁸** |
| | | Main effect of subject group | F(1,12) = 1.37 | 0.26 |
| | | Interaction | F(4,45) = 0.88 | 0.48 |
| | One-way repeated-measures ANOVA in amygdala lesion group | Main effect of category | F(4,11) = 5.64 | **0.010** |
| | One-way repeated-measures ANOVA in control group | Main effect of category | F(4,34) = 12.5 | **2.35 × 10⁻⁶** |
| Fixation order | Mixed-model two-way ANOVA of target category × subject group | Main effect of category | F(4,36) = 24.6 | **7.14 × 10⁻¹⁰** |
| | | Main effect of group | F(1,9) = 0.049 | 0.83 |
| | | Interaction | F(4,36) = 2.65 | **0.049** |
| | One-way repeated-measures ANOVA in amygdala lesion group | Main effect of category | F(4,8) = 2.27 | 0.15 |
| | One-way repeated-measures ANOVA in control group | Main effect of category | F(4,28) = 26.7 | **3.32 × 10⁻⁹** |
| Latency | Mixed-model two-way ANOVA of target category × subject group | Main effect of category | F(4,36) = 11.2 | **5.43 × 10⁻⁶** |
| | | Main effect of group | F(1,9) = 0.45 | 0.52 |
| | | Interaction | F(4,36) = 0.70 | 0.59 |
| Horizontal position effect | Mixed-model three-way ANOVA of category × subject group × horizontal position (left vs right) | Main effect of category | F(4,102) = 38.4 | **< 10⁻²⁰** |
| | | Main effect of horizontal position | F(1,102) = 0.52 | 0.47 |
| | | Main effect of subject group | F(1,12) = 0.38 | 0.55 |
| | | Interactions | | all Ps > 0.05 |
| | Two-way ANOVA of category × horizontal position in amygdala lesion group | Main effect of category | F(4,25) = 6.98 | **0.0006** |
| | | Main effect of horizontal position | F(1,25) = 0.071 | 0.79 |
| | | Interaction | F(4,25) = 1.06 | 0.40 |
| | Two-way ANOVA of category × horizontal position in control group | Main effect of category | F(4,77) = 36.6 | **< 10⁻²⁰** |
| | | Main effect of horizontal position | F(1,77) = 1.70 | 0.20 |
| | | Interaction | F(4,77) = 2.07 | 0.093 |
| Vertical position effect | Mixed-model three-way ANOVA of category × subject group × vertical position (upper vs lower) | Main effect of category | F(4,100) = 22.3 | **3.48 × 10⁻¹³** |
| | | Main effect of vertical position | F(1,100) = 11.9 | **0.00084** |
| | | Main effect of subject group | F(1,12) = 0.22 | 0.64 |
| | | Interaction between category and vertical position | F(4,100) = 3.90 | **0.0055** |
| | | Other interactions | | all Ps > 0.05 |
| | Two-way ANOVA of category × vertical position in amygdala lesion group | Main effect of category | F(4,25) = 7.92 | **2.89 × 10⁻⁴** |
| | | Main effect of vertical position | F(1,25) = 1.48 | 0.23 |
| | | Interaction | F(4,25) = 1.13 | 0.37 |
| | Two-way ANOVA of category × vertical position in control group | Main effect of category | F(4,75) = 14.5 | **8.56 × 10⁻⁹** |
| | | Main effect of vertical position | F(1,75) = 10.8 | **0.0015** |
| | | Interaction | F(4,75) = 3.16 | **0.019** |

Note: P-values in bold indicate statistical significance at P < 0.05. d.f.: degrees of freedom.

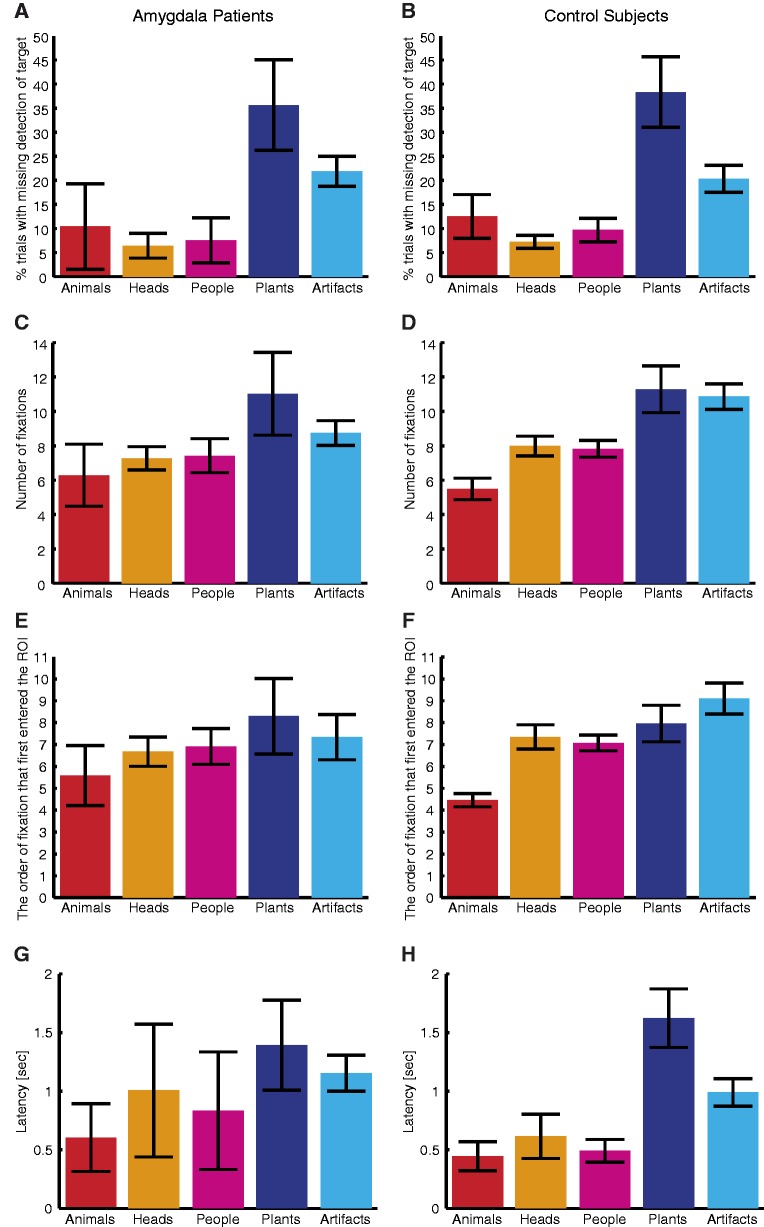

We further analyzed gaze patterns to elucidate a possible mechanism for the faster conscious detectability of animate stimuli: once a target has been fixated, a change to it should be detected more efficiently if it is animate than if it is inanimate. We quantified this by computing the percentage of trials with 'misses', defined as fixations onto the target ROI (a rectangular ROI tightly surrounding the target) without the change being detected. We excluded the last three fixations entering the ROI from the count of misses because they may have been associated with the subsequent detection of the change: subjects tended to fixate the target for one to three fixations to confirm their selection, so the last one to three fixations corresponded to detection rather than to misses. For homogeneity of the data, we analyzed only the data from AP, AM, BG and their matched controls, who all saw identical stimuli with an identical experimental setup.
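The miss measure can be sketched as follows. This is a minimal illustration, assuming fixations are given as `(x, y)` pixel coordinates per correct trial and the ROI as an inclusive `(left, top, right, bottom)` rectangle; it reads "the last three fixations entering the ROI" literally as the final three fixations that landed in the ROI. The names and data layout are hypothetical.

```python
def in_roi(x, y, roi):
    """True if (x, y) lies inside roi = (left, top, right, bottom), bounds inclusive."""
    left, top, right, bottom = roi
    return left <= x <= right and top <= y <= bottom


def count_misses(fixations, roi, n_excluded=3):
    """Fixations in the ROI that did not lead to detection.

    The last n_excluded fixations in the ROI are treated as confirming the detection.
    """
    n_in_roi = sum(1 for x, y in fixations if in_roi(x, y, roi))
    return max(n_in_roi - n_excluded, 0)


def percent_trials_with_misses(trials, n_excluded=3):
    """trials: list of (fixations, roi) pairs for the correct trials of one category."""
    flagged = sum(1 for fix, roi in trials if count_misses(fix, roi, n_excluded) > 0)
    return 100.0 * flagged / len(trials)
```

Averaging `count_misses` over trials instead of flagging trials gives the alternative "average number of misses" measure mentioned in the Results.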

Figure 3A and B show that animate stimuli had a lower percentage of trials with misses and thus preferentially emerged into consciousness [Table 1, conscious detection analysis; animate vs inanimate: 8.1 ± 9.2% vs 28.8 ± 9.3%, t(2) = −4.26, P = 0.051 for amygdala patients, and 9.8 ± 6.5% vs 29.3 ± 12.9%, t(7) = −6.63, P = 2.96 × 10⁻⁴ for controls], and there was no difference between amygdala patients and control subjects. No target category showed any significant difference in the percentage of misses between amygdala patients and their matched controls (two-tailed t-tests, all Ps > 0.67; bootstrap (Efron and Tibshirani, 1994) with 1000 runs, all Ps > 0.30). The same pattern of results held when we repeated the analysis using the average number of misses instead of the percentage of trials with misses. Similarly, the same pattern held when we enlarged the ROI to a more lenient region of the image [a 50-pixel circular ROI (1.2° visual angle) centered on the target]. These results confirm that the amygdala is not required for preferential conscious detection of biologically relevant stimuli.
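The source specifies a bootstrap with 1000 runs (Efron and Tibshirani, 1994) but not the exact resampling scheme. One common variant, sketched here under that assumption, resamples both groups with replacement from the pooled data (simulating the null hypothesis of no group difference) and reports how often the resampled absolute difference in means is at least as large as the observed one. The function name, the fixed seed and the add-one correction are illustrative choices.

```python
import random
from statistics import mean


def bootstrap_p(group_a, group_b, n_runs=1000, seed=0):
    """Two-sided bootstrap P-value for a difference in group means.

    Both groups are resampled with replacement from the pooled data, which
    simulates the null hypothesis that the groups share one distribution.
    """
    rng = random.Random(seed)
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_extreme = 0
    for _ in range(n_runs):
        sample_a = [rng.choice(pooled) for _ in group_a]
        sample_b = [rng.choice(pooled) for _ in group_b]
        if abs(mean(sample_a) - mean(sample_b)) >= observed:
            n_extreme += 1
    # Add-one correction so the estimate is never exactly zero.
    return (n_extreme + 1) / (n_runs + 1)
```

With only three or four patients per comparison, the number of distinct resamples is small, so P-values from any such scheme are coarse.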

Fig. 3.

Quantification of fixation properties. (A and B) Percentage of trials with change blindness despite direct fixation on the change target. (C and D) Number of fixations before detecting changes. (E and F) The serial order of fixation that first entered the target ROI. (G and H) Latency from first fixation onto target to detection of target. (A, C, E and G) Amygdala lesion patients (N = 3). (B, D, F and H) Control subjects (N = 8). Error bars denote one s.e.m. across subjects.

Rapid detection of animate stimuli by explicit behavioral reports of change detection

We next quantified RTs for the explicit behavioral reports of change detection. We found category-specific effects in RTs in both subject groups (see Table 1, RT analysis, for statistics). There was a main effect of category, but no main effect of group and no interaction. Category effects were significant when tested separately in the amygdala lesion group (Figure 2D) as well as in the control group (Figure 2I), with animate targets (animals, people and head directions) reliably detected faster than inanimate targets (artifacts and plants). Both amygdala-lesioned subjects and controls detected animate targets faster (amygdala: 3.13 ± 0.66 s for animate and 4.50 ± 1.63 s for inanimate; controls: 2.91 ± 0.52 s for animate and 4.36 ± 0.70 s for inanimate, mean ± s.d.). We confirmed this animacy effect for both groups using a summary statistic approach: the difference between the mean RTs for animate and inanimate targets was significant both for the amygdala patients [t(3) = −2.57, P = 0.041, paired t-test] and for the control subjects [t(9) = −12.94, P = 2.02 × 10⁻⁷]. All individual control subjects and all amygdala patients except AM showed detection advantages for animate stimuli (two-tailed t-tests comparing animate vs inanimate stimuli within each subject, all Ps < 0.05). No target category showed any significant difference between amygdala patients and their matched controls (two-tailed t-tests, all Ps > 0.47; bootstrap with 1000 runs, all Ps > 0.24). All of the above effects also held when we used log-transformed RT as the dependent measure.
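The summary statistic approach can be sketched as follows: first average each subject's RTs within the animate and inanimate conditions, then run a paired t-test on those per-subject means. The `(subject, is_animate, rt)` trial format and the function names are assumptions for illustration; a P-value would come from the t distribution with the returned degrees of freedom (`scipy.stats.ttest_rel` computes the statistic and P-value together).

```python
from math import sqrt
from statistics import mean, stdev


def condition_means(trials):
    """trials: (subject, is_animate, rt) tuples -> (subjects, animate_means, inanimate_means)."""
    sums = {}
    for subj, animate, rt in trials:
        total, count = sums.get((subj, animate), (0.0, 0))
        sums[(subj, animate)] = (total + rt, count + 1)
    subjects = sorted({s for s, _ in sums})
    animate_means = [sums[(s, True)][0] / sums[(s, True)][1] for s in subjects]
    inanimate_means = [sums[(s, False)][0] / sums[(s, False)][1] for s in subjects]
    return subjects, animate_means, inanimate_means


def paired_t(animate_means, inanimate_means):
    """Paired t statistic and degrees of freedom for per-subject condition means."""
    diffs = [a - b for a, b in zip(animate_means, inanimate_means)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1
```

Negative t values, as reported above, indicate shorter RTs for animate than for inanimate targets.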

We quantified the number of fixations made before the explicit report of change detection (Figure 3C and D) and found a pattern that mirrored the RT results. There was a category effect as expected (Table 1, number of fixations analysis) but no difference between amygdala patients and controls. No target category showed any significant difference between amygdala patients and their matched controls (two-tailed t-tests, all Ps > 0.14; bootstrap with 1000 runs, all Ps > 0.32). Category effects were prominent within amygdala patients (Figure 3C) and within control subjects (Figure 3D) separately, with changes in animate stimuli requiring fewer fixations to be detected than changes in inanimate stimuli. Direct comparisons collapsing all animate vs all inanimate stimuli showed that animate changes were detected with significantly fewer fixations in both amygdala patients (7.0 ± 2.0 vs 9.9 ± 2.5 fixations, paired-sample two-tailed t-test, t(2) = −9.20, P = 0.012) and control subjects (7.1 ± 1.5 vs 11.1 ± 2.9 fixations, t(7) = −6.85, P = 2.42 × 10⁻⁴).

Consistent with prior reports (New et al., 2007), more rapid detection of changes to animals and people was not accompanied by any loss of accuracy. On the contrary, both amygdala patients and control subjects were faster (Figure 2D and I) and more accurate for animate targets (hit rates, Figure 2E and J; amygdala: 86.2 ± 17.3% for animate vs 78.3 ± 12.6% for inanimate; control: 91.6 ± 4.3% for animate vs 84.1 ± 8.7% for inanimate; see Table 1, hit rates analysis, for statistics), and there was no difference between amygdala patients and control subjects. Thus, speed–accuracy trade-offs could not explain the faster detection of animate stimuli, and the strong orienting toward animate stimuli resulted in both more rapid and more accurate detection of changes.

Within animate targets, animals showed the greatest detection advantages. For both amygdala patients and control subjects, animals had the steepest cumulative detection curve (Figure 2A and F) and the shortest detection RT {Figure 2D and I; two-tailed pairwise t-tests comparing animals with every other category; amygdala: P = 0.041 [t(3) = −3.44] for people and Ps < 0.081 for all other comparisons; controls: Ps < 0.05 for all comparisons}. Further, animals had higher detection rates than artifacts, plants and head direction changes (Figure 2E and J, two-tailed paired-sample t-tests; Ps < 0.05 for all comparisons in both amygdala patients and controls) and a lower time-out rate than head direction changes (Figure 2B and G, Ps < 0.05 for both amygdala patients and controls).

Finally, a series of direct and uncorrected t-tests showed no significant differences between amygdala patients and control subjects on change blindness (i.e. time-out), hit rates and RT for any categories [two-tailed unpaired t-tests, Ps > 0.11 for all comparisons; confirmed by bootstrap with 1000 runs (all Ps > 0.19)].

Implicit measures of change detection from eye tracking

While we did not find any impairment in change detection in amygdala patients at the level of phenomenology or explicit detection responses, they might still have been impaired on more implicit measures. To address this possibility, we analyzed the eye-tracking data in more detail: subjects might look at targets more rapidly for animate stimuli [an attentional mechanism of faster orienting that could in principle be distinct from the conscious detectability mechanism (Koch and Tsuchiya, 2007)]. We quantified this by computing the serial order of the fixation that first entered the target ROI.

Control subjects had earlier fixations onto animate than inanimate targets [Figure 3F and Table 1, fixation order analysis; 6.3 ± 1.3 vs 8.5 ± 2.2 for animate vs inanimate, paired t-test: t(7) = −4.31, P = 0.0035], and animals attracted the earliest fixations (paired t-tests against every other category, Ps < 0.005). We observed a similar pattern of earlier fixations onto animals and animate targets in the amygdala lesion patients [Figure 3E; 6.4 ± 1.6 vs 7.8 ± 2.1 for animate vs inanimate; paired t-test: t(2) = −5.15, P = 0.036], and we observed no difference between amygdala lesion patients and control subjects. No target category showed any significant differences between amygdala patients and their matched controls (two-tailed t-tests, all Ps > 0.22; bootstrap with 1000 runs, all Ps > 0.19).
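The serial-order measure can be sketched as below. It assumes fixations are listed in temporal order as `(x, y)` pixel coordinates and the ROI is an inclusive `(left, top, right, bottom)` rectangle; following the convention described in the next paragraph, a trial in which the ROI is never fixated contributes the order of its last fixation. Names and data layout are hypothetical.

```python
def in_roi(x, y, roi):
    """True if (x, y) lies inside roi = (left, top, right, bottom), bounds inclusive."""
    left, top, right, bottom = roi
    return left <= x <= right and top <= y <= bottom


def first_fixation_order(fixations, roi):
    """1-based serial order of the first fixation landing in roi.

    If no fixation lands in the ROI (e.g. a time-out trial), the order of the
    trial's last fixation is returned instead.
    """
    for order, (x, y) in enumerate(fixations, start=1):
        if in_roi(x, y, roi):
            return order
    return len(fixations)
```

Per-category values such as those in Figure 3E and F would then be averages of this quantity over trials and subjects.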

In the above analysis, when the subject never fixated the target (i.e. time-out trials), we used the serial order of the last fixation of the trial as the datapoint. When we repeated the analysis excluding all time-out trials, we obtained qualitatively the same pattern of results. The same held when we used the absolute latency (in seconds) of the first fixation onto the target instead of its serial order.

So far, we have shown that the detection advantage for animate stimuli could be attributed to either attention or conscious detection, and that neither requires the amygdala. How might initial attention and conscious detectability interact? We observed that faster detection of animate stimuli (by button press) was typically preceded by more rapid initial fixation toward them (Figure 3E and F). Supporting a role for fast initial orienting in facilitating subsequent detection, there was a significant trial-by-trial correlation (on all correct trials) between the serial order of the first fixation onto the target ROI and the total number of fixations taken to detect the change (Pearson correlation; amygdala: r = 0.89, P < 10⁻²⁰; control: r = 0.76, P < 10⁻²⁰); similarly, the latency (absolute time elapsed in seconds) of the first fixation onto the target ROI correlated with button-press RT (amygdala: r = 0.81, P < 10⁻²⁰; control: r = 0.78, P < 10⁻²⁰). To further establish the role of initial orienting in conscious detectability, we next measured the latency from the first fixation onto the target ROI to detection of the target change on all correct trials (Figure 3G and H). Once the target ROI has been fixated, this latency should reflect the efficacy of conscious detectability. We found a category-specific effect on latency (Table 1, latency analysis), with animate stimuli featuring shorter latencies than inanimate stimuli. Again, there was neither a difference between amygdala patients and controls nor any interaction. No target category showed any significant difference between amygdala patients and their matched controls (two-tailed t-tests, all Ps > 0.32; bootstrap with 1000 runs, all Ps > 0.17). These results isolate a category-specific effect of animate stimuli on the efficacy of conscious detectability and further demonstrate that this mechanism is independent of the amygdala.
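The trial-by-trial analysis above relies on two simple quantities, sketched here: a Pearson correlation between paired per-trial measures (for example, first-fixation order and total number of fixations) and the latency from the first fixation onto the target ROI to the button press. This is an illustration with hypothetical names; a P-value for r could be obtained with `scipy.stats.pearsonr`, which returns both r and P.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def post_fixation_latency(detection_rt, first_target_fix_onset):
    """Time (s) from the first fixation entering the target ROI to the button press."""
    return detection_rt - first_target_fix_onset
```

Both inputs to `post_fixation_latency` are assumed to be measured from the same reference, such as trial onset.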

Detection advantages to animals were not lateralized

Given that animal-selective neurons were found primarily in the right amygdala (Mormann et al., 2011), we expected that detection advantages might be lateralized to some extent. We therefore divided target locations according to their horizontal positions. The category effects described above replicated for targets in either the left or the right half of the image (Table 1, horizontal position effect analysis), and there was no main effect of laterality [3.7 ± 1.2 vs 3.6 ± 1.3 s (mean ± s.d.) for left vs right] or of subject group, nor any interactions. Similarly, no laterality effect was found within amygdala patients or within control subjects analyzed separately. Further post hoc paired-sample t-tests showed no difference in detecting targets on the left vs the right {Ps > 0.05 for all categories and for both amygdala patients and control subjects, except one uncorrected P = 0.022 [t(18) = 2.50] for people detection in control subjects}.

We repeated this analysis for the upper vs lower parts of the image. The category effects were observed for both upper and lower parts (Table 1, vertical position effect analysis). We found a main effect of category and, to our surprise, a main effect of vertical position [4.0 ± 1.4 vs 3.6 ± 1.1 s (mean ± s.d.) for upper vs lower], as well as an interaction between category and vertical position. Separate analyses within each group confirmed the category effect in both amygdala patients and control subjects; the vertical position effect was significant in control subjects and went in the same direction, without reaching significance, in amygdala patients (Table 1; amygdala: 4.1 ± 1.5 vs 3.7 ± 1.3 s for upper vs lower; controls: 4.0 ± 1.4 vs 3.5 ± 0.9 s). This vertical position effect was primarily driven by faster detection of people and plants in the lower visual field. All of the above patterns also held with log-transformed RT as the dependent measure.
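The left/right and upper/lower splits can be sketched as follows. The sketch assumes each trial is summarized by the target ROI centre in image pixel coordinates (with y increasing downward) and its RT, and that targets exactly on the midline are assigned to the right or lower half; these conventions, the image dimensions passed in, and the function name are assumptions for illustration.

```python
def mean_rt_by_half(trials, image_width, image_height, axis="horizontal"):
    """Mean RT for targets in each half of the image.

    trials: (target_cx, target_cy, rt) tuples in image pixels, y increasing downward.
    axis: "horizontal" splits left/right; anything else splits upper/lower.
    """
    groups = {}
    for cx, cy, rt in trials:
        if axis == "horizontal":
            key = "left" if cx < image_width / 2 else "right"
        else:
            key = "upper" if cy < image_height / 2 else "lower"
        groups.setdefault(key, []).append(rt)
    return {key: sum(rts) / len(rts) for key, rts in groups.items()}
```

Computing these means per subject and category yields the cells that enter the position × category ANOVAs in Table 1.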

DISCUSSION

On a flicker change-blindness protocol, all of our control subjects showed an advantage in detecting animate stimuli (animals, people and head directions) over inanimate stimuli (artifacts and plants), consistent with the prior finding of category-specific attention toward animals (New et al., 2007). Interestingly, the amygdala lesion patients showed the same detection advantages. Category effects were not lateralized. Eye-tracking data further dissociated two mechanisms contributing to these detection advantages: animate stimuli attracted initial gaze faster, and, once fixated, their changes were detected more efficiently. Amygdala lesions spared both of these components. Our findings argue against a critical participation of the amygdala in rapid initial processing of attention to ecologically salient stimuli and extend this conclusion to both initial orienting and detectability.

Advantages of our change detection task and comparison with other tasks

Compared with previous studies of change detection (New et al., 2007, 2010), our addition of eye tracking substantially expanded the scope of the analyses, allowing us to elucidate the mechanisms underlying change detection and providing insights into visual search during change detection. One advantage of using change detection in this study is that it links our results to previous studies, permitting comparisons with a large college population (New et al., 2007), a developmental population (i.e. 7- to 8-year-olds) (New et al., 2010) and individuals diagnosed with autism spectrum disorder (New et al., 2010). Most importantly, the change detection task allows us to quantify the percentage of misses and thereby dissociate attention to animals from their conscious detectability (eye tracking vs detection), which is difficult to probe with a free-viewing task.

In studies of ultra-rapid categorization of animals, human participants can reliably make saccades toward the side containing an animal in as little as 120 ms (Kirchner and Thorpe, 2006). Response latencies in our study were considerably longer than in this markedly different task, which explicitly asks participants to detect a specific target category and typically presents one large central object in each image. It is very likely that the participants in this study would have performed that explicit task far more quickly, even with the complex natural scenes used here. Conversely, had the change detection task been conducted with far simpler stimuli, such as two side-by-side objects, the animate bias could easily have been revealed through first fixation locations. Interestingly, in the first studies of this bias in healthy participants (New et al., 2007), the fastest responses (<1 s) occurred more often for animate than for inanimate targets. Change detection within the first second likely required the target object to be the first attended item in the scene (New et al., 2007).
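The <1 s comparison amounts to a simple proportion computed per category. The sketch below, with hypothetical RTs and a hypothetical helper name `fraction_fast`, computes the share of detected changes reported within a cutoff; the 1 s default mirrors the threshold mentioned above.

```python
def fraction_fast(rts, cutoff=1.0):
    """Proportion of detection RTs (s) strictly below `cutoff`."""
    if not rts:
        raise ValueError("need at least one RT")
    return sum(rt < cutoff for rt in rts) / len(rts)


# Hypothetical RTs (s) of detected changes, for illustration only.
animate = [0.8, 0.9, 2.5, 3.0]
inanimate = [0.95, 2.2, 3.1, 4.0]

print("animate:", fraction_fast(animate))
print("inanimate:", fraction_fast(inanimate))
```

Whether missed changes should enter the denominator is a design choice; this sketch includes only detected trials.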

Possible caveats

In this study, we have shown that the amygdala is not required for rapid initial processing of ecologically salient animate stimuli. One caveat is that top-down contextual knowledge might have played a more important role (cf. Kanan et al., 2009), and reliance on top-down control and contextual information in the task could have diminished the potential effect of amygdala lesions on detection performance. Contextual knowledge has been shown to drive change detection performance (e.g. Rensink et al., 1997), and, interestingly, to do so as a function of semantic inconsistency (Hollingworth and Henderson, 2000). However, in our stimuli, all of the targets were comparably semantically consistent with their scenes.

Top-down control and contextual knowledge are most effective when applied toward explicit tasks or targets. In our stimuli, however, the target from one category was often embedded among distractors from other categories, and subjects had no prior expectation about the target category that would have allowed them to apply category-specific contextual knowledge. In other words, because our natural scene stimuli mostly contained objects from multiple categories, subjects could only apply a uniform strategy across all stimuli. For example, in a scene containing both faces and plants, subjects might look at faces first regardless of whether the target was a face or a plant. Therefore, any top-down control involved in our study would be unlikely to affect within-subject comparisons between categories. It will be interesting to explore this issue further in future studies with quantitative analyses of the spatial layout of fixations with respect to the distribution of different target categories.

Our findings were not explained by category differences in low-level saliency. If anything, our stimulus set was biased toward low-level features favoring better detection of inanimate stimuli, the opposite of the effect we found. Moreover, detection advantages for animate stimuli are known to be abolished with inverted stimuli, which preserve low-level stimulus properties (New et al., 2007), an effect we replicated in SM and SM’s controls.
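The claim that inversion preserves low-level properties can be checked directly for global image statistics. The sketch below, using a toy 3 × 3 grayscale image and hypothetical helper names, implements inversion as a 180° rotation (an assumption; a vertical flip preserves the same three measures) and confirms that mean luminance, RMS contrast and a simple local edge-energy measure are unchanged. It does not address position-dependent measures: inversion moves targets between the upper and lower halves of the image.

```python
import math
import statistics
from typing import List

Image = List[List[float]]  # grayscale intensities in [0, 1], row-major


def invert(img: Image) -> Image:
    """Rotate an image by 180 degrees: reverse the row order and each row."""
    return [row[::-1] for row in img[::-1]]


def pixels(img: Image) -> List[float]:
    return [p for row in img for p in row]


def mean_luminance(img: Image) -> float:
    return statistics.fmean(pixels(img))


def rms_contrast(img: Image) -> float:
    """Population s.d. of intensities (one common RMS-contrast definition)."""
    return statistics.pstdev(pixels(img))


def edge_energy(img: Image) -> float:
    """Mean absolute difference between horizontally/vertically adjacent pixels."""
    diffs = []
    for r, row in enumerate(img):
        for c, p in enumerate(row):
            if c + 1 < len(row):
                diffs.append(abs(p - row[c + 1]))
            if r + 1 < len(img):
                diffs.append(abs(p - img[r + 1][c]))
    return statistics.fmean(diffs)


# Toy image, for illustration only.
img = [
    [0.1, 0.2, 0.9],
    [0.4, 0.5, 0.3],
    [0.8, 0.7, 0.6],
]
upside_down = invert(img)
for measure in (mean_luminance, rms_contrast, edge_energy):
    same = math.isclose(measure(img), measure(upside_down))
    print(f"{measure.__name__}: preserved = {same}")
```

Because every horizontal or vertical neighbor pair maps onto another such pair under a 180° rotation, the edge-energy measure is preserved along with the global intensity statistics.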

Lateralized effects of category attention

We did not observe lateralized effects of category attention in this study, even though animal-selective neurons show a lateralized distribution, favoring the right human amygdala (Mormann et al., 2011). Behaviorally, lateralized effects have been reported for the sensory and cognitive processing of language, faces and emotion (MacNeilage et al., 2009). Neurologically, laterality has also been well documented for attentional systems (Fox et al., 2006) as well as for cortical components of face processing (De Renzi et al., 1994). Studies also report laterality effects in frogs, chickens and other birds, and monkeys, suggesting an evolutionarily preserved mechanism for detecting salient stimuli that favors the right hemisphere (Vallortigara and Rogers, 2005). The absence of laterality effects in our data may be due to the limited visual angle subtended by our stimuli (none of the stimuli extended far into the left or right periphery), the nature of the stimuli (e.g. none included threatening or strongly valenced stimuli) or the nature of the task. In healthy subjects, a strong asymmetry in attentional resolution has been reported between the upper and lower visual fields (He et al., 1996), a finding that may be related to the intriguing effect of the vertical position of change targets in our study.

Amygdala lesions and plasticity

All four amygdala patients have symmetrical, complete damage of the basolateral amygdala, and the damage is generally extensive, as documented in detail in prior publications (see Methods section). Although the three patients other than SM have some sparing of the centromedial amygdala, it seems unlikely that this intact portion could play the role required for attention or detectability in our task: because the basolateral amygdala is the primary source of visual input to the amygdala (Amaral et al., 1992) and all patients have complete lesions of the basolateral amygdala, any spared parts of the amygdala would effectively be disconnected from temporal neocortex. Furthermore, patient SM has complete bilateral amygdala lesions, and yet her individual data still showed normal detection advantages for animate stimuli, demonstrating that the amygdala is not necessary for the rapid detection of animate stimuli.

A final consideration concerns reorganization and plasticity. While we found entirely intact orienting to, and detection of, animate stimuli in all four amygdala patients, all of them had developmental-onset lesions arising from Urbach–Wiethe disease. On the one hand, this made for a homogeneous population to study; on the other, it introduces the possibility that, over time, other brain regions provided compensatory function in the absence of the amygdala. Indeed, evidence for compensatory function (on an unrelated task) has been reported in one of the patients we studied (Becker et al., 2012). Furthermore, normal recognition of prototypical emotional faces has been reported in some (Siebert et al., 2003), but not other (Adolphs et al., 1999), patients with amygdala lesions, and one study even reported hypervigilance for fearful faces in three patients with Urbach–Wiethe disease (Terburg et al., 2012). A critical direction for future studies will be to replicate our findings in patients with adult-onset and acute-onset amygdala lesions, to investigate the added complexities introduced by developmental-onset lesions.

The role of the amygdala in attention and saliency

Since the early 1990s, an influential view of the amygdala’s role in sensory processing was that it operates largely automatically and non-consciously (Dolan, 2002, Ohman, 2002), with long-standing debates about whether the amygdala’s response to fearful faces is independent of attention (Vuilleumier et al., 2001, Anderson et al., 2003) or requires it (Pessoa et al., 2002). A subcortical pathway through the superior colliculus and pulvinar to the amygdala is commonly assumed to mediate rapid, automatic and non-conscious processing of affective and social stimuli, forming a specific subcortical ‘low route’ of information processing (LeDoux, 1996, Tamietto and de Gelder, 2010). However, SM, the same patient tested here, who has complete bilateral amygdala lesions, nonetheless showed normal rapid detection and non-conscious processing of fearful faces, suggesting that the amygdala is not necessary for rapid, non-conscious processing of fear-related stimuli (Tsuchiya et al., 2009; replicated by Yang et al., 2012a). A variety of evidence, including the long latencies observed in amygdala recordings in humans (Mormann et al., 2008, Rutishauser et al., 2011), further challenges the ‘low route’ account of amygdala function (Cauchoix and Crouzet, 2013). Instead, it has been proposed that the amygdala participates in an elaborative cortical network that evaluates the biological significance of visual stimuli (Pessoa and Adolphs, 2010), a role for which the amygdala appears necessary when detailed social judgments need to be made about faces (Adolphs et al., 1994, 1998), but not when rapid detection or conscious visibility is assessed.

The human amygdala responds to both emotionally and socially significant information, and social stimuli are arguably often emotionally salient as well. However, there seem to be effects of social saliency even independent of emotion: the human amygdala is more strongly activated by neutral social than by non-social information, but is activated to a similar level when viewing socially positive or negative images (Vrticka et al., 2013). Socially relevant information in faces is conveyed in large part by the eye region, including gaze direction (Argyle et al., 1973, Whalen et al., 2004), and viewers predominantly fixate the eyes, a tendency normally correlated with amygdala activation (Gamer and Büchel, 2009). A range of psychiatric disorders feature abnormal fixations onto faces, particularly onto the eye region, and several of these disorders are hypothesized to involve the amygdala (Baron-Cohen et al., 2000, Baron-Cohen, 2004, Dalton et al., 2005). Patients with schizophrenia (Sasson et al., 2007), social phobia (Horley et al., 2004) and autism (Adolphs et al., 2001) all show abnormal facial scanning patterns. Although by no means eliminating the amygdala as one structure contributing to social dysfunction in these disorders, the data from the present study do argue that it may not play a key online role in the components of that dysfunction involving orienting and attentional mechanisms.

CONCLUSION

Our results show unambiguously that an intact amygdala is not required for rapid orienting toward, and conscious detection of, animate stimuli that normally receive preferential processing on these measures. This conclusion leaves open the question of which structures are essential for mediating this effect. Three plausible candidates for further study are the pulvinar nucleus of the thalamus, prefrontal cortex and visual cortices. Both the pulvinar (Tamietto and de Gelder, 2010) and prefrontal cortex (Bar, 2007) have been hypothesized to subserve rapid initial evaluation of stimuli, which can then influence subsequent processing; it is also possible that circuitry within visual cortices could by itself suffice to detect salient stimulus categories. How such mechanisms are initially set up during development, and whether any of them might be innate, remain important topics for future studies.

SUPPLEMENTARY DATA

Supplementary data are available at SCAN online.

Acknowledgments

This research was supported by grants from NSF, the Pfeiffer Family Foundation, the Simons Foundation, and an NIMH Conte Center. We thank Claudia Wilimzig for providing some of the stimuli, Ty Basinger for creating some of the stimuli, Peter Foley for help with the statistical analysis and Mike Tyszka for providing the anatomical scans of the lesion patients. The authors declare no competing financial interests. S.W., N.T., J.N. and R.A. designed experiments. R.H. contributed two patients with amygdala lesions. S.W. and N.T. performed experiments and analyzed data. S.W. and R.A. wrote the article. All authors discussed the results and made comments on the article.

REFERENCES

- Adolphs R. Fear, faces, and the human amygdala. Current Opinion in Neurobiology. 2008;18:166–72. doi: 10.1016/j.conb.2008.06.006.

- Adolphs R. What does the amygdala contribute to social cognition? Annals of the New York Academy of Sciences. 2010;1191:42–61. doi: 10.1111/j.1749-6632.2010.05445.x.

- Adolphs R, Sears L, Piven J. Abnormal processing of social information from faces in autism. Journal of Cognitive Neuroscience. 2001;13:232–40. doi: 10.1162/089892901564289.

- Adolphs R, Tranel D, Damasio AR. The human amygdala in social judgment. Nature. 1998;393:470–4. doi: 10.1038/30982.

- Adolphs R, Tranel D, Damasio H, Damasio A. Impaired recognition of emotion in facial expressions following bilateral damage to the human amygdala. Nature. 1994;372:669–72. doi: 10.1038/372669a0.

- Adolphs R, Tranel D, Hamann S, et al. Recognition of facial emotion in nine individuals with bilateral amygdala damage. Neuropsychologia. 1999;37:1111–17. doi: 10.1016/s0028-3932(99)00039-1.

- Amaral DG, Price JL, Pitkanen A, Carmichael ST. Anatomical organization of the primate amygdaloid complex. In: Aggleton JP, editor. The Amygdala: Neurobiological Aspects of Emotion, Memory, and Mental Dysfunction. New York: Wiley-Liss; 1992. pp. 1–66.

- Anderson AK, Christoff K, Panitz D, De Rosa E, Gabrieli JDE. Neural correlates of the automatic processing of threat facial signals. The Journal of Neuroscience. 2003;23:5627–33. doi: 10.1523/JNEUROSCI.23-13-05627.2003.

- Argyle M, Ingham R, Alkema F, McCallin M. The different functions of gaze. Semiotica. 1973;7:19–32.

- Bagshaw MH, Mackworth NH, Pribram KH. The effect of resections of the inferotemporal cortex or the amygdala on visual orienting and habituation. Neuropsychologia. 1972;10:153–62. doi: 10.1016/0028-3932(72)90054-1.

- Bar M. The proactive brain: using analogies and associations to generate predictions. Trends in Cognitive Sciences. 2007;11:280–9. doi: 10.1016/j.tics.2007.05.005.

- Baron-Cohen S. Autism: research into causes and intervention. Developmental Neurorehabilitation. 2004;7:73–8. doi: 10.1080/13638490310001654790.

- Baron-Cohen S, Ring HA, Bullmore ET, Wheelwright S, Ashwin C, Williams SCR. The amygdala theory of autism. Neuroscience and Biobehavioral Reviews. 2000;24:355–64. doi: 10.1016/s0149-7634(00)00011-7.

- Baxter MG, Murray EA. The amygdala and reward. Nature Reviews Neuroscience. 2002;3:563–73. doi: 10.1038/nrn875.

- Becker B, Mihov Y, Scheele D, et al. Fear processing and social networking in the absence of a functional amygdala. Biological Psychiatry. 2012;72:70–7. doi: 10.1016/j.biopsych.2011.11.024.

- Brainard DH. The psychophysics toolbox. Spatial Vision. 1997;10:433–6.

- Buchanan TW, Tranel D, Adolphs R. The human amygdala in social function. In: Whalen PJ, Phelps EA, editors. The Human Amygdala. New York: Oxford University Press; 2009. pp. 289–320.

- Cauchoix M, Crouzet SM. How plausible is a subcortical account of rapid visual recognition? Frontiers in Human Neuroscience. 2013;7:39. doi: 10.3389/fnhum.2013.00039.

- Dalton KM, Nacewicz BM, Johnstone T, et al. Gaze fixation and the neural circuitry of face processing in autism. Nature Neuroscience. 2005;8:519–26. doi: 10.1038/nn1421.

- De Renzi E, Perani D, Carlesimo GA, Silveri MC, Fazio F. Prosopagnosia can be associated with damage confined to the right hemisphere–an MRI and PET study and a review of the literature. Neuropsychologia. 1994;32:893–902. doi: 10.1016/0028-3932(94)90041-8.

- Dolan RJ. Emotion, cognition, and behavior. Science. 2002;298:1191–4. doi: 10.1126/science.1076358.

- Efron B, Tibshirani RJ. An Introduction to the Bootstrap (Chapman & Hall/CRC Monographs on Statistics & Applied Probability). Boca Raton: Chapman and Hall/CRC; 1994.

- Fox MD, Corbetta M, Snyder AZ, Vincent JL, Raichle ME. Spontaneous neuronal activity distinguishes human dorsal and ventral attention systems. Proceedings of the National Academy of Sciences USA. 2006;103:10046–51. doi: 10.1073/pnas.0604187103.

- Gamer M, Büchel C. Amygdala activation predicts gaze toward fearful eyes. The Journal of Neuroscience. 2009;29:9123–6. doi: 10.1523/JNEUROSCI.1883-09.2009.

- Grimes J. On the failure to detect changes in scenes across saccades. In: Akins K, editor. Perception: Vancouver Studies in Cognitive Science. Vol. 5. New York: Oxford University Press; 1996. pp. 89–110.

- Hampton AN, Adolphs R, Tyszka JM, O'Doherty JP. Contributions of the amygdala to reward expectancy and choice signals in human prefrontal cortex. Neuron. 2007;55:545–55. doi: 10.1016/j.neuron.2007.07.022.

- He S, Cavanagh P, Intriligator J. Attentional resolution and the locus of visual awareness. Nature. 1996;383:334–7. doi: 10.1038/383334a0.

- Herry C, Bach DR, Esposito F, et al. Processing of temporal unpredictability in human and animal amygdala. The Journal of Neuroscience. 2007;27:5958–66. doi: 10.1523/JNEUROSCI.5218-06.2007.

- Hofer PA. Urbach-Wiethe disease (lipoglycoproteinosis; lipoid proteinosis; hyalinosis cutis et mucosae). A review. Acta Dermato-Venereologica Supplementum. 1973;53:1–52.

- Hollingworth A, Henderson JM. Semantic informativeness mediates the detection of changes in natural scenes. Visual Cognition. 2000;7:213–35.

- Horley K, Williams LM, Gonsalvez C, Gordon E. Face to face: visual scanpath evidence for abnormal processing of facial expressions in social phobia. Psychiatry Research. 2004;127:43–53. doi: 10.1016/j.psychres.2004.02.016.

- Itti L, Koch C. Computational modelling of visual attention. Nature Reviews Neuroscience. 2001;2:194–203. doi: 10.1038/35058500.

- Itti L, Koch C, Niebur E. A model of saliency-based visual attention for rapid scene analysis. IEEE Transactions on Pattern Analysis and Machine Intelligence. 1998;20:1254–9.

- Jiang Y, He S. Cortical responses to invisible faces: dissociating subsystems for facial-information processing. Current Biology. 2006;16:2023–9. doi: 10.1016/j.cub.2006.08.084.

- Kanan C, Tong MH, Zhang L, Cottrell GW. SUN: top-down saliency using natural statistics. Visual Cognition. 2009;17:979–1003. doi: 10.1080/13506280902771138.

- Kennedy DP, Glascher J, Tyszka JM, Adolphs R. Personal space regulation by the human amygdala. Nature Neuroscience. 2009;12:1226–7. doi: 10.1038/nn.2381.

- Kirchner H, Thorpe SJ. Ultra-rapid object detection with saccadic eye movements: visual processing speed revisited. Vision Research. 2006;46:1762–76. doi: 10.1016/j.visres.2005.10.002.

- Kling AS, Brothers LA. The amygdala: neurobiological aspects of emotion, memory and mental dysfunction. Yale Journal of Biology and Medicine. 1992;65:540–2.

- Koch C, Tsuchiya N. Attention and consciousness: two distinct brain processes. Trends in Cognitive Sciences. 2007;11:16–22. doi: 10.1016/j.tics.2006.10.012.

- LeDoux JE. The Emotional Brain: The Mysterious Underpinnings of Emotional Life. New York: Simon & Schuster; 1996.

- Li FF, VanRullen R, Koch C, Perona P. Rapid natural scene categorization in the near absence of attention. Proceedings of the National Academy of Sciences USA. 2002;99:9596–601. doi: 10.1073/pnas.092277599.

- MacNeilage PF, Rogers LJ, Vallortigara G. Origins of the left & right brain. Scientific American. 2009;301:60–7. doi: 10.1038/scientificamerican0709-60.

- McGaugh JL. The amygdala modulates the consolidation of memories of emotionally arousing experiences. Annual Review of Neuroscience. 2004;27:1–28. doi: 10.1146/annurev.neuro.27.070203.144157.

- Mormann F, Dubois J, Kornblith S, et al. A category-specific response to animals in the right human amygdala. Nature Neuroscience. 2011;14:1247–9. doi: 10.1038/nn.2899.

- Mormann F, Kornblith S, Quiroga RQ, et al. Latency and selectivity of single neurons indicate hierarchical processing in the human medial temporal lobe. The Journal of Neuroscience. 2008;28:8865–72. doi: 10.1523/JNEUROSCI.1640-08.2008.

- Morris JS, de Gelder B, Weiskrantz L, Dolan RJ. Differential extrageniculostriate and amygdala responses to presentation of emotional faces in a cortically blind field. Brain. 2001;124:1241–52. doi: 10.1093/brain/124.6.1241.

- Morris JS, Ohman A, Dolan RJ. Conscious and unconscious emotional learning in the human amygdala. Nature. 1998;393:467–70. doi: 10.1038/30976.

- New J, Cosmides L, Tooby J. Category-specific attention for animals reflects ancestral priorities, not expertise. Proceedings of the National Academy of Sciences USA. 2007;104:16598–603. doi: 10.1073/pnas.0703913104.

- New JJ, Schultz RT, Wolf J, et al. The scope of social attention deficits in autism: prioritized orienting to people and animals in static natural scenes. Neuropsychologia. 2010;48:51–9. doi: 10.1016/j.neuropsychologia.2009.08.008.

- Ohman A. Automaticity and the amygdala: nonconscious responses to emotional faces. Current Directions in Psychological Science. 2002;11:62–6.

- Olsson P. Real-Time and Offline Filters for Eye Tracking. MSc thesis. Stockholm: KTH Royal Institute of Technology; 2007.

- Paton JJ, Belova MA, Morrison SE, Salzman CD. The primate amygdala represents the positive and negative value of visual stimuli during learning. Nature. 2006;439:865–70. doi: 10.1038/nature04490.

- Pessoa L, Adolphs R. Emotion processing and the amygdala: from a ‘low road’ to ‘many roads’ of evaluating biological significance. Nature Reviews Neuroscience. 2010;11:773–83. doi: 10.1038/nrn2920.

- Pessoa L, Japee S, Sturman D, Ungerleider LG. Target visibility and visual awareness modulate amygdala responses to fearful faces. Cerebral Cortex. 2006;16:366–75. doi: 10.1093/cercor/bhi115.

- Pessoa L, McKenna M, Gutierrez E, Ungerleider LG. Neural processing of emotional faces requires attention. Proceedings of the National Academy of Sciences USA. 2002;99:11458–63. doi: 10.1073/pnas.172403899.

- Rensink RA, O'Regan JK, Clark JJ. To see or not to see: the need for attention to perceive changes in scenes. Psychological Science. 1997;8:368–73.

- Rutishauser U, Tudusciuc O, Neumann D, et al. Single-unit responses selective for whole faces in the human amygdala. Current Biology. 2011;21:1654–60. doi: 10.1016/j.cub.2011.08.035.

- Sander D, Grandjean D, Pourtois G, et al. Emotion and attention interactions in social cognition: brain regions involved in processing anger prosody. Neuroimage. 2005;28:848–58. doi: 10.1016/j.neuroimage.2005.06.023.

- Sasson N, Tsuchiya N, Hurley R, et al. Orienting to social stimuli differentiates social cognitive impairment in autism and schizophrenia. Neuropsychologia. 2007;45:2580–8. doi: 10.1016/j.neuropsychologia.2007.03.009.

- Siebert M, Markowitsch HJ, Bartel P. Amygdala, affect and cognition: evidence from 10 patients with Urbach-Wiethe disease. Brain. 2003;126:2627–37. doi: 10.1093/brain/awg271.

- Tamietto M, de Gelder B. Neural bases of the non-conscious perception of emotional signals. Nature Reviews Neuroscience. 2010;11:697–709. doi: 10.1038/nrn2889.

- Terburg D, Morgan BE, Montoya ER, et al. Hypervigilance for fear after basolateral amygdala damage in humans. Translational Psychiatry. 2012;2:e115. doi: 10.1038/tp.2012.46.

- Todd RM, Talmi D, Schmitz TW, Susskind J, Anderson AK. Psychophysical and neural evidence for emotion-enhanced perceptual vividness. The Journal of Neuroscience. 2012;32:11201–12. doi: 10.1523/JNEUROSCI.0155-12.2012.

- Tsuchiya N, Moradi F, Felsen C, Yamazaki M, Adolphs R. Intact rapid detection of fearful faces in the absence of the amygdala. Nature Neuroscience. 2009;12:1224–5. doi: 10.1038/nn.2380.

- Vallortigara G, Rogers LJ. Survival with an asymmetrical brain: advantages and disadvantages of cerebral lateralization. Behavioral and Brain Sciences. 2005;28:575–89. doi: 10.1017/S0140525X05000105.

- Vrticka P, Sander D, Vuilleumier P. Lateralized interactive social content and valence processing within the human amygdala. Frontiers in Human Neuroscience. 2013;6:358. doi: 10.3389/fnhum.2012.00358.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ. Effects of attention and emotion on face processing in the human brain: an event-related fMRI study. Neuron. 2001;30:829–41. doi: 10.1016/s0896-6273(01)00328-2.

- Whalen PJ, Kagan J, Cook RG, et al. Human amygdala responsivity to masked fearful eye whites. Science. 2004;306:2061. doi: 10.1126/science.1103617.

- Whalen PJ, Rauch SL, Etcoff NL, McInerney SC, Lee MB, Jenike MA. Masked presentations of emotional facial expressions modulate amygdala activity without explicit knowledge. The Journal of Neuroscience. 1998;18:411–18. doi: 10.1523/JNEUROSCI.18-01-00411.1998.

- Yang E, McHugo M, Dukic M, Blake R, Zald D. Advantage of fearful faces in breaking interocular suppression is preserved after amygdala lesions. Journal of Vision. 2012a;12:679.

- Yang J, Bellgowan PSF, Martin A. Threat, domain-specificity and the human amygdala. Neuropsychologia. 2012b;50:2566–72. doi: 10.1016/j.neuropsychologia.2012.07.001.
