Abstract

Intrinsic emotional expressions such as those communicated by faces and vocalizations have been shown to engage specific brain regions, such as the amygdala. Although music constitutes another powerful means to express emotions, the neural substrates involved in its processing remain poorly understood. In particular, it is unknown whether brain regions typically associated with processing ‘biologically relevant’ emotional expressions are also recruited by emotional music. To address this question, we conducted an event-related functional magnetic resonance imaging study in 47 healthy volunteers in which we directly compared responses to basic emotions (fear, sadness and happiness, as well as neutral) expressed through faces, non-linguistic vocalizations and short novel musical excerpts. Our results confirmed the importance of fear in emotional communication, as revealed by significant blood oxygen level-dependent signal increases in a cluster within the posterior amygdala and anterior hippocampus, as well as in the posterior insula, across all three domains. Moreover, subject-specific amygdala responses to fearful music and vocalizations were correlated, consistent with the proposal that the brain circuitry involved in the processing of musical emotions might be shared with the one that has evolved for vocalizations. Overall, our results show that processing of fear expressed through music engages some of the same brain areas known to be crucial for detecting and evaluating threat-related information.

Keywords: amygdala, hippocampus, fear, emotional expressions, music, vocalizations

INTRODUCTION

Emotional communication plays a fundamental role in social interactions. In particular, emotional expressions provide critical information about the emitter’s affective state and/or the surrounding environment. As such, these signals can help guide behavior and even have, in some cases, survival value. Emotional information can be transmitted through different modalities and channels. In humans, the most studied class of emotional expressions is that conveyed by facial expressions (Fusar-Poli et al., 2009). Building on a large literature on experimental animals, through lesion studies and, especially, neuroimaging experiments, researchers have begun to delineate the neural structures involved in the processing of facial expressions, highlighting the amygdala as a key structure (Adolphs et al., 1995; Mattavelli et al., 2014). Although the vast majority of studies have focused on amygdala responses to fearful faces, there is now strong evidence that the amygdala also responds to other emotions, such as anger, sadness, disgust and happiness (Sergerie et al., 2008). These findings are in line with more recent theories suggesting a wider role for the amygdala in emotion, including the processing of signals of distress (Blair et al., 1999) or ambiguous emotional information such as surprise (Whalen et al., 2001; Yang et al., 2002). Others posit that the amygdala acts as a relevance detector to biologically salient information (Sander et al., 2003) or even to ‘potential’ (but not necessarily actual) relevant stimuli (for a review, see Armony, 2013). Although much less studied than faces, there is growing support for the notion that the amygdala is also involved in the decoding of emotional expressions (including, but not limited to, fear) conveyed by other channels, such as body gestures (de Gelder, 2006; Grèzes et al., 2007; Grèzes et al., 2013) and non-linguistic vocalizations (Phillips et al., 1998; Morris et al., 1999; Fecteau et al., 2007). This is consistent with the fact that the amygdala receives information from various sensory modalities (Amaral et al., 1992; Young et al., 1994; Swanson and Petrovich, 1998).

In order for emotional signals to be useful, their intended meaning needs to be accurately decoded by the receiver. This meaning can be hardwired, shaped through evolution, as seems to be the case (at least in part) for facial, body and vocal expressions (Izard, 1994; Kreiman, 1997; Darwin, 1998; Barr et al., 2000), or learnt by explicit arbitrary associations (e.g. the meaning of ‘· · · – – – · · ·’ in Morse code). In this context, emotions conveyed by music are of particular interest: music is very powerful in expressing emotions (Juslin and Sloboda, 2001) despite having no significant biological function or survival value. Yet, there is mounting evidence suggesting that emotions associated with music are not just the result of individual learning. For instance, a few studies suggest that the evaluation of emotions conveyed by (unfamiliar) music is highly consistent among individuals with varying degrees of musical preferences and training, even when comparing different cultures (Fritz et al., 2009). This has led to the proposal that musical emotions might be constrained by innate mechanisms, as is the case for facial expressions (Grossmann, 2010), vocal expressions (Sauter et al., 2010) and basic tastes (sweet, salt, sour, bitter; Steiner, 1979). Although the origin of this putative predisposition remains to be determined, it has been suggested that musical emotions owe their precociousness and efficacy to the ‘invasion’ (Dehaene and Cohen, 2007) of the brain circuits that have evolved for emotional responsiveness to vocal expressions (for reviews, see Peretz, 2006; Peretz et al., 2013). This hypothesis is consistent with neuroimaging studies showing some degree of overlap in the regions responsive to (non-emotional) voice and music, particularly along the superior temporal gyrus (Maess et al., 2001; Steinbeis and Koelsch, 2008; Slevc et al., 2009; Angulo-Perkins, A., Aubé, W., Peretz, I., Barrios, F.A., Armony, J. & Concha, L., submitted for publication; see Schirmer et al., 2012 for a meta-analysis).

According to this model, brain responses to emotional music should be, at least in part, overlapping with those involved in processing emotions conveyed by voice—and possibly other modalities—in particular within the amygdala. Although some studies have indeed reported amygdala activation in response to emotional music (Koelsch, 2010), results are still largely inconsistent (Armony and LeDoux, 2010; Peretz et al., 2013). Part of this inconsistency may arise from the fact that most previous studies investigating brain responses to emotional music have used long, familiar stimuli mainly selected from the musical repertoire (Blood and Zatorre, 2001; Brown et al., 2004; Menon and Levitin, 2005; Koelsch et al., 2006; Flores-Gutiérrez et al., 2007; Mitterschiffthaler et al., 2007; Green et al., 2008; Escoffier et al., 2013; Koelsch et al., 2013), as well as widely different control conditions [e.g. dissonant musical stimuli (Blood et al., 1999), scrambled musical pieces (Menon and Levitin, 2005; Koelsch et al., 2006) or rest (Brown et al., 2004)]. The latter issue is of particular importance, as the choice of the control condition in neuroimaging experiments can have significant effects on the results obtained, particularly in the case of emotional stimuli (Armony and Han, 2013). In terms of commonalities in brain responses to emotional music and voice, very little data exist, as almost all studies have focused on only one of those domains. The one study that did explicitly compare them failed to detect any regions commonly activated by emotional (sad and happy) music and prosody (relative to their neutral counterparts) (Escoffier et al., 2013). The authors interpreted this null finding as possibly due to individual differences among subjects and/or lack of statistical power.

Thus, the main objective of the present study was to investigate brain responses to individual basic emotions expressed through music, in a large sample, and directly compare them, in the same subjects and during the same testing session, with the equivalent emotions expressed by faces and vocalizations. Specifically, our aims were (i) to identify the brain regions responsive to specific basic emotions (fear, sadness and happiness) expressed by short unknown musical stimuli, and whether these responses were modulated by musical expertise; (ii) to determine whether there are any brain regions commonly engaged by emotional expressions conveyed through different modalities (i.e. auditory and visual) and domains (i.e. faces, vocalizations and music); and (iii) to determine whether there is a correlation in the magnitude of the individual responses to specific emotions across domains (suggesting common underlying mechanisms). To do so, we conducted a functional magnetic resonance imaging (fMRI) experiment in which faces, non-linguistic vocalizations and musical excerpts expressing fear, sadness and happiness (as well as emotionally neutral stimuli) were presented in a pseudo-random (event-related) fashion. Subjects with varying degrees of musical training were recruited to determine whether musical experience modulates brain responses to emotional music (Strait et al., 2010; Lima and Castro, 2011).

METHODS

Subjects

Forty-seven healthy right-handed volunteers (age: M = 26.4, s.d. = 4.8, 20 female) with no history of hearing impairments took part in the study. Of those, 23 (9 female) had at least 5 years of musical training (M = 10.6, s.d. = 5.5).

Stimuli

Music

Sixty novel instrumental clips were selected from a previously validated set of musical stimuli (Vieillard et al., 2008; Aubé et al., 2013). They were written according to the rules of the Western tonal system, based on a melody with an accompaniment and specifically designed to express fear, sadness, happiness and ‘peacefulness’ (neutral condition). Recordings of each score were made with piano and violin, each played by a professional musician. Each clip was then segmented to have a duration similar to that of the vocalizations (mean duration: 1.47 s; s.d.: 0.13 s). Half of the final set was composed of piano clips and the other half of violin, equally distributed for all emotions. Physical characteristics of the stimuli are shown in Table 1.

Table 1.

Description of the auditory stimuli

Acoustic parameters: mean (s.d.)

| Emotion | Duration (ms) | Number of events | Mode | Tempo | Spectral centroid (Hz) | Spectral flux (a.u.) | Intensity flux (a.u.) | Spectral regularity (a.u.) | RMS (a.u.) | HNR (dB) | Median f0 (Hz) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Music | | | | | | | | | | | |
| Fear | 1454 (115) | 6*,** (2.52) | Minor | 119.75 (43.16)*,** | 1763.44 (866.33) | 28.68*,** (13.81) | 271.23 (63.83) | 1.18 (0.28) | 0.0979 (0.0219) | 8.09** (5.71) | 169.69 (97.88) |
| Happiness | 1431 (159) | 7.83*,** (2.26) | Major | 149 (33.03)*,** | 1930.48 (824.03) | 31.45*,** (12.09) | 246.46 (58.83) | 0.96 (0.36) | 0.1059 (0.0263) | 15.75 (7.19) | 261.26 (108.59) |
| Sadness | 1473 (71) | 2.92 (1.29) | Minor | 65.33 (17.19) | 1767.13 (954.10) | 17.63 (10.16) | 276.47 (56.94) | 1.07 (0.32) | 0.0962 (0.0187) | 15.09 (9.05) | 207.90 (111.90) |
| Neutral | 1505 (152) | 3.75 (1.05) | Major | 69.79 (7.54) | 1637.26 (660.44) | 19.92 (6.82) | 281.34 (46.25) | 1.02 (0.35) | 0.1069 (0.0274) | 13.27 (8.51) | 186.09 (92.69) |
| Vocalizations | | | | | | | | | | | |
| Fear | 1362 (289) | n/a | n/a | n/a | 2567.45*,** (784.82) | 85.09*,** (31.30) | 372.83** (70.28) | 0.98* (0.59) | 0.2394*,** (0.0726) | 8.81 (5.44) | 303.66 (92.5) |
| Happiness | 1766 (179) | n/a | n/a | n/a | 1798.60 (566.58) | 66.91 (31.06) | 323.39 (61.59) | 0.73 (0.47) | 0.1967** (0.0688) | 9.57 (4.24) | 257.97 (88.27) |
| Sadness | 1404 (450) | n/a | n/a | n/a | 2328.96 (1093.57) | 52.67 (20.64) | 307.97 (59.25) | 0.65 (0.29) | 0.1299 (0.0381) | 10.36 (5.42) | 295.69 (75.00) |
| Neutral | 1264 (336) | n/a | n/a | n/a | 1912.84 (624.22) | 57.86 (27.89) | 315.63 (112.08) | 0.53 (0.31) | 0.1747 (0.0733) | 10.45 (6.18) | 257.94 (78.55) |

Note: Significantly different from *neutral and **sad (P < 0.05, Bonferroni corrected). a.u., arbitrary units. The spectral centroid (weighted mean of spectrum energy) reflects the global spectral distribution and has been used to describe the timbre, whereas the spectral flux conveys spectrotemporal information (variation of the spectrum over time) (Marozeau et al., 2003; MIRtoolbox; Lartillot et al., 2008). The intensity flux is a measure of loudness as a function of time (Glasberg and Moore, 2002; Loudness toolbox. GENESIS®, Aix en Provence, France). Other measures were also computed, such as the root mean square (RMS), the harmonic-to-noise ratio (HNR) and the median f0 (Fecteau et al., 2007; Lima et al., 2013; Boersma & Weenink, 2014).

Vocalizations

Sixty non-linguistic vocalizations (mean duration: 1.41 s; s.d.: 0.37 s) were selected from sets previously used in behavioral (Fecteau et al., 2005; Armony et al., 2007; Aubé et al., 2013) and neuroimaging (Fecteau et al., 2004, 2007; Belin et al., 2008) experiments. They consisted of 12 stimuli per emotional category—happiness (laughter and pleasure), sadness (cries) and fear (screams)—and 24 emotionally neutral ones (12 coughs and 12 yawns), each produced by a different speaker (half female). The main acoustic parameters are shown in Table 1.

Faces

Sixty gray-scale pictures of facial expressions (duration: 1.5 s) were selected from a validated data set (Sergerie et al., 2006; 2007). They included 12 stimuli per emotional category (fear, happiness and sadness) and 24 emotionally neutral. Each stimulus depicted a different individual (half female). Uniform face size, contrast and resolution were achieved using Adobe Photoshop 7.0 (Adobe Systems, San Jose, CA). Hair was removed to emphasize the facial features.

Procedure

Stimuli were presented in a pseudo-random order in an event-related design, through MRI-compatible headphones and goggles (Nordic NeuroLab, Bergen, Norway). To prevent habituation or the induction of mood states, no more than three stimuli of the same modality or domain (i.e. faces, voices or music), two stimuli of the same emotion category or three stimuli of the same valence (i.e. positive or negative) were presented consecutively. Stimulus duration was on average 1.5 s, with an average intertrial interval (ITI) of 2.5 s. A number of longer ITIs (so-called ‘null events’) were included to obtain a reliable estimate of baseline activity and to prevent stimulus onset expectation. In addition, stimulus onsets were de-synchronized with respect to the onset of volume acquisitions to increase the effective sampling rate and allow an even sampling of voxels across the entire brain volume (Josephs et al., 1997). To ensure attention, participants were asked to press a button on the sporadic presentation of a visual (inverted face) or auditory (500 Hz pure tone) target. A short run in the scanner was conducted prior to the experimental session to ensure that auditory stimuli were played at a comfortable sound level and that subjects could perform the task. In the same scanning session, two other runs were conducted using a different set of auditory stimuli. Results of these are presented elsewhere (Angulo-Perkins et al., submitted for publication). After scanning, subjects rated all auditory stimuli on valence and intensity (‘arousal’) using a visual analog scale (see Aubé et al., 2013).
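The sequencing constraints described above can be illustrated with a short sketch. This is not the authors' randomization procedure, which the text does not describe; it is one possible approach (weighted random selection with restarts) written under stated assumptions. The trial counts per domain (12 fear, 12 happiness, 12 sadness, 24 neutral) are given in the text for faces and vocalizations and are only assumed here for music. The valence coding, and the choice to let neutral trials interrupt a valence run, are likewise assumptions, and all function and variable names are hypothetical.

```python
import random
from collections import Counter

DOMAINS = ("faces", "vocalizations", "music")
# Assumed trial counts per domain (stated for faces and vocalizations, assumed for music).
COUNTS = {"fear": 12, "happiness": 12, "sadness": 12, "neutral": 24}
# Assumed valence coding; neutral trials carry no valence and so break valence runs.
VALENCE = {"fear": "negative", "sadness": "negative",
           "happiness": "positive", "neutral": None}

# Each rule: (function extracting an attribute from a trial, longest run allowed).
RULES = (
    (lambda t: t[0], 3),           # same domain
    (lambda t: t[1], 2),           # same emotion category
    (lambda t: VALENCE[t[1]], 3),  # same valence
)

def trailing_run(seq, key):
    """Length of the run of equal key values at the end of seq (0 if that value is None)."""
    if not seq or key(seq[-1]) is None:
        return 0
    last, n = key(seq[-1]), 0
    for trial in reversed(seq):
        if key(trial) != last:
            break
        n += 1
    return n

def allowed(seq, trial):
    """True if appending trial keeps every run within its limit."""
    tail = seq[-3:] + [trial]  # the longest permitted run is 3, so 4 trials suffice
    return all(trailing_run(tail, key) <= limit for key, limit in RULES)

def make_order(rng, max_restarts=10_000):
    """Build one constrained order of (domain, emotion) trials, restarting on dead ends."""
    for _ in range(max_restarts):
        remaining = Counter({(d, e): n for d in DOMAINS for e, n in COUNTS.items()})
        seq = []
        while remaining:
            options = [t for t in remaining if allowed(seq, t)]
            if not options:
                break  # dead end: discard this attempt and restart
            # Weight by remaining count so that no condition is left over in bulk.
            trial = rng.choices(options, weights=[remaining[t] for t in options])[0]
            seq.append(trial)
            remaining[trial] -= 1
            if remaining[trial] == 0:
                del remaining[trial]
        else:
            return seq
    raise RuntimeError("no valid order found")

order = make_order(random.Random(1))
```

Weighting each draw by the number of trials left in a condition keeps the pool roughly balanced towards the end of the run, which reduces the chance that only disallowed conditions remain.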

Image acquisition

Functional images were acquired using a 3-T MR750 scanner (General Electric, Waukesha, Wisconsin) with a 32-channel coil using parallel imaging with an acceleration factor of 2. Each volume consisted of 35 slices (3 mm thick), acquired with a gradient-echo, echo planar imaging sequence (field of view (FOV) = 256 × 256 mm², matrix = 128 × 128, repetition time (TR) = 3 s, echo time (TE) = 40 ms; voxel size = 2 × 2 × 3 mm³). A 3D T1-weighted image was also acquired and used for registration (voxel size = 1 × 1 × 1 mm³, TR = 2.3 s, TE = 3 ms).

Statistical analysis

Image preprocessing and analysis were carried out using SPM8 (Wellcome Trust Centre for Neuroimaging, London, UK; http://www.fil.ion.ucl.ac.uk/spm). A first-level single-subject analysis included all 12 expressions (3 domains × 4 emotions), visual and auditory targets and the six realignment parameters. Parameter estimates for each condition were then taken to a second-level repeated-measures analysis of variance (ANOVA). Statistical significance was established using a voxel-level threshold of P = 0.001 together with a cluster-based correction for multiple comparisons (k = 160; P < 0.05, familywise error (FWE)) obtained through Monte Carlo simulations (n = 10 000) using a procedure adapted from Slotnick et al. (2003, https://www2.bc.edu/∼slotnick/scripts.htm) and the spatial smoothness estimated from the actual data. The exception was the amygdala, where, owing to its small size, a voxel-level correction for multiple comparisons based on Gaussian random field theory was used instead (P < 0.05, FWE; Worsley et al., 2004). To assess regions preferentially responsive to a given domain (faces, music or vocalizations), regardless of emotional expression, contrasts representing main effects were used. To identify regions commonly activated by a given emotion across the three domains, the main effect of that emotion (relative to neutral) was used. To ensure that the candidate clusters were indeed responsive to that emotion for all domains, the resulting statistical map was masked inclusively by the conjunction of the three individual simple main effects (‘minimum statistic compared to the conjunction null’, P < 0.05).
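The logic of a Monte Carlo cluster-extent threshold can be sketched on a toy problem. The example below is not the procedure of Slotnick et al. (2003) and does not reproduce the value k = 160; it is a minimal illustration that assumes a small 2D grid instead of a brain volume, an arbitrary binomial smoothing kernel instead of the smoothness estimated from the data, and 4-connected clusters. All names and default parameter values are hypothetical. The idea is to simulate smooth noise, threshold it at the voxel-level P value, record the largest cluster in each simulation, and choose the smallest extent k that is reached in fewer than 5% of simulations.

```python
import random
from statistics import NormalDist

def smooth_wrap(grid, kernel):
    """Separable smoothing of a square 2D grid with wrap-around edges."""
    n, half = len(grid), len(kernel) // 2
    # Smooth along rows first, then along columns.
    rows = [[sum(w * grid[i][(j + d - half) % n] for d, w in enumerate(kernel))
             for j in range(n)] for i in range(n)]
    return [[sum(w * rows[(i + d - half) % n][j] for d, w in enumerate(kernel))
             for j in range(n)] for i in range(n)]

def largest_cluster(mask):
    """Size of the largest 4-connected cluster of True cells (edges not wrapped)."""
    n = len(mask)
    seen = [[False] * n for _ in range(n)]
    best = 0
    for i in range(n):
        for j in range(n):
            if mask[i][j] and not seen[i][j]:
                seen[i][j] = True
                stack, size = [(i, j)], 0
                while stack:  # iterative flood fill
                    a, b = stack.pop()
                    size += 1
                    for x, y in ((a + 1, b), (a - 1, b), (a, b + 1), (a, b - 1)):
                        if 0 <= x < n and 0 <= y < n and mask[x][y] and not seen[x][y]:
                            seen[x][y] = True
                            stack.append((x, y))
                best = max(best, size)
    return best

def cluster_extent_threshold(n_sims=200, size=24, kernel=(1, 4, 6, 4, 1),
                             voxel_p=0.001, alpha=0.05, seed=0):
    """Smallest extent k such that P(largest noise cluster >= k) < alpha."""
    rng = random.Random(seed)
    z_cut = NormalDist().inv_cdf(1 - voxel_p)  # one-tailed voxel-level cutoff
    # For unit-variance white noise, the separably smoothed field has
    # standard deviation sum(w^2); dividing by it restores unit variance.
    scale = sum(w * w for w in kernel)
    maxima = []
    for _ in range(n_sims):
        noise = [[rng.gauss(0, 1) for _ in range(size)] for _ in range(size)]
        field = smooth_wrap(noise, kernel)
        mask = [[value / scale > z_cut for value in row] for row in field]
        maxima.append(largest_cluster(mask))
    k = 1
    while sum(m >= k for m in maxima) / n_sims >= alpha:
        k += 1
    return k, maxima

k, maxima = cluster_extent_threshold()
```

In a real analysis the grid would be the 3D brain mask, the smoothness would be estimated from the data, and many more simulations would be run (10 000 in the present study).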

RESULTS

Behavior

Subjects’ performance in the target detection task was well above chance (91% and 96% for auditory and visual targets, respectively), confirming that participants were able to perceive and distinguish visual and auditory stimuli without any problems and that they were paying attention to them throughout the experiment.

Behavioral ratings confirmed that stimuli were judged to have valence and intensity values consistent with their a priori assignment. As expected, there were significant main effects of emotional category on the valence (F(3, 59) = 112.29, η2 = 0.86, P < 0.001) and intensity (F(3, 59) = 62.01, η2 = 0.77, P < 0.001) ratings for music. All post hoc pairwise comparisons for valence ratings were significant (Ps < 0.001) except for fear vs sadness (P = 0.9), whereas all pairwise comparisons of emotional intensity were significant (Ps < 0.001) with the exception of fear vs happiness (P = 0.9).

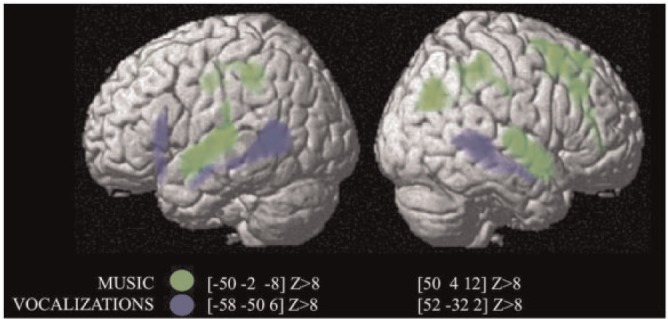

Domain-preferential responses

The contrast between visual (faces) and auditory (vocalizations and music) stimuli for all expressions revealed significant bilateral activation in posterior visual regions, including the fusiform face area ([36, −48, −26], Z > 8; [−36, −56, −24], Z > 8) (Table 2). The opposite contrast activated auditory cortex in the temporal lobes bilaterally [superior temporal sulcus (STS)/superior temporal gyrus (STG)], including the auditory core, belt and parabelt regions ([62, −20, 0], Z > 8; [−54, −24, 2], Z > 8) (Table 2). The contrast between vocalizations and music revealed significant clusters along the STS bilaterally (Figure 1, ‘blue’) (Table 2), whereas the contrast music minus vocalizations activated a more anterior region on the STG bilaterally (Figure 1, ‘green’) (Table 2). Post hoc analysis also revealed stronger activations for musicians than non-musicians in a cluster within this music-responsive area in the left hemisphere ([−52, −2, −10], k = 20, Z = 3.88, P < 0.001).

Table 2.

Significant activations associated with the main contrasts of interest

| Anatomical location | Hemisphere | x | y | z | Z-score (peak voxel) |
|---|---|---|---|---|---|
| Faces > (music + vocalizations) | | | | | |
| Occipital | Left | −38 | −82 | −14 | >8 |
| Motor cortex | Right | 44 | 2 | 30 | >8 |
| SMA | Left | −32 | −14 | 48 | 6.83 |
| dlPFC | Right | 50 | 34 | 18 | 5.6 |
| (Music + vocalizations) > faces | | | | | |
| STS/STG | Right | 62 | −20 | 0 | >8 |
| STS/STG | Left | −54 | −24 | 2 | >8 |
| Vocalizations > music | | | | | |
| STS (posterior) | Right | 52 | −32 | −2 | 7.63 |
| STS (posterior) | Left | −58 | −50 | 6 | 6.82 |
| IFG | Left | −48 | 22 | 8 | 4.58 |
| Music > vocalizations | | | | | |
| STG (anterior) | Left | −50 | −2 | −8 | >8 |
| STG (anterior) | Right | 50 | 4 | −12 | >8 |
| Parietal | Right | 48 | −72 | 36 | 4.71 |
| mPFC | Right | 22 | 30 | 52 | 4.62 |
| Parietal lobule | Left | −36 | −36 | 42 | 4.5 |
| Musicians > non-musicians | | | | | |
| STG (anterior) | Left | −52 | −2 | −10 | 3.88 |
| Main effect of fear | | | | | |
| Insula (posterior) | Left | −38 | −26 | 2 | 5.73 |
| Insula (posterior) | Right | 42 | −22 | −6 | 5.46 |
| Amygdala/hippocampus | Left | −28 | −8 | −22 | 4.79 |
| Music (fear > neutral) | | | | | |
| STG | Right | 44 | −18 | −6 | 4.91 |
| STG | Left | −46 | −16 | −2 | 4.62 |
| Music (happiness > neutral) | | | | | |
| STG | Left | −52 | 0 | −10 | 6.16 |
| STG | Right | 54 | 4 | −10 | 5.76 |
| Music (correlations between BOLD signal and intensity) | | | | | |
| STG | Left | −52 | −8 | −6 | 5.40 |
| STG | Right | 56 | −24 | −2 | 4.29 |

Fig. 1.

Statistical parametric map showing the significant clusters with the contrast of vocalizations > music (blue) and music > vocalizations (green).

Main effect of emotions across domains

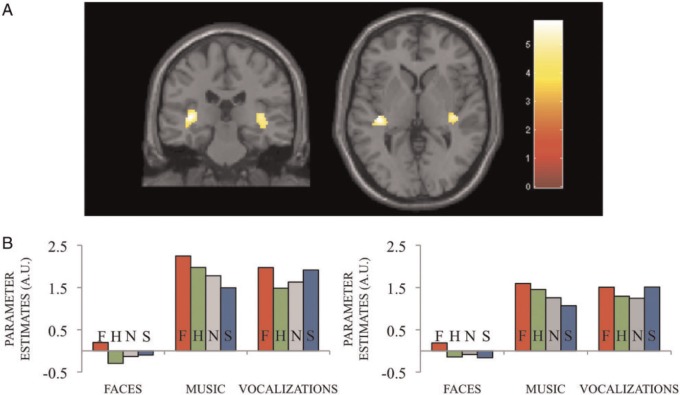

Fear

The contrast corresponding to the main effect of fear expressions, compared with neutral ones, across domains (i.e. faces, vocalizations and music) revealed increased activity in the left amygdala/hippocampus (Figure 2) ([−28, −8, −22], Z = 4.79) and in the posterior insula bilaterally (Figure 3) (left: [−38, −26, 2], Z = 5.73; right: [42, −22, −6], Z = 5.46) (Table 2).

Fig. 2.

(A) Amygdala cluster associated with the main effect of fearful minus neutral expressions across domains ([−28, −8, −22] Z = 4.79). (B) Cluster-averaged parameter estimates for the conditions of interest. (C) Cluster-averaged evoked BOLD responses for fearful and neutral expressions corresponding to the three domains. a.u.: arbitrary units.

Fig. 3.

(A) Significant clusters in the posterior insula for the main effect of fearful minus neutral expressions across domains, and (B) the corresponding cluster-averaged parameter estimates for the conditions of interest. Left [−38, −26, 2], right [42, −22, −6].

To explore whether the blood oxygen level-dependent (BOLD) signal increase for fearful expressions was correlated across domains, we calculated pairwise semi-partial correlations of the subject-specific parameter estimates between domains, removing the contribution of the other expressions (to account for possible other, non–emotion-specific effects, such as attention, sensory processing, etc.). In the left amygdala/hippocampus cluster, a significant correlation was found between fearful music and vocalizations (r(42) = 0.34, P < 0.05), but no significant correlations were found between either music or vocalizations and faces (P’s > 0.05). No significant correlations were obtained in the posterior insula (P’s > 0.1).
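A semi-partial correlation of this kind can be sketched as follows. The example is a simplification and not the authors' analysis code: it removes a single covariate `z` from one variable only, whereas the analysis described above removed the contribution of several other expressions, and the text does not specify from which variable the covariates were removed. The data values and function names are hypothetical. With one covariate, the semi-partial correlation of `x` with the part of `y` not explained by `z` is (r_xy − r_xz·r_yz) / √(1 − r_yz²), which equals the plain correlation between `x` and the residuals of `y` regressed on `z`.

```python
from math import sqrt

def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def semipartial(x, y, z):
    """Correlation between x and the part of y not linearly explained by z."""
    r_xy, r_xz, r_yz = pearson(x, y), pearson(x, z), pearson(y, z)
    return (r_xy - r_xz * r_yz) / sqrt(1 - r_yz ** 2)

def residuals(y, z):
    """Residuals of the simple linear regression of y on z."""
    n = len(y)
    my, mz = sum(y) / n, sum(z) / n
    slope = (sum((a - mz) * (b - my) for a, b in zip(z, y))
             / sum((a - mz) ** 2 for a in z))
    return [b - my - slope * (a - mz) for a, b in zip(z, y)]

# Hypothetical per-subject parameter estimates (arbitrary units).
fear_music = [0.2, 0.5, 0.1, 0.9, 0.4, 0.7]
fear_voice = [0.3, 0.6, 0.2, 0.7, 0.1, 0.8]
other_expr = [0.1, 0.4, 0.3, 0.5, 0.2, 0.9]

sr = semipartial(fear_music, fear_voice, other_expr)
# The same value obtained by explicitly residualizing fear_voice on other_expr.
sr_check = pearson(fear_music, residuals(fear_voice, other_expr))
```

Extending this to several covariates would replace the simple regression in `residuals` with a multiple regression on all of them.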

There were no significant differences between male and female subjects or between musicians and non-musicians for this contrast, either with a between-group whole-brain analysis or through post hoc comparisons in the regions obtained in the main effect of fear vs neutral expressions. No correlation was found either between amygdala activity and any of the acoustic parameters of the auditory stimuli included in Table 1.

Happiness and sadness

No significant activations were found in any brain areas for the main effect of happiness or sadness across domains.

Music

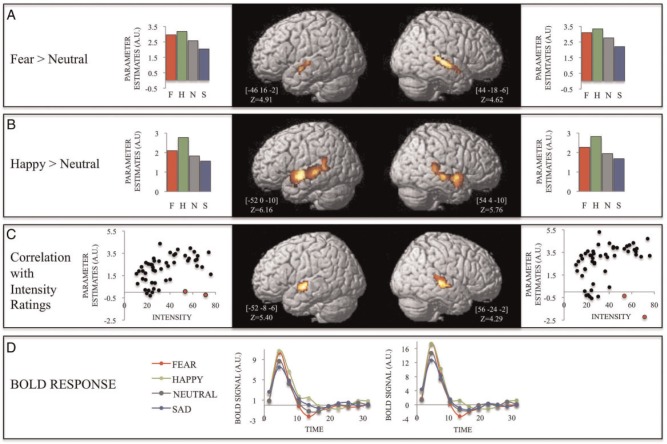

To determine whether other brain regions were activated by emotions expressed through music stimuli but not necessarily common to the other domains, we also performed emotion-specific contrasts only for music stimuli.

Fear

The contrast between fear and neutral music revealed significant bilateral activity along the anterior STG (Figure 4) (left: [−46, −16, −2], Z = 4.62; right: [44, −18, −6], Z = 4.91) (Table 2).

Fig. 4.

Significant clusters in the temporal lobe for the contrasts (A) Fear minus neutral and (B) Happy minus neutral for musical expressions, and the corresponding cluster-averaged parameter estimates. (C) Significant clusters associated with the correlation between BOLD signal and intensity ratings, and the corresponding cluster-averaged scatter plots. The two points in red in each graph represent measures with high Cook’s Distance scores (Left: 0.37 and 0.11; Right: 0.93 and 0.1). Removing these outliers increased the correlation values of the clusters in the left and right hemispheres to 0.52 and 0.48 (P < 0.0001), respectively. (D) Cluster-averaged evoked BOLD responses for fearful and neutral music for the clusters depicted in C.

Happiness

Activity associated with happiness contrasted with neutral revealed significant bilateral activity along the STG (Figure 4) (left: [−52, 0, −10], Z = 6.16; right: [54, 4, −10], Z = 5.76) and the STS (left: [−62, −26, 0], Z = 5.10; right: [56, −22, −6], Z = 4.87) (Table 2).

Sadness

No BOLD signal increase was found for the contrast of sadness compared with neutral in the case of music excerpts.

Arousal

As shown in Figure 4, the regions within the STG showing significantly stronger responses for fearful and happy, compared with neutral, music were very similar. Moreover, as evidenced by the parameter estimates in those clusters, the response to sad music in these regions was similar to neutral and significantly lower than either fear or happy. Thus, these results suggest that this region may be sensitive to emotional intensity (arousal) rather than to a specific emotion or to valence. To further test this hypothesis, we conducted a stimulus-based subsidiary analysis in which each music stimulus was modeled separately as a condition and the corresponding parameter estimates were taken to a second-level correlation analysis with the intensity values obtained in the post-scan ratings. This analysis yielded significant clusters in the STG bilaterally (left: [−52, −8, −6], Z = 5.40; right: [56, −24, −2], Z = 4.29), which overlapped with the ones obtained in the above-mentioned analyses (Figure 4). Increased BOLD signal activity along the STG was not modulated by the number of events of the musical clips or any other acoustical features.
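The outlier screening reported in the caption of Figure 4 relies on Cook's distance, which measures how much a fitted regression changes when a single observation is removed. The sketch below computes it for a simple linear regression using the standard closed-form expression D_i = e_i² / (p · MSE) · h_i / (1 − h_i)², with p = 2 fitted parameters and leverage h_i = 1/n + (x_i − x̄)² / S_xx. It is an illustration only, not the authors' code: the data are hypothetical stand-ins for stimulus-wise intensity ratings and parameter estimates, and the function name is an assumption.

```python
def cooks_distance(x, y):
    """Cook's distance of each observation in the simple linear regression of y on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sxx
    intercept = my - slope * mx
    resid = [b - (intercept + slope * a) for a, b in zip(x, y)]
    p = 2  # fitted parameters: intercept and slope
    mse = sum(e * e for e in resid) / (n - p)
    leverage = [1 / n + (a - mx) ** 2 / sxx for a in x]
    return [(e * e / (p * mse)) * h / (1 - h) ** 2
            for e, h in zip(resid, leverage)]

# Hypothetical values: intensity rating (x) and parameter estimate (y) per stimulus.
ratings = [1, 2, 3, 4, 5, 6]
estimates = [1.1, 1.9, 3.2, 3.9, 5.1, 12.0]

distances = cooks_distance(ratings, estimates)
most_influential = max(range(len(distances)), key=distances.__getitem__)
```

In this toy data set the last stimulus combines a large residual with high leverage, so it receives the largest Cook's distance.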

DISCUSSION

The main goal of the present study was to investigate brain responses to facial expressions, non-linguistic vocalizations and short novel musical excerpts expressing fear, happiness and sadness, compared with a neutral condition. More specifically, we aimed at determining whether there are common brain areas involved in processing emotional expressions across domains and modalities and whether there are any correlations in the magnitude of the response at a subject level. We also sought to identify brain regions responsive to specific basic emotions expressed through music. We observed significant amygdala and posterior insula activation in response to fearful expressions for all three domains. Interestingly, subject-specific amygdala responses to fearful music and vocalizations were correlated, whereas no such correlation was observed for faces. When exploring responses to emotional music alone, we observed significantly stronger activation for happy and fearful excerpts than for sad and neutral ones in a region within the anterior STG that preferentially responds to musical stimuli, compared with other auditory signals. Further analyses showed that music responses in this area are modulated by emotional intensity or arousal. Finally, we failed to observe any differences in these emotion-related responses as a function of participants’ sex or musical expertise.

Fear and amygdala

A cluster within the left amygdala responded to expressions of fear regardless of domain. That is, fearful faces, vocalizations and musical excerpts elicited significantly larger activity in this region when compared with their neutral counterparts. This is consistent with the established role of the amygdala in the processing of threat-related stimuli, both in the visual and auditory modalities. Our findings, however, suggest that the involvement of this structure in fear is not restricted to innate or biologically relevant stimuli, as previously suggested (for a review, see Armony, 2013). Indeed, we observed that the same voxels in the amygdala that responded to stimuli that have arguably been shaped by evolution to inform conspecifics about the presence of danger in the environment, namely fearful faces and vocalizations, also responded to musical excerpts that conveyed the same type of information. Importantly, as these latter stimuli were unknown to the subjects and varied in their acoustical properties, it is unlikely that the amygdala responses were the result of some form of direct learning or classical conditioning, or related to some basic acoustic feature of the stimuli, such as frequency (none of the measured acoustic parameters significantly correlated with amygdala activity). Moreover, the within-subject design, which allowed us to compare brain activity across domains at an individual level, revealed that the magnitude of amygdala activity in response to fearful music and vocalizations was correlated, whereas no correlation was found between visual and auditory expressions, either music or vocalizations. This suggests that the amygdala may process different domains within a sensory modality in a similar fashion, consistent with the proposal that music and voice share the same emotional neural pathway (see Juslin and Västfjäll, 2008; Juslin, 2013; Peretz et al., 2013).

Comparison with previous imaging studies of emotional music

Lesion and neuroimaging studies have consistently implicated the amygdala in the processing of fearful expressions conveyed by faces and, to a lesser extent, vocalizations. In contrast, although amygdala activity has been reported in response to dissonant (unpleasant) or irregular music, unexpected chords (Blood and Zatorre, 2001; Koelsch et al., 2006) and musical tension (Lehne et al., 2014), few studies have directly explored the neural responses to fearful music (Koelsch et al., 2013; Peretz et al., 2013). In fact, only two sets of studies, one involving neurological patients and one using fMRI, exist. Gosselin et al. investigated the recognition of emotional music, using similar stimuli to those of the current study, in a group of epileptic patients who had undergone a medial temporal resection including the amygdala (Gosselin et al., 2005), as well as in a patient who had complete and restricted bilateral damage to the amygdala and had previously been reported to exhibit impaired recognition of fearful faces (Adolphs et al., 1994; Adolphs, 2008). Interestingly, this patient had difficulty recognizing fear, and to some extent sadness, in music, while her recognition of happiness was intact (Gosselin et al., 2007). In a recent study, Koelsch et al. (2013) examined brain responses underlying fear and happiness (joy) evoked by music using 30-s-long stimuli. They found BOLD signal increases in bilateral auditory cortex and superficial amygdala (SF) in the case of joy but not for fear. The authors argued that an attention shift toward joy, associated with the extraction of a social significance value, was responsible for this increase, in contrast to fear, which lacks any ‘socially incentive value’. Although we do not have a definite explanation for the discrepancy between their findings and ours, especially in terms of the amygdala, one possibility is the duration of the stimuli used. Indeed, the amygdala has been shown to habituate to threat-related stimuli, including fearful expressions (Breiter et al., 1996). In addition, our experiment, consisting of rapid presentation of short stimuli of different modalities and emotional value, was designed to explore the brain correlates of the perception of expressed emotions and not those of induced emotional states. The use of short stimuli might also explain why we did not find any amygdala activation in the case of happiness and sadness, in contrast to some previous studies that used long musical excerpts to induce specific emotional states in participants (Mitterschiffthaler et al., 2007; Salimpoor et al., 2011; Koelsch et al., 2013).

It is therefore possible that differences observed among studies reflect distinct (although not mutually exclusive) underlying mechanisms (see, e.g. LeDoux, 2013). Interestingly, although Koelsch et al. (2013) did not observe sustained amygdala activity for fear, they did observe an increase in functional connectivity between SF and visual as well as parietal cortices, which they interpreted as reflecting ‘increased visual alertness and an involuntary shift of attention during the perception of auditory signals of danger’. These results appear to be compatible with the idea of an amygdala response preparing the organism for a potential threat. Further studies, manipulating the length of the stimuli and attention focus, may help clarify these issues.

Hippocampus

As shown in Figure 2, the activation obtained in the contrast of fearful vs neutral expressions across domains (faces, vocalizations and music) included both the amygdala and the anterior hippocampus. This is particularly relevant in the case of music, as previous studies have also reported hippocampal activation (Koelsch, 2010; Trost et al., 2012) and enhanced hippocampal–amygdala connectivity (Koelsch and Skouras, 2014) in response to emotional musical stimuli. Importantly, given that the stimuli used in our study were novel and unknown to the subjects, it is unlikely that the observed hippocampal activation merely reflected memory processes. Instead, this activation is consistent with the proposed critical role of this structure in the neural processing of emotional music (for reviews, see Koelsch, 2013, 2014).

Insula

A BOLD signal increase was also found bilaterally in the posterior insula for fear across domains and modalities. Previous imaging studies have highlighted the involvement of the posterior insula in ‘perceptual awareness of a threat stimulus’ (Critchley et al., 2002) as well as in the anticipation of aversive or even painful stimuli (Jensen et al., 2003; Mohr et al., 2005, 2012). Moreover, the insula has been described as playing an important role in the prediction of uncertainty, notably to facilitate the regulation of affective states and guide decision-making processes (Singer et al., 2009).

Music and arousal

Previous studies have shown that activity in regions that respond preferentially to a particular type of expression, such as faces (Kanwisher et al., 1997), bodies (de Gelder et al., 2004; Hadjikhani and de Gelder, 2003) and voices (Belin et al., 2000), can be modulated by their emotional value (Vuilleumier, 2005; Grèzes et al., 2007; Peelen et al., 2007; Vuilleumier and Pourtois, 2007; Ethofer et al., 2009). Our results show that this property extends to the case of music. Indeed, a region within the anterior STG that is particularly sensitive to music (Koelsch, 2010; Puschmann et al., 2010; Figure 4) responded more strongly to fearful and happy excerpts than to neutral or sad ones, in line with previous studies (Mitterschiffthaler et al., 2007). Importantly, further stimulus-specific analyses confirmed that this area appears to be sensitive to emotional intensity, rather than valence. Interestingly, previous studies using similar stimuli have shown that emotional intensity ratings correlate with recognition memory enhancement (Aubé et al., 2013) and physiological responses (Khalfa et al., 2008). Thus, taken together, these results provide strong support for the proposal that arousal is the key dimension by which music conveys emotional information (Berlyne, 1970; Steinbeis et al., 2006; see also Juslin and Västfjäll, 2008).

Individual differences

The observed difference in activation in the music-sensitive area of STG (Figure 4) between musicians and non-musicians for the main effect of music vs vocalizations is in agreement with previous studies (Arafat et al., submitted for publication; Elmer et al., 2013; Koelsch et al., 2005). However, there was no significant difference between groups as a function of emotion. This is consistent with the participants’ valence and intensity ratings, which did not show any significant group differences (see also Bigand et al., 2005). We also found no significant differences between males and females in any of our contrasts.

CONCLUSION

Overall, our findings confirmed the crucial importance of fear in emotional communication, as revealed by amygdala activity across domains and modalities. In addition, the correlation between amygdala responses to fearful music and to fearful vocalizations lends additional support to the idea that music and voices might share emotional neural circuitry (see Juslin, 2013). Moreover, our results confirm that music constitutes a useful and potentially unique tool to study emotional processing and test current theories and models.

Acknowledgments

This work was partly funded by grants from the Natural Sciences and Engineering Research Council of Canada (NSERC) and the Canadian Institutes of Health Research (CIHR) to J.L.A., and from Consejo Nacional de Ciencia y Tecnología de México (CONACyT) and Universidad Nacional Autónoma de México (UNAM) to L.C. We are grateful to Daniel Ramírez Peña, Juan Ortiz-Retana, Erick Pasaye and Leopoldo Gonzalez-Santos for technical assistance during data acquisition. We also thank the authorities of the Music Conservatory ‘José Guadalupe Velázquez’, the Querétaro Philharmonic Orchestra, the Querétaro School of Violin Making and the Querétaro Fine Arts Institute for their help with the recruitment of musicians. W.A. and A.A.P. were supported by scholarships from NSERC and CONACyT-UNAM, respectively.

REFERENCES

- Adolphs R. Fear, faces, and the human amygdala. Current Opinion in Neurobiology. 2008;18(2):166–72. doi: 10.1016/j.conb.2008.06.006.

- Adolphs R, Tranel D, Damasio H, Damasio AR. Impaired recognition of emotion in facial expressions following bilateral damage to the human amygdala. Nature. 1994;372(6507):669–72. doi: 10.1038/372669a0.

- Adolphs R, Tranel D, Damasio H, Damasio AR. Fear and the human amygdala. The Journal of Neuroscience. 1995;15(9):5879–91. doi: 10.1523/JNEUROSCI.15-09-05879.1995.

- Amaral DG, Price JL, Pitkanen A, Carmichael ST. Anatomical organization of the primate amygdaloid complex. The amygdala: Neurobiological aspects of emotion, memory, and mental dysfunction. 1992:1–66.

- Armony JL. Current emotion research in behavioral neuroscience: the role(s) of the amygdala. Emotion Review. 2013;5(1):104–15.

- Armony JL, LeDoux J. Emotional responses to auditory stimuli. In: Moore DR, Fuchs PA, Rees A, Palmer A, Plack CJ, editors. The Oxford Handbook of Auditory Science: The Auditory Brain. Oxford University Press; 2010. pp. 479–508.

- Armony JL, Han JE. PET and fMRI: basic principles and applications in affective neuroscience research. In: Armony JL, Vuilleumier P, editors. The Cambridge Handbook of Human Affective Neuroscience. Cambridge: Cambridge University Press; 2013. pp. 154–70.

- Armony JL, Chochol C, Fecteau S, Belin P. Laugh (or cry) and you will be remembered: influence of emotional expression on memory for vocalizations. Psychological Science. 2007;18(12):1027–9. doi: 10.1111/j.1467-9280.2007.02019.x.

- Aubé W, Peretz I, Armony JL. The effects of emotion on memory for music and vocalisations. Memory. 2013;21(8):981–90. doi: 10.1080/09658211.2013.770871.

- Barr RG, Hopkins B, Green JA. Crying as a Sign, a Symptom, and a Signal: Clinical, Emotional and Developmental Aspects of Infant and Toddler Crying. Cambridge University Press; 2000.

- Belin P, Zatorre RJ, Lafaille P, Ahad P, Pike B. Voice-selective areas in human auditory cortex. Nature. 2000;403(6767):309–12. doi: 10.1038/35002078.

- Belin P, Fecteau S, Charest I, Nicastro N, Hauser MD, Armony JL. Human cerebral response to animal affective vocalizations. Proceedings of the Royal Society B: Biological Sciences. 2008;275(1634):473–81. doi: 10.1098/rspb.2007.1460.

- Bigand E, Vieillard S, Madurell F, Marozeau J, Dacquet A. Multidimensional scaling of emotional responses to music: the effect of musical expertise and of the duration of the excerpts. Cognition and Emotion. 2005;19(8):1113–39.

- Berlyne DE. Novelty, complexity, and hedonic value. Perception and Psychophysics. 1970;8:279–86.

- Blair RJ, Morris JS, Frith CD, Perrett DI, Dolan RJ. Dissociable neural responses to facial expressions of sadness and anger. Brain. 1999;122(5):883–93. doi: 10.1093/brain/122.5.883.

- Blood AJ, Zatorre RJ, Bermudez P, Evans AC. Emotional responses to pleasant and unpleasant music correlate with activity in paralimbic brain regions. Nature Neuroscience. 1999;2:382–7. doi: 10.1038/7299.

- Blood AJ, Zatorre RJ. Intensely pleasurable responses to music correlate with activity in brain regions implicated in reward and emotion. Proceedings of the National Academy of Sciences of the United States of America. 2001;98(20):11818. doi: 10.1073/pnas.191355898.

- Boersma P, Weenink D. 2014. Praat: doing phonetics by computer [Computer program]. Version 5.3.65. Available: http://www.praat.org/ [February 27, 2014]

- Breiter HC, Etcoff NL, Whalen PJ, et al. Response and habituation of the human amygdala during visual processing of facial expression. Neuron. 1996;17(5):875–87. doi: 10.1016/s0896-6273(00)80219-6.

- Brown S, Martinez MJ, Parsons LM. Passive music listening spontaneously engages limbic and paralimbic systems. Neuroreport. 2004;15(13):2033. doi: 10.1097/00001756-200409150-00008.

- Critchley HD, Mathias CJ, Dolan RJ. Fear conditioning in humans: the influence of awareness and autonomic arousal on functional neuroanatomy. Neuron. 2002;33(4):653–63. doi: 10.1016/s0896-6273(02)00588-3.

- Darwin C. The Expression of the Emotions in Man and Animals. Oxford University Press; 1998.

- de Gelder B. Towards the neurobiology of emotional body language. Nature Reviews Neuroscience. 2006;7(3):242–9. doi: 10.1038/nrn1872.

- de Gelder B, Snyder J, Greve D, Gerard G, Hadjikhani N. Fear fosters flight: a mechanism for fear contagion when perceiving emotion expressed by a whole body. Proceedings of the National Academy of Sciences of the United States of America. 2004;101(47):16701–6. doi: 10.1073/pnas.0407042101.

- Dehaene S, Cohen L. Cultural recycling of cortical maps. Neuron. 2007;56(2):384–98. doi: 10.1016/j.neuron.2007.10.004.

- Elmer S, Hänggi J, Meyer M, Jäncke L. Increased cortical surface area of the left planum temporale in musicians facilitates the categorization of phonetic and temporal speech sounds. Cortex. 2013;49(10):2812–21. doi: 10.1016/j.cortex.2013.03.007.

- Escoffier N, Zhong J, Schirmer A, Qiu A. Emotional expressions in voice and music: same code, same effect? Human Brain Mapping. 2013;34(8):1796–810. doi: 10.1002/hbm.22029.

- Ethofer T, Kreifelts B, Wiethoff S, et al. Differential Influences of Emotion, Task, and Novelty on Brain Regions Underlying the Processing of Speech Melody. Journal of Cognitive Neuroscience. 2009;21(7):1255–68. doi: 10.1162/jocn.2009.21099.

- Fecteau S, Armony JL, Joanette Y, Belin P. Is voice processing species-specific in human auditory cortex? An fMRI study. Neuroimage. 2004;23(3):840–8. doi: 10.1016/j.neuroimage.2004.09.019.

- Fecteau S, Armony J, Joanette Y, Belin P. Judgment of emotional nonlinguistic vocalizations: age-related differences. Applied Neuropsychology. 2005;12(1):40–8. doi: 10.1207/s15324826an1201_7.

- Fecteau S, Belin P, Joanette Y, Armony JL. Amygdala responses to nonlinguistic emotional vocalizations. Neuroimage. 2007;36(2):480–7. doi: 10.1016/j.neuroimage.2007.02.043.

- Flores-Gutiérrez EO, Díaz J-L, Barrios FA, et al. Metabolic and electric brain patterns during pleasant and unpleasant emotions induced by music masterpieces. International Journal of Psychophysiology. 2007;65(1):69–84. doi: 10.1016/j.ijpsycho.2007.03.004.

- Fusar-Poli P, Placentino A, Carletti F, et al. Functional atlas of emotional faces processing: a voxel-based meta-analysis of 105 functional magnetic resonance imaging studies. Journal of Psychiatry and Neuroscience. 2009;34(6):418.

- Fritz T, Jentschke S, Gosselin N, et al. Universal recognition of three basic emotions in music. Current Biology. 2009;19(7):573–6. doi: 10.1016/j.cub.2009.02.058.

- Glasberg BR, Moore BCJ. A model of loudness applicable to time-varying sounds. Journal of the Audio Engineering Society. 2002;50(5):331–42.

- Gosselin N, Peretz I, Noulhiane M, et al. Impaired recognition of scary music following unilateral temporal lobe excision. Brain. 2005;128(3):628–40. doi: 10.1093/brain/awh420.

- Gosselin N, Peretz I, Johnsen E, Adolphs R. Amygdala damage impairs emotion recognition from music. Neuropsychologia. 2007;45(2):236–44. doi: 10.1016/j.neuropsychologia.2006.07.012.

- Green AC, Bærentsen KB, Stødkilde-Jørgensen H, Wallentin M, Roepstorff A, Vuust P. Music in minor activates limbic structures: a relationship with dissonance? Neuroreport. 2008;19(7):711–15. doi: 10.1097/WNR.0b013e3282fd0dd8.

- Grèzes J, Pichon S, de Gelder B. Perceiving fear in dynamic body expressions. Neuroimage. 2007;35(2):959–67. doi: 10.1016/j.neuroimage.2006.11.030.

- Grèzes J, Adenis M-S, Pouga L, Armony JL. Self-relevance modulates brain responses to angry body expressions. Cortex. 2013;49(8):2210–20. doi: 10.1016/j.cortex.2012.08.025.

- Grossmann T. The development of emotion perception in face and voice during infancy. Restorative Neurology and Neuroscience. 2010;28(2):219–36. doi: 10.3233/RNN-2010-0499.

- Hadjikhani N, de Gelder B. Seeing fearful body expressions activates the fusiform cortex and amygdala. Current Biology. 2003;13(24):2201–5. doi: 10.1016/j.cub.2003.11.049.

- Izard CE. Innate and universal facial expressions: evidence from developmental and cross-cultural research. Psychological Bulletin. 1994;115(2):288–99. doi: 10.1037/0033-2909.115.2.288.

- Jensen J, McIntosh AR, Crawley AP, Mikulis DJ, Remington G, Kapur S. Direct activation of the ventral striatum in anticipation of aversive stimuli. Neuron. 2003;40(6):1251–7. doi: 10.1016/s0896-6273(03)00724-4.

- Josephs O, Turner R, Friston K. Event-related fMRI. Human Brain Mapping. 1997;5(4):243–8. doi: 10.1002/(SICI)1097-0193(1997)5:4<243::AID-HBM7>3.0.CO;2-3.

- Juslin PN. What does music express? Basic emotions and beyond. Frontiers in Emotion Science. 2013;4:596. doi: 10.3389/fpsyg.2013.00596.

- Juslin PN, Sloboda JA. Music and Emotion: Theory and Research. Oxford: Oxford University Press; 2001.

- Juslin PN, Västfjäll D. Emotional responses to music: the need to consider underlying mechanisms. Behavioral and Brain Sciences. 2008;31(5):559–75. doi: 10.1017/S0140525X08005293.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: a module in human extrastriate cortex specialized for face perception. The Journal of Neuroscience. 1997;17(11):4302–11. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Khalfa S, Roy M, Rainville P, Dalla Bella S, Peretz I. Role of tempo entrainment in psychophysiological differentiation of happy and sad music? International Journal of Psychophysiology. 2008;68(1):17–26. doi: 10.1016/j.ijpsycho.2007.12.001.

- Koelsch S. Towards a neural basis of music-evoked emotions. Trends in Cognitive Sciences. 2010;14(3):131–7. doi: 10.1016/j.tics.2010.01.002.

- Koelsch S. Emotion and music. In: Armony JL, Vuilleumier P, editors. The Cambridge Handbook of Human Affective Neuroscience. Cambridge: Cambridge University Press; 2013. pp. 286–303.

- Koelsch S. Brain correlates of music-evoked emotions. Nature Reviews Neuroscience. 2014;15(3):170–80. doi: 10.1038/nrn3666.

- Koelsch S, Fritz T, Schulze K, Alsop D, Schlaug G. Adults and children processing music: An fMRI study. NeuroImage. 2005;25(4):1068–76. doi: 10.1016/j.neuroimage.2004.12.050.

- Koelsch S, Skouras S. Functional centrality of amygdala, striatum and hypothalamus in a “small-world” network underlying joy: an fMRI study with music. Human Brain Mapping. 2014 doi: 10.1002/hbm.22416.

- Koelsch S, Fritz T, V. Cramon DY, Müller K, Friederici AD. Investigating emotion with music: an fMRI study. Human Brain Mapping. 2006;27(3):239–50. doi: 10.1002/hbm.20180.

- Koelsch S, Skouras S, Fritz T, et al. The roles of superficial amygdala and auditory cortex in music-evoked fear and joy. Neuroimage. 2013;81(1):49–60. doi: 10.1016/j.neuroimage.2013.05.008.

- Kreiman J. Listening to voices: theory and practice in voice perception research. In: Johnson K, Mullennix JW, editors. Talker Variability in Speech Processing. New York: Academic; 1997. pp. 85–108.

- Lartillot O, Toiviainen P, Eerola T. A matlab toolbox for music information retrieval. In: Preisach C, Burkhardt H, Schmidt-Thieme L, Decker R, editors. Data Analysis, Machine Learning and Applications: Studies in Classification, Data Analysis, and Knowledge Organization. Berlin, Germany: Springer; 2008. pp. 261–8.

- LeDoux J. Rethinking the emotional brain. Neuron. 2013;73(4):653–76. doi: 10.1016/j.neuron.2012.02.004.

- Lehne M, Rohrmeier M, Koelsch S. Tension-related activity in the orbitofrontal cortex and amygdala: an fMRI study with music. Social Cognitive and Affective Neuroscience. 2014 doi: 10.1093/scan/nst141.

- Lima CF, Castro SL. Speaking to the trained ear: musical expertise enhances the recognition of emotions in speech prosody. Emotion. 2011;11(5):1021–31. doi: 10.1037/a0024521.

- Lima CF, Castro SL, Scott SK. When voices get emotional: a corpus of nonverbal vocalizations for research on emotion processing. Behavior Research Methods. 2013;45:1–12. doi: 10.3758/s13428-013-0324-3.

- Maess B, Koelsch S, Gunter TC, Friederici AD. Musical syntax is processed in Broca’s area: an MEG study. Nature Neuroscience. 2001;4(5):540–5. doi: 10.1038/87502.

- Marozeau J, de Cheveigné A, McAdams S, Winsberg S. The dependency of timbre on fundamental frequency. The Journal of the Acoustical Society of America. 2003;114(5):2946. doi: 10.1121/1.1618239.

- Mattavelli G, Sormaz M, Flack T, et al. Neural responses to facial expressions support the role of the amygdala in processing threat. Social Cognitive and Affective Neuroscience. 2014 doi: 10.1093/scan/nst162.

- Menon V, Levitin DJ. The rewards of music listening: response and physiological connectivity of the mesolimbic system. Neuroimage. 2005;28(1):175–84. doi: 10.1016/j.neuroimage.2005.05.053.

- Mitterschiffthaler MT, Fu CH, Dalton JA, Andrew CM, Williams SC. A functional MRI study of happy and sad affective states induced by classical music. Human Brain Mapping. 2007;28(11):1150–62. doi: 10.1002/hbm.20337.

- Morris JS, Scott SK, Dolan RJ. Saying it with feeling: neural responses to emotional vocalizations. Neuropsychologia. 1999;37(10):1155–63. doi: 10.1016/s0028-3932(99)00015-9.

- Mohr C, Binkofski F, Erdmann C, Buchel C, Helmchen C. The anterior cingulate cortex contains distinct areas dissociating external from self-administered painful stimulation: a parametric fMRI study. Pain. 2005;114(3):347–57. doi: 10.1016/j.pain.2004.12.036.

- Mohr C, Leyendecker S, Petersen D, Helmchen C. Effects of perceived and exerted pain control on neural activity during pain relief in experimental heat hyperalgesia: a fMRI study. European Journal of Pain. 2012;16(4):496–508. doi: 10.1016/j.ejpain.2011.07.010.

- Peelen MV, Atkinson AP, Andersson F, Vuilleumier P. Emotional modulation of body-selective visual areas. Social Cognitive and Affective Neuroscience. 2007;2(4):274–83. doi: 10.1093/scan/nsm023.

- Peretz I. The nature of music from a biological perspective. Cognition. 2006;100(1):1–32. doi: 10.1016/j.cognition.2005.11.004.

- Peretz I, Aubé W, Armony JL. Towards a neurobiology of musical emotions. In: Altenmüller E, Schmidt S, Zimmermann E, editors. The Evolution of Emotional Communication: From Sounds in Nonhuman Mammals to Speech and Music in Man. Oxford University Press; 2013. pp. 277–99.

- Phillips ML, Young AW, Scott SK, et al. Neural responses to facial and vocal expressions of fear and disgust. Proceedings of the Royal Society of London. Series B: Biological Sciences. 1998;265(1408):1809–17. doi: 10.1098/rspb.1998.0506.

- Puschmann S, Uppenkamp S, Kollmeier B, Thiel CM. Dichotic pitch activates pitch processing centre in Heschl’s gyrus. Neuroimage. 2010;49(2):1641–9. doi: 10.1016/j.neuroimage.2009.09.045.

- Salimpoor VN, Benovoy M, Larcher K, Dagher A, Zatorre RJ. Anatomically distinct dopamine release during anticipation and experience of peak emotion to music. Nature Neuroscience. 2011;14(2):257–62. doi: 10.1038/nn.2726.

- Sander D, Grafman J, Zalla T. The human amygdala: an evolved system for relevance detection. Reviews in the Neurosciences. 2003;14(4):303–16. doi: 10.1515/revneuro.2003.14.4.303.

- Sauter DA, Eisner F, Ekman P, Scott SK. Cross-cultural recognition of basic emotions through nonverbal emotional vocalizations. Proceedings of the National Academy of Sciences of the United States of America. 2010;107(6):2408. doi: 10.1073/pnas.0908239106.

- Schirmer A, Fox PM, Grandjean D. On the spatial organization of sound processing in the human temporal lobe: a meta-analysis. Neuroimage. 2012;63(1):137–47. doi: 10.1016/j.neuroimage.2012.06.025.

- Sergerie K, Chochol C, Armony JL. The role of the amygdala in emotional processing: a quantitative meta-analysis of functional neuroimaging studies. Neuroscience and Biobehavioral Reviews. 2008;32(4):811–30. doi: 10.1016/j.neubiorev.2007.12.002.

- Sergerie K, Lepage M, Armony JL. A process-specific functional dissociation of the amygdala in emotional memory. Journal of Cognitive Neuroscience. 2006;18(8):1359–67. doi: 10.1162/jocn.2006.18.8.1359.

- Sergerie K, Lepage M, Armony JL. Influence of emotional expression on memory recognition bias: a functional magnetic resonance imaging study. Biological Psychiatry. 2007;62(10):1126–33. doi: 10.1016/j.biopsych.2006.12.024.

- Singer T, Critchley HD, Preuschoff K. A common role of insula in feelings, empathy and uncertainty. Trends in Cognitive Sciences. 2009;13(8):334–40. doi: 10.1016/j.tics.2009.05.001.

- Slevc LR, Rosenberg JC, Patel AD. Making psycholinguistics musical: self-paced reading time evidence for shared processing of linguistic and musical syntax. Psychonomic Bulletin and Review. 2009;16(2):374–81. doi: 10.3758/16.2.374.

- Slotnick SD, Moo LR, Segal JB, Hart J., Jr Distinct prefrontal cortex activity associated with item memory and source memory for visual shapes. Cognitive Brain Research. 2003;17(1):75–82. doi: 10.1016/s0926-6410(03)00082-x.

- Steinbeis N, Koelsch S, Sloboda JA. The role of harmonic expectancy violations in musical emotions: evidence from subjective, physiological, and neural responses. Journal of Cognitive Neuroscience. 2006;18:1380–93. doi: 10.1162/jocn.2006.18.8.1380.

- Steinbeis N, Koelsch S. Comparing the processing of music and language meaning using EEG and fMRI provides evidence for similar and distinct neural representations. PLoS One. 2008;3(5):e2226. doi: 10.1371/journal.pone.0002226.

- Steiner JE. Human facial expressions in response to taste and smell stimulation. Advances in Child Development and Behavior. 1979;13:257–95. doi: 10.1016/s0065-2407(08)60349-3.

- Strait DL, Kraus N, Parbery-Clark A, Ashley R. Musical experience shapes top-down auditory mechanisms: evidence from masking and auditory attention performance. Hearing Research. 2010;261(1–2):22–9. doi: 10.1016/j.heares.2009.12.021.

- Swanson LW, Petrovich GD. What is the amygdala? Trends in Neurosciences. 1998;21(8):323–31. doi: 10.1016/s0166-2236(98)01265-x.

- Trost W, Ethofer T, Zentner M, Vuilleumier P. Mapping aesthetic musical emotions in the brain. Cerebral Cortex. 2012;22(12):2769–83. doi: 10.1093/cercor/bhr353.

- Vieillard S, Peretz I, Gosselin N, Khalfa S, Gagnon L, Bouchard B. Happy, sad, scary and peaceful musical clips for research on emotions. Cognition and Emotion. 2008;22(4):720–52.

- Vuilleumier P. How brains beware: neural mechanisms of emotional attention. Trends in Cognitive Sciences. 2005;9(12):585–94. doi: 10.1016/j.tics.2005.10.011.

- Vuilleumier P, Pourtois G. Distributed and interactive brain mechanisms during emotion face perception: evidence from functional neuroimaging. Neuropsychologia. 2007;45(1):174–94. doi: 10.1016/j.neuropsychologia.2006.06.003.

- Whalen PJ, Shin LM, McInerney SC, Fischer H, Wright CI, Rauch SL. A functional MRI study of human amygdala responses to facial expressions of fear versus anger. Emotion. 2001;1(1):70–83. doi: 10.1037/1528-3542.1.1.70.

- Worsley KJ, Taylor JE, Tomaiuolo F, Lerch J. Unified univariate and multivariate random field theory. Neuroimage. 2004;23(Suppl. 1):S189–95. doi: 10.1016/j.neuroimage.2004.07.026.

- Yang TT, Menon V, Eliez S, et al. Amygdalar activation associated with positive and negative facial expressions. Neuroreport. 2002;13(14):1737–41. doi: 10.1097/00001756-200210070-00009.

- Young MP, Scannell JW, Burns GA, Blakemore C. Analysis of connectivity: neural systems in the cerebral cortex. Reviews in the Neurosciences. 1994;5(3):227–50. doi: 10.1515/revneuro.1994.5.3.227.