Abstract

We consider the problem of inhibiting undesirable contagions (e.g. rumors, spread of mob behavior) in social networks. Much of the work in this context has been carried out under the 1-threshold model, where diffusion occurs when a node has just one neighbor with the contagion. We study the problem of inhibiting more complex contagions in social networks where nodes may have thresholds larger than 1. The goal is to minimize the propagation of the contagion by removing a small number of nodes (called critical nodes) from the network. We study several versions of this problem and prove that, in general, they cannot even be efficiently approximated to within any factor ρ ≥ 1, unless P = NP. We develop efficient and practical heuristics for these problems and carry out an experimental study of their performance on three well-known social networks, namely epinions, wikipedia and slashdot. Our results show that these heuristics perform significantly better than five other known methods. We also establish an efficiently computable upper bound on the number of nodes to which a contagion can spread and evaluate this bound on many real and synthetic networks.

Keywords: Complex contagions, Blocking, Social networks

1 Introduction and motivation

Analyzing social networks has become an important topic in the data mining community (Richardson and Domingos 2002; Domingos and Richardson 2001; Kempe et al. 2003, 2005; Chakrabarti et al. 2008; Tantipathananandh et al. 2007; Anderson et al. 2012). Many researchers have studied diffusion processes in social networks. Some examples are the propagation of favorite photographs in a Flickr network (Cha et al. 2008), the spread of information (Gruhl et al. 2004; Kossinets et al. 2008) via Internet communication, the effects of online purchase recommendations (Leskovec et al. 2007), formation of online communities (Shi et al. 2009), hashtag propagation in Twitter (Romero et al. 2011), and virus propagation between computers (Pastor-Satorras and Vespignani 2001). In some instances, models of diffusion are combined with data mining to predict social phenomena; e.g., product marketing (Domingos and Richardson 2001; Richardson and Domingos 2002), trust propagation (Guha et al. 2004), and epidemics through social contacts (Martin et al. 2011). Furthermore, the coupled evolution of network structure and of the dynamics on it has been studied and compared against experimental data (Centola et al. 2007; Centola 2010); see Vespignani (2012) for an overview.

Here, we are interested in a particular class of diffusion, that of complex contagions. As stated in Centola and Macy (2007), “Complex contagions require social affirmation from multiple sources.” That is, a person acquires a complex social contagion through interaction with t > 1 other individuals, as opposed to only a single individual (i.e., t = 1). The latter is called a simple contagion, perhaps the most notable of which are disease propagation (Pastor-Satorras and Vespignani 2001; Longini et al. 2005) and computer virus transmission (Jin et al. 2009).

The idea of complex contagions dates back to at least the 1960s as described in (Granovetter 1978; Schelling 1978), and more current studies are referenced in (Centola and Macy 2007; Barash et al. 2012; Barash 2011). Such phenomena, according to these researchers, include diffusion of innovations, spread of rumors and worker strikes, educational attainment, fashion trends, and growth of social movements. For example, in strikes, mob violence, and political upheavals, individuals can be reluctant to participate for fear of reprisals to themselves and their families. It is safer for one to wait for a critical mass of one’s acquaintances to commit before committing oneself. These models are concerned with the onset of a behavior and thus focus on the transition from non-participating to participating. Like many epidemic models, they use only one active or contagious state. A notable exception is the threshold model of Melnik et al. (2013) which uses two such states, with different strengths of contagion associated with them. Here, we use a contagion model with one active state.

Crucially, recent data mining analyses and experiments have provided evidence for complex contagion dynamics on appropriate social networks. Examples include online DVD purchases (Leskovec et al. 2007), teenage smoking initiation (Harris 2008; Kuhlman et al. 2011), spread of health-related information (Centola 2010), joining LiveJournal (Kleinberg 2007), and recruitment of people to join Facebook (Ugander et al. 2012).

The threshold model that we employ in this work has been used by many social scientists (e.g., Granovetter 1978; Watts 2002; Centola and Macy 2007) to understand social behaviors. It is argued in (Watts 2002) that threshold dynamics may be used in several situations where more detailed human reasoning is precluded. In a recent study (Gonzalez-Bailon et al. 2011), joining a protest in Spain in 2011 was analyzed through Twitter messages. In that study, deterministic thresholds were used to explain the onset of user involvement in the protest.

Another motivation for our work is from recent quantitative work (Centola and Macy 2007; Centola 2009) showing that simple contagions and complex contagions can differ significantly in behavior. It is well known (Granovetter 1973) that weak edges play a dominant role in spreading a simple contagion between clusters within a population, thereby dictating whether a contagion will reach a large segment of a population. However, for complex contagions, this effect is greatly diminished (Centola and Macy 2007). Another difference between simple and complex contagions, discussed in (Centola 2009), is the following: scale-free (SF) communication graphs (i.e., those with power-law degree distributions) show high tolerance for random node failures (i.e., diffusion can still reach the majority of nodes when nodes are removed randomly from the network) for simple contagions, but have low tolerance to random failures for complex contagions.

Additional differences between simple and complex contagions are presented in this paper, where the focus is on the problem of finding agents (called critical nodes) in a population that will thwart the spread of complex contagions. In particular, our theoretical results (presented in Sect. 4) show that one variant of this problem can be solved efficiently for simple contagions, while it is computationally intractable for complex contagions. Furthermore, we show experimentally that several effective heuristics (Habiba et al. 2008; Bonacich 1972; Kleinberg 1999; Tong et al. 2010) for determining critical nodes for simple contagions perform poorly in stopping complex contagions. Thus, our results point out some fundamental differences between simple and complex contagions with respect to diffusion.

Computing effective sets of critical nodes is important because it has wide applicability in several domains of network dynamics. Examples include thwarting the spread of sensitive information that has been leaked (Chakrabarti et al. 2008), disrupting communication of adversaries (Arulselvan et al. 2009), marketing to counteract the advertising of a competing product (Richardson and Domingos 2002; Domingos and Richardson 2001), calming a mob (Granovetter 1978), or changing people’s opinions (Dreyer and Roberts 2009). Indeed, contagion dynamics with critical nodes have been used in several domains, including peer influence in youth behavior, repression of social movements, opinion dynamics, and social isolation in epidemiology (Albert et al. 2000; Mobilia 2003; Mobilia et al. 2007; Kawachi 2008; Centola 2009; Siegel 2010; Salathe and Jones 2010; Acemoglu and Ozdaglar 2011; Yildiz et al. 2011). But these studies overwhelmingly use simple contagion dynamics and/or simple heuristics for determining critical nodes (e.g. using high degree nodes). For complex contagions, we provide diffusion blocking methods that are far better than such methods. More generally, inhibiting diffusion is one aspect of a broader goal of controlling diffusion in complex networks as advocated in (Liu et al. 2011).

Another aspect of our work complements previous studies of contagion blocking. Many previous works (Albert et al. 2000; Barash 2011; Habiba et al. 2008; Centola 2009; Tong et al. 2010) assume that the seed set—the set of nodes initially possessing a contagion—is unknown and a single set of critical nodes is selected to halt diffusion from any seed set. Here, we study the contagion blocking problem assuming that the seed set is known. We compare our methods’ results with those from several others, and demonstrate the improved performance that can be realized with this extra information.

Following (Granovetter 1978; Schelling 1978; Watts 2002; Centola and Macy 2007), we utilize a two-state system where nodes in state 0 (1) do not (do) possess the contagion. A node transitions from state 0 to state 1 if the number of its neighbors in state 1 is at least a specified threshold t. Nodes may not transition back to state 0 from state 1 (Macy 1991; Kempe et al. 2003; Siegel 2009; Gonzalez-Bailon et al. 2011; Ugander et al. 2012). Critical nodes are initially in state 0, and remain in state 0 throughout the diffusion process, regardless of the states of their neighbors, and thereby retard contagion propagation.

Overview of contributions

We formulate several variants of the problem of finding a smallest critical set and prove that, in general, they cannot even be efficiently approximated to within any factor ρ ≥ 1, unless P = NP. These results motivate the development and evaluation of two practical heuristics for finding critical sets. We compare our methods against five state-of-the-art methods and demonstrate that our methods are much more effective in blocking diffusion of complex contagions. We also provide a detailed set of blocking results to understand the range of applicability and the limitations of our methods. Finally, using thousands of networks, we critically evaluate a method to bound the maximum possible (MP) spread size (i.e., the maximum number of nodes to which a contagion can spread) in a network, which is useful in quantifying the effectiveness of blocking schemes. (A detailed summary of results is provided in Sect. 3.1.)

Paper organization

Section 2 describes the model employed in this work and develops problem formulations. Section 3 contains a summary of our main results and related work. Theoretical results are provided in Sect. 4. Our two heuristics are described in Sect. 5. Section 6 contains experimental results on blocking, including the experimental setup, comparisons against five state-of-the-art blocking methods, and further results over a larger parameter space. We present in Sect. 7 theoretical and experimental results for maximum spread sizes of complex contagions in social networks. Conclusions and future work constitute Sect. 8.

This paper combines and extends the results in a conference paper (Kuhlman et al. 2010b) and a workshop paper (Kuhlman et al. 2010a). The former contains preliminary versions of the results in Sects. 4 through 6, while the latter contains a preliminary version of the results in Sect. 7.

2 Dynamical system model and problem formulation

2.1 System model and associated definitions

We model the propagation of complex contagions over a social network using discrete dynamical systems (Barrett et al. 2006, 2007). We begin with the necessary definitions.

Let 𝔹 denote the Boolean domain {0,1}. A Synchronous Dynamical System (SyDS) 𝒮 over 𝔹 is specified as a pair 𝒮 = (G, 𝓕), where

G(V, E), an undirected graph with a set V of n nodes and a set E of m edges, represents the underlying social network over which the contagion propagates, and

𝓕 = {f1, f2, …, fn} is a collection of functions, with fi denoting the local transition function associated with node υi, 1 ≤ i ≤ n.

Each function fi specifies the local interaction between node υi and its neighbors in G. To provide additional details regarding these functions, we note that each node of G has a state value from 𝔹. To encompass various types of social contagions described in Sect. 1, nodes in state 0 (1) are said to be unaffected (affected). Thus, in the case of information flow, for example, an affected node has received the information and will pass it on. It is assumed that once a node reaches state 1, it cannot return to state 0. We refer to a discrete dynamical system with this property as a ratcheted dynamical system. (Other names such as “progressive systems” (Kleinberg 2007) and “irreversible systems” (Dreyer and Roberts 2009) have also been used.)

We can now formally describe the local transition functions. The inputs to function fi are the state of υi and those of the neighbors of υi in G; function fi maps each combination of inputs to a value in 𝔹. For the propagation of contagions in social networks, it is natural to model each function fi (1 ≤ i ≤ n) as a ti -threshold function (Eubank et al. 2006; Chakrabarti et al. 2008; Dreyer and Roberts 2009; Centola et al. 2006; Centola and Macy 2007; Kempe et al. 2003; Kleinberg 2007) for an appropriate nonnegative integer ti. Such a threshold function (taking into account the ratcheted nature of the dynamical system) is defined as follows.

If the state of υi is 1, then the value of fi is 1, regardless of the values of the other inputs to fi.

If the state of υi is 0, then the value of fi is 1 if at least ti of the inputs are 1; otherwise, the value of fi is 0.
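As a minimal sketch in Python, the ratcheted ti-threshold function defined by the two rules above can be written as follows. The function name `threshold_fn` and the convention of passing neighbor states as a list of 0/1 values are our own illustrative choices, not part of the model.

```python
from typing import Sequence


def threshold_fn(own_state: int, neighbor_states: Sequence[int], t: int) -> int:
    """Ratcheted t-threshold local transition function over B = {0, 1}."""
    if own_state == 1:
        # Ratchet: an affected node stays affected regardless of its inputs.
        return 1
    # The node's own input is 0 here, so only neighbors can contribute;
    # it becomes affected iff at least t of its inputs are 1.
    return 1 if sum(neighbor_states) >= t else 0


print(threshold_fn(0, [1, 1, 0], 2))  # 1: two affected neighbors meet t = 2
print(threshold_fn(0, [1, 0, 0], 2))  # 0: one affected neighbor is not enough
print(threshold_fn(1, [0, 0], 5))     # 1: ratcheted
```

Because the own-state input is 0 whenever the second rule applies, counting the 1-inputs is the same as counting affected neighbors.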

A configuration 𝒞 of a SyDS at any time is an n-vector (s1, s2, …, sn), where si ∈ 𝔹 is the value of the state of node υi (1 ≤ i ≤ n). A single SyDS transition from one configuration to another can be expressed by the following pseudocode.

for each node υi do in parallel

(i) Compute the value of fi. Let si′ denote this value.

(ii) Update the state of υi to si′.

end for

Thus, in a SyDS, nodes update their state synchronously. Other update disciplines (e.g. sequential updates) for discrete dynamical systems have also been considered in the literature (Barrett et al. 2006, 2007).
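The "do in parallel" loop can be sketched as below, assuming the graph is stored as a dictionary from each node to its neighbor list and thresholds as a per-node dictionary (both representations, and the name `sync_step`, are hypothetical). The synchronous discipline is obtained by reading only the old configuration and writing into a fresh one.

```python
from typing import Dict, List

Config = Dict[int, int]


def sync_step(adj: Dict[int, List[int]], state: Config, t: Dict[int, int]) -> Config:
    """One SyDS transition under ratcheted threshold functions.

    Every node reads only the old configuration `state`; all new values are
    collected in `new_state`, which models the "do in parallel" update.
    """
    new_state: Config = {}
    for v, nbrs in adj.items():
        if state[v] == 1:
            new_state[v] = 1  # ratcheted: state 1 is never left
        else:
            affected_nbrs = sum(state[u] for u in nbrs)
            new_state[v] = 1 if affected_nbrs >= t[v] else 0
    return new_state


# Path v1 - v2 - v3 with threshold 1 and v1 initially affected.
adj = {1: [2], 2: [1, 3], 3: [2]}
t = {1: 1, 2: 1, 3: 1}
s0 = {1: 1, 2: 0, 3: 0}
s1 = sync_step(adj, s0, t)
print(s1)                     # {1: 1, 2: 1, 3: 0}
print(sync_step(adj, s1, t))  # {1: 1, 2: 1, 3: 1}
```

Updating `state` in place instead would give a sequential discipline, since nodes visited later would see the new values of nodes visited earlier.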

If a SyDS has a transition from configuration 𝒞 to configuration 𝒞′, we say that 𝒞′ is the successor of 𝒞 and that 𝒞 is a predecessor of 𝒞′. A configuration 𝒞 is called a fixed point if the successor of 𝒞 is 𝒞 itself.

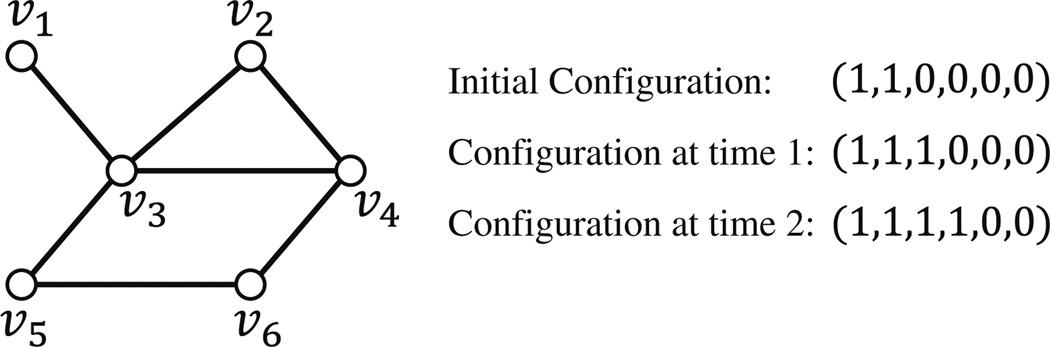

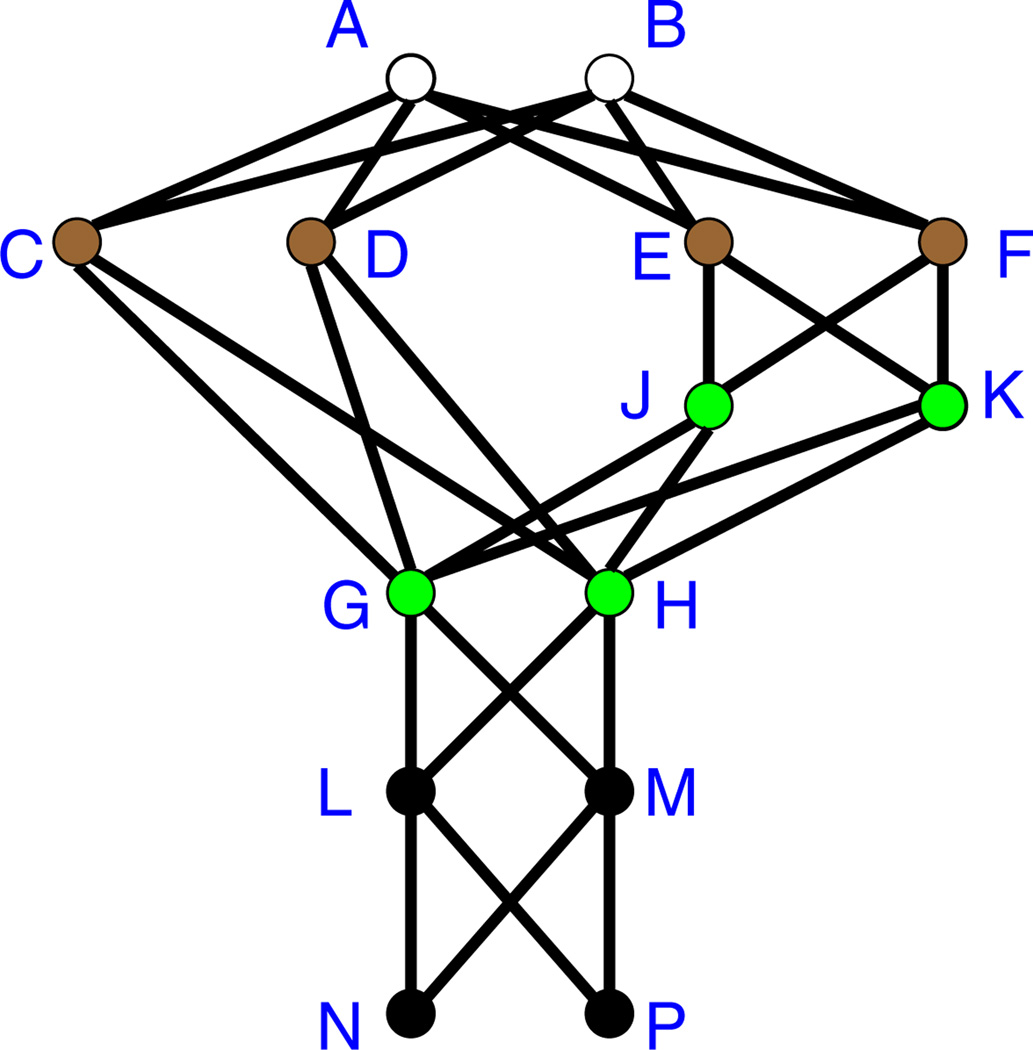

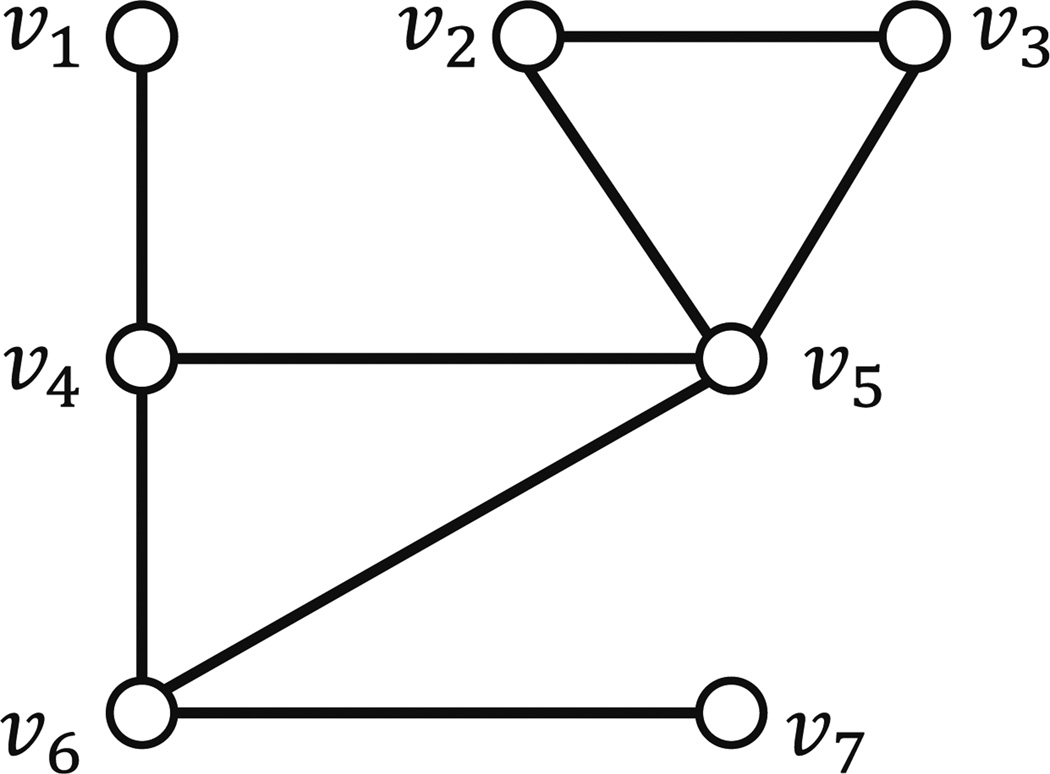

Example Consider the graph shown in Fig. 1. Suppose the local interaction function at each node is the 2-threshold function. Initially, υ1 and υ2 are in state 1 and all other nodes are in state 0. During the first time step, the state of node υ3 changes to 1 since two of its neighbors (namely υ1 and υ2) are in state 1; the states of other nodes remain the same. In the second time step, the state of node υ4 changes to 1 since two of its neighbors (namely υ2 and υ3) are in state 1; again the states of the other nodes remain the same. The resulting configuration (1, 1, 1, 1, 0, 0) is a fixed point for this system.
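The example can be replayed with a short simulation. Since the exact edges of Fig. 1 are only available in the image, the sketch below uses a hypothetical 6-node edge set chosen to be consistent with the text (υ3 is adjacent to υ1 and υ2, υ4 to υ2 and υ3); it is not claimed to be the graph in the figure.

```python
def run_to_fixed_point(adj, state, t):
    """Apply synchronous t-threshold transitions until a fixed point.

    Returns the configurations at times 0, 1, ..., ending with the fixed point.
    """
    order = sorted(adj)
    history = [tuple(state[v] for v in order)]
    while True:
        nxt = {v: 1 if state[v] == 1 or sum(state[u] for u in adj[v]) >= t else 0
               for v in adj}
        if nxt == state:  # successor equals current configuration
            return history
        state = nxt
        history.append(tuple(state[v] for v in order))


# Hypothetical edge set consistent with the example (not read off Fig. 1).
edges = [(1, 3), (2, 3), (2, 4), (3, 4), (4, 5), (5, 6)]
adj = {v: [] for v in range(1, 7)}
for a, b in edges:
    adj[a].append(b)
    adj[b].append(a)

initial = {v: 1 if v in (1, 2) else 0 for v in adj}
for time, config in enumerate(run_to_fixed_point(adj, initial, t=2)):
    print(time, config)
# 0 (1, 1, 0, 0, 0, 0)
# 1 (1, 1, 1, 0, 0, 0)
# 2 (1, 1, 1, 1, 0, 0)
```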

Fig. 1.

An example of a synchronous dynamical system. Each configuration has the form (s1, s2, s3, s4, s5, s6) where si is the state of node υi (1 ≤ i ≤ 6). The configuration at time 2 is a fixed point.

The SyDS in the above example reached a fixed point. This is not a coincidence. The following simple result shows that every ratcheted dynamical system over 𝔹 reaches a fixed point.

Proposition 1 Every ratcheted discrete dynamical system over 𝔹 reaches a fixed point in at most n transitions, where n is the number of nodes in the underlying graph.

Proof Consider any ratcheted dynamical system 𝒮 over 𝔹. In any transition of 𝒮 from one configuration to another, nodes can only change from 0 to 1 (but not from 1 to 0). Hence every transition that does not start from a fixed point changes at least one of the n nodes from 0 to 1, and each node can make this change at most once. Thus, after at most n such transitions, there can be no more state changes, i.e., 𝒮 reaches a fixed point.
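The bound is close to tight. With one seed node, at most n − 1 state-changing transitions are possible, and a path with a 1-threshold function at every node and the seed at one end attains that number, since each transition affects exactly one new node. A small sketch (the helper name is hypothetical) counts these transitions:

```python
def transitions_to_fixed_point(adj, seeds, t):
    """Count state-changing synchronous transitions in a t-threshold SyDS."""
    state = {v: int(v in seeds) for v in adj}
    steps = 0
    while True:
        nxt = {v: 1 if state[v] == 1 or sum(state[u] for u in adj[v]) >= t else 0
               for v in adj}
        if nxt == state:
            return steps
        state, steps = nxt, steps + 1


n = 10
# Path 0 - 1 - ... - 9; each interior node has two neighbors.
path = {i: [j for j in (i - 1, i + 1) if 0 <= j < n] for i in range(n)}
print(transitions_to_fixed_point(path, {0}, t=1))  # 9 = n - 1
```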

In the context of opinion propagation, reaching a fixed point means that everyone has formed an unalterable opinion, and hence will not change their mind.

2.2 Problem formulation

For simplicity, statements of problems and results in this paper use terminology from the context of information propagation in social networks, such as that for social unrest. It is straightforward to interpret the results for other contagions.

Suppose we have a social network in which some nodes are initially affected. In the absence of any action to contain the unrest, it may spread to a large part of the population. Decision-makers must decide on suitable actions (interventions) to inhibit information spread, such as quarantining a subset of people. Usually, there are resource constraints or societal pressures to keep the number of isolated people to a minimum (e.g., quarantining too many people may fuel unrest or it may be difficult to apprehend particular individuals). Thus, the problem formulation must take into account both information containment and appropriate resource constraints.

We assume that only people who are as yet unaffected can be quarantined. Under the dynamical system model, quarantining a person is represented by removing the corresponding node (and all the edges incident on that node) from the graph. Equivalently, removing a node υ corresponds to changing the local transition function at υ so that υ’s state remains 0 for all combinations of input values. The goal of isolation is to minimize the number of new affected nodes that arise over time until the system reaches a fixed point (when no additional nodes can be affected). We use the term critical set to refer to the set of nodes removed from the graph to reduce the number of newly affected nodes. Resource constraints can be modeled as a budget constraint on the size of the critical set. We can now provide a precise statement of the problem of finding critical sets. (This problem was first formulated in (Eubank et al. 2006) for the case where each node computes a 1-threshold function.)

2.2.1 Smallest critical set to minimize the number of new affected nodes (SCS-MNA)

Given: A social network represented by the SyDS 𝒮 = (G(V, E), 𝓕) over 𝔹, with each function f ∈ 𝓕 being a threshold function; the set I of nodes which are initially in state 1 (the elements of I are called seed nodes); an upper bound β on the size of the critical set.

Requirement: A critical set C ⊆ V – I such that |C| ≤ β and, among all subsets of V – I of size at most β, the removal of C from G leads to the smallest number of new affected nodes.
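For very small instances, SCS-MNA can be solved exactly by enumerating every candidate critical set, which makes the definition concrete and gives a reference answer against which heuristics could be checked. The sketch below models removal as in the text (a removed node keeps state 0); its running time is exponential in β, so it is only an illustration. The function names and the 6-node graph are hypothetical.

```python
from itertools import combinations


def new_affected(adj, seeds, t, removed):
    """New affected nodes at the fixed point once `removed` is deleted.

    Removal is modelled as in the text: a removed node keeps state 0 forever.
    """
    state = {v: int(v in seeds) for v in adj}
    while True:
        nxt = {}
        for v in adj:
            if v in removed:
                nxt[v] = 0  # critical node: stuck in state 0
            elif state[v] == 1:
                nxt[v] = 1  # ratcheted
            else:
                nxt[v] = int(sum(state[u] for u in adj[v]) >= t[v])
        if nxt == state:
            return sum(state.values()) - len(seeds)
        state = nxt


def scs_mna_brute_force(adj, seeds, t, beta):
    """Exact SCS-MNA by trying every C ⊆ V - I with |C| <= beta (exponential)."""
    candidates = [v for v in adj if v not in seeds]
    best_set, best_val = set(), new_affected(adj, seeds, t, set())
    for k in range(1, beta + 1):
        for c in combinations(candidates, k):
            val = new_affected(adj, seeds, t, set(c))
            if val < best_val:
                best_set, best_val = set(c), val
    return best_set, best_val


# Hypothetical 6-node graph, threshold 2 everywhere, seeds {1, 2}.
edges = [(1, 3), (2, 3), (2, 4), (3, 4), (4, 5), (5, 6)]
adj = {v: [] for v in range(1, 7)}
for a, b in edges:
    adj[a].append(b)
    adj[b].append(a)
thresholds = {v: 2 for v in adj}
print(scs_mna_brute_force(adj, {1, 2}, thresholds, beta=1))  # ({3}, 0)
```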

An alternative formulation, where the objective is to maximize the number of nodes who are not affected, can also be considered. We use the name “Smallest Critical Set to Maximize Unaffected Nodes” for this problem and abbreviate it as SCS-MUN. To maintain the complementary relationship between the minimization (SCS-MNA) and maximization (SCS-MUN) versions, we assume that critical nodes are not included in the set of unaffected nodes in the formulation of SCS-MUN. With that assumption, any optimal solution for SCS-MUN is also an optimal solution for SCS-MNA. Our results in Sect. 4 provide an indication of the difficulties in obtaining provably good approximation algorithms for either version of the problem. So, our focus is on obtaining heuristics that work well in practice.

We also consider the problem of finding critical sets in a related context. Let 𝒮 = (G(V, E), 𝓕) be a SyDS and let I ⊆ V denote the set of seed nodes. We say that a node υ ∈ V – I is salvageable if there is a critical set C ⊆ V – (I∪{υ}) whose removal ensures that υ remains in state 0 when the modified SyDS (i.e., the SyDS obtained by removing C) reaches a fixed point. Otherwise, υ is called an unsalvageable node. Thus, in any SyDS, only salvageable nodes can possibly be saved from becoming affected.

Example Consider the 2-threshold SyDS shown in Fig. 1. Node υ4 in that figure is salvageable since removal of υ3 ensures that υ4 won’t be affected. Nodes υ5 and υ6 are salvageable since they are not affected even when no nodes are removed from the system. However, node υ3 is not salvageable since it has two neighbors (υ1 and υ2) who are initially affected.
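Salvageability can be tested locally. The following reasoning is ours, offered as a sketch: threshold functions are monotone, so removing more nodes never causes more nodes to be affected, and the most favorable critical set for saving υ is therefore V – (I ∪ {υ}). With all other non-seed nodes removed, υ sees only its seed neighbors, so υ is salvageable exactly when fewer than tυ of its neighbors are seeds. This agrees with the example above; the 6-node graph below is a hypothetical stand-in for Fig. 1.

```python
def salvageable_nodes(adj, seeds, t):
    """Salvageable non-seed nodes of a threshold SyDS.

    Threshold dynamics are monotone, so deleting more nodes never affects more
    nodes; the most favorable critical set for v is therefore V - (I ∪ {v}).
    With all other non-seed nodes gone, v sees only its seed neighbors, so v
    is salvageable exactly when fewer than t[v] of its neighbors are seeds.
    """
    return {v for v in adj
            if v not in seeds and sum(1 for u in adj[v] if u in seeds) < t[v]}


# Same hypothetical 6-node graph as a stand-in for Fig. 1, threshold 2.
edges = [(1, 3), (2, 3), (2, 4), (3, 4), (4, 5), (5, 6)]
adj = {v: [] for v in range(1, 7)}
for a, b in edges:
    adj[a].append(b)
    adj[b].append(a)
print(sorted(salvageable_nodes(adj, {1, 2}, {v: 2 for v in adj})))  # [4, 5, 6]
```

The test inspects each adjacency list once, so it runs in O(|V| + |E|) time.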

We now formulate a problem whose goal is to find a smallest critical set that saves all salvageable nodes.

2.2.2 Smallest critical set to save all salvageable nodes (SCS-SASN)

Given: A social network represented by the SyDS 𝒮 = (G(V, E), 𝓕) over 𝔹, with each function f ∈ 𝓕 being a threshold function; the set I of seed nodes which are initially in state 1.

Requirement: A critical set C ⊆ V – I of minimum cardinality whose removal ensures that all salvageable nodes are saved from being affected.

As will be shown in Sect. 4, the complexity of the SCS-SASN problem for simple contagions is significantly different from that for complex contagions.

2.3 Types of thresholds

In the above discussion, the threshold (also called the absolute threshold) of each node was specified as a non-negative integer. A homogeneous threshold SyDS is one where all the nodes of a SyDS have the same threshold t, for some integer t ≥ 0. A heterogeneous threshold SyDS is one where nodes may have different thresholds. Researchers (e.g. Centola and Macy 2007) have also considered relative thresholds, where the threshold value of a node is a non-negative fraction of the number of neighbors of the node. (Here, each node is considered a neighbor of itself.) Similar to absolute thresholds, one can also consider homogeneous and heterogeneous relative thresholds. We refer to these variants as the “t-threshold variants.” Most of our theoretical results (Sect. 4) are presented in terms of absolute thresholds. Extensions of these results to the other t-threshold variants are straightforward, as outlined in the Appendix.
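A relative threshold can be converted to an equivalent absolute one for a given node. The sketch below assumes that a relative threshold r is met when at least r · (d + 1) of a node's inputs are 1, where d is its degree and the extra input is the node itself (whose state is 0 whenever the test matters). The rounding convention and the helper name are our assumptions.

```python
import math
from fractions import Fraction


def absolute_threshold(relative, degree):
    """Absolute threshold equivalent to a relative threshold.

    A node with `degree` neighbors has degree + 1 inputs (it counts itself), and
    a relative threshold r is met when at least r * (degree + 1) inputs are 1.
    Pass r as a Fraction or decimal string so that 0.2 is exactly 1/5.
    """
    return math.ceil(Fraction(relative) * (degree + 1))


print(absolute_threshold("0.5", 3))           # 2: half of 4 inputs
print(absolute_threshold("0.5", 2))           # 2: ceil(1.5)
print(absolute_threshold(Fraction(1, 5), 4))  # 1: one fifth of 5 inputs
```

Exact rational arithmetic is used because a binary float such as 0.2 is slightly larger than 1/5, which would push ceil(0.2 · 5) up to 2.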

2.4 Additional terminology

Here, we present some terminology used in the later sections of this paper. The term “t-threshold system” is used to denote a SyDS with a homogeneous absolute threshold t ≥ 0 (thus, the value of t is the same for all nodes of the system).

We also need some terminology with respect to approximation algorithms for optimization problems (Garey and Johnson 1979). For any ρ ≥ 1, a ρ-approximation for an optimization problem is an efficient algorithm that produces a solution which is within a factor of ρ of the optimal value for all instances of the problem. Such an approximation algorithm is also said to provide a performance guarantee of ρ. Clearly, the smaller the value of ρ, the better the performance of the approximation algorithm.

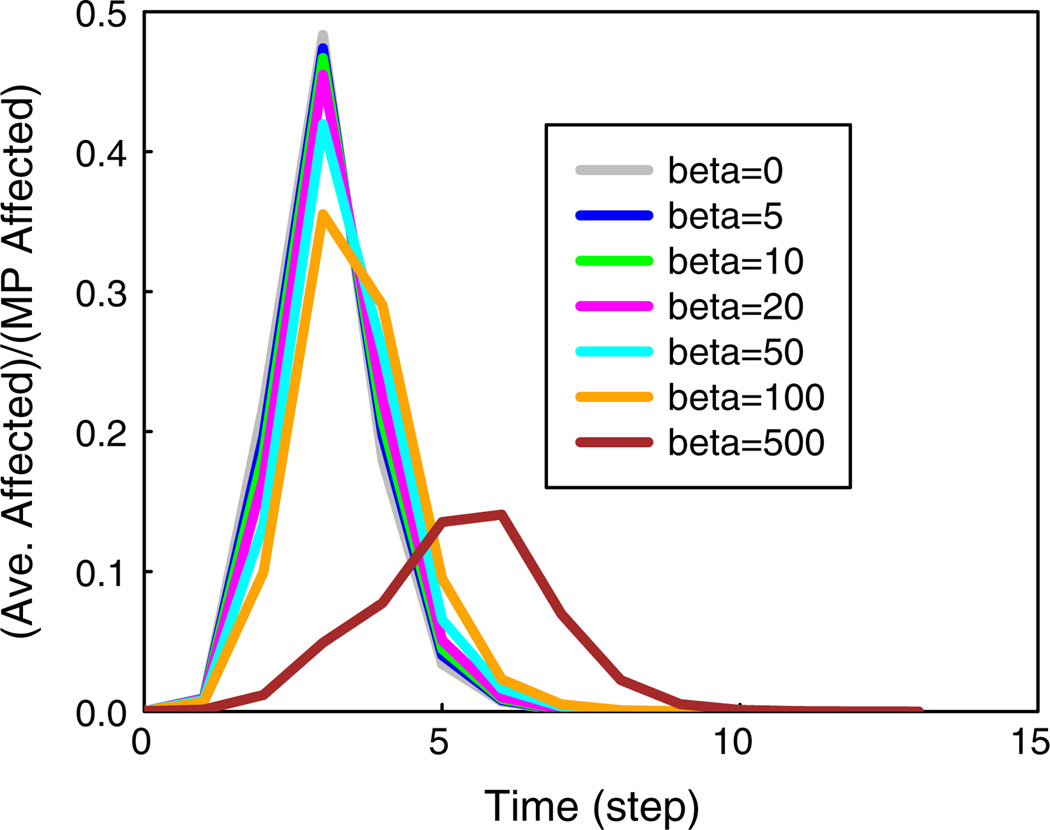

The following terms are used in describing simulation results and the behavior of the heuristics that produce critical sets. The spread size is the number (or fraction) of nodes in the affected state; the final spread size is the value at the end of a diffusion instance. A cascade occurs when diffusion starts from a set of seed nodes and the final fractional or absolute spread size is large relative to the number of nodes that can possibly be affected. Halt means that the chosen set of critical nodes will stop the diffusion process, thus preventing a cascade. A delay means that the chosen set of critical nodes will increase the time at which the peak number of newly affected nodes occurs, but will not necessarily halt diffusion. Finally, Table 1 provides acronyms and Table 2 lists variables used throughout this document.
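The quantities defined above can be read off a single run. The sketch below (a hypothetical helper on a hypothetical 6-node graph) records the number of newly affected nodes at each time step, the final spread size, and the first time step at which the number of newly affected nodes peaks. A critical set that halts diffusion yields no newly affected nodes at all.

```python
def diffusion_profile(adj, seeds, t, critical=frozenset()):
    """Synchronous t-threshold diffusion with `critical` nodes held at state 0.

    Returns (newly affected count per time step, final spread size, peak time),
    where peak time is the first step with the most newly affected nodes.
    """
    affected = set(seeds)
    new_per_step = []
    while True:
        # Every node tests the configuration from the previous step.
        newly = {v for v in adj
                 if v not in affected and v not in critical
                 and sum(1 for u in adj[v] if u in affected) >= t}
        if not newly:
            break
        affected |= newly
        new_per_step.append(len(newly))
    peak = (1 + new_per_step.index(max(new_per_step))) if new_per_step else None
    return new_per_step, len(affected), peak


edges = [(1, 3), (2, 3), (2, 4), (3, 4), (4, 5), (5, 6)]
adj = {v: [] for v in range(1, 7)}
for a, b in edges:
    adj[a].append(b)
    adj[b].append(a)
print(diffusion_profile(adj, {1, 2}, 2))                # ([1, 1], 4, 1)
print(diffusion_profile(adj, {1, 2}, 2, critical={3}))  # ([], 2, None): a halt
```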

Table 1.

Acronyms used in this paper

| Acronym | Expansion |
|---|---|
| BCH | Betweenness centrality critical node heuristic |
| CBH | Covering based heuristic |
| ECH | Eigenvalue centrality critical node heuristic |
| ED | Exponential decay |
| ER | Erdős–Rényi |
| HCH | High degree critical node heuristic |
| HITS | Hyperlink-induced topic search |
| MSC | Minimum set cover |
| MVC | Minimum vertex cover |
| PBH | Potential based heuristic |
| RCH | Random critical node heuristic |
| SCS-MNA | Smallest critical set to minimize the number of new affected nodes |
| SCS-MUN | Smallest critical set to maximize the number of unaffected nodes |
| SCS-SASN | Smallest critical set to save all salvageable nodes |
| SF | Scale free |
| SMC | Set multicover |
| SyDS | Synchronous dynamical system |

Table 2.

Variables used in this paper

| Symbol | Meaning |
|---|---|
| f | Local transition function |
| m | Number of edges in a graph |
| n | Number of nodes in a graph |
| ns | Number of seed nodes in a simulation |
| si | State of node υi |
| t | Threshold |
| 𝔹 | Boolean state set {0, 1} |
| C | Critical set of nodes used to block contagion spread |
| 𝒞, 𝒞′ | Configurations of a synchronous dynamical system |
| E | Set of edges in a graph G |
| 𝓕 | Set of local transition functions |
| G | Graph or network |
| I | Seed node set (i.e., set of nodes that are initially in state 1 in a simulation) |
| LG(t) | The size, in terms of nodes, of the largest connected component of a maximal t-core of a graph |
| 𝒮 | Synchronous dynamical system |
| T | An upper bound on time |
| V | Set of nodes in a graph G |
| β | Budget on the size of the critical set |

3 Summary of results and related work

3.1 Summary of results

Section 2 presented the formulations of the problems studied in this paper. The following is a summary of our main results.

We show that for any t ≥ 2 and any ρ ≥ 1, it is NP-hard to obtain a ρ-approximation for either the SCS-MNA problem or the SCS-MUN problem for t-threshold systems. (The result holds even when ρ is a function of the form n^δ, where δ < 1 is a constant and n is the number of nodes in the underlying network.)

We show that the problem of saving all salvageable nodes (SCS-SASN) can be solved in linear time for 1-threshold systems and that the required critical set is unique. In contrast, we show that the problem is NP-hard for t-threshold systems for any t ≥ 2. We present an approximation algorithm for this problem with a performance guarantee of ρ < 1 + ln (s), where s is the number of salvageable nodes in the system. We also show that the performance guarantee cannot be improved significantly, unless P = NP.

We develop two intuitively appealing heuristics, designated covering-based heuristic (CBH) and potential-based heuristic (PBH), for the SCS-MNA problem, and carry out an experimental study of their performance on three social networks, namely epinions, wikipedia and slashdot. We compare our schemes against five known methods for determining critical nodes (representing a range of blocking methods): random assignment, high-degree nodes, nodes of high betweenness centrality (Freeman 1976), nodes of high eigenvector centrality (Bonacich 1972), which can also be computed for undirected graphs using the hyperlink-induced topic search (HITS) algorithm (Kleinberg 1999), and maximum eigenvalue drop (also called NetShield) (Tong et al. 2010). We show that our methods are far more effective in blocking complex contagions.

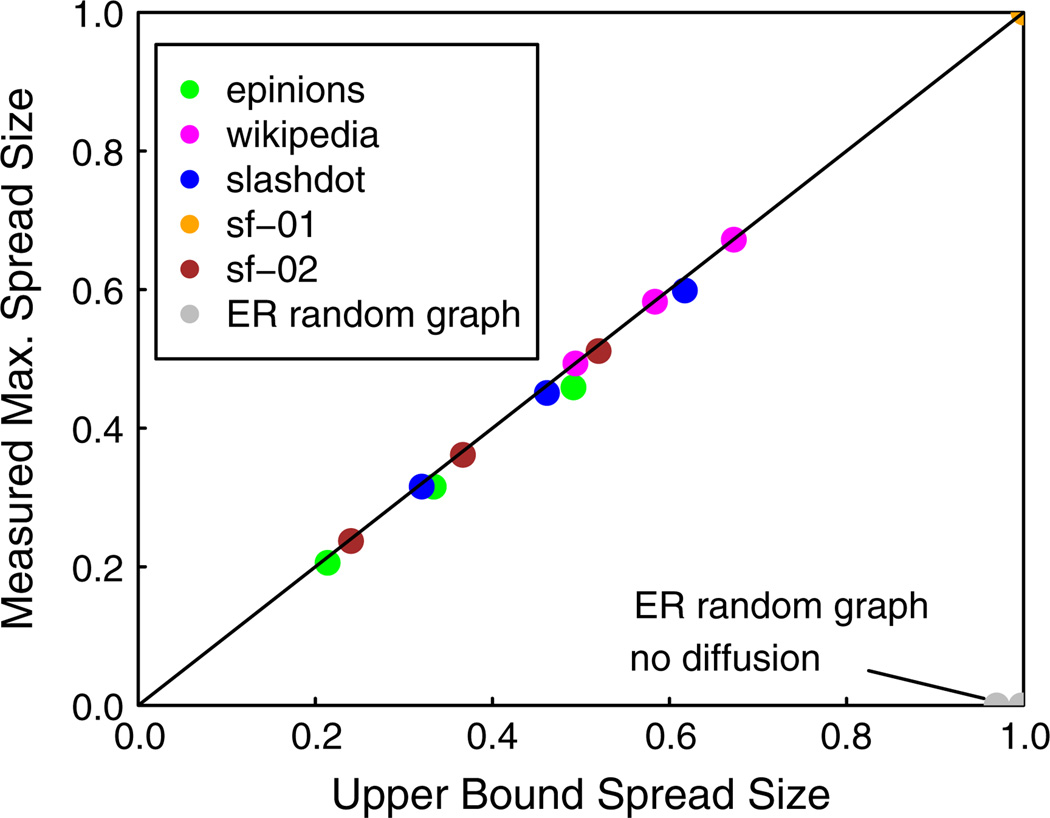

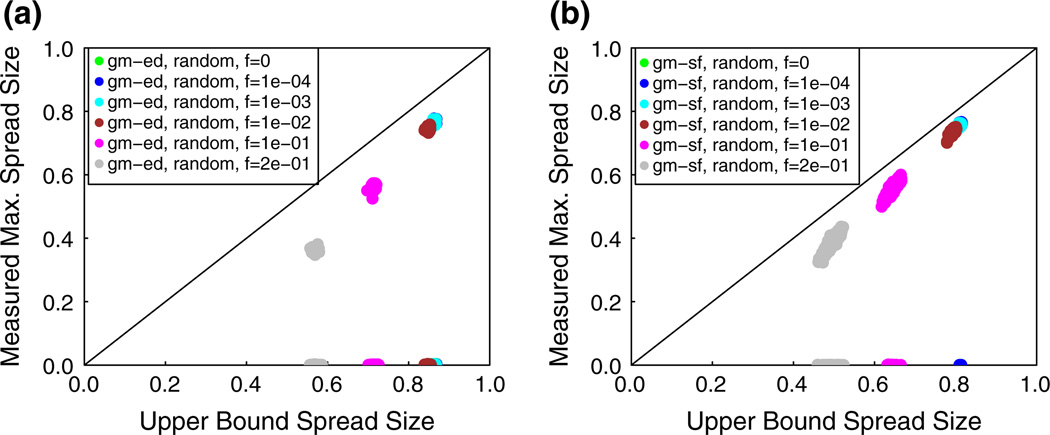

We establish an upper bound on the spread size for diffusion in t-threshold systems, for t ≥ 1. Interestingly, this upper bound estimates a dynamic quantity (maximum spread size) using an easily computable static parameter of the network. We show experimentally that this upper bound is achievable for several values of t for real social networks. We also evaluate the effectiveness of the bound for a large number of synthetic networks.

Our heuristics can be used with heterogeneous thresholds. They can also be extended for use under more general transition criteria for nodes (e.g. generalized contagion models of Dodds and Watts 2005) and with probabilistic diffusion (where a node transitions from 0 to 1 with probability p when its threshold is met). Finally, our methods can also be extended for use in time-varying networks where the edges of the network and transition criteria change in a repeatable pattern (e.g., to reflect daytime and night-time interactions as in Prakash et al. 2010).

3.2 Related work

Work on finding critical sets has been almost exclusively confined to simple contagions (i.e., 1-threshold systems). Critical nodes are called “blockers” in (Habiba et al. 2008); they examine dynamic networks and use a probabilistic diffusion model with threshold = 1. They utilize graph metrics such as degree, diameter, and betweenness centrality (adapted to time-varying networks) to identify critical nodes. Anshelevich et al. (2009) also study dynamic networks and threshold-1 behavior. They use newly affected nodes to specify a predefined number of new blocking nodes per time step as deterministic diffusion emanates from a single seed node.

Many sophisticated methods for blocking simple contagions involve eigenvalue (and eigenvector) computations. Eigenvector centrality (Bonacich 1972) is used to rank nodes from best blocker to worst blocker in decreasing order of the magnitude of their eigenvector components for the dominant eigenvalue (e.g., Habiba et al. 2008; Tong et al. 2010). HITS (Kleinberg 1999) is another eigenvector-based approach for identifying blocking nodes when applied to undirected graphs. PageRank (Page et al. 1999) of a node υ is closely aligned with eigenvector centrality, and was also studied in (Habiba et al. 2008; Tong et al. 2010). Initially in (Wang et al. 2003), and later in (Ganesh et al. 2005; Chakrabarti et al. 2008), a blocking scheme based on eigenvalues of the adjacency matrix of the underlying graph is discussed. A drawback of this method is that it is not practical for large networks, since it requires a large number of eigenvalue computations. To overcome this drawback, an efficient eigenvector-based heuristic has recently been proposed and evaluated on three social networks in (Tong et al. 2010).

A variety of network-based candidate measures for identifying critical nodes for simple contagions are described in (Borgatti 2006); however, the applications are confined to small networks. The effectiveness of removing nodes at random and removing high degree nodes has been studied in (Holme 2004; Albert et al. 2000; Crucitti et al. 2004; Cohen et al. 2003; Madar et al. 2004; Dezso and Barabasi 2002; Briesemeister et al. 2003). An approximation algorithm for the problem of minimizing the number of new affected nodes for simple contagions is presented in (Eubank et al. 2006).

Moving now to complex contagions, we are aware of only one work on inhibiting diffusion, and that is for 2-threshold systems. Centola (2009) examined how removal of nodes from 10,000-node synthetic exponential and power law (i.e., SF) graphs affects the diffusion of complex contagions. His motivation was to determine how resilient a network is to random and targeted node removal schemes; the former scheme removes nodes uniformly randomly while the latter removes high degree nodes. His work differs from ours in that it is focused on observing the spread size under the two node removal schemes rather than on stopping diffusion. While the results in (Centola 2009) show that the targeted method works well in inhibiting diffusion in some synthetic networks, we show herein that this method does not work well for realistic social networks, for t = 2, 3, and 5.

Although we limit ourselves to static networks, our methods can be applied to time-varying networks if the network modifications are deterministic in time. As motivated in (Prakash et al. 2010), this is a reasonable first approximation of people’s regular, repeatable behavior and is used extensively in epidemiological modeling (e.g., Barrett et al. 2008; Perumalla and Seal 2010). Our methods can also be used without modification for probabilistic diffusion where below the threshold t, the probability of node transition is zero, and at or above the threshold, a node υ transitions with probability pυ.
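The probabilistic variant described above can be sketched as one synchronous step. We assume, as one reading of the text, that a node which is at or above its threshold but fails its coin flip may try again at later time steps; the helper name and the 6-node graph are hypothetical. Setting pυ = 1 for every node recovers the deterministic dynamics.

```python
import random


def probabilistic_step(adj, affected, t, p, critical=frozenset(), rng=None):
    """One synchronous step of probabilistic threshold diffusion.

    Below the threshold t a node cannot transition; at or above it, node v
    transitions with probability p[v]. A node that fails may try again in a
    later step (our assumption). Returns the new affected set.
    """
    if rng is None:
        rng = random.Random()
    newly = set()
    for v in adj:
        if v in affected or v in critical:
            continue
        if sum(1 for u in adj[v] if u in affected) >= t and rng.random() < p[v]:
            newly.add(v)
    return affected | newly


edges = [(1, 3), (2, 3), (2, 4), (3, 4), (4, 5), (5, 6)]
adj = {v: [] for v in range(1, 7)}
for a, b in edges:
    adj[a].append(b)
    adj[b].append(a)
print(probabilistic_step(adj, {1, 2}, 2, {v: 1.0 for v in adj}))  # {1, 2, 3}
print(probabilistic_step(adj, {1, 2}, 2, {v: 0.5 for v in adj}, rng=random.Random(7)))
```

Because critical nodes are skipped before any coin is flipped, the same critical set applies unchanged to the deterministic and probabilistic cases.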

4 Theoretical results for the critical set problems

4.1 Overview and a preliminary lemma

In this section, we first establish complexity results for finding critical sets. We also present results that show a significant difference between 1-threshold systems and t-threshold systems where t ≥ 2. Most of the results in this section are for homogeneous thresholds; extensions of the results to heterogeneous and relative thresholds are outlined in the Appendix.

Lemma 1 Given a SyDS S = (G(V, E), 𝓕), the set I ⊆ V of initially affected (i.e., seed) nodes and a critical set C ⊆ V – I, the number of new affected nodes in the system that results when C is removed from V can be computed in O(|V| + |E|) time.

Proof Recall that the removal of C is equivalent to changing the local transition function fυ of each node υ ∈ C to the function that remains 0 for all inputs. Since the resulting SyDS 𝒮1 is also a ratcheted SyDS, by Proposition 1, it reaches a fixed point in at most n = |V| steps. The fact that each local transition function is a threshold function can be exploited to find all the nodes that are affected over the time steps in O(|V| + |E|) time.

The idea is to maintain, for each node υ ∈ V, a counter cυ that stores the number of neighbors of υ that are currently affected. Initially, the counter of each unaffected node is set to 0. In time step 1, for each node w ∈ I, the counter of each unaffected neighbor x of w (with x ∉ C) is incremented. If the count for x reaches its threshold, then x is added to a list L of nodes which will contain all the nodes that are affected at time step 1. At the next time step, the above procedure is repeated using the nodes in L (instead of the nodes in I). This method can be carried out for each subsequent time step until the system reaches a fixed point (i.e., until the list of newly affected nodes becomes empty). Since the adjacency list of a node is explored only in the time step after that node becomes affected, this method explores the adjacency list of each node υ of G at most once through all the time steps. So, the total time spent in the computation is O(Συ∈V degree(υ)) = O(|E|). The initialization of the counters and the final step to output the newly affected nodes take O(|V|) time. Therefore, the total time is O(|V| + |E|).
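The counter-based procedure above can be sketched in Python. This is a minimal illustration under our own assumptions (adjacency and thresholds given as dictionaries, the hypothetical function name `new_affected`), not the authors' implementation. A node's adjacency list is scanned only in the round after that node becomes affected, and removed (critical) nodes are never counted or scanned, which mirrors the O(|V| + |E|) argument.

```python
from collections import defaultdict


def new_affected(adj, threshold, seeds, critical):
    """Return the nodes newly affected at the fixed point (seeds excluded).

    adj:       dict mapping each node to an iterable of its neighbours (undirected)
    threshold: dict mapping each node v to its integer threshold t_v >= 1
    seeds:     set I of initially affected nodes
    critical:  set C of removed nodes, disjoint from I
    """
    count = defaultdict(int)     # c_v: number of affected neighbours seen so far
    affected = set(seeds)
    frontier = list(seeds)       # nodes that became affected in the previous step
    while frontier:              # stops when no node changes state (fixed point)
        next_frontier = []
        for w in frontier:
            for x in adj[w]:     # each adjacency list is scanned at most once
                if x in affected or x in critical:
                    continue     # removed nodes never change state
                count[x] += 1
                if count[x] == threshold[x]:
                    affected.add(x)
                    next_frontier.append(x)
        frontier = next_frontier
    return affected - set(seeds)
```

For example, on the 4-node graph with edges {1, 2}, {1, 3}, {2, 3}, {2, 4}, {3, 4}, threshold 2 everywhere and seeds {1, 2}, nodes 3 and 4 become affected; removing node 3 as a critical node leaves node 4 with a single affected neighbor, so no node is newly affected.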

4.2 Complexity results

As mentioned earlier, the SCS-MNA problem was shown to be NP-complete in (Eubank et al. 2006) for the case when each node has a 1-threshold function. We now extend that result to show that even obtaining a ρ-approximate solution is NP-hard for systems in which each node computes the t-threshold function for any t ≥ 2.

Theorem 1 Assuming that the bound β on the size of the critical set cannot be violated, for any ρ ≥ 1 and any t ≥ 2, there is no polynomial time ρ-approximation algorithm for the SCS-MNA problem for t-threshold systems, unless P = NP.

Proof Suppose 𝒜 is a ρ-approximation algorithm for the SCS-MNA problem for t-threshold systems for some ρ ≥ 1 and t ≥ 2. We will show that 𝒜 can be used to efficiently solve the Minimum Vertex Cover (MVC) decision problem (Garey and Johnson 1979): Given an undirected graph G(V, E) and an integer k, is there a subset V′ of V such that |V′| ≤ k and for each edge {u, υ} ∈ E, at least one of u and υ is in V′?

Let G = (V, E) be the given graph for the vertex cover problem, with n = |V| and m = |E|. We construct a SyDS 𝒮 = (H(VH, EH), 𝓕) as follows. The vertex set VH consists of three pairwise disjoint groups of nodes denoted by X, Y and Z. The set X = {x1, x2, …, xt} consists of t nodes all of which are initially 1. The set Y = {y1, y2, …, yn} contains a node for each member of V. Let α = ⌈ρ(n–k)⌉+k+1. The set Z = {z1, z2, …, zαm} contains a total of αm nodes, with α nodes corresponding to each edge of G. All the nodes in Y ∪ Z are initially 0. The edges in EH are as follows.

Each node in Y is adjacent to each node in X.

Each node in Z is adjacent to the first t – 2 nodes (i.e., nodes x1, …, xt−2) of X.

Let gj denote the group of α nodes corresponding to edge ej ∈ E; each node of gj is adjacent to the two nodes in Y which correspond to the end points of the edge ej ∈ E, 1 ≤ j ≤ m.

The local transition function at each node of 𝒮 is the t-threshold function. The value of β (the upper bound on the critical set size) is set to k. This completes the construction of the SCS-MNA instance. Obviously, the construction can be done in polynomial time.

Suppose G has a vertex cover V′ = {υi1, υi2, …, υik} of size k. It can be verified that when the critical set C = {yi1, yi2, …, yik} is removed, only the n – k nodes in Y – C are affected; that is, the number of new affected nodes is n – k. Since Algorithm 𝒜 provides a performance guarantee of ρ, the critical set output by 𝒜 in this case leads to at most ρ(n – k) new affected nodes.

Now suppose that a minimum vertex cover for G has k + 1 or more nodes. We claim that no matter which subset of k (or fewer) nodes from Y ∪ Z is chosen as the critical set, the number of newly affected nodes is at least ρ(n – k) + 1. To see this, note that any critical set can use at most k nodes of Y. Since any minimum vertex cover for G has k + 1 or more nodes, no matter which subset of k nodes from V is chosen, at least one edge ej = {υp, υq} remains uncovered (i.e., neither υp nor υq is in the chosen set). As a consequence, no matter which subset of k nodes from Y is chosen, there is at least one group gj of α nodes in Z such that for each node z ∈ gj, the two nodes in Y, say yp and yq, that are adjacent to z are not in the critical set. Thus, yp and yq will become affected and consequently all nodes in group gj become affected. Since gj contains α = ⌈ ρ(n − k) ⌉ + k + 1 nodes, even if C includes k nodes from gj, at least ⌈ ρ(n − k) ⌉ + 1 nodes of gj will become affected. Thus, when the minimum vertex cover for G is of size k + 1 or more, the number of newly affected nodes is strictly greater than ρ(n − k).

Now, suppose we execute 𝒜 on the resulting SCS-MNA instance and obtain a critical set C. From the above argument, G has a vertex cover of size at most k if and only if the number of new affected nodes that result from the removal of C is at most ρ(n − k). From Lemma 1, the number of new affected nodes after the removal of a critical set can be found in polynomial time. Thus, using 𝒜, we have a polynomial time algorithm for the MVC problem, contradicting the assumption that P ≠ NP.
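The reduction can be checked on a toy instance. The sketch below builds the SyDS H from a vertex cover instance as described above (the tuple node labels and the helper names `build_scs_mna_instance` and `spread` are illustrative assumptions) and simulates t-threshold diffusion with a simple repeated scan, which is quadratic rather than the linear-time method of Lemma 1.

```python
import math
from collections import defaultdict


def build_scs_mna_instance(n, edges, k, rho, t):
    """Theorem 1 construction; G has vertices 0..n-1 and the given edge list.

    Returns (adj, seeds, beta); nodes are tuples ("x", i), ("y", v), ("z", j, a).
    """
    assert t >= 2
    alpha = math.ceil(rho * (n - k)) + k + 1
    adj = defaultdict(set)

    def link(a, b):
        adj[a].add(b)
        adj[b].add(a)

    X = [("x", i) for i in range(t)]          # t seed nodes, initially 1
    for v in range(n):
        for x in X:                           # each y_v is adjacent to all of X
            link(("y", v), x)
    for j, (u, v) in enumerate(edges):
        for a in range(alpha):                # group g_j: alpha nodes for edge e_j
            z = ("z", j, a)
            for x in X[: t - 2]:              # first t - 2 nodes of X
                link(z, x)
            link(z, ("y", u))                 # the two endpoints of e_j
            link(z, ("y", v))
    return adj, set(X), k


def spread(adj, t, seeds, critical):
    """New affected nodes at the fixed point, via a simple repeated scan."""
    affected = set(seeds)
    changed = True
    while changed:
        changed = False
        for v in adj:
            if v in affected or v in critical:
                continue
            if sum(w in affected for w in adj[v]) >= t:
                affected.add(v)
                changed = True
    return affected - seeds
```

On the triangle graph (minimum vertex cover of size 2) with t = 3 and ρ = 1: for k = 2, removing y0 and y1 leaves only y2 newly affected, i.e., n − k = 1 node; for k = 1, removing y0 leaves the edge {1, 2} uncovered, so its whole group of α = 4 nodes is affected together with y1 and y2, giving 6 new affected nodes, which exceeds ρ(n − k) = 2.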

We note that, in the above proof, the factor ρ need not be a constant; it may be a function of the form n^δ, where δ < 1 is a constant and n is the number of nodes of the graph in the MVC instance.

We now present a result similar to that of Theorem 1 for the maximization version of the problem (SCS-MUN).

Theorem 2 Assuming that the bound β on the size of the critical set cannot be violated, for any ρ ≥ 1 and any t ≥ 2, there is no polynomial time ρ-approximation algorithm for the SCS-MUN problem for t-threshold systems, unless P = NP.

Proof Assume that 𝒜 is a ρ-approximation algorithm for the SCS-MUN problem. We prove the result by a reduction from the Minimum Vertex Cover problem, similar to the one used to prove Theorem 1. The modifications are as follows.

In addition to the sets of nodes X, Y and Z, we have another set of nodes W = {w1, w2, …, wh}, where h = ⌈(ρ – 1)|Z|⌉.

Each node in W is adjacent to the first t – 1 nodes in X and all nodes in Z.

Now, if G has a vertex cover of size k, then by choosing the corresponding nodes of Y, all the nodes in Z ∪ W can be saved from becoming affected. Recall from Sect. 2.2 that the chosen critical nodes are not included in the set of unaffected nodes. Thus, in this case, the number of unaffected nodes is |Z| + ⌈(ρ – 1) |Z|⌉ ≥ ρ|Z|. Since 𝒜 is a ρ-approximation algorithm, it must produce a critical set of size k such that the number of nodes which are not affected is at least |Z|.

If every vertex cover for G has k + 1 or more nodes, then no matter which subset of k (or fewer) nodes is chosen from Y, at least one node of Z will become affected. Consequently, all the nodes in W (which are not in the critical set) will also become affected. Therefore, no matter which critical set of size k is chosen, the number of unaffected nodes is at most |Z| – 1.

Thus, using 𝒜, we can obtain a polynomial time algorithm for the MVC problem, contradicting the assumption that P ≠ NP.

4.3 Critical sets for saving all salvageable nodes

Recall from Sect. 2.2 that a node υ of a SyDS is salvageable if there is a critical set whose removal ensures that υ will not be affected. The problem of finding optimal critical sets to save all salvageable nodes, denoted by SCS-SASN, was also formulated in that section. We now present results for SCS-SASN that show a significant difference between 1-threshold systems and t-threshold systems where t ≥ 2.

Theorem 3 Let 𝒮 = (G(V, E), 𝓕) be a 1-threshold SyDS. The SCS-SASN problem for 𝒮 can be solved in O(|V| + |E|) time. Moreover, the solution is unique.

Proof Call a given unsalvageable node of G a fringe node if it has at least one neighbor that is salvageable. We now argue that the set of all fringe nodes in G is the smallest critical set whose removal ensures that all salvageable nodes are saved from being affected.

First, observe that for a 1-threshold system, a given node is salvageable iff its initial state is 0 and the initial states of all its neighbors are also 0 (thus, whether a node υ is salvageable can be determined in O(degree(υ)) time, where the degree is the number of edges incident on υ). It can be seen that removing all the fringe nodes saves all salvageable nodes. Further, this is the smallest critical set since if any fringe node is not removed, all of its salvageable neighbors would become affected. Thus, there is a unique smallest critical set for the system.

As mentioned above, determining whether a node υ is salvageable can be done in O(degree(υ)) time. Therefore, the time to identify all salvageable nodes is O(Συ∈V degree(υ)) = O(|E|). The set of fringe nodes consists of those nodes that are initially 0, have at least one neighbor that is initially 1, and have at least one neighbor that is salvageable. Thus, once all salvageable nodes have been identified, determining all the fringe nodes can also be done in O(|E|) time. Outputting the fringe nodes takes O(|V|) time. Thus, the set of all fringe nodes can be found and output in O(|V| + |E|) time.
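The characterization in this proof translates into a short routine. In the sketch below (hypothetical name `salvageable_and_fringe`, adjacency given as a dictionary), a non-seed node without seed neighbors is salvageable, and the fringe nodes are the non-seed nodes that have both a seed neighbor and a salvageable neighbor; each adjacency list is scanned a constant number of times, in line with the O(|V| + |E|) bound.

```python
def salvageable_and_fringe(adj, seeds):
    """Salvageable nodes and fringe nodes of a 1-threshold system.

    A node is salvageable iff it is not a seed and has no seed neighbour.
    The fringe nodes (the unique smallest critical set) are the non-seed
    nodes with at least one seed neighbour and one salvageable neighbour.
    """
    near_seed = {v for v in adj if v not in seeds
                 and any(w in seeds for w in adj[v])}
    salvageable = {v for v in adj if v not in seeds and v not in near_seed}
    fringe = {v for v in near_seed if any(w in salvageable for w in adj[v])}
    return salvageable, fringe
```

On the path with edges {s, a}, {a, b}, {b, c} and seed s, nodes b and c are salvageable and the single fringe node a forms the critical set.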

The next set of results concerns the SCS-SASN problem for t-threshold systems, where t ≥ 2.

Theorem 4 (a) For any integer t ≥ 2, the SCS-SASN problem is NP-hard for t-threshold systems.

(b) There is an integer t such that for t-threshold systems, there is no polynomial time approximation algorithm for the SCS-SASN problem with a performance guarantee of (1 – ∊) ln(|Z|) for any ∊ > 0, where Z is the set of salvageable nodes in the system, unless P = NP.

Proof of Part (a) This result can be shown by a simple modification to the reduction from Minimum Vertex Cover to the SCS-MNA problem given in the proof of Theorem 1. The modification is that the set Z contains only m = |E| nodes, one corresponding to each edge of G. In the resulting SyDS, the salvageable nodes are those in the set Z. Nodes in the set Y are unsalvageable (each of them has t neighbors that are initially affected). It can be verified that G has a vertex cover of size at most k iff there is a subset C of Y, with |C| ≤ k, whose removal saves all the nodes of Z from becoming affected.

Proof of Part (b) We use a reduction from the Minimum Set Cover (MSC) problem (Garey and Johnson 1979): Given a universal set U = {u1, u2, …, un} and a collection C = {C1, C2, …, Cm} of subsets of U, find a minimum cardinality subcollection C′ of C such that the union of the sets in C′ is equal to U. We will use the fact that the MSC problem cannot be approximated to within the factor (1 – ∊) ln(|U|) for any ∊ > 0, unless P = NP (Raz and Safra 1997).

Given an instance of the MSC problem, let q denote the maximum number of occurrences of an element of U in the sets in C. We construct an instance of the SCS-SASN problem as follows. The node set of the underlying graph of the SyDS consists of three pairwise disjoint sets X, Y and Z, where X = {x1, x2, …, xq} is the set of initially affected nodes, Y = {y1, y2, …, ym} is in one-to-one correspondence with the collection C and Z = {z1, z2, …, zn} is in one-to-one correspondence with the set U. Thus, the initial states of the nodes in X are 1 while those of the nodes in Y ∪ Z are 0. The edges of the underlying graph are as follows.

Each node in X is adjacent to every node in Y.

Consider each element ui ∈ U and suppose ui appears in sets Ci1, Ci2, …, Cir, for some r ≤ q; then, node zi is joined to the first q – r nodes of X and the r nodes yi1, yi2, …, yir of Y.

The threshold for each node is set to q. Thus, the constructed SyDS is a q-threshold system.

It can be seen that none of the nodes in Y is salvageable while all the nodes in Z are salvageable. Thus, every critical set must be a subset of Y. It can also be verified that any critical set of size α corresponds to a solution to the MSC problem with α subsets from C and vice versa. It follows that if there is an approximation algorithm for the SCS-SASN problem with a performance guarantee of (1 – ∊) ln(|Z|), where ∊ > 0, then, since |Z| = |U|, there is an approximation algorithm with a performance guarantee of (1 – ∊) ln(|U|) for the MSC problem. The result of Part (b) follows with t = q.
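The construction can be illustrated with a small sketch (hypothetical names `build_sasn_instance` and `saved_elements`; we assume every element of U lies in at least one set of C). Rather than simulating diffusion, `saved_elements` uses the structural argument behind the proof: every surviving node of Y has q seed neighbors and is affected at step 1, and each zi has exactly q neighbors, so zi stays unaffected iff at least one of its Y-neighbors is removed, which is precisely the condition that the corresponding sets cover ui.

```python
from collections import defaultdict


def build_sasn_instance(universe, collection):
    """Theorem 4(b) construction: a q-threshold SyDS from a set cover instance.

    Returns (adj, seeds, q) with nodes ("x", i), ("y", j), ("z", u).
    """
    q = max(sum(u in S for S in collection) for u in universe)
    adj = defaultdict(set)

    def link(a, b):
        adj[a].add(b)
        adj[b].add(a)

    X = [("x", i) for i in range(q)]         # initially affected nodes
    for j in range(len(collection)):
        for x in X:                          # every y_j is adjacent to all of X
            link(("y", j), x)
    for u in universe:
        containing = [j for j, S in enumerate(collection) if u in S]
        for x in X[: q - len(containing)]:   # first q - r nodes of X
            link(("z", u), x)
        for j in containing:                 # y_j for each set C_j containing u
            link(("z", u), ("y", j))
    return adj, set(X), q


def saved_elements(adj, removed):
    """z nodes that stay unaffected when the Y-nodes in `removed` are deleted."""
    return {v for v in adj if v[0] == "z"
            and any(w[0] == "y" and w in removed for w in adj[v])}
```

For U = {1, 2, 3} and C = {{1, 2}, {2, 3}, {3}}, q = 2. The code numbers sets from 0, so y0 and y1 correspond to C1 and C2: removing both (a set cover) saves all three z nodes, whereas removing y0 alone leaves z3 unsaved.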

We now discuss an approximation algorithm for the SCS-SASN problem with a performance guarantee of Hs, where s is the number of salvageable nodes in the system and Hs is the sth Harmonic Number. Since Hs ≤ 1 + ln(s), this algorithm shows that the lower bound result of Part (b) of Theorem 4 is nearly tight. Moreover, our approximation algorithm is valid even when nodes have different thresholds (i.e., for systems with heterogeneous thresholds).

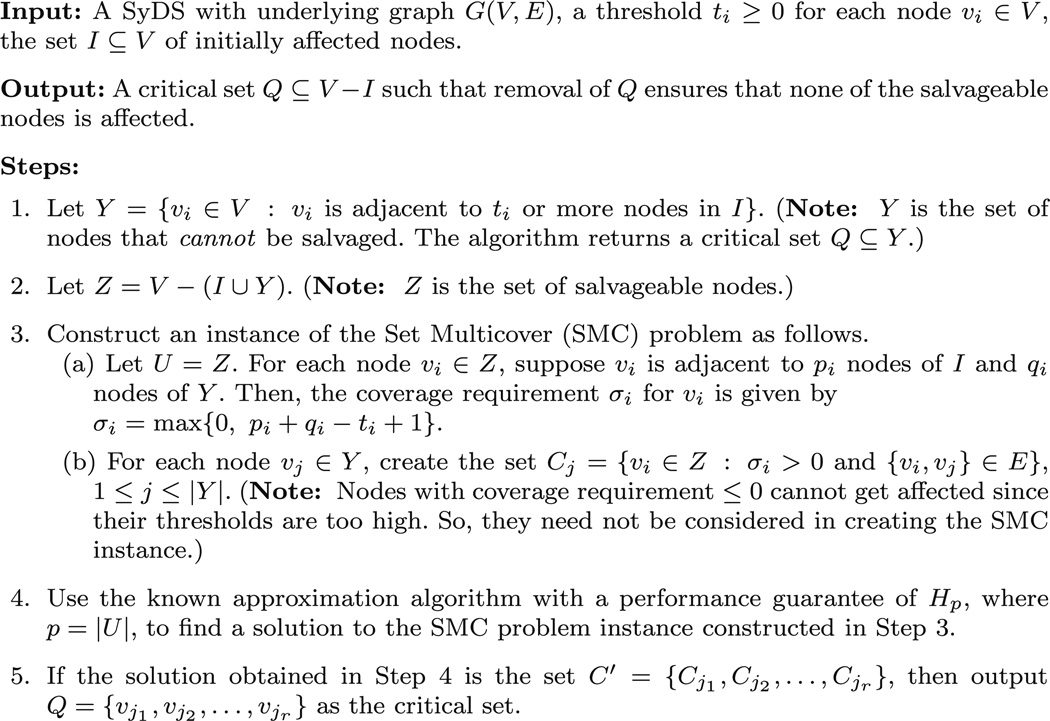

The idea is to reduce the SCS-SASN problem to a more general form of the MSC problem, called the set multicover (SMC) problem (Vazirani 2001). Like the MSC problem, the input to the SMC problem consists of the set U and the collection C of subsets of U. In addition, for each element u ∈ U, a coverage requirement σu ∈ ℤ+ is also given, and the goal of the SMC problem is to pick a minimum cardinality subcollection C′ of C such that for each element u ∈ U, there are at least σu subsets in C′ that contain u. An approximation algorithm with a performance guarantee of Hp, where p = |U|, is known for the SMC problem (Vazirani 2001). This fact is used in our approximation algorithm for the SCS-SASN problem shown in Fig. 2. It can be seen that the approximation algorithm runs in polynomial time. We now prove its correctness and its performance guarantee.
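A greedy rule for SMC of the kind cited above repeatedly picks the set that covers the largest number of elements whose requirement is still unmet, with each set used at most once. The sketch below (hypothetical name `greedy_multicover`) rescans every remaining set in each round, so it is slower than a bucket-based implementation; it only illustrates the selection rule.

```python
def greedy_multicover(sets, requirement):
    """Greedy set multicover in which each set may be chosen at most once.

    sets:        dict mapping a set name to a set of elements
    requirement: dict mapping each element u to its requirement sigma_u >= 0
    Returns the chosen set names in order; raises ValueError if infeasible.
    """
    remaining = {u: r for u, r in requirement.items() if r > 0}
    available = dict(sets)
    chosen = []
    while remaining:
        best, best_gain = None, 0
        for name, members in available.items():
            gain = sum(1 for u in members if u in remaining)  # unmet elements covered
            if gain > best_gain:
                best, best_gain = name, gain
        if best is None:
            raise ValueError("coverage requirements cannot be met")
        for u in available.pop(best):
            if u in remaining:
                remaining[u] -= 1
                if remaining[u] == 0:
                    del remaining[u]
        chosen.append(best)
    return chosen
```

With sets A = {1, 2, 3}, B = {1, 2}, C = {3} and requirements σ1 = 2, σ2 = σ3 = 1, the rule picks A and then B.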

Fig. 2.

An approximation algorithm for the SCS-SASN problem

Theorem 5 The critical set Q produced by the algorithm in Fig. 2 ensures that none of the salvageable nodes is affected. Also, |Q| ≤ |Q*|Hs, where Q* is an optimal critical set and s is the number of salvageable nodes in the system.

Proof We first observe that the set Z constructed in Step 2 of the algorithm contains all the salvageable nodes. The reason is that no node in Y is salvageable since for each node υ ∈ Y, the number of initially affected neighbors is at least the threshold of υ. Moreover, by removing all the nodes in Y, we can ensure that none of the nodes in Z is affected.

We now argue that the set Q produced by the algorithm is indeed a critical set; that is, the removal of Q ensures that none of the nodes in Z is affected. To see this, consider any node υi ∈ Z and let pi and qi denote the number of neighbors of υi in I and Y, respectively. The coverage requirement for υi was chosen as σi = max{0, pi + qi – ti + 1}. If σi = 0, then pi + qi ≤ ti – 1, and therefore υi cannot be affected. If σi ≥ 1, then the number of affected neighbors of υi after removing all the nodes in Q is at most pi + qi – σi, which is at most ti – 1 by the definition of σi. Hence, υi will not get affected.

To prove the performance guarantee, we have the following claim.

Claim 1 Any critical set of size α corresponds to a solution to the SMC problem with α sets and vice versa.

Proof of Claim 1 Consider any critical set Q′ = {υj1, υj2, …, υjα} of size α. We argue that the corresponding collection C′ = {Cj1, Cj2, …, Cjα} of sets is a solution to the SMC problem. Since Q′ is a critical set, for any node υi ∈ Z, after the removal of Q′, the number of neighbors of υi in I ∪ (Y – Q′) is at most ti – 1. Using this fact, it can be verified that each node υi ∈ Z is covered at least σi times by C′. In other words, C′ is a solution to the SMC problem. The proof that any solution to the SMC problem with α sets corresponds to a critical set of size α is similar, and this establishes Claim 1.

To establish the performance guarantee, let Q* be an optimal critical set. Thus, by the above claim, there is a solution to the SMC problem with at most |Q*| sets. Since the approximation algorithm for the SMC problem provides a performance guarantee of Hs, where s = |U| = |Z|, the size of the solution to the SMC problem obtained in Step 4 of the algorithm is at most |Q*|Hs. Since the approximation algorithm produces a critical set whose size is exactly the number of sets in the approximate solution to the SMC problem, we have |Q| ≤ |Q*| Hs.
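Fig. 2 is not reproduced in this text, so the following is only our reading of the steps used in the proof above: Y is the set of non-seed nodes with at least as many seed neighbors as their threshold, Z holds the remaining non-seed nodes, each node of Z gets the requirement σi = max{0, pi + qi − ti + 1}, and each node y ∈ Y contributes the set of its neighbors in Z. The name `sasn_multicover_instance` is hypothetical. Passing `sets` and `sigma` to a greedy multicover routine and removing the chosen nodes of Y would then give the critical set Q.

```python
def sasn_multicover_instance(adj, threshold, seeds):
    """SMC instance for SCS-SASN, following the proof of Theorem 5.

    Returns (Y, Z, sigma, sets): Y are non-seed nodes with at least t_v seed
    neighbours (unsalvageable); Z are the remaining non-seed nodes; sigma[v]
    is the coverage requirement of v in Z; sets[y] are y's neighbours in Z.
    """
    Y = {v for v in adj if v not in seeds
         and sum(w in seeds for w in adj[v]) >= threshold[v]}
    Z = {v for v in adj if v not in seeds and v not in Y}
    sigma = {}
    for v in Z:
        p = sum(w in seeds for w in adj[v])   # p_i: neighbours in I
        q = sum(w in Y for w in adj[v])       # q_i: neighbours in Y
        sigma[v] = max(0, p + q - threshold[v] + 1)
    sets = {y: {w for w in adj[y] if w in Z} for y in Y}
    return Y, Z, sigma, sets
```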

5 Heuristics for finding small critical sets

5.1 Overview

The complexity results presented in Sect. 4 point out the difficulty of developing heuristics with provably good performance guarantees for the SCS-MNA and SCS-MUN problems. So, we focus on the development of heuristics that work well in practice for one of these problems, namely SCS-MNA. In this section, we present two such heuristics. The first heuristic uses a greedy set cover computation. The second heuristic relies on a potential function, which provides an indication of a node’s ability to affect other nodes. Experimental evaluation of these heuristics on several social networks is discussed in Sect. 6.

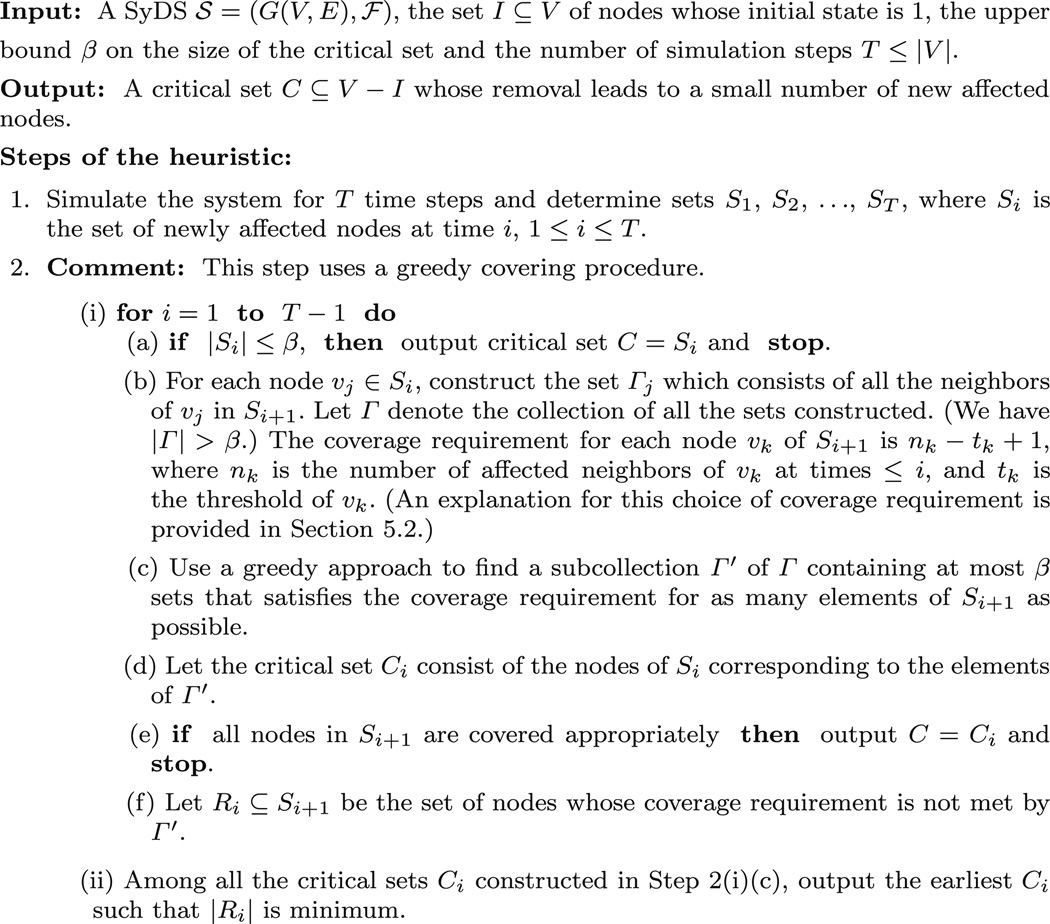

5.2 Covering-based heuristic (CBH)

Given a SyDS 𝒮 = (G(V, E), 𝓕) and the set I ⊆ V of nodes whose initial state is 1, one can compute the set Si ⊆ V of nodes that change to state 1 at the ith time step, 1 ≤ i ≤ T, for some suitable T ≤ |V|, which can be taken as the time to reach a fixed point (this can be done efficiently as explained in the proof of Lemma 1). The CBH, whose details appear in Fig. 3, chooses a critical set C as a subset of Si for some suitable i. The intuitive reason for doing this is that each node υ in Si+1 has at least one neighbor w in Si (otherwise, υ would have changed to 1 in an earlier time step). Therefore, if a suitable subset of Si can be chosen as critical so that none of the nodes in Si+1 changes to 1 during the (i + 1)st time step, the contagion cannot spread beyond Si. Consistent with the goal of the SCS-MNA problem, we seek to halt the diffusion process as early as possible in Step 2 of Fig. 3. There are two means by which this can be accomplished at each time i: Step 2(i)(a) which checks whether Si can itself serve as a critical set (i.e., |Si| < β) and Step 2(i)(e) which checks whether the covering procedure discussed below produces a suitable critical set from Si.

Fig. 3.

Details of the CBH

In general, when nodes have thresholds ≥ 2, the problem of choosing at most β nodes from Si to prevent a maximum number of nodes in Si+1 from changing to 1 corresponds to the SMC problem mentioned in Sect. 4. Step 2(i)(b) constructs an instance of SMC, where each set Γj in the collection Γ corresponds to a node υj of Si. The elements to be covered are the nodes in Si+1. The coverage requirement for each node υk ∈ Si+1 is determined as follows. Suppose υk has threshold tk and has nk affected neighbors in S1 ∪ S2 ∪ … ∪ Si. Since υk ∈ Si+1 is affected in time step i + 1 in the absence of any critical nodes, we have nk ≥ tk. Thus, to prevent υk from getting affected, at least nk – (tk – 1) = nk − tk + 1 nodes from Si must be chosen as critical nodes. In the SMC formulation, this number corresponds to the coverage requirement for node υk.

Since SMC is NP-hard, a greedy approach is used (Step 2(i)(c)) for this covering problem (Vazirani 2001). This approach iterates over the sets of Γ; in each iteration, the chosen set from Γ corresponds to a node υj from Si that contributes to saving the largest number of nodes in Si+1 from becoming affected. That is, υj is the node that has the greatest number of edges in G(V, E) to nodes in Si+1 that are still affected (ties are broken arbitrarily). Thus, the subcollection Γ′ produced by Step 2(i)(c) corresponds to a subset of Si.
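One covering step of CBH at level i can be sketched as follows. Since Fig. 3 is not reproduced here, the function name, its arguments, and the exact stopping rule are our assumptions: requirements nk − tk + 1 are computed for the nodes of Si+1 (the caller passes in all nodes affected by time i), and nodes of Si are picked greedily by their number of still-unblocked neighbors in Si+1 until β nodes are chosen or every requirement is met. Ties are broken arbitrarily, as in the text.

```python
def cbh_level(adj, threshold, affected_by_i, S_i, S_next, beta):
    """One covering step of a CBH-style heuristic at level i (sketch).

    affected_by_i: all nodes affected at or before step i (no critical nodes)
    S_i, S_next:   nodes newly affected at steps i and i + 1
    Returns (chosen critical nodes from S_i, nodes of S_next still unblocked).
    """
    need = {}
    for v in S_next:
        n_v = sum(w in affected_by_i for w in adj[v])
        r = n_v - threshold[v] + 1          # coverage requirement n_k - t_k + 1
        if r > 0:
            need[v] = r

    def gain(u):                            # unblocked S_next neighbours of u
        return sum(w in need for w in adj[u])

    candidates, chosen = set(S_i), []
    while need and len(chosen) < beta:
        best = max(candidates, key=gain, default=None)
        if best is None or gain(best) == 0:
            break                           # no remaining candidate helps
        candidates.remove(best)
        chosen.append(best)
        for w in adj[best]:
            if w in need:
                need[w] -= 1
                if need[w] == 0:
                    del need[w]             # requirement of w is now met
    return chosen, set(need)
```

On a small graph where seeds A and B are adjacent to C, D, E and F, nodes G and H are adjacent to C and D, and J and K are adjacent to E and F (t = 2), a budget of 2 picks one of {C, D} and one of {E, F}, matching the CBH behavior described in Sect. 5.4.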

If the conditions in Steps 2(i)(a) and 2(i)(e) are not satisfied for any i, 1 ≤ i ≤ T – 1, then a solution that has the smallest number of nodes whose coverage requirement is not met is chosen as the output. We now establish the running time of CBH.

Proposition 2 Let G(V, E) denote the underlying graph of the given SyDS 𝒮. The running time of CBH is O(|V| |E|).

Proof Consider the description of CBH in Fig. 3. Using Lemma 1, Step 1 can be implemented to run in O(|V| + |E|) time. In each iteration of Step 2(i), it can be seen that the dominant part of the running time is due to the greedy heuristic for SMC. This heuristic can be implemented to run in O(σ) time, where σ is the sum of the sizes of the given sets (Vazirani 2001). In CBH, since the set constructed for any node υ is of size at most degree(υ), the sum of the sizes of all the sets is at most Συ∈V degree(υ) = |E|. Thus, each execution of the greedy heuristic runs in O(|E|) time. Since Step 2(i) runs the greedy set cover heuristic T – 1 times, the running time of that step is O(T|E|). Since T ≤ |V|, the worst-case running time of CBH is O(|V| |E|).

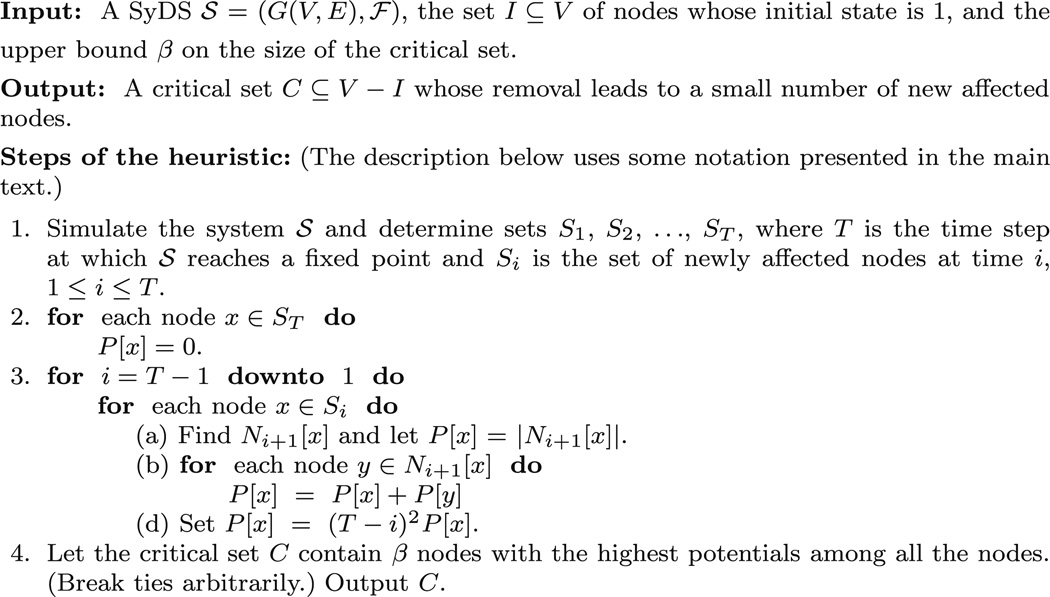

5.3 Potential-based heuristic

Here, we provide the details of the potential-based heuristic (PBH). The idea is to assign a potential to each node υ depending on how early υ is affected and how many nodes it can affect later. Nodes with larger potential values are more desirable for inclusion in the critical set. While the CBH chooses a critical set from one of the Si sets, the potential-based approach may select nodes in a more global fashion from the whole graph.

We assume that the set Si of newly affected nodes at time i has been computed for each i, 1 ≤ i ≤ T, where T is the time at which the system reaches a fixed point. For any node x ∈ Si, let Ni+1[x] denote the set of nodes in Si+1 which are adjacent to x in G. The potential P[x] of a node x is computed as follows.

- For each node x in ST, P[x] = 0. (Justification: Since there is no diffusion beyond level T, it is useless to have nodes from ST in the critical set.)

- For each node x in level Si, 1 ≤ i ≤ T – 1,

$$P[x] = (T - i)^2 \, |N_{i+1}[x]| + \sum_{y \in N_{i+1}[x]} P[y] \qquad (1)$$

(Justification: The term (T – i)² decreases as i increases. Thus, the term enables us to assign higher potentials to nodes that are affected earlier. The term |Ni+1[x]| is included in the expression for potential so that nodes which have a large number of neighbors in the next level become desirable candidates for inclusion in the critical set.)

The steps of the PBH are shown in Fig. 4. Steps 1, 2 and 3 compute the potentials for all the nodes in S1 ∪ S2 ∪ … ∪ ST in a bottom-up fashion. Step 4 indicates how the critical set is chosen. We now establish the running time of PBH.

Fig. 4.

Details of the PBH

Proposition 3 Let G(V, E) denote the underlying graph of the given SyDS 𝒮. The running time of PBH is O(|V| + |E|).

Proof Consider the description of PBH in Fig. 4. From Lemma 1, Step 1 can be implemented to run in O(|V| + |E|) time. Step 2 runs in O(|V|) time. In Step 3, the potential for each node υ is computed by examining the neighbors of υ. Thus, for any node υ, the time used to compute υ’s potential is O(degree(υ)). Hence, the time used to compute the potentials of all the nodes is O(Συ∈V degree(υ)) = O(|E|). Step 4 can be carried out in O(|V|) time since the βth largest potential value can be computed in O(|V|) time using the well known linear time selection algorithm (Cormen et al. 2001). Hence, the overall running time of PBH is O(|V| + |E|).
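A sketch of the potential computation and selection follows. It assumes that equation (1) has the recursive form P[x] = (T − i)²|Ni+1[x]| + Σy∈Ni+1[x] P[y], which is what the bottom-up order of Step 3 suggests; if (1) differs, only the line computing `pot[x]` changes. The function name `pbh` is hypothetical, and `heapq.nlargest` takes O(|V| log β) time rather than the linear-time selection used in the proof.

```python
import heapq


def pbh(adj, levels, beta):
    """Potential-based selection (sketch); levels = [S_1, ..., S_T].

    Assumes P[x] = (T - i)^2 * |N_{i+1}[x]| + sum of P[y] for y in N_{i+1}[x].
    """
    T = len(levels)
    pot = {x: 0 for x in levels[-1]}           # nodes in S_T get potential 0
    for i in range(T - 1, 0, -1):              # i = T-1, ..., 1 (bottom-up)
        nxt = levels[i]                        # S_{i+1}; `levels` is 0-indexed
        for x in levels[i - 1]:                # x in S_i
            nbrs = [y for y in adj[x] if y in nxt]
            pot[x] = (T - i) ** 2 * len(nbrs) + sum(pot[y] for y in nbrs)
    critical = heapq.nlargest(beta, pot, key=pot.get)  # beta largest potentials
    return pot, critical
```

On a hand-built four-level example (not the graph of Fig. 5) with S1 = {C, D, E, F}, S2 = {G, H, J, K}, S3 = {L, M} and S4 = {N, P}, where C and D lead to G and H, G and H lead to L and M, and those lead to N and P, the potentials are 2 for L, 12 for G, 18 for E and 42 for C, so a budget of 2 selects C and D.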

The next section presents a simple example to illustrate similarities and differences between the behaviors of PBH and CBH.

5.4 Comparison of critical node heuristics

We compare behaviors of the two critical node heuristics PBH and CBH with respect to how they block t = 2 diffusion on the graph of Fig. 5 when the two nodes A and B are the seed nodes. We note that this graph could be embedded in a larger graph. Also, we have chosen the edges among the nodes to be somewhat regular for expository reasons. However, there are many edge sets among this set of nodes that will give the same behavior as that discussed below. Furthermore, many additional edges can be introduced without affecting the spread size.

Fig. 5.

Example graph where threshold-2 diffusion starts from the two seed nodes A and B. Without critical nodes, the four brown nodes (C, D, E and F) are affected at time i = 1 and the four green nodes (G, H, J and K) are affected at i = 2 (Color figure online)

In the absence of critical nodes, the contagion will propagate throughout the network. In particular, nodes G and H are affected by nodes C and D, and hence nodes L, M, N, and P are all affected through the nodes C and D. We consider two cases of critical nodes: one in which CBH performs much better than PBH and a second case where the two methods perform comparably. We now consider the case where the number of blocking nodes is β = 2 and demonstrate that CBH performs far better than PBH.

For CBH, we start with the nodes (in green) that are affected at time i = 2. The candidate nodes that may be specified as critical are the four brown nodes, namely C, D, E and F. If either of C or D is not affected at i = 1, then neither G nor H can be affected at i = 2 (because their thresholds are 2). Similarly, if either E or F is not affected at i = 1, then neither J nor K can be affected at i = 2, and the contagion is halted in that direction. Thus, CBH will select one of {C, D} and one of {E, F} as critical nodes (ties are broken arbitrarily) and the final spread size is four nodes: the two seeds and whichever two of the four brown nodes are not set critical.

For PBH, we note that potentials are computed first for the nodes that are affected last, and the computations proceed backwards to the seed nodes. Starting from nodes N and P, whose potentials are zero, the potentials of nodes will increase as one moves up toward the seed nodes. Thus, the nodes with the largest potential, and hence the nodes that will form the critical set, are C and D. The resulting spread size is 12 since all nodes except C and D will be affected.

Now, for the second case, suppose instead that β is increased from 2 to 4. The critical sets for CBH and PBH are, respectively, {C, D, E, F} and {C, D, G, H} and the final spread sizes are 2 and 6. Thus, with four critical nodes, the difference in spread sizes between the two heuristics is cut in half (from a difference of 8 when β = 2, to a difference of 4 when β = 4).

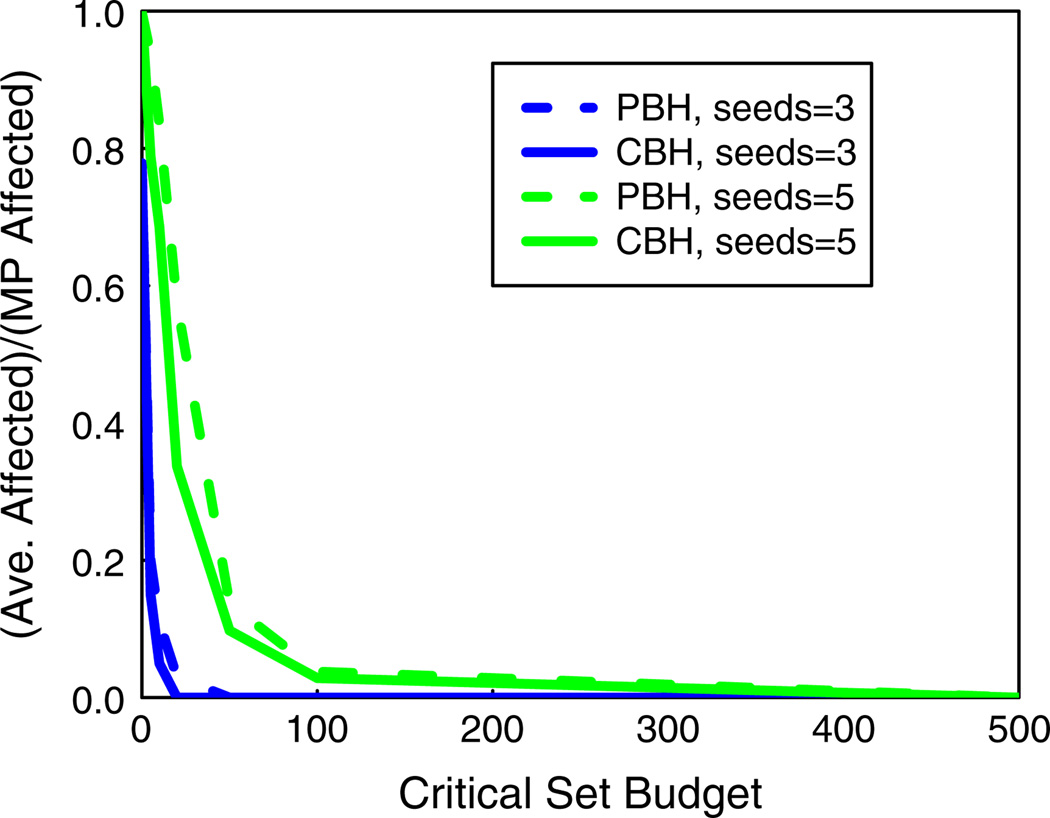

In Sect. 6.4 (Fig. 12) we discuss parameter settings which show significant differences between the blocking performances of PBH and CBH for realistic social networks.

Fig. 12.

Comparisons of the CBH and PBH in inhibiting diffusion in the epinions with t = 2 and wikipedia (wiki) with t = 3. The number β of critical nodes is 500

6 Blocking experiments and results

6.1 Overview

We first describe the social networks used for testing. Next, we compare the blocking performance of our heuristics with those of five known heuristics. We then provide further results from an experimental evaluation of our methods to illustrate additional aspects of blocking. Our results provide answers for the SCS-MNA problem. We also provide timing data for our heuristics to emphasize the tradeoff between execution speed and quality of critical sets.

6.2 Networks and generation of seed nodes

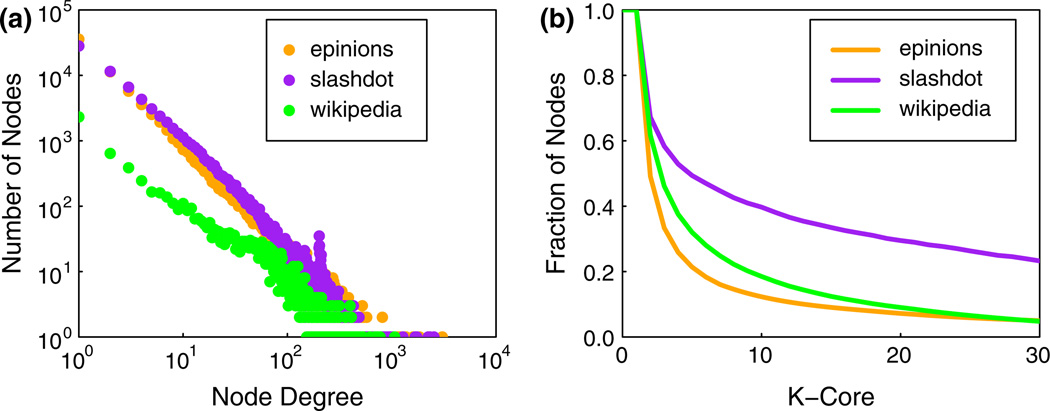

Table 3 provides selected features of the three social networks used in this study (we refer to these as “realistic social networks,” since they were produced by mining real social datasets). We assume that all edges are undirected to accentuate diffusion and thereby test the heuristics more stringently. Average degree and average clustering coefficient vary by more than a factor of 2 across the networks. Power law degree distributions for the three networks are shown in Fig. 6a. While we use mined networks to investigate realistic structures, other works (e.g., Barash 2011) look at the effects of stylized structures on contagion processes without blocking nodes.

Table 3.

Selected characteristics of three realistic social networks (Richardson et al. 2003; Leskovec et al. 2008, 2010)

| Network | Number of nodes | Number of edges | Average degree | Average clustering coefficient | Number of connected components | Size of largest component |
|---|---|---|---|---|---|---|
| Epinions | 75879 | 405740 | 10.7 | 0.138 | 2 | 75877 |
| Slashdot | 77360 | 469180 | 12.1 | 0.0555 | 1 | 77360 |
| Wikipedia | 7115 | 100762 | 28.3 | 0.141 | 1 | 7115 |

Fig. 6.

a Degree distributions and b k-core sizes for the three networks of this study

A k-core of a graph is a subgraph in which each node has a degree of at least k (Seidman 1983). A k-core is maximal if there is no larger subgraph (in terms of number of nodes) which is also a k-core.² Figure 6b provides maximal k-core sizes for the three networks. The number of nodes in each maximal k-core must be non-increasing as k increases. We will be particularly interested in k = 2, 3, 5, and 20 in this paper, and for each of these values, the maximal k-core forms one connected component (although in general, a k-core need not consist of only one component).
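Maximal k-cores can be computed by repeatedly deleting nodes of degree less than k. The sketch below (hypothetical name `k_core`, adjacency given as a dictionary of sets without self-loops) uses a queue so that each edge is processed a constant number of times; because every node deleted for k is also deleted for any larger k, the resulting core sizes are non-increasing in k, as noted above.

```python
from collections import deque


def k_core(adj, k):
    """Node set of the maximal k-core (repeatedly delete nodes of degree < k).

    adj: dict mapping each node to a set of neighbours (undirected graph).
    """
    deg = {v: len(adj[v]) for v in adj}
    queue = deque(v for v in adj if deg[v] < k)
    removed = set(queue)
    while queue:
        v = queue.popleft()
        for w in adj[v]:
            if w not in removed:
                deg[w] -= 1                  # w loses the deleted neighbour v
                if deg[w] < k:
                    removed.add(w)
                    queue.append(w)
    return set(adj) - removed
```

For a triangle {1, 2, 3} with a tail formed by the edges {1, 4} and {4, 5}, the 2-core is the triangle and the 3-core is empty.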

All our diffusion experiments use the following method of generating seed sets. For a given number ns of seeds, 100 sets of seed nodes are determined from each network to provide a range of cases for testing the heuristics. Each set of seed nodes is taken from a 20-core; thus, each seed node has a degree of at least 20. Choosing seed nodes from the 20-core provides a good compromise between selecting high-degree nodes and having a sufficiently large pool of nodes to choose from so that there is no duplication of seed node sets. Moreover, each set of seed nodes forms a connected subgraph, which fosters diffusion. Thus, the test cases utilize two means (namely, seeding of high-degree nodes and ensuring that they form a connected subgraph) to foster diffusion and hence tax the heuristics.
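The text does not specify how connected seed sets are sampled from the 20-core. One simple possibility, shown here purely as a hypothetical sketch, grows a set from a random core node by repeatedly adding a random core neighbor of the current set, and repeats until the requested number of distinct sets has been found. The names `connected_seed_set` and `distinct_seed_sets` and the retry limit are assumptions; the caller supplies the core node set (e.g., a 20-core), and node labels must be sortable so that runs are reproducible.

```python
import random


def connected_seed_set(adj, core, ns, rng):
    """Grow a random connected set of ns nodes inside `core` (hypothetical)."""
    start = rng.choice(sorted(core))
    seeds = {start}
    frontier = {w for w in adj[start] if w in core}
    while len(seeds) < ns:
        if not frontier:
            raise ValueError("core component too small for ns seeds")
        v = rng.choice(sorted(frontier))     # random core neighbour of the set
        seeds.add(v)
        frontier.discard(v)
        frontier |= {w for w in adj[v] if w in core and w not in seeds}
    return seeds


def distinct_seed_sets(adj, core, ns, count, seed=0, max_tries=100000):
    """Sample `count` distinct connected seed sets of size ns."""
    rng = random.Random(seed)
    found = set()
    for _ in range(max_tries):
        if len(found) == count:
            break
        found.add(frozenset(connected_seed_set(adj, core, ns, rng)))
    if len(found) < count:
        raise ValueError("could not find enough distinct seed sets")
    return [set(s) for s in found]
```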

Barash et al. (2012) demonstrate that there are conditions under which complex contagions will not spread in stylized graphs; as mentioned earlier, they do not consider critical nodes. Since our goal is to study the effectiveness of critical nodes in blocking diffusion, we seek to generate widespread diffusion in the absence of critical nodes, even for small seed sets. Then, the number of affected nodes when including critical nodes indicates the effectiveness of a blocking method in inhibiting contagion spread.

6.3 Comparisons of CBH and PBH with other blocking methods

We now turn to evaluating the heuristics in halting and delaying diffusion by first comparing our heuristics with five other heuristics: (1) randomly setting nodes critical (RCH), (2) setting high-degree nodes critical (HCH), (3) setting as critical the nodes with the greatest betweenness centrality (BCH), (4) setting as critical the nodes with the greatest eigenvector centrality (ECH), which for undirected graphs is the same as the HITS method (Kleinberg 1999), and (5) the maximum eigenvalue drop method called NetShield (Tong et al. 2010). As mentioned earlier, methods referred to in items (2) through (5) above were proposed in the literature for blocking simple contagions. The random choice method (RCH) serves as a baseline. In the remainder of this section, the above five approaches are referred to collectively as other blocking methods.

Our experimental procedure is as follows. We consider the three networks (epinions, slashdot and wikipedia) discussed in Sect. 6.2 and set the threshold value t = 2. We use two values (namely, 2 and 3) for the number ns of seed nodes. For each value of ns, 100 sets of seed nodes are generated from the 20-core of the corresponding social network. The budget β on the number of blocking nodes is set to 500. We chose the values of ns and β to give the blocking methods a good opportunity to succeed since the value of β/ns is reasonably large (250 and 166.7 respectively for ns = 2 and 3). For each combination of social network, value of ns and blocking method with β = 500, we ran 100 diffusion instances (one corresponding to each seed set) and recorded the number of nodes in the affected state (state 1). The seed sets are the same for each blocking method. For each set of 100 diffusion instances, we provide the average number of affected nodes.
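The measurement loop above can be sketched as follows. This is a minimal sketch assuming a synchronous, progressive t-threshold model (a node in state 0 moves to state 1 once at least t of its neighbours are in state 1 and never reverts) in which critical nodes are simply never affected. The function names and the `choose_critical` callback, which receives the seed set so that seed-aware heuristics such as CBH and PBH fit the same interface as seed-independent ones, are hypothetical.

```python
def simulate_threshold_diffusion(adj, seeds, t, critical=frozenset()):
    """Synchronous progressive t-threshold diffusion.

    Returns {node: time step at which it entered state 1}. Seeds are affected
    at time 0; critical nodes never change state.
    """
    affected_at = {v: 0 for v in seeds}
    hits = {}  # number of affected neighbours of each still-unaffected node
    newly_affected = list(seeds)
    step = 0
    while newly_affected:
        step += 1
        touched = set()
        # Only nodes affected in the previous step can raise a neighbour's count.
        for v in newly_affected:
            for u in adj[v]:
                if u in affected_at or u in critical:
                    continue
                hits[u] = hits.get(u, 0) + 1
                touched.add(u)
        newly_affected = [u for u in touched if hits[u] >= t]
        for u in newly_affected:
            affected_at[u] = step
    return affected_at


def average_spread(adj, seed_sets, t, choose_critical):
    """Mean number of affected nodes (seeds included) over all seed sets."""
    total = 0
    for seeds in seed_sets:
        critical = choose_critical(seeds)
        total += len(simulate_threshold_diffusion(adj, seeds, t, critical))
    return total / len(seed_sets)


# Strip of triangles: node i is adjacent to i+1 and i+2 (nodes 0..5).
strip = {v: set() for v in range(6)}
for i in range(6):
    for j in (i + 1, i + 2):
        if j < 6:
            strip[i].add(j)
            strip[j].add(i)

times = simulate_threshold_diffusion(strip, {0, 1}, t=2)
blocked = simulate_threshold_diffusion(strip, {0, 1}, t=2, critical={2})
avg_unblocked = average_spread(strip, [{0, 1}, {4, 5}], 2, lambda s: frozenset())
```

Because the same seed sets are used for every blocking method, differences in the averaged spread size reflect only the choice of critical nodes. In the toy strip, a single well-placed critical node (node 2) stops a t = 2 contagion that would otherwise reach all six nodes.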

We also provide, in the last column of the following tables, the ratio of average number of affected nodes to the maximum possible (MP) number of nodes that can be affected. This ratio is crucial because for complex contagions, graph structure itself can retard contagion propagation, and a blocking method should not be given credit for a seemingly smaller spread size when the effect is due to the graph structure. For example, 47 % of epinions nodes (i.e., 35755 nodes) have degree 1. With t = 2 diffusion, it is impossible for these nodes to become affected (unless they are seed nodes) because they cannot have two neighbors in state 1. Thus, one upper bound on the maximum spread size for t = 2 contagion in epinions is 40124 (= 75879 – 35755) nodes. In fact, a tighter upper bound is the size of the largest connected component of the 2-core, which for epinions is 37300 nodes (a general result, from which this bound for epinions follows, will be established in Sect. 7). Thus, with no blocking nodes, the MP spread size in epinions for t = 2 diffusion is 37300. To illustrate why this MP spread size is important, suppose a method that uses blocking nodes allows a spread size of 30000 on the epinions network. Here, one might conclude (erroneously) that the blocking method is effective because only a fraction 30000/75879 ≈ 0.4 of all the nodes are affected. In actuality, the method is not effective because a fraction 30000/37300 ≈ 0.8 of all the nodes that the contagion can reach in the absence of blocking nodes are affected, even though blocking nodes are used. Therefore, the values in the final column of the following tables normalize the average spread size (computed over 100 diffusion instances via simulation) by the theoretical MP spread size. This ratio assists in the interpretation of the reported spread size.
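The bound and the normalization can be computed with a short routine. A minimal sketch, assuming an undirected adjacency-dictionary graph; it implements only the t = 2 bound stated above (the largest connected component of the 2-core), not the general result of Sect. 7, and the function names are illustrative.

```python
from collections import deque


def k_core(adj, k):
    """Node set of the k-core: repeatedly peel nodes with fewer than k neighbours."""
    degree = {v: len(nbrs) for v, nbrs in adj.items()}
    removed = set()
    stack = [v for v, d in degree.items() if d < k]
    while stack:
        v = stack.pop()
        if v in removed:
            continue
        removed.add(v)
        for u in adj[v]:
            if u not in removed:
                degree[u] -= 1
                if degree[u] < k:
                    stack.append(u)
    return set(adj) - removed


def largest_component_size(adj, nodes):
    """Size of the largest connected component of the subgraph induced by `nodes`."""
    seen, best = set(), 0
    for start in nodes:
        if start in seen:
            continue
        seen.add(start)
        size, queue = 0, deque([start])
        while queue:
            v = queue.popleft()
            size += 1
            for u in adj[v]:
                if u in nodes and u not in seen:
                    seen.add(u)
                    queue.append(u)
        best = max(best, size)
    return best


def mp_bound_t2(adj):
    """MP spread size for t = 2 as stated in the text: largest component of the 2-core."""
    return largest_component_size(adj, k_core(adj, 2))


# Triangle 0-1-2 with a pendant node 3, plus a separate 4-cycle 4-5-6-7.
toy = {0: {1, 2, 3}, 1: {0, 2}, 2: {0, 1}, 3: {0},
       4: {5, 7}, 5: {4, 6}, 6: {5, 7}, 7: {4, 6}}
toy_mp = mp_bound_t2(toy)

# Epinions figures from the text: the same 30000 affected nodes read very differently.
frac_all_nodes = 30000 / 75879  # about 0.4 of all nodes
frac_mp = 30000 / 37300         # about 0.8 of the reachable (MP) nodes
```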

Our experimental results for epinions, slashdot and wikipedia are given in Tables 4 through 9; each table contains data for one network and one seed set size. Our findings can be summarized as follows.

For all three networks, CBH and PBH perform well in blocking diffusion, allowing almost no spreading in any diffusion instance. Across all the conditions in these tables, PBH permits widespread contagion propagation in only one diffusion instance, and CBH permits no widespread diffusion. The random choice method RCH allows the greatest fractions of affected nodes for all three networks. The reason for this poor performance is that nodes of degree 1 account for between 35 and 50 % of the nodes in these networks; such nodes can never be affected under t = 2 diffusion (unless they are seeds), yet RCH has a good chance of choosing them as critical nodes even though other nodes that are better suited to block the complex contagion are available.

For the epinions network (Tables 4, 5), HCH, BCH, ECH/HITS and NetShield permit average spread sizes between 18809 and 27119 nodes. For slashdot (Tables 6, 7), the range in numbers of affected nodes is 12440 to 31882 nodes, while for wikipedia (Tables 8, 9), the range in numbers of affected nodes across other methods is 1877 to 3185 nodes. For all three networks, when the number of seed nodes increases to ns = 3, contagion spreading significantly increases. These results suggest that in the three networks, complex contagions are often able to circumvent the nodes of high degree or high centrality and find alternate paths to reach many nodes.

Average numbers of affected nodes for PBH and CBH are roughly two orders of magnitude smaller, and in most cases three to four orders of magnitude smaller, than those for the five other methods. These results highlight the improved blocking performance that can be realized by exploiting the additional information provided by the seed node sets.
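The orders-of-magnitude comparison can be checked directly against the tables. The sketch below copies the averages from Tables 4 through 9 and, as a conservative choice (an assumption of this sketch, not of the text), compares CBH and PBH with the best, that is smallest, of the five other methods in each table; the gap is the base-10 logarithm of the ratio.

```python
import math

# Average numbers of affected nodes from Tables 4-9:
# (CBH, PBH, (RCH, HCH, BCH, ECH/HITS, NetShield))
TABLES = {
    ("epinions", 2): (2.0, 2.0, (32373.0, 20627.7, 18809.3, 22105.1, 20929.2)),
    ("epinions", 3): (3.0, 306.1, (34437.7, 25712.3, 25896.2, 26585.5, 27119.1)),
    ("slashdot", 2): (2.0, 2.0, (27523.7, 15453.7, 15990.9, 13425.1, 12440.7)),
    ("slashdot", 3): (3.0, 3.1, (43569.8, 23586.5, 27183.4, 31882.1, 26952.7)),
    ("wikipedia", 2): (2.0, 2.0, (4187.3, 1882.8, 2337.2, 1885.6, 1876.6)),
    ("wikipedia", 3): (3.0, 39.2, (4361.3, 3171.6, 3171.3, 3036.7, 3185.4)),
}

gaps = {}
for (network, ns), (cbh, pbh, others) in TABLES.items():
    best_other = min(others)  # conservative: compare with the strongest competitor
    gaps[(network, ns)] = {
        "CBH": math.log10(best_other / cbh),
        "PBH": math.log10(best_other / pbh),
    }
    print(network, ns, {name: round(g, 2) for name, g in gaps[(network, ns)].items()})

all_gaps = [g for per_table in gaps.values() for g in per_table.values()]
```

The smallest gaps, about 1.9 orders of magnitude, occur for PBH with ns = 3 on epinions and wikipedia; the remaining gaps lie between about 3 and 4.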

It is also useful to compare the other blocking methods with one another. The high degree heuristic performs comparably to, and in some cases better than, the more sophisticated methods in blocking complex contagions. This result contrasts interestingly with the findings of Tong et al. (2010) for simple contagions, where NetShield was shown to have the best performance.

The results also show that the five other methods are generally more effective in blocking complex contagions in slashdot than in epinions and wikipedia. We believe that this is related to the average clustering coefficients (Cave) of the networks. As can be seen from Table 3 (Sect. 6.2), slashdot has the smallest Cave among the three networks. For complex contagions, where multiple affected neighbors are required to propagate a contagion, the clustering coefficient is potentially an important factor in determining a network’s ability to spread contagions. The Cave values for epinions and wikipedia are somewhat more typical of social networks, while that for slashdot is smaller (Newman and Park 2003). These results suggest that networks with smaller Cave can still spread complex contagions, but that blocking their progression is easier.
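For reference, the average clustering coefficient is the mean over all nodes of the local clustering coefficient, that is, the fraction of pairs of a node's neighbors that are themselves adjacent. A minimal sketch; treating nodes of degree below 2 as having coefficient 0 is one common convention and an assumption here, since the convention behind Table 3 is not stated in this section.

```python
def local_clustering(adj, v):
    """Fraction of pairs of v's neighbours that are adjacent (0 if degree < 2)."""
    nbrs = list(adj[v])
    k = len(nbrs)
    if k < 2:
        return 0.0
    links = sum(1 for i in range(k) for j in range(i + 1, k) if nbrs[j] in adj[nbrs[i]])
    return 2.0 * links / (k * (k - 1))


def average_clustering(adj):
    """Mean local clustering coefficient over all nodes (Cave)."""
    return sum(local_clustering(adj, v) for v in adj) / len(adj)


# Triangle 0-1-2 with a pendant node 3 attached to node 0.
toy = {0: {1, 2, 3}, 1: {0, 2}, 2: {0, 1}, 3: {0}}
toy_cave = average_clustering(toy)  # (1/3 + 1 + 1 + 0) / 4
```

The connection to complex contagions is visible in the toy graph: in a closed triangle, two affected vertices immediately give the third one two affected neighbors, whereas a low-clustering neighborhood rarely supplies the t ≥ 2 affected neighbors a node needs.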

Table 4.

Performance of blocking methods for epinions (t = 2 and β = 500) with two seed nodes

| Blocking method | Average number of nodes affected with blocking | Ratio of (average affected) to (MP number affected) |
|---|---|---|
| CBH | 2.0 | 5.4 × 10⁻⁵ |
| PBH | 2.0 | 5.4 × 10⁻⁵ |
| Random (RCH) | 32373.0 | 0.87 |
| High degree (HCH) | 20627.7 | 0.55 |
| Betweenness centrality (BCH) | 18809.3 | 0.50 |
| Eigenvector centrality (ECH/HITS) | 22105.1 | 0.59 |
| NetShield | 20929.2 | 0.56 |

The maximum number of nodes that can be affected from t = 2 diffusion for epinions is 37300

Table 5.

Performance of blocking methods for epinions (t = 2 and β = 500) with three seed nodes

| Blocking method | Average number of nodes affected with blocking | Ratio of (average affected) to (MP number affected) |
|---|---|---|
| CBH | 3.0 | 8.0 × 10⁻⁵ |
| PBH | 306.1 | 8.2 × 10⁻³ |
| Random (RCH) | 34437.7 | 0.92 |
| High degree (HCH) | 25712.3 | 0.69 |
| Betweenness centrality (BCH) | 25896.2 | 0.69 |
| Eigenvector centrality (ECH/HITS) | 26585.5 | 0.71 |
| NetShield | 27119.1 | 0.73 |

The maximum number of nodes that can be affected from t = 2 diffusion for epinions is 37300

Table 6.

Performance of blocking methods for slashdot (t = 2 and β = 500) with two seed nodes

| Blocking method | Average number of nodes affected with blocking | Ratio of (average affected) to (MP number affected) |
|---|---|---|
| CBH | 2.0 | 4.2 × 10⁻⁵ |
| PBH | 2.0 | 4.2 × 10⁻⁵ |
| Random (RCH) | 27523.7 | 0.58 |
| High degree (HCH) | 15453.7 | 0.32 |
| Betweenness centrality (BCH) | 15990.9 | 0.33 |
| Eigenvector centrality (ECH/HITS) | 13425.1 | 0.28 |
| NetShield | 12440.7 | 0.26 |

The maximum number of nodes that can be affected from t = 2 diffusion for slashdot is 47760

Table 7.

Performance of blocking methods for slashdot (t = 2 and β = 500) with three seed nodes

| Blocking method | Average number of nodes affected with blocking | Ratio of (average affected) to (MP number affected) |
|---|---|---|
| CBH | 3.0 | 6.3 × 10⁻⁵ |
| PBH | 3.1 | 6.5 × 10⁻⁵ |
| Random (RCH) | 43569.8 | 0.91 |
| High degree (HCH) | 23586.5 | 0.49 |
| Betweenness centrality (BCH) | 27183.4 | 0.57 |
| Eigenvector centrality (ECH/HITS) | 31882.1 | 0.67 |
| NetShield | 26952.7 | 0.56 |

The maximum number of nodes that can be affected from t = 2 diffusion for slashdot is 47760

Table 8.

Performance of blocking methods for wikipedia (t = 2 and β = 500) with two seed nodes

| Blocking method | Average number of nodes affected with blocking | Ratio of (average affected) to (MP number affected) |
|---|---|---|
| CBH | 2.0 | 4.2 × 10⁻⁴ |
| PBH | 2.0 | 4.2 × 10⁻⁴ |
| Random (RCH) | 4187.3 | 0.87 |
| High degree (HCH) | 1882.8 | 0.39 |
| Betweenness centrality (BCH) | 2337.2 | 0.49 |
| Eigenvector centrality (ECH/HITS) | 1885.6 | 0.39 |
| NetShield | 1876.6 | 0.39 |

The maximum number of nodes that can be affected from t = 2 diffusion for wikipedia is 4786

Table 9.

Performance of blocking methods for wikipedia (t = 2 and β = 500) with three seed nodes

| Blocking method | Average number of nodes affected with blocking | Ratio of (average affected) to (MP number affected) |
|---|---|---|
| CBH | 3.0 | 6.3 × 10⁻⁴ |
| PBH | 39.2 | 8.2 × 10⁻³ |
| Random (RCH) | 4361.3 | 0.91 |
| High degree (HCH) | 3171.6 | 0.66 |
| Betweenness centrality (BCH) | 3171.3 | 0.66 |
| Eigenvector centrality (ECH/HITS) | 3036.7 | 0.63 |
| NetShield | 3185.4 | 0.67 |

The maximum number of nodes that can be affected from t = 2 diffusion for wikipedia is 4786

We end this section by noting that the other blocking methods are sensitive to the number of seed nodes: increasing ns from 2 to 3 significantly decreases their effectiveness. Sect. 6.4 shows that CBH and PBH are sensitive to ns as well; however, for our methods this sensitivity appears only at larger values of ns.

6.4 Experimental evaluation of CBH and PBH

We carried out a parametric study to experimentally evaluate the performance of PBH and CBH. Table 10 lists the parameters and values used in the parametric study.

Table 10.

Parameters and values of the full-factorial parametric study

| Networks | Thresholds (t) | Numbers of seeds (ns) | Budgets on critical nodes (β) |
|---|---|---|---|
| epinions, slashdot, wikipedia | 2, 3, 5 | 2, 3, 5, 10, 20 | 0, 5, 10, 20, 50, 100, 500, 1000 |

For each network and each combination of parameter values, 100 diffusion instances were run.

The test plan consists of running 100 simulations of diffusion instances (one instance per seed node set) on each of the three networks for all combinations of t, ns, and β values shown in Table 10. Our simulator outputs for each node υ the time at which υ is affected. The heuristics use this as input data and calculate one set of β critical nodes for each iteration (i.e., diffusion instance). The simulations are then repeated, but now they include the critical nodes, so that the decrease in the total number of affected nodes caused by a critical set can be quantified. The main results from this full-factorial parametric study are outlined below. For brevity, when results for different networks and combinations of parameter values are similar, only results for one representative network and some specific combinations of parameter values are presented.
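The full-factorial test plan can be organized as a loop over the Table 10 grid. A minimal sketch: `simulate`, `choose_critical` and `seed_sets_for` are hypothetical callbacks (for instance, a threshold-diffusion simulator and an implementation of CBH or PBH), and running the unblocked simulation once per seed set and reusing its affect times for every budget β is a design choice of this sketch rather than a detail given in the text.

```python
from itertools import product

# Parameter values from Table 10.
NETWORKS = ("epinions", "slashdot", "wikipedia")
THRESHOLDS = (2, 3, 5)
NUM_SEEDS = (2, 3, 5, 10, 20)
BUDGETS = (0, 5, 10, 20, 50, 100, 500, 1000)


def parameter_grid():
    """All (network, t, ns, beta) combinations of the full-factorial study."""
    return list(product(NETWORKS, THRESHOLDS, NUM_SEEDS, BUDGETS))


def run_study(simulate, choose_critical, seed_sets_for, instances=100):
    """Average spread size for every grid point (hypothetical callbacks).

    simulate(network, seeds, t, critical)     -> {node: affect time}
    choose_critical(network, times, t, beta)  -> set of critical nodes
    seed_sets_for(network, ns)                -> list of seed sets
    """
    results = {}
    for net, t, ns in product(NETWORKS, THRESHOLDS, NUM_SEEDS):
        seed_sets = seed_sets_for(net, ns)[:instances]
        totals = dict.fromkeys(BUDGETS, 0)
        for seeds in seed_sets:
            # Phase 1: run without blocking; the affect times feed the heuristic.
            times = simulate(net, seeds, t, frozenset())
            for beta in BUDGETS:
                # Phase 2: rerun with the beta critical nodes chosen from `times`.
                critical = choose_critical(net, times, t, beta)
                totals[beta] += len(simulate(net, seeds, t, critical))
        for beta in BUDGETS:
            results[(net, t, ns, beta)] = totals[beta] / len(seed_sets)
    return results


# Stub callbacks: no spreading beyond the seeds and no critical nodes chosen.
stub = run_study(
    simulate=lambda net, seeds, t, critical: {v: 0 for v in seeds},
    choose_critical=lambda net, times, t, beta: frozenset(),
    seed_sets_for=lambda net, ns: [list(range(ns))] * 3,
)
```

The grid has 3 × 3 × 5 × 8 = 360 parameter combinations; dividing each averaged spread size by the MP bound of the corresponding network gives the spread fraction used in the figures.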

For reasons discussed in Sect. 6.3, all results are given as the average number of affected nodes over 100 diffusion instances, divided by the MP number of affected nodes, designated “(Ave. Affected)/(MP Affected).” We also refer to this ratio as the spread fraction. The denominator is the theoretical upper bound on spread size, derived from Theorem 6 (which is established in Sect. 7).

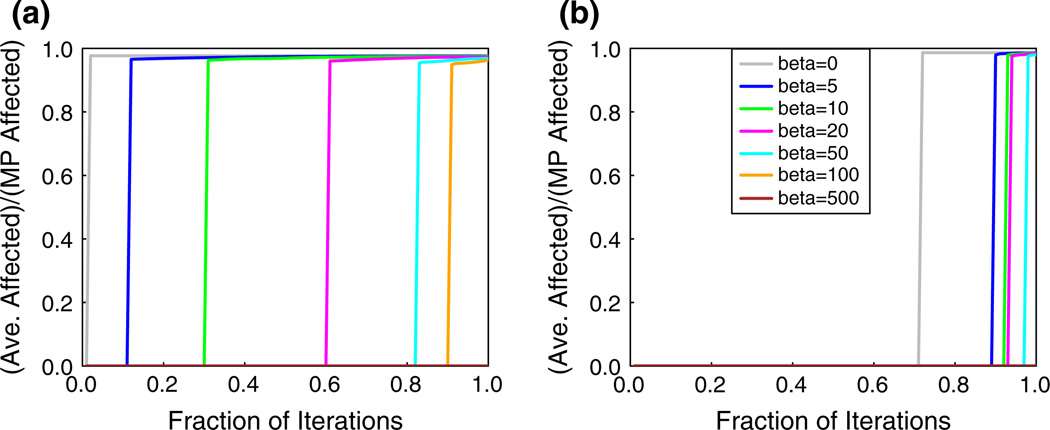

Effect of critical set budget and number of seed nodes on spread fraction

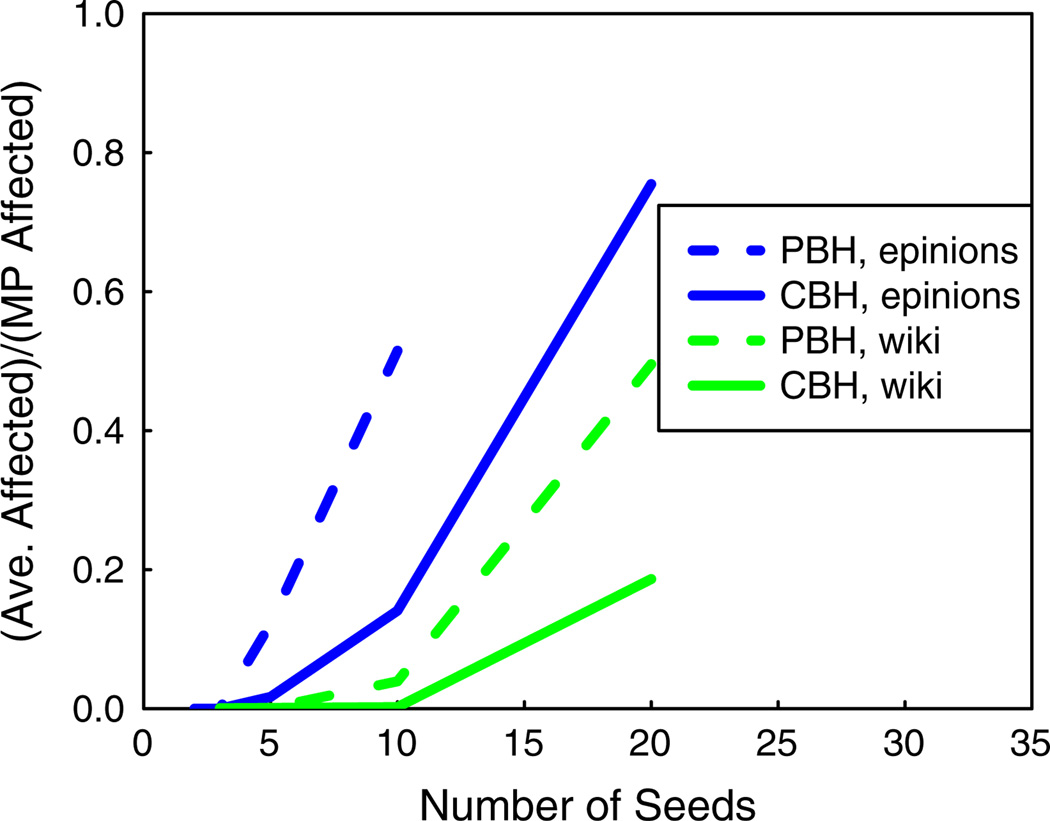

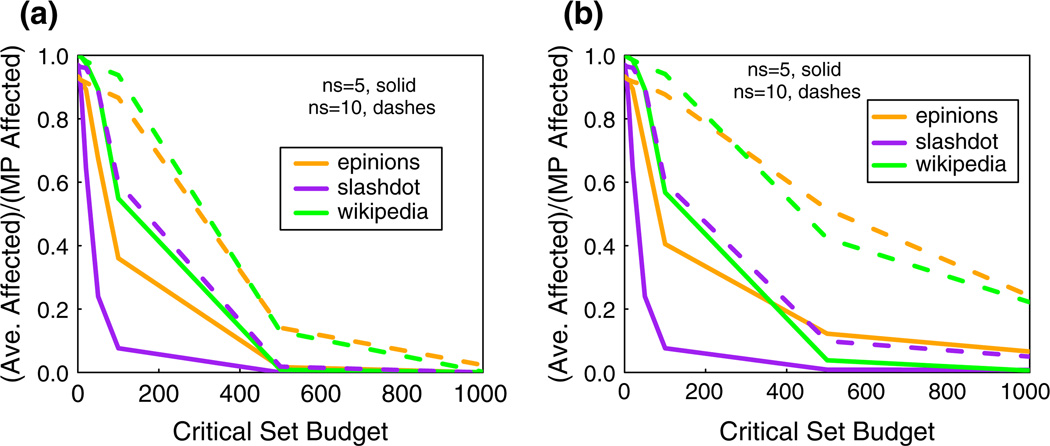

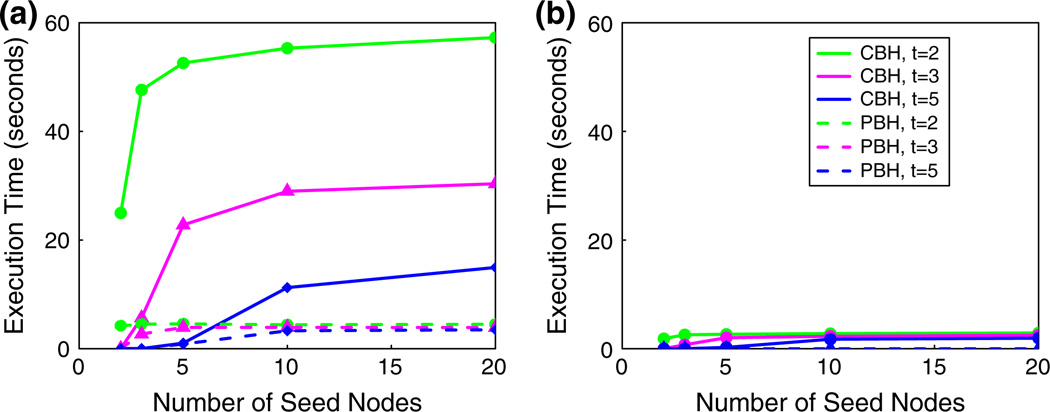

Figure 7 compares the spread fraction for CBH and PBH across all three networks for ns = 5 (solid curves) and ns = 10 (dashed curves). As one would expect, for both methods and a given value of ns, the spread fraction decreases as the budget β on critical nodes increases. The two plots show that doubling the number of seed nodes can produce a six-fold increase in the spread fraction: for slashdot with β = 100, increasing the number of seed nodes from 5 to 10 raises the spread fraction from about 0.1 to 0.6. The blocking performance of CBH and PBH is similar for slashdot; however, CBH significantly outperforms PBH for epinions and wikipedia when the number of seed nodes is increased to 10. As suggested in Sect. 6.3, this behavior may be attributed to the significantly smaller average clustering coefficient of slashdot compared with the other networks.

Fig. 7.

Comparisons of (a) CBH and (b) PBH for inhibiting diffusion in all networks, for two seed set sizes and t = 2. The ordinate is the average number of affected nodes (counting seed nodes) over 100 diffusion instances divided by the MP number of affected nodes.

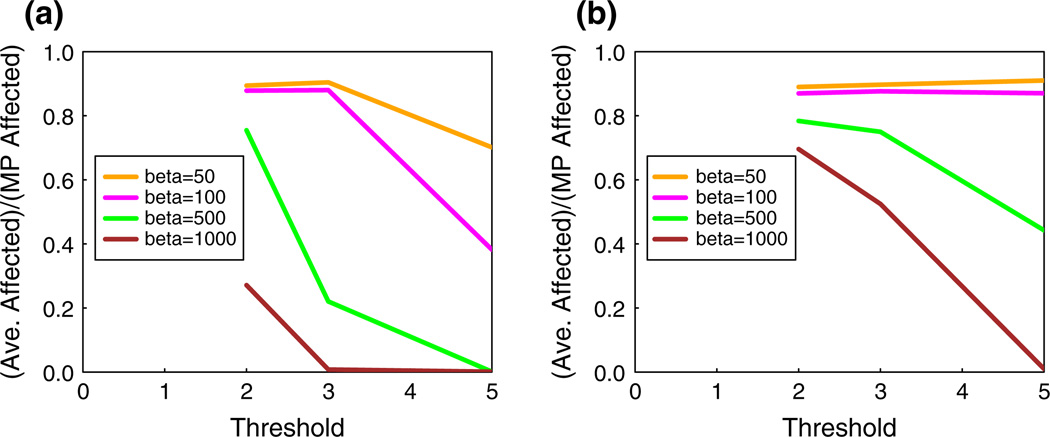

Effect of threshold and critical set budget on spread fraction

The effect of increasing the threshold on the spread fraction is shown in Fig. 8 for the epinions network using CBH with four values of β; the two plots correspond to ns = 20 and 50, respectively. In Fig. 8a, taking t = 2 and β = 1000 as a baseline case, we see that increasing the threshold from 2 to 3 allows the number of blocking nodes to be reduced by 500 while achieving a slightly lower spread fraction (0.22, compared with 0.27 for the baseline). If the threshold is increased to 5, the number of blocking nodes can be decreased by an order of magnitude, from 1000 to 100, while the spread fraction rises only from 0.27 to 0.38 (an increase of roughly 40 %). Thus, a higher threshold can significantly decrease the critical node budget needed to achieve a similar spread fraction. However, comparing Figs. 8a and 8b shows that increasing the number of seed nodes from 20 to 50 significantly increases the spread fraction.

Fig. 8.

Effect of threshold on spread fraction in the epinions network, with β = 50, 100, 500 and 1000. CBH is used in both plots, with (a) ns = 20 and (b) ns = 50.