Abstract

Background

Programs seek to expose trainees to research during residency. However, little is known in any formal sense regarding how to do this effectively, or whether these efforts result in more or better-quality research output.

Questions/purposes

The objective of our study was to evaluate a dedicated resident research program in terms of the quantity and quality of resident research peer-reviewed publications. Specifically, we asked: (1) Did residents mentored through a dedicated resident research program have more peer-reviewed publications in higher-impact journals with higher citation rates compared with residents who pursued research projects under a less structured approach? (2) Did this effect continue after graduation?

Methods

In 2006, our department of orthopaedic surgery established a dedicated resident research program, which consisted of a new research policy and a research committee to monitor quality and compliance with this policy. Peer-reviewed publications (determined from PubMed) of residents who graduated in the 6 years before the dedicated resident research program was established were compared with publications of residents who graduated during an equal period under the program. The data were assessed using descriptive statistics and regression analysis. Twenty-four residents graduated from 2001 to 2006 (before implementation of the dedicated resident research program); 27 graduated from 2007 to 2012 (after implementation of the dedicated resident research program). There were 74 eligible publications as defined by the study inclusion and exclusion criteria.

Results

Residents who trained after implementation of the dedicated resident research program published more papers during residency than did residents who trained before the program was implemented (1.15 versus 0.79 publications per resident; 95% CI [0.05,0.93]; p = 0.047) and the journal impact factor was greater in the group that had the research program (1.25 versus 0.55 per resident; 95% CI [0.2,1.18]; p = 0.005). There were no differences between postresidency publications by trainees who graduated with versus without the research program in the number of publications, citations, and average journal impact factor per resident. A regression analysis showed no difference in citation rates of the residents’ published papers before and since implementation of the research program.

Conclusions

Currently in the United States, there are no standard policies or requirements that dictate how research should be incorporated in orthopaedic surgery residency training programs. The results of our study suggest that implementation of a dedicated resident research program improves the quantity and to some extent quality of orthopaedic resident research publications, but this effect did not persist after graduation.

Level of Evidence

Level III, therapeutic study.

Introduction

Research is considered by many to be an integral part of any medical education program [1–3, 6–11]. The Accreditation Council for Graduate Medical Education recommends that orthopaedic surgery training programs encourage their residents to learn and participate in a basic research curriculum [1]. However, little is known in any formal sense about how to accomplish this effectively. Among the initiatives reported to have a positive effect on orthopaedic resident research are grant funding [10], a formalized research curriculum [8], hiring a medical editor [7], a dedicated research year [11], a mandatory research rotation [3], publishing in peer-reviewed journals [6], career choices [9], and primary authorship [2]. If research constitutes an important aspect of orthopaedic education, it should have some lasting effect on behavior in clinical practice, such as the ability to critically assess the literature and continued contribution to publications by orthopaedic residency graduates. Because emphasis has been placed on evidence-based medicine for clinical decision-making, exposure to research in training could be of critical importance. To our knowledge, there are no reports that thoroughly assess how establishing a more structured orthopaedic resident research program affects the quantity and quality of residents’ peer-reviewed publications.

Therefore, we overhauled our orthopaedic research curriculum to enhance and promote resident research at our institution. In 2006, the leadership of our department of orthopaedic surgery changed and we established a dedicated resident research program, which consisted of a new research policy and a research committee to more formally direct and monitor this policy. The research committee comprised select existing clinical and research faculty committed to work on this component of the training program. No basic scientist, grant writer, or research coordinator was hired; the existing faculty and staff organized this program without any personnel changes. Before the dedicated resident research program was initiated, our residency required each trainee to complete one research project during his or her residency for presentation at one of the department’s annual scientific symposia. The level of mentorship and publication of this material were left to the discretion of each resident and his or her mentor, as the department did not have any specific standards in place for research mentorship during that period. The dedicated resident research program policy introduced the following modifications: (1) the number of original research projects required per resident increased from one to two; (2) each project required at least one faculty mentor; (3) each project proposal had to be reviewed by the newly established departmental research committee and revised as needed; (4) the resident presented the project proposal to the entire departmental faculty for majority approval before it was accepted as an official project; (5) once the project was approved, the research committee monitored the project’s progress; and (6) project completion was defined as manuscript submission for peer-reviewed publication.

The objective of our study was to determine the effect of implementing a dedicated resident research program on the quantity and quality of resident research peer-reviewed publications. To test whether the program had a positive effect on the number and quality of resident publications, we asked (1) whether residents mentored through a dedicated resident research program have more peer-reviewed publications in higher-impact journals with higher citation rates compared with residents who pursued research projects under a less structured approach, and (2) whether this effect continues after graduation.

Materials and Methods

This retrospective study compared the publications of residents who graduated in the 6 years before the dedicated resident research program was established with those of residents who graduated in the first 6 years after its implementation. Research publication data from all residents between 2001 and 2006 (before implementation) and 2007 through 2012 (after implementation) were collected and compared. We assumed that the 2-year postgraduation period allowed enough time for the last graduating class to publish their research projects.

The objectives of the newly established research committee, composed of existing research-oriented faculty and laboratory and editorial staff, were to provide research ideas, review project proposals, assist with project mentorship, monitor research progress, and participate in manuscript preparation and submission to peer-reviewed journals. The committee also enforced residents’ and mentors’ compliance with the departmental research policy by establishing mandatory project progress reports and milestones. Our research policy mandated that each resident complete at least two research projects throughout training; project completion was defined as manuscript submission to a peer-reviewed journal before graduation. All residents acknowledged their awareness of the new research policy at the beginning of the first year of training by signing a compliance agreement. Lack of compliance with the policy was sanctioned with suspension of resident department-funded educational travel after the committee’s review and executive decision of the department chairperson.

A PubMed search was performed using the graduating resident’s name as the author search criterion to determine the number of publications from the department’s residents during the study period. This was cross-referenced with Ovid/Medline, ResearchGate, and our department’s archived publication list. We analyzed all the residents’ peer-reviewed publications. All searches were completed from February 15 to March 15, 2014. We considered the journal impact factor to be a surrogate measure of the quality of the journal in which a paper was published; we also tallied the number of citations for each publication that we retrieved from the Institute for Scientific Information (ISI), ResearchGate, Ovid/Medline, and PubMed. We collected the journal impact factor from the Journal Citation Reports. The ISI has published scientific journal quality characteristics in the Journal Impact Factor since 1964 [5]. The impact factor for a given year is the number of citations received in that year by source items the journal published in the two preceding years, divided by the number of source items published in those same two years [4]. Because the impact factor fluctuates yearly, it was assigned in accordance with the paper’s year of publication; 2013 and 2014 publications were assigned the 2012 impact factor (owing to the 2-year lag in the impact factor formula).
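The impact factor definition and the year-assignment rule described above can be sketched in a few lines. This is a minimal illustration, not the authors' procedure: the function names, the constant name, and the example journal counts are hypothetical, and only the 2012 cap for 2013 and 2014 publications comes from the text.

```python
def impact_factor(citations_to_prior_two_years: int, items_in_prior_two_years: int) -> float:
    """Impact factor for year Y: citations received in Y by items the journal
    published in Y-1 and Y-2, divided by the number of source items it
    published in Y-1 and Y-2."""
    if items_in_prior_two_years <= 0:
        raise ValueError("the journal must have published at least one source item")
    return citations_to_prior_two_years / items_in_prior_two_years


# Per the text, 2013 and 2014 publications were assigned the 2012 impact factor.
LATEST_AVAILABLE_IF_YEAR = 2012  # hypothetical constant name


def assigned_if_year(publication_year: int) -> int:
    """Year whose impact factor is assigned to a paper: the publication year,
    capped at the latest year for which a value was available."""
    return min(publication_year, LATEST_AVAILABLE_IF_YEAR)


# Hypothetical journal: 300 citations in year Y to 200 items from Y-1 and Y-2.
example_if = impact_factor(300, 200)
print(example_if, assigned_if_year(2009), assigned_if_year(2014))
```

Capping the year with `min` keeps the rule in one place, so a later update of the latest available impact factor year would need only a change to the constant.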

Other data collected included the number of publications, date of publication, type of publication (original versus nonoriginal), and whether the graduating resident pursued an orthopaedic subspecialty fellowship. An original study was defined as any research-based publication that was not a case report, review, or mere description of a new technique. Subspecialty fellowship data were collected from departmental alumni records, Internet business home pages, physician rating sites, and other cross-referencing material such as medical board records.

The inclusion criteria for publications were all peer-reviewed publications from 2001 to 2012 with a department resident as an author, published in journals with impact factors, and research done during residency and/or after graduating residency. Abstracts, letters, editorials, and publications in supplements, in nonphysician journals, or from medical school or college were excluded as were articles published in nonindexed journals for which the journal impact factor was not available.

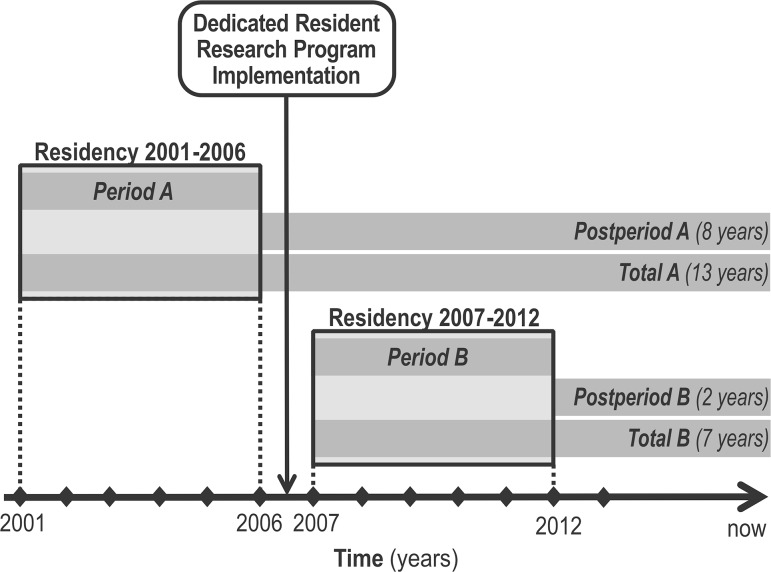

The publications that were tracked and compared included those published by orthopaedic residents who graduated from the training program before the dedicated research program was implemented (2001–2006 residency graduates) versus publications by orthopaedic residents who graduated after the dedicated research program was implemented (2007–2012) (Fig. 1). Period A included papers published during residency from 2001 to 2006. Postperiod A comprised research papers published by 2001 to 2006 graduates after 2006 until 2014. Period B included publications during residency from 2007 to 2012. Postperiod B consisted of papers published by 2007 to 2012 residency graduates after 2012 until 2014. The combined research publications from Period A and Postperiod A formed an aggregate Total A group; similarly, publication data from Period B and Postperiod B formed the aggregate Total B group.

Fig. 1.

The residency publication subgroups before and after implementation of the dedicated resident research program are shown.

There were 24 residency graduates identified before implementing the dedicated resident research program and 27 graduates identified after the research program was implemented. An Accreditation Council for Graduate Medical Education-approved increase in the number of residents (from four to five residents per year) occurred shortly before the dedicated resident research program was implemented. This difference was accounted for in our statistical analysis. The PubMed search revealed 95 publications for all residency program graduates from 2001 to 2012. A total of 74 publications met the inclusion and exclusion criteria and were analyzed (Table 1).

Table 1.

Overall publication and citation data

| Variable | Total A | Total B |
|---|---|---|
| Publications | 27 | 47 |
| Original | 20 | 38 |
| Nonoriginal | 7 | 9 |
| Citations | 208 | 147 |
| Average publications per resident | 1.1 | 1.7 |
| Average citations per resident | 8.7 | 5.4 |
| Average journal impact factor per resident | 0.7 | 1.4 |

Total A = research done by graduates from 2001 to 2006; Total B = research done by graduates from 2007 to 2012; original publication = any original paper that was not a case report, review, or mere description of a new technique.
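As a quick arithmetic check, the per-resident averages in Table 1 follow from the raw totals and the cohort sizes (24 and 27 graduates). The sketch below recomputes them; the dictionary layout and function name are illustrative only, and the average journal impact factor is omitted because it cannot be derived from the totals shown.

```python
# Totals from Table 1; cohort sizes (24 and 27 graduates) from the text.
cohorts = {
    "Total A": {"residents": 24, "publications": 27, "original": 20, "citations": 208},
    "Total B": {"residents": 27, "publications": 47, "original": 38, "citations": 147},
}


def summarize(cohort: dict) -> dict:
    """Per-resident averages and the share of original papers, to one decimal."""
    return {
        "publications_per_resident": round(cohort["publications"] / cohort["residents"], 1),
        "citations_per_resident": round(cohort["citations"] / cohort["residents"], 1),
        "original_share_pct": round(100 * cohort["original"] / cohort["publications"], 1),
    }


summary = {name: summarize(data) for name, data in cohorts.items()}
for name, values in summary.items():
    print(name, values)
```

The recomputed share of original papers per publication (74.1% and 80.9%) agrees with the Total A and Total B rows of Table 3.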

Statistical Method

Statistical analyses were used to evaluate any difference in the quantity or quality of publications between the residents before and after the dedicated resident research program was implemented. A subanalysis also was performed to evaluate whether the pursuit of an orthopaedic subspecialty fellowship affected the quantity and quality of publications. T-tests and chi-square or Fisher’s exact tests were used to compare the two groups for continuous and categorical variables, respectively. The sample size (24 residents before implementation of the research program and 27 after implementation) had sufficient power to detect a 20% change in publication output between residencies before and after implementation of the dedicated research program; this power calculation was performed post hoc. To control for the difference in followup between the two groups, we modeled different functional forms of the relationship between time and number of citations using regression models. Based on R², the log transformation of the number of citations was chosen and analyzed with a generalized estimating equation model to control for the cluster effect of residents, adjusted for period and fellowship. All tests were two-sided with an alpha of 0.05 and were performed using SAS 9.3 (SAS Institute Inc, Cary, NC, USA). Where confidence intervals are presented on two data sets being compared with t-tests, the 95% CIs are shown on the difference between the means of those sets.
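To illustrate the categorical comparison, the sketch below applies a Pearson chi-square test to the fellowship rates reported in the Results (81.5% versus 45.8%; p = 0.008). Two points are assumptions rather than statements from the paper: the counts 22 of 27 and 11 of 24 are inferred from the reported percentages and cohort sizes, and the uncorrected chi-square form is chosen here for illustration, since the text does not say which categorical test was applied to this comparison. The function name is hypothetical.

```python
import math


def chi_square_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Pearson chi-square test (no continuity correction) for the table
    [[a, b], [c, d]]. Returns (statistic, two-sided p value)."""
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    statistic = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # With 1 degree of freedom, the chi-square survival function
    # reduces to erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Assumed counts: fellowship yes/no after the program (22, 5) and before (11, 13),
# inferred from 81.5% of 27 and 45.8% of 24.
statistic, p_value = chi_square_2x2(22, 5, 11, 13)
print(round(statistic, 2), round(p_value, 3))
```

Under these assumptions the p value rounds to 0.008, which is consistent with the reported figure, although that agreement does not confirm which test the authors used.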

Results

Residents who trained under the dedicated resident research program published more papers than did residents who trained before the program was implemented (1.15 versus 0.79 publications per resident; 95% CI [0.05,0.93]; p = 0.047). Furthermore, journal impact factor of publications (1.25 versus 0.55 per resident; 95% CI [0.2,1.18]; p = 0.005) and the number of original papers as a fraction of all publications (74% [23/31] versus 38% [7/19]; p = 0.009) by residents who trained with the research program were higher compared with those of residents who trained before the program began (Table 2). We did not observe differences in the number of citations per resident (3.07 versus 5.83; 95% CI [−7.32,1.80]; p = 0.23) between the residency periods. In a second analysis, to remove skewing caused by the presence of residents who had no publications, the data were analyzed on a per-publication basis. In this analysis, residents who participated in the dedicated research program published papers in journals with higher impact factors than those trained before the research program (1.58 versus 1.03; 95% CI [0.08,1.08]; p = 0.032); however, residents trained before the program had a higher cumulative citation count per publication than those trained under it (7.37 versus 2.68; 95% CI [0.63,10.43]; p = 0.025) (Table 3).

Table 2.

Publication data per resident†

| Variable | Publications | Citations | Average journal impact factor | Original papers | Fellowship | Academic career |
|---|---|---|---|---|---|---|
| Period A | 0.79 ± 0.83 | 5.83 ± 10.13 | 0.55 ± 0.06 | 37.5% | | |
| Period B | 1.15 ± 0.82 | 3.07 ± 4.38 | 1.25 ± 1.02 | 74.1% | | |
| p value | 0.05* | 0.23 | 0.01** | 0.01** | | |
| Postperiod A | 0.33 ± 0.76 | 2.83 ± 7.59 | 0.38 ± 0.88 | 16.7% | 45.8% | 8.3% |
| Postperiod B | 0.59 ± 1.08 | 2.37 ± 6.26 | 0.78 ± 1.03 | 29.6% | 81.5% | 14.8% |
| p value | 0.33 | 0.81 | 0.19 | 0.28 | 0.01** | 0.67 |
| Total A | 1.13 ± 1.08 | 8.67 ± 13.14 | 0.74 ± 0.55 | 50.0% | | |
| Total B | 1.74 ± 1.61 | 5.44 ± 8.00 | 1.39 ± 0.95 | 74.1% | | |
| p value | 0.11 | 0.30 | 0.01* | 0.08 | | |

† Mean ± SD; Period A = publications during residency of 2001–2006; Period B = publications during residency of 2007–2012; Postperiod A = publications after residency of 2001–2006; Postperiod B = publications after residency of 2007–2012; Total A = publications during and after residency of 2001–2006; Total B = publications during and after residency of 2007–2012; *p < 0.05; **p < 0.01.

Table 3.

Citations and impact factor data per publication†

| Variable | Citations | Average journal impact factor | Original papers |
|---|---|---|---|
| Period A | 7.37 ± 7.98 | 1.03 ± 0.70 | 78.9% |
| Period B | 2.68 ± 3.94 | 1.58 ± 0.86 | 83.9% |
| p value | 0.03* | 0.03* | 0.66 |
| Postperiod A | 8.50 ± 10.38 | 1.72 ± 1.08 | 62.5% |
| Postperiod B | 4.00 ± 0.94 | 2.69 ± 1.02 | 75.0% |
| p value | 0.18 | 0.04* | 0.53 |
| Total A | 7.70 ± 8.57 | 1.23 ± 0.87 | 74.1% |
| Total B | 3.13 ± 4.61 | 1.94 ± 1.07 | 80.9% |
| p value | 0.01* | 0.01** | 0.50 |

† Mean ± SD; Period A = publications during residency of 2001–2006; Period B = publications during residency of 2007–2012; Postperiod A = publications after residency of 2001–2006; Postperiod B = publications after residency of 2007–2012; Total A = publications during and after residency of 2001–2006; Total B = publications during and after residency of 2007–2012; *p < 0.05; **p < 0.01.

There was no effect of the dedicated resident research program on postresidency publications in terms of their number (0.59 versus 0.33; 95% CI [−0.27, 0.78]; p = 0.33), proportion that were original (that is, not case reports, reviews, or mere descriptions of new techniques; 29.6% versus 16.7%; p = 0.28), citations (2.37 versus 2.83; 95% CI [−4.39,3.54]; p = 0.81), or journal impact factor (0.78 versus 0.38; 95% CI [−0.21,1.21]; p = 0.19) per resident (Table 2). The only continuing postresidency effect of implementing the dedicated resident research program was the higher average impact factor of the journal per publication (2.69 versus 1.72; 95% CI [0.03,2.02]; p = 0.042).

The pursuit of a postresidency fellowship had a strong positive effect on the number of publications after graduation (0.70 versus 0.06; 95% CI [0.24,1.04]; p = 0.003), citations (3.91 versus 0.17; 95% CI [0.54,6.46]; p = 0.014), and the impact factor of the journal (0.91 versus 0.06; 95% CI [0.38,1.33]; p = 0.001) (Table 4). Trainees who graduated with the dedicated research program continued education in a subspecialty fellowship more often compared with trainees who graduated without the research program (81.5% versus 45.8%; p = 0.008). A subgroup analysis showed that residents who pursued an orthopaedic subspecialty fellowship had a greater number of publications (1.15 versus 0.67; 95% CI [0.02,0.96]; p = 0.046) and a higher journal impact factor (1.12 versus 0.54; 95% CI [0.11,1.06]; p = 0.032) during their residency than those who did not. The higher number of publications (0.70 versus 0.06; 95% CI [0.24,1.04]; p = 0.003), proportion of original versus nonoriginal publications (33.3% versus 5.6%; p = 0.037), journal impact factor (0.91 versus 0.06; 95% CI [0.38,1.33]; p = 0.001), and cumulative citations (3.91 versus 0.17; 95% CI [0.54,6.46]; p = 0.014) on a per-resident basis persisted during postgraduate periods for residents who pursued fellowship training (Table 4).

Table 4.

Publication data per resident during residency and postresidency

| Variable | Fellowship Yes | Fellowship No | p value |
|---|---|---|---|
| During residency | | | |
| Publications per resident | 1.15 ± 0.83 | 0.67 ± 0.77 | 0.05* |
| Citations per resident | 4.94 ± 8.61 | 3.33 ± 5.73 | 0.48 |
| Journal impact factor per resident | 1.12 ± 1.00 | 0.54 ± 0.67 | 0.03* |
| Original paper | 64% | 44% | 0.19 |
| After graduation | | | |
| Publications per resident | 0.70 ± 1.01 | 0.06 ± 0.24 | 0.00** |
| Citations per resident | 3.91 ± 8.25 | 0.17 ± 0.71 | 0.01* |
| Journal impact factor per resident | 0.88 ± 1.31 | 0.06 ± 0.26 | 0.00** |
| Original paper | 33% | 6% | 0.04* |
| Total | | | |
| Publications per resident | 1.85 ± 1.52 | 0.72 ± 0.75 | 0.00*** |
| Citations per resident | 8.85 ± 12.36 | 3.50 ± 5.67 | 0.04* |
| Journal impact factor per resident | 1.00 ± 0.91 | 0.30 ± 0.34 | 0.00*** |
| Original paper | 70% | 50% | 0.16 |

* p < 0.05; **p < 0.01; ***p < 0.001.

A regression analysis for number of citations, adjusted for largely uneven periods of combined time during and after residency (13 years versus 7 years), showed no difference in the number of citations between the residents without versus with the dedicated research program (Table 5).

Table 5.

Regression analysis

| Parameter | Estimate | SD | Lower 95% confidence limit | Upper 95% confidence limit | Z-score | Probability > \|Z\| |
|---|---|---|---|---|---|---|
| Intercept | −2.87 | 1.87 | −6.54 | 0.79 | −1.54 | 0.12 |
| Citations in Total A versus Total B | 0.86 | 1.25 | −1.58 | 3.31 | 0.69 | 0.49 |
| Citations in Periods A + B versus Postperiods A + B | 1.19 | 1.07 | −0.91 | 3.28 | 1.11 | 0.27 |
| Years in Total A versus Total B | 0.72 | 0.20 | 0.33 | 1.12 | 3.59 | 0.00*** |
| Citation based on fellowship – yes versus no | 0.24 | 0.98 | −1.69 | 2.16 | 0.24 | 0.81 |

***p < 0.001.
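The columns of Table 5 are related by standard Wald formulas, and the sketch below recomputes the confidence limits and two-sided probabilities from the reported estimates and Z-scores. It assumes that the column labeled SD plays the role of a standard error and that a normal critical value of 1.96 was used; neither assumption is stated in the paper, and the function names are hypothetical. Small differences from the table are expected because the inputs are rounded to two decimals.

```python
import math

# (estimate, reported SD, lower limit, upper limit, Z-score, probability) from Table 5.
table5 = {
    "Intercept": (-2.87, 1.87, -6.54, 0.79, -1.54, 0.12),
    "Total A versus Total B": (0.86, 1.25, -1.58, 3.31, 0.69, 0.49),
    "Periods versus Postperiods": (1.19, 1.07, -0.91, 3.28, 1.11, 0.27),
    "Years": (0.72, 0.20, 0.33, 1.12, 3.59, 0.00),
    "Fellowship yes versus no": (0.24, 0.98, -1.69, 2.16, 0.24, 0.81),
}


def wald_interval(estimate: float, se: float, z_crit: float = 1.96) -> tuple[float, float]:
    """Wald 95% interval: estimate plus or minus z_crit times the standard error."""
    return estimate - z_crit * se, estimate + z_crit * se


def two_sided_p(z: float) -> float:
    """Two-sided normal probability of a value at least as extreme as |z|."""
    return math.erfc(abs(z) / math.sqrt(2))


recomputed = {}
for name, (est, se, lo, hi, z, p) in table5.items():
    new_lo, new_hi = wald_interval(est, se)
    recomputed[name] = (new_lo, new_hi, two_sided_p(z))
    print(f"{name}: [{new_lo:.2f}, {new_hi:.2f}] p = {recomputed[name][2]:.2f}")
```

Under these assumptions every recomputed limit falls within 0.02 of the tabulated value, which suggests the reported interval bounds and probabilities are internally consistent.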

Discussion

In a recent survey, 99% of residents agreed that research is an important part of clinical orthopaedics [10]. It is important to cultivate interest in research early during an orthopaedic surgeon’s career to encourage evidence-based practices and continued research after graduation. However, a unified approach for resident research education has not been established in the United States, although its merits are universally recognized. To our knowledge, there has been no description of the best way to determine whether research education has a positive effect on subsequent research productivity and how best to promote and monitor resident research publications. We found that research publications by our residents improved in quantity and quality after implementation of a dedicated resident research program.

There are several limitations to our study. First, the journal impact factor is not an accurate surrogate measure for journal quality, and papers published in journals with high impact factors are not necessarily better papers. Numerous problems have been identified with using the impact factor as a tool to measure the quality of a journal or the work published in it [4], including manipulation by journals, whether the journal is a general-interest or a subspecialty journal, whether the journal publishes review articles, and the size of the subspecialty or subspecialties represented by the journal in question. In our study, the impact factor for the year of publication was used to reflect the readability and difficulty of publishing in that journal at that particular time. Because the impact factor of many journals tends to trend upward, this could have falsely elevated the average impact factor of publications by 2007 to 2012 graduates. The second limitation is the discrepancy in the length of followup between the 2001 to 2006 graduates and 2007 to 2012 graduates. This could affect the total citation count and the citation count per publication, because articles that have been in print longer are likely to have garnered more citations. We evaluated this using a regression analysis that adjusted for time as a variable and found a positive trend but no statistically significant difference with the numbers available. This may have been the result of insufficient sample size to power that analysis, and/or the time that elapsed after implementation of the dedicated resident research program (2 years) was too short. The third limitation is that 2007 to 2012 graduates may have had research in submission or pending publication, which may have skewed the true number of publications by those residents. Together, these factors may have produced a falsely low number of publications or citations for 2007 to 2012 graduates.

Another limitation is that more residents pursued subspecialty fellowship in the dedicated resident research program group, and this seems to have had a positive effect on publication number, cumulative citation count, and impact factor at multiple times during residents’ research periods. Although our study was not designed to determine the causative relationship, it is plausible that pursuit of a fellowship or an academic career could be the result of the dedicated resident research program, and therefore increased the quantity and quality of the publications after graduation. Likewise, it also is possible that the higher incidence of subspecialty fellowships with the research requirement caused the subsequent increase in the quality and number of publications, and this may be a source of potential bias in our study. Furthermore, emphasizing the merits of research in orthopaedic training through the dedicated resident research program may affect applicants’ selection of our program and our faculty’s selection of appropriate residents. This could be a source of additional bias.

The overall number of publications, journal impact factors, and percentage of original papers improved for orthopaedic residency trainees after implementation of the dedicated resident research program. When the impact factor was determined on a per-publication basis, there was an increase for all publications for 2007 to 2012 graduates. While the research program increased the number of publications and the journal impact factor, when we controlled for the important confounding variable of time since publication, articles published since the program’s inception did not receive more citations. Merwin et al. [8] used the following criteria to compare residents’ productivity after a multifaceted change in their research curriculum: institutional review board protocol submissions, abstracts submitted to national meetings, and percentage of institution-required research training that was completed by faculty and residents. They used a 3-year period to evaluate their research curriculum, and neither quantity nor quality of publications was addressed. Robbins et al. [10] reported changes in research milestones for each training year, a built-in support structure, addition of an accredited bioskills laboratory, mentoring by NIH-funded scientists, protected research time, and increasing grant funding. Although the quantity of publications was addressed, the quality was not. Manring et al. [7] described an extensive change in their research curriculum, which included hiring an experienced medical editor, additional publishing requirements, faculty mentorship, and activities monitored by the editor and/or resident coordinator. They analyzed a 4-year period and included faculty and resident publications in their study. They lacked a comparison group and they did not evaluate publication quality.

While our dedicated research program improved publication quantity and quality for residents in training, that effect was not sustained after graduation on a per-resident basis. The only postgraduation effect of the dedicated research program was the higher journal impact factor on a per-publication basis. To our knowledge, these findings have not been previously reported. Pursuit of a subspecialty fellowship had an effect on residency graduates’ subsequent publication quantity and quality, regardless of their participation in the research program. However, because almost twice as many residents exposed to the research program chose fellowship training, one may speculate about the potential causative relationship between the research program and the decision to pursue fellowship. This may be an indirect effect of the dedicated research program and warrants further investigation.

Currently, in the United States, there are no standard policies or requirements that dictate how research should be incorporated in orthopaedic surgery residency training programs. The results of our study suggest that implementation of a dedicated resident research program improves the quantity and to some extent quality of orthopaedic resident research publications, but that this program did not appear to result in residents publishing more papers after graduation.

Acknowledgments

We thank Yong-Fang Kuo PhD from the Office of Biostatistics, The University of Texas Medical Branch for support with statistical analysis and Suzanne Simpson BA from our Department for editorial assistance.

Footnotes

Each author certifies that he or she, or a member of his or her immediate family, has no funding or commercial associations (eg, consultancies, stock ownership, equity interest, patent/licensing arrangements, etc) that might pose a conflict of interest in connection with the submitted article.

All ICMJE Conflict of Interest Forms for authors and Clinical Orthopaedics and Related Research® editors and board members are on file with the publication and can be viewed on request.

References

1. Accreditation Council for Graduate Medical Education. ACGME Program Requirements for Graduate Medical Education in Orthopaedic Surgery: Approved Focus Revision. Chicago, IL: ACGME; 2013.
2. Ahn J, Donegan DJ, Lawrence JT, Halpern SD, Mehta S. The future of the orthopaedic clinician-scientist: part II: Identification of factors that may influence orthopaedic residents’ intent to perform research. J Bone Joint Surg Am. 2010;92:1041–1046. doi: 10.2106/JBJS.I.00504.
3. Bernstein J, Ahn J, Iannotti JP, Brighton CT. The required research rotation in residency: the University of Pennsylvania experience, 1978–1993. Clin Orthop Relat Res. 2006;449:95–99. doi: 10.1097/01.blo.0000224040.77215.ff.
4. Garfield E. The Science Citation Index and Institute for Scientific Information’s Journal Citation Reports: Their Implications for Journal Editors. Available at: http://garfield.library.upenn.edu/papers/255.html. Accessed on Sept 26, 2014.
5. Hakkalamani S, Rawal A, Hennessy, Parkinson RW. The impact factor of seven orthopaedic journals: factors influencing it. J Bone Joint Surg Br. 2006;88:159–162. doi: 10.1302/0301-620X.88B2.16983.
6. Macknin JB, Brown A, Marcus RE. Does research participation make a difference in residency training? Clin Orthop Relat Res. 2014;472:370–376. doi: 10.1007/s11999-013-3233-y.
7. Manring MM, Panzo JA, Mayerson JL. A framework for improving resident research participation and scholarly output. J Surg Educ. 2014;71:8–13. doi: 10.1016/j.jsurg.2013.07.011.
8. Merwin SL, Fornari A, Lane LB. A preliminary report on the initiation of a clinical research program in an orthopaedic surgery department: roadmaps and tool kits. J Surg Educ. 2014;71:43–51. doi: 10.1016/j.jsurg.2013.06.002.
9. Namdari S, Jani S, Baldwin K, Mehta S. What is the relationship between number of publications during orthopaedic residency and selection of an academic career? J Bone Joint Surg Am. 2013;95:e45. doi: 10.2106/JBJS.L.01115.
10. Robbins L, Bostrom M, Marx R, Roberts T, Sculco TP. Restructuring the orthopedic resident research curriculum to increase scholarly activity. J Grad Med Educ. 2013;5:646–651. doi: 10.4300/JGME-D-12-00303.1.
11. Segal LS, Black KP, Schwentker EP, Pellegrini VD. An elective research year in orthopaedic residency: how does one measure its outcome and define its success? Clin Orthop Relat Res. 2006;449:89–94. doi: 10.1097/01.blo.0000224059.17120.cb.