Abstract

Objective: This study quantified the ease of use for patients and providers of a microcomputer-based, computer-assisted interview (CAI) system for the serial collection of the American College of Rheumatology Patient Assessment (ACRPA) questionnaire in routine outpatient clinical care in an urban rheumatology clinic.

Design: A cross-sectional survey was used.

Measurements: The answers of 93 respondents to a computer use questionnaire mailed to the 130 participants of a previous validation study of the CAI system were analyzed. For a 30-month period, the percentage of patient visits during which complete ACRPA questionnaire data were obtained with the system was determined.

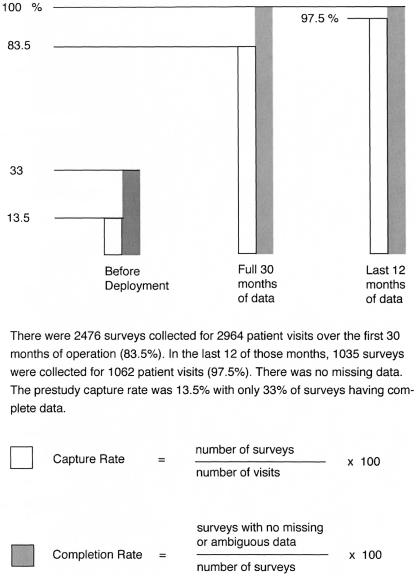

Results: The computer system provided cost and labor savings in the collection of 2,476 questionnaires for 2,964 patient visits over 30 months, for a capture rate of 83.5%. In the last 12 of those months, 1,035 questionnaires were collected for 1,062 patient visits (97.5% capture). There were no missing data. The prestudy capture rate was 13.5%, with 33% of surveys having complete data. Patients rated the overall usability of the system as good (mean = 1.34, standard deviation = 0.61) on a scale of 0–2, where 2 = good, but expressed difficulty with mouse manipulation and concerns about the privacy of the data entry environment.

Conclusion: The system proved easy to use and cost-effective for the (mostly) unaided self-entry of self-report data for each patient for each visit in routine outpatient clinical care in an urban rheumatology clinic.

Since at least 1949 with the publication of the Cornell Medical Index, the value of self-administered health surveys to augment the patient interview and improve the accuracy and completeness of diagnostic appraisals while conserving provider time and effort has been repeatedly documented.1,2,3,4,5,6,7 The Institute of Medicine, the Centers for Disease Control and Prevention, and the American College of Rheumatology (ACR) now advocate the serial collection of health surveys in routine clinical care.8,9,10 These data are usually not collected. Barriers to implementation, many identified five decades ago, have been catalogued as the logistics of acquisition, distribution, and collection of paper forms; difficulty understanding and completing surveys by patients; the potential disruption of clinic workflow; difficulty scoring and interpreting results; clinical relevance; and cost.6,11,12,13,14,15 Questionnaires have traditionally been completed with pen and paper. Improvements in the quality of care and savings in cost, time, and labor were sought with the deployment of a computer-assisted interview (CAI) system in an urban rheumatology outpatient clinic with the goal of obtaining the unaided self-entry of self-report data for each patient for each routine visit.

Background

Slack et al.,3 at the University of Wisconsin Medical School, introduced unmediated CAI systems for the collection of historical patient data into American medicine in 1966. Mayne et al.,4 at the Mayo Clinic, published the first usability study of such a system in 1968. Cost and technical limitations, as detailed by Weed15 in 1969, at the University of Vermont College of Medicine, restricted early widespread adoption of these mainframe- and minicomputer-based systems. With low-cost modern microcomputers, mobile devices, and Internet delivery, CAI system use is now broadly feasible, even for small practices with limited resources.16 To date, such use remains uncommon.17

The senior author (ADM-W) validated a computer version of the ACR Patient Assessment (ACRPA) questionnaire against its paper counterpart on microcomputers operating over a local area network (LAN) in her urban, mostly African American population.18 She has used the system continuously since 2001.

To be practical for use in routine care in an outpatient clinic setting, computer data collection and storage must be easy and must integrate into the workflow. Data retrieval must be simple and prompt. The data must be clinically relevant and, ideally, standardized and validated.2,3,4,15 Data must be presented in a way that is easy to interpret, preferably at the point of care so as to assist in clinical decision making. In a small office, all this must be accomplished with modest budgets and minimal computer expertise.17 These were the design and usability goals of this system.

In designing for usability, herein defined as ease of use, developers should consider the nature of the user or user groups, the tasks to be performed, and the environment in which the system will operate. “The aim is to create applications that will make users more efficient than if they performed the task with an equivalent manual system…. Users do not perform tasks in isolation nor is any task an isolated task.”19 The sociology of the user's environment is germane. This system has three user groups: the patients, clinic staff, and providers. This paper quantifies some usability characteristics for each.

Research Question or Hypothesis

The study had two hypotheses: (1) all patients, including those with little or no prior computer experience, could find it easy to use a thoughtfully designed CAI system to supply complete and accurate health survey data with little or no assistance on first and subsequent, relatively infrequent exposures during routine visits to outpatient clinics; and (2) such a CAI system could be affordable and practical from the perspective of clinicians in small, independent practices, even with only one provider.

Patients and Methods

Study Site

The study site, the outpatient clinic of the Wayne State University School of Medicine rheumatology faculty, in central Detroit, Michigan, was used because it was the practice location of the senior author. The faculty teaches medical students, residents, and rheumatology fellows and provides consultative and longitudinal rheumatologic care. The clinic (which has since relocated) received patients from throughout the greater metropolitan area. It housed five units, each with one consultation and two examination rooms, and hosted ten rheumatologists (including five rheumatology fellows), one nurse-manager, four medical assistants (MAs), five clerical assistants, and ten general internists. On a typical day, five physicians saw 100 patients. The rheumatologists had 10,000–11,000 annual visits. Most of the patients were African Americans of about age 50. Approximately 85% were female. One physician (ADM-W) and four MAs participated in this project.

Institutional review board approval was obtained.

Chart Review

Metrics regarding health survey use at the study site before the implementation of the CAI system were collected by using a retrospective chart review.

Systems Analysis, Computer Applications, Networking, and System Configuration

A database was designed using OpenBase, a relational database management system by Openbase International, Ltd. (Francestown, NH). Two database client applications were written by ArtfulMed.com (Detroit, MI) using the OpenStep developer system for NeXT computers (NeXT has since been acquired by Apple Computer, Cupertino, CA). The first, Questionnaire (Q), provides for the unaided patient self-entry of self-report data, in this case the ACRPA. The second, Questionnaire Viewer (QV), enables a provider to recall, display, and print survey reports at a computer workstation.

A hard-wired LAN was installed at the study site. Two client computers for patient use were placed in the reception area. They were housed in kiosks to shield the units from tampering and to present a simpler human–machine interface: a video monitor and a mouse. The provider placed a networked printer and a minicomputer with a password-protected automatic screen saver in a consultation room. A database server was placed in a private, secure location. No patient data were stored on the public machines. Patients interacted with the kiosks while seated. Wheelchairs were accommodated. Instructional posters were placed in prominent view near the units.

The Q Application

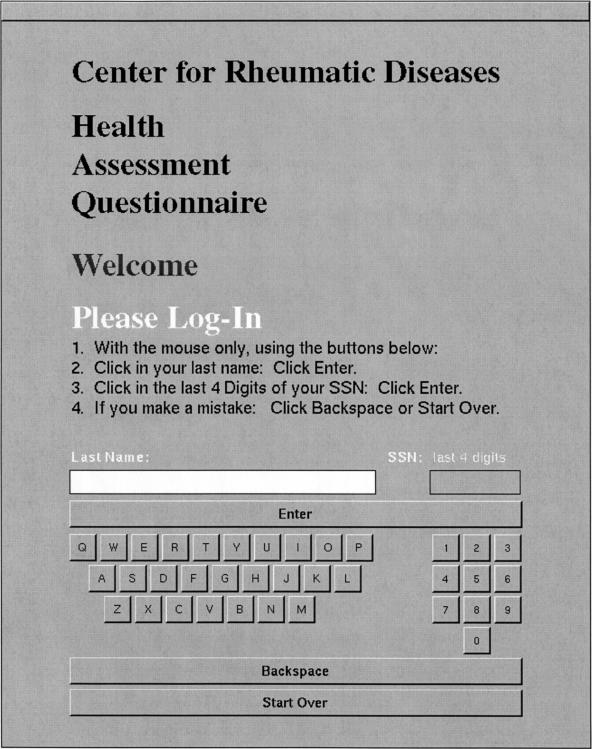

An effort was made to render the Q application self-explanatory with intuitive functionality. A high priority was accorded to screen organization, user orientation, ease of navigation, clarity of display, precision of selection, and appropriate user feedback. The patient was limited to this program, was exposed only to his or her own data, and could perform no other function with the computer. The application was configured so that all data were entered using the mouse. For simplicity, no physical keyboard was available, and no typing was needed or allowed. For data integrity, a log-in using an on-screen keyboard operated with the mouse was required: the patient's last name and the last four digits of his or her social security number were entered (Figure 1) and authenticated against clinic patient scheduling data. A single form-style data-entry screen containing all questions then appeared (Figure 2). No application navigation was needed. Controls for all possible actions were always visible, and the state of completion was always apparent at a glance. The survey could be completed in as little as 1–2 minutes.
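The log-in check described above can be sketched as follows. This is a minimal Python illustration, not the Q application's code (which was written with OpenStep); the `ScheduledVisit` record, its field names, and the rule that the visit must be scheduled for the current day are hypothetical assumptions about how clinic scheduling data might be represented.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass(frozen=True)
class ScheduledVisit:
    """Hypothetical row of clinic scheduling data (field names are assumptions)."""
    patient_id: str
    last_name: str
    ssn_last4: str
    visit_date: date


def authenticate(last_name: str, ssn_last4: str,
                 schedule: List[ScheduledVisit], today: date) -> Optional[str]:
    """Return the patient_id whose visit today matches the credentials, else None."""
    name = last_name.strip().casefold()
    digits = ssn_last4.strip()
    if len(digits) != 4 or not digits.isdigit():
        return None  # malformed entry: reject before searching the schedule
    matches = [v for v in schedule
               if v.visit_date == today
               and v.last_name.casefold() == name
               and v.ssn_last4 == digits]
    # Accept only a unique match so that an ambiguous log-in never opens
    # another patient's session.
    return matches[0].patient_id if len(matches) == 1 else None


if __name__ == "__main__":
    clinic_day = date(2002, 5, 6)
    schedule = [ScheduledVisit("P001", "Smith", "1234", clinic_day)]
    print(authenticate("smith", "1234", schedule, clinic_day))  # P001
```

Requiring exactly one match is a deliberately conservative choice in this sketch: two scheduled patients sharing a last name and the same final four digits would both be refused and referred to staff rather than risk exposing one patient's data to another.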

Figure 1.

Patient log-in screen for the Questionnaire application.

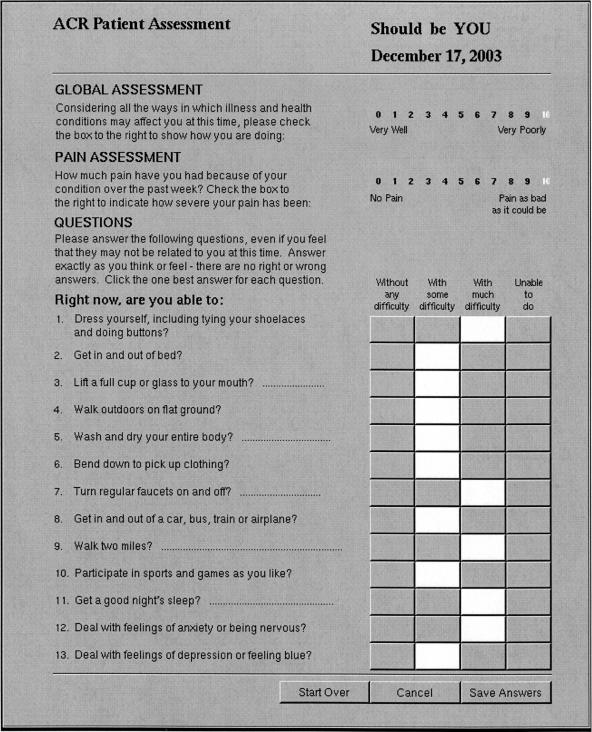

Figure 2.

Patient data entry screen for the Questionnaire application. ACR = American College of Rheumatology.

Patients clicked in responses to the 15 items of the ACRPA questionnaire, then clicked a “Save” button. Feedback was clear and immediate. Mistakes were easily corrected. The software precluded double or ambiguous answers. With any attempt to save answers, the program politely prompted for completion of missing items. Submission of incomplete surveys was not allowed. However, a patient could cancel a session at any time. With a successful save, data were automatically transferred across the LAN to the database server. A dialog box thanked the user for his or her efforts. With a save, cancellation, or the abandonment of a session, automatic, timed log-outs returned the log-in screen to the video monitor to retain privacy and maintain the system in a stable state. The program could run unattended indefinitely.
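The save rules above (one answer per item, a prompt for missing items, no submission of incomplete surveys, cancellation at any time) can be modeled in a few lines. The sketch below is a hypothetical Python model, not the original Q implementation; the class and method names and the generic response labels are assumptions, and only the item count (15) comes from the text.

```python
from typing import Dict, List, Optional

N_ITEMS = 15  # number of ACRPA items, per the text


class SurveySession:
    """Hypothetical model of one patient's session in a Q-style form."""

    def __init__(self, options_per_item: Dict[int, List[str]]):
        self.options = options_per_item
        self.answers: Dict[int, Optional[str]] = {i: None for i in options_per_item}
        self.saved: Optional[Dict[int, str]] = None

    def select(self, item: int, choice: str) -> None:
        # Each item holds exactly one answer: a new click replaces the old one,
        # so double or ambiguous answers cannot arise.
        if choice not in self.options[item]:
            raise ValueError(f"{choice!r} is not a response option for item {item}")
        self.answers[item] = choice

    def missing_items(self) -> List[int]:
        return [i for i in sorted(self.answers) if self.answers[i] is None]

    def save(self) -> List[int]:
        """Try to save; return the unanswered items (an empty list means saved)."""
        missing = self.missing_items()
        if not missing:
            self.saved = {i: a for i, a in self.answers.items() if a is not None}
        return missing

    def cancel(self) -> None:
        # Cancelling discards all answers without saving anything.
        self.answers = {i: None for i in self.options}
```

In a user interface, a non-empty list returned by `save()` would drive the polite prompt for the missing items, and only a successful save would trigger transfer to the database server.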

The QV Application

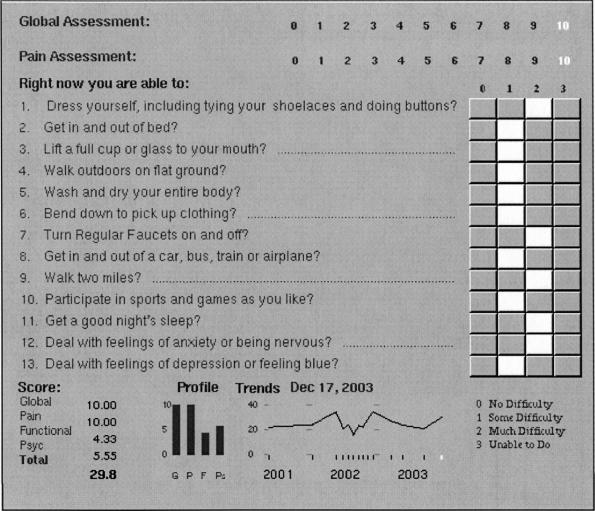

The automatically scored and graphically displayed data were recalled and reviewed by the provider on a minicomputer in a consultation room using the QV application (Figure 3). Results were instantly available to share with patients and for use in clinical decision making at the point of care. A hard copy of the report was printed immediately for inclusion in the patient's history because the provider's practice group, as of this writing, maintained its medical records on paper-based charts.

Figure 3.

Data presentation panel for the American College of Rheumatology Patient Assessment Questionnaire for the provider in the Questionnaire Viewer application.

ACRPA Validation Study

A study, described elsewhere,18 was conducted to validate Q, the computer version of the ACRPA, against its paper counterpart. In 2002, after completion of the validation study, each participant was mailed a Computer Use Questionnaire (CUQ) with a postage-paid return envelope and a brief cover letter. There were two follow-up mailings at four-week intervals.

Computer Use Questionnaire

The CUQ was a one-page paper survey comprising 18 closed questions concerning (1) sociodemographic variables, including work status, occupation, education, and yearly household income; (2) previous experience and level of comfort with computers or computer-like devices; (3) the helpfulness of the clinic staff and instructional posters; and (4) the ease of use of the CAI system. The ease-of-use questions were as follows: Were the screen instructions understandable? Was the mouse difficult to use? Was the log-in process confusing? How difficult overall was the system to use? For scoring, the polarities of the last three items were reversed. All were graded 0 (bad) through 2 (good). Usability was defined as the mean of the scores on these four questions. There was also a section for comments.
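As a worked illustration of the usability score, the sketch below reverses the three negatively worded items and averages the four. It assumes that each raw answer is already coded 0, 1, or 2 and that all four items were answered; the dictionary keys are hypothetical labels for the four CUQ questions.

```python
from statistics import mean
from typing import Dict

# (hypothetical key, True if negatively worded and therefore reverse-scored)
USABILITY_ITEMS = [
    ("instructions_understandable", False),
    ("mouse_difficult", True),
    ("login_confusing", True),
    ("overall_difficult", True),
]


def usability_index(raw: Dict[str, int]) -> float:
    """Mean of the four ease-of-use items after mapping each onto 0 (bad) .. 2 (good)."""
    scores = []
    for key, reverse in USABILITY_ITEMS:
        value = raw[key]
        if value not in (0, 1, 2):
            raise ValueError(f"{key} must be coded 0, 1, or 2")
        # Reversing polarity on a 0-2 scale maps 0 -> 2, 1 -> 1, 2 -> 0.
        scores.append(2 - value if reverse else value)
    return float(mean(scores))
```

For example, a respondent who found the instructions clear (2), the mouse somewhat difficult (1), and neither the log-in nor the system overall difficult (0, 0) scores (2 + 1 + 2 + 2) / 4 = 1.75.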

Focus Groups

All patient participants older than 65 years were invited to discuss the use of the CAI system in two focus groups supervised by an independent group leader. Their comments were recorded, transcribed, and analyzed.

Auditing System Use

For a 30-month period after the deployment of the CAI system, the percentage of patient visits in which surveys were collected with complete data was determined. The staff also noted the number of people who needed assistance.

A Clinimetrics Survey of African American Providers

At the 2003 National Medical Association national meeting, a “clinimetrics” survey was distributed to a convenience sample of physicians attending the Internal Medicine Rheumatology Update lectures and dinner. It characterized clinical documentation styles and familiarity with and patterns of use of health surveys.

Data Analysis

Data were entered by the first author (CAW) and checked by the third (ADM-W). The second author (TT) assisted with statistical analysis using the Statistical Package for the Social Sciences, version 11.5 for Microsoft Windows (SPSS, Chicago, IL). The data were characterized by:

Pre- and poststudy questionnaire capture and completion rates

Descriptive statistics of the study population including previous computer experience

A usability index calculated from CUQ usability questions

Correlations (Pearson product-moment) of usability against sociodemographic variables from the CUQ and selected medical variables as assessed in the paper version of the ACRPA obtained in the validation study

Selected comparisons with analysis of variance (Student-Newman-Keuls)

Cognitive analysis of two patient participant focus group discussions, the CUQ comments, impressions of the participating physician, informal interviews with MAs, and the physician responses to the clinimetrics survey
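For readers unfamiliar with the Pearson product-moment correlation used for the usability correlates, the sketch below computes r from paired observations, dropping any pair with a missing value, together with the usual test statistic t = r·√((n − 2)/(1 − r²)), which is referred to a t distribution with n − 2 degrees of freedom to obtain a p-value. The analysis itself was run in SPSS 11.5; this is an illustrative reimplementation, not the authors' procedure, and the handling of missing answers is an assumption.

```python
from math import sqrt
from typing import Optional, Sequence, Tuple


def pearson_r(x: Sequence[Optional[float]],
              y: Sequence[Optional[float]]) -> Tuple[float, int]:
    """Pearson r over pairs where both values are present; returns (r, n used)."""
    pairs = [(a, b) for a, b in zip(x, y) if a is not None and b is not None]
    n = len(pairs)
    if n < 3:
        raise ValueError("need at least three complete pairs")
    mean_x = sum(a for a, _ in pairs) / n
    mean_y = sum(b for _, b in pairs) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in pairs)
    sxx = sum((a - mean_x) ** 2 for a, _ in pairs)
    syy = sum((b - mean_y) ** 2 for _, b in pairs)
    if sxx == 0 or syy == 0:
        raise ValueError("a variable with no variance has no defined correlation")
    return sxy / sqrt(sxx * syy), n


def t_statistic(r: float, n: int) -> float:
    """t = r * sqrt((n - 2) / (1 - r^2)), with n - 2 degrees of freedom (|r| < 1)."""
    return r * sqrt((n - 2) / (1 - r * r))
```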

Results

Data Quantity and Quality Were Increased

Starting in 1999 and continuing until the onset of this project, the study site rheumatologists collected paper copies of the Health Assessment Questionnaire (HAQ)5 at the time of the initial visit for each patient, i.e., one time only. The ACRPA is derived from and is comparable with the HAQ. The chart audit method used to measure HAQ capture and completion rates was similar to the extrapolation scheme used by the Centers for Medicare & Medicaid Services, insurance companies, and health maintenance organizations (HMOs) in the United States.20

A review of ten randomly selected charts of established patients of all study site rheumatologists revealed that although nine had an HAQ present, six of those nine had missing or ambiguous data and none were scored. No chart had more than one questionnaire. Therefore, the capture rate for new patients was nine of ten, or 90%. Of those captured, the completion rate was three of nine, or 33%. Because the HAQ was collected only at the initial visit, only new-patient visits could yield a questionnaire, and new patients make up 15% of the rheumatology faculty's clinical schedule. An overall capture rate of 0.90 × 0.15 = 13.5% may therefore be estimated. The utility of these data in screening for functional impairment, monitoring disease progression, quality assessment, or casemix adjustment would be limited because they were not complete, scored, correlated with other data, or serially collected.

In its initial 30 months of operation, the CAI system collected 2,476 questionnaires for 2,964 patient visits, for a capture rate of 83.5%. In the last 12 of those months, 1,035 questionnaires were collected for 1,062 patient visits (97.5%). The completion rate was 100%, i.e., there were no missing or ambiguous data (Figure 4).
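The capture-rate arithmetic in the two preceding paragraphs can be checked directly. In the minimal sketch below, all counts come from the text; the variable names are illustrative only.

```python
def rate(numerator: float, denominator: float) -> float:
    return numerator / denominator


# Pre-study chart review (ten charts).
new_patient_capture = rate(9, 10)   # charts with an HAQ present
pre_completion = rate(3, 9)         # of those, HAQs with complete data
new_patient_share = 0.15            # new patients as a share of the schedule
# The HAQ was collected only at the first visit, so only new-patient visits count.
pre_overall_capture = new_patient_capture * new_patient_share

# Post-deployment audit of the CAI system.
capture_30_months = rate(2476, 2964)
capture_last_12 = rate(1035, 1062)

for label, value in [
    ("pre-study overall capture", pre_overall_capture),
    ("pre-study completion", pre_completion),
    ("CAI capture, 30 months", capture_30_months),
    ("CAI capture, last 12 months", capture_last_12),
]:
    print(f"{label}: {value:.1%}")
```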

Figure 4.

Effect of the computer-assisted interview system on health survey data quantity and quality.

Patients Rated the Overall Usability of the Computer System as Good

Demographics

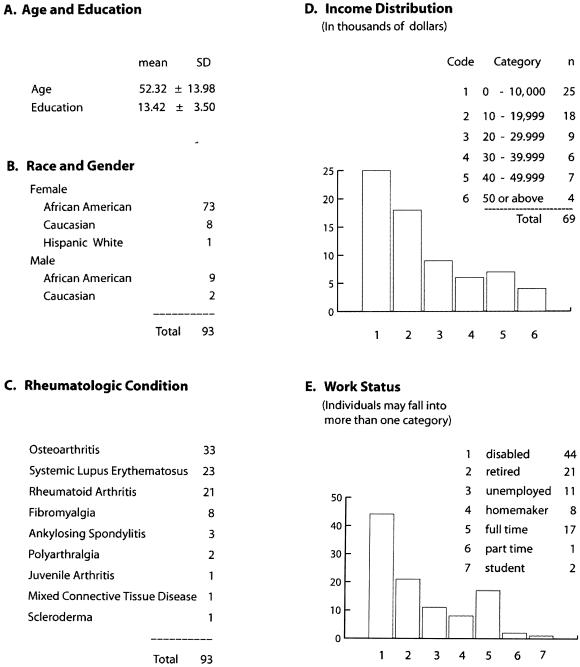

There was a good response to the CUQ. Ninety-three replies were received from 130 people (71.5%). The mean age was 52.32 years, with a standard deviation (SD) of 13.98. There were 82 women. The number of years of formal education ranged from five to 22 (mean = 13.42, SD = 3.50). Incomes were distributed among the 69 individuals who answered the question, in thousands of dollars per year, as < 10, 25 individuals; 10 to < 20, 18; 20 to < 30, nine; 30 to < 40, six; 40 to < 50, seven; and > 50, four. Of 85 responses on the issue of employment, there were 44 disabled individuals, 21 retired individuals, 11 unemployed individuals, eight homemakers, 17 full-time employees, two students, and one part-time employee (Figure 5).

Figure 5.

Study participant demographics.

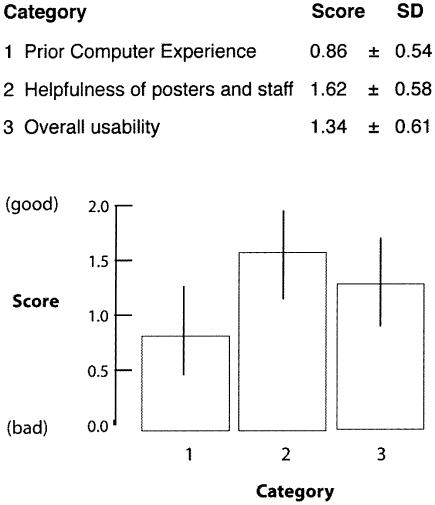

To reduce the number of variables, related items of the CUQ were aggregated into three summative subscales for previous experience with computers or computer-like devices, the helpfulness of staff and instructional posters, and the usability of the CAI system. A factor analysis showed good agreement with this classification. All scores ranged from 0 (not at all or never) to 2 (quite a bit or regularly), where 0 = bad and 2 = good.

Previous Computer Experience

The patients quantified their use of computers or computer-like devices as a little less than occasional (mean = 0.86, SD = 0.54). Those reporting never having used a computer were 20 of 57 (35%) patients for home and 18 of 83 (21.68%) patients for work or other public settings. Computers were reported in the homes of 39 of 91 (41.75%) patients.

Helpfulness

Posters and staff were rated as quite helpful (mean = 1.62, SD = 0.58).

Usability

Patients rated the overall usability as good (mean = 1.34, SD = 0.61).

There Were Sociodemographic and Medical Correlates of Usability

The usability of the CAI system correlated most strongly with experience with computers or computer-like devices (r = 0.38, p < 0.01). Those who were employed full or part time had more experience with computers or computer-like devices than those who were retired, disabled, or unemployed (F(3, 88) = 7.48, p < 0.01).

Although weak, the unexpected second and fourth strongest significant relationships with usability were for hand dysfunctions: trouble turning regular faucets on and off (r = 0.25, p = 0.02) and trouble lifting a glass to one's mouth (r = 0.22, p = 0.04).

Household income was the third-ranked significant correlate of usability (r = 0.23, p < 0.05); it also had a moderate and significant relationship with computer experience (r = 0.48, p < 0.05).

There were no statistically significant correlations between usability and age, years of formal education, occupation, work status, difficulty dressing, or patient global assessment (Table 1).

Table 1.

Correlates of Usability

| Significance | Item | r | p-value |
|---|---|---|---|
| Significant | Computer experience | 0.38 | 0.01 |
| | Hand dysfunction (turn regular faucets on and off?)* | 0.25 | 0.02 |
| | Household income | 0.23 | 0.03 |
| | Hand dysfunction (lift a full cup or glass to your mouth?)* | 0.22 | 0.04 |
| Not significant | Age | | |
| | Education | | |
| | Hand dysfunction (dress self?)† | | |
| | Occupation | | |
| | Work status | | |
| | Patient global assessment | | |

Patient usability was defined as the mean of the scores of four questions: The ease of understanding of the on-screen instructions, the ease of mouse use, the ease of the log-in process, and the overall ease of system use. Patients rated the overall usability of the system as good (mean = 1.34, standard deviation = 0.61) on a scale of 0–2; 2 = good.

* The correlation of these hand dysfunctions with usability may be due to decreased grip strength, which might make grasping a mouse easier than gripping a pencil.

† The deleterious effects of hand dysfunction on dressing might be “neutralized” by the adoption of arthritis-friendly clothing such as pullover shirts and Velcro closures, which may explain the lack of correlation between usability and dressing oneself.

The Focus Groups and CUQ Comments Illuminated Difficulty with Mouse Manipulation and Privacy

Of 45 eligible seniors, seven (15.6%) took part in two focus groups. All were African American, female, aged 65 years or older, and retired. The most frequent focus group and CUQ comments related to trouble with mouse manipulation. Many expressed anxiety about using a relatively unfamiliar system in the imperfect privacy of the patient reception area, where others might observe their answers and miscues. They expressed a strong desire for privacy in the data entry environment. No one objected to the storage of his or her information in electronic form.

Patient–Provider Communication Was Improved

The provider found that patient interviews were streamlined with ACRPA self-report data serving as a starting point for discussion. The diagrammatic displays were reviewed with patients (Figure 3). Most readily understood the graphs. Initially, some patients complained about having to repeatedly complete questionnaires. Acceptance of the system grew with the perception that it enhanced the quality of care. Patient–physician communication was enhanced.

The Office MAs Informally Rated the Usability of the CAI System as Good

The MAs embraced the system and readily assisted patients in completing questionnaires as needed. They no longer had to track an inventory of paper forms or distribute, explain, and collect them. The MAs directed patients to the kiosk computer workstations, whose physical presence served as a prompt. There were no system crashes or other difficulties. During the 31st month of operation, the MAs observed that of the 71 patients seen, 21 (29.6%), mostly elderly patients on first exposure, required assistance, in decreasing order of frequency, for difficulty with mouse manipulation, difficulty with log-in, verbal question administration, and general guidance with computer conventions and motifs. The MAs estimated the mean completion times to be 4 minutes for those who required assistance and 2 minutes for those who did not.

The Clinimetrics Survey Disclosed a Lack of Understanding of the Use of Health Surveys by Physicians

Thirty-three surveys were returned from an estimated pool of 70 physicians. All respondents were African American. There were 24 replies to an item regarding the use of health surveys: 16 reported never using them, four used them for 1%–25% of office visits, two for 26%–75% of visits, and two for 76%–100%. Each respondent could declare zero or more reasons for the nonuse of health surveys. Fifteen (68%) of the 22 who used health surveys for 25% or fewer visits cited a lack of previous exposure and training, four (18%) worried about disruption of clinic workflow, and four (18%) thought that some health surveys might be too long for patients to fill out.

Cost

In this study, our equipment cost was $5,000 for two patient workstations, one physician workstation, and one server. All components were used (second-hand). The color video monitors measured 20 inches diagonally. Two kiosks and one computer cart cost $500. A licensed copy of the relational database management system (Openbase) was $900. Systems analysis, LAN installation, database design, application programming for Q and QV, and software installation and configuration were performed by the first author (CAW). Total expenditures were $15,000. The CAI collected 2,476 questionnaires in 30 months. The hardware cost per survey was $15,000 divided by 2,476, which equals $6.06.

Discussion

Previously Documented Usability Concerns

There are numerous reports of the successful deployment of patient CAI systems in clinical medicine.16,21,22 With respect to computer use in general, the literature reveals that user performance suffers with lack of computer experience and with age23; seniors have more negative attitudes about computers, but those attitudes can be positively influenced24; seniors have more difficulty with mouse manipulation25; work status correlates positively with computer use for all ages26; and patients worry about the confidentiality of personal data.27 Providers lack training in the use and utility of health surveys in clinical care and often perceive them to be difficult to use.6,11,28 Our findings echo this. All but the privacy concerns are likely to dissipate with increasing exposure of future generations to computer technology. Privacy concerns are likely to increase.27

Of Mice and Screens

The larger video displays afforded by personal computers versus hand-held devices allow the presentation of more information with optimal font sizes and white space on fewer screens so that less scrolling and application navigation are needed. Fewer gestures are needed to record a given amount of information. Better screen design increases readability and decreases the memory, orientation, and navigation burdens for users.29

With patient data entry via a pointing device (mouse), no typing is needed. We speculate that the hand dysfunctions that correlated positively with usability reflect diminished grip strength: the poorer the grip strength, the more difficulty one has holding a pen or pencil, and the greater the relative usability of a mouse. Grip strength was not measured in this study. The lack of correlation of usability with trouble dressing might be explained by the prevalence of “arthritis-friendly” clothing (pullover shirts, elastic waistbands without fasteners or belts, and Velcro closures) as well as by assistive devices such as button hooks, which lessen the effects of poor grip strength.

Given the degree of difficulty that study participants expressed with the mouse, alternative pointing devices should be considered. Our computer equipment was manufactured between 1991 and 1993. Newer mice with better ergonomics would probably go far in eliminating mouse-related concerns.

Touch screens are attractive. However, they allow less precise selection—a finger as a pointing device is convenient but crude—and so require wider spacing of screen elements. More screen “real estate” is needed to present a given amount of information. This may translate into more entry screens and application navigation. Touch screens require larger arcs of motion for responses and may not be any less difficult to use for people with upper extremity dysfunction, especially of the shoulder and elbow.

The surfaces of computer equipment used in patient care areas need to be cleaned and disinfected regularly, ideally after each use, to prevent them from acting as infectious vectors.30 An infrared mouse is easier and cheaper to clean and disinfect between patients, and to replace periodically, than is a keyboard or touch screen (which can also smudge).

Privacy

After analysis of the focus group and CUQ comments, the patient workstations were relocated from the reception area to examination rooms. The privacy concerns associated with the data entry environment disappeared.

The Internet

Web-based, health-related CAI systems are numerous. For instance, HAQs and tender and swollen joint counts may be completed at the Johns Hopkins University Rheumatology Web site31 and the Medical Outcomes Studies Short Form-36 at www.sf-36.org.32

Web-based access to the CAI system from outside the clinic might be convenient. However, Web-entered self-report data may not be linked to a specific clinical encounter and its diagnostic and therapeutic components, thus weakening any associations. Moreover, the medicolegal obligation conferred by an information transfer, unlinked to a phone or clinical encounter, is unclear. Web access introduces the additional data integrity concerns associated with Web hosting (transmission security, server security, user authentication) and/or the use of an application service provider (persistence of data, data ownership, data availability, confidentiality).33 System complexity and administrative burdens are increased.

Literacy

Nearly half of adult Americans have limited literacy.34 Low health literacy, in particular, limits communication between patients and providers.35 For health literature to be easily readable by 90% of adult Americans, it must be written at about the third-grade level.36 The ACRPA was chosen in part because it is easy for patients to understand.37 Readability statistics generated with the Flesch-Kincaid formula by Microsoft Word (Microsoft Corporation, Redmond, WA) show it to be written at the second-grade level (actually grade 1.9), suggesting that this population, based on the number of years of formal education, should have had little difficulty understanding it. Reading levels and health literacy were not measured in this study.
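The Flesch-Kincaid grade level is computed as 0.39 × (words per sentence) + 11.8 × (syllables per word) − 15.59. The sketch below implements that formula with a crude vowel-group syllable counter and a naive sentence splitter; both heuristics are assumptions, and Microsoft Word applies its own counting rules, so scores from this sketch will not exactly match the grade 1.9 that Word reported for the ACRPA.

```python
import re


def count_syllables(word: str) -> int:
    """Rough heuristic: count vowel groups, drop a silent final 'e'; at least one."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text: str) -> float:
    """Flesch-Kincaid grade level using the heuristic counts above."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return (0.39 * len(words) / len(sentences)
            + 11.8 * syllables / len(words)
            - 15.59)
```

Very short sentences of one-syllable words can yield negative grades (six one-syllable words in one sentence give 0.39 × 6 + 11.8 − 15.59 = −1.45), which is one reason readability tools often clamp or round the result.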

General Disability

Low general and low health literacy in combination with low computer access and low computer literacy in some segments of society,26,38 musculoskeletal dysfunction, visual impairments, cognitive dysfunction, and malaise secondary to illness guarantee that some fraction of patients will always require assistance.39 Thus, there is an inherent ceiling effect for patient usability for self-administered questionnaires whether they are computer- or paper-based.

Sociodemographics

Low incomes and low educational attainment have been correlated with worse outcomes in rheumatology in some studies.40,41,42 Other studies failed to demonstrate those relationships.43,44,45 We were surprised that we did not find a statistically significant relationship between formal education and usability in this study or, in contrast to others,23 a statistically significant correlation between usability and age.

The least economically advantaged patients were among those who rated this system least usable. These individuals, who may be at greatest risk of poor outcomes and so most in need of the careful management that the collection of self-report data can facilitate, are the least able to provide those data. Care must be taken to include them. African American providers (all of the clinimetrics survey respondents were African American) are the most likely to care for African American patients,46 who made up the majority of the study participants. In an effort to address the health care disparities of this population, the clinimetric practices of these providers assume particular importance. Some degree of provider assistance for patient data entry in a CAI system will probably always be needed to ensure universal inclusion and to help bridge the “digital divide.”26,38 Pincus and Wolfe28,47 have emphasized the importance of a friendly and helpful clinic staff in the collection of self-report data. That our participants rated the helpfulness of the MAs and posters higher than the usability of the computer system underscores this point (Figure 6). A CAI system is a valuable adjunct in the collection of self-report data. It is not a panacea.

Figure 6.

Computer use questionnaire scores.

Systems Issues

In this implementation, Q, the patient data entry application, was run on microcomputers over a LAN. A mouse was used for input and a standard video monitor with a graphical user interface for output. Privacy considerations in the patient reception area precluded voice recognition input or synthesized voice or sound playback. This platform was selected instead of mobile devices such as personal digital assistants or tablet computers because the equipment is more robust, easier to secure, easier to clean, and less expensive and does not have to be dispensed, tracked, or manually synchronized. With a hard-wired LAN, fast, secure, and stable data transmission is achieved. Less system administration is needed for the management of security and encryption than would be the case with wireless devices or Web-based services. This decreases the financial and administrative burden of the provider organization while adhering to the provisions of the Health Insurance Portability and Accountability Act of 1996.48

Cost

Pincus47 estimated in 1996 that the cost of acquisition for each paper questionnaire in a university rheumatology clinic was $3.50 without, and $4.50 with, transcription into a computer.7 He found that approximately 25% of his patients required assistance, as did we. An anonymous author, in 2001, reported a cost of $0.20 per questionnaire for an Internet-based system.49 That author did not consider equipment costs.

The hardware cost per survey depends on how expenditures are shared among computer-based clinic functions (e.g., patient records, billing, scheduling) and distributed over the life of the equipment.15 Consider an office with the same outlay as this project ($15,000) and 4,500 patient visits (i.e., surveys) per year, a typical workload for a solo rheumatologist.50 If the outlay is spread over all surveys collected to date, the per-survey hardware cost is $15,000 ÷ 4,500 = $3.33 after the first year, $1.67 after the second, $1.11 after the third, 83¢ after the fourth, and 67¢ after the fifth, continuing to decline asymptotically toward zero with each passing year. If the equipment is shared with other computer applications, or if more patients are seen per year, the unit costs are proportionately lower.
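The amortization figures above can be reproduced with a few lines of arithmetic. The Python sketch below assumes, as the text does, that the whole outlay is charged to surveys by default, that annual volume is constant, and that there are no maintenance or replacement costs; the function name and its `share` parameter are hypothetical, added only to illustrate the point about equipment shared with other applications.

```python
def per_survey_hardware_cost(outlay: float, surveys_per_year: int,
                             years: int, share: float = 1.0) -> float:
    """Average hardware cost per survey after `years` years of use.

    `share` is the (hypothetical) fraction of the outlay attributed to the
    survey system when the same equipment also serves billing, scheduling, etc.
    """
    if outlay < 0 or surveys_per_year <= 0 or years <= 0:
        raise ValueError("outlay must be non-negative; volume and years positive")
    if not 0.0 <= share <= 1.0:
        raise ValueError("share must be between 0 and 1")
    # Spread the attributed outlay over every survey collected so far.
    return (outlay * share) / (surveys_per_year * years)


if __name__ == "__main__":
    # Example figures from the text: $15,000 outlay, 4,500 surveys per year.
    for year in range(1, 6):
        cost = per_survey_hardware_cost(15_000, 4_500, year)
        print(f"after year {year}: ${cost:.2f}")
```

Run as a script, this prints $3.33, $1.67, $1.11, $0.83, and $0.67 for years one through five. Setting `share=0.5` halves every figure, which is the proportional reduction described for shared equipment.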

The operational cost per survey, consisting of system administration and electricity, is negligible. This hardware/software platform (Apple OS X, built on a UNIX foundation) proved highly stable; we experienced no system crashes or downtime (100% availability). System administration duties were minimal, consisting mainly of daily database backup, which can be automated.
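Because the daily backup is the main recurring administrative task, it is a natural candidate for automation. The source does not name the database engine, so the Python sketch below assumes, hypothetically, a single SQLite database file. It uses the standard library's `sqlite3.Connection.backup` method to write a consistent dated copy and then keeps only a fixed number of recent copies; the file naming scheme and the 30-copy retention default are illustrative choices, not details of the system described here.

```python
from __future__ import annotations

import sqlite3
from datetime import date
from pathlib import Path


def backup_database(db_path: Path, backup_dir: Path,
                    today: date | None = None) -> Path:
    """Write a consistent copy of a SQLite database to backup_dir.

    The copy is named <stem>-YYYY-MM-DD.sqlite (hypothetical convention);
    running twice on the same day overwrites that day's copy.
    """
    today = today or date.today()
    backup_dir.mkdir(parents=True, exist_ok=True)
    target = backup_dir / f"{db_path.stem}-{today.isoformat()}.sqlite"
    src = sqlite3.connect(db_path)
    dst = sqlite3.connect(target)
    try:
        # SQLite's online backup API yields a consistent snapshot even if
        # the application still has the source database open.
        src.backup(dst)
    finally:
        dst.close()
        src.close()
    return target


def prune_old_backups(backup_dir: Path, stem: str, keep: int = 30) -> list[Path]:
    """Delete all but the `keep` newest dated copies; return the deleted paths."""
    if keep < 1:
        raise ValueError("keep must be at least 1")
    # ISO-8601 dates in the file names sort chronologically as plain strings.
    copies = sorted(backup_dir.glob(f"{stem}-*.sqlite"))
    stale = copies[:-keep]
    for path in stale:
        path.unlink()
    return stale
```

Scheduled once a day with a system scheduler such as cron or launchd (both available on OS X), a script calling these two functions would reduce routine backup to confirming that new dated files keep appearing.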

Data Management

To analyze groups of responses from one or more patients, or to correlate them with other health measures, the data usually must be entered into a computer. Direct computer entry improves survey data quality by disallowing missing, ambiguous, and double answers, and it eliminates the need for transcription along with its delay, cost, and errors. The digital data can be scored, displayed, and printed immediately. A patient CAI system is operated most efficiently and economically as part of an electronic medical record (EMR). Further benefits accrue when self-report data are dynamically integrated with previously stored information to construct trend lines and profiles that can assist interpretation and clinical decision making at the point of care (▶). The data may also be incorporated into an EMR and made available for secondary uses such as quality assessment, the compilation of outcomes, and the evaluation of treatment effectiveness.
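The two data-quality points above, rejecting missing, ambiguous, or double answers at the time of entry and deriving trend lines from stored scores, can be sketched in Python as follows. The item identifiers and 0–3 response codes are hypothetical and do not reproduce the ACRPA items or its scoring; the trend is an ordinary least-squares slope of a score against days elapsed, one simple way among several to summarize change across visits.

```python
from __future__ import annotations

from datetime import date


class IncompleteResponse(ValueError):
    """Raised when a questionnaire cannot be accepted as entered."""


def validate(items: dict[str, list[int]],
             answers: dict[str, list[int]]) -> dict[str, int]:
    """Return exactly one accepted code per item, or raise listing every problem.

    `items` maps a (hypothetical) item id to its allowed response codes;
    `answers` maps an item id to the codes the patient selected.
    """
    problems: list[str] = []
    accepted: dict[str, int] = {}
    for item, allowed in items.items():
        chosen = answers.get(item, [])
        if not chosen:
            problems.append(f"{item}: missing")
        elif len(chosen) > 1:
            problems.append(f"{item}: more than one answer")
        elif chosen[0] not in allowed:
            problems.append(f"{item}: invalid code {chosen[0]}")
        else:
            accepted[item] = chosen[0]
    if problems:
        raise IncompleteResponse("; ".join(problems))
    return accepted


def trend_slope(visits: list[tuple[date, float]]) -> float:
    """Least-squares slope of a score per day across visits (any order)."""
    if len(visits) < 2:
        raise ValueError("need at least two visits")
    start = min(day for day, _ in visits)
    xs = [(day - start).days for day, _ in visits]
    ys = [score for _, score in visits]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("visits must fall on at least two different days")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx
```

In an on-screen questionnaire, a double selection would usually be prevented by the interface itself (for example, with radio buttons); the explicit check records what the program, rather than the patient, must guarantee before a record is stored.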

Study Limitations

Several factors that may affect patient usability of this system were not measured, including reading level, health literacy, cognitive impairment, visual impairment, and grip strength. The reasons why some study site patients did not participate in the validation study, the reasons why some validation study participants did not respond to the CUQ, and many of the demographic characteristics of those who chose not to participate are unknown.

The introduction of patient CAI systems into routine clinical care changes the workflow. Full consideration of business practice reengineering, and of the implications and sequelae of deploying an EMR, partially or otherwise, is beyond the scope of this paper. Nevertheless, because computer-based, self-administered questionnaires are designed not to increase, and possibly even to decrease, the time spent by providers and staff, there should be little or no cost associated with workflow changes.

Conclusion

This microcomputer-based CAI system for the administration of health surveys in routine clinical care provides higher-quality data than paper-based questionnaires, with the potential for decreased cost and administrative burden. It makes practical, convenient, precise, and reliable a process that is otherwise tedious, error-prone, and impractical in many settings. Clinicians, even in small offices, can capture patient self-report data directly into computer storage at every routine clinical encounter. Key to the wider adoption of health surveys in routine clinical care are educating physicians about the utility of self-report data; carefully selecting or constructing standardized, validated, reliable, and clinically relevant questionnaires; and increasing the availability of affordable, easy-to-use computer systems.6,7,11,13 Such systems must automate the collection, storage, scoring, recall, and display processes and integrate them into the workflow without increasing the burden on patients, health care workers, or providers.3,4,15 This CAI system proved stable, self-explanatory, and acceptable to patients. It was shown to be easy to use for patients, staff, and providers alike for the (mostly) unaided self-entry of self-report data for each patient at each routine outpatient visit in an urban rheumatology clinic.

Supported by the Michigan Center for Urban African American Aging Research, which is funded by NIH grant number AG15281.

References

- 1. Brodman K, Erdmann A, Lorge I, Wolff H, Broadbent T. The Cornell Medical Index. An adjunct to medical interview. JAMA. 1949;140:530–4.

- 2. Collen M, Rubin L, Neyman J, Dantzig G, Baer R, Siegelaub A. Automated multiphasic screening and diagnosis. Am J Public Health. 1964;54:741–50.

- 3. Slack W, Hicks P, Reed C, Van Cura L. A computer-based medical-history system. N Engl J Med. 1966;274:194–8.

- 4. Mayne J, Weksel W, Sholtz P. Toward automating the medical history. Mayo Clin Proc. 1968;43(1):1–25.

- 5. Fries J, Spitz P, Kraines R, Holman H. Measurement of patient outcome in arthritis. Arthritis Rheum. 1980;23:137–45.

- 6. Deyo R, Patrick D. Barriers to the use of health status measures in clinical investigation, patient care, and policy research. Med Care. 1989;27(suppl 3):S254–68.

- 7. Slack WV, Slack CW. Patient–computer dialog. N Engl J Med. 1972;286:1304–9.

- 8. Institute of Medicine. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: National Academy Press, 2001.

- 9. US Department of Health and Human Services. Healthy People 2010: Understanding and Improving Health, 2nd ed. Washington, DC: Government Printing Office, 2000.

- 10. Patient Assessment. Available at: http://www.rheumatology.org/member/templates/patient-phys improve. PDF. Accessed Sept 2003.

- 11. Bellamy N, Kaloni S, Pope J, Coulter K, Campbell J. Quantitative rheumatology: a survey of outcome measurement procedures in routine rheumatology outpatient practice in Canada. J Rheumatol. 1998;25:852–8.

- 12. Flowers N, Wolfe F. What do rheumatologists do in their practices? [abstract]. Arthritis Rheum. 1998;41(suppl 9):337.

- 13. Bellamy N. Evaluation of the patient: health status questionnaires. In: Klippel JH (ed). Primer on the Rheumatic Diseases, 12th ed. Atlanta, GA: Arthritis Foundation, 2001, pp. 124–33.

- 14. Wolfe F, Pincus T, Thompson A, Doyle J. The assessment of rheumatoid arthritis and the acceptability of self-report questionnaires in clinical practice. Arthritis Care Res. 2003;49(1):59–63.

- 15. Weed L. Medical Records, Medical Education, and Patient Care. The Problem-oriented Record as a Basic Tool. Chicago, IL: Year Book Medical Publishers, The Press of Case Western Reserve University, 1969.

- 16. Revere D, Dunbar P. Review of computer-generated outpatient health behavior interventions: clinical encounters in absentia. J Am Med Inform Assoc. 2001;8:62–79.

- 17. The Electronic Medical Record for the Physicians Office. The American College of Rheumatology Committee on Rheumatologic Care 2003 report, 2003. Available at: http://www.rheumatology.org. Accessed Aug 2003.

- 18. Mosley-Williams A, Williams CA. Validation of a computer version of the American College of Rheumatology patient assessment questionnaire for the autonomous self-entry of self-report data in an urban rheumatology clinic. Arthritis Rheum. 2004;50:332–3.

- 19. Faulkner C. The Essence of Human–Computer Interaction. London, UK: Prentice Hall, 1998.

- 20. United States General Accounting Office. Medicare: Recent CMS Reforms Address Carrier Scrutiny of Physicians' Claims for Payment. Washington, DC: U.S. General Accounting Office, 2002.

- 21. Lewis D. Computer-based approaches to patient education: a review of the literature. J Am Med Inform Assoc. 1999;6:272–82.

- 22. Parkin A. Computers in clinical practice: applying experience from child psychiatry. BMJ. 2000;321:615–8.

- 23. Czaja S, Sharit J. Age differences in the performance of computer-based work. Psychol Aging. 1993;8(1):59–67.

- 24. Czaja S, Sharit J. Age differences in attitudes towards computers. J Gerontol B Psychol Sci Soc Sci. 1998;53:329–40.

- 25. Smith M, Sharit J, Czaja S. Aging, motor control and the performance of computer mouse tasks. Hum Factors. 1999;41:389–96.

- 26. US Department of Commerce, National Telecommunications and Information Administration. Falling through the Net: Toward Digital Inclusion. Washington, DC: U.S. Department of Commerce, 2000.

- 27. Lester T. The reinvention of privacy. Atlantic Monthly. 2001 Mar:27–39.

- 28. Wolfe F, Pincus T. Listening to the patient. A practical guide to self-report questionnaires in clinical care. Arthritis Rheum. 1999;42:1797–808.

- 29. Koyani SJ, Bailey RW, Nall JR. Research-based Web Design and Usability Guidelines. Washington, DC: National Institutes of Health, National Cancer Institute, 2003.

- 30. Neely AN, Sittig DF. Basic microbiologic and infection control information to reduce the potential transmission of pathogens to patients via computer hardware. J Am Med Inform Assoc. 2002;9:500–8.

- 31. Rheumatoid Arthritis Activity Minder. Available at: http://www.hopkins-arthritis.som.jhmi.edu/arthritis. Accessed Mar 10, 2004.

- 32. SF-36 Online Survey. Available at: http://www.sf-36.org. Accessed Mar 10, 2004.

- 33. Cook B. Wipeout: Lessons on Protecting Web-based EMR Data. Amednews.com. August 19, 2002. Available at: http://www.ama-assn.org/amednews/2002. Accessed Mar 10, 2004.

- 34. Kirsch I, Jungeblut A, Jenkins L, Kolstad A. Adult literacy in America: a first look at the results of the national adult literacy study. Washington, DC: National Center for Education Statistics, U.S. Department of Education, 1993.

- 35. Anonymous. Putting the spotlight on health literacy to improve quality care. Qual Lett Healthc Leaders. 2003;15(7):2–11.

- 36. Doak C, Doak L, Root J. Teaching Patients with Low Literacy Skills, 2nd ed. Philadelphia: JB Lippincott, 1996.

- 37. Pincus T, Swearingen C, Wolfe F. Toward a multidimensional health assessment questionnaire (MDHAQ). Arthritis Rheum. 1999;42:2220–30.

- 38. Brodie M, Flournoy R, Altman D, Blendon R, Benson J, Rosenbaum M. Health information, the Internet, and the digital divide. Health Aff. 2000;19:255–65.

- 39. Deyo R, Carter W. Strategies for improving and expanding the application of health status measures in clinical settings. A researcher-developer viewpoint. Med Care. 1992;30(suppl 3):MS176–86.

- 40. Pincus T, Callahan L. Formal education as a marker for increased mortality and morbidity in rheumatoid arthritis. J Chron Dis. 1985;38:973–84.

- 41. Vlieland T, Buitenhuis N, van Zeben D, Vandenbroucke J, Breedveld F, Hazes J. Sociodemographic factors and the outcome of rheumatoid arthritis in young women. Ann Rheum Dis. 1994;53:803–6.

- 42. Callahan L, Pincus T. Formal education level as a significant marker of clinical status in rheumatoid arthritis. Arthritis Rheum. 1988;31:1346–57.

- 43. Meenan R, Kazis L, Anderson J. The stability of health status in rheumatoid arthritis: a five-year study of patients with established disease. Am J Public Health. 1988;78:1484–7.

- 44. Lorish C, Abraham N, Austin J, Bradley L, Alarcon G. Disease and psychosocial factors related to physical functioning in rheumatoid arthritis. J Rheumatol. 1991;18:1150–7.

- 45. Wolfe F, Cathey M. The assessment and prediction of functional disability in rheumatoid arthritis. J Rheumatol. 1991;18:1298–306.

- 46. Institute of Medicine. Unequal Treatment: Confronting Racial and Ethnic Disparities in Health Care. Washington, DC: National Academy Press, 2002.

- 47. Pincus T. Documenting quality management in rheumatic disease: are patient questionnaires the best (and only) method? Arthritis Care Res. 1996;9:339–48.

- 48. The Health Insurance Portability and Accountability Act of 1996. Available at: http://www.cms.hhs.gov/hipaa. Accessed Mar 10, 2004.

- 49. Anonymous. The next level of Internet applications: providing quality care to high-risk patients. Qual Lett Healthc Leaders. 2000;12(5):2–3.

- 50. Benchmarks in Rheumatology Practice. American College of Rheumatology. 1999. Available at: http://www.rheumatology.org/practice/benchmarking. Accessed Mar 10, 2004.