Abstract

In the context of the emerging field of public health services and systems research, this study (i) tested a model of the relationships between public health organizational capacity (OC) for chronic disease prevention, its determinants (organizational supports for evaluation, partnership effectiveness) and one possible outcome of OC (involvement in core chronic disease prevention practices) and (ii) examined differences in the nature of these relationships among organizations operating in more and less facilitating external environments. OC was conceptualized as skills and resources/supports for chronic disease prevention programming. Data were from a census of 210 Canadian public health organizations with mandates for chronic disease prevention. The hypothesized relationships were tested using structural equation modeling. Overall, the results supported the model. Organizational supports for evaluation accounted for 33% of the variance in skills. Skills and resources/supports were directly and strongly related to involvement. Organizations operating within facilitating external contexts for chronic disease prevention had more effective partnerships, more resources/supports, stronger skills and greater involvement in core chronic disease prevention practices. Results also suggested that organizations functioning in less facilitating environments may not benefit as expected from partnerships. Empirical testing of this conceptual model helps develop a better understanding of public health OC.

Introduction

As the burden of chronic disease on health system resources increases, there is growing recognition of the need for comprehensive and integrated primary prevention [1]. Public health systems are centrally important to this prevention effort and it is crucial to ensure that these systems, and more specifically the organizations that comprise these systems, have adequate capacity to address the burden effectively [2]. Despite the importance of organizational capacity (OC) to chronic disease prevention (CDP), there is no widely accepted definition of OC in the public health context. Further, systematic explorations of the associations between public health OC, its determinants and its outcomes are rare in the public health and health promotion literatures [3–6]. Finally, although the importance of broader macro-level influences or external context on OC has been discussed [6, 7–10], few studies have empirically examined if the external context in which organizations operate affects the associations between public health OC, its determinants and its outcomes. Attention to effect modification by external context in analyses of OC relationships is critical to advancing our understanding of OC for CDP. These questions are part of the emerging field of public health services and systems research that uses a broad set of disciplinary perspectives to scrutinize the public health systems and their potential to impact population health [11].

In 2007, drawing on the public health, health promotion, health services and organizational research literatures, we [12] proposed a new conceptual model of the relationship between OC for CDP and one of its many potential outcomes, i.e. level of involvement in CDP practices (figure provided as Supplementary data). The purpose of this study is to test the relationships proposed in our 2007 model [12] between possible determinants of OC, OC and one of its potential outcomes. We used data from a census of Canadian public health organizations with mandates for CDP and/or healthy lifestyle promotion programming [12].

The way in which external factors affect the relationship between OC and its determinants and outcomes is also of interest. Therefore, a secondary purpose of this study was to examine differences in the nature of these relationships among organizations that report high levels of facilitation from external factors (i.e. government priority for CDP, public priority for CDP and access to external resource tools) and those with external factors reported to be non-facilitative.

The following sections describe the components of our model in greater detail than in our previous publications and present our hypotheses concerning these relationships.

Public health OC for CDP (skills and resources/supports)

We defined OC as skills and resources/supports needed to conduct CDP programming. Although OC has been defined variably borrowing from definitions used in practitioner capacity research [13] and/or community/OC building for health promotion [14–21], OC to tackle a health issue is conceptualized as having at least three domains: organizational commitment, skills and structures [22]. OC in government/non-governmental organizations incorporates the structures, skills and resources required to deliver programs that are responsive to specific health problems [23]. Within cardiovascular disease (CVD) prevention, OC is viewed as a set of skills and resources needed to conduct effective health promotion programs [24]. This definition was expanded to include knowledge [25] and commitments [5]. Others [26] adopted the Singapore Declaration definition of OC [27] as the capability of an organization to promote health, formed by the will to act, infrastructure and leadership. Finally, Naylor et al. [28] included infrastructure, collaboration, an evidence base, and policy and technical expertise as components of OC. Overall, skills and resources/supports to conduct CDP programs emerge in this literature as the two most commonly cited dimensions of OC in the public health context. Skills required by organizations for CDP programming comprise those needed to engage in a fundamental set of requisite practices: assessment of population health needs, identification of relevant practices, program planning, selection and employment of implementation strategies and evaluation of interventions, as well as content-specific skills required to address behavioral risk factors common to several chronic diseases including unhealthy eating, physical inactivity and tobacco use. Resources and supports refer to the organizational assets needed to implement prevention programming including fiscal, human, material and administrative assets [29]. 
We hypothesized that the two dimensions of capacity, skills and resources/supports are highly correlated.

Potential determinants of OC

Effective partnerships and intra-organizational supports for evaluation are two of many potential determinants of OC that have attracted attention in the literature [10, 18, 30]. Partnering among organizations is the norm in primary prevention, largely because of the complexity of the current health environment, rising costs associated with implementing multifaceted interventions and challenges associated with tackling the wider determinants of health and health inequalities [31–35]. Research on partnerships in public health has focused on identifying the determinants of effective partnering [34–38], and some research has examined whether interagency collaboration improves health outcomes [39–41]. However, very little is known about how partnerships affect the capacity of partner organizations [42]. Although co-operation ‘can be more challenging than independent action’ [43] and is costly in terms of time and finances [44], there are numerous possible benefits of partnerships that are aligned with the notion of OC including additional sources of funds, staff and volunteers, information, knowledge and expertise, in-kind resources and contacts and networks [18, 29, 45].

To ensure ongoing learning about how best to use limited resources, public health organizations need organizational cultures that support evaluation (i.e. leadership valuing learning and evaluation; having the necessary systems, processes and policies for engaging in evaluation; providing communication channels and opportunities to access and disseminate evaluation information so that learning from evaluation is embedded in everyday work). This intra-organizational support for evaluation is seen as a vital determinant of OC [46, 47]. However, actions that shape organizational culture are often unconsciously defined and applied and, as a result, the direct influence of organizational culture on OC is often underestimated or not well understood [10]. The construct of organizational support for evaluation is to be differentiated from the conduct of evaluation, which is a component of capacity (i.e. part of the skill set needed to conduct CDP programming). We posit that having higher levels of organizational supports for evaluation leads to greater capacity in general, not just higher levels of the capacity sub-component, evaluation. We hypothesized that organizational supports for evaluation correlate with partnership effectiveness and that both are directly related to OC.

Potential OC outcomes

Common to all characterizations of OC in the area of public health is the assumption that capacity is linked proximally to performance (i.e. conduct of a set of practices, delivery of programs and services, policies, regulations) and more distally to population health outcomes including risk factor and disease prevalence [6–8, 10, 48]. The proximal outcome of interest in our conceptual model [12] was the degree of involvement in a set of fundamental practices requisite to all types of CDP programming, namely, conducting needs assessment, identifying relevant programs, planning and evaluation. This set of practices is based on the core functions of public health that have been empirically linked to improved population outcomes [49] including among others, reduction in the prevalence of risk factors for chronic disease. We posited that higher levels of OC are associated with higher levels of involvement in CDP practices.

Effect modification of OC relationships

Several factors in the larger social, economic and political context in which public health organizations operate may modify the relationships between OC and its determinants and/or outcomes. These factors include, among others, funding and policy decisions by provincial and national governments, health system reform, public support for CDP, socioeconomic characteristics of the populations served, the burden of chronic disease locally, community priorities, national and global economies, emerging diseases and prevention research systems [7, 8]. The ability of public health organizations to adapt to either minimize the effect of context or take advantage of opportunities has been demonstrated [3]. Therefore, exploring if the associations depicted in conceptual models of public health OC are different at different levels of external context is important to understanding the effectiveness of public health practice. We hypothesized that organizations with high external facilitation report higher mean levels of organizational supports for evaluation, partnership effectiveness, skills, resources and supports and involvement. However, we would not expect to see significant differences in the nature of the relationships between the two groups.

Materials and methods

From October 2004 to April 2005, data were collected in a telephone survey of all national, provincial and regional-level organizations in Canada with mandates for CDP programming at the population level. These organizations comprised regional health authorities and public health units/agencies (herein referred to as formally mandated regional public health organizations); government departments; national health charities and their provincial/district divisions; other non-governmental and non-profit organizations (herein referred to as non-governmental organizations); para-governmental health agencies (defined as agencies financed by the government but acting independently of it); resource centers; professional organizations; and coalitions, partnerships, alliances and consortia (herein referred to as grouped organizations). This census of public health organizations in the 10 provinces in Canada was enumerated in an exhaustive Internet search. The search began with a number of initial organizations in each province commonly known to be engaged in CDP that acted as ‘seeds’. Information about other CDP organizations was obtained from the seed organization web sites. Those so identified served to identify others and so on until no new organizations could be identified. Province-specific lists of organizations were reviewed for completeness by senior researchers (one per province) with in-depth knowledge of CDP activity in their respective provinces. Telephone interviews (mean length 43 ± 17 min) were conducted using a structured, close-ended format with one key informant per organization, identified by a senior manager as the individual most knowledgeable about implementation/delivery of CDP programs, practices, campaigns or activities within the organization.

Measures

The hypothesized model consists of five latent variables: organizational supports for evaluation, partnership effectiveness, resources and supports, skills for CDP and involvement in CDP practices. Each latent variable was measured by at least three manifest variables and the items/scales comprising these manifest variables are identified in Table I. A total of 23 variables contributed to the derivation of the 5 latent variables. Development, use and psychometric testing of the measurement tool have been reported [12]. See note in Table I for a brief summary of the process and main findings of instrument development.

Table I.

Detailed description of variables (n = 23) used in the hypothesized model of OC for CDP in Canadian public health organizations, 2004ᵃ

| Latent variable | Manifest variable | Label | Items | Response category |
|---|---|---|---|---|
| Support for evaluation | Evaluation policy | SE1 | • There is a written monitoring and evaluation policy for CDP | |
| | Availability of results | SE2 | • Monitoring and evaluation information about your CDP activities is available | |
| | Use of lessons learned | SE3 | • Lessons learned from monitoring and evaluation of CDP activities are used to make changes | |
| Partnership effectiveness | Adequate partnering | PE1 | • Current levels of partnering with other organizations are adequate for effective CDP | |
| | New partnership-related ideas | PE2 | • Partnerships with other organizations are bringing new ideas about CDP to your organization | |
| | New partnership-related resources | PE3 | • Partnerships with other organizations are bringing resources for CDP to your organization | |
| | Adequate coalition participation | PE4 | • Your organization’s level of participation in coalitions and networks is adequate for effective CDP | |
| | Increased partnerships | PE5 | • The number of organizations that you are connected to through networks concerned with CDP has increased in the last 3 years | |
| Skills for CDP | Needs assessment | S1 | • Identifying community, cultural and organizational factors that influence CDP activities | |
| | Evaluation (6-item scale) | S2 | 1. Monitoring of CDP activities | |
| | | | 2. Measuring achievement of CDP objectives | |
| | | | 3. Using quantitative methods to assess impacts of CDP | |
| | | | 4. Using qualitative methods to assess impacts of CDP | |
| | | | 5. Undertaking long-term follow-up with the target population for CDP | |
| | | | 6. Identifying best practices for CDP | |
| | Identify relevant practices/activities (6-item scale) | S3 | 1. Reviewing CDP activities of other organizations to find gaps in programming for your target population(s) | |
| | | | 2. Reviewing CDP activities developed by other organizations to see if they can be used by your organization | |
| | | | 3. Finding relevant best practices in CDP to see if they can be used by your organization | |
| | | | 4. Reviewing research to help develop CDP priorities | |
| | | | 5. Assessing the organization’s strengths and limitations in CDP | |
| | | | 6. Consulting with community members to identify priorities for CDP | |
| | Planning (5-item scale) | S4 | 1. Using theoretical frameworks to guide development of CDP activities | |
| | | | 2. Setting goals and objectives for CDP | |
| | | | 3. Reviewing your resources to assess feasibility of CDP activities | |
| | | | 4. Developing action plans for CDP | |
| | | | 5. Designing, monitoring and evaluation of CDP | |
| | Implementation strategies (7-item scale) | S5 | 1. Group development | |
| | | | 2. Public awareness and education | |
| | | | 3. Skill building at the individual level | |
| | | | 4. Partnership building | |
| | | | 5. Community mobilization | |
| | | | 6. Facilitation of self-help groups | |
| | | | 7. Service provider skill building | |
| Resources and supports | Managerial supports (9-item scale) | RS1 | 1. Decisions about CDP activities are made in a timely fashion | |
| | | | 2. Staff are routinely involved in management’s decisions about CDP programming | |
| | | | 3. Internal communication about CDP is effective | |
| | | | 4. Innovation in CDP is encouraged | |
| | | | 5. Everyone is encouraged to show leadership for CDP within their jobs | |
| | | | 6. Staff take leadership roles for CDP activities | |
| | | | 7. Managers are accessible regarding CDP activities | |
| | | | 8. Managers are responsive to CDP issues | |
| | | | 9. Managers are receptive to new ideas for CDP | |
| | Staffing supports (6-item scale) | RS2 | 1. Staff have timely access to information they need about CDP | |
| | | | 2. Staffing levels are adequate to carry out CDP activities | |
| | | | 3. Staff are hired specifically to conduct CDP activities | |
| | | | 4. There is an appropriate level of administrative support for CDP | |
| | | | 5. There are professional development opportunities to learn about CDP | |
| | | | 6. Staff participate in CDP professional development opportunities | |
| | Priority for CDP | RS3 | • Level of priority for CDP within your organization | |
| | Resource adequacy (3-item scale) | RS4 | 1. Funding levels for CDP activities | |
| | | | 2. Funding levels for monitoring and evaluation of CDP activities | |
| | | | 3. Access to material resources for CDP activities | |
| | Internal senior support (2-item scale) | RS5 | 1. Level of board support | |
| | | | 2. Commitment to CDP by senior management | |
| | Internal structure support (3-item scale) | RS6 | 1. Organizational structure for CDP | |
| | | | 2. Staff experience with CDP | |
| | | | 3. Internal co-ordination of CDP activities | |
| Involvement in CDP practices | Needs assessment | I1 | • Identifying community, cultural and organizational factors that influence CDP activities | |
| | Evaluation (6-item scale) | I2 | 1. Monitoring of CDP activities | |
| | | | 2. Measuring achievement of CDP objectives | |
| | | | 3. Using quantitative methods to assess impacts of CDP | |
| | | | 4. Using qualitative methods to assess impacts of CDP | |
| | | | 5. Undertaking long-term follow-up with the target population for CDP | |
| | | | 6. Identifying best practices for CDP | |
| | Identify relevant practices (6-item scale) | I3 | 1. Reviewing CDP activities of other organizations to find gaps in programming for your target population(s) | |
| | | | 2. Reviewing CDP activities developed by other organizations to see if they can be used by your organization | |
| | | | 3. Finding relevant best practices in CDP to see if they can be used by your organization | |
| | | | 4. Reviewing research to help develop CDP priorities | |
| | | | 5. Assessing the organization’s strengths and limitations in CDP | |
| | | | 6. Consulting with community members to identify priorities for CDP | |
| | Planning (5-item scale) | I4 | 1. Using theoretical frameworks to guide development of CDP activities | |
| | | | 2. Setting goals and objectives for CDP | |
| | | | 3. Reviewing your resources to assess feasibility of CDP activities | |
| | | | 4. Developing action plans for CDP | |
| | | | 5. Designing, monitoring and evaluation of CDP | |

ᵃItems measuring each component of the model were developed or adapted from existing instruments [15, 18, 22, 50–60]. No item was used exactly as it was originally developed, and no existing scales were used in their entirety. Items were tested for content validity with four researchers recognized nationally for their work in chronic disease health policy, health promotion, public health and dissemination. The questionnaire was pilot tested in nine organizations that delivered prevention activities unrelated to CVD, diabetes, respiratory diseases or cancer. Separate psychometric analyses were undertaken for subsets of items selected to measure each construct in the conceptual framework, to assess unidimensionality and internal consistency. Our scales showed generally excellent internal consistency (α = 0.70–0.88).

Three survey items were used to measure the latent variable organizational supports for evaluation. Each rated level of agreement (5-point Likert scale: 1 = strongly disagree to 5 = strongly agree) with statements regarding the existence of written monitoring and evaluation policy for CDP (SE1), availability of monitoring and evaluation information about CDP work (SE2) and use of lessons learned from monitoring and evaluation of CDP activities (SE3). Partnership effectiveness was measured using five items that asked key informants to rate their level of agreement with statements that current levels of partnering with other organizations are adequate (PE1), partnerships are bringing new ideas for CDP to their organization (PE2), partnerships with other organizations are bringing new resources for CDP to their organization (PE3), level of organizational participation in coalitions and networks is adequate (PE4) and number of organizations to which the organization is connected through networks has increased in the last 3 years (PE5). One item and four scales measuring core skills needed for effective CDP programming were used to measure the latent variable skills for CDP. One item rated needs assessment skill (1 = poor to 5 = excellent) in identifying community, cultural and organizational factors that influence CDP activities (S1) and four scales measured skill to evaluate (S2), identify best practices (S3), plan (S4) and implement strategies for CDP programming (S5). Resources and supports was measured using five scales rating level of agreement regarding fiscal, human and organizational assets, namely managerial supports (RS1), staffing supports (RS2), resource adequacy (RS4), internal senior supports (RS5) and internal structure supports (RS6) and one single item (RS3) asking respondents to rate the level of organizational priority (1 = very low to 5 = very high) for CDP.
The latent variable level of involvement in CDP practices was measured with one item rating involvement in needs assessment activity, i.e. ‘How would you rate your organization’s level of involvement in identifying community, cultural and organizational factors that influence CDP activities?’ (I1), and three scales rating involvement in evaluation (I2), identification of best practices (I3) and planning (I4).

An external facilitation score was calculated for each organization in three steps: (i) responses for each of the three manifest variables (government priority for CDP, public priority for CDP and access to external resource tools for CDP programming) were summed separately (Table II); (ii) each sum was divided by the number of items in the scale (4, 6 and 4, respectively) to obtain a sub-score or, if an organization was missing one or more responses, by the number of responses provided; and (iii) the three sub-scores were summed and divided by 3, so that the level of external facilitation ranged from ‘1’ (very weak facilitator) to ‘7’ (very strong facilitator), with ‘4’ set as neutral. A total score ≥ 5 was labelled ‘high facilitation’ (n = 110) and a score < 5 was labelled ‘low facilitation’ (n = 106).
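The scoring rule described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not the authors' code: the function names, the use of `None` for a missing response and the decision to raise an error when a whole scale is unanswered are all assumptions made for the example.

```python
from typing import Optional, Sequence, Tuple

HIGH_CUTOFF = 5.0  # scores >= 5 are labelled 'high facilitation' in the text


def sub_score(responses: Sequence[Optional[int]]) -> float:
    """Mean of the responses provided (1-7 items); None marks a missing response."""
    answered = [r for r in responses if r is not None]
    if not answered:
        # Assumption: the text does not say how a fully missing scale was handled.
        raise ValueError("no responses provided for this scale")
    return sum(answered) / len(answered)


def external_facilitation(
    gp: Sequence[Optional[int]],   # government priority, 4 items
    pp: Sequence[Optional[int]],   # public priority, 6 items
    ert: Sequence[Optional[int]],  # external resource tools, 4 items
) -> Tuple[float, str]:
    """Average the three sub-scores and label the organization."""
    score = (sub_score(gp) + sub_score(pp) + sub_score(ert)) / 3
    label = "high facilitation" if score >= HIGH_CUTOFF else "low facilitation"
    return score, label


# Hypothetical organization with one missing government-priority response.
score, label = external_facilitation([7, None, 5, 6], [4] * 6, [4] * 4)
print(round(score, 2), label)
```

Dividing each sum by the number of responses actually provided keeps every sub-score on the original 1 to 7 response scale, which is what allows the neutral point of 4 to carry over to the overall score.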

Table II.

Detailed description of variables used in the creation of the external facilitator latent variable

| Indicator variable | Itemsᵃ | Range of score |
|---|---|---|
| Government priority (GP) | 1. Level of provincial priority for CDP | 4–28 |
| | 2. Level of national priority for CDP | |
| | 3. Level of provincial/ministry support for CDP capacity building | |
| | 4. Health system reform | |
| Public priority (PP) | 1. Level of target population interest in CDP | 6–42 |
| | 2. Level of public understanding of CDP | |
| | 3. Availability of CDP research | |
| | 4. Availability of CDP data about your specific target population(s) | |
| | 5. Level of support for CDP from partners | |
| | 6. Access to media for coverage of CDP | |
| External resource tools (ERT) | 1. Access to provincial resources for CDP | 4–28 |
| | 2. Usefulness of the provincial resource organizations for CDP | |
| | 3. Access to related national resource organizations for CDP | |
| | 4. Usefulness of the national resource organizations for CDP | |

ᵃResponse choices for all items ranged from 1 (very weak facilitator) to 7 (very strong facilitator) with 4 set at neutral. Coefficient alpha reliability estimates computed for each multi-item scale were as follows: GP = 0.80; PP = 0.73 and ERT = 0.83.

Data analyses

To test the hypothesized model (provided as Supplementary data), we used maximum-likelihood estimation structural equation modeling (SEM) with LISREL/PRELIS 8.80. The dataset contained responses from 216 organizations. The analyses were conducted using organizations with complete data only (n = 210). First, data were screened for patterns of missing data, outliers and violations of the assumption of normality [61]. Second, descriptive statistics were computed for the main study variables. Third, independent confirmatory factor analyses were run to compute the composite reliability coefficients of the scores for organizational supports for evaluation, partnership effectiveness, resources and supports, skills and involvement in CDP [62]. Fourth, the measurement and structural models of the hypothesized relationships between organizational supports for evaluation, partnership effectiveness, resources and supports, skills and involvement in CDP were tested using maximum-likelihood estimation. In the path model, the latent factors for OC determinants (i.e. partnership effectiveness and organizational supports for evaluation) were free to correlate. Similarly, the latent factors for OC (skills and resources and supports) were also allowed to correlate. Given how the skills and involvement in CDP constructs were measured (i.e. the same question stem with reference to either skills or involvement), error covariances were freed between similar indicators. Similarly, two items for partnership effectiveness (i.e. ‘new partnership-related ideas’ and ‘new partnership-related resources’) were allowed to correlate. Model goodness-of-fit [63] was assessed by the root mean square error of approximation (RMSEA < 0.08), comparative fit index (CFI > 0.90), non-normed fit index (NNFI > 0.90) and the standardized root mean square residual (SRMR values approximating 0.05).
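As a small illustration of the goodness-of-fit criteria listed above, the following Python sketch checks a set of fit indices against the stated cut-offs. The function name is hypothetical, and because the text only asks that SRMR values "approximate" 0.05, the tolerance used here is an assumption rather than a published rule.

```python
from typing import Dict


def check_fit(rmsea: float, cfi: float, nnfi: float, srmr: float,
              srmr_tolerance: float = 0.03) -> Dict[str, bool]:
    """Compare fit indices with the cut-offs stated in the text.

    srmr_tolerance is an assumed reading of 'approximating 0.05'.
    """
    return {
        "RMSEA": rmsea < 0.08,
        "CFI": cfi > 0.90,
        "NNFI": nnfi > 0.90,
        "SRMR": abs(srmr - 0.05) <= srmr_tolerance,
    }


# Illustrative input values only.
result = check_fit(rmsea=0.06, cfi=0.95, nnfi=0.94, srmr=0.06)
print(result)
```

Returning one flag per index, rather than a single pass/fail verdict, makes it easy to see which criterion a borderline model misses.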

To test for differences in the structural associations between latent variables among organizations with high levels of external facilitators and those with low levels, measurement, latent mean and structural invariance tests were conducted in line with common practice [64, 65]. Furthermore, the latent means and structural paths as outlined in the hypothesized model were tested for significant differences between organizations with high and low external facilitators [64].

Results

Approximately half of all organizations were formally mandated regional public health organizations, one-quarter were non-governmental organizations, 19% were grouped organizations and 8% were categorized as ‘other’. The majority (71%) provided CDP programming to populations at a regional or sub-provincial level, 24% were engaged in CDP activities at a provincial level and 5% had multi-province or national mandates. Table III presents selected characteristics of participating organizations.

Table III.

Selected characteristics of Canadian public health organizations engaged in primary prevention of chronic disease (n = 216)

| Organization type (%)ᵃ | |
|---|---|
| Formally mandated public health | 48 |
| Non-governmental organization (e.g. health charities) | 25 |
| Alliance, coalition and partnership (i.e. grouped organization) | 19 |
| Other | 8 |
| Years in existence, median (IQR) | 27 (7–51) |
| No. full-time equivalent staff, median (IQR) | |
| Organizations with CDP units | 150 (69–850) |
| CDP units within larger organizations | 15 (7–35) |
| Organizations entirely engaged in CDP | 3 (1–11) |
| Geographic area served (%) | |
| Region (sub-provincial) | 71 |
| Province | 24 |
| Multi-province | 2 |
| Canada | 3 |

IQR, interquartile range.

ᵃKey informants were asked to select one of the following that best described their organization: (i) Federal/Provincial/Territorial Government (Ministry/branch/department); (ii) Health Authority/District (provincial or regional); (iii) Public Health Agency/Department/Unit; (iv) Para-governmental Health Agency; (v) Non-governmental (NGO), Not-for-Profit organization (NPO) or Health Charity; (vi) Professional Association; (vii) Research Center; (viii) Resource Center; (ix) Coalition/Partnership/Alliance/Network. Although testing the hypothesized model was conducted using data from all organizations, for descriptive purposes we collapsed these nine organizational types into four mutually exclusive categories: formally mandated public health organizations (ii, iii); non-governmental organizations (v); grouped organizations (ix) and other (i, iv, vi, vii, viii).

Features of the measurement model are presented in Table IV. All factor loadings were statistically significant, and the standard errors were low (<0.05). The factor loadings for skills pertaining to needs assessment (S1) and involvement pertaining to needs assessment (I1) were low, suggesting some misspecification in the measures. The proportion of explained variance in each indicator (with the exception of S1 and I1) was moderate to high (R² values ranging from 0.34 to 0.76), providing evidence of reliability. The correlations among the OC-related latent factors were moderate to strong (P < 0.05) and positive (Table V).

Table IV.

Properties of the measurement model for the total analytic sample (n = 210)

| Factors and indicators | Standardized loading | Error varianceᵃ | Reliabilityᵇ |
|---|---|---|---|
| Partnership effectiveness | | | 0.78ᶜ |
| PE1 | 0.75 | 0.44 | – |
| PE2 | 0.60 | 0.63 | – |
| PE3 | 0.50 | 0.75 | – |
| PE4 | 0.72 | 0.48 | – |
| PE5 | 0.64 | 0.64 | – |
| Support for evaluation | | | 0.80ᶜ |
| SE1 | 0.60 | 0.69 | – |
| SE2 | 0.87 | 0.24 | – |
| SE3 | 0.86 | 0.26 | – |
| Skills | | | 0.80ᶜ |
| S1 | 0.41 | 0.83 | – |
| S2 | 0.78 | 0.40 | 0.88 |
| S3 | 0.71 | 0.50 | 0.85 |
| S4 | 0.79 | 0.37 | 0.88 |
| S5 | 0.61 | 0.64 | 0.82 |
| Resources and supports | | | 0.78ᶜ |
| RS1 | 0.57 | 0.68 | 0.88 |
| RS2 | 0.62 | 0.61 | 0.72 |
| RS3 | 0.61 | 0.63 | – |
| RS4 | 0.56 | 0.69 | 0.77 |
| RS5 | 0.65 | 0.58 | 0.51 |
| RS6 | 0.69 | 0.56 | 0.75 |
| Involvement | | | 0.74ᶜ |
| I1 | 0.34 | 0.88 | – |
| I2 | 0.73 | 0.47 | 0.86 |
| I3 | 0.68 | 0.54 | 0.84 |
| I4 | 0.79 | 0.38 | 0.86 |

ᵃCalculated as 1 − the indicator reliability, where indicator reliability = square of the factor loading ($\lambda^2$). ᵇCoefficient alpha reliability estimates computed for all multi-item scales. ᶜComposite reliability for each latent factor = $(\sum\lambda)^2 / \left[(\sum\lambda)^2 + \sum\theta\right]$, where $\theta$ = error variance. The composite reliability coefficient (minimally acceptable level > 0.70) reflects the internal consistency of the indicators measuring a given factor [62].
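The two formulas in the Table IV notes are easy to reproduce. The sketch below is a minimal illustration with hypothetical function names; it applies them to the partnership effectiveness loadings and error variances listed in Table IV.

```python
from typing import Sequence


def error_variance(loading: float) -> float:
    """Error variance = 1 - indicator reliability (squared standardized loading)."""
    return 1.0 - loading ** 2


def composite_reliability(loadings: Sequence[float],
                          error_variances: Sequence[float]) -> float:
    """(sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    if len(loadings) != len(error_variances):
        raise ValueError("one error variance is needed per loading")
    numerator = sum(loadings) ** 2
    return numerator / (numerator + sum(error_variances))


# Partnership effectiveness indicators PE1-PE5, values copied from Table IV.
pe_loadings = [0.75, 0.60, 0.50, 0.72, 0.64]
pe_errors = [0.44, 0.63, 0.75, 0.48, 0.64]

print(round(error_variance(pe_loadings[0]), 2))                # PE1 error variance
print(round(composite_reliability(pe_loadings, pe_errors), 2))  # factor-level value
```

With these inputs the composite reliability rounds to 0.78, the value reported for partnership effectiveness in Table IV.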

Table V.

Latent variable correlation coefficients for study variables in the total analytic sample (n = 210)

| | Partnership effectiveness | Support for evaluation | Skills | Resources and supports | External facilitators | Involvement |
|---|---|---|---|---|---|---|
| Partnership effectiveness | — | | | | | |
| Support for evaluation | 0.23 | — | | | | |
| Skills | 0.34 | 0.63 | — | | | |
| Resources and supports | 0.44 | 0.56 | 0.57 | — | | |
| External facilitators | 0.41 | 0.14 | 0.24 | 0.36 | — | |
| Involvement | 0.44 | 0.64 | 0.77 | 0.78 | 0.25 | — |

All latent variable correlations were statistically significant (t > 1.96); RS1, RS2, RS3 and RS4 scaled 1–5; RS5 and RS6 scaled 1–7.

Fit statistics suggested a good-fitting measurement model for the total sample (χ²(215) = 421.91, RMSEA = 0.06, NNFI = 0.94, CFI = 0.95, SRMR = 0.06), as well as for both high external facilitator organizations (χ²(215) = 301.93, RMSEA = 0.06, NNFI = 0.93, CFI = 0.94, SRMR = 0.07) and low external facilitator organizations (χ²(215) = 324.62, RMSEA = 0.07, NNFI = 0.89, CFI = 0.91, SRMR = 0.07). Removing indicators S1 and/or I1 improved model fit but made little difference to the relationships; both indicators were therefore retained in the model.

When testing the measurement properties of the model for organizations with high and low external facilitators, there were no meaningful differences (Table VI). Specifically, there were no statistically significant changes in model fit when the factor structure and loadings were set to be equal across the two groups, which are the minimum criteria to demonstrate no difference in invariance group analyses [66, 67]. Therefore, it is reasonable to suggest that the two groups of organizations (high and low external facilitators) responded to the survey items in the same way and that mean differences are real differences that are not attributed to measurement error. Organizations with high external facilitators had statistically significantly higher means for partnership effectiveness (t = 2.97), resources and supports (t = 2.52), skills for CDP (t = 2.38) and involvement in CDP practices (t = 2.16) compared with organizations with low external facilitators. The mean for organizational supports for evaluation was not significantly different (t = 1.28).
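As a rough illustration of how the latent mean comparisons can be read, the sketch below compares each reported t value with the two-sided 5% critical value of the standard normal distribution. Treating the t statistics as large-sample z statistics is an assumption made here for illustration; the dictionary and variable names are hypothetical, and only the t values come from the text.

```python
from statistics import NormalDist

# Two-sided 5% critical value of the standard normal distribution (about 1.96).
critical_value = NormalDist().inv_cdf(0.975)

# t values for high versus low external facilitator organizations, from the text.
latent_mean_t = {
    "partnership effectiveness": 2.97,
    "resources and supports": 2.52,
    "skills": 2.38,
    "involvement": 2.16,
    "supports for evaluation": 1.28,
}

# A mean difference is flagged when |t| exceeds the critical value.
significant = {
    factor: abs(t) > critical_value for factor, t in latent_mean_t.items()
}

for factor, flag in significant.items():
    print(f"{factor}: t = {latent_mean_t[factor]:.2f}, significant = {flag}")
```

Under this reading, four of the five latent means differ between groups, and organizational supports for evaluation does not.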

Table VI.

Fit indices of nested models testing group invariance (n = 210)

| Models | χ² | Degrees of freedom | RMSEA | CFI | NNFI | SRMR |
|---|---|---|---|---|---|---|
| Model 1: measurement model | | | | | | |
| High external facilitators | 329.26 | 219 | 0.07 | 0.95 | 0.94 | 0.04 |
| Low external facilitators | 350.42 | 219 | 0.07 | 0.91 | 0.89 | 0.07 |
| Model 2: group invariance | | | | | | |
| Baseline | 626.55 | 430 | 0.065 | 0.94 | 0.93 | 0.09 |
| Factor loadings<sup>a</sup> (FL) | 650.90 | 448 | 0.065 | 0.94 | 0.93 | 0.10 |
| FL + factor variances<sup>b</sup> (FV) | 667.34 | 453 | 0.066 | 0.94 | 0.93 | 0.12 |
| FL + FV + factor covariances<sup>c</sup> (FC) | 695.71 | 463 | 0.069 | 0.93 | 0.93 | 0.12 |
| FL + FV + FC + uniqueness<sup>d</sup> | 736.99 | 485 | 0.07 | 0.93 | 0.93 | 0.13 |
| FL + item intercepts + latent means | 765.21 | 509 | 0.07 | 0.93 | 0.93 | 0.13 |

<sup>a</sup>Change in chi-square = 24.35 for a change of 18 degrees of freedom, P = 0.14. <sup>b</sup>Change in chi-square = 16.44 for a change of 5 degrees of freedom, P = 0.005. <sup>c</sup>Change in chi-square = 28.37 for a change of 10 degrees of freedom, P = 0.002. <sup>d</sup>Change in chi-square = 41.28 for a change of 22 degrees of freedom, P = 0.008.
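The nested-model comparisons in the Table VI notes follow the usual chi-square difference logic: subtract the chi-square values and the degrees of freedom of two nested models, then look up the upper-tail probability. The sketch below is one way to reproduce that arithmetic with the Python standard library only. The function names are hypothetical, the tail probability uses a textbook series expansion of the incomplete gamma function rather than any routine from the authors' software, and the inputs are the rounded values from Table VI.

```python
import math


def chi2_upper_tail(x, df):
    """Upper-tail probability of a chi-square variate with df degrees of freedom.

    Uses the series expansion of the regularized lower incomplete gamma
    function P(a, x/2), with a = df / 2, and returns 1 - P.
    """
    if x <= 0:
        return 1.0
    a, half_x = df / 2.0, x / 2.0
    term = 1.0 / a
    total = term
    n = 0
    while abs(term) > 1e-15 * abs(total):
        n += 1
        term *= half_x / (a + n)
        total += term
    lower = total * math.exp(-half_x + a * math.log(half_x) - math.lgamma(a))
    return 1.0 - lower


def chi_square_difference(chi2_constrained, df_constrained, chi2_baseline, df_baseline):
    """Return (delta chi-square, delta df, P) for two nested models."""
    delta_chi2 = chi2_constrained - chi2_baseline
    delta_df = df_constrained - df_baseline
    return delta_chi2, delta_df, chi2_upper_tail(delta_chi2, delta_df)


# Table VI: model with equal factor loadings versus the baseline model.
delta_chi2, delta_df, p_value = chi_square_difference(650.90, 448, 626.55, 430)
print(f"delta chi-square = {delta_chi2:.2f}, delta df = {delta_df}, P = {p_value:.3f}")
```

The difference and degrees of freedom match note a of Table VI (24.35 on 18 degrees of freedom), and the resulting P value can be compared with the reported 0.14. The same call applies to the other rows by swapping in the adjacent pair of models.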

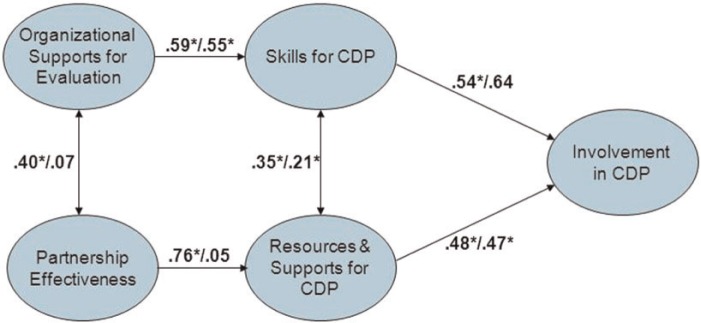

The path model was a good fit for both organizations with high external facilitators (χ2(219) = 329.26, RMSEA = 0.07, NNFI = 0.94, CFI = 0.95, SRMR = 0.04) and low external facilitators (χ2(219) = 350.42, RMSEA = 0.07, NNFI = 0.89, CFI = 0.91, SRMR = 0.07). Organizational supports for evaluation accounted for 34% and 31% of the variance in skills for high and low external facilitators, respectively, while partnership effectiveness accounted for 57% and 2%. The OC factors (skills and resources and supports) had statistically significant effects on involvement for CDP, accounting for 71% and 77% of the variance, respectively (Fig. 1).

Fig. 1.

Structural equation model representing the relationships among organizational determinants, OC for CDP and involvement in CDP, for organizations with high and low external facilitators. Note: standardized path coefficients are displayed. *P < 0.05.

In the comparison of organizations with high and low external facilitators, two relationships were not statistically significant in the model for organizations with low external facilitators: the association between partnership effectiveness and organizational supports for evaluation, and the path from partnership effectiveness to resources and supports. This finding was confirmed in final model testing examining structural invariance (baseline model: χ²(448) = 655.67, RMSEA = 0.07, NNFI = 0.93, CFI = 0.94, SRMR = 0.09 and constrained model: χ²(452) = 667.90, RMSEA = 0.07, NNFI = 0.92, CFI = 0.94, SRMR = 0.10; Δχ² = 12.23, P = 0.01).

Discussion

The objective of this study was to test the relationships depicted in our model of public health OC for CDP programming. We hypothesized, based on prior research, that organizational determinants of OC (i.e. organizational supports for evaluation and partnership effectiveness) influence OC (i.e. skills and resources and supports) which in turn influences involvement in CDP practices.

Overall, the results showed support for the hypothesized model. All latent factors were correlated, supporting the postulated complexity of OC. Furthermore, these associations were invariant across different strata of external facilitators. However, organizations operating within a more facilitating external context for CDP (i.e. reporting higher scores on variables indicating the presence of government priority for CDP, presence of public priority for CDP and access to external resource tools for CDP programming) had statistically significantly higher mean levels of partnership effectiveness, resources and supports, skills and involvement. This may be because facilitating external factors help organizations develop more capacity or it may be because they remove barriers to developing capacity. We are not able to investigate this tenet in the current dataset. The path from partnership effectiveness to resources and supports was not statistically significant in organizations with less facilitating external environments.

Although organizational skills can be enhanced by an outside group or entity, the development of capacity is essentially an internal process [8]. Our results corroborate this tenet because organizational supports for evaluation (i.e. built into the organizational culture through internal policies and the general way of ‘doing business’) were directly related to the fundamental set of skills needed for CDP programming. Although evaluation is key to providing an evidence base for future CDP programming, this finding speaks to the importance of institutionalizing evaluation to provide the foundation needed to enact current CDP programs. Having strong internal support for evaluation contributes to the processes of planning, development and implementation, thereby increasing the quality and effectiveness of CDP initiatives [68]. Furthermore, the relationship between organizational supports for evaluation and skills was robust in that the mean level of organizational supports for evaluation and the amount of variance in skills explained by organizational supports for evaluation were similar across organizations irrespective of the external context.

Although by nature external, partnerships are internally driven. Creation of partnerships is a fundamental concept in public health and factors that predict sustainable public health partnerships have been widely reported [6, 44, 69]. This study extends these findings by showing that the presence of partnerships perceived to be effective is directly related to the resources and supports dimension of OC and adds to the limited evidence connecting external collaboration to the capacity of the public health organizations involved in the partnerships [4]. But contrary to the implicit assumption among policy makers that partnerships will aid public health organizations [42], our findings suggest that collaborating with other organizations may not contribute to building capacity in all circumstances. The strong link between partnership effectiveness and resources and supports among organizations functioning in favorable environments was not significant in organizations reporting a less facilitating context. This result is consistent with the view that working in partnership is demanding on organizations [70], particularly those grappling with unexpected or overwhelming issues related to outside environmental pressures [71]. Such organizations may not benefit as expected from these collaborative relationships. These results would support the adoption of strategic planning mechanisms to assess the impact of political, economic, sociocultural, environmental and other external influences and determine the added value of entering into new or maintaining current partnerships.

Previous studies have documented wide variation in both funding and staffing levels across local public health systems in the United States and suggest that disparities in these two types of resources may account for much of the variation in performance [72, 73]. Some have explored the influence of public health workforce characteristics such as skills and competencies on public health performance [6, 74]. In our analysis, both skills and resources and supports were directly and strongly related to involvement in CDP practices. This finding adds to the work of Barman and MacIndoe [75] who report that capacity is an independent predictor of organizational practice in non-profit organizations. Although skills and involvement were measured similarly using the same root question and as such were expected to correlate, SEM accounts for the shared variance resulting from this type of measurement issue. Nonetheless, it is important to consider that these paths might be bi-directional, such that greater involvement in CDP could also be expected to lead to improved skill levels. Longitudinal data are required to examine these relationships further.

Public health delivery systems exist as complex systems comprising many different actors [12, 76]. The few studies that collect public health capacity-related data at an organizational level have been hampered by small sample sizes and/or limited to one particular type of organization such as public health agencies [4, 5, 26]. Strengths of this analysis include a complete census of all the types of organizations engaged in CDP in Canada and the use of SEM allowing the simultaneous evaluation of multiple relationships in the preventive public health system as a whole. Limitations of this study include the cross-sectional design. Concurrent measurement of the variables precludes specification of the direction of the effects beyond the theory used to develop the model. Although this model was tested in a complete census of CDP organizations, the number of organizations studied was not sufficiently large to allow splitting the data in half to estimate the model twice [77]. It was also not possible to perform cross-validation with an external sample engaged in primary prevention of chronic disease. Therefore, it is not known if the model will generalize to other public health systems beyond Canada. Although key informants were selected as those most knowledgeable about CDP within their respective organizations, data on organizational characteristics and processes provided by a single person may not reliably reflect the inherent complexity of organizations [78–82].

Conclusion

Conceptual models are often used in the public health literature to illustrate possible relationships among a set of factors [83]. Although these diagrams help synthesize knowledge, define concepts, generate hypotheses, indicate variables to be operationalized and plan interventions and analytic approaches [84], empirical testing of the relationships posited is essential if we are to develop better understanding of public health OC for CDP. Situated in the context of the emerging field of public health services and systems research, this study contributes to our understanding of the links between OC, its determinants and outcomes, as well as the effect of external contextual factors on these relationships. This is just one attempt at identifying a substantively meaningful model that fits observed data adequately. There may be other ways to conceptualize the OC process as it pertains to CDP efforts within the public health system.

Supplementary data

Supplementary data are available at HEALED online.

Acknowledgements

At the time this study was conducted, C.M.S. held a new investigator award from Le Fonds de Recherche du Québec - Santé (FRQS). J.O.L. holds a Canada Research Chair in the Early Determinants of Adult Chronic Disease.

Funding

This study was supported by the Canadian Institutes of Health Research (SEC 117124) and the Medical Services Incorporated Foundation (853).

Conflict of interest statement

None declared.

References

- 1. World Health Organization. Gaining Health: The European Strategy for the Prevention and Control of Noncommunicable Diseases. Copenhagen: WHO Regional Office for Europe; 2006.

- 2. World Health Organization. Preventing Chronic Diseases: A Vital Investment. Geneva: World Health Organization; 2005.

- 3. Champagne F, Leduc N, Denis JL, Pineault R. Organizational and environmental determinants of the performance of public health units. Soc Sci Med. 1993;37:85–95. doi: 10.1016/0277-9536(93)90321-t.

- 4. Riley B, Taylor M, Elliott S. Determinants of implementing heart health promotion activities in Ontario public health units: a social ecological perspective. Health Educ Res. 2001;16:425–41. doi: 10.1093/her/16.4.425.

- 5. McLean S, Ebbesen L, Green K, et al. Capacity for community development: an approach to conceptualization and measurement. J Community Dev Soc. 2001;32:251–70.

- 6. Scutchfield FD, Knight EA, Kelly AV, et al. Local public health agency capacity and its relationship to public health system performance. J Public Health Manag Pract. 2004;10:204–15. doi: 10.1097/00124784-200405000-00004.

- 7. Handler A, Issel M, Turnock B. A conceptual framework to measure performance of the public health system. Am J Public Health. 2001;91:1235–9. doi: 10.2105/ajph.91.8.1235.

- 8. Lafond AK, Brown B, Macintyre K. Mapping capacity in the health sector: a conceptual framework. Int J Health Plan Manage. 2002;17:3–22. doi: 10.1002/hpm.649.

- 9. Mays GP, McHugh MC, Shim K, et al. Institutional and economic determinants of public health system performance. Am J Public Health. 2006;96:523–31. doi: 10.2105/AJPH.2005.064253.

- 10. Meyer AM, Davis M, Mays GP. Defining organizational capacity for public health services and systems research. J Public Health Manag Pract. 2012;18:535–44. doi: 10.1097/PHH.0b013e31825ce928.

- 11. Scutchfield FD, Ingram RC. Public health systems and services research: building the evidence base to improve public health practice. Public Health Rev. 2013;35.

- 12. Hanusaik N, O’Loughlin JL, Kishchuk N, et al. Building the backbone for organisational research in public health systems: development of measures of organisational capacity for chronic disease prevention. J Epidemiol Community Health. 2007;61:742–9. doi: 10.1136/jech.2006.054049.

- 13. Raphael D, Steinmetz B. Assessing the knowledge and skills of community-based health promoters. Health Promot Int. 1995;10:305–15.

- 14. Jackson C, Fortmann SP, Flora JA, et al. The capacity-building approach to intervention maintenance implemented by the Stanford Five-City Project. Health Educ Res. 1994;9:385–96. doi: 10.1093/her/9.3.385.

- 15. Goodman R, Speers M, McLeroy K, et al. Identifying and defining the dimensions of community capacity to provide a basis for measurement. Health Educ Behav. 1998;25:258–78. doi: 10.1177/109019819802500303.

- 16. Hawe P, Noort M, King L, Jordens C. Multiplying health gains: the critical role of capacity building within health promotion programs. Health Policy. 1997;39:29–42. doi: 10.1016/s0168-8510(96)00847-0.

- 17. Goodman RM, Steckler A, Alciati MH. A process evaluation of the National Cancer Institute’s Data-based Intervention Research program: a study of organizational capacity building. Health Educ Res. 1997;12:181–97. doi: 10.1093/her/12.2.181.

- 18. Crisp BR, Swerissen H, Duckett SJ. Four approaches to capacity building in health: consequences for measurement and accountability. Health Promot Int. 2000;15:99–107.

- 19. Labonte R, Laverack G. Capacity building for health promotion: Part 1. For whom? And for what purpose? Crit Public Health. 2001;11:111–27.

- 20. Labonte R, Laverack G. Capacity building for health promotion: Part 2. Whose use? And with what measurement? Crit Public Health. 2001;11:129–38.

- 21. Germann K, Wilson D. Organizational capacity for community development in regional health authorities: a conceptual model. Health Promot Int. 2004;19:289–98. doi: 10.1093/heapro/dah303.

- 22. Hawe P, King L, Noort M, et al. Indicators to Help with Capacity Building in Health Promotion. North Sydney: NSW Health Department; 1999.

- 23. Labonte R, Laverack G. Capacity building for health promotion: Part 1. For whom? And for what purpose? Crit Public Health. 2001;11:111–27.

- 24. Taylor SM, Elliott S, Riley B. Heart health promotion: predisposition, capacity and implementation in Ontario public health units, 1994-96. Can J Public Health. 1998;89:410–4. doi: 10.1007/BF03404085.

- 25. Heath S, Farquharson J, MacLean D, et al. Capacity building for health promotion and chronic disease prevention—Nova Scotia’s experience. Promot Educ. 2001;(Suppl. 1):17–22.

- 26. Smith C, Raine K, Anderson D, et al. A preliminary examination of organizational capacity for heart health promotion in Alberta’s regional health authorities. Promot Educ. 2001;(Suppl. 1):40–3.

- 27. Pearson TA, Bales VS, Blair L, et al. The Singapore Declaration: forging the will for heart health in the next millennium. CVD Prev. 1998;1:182–99.

- 28. Naylor P, Wharf-Higgins J, O’Connor B, et al. Enhancing capacity for cardiovascular disease prevention: an overview of the British Columbia Heart Health Dissemination Project. Promot Educ. 2001;(Suppl. 1):44–8.

- 29. Chinman M, Imm P, Wandersman A. Getting to Outcomes™ 2004: Promoting Accountability Through Methods and Tools for Planning, Implementation, and Evaluation. Santa Monica: RAND Corporation. Available at: http://www.rand.org/pubs/technical_reports/TR101.html. Accessed: 31 October 2012.

- 30. Joffres C, Heath S, Farquharson J, et al. Facilitators and challenges to organizational capacity building in heart health promotion. Qual Health Res. 2004;14:39–60. doi: 10.1177/1049732303259802.

- 31. Lasker RD, Weiss ES, Miller R. Partnership synergy: a practical framework for studying and strengthening the collaborative advantage. Milbank Q. 2001;79:179–205. doi: 10.1111/1468-0009.00203.

- 32. Carlisle S. Tackling health inequalities and social exclusion through partnership and community engagement? A reality check for policy and practice aspirations from a Social Inclusion Partnership in Scotland. Crit Public Health. 2010;20:117–27.

- 33. Taylor-Robinson DC, Lloyd-Williams F, Orton L, et al. Barriers to partnership working in public health: a qualitative study. PLoS One. 2012;7:e29536. doi: 10.1371/journal.pone.0029536.

- 34. Shortell SM, Zukoski AP, Alexander JA, et al. Evaluating partnerships for community health improvement: tracking the footprints. J Health Polit Policy Law. 2002;27:49–91. doi: 10.1215/03616878-27-1-49.

- 35. Weiss ES, Anderson RM, Lasker RD. Making the most of collaboration: exploring the relationship between partnership synergy and partnership functioning. Health Educ Behav. 2002;29:683–98. doi: 10.1177/109019802237938.

- 36. Florin P, Mitchell R, Stevenson J, Klein I. Predicting intermediate outcomes for prevention coalitions: a developmental perspective. Eval Program Plann. 2000;23:341–6.

- 37. Perkins D, Feinberg ME, Greenberg MT, et al. Team factors that predict to sustainability indicators for community-based prevention teams. Eval Program Plann. 2011;34:283–91. doi: 10.1016/j.evalprogplan.2010.10.003.

- 38. Lasker RD, Weiss ES. Creating partnership synergy: the critical role of community stakeholders. J Health Hum Serv Adm. 2003;26:119–39.

- 39. Roussos ST, Fawcett SB. A review of collaborative partnerships as a strategy for improving community health. Annu Rev Public Health. 2000;21:369–402. doi: 10.1146/annurev.publhealth.21.1.369.

- 40. Hayes SL, Mann MK, Morgan FM, et al. Collaboration between local health and local government agencies for health improvement. Cochrane Database Syst Rev. 2011:CD007825. doi: 10.1002/14651858.CD007825.pub5.

- 41. Baker PR, Francis DP, Soares J, et al. Community wide interventions for increasing physical activity. Cochrane Database Syst Rev. 2011:CD008366. doi: 10.1002/14651858.CD008366.pub2.

- 42. Smith KE, Bambra C, Joyce KE, et al. Partners in health? A systematic review of the impact of organizational partnerships on public health outcomes in England between 1997 and 2008. J Public Health (Oxf). 2009;31:210–21. doi: 10.1093/pubmed/fdp002.

- 43. Torjman S. Partnerships: The Good, the Bad and the Uncertain. Caledon Institute of Social Policy; 1998. Available at: http://www.caledoninst.org/Publications/PDF/partners.pdf. Accessed: 23 January 2012.

- 44. Zahner SJ. Local public health system partnerships. Public Health Rep. 2005;120:76–83. doi: 10.1177/003335490512000113.

- 45. Butterfoss FD, Goodman RM, Wandersman A. Community coalitions for prevention and health promotion. Health Educ Res. 1993;8:315–30. doi: 10.1093/her/8.3.315.

- 46. Edmondson A, Moingeon B. From organizational learning to the learning organization. In: Grey C, Antonacopoulou E, editors. Essential Readings in Management Learning. London: Sage Publications Ltd.; 2004. pp. 21–36.

- 47. Preskill H, Boyle S. A multidisciplinary model of evaluation capacity building. Am J Eval. 2008;29:443–59.

- 48. Durlak JA, DuPre EP. Implementation matters: a review of research on the influence of implementation on program outcomes and the factors affecting implementation. Am J Community Psychol. 2008;41:327–50. doi: 10.1007/s10464-008-9165-0.

- 49. Public Health Agency of Canada. Core Competencies for Public Health in Canada. Release 1.0. Available at: http://www.phac-aspc.gc.ca/php-psp/ccph-cesp/pdfs/zcard-eng.pdf. Accessed: 29 April 2014.

- 50. Canadian Heart Health Initiative—Ontario Project (CHHIOP). Survey of Capacities, Activities, and Needs for Promoting Heart Health. Waterloo, ON: University of Waterloo; 1997. [unpublished survey instrument]

- 51. Heart Health Nova Scotia. Measuring Organizational Capacity for Heart Health Promotion: SCAN of Community Agencies. Halifax, NS: Dalhousie University; 1996. [unpublished survey instrument]

- 52. Heart Health Nova Scotia. Capacity for Heart Health Promotion Questionnaire—Organizational Practices. Halifax, NS: Dalhousie University; 1998. [unpublished survey instrument]

- 53. Saskatchewan Heart Health Program. Health Promotion Contact Profile. Saskatoon, SK: University of Saskatchewan; 1998. [unpublished survey instrument]

- 54. Alberta Heart Health Project. Health Promotion Capacity Survey. Edmonton, AB: University of Alberta; 2000. [unpublished survey instrument]

- 55. Alberta Heart Health Project. Health Promotion Organizational Capacity Survey: Self-assessment. Edmonton, AB: University of Alberta; 2001. [unpublished survey instrument]

- 56. British Columbia Heart Health Project (BCHHP). Revised Activity Scan. Victoria, BC: Ministry of Health and Ministry Responsible for Seniors; 2001. [unpublished survey instrument]

- 57. Ontario Heart Health Project. Survey of Public Health Units. Waterloo, ON: University of Waterloo; 2003. [unpublished survey instrument]

- 58. Lusthaus C, Adrien MH, Anderson G, Carden F. Enhancing Organizational Performance: A Toolbox for Self-assessment. Ottawa, ON: International Development Research Centre; 1999.

- 59. Nathan S, Rotem S, Ritchie J. Closing the gap: building the capacity of non-government organizations as advocates for health equity. Health Promot Int. 2002;17:69–78. doi: 10.1093/heapro/17.1.69.

- 60. Alberta Heart Health Project. Health Promotion Individual Capacity Survey: Self-assessment. Edmonton, AB: University of Alberta; 2001. [unpublished survey instrument]

- 61. Pedhazur E. Multiple Regression in Behavior Research. 3rd edn. Fort Worth, TX: Harcourt-Brace; 1997.

- 62. Yang Y, Green SB. A note on structural equation modeling estimates of reliability. Struct Equ Model. 2010;17:66–81.

- 63. Hu L, Bentler PM. Cutoff criteria for fit indexes in covariance structure analysis: conventional criteria versus new alternatives. Struct Equ Model. 1999;6:1–55.

- 64. Byrne BM. Structural Equation Modeling with LISREL, PRELIS and SIMPLIS: Basic Concepts, Applications and Programming. Mahwah, NJ: Lawrence Erlbaum Associates; 1998.

- 65. Vandenberg RJ, Lance CE. A review and synthesis of the measurement invariance literature: suggestions, practices, and recommendations for organizational research. Organ Res Methods. 2000;3:4–69.

- 66. Dishman RK, Motl RW, Saunders R, et al. Factorial invariance and latent mean structure of questionnaires measuring social-cognitive determinants of physical activity among black and white adolescent girls. Prev Med. 2002;34:100–8. doi: 10.1006/pmed.2001.0959.

- 67. Marsh HW, Hey J, Johnson S, Perry C. Elite athlete self-description questionnaire: hierarchical confirmatory factor analysis of responses by two distinct groups of elite athletes. Int J Sport Psychol. 1997;28:237–58.

- 68. Centers for Disease Control and Prevention. Framework for Program Evaluation in Public Health. MMWR 1999; 48(No. RR-11). Available at: http://www.cdc.gov/mmrwr/PDF/rr/rr4811.pdf. Accessed: 26 January 2012.

- 69. Nelson JC, Rashid H, Galvin VG, et al. Public/private partners. Key factors in creating a strategic alliance for community health. Am J Prev Med. 1999;16(Suppl. 3):94–102. doi: 10.1016/s0749-3797(98)00148-2.

- 70. Griffiths S, Thorpe A. Public health in transition: views of the specialist workforce. J R Soc Promot Health. 2007;127:219–23. doi: 10.1177/1466424007081782.

- 71. Lawrence PR, Lorsch JW. Organization and Environment: Managing Differentiation and Integration. Cambridge, MA: Harvard University Press; 1967.

- 72. Gordon RL, Gerzoff RB, Richards TB. Determinants of US local health department expenditures, 1992 through 1993. Am J Public Health. 1997;87:91–5. doi: 10.2105/ajph.87.1.91.

- 73. Kennedy VC, Moore FI. A systems approach to public health workforce development. J Public Health Manag Pract. 2001;7:17–22. doi: 10.1097/00124784-200107040-00004.

- 74. Rosenberg D, Herman-Roloff A, Kennelly J, Handler A. Factors associated with improved MCH epidemiology functioning in state health agencies. Matern Child Health J. 2011;15:1143–52. doi: 10.1007/s10995-010-0680-x.

- 75. Barman E, MacIndoe H. Institutional pressures and organizational capacity: the case of outcome measurement. Sociol Forum. 2012;27:70–93.

- 76. Halverson PK, Miller CA, Kaluzny AD, et al. Performing public health functions: the perceived contribution of public health and other community agencies. J Health Hum Serv Adm. 1996;18:288–303.

- 77. Shah R, Goldstein SM. Use of structural equation modeling in operations management research: looking back and forward. J Oper Manag. 2006;24:148–69.

- 78. Podsakoff PM, Organ DW. Self-reports in organizational research: problems and prospects. J Manag. 1986;12:531–44.

- 79. Mitchell V. Using industrial key informants: some guidelines. J Market Res Soc. 1994;36:139–44.

- 80. Krannich RS, Humphrey CR. Using key informant data in comparative community research: an empirical assessment. Sociol Methods Res. 1986;14:473–93.

- 81. Steckler A, Goodman RM, Alciati MH. Collecting and analyzing organizational level data for health behaviour research (editorial). Health Educ Res. 1997;12:i–iii. doi: 10.1093/her/12.3.279.

- 82. Donaldson SI, Grant-Vallone EJ. Understanding self-report bias in organizational behavior research. J Bus Psychol. 2002;17:245–60.

- 83. Paradies Y, Stevens M. Conceptual diagrams in public health research. J Epidemiol Community Health. 2005;59:1012–3. doi: 10.1136/jech.2005.036913.

- 84. Earp JA, Ennett ST. Conceptual models for health education research and practice. Health Educ Res. 1991;6:163–71. doi: 10.1093/her/6.2.163.
