Abstract

Fidelity in implementing an intervention is critical to accurately determining and interpreting its effects. It is important to monitor the manner in which a behavioral intervention is implemented (e.g. adaptations, delivery as intended and dose), because few interventions are implemented with 100% fidelity. In this study, high school health teachers implemented the intervention; to attribute study findings to the intervention, it was vital to know the degree to which it was implemented. Therefore, the purposes of this study were to evaluate intervention fidelity and to compare implementation fidelity between the creating opportunities for personal empowerment (COPE) Healthy Lifestyles TEEN (thinking, emotions, exercise, and nutrition) program, the experimental intervention, and Healthy Teens, an attention-control intervention, in a randomized controlled trial with 779 adolescents from 11 high schools in the southwest region of the United States. Thirty teachers participated in this study. Findings indicated that the attention-control teachers implemented their intervention with greater fidelity than the COPE TEEN teachers. The lower fidelity among COPE TEEN teachers may have been due to the novelty of the intervention and the teachers’ unfamiliarity with cognitive-behavioral skills building. It is important to assess novel skill development before experimental interventions begin and to provide corrective feedback during implementation.

Introduction

Fidelity of behavioral interventions, including the methodological strategies to monitor and strengthen the interventions [1], has received considerable attention in the past 3 decades. Fidelity is necessary for accurate assessment and interpretations of treatment effects [2]. Intervention outcomes are a result of what components the intervention contains and how the intervention was delivered rather than just the number of intervention components delivered [3].

Researchers in the early 1980s began to focus on improving characteristics of delivered interventions, including their strength, integrity and effectiveness [4]. Waltz et al. [5] proposed additional methodological improvements to strengthen an intervention, including the assessment of adherence and competence. They advocated for the benefits of using treatment manuals and the need for conducting manipulation checks. The dimensions of treatment receipt and enactment were introduced a short time later [6]. A landmark manuscript focusing on fidelity was published in 2004 by the Treatment Fidelity Workgroup of the NIH Behavior Change Consortium [7]. They recommended evaluating five components of fidelity: study design, provider training, treatment delivery, treatment receipt and enactment of treatment skills. Gearing et al. [8] published a review of aspects of fidelity that were identified in published manuscripts between 1980 and 2009. They developed a guide for evaluating fidelity in four of the five targeted components recommended by the Treatment Fidelity Workgroup. The authors provide a table outlining the assessment of major fidelity components and recommend assessing intervention design, intervention training, monitoring the intervention and monitoring the intervention receipt. Within each category, specific points are categorized for the protocol, execution, maintenance, feedback, threats and measurement. Gearing et al. [8] did not include enactment because an intervention may be delivered with fidelity, but the participant(s) may not be willing or able to apply it.

Despite a researcher’s best intention to design and implement an intervention with fidelity, it is common for one or more aspects of fidelity not to be completed and/or documented [8, 9]. Therefore, it is helpful for ongoing behavioral research to focus ‘strongly on quantification of treatment fidelity rather than assuming that fidelity was achieved because of rigorous design plans’ [10] (p. 53). Documenting the implementation of each component and then quantifying it can help researchers better evaluate intervention fidelity and may also help explain treatment effects or their absence.

Beyond a rigorous design and a plan for monitoring fidelity, researchers are often challenged by a lack of adherence to the program protocol, resulting in the inevitable adaptation of their intervention when it is implemented [11]. ‘It is also necessary to know how a specific intervention should be implemented and under which circumstances it can be successful’ [12] (p. 1). Carvalho et al. [11] identified five types of adaptations that took place in 12 sites that implemented evidence-based interventions: (i) changes to educational materials, (ii) changes to the intended audience, (iii) changes to program delivery, (iv) adding new activities and (v) deleting core elements. It is important to be aware of the types of adaptations that occur frequently in intervention research and to monitor which of them occur during implementation.

The creating opportunities for personal empowerment (COPE) Healthy Lifestyles TEEN (Thinking, Emotions, Exercise and Nutrition) (COPE TEEN) program is a one-semester cognitive-behavioral skills building (CBSB) intervention to improve a teen’s physical and mental health. The program includes cognitive reframing, problem solving, stress management, goal setting, physical activity and nutritional information (Table I). High school health teachers implemented the intervention 1 day per week during their regularly scheduled health class (∼50 min in length). Most health teachers are unfamiliar with CBSB strategies, and many learned these techniques for the first time during training for this intervention. The Healthy Teens control program was also taught over one semester by health teachers in their regularly scheduled health class. Healthy Teens was based on increasing a teen’s health literacy and included topics familiar to health teachers, such as first aid, sun safety and transportation safety (Table I). We were interested to learn whether fidelity would differ between the two groups because of the novel content learned by the COPE TEEN teachers. Therefore, the purposes of this study are to (i) discuss intervention fidelity in the COPE Healthy Lifestyles TEEN program, (ii) describe the fidelity of intervention design, training, delivery and receipt in the COPE TEEN group and (iii) compare fidelity to the intervention between the COPE TEEN and Healthy Teens intervention groups in a prospective randomized controlled trial (RCT), blinded to teachers and participants, to promote mental and physical health in high school teens.

Table I.

Intervention curriculum

| Session no. | COPE TEEN session title | Healthy Teens session title |
|---|---|---|
| 1 | Healthy Lifestyles | Health Literacy |
| 2 | Self-Esteem and Positive Thinking | Sun Safety and Tanning |
| 3 | Setting Goals and Problem-Solving | Allergies and Asthma |
| 4 | Stress and Coping | Health Professions |
| 5 | Dealing with Emotions in Healthy Ways | Oral Hygiene |
| 6 | Personality and Effective Communication | Infectious Diseases |
| 7 | Activity—Let's Keep Moving | Immunizations |
| 8 | Heart Rate and Stretching | Anatomy of the Eye |
| 9 | Nutrition Basics | Anatomy of the Heart |
| 10 | Reading Labels | Genetics |
| 11 | Portion Sizes | Transportation Safety |
| 12 | Eating for Life and Social Eating—Party Heart(y) | Environmental Safety |
| 13 | Snacks | Sustaining the Environment |
| 14 | Healthy Choices | First Aid |
| 15 | Wrap-up | Wrap-up |

Methods

Sample/participants

Health teachers (N = 30) were requested by their school and/or district leadership to participate in the RCT by delivering the intervention in their health courses during one semester. All health teachers referred by their district were eligible to participate.

Students were invited to participate if they were aged 14–16 years and enrolled in health education courses at 11 high schools from two school districts in a large metropolitan city in the southwest United States. To participate in the study, teens needed to provide assent and parental consent and be free from medical conditions that would prevent them from participating in the program’s physical activity component if they were randomized to the COPE TEEN group. Parents of the teens were also invited to participate, but parent participation was not mandatory. A more detailed description of the study methods has been published previously [13].

Data collection

Data were collected between December 2009 and December 2012. Teachers in both intervention groups were introduced to the study during a 1-day training before the start of a school semester. The training for the COPE TEEN teachers consisted of (i) a review of the literature on adolescent/childhood obesity and mental health problems, (ii) a description of the research study aims, objectives and procedures, including prior feedback from teachers, (iii) review of the research protocol, (iv) completion of consent and a background questionnaire covering teaching experience, teaching satisfaction and demographic information, (v) orientation to the program manual, (vi) explanation and demonstration of CBSB, (vii) review of all session content with emphasis on key elements, (viii) demonstration of and practice implementing physical activities that could be done within the limited space of a classroom and (ix) use of pedometers. The training for the Healthy Teens teachers consisted of (i) review of the research protocol, (ii) completion of consent and a background questionnaire covering teaching experience, teaching satisfaction and demographic information, (iii) orientation to the program manual, (iv) review of all attention-control session content with emphasis on key elements and (v) use of pedometers.

After the teacher training, research team members introduced the study to all students in each participating health class during the first week of the semester and sent consent/assent packets home with all teens who expressed interest in participating. Students with assent and parental consent were enrolled in the study. Enrolled students completed questionnaires at baseline, post-intervention, 6-month follow-up and 12-month follow-up. Parent participation included questionnaires at baseline and post-intervention. Additionally, students identified as overweight or obese from their calculated body mass index (BMI) were asked to assent (with parental consent) to provide a fasting finger-stick blood sample for analysis of blood lipids.

Whether students were enrolled in the study or not, all adolescents in the participating health education courses received either (i) a 15-week, 15-session multi-component educational and CBSB intervention with physical activity (COPE TEEN) or (ii) a 15-week, 15-session attention-control program (Healthy Teens) focusing on common adolescent health topics.

Teachers at the 1-day training received a $25 gift card, a t-shirt and a universal serial bus storage device with study materials. Each teacher received $100 for completing questionnaires at the end of the semester. All student and parent participants in the study received gift card incentives for participation; a total of $55 for the students (an additional $30 for blood sampling) and a total of $40 for the parent.

Observations and measures

Research team members observed teachers during intervention implementation. The goal was to observe each teacher, unannounced, at four of the 15 sessions (approximately 25%). The principal investigator and the project manager trained four research team members to use a standardized fidelity observation form. Inter-rater reliability was 90%. Observers were not blinded to group assignment.
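The text does not say which inter-rater reliability statistic produced the 90% figure. As a minimal sketch, assuming simple percent agreement between two observers who co-rated the same session on the dichotomous (yes/no) items, the calculation looks like this; the function name and the ratings are hypothetical, and a chance-corrected index such as Cohen's kappa would usually give a lower value for the same data.

```python
def percent_agreement(ratings_a, ratings_b):
    """Proportion of items on which two observers recorded the same response.

    Assumption: the study's 90% inter-rater reliability is treated here as
    simple percent agreement; the source does not name the statistic.
    """
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("ratings must be non-empty and of equal length")
    matches = sum(a == b for a, b in zip(ratings_a, ratings_b))
    return matches / len(ratings_a)


# Hypothetical yes/no ratings from two observers co-rating one session.
observer_1 = [True, True, False, True, True, True, False, True, True, True]
observer_2 = [True, True, False, True, False, True, False, True, True, True]

print(f"Agreement: {percent_agreement(observer_1, observer_2):.0%}")  # Agreement: 90%
```

Here the observers disagree on one of ten items, giving 90% agreement.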

The fidelity observation form was created by reviewing the literature for information used to assess fidelity through observational methods in prior intervention studies [14, 15]. The goal was an easily completed form to evaluate intervention delivery, with observations focusing on (i) teacher preparation, (ii) presentation of learning objectives, (iii) teacher delivery of the intervention, (iv) adherence to the lesson plan, including the critical intervention subcategories of role play, homework and physical activity (COPE TEEN), (v) participation level of students and (vi) cognitive behavior skills building practice by students (COPE TEEN). Participation of students in both intervention groups and cognitive behavior skills building practice in the COPE TEEN group were included because each was emphasized in the classroom as part of the intervention. Each category was rated on a 5-point Likert scale. Additional dichotomous (yes/no) questions under each category (e.g. ‘PowerPoint® displayed’, ‘Teacher addressed all learning objectives’ and ‘Utilized examples in teacher’s manual’) were intended to help the observer rate the item on the Likert scale (Table II). Responses from the observation form were analyzed individually rather than as a summation score. Six research team members evaluated the form for content validity. A score of 4 out of 5 or greater on the Likert scale and >80% affirmative responses on the dichotomous items were considered desirable levels of fidelity [16].
Observer training consisted of reviewing the protocol for conducting an observation and the items on the observation form, discussing selection of responses and selecting the day for an observation. Background education of observers included (i) training in the intervention content of both the COPE TEEN and Healthy Teens groups, (ii) use of the observation form and (iii) practice observation sessions to improve inter-rater reliability. Before independently observing an intervention session, two or three observers monitored the same session and compared results.
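The two fidelity cutoffs described above can be expressed as simple checks. This is a hypothetical sketch: the function names are ours, the Likert check is applied to a single observer rating, and the dichotomous check is applied to the yes/no counts for one item, which is our reading of how the >80% criterion is meant to be used.

```python
LIKERT_CUTOFF = 4          # "a score of 4 out of 5 or greater"
DICHOTOMOUS_CUTOFF = 0.80  # "> 80% of affirmative responses"


def likert_meets_fidelity(score: int) -> bool:
    """True if a single 5-point Likert rating reaches the desirable level."""
    if not 1 <= score <= 5:
        raise ValueError("Likert scores must be between 1 and 5")
    return score >= LIKERT_CUTOFF


def dichotomous_meets_fidelity(yes: int, no: int) -> bool:
    """True if the share of 'yes' responses strictly exceeds 80%."""
    total = yes + no
    if total == 0:
        raise ValueError("at least one observation is required")
    return yes / total > DICHOTOMOUS_CUTOFF


# COPE TEEN yes/no counts taken from Table II.
print(dichotomous_meets_fidelity(49, 7))   # PowerPoint displayed: 49/56 = 87.5%
print(dichotomous_meets_fidelity(23, 26))  # Objectives handed out: 23/49 = 46.9%
print(likert_meets_fidelity(3))            # a single rating of 3
```

Because the criterion is ">80%", an item completed in exactly 80% of observations does not meet it in this sketch.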

Table II.

Adherence to interventions: GEE modelᵃ (dichotomous items) and counts

| Category/question | Wald chi-square | Sig. | Adjusted odds ratio | 95% CI lower | 95% CI upper | Healthy Teens n | Healthy Teens yes | Healthy Teens no | COPE TEEN n | COPE TEEN yes | COPE TEEN no |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Preparation | | | | | | | | | | | |
| Was the teacher's manual present? | 1.41 | 0.24 | 0.52 | 0.17 | 1.54 | 60 | 41 | 19 | 54 | 32 | 22 |
| Was the PowerPoint presentation displayed to the students? | 5.72 | 0.02 | 0.05 | 0.00 | 0.57 | 59 | 58 | 1 | 56 | 49 | 7 |
| Were the necessary materials for role play, physical activity, etc., present? | 0.32 | 0.57 | 0.65 | 0.14 | 2.95 | 39 | 35 | 4 | 39 | 34 | 5 |
| Was it evident the teacher reviewed the materials prior to the lesson? | 0.00 | 0.97 | 1.02 | 0.41 | 2.53 | 59 | 45 | 14 | 54 | 42 | 12 |
| Learning objectives | | | | | | | | | | | |
| Learning objectives were not mentioned by the teacher | 17.60 | 0.00 | 4.88 | 2.33 | 10.24 | 58 | 8 | 50 | 56 | 14 | 42 |
| Learning objectives were referenced but not explained by the teacher | 6.74 | 0.01 | 4.51 | 1.45 | 14.05 | 57 | 3 | 54 | 56 | 9 | 47 |
| Learning objectives were handed out to students | 5.15 | 0.02 | 0.30 | 0.11 | 0.85 | 40 | 28 | 12 | 49 | 23 | 26 |
| Learning objectives were read/spoken to the students by the teacher | 10.54 | 0.00 | 0.18 | 0.06 | 0.51 | 56 | 51 | 5 | 56 | 41 | 15 |
| Learning objectives were discussed with the students by the teacher | 20.37 | 0.00 | 0.13 | 0.06 | 0.32 | 57 | 51 | 6 | 56 | 37 | 19 |
| Instruction | | | | | | | | | | | |
| Teacher addresses all learning objectives | 2.20 | 0.14 | 0.28 | 0.05 | 1.51 | 59 | 54 | 5 | 54 | 42 | 12 |
| Teacher stayed on task (refrained from irrelevant or lengthy discussions) | 0.02 | 0.89 | 1.13 | 0.22 | 5.69 | 60 | 50 | 10 | 55 | 45 | 10 |
| Teacher summarized important points and related discussion to previous and future topics/concepts | 9.72 | 0.00 | 0.15 | 0.04 | 0.49 | 60 | 58 | 2 | 55 | 49 | 6 |
| Teacher adequately addressed questions that were raised in class | 0.46 | 0.50 | 0.43 | 0.04 | 4.91 | 60 | 58 | 2 | 56 | 54 | 2 |
| Ideas were related to similar concepts | 2.16 | 0.14 | 0.22 | 0.03 | 1.65 | 61 | 59 | 2 | 56 | 52 | 4 |
| Adherence to lesson plan | | | | | | | | | | | |
| Discussed all PowerPoint slides | 0.48 | 0.49 | 0.67 | 0.22 | 2.06 | 55 | 38 | 17 | 48 | 30 | 18 |
| Key points of the lesson were emphasized | 0.45 | 0.50 | 0.61 | 0.14 | 2.59 | 60 | 56 | 4 | 54 | 50 | 4 |
| Utilized examples in teacher's manual | 3.38 | 0.07 | 0.35 | 0.12 | 1.07 | 59 | 50 | 9 | 53 | 37 | 16 |
| Adherence to lesson plan: role play/case scenario | | | | | | | | | | | |
| Completed role play/case scenario for discussion | 1.57 | 0.21 | 0.35 | 0.07 | 1.82 | 31 | 28 | 3 | 49 | 40 | 9 |
| Followed script for role play/case scenario | 3.40 | 0.07 | 0.28 | 0.07 | 1.08 | 30 | 27 | 3 | 49 | 34 | 15 |
| Encouraged discussion of role play/case scenario | 2.28 | 0.13 | 0.33 | 0.08 | 1.39 | 29 | 26 | 3 | 49 | 38 | 11 |
| Adherence to lesson plan: homework | | | | | | | | | | | |
| Assigned homework as indicated in lesson plan | 0.07 | 0.79 | 0.87 | 0.33 | 2.33 | 56 | 44 | 12 | 52 | 41 | 11 |
| Provided homework instructions | 1.29 | 0.26 | 0.59 | 0.24 | 1.47 | 56 | 42 | 14 | 51 | 34 | 17 |
| Collected homework as indicated in lesson plan | 0.02 | 0.90 | 1.07 | 0.41 | 2.79 | 48 | 28 | 20 | 53 | 33 | 20 |
| Encouraged students to complete homework | 0.24 | 0.62 | 1.34 | 0.42 | 4.31 | 55 | 46 | 9 | 54 | 47 | 7 |
| Discussed completed homework and answered questions | 3.74 | 0.05 | 0.39 | 0.15 | 1.01 | 49 | 30 | 19 | 52 | 23 | 29 |
| Active participation | | | | | | | | | | | |
| Students maintained eye contact with teacher and/or PowerPoint presentation | 1.95 | 0.16 | 0.20 | 0.02 | 1.93 | 57 | 56 | 1 | 55 | 51 | 4 |
| Students raised hands | 0.56 | 0.46 | 0.63 | 0.19 | 2.13 | 60 | 57 | 3 | 55 | 51 | 4 |
| Students asked questions | 6.49 | 0.01 | 0.23 | 0.07 | 0.71 | 60 | 57 | 3 | 54 | 46 | 8 |
| Students expressed opinions and personal experiences | 0.64 | 0.42 | 0.64 | 0.21 | 1.93 | 60 | 56 | 4 | 55 | 50 | 5 |
| Practices skills | | | | | | | | | | | |
| Students participated in the skill building activity | 0.63 | 0.43 | 0.48 | 0.08 | 2.94 | 52 | 50 | 2 | 50 | 47 | 3 |
| Students completed the homework | 2.30 | 0.13 | 0.39 | 0.12 | 1.31 | 48 | 40 | 8 | 46 | 31 | 15 |

ᵃGEE model controlled for years of teaching experience.

Ethical considerations

The study was approved by the university Institutional Review Board and each participating school district.

Data analysis

Data analysis included descriptive statistics and logistic regression for repeated measures, using generalized estimating equations (GEE) models for the binary and Likert-scale responses on the observation form to compare teachers’ implementation between the two groups. All GEE models controlled for years of teaching experience because it was significantly higher among the Healthy Teens teachers at baseline [Healthy Teens = 17.09 years (SD = 8.27), COPE TEEN = 11.43 years (SD = 6.24); P = 0.04]. Power was sufficient to detect a difference of one standard deviation.
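The study's odds ratios come from GEE models that account for repeated observations of the same teacher and adjust for years of teaching experience. Reproducing them would require a GEE implementation (for example, the GEE class in Python's statsmodels package) and the observation-level data, which are not reported here. As a simpler sketch of how the odds ratios in Tables II and V are read, the code below computes a crude, unadjusted odds ratio with a Woolf (log-based) 95% CI from the yes/no counts in Table II. We assume, because it matches the direction of the significant rows in Table II, that the ratio is COPE TEEN odds divided by Healthy Teens odds, so values below 1 mean COPE TEEN teachers completed the item less often. The function name is hypothetical.

```python
import math


def crude_odds_ratio(cope_yes, cope_no, ht_yes, ht_no, z=1.96):
    """Unadjusted odds ratio (COPE TEEN vs Healthy Teens) with a Woolf 95% CI.

    Unlike the study's GEE models, this ignores clustering of observations
    within teachers and does not adjust for years of teaching experience.
    """
    cells = (cope_yes, cope_no, ht_yes, ht_no)
    if min(cells) <= 0:
        raise ValueError("all four cells must be positive for the Woolf interval")
    odds_ratio = (cope_yes / cope_no) / (ht_yes / ht_no)
    se_log_or = math.sqrt(sum(1 / c for c in cells))  # standard error of ln(OR)
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper


# "Learning objectives were discussed with the students by the teacher" (Table II):
# COPE TEEN 37 yes / 19 no; Healthy Teens 51 yes / 6 no.
or_, lo, hi = crude_odds_ratio(37, 19, 51, 6)
print(f"crude OR = {or_:.2f} (95% CI {lo:.2f}-{hi:.2f})")
```

The crude ratio for this item is about 0.23, in the same direction as the adjusted odds ratio of 0.13 in Table II; the two differ because the GEE model adjusts for teaching experience and accounts for within-teacher clustering.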

Results

All health teachers approached about involvement in the study consented to participate. Thirty teachers were observed implementing the interventions: 16 Healthy Teens teachers and 14 COPE TEEN teachers. Teachers in both groups were similar in age, years teaching health education and education level (Table III), but Healthy Teens teachers had significantly more years of teaching experience overall than COPE TEEN teachers. A total of 117 observations were completed [Healthy Teens = 61 observations (M = 3.81 per teacher); COPE TEEN = 56 observations (M = 4.00 per teacher)].

Table III.

Teacher demographics by group

| Characteristic | Total (n = 30) M | Total SD | COPE TEEN (n = 14) M | COPE TEEN SD | Healthy Teens (n = 16) M | Healthy Teens SD | P |
|---|---|---|---|---|---|---|---|
| Age (years)ᵃ | 43.23 | 9.96 | 40.21 | 10.67 | 45.88 | 8.79 | 0.13 |
| Teaching experience (years)ᵃ | 14.45 | 7.81 | 11.43 | 6.24 | 17.09 | 8.27 | 0.04 |
| Teaching health education (years)ᵃ | 8.15 | 5.93 | 8.50 | 5.61 | 7.84 | 6.36 | 0.77 |

| Educationᵇ | Total n | Total % | COPE TEEN n | COPE TEEN % | Healthy Teens n | Healthy Teens % | P |
|---|---|---|---|---|---|---|---|
| Four-year college or university | 2 | 6.67 | 2 | 14.29 | 0 | 0.00 | 0.37 |
| Have taken Master's level credits | 9 | 30.00 | 4 | 28.57 | 5 | 31.25 | |
| Completed Master's degree | 18 | 60.00 | 7 | 50.00 | 11 | 68.75 | |
| Have taken Doctoral level credits | 1 | 3.33 | 1 | 7.14 | 0 | 0.00 | |

M, mean; SD, standard deviation.

ᵃt test.

ᵇChi square.
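Table III reports a t test without stating whether variances were pooled. A minimal sketch, using only the rounded summary statistics in the table, computes both Student's pooled t and Welch's t for teaching experience. Converting either statistic to a P value needs a t-distribution CDF, which the Python standard library lacks (SciPy's `scipy.stats.ttest_ind_from_stats` does the whole calculation from summary statistics), so the sketch stops at the statistic and its degrees of freedom. The function names are ours.

```python
import math


def pooled_t(m1, sd1, n1, m2, sd2, n2):
    """Student's two-sample t statistic assuming equal variances."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df
    t = (m1 - m2) / math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return t, df


def welch_t(m1, sd1, n1, m2, sd2, n2):
    """Welch's t statistic with Welch-Satterthwaite degrees of freedom."""
    v1, v2 = sd1**2 / n1, sd2**2 / n2
    t = (m1 - m2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1**2 / (n1 - 1) + v2**2 / (n2 - 1))
    return t, df


# Teaching experience (years) from Table III: (mean, SD, n).
healthy_teens = (17.09, 8.27, 16)
cope_teen = (11.43, 6.24, 14)
print("pooled t = %.2f, df = %d" % pooled_t(*healthy_teens, *cope_teen))
print("Welch t  = %.2f, df = %.1f" % welch_t(*healthy_teens, *cope_teen))
```

Both statistics come out near 2.1 with roughly 28 degrees of freedom, just above the two-sided 5% critical value of about 2.05, which is consistent with the reported P = 0.04. Small discrepancies are expected because the inputs are rounded.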

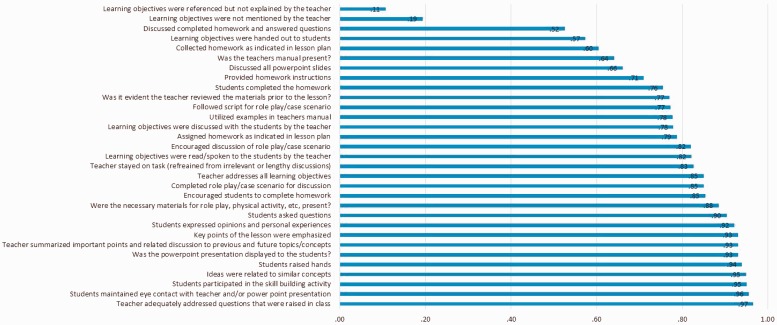

Fidelity in both groups

The proportion of observations in which teachers completed each fidelity component varied (Fig. 1). Only one component was completed in <50% of observations: learning objectives were referenced but not explained by the teacher in 11% of observations. Five components were completed in 50–70% of observations; these related to learning objectives, instruction and adherence to the lesson plan. Fourteen components were completed in 71–89% of observations; these related to learning objectives, preparation, instruction, adherence to the lesson plan and practicing skills. Ten components were completed in >90% of observations and included components regarding preparation, instruction, adherence to the lesson plan, active participation and practicing skills.
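Fig. 1 appears to pool observations from both groups. As a hedged sketch of that calculation, the code below pools the Table II counts for a few items and assigns each to the bands used in the text. Pooling reproduces the 11% figure for the "referenced but not explained" item, which supports this reading but does not confirm it. The text leaves the edges between 70% and 71% and at exactly 90% open, so those cutoffs are our assumption, as are the helper names.

```python
# Yes/no counts from Table II, in the order
# (Healthy Teens yes, Healthy Teens no, COPE TEEN yes, COPE TEEN no).
ITEMS = {
    "Learning objectives were referenced but not explained": (3, 54, 9, 47),
    "Was the teacher's manual present?": (41, 19, 32, 22),
    "Assigned homework as indicated in lesson plan": (44, 12, 41, 11),
    "Teacher adequately addressed questions raised in class": (58, 2, 54, 2),
}


def pooled_completion(ht_yes, ht_no, cope_yes, cope_no):
    """Percentage of all observations (both groups) in which the item was done."""
    return 100 * (ht_yes + cope_yes) / (ht_yes + ht_no + cope_yes + cope_no)


def completion_band(percent):
    """Assign the text's bands; the cutoffs at 70% and 90% are assumed."""
    if percent < 50:
        return "<50%"
    if percent <= 70:
        return "50-70%"
    if percent < 90:
        return "71-89%"
    return "90% or more"


for item, counts in ITEMS.items():
    pct = pooled_completion(*counts)
    print(f"{pct:5.1f}%  {completion_band(pct):>11}  {item}")
```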

Fig. 1.

Proportion of dichotomous fidelity components completed by entire sample.

Narrative review of fidelity in COPE teachers

We completed a detailed accounting of implementation fidelity in the COPE TEEN teachers for the four components of intervention fidelity, based on recommendations by Gearing et al. [8] (Table IV). Overall, most components were addressed in this study, but several aspects of fidelity were not fully measured or addressed. Two examples were (i) the absence of a mechanism to assess teacher skill development before intervention implementation and (ii) the absence of a rigorous protocol to provide corrective feedback when protocol deviations occurred during implementation.

Table IV.

Intervention fidelity components for COPE TEEN

| Component | Design | Training | Delivery | Receipt |
|---|---|---|---|---|
| Protocol | | | | |
| Execution | | | | |
| Maintenance | NA | NA | | |
| Feedback | | | | |
| Threats | Internal | Internal | | |
| Measurement | | A mechanism was not in place to assess teacher skill development for intervention implementation and ongoing delivery | | |

PA, physical activity.

Comparison of fidelity between groups

Adherence to intended core elements (i.e. the Likert-scale items) showed two significant differences (Table V). After adjustment for years of teaching experience, Healthy Teens teachers were rated as presenting the learning objectives more clearly than COPE TEEN teachers [OR: 0.51; 95% confidence interval (CI): 0.35–0.75; P = 0.001] and as presenting the session material more appropriately, as detailed in the manual (OR: 0.68; 95% CI: 0.46–1.00; P = 0.05). Adherence to prescribed interventionist behaviors and teens’ responses (i.e. the dichotomous questions) was also analyzed (Table II). Several significant differences were present between the two groups, all favoring the Healthy Teens teachers: (i) more teachers displayed the PowerPoint® presentations during the intervention (OR: 0.05; 95% CI: 0.00–0.57; P = 0.02), (ii) the learning objectives were presented more completely on every learning-objective item (see Table II for specifics), (iii) teachers more often summarized important points and related the discussion to previous and future topics (OR: 0.15; 95% CI: 0.04–0.49; P = 0.002), (iv) teachers more often discussed completed homework and answered questions (OR: 0.39; 95% CI: 0.15–1.01; P = 0.05) and (v) students participated more actively, as shown by students asking questions (OR: 0.23; 95% CI: 0.07–0.71; P = 0.01).

Table V.

Adherence to intervention core elements: GEEᵃ (Likert-scale items)

| Question | Wald chi-square | Sig. | Adjusted odds ratio | 95% CI lower | 95% CI upper | Healthy Teens n | Healthy Teens meanᵇ | Healthy Teens SE | COPE TEEN n | COPE TEEN meanᵇ | COPE TEEN SE |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Was the teacher prepared to teach the lesson? | 1.40 | 0.24 | 0.80 | 0.55 | 1.16 | 59 | 3.96 | 1.44 | 53 | 3.73 | 1.41 |
| Were the learning objectives clear to the students? | 11.92 | 0.00 | 0.51 | 0.35 | 0.75 | 60 | 4.03 | 1.71 | 56 | 3.36 | 1.67 |
| How appropriately was the material presented by the teacher? | 3.92 | 0.05 | 0.68 | 0.46 | 1.00 | 60 | 4.30 | 1.35 | 56 | 3.91 | 1.38 |
| Did the teacher faithfully follow the lesson plans in the curriculum? Instruction | 0.74 | 0.39 | 0.83 | 0.54 | 1.27 | 58 | 4.11 | 1.47 | 56 | 3.92 | 1.54 |
| Did the teacher faithfully follow the lesson plans in the curriculum? Role play/case scenario discussion | 0.21 | 0.64 | 0.81 | 0.33 | 2.00 | 40 | 3.53 | 2.78 | 52 | 3.32 | 3.04 |
| Did the teacher faithfully follow the lesson plans in the curriculum? Homework | 2.76 | 0.10 | 0.63 | 0.36 | 1.09 | 55 | 3.91 | 2.15 | 56 | 3.44 | 2.18 |
| How actively did the students participate in the lesson? | 0.25 | 0.62 | 0.88 | 0.54 | 1.44 | 59 | 4.31 | 1.73 | 56 | 4.19 | 1.79 |
| How many students practiced the skills and/or messages of the lesson? | 3.09 | 0.08 | 0.63 | 0.38 | 1.05 | 55 | 4.33 | 1.91 | 56 | 3.87 | 2.00 |

ᵃGEE model controlled for years of teaching experience.

ᵇAdjusted mean controlled for years of teaching experience.

Discussion

This study allowed for careful assessment of the fidelity of intervention implementation. The fidelity observation form created for this intervention was comprehensive and addressed important fidelity components. Overall, 17 of the 31 (55%) dichotomous items were completed by the teachers 80% or more of the time. Lower fidelity (<70%) was observed for components related to learning objectives, homework, teacher’s manual being present and discussing all PowerPoint® slides. The greatest fidelity (>90%) was observed in teachers addressing questions, students’ active participation and several aspects of implementing the intervention.

It was enlightening to compare implementation between the COPE TEEN and Healthy Teens groups, which implemented different curricula. This is the first study that we are aware of to document a comparison of implementation fidelity between an experimental intervention group and an attention-control group. In this study, significant differences in implementation fidelity were identified between COPE TEEN and Healthy Teens. All significant differences favored the Healthy Teens teachers and involved all aspects of presenting the learning objectives, how appropriately the material was presented, whether the PowerPoint® presentation was displayed, summarization of important points by the teacher, discussion of completed homework and student participation through asking questions. Fidelity may have been greater among the Healthy Teens teachers because they had significantly more years of teaching experience than the COPE TEEN teachers. It is challenging to determine how much the limitations in fidelity affected the study’s outcomes. Despite lower fidelity among the COPE TEEN teachers, important outcome differences were achieved between the two groups of students. The COPE TEEN group had significantly (i) more steps per day (P = 0.03), (ii) lower BMI (P = 0.01), (iii) less depression among students who started the study with severe depressive symptoms (P = 0.02), (iv) lower alcohol use (P = 0.04), (v) higher average scores on all Social Skills Rating Scale subscales (P < 0.05) and (vi) higher health course grades (P = 0.01) [17]. That positive outcomes were achieved despite lower fidelity suggests that teens who receive the COPE TEEN intervention may benefit even more if it is delivered with greater fidelity in the future.

Some possible effects of the fidelity differences between groups to consider include (i) the likelihood that the scripted sessions were not taught per the manual when the PowerPoint® presentations were not displayed, (ii) the students’ lack of clear understanding of the content for the session when the learning objectives were not discussed per the manual, (iii) teacher reinforcement of session content may not have been sufficient without the discussion of each session’s homework assignment, (iv) fewer questions posed by the students may have indicated that the students were less ‘engaged’ in the session and (v) cognitive behavior skills building practice may not have been appropriately presented and practiced when the materials were not appropriately presented.

None of the teachers in either group implemented the intervention with 100% fidelity. Durlak and DuPre [16] reviewed the literature on the influence of implementation on program outcomes and noted that it is unrealistic to expect perfect or near-perfect implementation. In their review, interventions achieved positive program results at implementation levels of around 60%, with most reviewed studies achieving 80% fidelity.

Numerous interrelated factors, including adherence and competence, must be considered when evaluating intervention outcomes [18]. Adherence and competence have been shown to independently predict outcomes [19]. Adherence to the intended intervention is an important aspect of fidelity. In this study, the Healthy Teens teachers adhered more closely to the prescribed intervention. Importantly, the content of the Healthy Teens program aligned more closely with what would be expected as core knowledge of health teachers and with district curriculum requirements (Table I). Little new knowledge or skill had to be learned to implement the Healthy Teens program, because it covered a variety of common teen health topics. The Healthy Teens program did not include any CBSB activities and may have been easier and more familiar to teach. Conversely, the COPE TEEN curriculum contained new content about cognitive-behavioral skills building that the teachers first had to learn themselves and then teach to their students. This new content centered primarily on understanding and implementing CBSB activities (Table I) [13]. CBSB activities embedded in COPE TEEN included setting goals, increasing communication, recognizing unhealthy habits, becoming aware of stress responses and understanding the interconnection of thoughts and actions. Skills developed during this intervention help teens recognize and think about their unhealthy behaviors and have been shown to improve behavior change [17]. We theorize that the lower adherence to the COPE TEEN intervention may be related to the teachers’ limited proficiency in teaching the new content. We anticipate that, with added exposure to and experience in teaching the curriculum, the intervention teachers’ adherence and competence would improve.

Despite the limitations in the COPE TEEN teachers’ implementation fidelity, significant favorable results were found in the study [20]. One theoretical model of program implementation proposes that the effects of fidelity on program outcomes are moderated by participant responsiveness [21]. The active participation of students and teacher responsiveness to questions may have facilitated achievement of some anticipated program outcomes. Wenz-Gross [22] introduced a year-long curriculum to preschool teachers to improve problematic behaviors. Teachers received direct support for 2 years, and implementation was then monitored in the third year. By year 3, the teachers were able to implement the intervention independently with high fidelity. This finding suggests that individuals who are trained but new to delivering an intervention may need (i) more frequent educational sessions and (ii) ongoing supervision and consultation throughout the program to improve their confidence and ability to deliver the intervention as planned and to emphasize its key components.

Another factor to consider when evaluating outcomes in an intervention program is the degree to which the program was adapted. Carvalho et al. [11] suggested, ‘The tension between fidelity and adaptation might well be reframed as a natural process of program evolution’. Durlak and DuPre [16] grouped the factors affecting implementation into five categories: characteristics of the innovation, of the providers and of the community, and features of the prevention delivery and support systems. In our study, teachers frequently adapted the interventions by changing the PowerPoint® presentations. Other teachers omitted curriculum activities or added materials that were not in the manualized intervention. These changes were unexpected because both interventions had been designed for the students’ age group, were culturally relevant and were intended for delivery in a health course. Future healthy lifestyle interventions in the school setting would benefit from additional teacher input and collaboration during the development phase, when critical intervention content and plans for its delivery are created. In addition, a protocol for addressing deviations observed during fidelity checks must be in place before the intervention is implemented.

Lessons learned and implications for future intervention research

Durlak and DuPre [16] suggest eight steps that can be taken to improve implementation: ‘(i) specify the essential ingredients of an intervention; (ii) collaborate with change agents in field settings to tailor the program to the target setting; (iii) obtain a clear commitment to administer the agreed-upon intervention; (iv) train change agents to conduct the program effectively; (v) provide on-going supervision and consultation once the program has begun; (vi) be ready for unexpected problems; (vii) do pilot work and (viii) designate staff with responsibilities for implementation’ (p. 14). LaChausse et al. [23] also identified a need for enhanced, comprehensive teacher training rather than one-shot curriculum training to improve teachers’ implementation fidelity.

In our study, we recognized the need to involve teachers early in the process. School administrators and school boards must demonstrate interest and buy-in at the outset, but that interest in the intervention program and research study must quickly filter down to the teachers so that the research team can interact directly with them about their participation. Another important factor is obtaining teacher input on the intervention at several planning stages. Information elicited from teachers before implementation can be used to adapt the intervention as needed and will improve its integrity. A debriefing after each session would allow teachers to share their experience implementing the intervention and would provide further feedback for future revisions and improvements. In our post-implementation evaluation survey, 79% of COPE TEEN teachers indicated that they would recommend one or more changes to the intervention. Less than half (43%) of the COPE TEEN teachers indicated that they had enough time to present all of the PowerPoint® slides, fully discuss the content and initiate an in-class physical activity. Fifty-seven percent of the COPE TEEN teachers indicated that the program provided them with new skills and/or useful knowledge. We recommend assigning a study team member as a mentor to support the teacher during the first few intervention sessions as he/she implements the new content. Several teachers also indicated that they would have liked a more flexible curriculum so that they could align the COPE TEEN session content with the required school district core health curriculum, because much of the same content (e.g. coping, stress, healthy nutrition and physical activity) is included in both. Ultimately, we believe that teacher buy-in for delivering the intervention, and therefore fidelity, would have been greater if teachers had been able to provide more input early on and had received direct support in delivering the intervention.

Limitations

This study had several limitations, including non-blinded observers, a small sample size and, owing to scheduling issues, occasional announcement of class observations to the teacher before the planned session. Observers made every attempt to arrive at the classroom unannounced to observe the intervention. However, teachers were able to choose the day of the week on which they presented the COPE TEEN or Healthy Teens intervention, and the predetermined day often changed because of school scheduling or other classroom demands, so the observer sometimes arrived when the teacher was not delivering the intervention. To avoid numerous missed observation opportunities, some observations were scheduled with the teacher for a specific day. Additionally, the observations covered only a random sample of program sessions, and the results are assumed to generalize to all sessions. Our intervention also lacked a rigorous protocol for corrective feedback and ongoing support and coaching for program facilitators, which we now recognize as vitally important to include in future intervention work. Finally, we did not systematically document teachers’ adaptations of the interventions.

Although coaching has been documented as beneficial for increasing implementation fidelity (particularly when program content and skills are novel), translating this model into a school or public health setting poses inherent challenges [24]. Coaching strategies that may suit the school setting include peer supervision, coaching by telephone, an informational intervention blog or session-specific tips delivered by email before each lesson.

Conclusions

Fidelity to the intervention is essential to measure in intervention research, and each aspect of fidelity needs to be carefully addressed early in the planning of the study’s implementation. To sustain intervention programs in the school setting, collaboration with the individuals who will be responsible for delivering the intervention long after the research team leaves is paramount. Early and sustained input from teachers during development of the intervention content, protocol planning and implementation is vital. Adaptation, without loss of key programmatic ingredients, may be necessary to accommodate implementation of healthy lifestyle interventions such as COPE TEEN in schools. Frequent monitoring by the research team, with planned corrective follow-up and support, is necessary to improve delivery fidelity.

Acknowledgements

Special thanks to Kim Weberg, Kristine Hartmann, Alan Moreno, Meghan Paradise and Jonathon Rose for all of their efforts on and dedication to this study.

Funding

This work was supported by the National Institutes of Health/National Institute of Nursing Research (grant number 1R01NR012171) (PI: Bernadette Melnyk).

Conflict of interest statement

None declared.

References

1. Borrelli B, Sepinwall D, Ernst D, et al. A new tool to assess treatment fidelity and evaluation of treatment fidelity across 10 years of health behavior research. J Consult Clin Psychol. 2005;73:852–60. doi: 10.1037/0022-006X.73.5.852.

2. Perepletchikova F, Kazdin AE. Treatment integrity and therapeutic change: issues and research recommendations. Clin Psychol Sci Pract. 2005;12:365–83.

3. Song MK, Happ MB, Sandelowski M. Development of a tool to assess fidelity to a psycho-educational intervention. J Adv Nurs. 2010;66:673–82. doi: 10.1111/j.1365-2648.2009.05216.x.

4. Yeaton WH, Sechrest L. Critical dimensions in the choice and maintenance of successful treatments: strength, integrity, and effectiveness. J Consult Clin Psychol. 1981;49:156–67. doi: 10.1037//0022-006x.49.2.156.

5. Waltz J, Addis ME, Koerner K, et al. Testing the integrity of a psychotherapy protocol: assessment of adherence and competence. J Consult Clin Psychol. 1993;61:620–30. doi: 10.1037//0022-006x.61.4.620.

6. Lichstein KL, Riedel BW, Grieve R. Fair tests of clinical trials: a treatment implementation model. Adv Behav Res Ther. 1994;16:1–29.

7. Bellg AJ, Borrelli B, Resnick B, et al. Enhancing treatment fidelity in health behavior change studies: best practices and recommendations from the NIH Behavior Change Consortium. Health Psychol. 2004;23:443–51. doi: 10.1037/0278-6133.23.5.443.

8. Gearing RE, El-Bassel N, Ghesquiere A, et al. Major ingredients of fidelity: a review and scientific guide to improving quality of intervention research implementation. Clin Psychol Rev. 2011;31:79–88. doi: 10.1016/j.cpr.2010.09.007.

9. Schober I, Sharpe H, Schmidt U. The reporting of fidelity measures in primary prevention programmes for eating disorders in schools. Eur Eat Disord Rev. 2013;21:374–81. doi: 10.1002/erv.2243.

10. Resnick B, Bellg AJ, Borrelli B, et al. Examples of implementation and evaluation of treatment fidelity in the BCC studies: where we are and where we need to go. Ann Behav Med. 2005;29:46–54. doi: 10.1207/s15324796abm2902s_8.

11. Carvalho ML, Honeycutt S, Escoffery C, et al. Balancing fidelity and adaptation: implementing evidence-based chronic disease prevention programs. J Public Health Manag Pract. 2013;19:348–56. doi: 10.1097/PHH.0b013e31826d80eb.

12. Van Daele T, Van Audenhove C, Hermans D, et al. Empowerment implementation: enhancing fidelity and adaptation in a psycho-educational intervention. Health Promot Int. 2014;29:212–22. doi: 10.1093/heapro/das070.

13. Melnyk BM, Kelly S, Jacobson D, et al. The COPE Healthy Lifestyles TEEN randomized controlled trial with culturally diverse high school adolescents: baseline characteristics and methods. Contemp Clin Trials. 2013;36:41–53. doi: 10.1016/j.cct.2013.05.013.

14. Melde C, Esbensen FA, Tusinski K. Addressing program fidelity using onsite observations and program provider descriptions of program delivery. Eval Rev. 2006;30:714–40. doi: 10.1177/0193841X06293412.

15. Sánchez V, Steckler A, Nitirat P, et al. Fidelity of implementation in a treatment effectiveness trial of Reconnecting Youth. Health Educ Res. 2007;22:95–107. doi: 10.1093/her/cyl052.

16. Durlak JA, DuPre EP. Implementation matters: a review of research on the influence of implementation on program outcomes and the factors affecting implementation. Am J Community Psychol. 2008;41:327–50. doi: 10.1007/s10464-008-9165-0.

17. Melnyk BM, Jacobson D, Kelly S, et al. Promoting healthy lifestyles in high school adolescents: a randomized controlled trial. Am J Prev Med. 2013;45:407–15. doi: 10.1016/j.amepre.2013.05.013.

18. Clarke G. Intervention fidelity in the psychosocial prevention and treatment of adolescent depression. J Prev Intervent Community. 1998;17:19–33.

19. Smith JD, Dishion TJ, Shaw DS, et al. Indirect effects of fidelity to the family check-up on changes in parenting and early childhood problem behaviors. J Consult Clin Psychol. 2013;81:962–74. doi: 10.1037/a0033950.

20. Melnyk BM, Jacobson D, Kelly S, et al. Promoting healthy lifestyles in high school adolescents: a randomized controlled trial. Am J Prev Med. 2013;45:407–15. doi: 10.1016/j.amepre.2013.05.013.

21. Berkel C, Mauricio AM, Schoenfelder E, et al. Putting the pieces together: an integrated model of program implementation. Prev Sci. 2011;12:23–33. doi: 10.1007/s11121-010-0186-1.

22. Wenz-Gross M, Upshur C. Implementing a primary prevention social skills intervention in urban preschools: factors associated with quality and fidelity. Early Educ Dev. 2012;23:427–50. doi: 10.1080/10409289.2011.589043.

23. LaChausse RG, Clark KR, Chapple S. Beyond teacher training: the critical role of professional development in maintaining curriculum fidelity. J Adolesc Health. 2014;54:S53–8. doi: 10.1016/j.jadohealth.2013.12.029.

24. Ringwalt CL, Pankratz MM, Jackson-Newsom J, et al. Three-year trajectory of teachers’ fidelity to a drug prevention curriculum. Prev Sci. 2010;11:67–76. doi: 10.1007/s11121-009-0150-0.