Abstract

ERP responses to spoken words are sensitive to both rhyming effects and effects of associated spelling patterns. Are such effects automatically elicited by spoken words or dependent on selectively attending to phonology? To address this question, ERP responses to spoken word pairs were investigated under two equally demanding listening tasks that directed selective attention either to sub-syllabic phonology (i.e., rhyme judgments) or to melodies embedded within the words. ERPs elicited when participants selectively attended to phonology demonstrated a rhyming effect that was concurrent with online stimulus encoding and an orthographic effect that emerged later. ERP responses to the same stimuli presented under melodic focus, however, showed no evidence of sensitivity to rhyme or spelling patterns. Results reveal limitations to the automaticity of such ERP effects, suggesting that rhyme effects may depend, at least to some degree, on allocation of attention to phonology, which may in turn activate task-incidental orthographic information.

Keywords: Selective attention, Auditory words, Phonological similarity, Orthographic similarity, Phonology, Rhyming words, ERP, Topography

1. Introduction

Rhyming, a central device in poetry, songs, and children’s books, rests upon similarities between spoken words at a sub-syllabic level. ERP responses to spoken word pairs reveal sensitivity to rhyming that is so rapid that these effects overlap with online word encoding processes (e.g., Praamstra & Stegeman, 1993). Spoken rhyming words often share corresponding spelling patterns, which contribute to their perceived similarity. These orthographic associations can also influence ERP responses to spoken words (e.g., Pattamadilok, Perre, & Ziegler, 2011). Such effects raise questions about the interaction between phonological and orthographic representations during auditory word processing. Do the auditory ERP effects of rhyming and associated spelling reflect automatic, stimulus-driven activation of phonological and orthographic codes regardless of task constraints? Alternatively, do these effects reflect an active process that depends on the listener selectively attending to such linguistic information? Recent cognitive neuroscience investigations have sought to delineate how top-down factors, such as selective attention to phonology (McCandliss & Yoncheva, 2011), interact with effects defined by stimulus properties (e.g., rhyming pairs, words with shared spelling patterns), and thereby to clarify what governs the bottom-up activation of cognitive codes associated with auditory words. Here we combine behavioral and event-related potential (ERP) measures, which can track the temporal dynamics of selective attention and stimulus-driven responses, to examine these questions.

ERPs to auditory word pairs exhibit robust bottom-up sensitivity to phonological similarity (Dumay et al., 2001; Praamstra & Stegeman, 1993). Contrasting the second-word ERP of rhyming vs. non-rhyming word pairs typically reveals a centroparietal N400-like modulation (Coch, Grossi, Coffey-Corina, Holcomb, & Neville, 2003). This ERP rhyming effect has been reported across a range of linguistic tasks varying in the degree to which rhyme information is task-relevant, for instance rhyme judgments (Praamstra, Meyer, & Levelt, 1994), verbal shadowing (Dumay et al., 2001), and lexical decision (Praamstra et al., 1994). Across these diverse tasks, converging findings indicate that ERP responses to auditory words show sensitivity to rhyme even when such information is not explicit in task demands, supporting the idea that merely presenting auditory word stimuli engages a form of obligatory phonological processing at a level that is sensitive to rhyming information.

Remarkably, spelling knowledge plays a significant role in auditory rhyme effects. Early support for an orthographic influence on rhyming came from findings of shorter reaction times to verify that two auditory words rhyme when their spellings are similar (deed--greed) as opposed to dissimilar (deed--bead) (Seidenberg & Tanenhaus, 1979). Conversely, the time needed to reject non-rhyming auditory pairs increased in the face of overlapping spelling patterns (couch--touch). Importantly, such reaction-time patterns occur under auditory conditions involving no visual print and involving distractor trials that rule out strategic effects of intentionally considering spelling information. The demonstration that task-irrelevant orthographic information associated with spoken words can enhance Stroop interference for printed words (Tanenhaus, Flanigan, & Seidenberg, 1980) further supported the notion that orthographic modulation of auditory rhyme effects is highly automatic, reflecting stimulus-driven processes. This work catalyzed a wide range of investigations of orthographic influences on auditory word decisions, including orthographic feedback (sound-to-spelling) consistency contrasts (Ziegler & Ferrand, 1998) and auditory priming (Chereau, Gaskell, & Dumay, 2007). Across this literature, such effects are routinely interpreted as stimulus-driven activation of associated visual word form representations, which can be captured in models of interactive activation between orthography and phonology (McClelland & Rumelhart, 1981) and which reflect a set of rapid, automatic processes largely independent of attention (Posner & Snyder, 1975).

Understanding the influence of orthography on spoken word perception has recently been approached using ERP methods, whose fine temporal precision enables linking experimental effects to the time-course of perception, and transcranial magnetic stimulation (TMS), which offers insights into both time-course and causality (Poldrack, 2006). In one such study, TMS disruption of phonological, but not of orthographic, regions abolished the advantage of orthographic feedback consistency in an auditory lexical decision task, suggesting a lack of concurrent recruitment of spelling information during word encoding (Pattamadilok, Knierim, Kawabata Duncan, & Devlin, 2010). Furthermore, ERP patterns related to orthographic effects on auditory word perception have demonstrated considerable sensitivity to differences in task demands (Pattamadilok et al., 2011). This variability based on specific stimulus/task combinations raises the question: do findings reflect bottom-up interactions between orthographic and phonological codes or rather more active processes imposed in a top-down fashion?

We propose that selective attention to phonology is a key modulator of rhyming and associated orthographic effects when processing spoken words. Following the work of Desimone and Duncan (1995), we refer to selective attention as a top-down process biasing competition between stimulus features, with effects cascading from perception to higher cognitive functions. Thus, experimentally isolating the impact of selective attention requires a paradigm that manipulates only participants’ focus on certain cues within identical stimuli (e.g., tones superimposed on spoken words) while keeping task difficulty constant. Using this selective attention approach in an fMRI study (Yoncheva, Zevin, Maurer, & McCandliss, 2010b), we identified a left-hemispheric network specifically recruited when subjects attended to rhyming words rather than to another, equally demanding, acoustic dimension. This network notably included the visual word form area, suggesting potential activation of orthographic codes when attention is directed to phonology. The temporal precision of ERP measures may provide additional insights into the influence of selective attention on the automaticity of such phonological and orthographic effects, as well as their relative time-course in relation to online encoding of stimulus information and response execution.

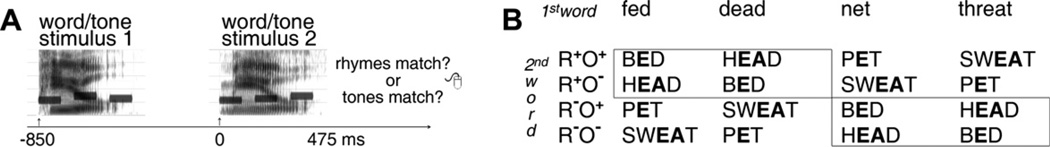

This study’s goals are twofold: (1) to use ERP responses to isolate the time-course of the influence of selective attention to phonology on auditory rhyming effects and (2) to investigate orthographic contributions to these rhyming effects induced by selective attention to phonology. To this end, we used a design similar to that of Yoncheva et al. (2010b), with stimuli consisting of tones superimposed on word pairs. The same stimulus pairs of rhyming and non-rhyming auditory words were presented under equally challenging task conditions, focusing attention either on rhyme (i.e., phonological focus) or on melodic match judgments of the tone triplets embedded within the words (i.e., melodic focus), while ERPs were recorded (Fig. 1A). Further, auditory word stimuli were selected such that every phonological rime unit was associated with two alternative spellings, enabling direct examination of the effect of orthography. Following the logic and design of the orthographic manipulation within rhyming pairs introduced in the seminal paper by Seidenberg and Tanenhaus (1979), we contrasted ERPs to rhyming pairs that shared entire rime-unit spellings (R+O+) with rhyming pairs that contained a deviation in the spelling of the nucleus, the coda, or, in some instances, both (R+O−). Unlike previous studies, which presented a unique set of words with special properties to examine the case in which an entire orthographic rime unit was shared across non-rhyming words, we counterbalanced the same set of second-word stimuli used in the R+O+ vs. R+O− contrast into a non-rhyming condition (Fig. 1B). This manipulation allowed us to investigate whether this same orthographic contrast produced opposite ERP effects when placed in the context of a non-rhyming word pair.

Fig. 1.

Task and design. (A) Every trial presented a pair of auditory stimuli, each consisting of a word overlapping with a tone triplet. Based on a prior task cue, subjects decided whether or not the two words rhymed (phonological focus) or, alternatively, whether or not the tone triplets matched (melodic focus). ERPs time-locked to the onset of stimulus 2 were analyzed. (B) Four conditions (R+O+, R+O−, R−O+, R−O−) were created based on the rhyme/orthography relationship within a word pair. The orthographic similarity effect was captured by contrasting trials in which the second word shared spelling with the first one with trials in which it did not (within R+, first word fed: O+ (bed) vs. O− (head); within R−, first word net: O+ (bed) vs. O− (head)). The rhyming effect involved contrasting the second word of a rhyme (R+) pair with that of a non-rhyme (R−) pair.

2. Results

2.1. Behavioral task effects

Rhyming and melodic match judgments were equally challenging in terms of accuracy (phonological focus: M = 91.94%, SD = 4.50; melodic focus: M = 93.41%, SD = 4.35; t(15) = 1.32, p = 0.21), whereas reaction times were slower for rhyming judgments (phonological focus: M = 970.47 ms, SD = 70.92; melodic focus: M = 900.47 ms, SD = 96.05; t(15) = 3.07, p < 0.01).

2.2. Rhyming effect (R+ vs. R−)

2.2.1. Phonological focus

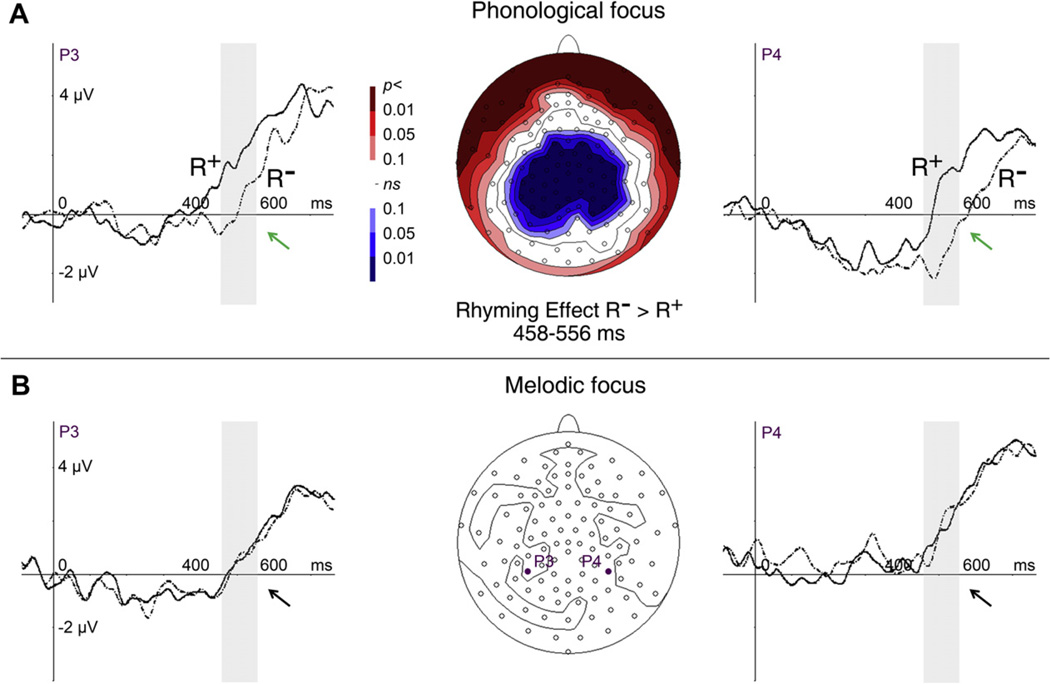

An ERP rhyming effect manifested 458–556 ms after stimulus two onset, an interval corresponding on average to the offset of the auditory word (as indicated by a significant effect in the time-point by time-point TANOVA for R+ vs. R−). Across the 458–556 ms averaged time-window, contrasting rhyme vs. nonrhyme pairs revealed a whole-map topographic difference (normalized TANOVA: p < 0.001; p-values at each electrode plotted in Fig. 2). This rhyming effect was independent of map strength (GFP rhyme vs. non-rhyme: t(15) = −0.20, p = 0.84).

Fig. 2.

ERP rhyming effect. (A) Under phonological focus, rhyme pairs (R+; solid line) show enhanced positivity over parietal sites (illustrated in the left and right waveform panels) relative to non-rhyme pairs (R−; dashed line). The topographic distribution of this effect (p-value at each site, middle panel) is consistent with a whole-map modulation. (B) Under melodic focus, there are no significant differences between rhyme and non-rhyme pairs.

No behavioral differences were observed for rhyme vs. nonrhyme trials (accuracy: t(15) = 0.77, p = 0.44; RT: t(15) = 0.47, p = 0.64).

2.2.2. Melodic focus

No rhyming effect emerged in the ERP, across the trial (p > 0.05 time-point by time-point TANOVA) or within the 458–556 ms averaged time-window (normalized TANOVA: p = 0.743; GFP: t(15) = 1.14, p = 0.27). Similarly, there were no behavioral differences when hearing rhyme vs. non-rhyme pairs in the tone judgment task (accuracy: t(15) = −1.07, p = 0.30; RT: t(15) = 0.16, p = 0.87).

2.3. Orthographic similarity effect (R+O+ vs. R+O−; R−O+ vs. R−O−)

2.3.1. Phonological focus

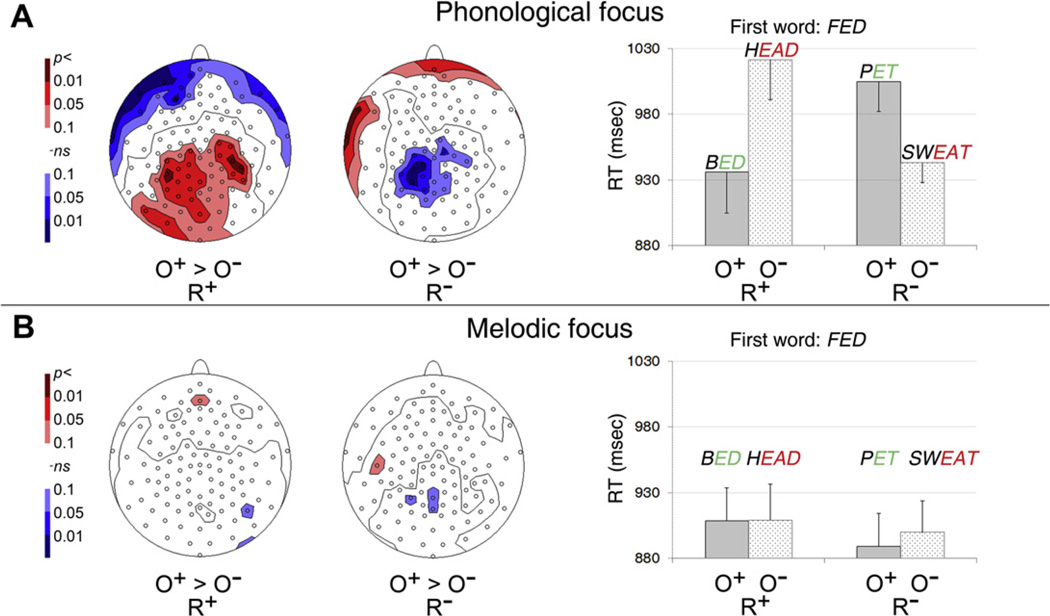

Orthographic similarity ERP differences were present 702–730 ms after stimulus two onset, as indicated by a significant effect in the time-point by time-point TANOVA for R+O+ vs. R+O−. The orthographic similarity modulation of rhyme trials manifested as a topographic difference (normalized TANOVA: p < 0.001) accompanied by a map strength difference (GFP: t(15) = 2.62, p < 0.05). Significant orthographic effects were corroborated for non-rhyme trials, again with a map strength difference (GFP: t(15) = −2.32, p < 0.05) and a topographic difference (normalized TANOVA: p < 0.001), but with reversed polarity compared to the difference pattern for rhyme trials (Fig. 3).

Fig. 3.

ERP and behavioral orthographic similarity effect. (A) Under phonological focus, orthographic similarity produces post-perceptual (702–730 ms) whole-map effects (p < 0.05), exhibiting reversed polarities for rhyme (R+) relative to non-rhyme (R−) pairs. A corresponding effect of orthography on reaction times during rhyming judgments is found. (B) Under melodic focus, no effect of orthography is evident in either ERP or behavioral responses.

Orthographic similarity produced a robust behavioral effect on rhyming judgments (Fig. 3). For rhyme pairs, identical rime-unit spelling resulted in superior performance relative to differing spelling (R+O+ vs. R+O−: accuracy t(15) = 3.27, p < 0.005; RT t(15) = −3.97, p < 0.001). For non-rhyme pairs, in contrast, orthographic similarity led to worse task performance (R−O+ vs. R−O−: accuracy t(15) = −3.76, p < 0.005; RT t(15) = 3.51, p < 0.005).

2.3.2. Melodic focus

There were no significant orthographic ERP effects for rhyme or non-rhyme trials (all p’s > 0.34). Similarly, orthographic similarity did not impact behavioral performance (all p’s > 0.41).

3. Discussion

Selective attention to phonological information drastically modulated auditory word processing. Extending previous fMRI findings, this complementary ERP investigation demonstrated that although spelling consideration was neither required for accurate task performance nor necessarily beneficial, associated orthography biased spoken word ERP topographies when participants selectively focused on rhyme-relevant information. Rhyming effects coincided with processes pertinent to spoken word encoding, emerging only when attending to phonological codes within words. Notably, both the orthographic and the rhyming effects were eliminated when attention was drawn away from phonology.

ERP effects for both auditory rhyming and orthographic similarity manifested only when participants selectively attended to phonology. Potential stimulus-class confounding factors were controlled for by examining ERP responses to the same set of spoken words when paired in conditions where a minimal corresponding orthographic contrast (e.g., ee--ea) was preserved across the two contexts of rhymes and non-rhymes. We found that such subtle orthographic contrasts can influence spoken word ERPs, even when no explicit decision is required for the associated spelling, suggesting a degree of automaticity. This complements similar conclusions from studies that contrast ERPs to auditory words that have feedback-consistent vs. feedback-inconsistent relationships between phonology and orthography (Pattamadilok, Perre, Dufau, & Ziegler, 2009; Pattamadilok et al., 2011). In the current study, the absence of an orthographic effect when auditory rhymes are presented under conditions that prevent selective focus on phonology supports the notion that selective attention to phonological information is pivotal in driving the orthographic engagement during spoken word processing.

Behaviorally, greater orthographic similarity led to superior performance when verifying rhyming pairs and worse performance when rejecting non-rhymes. This pattern of results corroborates findings from classic auditory rhyming studies (Seidenberg & Tanenhaus, 1979), which, unlike the present study, sought to equate the degree of orthographic overlap between R+O+ and R−O+ conditions by selecting words with highly inconsistent pronunciations (e.g., couch--touch). The lack of orthographic effects when attention was diverted from phonology argues against a strong form of orthographic automaticity, which might be elicited by the mere presentation of auditory words when one’s attention is focused on listening to those stimuli for a different purpose (Chereau et al., 2007; Tanenhaus et al., 1980; cf. Damian & Bowers, 2010). Furthermore, orthographic similarity elicited parallel effects in ERP topographies and behavioral data. Previous research has separated effects relevant to early perceptual vs. late motor-response processes by targeting a specific ERP component (e.g., the lateralized readiness potential) or by analyzing response-locked ERPs. Here we employed a data-driven analysis with fine temporal sensitivity to between-condition differences, linking effects that occur while the stimulus is unfolding to encoding, and subsequent effects to decision-making. Given its timing within the trial (i.e., after the offset of the second spoken word of the pair), the ERP orthographic similarity modulation likely reflects post-perceptual stimulus evaluation and comparison processes. This convergence of ERP and behavioral patterns suggests that the obligatory recruitment of orthographic information driven by selective attention to phonology during auditory rhyming may be relevant to behavioral outcomes.

The finding of a modulation of auditory word encoding during rhyming fits with a wide array of ERP findings from linguistic auditory tasks (Coch et al., 2003; Praamstra & Stegeman, 1993). Importantly, active listening alone is insufficient to produce sensitivity to rhymes: phonological engagement is required. These results are not inconsistent with findings that phonological similarity ERP effects can be detected when focusing on the melodies of sung pairs of words (Gordon, Schön, Magne, Astésano, & Besson, 2010). Sung words contain both phonological and musical dimensions within the same inseparable acoustic signal, which may be automatically processed in the absence of irrelevant competing information. Here the elimination of ERP sensitivity to rhymes occurred under conditions of relatively high perceptual load that mimic the typical speech perception milieu, which promotes attentional selection (Desimone & Duncan, 1995). As such, our findings counter the notion that orthographic and phonological similarities of two auditory words are automatically activated merely by presenting the stimuli. Given this lack of automaticity for phonological and orthographic information, the current results also speak to limitations of selective attention mechanisms in encoding multiple dimensions of a stimulus at once, as in the case of attending to the similarity of two short melodies and the similarity of two spoken words.

The present study provides a new perspective on the burgeoning literature on the time-course of the interaction between orthographic and phonological influences during word recognition (Carreiras, Perea, Vergara, & Pollatsek, 2009; Cornelissen et al., 2009; Duncan, Pattamadilok, & Devlin, 2010; Pattamadilok et al., 2010): the orthographic effect appears to be a late-emerging process, actively induced, in a context-dependent manner, by the specific task demands used to examine it. In contrast, although the rhyming effect may also depend on context, when tasks support rhyme-relevant encoding it manifests concurrently with the perceptual encoding of the auditory stimuli. Both the task-dependent nature of rhyme and orthographic effects and the difference in their time-courses hold important implications for TMS disruption of phonological and orthographic influences on auditory word perception (Duncan et al., 2010; Pattamadilok et al., 2010). The present results suggest that early stimulation may miss the orthographic influences, and late stimulation may miss the influence of phonology.

Taken together, the present findings suggest that selective attention to phonology enables rhyming information to influence perceptual encoding of spoken words and also enables associated orthographic information to influence post-perceptual decision-making processes relevant to executing rhyme judgments. As such, selective focus on rhyming can enhance stimulus-specific encoding of phonologically similar word pairs, while focus on concurrent competing melodic information can eliminate this effect at encoding. Once phonological information has been extracted from the auditory stimulus, an interaction between stimulus-specific phonological characteristics and associated spelling emerges, indicating an obligatory influence of orthography in skilled readers that might further influence decision-making processes. To the extent that the late orthographic ERP biases reflect the impact of spelling knowledge on auditory word judgments, the current findings indicate that spelling knowledge can be evoked in the absence of print or explicit orthographic task demands, while underscoring the role of selective attention to phonology in generating these orthographic effects.

4. Methods

4.1. Participants

Right-handed, neurologically healthy native English speakers with normal hearing and vision provided written informed consent. Ethical approval was granted by the Institutional Review Board of the Weill Cornell Medical College. Data are reported from subjects whose accuracy exceeded 80% on each experimental task (two subjects were excluded). The final group comprised 16 participants (10 women, mean age: 26 years) with normal reading abilities (Woodcock, McGrew, & Mather, 2001).

4.2. Stimuli

As detailed in Yoncheva et al. (2010b), a word/tone stimulus consisting of an auditory word (mean duration = 479 ms, SD = 63 ms) presented simultaneously with a tone triplet comprising a sequence of three unique pure tones (duration of each tone = 125 ms, silent gap between tones = 50 ms) was played centrally in front of the participant at −60 dB (Fig. 1A). E-Prime 1.2 (Psychology Software Tools, Inc., Pittsburgh, PA) was used for stimulus presentation. The stimulus list design (256 unique non-homophones) ensured presentation of the same words while allowing pairings that isolate both rhyming and orthographic similarity effects. For each phonological rime unit, we selected two words associated with one orthographic pattern and two others with an alternative pattern. These four-word groups were matched with other four-word groups (together called an ‘octet’), allowing subsequent formation of non-rhyme conditions that contained at least an overlapping vowel or a final consonant cluster within a word pair, while maintaining a comparable orthographic contrast (e.g., e--ea) across the two four-word groups (Fig. 1B). Fully counterbalanced stimulus lists were compiled from these octets, ensuring that no single word was repeated in the same position within a pair, paired twice with the same word, or presented more than once within 32 trials. Crucially, since each word appeared equally often in all four conditions (R+O+, R+O−, R−O+, R−O−), behavioral measures and ERPs derived from each condition were elicited by the same collection of stimuli, and therefore between-condition differences reflected condition assignment alone. This enabled direct investigation of the impact of orthography (orthographic similarity effect) in the context of rhyme trials (R+O+ vs. R+O−), and analogously of the same minimal orthographic contrast in the context of non-rhyme trials (R−O+ vs. R−O−). As illustrated by the highlighted black outlined boxes in Fig. 1B, the amount of contrasting orthographic information in the R+O+ vs. R+O− comparison is identical to that in the R−O+ vs. R−O− comparison. The current design differs from previous experiments (Seidenberg & Tanenhaus, 1979) that sought to equate the degree of orthographic overlap between R+O+ and R−O+ conditions by selecting words (e.g., touch) whose pronunciation of the orthographic rime is shared by no other word and which thus cannot be assigned to an R+O+ condition.
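The three ordering constraints on the stimulus lists can be made concrete with a small checker. This is a hypothetical sketch, not the authors' list-generation code: the function name `find_list_violations` is illustrative, and reading "more than once within 32 trials" as a sliding 32-trial window is an assumption.

```python
def find_list_violations(trials, window=32):
    """Return human-readable violations of the three list constraints.

    trials: sequence of (first_word, second_word) tuples in presentation order.
    window: a word may occur at most once in any run of `window` trials
            (sliding-window reading of the constraint; an assumption).
    """
    violations = []
    seen_in_position = (set(), set())  # words already used as word 1 / word 2
    seen_pairs = set()                 # unordered pairs already presented
    last_trial = {}                    # word -> index of its most recent trial
    for t, (w1, w2) in enumerate(trials):
        # Constraint 1: a word never repeats in the same position of a pair.
        for pos, word in enumerate((w1, w2)):
            if word in seen_in_position[pos]:
                violations.append(f"trial {t}: {word!r} repeated as word {pos + 1}")
            seen_in_position[pos].add(word)
        # Constraint 2: the same two words are never paired twice (either order).
        pair = frozenset((w1, w2))
        if pair in seen_pairs:
            violations.append(f"trial {t}: pair {sorted(pair)} repeated")
        seen_pairs.add(pair)
        # Constraint 3: a word recurs only after at least `window` trials.
        for word in (w1, w2):
            if word in last_trial and t - last_trial[word] < window:
                violations.append(f"trial {t}: {word!r} recurs within {window} trials")
            last_trial[word] = t
    return violations
```

For example, `find_list_violations([("fed", "bed"), ("fed", "head")])` reports two problems: "fed" repeats as the first word, and it recurs on the very next trial.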

4.3. Procedure

Two tasks were performed on the chimeric auditory pair, as indicated by a preceding task cue: a rhyming judgment on the word pair (phonological focus) and a matching judgment on the tone-triplet pair (melodic focus). Intensive auditory processing was required under phonological focus by emphasizing phonological demands through acoustically close distractors in the non-rhyme trials, and under melodic focus by emphasizing melodic analysis through non-matching tone triplets constructed by reversing the order of the second and third tones of the triplet.
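The melodic dimension of each chimeric stimulus can be sketched as follows. Each triplet lasts 3 × 125 ms + 2 × 50 ms = 475 ms, close to the 479-ms mean word duration, and a non-matching foil swaps the second and third tones. The sampling rate, tone frequencies, and amplitude below are hypothetical values chosen for illustration; only the tone and gap durations come from the Methods.

```python
import numpy as np

FS = 48_000    # hypothetical sampling rate (Hz); not reported in the study
TONE_MS = 125  # duration of each pure tone (from the Methods)
GAP_MS = 50    # silence between consecutive tones (from the Methods)

def tone_triplet(freqs_hz, fs=FS, amplitude=0.1):
    """Concatenate pure tones separated by silent gaps (no onset/offset ramps)."""
    n_tone = int(round(fs * TONE_MS / 1000))
    n_gap = int(round(fs * GAP_MS / 1000))
    t = np.arange(n_tone) / fs
    parts = []
    for i, f in enumerate(freqs_hz):
        parts.append(amplitude * np.sin(2 * np.pi * f * t))
        if i < len(freqs_hz) - 1:          # no gap after the last tone
            parts.append(np.zeros(n_gap))
    return np.concatenate(parts)

def mismatching_triplet(freqs_hz):
    """Non-matching foil: reverse the order of the second and third tones."""
    f1, f2, f3 = freqs_hz
    return (f1, f3, f2)

# Example with arbitrary frequencies: 475 ms at 48 kHz is 22,800 samples.
standard = tone_triplet((440.0, 554.0, 659.0))
foil = tone_triplet(mismatching_triplet((440.0, 554.0, 659.0)))
```

A practical stimulus would typically add short onset and offset ramps to each tone to avoid audible clicks; they are omitted here to keep the timing arithmetic transparent.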

Prior to the EEG session, a behavioral staircase test that progressively reduced tone amplitude while holding word amplitude constant was conducted to establish the stimulus amplitude level at which the participant surpassed an accuracy threshold of 90% on two consecutive ten-trial sessions.

The EEG experiment consisted of 256 intermixed trials of the rhyming and tone tasks in pseudo-randomized order (allowing brief breaks every 32 trials). A trial began with a visual cue (150 ms) prescribing the impending rhyming or tone task. After a 1500-ms interval (fixation cross), an auditory stimulus of maximal duration 550 ms was presented, followed by 300 ms of silence. A second auditory stimulus was then played (SOA = 850 ms), after which participants had 1600 ms to respond to this two-alternative forced choice task with a button press. The next trial began after a normally distributed jitter of 500–1500 ms.
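The trial timeline can be laid out as event onsets relative to cue onset. Note that the 850-ms SOA equals the 550-ms maximal stimulus duration plus 300 ms of silence. This sketch assumes that the 1500-ms fixation interval starts at cue offset, that the 1600-ms response window starts at stimulus-two onset (consistent with RTs being reported from that onset), and that the jitter is a normal distribution truncated to 500–1500 ms with a hypothetical mean of 1000 ms and SD of 250 ms; the paper does not report the jitter parameters.

```python
import random

CUE_MS = 150
FIXATION_MS = 1500
SOA_MS = 850                       # 550 ms maximal stimulus + 300 ms silence
RESPONSE_MS = 1600
JITTER_MEAN_MS = 1000.0            # hypothetical
JITTER_SD_MS = 250.0               # hypothetical
JITTER_RANGE_MS = (500.0, 1500.0)  # from the Methods

def truncated_normal(rng, mean, sd, lo, hi):
    """Draw from a normal distribution restricted to [lo, hi] by rejection."""
    while True:
        x = rng.gauss(mean, sd)
        if lo <= x <= hi:
            return x

def trial_schedule(rng):
    """Event onsets (ms) for one trial, relative to cue onset."""
    stim1 = CUE_MS + FIXATION_MS          # assumes fixation starts at cue offset
    stim2 = stim1 + SOA_MS
    deadline = stim2 + RESPONSE_MS        # assumes window starts at stim-2 onset
    jitter = truncated_normal(rng, JITTER_MEAN_MS, JITTER_SD_MS, *JITTER_RANGE_MS)
    return {"cue": 0, "stim1": stim1, "stim2": stim2,
            "response_deadline": deadline, "next_cue": deadline + jitter}

schedule = trial_schedule(random.Random(1))
```

Rejection sampling keeps the draw normal-shaped inside the bounds; with the assumed SD, roughly 95% of draws are accepted on the first try.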

4.4. EEG data acquisition and preprocessing

128-channel EEG was recorded using a Hydrocel Geodesic Sensor Net (Electrical Geodesics Inc., Eugene, Oregon) referenced to Cz (sampled at 500 Hz/channel, 0.1–200 Hz filters, calibrated technical zero baselines, and electrode impedances below 50 kΩ). After spline interpolation of channels with excessive artifacts and blink correction (multiple source eye correction minimizing topographic distortions; Berg & Scherg, 1994), EEG data were digitally band-pass filtered (0.1–30 Hz, 24 dB/oct, zero phase), and segments with artifacts exceeding ±100 µV were rejected. Single-subject potentials (ERPs on correct trials, time-locked to the onset of the second auditory stimulus within a pair) were averaged separately for each task and, within each task, for each condition; they were then re-referenced to the average reference, and Global Field Power (GFP) was computed (Lehmann & Skrandies, 1980) as previously detailed (Yoncheva et al., 2010a).
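Average re-referencing and GFP (Lehmann & Skrandies, 1980) reduce to two array operations. A minimal NumPy sketch, assuming electrodes lie on the last axis of the array: GFP is the spatial standard deviation of the average-referenced map at each sample, which is why it does not depend on the recording reference.

```python
import numpy as np

def average_reference(erp):
    """Subtract the mean across electrodes (last axis) from every sample."""
    erp = np.asarray(erp, dtype=float)
    return erp - erp.mean(axis=-1, keepdims=True)

def global_field_power(erp):
    """GFP: root-mean-square across electrodes of the average-referenced map."""
    return average_reference(erp).std(axis=-1)

# Example: a (samples x electrodes) ERP yields one GFP value per sample.
erp = np.random.default_rng(0).normal(size=(500, 128))
gfp_curve = global_field_power(erp)
```

Adding a constant to all electrodes (i.e., changing the reference) leaves `gfp_curve` unchanged.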

4.5. ERP analysis

Given our interest in rhyming and orthographic ERP effects, which are defined as between-condition differences based on word properties, we employed a data-driven approach sensitive to topographic differences to identify the time ranges over which differential processing (i.e., systematic topographic differences and overall amplitude variations) is manifested (Yoncheva et al., 2010a). Thus, a topographic analysis of variance (TANOVA; Strik, Fallgatter, Brandeis, & Pascual-Marqui, 1998) was conducted on raw ERP maps at each time sample from 0 to 1000 ms, separately for each task, contrasting: (a) non-rhymes minus rhymes, i.e., the auditory rhyming effect; and (b) within rhymes, O+ minus O−, i.e., the orthographic effect. At each time sample, global dissimilarity was computed (Lehmann & Skrandies, 1980). A probability distribution was determined via a randomization test with 5000 resamplings (Manly, 1991). Finally, a z-score of the original dissimilarity relative to its randomization distribution was computed.
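The randomization TANOVA can be sketched for a single time sample as below. This is an illustrative NumPy implementation, not the software used in the study, and the function name `tanova` and its parameters are hypothetical. It assumes a within-subject design in which condition labels are exchanged within each subject (equivalently, each subject's difference map is sign-flipped), uses the GFP of the grand-mean difference map as the test statistic (for maps normalized to GFP = 1 this corresponds to the global dissimilarity between conditions), and adds the common +1 correction to the permutation p-value.

```python
import numpy as np

def _average_reference(maps):
    # Subtract the mean across electrodes (last axis) from every map.
    return maps - maps.mean(axis=-1, keepdims=True)

def tanova(cond_a, cond_b, n_perm=5000, normalize=False, seed=0):
    """Randomization TANOVA at one time sample.

    cond_a, cond_b: arrays (n_subjects, n_electrodes), one map per subject.
    Returns (observed statistic, permutation p-value, z-score).
    """
    a = _average_reference(np.asarray(cond_a, dtype=float))
    b = _average_reference(np.asarray(cond_b, dtype=float))
    if normalize:
        # Scale every subject map to GFP = 1 so that only topography differs.
        a = a / a.std(axis=-1, keepdims=True)
        b = b / b.std(axis=-1, keepdims=True)
    diffs = a - b                              # per-subject difference maps
    n_sub = diffs.shape[0]
    observed = diffs.mean(axis=0).std()        # GFP of grand-mean difference
    rng = np.random.default_rng(seed)
    # Swapping the two labels within a subject flips the sign of its difference.
    signs = rng.choice([-1.0, 1.0], size=(n_perm, n_sub))
    perm_stats = (signs @ diffs / n_sub).std(axis=1)
    p = (np.count_nonzero(perm_stats >= observed) + 1) / (n_perm + 1)
    # z-score of the observed statistic relative to its randomization distribution.
    z = (observed - perm_stats.mean()) / perm_stats.std()
    return observed, p, z
```

Applied at each of the 500 samples of the 0–1000 ms epoch, the returned z-scores form the series that would then be passed to false discovery rate estimation.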

The unified algorithm for false discovery rate (fdr) estimation (Strimmer, 2008a) was used to correct for multiple comparisons over the 500 time samples of each TANOVA. Several statistical properties motivated its use for our ERP data: its empirical model fitting accommodates the correlations between time samples inherent to the time domain; its truncation point for model fitting minimizes the false non-discovery rate (type II error), increasing leverage in interpreting both significant (phonological focus) and non-significant (melodic focus) findings; and the estimated local fdr represents the readily interpretable empirical Bayesian posterior probability of the null hypothesis (Efron, 2004, 2007). z-Scores for each time point were entered into the fdrtool algorithm (Strimmer, 2008b) (http://strimmerlab.org/software/fdrtool), available as an R package from CRAN (R Development Core Team, 2007), resulting in these fitting parameters: phonological focus: rhyming effect η0 = 0.894, SD = 2.322; orthographic effect η0 = 0.978, SD = 1.196; melodic focus: rhyming effect η0 = 1, SD = 294.43; orthographic effect η0 = 1, SD = 3.368. Statistical significance was set at local fdr p < 0.05.
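The local fdr has the form lfdr(z) = η0·f0(z)/f(z) (Efron, 2004), where f0 is the null density of the z-scores and f is the density of all z-scores (null and non-null mixed). The sketch below takes η0 and the null SD as given, as in the fitted values above, assumes a zero-centred normal null, and substitutes a Gaussian kernel density estimate (Silverman's bandwidth rule) for f. fdrtool estimates these quantities with its own procedures, so this illustrates the definition rather than reimplementing fdrtool; the function names are hypothetical.

```python
import numpy as np

def normal_pdf(x, sd=1.0):
    """Density of a zero-mean normal distribution with standard deviation sd."""
    return np.exp(-0.5 * (x / sd) ** 2) / (sd * np.sqrt(2 * np.pi))

def local_fdr(z, eta0, null_sd):
    """Local fdr for each z-score, capped at 1.

    eta0: assumed proportion of null time samples.
    null_sd: assumed standard deviation of the zero-centred normal null.
    """
    z = np.asarray(z, dtype=float)
    n = z.size
    iqr = np.subtract(*np.percentile(z, [75, 25]))
    # Silverman's rule-of-thumb bandwidth for the kernel density estimate.
    h = 0.9 * min(z.std(ddof=1), iqr / 1.34) * n ** (-0.2)
    f_mix = normal_pdf(z[:, None] - z[None, :], h).mean(axis=1)
    f_null = normal_pdf(z, null_sd)
    return np.minimum(1.0, eta0 * f_null / f_mix)
```

Time samples with `local_fdr(...) < 0.05` would be declared significant under the criterion stated above.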

An identical approach, conducting a TANOVA at each time sample of the 1000-ms ERP and estimating the local fdr, was applied to the rhyming effect and to the orthographic effect separately for the two tasks. In the phonological focus task, the rhyming effect occurred 458–556 ms, and the orthographic effect 702–730 ms, after the onset of stimulus two within the pair. No significant differences emerged for either effect under melodic focus. Thus, for direct between-task comparisons, the time intervals in which significant rhyming and orthographic effects emerged under phonological focus were also used for segmentation under melodic focus. Each participant’s potentials were averaged separately for each condition over the 458–556 ms and 702–730 ms intervals and then contrasted directly with respect to (1) the strength of the electric field (indexed by GFP) and (2) topographic differences across all electrodes (indexed by TANOVA on maps normalized to GFP = 1). These two complementary measures allowed a comprehensive characterization of map effects.
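The window-based comparison can be sketched in three steps: average each subject's maps over the window, compute GFP for a strength comparison, and scale maps to GFP = 1 for the topographic comparison. At 500 Hz, samples fall every 2 ms, so a 0–1000 ms epoch has the 500 samples mentioned above. The function names are hypothetical; the paired t statistic is computed directly, and a p-value would then come from a t distribution with n − 1 degrees of freedom (for example via `scipy.stats.t`).

```python
import numpy as np

def window_average(erp, times_ms, start_ms, end_ms):
    """Average each subject's maps over [start_ms, end_ms].

    erp: array (n_subjects, n_samples, n_electrodes); times_ms: (n_samples,).
    Returns an array of shape (n_subjects, n_electrodes).
    """
    times_ms = np.asarray(times_ms)
    in_window = (times_ms >= start_ms) & (times_ms <= end_ms)
    return np.asarray(erp, dtype=float)[:, in_window, :].mean(axis=1)

def gfp(maps):
    """Spatial standard deviation of average-referenced maps (last axis)."""
    centered = maps - maps.mean(axis=-1, keepdims=True)
    return centered.std(axis=-1)

def normalize_to_unit_gfp(maps):
    """Scale each map to GFP = 1 so that only its topography remains."""
    centered = maps - maps.mean(axis=-1, keepdims=True)
    return centered / gfp(maps)[..., None]

def paired_t(x, y):
    """Paired-samples t statistic and degrees of freedom (n - 1)."""
    d = np.asarray(x, dtype=float) - np.asarray(y, dtype=float)
    return d.mean() / (d.std(ddof=1) / np.sqrt(d.size)), d.size - 1

times = np.arange(0, 1000, 2)  # 500 samples at 500 Hz
```

With hypothetical `rhyme` and `nonrhyme` arrays of shape (16, 500, n_electrodes), `paired_t(gfp(window_average(rhyme, times, 458, 556)), gfp(window_average(nonrhyme, times, 458, 556)))` gives the map-strength comparison, and the normalized window maps would go to a randomization TANOVA for the topographic comparison.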

Response latencies were indexed by 5% trimmed means of reaction times (RT) on correct trials, separately for each condition for each subject, and reported in ms from the onset of the second stimulus.
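A per-subject, per-condition trimmed mean can be computed as below. This sketch assumes that 5% of correct trials are removed from each tail, the same convention as `scipy.stats.trim_mean`; the paper does not state whether 5% refers to each tail or to the total, and the function name is hypothetical.

```python
import numpy as np

def trimmed_mean_rt(rts_ms, correct, proportion=0.05):
    """Mean RT of correct trials after trimming `proportion` from each tail."""
    rts = np.sort(np.asarray(rts_ms, dtype=float)[np.asarray(correct, dtype=bool)])
    k = int(proportion * rts.size)  # number of trials removed from EACH tail
    return rts[k:rts.size - k].mean()

# Example: 20 correct trials, so one trial is trimmed from each end.
rts = [900.0] * 18 + [100.0, 5000.0, 50.0]
correct = [True] * 20 + [False]
mean_rt = trimmed_mean_rt(rts, correct)  # the 100-ms and 5000-ms trials are dropped
```

Because `k` is rounded down, small cells (fewer than 20 correct trials) are not trimmed at all under this convention.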

Acknowledgments

This study was supported by an R01 grant (DC007694) from the National Institute on Deafness and Other Communication Disorders awarded to B.D.M.

References

- Berg P, Scherg M. A multiple source approach to the correction of eye artifacts. Electroencephalography and Clinical Neurophysiology. 1994;90(3):229–241. doi: 10.1016/0013-4694(94)90094-9.

- Carreiras M, Perea M, Vergara M, Pollatsek A. The time course of orthography and phonology: ERP correlates of masked priming effects in Spanish. Psychophysiology. 2009;46(5):1113–1122. doi: 10.1111/j.1469-8986.2009.00844.x.

- Chereau C, Gaskell MG, Dumay N. Reading spoken words: orthographic effects in auditory priming. Cognition. 2007;102(3):341–360. doi: 10.1016/j.cognition.2006.01.001.

- Coch D, Grossi G, Coffey-Corina S, Holcomb PJ, Neville HJ. A developmental investigation of ERP auditory rhyming effects. Developmental Science. 2003;5(4):467–489.

- Cornelissen PL, Kringelbach ML, Ellis AW, Whitney C, Holliday IE, Hansen PC. Activation of the left inferior frontal gyrus in the first 200 ms of reading: Evidence from magnetoencephalography (MEG). PloS One. 2009;4(4):e5359. doi: 10.1371/journal.pone.0005359.

- Damian MF, Bowers JS. Orthographic effects in rhyme monitoring tasks: Are they automatic? European Journal of Cognitive Psychology. 2010;22(1):106–116.

- Desimone R, Duncan J. Neural mechanisms of selective visual attention. Annual Review of Neuroscience. 1995;18:193–222. doi: 10.1146/annurev.ne.18.030195.001205.

- Dumay N, Benraiss A, Barriol B, Colin C, Radeau M, Besson M. Behavioral and electrophysiological study of phonological priming between bisyllabic spoken words. Journal of Cognitive Neuroscience. 2001;13(1):121–143. doi: 10.1162/089892901564117.

- Duncan KJ, Pattamadilok C, Devlin JT. Investigating occipito-temporal contributions to reading with TMS. Journal of Cognitive Neuroscience. 2010;22(4):739–750. doi: 10.1162/jocn.2009.21207.

- Efron B. Large-scale simultaneous hypothesis testing: the choice of a null hypothesis. Journal of the American Statistical Association. 2004;99:96–104.

- Efron B. Correlation and large-scale simultaneous significance testing. Journal of the American Statistical Association. 2007;102:93–103.

- Gordon RL, Schön D, Magne C, Astésano C, Besson M. Words and melody are intertwined in perception of sung words: EEG and behavioral evidence. PloS One. 2010;5(3):e9889. doi: 10.1371/journal.pone.0009889.

- Lehmann D, Skrandies W. Reference-free identification of components of checkerboard-evoked multichannel potential fields. Electroencephalography and Clinical Neurophysiology. 1980;48:609–621. doi: 10.1016/0013-4694(80)90419-8.

- Manly BFJ. Randomization and Monte Carlo methods in biology. Chapman and Hall; 1991. pp. xiii–281.

- McCandliss BD, Yoncheva Y. Integration of left-lateralized neural systems supporting skilled reading. In: Benasich AA, Fitch RA, editors. Developmental dyslexia: Early precursors, neurobehavioral markers and biological substrates (The Extraordinary Brain Series). Baltimore, MD: Brookes Publishing Co.; 2011. pp. 325–339.

- McClelland JL, Rumelhart DE. An interactive activation model of context effects in letter perception: Part I. An account of basic findings. Psychological Review. 1981;88:375–407.

- Pattamadilok C, Knierim IN, Kawabata Duncan KJ, Devlin JT. How does learning to read affect speech perception? Journal of Neuroscience. 2010;30(25):8435–8444. doi: 10.1523/JNEUROSCI.5791-09.2010.

- Pattamadilok C, Perre L, Dufau S, Ziegler JC. On-line orthographic influences on spoken language in a semantic task. Journal of Cognitive Neuroscience. 2009;21(1):169–179. doi: 10.1162/jocn.2009.21014.

- Pattamadilok C, Perre L, Ziegler JC. Beyond rhyme or reason: ERPs reveal task-specific activation of orthography on spoken language. Brain and Language. 2011;116(3):116–124. doi: 10.1016/j.bandl.2010.12.002.

- Poldrack RA. Can cognitive processes be inferred from neuroimaging data? Trends in Cognitive Sciences. 2006;10(2):59–63. doi: 10.1016/j.tics.2005.12.004.

- Posner MI, Snyder CR. Attention and cognitive control. In: Solso R, editor. Information processing and cognition: The Loyola symposium. Hillsdale, NJ: Lawrence Erlbaum Associates; 1975. pp. 55–85.

- Praamstra P, Meyer A, Levelt WJM. Neurophysiological manifestations of phonological processing: latency variation of negative ERP component timelocked to phonological mismatch. Journal of Cognitive Neuroscience. 1994;6(3):204–219. doi: 10.1162/jocn.1994.6.3.204.

- Praamstra P, Stegeman DF. Phonological effects on the auditory N400 event-related brain potential. Cognitive Brain Research. 1993;1(2):73–86. doi: 10.1016/0926-6410(93)90013-u.

- R Development Core Team. R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing; 2007. <http://www.r-project.org>.

- Seidenberg MS, Tanenhaus MK. Orthographic effects in rhyme monitoring. Journal of Experimental Psychology: Human Learning and Memory. 1979;5:546–554.

- Strik WK, Fallgatter AJ, Brandeis D, Pascual-Marqui RD. Three-dimensional tomography of event-related potentials during response inhibition: evidence for phasic frontal lobe activation. Electroencephalography and Clinical Neurophysiology. 1998;108:406–413. doi: 10.1016/s0168-5597(98)00021-5.

- Strimmer K. A unified approach to false discovery rate estimation. BMC Bioinformatics. 2008a;9:303. doi: 10.1186/1471-2105-9-303.

- Strimmer K. Fdrtool: A versatile R package for estimating local and tail area-based false discovery rates. Bioinformatics. 2008b;24(12):1461–1462. doi: 10.1093/bioinformatics/btn209.

- Tanenhaus MK, Flanigan HP, Seidenberg MS. Orthographic and phonological activation in auditory and visual word recognition. Memory and Cognition. 1980;8:513–520. doi: 10.3758/bf03213770.

- Woodcock RW, McGrew K, Mather N. Woodcock-Johnson III. Itasca, IL USA: Riverside Publishing; 2001.

- Yoncheva YN, Blau V, Maurer U, McCandliss BD. Attentional focus during learning impacts N170 responses to an artificial script. Developmental Neuropsychology. 2010a;35(4):423–445. doi: 10.1080/87565641.2010.480918.

- Yoncheva YN, Zevin JD, Maurer U, McCandliss BD. Auditory selective attention to speech modulates activity in the visual word form area. Cerebral Cortex. 2010b;20(3):622–632. doi: 10.1093/cercor/bhp129.

- Ziegler JC, Ferrand L. Orthography shapes the perception of speech: The consistency effect in auditory word recognition. Psychonomic Bulletin and Review. 1998;5:683–689.