Abstract

Understanding events often requires recognizing unique stimuli as alternative, mutually exclusive states of the same persisting object. Using fMRI, we examined the neural mechanisms underlying the representation of object states and object-state changes. We found that subjective ratings of visual dissimilarity between a depicted object and an unseen alternative state of that object predicted the corresponding multivoxel pattern dissimilarity in early visual cortex during an imagery task, while late visual cortex patterns tracked dissimilarity among distinct objects. Early visual cortex pattern dissimilarity for object states in turn predicted the level of activation in an area of left posterior ventrolateral prefrontal cortex (pVLPFC) most responsive to conflict in a separate Stroop color-word interference task, and an area of left ventral posterior parietal cortex (vPPC) implicated in the relational binding of semantic features. We suggest that when visualizing object states, representational content instantiated across early and late visual cortex is modulated by processes in left pVLPFC and left vPPC that support selection and binding, and ultimately event comprehension.

Keywords: conflict, fMRI, pattern similarity, visual cortex, working memory

Introduction

A category is a set of nonidentical members that invite the same response (or, in the case of humans, the same verbal label) (Medin and Schaffer 1978; Huth et al. 2012). Individual members of a category can vary along countless dimensions: apples can be red or green, desks can be wooden or metal, ideas can be clever or half-baked. This variation can create ambiguity in our understanding of even the simplest concept (Cree and McRae 2003; Clarke et al. 2012). Although members of a category vary, by definition, we tend to think of the features of any given exemplar of a category as fixed. The current work examines properties of category members that can change, and in particular, how humans resolve the ambiguity that results from changes of state.

Some object-state changes are unidirectional (e.g., a pumpkin can be carved before Halloween, but a jack-o-lantern cannot be uncarved afterward); other state changes are reversible (e.g., ropes can be coiled or uncoiled). But, barring the example of Schrödinger's cat (Schrödinger 1935), at any one point in time, an object can be in only one state. So, while we know the category “balloon” includes both inflated and deflated varieties, we might also know that a particular balloon that is now deflated was once inflated. Indeed, to understand an event in which a particular balloon is inflated entails such knowledge about the distinct states in which it did, and does now, exist. We propose that this situation creates a particular challenge for a cognitive system that is designed to select a single representation from among incompatible alternatives (Altmann and Kamide 2009). Insofar as an object cannot be in multiple states at the same time, the mental representations of such object states are mutually exclusive. Thus, when recalling information about that object as it existed at a particular time (i.e., before, during, or after changes in state), the appropriate object-state representation must be retrieved. And since event comprehension requires maintenance in memory of the multiple states that the object occupied (otherwise, one could not comprehend that a specific event had taken place), selecting among available and salient object-state representations may entail conflict—the contextually appropriate object-state representation must be retrieved at the expense of the other(s). Such conflict is reminiscent of the conflict manifest during a Stroop color-word interference task (Stroop 1935; MacLeod 1991), in which the subject must choose between the color in which a color word such as “red” is depicted, and the meaning of the color word. 
Stroop interference in this task (e.g., when the word red is printed in green and the subject must report the typeface color) is the manifestation of the simultaneous activation of these mutually exclusive representations. Conflict may also arise during event comprehension when an initially activated object-state representation remains active even after the contextually appropriate representation has been computed; such conflict may be modulated by the extent that an initially activated (but now contextually inappropriate) object-state representation differs from the newly computed contextually appropriate representation. In support of this idea, Hindy et al. (2012) reported increased activity in the posterior portion of left ventrolateral prefrontal cortex (pVLPFC), an area implicated in the resolution of semantic conflict (Thompson-Schill et al. 1997, 1998, 1999), when subjects read about an event in which the state of an object changed compared with when it did not. Hindy et al. (2012) found that the amount each object changed affected the amount of activation in a region of pVLPFC defined, on an individual-subject basis, to respond most strongly to conflict trials in a Stroop color-word interference task (Banich et al. 2000). Thus, tracking object-state changes elicits a response in frontal cortex associated with conflict resolution. But why? If parts of left pVLPFC respond to conflict, perhaps enabling one neural response to dominate over another (Miller and Cohen 2001), where is this conflict, and might there be other regions which, together with left pVLPFC, form a functional network supporting the representation of, and selection among, alternative states of the same object?

In the present study, we attempt to track, across multiple brain regions, the changing representation of an object undergoing a state change, and then link this change to the left pVLPFC response. One strategy neuroscientists use to determine where some type of information is represented is to vary that information—in our case, object state—and to determine which neural tissue shows an associated change in response. We shall therefore identify neural activity that changes in response to object-state changes, and does so proportionally to the degree of change. Because the state changes we create here all pertain to visual properties, our focus will begin with cortical regions known to support visual functions.

There is ample evidence that visualizing an object involves reinstatement of a percept of that object in ventral visual cortex (Lee et al. 2012), a set of findings predicted by sensorimotor theories of concepts (Martin 2007), sometimes called embodied cognition (Barsalou 2008). From these ideas, we propose that the physical dissimilarity between alternative states of the same object will be associated with neural dissimilarity in visual cortex when subjects simply “imagine” the object states. If left pVLPFC processes support selection among interfering sensorimotor representations (Thompson-Schill et al. 2005), visual cortex dissimilarity between a contextually relevant object-state representation and a contextually “irrelevant,” yet salient, object-state representation may predict the level of left pVLPFC activation.

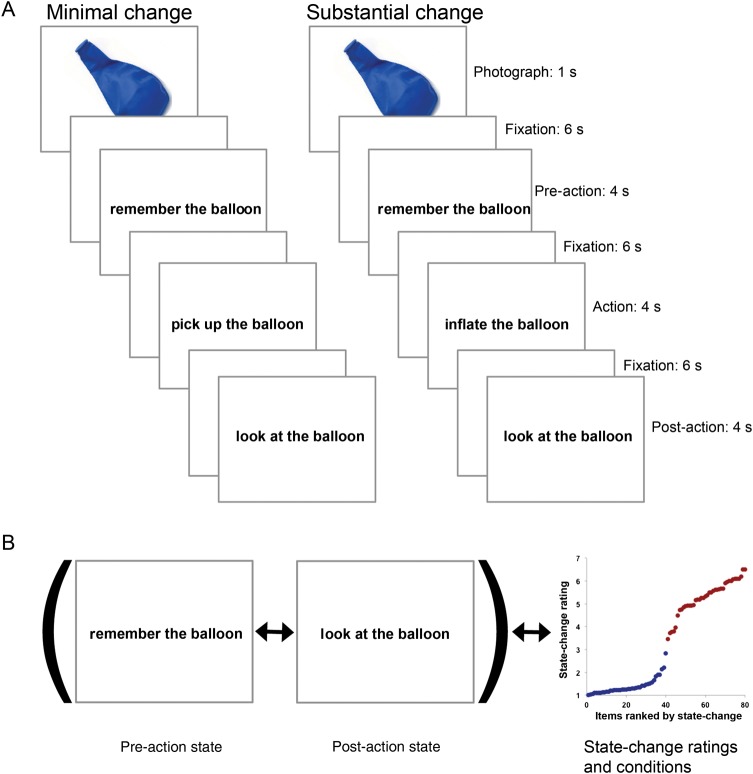

Using fMRI, we examined the effect of object-state change on the blood oxygen level–dependent (BOLD) response in early and late visual cortex, in left pVLPFC, and across the brain. In order to evoke a salient bottom-up representation of each object, each state-change trial began with a briefly presented object photograph. Each photograph was followed by a sequence of 3 visual imagery task instructions separated by fixation: 1) Visualize the object (e.g., a balloon), 2) visualize a specified action involving the object (e.g., inflating it), and 3) visualize the object in its final state after the action (Fig. 1A). Across trials, we varied whether the described action minimally (e.g., “pick up … ”) or substantially (e.g., “inflate … ”) changed the object, a distinction we verified with subjective ratings of the degree to which the described action changed the object. Although participants initially saw the unchanged object (e.g., an uninflated balloon), in the substantial change condition, they only ever imagined the object in its changed state (inflated); they never saw it. Thus, by displaying the initial object state and then asking subjects to imagine the altered state, we created an asymmetry in the bottom-up strength of the object-state representations so that the initial object-state representation would remain salient to subjects while they imagined the altered state. We calculated the multivoxel pattern dissimilarity for each trial between voxel patterns collected at the pre-action time point and voxel patterns collected at the post-action time point (Fig. 1B).

Figure 1.

State-change task and pattern-dissimilarity measure. (A) Each state-change trial began with a briefly presented object photograph, followed by a sequence of 3 visual imagery task instructions separated by fixation. Following each trial, subjects indicated which of 2 clipart images was most similar to the object at a cued point of the trial, and then rated the vividness with which they imagined the end state of the object. (B) Pattern dissimilarity was measured as the correlation distance between the multivoxel patterns at the pre-action and post-action time points. Pattern-dissimilarity scores were then either binned within condition, or regressed on the corresponding state-change ratings. Plot on far right displays the state-change rating for each item, ranked by rating and color-coded by condition.

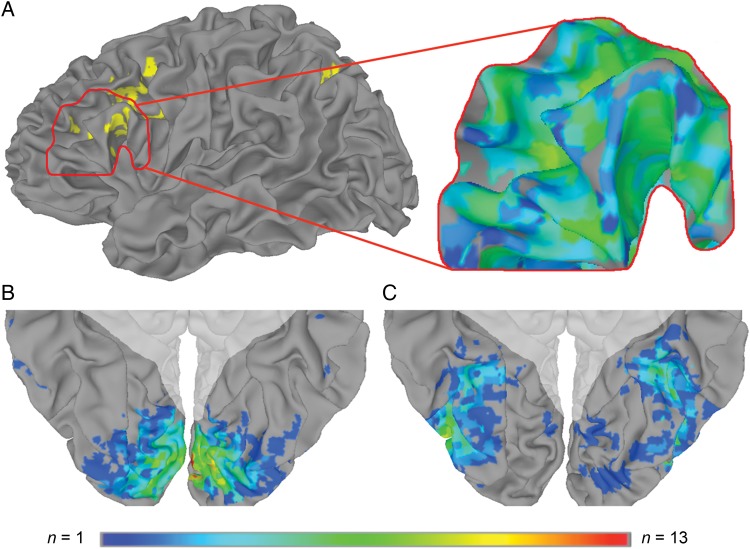

We focused our analyses on 3 functionally defined regions of interest (ROIs): Early and late regions of visual cortex most responsive to either objects or scrambled objects, and regions of left pVLPFC most responsive to conflict trials in a Stroop color-word interference task (Fig. 2). ROI-based comparisons within visual cortex, confirmed by a multivoxel searchlight analysis (Kriegeskorte et al. 2006), revealed that the distinctiveness of fMRI patterns in early visual cortex depended on the judged visual dissimilarity between object states. Within left pVLPFC (and across the whole brain), we tested whether univariate activation levels tracked pattern dissimilarity in early visual cortex. Interactions between patterns measured in visual cortex, and activation measured in left pVLPFC, suggest a neural basis for cognitive conflict that results from feature variability in object processing. Further, activation within left ventral posterior parietal cortex (vPPC) was also predicted by pattern dissimilarity in early visual cortex. And whereas pattern dissimilarity in early visual cortex correlated with perceived dissimilarity of the altered object states, patterns measured in late visual cortex reflected the between-object dissimilarity of the stimuli. We suggest that these data are compatible with a functional network supporting feature binding, object identity, and object-state representation.

Figure 2.

Functional ROI frequency overlap. (A) Left pVLPFC (outlined in red) was among areas most responsive to conflict trials compared with neutral trials in a group analysis of the Stroop task (left; yellow indicates P < 0.01, 2-tailed corrected, in a group-level contrast of Stroop conflict trials vs. Stroop neutral trials). The left pVLPFC ROI for each subject included voxels most responsive to Stroop conflict (right). (B) The early visual cortex ROI included voxels most responsive to scrambled objects in a perceptual localizer. (C) The late visual cortex ROI included voxels most responsive to intact objects. Maximum overlap was 9 of 14 subjects for the left pVLPFC Stroop-conflict ROI, 13 of 14 subjects for the early visual cortex ROI, and 10 of 14 subjects for the late visual cortex ROI. Shaded areas indicate voxels outside of ventral visual cortex, which were excluded from the searchlight analysis based on anatomical criteria.

Materials and Methods

Subjects

Fourteen right-handed native English speakers (eight females), aged 22–33 years, participated in the study. Each subject had normal or corrected to normal vision. One additional subject was excluded from data analysis and replaced due to unusually poor performance on the state-change task (correctly identifying the indicated object on 62.5% of all trials; 6.5 standard deviations (SDs) below the mean accuracy of all other subjects). All fMRI subjects were paid $20/h, were recruited from within the University of Pennsylvania community, and gave informed consent as approved by the University of Pennsylvania Institutional Review Board. Additionally, 804 native English speakers participated online in tasks used for stimulus norming.

Stimuli

We selected 40 common objects for the experiment. The only difference between the substantial change and minimal change conditions was the described action. Across subjects, every object appeared in both the substantial change condition and minimal change condition, while each subject encountered each object in only 1 of the 2 conditions. (See Supplementary Table 1 for a complete list of objects and actions.)

State-change ratings for each item were collected through an online survey completed by undergraduate students at the University of Pennsylvania (N = 106). The 80 total items were randomly split into 2 lists, so that each subject rated only 1 action per object. For each item, subjects were presented with the object photograph and, just below the photograph, either the minimal change action or the substantial change action. Subjects were asked, “Upon the event, will the object stay just the same as it had been before, or will it be changed at all?” Subjects rated each item on a 7-point scale ranging from “just the same” to “completely changed.” The average state-change rating was 5.28 for substantial change items, and 1.39 for minimal change items, with a reliable difference between conditions (P < 0.001; Fig. 1B).

To ensure that differences in pVLPFC activation for substantial change and minimal change trials were not due to differences in semantic association between objects and actions, we collected ratings of the associative strength between each photographed object and described action through a separate online survey (N = 98). Undergraduate subjects viewed object photographs individually, with either the minimal-change action or the substantial-change action printed below the photograph, and rated each item on a 7-point scale ranging from “action not at all associated with object” to “action extremely associated with object.” Association-strength ratings were reliably lower for minimal change actions (M = 3.23) than for substantial change actions (M = 5.41; P < 0.001). It has been hypothesized that left pVLPFC recruitment is greatest when the association between paired stimuli is weakest (Badre and Wagner 2002; Martin and Cheng 2006). This account would predict greater activation in left pVLPFC for minimal change actions, the opposite of the pattern predicted by hypotheses based on representational conflict due to object-state change. To preview the results presented below, it is also opposite to the pattern we actually observed for the left pVLPFC ROI; object-state change predicted activation in the left pVLPFC ROI despite differences across conditions in the associative relationship between objects and actions. Likewise, associative strength between the depicted objects and described actions did not reliably predict left pVLPFC activation when treated as a continuous variable (P > 0.3).

Between-object shape-dissimilarity ratings for all object pairs were collected through an online survey posted on Amazon Mechanical Turk (N = 600). The 1560 object pairs were randomly split into 6 lists so that each subject rated a total of 260 pairs. Subjects were presented with side-by-side object photographs for each object pair, and rated their similarity in shape on a 7-point scale ranging from “not at all similar” to “extremely similar.” The most dissimilar pair was essay/potatoes, and the least dissimilar pair was onion/pumpkin (Supplementary Fig. 1A).

State-Change Task

The primary task comprised 5 scanning runs with a total of 40 trials (20 minimal change, 20 substantial change). Stimuli were presented using E-Prime (Psychology Software Tools). Each trial was 45 s in duration, beginning with an object photograph that appeared on the screen for 1 s. After 6 s fixation, 3 visual imagery task instructions appeared one at a time on the screen: remember the object, imagine an action involving the object, imagine the object after the action. Each instruction appeared on the screen for 4 s, followed by 6 s fixation. Subsequent to the visual imagery segments of each trial, subjects used a button box to make 2 separate responses for each trial. First, subjects identified which of 2 clipart images was most similar to the object at either the beginning or end of the trial (as indicated by a cue). Subjects correctly identified the indicated object on 90.8% of trials. Because the retrieval questions were subsequent to time points of interest, we included all trials in analyses reported below. Removing error trials had no effect on the statistical significance of the described results, with 2 exceptions: ratings-predicted activation in left pVLPFC was less reliable and no longer significant with error trials removed, while pattern-predicted activation in left pVLPFC was even more reliable with error trials removed. Finally, subjects indicated on a scale of 1–4 the vividness with which they imagined the end state of the object. The average vividness rating was slightly but reliably greater for substantial change trials (M = 3.57) than for minimal change trials (M = 3.38; t(13) = 3.19, P < 0.01). Importantly, the direction of this difference suggests that visual cortex pattern dissimilarity and left pVLPFC activation were not driven by either failure or difficulty in visualizing the changed states of the objects.

Stroop Interference Task

Following the state-change runs, subjects performed 1 run of a Stroop color identification task, for which the response box was restricted to 3 buttons: yellow, green, and blue. Subjects were presented with a single word for each trial, and instructed to press the button corresponding to the typeface color. Each word appeared for 1800 ms with a 1200-ms interstimulus interval. Stimuli included 4 trial types: response-eligible conflict, response-ineligible conflict, and 2 groups of neutral trials (Milham et al. 2001; Hindy et al. 2012). For response-eligible conflict trials, the color word matched one of the possible responses (i.e., yellow, green, or blue), but mismatched the typeface color. Words for response-ineligible conflict trials (orange, brown, or red) also mismatched the typeface color, but were not possible responses. Separate sets of noncolor neutral trials (e.g., farmer, stage, and tax) were intermixed with the response-eligible and response-ineligible conflict trials. Subjects correctly answered 98.3% of Stroop trials, with an average response time of 790 ms for conflict trials and 755 ms for neutral trials (t(13) = 2.64, P < 0.05). In a group-level contrast, left pVLPFC was reliably more responsive to Stroop conflict than surrounding areas, while the location of the top 250 conflict-responsive voxels in left pVLPFC varied across subjects (Fig. 2A).

Visual Cortex Localizers

The final scanning run was a functional localizer during which subjects viewed 18 s blocked sequences of individual photographed objects without backgrounds, alternating with 18 s blocks of scrambled images of the object photographs (see below for further discussion of this localizer). Each stimulus was presented for 490 ms with a 490-ms interstimulus interval, and subjects performed a one-back repeat detection task. To generate scrambled objects, a 60 × 60 square grid for each object photograph was randomly permuted with a weighting to preserve center coherence.

Image Acquisition

Structural and functional data were collected on a 3-T Siemens Trio system with a 32-channel array head coil. Structural data included axial T1-weighted images with 160 slices and 1 mm isotropic voxels (TR = 1620 ms, TE = 3.87 ms, TI = 950 ms). Functional data included echo-planar fMRI performed in 44 axial slices and 3 mm isotropic voxels (TR = 3000 ms, TE = 30 ms). Twelve seconds preceded data acquisition in each functional run to approach steady-state magnetization.

Image Processing and Analysis

Image preprocessing and statistical analyses were performed using AFNI (Cox 1996) and visualized in SUMA. Functional data were sinc interpolated to correct for slice timing, aligned to the mean of all functional images using a 6-parameter iterated least squares procedure, registered to structural data, normalized to Talairach space (Talairach and Tournoux 1988), smoothed with a 4-mm FWHM Gaussian kernel, and z-normalized within each run. Each trial segment was modeled with a canonical hemodynamic response function, convolved with a boxcar that matched the duration of the trial segment (i.e., 1 s for each photograph, 4 s for each imagery component, and 6 s for the cued-retrieval and vividness rating questions that followed each trial). Beta coefficients were estimated using a modified general linear model that included a restricted maximum likelihood estimation of the temporal autocorrelation structure, with a polynomial baseline fit and 6 motion parameters as covariates of no interest. For whole-brain analyses, minimum cluster extent was determined by Monte Carlo simulation in order to correct for multiple comparisons.

ROIs were functionally defined separately for each subject using the perceptual localizer task and Stroop interference task described above, and were restricted anatomically to ventral occipitotemporal cortex and left pVLPFC. Anatomical constraints were defined in Talairach space using probabilistic cytoarchitectonic maps provided in the SPM Anatomy Toolbox (Eickhoff et al. 2005). Visual cortex ROIs were anatomically constrained to bilateral ventral occipitotemporal cortex, including inferior occipital cortex, lingual gyrus, and the posterior regions of fusiform gyrus and inferior temporal gyrus. The ROI for late visual cortex (Fig. 2C) included the 250 visual cortex voxels most responsive to intact objects compared with scrambled objects, while the ROI for early visual cortex (Fig. 2B) included the 250 visual cortex voxels most responsive to scrambled objects compared with intact objects. For further details on this method of defining early visual cortex as voxels most responsive to scrambled objects, see also MacEvoy and Epstein (2011) and Axelrod and Yovel (2012). Alternatively, early visual cortex can be defined anatomically, using a probabilistic retinotopic atlas (Wang et al. 2012). Supplementary Figure 1 displays analysis of anatomically defined V1 and V2. Consistent with neurophysiology and neuroimaging evidence of stimulus selectivity in striate and extrastriate visual cortex (Hegdé and Van Essen 2000; Wilkinson et al. 2000), this analysis revealed that change-specific pattern-dissimilarity effects were strongest in V2, but did not reliably differ across areas.

The ROI for Stroop-sensitive cortex in left pVLPFC was anatomically constrained to BA 44 (pars opercularis), BA 45 (pars triangularis), and the inferior frontal sulcus, and comprised the 250 voxels with the highest t-statistics in a contrast of conflict trials (either response-eligible or response-ineligible) versus neutral trials during the Stroop interference task (Fig. 2A). The functional constraint provided by the Stroop task ensured that those voxels included in this ROI were most sensitive to conflict on a subject-specific basis. Because the Stroop task involves multiple distinct forms of conflict at multiple levels (e.g., task set, motor response, and color representation), it is important to anatomically constrain a Stroop-defined ROI to cortex that is most likely to be involved in the process of interest. The anatomical constraint to left pVLPFC ensured that this ROI reflected conflict-related processing at the level of semantic representation. For ROI-based regression analysis of neural activation in left pVLPFC, activation values were extracted across the entire left pVLPFC ROI for each subject. For further details on identifying conflict-sensitive cortex within left pVLPFC, see also January et al. (2009) and Hindy et al. (2012).
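The functional ROI definition described above (the 250 voxels with the highest t-statistics inside an anatomical constraint) can be illustrated with a minimal sketch. The function name `top_k_voxels`, the flat-list data layout, and the toy values are assumptions made for illustration only; the analyses reported here were run in AFNI, not with this code.

```python
def top_k_voxels(t_stats, in_mask, k):
    """Return sorted indices of the k in-mask voxels with the largest t-statistics.

    t_stats : list of floats, one contrast t-statistic per voxel (hypothetical layout)
    in_mask : list of bools, True where the voxel lies inside the anatomical constraint
    k       : number of voxels to keep (250 in the study)
    """
    # Only voxels inside the anatomical constraint are eligible.
    candidates = [i for i, inside in enumerate(in_mask) if inside]
    # Rank eligible voxels from highest to lowest t-statistic.
    ranked = sorted(candidates, key=lambda i: t_stats[i], reverse=True)
    return sorted(ranked[:k])


# Toy example: 6 voxels, 5 of them inside the mask, keep the top 2.
toy_t = [3.1, 0.2, 5.0, 4.4, -1.0, 2.7]
toy_mask = [True, True, False, True, True, True]
roi = top_k_voxels(toy_t, toy_mask, k=2)
print(roi)
```

In the toy example the voxel with the highest t-statistic overall (index 2) is excluded because it falls outside the mask, which is the point of combining a functional ranking with an anatomical constraint.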

Pattern dissimilarity was measured for each trial as the correlation distance (1 – Pearson correlation) across all voxels in each ROI or searchlight, between post-action and pre-action time points. Likewise, univariate BOLD activation was measured for each trial as the mean amplitude difference of the BOLD signal between post-action and pre-action time points. In second-level analyses, we compared pattern dissimilarity to stimulus ratings and univariate activation. For categorical analysis of pattern dissimilarity, we used paired-sample t-tests to compare pattern dissimilarity across minimal change and substantial change conditions. For parametric analyses of both pattern dissimilarity and pattern-predicted activation, we estimated a separate linear regression coefficient for each subject that predicted either pattern dissimilarity for each trial based on the state-change ratings, or univariate activation for each trial based on the pattern dissimilarity. We then used 1-sample t-tests to examine the reliability across subjects of the regression coefficients. Statistical significance of each pattern dissimilarity and pattern-predicted activation result is identical when Pearson correlation coefficients are entered into the second-level analyses, in place of linear regression coefficients.
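The measures described in this paragraph can be sketched in a few lines. The following is a minimal illustration, assuming each multivoxel pattern is a plain list of per-voxel values; the function names and toy numbers are hypothetical and are not taken from the analysis pipeline.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def correlation_distance(pre, post):
    """Pattern dissimilarity: 1 - Pearson correlation across voxels."""
    return 1.0 - pearson(pre, post)


def slope(x, y):
    """Least-squares slope of y regressed on x (one coefficient per subject)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def one_sample_t(values):
    """t-statistic testing whether the mean of values differs from zero."""
    n = len(values)
    m = sum(values) / n
    var = sum((v - m) ** 2 for v in values) / (n - 1)
    return m / math.sqrt(var / n)


# Toy patterns: a scaled copy has distance 0, a reversed copy has distance 2.
pre = [1.0, 2.0, 3.0, 4.0]
print(correlation_distance(pre, [2.0, 4.0, 6.0, 8.0]))
print(correlation_distance(pre, [4.0, 3.0, 2.0, 1.0]))
```

Because correlation distance ignores overall scaling and offset, it is sensitive to the spatial shape of a pattern rather than its mean amplitude, which is why mean amplitude is analyzed separately as univariate activation.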

Results

ROI Dissimilarity

We extracted the multivoxel pattern across all voxels in each ROI for 2 different time points within each trial: when subjects imagined the object in its initial form before the action, and when subjects imagined the same object in its new form after an action that caused either a minimal or substantial change. We used correlation distance (1−Pearson correlation) (Haxby et al. 2001; Aguirre 2007) between the 2 multivoxel patterns for each trial as a measure of within-trial pattern dissimilarity between distinct states of the same object. Once pattern-dissimilarity scores were computed, we measured the extent to which pattern dissimilarity could be predicted by object-state change. In an analysis of the categorical effect of trial condition, we measured the extent to which pattern dissimilarity was greater for substantial change items than for minimal change items. In late visual cortex, the difference between conditions was not reliable (P > 0.4). In early visual cortex, pattern dissimilarity was reliably greater for substantial change items than for minimal change items (t(13) = 2.19, P < 0.05). The interaction between early and late visual cortex and condition was marginally reliable (F(1,13) = 3.19, P < 0.1), with no reliable interactions between hemisphere and condition in either early or late visual cortex (P's > 0.6). In a parametric analysis of the effect of state change measured continuously, we replaced the trial type covariate in the model with a continuous measure of state change based on the average rating collected for each item. In late visual cortex, ratings of object-state change did not reliably predict pattern dissimilarity (P > 0.5). In early visual cortex, state-change ratings reliably predicted visual cortex pattern dissimilarity (t(13) = 2.23, P < 0.05), while the interaction between early and late visual cortex was marginally reliable (t(13) = 1.78, P < 0.1). There were no reliable differences across hemisphere in either early or late visual cortex (P's > 0.5).

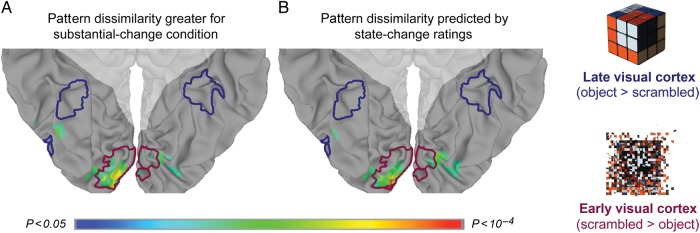

Searchlight Dissimilarity

We used multivoxel searchlight analysis to examine the anatomical specificity to early visual cortex of state-change dependent pattern dissimilarity. We passed a spherical searchlight (Kriegeskorte et al. 2006) with a 3-voxel radius over each voxel in bilateral ventral visual cortex, including inferior occipital cortex, lingual gyrus, and the posterior fusiform and inferior temporal gyri (Fig. 3). Unless constrained by a boundary on the outermost edge of the visual cortex mask, each spherical searchlight comprised 123 voxels. Within each searchlight, we made 3 separate comparisons for each subject and assigned the resulting measurements to the central voxel of the searchlight. For the first comparison, we used data from the perceptual localizer task to identify voxels in visual cortex that responded more to scrambled than intact objects, defined as early visual cortex, and vice versa for late visual cortex. The second 2 comparisons were based on data from the state-change task.
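The searchlight size reported above can be checked with a short sketch. It assumes the sphere contains every voxel whose center lies within 3 voxel widths of the central voxel (Euclidean distance, boundary included); the function name `sphere_offsets` is hypothetical.

```python
def sphere_offsets(radius):
    """Integer voxel offsets (dx, dy, dz) lying within `radius` voxels of the center."""
    r = int(radius)
    return [
        (dx, dy, dz)
        for dx in range(-r, r + 1)
        for dy in range(-r, r + 1)
        for dz in range(-r, r + 1)
        # Keep the offset if its squared distance does not exceed radius squared.
        if dx * dx + dy * dy + dz * dz <= radius * radius
    ]


offsets = sphere_offsets(3)
print(len(offsets))  # 123
```

Under that assumption the count matches the 123 voxels per unconstrained searchlight stated in the text. Searchlights centered near the edge of the visual cortex mask would contain fewer voxels, because offsets falling outside the mask are dropped.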

Figure 3.

Pattern-dissimilarity searchlight in visual cortex. (A) Searchlights in which pattern dissimilarity was greater for substantial change condition, and their overlap with the perceptual localizers for early visual cortex (blue) and late visual cortex (red). (B) Searchlights in which pattern dissimilarity was most reliably predicted by the state-change ratings. Shaded areas indicate voxels outside of ventral visual cortex, which were excluded from the searchlight analysis based on anatomical criteria.

In a searchlight analysis of the categorical effect of trial condition, we computed the mean pattern dissimilarity for minimal change and substantial change items, and compared the resulting searchlight maps across subjects in a paired-sample t-test at each searchlight. Searchlight spheres in which pattern dissimilarity was reliably greater for substantial change items (1-tailed P < 0.05) tended to be in lingual and inferior occipital cortex, overlapping extensively with the searchlights for early visual cortex (94 of 141 searchlights), and not at all with the searchlights for late visual cortex (χ²(1, N = 14) = 141.00, P < 0.001, in a test of the null hypothesis that searchlights with state-change dependent pattern dissimilarity overlapped equally with searchlights most responsive to scrambled objects and searchlights most responsive to intact objects; Fig. 3A). In a searchlight analysis of the parametric effect of object-state change on neural dissimilarity, reliable ratings-predicted searchlights overlapped extensively with the searchlights for early visual cortex (66 of 118 searchlights), and did not overlap at all with the searchlights for late visual cortex (χ²(1, N = 14) = 91.62, P < 0.001; Fig. 3B).

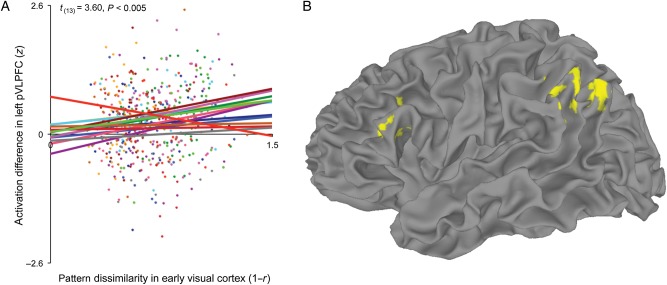

Pattern-predicted Activation

If pattern dissimilarity in early visual cortex reflects competition between object-state representations, then it should couple with conflict-related activation in left pVLPFC when subjects visualized the object after the state change. To test this, across all voxels in each subject's left pVLPFC ROI, we extracted the mean amplitude difference for each trial between the post-action time point and the pre-action time point. We then used linear regression to test the relationship across trials between pattern dissimilarity in early visual cortex and the modulation of mean activation in left pVLPFC. In each subject's linear regression model, a single parameter included the pattern-dissimilarity scores from early visual cortex for all state-change trials. Pattern dissimilarity in early visual cortex positively predicted activation in the left pVLPFC ROI of 13 of 14 subjects (t(13) = 3.60, P < 0.005; Fig. 4A). In a separate analysis, we measured the voxelwise overlap between voxels for which early visual pattern dissimilarity reliably predicted activation (1-tailed P < 0.05), and voxels most reliably responsive to conflict trials in the Stroop color-word interference task. This analysis revealed that 99 of the 232 left pVLPFC voxels in which visual cortex pattern dissimilarity reliably predicted activation overlapped with the 250 pVLPFC voxels most reliably responsive to Stroop conflict (χ²(1, N = 14) = 9.38, P < 0.005, in a test of the null hypothesis that voxels with reliable pattern-predicted activation were independent from voxels most sensitive to Stroop conflict; Supplementary Fig. 2). We conducted a control analysis to ensure that underlying signal-to-noise differences across pVLPFC voxels did not drive the observed overlap between pattern-predicted activation and Stroop conflict. Specifically, we calculated the temporal signal-to-noise ratio (TSNR) of each voxel in left pVLPFC by dividing the mean of each time series by its standard deviation (Murphy et al. 2007). Only 62 of the 232 voxels with the highest TSNR overlapped with the 250 voxels most reliably responsive to Stroop conflict, significantly fewer than the 99 voxels that overlapped for pattern-predicted activation and Stroop conflict (χ²(1, N = 14) = 13.02, P < 0.001).
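The computations described in this paragraph can be illustrated with a minimal sketch. It is not the analysis code used in the study: the function names are hypothetical, the use of the sample standard deviation for TSNR is an assumption (the text specifies only "mean divided by standard deviation"), and the sketch covers just the per-subject regression slope, the across-subject 1-sample t statistic, and the TSNR of a single voxel.

```python
import math
import statistics


def ols_slope(x, y):
    """Least-squares slope for predicting y (e.g., the per-trial change in
    pVLPFC activation) from x (e.g., per-trial pattern dissimilarity)."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    return sxy / sxx


def one_sample_t(values):
    """t statistic (df = n - 1) for the null hypothesis that the mean of
    `values` (e.g., one slope per subject) is zero."""
    n = len(values)
    return statistics.fmean(values) / (statistics.stdev(values) / math.sqrt(n))


def tsnr(time_series):
    """Temporal signal-to-noise ratio of one voxel: mean over SD.
    Assumption: the sample (n - 1) standard deviation is used."""
    return statistics.fmean(time_series) / statistics.stdev(time_series)
```

With one slope per subject, `one_sample_t` on the 14 slopes would yield a statistic with 13 degrees of freedom, matching the form of the t(13) values reported here.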

Figure 4.

Pattern-predicted activation in left pVLPFC and across the cortical surface. (A) Early visual cortex pattern dissimilarity positively predicted the level of activation in the left pVLPFC ROI for 13 of 14 subjects. Data and linear trend for each subject are plotted in a single color. (B) In a whole-brain analysis, 2 reliable clusters of pattern-predicted activation were found: 1 cluster in left pVLPFC (peak: −43.5, 16.5, 17.5), and 1 cluster in left vPPC (peak: −46.5, −58.5, 41.5). There were no statistically reliable voxel clusters for which early visual cortex pattern dissimilarity negatively predicted activation. Minimum cluster extent was determined by Monte Carlo simulation in order to correct for multiple comparisons at 2-tailed P < 0.05.

To summarize: rated object-state change reliably predicted pattern dissimilarity in early visual cortex, and pattern dissimilarity in early visual cortex reliably predicted activation in left pVLPFC. However, in addition to the pattern-mediated relationship between state-change ratings and left pVLPFC activation, rated state change directly predicted activation in the Stroop-defined pVLPFC ROI (t(13) = 2.26, P < 0.05). We used multiple regression to conduct a mediation analysis in order to test whether pattern dissimilarity in early visual cortex predicted activation in left pVLPFC above and beyond that predicted by the stimulus ratings. In a linear model with 2 predictor variables (visual cortex pattern dissimilarity and state-change ratings), pattern dissimilarity remained a reliable predictor of the left pVLPFC response (t(13) = 3.41, P < 0.005). This indicates that the effect of pattern dissimilarity in early visual cortex on BOLD activation in left pVLPFC reflects a coupling of these 2 brain areas, rather than mutually independent responses to the same stimuli.
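The logic of the 2-predictor model can be sketched as follows. This is an illustrative sketch rather than the code used in the study, and the function name and argument names are hypothetical. It returns the partial regression coefficients for 2 predictors by solving the normal equations on mean-centered data, so the coefficient for pattern dissimilarity reflects what it predicts once the ratings are accounted for.

```python
import statistics


def two_predictor_slopes(x1, x2, y):
    """Partial regression coefficients (b1, b2) for y ~ b0 + b1*x1 + b2*x2.

    Here x1 could be per-trial pattern dissimilarity, x2 the state-change
    ratings, and y the per-trial change in pVLPFC activation.
    """
    m1, m2, my = statistics.fmean(x1), statistics.fmean(x2), statistics.fmean(y)
    c1 = [v - m1 for v in x1]
    c2 = [v - m2 for v in x2]
    cy = [v - my for v in y]

    # Sums of squares and cross-products of the centered variables.
    s11 = sum(a * a for a in c1)
    s22 = sum(b * b for b in c2)
    s12 = sum(a * b for a, b in zip(c1, c2))
    s1y = sum(a * v for a, v in zip(c1, cy))
    s2y = sum(b * v for b, v in zip(c2, cy))

    det = s11 * s22 - s12 ** 2
    if det == 0:
        raise ValueError("predictors are perfectly collinear")

    b1 = (s22 * s1y - s12 * s2y) / det
    b2 = (s11 * s2y - s12 * s1y) / det
    return b1, b2
```

Under this sketch, each subject would contribute one `b1` value, and those values would then be tested against zero across subjects.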

To examine the anatomical specificity of the relationship between early visual cortex and activation in left pVLPFC, and to identify additional cortical regions for which activation varied with visual cortex pattern dissimilarity, we used early visual cortex as a seed for a whole-brain pattern-predicted activation analysis. By using early visual cortex pattern dissimilarity to predict voxel activation for each trial, we estimated a linear regression coefficient for every voxel in each subject's brain. Left pVLPFC and left vPPC were the only regions with clusters of activation reliably predicted by early visual cortex pattern dissimilarity, with no reliable clusters of pattern-predicted activation anywhere in the right hemisphere (P < 0.05, corrected; Fig. 4B).

In a separate whole-brain analysis seeded in late visual cortex, which was more responsive to intact objects than to scrambled objects, pattern dissimilarity across trials did not reliably predict activation anywhere in the brain. In the ROI analysis, there was a statistically unreliable relationship between pattern dissimilarity in late visual cortex and left pVLPFC activation (t(13) = 1.35, P = 0.20), although the difference between early and late visual cortex in the degree to which pattern dissimilarity predicted left pVLPFC activation was not statistically significant (P > 0.4).

Patterns in pVLPFC

While the level of activation in left pVLPFC depended on pattern dissimilarity in early visual cortex, pattern dissimilarity in left pVLPFC was not reliably predicted by either early visual cortex pattern dissimilarity (P > 0.07) or the state-change ratings (P > 0.1). Moreover, the relationship between early visual cortex pattern dissimilarity and pVLPFC activation was significantly stronger than the relationship between early visual cortex pattern dissimilarity and pVLPFC pattern dissimilarity (t(13) = 2.98, P = 0.01). Likewise, while pattern dissimilarity in early visual cortex was sensitive to object-state change, state-change ratings did not reliably predict the mean level of activation in either early or late visual cortex (P's > 0.4).

Simulating Actions

Visualizing the altered state of an object (the balloon when inflated) corresponded to increased activation in left pVLPFC. Did visualizing the action that altered the state of that object (the act of inflating) also lead to increased left pVLPFC activation? To test this, for each trial we measured the mean amplitude difference between the pre-action time point and the time point at which subjects visualized the action that minimally or substantially changed the state of the object. Early visual cortex pattern dissimilarity did not reliably predict the difference in left pVLPFC activation between the pre-action and action time points of each trial (t(13) = 0.44, P = 0.67). There was a marginally reliable difference (t(13) = 2.15, P = 0.05) between the null effect of pattern-predicted activation for visualizing actions, and the reliable relationship (P < 0.005, see above) between early visual cortex pattern dissimilarity and left pVLPFC activation for visualizing the altered states of objects.

Pattern Dissimilarity for Distinct Objects

All of the effects in visual cortex reported above were specific to early, but not late (i.e., object-selective) visual cortex. In order to verify that response patterns in late visual cortex carried meaningful information, we conducted a post hoc analysis in which we examined the relationship between pattern dissimilarity and rated dissimilarity of object shapes, across different objects (e.g., “balloon” vs. “blackboard”). We conducted a pairwise dissimilarity analysis (referred to as representational similarity analysis, RSA, in Kriegeskorte et al. 2008; see also Weber et al. 2009) of data collected at the pre-action time point of each trial, when subjects visualized a previously depicted object before any state change. We separately collected shape-dissimilarity ratings for all pairwise combinations of the 40 objects used in the state-change task, in order to construct a ratings-based dissimilarity matrix of the 780 pairwise measurements for the full stimulus set (Supplementary Fig. 3). For each subject, within each of the different ROIs, we measured pattern dissimilarity between brain images corresponding to each of the 40 objects in order to construct a pattern-dissimilarity matrix of the 780 pairwise dissimilarity scores. Then we used Spearman rank correlation to compare the ratings dissimilarity matrix of the objects to the pattern-dissimilarity matrix for each subject for each ROI (see Kriegeskorte et al. (2008) regarding comparisons between high-dimensional pairwise dissimilarity matrices). In 1-sample t-tests of reliability across subjects based on the Fisher r-to-z transformed correlation coefficients, shape-dissimilarity ratings reliably predicted pattern dissimilarity in the ROI for late visual cortex (t(13) = 4.06, P = 0.001), but not in either early visual cortex or left pVLPFC (P's > 0.3). Due to the nonindependence of pairwise dissimilarity matrices, we ran additional analyses on shuffled data to confirm the statistical reliability of the observed relationships between shape-dissimilarity ratings and pattern dissimilarity within each of the ROIs. Specifically, we randomly permuted the object labels of the shape-dissimilarity matrix 10 000 times. For each matrix permutation, we calculated the Spearman rank correlation between the shuffled ratings dissimilarity matrix and the pattern-dissimilarity matrix for each ROI of each subject, and then used 1-sample t-tests of the Fisher r-to-z transformed correlation coefficients to simulate a null distribution. Within the respective null distributions, the actually observed relationship between rated dissimilarity and pattern dissimilarity was statistically reliable for late visual cortex (P < 0.005), and did not approach significance for either early visual cortex or left pVLPFC (P's > 0.3). While the difference between late visual cortex and early visual cortex in the degree to which shape-dissimilarity ratings predicted pattern dissimilarity was not reliable (P > 0.1), the difference between left pVLPFC and late visual cortex was significant (t(13) = 2.37, P < 0.05). Hence, pattern dissimilarity in late visual cortex did not track “within”-object dissimilarity, but it did track “between”-object dissimilarity.
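The matrix comparison and label shuffling described above can be sketched as follows. This is a simplified, hypothetical illustration, not the study's code: all function names are assumptions, and the permutation test shown here operates on a single subject's correlation, whereas the analysis reported above built its null distribution from across-subject t-tests on the shuffled data. Note that 40 objects yield 40 × 39 / 2 = 780 unique pairs.

```python
import math
import random
from itertools import combinations


def upper_triangle(matrix):
    """Unique off-diagonal entries of a symmetric dissimilarity matrix."""
    return [matrix[i][j] for i, j in combinations(range(len(matrix)), 2)]


def ranks(values):
    """1-based ranks; tied values share their average rank."""
    order = sorted(range(len(values)), key=values.__getitem__)
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            result[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return result


def pearson(x, y):
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


def fisher_z(r):
    """Fisher r-to-z transform (undefined for |r| = 1)."""
    return math.atanh(r)


def permute_labels(matrix, rng):
    """Shuffle object labels, reordering rows and columns together."""
    perm = list(range(len(matrix)))
    rng.shuffle(perm)
    return [[matrix[a][b] for b in perm] for a in perm]


def permutation_p(ratings, patterns, n_perm, rng):
    """Observed correlation and the share of label shuffles that match or
    exceed it (single-subject simplification)."""
    pattern_vec = upper_triangle(patterns)
    observed = spearman(upper_triangle(ratings), pattern_vec)
    hits = sum(
        spearman(upper_triangle(permute_labels(ratings, rng)), pattern_vec) >= observed
        for _ in range(n_perm)
    )
    return observed, (hits + 1) / (n_perm + 1)
```

Shuffling labels rather than individual matrix cells keeps each object's row and column intact, which preserves the dependence among the pairwise scores that motivated the shuffled-data analysis.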

Discussion

Visual features of category exemplars can vary. In this study, we explored the consequences of tracking feature variation in a single object after an event that alters it. We identified a neural signature of object-state change in early visual cortex, evident in multivoxel pattern similarity. We used this signature to test a hypothesis regarding left pVLPFC activation evoked during state-change comprehension. Through a mediation analysis, we demonstrated that the relationship between early visual cortex pattern dissimilarity and left pVLPFC activation is not solely explained by their respective stimulus-evoked responses to object-state change, but also by a neural response coupling that extends beyond the stimulus dimension captured by the state-change ratings. In this section, we summarize the key findings, including those for late visual cortex and left vPPC, and discuss their implications for our understanding of visual processing and cognitive control. We suggest that left pVLPFC and left vPPC support complementary top-down signals that, at the same time, dissociate visual cortex patterns so that the appropriate object-state representation is expressed in each specific context, and also bind visual cortex patterns together so that a persisting object representation is maintained across varied contexts.

In early visual cortex, pattern dissimilarity across time points varied continuously with the rated degree to which an object was suggested to be changed in state by a described action. In searchlight analyses, dissimilarity between multivoxel patterns most reliably tracked object-state change in areas of lingual and inferior occipital gyri that responded most strongly to scrambled objects in a separate perceptual localizer task. This set of findings is consistent with sensorimotor models of long-term memory (Martin 2007; Barsalou 2008), in which semantic and episodic information is maintained in the same cortical regions that process the relevant sensory information, and also with recent evidence that early visual cortex is involved in the short-term maintenance of object representations (Harrison and Tong 2009; Serences et al. 2009).

Early visual cortex pattern dissimilarity in turn predicted differences in mean activation in left pVLPFC. While object-state change reliably predicted both pattern dissimilarity in early visual cortex and activation in left pVLPFC, the linear relationship between early visual cortex and left pVLPFC was reliable even when variance accounted for by the state-change ratings was factored out. This coupling of the BOLD response across brain areas suggests that the left pVLPFC activation observed here functionally interacts with competing distributed representations in primary sensory cortex. The direction of this interaction is open to interpretation: it could be that pattern dissimilarity in early visual cortex leads to increased recruitment of left pVLPFC, or that increased recruitment of left pVLPFC leads to increased pattern dissimilarity in early visual cortex. While our analysis approach was to use visual cortex pattern dissimilarity to predict left pVLPFC activation, we suggest that left pVLPFC supports a top-down signal of selective attention that bolsters expression of the contextually appropriate object-state representation.

While early visual cortex pattern dissimilarity predicted changes in left pVLPFC activation for the post-action time point when subjects imagined the altered state of each object, we failed to find a reliable relationship between early visual cortex pattern dissimilarity and left pVLPFC activation when subjects visualized the state-changing actions themselves. In contrast to hypotheses regarding the role for left pVLPFC in planning and mental simulation of actions (Bunge 2004), this null effect for the action time point suggests that left pVLPFC activation described here appears not to reflect mental simulation of actions themselves. Instead, left pVLPFC appears to be involved in managing interference between competing internal representations of an object when an intended action will change its state.

Unlike in early visual cortex, pattern dissimilarity in late visual cortex failed to reliably predict activation in left pVLPFC, and was invariant to the state-change manipulation. However, also unlike early visual cortex, late visual pattern dissimilarity did reliably correlate with visual dissimilarity among the various objects presented to each subject across different trials. At the pre-action time point, the more visually dissimilar a pair of objects was rated, the more dissimilar the patterns of activation in the late visual cortex ROI. At the same time, shape-dissimilarity judgments failed to predict between-object pattern dissimilarity in early visual cortex. Inferences regarding the dissociation of early and late visual cortex are limited because 1) the interactions between early visual cortex and late visual cortex in the degree to which object change predicted pattern dissimilarity were only marginally reliable (P < 0.1) for analysis based on condition as well as for analysis based on ratings, and 2) while both the rated dissimilarity and the pattern dissimilarity corresponding to distinct objects involved subjects viewing photographs of each object, rated dissimilarity and pattern dissimilarity for object-states were each based on the dissimilarity between an object photograph and an imagined altered state. Notwithstanding these limitations, the observed dissociation between visual areas suggests a possible neural basis for the distinction between objects and object states. While patterns in late visual cortex may reflect more abstract visual information that distinguishes between distinct objects (Grill-Spector and Malach 2004), patterns in early visual cortex may help distinguish between distinct states of a single object as it changes over time. Since perceptual invariance across object states is likely related to perceptual invariance across object viewpoints (Grill-Spector et al. 1999), future studies that include photographs of postchange as well as prechange object states may distinguish state-change invariance from viewpoint invariance within hierarchical models of object perception (Riesenhuber and Poggio 1999). Critically, however, while we may predict similar early visual cortex dissimilarity effects for imagined changes in object viewpoint as for imagined changes in object state, because alternative viewpoints of an object are easily combined into a single object (Serre et al. 2007), we would not necessarily predict viewpoint-dependent pattern dissimilarity to drive left pVLPFC activation in the same way as state-dependent pattern dissimilarity.

While early visual cortex pattern dissimilarity predicted left pVLPFC activation, it did not reliably predict left pVLPFC pattern dissimilarity. Indeed, the relationship between early visual cortex pattern dissimilarity and left pVLPFC activation is significantly stronger than the relationship between early visual cortex pattern dissimilarity and left pVLPFC pattern dissimilarity. Likewise, left pVLPFC representations appeared not to be specific to stimulus features that vary across either object states or distinct objects, as we failed to find a correlation between left pVLPFC pattern dissimilarity with either the state-change ratings or the between-object shape-dissimilarity ratings. These findings are consistent with previous neuroimaging (Jonides et al. 1998; D'Esposito et al. 1999), patient (Thompson-Schill et al. 2002), and transcranial magnetic stimulation (Feredoes et al. 2006; Feredoes and Postle 2010) studies suggesting that left pVLPFC recruitment depends on working memory interference independent of working memory content or load. While it seems unlikely that left pVLPFC activation in the present study depended on item-specific content, since the task for every item was the same (viz., to visualize the post-action state of the object), the data are consistent with active-maintenance models of prefrontal cortex (Miller and Cohen 2001; O'Reilly et al. 2010), in which patterns of neural activation are task-specific, rather than item-specific. Visualizing the post-action state of an object is more than a trivial task; it requires the subject to ignore the salient yet contextually inappropriate memory of an object photograph in favor of the weaker imagined representation of the same object in a different state.

The conception of left pVLPFC as the source of a task-specific bias signal, as evidenced here by pattern-predicted activation, may bear on recent findings regarding the relationship between left pVLPFC activation and object-state change during sentence comprehension (Hindy et al. 2012). In that experiment, described objects were generally changed from a canonical state for which subjects had a strong semantic representation, to a less typical state for which the semantic representation was sparser. Therefore, because of its relative strength, the initially activated object-state representation may have remained salient even after the contextually appropriate representation had been computed. (See Hindy et al. 2012 for discussion of the distinction between maintaining and selecting between simultaneously activated object-state representations and computing and maintaining just a single contextually appropriate object-state representation; we argued there that our data were not straightforwardly explainable if event comprehension involves maintaining just a single object-state representation, and also appeared incompatible with an account in which the effects in left pVLPFC were due to increased load in the substantial change condition.) Indeed, neural dissimilarity between competing distributed patterns in sensory cortex may also underlie this previously observed effect of object-state change on left pVLPFC activation.

The specificity of effects to left pVLPFC in each dataset may be partially tied to the conceptual tasks used in each experiment. If subjects had instead viewed a photograph of each post-action object state, we may have expected right pVLPFC to be preferentially recruited for directing attention and resolving conflict between specific episodic representations (Dobbins and Wagner 2005; Kuhl et al. 2007). Because the task used here required subjects to attend to semantic information about objects and action consequences, left pVLPFC may have been specifically recruited to modulate competing semantic representations.

In biased competition models of semantic representation (Kan and Thompson-Schill 2004), top-down signals based on a task representation constrain competitive interactions between mutually inhibitory ensembles of interconnected neurons. Through excitatory pathways, activation in left pVLPFC increases the firing rate of specific neural populations in low-level visual cortex that code for specific task-relevant features that, in turn, inhibit the firing rate of other surrounding neural populations. Indeed, biased competition may be a general mechanism reflected in the left pVLPFC response to conflict in various tasks such as verb generation (Thompson-Schill et al. 1997), control of proactive interference (Jonides and Nee 2006), resolution of lexical (Hindy et al. 2009) and syntactic (Novick et al. 2005) ambiguity, and action planning when alternative actions are possible (Donohue et al. 2007), or when an intended action may result in changes to a stimulus. Pattern dissimilarity in visual cortex may thus reflect the goal-directed expression of the contextually appropriate object-state representation, and its separation from the initial contextually inappropriate representation (enhancing the distinction between the 2 representations). And at the same time that left pVLPFC processes keep object-state representations separate so that the appropriate representation is expressed in each specific context, processes elsewhere in the brain may keep object-state representations bound together across varied contexts.

Outside of left pVLPFC, left vPPC was the only other cortical region in which visual cortex pattern dissimilarity reliably predicted activation when subjects visualized altered states of objects. As with any unpredicted finding, our interpretation of this result is accompanied by a dash of caution. Notably, however, this finding is consistent with prior evidence that implicates left vPPC in conceptual combination (Binder et al. 2009; Shimamura 2011), in tasks such as integrating jumbled or disconnected words and information across contexts into a coherent narrative (Xu et al. 2005; Humphries et al. 2007). The notion of conceptual combination or binding, in the current context, is intriguing: we conjecture that through top-down feedback from left vPPC and left pVLPFC, the frequently changing sensory instantiations of an object can be bound to a stable and persisting object representation, while interference between incompatible alternative instantiations is minimized (Kahneman et al. 1992; Takahama et al. 2010).

In general, while multivoxel pattern analysis has been extremely useful for examining neural representations in sensory and motor cortex (Tong and Pratte 2012), it has been less effective for testing mechanistic models of frontal and parietal function (Riggall and Postle 2012). Pattern-predicted activation combines multivoxel pattern analysis in visual cortex with parametric univariate analysis of activation in frontal and parietal cortex. Here, we used this connectivity-based approach to test a specific account of the role of left pVLPFC in visualizing objects, and in managing the selection of the contextually appropriate state of the object when that object has to be recalled or imagined. Additionally, through parietal cortex findings in a whole-brain analysis, we demonstrated the exploratory usefulness of examining correlations between neural patterns and neural activation.

In summary, we have shown that the neural representation of object states involves a distinct network of brain areas, including areas of the ventral visual cortex that are central to visual perception, an area of lateral prefrontal cortex necessary for cognitive control, and an area of posterior parietal cortex implicated in conceptual combination and binding. While a distributed network across both early and late visual cortex encodes the distinct states corresponding to the same object across time, left pVLPFC processes are necessary to select among incompatible representations, and left vPPC is recruited to bind the distinct states to the same persisting object representation. Pattern-predicted activation analyses suggest that left pVLPFC exerts executive control over posterior brain regions that store and instantiate information, and that biased competition is an integral part of event cognition, enabling selection of the contextually appropriate representation among competing instantiations of the same object as it undergoes change. In this way, top-down projections from prefrontal and parietal cortex may bias visual and semantic representations in the retrieval and binding of conceptual knowledge and visual imagery. When the comprehender must resolve the interference caused by alternative states of a single object, left pVLPFC may act as a top-down modulatory signal to bias candidate neural patterns toward the contextually appropriate representation of the object.

Supplementary Material

Supplementary material can be found at: http://www.cercor.oxfordjournals.org/.

Funding

This research was funded by the NIH (R01 EY021717 to S.L.T.-S.), the ESRC (RES-063-27-0138 and RES-062-23-2749 to G.T.M.A.), and an NSF graduate research fellowship to N.C.H.

Notes

We are grateful to Emily Kalenik for help with stimulus development. Conflict of Interest: None declared.

References

- Aguirre GK. Continuous carry-over designs for fMRI. NeuroImage. 2007;35:1480–1494. doi: 10.1016/j.neuroimage.2007.02.005.

- Altmann GTM, Kamide Y. Discourse-mediation of the mapping between language and the visual world: eye movements and mental representation. Cognition. 2009;111:55–71. doi: 10.1016/j.cognition.2008.12.005.

- Axelrod V, Yovel G. Hierarchical processing of face viewpoint in human visual cortex. J Neurosci. 2012;32:2442–2452. doi: 10.1523/JNEUROSCI.4770-11.2012.

- Badre D, Wagner AD. Semantic retrieval, mnemonic control, and prefrontal cortex. Behav Cogn Neurosci Rev. 2002;1:206–218. doi: 10.1177/1534582302001003002.

- Banich MT, Milham MP, Atchley R, Cohen NJ, Webb A, Wszalek T, Kramer AF, Liang ZP, Wright A, Shenker J. fMRI studies of Stroop tasks reveal unique roles of anterior and posterior brain systems in attentional selection. J Cogn Neurosci. 2000;12:988–1000. doi: 10.1162/08989290051137521.

- Barsalou LW. Grounded cognition. Annu Rev Psychol. 2008;59:617–645. doi: 10.1146/annurev.psych.59.103006.093639.

- Binder JR, Desai RH, Graves WW, Conant LL. Where is the semantic system? A critical review and meta-analysis of 120 functional neuroimaging studies. Cereb Cortex. 2009;19:2767–2796. doi: 10.1093/cercor/bhp055.

- Bunge SA. How we use rules to select actions: a review of evidence from cognitive neuroscience. Cogn Affect Behav Neurosci. 2004;4:564–579. doi: 10.3758/CABN.4.4.564.

- Clarke A, Taylor KI, Devereux B, Randall B, Tyler LK. From perception to conception: how meaningful objects are processed over time. Cereb Cortex. 2012;23:187–197. doi: 10.1093/cercor/bhs002.

- Cox RW. AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res. 1996;29:162–173. doi: 10.1006/cbmr.1996.0014.

- Cree GS, McRae K. Analyzing the factors underlying the structure and computation of the meaning of chipmunk, cherry, chisel, cheese, and cello (and many other such concrete nouns). J Exp Psychol Gen. 2003;132:163. doi: 10.1037/0096-3445.132.2.163.

- D'Esposito M, Postle BR, Jonides J, Smith EE. The neural substrate and temporal dynamics of interference effects in working memory as revealed by event-related functional MRI. Proc Natl Acad Sci. 1999;96:7514. doi: 10.1073/pnas.96.13.7514.

- Dobbins IG, Wagner AD. Domain-general and domain-sensitive prefrontal mechanisms for recollecting events and detecting novelty. Cereb Cortex. 2005;15:1768–1778. doi: 10.1093/cercor/bhi054.

- Donohue SE, Wendelken C, Bunge SA. Neural correlates of preparation for action selection as a function of specific task demands. J Cogn Neurosci. 2007;20:694–706. doi: 10.1162/jocn.2008.20042.

- Eickhoff SB, Stephan KE, Mohlberg H, Grefkes C, Fink GR, Amunts K, Zilles K. A new SPM toolbox for combining probabilistic cytoarchitectonic maps and functional imaging data. NeuroImage. 2005;25:1325–1335. doi: 10.1016/j.neuroimage.2004.12.034.

- Feredoes E, Postle BR. Prefrontal control of familiarity and recollection in working memory. J Cogn Neurosci. 2010;22:323–330. doi: 10.1162/jocn.2009.21252.

- Feredoes E, Tononi G, Postle BR. Direct evidence for a prefrontal contribution to the control of proactive interference in verbal working memory. Proc Natl Acad Sci. 2006;103:19530. doi: 10.1073/pnas.0604509103.

- Grill-Spector K, Kushnir T, Edelman S, Avidan G, Itzchak Y, Malach R. Differential processing of objects under various viewing conditions in the human lateral occipital complex. Neuron. 1999;24:187–203. doi: 10.1016/S0896-6273(00)80832-6.

- Grill-Spector K, Malach R. The human visual cortex. Ann Rev Neurosci. 2004;27:649–677. doi: 10.1146/annurev.neuro.27.070203.144220.

- Harrison SA, Tong F. Decoding reveals the contents of visual working memory in early visual areas. Nature. 2009;458:632–635. doi: 10.1038/nature07832.

- Haxby JV, Gobbini MI, Furey ML, Ishai A, Schouten JL, Pietrini P. Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science. 2001;293:2425–2430. doi: 10.1126/science.1063736.

- Hegdé J, Van Essen DC. Selectivity for complex shapes in primate visual area V2. J Neurosci. 2000;20:RC61. doi: 10.1523/JNEUROSCI.20-05-j0001.2000.

- Hindy NC, Altmann GTM, Kalenik E, Thompson-Schill SL. The effect of object state-changes on event processing: do objects compete with themselves? J Neurosci. 2012;32:5795–5803. doi: 10.1523/JNEUROSCI.6294-11.2012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hindy NC, Hamilton R, Houghtling AS, Coslett H, Thompson-Schill SL. Computer-mouse tracking reveals TMS disruptions of prefrontal function during semantic retrieval. J Neurophysiol. 2009;102:3405. doi: 10.1152/jn.00516.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Humphries C, Binder JR, Medler DA, Liebenthal E. Time course of semantic processes during sentence comprehension: an fMRI study. NeuroImage. 2007;36:924. doi: 10.1016/j.neuroimage.2007.03.059. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Huth AG, Nishimoto S, Vu AT, Gallant JL. A continuous semantic space describes the representation of thousands of object and action categories across the human brain. Neuron. 2012;76:1210–1224. doi: 10.1016/j.neuron.2012.10.014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- January D, Trueswell JC, Thompson-Schill SL. Co-localization of stroop and syntactic ambiguity resolution in broca's area: implications for the neural basis of sentence processing. J Cogn Neurosci. 2009;21:2434–2444. doi: 10.1162/jocn.2008.21179. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jonides J, Nee DE. Brain mechanisms of proactive interference in working memory. Neuroscience. 2006;139:181–193. doi: 10.1016/j.neuroscience.2005.06.042. [DOI] [PubMed] [Google Scholar]

- Jonides J, Smith EE, Marshuetz C, Koeppe RA, Reuter-Lorenz PA. Inhibition in verbal working memory revealed by brain activation. Proc Natl Acad Sci. 1998;95:8410. doi: 10.1073/pnas.95.14.8410. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kahneman D, Treisman A, Gibbs BJ. The reviewing of object files: object-specific integration of information. Cogn Psychol. 1992;24:175–219. doi: 10.1016/0010-0285(92)90007-O. [DOI] [PubMed] [Google Scholar]

- Kan IP, Thompson-Schill SL. Selection from perceptual and conceptual representations. Cogn Affect Behav Neurosci. 2004;4:466–482. doi: 10.3758/CABN.4.4.466. [DOI] [PubMed] [Google Scholar]

- Kriegeskorte N, Goebel R, Bandettini P. Information-based functional brain mapping. Proc Natl Acad Sci. 2006;103:3863–3868. doi: 10.1073/pnas.0600244103. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kriegeskorte N, Mur M, Bandettini P. Representational similarity analysis—connecting the branches of systems neuroscience. Front Syst Neurosci. 2008;2:23–24. doi: 10.3389/neuro.06.004.2008.

- Kuhl BA, Dudukovic NM, Kahn I, Wagner AD. Decreased demands on cognitive control reveal the neural processing benefits of forgetting. Nat Neurosci. 2007;10:908–914. doi: 10.1038/nn1918.

- Lee S-H, Kravitz DJ, Baker CI. Disentangling visual imagery and perception of real-world objects. NeuroImage. 2012;59:4064–4073. doi: 10.1016/j.neuroimage.2011.10.055.

- MacEvoy SP, Epstein RA. Constructing scenes from objects in human occipitotemporal cortex. Nat Neurosci. 2011;14:1323–1329. doi: 10.1038/nn.2903.

- MacLeod CM. Half a century of research on the Stroop effect: an integrative review. Psychol Bull. 1991;109:163. doi: 10.1037/0033-2909.109.2.163.

- Martin A. The representation of object concepts in the brain. Annu Rev Psychol. 2007;58:25–45. doi: 10.1146/annurev.psych.57.102904.190143.

- Martin RC, Cheng Y. Selection demands versus association strength in the verb generation task. Psychon Bull Rev. 2006;13:396–401. doi: 10.3758/BF03193859.

- Medin DL, Schaffer MM. Context theory of classification learning. Psychol Rev. 1978;85:207–238. doi: 10.1037/0033-295X.85.3.207.

- Milham MP, Banich MT, Webb A, Barad V, Cohen NJ, Wszalek T, Kramer AF. The relative involvement of anterior cingulate and prefrontal cortex in attentional control depends on nature of conflict. Cogn Brain Res. 2001;12:467–473. doi: 10.1016/S0926-6410(01)00076-3.

- Miller EK, Cohen JD. An integrative theory of prefrontal cortex function. Annu Rev Neurosci. 2001;24:167–202. doi: 10.1146/annurev.neuro.24.1.167.

- Murphy K, Bodurka J, Bandettini PA. How long to scan? The relationship between fMRI temporal signal to noise ratio and necessary scan duration. NeuroImage. 2007;34:565–574. doi: 10.1016/j.neuroimage.2006.09.032.

- Novick JM, Trueswell JC, Thompson-Schill SL. Cognitive control and parsing: reexamining the role of Broca's area in sentence comprehension. Cogn Affect Behav Neurosci. 2005;5:263. doi: 10.3758/CABN.5.3.263.

- O'Reilly RC, Herd SA, Pauli WM. Computational models of cognitive control. Curr Opin Neurobiol. 2010;20:257. doi: 10.1016/j.conb.2010.01.008.

- Riesenhuber M, Poggio T. Hierarchical models of object recognition in cortex. Nat Neurosci. 1999;2:1019–1025. doi: 10.1038/14819.

- Riggall AC, Postle BR. The relationship between working memory storage and elevated activity as measured with functional magnetic resonance imaging. J Neurosci. 2012;32:12990–12998. doi: 10.1523/JNEUROSCI.1892-12.2012.

- Schrödinger E. Die gegenwärtige Situation in der Quantenmechanik. Naturwissenschaften. 1935;23:823–828. doi: 10.1007/BF01491914.

- Serences JT, Ester EF, Vogel EK, Awh E. Stimulus-specific delay activity in human primary visual cortex. Psychol Sci. 2009;20:207. doi: 10.1111/j.1467-9280.2009.02276.x.

- Serre T, Wolf L, Bileschi S, Riesenhuber M, Poggio T. Robust object recognition with cortex-like mechanisms. IEEE Trans Pattern Anal Mach Intell. 2007;29:411–426. doi: 10.1109/TPAMI.2007.56.

- Shimamura AP. Episodic retrieval and the cortical binding of relational activity. Cogn Affect Behav Neurosci. 2011;11:277–291. doi: 10.3758/s13415-011-0031-4.

- Stroop JR. Studies of interference in serial verbal reactions. J Exp Psychol. 1935;18:643. doi: 10.1037/h0054651.

- Takahama S, Miyauchi S, Saiki J. Neural basis for dynamic updating of object representation in visual working memory. NeuroImage. 2010;49:3394–3403. doi: 10.1016/j.neuroimage.2009.11.029.

- Talairach J, Tournoux P. Co-planar stereotaxic atlas of the human brain. New York: Thieme; 1988.

- Thompson-Schill SL, Bedny M, Goldberg RF. The frontal lobes and the regulation of mental activity. Curr Opin Neurobiol. 2005;15:219–224. doi: 10.1016/j.conb.2005.03.006.

- Thompson-Schill SL, D'Esposito M, Aguirre GK, Farah MJ. Role of left inferior prefrontal cortex in retrieval of semantic knowledge: a reevaluation. Proc Natl Acad Sci. 1997;94:14792. doi: 10.1073/pnas.94.26.14792.

- Thompson-Schill SL, D'Esposito M, Kan IP. Effects of repetition and competition on activity in left prefrontal cortex during word generation. Neuron. 1999;23:513–522. doi: 10.1016/S0896-6273(00)80804-1.

- Thompson-Schill SL, Jonides J, Marshuetz C, Smith EE, D'Esposito M, Kan IP, Knight RT, Swick D. Effects of frontal lobe damage on interference effects in working memory. Cogn Affect Behav Neurosci. 2002;2:109–120. doi: 10.3758/CABN.2.2.109.

- Thompson-Schill SL, Swick D, Farah MJ, D'Esposito M, Kan IP, Knight RT. Verb generation in patients with focal frontal lesions: a neuropsychological test of neuroimaging findings. Proc Natl Acad Sci. 1998;95:15855–15860. doi: 10.1073/pnas.95.26.15855.

- Tong F, Pratte MS. Decoding patterns of human brain activity. Annu Rev Psychol. 2012;63:483–509. doi: 10.1146/annurev-psych-120710-100412.

- Wang L, Mruczek R, Arcaro M, Kastner S. Visual topographic probability maps (VTPM) in standard MNI space. Presented at the Society for Neuroscience Annual Meeting; October 13–17, 2012; New Orleans, LA.

- Weber M, Thompson-Schill SL, Osherson D, Haxby J, Parsons L. Predicting judged similarity of natural categories from their neural representations. Neuropsychologia. 2009;47:859–868. doi: 10.1016/j.neuropsychologia.2008.12.029.

- Wilkinson F, James TW, Wilson HR, Gati JS, Menon RS, Goodale MA. An fMRI study of the selective activation of human extrastriate form vision areas by radial and concentric gratings. Curr Biol. 2000;10:1455–1458. doi: 10.1016/S0960-9822(00)00800-9.

- Xu J, Kemeny S, Park G, Frattali C, Braun A. Language in context: emergent features of word, sentence, and narrative comprehension. NeuroImage. 2005;25:1002. doi: 10.1016/j.neuroimage.2004.12.013.

Associated Data
