Abstract

One of the key benefits of using cochlear implants (CIs) in both ears rather than just one is improved localization. It is likely that in complex listening scenes, improved localization allows bilateral CI users to orient toward talkers to improve signal-to-noise ratios and gain access to visual cues, but to date, that conjecture has not been tested. To obtain an objective measure of that benefit, seven bilateral CI users were assessed for both auditory-only and audio-visual speech intelligibility in noise using a novel dynamic spatial audio-visual test paradigm. For each trial conducted in spatially distributed noise, an auditory-only cueing phrase spoken by one of four talkers was first presented from one of four locations. Shortly afterward, a target sentence spoken by the same talker and from the same location as the cueing phrase was presented; depending on the test configuration, the target was either audio-visual or audio-only. During the target presentation, visual distractors were added at the other spatial locations. Results showed that in terms of speech reception thresholds (SRTs), the average improvement for bilateral listening over the better-performing ear alone was 9 dB for the audio-visual mode and 3 dB for audition alone. Comparison of bilateral performance for the audio-visual and audition-alone modes showed that inclusion of visual cues led to an average SRT improvement of 5 dB. For unilateral device use, no such benefit arose, presumably because of the greatly reduced ability to localize the target talker and thereby acquire visual information. The bilateral CI speech intelligibility advantage over the better ear in the present study is much larger than that previously reported for static talker locations and indicates greater everyday speech benefits and a more favorable cost-benefit ratio than estimated to date.

Keywords: bilateral cochlear implant, speech intelligibility, audio-visual, localization

Introduction

It is well established that the addition of visual information can improve speech understanding in noise, both for listeners with normal hearing and for users of hearing aids and cochlear implants (e.g., Sumby and Pollack 1954; Erber 1972; Summerfield 1979; Desai et al. 2008). However, in noisy social settings, participants in a conversation are located in different places, and access to visual cues requires the ability to quickly localize each new talker of interest. Favorable orientation toward the target can also improve the signal-to-noise ratio (SNR) at an ear when the head is positioned to act as an acoustic barrier between the noise source and that ear (e.g., Shaw 1974), whereas adverse orientation can degrade SNRs, particularly for unilateral listening, for which the option to attend either ear is unavailable. One of the two main advantages of using cochlear implants in both ears rather than only one is the ability to localize sound sources (e.g., van Hoesel and Tyler 2003; Nopp et al. 2004; Laszig et al. 2004; Tyler et al. 2007; Grantham et al. 2007; Neuman et al. 2007; Litovsky et al. 2009). The other reported benefit is improved speech understanding in noise by attending the better ear when SNRs differ at the two ears. Additional benefits from using both ears together are small in cochlear implant (CI) users (e.g., Gantz et al. 2002; Müller et al. 2002; van Hoesel and Tyler 2003; Laske et al. 2009; Schleich et al. 2004; Ramsden et al. 2005; Litovsky et al. 2006, 2009; Ricketts et al. 2006; Buss et al. 2008; Koch et al. 2009; Laszig et al. 2004; Loizou et al. 2009; Culling et al. 2012; see van Hoesel 2011, 2012 for overviews), unlike in listeners with normal hearing (e.g., Culling et al. 2004; Hawley et al. 2004). However, the assessment of speech benefits with bilateral implants to date has been limited to audio-only experiments with known target azimuths.
In social settings, noise levels will often be similar at the two ears due to the diffuse nature of the noise and reverberation, which will reduce the better-ear-listening benefit available to bilateral CI users. Nevertheless, self-report studies consistently demonstrate a subjective preference for bilateral CI use (e.g., Summerfield et al. 2006; Litovsky et al. 2006; Wackym et al. 2007; Laske et al. 2009; Tyler et al. 2009) as well as reduced perceived handicap (Noble et al. 2008) in social situations. It seems likely, therefore, that much of the perceived benefit of bilateral CI use in social settings derives from the greatly improved ability to localize sound sources with two CIs compared with only one, because localization allows listeners to quickly adjust their orientation to improve SNR and, perhaps more importantly, to gain access to visual cues. In contrast, unilateral CI users who cannot localize will be at a substantial disadvantage. To assess that hypothesis, bilateral CI users were tested for speech intelligibility in noise using a novel dynamic spatial audio-visual (AV) test paradigm.

Methods

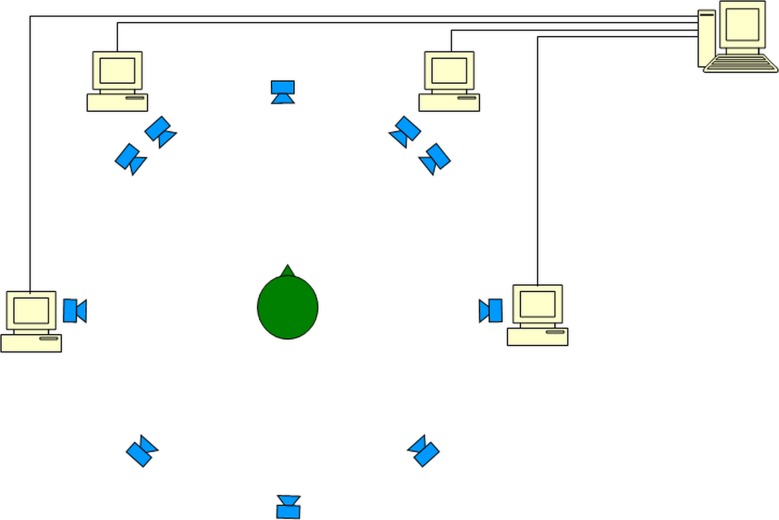

Seven experienced bilateral CI users were tested for speech understanding in noise using their own clinical sound processors, under ethical approval by the Royal Victorian Eye and Ear Hospital Human Research Ethics Committee, Melbourne, Australia. Prior to testing, approximate loudness balance between the ears was checked for sound sources directly in front of the listener to avoid any confounding effect of large mapping mismatches across ears. Tests were administered using a custom-built audio-visual system comprising five computers, four video screens, and ten loudspeakers, four of which coincided in azimuth with the video screens (Fig. 1). All loudspeakers were placed at a distance of 1.7 m from the center of the listener’s head (measured in absentia) in a mildly reverberant sound-treated room (T60 = 0.25 s) measuring approximately 6 × 5 × 2 m (length × width × height). The four screens measured 15 in. diagonally and were placed at the same radius, just above the loudspeakers. Target materials were audio-visual recordings of naturally spoken Bamford-Kowal-Bench (BKB) sentences (Bench et al. 1979) presented at 65 dB (A-weighted). For each target sentence, one of four native speakers (two male and two female) of Australian English was randomly selected, and the sentence was presented from one of the four screen-and-loudspeaker locations at ±34° and ±90°. Those four locations were chosen to be spaced relatively evenly across the frontal arc, within the constraints of the available hemifield of loudspeakers at about 11° increments in the sound booth. An interfering audio-only (A) noise field was created using continuous dialog from eight additional talkers. Each interfering talker signal was presented from a separate loudspeaker, with the eight loudspeakers spanning a full 360° circle at 45° increments. The loudspeakers at ±90° therefore potentially presented both target and noise signals.
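
The stated geometry can be checked with a short sketch. It assumes that the frontal hemifield array was spaced at 11.25° (the text gives only "about 11°"), which places the ±34° screens three steps and the ±90° screens eight steps from the front; the constant and function names are illustrative. It then confirms that the two positions shared by target and noise (±90°) account for the total of ten loudspeakers.

```python
HEMIFIELD_STEP_DEG = 11.25  # assumed spacing of the frontal array ("about 11 degrees")


def wrap(az: int) -> int:
    """Map an azimuth in degrees to the interval (-180, 180]."""
    az = az % 360
    return az - 360 if az > 180 else az


# Four target screen/loudspeaker positions: 3 and 8 steps either side of 0 degrees.
target_az = sorted(round(s * k * HEMIFIELD_STEP_DEG) for k in (3, 8) for s in (-1, 1))

# Eight interfering talkers on a full circle at 45-degree increments.
noise_az = sorted(wrap(45 * i) for i in range(8))

shared = sorted(set(target_az) & set(noise_az))        # positions presenting both
n_loudspeakers = len(set(target_az) | set(noise_az))   # distinct loudspeakers needed

print(target_az)       # [-90, -34, 34, 90]
print(shared)          # [-90, 90]
print(n_loudspeakers)  # 10
```
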
In addition, a rendering of the resulting reverberant noise field as it would be experienced in a large cafeteria (T60 = 0.6 s, direct-to-reverberant ratio = 3 dB) was calculated using custom software and also presented from the eight loudspeakers at 45° increments. The purpose of the reverberant field was to make the test outcome more relevant to everyday listening conditions, given that localization abilities of CI users have been shown to be affected by reverberation (Kerber and Seeber 2013).
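
The custom software used to render the cafeteria field is not described here. As a generic illustration only, the two stated parameters can be imposed on a toy impulse response: an exponentially decaying Gaussian-noise tail whose level falls by 60 dB over T60 = 0.6 s, scaled so that the direct-to-reverberant energy ratio is 3 dB. The function names, the single-sample direct path, and the 1.5 × T60 tail length are assumptions of this sketch, not properties of the study's renderer.

```python
import numpy as np


def synthetic_rir(fs: int, t60: float, drr_db: float, seed: int = 0) -> np.ndarray:
    """Toy impulse response: a unit direct-path sample followed by an
    exponentially decaying noise tail with the requested T60 and DRR."""
    rng = np.random.default_rng(seed)
    n = int(round(1.5 * t60 * fs))      # assumed tail length: 1.5 x T60
    t = np.arange(1, n + 1) / fs        # tail starts one sample after the direct path
    # Level falls 60 dB over t60: 20*log10(a(t60)) = -60  ->  a(t) = 10**(-3 t / t60)
    tail = rng.standard_normal(n) * 10.0 ** (-3.0 * t / t60)
    # Scale the tail so that 10*log10(E_direct / E_reverb) = drr_db, with E_direct = 1.
    tail *= np.sqrt(10.0 ** (-drr_db / 10.0) / np.sum(tail ** 2))
    return np.concatenate(([1.0], tail))


def drr_db(rir: np.ndarray) -> float:
    """Direct-to-reverberant energy ratio, treating sample 0 as the direct path."""
    return float(10.0 * np.log10(rir[0] ** 2 / np.sum(rir[1:] ** 2)))


rir = synthetic_rir(fs=16000, t60=0.6, drr_db=3.0)
print(f"DRR = {drr_db(rir):.2f} dB")  # DRR = 3.00 dB
```

A dry talker signal `x` could then be rendered with `np.convolve(x, rir)`; a realistic multichannel rendering would additionally need direction-dependent early reflections, which this sketch omits.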

FIG. 1.

Illustration of the audio-visual test system. The test procedure was administered primarily through a master computer that controlled the eight-channel audio-only noise field as well as four additional slave computers, each of which controlled one audio-visual channel.

On each trial, the audio-only noise field was first activated, followed shortly afterward by an audio-only cueing phrase. The cueing phrase consisted of one of four reserved BKB sentences spoken by the same talker, at the same level, and from the same loudspeaker as the subsequent target, and thus provided information about both the direction and the talker of the upcoming audio-visual target sentence. After a short interval following the cueing phrase, the AV target sentence was presented. At the same time, video-only distractors depicting the other three talkers were shown on the three non-target screens. None of the audio interferer dialog matched the video distractors. Although such a mismatch does not usually occur in everyday listening, the primary purpose of the visual distractors was to prevent listeners from identifying the target location simply because it contained the only active video screen. The relevance of that mismatch is mitigated by the consideration that the noise signal contained many talkers that were individually presented at relatively low levels, likely making it very difficult for CI users to hear out individual talkers. During each trial, listeners were instructed to turn toward the loudspeaker that presented the audio-only cueing phrase so that they could attend the subsequent audio-visual target sentence from the same location and spoken by the same talker. They were also informed that the other three screens would be showing videos of the “wrong talkers” during the target sentence. Anecdotal observation of all seven subjects confirmed that they had clearly understood the instructions: they turned in different directions in response to the cueing phrase and then usually held their head fixed toward the presumed target screen while watching the associated video throughout the target sentence.
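
The trial structure described above can be summarized as a small generator. This is a hypothetical sketch, not the control software of the test system: the talker labels are placeholders, and drawing talker and location independently and uniformly at random is an assumption. The key constraint it encodes is that the cue and target share talker and location, while the three remaining talkers appear video-only on the three remaining screens.

```python
import random
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical labels for the four target talkers (two male, two female).
TALKERS = ("M1", "M2", "F1", "F2")
# Azimuths (degrees) of the four screen-and-loudspeaker positions.
SCREENS_DEG = (-90, -34, 34, 90)


@dataclass
class Trial:
    talker: str                  # talker of both the cue and the target
    target_az: int               # screen/loudspeaker used for cue and target
    distractors: Dict[int, str]  # non-target azimuth -> talker shown video-only


def make_trial(rng: random.Random) -> Trial:
    """Draw talker and location at random (assumed independent and uniform),
    then assign the three remaining talkers to the three remaining screens."""
    talker = rng.choice(TALKERS)
    target_az = rng.choice(SCREENS_DEG)
    others = [t for t in TALKERS if t != talker]
    rng.shuffle(others)
    other_screens = [az for az in SCREENS_DEG if az != target_az]
    return Trial(talker, target_az, dict(zip(other_screens, others)))


def trial_events(trial: Trial) -> List[Tuple[str, object]]:
    """Event order within one trial; the last two events start together."""
    return [
        ("noise_on", None),                                   # noise field starts first
        ("cue_audio_only", (trial.talker, trial.target_az)),  # reserved BKB cueing phrase
        ("pause", None),                                      # short interval
        ("target_av", (trial.talker, trial.target_az)),       # scored AV target sentence
        ("distractors_video_only", trial.distractors),        # simultaneous with target
    ]


for event in trial_events(make_trial(random.Random(0))):
    print(event)
```
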

Intelligibility was assessed using an adaptive procedure that adjusted the total noise level to estimate the SNR that produced 50 % correct responses (SRT50). Each test run comprised the 16 sentences of one test list, and sentences were scored according to the number of target words correct. The initial step size in noise level was 3 dB, which was reduced to 2 dB after six sentences. To ensure that SRTs were reliable indicators of SNR, the noise level during the adaptive procedure was not allowed to drop below 45 dB (A-weighted), corresponding to an SNR of 20 dB for the 65-dB target. Because the number of available AV test materials was limited and lists were fairly short, the SNR for the first sentence was set to +5 dB, which was quite difficult for most listeners. If fewer than half the target words were identified in the first sentence, that sentence was repeated while decreasing the noise level in 3 dB steps until that criterion was satisfied. That procedure increased the likelihood that, from the second sentence onward, SNRs would be reasonably close to the SRT50 value. The subjects’ sound processors were all set to the “everyday listening” setting, which does not include noise reduction. Sound field levels in the experiment were chosen to minimize asymmetric activation of fast-acting automatic gain controls, which might adversely affect localization abilities. Left, right, and bilateral listening conditions were each assessed twice, once in each of two separate test sessions. For each assessment, SNRs for the last ten sentences in the list were averaged to calculate the SRT50, and the SRT50 values from both sessions were averaged to determine the final SRT50 estimate for each condition. During each session, audio-only SRT50 values were also measured for each listening condition using the same procedure, except that visual cues were absent.
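
The adaptive track can be sketched as follows, under several stated assumptions. The text says only that sentences were scored by the number of target words correct, so the up/down rule here (noise raised by one step when at least half the key words are correct, lowered otherwise) is an assumption, as is a fixed count of four key words per sentence and reading "after six sentences" as the step applied after sentence 6. The function `run_list` and the listener models are hypothetical. The noise floor of 45 dB(A) caps the SNR at +20 dB for the 65 dB target.

```python
TARGET_DB = 65.0       # target sentence level, dB(A)
NOISE_FLOOR_DB = 45.0  # noise never drops below 45 dB(A), so SNR <= +20 dB
N_SENTENCES = 16       # sentences per list
N_SCORED = 10          # SRT50 = mean SNR of the last ten sentences


def run_list(score, n_words: int = 4) -> float:
    """Simulate one adaptive list and return the SRT50 estimate in dB SNR.

    `score(snr)` is a caller-supplied listener model returning the number of
    key words (out of `n_words`) repeated correctly at that SNR.
    """
    noise = TARGET_DB - 5.0  # first sentence presented at +5 dB SNR
    snrs = []
    for i in range(N_SENTENCES):
        correct = score(TARGET_DB - noise)
        if i == 0:
            # Repeat the first sentence, lowering the noise in 3 dB steps,
            # until at least half the key words are identified.
            while 2 * correct < n_words and noise > NOISE_FLOOR_DB:
                noise = max(NOISE_FLOOR_DB, noise - 3.0)
                correct = score(TARGET_DB - noise)
        snrs.append(TARGET_DB - noise)
        step = 3.0 if i < 6 else 2.0  # 3 dB steps, reduced to 2 dB after six sentences
        if 2 * correct >= n_words:
            noise += step                              # at least half correct: harder
        else:
            noise = max(NOISE_FLOOR_DB, noise - step)  # fewer than half: easier
    return sum(snrs[-N_SCORED:]) / N_SCORED


def listener(snr, srt=0.0, n_words=4):
    # Hypothetical deterministic listener: all words above its true SRT, one below.
    return n_words if snr >= srt else 1


print(run_list(listener))  # 0.0
```

Following the procedure above, the final SRT50 for a condition would be the mean of two such list estimates, one from each session.
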
Additional AV bilateral SRTs were measured in six of the seven listeners using the same AV procedure, except that target sentences were always presented from the fixed screen/loudspeaker at −34° and listeners were asked to remain oriented in that direction. The cueing phrase was retained in the fixed-location assessment to help listeners identify the target talker. The fixed-location SRTs served to estimate best-case performance under conditions of perfect localization. Results were analyzed using ANOVA models in the Genstat software package, release 16.1.

Results

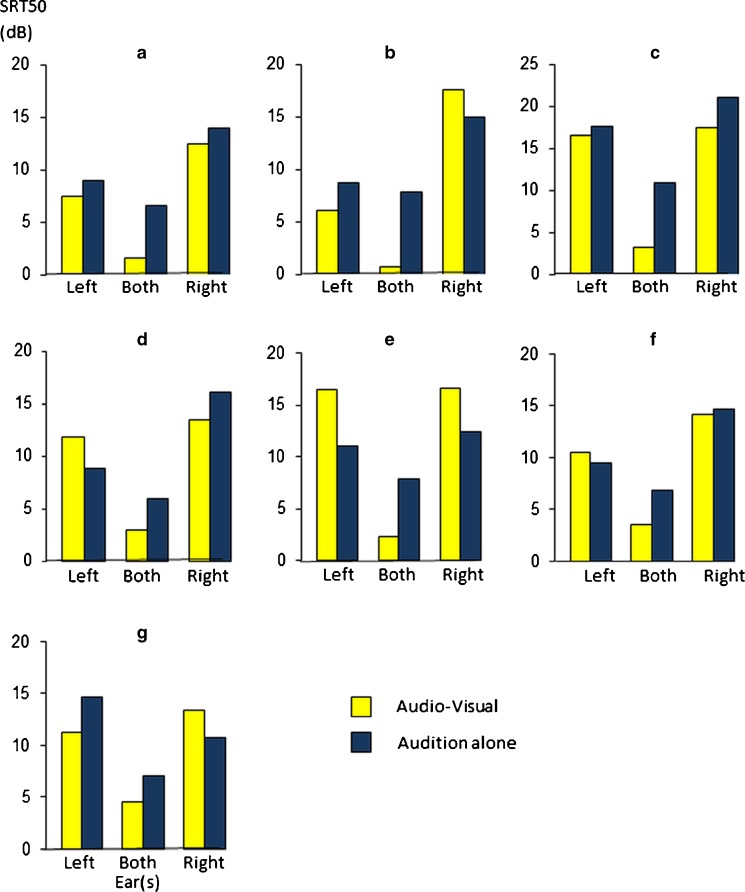

Figure 2 shows individual subject outcomes (one panel per subject) for both audio-visual (AV) and audition-alone (A) modes, and for the left ear alone, both ears, and the right ear alone. Particularly in the audio-visual mode, all seven listeners showed large improvements when using both ears compared with the better outcome with either ear alone, with SRT50 reductions ranging between about 5 and 15 dB. Figure 3 shows the subject-averaged results for each “Ear” (better, bilateral, poorer) and “Mode” (AV, A). Note that in these averages, the better ear was always the one with the better AV score, even though in one subject (G), that ear was not also the better ear for audition alone. This was done to avoid comparing monaural AV and A modes for opposite ears in that subject; its effect on the overall averages is of no consequence for the outcomes discussed. A two-way ANOVA with subject as a random blocking factor was used to evaluate the outcomes and showed significant effects of Ear (better, both, poorer; F(2,30) = 68.3; p < 0.001) and Mode (AV, A; F(1,30) = 4.8; p = 0.034), as well as their interaction (F(2,30) = 5.8; p = 0.007).
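
The analysis described above corresponds to a balanced two-way ANOVA with interaction and with subjects as blocks. The following is a minimal NumPy sketch, not the Genstat procedure used in the study; the function name `rcbd_two_way` is hypothetical, and the input array contains random placeholder values rather than the study's data. With 7 subjects, 3 Ear levels, and 2 Mode levels, it yields the degrees of freedom reported above: (2, 30) for Ear, (1, 30) for Mode, and (2, 30) for their interaction.

```python
import numpy as np


def rcbd_two_way(y: np.ndarray) -> dict:
    """Two-way ANOVA (factors A and B with interaction) with subjects as a
    blocking factor, for a balanced array y[subject, a_level, b_level].
    Returns {term: (F, df_term, df_residual)}."""
    s, a, b = y.shape
    g = y.mean()
    m_s = y.mean(axis=(1, 2))   # subject (block) means
    m_a = y.mean(axis=(0, 2))   # factor A means (e.g., Ear)
    m_b = y.mean(axis=(0, 1))   # factor B means (e.g., Mode)
    m_ab = y.mean(axis=0)       # cell means
    ss = {
        "subject": a * b * np.sum((m_s - g) ** 2),
        "A": s * b * np.sum((m_a - g) ** 2),
        "B": s * a * np.sum((m_b - g) ** 2),
        "AxB": s * np.sum((m_ab - m_a[:, None] - m_b[None, :] + g) ** 2),
    }
    ss_res = np.sum((y - g) ** 2) - sum(ss.values())
    df = {"subject": s - 1, "A": a - 1, "B": b - 1, "AxB": (a - 1) * (b - 1)}
    df_res = (s * a * b - 1) - sum(df.values())
    ms_res = ss_res / df_res
    return {k: (ss[k] / df[k] / ms_res, df[k], df_res) for k in ("A", "B", "AxB")}


# Placeholder values only (7 subjects x 3 Ear x 2 Mode), NOT the study's data.
y = np.random.default_rng(0).normal(size=(7, 3, 2))
for term, (F, df1, df2) in rcbd_two_way(y).items():
    print(f"{term}: F({df1},{df2}) = {F:.2f}")
```

p-values would then follow from the upper tail of the F distribution, for example via `scipy.stats.f.sf(F, df1, df2)`. Treating subjects as random blocks does not change these F ratios in a model without subject-by-treatment interaction terms.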

FIG. 2.

Individual subject SRT50 measures with dynamic target presentation for seven bilateral CI users (denoted A through G). Audio-visual mode results are shown in yellow, and audition-alone results in dark blue. Within each subject’s panel, AV and A results are shown pairwise from left to right for listening with the left ear, both ears, or the right ear, respectively. All seven listeners show large improvements for bilateral over unilateral device use, particularly in the AV condition.

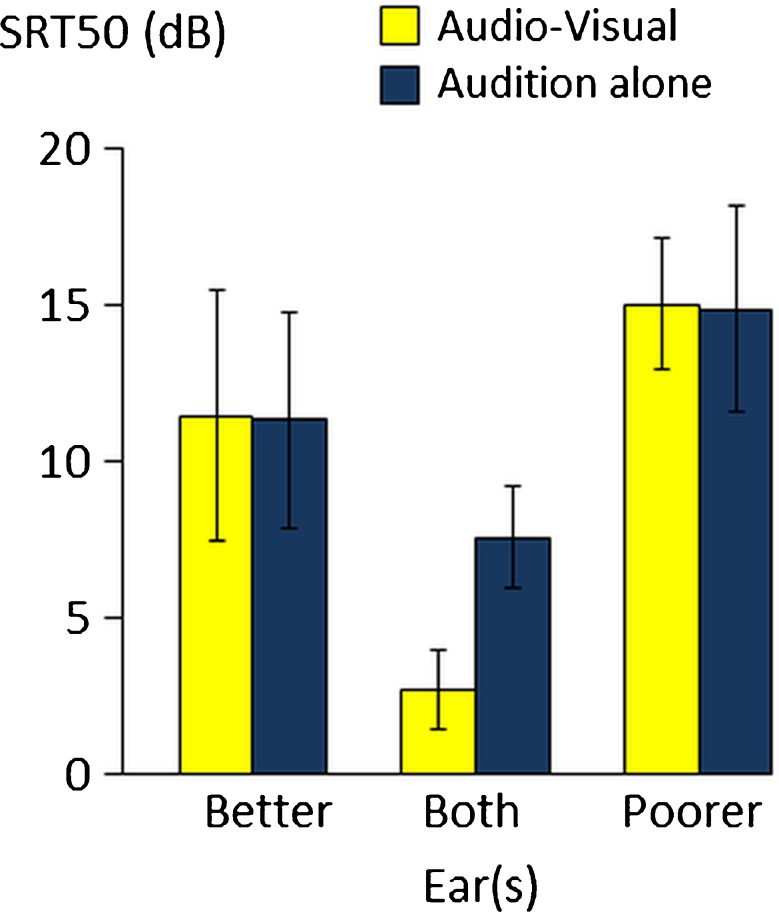

FIG. 3.

Subject-averaged SRT50 values for the seven listeners shown individually in Fig. 2. Audio-visual results are shown in yellow and audition-alone results in dark blue; AV/A columns are paired for listening with the better ear, both ears, or the poorer ear. Error bars show 1 standard deviation across the subject pool in each listening condition.

The subject-averaged improvement for bilateral listening over the better ear alone was about 9 dB for the AV mode, and 3 dB for audition-alone (5 % LSD = 1.7 dB). The significant interaction between Ear and Mode is due to a strong effect of adding visual cues for the bilateral condition, but not for either ear alone. For bilateral device use, the provision of visual cues improved average performance by 5 dB (5 % LSD = 1.4 dB), whereas for unilateral listening, performance was unaffected (<0.2 dB) for either ear.
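
The per-subject quantities behind these averages can be made explicit with a small sketch. It follows the convention stated for Fig. 3, choosing the better ear on the AV score and then using that ear for both modes; the function name, dictionary keys, and the example SRT50 values are hypothetical. The example subject is constructed so that, as for subject G, the ear that is better for AV is not the better ear for audition alone.

```python
from typing import Dict, Tuple

SRTs = Dict[Tuple[str, str], float]  # (ear, mode) -> SRT50 in dB; lower is better


def derived_benefits(srt: SRTs) -> Dict[str, object]:
    """Better-ear choice and SRT50 differences for one subject.

    Ears are "L", "R", "both"; modes are "AV", "A". Positive differences mean
    bilateral listening (for the advantages) or the AV mode (for the visual
    benefits) gives the lower, i.e. better, SRT50.
    """
    # The better ear is chosen on the AV score and then used for both modes.
    better = min(("L", "R"), key=lambda ear: srt[(ear, "AV")])
    poorer = "R" if better == "L" else "L"
    return {
        "better_ear": better,
        # Bilateral advantage over the better ear, per mode.
        "bilateral_adv_AV": srt[(better, "AV")] - srt[("both", "AV")],
        "bilateral_adv_A": srt[(better, "A")] - srt[("both", "A")],
        # Benefit of adding visual cues (A minus AV), per listening condition.
        "visual_benefit_both": srt[("both", "A")] - srt[("both", "AV")],
        "visual_benefit_better": srt[(better, "A")] - srt[(better, "AV")],
        "visual_benefit_poorer": srt[(poorer, "A")] - srt[(poorer, "AV")],
    }


# Hypothetical subject (NOT study data): L is better for AV, R is better for A.
subject = {
    ("L", "AV"): 6.0, ("L", "A"): 5.0,
    ("R", "AV"): 7.0, ("R", "A"): 4.0,
    ("both", "AV"): -2.0, ("both", "A"): 1.0,
}
out = derived_benefits(subject)
print(out["better_ear"], out["bilateral_adv_AV"], out["bilateral_adv_A"])  # L 8.0 4.0
```

Group values such as the 9 dB and 3 dB bilateral advantages would then be means of these per-subject differences across listeners.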

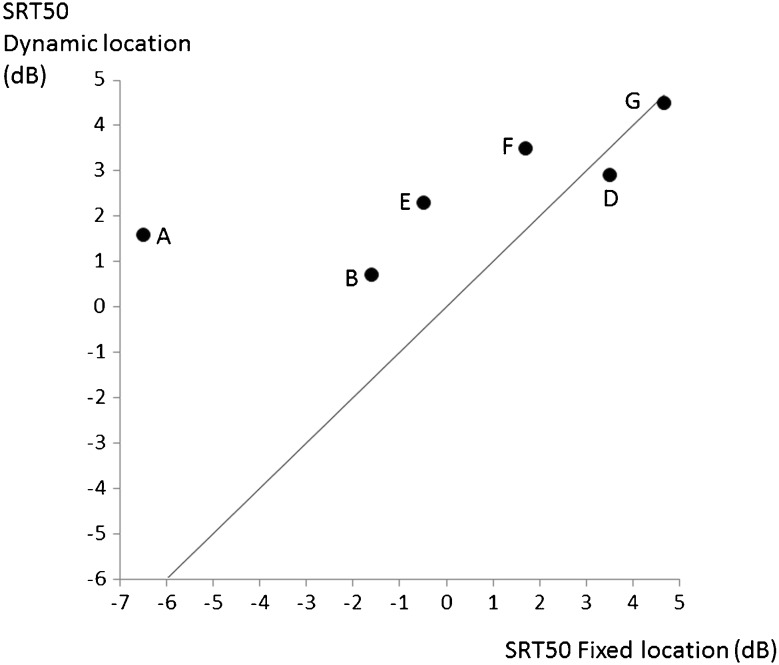

Figure 4 shows the extent to which bilateral SRT50 values increased (i.e., performance decreased) when target location was variable rather than fixed, plotted as a function of the fixed-location SRT50 for the six listeners tested in both conditions. Linear regression analysis shows that fixed-location performance strongly predicts the performance reduction when location is variable (p = 0.002), accounting for 90 % of the variance. However, because performance should not be better with variable locations than with a fixed location, a more appropriate model, likely with an even better fit, would be a two-part piecewise linear function in which the SRT50 change is constrained to be greater than or equal to zero.
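
The two models mentioned above can be compared in a short sketch. The linear model is ordinary least squares; the piecewise alternative is written here as a hinge, ΔSRT = k · max(0, x0 − x) with k ≥ 0, so that predicted changes are never negative and grow as fixed-location performance improves (more negative SRT50). Fitting the breakpoint by grid search, and the function names, are assumptions of this sketch; the data points are hypothetical, not the values in Fig. 4.

```python
import numpy as np


def fit_linear(x: np.ndarray, y: np.ndarray):
    """Ordinary least squares y = a + b*x; returns (a, b, r_squared)."""
    b, a = np.polyfit(x, y, 1)  # polyfit returns the highest-degree coefficient first
    resid = y - (a + b * x)
    r2 = 1.0 - np.sum(resid ** 2) / np.sum((y - y.mean()) ** 2)
    return a, b, r2


def fit_hinge(x: np.ndarray, y: np.ndarray, n_grid: int = 241):
    """Fit y = k * max(0, x0 - x) with k >= 0 by grid search over x0.
    Returns (breakpoint x0, slope k, sum of squared errors)."""
    span = x.max() - x.min()
    best = None
    for x0 in np.linspace(x.min(), x.max() + span, n_grid):
        h = np.maximum(0.0, x0 - x)
        denom = np.sum(h ** 2)
        # Least-squares slope through the origin on h, clamped to be non-negative.
        k = max(0.0, np.sum(h * y) / denom) if denom > 0 else 0.0
        sse = np.sum((y - k * h) ** 2)
        if best is None or sse < best[2]:
            best = (x0, k, sse)
    return best


# Hypothetical (fixed SRT50, SRT50 change) pairs in dB, NOT the study's data.
x = np.array([-6.0, -4.0, -2.0, 0.0, 2.0, 4.0])
y = np.array([7.0, 5.5, 3.0, 1.0, 0.5, 0.0])
a, b, r2 = fit_linear(x, y)
x0, k, sse = fit_hinge(x, y)
print(f"linear: R^2 = {r2:.2f}; hinge: breakpoint {x0:.1f} dB, slope {k:.2f}")
```

Both models have two free parameters, so with only six listeners the comparison would rest on residual error rather than on added flexibility.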

FIG. 4.

Audio-visual SRT50 values for dynamic target locations plotted against fixed-location performance for the six participants who were evaluated in both conditions. Labels A–G identify subjects as in Fig. 2. For subjects who are able to understand AV speech at high noise levels in the fixed target location condition, performance with dynamic targets becomes increasingly worse relative to the fixed-location result (equivalent performance is shown by the solid line). This is expected, because localization becomes increasingly difficult as SNR decreases. Conversely, for poorer performers with positive fixed-location SRT50 values, performance is little affected by varying target location because localization is easier at lower noise levels.

Discussion

The subject-averaged AV results in Fig. 3 show a large bilateral advantage (9 dB) compared with using the better ear alone, which can be attributed to a better ability to localize the talker and accordingly orient toward that talker. The advantage over the better ear for audition alone was smaller (about 3 dB); both advantages were significant. While the AV advantage of using both ears was therefore derived primarily from improved access to the correct visual cues, the improvement in SNR due to a more favorable orientation toward the target also played a role. For unilateral listening, the inability to localize resulted in a complete absence of benefit from adding visual cues when talker location varied. Note that attending the wrong screen as a result of incorrect target localization provides conflicting visual cues that can potentially degrade rather than facilitate AV intelligibility compared with audition alone. That conjecture is supported by spontaneous statements made by some of the participants, as well as by the unilateral results, which show poorer outcomes for AV than A modes in four of the seven subjects (particularly subject E).

Previous speech studies with bilateral CI users have focused on audition alone with static target locations and show that under those conditions, the main benefit of using both ears is the ability to attend whichever ear has the better SNR because of head shadow. In the present study, the noise levels at the two ears were comparable, as will often be the case in everyday environments because of diffuse noise and reverberation, so that head-shadow-derived benefits were reduced. For static signal locations, previous studies have also shown minimal binaural benefit compared with attending the better ear (see the “Introduction” section). In contrast, when target location varies, as in the present study, bilateral device use offers a much larger benefit over the better ear owing to improved localization, particularly for audio-visual presentation, because localization mediates access to visual cues. These conditions are precisely those likely to be encountered in noisy social settings, which may account for the strong preference and reduced perceived handicap with bilateral rather than unilateral device use in such settings, despite the small bilateral benefit over the better ear indicated by previous laboratory speech studies with static target locations. Note that the same reduction in perceived handicap was not seen in bimodal listeners who use a CI with a contralateral hearing aid (Noble et al. 2008), which can be explained by their relatively poor localization abilities compared with bilateral CI users (van Hoesel 2011, 2012). The results from the present study caution against cost-benefit analyses that do not include speech assessments involving dynamic talker locations, as such analyses are likely to substantially underestimate the everyday benefits in speech understanding and ease of communication with bilateral CIs.

The significant interaction between Ear and Mode (AV versus A) in the current study is due to the finding that presentation of visual target cues improved performance when listening with two ears, but not when listening with only one. That difference is readily understood in terms of the large localization advantage usually available with bilateral implants, which allows listeners to know where to turn to see the required visual information with two ears, but not with one.

Comparison of binaural AV outcomes for fixed and variable target locations (Fig. 4) shows that good performers in the fixed-location test, who could tolerate considerably higher noise levels than poor performers, displayed much less advantage over poor performers when target location was variable. This result suggests that performance in the dynamic task was primarily limited by localization abilities in noise, which required positive SNRs of a few decibels. For poorer performers, who require positive SNRs in the fixed-location task, the dynamic task yields similar outcomes because localization is readily available at those noise levels. In contrast, good performers who can achieve negative SNRs in the fixed-location task are not able to localize at those SNRs, so the difference between their fixed and dynamic results is much larger. The loss of the ability to localize at negative SNRs agrees with the localization performance in noise of bilateral CI users evaluated by van Hoesel et al. (2008), who, using a loudspeaker array spanning 180°, found RMS errors in the range of 25° to 35° at an SNR of 0 dB. Similarly, Kerber and Seeber (2012) reported on bilateral CI users who were able to discriminate left from right at SNRs down to about −3 dB. Taken together, these results are encouraging in terms of practical outcomes, because in many everyday situations, noise levels are likely to be limited to comparable levels. At those levels, localization is possible for bilateral but not unilateral implant users, so that the benefit of visual cues to speech understanding is more readily available to the former than to the latter when talker location varies dynamically.
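
The RMS error cited above is the usual summary of localization accuracy: the square root of the mean squared difference between presented and reported azimuths. A minimal sketch follows; the function name is illustrative, and the target and response values are hypothetical rather than data from either study.

```python
import math
from typing import Sequence


def rms_error_deg(targets: Sequence[float], responses: Sequence[float]) -> float:
    """Root-mean-square difference (degrees) between presented and reported azimuths."""
    if len(targets) != len(responses) or not targets:
        raise ValueError("need equal-length, non-empty sequences")
    sq = [(r - t) ** 2 for t, r in zip(targets, responses)]
    return math.sqrt(sum(sq) / len(sq))


# Hypothetical trials on a 180-degree frontal array (NOT data from either study).
targets = [-90, -45, 0, 45, 90]
responses = [-60, -45, 30, 15, 90]
print(f"{rms_error_deg(targets, responses):.1f} deg")  # 23.2 deg
```

Because errors are squared before averaging, a few large confusions (for example, responses on the wrong side) raise the RMS error much more than many small ones.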

Conclusions

When target location varies dynamically, large audio-visual speech intelligibility benefits are available from the use of both ears rather than the better ear alone.

The large bilateral benefit of about 9 dB in this experiment was mainly due to improved access to visual cues, but also to increased SNRs associated with more favorable head orientation, both of which derive from the ability to localize with two implants but not with one.

The bilateral speech benefit over the better ear alone is much larger than reported in previous speech tests using static locations and audition alone.

The results from the present experiment may largely explain the subjective preference for bilateral over unilateral CI use in dynamic social settings.

Acknowledgments

The author gratefully acknowledges the participation of the bilateral CI users who volunteered their time and efforts in this study, as well as assistance in acquiring test materials by Mridula Sharma and provision of the noise field signals by Jörg Buchholz. A previous draft of the manuscript was improved through helpful comments from three anonymous reviewers, Ruth Litovsky, and Bob Cowan, and through discussions with Andrew Vandali. This research is supported by Hearing CRC, a Commonwealth Government Initiative.

References

- Bench J, Kowal A, Bamford J. The BKB (Bamford-Kowal-Bench) sentence lists for partially-hearing children. Br J Audiol. 1979;13(3):108–112. doi: 10.3109/03005367909078884.

- Buss E, Pillsbury HC, Buchman CA, Pillsbury CH, Clark MS, Haynes DS, Labadie RF, Amberg S, Roland PS, Kruger P, Novak MA, Wirth JA, Black JM, Peters R, Lake J, Wackym PA, Firszt JB, Wilson BS, Lawson DT, Schatzer R, D’Haese PS, Barco AL. Multicenter US bilateral MED-EL cochlear implantation study: speech perception over the first year of use. Ear Hear. 2008;29:20–32. doi: 10.1097/AUD.0b013e31815d7467.

- Culling JF, Hawley ML, Litovsky RY. The role of head-induced interaural time and level differences in the speech reception threshold for multiple interfering sound sources. J Acoust Soc Am. 2004;116:1057–1065. doi: 10.1121/1.1772396.

- Culling JF, Jelfs S, Talbert A, Grange JA, Backhouse SS. The benefit of bilateral versus unilateral cochlear implantation to speech intelligibility in noise. Ear Hear. 2012;33(6):673–682. doi: 10.1097/AUD.0b013e3182587356.

- Desai S, Stickney G, Zeng FG. Auditory-visual speech perception in normal-hearing and cochlear-implant listeners. J Acoust Soc Am. 2008;123(1):428–440. doi: 10.1121/1.2816573.

- Erber NP. Auditory, visual, and AV recognition of consonants by children with normal and impaired hearing. J Speech Hear Res. 1972;15:413–422. doi: 10.1044/jshr.1502.413.

- Gantz BJ, Tyler RS, Rubinstein JT, Wolaver A, Lowder M, Abbas P, Brown C, Hughes M, Preece J. Binaural cochlear implants placed during the same operation. Otol Neurotol. 2002;23:169–180. doi: 10.1097/00129492-200203000-00012.

- Grantham DW, Ashmead DH, Ricketts TA, Labadie RF, Haynes DS. Horizontal-plane localization in noise and speech signals by postlingually deafened adults fitted with bilateral cochlear implants. Ear Hear. 2007;28:524–541. doi: 10.1097/AUD.0b013e31806dc21a.

- Hawley ML, Litovsky RY, Culling JF. The benefit of binaural hearing in a cocktail party: effect of location and type of interferer. J Acoust Soc Am. 2004;115:833–843. doi: 10.1121/1.1639908.

- Kerber S, Seeber BU. Sound localization in noise by normal-hearing listeners and cochlear implant users. Ear Hear. 2012;33(4):445–457. doi: 10.1097/AUD.0b013e318257607b.

- Kerber S, Seeber BU. Localization in reverberation with cochlear implants: predicting performance from basic psychophysical measures. J Assoc Res Otolaryngol. 2013;14(3):379–392.

- Koch DB, Soli SD, Downing M, Osberger MJ. Simultaneous bilateral cochlear implantation: prospective study in adults. Cochlear Implants Int. 2009;11(2):84–99.

- Laske RD, Veraguth D, Dillier N, Binkert A, Holzmann D, Huber AM. Subjective and objective results after bilateral cochlear implantation in adults. Otol Neurotol. 2009;30:313–318. doi: 10.1097/MAO.0b013e31819bd7e6.

- Laszig R, Aschendorff A, Stecker M, Müller-Deile J, Maune S, Dillier N, Weber B, Hey M, Begall K, Lenarz T, Battmer RD, Böhm M, Steffens T, Strutz J, Linder T, Probst R, Allum J, Westhofen M, Doering W. Benefits of bilateral electrical stimulation with the Nucleus cochlear implant in adults: 6-month postoperative results. Otol Neurotol. 2004;25:958–968. doi: 10.1097/00129492-200411000-00016.

- Litovsky R, Parkinson A, Arcaroli J, Sammeth C. Simultaneous bilateral cochlear implantation in adults: a multicenter clinical study. Ear Hear. 2006;27:714–731. doi: 10.1097/01.aud.0000246816.50820.42.

- Litovsky RY, Parkinson A, Arcaroli J. Spatial hearing and speech intelligibility in bilateral cochlear implant users. Ear Hear. 2009;30:419–431. doi: 10.1097/AUD.0b013e3181a165be.

- Loizou P, Hu Y, Litovsky RY, Yu G, Peters R, Lake J, Roland P. Speech recognition by bilateral cochlear implant users in a cocktail party setting. J Acoust Soc Am. 2009;125:372–383. doi: 10.1121/1.3036175.

- Müller J, Schön F, Helms J. Speech understanding in quiet and noise in bilateral users of the MED-EL COMBI 40/40+ cochlear implant system. Ear Hear. 2002;23:198–206. doi: 10.1097/00003446-200206000-00004.

- Neuman AC, Haravon A, Sislian N, Waltzman SB. Sound-direction identification with bilateral cochlear implants. Ear Hear. 2007;28:73–82. doi: 10.1097/01.aud.0000249910.80803.b9.

- Noble W, Tyler R, Dunn C, Bhullar N. Hearing handicap ratings among different profiles of adult cochlear implant users. Ear Hear. 2008;29:112–120. doi: 10.1097/AUD.0b013e31815d6da8.

- Nopp P, Schleich P, D’Haese P. Sound localization in bilateral users of MED-EL COMBI 40/40+ cochlear implants. Ear Hear. 2004;25:205–214. doi: 10.1097/01.AUD.0000130793.20444.50.

- Ramsden R, Greenham P, O’Driscoll M, Mawman D, Proops D, Craddock L, Fielden C, Graham J, Meerton L, Verschuur C, Toner J, McAnallen T, Osborne J, Doran M, Gray R, Pickerill M. Evaluation of bilaterally implanted adult subjects with the Nucleus 24 cochlear implant system. Otol Neurotol. 2005;26:988–998.

- Ricketts TA, Grantham DW, Ashmead DH, Haynes DS, Labadie RF. Speech recognition for unilateral and bilateral cochlear implant modes in the presence of uncorrelated noise sources. Ear Hear. 2006;27:763–773. doi: 10.1097/01.aud.0000240814.27151.b9.

- Schleich P, Nopp P, D’Haese P. Head shadow, squelch, and summation effects in bilateral users of the MED-EL COMBI 40/40+ cochlear implant. Ear Hear. 2004;25:197–204.

- Shaw EAG. Transformation of sound pressure level from the free field to the eardrum in the horizontal plane. J Acoust Soc Am. 1974;56:1848–1861. doi: 10.1121/1.1903522.

- Sumby WH, Pollack I. Visual contribution to speech intelligibility in noise. J Acoust Soc Am. 1954;26:212–215. doi: 10.1121/1.1907309.

- Summerfield Q. Use of visual information for phonetic perception. Phonetica. 1979;36:314–331. doi: 10.1159/000259969.

- Summerfield AQ, Barton GR, Toner J, McAnallen C, Proops P, Harries C, Cooper H, Court I, Gray R, Osborne J, Doran M, Ramsden R, Mawman D, O’Driscoll M, Graham J, Aleksy W, Meerton L, Verschuur C, Ashcroft P, Pringle M. Self-reported benefits from successive bilateral cochlear implantation in post-lingually deafened adults: randomized controlled trial. Int J Audiol. 2006;45:S99–S107. doi: 10.1080/14992020600783079.

- Tyler RS, Dunn CC, Witt SA, Noble WG. Speech perception and localization with adults with bilateral sequential cochlear implants. Ear Hear. 2007;28:86S–90S. doi: 10.1097/AUD.0b013e31803153e2.

- Tyler RS, Perreau AE, Ji H. Validation of the spatial hearing questionnaire. Ear Hear. 2009;30:466–474. doi: 10.1097/AUD.0b013e3181a61efe.

- van Hoesel RJM. Bilateral cochlear implants. In: Zeng FG, Popper A, Fay R, editors. Auditory prostheses: new horizons. New York: Springer; 2011. Chapter 2.

- van Hoesel RJM. Contrasting benefits from contralateral implants and hearing aids in cochlear implant users. Hear Res. 2012;288:100–113. doi: 10.1016/j.heares.2011.11.014.

- van Hoesel RJM, Tyler RS. Speech perception, localization, and lateralization with bilateral cochlear implants. J Acoust Soc Am. 2003;113:1617–1630. doi: 10.1121/1.1539520.

- van Hoesel R, Böhm M, Pesch J, Vandali A, Battmer RD, Lenarz T. Binaural speech unmasking and localization in noise with bilateral cochlear implants using envelope and fine-timing based strategies. J Acoust Soc Am. 2008;123:2249–2263. doi: 10.1121/1.2875229.

- Wackym PA, Runge-Samuelson CL, Firszt JB, Alkaf FM, Burg LS. More challenging speech perception tasks demonstrate binaural benefit in bilateral cochlear implant users. Ear Hear. 2007;28:80S–85S. doi: 10.1097/AUD.0b013e3180315117.