Abstract

Objective

Substantial investment in electronic health records (EHRs) has provided an unprecedented opportunity to use clinical decision support (CDS) to increase guideline adherence. To inform efforts to maximize adoption, we characterized the adoption of an otitis media (OM) CDS system, the impact of performance feedback on adoption, and the effects of adoption on guideline adherence.

Study Setting

A total of 41,391 OM visits with 108 clinicians at 16 pediatric practices between February 2009 and August 2010.

Study Design

Prospective cohort study of EHR-based CDS adoption during OM visits, comparing clinicians receiving performance feedback to those receiving none. CDS was available to all physicians; use was voluntary.

Data Collection

Extraction from a common EHR.

Principal Findings

Clinicians and practices used the CDS system for a mean of 21 percent (range: 0–85 percent) and 17 percent (0–51 percent) of eligible OM visits, respectively. Clinicians who received performance feedback reports summarizing CDS use and guideline adherence had a relative increase in CDS use of 9.0 percentage points compared to others (p = .001). CDS adoption was associated with increased OM guideline adherence. Effects were greatest among clinicians with the lowest adherence prior to the study.

Conclusions

Performance feedback increased CDS adoption, but additional strategies are needed to integrate CDS into primary care workflows.

Keywords: Decision support, feedback, electronic medical record, otitis media, quality of care

The investment of $19 billion to promote the adoption of electronic health records (EHRs) by the American Recovery and Reinvestment Act (ARRA) of 2009 has provided an unprecedented opportunity to improve health care quality through health information technology (HIT) (American Recovery and Reinvestment Act 2009; Blumenthal 2009). Within ARRA, the Health Information Technology for Economic and Clinical Health Act (HITECH) authorizes incentive payments through Medicare and Medicaid to individual clinicians and health systems when they use EHRs to improve the delivery of care. These “meaningful use” incentives are substantial, with up to $44,000 available to individual providers through Medicare and $63,750 through Medicaid (Centers for Medicare and Medicaid Services 2010). This support has accelerated EHR adoption with over 50 percent of practices and 80 percent of health systems now using EHRs, up by more than 33 and 71 percent since 2008, respectively (U.S. Department of Health and Human Services 2013).

Among the best studied tools to improve care through EHRs, clinical decision support (CDS) systems provide intelligently filtered, appropriately timed, and actionable information to clinicians at the point of care (Osheroff et al. 2005; Shojania et al. 2009). Once deployed, CDS has resulted in improvements to health care processes, including medication prescribing, vaccination, and the ordering of medical tests (Osheroff et al. 2005; Shojania et al. 2009; Bright et al. 2012), and there is additional evidence that CDS may result in improved outcomes and reduced treatment costs (Bright et al. 2012). Overall, CDS has proven most effective when it provides recommendations within the context of workflows, when alerts appear without delay in an easily accessible location, and when the system requires clinicians to enter minimal or no additional information (Bates et al. 2003; Garg et al. 2005; Kawamoto et al. 2005).

Despite these well-established rules, the magnitude of the benefit of CDS has been modest. A recent systematic review of the impact of CDS at the point of care found a median improvement of 4.2 percent in health care process adherence and 3.8 percent for vaccination and test ordering (Shojania et al. 2009). Studies have shown that as many as 49–96 percent of alerts are overridden or ignored by physicians in a variety of care settings (Weingart et al. 2003; Hsieh et al. 2004; van der Sijs et al. 2006; Lin et al. 2008). Excessive numbers of alerts often result in alert fatigue (Ash et al. 2007), declining clinician responsiveness to a particular type of alert after repeated exposure over a period of time (Embi and Leonard 2012), which might diminish the effectiveness of CDS systems and potentially result in serious consequences for patients (Carspecken et al. 2013).

Physician performance feedback represents a promising strategy to increase the salience of CDS, minimize alert fatigue, and maximize the benefits of CDS for quality. Feedback addresses the well-described inability of physicians to accurately evaluate their own performance (Davis et al. 2006). Previous studies have shown that feedback is most effective when rates of adherence to practice guidelines are low (Jamtvedt et al. 2006a,b), when the information is directly useful for care (Kanouse and Jacoby 1988), and when practitioners are motivated to change (Mugford, Banfield, and O'Hanlon 1991). In particular, feedback that defines “achievable benchmarks” (Kiefe et al. 1998; Weissman et al. 1999) has been found to improve outcomes (Kiefe et al. 2001). While these results are promising, the impact of feedback on clinician use of CDS systems has not been well studied.

To address this gap in the literature, we studied the impact of feedback in improving CDS adoption as part of a larger trial of the effectiveness of CDS for otitis media (OM) (Forrest et al. 2013). OM is the third most common reason for pediatric office visits (Forrest et al. 1999), the leading cause of subspecialist referrals (Froom et al. 1990), and the most common reason for antibiotic prescribing in the United States. OM care is characterized by practice variability, resulting in the overuse of antibiotics (Lyon et al. 1998) and antibiotic resistance (Dagan et al. 2000). By 1 year of age, 60 percent of all children have experienced at least one episode and 17 percent have had three or more (Leibovitz 2003). Ninety percent of all children will have an episode of OM by school entry (Tos 1984). Indicative of the burden of OM, the annual national costs of acute otitis media (AOM) and otitis media with effusion (OME) are $3.0 and $4.0 billion, respectively (Marcy et al. 2001; Shekelle et al. 2003). To improve outcomes for children with AOM and OME, organizations including the American Academy of Pediatrics (AAP), the American Academy of Family Physicians (AAFP), and the Agency for Healthcare Research and Quality (AHRQ) have developed and promulgated guidelines for OM (American Academy of Family Physicians 2004; American Academy of Pediatrics 2004; Shekelle et al. 2010). However, prior studies have documented poor adherence to these guidelines (Garbutt, Jeffe, and Shackelford 2003; Vernacchio, Vezina, and Mitchell 2006, 2007).

In an effort to improve adherence, we developed a multifaceted CDS to promote adherence to guideline-based recommendations individualized to the patient's history and presentation (Forrest et al. 2013). Using a factorial design, we randomized practices to receive CDS and/or clinician performance feedback. In the trial, both CDS and performance feedback proved effective for improving adherence to otitis media guidelines. The present study extends our prior work by focusing exclusively on clinicians who had access to the CDS tool in the larger trial. Specifically, we conducted this study to characterize patterns of adoption of the CDS system (aim 1), assess the impact of performance feedback on CDS adoption by primary care clinicians (aim 2), and measure the impact of CDS use on guideline adherence (aim 3). We hypothesized that performance feedback would increase CDS adoption.

Conceptual Framework

Rogers' Theory of Diffusion of Innovations guided the software development process and our analysis of system use (Rogers 2003). Adoption refers to the decision to make full use of an innovation. Rogers' Theory argues that an innovation will be more widely adopted if it (1) confers a relative advantage compared to existing approaches, (2) is compatible with existing values, experiences, and needs of adopters, (3) has limited complexity vis-a-vis other technologies in use, (4) can be tried on a limited basis, and (5) has observable benefits. In the case of the OM CDS, the system was designed to bring OM guidelines to clinicians with minimal effort, appear exclusively at visits by children with ear problems, gather only the few data elements needed to produce specific recommendations (Bates et al. 2003), and allow for the system to be easily tried by any clinician. Conceptually, feedback in the form of individual performance summaries and comparisons of each clinician to others in his or her practice and the broader clinical network was designed to allow clinicians to readily observe benefits of system use.

Materials and Methods

Data Sources

A single EHR system (EpicCare®, Epic Systems, Inc., Verona, WI, USA), used for documenting OM care at all study practices, served as the primary data source. We extracted data on decision support use from the web service external to the EHR that delivered the OM CDS in this study (Fiks et al. 2012). We then validated metrics on adherence to practice guidelines and other data elements with iterative rounds of review of up to 100 charts by primary care pediatricians on the research team.

Study Design, Setting, and Participants

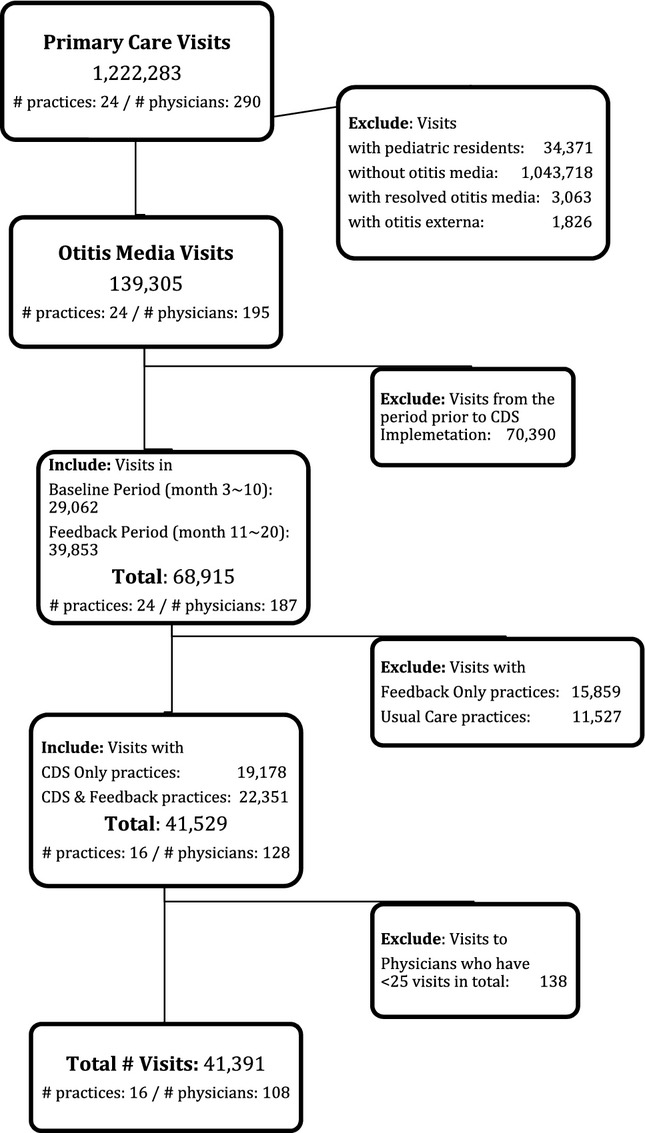

This manuscript reports a secondary analysis of data prospectively collected between February 2009 and August 2010 as part of a cluster randomized trial of OM CDS (Forrest et al. 2013). In brief, we invited all 27 practices within a multistate, academic medical center-owned, primary care practice-based research network caring for more than 200,000 children and adolescents. Following a web-based presentation of the study, 24 practices agreed to participate in the initial trial. From this group, the 16 practices randomized to receive decision support were further randomized at the practice level to physician performance feedback (eight practices) or none (eight practices). Three of these practices were urban, resident teaching practices, with a >5-year history of CDS use and a long-standing program of teaching evidence-based care. Figure 1 outlines how we derived the study sample of 41,391 visits at 16 practices with 108 clinicians. Study participants included all nontrainee pediatric clinicians at involved sites (physicians and nurse practitioners). To focus on clinicians regularly treating OM, we excluded those with fewer than 25 otitis visits during the 21-month study period. Consistent with national guidelines for the management of OM at the time of the study, all visits by children from 2 months through 12 years of age with a diagnosis of AOM or OME were included in the analysis (American Academy of Family Physicians 2004; American Academy of Pediatrics 2004). Members of the research team visited all study sites to explain the study, review OM guidelines, and instruct practitioners on decision support use.

Figure 1.

Study Sample

Notes. The period prior to CDS implementation includes the 14 months prior to the baseline period in this study. The baseline period includes the 12 months after the CDS was implemented. The feedback period includes the 10 months in which half of the practices received CDS only and half received CDS plus clinician performance feedback.

Clinical Decision Support System

Following widely used standards and with input from a local advisory panel that provided advice to adapt national guidelines for local use, we designed, prototyped, and pilot tested the CDS system with clinicians and then implemented it for the study. However, no formal usability testing was conducted. The OM clinical decision support intervention consisted of three primary components (Figure S1). Two components appeared for any visit with an ear-related problem or for any child with a recent OM history. The first component (“history panel”) provided an aggregated history of previous otitis media encounters, including office visits, telephone encounters, audiograms, subspecialist referrals, and surgery. A child's past antibiotic history was also summarized. The second component (“documentation panel”) included a data-gathering tool for recording, according to national guidelines, the OM-related history of present illness and findings from the physical exam. The final component (“order entry panel”) was launched by clinicians and, informed by the history and documentation panels, displayed guideline-based recommendations for treatment, including indicated antibiotics, diagnosis, referral, analgesic use, and a link to a clinically appropriate order set. In addition, the tool provided patient-specific discharge instructions. Through the order entry panel, clinicians could also choose to have the CDS write the history of the present illness, ear exam, assessment and plan, or patient instructions relevant to an OM encounter, reducing the burden of data entry for clinicians. The CDS was programmed using a web service that was not part of the EHR but appeared as part of the visit navigator and gathered data from and returned information through the EHR (Fiks et al. 2012).

Physician Performance Feedback

After an initial 12-month period of CDS use alone (baseline), six rounds of feedback were hand-delivered over 10 months (period 2) to the practice manager of sites randomized to feedback who then distributed the reports to practicing clinicians. Nonfeedback practices had access to the CDS, but there was no ongoing interaction with the study team in lieu of feedback. We added metrics of guideline adherence for OM to the reports in a step-wise fashion. Ultimately, the reports included a measure of overall CDS use based on use of the CDS “documentation” or “order entry” panels, the first measure added, as well as four measures of adherence to AAP AOM guidelines (American Academy of Pediatrics 2004). For AOM, they included (1) appropriate use of amoxicillin as a first-line antibiotic, (2) use of high-dose amoxicillin, (3) pain assessment, and (4) use of analgesia. For each of the metrics as well as overall tool use, the reports included the performance of the clinician, his or her practice, and the network as a whole (Figure S2). Data on the top 10 percent of performers throughout the care network were also included.

Outcome Measures

Aim 1 of this study characterized the adoption of the CDS. The primary outcome of aim 2, the study of the impact of feedback on adoption, was any use of the “documentation” or “order entry” panels as described above. This outcome included clinician use of the CDS to document any aspect of the history, physical, assessment and plan, or patient instructions, as well as use of the tool to place any order, for example, an antibiotic prescription. We were unable to assess how the history panel was used as it was passively visible at all otitis visits and did not require clinician interaction.

Outcomes for aim 3 focused on adherence to OM guidelines and were based on adherence to guideline recommendations for OM pain management, diagnostic documentation, and medication management as adapted from national guidelines. The pain treatment metric was satisfied by the prescription of an analgesic, the recommendation of an analgesic in patient instructions handed to families on discharge, or both. All metrics were considered except avoidance of antihistamines and decongestants, which was achieved at nearly all visits. In addition, we created a comprehensive measure separately for AOM and OME guideline-adherent care. This measure was satisfied when all applicable guideline adherence metrics at an eligible visit, one at which at least three metrics applied, were achieved.
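The comprehensive measure described above can be sketched as a small function. This is only an illustration of the stated rule (a visit is eligible when at least three metrics apply, and the measure is satisfied when all applicable metrics are achieved); the metric names and the use of `None` for "not applicable" are assumptions made for the example, not the study's actual data model.

```python
from typing import Dict, Optional

MIN_APPLICABLE_METRICS = 3  # eligibility threshold stated in the text


def comprehensive_care(metrics: Dict[str, Optional[bool]]) -> Optional[bool]:
    """Return True/False for an eligible visit, or None if the visit is ineligible.

    `metrics` maps a (hypothetical) metric name to True (achieved),
    False (not achieved), or None (metric did not apply at this visit).
    """
    applicable = [achieved for achieved in metrics.values() if achieved is not None]
    if len(applicable) < MIN_APPLICABLE_METRICS:
        return None  # fewer than three applicable metrics: visit not eligible
    return all(applicable)


# Hypothetical AOM visit: four metrics apply and one of them is missed.
visit = {
    "pain_assessed": True,
    "pain_treated": False,
    "amoxicillin_first_line": True,
    "high_dose_amoxicillin": True,
    "penicillin_allergy_antibiotic": None,  # not applicable at this visit
}
result = comprehensive_care(visit)  # False: eligible, but not all metrics achieved
```

Because a single missed metric fails the whole visit, a measure of this kind is stricter than any of its component metrics.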

Covariates

For all visit-level analyses, covariates included factors potentially influencing the association of CDS use and the outcomes of tool use and guideline adherence at the visit level. Patient characteristics included gender, age, and presence of a comorbidity that increases the risk of OM based on national guidelines (American Academy of Family Physicians 2004; American Academy of Pediatrics 2004). Visit characteristics included the visit type (sick or well), whether the visit was for AOM or OME, and the number of diagnoses as a proxy for visit complexity. Clinician characteristics included gender, years in practice (<5, 5–15, >15), the proportion of visits by children <3 years old seen by a clinician, the proportion of OM visits by children with a high-risk comorbidity, the proportion of children with ≥3 diagnoses at an OM visit, and the burden of OM (defined as the average number of otitis media visits per clinician per clinical day). The clinician characteristics described above were the only covariates in the clinician-level analyses for aim 2.

Statistical Analyses

For aim 1, we estimated the proportion of all visits at which the tool was used at both the physician and practice level. We then examined the association between physician, child, and visit characteristics and CDS tool use using a marginal model with a logit link and an independence correlation structure, accounting for clustering at the practice level with robust standard error estimates.
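To illustrate the descriptive step of aim 1, the sketch below computes the proportion of visits with CDS use per clinician and per practice, then averages those proportions within each level. The `Visit` record, its field names, and the toy data are hypothetical; the regression modeling described above is not reproduced here.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, NamedTuple


class Visit(NamedTuple):
    practice: str
    clinician: str
    cds_used: bool


def use_rate_by(visits: Iterable[Visit], unit: str) -> Dict[str, float]:
    """Proportion of visits with CDS use for each practice or clinician."""
    used: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for visit in visits:
        key = getattr(visit, unit)  # unit is "practice" or "clinician"
        total[key] += 1
        used[key] += int(visit.cds_used)
    return {key: used[key] / total[key] for key in total}


# Hypothetical data: clinician A is a frequent user with few visits,
# clinician B a rare user with many visits, both in practice P1.
visits = (
    [Visit("P1", "A", True)] * 3 + [Visit("P1", "A", False)] * 1
    + [Visit("P1", "B", True)] * 1 + [Visit("P1", "B", False)] * 15
    + [Visit("P2", "C", True)] * 2 + [Visit("P2", "C", False)] * 8
)

clinician_mean = mean(use_rate_by(visits, "clinician").values())
practice_mean = mean(use_rate_by(visits, "practice").values())
```

In this toy data the mean across clinicians (0.3375) exceeds the mean across practices (0.2), because a high-use clinician with few visits counts fully in the clinician-level mean but contributes little to the practice's pooled rate. Clinician-level and practice-level means of this kind therefore need not coincide.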

For aim 2, we then modeled the association of feedback with CDS adoption by examining the interaction of the feedback intervention with time. We examined this association at two levels: (1) a visit-level analysis that examined overall patterns and (2) a clinician-month analysis that recognized adoption as a clinician-level process and considered whether clinicians used the tool at all in any given month. Both analyses used marginal models with logit links, clustered by practice. We then compared the importance of feedback as a determinant of tool use for clinicians with low versus high adherence to OM guidelines prior to the study start.
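The clinician-month outcome can be sketched as a simple aggregation of visit records: each clinician-month is marked as a "use" month if the CDS was used at any visit in that month. The record layout below is an assumption for illustration; the marginal models and the feedback-by-time interaction are not shown.

```python
from datetime import date
from typing import Dict, Iterable, Tuple

# (clinician_id, visit_date, cds_used); this field layout is hypothetical
VisitRecord = Tuple[str, date, bool]
ClinicianMonth = Tuple[str, int, int]  # (clinician_id, year, month)


def any_use_by_clinician_month(
    visits: Iterable[VisitRecord],
) -> Dict[ClinicianMonth, bool]:
    """Collapse visits to one record per clinician-month: True if the CDS was used at all."""
    any_use: Dict[ClinicianMonth, bool] = {}
    for clinician, visit_date, cds_used in visits:
        key = (clinician, visit_date.year, visit_date.month)
        any_use[key] = any_use.get(key, False) or cds_used
    return any_use


visits = [
    ("A", date(2009, 3, 2), False),
    ("A", date(2009, 3, 20), True),   # one use is enough to flag March for A
    ("A", date(2009, 4, 7), False),
    ("B", date(2009, 3, 11), False),
]
outcome = any_use_by_clinician_month(visits)
```

Note that in this sketch a clinician-month with no eligible visits simply does not appear in the result, which is one of several possible ways to handle such months.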

For aim 3, we examined the association of tool use and guideline adherence among the entire study sample of clinicians, all of whom had access to the CDS. First, we again used marginal models as described previously to estimate the association between tool use and the achievement (yes/no) of comprehensive guideline-based care as well as each individual metric. Using similar models, we then explored the association between tool use and guideline adherence separately for physicians with low, intermediate, or high performance for each metric prior to CDS implementation.

To contextualize our findings, we gathered qualitative comments regarding the system through informal conversations at each study site each time feedback was delivered and organized the comments according to Rogers' Theory (Rogers 2003).

Our Institutional Review Board approved the study and practices consented to participate. We used R version 2.14.1 (Vienna, Austria) and Stata version 12.1 (College Station, TX, USA) for all analyses.

Results

Study Visits and Population

Table 1 describes the study visits, clinicians, and children. Most OM care was delivered at acute care visits and nearly half of OM visits had a single diagnosis. The two study groups (Feedback and CDS vs. CDS-only) differed in several characteristics. The CDS-only group was slightly more likely to deliver OM care at sick visits, record a diagnosis of AOM, and list a single diagnosis for the encounter. The CDS-only group also had a lower proportion of visits from patients with three or more diagnoses and was less likely to deliver OM care to children >5 years of age.

Table 1.

Visit, Clinician, and Patient Characteristics across Sixteen Practices over the Study Period

| Characteristic | Overall (16 Practices) (n = 41,391), n (%) | Clinical Decision Support + Feedback Group (8 Practices) (n = 22,256), n (%) | Clinical Decision Support Only Group (8 Practices) (n = 19,135), n (%) |
|---|---|---|---|
| Visit characteristics | | | |
| Visit type | | | |
| Sick | 38,585 (93.2) | 20,670 (92.9) | 17,915 (93.6) |
| Well | 2,806 (6.8) | 1,586 (7.1) | 1,220 (6.4) |
| Otitis media diagnosis | | | |
| Acute otitis media (AOM) | 27,807 (76.5) | 14,196 (72.8) | 13,611 (80.8) |
| Otitis media with effusion (OME) | 8,530 (23.5) | 5,294 (27.2) | 3,236 (19.2) |
| Number of diagnoses recorded | | | |
| 0–1 | 18,924 (45.7) | 8,716 (39.2) | 10,208 (53.3) |
| 2–3 | 20,621 (50.1) | 12,278 (55.5) | 8,343 (43.8) |
| 4–5 | 1,683 (4.1) | 1,147 (5.2) | 536 (2.8) |
| ≥6 | 163 (0.4) | 115 (0.5) | 48 (0.3) |
| Clinician characteristics | (n = 108) | (n = 53) | (n = 61) |
| Gender | | | |
| Female | 78 (77.2) | 36 (76.6) | 46 (79.3) |
| Years in practice | | | |
| <5 | 11 (10.9) | 8 (17.0) | 4 (6.9) |
| 5–15 | 44 (43.6) | 18 (38.3) | 28 (48.3) |
| >15 | 46 (45.5) | 21 (44.7) | 26 (44.8) |
| Proportion of visits from patients ≤3 years in age | | | |
| <0.6 | 50 (46.3) | 23 (43.4) | 29 (47.5) |
| ≥0.6 | 58 (53.7) | 30 (56.6) | 32 (52.5) |
| Proportion of otitis media visits from patients with a comorbidity increasing the risk of OM | | | |
| <0.04 | 76 (70.4) | 37 (69.8) | 44 (72.1) |
| ≥0.04 | 32 (29.6) | 16 (30.2) | 17 (27.9) |
| Proportion of visits from patients with ≥3 diagnoses | | | |
| <0.2 | 71 (65.7) | 29 (54.7) | 46 (75.4) |
| ≥0.2 | 37 (34.3) | 24 (45.3) | 15 (24.6) |
| Burden of otitis media visits | | | |
| ≤2 visits per clinical day per clinician | 55 (50.9) | 24 (45.3) | 37 (60.7) |
| >2 visits per clinical day per clinician | 53 (49.1) | 29 (54.7) | 24 (39.3) |
| Patient characteristics | (n = 20,656) | (n = 10,645) | (n = 10,036) |
| Gender | | | |
| Female | 9,962 (48.2) | 5,184 (48.8) | 4,765 (47.6) |
| Age | | | |
| 0–6 months | 1,381 (6.7) | 734 (6.9) | 645 (6.4) |
| >6–≤24 months | 6,607 (32.0) | 3,325 (31.3) | 3,269 (32.7) |
| >2–≤5 years | 6,343 (30.7) | 3,199 (30.1) | 3,137 (31.3) |
| >5 years | 6,325 (30.6) | 3,362 (31.7) | 2,960 (29.6) |
| Comorbidity that increases the risk of otitis media | | | |
| Present | 663 (3.2) | 347 (3.3) | 316 (3.2) |
| Not present | 19,993 (96.8) | 10,273 (96.7) | 9,695 (96.8) |

Adoption

Overall adoption of the OM CDS tool was low, and we found a high level of variability in tool use at the clinician and practice levels. Two clinicians (1.9 percent) in the sample never used the OM tool, and 12 (11.1 percent) used the tool during a 3-month trial period in which the intervention was tested but did not use the CDS during the study period. Forty-two (38.9 percent) of the 108 clinicians in the sample used it at less than or equal to 10 percent of visits, 22 (20.3 percent) clinicians at 10–25 percent of visits, and 30 (27.7 percent) at greater than 25 percent of visits. Overall, clinicians used the OM CDS at a mean of 21.3 percent of eligible OM visits (standard deviation 26 percent; median 8.8 percent, range: 0–84.8 percent).

At the practice level, the CDS tool was used at a mean of 16.8 percent of visits (standard deviation 13 percent; median 15.1 percent, range: 0.0–51.1 percent). All urban teaching practices in the network were above the median rate of use, and this group included the site with the highest level of use.

In multivariable models standardized for characteristics of visits, clinicians, and patients, tool use was significantly more frequent at sick visits than at well visits (17 percent vs. 8 percent), at visits with an AOM diagnosis compared to OME (18 percent vs. 13 percent), and at visits with fewer diagnoses (21 percent at visits with 0–1 diagnosis vs. 9 percent at visits with ≥6) (p < .01 for all). Tool use was also more common among clinicians with a lower OM practice burden, that is, those who care for fewer children with OM (25 percent vs. 14 percent; p = .05), and when the patient was between 24 and 60 months of age compared to ≤6 months, an age group at higher risk of adverse outcomes (18 percent vs. 14 percent; p = .02).

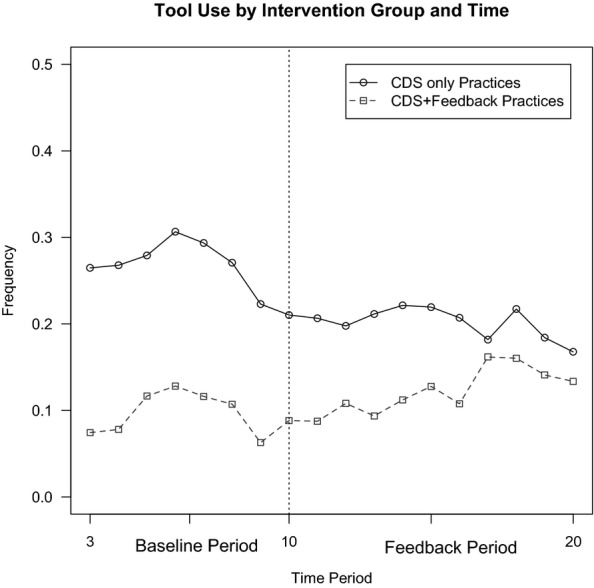

Association of Feedback with CDS Adoption

Feedback reversed a declining trend in CDS use. Figure 2 graphs the frequency of tool use at the visit level for each month of the study for practices randomized to feedback or none. Although the rate of adoption was initially higher in the CDS-only group, the rate declined by 6.8 percentage points during the 10-month feedback period compared to the 12-month baseline. In the group that received feedback, the rate of tool use increased by 2.2 percentage points following the introduction of clinician feedback. The relative difference of 9.0 percentage points was statistically significant (p = .001).

Figure 2.

Comparison of CDS Use before and after Clinician Performance Feedback

Note. The difference-in-differences estimate of the frequency of any tool use at the practice level is 9.0 percentage points, with a p-value < .001 based on a two-sample t-test. In the Baseline Period, all practices had access to CDS only. In the Feedback Period, the CDS+Feedback Practices had access to both interventions.

We also focused on tool use at the clinician-month level, because the decision to use or not use the tool was made by each individual clinician, and the impact of feedback on CDS use was even more pronounced. At the clinician-month level, the rate of tool use declined by 18.9 percentage points in the CDS-only group over time, and increased by 7.8 percentage points in the group receiving feedback, a relative difference of 26.7 percentage points (p = .004). Results were consistent in secondary analyses limited to clinician-months with 10 or more eligible visits. Results were also unchanged in sensitivity analyses in which the outcome was clinician use of the CDS at greater than 5 percent or 10 percent of visits in a given month as opposed to any tool use.
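The relative differences reported above are differences in differences: the change in the feedback group minus the change in the CDS-only group. The arithmetic can be checked with a short sketch using the percentage-point changes quoted in the text; the function name is illustrative, and the reported p-values come from the study's statistical analyses, not from this subtraction.

```python
def difference_in_differences(change_feedback: float, change_cds_only: float) -> float:
    """Change in the feedback group minus change in the comparison group (percentage points)."""
    return change_feedback - change_cds_only


# Visit level: +2.2 points with feedback, -6.8 points with CDS only.
visit_level = difference_in_differences(2.2, -6.8)

# Clinician-month level: +7.8 points with feedback, -18.9 points with CDS only.
clinician_month_level = difference_in_differences(7.8, -18.9)

print(round(visit_level, 1), round(clinician_month_level, 1))  # prints: 9.0 26.7
```

Most of the relative difference at both levels reflects the decline in the CDS-only group, with a smaller contribution from the increase in the feedback group.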

Next, we examined the association of feedback with CDS use among those with low versus high OM guideline adherence prior to the implementation of the CDS. Among the metrics included in the feedback reports, we found that feedback resulted in a greater increase in CDS use among those with low versus high adherence to guidelines for antibiotic prescribing (15.0 percent vs. 4.1 percent increase for first-line amoxicillin and a 12.5 percent increase vs. a 2.0 percent decline in CDS use for high-dose amoxicillin). This difference was not observed for metrics related to pain assessment and management.

Association of CDS Use with OM Guideline Adherence

In multivariable models, we found that CDS use was associated with improved guideline adherence (Table 2). For the comprehensive measures of perfect AOM and OME care, tool use was associated with relative increases of 7.5 (p < .001) and 8.6 (p = .01) percentage points, respectively. For any OM, there was a 48.2 percentage point relative increase in treatment of pain. For AOM, there was a 5.4 percentage point relative increase in use of amoxicillin as a first-line therapy and a 4.9 percentage point increase in the prescribing of an appropriate antibiotic for penicillin-allergic patients. In addition, there was a 17.0 percentage point relative increase in prescribing of high-dose amoxicillin. However, tool use was associated with a slight increase (2.7 percentage points) in the prescription of antibiotics when they were not indicated. For OME, guideline adherence also improved. We found a 12.0 percentage point relative increase in adequate diagnostic evaluation.

Table 2.

Association of Clinical Decision Support Use with Otitis Media Guideline Adherence

| Guideline Adherence Metric* (n = Number of Visits) | CDS Use, 6,752 visits (%) | No CDS Use, 34,639 visits (%) | Adjusted Percentage Difference in Guideline Adherence (CDS Use – No CDS Use) (p-value)† |
|---|---|---|---|
| Comprehensive measures‡ | | | |
| Comprehensive AOM care | | | |
| Achieved (n = 2,888) | 851 (20.0) | 2,037 (10.9) | 7.5 (<.001) |
| Not achieved (n = 19,983) | 3,400 (80.0) | 16,583 (89.1) | |
| Comprehensive OME care | | | |
| Achieved (n = 180) | 54 (14.0) | 126 (3.7) | 8.6 (.01) |
| Not achieved (n = 3,611) | 332 (86.0) | 3,279 (96.3) | |
| All otitis media (OM) | | | |
| OM: Pain assessed | | | |
| Done (n = 39,868) | 6,498 (96.2) | 33,370 (96.3) | −1.2 (.45) |
| Not done (n = 1,523) | 254 (3.8) | 1,269 (3.7) | |
| OM: Pain treated | | | |
| Done (n = 2,730) | 983 (82.0) | 1,747 (32.5) | 48.2 (<.001) |
| Not done (n = 3,849) | 216 (18.0) | 3,633 (67.5) | |
| Acute otitis media (AOM) | | | |
| AOM: Adequate diagnostic evaluation | | | |
| Done (n = 12,969) | 2,559 (53.2) | 10,410 (45.3) | 7.5 (.2) |
| Not done (n = 14,838) | 2,255 (46.8) | 12,583 (54.7) | |
| AOM: Amoxicillin used as first-line therapy | | | |
| Done (n = 15,191) | 3,170 (84.9) | 12,021 (76.6) | 5.4 (.007) |
| Not done (n = 4,228) | 563 (15.1) | 3,665 (23.4) | |
| AOM: Appropriate antibiotic for penicillin-allergic patients | | | |
| Done (n = 1,131) | 223 (81.1) | 908 (76.9) | 4.9 (.04) |
| Not done (n = 324) | 52 (18.9) | 272 (23.1) | |
| AOM: High-dose Amoxicillin prescribed | | | |
| Done (n = 9,608) | 2,484 (77.9) | 7,124 (58.9) | 16.8 (.02) |
| Not done (n = 5,678) | 705 (22.1) | 4,973 (41.1) | |
| AOM: Antibiotics appropriately not prescribed | | | |
| Done (n = 937) | 117 (4.2) | 820 (6.6) | −2.7 (<.001) |
| Not done (n = 14,379) | 2,698 (95.8) | 11,681 (93.4) | |
| Otitis media with effusion (OME) | | | |
| OME: Adequate diagnostic evaluation | | | |
| Done (n = 613) | 198 (19.1) | 415 (5.5) | 12.0 (.01) |
| Not done (n = 7,917) | 836 (80.9) | 7,081 (94.5) | |
| OME: Antibiotics not prescribed | | | |
| Done (n = 2,818) | 263 (88.6) | 2,555 (87.1) | 1.4 (.6) |
| Not done (n = 411) | 34 (11.4) | 377 (12.9) | |

Note: These results describe the difference in achievement (yes/no) of each quality metric between visits where the CDS was used versus visits where the CDS was not used. All clinicians had access to the CDS. Bold text indicates p < .05.

*Guideline adherence metrics were defined based on national guidelines, with input from a local advisory panel.

†A marginal model with a logit link function and robust standard error estimates accounting for clustering in practices was used. Model included tool use (yes/no) and controlled for patient, clinician, and visit-level covariates.

‡Comprehensive care refers to visits eligible for at least three individual quality metrics with all of them achieved.

We next examined the impact of tool use on guideline adherence separately among clinicians with different levels of performance prior to the study start (Table 3). While CDS use improved guideline adherence in all groups, the group with high baseline guideline adherence had the fewest metrics that improved significantly. The magnitude of improvement was greatest in the group with low guideline adherence prior to the study start.

Table 3.

Association of CDS Use with Otitis Media Guideline Adherence Metrics among Clinicians with Different Levels of Otitis Media Guideline Adherence Prior to CDS Implementation

Values are the percentage difference in achievement of each guideline adherence metric among clinicians using versus not using the OM CDS (p-value)†, by level of guideline adherence prior to CDS implementation.

| Guideline Adherence Metric* (n = Number of Visits) | High | Median | Low |
|---|---|---|---|
| Comprehensive measures‡ | | | |
| Comprehensive AOM care (n = 20,860) | −3.8 (.444) | 7.9 (.001) | 10.9 (.011) |
| Comprehensive OME care (n = 3,577) | 6.6 (.217) | 17.8 (.180) | 5.5 (.048) |
| All otitis media (OM) | | | |
| OM: Pain assessed (n = 38,286) | 0.1 (.298) | −0.2 (.661) | 1.0 (.646) |
| OM: Pain treated (n = 5,992) | 13.2 (<.001) | 46.1 (<.001) | 70.1 (<.001) |
| Acute otitis media (AOM) | | | |
| AOM: Adequate diagnostic evaluation (n = 25,472) | −11.8 (.330) | 2.3 (.748) | 30.0 (<.001) |
| AOM: Amoxicillin used as first-line therapy (n = 17,721) | 0.9 (.508) | 6.0 (.001) | 5.5 (.177) |
| AOM: Appropriate antibiotic for penicillin-allergic patients (n = 1,289) | 3.1 (.495) | 2.9 (.400) | 11.6 (.044) |
| AOM: High-dose Amoxicillin prescribed (n = 14,095) | 2.0 (.002) | 13.0 (<.001) | 13.9 (.153) |
| AOM: Antibiotics appropriately not prescribed (n = 14,150) | −7.5 (<.001) | −2.6 (.002) | −0.1 (.954) |
| Otitis media with effusion (OME) | | | |
| OME: Adequate diagnostic evaluation (n = 8,124) | 17.8 (.007) | 1.9 (.419) | 8.7 (.022) |
| OME: Antibiotics not prescribed (n = 3,040) | 1.7 (.532) | 5.9 (.034) | −1.6 (.811) |

Note: These results describe the difference in achievement (yes/no) of each guideline adherence metric between visits where the tool was used versus visits where the tool was not used, separately for clinicians with low, median, or high OM guideline adherence prior to CDS implementation. Overall, those with low guideline adherence prior to the study start benefitted most from using the CDS. For example, CDS use was associated with a 10.9 percentage point improvement in adherence to comprehensive AOM care for those with low guideline adherence prior to the study compared to a 7.9 percentage point improvement among those with median guideline adherence and a drop of 3.8 percentage points among those with high guideline adherence. Bold text indicates p<.05.

*Guideline adherence metrics were defined based on national guidelines, with input from a local advisory panel. Separately for each metric, clinician guideline adherence was defined by examining the 12 months prior to the implementation of the CDS. A single clinician could rate in the highest group for one metric and the median or low group for another.

†A marginal model with a logit link function and robust standard error estimates accounting for clustering in practices was used. Model included tool use (yes/no) and controlled for patient, clinician, and visit-level covariates.

‡Comprehensive guideline adherence refers to visits eligible for at least three individual quality metrics with all of them achieved.
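The comprehensive measure and the tool-use contrast in Table 3 can be illustrated with a short sketch. The code below is a minimal, unadjusted illustration and not the authors' analysis: the `Visit` structure, the function names, and the metric labels are all hypothetical, and the raw difference it computes ignores the covariate adjustment and practice-level clustering used in the published model.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Visit:
    """Hypothetical visit record (structure assumed for illustration)."""
    tool_used: bool
    # Metric name -> achieved?; holds only metrics the visit was eligible for.
    metrics: Dict[str, bool]


def comprehensive_care(visit: Visit, min_metrics: int = 3) -> Optional[bool]:
    """True/False if the visit is eligible for at least `min_metrics` metrics
    (True only when all are achieved); None when it is not eligible."""
    if len(visit.metrics) < min_metrics:
        return None
    return all(visit.metrics.values())


def comprehensive_rate(visits: List[Visit]) -> float:
    """Percent of eligible visits with every eligible metric achieved."""
    outcomes = [o for o in map(comprehensive_care, visits) if o is not None]
    if not outcomes:
        raise ValueError("no visits eligible for the comprehensive measure")
    return 100.0 * sum(outcomes) / len(outcomes)


def unadjusted_difference(visits: List[Visit]) -> float:
    """Percentage-point difference: tool-used visits minus tool-not-used visits.
    Unadjusted; the published estimates come from an adjusted marginal model."""
    used = [v for v in visits if v.tool_used]
    not_used = [v for v in visits if not v.tool_used]
    return comprehensive_rate(used) - comprehensive_rate(not_used)
```

Returning `None` for visits with fewer than three eligible metrics keeps them out of the denominator, which matches the definition of the comprehensive measure as applying only to eligible visits.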

Context of Decision to Use OM CDS

To contextualize the results of this study, we sought feedback from clinicians at study sites. Their responses fit into Rogers' Theory of Diffusion of Innovations. In terms of the relative advantage of the system, providers perceived several benefits of the OM CDS relative to usual EHR use. These included the visual timeline of past OM episodes, the automatic calculation of drug doses, and photos of OM that could be shown to parents. However, clinicians also raised concerns regarding the number of “clicks” needed to use the system, which was perceived as inefficient. For compatibility, clinician enthusiasm for the tool was decreased because of the change in workflow that was required, especially for visits with multiple problems. Clinicians were accustomed to existing features in the EHR. They felt that they already knew the OM guidelines or preferred to use their own clinical judgment. In terms of complexity, those reluctant to use the OM decision support perceived that entering information into the history and physical section of the CDS was complex compared to entering data into free text progress notes. Clinicians also perceived that the tool worked best with clear-cut symptoms. Although the system could be tried on a limited basis and the feedback intervention summarized results so they could be more readily appreciated, clinicians did not provide comments related to the domains of trialability (being able to experiment with the system on a limited basis) or observability (the ability to see a difference in outcomes based on system use).

Discussion

With the dramatic increase in EHR and CDS implementation supported by meaningful use incentives, developing optimal strategies to accelerate innovation in health care delivery through EHRs has become increasingly important. This study combined a characterization of the adoption of an OM CDS system, an analysis of the impact of feedback on CDS use, and an examination of the impact of CDS use on OM guideline adherence among clinicians with access to the CDS tool. We found low rates of overall tool use and high levels of variability across practices and that clinician performance feedback reversed a declining trend in CDS use. Clinicians with lower rates of guideline adherence prior to the study start experienced greater benefits from CDS use than others when they received feedback for these metrics. CDS use was most effective at improving adherence to OM guidelines among clinicians with the lowest baseline guideline adherence.

Clinicians ignored the OM tool at 80 percent of eligible visits. Two percent of clinicians never used the CDS, and 11 percent used the tool during a trial period but not again. These results underscore the importance of continued research to define how best to encourage CDS adoption. Although studies have considered the association between workflows as well as clinician and patient characteristics and CDS adoption (Garg et al. 2005; Kawamoto et al. 2005; Romano and Stafford 2011), this study extends that work by focusing on the relationship between characteristics of visits, clinicians, and patients and the outcome of tool use. Rogers' theory of innovation suggests that relative advantage, compatibility, and complexity are important determinants of the adoption of an innovation, and our qualitative results support the relevance of all three to clinician decisions regarding tool use (Rogers 2003). Specifically, we found that clinicians who infrequently diagnose OM, those likely to benefit most from the tool, were 11 percentage points more likely to use the CDS than others. In addition, the tool was more likely to be used at visits with less medical complexity. These visits, particularly those with one diagnosis, fit more readily into the workflow supported by the OM CDS. Still, the low rates of adoption in this study underscore the potential benefit of usability testing and focused design work to broaden the range of visits at which clinician and practice workflows are compatible with CDS use (Nielsen 1993). The low rates of adoption also suggest that CDS may be optimally combined with quality improvement initiatives that alter physician workflow to achieve a desired aim, such as, in this case, improvements in OM care.

Feedback reversed a declining trend in tool use. Our results are consistent with an observational study that found an association between clinician self-reported receipt of feedback in adult primary care and response to clinical reminders (Mayo-Smith and Agrawal 2007). Larger studies focused on the outcome of actual CDS use have not addressed this question. According to Rogers' Theory, observability, the ability to see a difference in outcomes based on system use, drives adoption. In this study, feedback communicated the results of CDS use to clinicians, increasing observability (Rogers 2003). In addition, feedback provided achievable benchmarks by highlighting the results for “top performers,” an evidence-based approach for improving practice with feedback (Kiefe et al. 1998; Weissman et al. 1999). Finally, each clinician was presented with a comparison of his or her own results with those of the practice and network. Prior research suggests that this approach, known as “peer comparison feedback,” may be more effective than feedback without these comparisons (Buntinx et al. 1993; Hadjianastassiou, Karadaglis, and Gavalas 2001).
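The comparisons described above (a clinician's own results set against the practice, the network, and "top performers") can be sketched in a few lines. This is an illustrative assumption about how such a report might be assembled, not the study's reporting software: the function names, the input layout, the use of pooled rates, and the simple top-decile mean are all hypothetical choices, and the top-decile mean is a stand-in rather than the Achievable Benchmarks of Care method of Kiefe et al.

```python
import math
from statistics import mean
from typing import Dict, List, Optional, Tuple

# clinician id -> (visits with CDS used, eligible visits); layout is assumed
Counts = Dict[str, Tuple[int, int]]


def pooled_rate(counts: Counts, clinicians: List[str]) -> Optional[float]:
    """Percent of eligible visits with CDS use, pooled over the given clinicians."""
    used = sum(counts[c][0] for c in clinicians)
    eligible = sum(counts[c][1] for c in clinicians)
    return 100.0 * used / eligible if eligible else None


def feedback_report(clinician: str, counts: Counts,
                    practice_of: Dict[str, str],
                    top_fraction: float = 0.10) -> Dict[str, Optional[float]]:
    """Own rate next to practice, network, and top-performer comparisons."""
    peers = [c for c in counts if practice_of[c] == practice_of[clinician]]
    # Per-clinician rates across the network, highest first.
    rates = sorted((100.0 * u / e for u, e in counts.values() if e > 0),
                   reverse=True)
    # Keep at least one clinician in the top-performer group.
    n_top = max(1, math.ceil(top_fraction * len(rates)))
    return {
        "own": pooled_rate(counts, [clinician]),
        "practice": pooled_rate(counts, peers),
        "network": pooled_rate(counts, list(counts)),
        "top_performers": mean(rates[:n_top]) if rates else None,
    }
```

Pooling visits across clinicians weights each practice and network rate by visit volume; averaging per-clinician rates instead would give low-volume clinicians equal weight, so the choice should follow how the report is meant to be read.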

Even though feedback proved effective in reversing the decline in CDS use, the CDS was still used at less than 25 percent of eligible visits. In addition to characteristics of the innovation (CDS) emphasized by Rogers, a growing literature suggests the importance of provider, organizational, and structural factors to implementation outcomes including adoption (Chaudoir, Dugan, and Barr 2013). In the case of the OM CDS, the absence of reimbursement tied to OM guideline adherence, a structural factor, and a lack of emphasis on accountability for adherence to OM guidelines among practice leaders, an organizational factor, might have limited adoption. In addition, despite education regarding tool use and a review of evidence behind the guidelines in educational sessions delivered in-person to clinicians, some clinicians might have remained uncomfortable with tool use or distrusted national guidelines. Tool use was not associated with 100 percent guideline adherence; even when the tool was used, clinicians rejected some guidelines. While our results support the implementation of feedback to foster CDS adoption, situating the intervention within a quality improvement framework that more actively engaged organizational leadership and practitioners might have improved adoption further (Kaplan et al. 2010).

We previously found that the implementation of CDS or feedback for OM resulted in modest improvements in guideline adherence for practices randomized to these interventions (Forrest et al. 2013). This study demonstrates the additional benefit to guideline adherence that could potentially be achieved with higher rates of CDS adoption. Overall, benefits of CDS use were far greater than the median improvement of 4 percent reported in systematic reviews (Shojania et al. 2009) or the less than 5 percentage point improvement in comprehensive AOM or OME care observed between CDS versus non-CDS practices in our trial (Forrest et al. 2013). We also found that the magnitude and scope of improvement in guideline adherence with CDS use was greatest for clinicians with low baseline adherence. Although all groups benefitted, our results highlight the importance of fostering the adoption of CDS tools among underperforming clinicians.

This study had several limitations. First, the study is not an evaluation of the clinical trial as randomized but a description of CDS adoption among the study arm randomized to receive the CDS tool. In addition, the study was conducted within one health system in one region of the United States. Nonetheless, the substantial heterogeneity in tool use across sites highlights the importance of clinician and practice characteristics to CDS adoption. Although this study focused on a single clinical condition, OM is among the most common pediatric conditions, which provided an ideal model to study CDS adoption as a strategy to improve both diagnosis and management. Because all clinicians had access to the “history panel,” the independent effect of this summary of prior OM care could not be evaluated. As a clinician's workflow for any given visit in this study was determined by the appearance of the history panel and an unrecorded conversation with the family in the exam room, rather than relying on the chief complaint, we did not analyze the independent impact of the chief complaint on CDS use. We instead used the number of diagnoses as a measure of visit complexity. Furthermore, it was beyond the scope of this study to evaluate the duration or intensity of feedback likely to yield the greatest benefit. Nevertheless, we found that our standardized bimonthly reports were effective at fostering CDS use. Finally, comments provided by clinicians at the time of feedback summarized perceptions of the tool. In the absence of formal usability testing, which was beyond the scope of this study, we could not determine whether actual tool use matched perceptions.

Conclusions

We found low rates of tool use and high variability in the adoption of an innovative OM CDS tool that provided actionable information to clinicians in real time. Implementing performance feedback along with clinical decision support was an effective strategy to promote adoption. These results support the use of feedback in tandem with CDS as a means of fostering guideline-based care. However, additional strategies and incentives are needed to fully realize the benefits of CDS for guideline adherence.

Acknowledgments

Joint Acknowledgment/Disclosure Statement: We thank the network of primary care physicians, and their patients and families for their contribution to clinical research through the Pediatric Research Consortium (PeRC) at CHOP. In addition, we recognize Stephanie Mayne for her help with manuscript preparation, Thomas Richards for his help with data extraction and management, and Valerie McGoldrick for her assistance with the implementation of this study. Dr. Fiks had full access to all the data in the study and takes responsibility for the integrity of the data and the accuracy of the data analysis. This project was supported by the Agency for Healthcare Research and Quality (R18 HS017042) and the Eunice Kennedy Shriver National Institute of Child Health and Human Development (K23 HD059919) (AGF). Drs. Fiks and Grundmeier are coinventors of the Care Assistant software used to deliver the decision support in this study. They hold no patent on the software and have earned no money from this invention. No licensing agreement exists. Ms. Khan is a graduate research assistant in a position funded by GlaxoSmithKline. Her work in that position is unrelated to this study. The other authors have no financial relationships relevant to this article to disclose. Given this potential conflict of interest, statistical analyses were supervised by Russell Localio, the study statistician, who had no conflict of interest.

Disclosures: None.

Disclaimers: None.

Supporting Information

Appendix SA1: Author Matrix.

Figure S1: Components of the Otitis Media Clinical Decision Support System.

Figure S2: Physician Performance Feedback. (After an initial 8-month period of clinical decision support use alone, clinicians randomized to the feedback group received six rounds of hand-delivered feedback over a 10-month period. Quality metrics were based on American Academy of Pediatrics guidelines for acute Otitis Media. Data were presented on the top 10% of performers throughout the entire care network.)

Table S1: Adherence to OM Guidelines Metric Definitions for Children Two Months to Twelve Years of Age.

References

- American Academy of Family Physicians; American Academy of Otolaryngology-Head and Neck Surgery; American Academy of Pediatrics, Subcommittee on Otitis Media With Effusion. Otitis Media with Effusion. Pediatrics. 2004;113(5):1412–29. doi: 10.1542/peds.113.5.1412.

- American Academy of Pediatrics, Subcommittee on Management of Acute Otitis Media. Diagnosis and Management of Acute Otitis Media. Pediatrics. 2004;113(5):1451–65. doi: 10.1542/peds.113.5.1451.

- American Recovery and Reinvestment Act. 2009. H.R.1: Making Supplemental Appropriations for Job Preservation and Creation, Infrastructure Investment, Energy Efficiency and Science, Assistance to the Unemployed, and State and Local Fiscal Stabilization, for Fiscal Year Ending September 30, 2009, and for Other Purposes. Pub L. No. 111-5, 123 Stat. 115 (Feb 17, 2009).

- Ash JS, Sittig DF, Campbell EM, Guappone KP, Dykstra RH. Some Unintended Consequences of Clinical Decision Support Systems. AMIA Annual Symposium Proceedings. 2007;2007:26–30.

- Bates DW, Kuperman GJ, Wang S, Gandhi T, Kittler A, Volk L, Spurr C, Khorasani R, Tanasijevic M, Middleton B. Ten Commandments for Effective Clinical Decision Support: Making the Practice of Evidence-Based Medicine a Reality. Journal of the American Medical Informatics Association. 2003;10(6):523–30. doi: 10.1197/jamia.M1370.

- Blumenthal D. Stimulating the Adoption of Health Information Technology. New England Journal of Medicine. 2009;360(15):1477–9. doi: 10.1056/NEJMp0901592.

- Bright TJ, Wong A, Dhurjati R, Bristow E, Bastian L, Coeytaux RR, Samsa G, Hasselblad V, Williams JW, Musty MD, Wing L, Kendrick AS, Sanders GD, Lobach D. Effect of Clinical Decision-Support Systems: A Systematic Review. Annals of Internal Medicine. 2012;157(1):29–43. doi: 10.7326/0003-4819-157-1-201207030-00450.

- Buntinx F, Knottnerus JA, Crebolder HF, Seegers T, Essed GG, Schouten H. Does Feedback Improve the Quality of Cervical Smears? A Randomized Controlled Trial. British Journal of General Practice. 1993;43(370):194–8.

- Carspecken CW, Sharek PJ, Longhurst C, Pageler NM. A Clinical Case of Electronic Health Record Drug Alert Fatigue: Consequences for Patient Outcome. Pediatrics. 2013;131(6):e1970–3. doi: 10.1542/peds.2012-3252.

- Centers for Medicare and Medicaid Services. Medicare and Medicaid Programs; Electronic Health Record Incentive Program. Final Rule. Federal Register. 2010;75(144):44313–588.

- Chaudoir SR, Dugan AG, Barr CH. Measuring Factors Affecting Implementation of Health Innovations: A Systematic Review of Structural, Organizational, Provider, Patient, and Innovation Level Measures. Implementation Science. 2013;8:22. doi: 10.1186/1748-5908-8-22.

- Dagan R, Leibovitz E, Leiberman A, Yagupsky P. Clinical Significance of Antibiotic Resistance in Acute Otitis Media and Implication of Antibiotic Treatment on Carriage and Spread of Resistant Organisms. Pediatric Infectious Disease Journal. 2000;19:S57–65. doi: 10.1097/00006454-200005001-00009.

- Davis DA, Mazmanian PE, Fordis M, Van Harrison R, Thorpe KE, Perrier L. Accuracy of Physician Self-Assessment Compared with Observed Measures of Competence: A Systematic Review. Journal of the American Medical Association. 2006;296(9):1094–102. doi: 10.1001/jama.296.9.1094.

- Embi PJ, Leonard AC. Evaluating Alert Fatigue Over Time to EHR-Based Clinical Trial Alerts: Findings from a Randomized Controlled Study. Journal of the American Medical Informatics Association. 2012;19(e1):e145–8. doi: 10.1136/amiajnl-2011-000743.

- Fiks AG, Grundmeier RW, Margolis B, Bell LM, Steffes J, Massey J, Wasserman RC. Comparative Effectiveness Research Using the Electronic Medical Record: An Emerging Area of Investigation in Pediatric Primary Care. Journal of Pediatrics. 2012;160(5):719–24. doi: 10.1016/j.jpeds.2012.01.039.

- Forrest CB, Glade GB, Baker AE, Bocian AB, Kang M, Starfield B. The Pediatric Primary-Specialty Care Interface: How Pediatricians Refer Children and Adolescents to Specialty Care. Archives of Pediatrics and Adolescent Medicine. 1999;153(7):705–14. doi: 10.1001/archpedi.153.7.705.

- Forrest CB, Fiks AG, Bailey LC, Localio R, Grundmeier RW, Richards T, Karavite DJ, Elden L, Alessandrini EA. Improving Adherence to Otitis Media Guidelines with Clinical Decision Support and Physician Feedback. Pediatrics. 2013;131(4):e1071–81. doi: 10.1542/peds.2012-1988.

- Froom J, Culpepper L, Grob P, Bartelds A, Bowers P, Bridges-Webb C, Grava-Gubins I, Green L, Lion J, Somaini B. Diagnosis and Antibiotic Treatment of Acute Otitis Media: Report from International Primary Care Network. British Medical Journal. 1990;300(6724):582–6. doi: 10.1136/bmj.300.6724.582.

- Garbutt J, Jeffe DB, Shackelford P. Diagnosis and Treatment of Acute Otitis Media: An Assessment. Pediatrics. 2003;112(1 Pt 1):143–9. doi: 10.1542/peds.112.1.143.

- Garg AX, Adhikari NKJ, McDonald H, Rosas-Arellano MP, Devereaux PJ, Beyene J, Sam J, Haynes RB. Effects of Computerized Clinical Decision Support Systems on Practitioner Performance and Patient Outcomes: A Systematic Review. Journal of the American Medical Association. 2005;293(10):1223–38. doi: 10.1001/jama.293.10.1223.

- Hadjianastassiou VG, Karadaglis D, Gavalas M. A Comparison between Different Formats of Educational Feedback to Junior Doctors: A Prospective Pilot Intervention Study. Journal of the Royal College of Surgeons of Edinburgh. 2001;46(6):354–7.

- Hsieh TC, Kuperman GJ, Jaggi T, Hojnowski-Diaz P, Fiskio J, Williams DH, Bates DW, Gandhi TK. Characteristics and Consequences of Drug Allergy Alert Overrides in a Computerized Physician Order Entry System. Journal of the American Medical Informatics Association. 2004;11(6):482–91. doi: 10.1197/jamia.M1556.

- Jamtvedt G, Young JM, Kristoffersen DT, O'Brien MA, Oxman AD. Audit and Feedback: Effects on Professional Practice and Health Care Outcomes. Cochrane Database of Systematic Reviews. 2006a;2:CD000259. doi: 10.1002/14651858.CD000259.pub2.

- Jamtvedt G, Young JM, Kristoffersen DT, O'Brien MA, Oxman AD. Does Telling People What They Have Been Doing Change What They Do? A Systematic Review of the Effects of Audit and Feedback. Quality and Safety in Health Care. 2006b;15(6):433–6. doi: 10.1136/qshc.2006.018549.

- Kanouse DE, Jacoby I. When Does Information Change Practitioners' Behavior? International Journal of Technology Assessment in Health Care. 1988;4(1):27–33. doi: 10.1017/s0266462300003214.

- Kaplan HC, Brady PW, Dritz MC, Hooper DK, Linam WM, Froehle CM, Margolis P. The Influence of Context on Quality Improvement Success in Health Care: A Systematic Review of the Literature. Milbank Quarterly. 2010;88(4):500–59. doi: 10.1111/j.1468-0009.2010.00611.x.

- Kawamoto K, Houlihan CA, Balas EA, Lobach DF. Improving Clinical Practice Using Clinical Decision Support Systems: A Systematic Review of Trials to Identify Features Critical to Success. British Medical Journal. 2005;330(7494):765. doi: 10.1136/bmj.38398.500764.8F.

- Kiefe CI, Weissman NW, Allison JJ, Farmer R, Weaver M, Williams OD. Identifying Achievable Benchmarks of Care: Concepts and Methodology. International Journal for Quality in Health Care. 1998;10(5):443–7. doi: 10.1093/intqhc/10.5.443.

- Kiefe CI, Allison JJ, Williams OD, Person SD, Weaver MT, Weissman NW. Improving Quality Improvement Using Achievable Benchmarks for Physician Feedback: A Randomized Controlled Trial. Journal of the American Medical Association. 2001;285(22):2871–9. doi: 10.1001/jama.285.22.2871.

- Leibovitz E. Acute Otitis Media in Pediatric Medicine: Current Issues in Epidemiology, Diagnosis, and Management. Paediatrics Drugs. 2003;5(Suppl 1):1–12.

- Lin CP, Payne TH, Nichol WP, Hoey PJ, Anderson CL, Gennari JH. Evaluating Clinical Decision Support Systems: Monitoring CPOE Order Check Override Rates in the Department of Veterans Affairs' Computerized Patient Record System. Journal of the American Medical Informatics Association. 2008;15(5):620–6. doi: 10.1197/jamia.M2453.

- Lyon JL, Ashton A, Turner B, Magill M. Variation in the Diagnosis of Upper Respiratory Tract Infections and Otitis Media in an Urgent Medical Care Practice. Archives of Family Medicine. 1998;7(3):249–54. doi: 10.1001/archfami.7.3.249.

- Marcy SM, Takata GS, Chan LS, Shekelle PG, Mason WH, Wachsman L, Ernst R, Hay JW, Corely PM, Morphew T, Ramicone E, Nicholson C. Management of Acute Otitis Media. Rockville, MD: Agency for Healthcare Research and Quality; 2001. Evidence Report/Technology Assessment No. 15, May 2001.

- Mayo-Smith MF, Agrawal A. Factors Associated with Improved Completion of Computerized Clinical Reminders across a Large Healthcare System. International Journal of Medical Informatics. 2007;76:710–6. doi: 10.1016/j.ijmedinf.2006.07.003.

- Mugford M, Banfield P, O'Hanlon M. Effects of Feedback of Information on Clinical Practice: A Review. British Medical Journal. 1991;303(6799):398–402. doi: 10.1136/bmj.303.6799.398.

- Nielsen J. Usability Engineering. San Francisco, CA: Morgan Kaufmann; 1993.

- Osheroff JA, Pifer EA, Teich JM, Sittig DF, Jenders RA. Improving Outcomes with Clinical Decision Support: An Implementer's Guide. Chicago: Healthcare Information and Management Systems Society; 2005.

- Rogers EM. Diffusion of Innovations. New York: Free Press; 2003.

- Romano MJ, Stafford RS. Electronic Health Records and Clinical Decision Support Systems: Impact on National Ambulatory Care Quality. Archives of Internal Medicine. 2011;171(10):897–903. doi: 10.1001/archinternmed.2010.527.

- Shekelle P, Takata G, Chan L, Mangione-Smith R, Corely PM, Morphew T, Morton S. Diagnosis, Natural History, and Late Effects of Otitis Media with Effusion. Rockville, MD: Agency for Healthcare Research and Quality; 2003. Evidence Report/Technology Assessment No. 55.

- Shekelle PG, Takata G, Newberry SJ, Coker T, Limbos M, Chan LS, Timmer M, Suttorp M, Carter J, Motala A, Valentine D, Johnsen B, Shanman R. Management of Acute Otitis Media: Update. Rockville, MD: Agency for Healthcare Research and Quality; 2010. Evidence Report/Technology Assessment No. 198.

- Shojania KG, Jennings A, Mayhew A, Ramsay CR, Eccles MP, Grimshaw J. The Effects of On-Screen, Point of Care Computer Reminders on Processes and Outcomes of Care. Cochrane Database of Systematic Reviews. 2009;3:CD001096. doi: 10.1002/14651858.CD001096.pub2.

- van der Sijs H, Aarts J, Vulto A, Berg M. Overriding of Drug Safety Alerts in Computerized Physician Order Entry. Journal of the American Medical Informatics Association. 2006;13(2):138–47. doi: 10.1197/jamia.M1809.

- Tos M. Epidemiology and Natural History of Secretory Otitis. American Journal of Otology. 1984;5(6):459–62.

- U.S. Department of Health and Human Services. 2013. Doctors and Hospitals' Use of Health IT More Than Doubles Since 2012 [Press Release]. Washington, DC: U.S. Department of Health and Human Services.

- Vernacchio L, Vezina RM, Mitchell AA. Knowledge and Practices Relating to the 2004 Acute Otitis Media Clinical Practice Guideline: A Survey of Practicing Physicians. Pediatric Infectious Disease Journal. 2006;25(5):385–9. doi: 10.1097/01.inf.0000214961.90326.d0.

- Vernacchio L, Vezina RM, Mitchell AA. Management of Acute Otitis Media by Primary Care Physicians: Trends Since the Release of the 2004 American Academy of Pediatrics/American Academy of Family Physicians Clinical Practice Guideline. Pediatrics. 2007;120(2):281–7. doi: 10.1542/peds.2006-3601.

- Weingart SN, Toth M, Sands DZ, Aronson MD, Davis RB, Phillips RS. Physicians' Decisions to Override Computerized Drug Alerts in Primary Care. Archives of Internal Medicine. 2003;163(21):2625–31. doi: 10.1001/archinte.163.21.2625.

- Weissman NW, Allison JJ, Kiefe CI, Farmer RM, Weaver MT, Williams OD, Child IG, Pemberton JH, Brown KC, Baker CS. Achievable Benchmarks of Care: The ABCs of Benchmarking. Journal of Evaluation in Clinical Practice. 1999;5(3):269–81. doi: 10.1046/j.1365-2753.1999.00203.x.
