Abstract

Accurate knowledge about the size and shape of the body derived from somatosensation is important to locate one’s own body in space. The internal representation of these body metrics (body model) has been assessed by contrasting the distortions of participants’ body estimates across two types of tasks (localization task vs. template matching task). Here, we examined to what extent this contrast is linked to the human body. We compared participants’ shape estimates of their own hand and non-corporeal objects (rake, post-it pad, CD-box) between a localization task and a template matching task. While most items were perceived accurately in the visual template matching task, they appeared to be distorted in the localization task. All items’ distortions were characterized by length being underestimated more than width. This pattern of distortion was maintained across orientation for the rake item only, suggesting that the biases measured on the rake were bound to an item-centric reference frame. This was previously assumed to be the case only for the hand. Although similar results can be found between non-corporeal items and the hand, the hand appears significantly more distorted than other items in the localization task. Therefore, we conclude that the magnitude of the distortions measured in the localization task is specific to the hand. Our results are in line with the idea that the localization task for the hand measures contributions of both an implicit body model that is not utilized in landmark localization with objects and other factors that are common to objects and the hand.

Electronic supplementary material

The online version of this article (doi:10.1007/s00221-015-4221-0) contains supplementary material, which is available to authorized users.

Keywords: Body representation, Body schema, Position sense, Somatosensation

Introduction

Stored representations of body size and shape are important components of perception and action (Marino et al. 2010; van der Hoort et al. 2011). The body model refers to an implicit representation of the body size and shape mediating position sense of the human body (Longo and Haggard 2010). For instance, information about the metric properties of the body contributes to determining the relative locations of body parts when using proprioception. Recent investigations measured the implicit body model using a localization task, in which participants indicate the spatial positions of the felt locations of ten landmarks (finger tips and knuckles) on their occluded hand (Longo and Haggard 2010; Longo et al. 2012). The results of this localization task showed a highly distorted representation of hand shape consisting of a shortening of the fingers’ length and a widening of the hand width. These effects generalized across both hands, and across different hand orientations. The latter finding implies that the observed distortions were not caused by a general foreshortening of the perspective or a motor bias (Longo and Haggard 2010). The distorted characteristics of hand shape were interpreted as mirroring distortions of somatosensory representations (Longo and Haggard 2010, 2011). Specifically, the distortion pattern matches the tactile acuity and geometry of the receptive fields of sensory neurons covering the dorsum of the hand (Brown et al. 2004; Longo and Haggard 2011).

The implicit body model has been dissociated from another important body representation, namely the conscious body image (Longo and Haggard 2010, 2012). To assess the body image, participants pick out the image of their own hand among an array of hand images differing in size or shape (template matching task). Participants’ performance in this task is very accurate (Gandevia and Phegan 1999; Longo and Haggard 2010). Such an accurate recognition would not be expected if the body image and the body model were sharing the same distorted representation. Hence, larger distortions in the localization task than in the template matching have been interpreted in favor of a dissociation of the implicit body model from the body image (Longo and Haggard 2010, 2012).

Because the larger distortions in the localization task than in the template matching task are a defining property for the body model, it is important to ensure that this task-specific distortion is specific to the body. To address this point, we compared participants’ performance in a localization and template matching task using non-corporeal objects and the participants’ hands. We chose a broad range of objects, from a square CD-box (least hand like control), to a rectangular post-it pad sharing a similar aspect ratio to the average hand, to a rake with similar structure to the hand. Here, we define localization task-specific distortions as the distortions in the localization task that go beyond the distortions observed in the template matching task, i.e., localization task distortions minus template matching task distortions.

If localization task-specific distortion effects are associated with the body only, they should only be found with the hand but not with non-corporeal items. Specifically, only the hand should present an overestimation of width relative to length in the localization task due to the anisotropies in tactile sensitivity and receptive field geometry on the hand dorsum (Longo and Haggard 2010, 2011). Moreover, because internal body representations were found to be item centered, rotating items 90° should preserve the pattern of distortions only in the case of the hand (Longo and Haggard 2010). In contrast, other non-corporeal items should be more sensitive to biases in retina or torso-centered coordinates and present a different pattern of distortion when presented in upright versus 90° rotated orientation (Künnapas 1958).

Methods

Participants

Sixteen right-handed individuals (10 males) between 19 and 42 years of age (mean = 28.2) participated in the experiment. Participants gave written informed consent prior to the study. The research was approved by the ethics committee of the University of Tübingen.

Stimulus and apparatus

We used four items as stimuli (Fig. 1): the participant’s left hand, a rake, a rectangular post-it pad, and a square CD-box. In order to compare the stimuli with each other, we quantified each item’s shape using its width to length ratio, referred to as its Shape Index (SI = 100 × width/length), and assumed to reflect the overall aspect ratio of the item (see the Methods of Longo and Haggard 2012). In Fig. 1a, the width of an item is marked with a yellow line, and the length is marked with a red line. We calculate SI from these item-centric width and length dimensions, so when items are rotated by 90° the resulting SI should remain the same if measured distortions were item centric, or change if distortions were viewer centric. Following Longo and colleagues’ studies, hand length was defined as the distance between the knuckle and the tip of the middle finger, while hand width corresponded to the distance between the knuckles of the little and index fingers (average hand SI ≈ 64). For the rake, the length was defined as the distance between the bottom and top of the middle branch, while the width was the distance between the bottom of the first and fifth branches (SI = 40). In the case of the post-it pad (SI = 60) and the CD-box (SI = 100), we simply referred to the vertical and the horizontal dimensions of the items to define their length and width (Fig. 1a). Ten landmarks were used in the localization task for the hand and the rake: the finger tips and center of the knuckles at the bottom of each finger and the top and bottom of the five branches for the rake (Fig. 2). Four landmarks were used for the CD-box and the post-it: one at each corner.
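The Shape Index definition above can be illustrated with a short sketch. This is not the analysis code used in the study: the landmark names, the coordinate values (in cm) and the helper functions are hypothetical, chosen only so that the example hand has an SI of 64. The sketch also shows why an SI computed from item-centric dimensions does not change when the item is rotated by 90°.

```python
import math

def shape_index(width, length):
    """Shape Index as defined in the text: SI = 100 * width / length."""
    if length <= 0:
        raise ValueError("length must be positive")
    return 100.0 * width / length

def distance(p, q):
    """Euclidean distance between two 2D points."""
    return math.hypot(q[0] - p[0], q[1] - p[1])

def rotate_90_clockwise(point):
    """Rotate a 2D point by 90 degrees clockwise around the origin."""
    x, y = point
    return (y, -x)

def hand_shape_index(landmarks):
    """SI of a hand from item-centric landmarks.

    Width: knuckle of little finger to knuckle of index finger.
    Length: knuckle to tip of the middle finger.
    """
    width = distance(landmarks["knuckle_little"], landmarks["knuckle_index"])
    length = distance(landmarks["knuckle_middle"], landmarks["tip_middle"])
    return shape_index(width, length)

# Hypothetical landmark coordinates in cm (illustration only, not study data).
hand = {
    "knuckle_index": (0.0, 0.0),
    "knuckle_little": (6.4, 0.0),
    "knuckle_middle": (2.0, 0.5),
    "tip_middle": (2.0, 10.5),
}

upright_si = hand_shape_index(hand)
rotated_si = hand_shape_index({k: rotate_90_clockwise(p) for k, p in hand.items()})
print(round(upright_si, 2), round(rotated_si, 2))
```

Because width and length are measured between landmarks on the item itself, the rotation leaves both distances, and therefore the SI, unchanged. Any change in the estimated SI after rotation must then come from the participant’s responses rather than from the measure.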

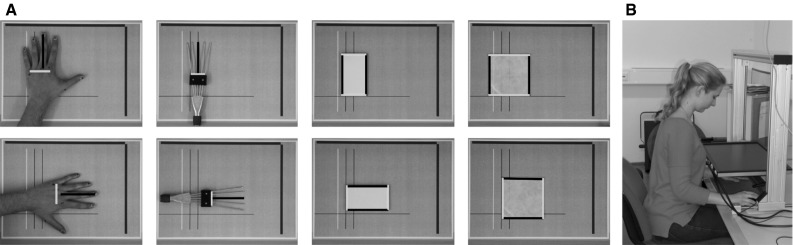

Fig. 1.

a Images of the items used in the experiment. From left to right: hand, rake, post-it, box presented to the participant in upright (top row) and rotated (bottom row) orientation. The yellow and red lines on the items were not present during experimentation and have been drawn to illustrate the item-centric width and length dimensions used to calculate the Shape Index (SI). The terms “length” and “width” always refer to the item-centric length and width; for example, in the upright orientation the “length” of the hand, rake, post-it and box is vertical (top row, red line), and in the rotated orientation, the “length” is horizontal from the viewer’s perspective (bottom row, red line). The lines on the green background were of a known size and used to calculate the actual hand size from the images. b Image of the experimental setup in the localization task, in the condition where the participant estimated the landmarks on the left hand (color figure online)

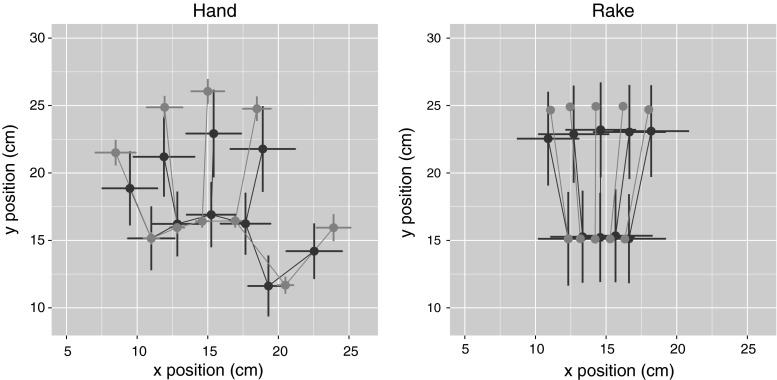

Fig. 2.

Actual and estimated landmarks for the upright hand (left) and rake (right) averaged across 16 participants in the localization task. The filled circles indicate the mean location of actual landmarks (blue) and estimated landmarks (red). The error bars depict the standard deviation (shown for x and y directions separately). For the sake of clarity, the actual and estimated landmarks are connected with thin lines to highlight the structure of the item. Estimates and actual landmark positions were aligned on the knuckle of the little finger for the hand and on the bottom of the leftmost branch for the rake (color figure online)

For the template matching task, we used silhouette images of each item. We used an image of a hand that was gender-matched to the participant to generate the hand silhouette. We used custom written scripts with Unity 3.5 (Unity Technologies, San Francisco, USA) for displaying the stimuli (silhouettes and landmark names) and for collecting participants’ responses. All stimuli were displayed on a Dell U2412M monitor with a 16:10 widescreen aspect ratio at native resolution (1,920 × 1,200 pixels).

Our experimental setup differed from the one employed in Longo and Haggard (2010) in the following ways. We used a cursor on a rectangular monitor instead of a long baton (35 cm) to point on a square board; the screen was positioned 10 cm higher than the board in Longo and Haggard (2010) above the table. Indirect pointing via a mouse peripheral was chosen as a preferred method to help alleviate direct motor command influences which might supplement position sense (Fel’dman and Latash 1982). Previous results have found that hand shape distortions underlying position sense were not viewer centered (Longo and Haggard 2010); as such we assumed that using a widescreen compared to a square board should not play a major role on the results. The height of the screen was constrained by the hand location just below: in order not to touch the hand, the screen had to be positioned 16 cm above the table top.

Procedure

The order of the localization and template matching tasks was counterbalanced across participants.

Localization task

The center of the bottom knuckles and tips of each finger of the participant’s left hand were marked with red non-permanent pen. An experimental block measured participants’ ability to localize predefined landmarks on a particular item (hand or object). First, the experimenter explained and familiarized participants with the landmark names and their corresponding locations on an item resting on a flat surface (for about 2 min). Tools have been shown to affect both spatial and bodily representations after performing and observing tool actions (for review, see Maravita and Iriki 2004). To avoid this confound, none of the objects were touched or manipulated by the participants, nor held in front of participants by the experimenter.

Participants sat at a table with their body midline aligned with a mark on the table which indicated the placing position (a cross) for the items. An item was placed centrally with its lower edge at the center of the cross. Participants viewed the item for 15 s, while the position of the item was recorded using an overhead mounted camera (Canon, EOS 40D; Zoom lens, EF-28–135 mm). Using these images, we derived the exact size of the items (Fig. 1a).

Afterwards a computer monitor was slid in parallel to the table top, over the item, thereby occluding it (Fig. 1b). Participants were told to use the following strategies. For objects, they should imagine the screen to be transparent so that they could “see” the landmarks below it. For the hand, participants were asked to rely exclusively on the felt location of their finger tips and knuckles without using visual imagery.

An experimental trial started by presenting the name of an item’s landmark (e.g., tip of middle finger) in white font at the top center of the black computer screen. After a 2-s delay, the mouse cursor was presented at a random y axis location on the right edge of the screen. Participants indicated as accurately as possible the perceived location of the queried landmark by positioning the mouse cursor over the corresponding position on the computer screen and left-clicking with the mouse. The hand directing the mouse pointer was hidden from view. The answer interval was not time restricted and provided no feedback. Then, the next trial started. After testing each landmark in random order five times, the computer monitor was removed for 15 s, making the item visible to the participant, and the item’s location was photographed to ensure that it had not moved. Then, each landmark was again tested five times. The ten measures for each landmark constituted one experimental block.

Each experimental block probed all landmarks of an item (four items) in one specific orientation (upright or 90° clockwise rotation). There were a total of eight blocks. The testing order of experimental blocks was randomized across participants. At the beginning of the localization task, participants received one experimental block as training with a different object (pen). The training data were not included in the analysis.

Template matching task

The template matching task is based on the item’s SI. Participants estimated the SI using a 1-up 1-down adaptive staircase method (50 % threshold; Levitt 1971). The actual item was first presented on a table to the participant in an upright position, and participants had 1 min to remember the item’s SI. The item was then hidden from view. Afterwards, participants indicated whether an item’s silhouette displayed on a computer monitor was wider (right arrow key) or narrower (left arrow key) than the actual item. The area of the silhouette matched that of the item, and only the SI was modified. The SI of the displayed silhouette was altered using two randomly interleaved staircase procedures. The first staircase had a start value of 125 % of the actual SI; the second started at 75 %. The procedure ended after each staircase had a total of thirteen reversals, with an upper limit of 80 trials. The step size was reduced after each reversal, taking the values 16 (initial step size), 8, 4, and 3 SI units. The threshold (SI at convergence) was calculated from the mean of the last 5 reversals (across the two procedures). The testing order of items was randomized across participants.
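The staircase procedure described above can be sketched as a small simulation. This is a minimal illustration under stated assumptions, not the Unity script used in the experiment. We assume that the 80-trial limit applies to each staircase separately, that the step size stays at 3 SI units after the third reversal, and that the threshold averages the last five reversals of each staircase pooled together. The simulated observer (a noisy comparison against a hypothetical `perceived_si`) is also an assumption made for the example.

```python
import random
from statistics import mean

STEP_SIZES = [16, 8, 4, 3]  # SI units, reduced after each reversal (from the text)
N_REVERSALS = 13            # reversals required per staircase (from the text)
MAX_TRIALS = 80             # assumption: limit counted per staircase
N_AVERAGED = 5              # assumption: last 5 reversals of each staircase

class Staircase:
    """A single 1-up 1-down staircase on the displayed silhouette SI."""

    def __init__(self, start_si):
        self.si = start_si
        self.last_direction = None
        self.reversal_values = []
        self.trials = 0

    @property
    def finished(self):
        return (len(self.reversal_values) >= N_REVERSALS
                or self.trials >= MAX_TRIALS)

    def update(self, judged_wider):
        # "Wider" responses make the next silhouette narrower, and vice versa.
        direction = -1 if judged_wider else 1
        if self.last_direction is not None and direction != self.last_direction:
            self.reversal_values.append(self.si)
        step = STEP_SIZES[min(len(self.reversal_values), len(STEP_SIZES) - 1)]
        self.si += direction * step
        self.last_direction = direction
        self.trials += 1

def run_template_matching(actual_si, perceived_si, noise_sd=2.0, seed=0):
    """Simulate two randomly interleaved staircases and return the threshold."""
    rng = random.Random(seed)
    staircases = [Staircase(1.25 * actual_si), Staircase(0.75 * actual_si)]
    while True:
        active = [s for s in staircases if not s.finished]
        if not active:
            break
        current = rng.choice(active)
        # Hypothetical observer: noisy comparison with the remembered shape.
        judged_wider = current.si + rng.gauss(0.0, noise_sd) > perceived_si
        current.update(judged_wider)
    last_reversals = [v for s in staircases
                      for v in s.reversal_values[-N_AVERAGED:]]
    return mean(last_reversals)

threshold = run_template_matching(actual_si=64, perceived_si=64, seed=1)
print(round(threshold, 1))
```

With a 1-up 1-down rule the staircases oscillate around the SI at which “wider” and “narrower” responses are equally likely, so the mean of the late reversals estimates the 50 % point. Starting one staircase above and one below the actual SI reduces the influence of the starting value on that estimate.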

Statistics

We used Mauchly’s sphericity test to validate the analyses of variance used on our data. When violations of sphericity were observed, we reported the results with Greenhouse–Geisser sphericity corrections.

Results

Dissociation between localization and template matching tasks

Assessing distortions of each item separately

Previous studies have shown that participants have an accurate representation of hand shape in the visual template matching task and a distorted representation of hand shape in the localization task, characterized by an SI considerably larger than the actual SI of the hand (Longo and Haggard 2010, 2012). If the localization task distortions are mainly associated with bodily items, then they should be present primarily in the hand and not in non-corporeal objects. We normalized each item’s SI by dividing the estimated SI by the actual item’s SI, to create a baseline of 1 and allow between-item comparisons. We compared the normalized SI of all items in upright posture to the baseline (=item’s actual SI) in both tasks. In the localization task, baseline comparisons showed that all normalized SIs were significantly larger than 1 (all p ≤ .013, all effect sizes r ≥ .60; p values were Holm corrected, see Table 1), suggesting that all items showed larger estimations of width relative to length (mean width estimate > mean length; see supplementary material, S1). In contrast, responses on the visual template matching tasks were close to accurate for the majority of items (all p > .05 except for the box t(15) = 5.22, p < .001, r = .80, p values were Holm corrected; see Table 1). The shape estimations are depicted in Fig. 3.
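The normalization and baseline comparison can be sketched as follows. The participant values below are hypothetical and serve only to make the example runnable. The text does not state how the effect size r was computed; the sketch assumes the common conversion r = sqrt(t² / (t² + df)), which closely reproduces the r values reported alongside the t statistics (for example, t(15) = 3.35 gives r ≈ .65).

```python
from math import sqrt
from statistics import mean, stdev

def normalized_si(estimated_si, actual_si):
    """Estimated SI divided by actual SI; 1 means a veridical shape estimate."""
    return estimated_si / actual_si

def task_specific_distortion(localization_nsi, template_nsi):
    """Localization distortion beyond the template matching distortion."""
    return localization_nsi - template_nsi

def one_sample_t(values, baseline=1.0):
    """t statistic and degrees of freedom for a test against the baseline."""
    n = len(values)
    t = (mean(values) - baseline) / (stdev(values) / sqrt(n))
    return t, n - 1

def effect_size_r(t, df):
    """Assumed conversion from a t statistic to the effect size r."""
    return sqrt(t * t / (t * t + df))

# Hypothetical estimated SIs of four participants for an item with SI = 64.
estimated = [70.4, 76.8, 83.2, 64.0]
nsi = [normalized_si(e, 64.0) for e in estimated]
t, df = one_sample_t(nsi)
print(round(t, 2), df, round(effect_size_r(t, df), 2))

# Conversion applied to a reported statistic, t(15) = 3.35.
print(round(effect_size_r(3.35, 15), 2))
```

A normalized SI above 1 means that the item was judged wider and/or shorter than it actually is, which is why the one-sample comparison against 1 tests for a shape distortion independently of the item’s true aspect ratio.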

Table 1.

Holm-corrected t tests comparing the SI to the veridical performance of 1 for each upright presented item in the localization and template matching tasks (first and second row)

| Task | Hand | Rake | Post-it | Box |
|---|---|---|---|---|
| Localization versus baseline | *t(15) = 4.80, p = .0009, r = .77* | *t(15) = 3.14, p = .013, r = .63* | *t(15) = 3.35, p = .013, r = .65* | *t(15) = 2.88, p = .013, r = .60* |
| Template matching versus baseline | t(15) = 4.80, p = .93, r = .19 | t(15) = 3.14, p = .93, r = .052 | t(15) = 3.35, p = .09, r = .52 | *t(15) = 5.21, p = .0004, r = .80* |
| Localization versus template matching | *t(15) = 4.58, p = .0014, r = .76* | *t(15) = 3.32, p = .014, r = .65* | t(15) = 2.16, p = .094, r = .49 | t(15) = 1.39, p = .19, r = .34 |

Holm-corrected comparisons of SI between localization and template matching tasks (third row). Each cell provides the t statistic, p value, and the effect size measure r. Significant effects are highlighted in italics

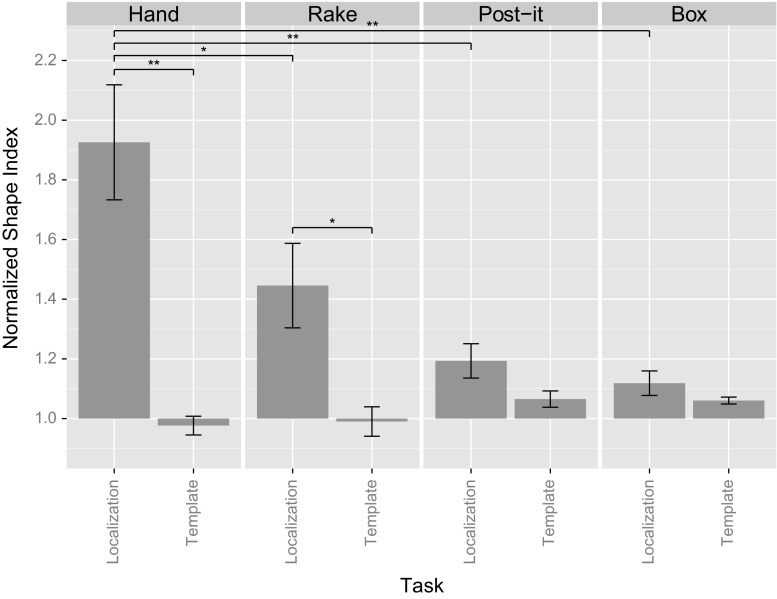

Fig. 3.

Normalized shape index (see text) shown for each task (along the x axis) and item (across different panels) in standard orientation (upright). A normalized SI of 1 corresponds to an accurate estimate of the item’s SI. Estimates >1 indicate that participants perceived the item as wider and/or shorter than the actual item. Error bars represent ±1 standard error of the mean. Significant differences are shown for within-item comparisons. Between-item comparisons are shown only for the hand, in the localization task. Asterisks represent Holm-corrected p values

Assessing distortions between items

To assess differences in distortions between items, we conducted a within-subject analysis of variance (ANOVA) on the normalized SI with items (hand, rake, post-it, and box) and task (localization vs. template matching task) as within-subject factors. There was a significant effect of item [F(2.11, 31.58) = 8.60, p < .001, η2 = .14] and task [F(1, 15) = 22.59, p < .001, η2 = .24]. The interaction effect between task and item was significant [F(1.54, 23.03) = 12.72, p < .001, η2 = .20].

We used post hoc pairwise t tests with Holm correction to analyze the interaction between task and item. We compared localization results and template results between items while taking into account our initial assumption that if the hand’s distortions are body specific, they should differ from the distortions measured on the other items. As such, the localization task distortion (normalized SI) was significantly higher in the hand compared to the rake [t(15) = 2.77, p = .014, r = .58], post-it [t(15) = 3.66, p = .0047, r = .69], and box [t(15) = 4.21, p = .0023, r = .74]. For other comparisons between items, see supplementary material S2.
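For readers unfamiliar with the Holm procedure used for these post hoc tests, the following sketch shows the standard step-down adjustment. The raw p values in the example are hypothetical and are not taken from the study; the function name `holm_adjust` is ours.

```python
def holm_adjust(p_values):
    """Holm step-down adjusted p values, returned in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, index in enumerate(order):
        # The smallest p value is multiplied by m, the next by m - 1, and so on.
        candidate = min(1.0, (m - rank) * p_values[index])
        # Adjusted values must not decrease as the raw p values increase.
        running_max = max(running_max, candidate)
        adjusted[index] = running_max
    return adjusted

# Hypothetical raw p values from three pairwise comparisons.
raw = [0.01, 0.04, 0.03]
print([round(p, 4) for p in holm_adjust(raw)])
```

The running maximum keeps the adjusted values monotone, which is also how several comparisons can end up sharing the same corrected p value.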

The template matching task results did not differ significantly between items. We also looked at the difference between localization and template matching task results within each item. Pairwise t tests within each item showed a significant difference between the localization and template matching tasks for the hand and the rake only (hand p = .0014, rake p = .014, p values were Holm corrected, see Table 1; Fig. 3). This result indicates that the dissociation between the template matching task and localization task used to differentiate the body image from the body model was also present in the rake. However, the magnitude of the difference between the two tasks was significantly larger for the hand than for the rake [t(15) = 2.75, p = .015, r = .58].

Examining length and width distortions in the localization task

Baseline comparisons

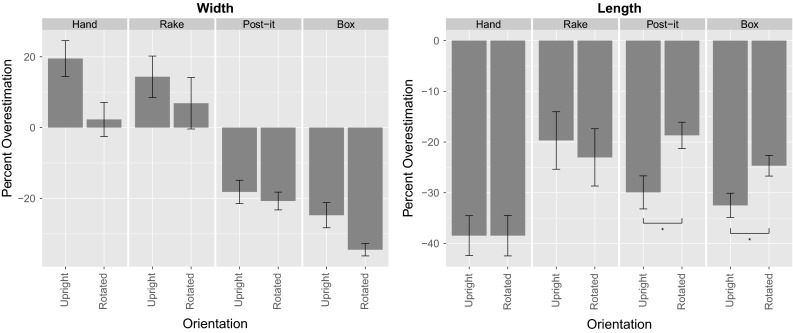

Our analysis of items’ upright SI showed that the SI was larger than baseline (actual SI) in the localization task for all items. Previous studies showed that the increased SI of the hand (compared to baseline) was due to an underestimation of length (shortened fingers) and an overestimation of width (spaces between knuckles; see Longo and Haggard 2010). This very specific pattern of distortion was assumed to retain several characteristics of the somatosensory homunculus. To check whether this pattern of distortion is specific to the human body, we analyzed the averaged percent overestimation of each item’s width and length and compared them to baseline in upright and rotated orientations (see Fig. 4). The percent overestimation [e.g., hand length: 100 × (judged finger length − actual finger length)/actual finger length] was consistently calculated using the item-centric length and width dimensions (marked red and yellow in Fig. 1a, respectively; e.g., when an item is rotated by 90°, the item’s length (red line) becomes horizontal from the viewer’s perspective). Overall, underestimation of length was observed for each item both in the upright and rotated orientation. However, only the hand and the rake showed an overestimation of the width in upright (mean hand = 19.52 %, mean rake = 14.37 %) and rotated orientation (mean hand = 2.3 %; mean rake = 6.87 %). All others (post-it, box) showed significant underestimations of the width dimension (see supplementary material S3).

Fig. 4.

Percent overestimations in the upright and rotated orientation for all items with regard to the items’ width (left) and length (right). Error bars represent ±1 standard error of the mean. Significant differences are shown for within-item comparisons. Asterisks represent Holm-corrected p values
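The percent overestimation measure, and its relation to the normalized SI, can be made concrete with a brief sketch. The judged and actual sizes below are hypothetical values in cm, not study data. The second function follows directly from the definitions (SI = 100 × width/length) and holds for a single pair of width and length estimates, not for group means.

```python
def percent_overestimation(judged, actual):
    """100 * (judged - actual) / actual; negative values are underestimations."""
    return 100.0 * (judged - actual) / actual

def normalized_si_from_percent(width_pct, length_pct):
    """Normalized SI implied by one pair of width and length distortions.

    Estimated SI / actual SI equals (judged width / actual width) divided by
    (judged length / actual length).
    """
    return (1.0 + width_pct / 100.0) / (1.0 + length_pct / 100.0)

# Hypothetical single estimate: width judged 7.5 cm (actual 6.25 cm),
# length judged 8.0 cm (actual 10.0 cm).
width_pct = percent_overestimation(7.5, 6.25)
length_pct = percent_overestimation(8.0, 10.0)
print(width_pct, length_pct)
print(round(normalized_si_from_percent(width_pct, length_pct), 3))
```

The example shows that a normalized SI above 1 can arise from width overestimation, length underestimation, or both, which is why the two dimensions are analyzed separately here.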

Comparing hand and rake distortions

The hand’s normalized SI appeared significantly more distorted than the rake (see Fig. 3). To better understand the difference in normalized SI between the rake and the hand, we conducted paired t tests comparing the magnitude of the length and width percent overestimation between the two items. There was a significant effect of items on the distortions only for the length, with finger length being significantly more underestimated than branch length in upright [t(15) = −3.32; p = .014; r = .65] and rotated [t(15) = −3.33; p = .005; r = .65] orientation (for visual comparison, see Fig. 4). No significant differences were measured in width estimation between the hand and rake (see supplementary material S4 for additional comparisons between hand and other items). This suggests that the difference in normalized SI between the hand and the rake is principally driven by a difference in estimation of the item’s length.

Item-centric versus viewer-centric distortions

Any viewer-centered biases (e.g., foreshortening of perspective) measured on the items in upright orientation should reverse in rotated orientation. This would be expected in non-corporeal objects. On the other hand, if the results are fairly similar across upright and rotated orientation, it would indicate that distortions are item centric, as previously observed in the case of the hand (Longo and Haggard 2010). In order to compare the item’s distortions across the upright and rotated orientation, we conducted a within-subject ANOVA on the percent overestimation of items’ width and length estimations (analyzing dimensions separately avoided modeling interactions between dimensions and was consistent with previous work, Longo and Haggard 2010). The within-subject factors were item (hand, rake, post-it, or box) and orientation (upright or rotated). An effect of rotation on the percent overestimation of an item’s dimensions would be suggestive of distortions from the viewer’s frame of reference (as opposed to distortions from the item’s frame of reference).

Examining width distortions

For the items’ width estimations, the ANOVA showed a significant main effect of orientation [F(1, 15) = 8.78, p = .009, η2 = .063] and item [F(1.79, 26.8) = 31.73, p < .001, η2 = .50]. There was no significant interaction between item and orientation [F(2.19, 32.85) = 2.67, p = .08, η2 = .021]. This presumably reflects the fact that width estimations are always slightly lower in rotated compared to upright orientation (for visual comparisons, see Fig. 4 and refer to supplementary material S3 for numerical values).

Examining length distortions

For the items’ length estimations, the ANOVA showed a significant main effect of item [F(2.04, 30.55) = 6.47, p = .0044, η2 = .15]. There was no effect of orientation. The interaction between item and orientation was significant [F(2.02, 30.26) = 5.08, p = .012, η2 = .036]. Post hoc paired t tests with Holm correction were applied to determine the effect of orientation for the items’ length estimations. There were significant effects of orientation for the post-it [t(15) = 3.09, p = .030, r = .62] and for the box [t(15) = 2.87, p = .035, r = .60] which were indicative of viewer-centric perceptual biases (Fig. 4).

Discussion

Previous work has shown veridical hand-shape estimations in the template matching task, but distorted estimations in the localization task (Longo and Haggard 2010, 2012). We examined to what degree differences in distortions between localization and template matching tasks are specific to the body. We tested participants in localization and template matching tasks with corporeal and non-corporeal items. We observed orientation-independent shape distortions in the localization task and veridical shape estimations in the template matching task for one object (rake) and for the hand. Although the dissociation between the localization and template matching tasks was also observed for the rake, the magnitude of the dissociation (difference between localization and template matching tasks results) was significantly larger for the hand than for the rake. These results are in line with the idea that the localization task for the hand relies on an implicit body model that differs from the representation used in landmark localization with objects.

Overall, our results confirmed the characteristic distortions of hand shape: There was an overestimation of hand width relative to length, a property previously interpreted as mirroring known anisotropies in somatosensation such as the “greater tactile acuity on the hand dorsum medio-laterally than proximo-distally” (Longo and Haggard 2010, p. 11728). Interestingly, we also found this pattern of distortions in the case of the rake. While the distortions were less pronounced than those found for the hand (at least in the case of the length), they were also characterized by an overestimation of the width axis combined with a large underestimation of the length.

The more pronounced localization task-specific distortion with the hand might be explained by body model based mechanisms in the localization of corporeal landmarks (e.g., somatosensation; Longo and Haggard 2010). However, the fact that distortions are also observed with non-corporeal items points to other non-somatosensory factors that are shared between objects and the body and contribute to the distortions in the localization task. This idea is in line with recent fMRI studies which show that tool and hand BOLD response patterns (based on static pictures) are partially overlapping in high level visual cortex areas in contrast to non-manipulable items, suggesting that some neural code is shared between these items (Bracci et al. 2012; Peelen et al. 2013). Such visual influences on somatosensory processing have been shown in multiple studies (Fiehler et al. 2007; Maravita et al. 2002) including work directly related to localization tasks (Longo 2014).

The distortion depended for some items on the objects’ orientation, in particular for the box and the post-it. These results point to viewer-centered biases for position estimates in the localization task for those items. The emergence of viewer-centered biases might be facilitated because the box and the post-it do not provide cues with regard to their top and bottom. Such information might be necessary for the emergence of an object-centered reference frame and provide the prerequisite for the distortions being bound to the items’ orientations. In contrast, the rake and the hand provide visual cues with regard to the item’s top and bottom. As such, we found evidence of item-centric biases in the case of the rake: The estimation of the width (overestimation) and length (underestimation) of the rake were preserved and correlated across orientations (rake’s length r = .52; p = .040 and width r = .60; p = .024, see S5 in supplementary material). Hence, one possible explanation for the differential effects of orientation on objects is the emergence of a type of reference frame.

Previous studies have found the hand width to be overestimated (60–80 %), and this overestimation to be correlated between different hand orientations (Longo and Haggard 2010). In comparison, we found a lower overestimation of hand width (20 %) which was significantly reduced after rotation (for more details see S3 and S5 in supplementary material). One potential explanation is that participants may not have splayed their fingers as much as in previous studies. Descriptively, the average angles between the fingers of the hand in our experiment appear smaller than the angles reported in previous research (see supplementary material S6; Longo 2014; Longo and Haggard 2010). Therefore, posture differences might be partly responsible for the deviation of our hand results from those of other studies. Alternatively, the differential findings between our study and previous ones could be due to changes in the localization task setup (see “Methods” section). We think that it is unlikely that the screen shape has a decisive role in explaining these differences. If the shape of the screen were to play a major role in hand-shape distortions, we would have also expected finger length to be significantly different across orientation, which was not the case (finger underestimation was very similar between the upright and rotated postures). The effect of the other methodological differences between our and previous studies (e.g., using a cursor rather than a long baton for localization in 3D space, larger vertical displacement between the occluding surface and the hand) on the overestimation of hand width is not yet known. Investigating the effect of these methodological variations on hand-shape distortions is a topic for future research. However, it is important to note that these methodological differences are constant across all items and therefore cannot explain the differences in distortions between items.

What other possible factors might contribute to the localization task-specific distortions found with the objects and the hand? The distortion difference between the two tasks may result from task complexity (Valiquette and McNamara 2007) or from accessing different types of spatial representation in visual memory with the two tasks (McNamara 2003; Shelton and McNamara 2004). According to McNamara (2003), two independent representations may be formed when participants are learning a spatial layout visually: one involved in localization tasks which encodes spatial relations between landmarks (distance, direction) and one supporting (holistic) scene recognition by means of visual memory (Shelton and McNamara 2004). Hence, the dissociation obtained between body model and body image might simply be part of a more general dissociative process in spatial memory. This could arise from having different representations of the same spatial layout or from having one single representation of the layout accessed by different functions [for discussion, see de Vignemont (2007) and McNamara (2003)].

It is not uncommon to observe distorted memory representations of the world (Cooper et al. 2012; Roediger and McDermott 2000; Tversky and Schiano 1989; Tversky 1981). Specifically, studies examining the cognitive representation of spatial patterns (i.e., 2D drawings) found the spatial representation of these non-corporeal visual patterns to be distorted when reported from memory and independent of the patterns’ orientation (Matthews and Adams 2008; Tversky and Schiano 1989). With regard to visual perception, systematic errors relating to the estimation of the size or shape of certain figures and objects are described in multiple illusions (Hamburger and Hansen 2010; Künnapas 1955; Pressey 1971). For instance, in the vertical–horizontal illusion or bisecting line illusion (L-shape or inverted T-shape configuration), vertical lines are often drawn as smaller in relation to bisected horizontal lines of identical length. This type of illusion was demonstrated with various figures (rectangle, square) in laboratory conditions as well as with more realistic stimuli in natural environments (Chapanis and Mankin 1967; Sleight and Austin 1952). Therefore, the distortions observed on the items could be partly induced by such effects (i.e., memory or illusory effects induced by the configuration of the hand itself). This is an interesting avenue for future research.

Conclusion

The current study investigated the body specificity of hand distortions in localization versus template matching tasks. Our results have shown that localization task-specific distortions are more pronounced for the human hand than for non-corporeal items. This is in line with the idea that the localization task for the hand relies on an implicit body model that is not utilized in landmark localization with objects.

Nevertheless, the rake showed a qualitative pattern of distortion similar to that of the hand. Therefore, in addition to body model-based mechanisms, biases from other factors such as spatial memory might also play a role in hand-shape distortion. This proposal is consistent with previous findings showing influences of alternative perceptual factors like vision on hand-shape distortions in localization tasks (Longo 2014).

Acknowledgments

This research was partially supported by the WCU (World Class University) program funded by the Ministry of Education, Science, and Technology through the National Research Foundation of Korea (R31-10008). We thank Hong Yu Wong, Matthew R. Longo, and Luke Miller for discussions on this work.

Contributor Information

Aurelie Saulton, Phone: +49 (0) 7071 601645, Email: aurelie.saulton@tuebingen.mpg.de

Heinrich H. Bülthoff, Phone: +49 (0) 7071 601 601, Email: heinrich.buelthoff@tuebingen.mpg.de

References

- Bracci S, Cavina-Pratesi C, Ietswaart M, Caramazza A, Peelen MV. Closely overlapping responses to tools and hands in left lateral occipitotemporal cortex. J Neurophysiol. 2012;107(5):1443–1456. doi: 10.1152/jn.00619.2011.

- Brown PB, Koerber HR, Millecchia R. From innervation density to tactile acuity: 1. Spatial representation. Brain Res. 2004;1011(1):14–32. doi: 10.1016/j.brainres.2004.03.009.

- Chapanis A, Mankin DA. The vertical-horizontal illusion in a visually-rich environment. Percept Psychophys. 1967;2(6):249–255. doi: 10.3758/BF03212474.

- Cooper AD, Sterling CP, Bacon MP, Bridgeman B. Does action affect perception or memory? Vision Res. 2012;62:235–240. doi: 10.1016/j.visres.2012.04.009.

- de Vignemont F. How many representations of the body? Behav Brain Sci. 2007;30(2):1–6. doi: 10.1017/S0140525X07001434.

- Fel’dman AG, Latash ML. Interaction of afferent and efferent signals underlying joint position sense: empirical and theoretical approaches. J Mot Behav. 1982;14(3):174–193. doi: 10.1080/00222895.1982.10735272.

- Fiehler K, Engel A, Rösler F. Where are somatosensory representations stored and reactivated? Behav Brain Sci. 2007;30(02):206–207. doi: 10.1017/S0140525X07001458.

- Gandevia SC, Phegan CML. Perceptual distortions of the human body image produced by local anaesthesia, pain and cutaneous stimulation. J Physiol. 1999;514(2):609–616. doi: 10.1111/j.1469-7793.1999.609ae.x.

- Hamburger K, Hansen T. Analysis of individual variations in the classical horizontal-vertical illusion. Atten Percept Psychophys. 2010;72(4):1045–1052. doi: 10.3758/APP.72.4.1045.

- Künnapas TM. An analysis of the “vertical-horizontal illusion”. J Exp Psychol. 1955;49(2):134–140. doi: 10.1037/h0045229.

- Künnapas TM. Influence of head inclination on the vertical–horizontal illusion. J Psychol. 1958;46(2):179–185. doi: 10.1080/00223980.1958.9916283.

- Levitt H. Transformed up-down methods in psychoacoustics. J Acoust Soc Am. 1971;49(2B):467–477. doi: 10.1121/1.1912375.

- Longo MR. The effects of immediate vision on implicit hand maps. Exp Brain Res. 2014;232(4):1241–1247. doi: 10.1007/s00221-014-3840-1.

- Longo MR, Haggard P. An implicit body representation underlying human position sense. Proc Natl Acad Sci. 2010;107(26):11727–11732. doi: 10.1073/pnas.1003483107.

- Longo MR, Haggard P. Weber's illusion and body shape: anisotropy of tactile size perception on the hand. J Exp Psychol Hum Percept Perform. 2011;37(3):720. doi: 10.1037/a0021921.

- Longo MR, Haggard P. Implicit body representations and the conscious body image. Acta Psychol. 2012;141(2):164–168. doi: 10.1016/j.actpsy.2012.07.015.

- Longo MR, Long C, Haggard P. Mapping the invisible hand: a body model of a phantom limb. Psychol Sci. 2012;23(7):740–742. doi: 10.1177/0956797612441219.

- Maravita A, Iriki A. Tools for the body (schema). Trends Cogn Sci. 2004;8(2):79–86. doi: 10.1016/j.tics.2003.12.008.

- Maravita A, Spence C, Kennett S, Driver J. Tool-use changes multimodal spatial interactions between vision and touch in normal humans. Cognition. 2002;83(2):B25–B34. doi: 10.1016/S0010-0277(02)00003-3.

- Marino BF, Stucchi N, Nava E, Haggard P, Maravita A. Distorting the visual size of the hand affects hand pre-shaping during grasping. Exp Brain Res. 2010;202(2):499–505. doi: 10.1007/s00221-009-2143-4.

- Matthews WJ, Adams A. Another reason why adults find it hard to draw accurately. Perception. 2008;37(4):628–630. doi: 10.1068/p5895.

- McNamara TP. How are the locations of objects in the environment represented in memory? In: Freksa C, Brauer W, Habel C, Wender KF, editors. Spatial cognition III. Berlin: Springer; 2003. pp. 174–191.

- Peelen MV, Bracci S, Lu X, He C, Caramazza A, Bi Y. Tool selectivity in left occipitotemporal cortex develops without vision. J Cogn Neurosci. 2013;25(8):1225–1234. doi: 10.1162/jocn_a_00411.

- Pressey AW. An extension of assimilation theory to illusions of size, area, and direction. Percept Psychophys. 1971;9(2):172–176. doi: 10.3758/BF03212623.

- Roediger HL, McDermott KB. Tricks of memory. Curr Dir Psychol Sci. 2000;9(4):123–127. doi: 10.1111/1467-8721.00075.

- Shelton AL, McNamara TP. Spatial memory and perspective taking. Mem Cogn. 2004;32(3):416–426. doi: 10.3758/BF03195835.

- Sleight RB, Austin TR. The horizontal–vertical illusion in plane geometric figures. J Psychol. 1952;33(2):279–287. doi: 10.1080/00223980.1952.9712836.

- Tversky B. Distortions in memory for maps. Cogn Psychol. 1981;13(3):407–433. doi: 10.1016/0010-0285(81)90016-5.

- Tversky B, Schiano DJ. Perceptual and conceptual factors in distortions in memory for graphs and maps. J Exp Psychol Gen. 1989;118(4):387–398. doi: 10.1037/0096-3445.118.4.387.

- Valiquette C, McNamara TP. Different mental representations for place recognition and goal localization. Psychon Bull Rev. 2007;14(4):676–680. doi: 10.3758/BF03196820.

- van der Hoort B, Guterstam A, Ehrsson HH. Being Barbie: the size of one’s own body determines the perceived size of the world. PLoS One. 2011;6(5):e20195. doi: 10.1371/journal.pone.0020195.
