Abstract

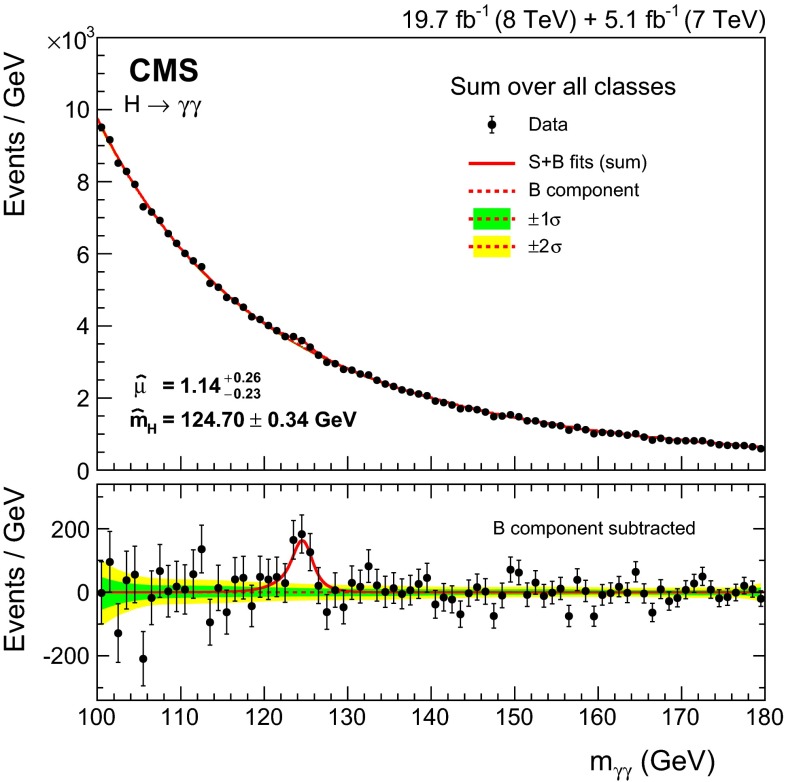

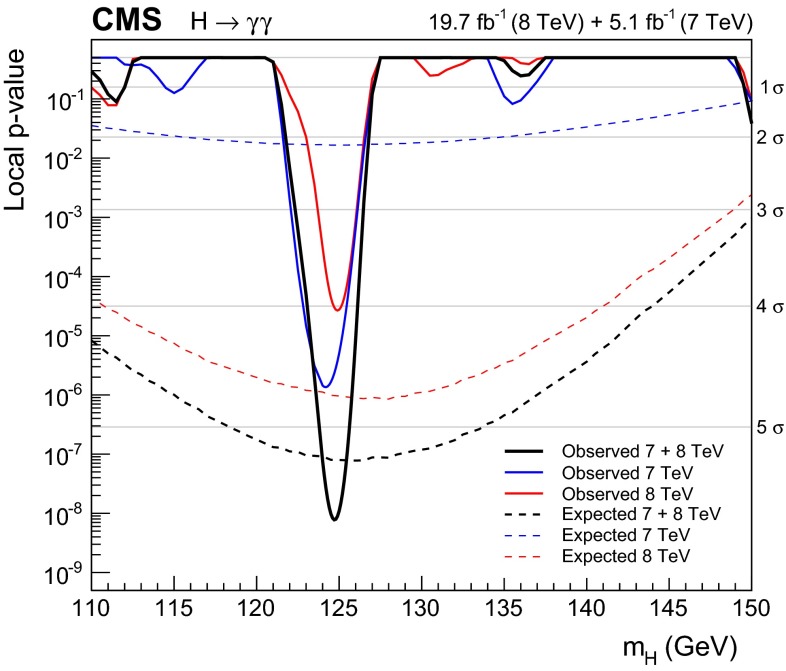

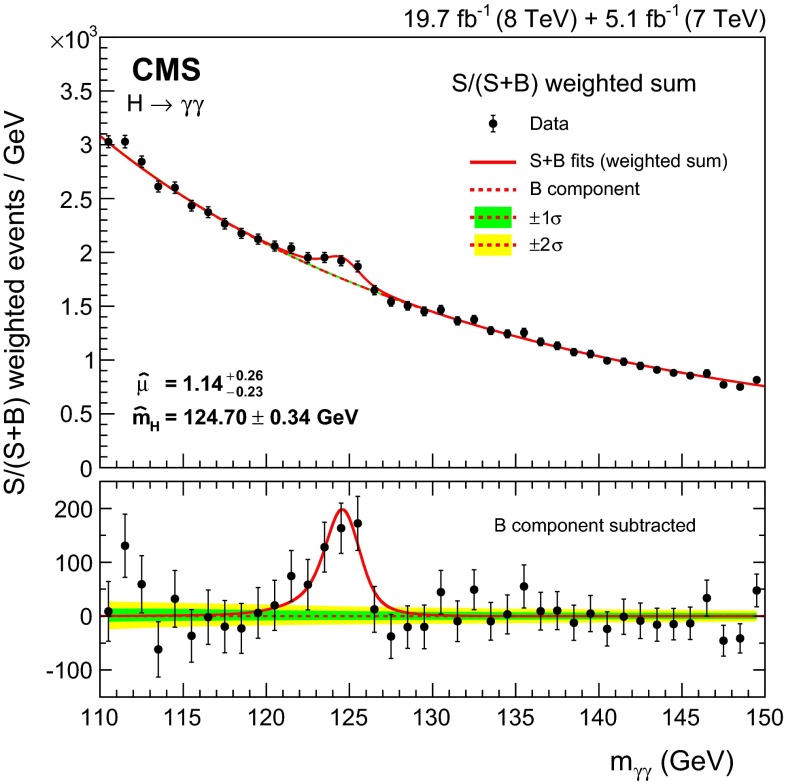

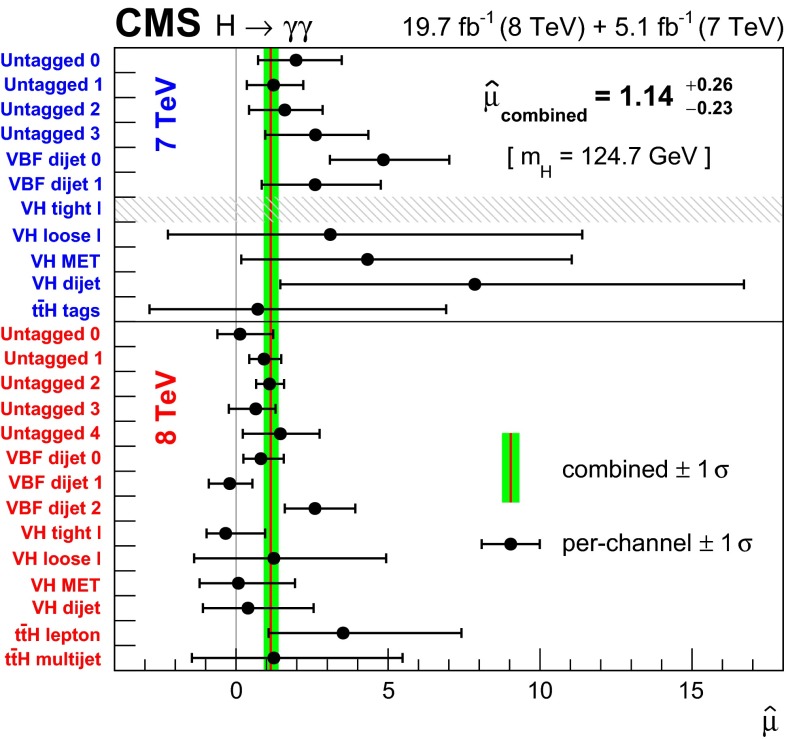

Observation of the diphoton decay mode of the recently discovered Higgs boson and measurement of some of its properties are reported. The analysis uses the entire dataset collected by the CMS experiment in proton-proton collisions during the 2011 and 2012 LHC running periods. The data samples correspond to integrated luminosities of 5.1 fb⁻¹ at a centre-of-mass energy of 7 TeV and 19.7 fb⁻¹ at 8 TeV. A clear signal is observed in the diphoton channel at a mass close to 125 GeV with a local significance of , where a significance of is expected for the standard model Higgs boson. The mass is measured to be , and the best-fit signal strength relative to the standard model prediction is . Additional measurements include the signal strength modifiers associated with different production mechanisms, and hypothesis tests between spin-0 and spin-2 models.

Introduction

In 2012 the ATLAS and CMS Collaborations announced the observation [1, 2] of a new boson with a mass, m_H, of about 125 GeV and properties consistent, within uncertainties, with expectations for a standard model (SM) Higgs boson. The Higgs boson is the particle predicted to exist as a consequence of the spontaneous symmetry breaking mechanism acting in the electroweak sector of the SM [3–5]. This mechanism was first suggested nearly fifty years ago [6–11] and introduces a complex scalar field, which also gives masses to the fundamental fermions through a Yukawa interaction. Results using the full available dataset have recently been published by CMS [12–19] and by ATLAS [20–25].

The diphoton decay channel provides a clean final-state topology that allows the mass of the decaying object to be reconstructed with high precision. With the possible discovery of a low-mass Higgs boson in the diphoton channel in mind, the performance of the electromagnetic calorimeter was a design priority for CMS. The diphoton decay is mediated by loop diagrams containing charged particles. The top quark loop and the W boson loop diagrams dominate the decay amplitude, though they contribute with opposite signs and therefore interfere destructively. The branching fraction is small: it reaches a maximum value of 0.23% and falls steeply to values below 0.1% above 150 GeV [26]. As a consequence the search reported in this paper is limited to the mass range, . Despite the small branching fraction and the presence of a large diphoton continuum background, the diphoton decay mode provides an expected signal significance for the 125 GeV SM Higgs boson that is among the highest of all the decay modes.

This paper presents the analysis of the full dataset of proton-proton collisions at the Large Hadron Collider (LHC) collected in 2011 and 2012, reconstructed with the final detector calibration values. The dataset corresponds to integrated luminosities of 5.1 fb⁻¹ at a centre-of-mass energy of 7 TeV (herein referred to as the "7 TeV dataset") and 19.7 fb⁻¹ at 8 TeV (the "8 TeV dataset"). The results supersede those previously reported by CMS for this decay mode [27, 28].

The primary production mechanism of the Higgs boson at the LHC is gluon-gluon fusion (ggH) [29], with smaller contributions from vector boson fusion (VBF) [30] and production in association with a W or Z boson (VH) [31] or with a top quark-antiquark pair (ttH) [32, 33]. Events from specific production mechanisms are identified and classified by the presence of additional objects in the final state. Requiring two forward jets in addition to the photon pair favours events produced by the VBF mechanism, while event classes designed to preferentially select VH or ttH production require the presence of muons, electrons, missing transverse energy from neutrinos, or jets arising from the hadronization of b quarks. To achieve the best sensitivity, the remaining events, as well as the dijet events selected as having a VBF signature, are further separated using multivariate classifiers that provide measures of their probability to be signal rather than background. The signal is measured by performing a simultaneous fit to the diphoton invariant mass distributions in the various event classes. The signal model is derived from simulation, while the background is obtained from the fit to data. A very large sample of events is available in which a Z boson decays to a pair of electrons. Treating the electron showers in these events as if they were from photons gives precise and detailed knowledge of how accurately the signal is simulated: specifically, the energy reconstruction and selection of photons, and the selection and classification of diphoton events.

With respect to analyses of this decay mode previously reported by CMS there are refinements in methodology, which are described in the main body of the paper. In addition, the analysis uses an improved intercalibration of the electromagnetic calorimeter channels and an improved energy regression algorithm to correct the clustered energy, resulting in better energy resolution. The simulation of the signal and Z boson samples is also improved. The changes in the energy-equivalent noise in the electromagnetic calorimeter during the data-taking period are simulated, and a significantly increased time window is used to simulate the effect of deposited energy coming from interactions in earlier bunch crossings.

The paper is organized as follows. After a brief description of the CMS detector and event reconstruction in Sect. 2 and of the data and simulated samples in Sect. 3, the reconstruction and identification of photons are detailed in Sect. 4. Identification of the diphoton vertex is covered in Sect. 5. Section 6 describes the event classification. It first describes the construction of a multivariate event classifier that takes as input quantities associated with the two photons, and then describes the tagging of events by the presence of objects in the final state, in addition to the photon pair, that give the event a signature characteristic of one of the production processes. It concludes by detailing how two multivariate event classifiers are used to further subdivide both the untagged events and the events tagged as coming from the VBF process into classes. Sections 7 and 8 describe, respectively, the signal and background models used in the statistical procedures that provide the results of the analysis, and Sect. 9 discusses the systematic uncertainties taken into account in those procedures. Section 10 outlines three alternative analyses that use specific variations of methodology to corroborate particular aspects of the main analysis. Finally, in Sect. 11 the results of the measurements of the Higgs boson production and its properties are presented and discussed.

CMS detector

The central feature of the CMS apparatus is a superconducting solenoid, 13 m in length and with an inner diameter of 6 m, which provides an axial magnetic field of 3.8 T. The bore of the solenoid is instrumented with both the central tracker and the calorimeters. The steel flux-return yoke outside the solenoid hosts gas ionization detectors used to identify and reconstruct muons.

The CMS experiment uses a right-handed coordinate system, with the origin at the nominal interaction point, the x axis pointing to the centre of the LHC, the y axis pointing up (perpendicular to the LHC plane), and the z axis along the anticlockwise-beam direction. The polar angle θ is measured from the positive z axis and the azimuthal angle φ is measured in the x–y plane. Transverse energy, denoted by E_T, is defined as the product of energy and sin θ, with θ being measured with respect to the nominal interaction point. Charged-particle trajectories are measured by the silicon pixel and strip tracker, with full azimuthal coverage within , where the pseudorapidity is defined as η = −ln[tan(θ/2)]. A lead tungstate crystal electromagnetic calorimeter (ECAL) and a brass/scintillator hadron calorimeter (HCAL) surround the tracking volume and cover the region . The ECAL barrel extends to while the ECAL endcaps cover the region . A lead/silicon-strip preshower detector is located in front of the ECAL endcap in the region . The preshower detector includes two planes of silicon sensors measuring the x and y coordinates of the impinging particles. A steel/quartz-fibre Cherenkov forward calorimeter extends the calorimetric coverage to . In the region , the HCAL cells have widths of 0.087 in both η and φ. In the η–φ plane, and for , the HCAL cells map on to 5×5 ECAL crystal arrays to form calorimeter towers projecting radially outwards from points slightly offset from the nominal interaction point. In the endcap, the ECAL arrays matching the HCAL cells contain fewer crystals.
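The definitions of pseudorapidity and transverse energy above can be checked numerically. The following is a minimal Python sketch (the function names are illustrative, not taken from any CMS software) that converts between θ and η and computes E_T = E sin θ; for a massless particle this is equivalent to sin θ = 1/cosh η.

```python
import math


def pseudorapidity(theta: float) -> float:
    """eta = -ln[tan(theta/2)], with theta measured from the positive z axis."""
    return -math.log(math.tan(theta / 2.0))


def theta_from_eta(eta: float) -> float:
    """Inverse relation: theta = 2 arctan(exp(-eta))."""
    return 2.0 * math.atan(math.exp(-eta))


def transverse_energy(energy: float, theta: float) -> float:
    """E_T = E sin(theta), theta taken with respect to the nominal interaction point."""
    return energy * math.sin(theta)


# A 100 GeV photon at eta = 1 carries E_T = 100 / cosh(1), about 64.81 GeV.
theta = theta_from_eta(1.0)
print(round(transverse_energy(100.0, theta), 2))
```

A particle perpendicular to the beam (θ = π/2) has η = 0 and E_T = E; E_T falls as |η| grows.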

Calibration of the ECAL is achieved by exploiting the φ-symmetry of the energy flow, and by using photons from π⁰ and η decays and electrons from W and Z boson decays [34]. Changes in the transparency of the ECAL crystals due to irradiation during the LHC running periods, and their subsequent recovery, are monitored continuously and corrected for using light injected from a laser system [34].

The first level of the CMS trigger system, composed of custom hardware processors, uses information from the calorimeters and muon detectors to select the most interesting events in a fixed time interval of less than 4 μs. The high-level trigger processor farm further decreases the event rate from around 100 kHz to around 400 Hz before data storage.

A more detailed description of the CMS detector can be found in Ref. [35].

Reconstruction of the photons used in this analysis is described in Sect. 4. It uses a clustering of the energy recorded in the ECAL, known as a "supercluster", which may be extended in the φ direction to form an extended cluster or a group of clusters.

The global event reconstruction (also called particle-flow event reconstruction) consists of reconstructing and identifying each particle with an optimized combination of all subdetector information [36, 37]. In this process, the identification of the particle type (photon, electron, muon, charged hadron, neutral hadron) plays an important role in the determination of the particle direction and energy. Photons are identified as ECAL energy clusters not linked to the extrapolation of any charged-particle trajectory to the ECAL. Electrons are identified as a primary charged-particle track associated with ECAL energy clusters corresponding to this track’s extrapolation to the ECAL and to possible bremsstrahlung photons emitted along the way through the tracker material. Muons are identified as a track in the central tracker consistent with either a track or several hits in the muon system, associated with less energy in the calorimeters than would be deposited by a charged hadron or electron. Charged hadrons are identified as charged-particle tracks neither identified as electrons, nor as muons. Finally, neutral hadrons are identified as HCAL energy clusters not linked to any charged hadron trajectory, or as ECAL and HCAL energy excesses with respect to the expected energy deposited by a matching charged hadron.

The energy of photons used in the global event reconstruction is directly obtained from the ECAL measurement. The energy of electrons is determined from a combination of the track momentum at the main interaction vertex, the corresponding ECAL cluster energy, and the energy sum of all bremsstrahlung photons attached to the track. The energy of muons is obtained from the corresponding track momentum. The energy of charged hadrons is determined from a combination of the track momentum and the corresponding ECAL and HCAL energy, calibrated for the nonlinear response of the calorimeters. Finally, the energy of neutral hadrons is obtained from the corresponding calibrated ECAL and HCAL energies.

For each event, hadronic jets are clustered from these reconstructed particles using the infrared- and collinear-safe anti-kT algorithm [38] with a size parameter of 0.5. The jet momentum is determined as the vectorial sum of all particle momenta in the jet, and is found in the simulation to be within 5–10% of the true momentum over the whole transverse momentum spectrum and detector acceptance. Jet energy corrections are derived from simulation, and are confirmed with in situ measurements using the energy balance of dijet and photon+jet events [39]. The jet energy resolution typically amounts to 15% (8%) at 10 (100) GeV, to be compared to about 40% (12%) obtained when the calorimeters alone are used for jet clustering.
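For illustration, anti-kT clustering with size parameter R = 0.5 can be sketched as a naive O(N³) Python loop. This is not the FastJet implementation used in practice: it assumes massless input particles built from (p_T, η, φ), uses rapidity–φ distances d_ij = min(1/p_Ti², 1/p_Tj²) ΔR_ij²/R² and beam distances d_iB = 1/p_Ti², and recombines by adding four-vectors. All names are hypothetical.

```python
import math
from typing import List, Tuple

# A pseudojet is a four-vector (px, py, pz, E) in GeV.
FourVec = Tuple[float, float, float, float]


def from_pt_eta_phi(pt_: float, eta: float, phi: float) -> FourVec:
    """Massless four-vector from (pt, eta, phi)."""
    return (pt_ * math.cos(phi), pt_ * math.sin(phi),
            pt_ * math.sinh(eta), pt_ * math.cosh(eta))


def pt(p: FourVec) -> float:
    return math.hypot(p[0], p[1])


def rapidity(p: FourVec) -> float:
    return 0.5 * math.log((p[3] + p[2]) / (p[3] - p[2]))


def delta_r2(a: FourVec, b: FourVec) -> float:
    dphi = abs(math.atan2(a[1], a[0]) - math.atan2(b[1], b[0]))
    dphi = min(dphi, 2.0 * math.pi - dphi)  # wrap into [0, pi]
    return (rapidity(a) - rapidity(b)) ** 2 + dphi ** 2


def anti_kt(particles: List[FourVec], radius: float = 0.5) -> List[FourVec]:
    """Naive anti-kT clustering with four-vector (E-scheme) recombination."""
    objs = list(particles)
    jets: List[FourVec] = []
    while objs:
        # Smallest beam distance d_iB = 1/pt_i^2.
        i_beam = min(range(len(objs)), key=lambda i: pt(objs[i]) ** -2)
        d_min = pt(objs[i_beam]) ** -2
        pair = None
        # Smallest pairwise distance d_ij = min(1/pt_i^2, 1/pt_j^2) * dR^2 / R^2.
        for i in range(len(objs)):
            for j in range(i + 1, len(objs)):
                d_ij = (min(pt(objs[i]) ** -2, pt(objs[j]) ** -2)
                        * delta_r2(objs[i], objs[j]) / radius ** 2)
                if d_ij < d_min:
                    d_min, pair = d_ij, (i, j)
        if pair is None:
            # Closest to the beam: this pseudojet becomes a final jet.
            jets.append(objs.pop(i_beam))
        else:
            i, j = pair
            merged = tuple(x + y for x, y in zip(objs[i], objs[j]))
            objs = [o for k, o in enumerate(objs) if k not in pair]
            objs.append(merged)
    return jets
```

Because d_ij is set by the harder of the two particles, soft particles are absorbed into nearby hard ones before they can form jets of their own, which is what gives anti-kT jets their regular, cone-like shape.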

To identify jets originating from the hadronization of bottom quarks, the combined secondary vertex b-tagging algorithm [40] is employed. The algorithm tags jets from b-hadron decays by identifying their displaced decay vertex. The working point of the tagging algorithm used provides an efficiency for identifying b-quark jets of about 70 % and a misidentification probability for jets from light quarks and gluons of about 1 %.

The missing transverse energy vector is taken as the negative vector sum of the transverse momenta of all reconstructed particle candidates in the global event reconstruction, and its magnitude is referred to as E_T^miss.
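The definition above translates directly into code. A minimal sketch, assuming each reconstructed candidate is given as a (p_T, φ) pair in GeV and radians; the function name is hypothetical.

```python
import math
from typing import Iterable, Tuple


def missing_et(candidates: Iterable[Tuple[float, float]]) -> Tuple[float, float, float]:
    """Return (MEx, MEy, magnitude) given (pt, phi) for every reconstructed candidate."""
    sum_px = 0.0
    sum_py = 0.0
    for pt, phi in candidates:
        sum_px += pt * math.cos(phi)
        sum_py += pt * math.sin(phi)
    # The missing transverse energy vector is the negative of the vector sum.
    mex, mey = -sum_px, -sum_py
    return mex, mey, math.hypot(mex, mey)
```

A perfectly balanced event, such as two 50 GeV candidates back to back in φ, gives E_T^miss ≈ 0, while any invisible particle (for example a neutrino) shows up as a nonzero imbalance.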

Data sample and simulated events

The events used in the analysis were selected by diphoton triggers with asymmetric transverse energy thresholds and complementary photon selections. One selection requires a loose calorimetric identification based on the shape of the electromagnetic shower and loose isolation requirements on the photon candidates, while the other requires only that the photon candidate has a high value of the shower shape variable R9. High trigger efficiency is maintained by allowing both photons to satisfy either selection. The variable R9 is defined as the energy sum of the 3×3 crystals centred on the most energetic crystal in the supercluster, divided by the energy of the supercluster. Photons that convert before reaching the calorimeter tend to have wider showers and lower values of R9 than unconverted photons. To cover the entire data-taking period two trigger threshold configurations are used: on the leading (trailing) photon, and . The measured trigger efficiency is for events satisfying the diphoton preselection required for events entering the analysis, as described in Sect. 4.
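The R9 definition can be illustrated with a toy calculation. This sketch assumes a simple rectangular grid of crystal energies (GeV) rather than the real barrel or endcap geometry, and truncates the 3×3 window at the grid edge; the function name is hypothetical.

```python
from typing import List


def r9(crystal_energies: List[List[float]], supercluster_energy: float) -> float:
    """Energy in the 3x3 window centred on the most energetic crystal,
    divided by the supercluster energy."""
    n_rows, n_cols = len(crystal_energies), len(crystal_energies[0])
    # Locate the seed (most energetic) crystal.
    seed_r, seed_c = max(
        ((r, c) for r in range(n_rows) for c in range(n_cols)),
        key=lambda rc: crystal_energies[rc[0]][rc[1]],
    )
    # Sum the 3x3 window around the seed, clipped to the grid boundaries.
    e3x3 = sum(
        crystal_energies[r][c]
        for r in range(max(0, seed_r - 1), min(n_rows, seed_r + 2))
        for c in range(max(0, seed_c - 1), min(n_cols, seed_c + 2))
    )
    return e3x3 / supercluster_energy
```

A compact, unconverted shower deposits almost all of its energy in the 3×3 window and gives R9 close to 1; a converted photon, whose energy is spread in φ by the magnetic field, gives a lower value.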

The Monte Carlo (MC) simulation of detector response employs a detailed description of the CMS detector, and uses Geant4 version 9.4 (patch 03) [41]. Simulated events include the multiple interactions taking place in each bunch crossing and are weighted to reproduce the distribution of the number of interactions in data. They thus simulate the effects of pileup: the presence of signals from multiple interactions, in multiple bunch crossings, in each recorded event. The interactions used to simulate pileup are generated with the same versions of pythia [42], 6.424 or 6.426, that are used for other purposes as described below. The pythia tunes used for the underlying event activity are Z2 and Z2* for the 7 and 8 TeV samples, respectively [43]. Simulated Higgs boson signal events are used both for training of multivariate discriminants and to construct the signal model used in the statistical procedures employed to extract the results. Sufficiently large samples have been produced to ensure that the simulated signal events used for construction of the signal model (Sect. 7) are not used for training the multivariate discriminants. The MC signal event samples for the ggH and VBF processes are obtained using the next-to-leading order (NLO) matrix-element generator powheg (version 1.0) [44–48] interfaced with pythia. For the 7 TeV samples, events are weighted so that the transverse momentum spectrum of Higgs bosons produced by the ggH process agrees with the next-to-next-to-leading logarithm + NLO distribution computed by hqt (version 1.0) [49–51]. At 8 TeV, powheg has been tuned following the recommendations of the LHC Higgs Cross Section Working Group [52] and reproduces the hqt spectrum. The ggH process cross section is reduced by 2.5% for all values of m_H to account for the interference with nonresonant diphoton production [53].
For the VH and ttH processes pythia is used alone: these processes are generated at leading order by pythia, and higher-order diagrams are accounted for only by pythia's "parton showering" model. The SM Higgs boson cross sections and branching fractions used are taken from Ref. [54]. Samples used for the testing of spin hypotheses were generated with leading-order accuracy by jhugen [55, 56], interfaced to pythia.
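The weighting of simulated events to reproduce the pileup distribution in data, mentioned above, amounts to a per-bin weight w(n) = P_data(n)/P_MC(n), where n is the number of interactions. A minimal sketch, assuming the data distribution is supplied as a histogram keyed by n and the simulation as a list of per-event values of n; the function name is hypothetical.

```python
from collections import Counter
from typing import Dict, Sequence


def pileup_weights(data_hist: Dict[int, float],
                   mc_n_interactions: Sequence[int]) -> Dict[int, float]:
    """w(n) = P_data(n) / P_MC(n), with both distributions normalized to unit area."""
    mc_counts = Counter(mc_n_interactions)
    n_mc = len(mc_n_interactions)
    total_data = sum(data_hist.values())
    weights: Dict[int, float] = {}
    for n, count in mc_counts.items():
        p_mc = count / n_mc
        p_data = data_hist.get(n, 0.0) / total_data
        weights[n] = p_data / p_mc
    return weights
```

Each simulated event with n interactions is then given the weight `weights[n]`; the sum of weights equals the number of simulated events, so the overall normalization is unchanged.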

Simulated samples of , , and events used for comparison with data, and for the derivation of energy scale and resolution smearing corrections are generated with MadGraph, sherpa, and powheg [57], allowing comparisons to be made between the different generators.

Simulated background samples are used only for training multivariate discriminants and defining selection and classification criteria. The background is simulated using a combination of samples. At 7 TeV the diphoton processes are simulated using a combination of MadGraph 5 [58] interfaced to pythia for processes apart from the gluon-fusion box diagram, and pythia alone for the box diagram. At 8 TeV the diphoton continuum processes involving two prompt photons are simulated using sherpa 1.4.2 [59]. The sherpa samples give a noticeably improved description of diphoton continuum events accompanied by one or two jets, and enable training of a more effective multivariate discriminant in the case of diphoton-plus-dijet events. The remaining processes, where one of the photon candidates arises from misidentified jet fragments, are simulated using pythia alone, and their cross sections are scaled by K-factors derived from CMS measurements [60, 61].

Photon reconstruction and identification

Photon candidates for the analysis are reconstructed from energy deposits in the ECAL using algorithms that constrain the superclusters in η and φ to the shapes expected from electrons and photons with high p_T. The algorithms do not make any hypothesis as to whether the particle originating from the interaction point is a photon or an electron; when reconstructed in this way, electrons from Z→ee events provide measurements of the photon trigger, reconstruction, and identification efficiencies, and of the photon energy scale and resolution. The clustering algorithms collect almost all (95%) of the energy of photons and electrons, even those that undergo conversion and bremsstrahlung in the material in front of the ECAL. In the barrel region, superclusters are formed from five-crystal-wide strips in η, centred on the locally most energetic crystal (seed), and have a variable extension in φ. In the endcaps, where the crystals are arranged according to an x–y rather than an η–φ geometry, matrices of 5×5 crystals, which may partially overlap and are centred on a locally most energetic crystal, are summed if they lie within a narrow road. The photon candidates are required to be within the fiducial region , excluding the barrel-endcap transition region , where the photon reconstruction is suboptimal. The fiducial region requirement is applied to the supercluster position in the ECAL, i.e. the value of η is calculated with respect to the origin of the coordinate system. The exclusion of the barrel-endcap transition region ensures complete clustering of the accepted showers in either the ECAL barrel or endcaps.

About half of the photons convert in the material upstream of the ECAL. If the resulting charged particle tracks originate sufficiently close to the interaction point so as to pass through three or more tracking layers, conversion track pairs may be reconstructed and matched to the photon candidate.

Photon energy

The photon energy is computed from the signals recorded by the ECAL. In the region covered by the preshower detector, the signals recorded in it are also considered. In order to obtain the best energy resolution, the calorimeter signals are calibrated and corrected for several detector effects [34]. The variation of crystal transparency during the run is continuously monitored and corrected for using a factor based on the measured change in response to the light from the laser system, with the response of each crystal being computed approximately every 40 minutes. The single-channel response of the ECAL is equalized by exploiting the φ-symmetry of the energy flow, the mass constraint on the energy of the two photons in π⁰ and η decays, and the momentum constraint on the energy of isolated electrons from W- and Z-boson decays. Finally, the containment of the shower in the clustered crystals, the shower losses for photons that convert in the material upstream of the calorimeter, and the effects of pileup are corrected using a multivariate regression technique. The photon energy response distribution is parameterized by a function with a Gaussian core and two power-law tails, an extended form of the Crystal Ball function [62]. The regression provides a per-photon estimate of the parameters of this function, and therefore a prediction of the distribution of the ratio of the true energy to the uncorrected supercluster energy. The most probable value of this distribution determines the corrected photon energy, and the width of the Gaussian core is used as a per-photon estimator of the energy uncertainty.

The regression input variables are a collection of shower shape variables, including R9 of the supercluster, the ratio of the energy in the 5×5 crystals centred on the seed crystal to the uncorrected supercluster energy, the energy-weighted η-width and φ-width of the supercluster, and the ratio of the hadronic energy behind the supercluster to the electromagnetic energy of the cluster. The global coordinate of the supercluster is included, and for the barrel the global coordinate and the coordinates of the seed cluster with respect to the crystal centre are also included. In the endcap, the ratio of preshower energy to raw supercluster energy is included. Finally, the number of primary vertices and the median energy density ρ [63] in the event are included to allow the correction of residual energy scale effects due to pileup.
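The extended Crystal Ball response described above can be written in terms of t = (x − μ)/σ: a Gaussian core exp(−t²/2) for −α_L ≤ t ≤ α_R, joined continuously (in value and slope) to power-law tails with exponents n_L and n_R. The following is a minimal, unnormalized Python sketch. The parameter names, and the reading of μ and σ as a per-photon correction factor and relative resolution applied to the raw supercluster energy, are assumptions for illustration, not the actual format of the regression output.

```python
import math
from typing import Tuple


def double_crystal_ball(x: float, mu: float, sigma: float,
                        alpha_l: float, n_l: float,
                        alpha_r: float, n_r: float) -> float:
    """Unnormalized double-sided Crystal Ball: Gaussian core, power-law tails."""
    t = (x - mu) / sigma
    if t < -alpha_l:
        # Low-side tail, matched to the core at t = -alpha_l.
        a = (n_l / alpha_l) ** n_l * math.exp(-0.5 * alpha_l ** 2)
        b = n_l / alpha_l - alpha_l
        return a * (b - t) ** (-n_l)
    if t > alpha_r:
        # High-side tail, matched to the core at t = +alpha_r.
        a = (n_r / alpha_r) ** n_r * math.exp(-0.5 * alpha_r ** 2)
        b = n_r / alpha_r - alpha_r
        return a * (b + t) ** (-n_r)
    return math.exp(-0.5 * t * t)


def corrected_energy(raw_energy: float, mu: float, sigma: float) -> Tuple[float, float]:
    """Hypothetical helper: most probable E_true/E_raw (mu) and core width (sigma)
    scaled by the raw supercluster energy."""
    return mu * raw_energy, sigma * raw_energy
```

Since the core peaks at t = 0 with value 1 and both tails stay below exp(−α²/2) < 1, the most probable value of the ratio is μ.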

A multistep procedure has been implemented to correct the energy scale in data, and to determine the parameters of the Gaussian smearing to be applied to showers in simulated events so as to reproduce the energy resolution seen in data. First, the energy scale in data is equalized with that in simulated events, and residual long-term drifts in the response are corrected, using Z→ee decays in which the electron showers are reconstructed as photons. The data are corrected as a function of the time at which they were taken, using 8 epochs in the 7 TeV dataset and 51 epochs in the 8 TeV dataset. Following this, the photon energy resolution predicted by the simulation is made more realistic by adding a Gaussian smearing determined from the comparison between the Z→ee line shape in data and in simulated events. The amount of smearing required is extracted differentially in |η| (two bins in the barrel and two in the endcap) and R9 (two bins). In the fits from which the required amount of smearing is extracted, the data energy scale is allowed to float, and a residual scale correction for the data is extracted in the same eight bins. A sufficient number of events is available in the 8 TeV data to allow a third step, in which the energy scale for the ECAL barrel is further corrected in 20 bins defined by ranges in , , and , and the smearing magnitude is allowed to have an energy dependence: the additional energy resolution (Δσ/E) is parameterized as the quadratic sum of a constant term and a term proportional to 1/√E, and the relative magnitude of the two components is extracted from the fits.
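The energy-dependent smearing of the third step can be written as Δσ/E = √(C² + S²/E), where C is the constant term and S the coefficient of the 1/√E term. Each simulated energy is then multiplied by a Gaussian random factor with mean 1 and that width. A minimal sketch; the function names and any values passed for C and S are illustrative assumptions, not fitted values from this analysis.

```python
import math
import random


def extra_resolution(energy: float, constant: float, stochastic: float) -> float:
    """Additional relative resolution: constant (+) stochastic/sqrt(E), in quadrature."""
    return math.sqrt(constant ** 2 + stochastic ** 2 / energy)


def smear(energy: float, constant: float, stochastic: float,
          rng: random.Random) -> float:
    """Apply a Gaussian smearing with mean 1 and width extra_resolution to one energy."""
    return energy * rng.gauss(1.0, extra_resolution(energy, constant, stochastic))
```

With C = 0.03 and S = 0.4 GeV^(1/2), a 100 GeV shower receives an extra relative smearing of √(0.0009 + 0.0016) = 5%; the 1/√E term matters less and less as the energy grows.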

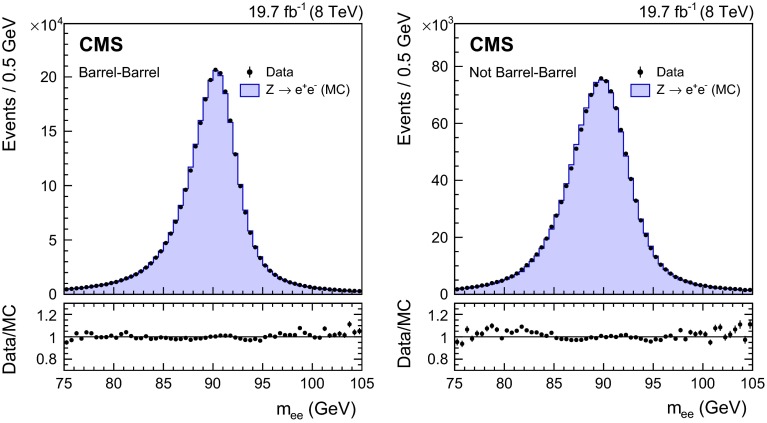

Figure 1 shows the invariant mass of electron pairs reconstructed in Z→ee events in the 8 TeV data and in simulated events, in which the electron showers are reconstructed as photons and the full set of corrections to the data and smearings of the simulated energies are applied. The selection applied to the diphoton candidates is the same as that applied to diphoton candidates entering the analysis (as described in Sect. 6), apart from the inversion of the electron veto. There is excellent agreement between the data and the simulation in the core of the distributions. A slight discrepancy is present in the low-mass tail in the endcaps, where the Gaussian smearing does not fully account for some noticeable non-Gaussian energy loss. The mass peaks are shifted from the true Z boson mass, both in data and in simulation, because the electron showers are reconstructed as photons.

Fig. 1.

Invariant mass of e⁺e⁻ pairs in Z→ee events in the 8 TeV data (points), and in simulated events (histogram), in which the electron showers are reconstructed as photons, and the full set of photon corrections and smearings are applied. The comparison is shown for (left) events with both showers in the barrel, and (right) the remaining events. For each bin, the ratio of the number of events in data to the number of simulated events is shown in the lower panel of each plot

Photon preselection

The continuum background to the H→γγ process is mainly due to prompt diphoton production, with a reducible contribution from γ+jet and dijet processes where at least one of the objects reconstructed as a photon comes from a jet. Typically these photon candidates come from one or more neutral mesons that carry a substantial fraction of the total jet momentum and are thus relatively isolated from hadronic activity in the detector. In the transverse momentum range of interest, the photons from neutral pion decays are collimated closely enough to be reconstructed as a single photon. In the events used for the analysis, i.e. after all selection and classification criteria are applied, MC simulation predicts that about 70% of the total background is due to the irreducible prompt diphoton production.

The photons entering the analysis are required to satisfy preselection criteria similar to, but slightly more stringent than, the trigger requirements. These consist of:

- requirements on p_T^γ1 and p_T^γ2, the transverse momenta of the leading (in p_T) and subleading photons, respectively;
- a selection on the hadronic leakage of the shower, measured as the ratio of the energy in HCAL cells behind the supercluster to the energy in the supercluster;
- a loose selection based on isolation and the shape of the shower;
- an electron veto, which removes the photon candidate if its supercluster is matched to an electron track with no missing hits in the innermost tracker layers, thus excluding almost all Z→ee events.

The selection requirements are applied with different stringency in four categories defined to match the different selections used in the trigger. The four categories are shown in Table 1.

Table 1.

Photon preselection efficiencies for both the 7 and 8 TeV datasets measured in Z→ee events, where the electrons are reconstructed as photons, in four photon categories. The statistical uncertainties in the efficiencies found in simulated events are negligible, and the uncertainties measured in data are discussed in the text

| Preselection category | ε_data (%) | ε_MC (%) | ε_data/ε_MC |
|---|---|---|---|
| 7 TeV dataset | | | |
| Barrel; R9 > 0.90 | 98.7 ± 0.3 | 99.1 | 0.996 ± 0.003 |
| Barrel; R9 < 0.90 | 96.2 ± 0.5 | 96.7 | 0.995 ± 0.006 |
| Endcap; R9 > 0.90 | 99.1 ± 0.9 | 98.2 | 1.008 ± 0.009 |
| Endcap; R9 < 0.90 | 96.1 ± 1.5 | 95.6 | 1.005 ± 0.018 |
| 8 TeV dataset | | | |
| Barrel; R9 > 0.90 | 98.8 ± 0.3 | 98.6 | 0.999 ± 0.003 |
| Barrel; R9 < 0.90 | 95.7 ± 0.6 | 96.1 | 0.995 ± 0.006 |
| Endcap; R9 > 0.90 | 98.4 ± 0.9 | 97.9 | 1.005 ± 0.009 |
| Endcap; R9 < 0.90 | 95.5 ± 1.7 | 94.5 | 1.011 ± 0.018 |

The efficiency of the photon preselection is measured in data using a "tag-and-probe" technique [64]. The efficiency of all preselection criteria, except the electron veto requirement, is measured using Z→ee events. The efficiency for photons to satisfy the electron veto requirement is measured using Z→μμγ events, in which the photon is produced by final-state radiation and which provide a high-purity source of prompt photons. The ratio of the photon efficiency measured in data to that found in simulated events, ε_data/ε_MC, is consistent with unity in all categories. The complete set of efficiencies, in data and in simulated events, and the ratios ε_data/ε_MC are shown in Table 1. The systematic uncertainty in the measurement is included in both the efficiencies and the ratio. The statistical uncertainties in the efficiencies measured in simulated events are negligible. The measured ratios are used to correct the simulated signal sample, and the associated uncertainties are taken into account as systematic uncertainties in the signal extraction procedure. For photons in simulated Higgs boson events the efficiency of the preselection criteria in the four categories ranges from 92 to 99%.
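As a rough illustration of how the entries in Table 1 combine, the following sketch computes a counting efficiency with a binomial uncertainty, and the data/simulation ratio with relative uncertainties added in quadrature. This is a simplification: the measurement described above includes background subtraction and systematic uncertainties, which the sketch ignores. The function names are hypothetical.

```python
import math
from typing import Tuple


def efficiency(n_pass: int, n_total: int) -> Tuple[float, float]:
    """Counting efficiency with a simple binomial uncertainty."""
    eff = n_pass / n_total
    return eff, math.sqrt(eff * (1.0 - eff) / n_total)


def data_mc_ratio(eff_data: float, err_data: float,
                  eff_mc: float, err_mc: float = 0.0) -> Tuple[float, float]:
    """Ratio eps_data/eps_MC with relative uncertainties added in quadrature."""
    ratio = eff_data / eff_mc
    err = ratio * math.sqrt((err_data / eff_data) ** 2 + (err_mc / eff_mc) ** 2)
    return ratio, err
```

For example, `data_mc_ratio(0.987, 0.003, 0.991)` gives approximately 0.996 ± 0.003, matching the first row of Table 1, with the simulated-sample uncertainty taken as negligible.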

Photon identification

A boosted decision tree (BDT), implemented using the tmva [65] framework, is trained to separate prompt photons from photon candidates resulting from misidentification of jet fragments passing the preselection requirements. The following variables are used as inputs to the photon identification BDT:

- Lateral shower shape variables, six of which use data from the ECAL crystals, and one of which measures the shower spread in the preshower detector (where it is present). The shape variables obtained in the MC simulation are compared with those observed in data control samples, and no significant differences are observed.
- Isolation variables, based on the particle-flow algorithm [37], using sums of the p_T of photons, and of charged hadrons, within a cone in ΔR around the candidate, where ΔR = √((Δη)² + (Δφ)²). Two charged-hadron isolation variables are used: one that considers charged hadrons coming from the vertex chosen for the event (described in Sect. 5), and one that is the largest of such sums computed for each reconstructed vertex. The second variable is effective when a photon candidate originating from misidentification of jet fragments comes from a vertex other than the chosen one.
- The median energy density per unit area in the event, ρ. This variable allows the BDT classifier to take into account the pileup dependence of the isolation variables.
- The pseudorapidity and energy of the supercluster corresponding to the reconstructed photon. These variables allow the dependence of the shower topology and isolation variables on η and energy to be taken into account.
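A minimal sketch of the two charged-hadron isolation sums listed above. It assumes each charged hadron carries the index of the vertex it is associated with and uses a cone size of 0.3 as a placeholder default (a hypothetical value, not taken from this paper). As a simplification, it keeps the photon direction fixed rather than recomputing it for each vertex. All names are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class ChargedHadron:
    pt: float
    eta: float
    phi: float
    vertex: int  # index of the reconstructed vertex the track is associated with


def delta_r(eta1: float, phi1: float, eta2: float, phi2: float) -> float:
    """Delta R = sqrt(d_eta^2 + d_phi^2), with d_phi wrapped into [-pi, pi)."""
    dphi = (phi1 - phi2 + math.pi) % (2.0 * math.pi) - math.pi
    return math.hypot(eta1 - eta2, dphi)


def charged_isolation(eta: float, phi: float, hadrons: List[ChargedHadron],
                      vertex: int, cone: float = 0.3) -> float:
    """Scalar pT sum of charged hadrons from one vertex inside the cone."""
    return sum(h.pt for h in hadrons
               if h.vertex == vertex and delta_r(eta, phi, h.eta, h.phi) < cone)


def worst_vertex_isolation(eta: float, phi: float, hadrons: List[ChargedHadron],
                           n_vertices: int, cone: float = 0.3) -> float:
    """Largest per-vertex charged isolation sum over all reconstructed vertices."""
    return max(charged_isolation(eta, phi, hadrons, v, cone) for v in range(n_vertices))
```

A jet fragment produced at a pileup vertex can look isolated with respect to the chosen vertex, yet still show a large sum for its own vertex; the worst-vertex variable is built to expose exactly that case.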

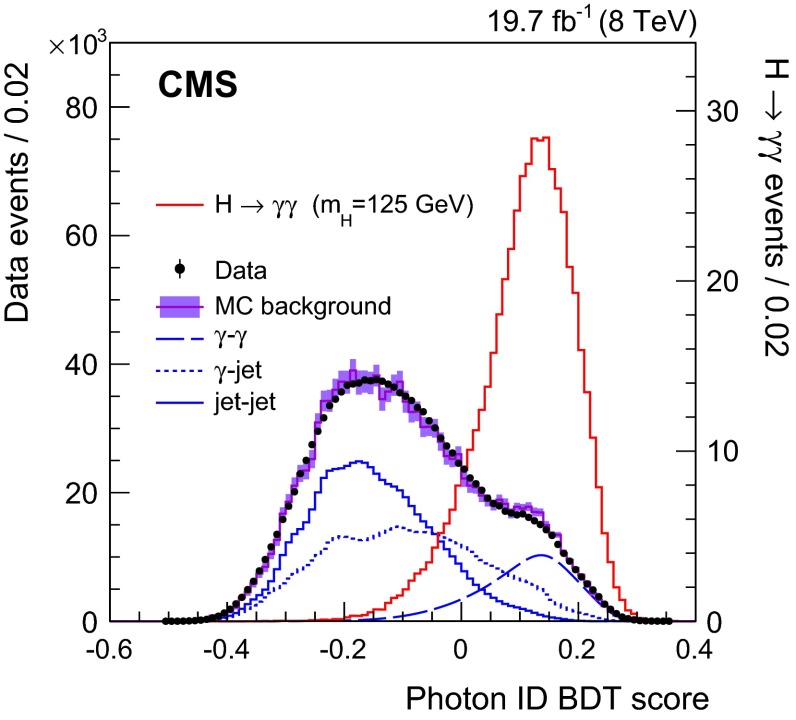

Figure 2 shows the photon identification BDT score of the lower-scoring photon in diphoton pairs with an invariant mass, m_γγ, in the range , for events passing the preselection in the 8 TeV dataset and for simulated background events (histogram with shaded error bands showing the statistical uncertainty). The tall histogram on the right corresponds to simulated Higgs boson signal events. Although the simulated background events are used only for training the BDT, their BDT score distribution agrees well with that in data. The bump that can be seen in both distributions at a BDT score slightly above 0.1 corresponds to events where both photons are prompt and therefore signal-like.

Fig. 2.

Photon identification BDT score of the lower-scoring photon of diphoton pairs with an invariant mass in the range , for events passing the preselection in the 8 TeV dataset (points), and for simulated background events (histogram with shaded error bands showing the statistical uncertainty). Histograms are also shown for different components of the simulated background, in which there are either two, one, or zero prompt signal-like photons. The tall histogram on the right (right-hand vertical axis) corresponds to simulated Higgs boson signal events

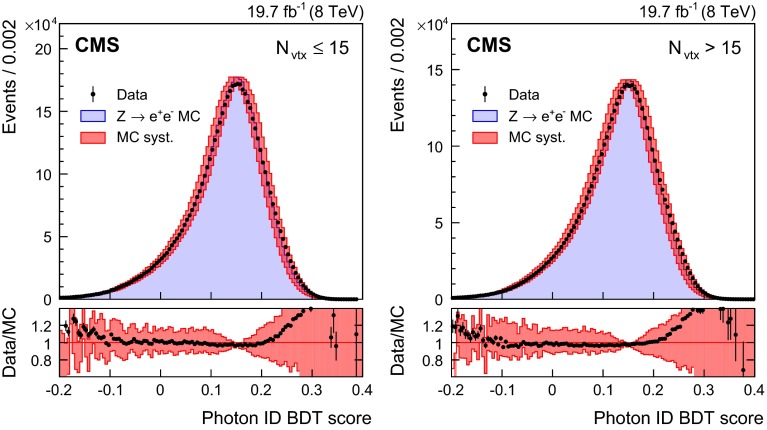

The agreement between data and simulation for photon identification is assessed using electrons from Z→ee decays, photons from Z→μμγ decays, and the highest-p_T photon in a selection of diphoton events in which the relative contribution from misidentified jet fragments is small. Figure 3 shows a comparison of the photon identification BDT score for electron showers reconstructed as photons in the barrel, for data and MC simulated events. The events must pass all the preselection requirements, with the electron veto condition inverted. The systematic uncertainty assigned to the photon identification BDT score is shown as a band, and corresponds to a shift of 0.01 in the score. The comparison is made for the 8 TeV dataset, and is shown for two sets of events with different numbers of primary vertices, N_vtx, to demonstrate that the result is independent of pileup. The differences between the distributions for the data and the simulation fall within the assigned systematic uncertainties for both the lower-pileup and higher-pileup sets of events, and the difference between the distributions in the two sets is negligible.

Fig. 3.

Comparison of the photon identification BDT score for electron showers in the barrel in events in the 8 TeV dataset and in MC simulated events, for events passing the preselection but with the electron veto condition inverted. The systematic uncertainty assigned to the photon identification BDT score is shown as a band. The comparison is shown for two sets of events with different numbers of primary vertices. For each bin, the ratio of the number of events in data to the number of simulated events is shown in the lower plot

Diphoton vertex

The mean number of interactions per bunch crossing is 9 in the 7 TeV dataset and 21 in the 8 TeV dataset. In the longitudinal direction, $z$, the interaction vertices reconstructed from tracks are distributed with an rms spread of about 6 (5) cm in the 7 (8) TeV dataset.

The diphoton mass resolution has contributions from the measurement of the photon energies and from the measurement of the opening angle between the two photons. If the vertex from which the photons originate is known to within about 10 mm, the experimental resolution on the opening angle makes a negligible contribution to the mass resolution. Thus, if the diphoton is associated with the charged-particle vertex of the interaction in which it originated, the mass resolution is entirely dominated by the photon energy resolution, since the longitudinal coordinate of the charged-particle vertices is known to much better than 10 mm.
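To make the geometric argument concrete, the minimal sketch below (an illustration, not the CMS reconstruction code) computes the invariant mass of two massless photons, $m_{\gamma\gamma}^2 = 2E_1E_2(1-\cos\alpha)$, from their energies and calorimeter impact points, with both directions measured from an assumed vertex at $z = v_z$. The barrel radius of 129 cm and the 62.5 GeV photon energies are illustrative assumptions.

```python
import math


def diphoton_mass(e1, e2, cos_alpha):
    """Invariant mass of two massless photons: m^2 = 2 E1 E2 (1 - cos(alpha))."""
    return math.sqrt(2.0 * e1 * e2 * (1.0 - cos_alpha))


def photon_direction(hit, vz):
    """Unit vector from a vertex at (0, 0, vz) to a calorimeter impact point (x, y, z) in cm."""
    dx, dy, dz = hit[0], hit[1], hit[2] - vz
    norm = math.sqrt(dx * dx + dy * dy + dz * dz)
    return (dx / norm, dy / norm, dz / norm)


def mass_from_hits(e1, hit1, e2, hit2, vz):
    """Diphoton mass with both photon directions taken from the vertex at z = vz."""
    u1 = photon_direction(hit1, vz)
    u2 = photon_direction(hit2, vz)
    cos_alpha = sum(a * b for a, b in zip(u1, u2))
    return diphoton_mass(e1, e2, cos_alpha)


# Two 62.5 GeV photons hitting the barrel back to back (assumed radius 129 cm).
hit1, hit2 = (129.0, 0.0, 0.0), (-129.0, 0.0, 0.0)
for vz in (0.0, 1.0, 5.0):
    m = mass_from_hits(62.5, hit1, 62.5, hit2, vz)
    print(f"assumed vertex z = {vz:4.1f} cm -> m = {m:.4f} GeV")
```

Because the photons in this example are back to back, the mass changes only at second order in the vertex displacement; for photons with a smaller opening angle the shift is first order and therefore larger.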

Diphoton vertex identification

No charged particle tracks result from photons that do not convert, so the diphoton vertex is identified indirectly, using the kinematic properties of the diphoton system and its correlations with the kinematic properties of the recoiling tracks. If either of the photons converts, the direction of the resulting tracks can provide additional information.

Three discriminating variables are calculated for each reconstructed primary vertex: the sum of the squared transverse momenta of the charged-particle tracks associated with the vertex, and two variables that quantify the vector and scalar balance of $p_T$ between the diphoton system and the charged-particle tracks associated with the vertex. The three variables are:

$$\sum_i \left|\vec{p}_T^{\,i}\right|^2, \qquad p_T^{\text{bal}} = -\sum_i \left(\vec{p}_T^{\,i} \cdot \frac{\vec{p}_T^{\,\gamma\gamma}}{\left|\vec{p}_T^{\,\gamma\gamma}\right|}\right), \quad \text{and}$$

$$p_T^{\text{asym}} = \frac{\left|\sum_i \vec{p}_T^{\,i}\right| - p_T^{\gamma\gamma}}{\left|\sum_i \vec{p}_T^{\,i}\right| + p_T^{\gamma\gamma}},$$

where the sums are over the transverse momentum vectors of the charged tracks, $\vec{p}_T^{\,i}$, and $\vec{p}_T^{\,\gamma\gamma}$ is the transverse momentum vector of the diphoton system. In addition, if either photon is associated with any charged-particle tracks identified as resulting from a conversion, a further variable, the conversion pull, is used. An estimate of the primary vertex longitudinal position, $z_{\text{e}}$, is obtained from the conversion track(s), and the additional variable is defined as the pull between $z_{\text{e}}$ and the longitudinal position of the reconstructed vertex, $z_{\text{PV}}$: $\left|z_{\text{e}} - z_{\text{PV}}\right| / \sigma_{z_{\text{e}}}$, where $\sigma_{z_{\text{e}}}$ is the uncertainty in $z_{\text{e}}$. These variables are the inputs to a multivariate system based on a BDT that chooses the reconstructed vertex to be associated with the diphoton system.
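The per-vertex inputs described above can be sketched as follows. This is a minimal illustration written from the verbal definitions (sum of squared track $p_T$, the track-momentum sum projected on the diphoton direction, and the asymmetry between the track-sum magnitude and the diphoton $p_T$); the sign and normalization conventions, the `(px, py)` tuple representation, and the `choose_vertex` helper, whose `score` argument stands in for the trained BDT, are assumptions for illustration.

```python
import math


def vertex_variables(track_pts, diphoton_pt):
    """Return (sum_pt2, pt_bal, pt_asym) for one candidate vertex.

    track_pts   -- list of (px, py) for the charged tracks associated with the vertex
    diphoton_pt -- (px, py) of the diphoton system (assumed non-zero)
    """
    sum_pt2 = sum(px * px + py * py for px, py in track_pts)
    sx = sum(px for px, _ in track_pts)
    sy = sum(py for _, py in track_pts)
    gg_mag = math.hypot(diphoton_pt[0], diphoton_pt[1])
    # Vector balance: track momentum sum projected on the diphoton direction, sign flipped,
    # so tracks recoiling against the diphoton give a large positive value.
    pt_bal = -(sx * diphoton_pt[0] + sy * diphoton_pt[1]) / gg_mag
    # Scalar balance: asymmetry between |sum of track pT| and the diphoton pT.
    trk_mag = math.hypot(sx, sy)
    pt_asym = (trk_mag - gg_mag) / (trk_mag + gg_mag)
    return sum_pt2, pt_bal, pt_asym


def conversion_pull(z_conv, sigma_z_conv, z_vertex):
    """Pull between the conversion-based z estimate and a reconstructed vertex position."""
    return abs(z_conv - z_vertex) / sigma_z_conv


def choose_vertex(vertices, score):
    """Hypothetical helper: pick the vertex with the highest score (stand-in for the BDT)."""
    return max(vertices, key=score)


# Two tracks recoiling exactly against a 50 GeV diphoton along x.
print(vertex_variables([(-30.0, 0.0), (-20.0, 0.0)], (50.0, 0.0)))
```

For a vertex whose tracks balance the diphoton exactly, $p_T^{\text{bal}}$ equals the diphoton $p_T$ and $p_T^{\text{asym}}$ is zero, which is the signature the vertex BDT learns to favour.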

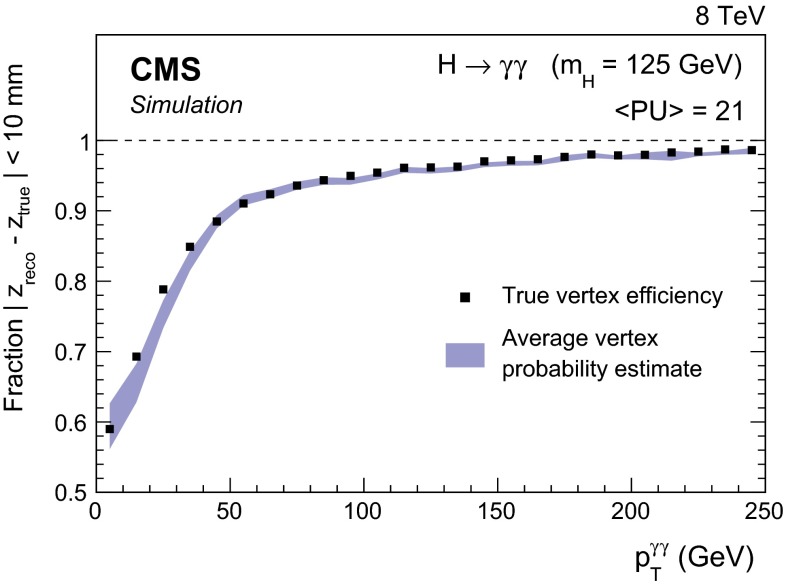

The vertex finding efficiency, defined as the fraction of events in which the chosen vertex is within 10 mm of the true vertex location, has been measured using $Z \to \mu\mu$ events. The performance of the algorithm is evaluated after the vertices are re-reconstructed with the muon tracks removed, so that the event mimics a diphoton event. The use of tracks from a converted photon to locate the vertex is validated separately, in events containing a converted photon. In both cases the ratio of the efficiency measured in data to that measured in MC simulation is within 1 % of unity as a function of the number of vertices in the event. As a function of the Z-boson $p_T$, the deviation of the ratio from unity increases to a few percent in part of the $p_T$ range. The measured ratio as a function of the Z-boson $p_T$ is used to correct the vertex finding efficiency in simulated Higgs boson signal events. The vertex finding efficiency for a Higgs boson of mass 125 GeV, integrated over its $p_T$ spectrum, is computed to be 85.4 (79.6) % in the 7 (8) TeV dataset. Figure 4 shows the efficiency with which a diphoton system is assigned to a vertex reconstructed within 10 mm of the true diphoton vertex in simulated Higgs boson events in the 8 TeV dataset, as a function of the transverse momentum of the diphoton system.
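A minimal sketch of the two quantities in this paragraph: the efficiency as the fraction of events with the chosen vertex within 10 mm (1 cm) of the true one, and an efficiency integrated over the signal $p_T$ spectrum after a bin-by-bin data/MC correction. The binning, the bin-by-bin multiplicative form of the correction, and the numbers in the example are assumptions for illustration, not the analysis inputs.

```python
def vertex_efficiency(chosen_z, true_z, window_cm=1.0):
    """Fraction of events whose chosen vertex lies within window_cm (10 mm) of the true vertex."""
    if len(chosen_z) != len(true_z) or not chosen_z:
        raise ValueError("need equal-length, non-empty inputs")
    hits = sum(1 for c, t in zip(chosen_z, true_z) if abs(c - t) < window_cm)
    return hits / len(chosen_z)


def corrected_integrated_efficiency(eff_mc, data_mc_ratio, pt_fraction):
    """Integrate a per-pT-bin MC efficiency, scaled bin by bin by a data/MC ratio,
    over the signal pT spectrum (pt_fraction = relative signal yield in each bin)."""
    total = sum(pt_fraction)
    return sum(e * r * f for e, r, f in zip(eff_mc, data_mc_ratio, pt_fraction)) / total


# Made-up inputs: two pT bins (low, high) with hypothetical efficiencies and ratios.
print(corrected_integrated_efficiency([0.70, 0.95], [0.97, 1.00], [0.6, 0.4]))
```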

Fig. 4.

Fraction of diphoton vertices (solid points) assigned, by the vertex assignment BDT, to a reconstructed vertex within 10 mm of their true location in simulated Higgs boson events, as a function of $p_T^{\gamma\gamma}$. Also shown is a band whose centre is the mean prediction, from the vertex probability BDT (described in Sect. 5.2), of the probability of correctly locating the vertex. The mean is calculated in $p_T^{\gamma\gamma}$ bins, and the width of the band represents the event-to-event uncertainty in the estimates

Per-event vertex probability

A second vertex-related multivariate discriminant has been designed to estimate, event by event, the probability that the assigned vertex is within 10 mm of the diphoton interaction point. Combined with the event-by-event estimate of the energy resolution of each photon, this probability is used to estimate the diphoton mass resolution for each individual event, and this estimate is used in the event classification, as described in Sect. 6. The inputs of the vertex probability BDT are:

- the values of the vertex identification BDT output for the three most likely vertices in the event,
- the total number of reconstructed vertices in the event,
- the transverse momentum of the diphoton system, $p_T^{\gamma\gamma}$,
- the distances between the chosen vertex and the second- and third-best vertices,
- the number of photons with an associated conversion track or tracks.

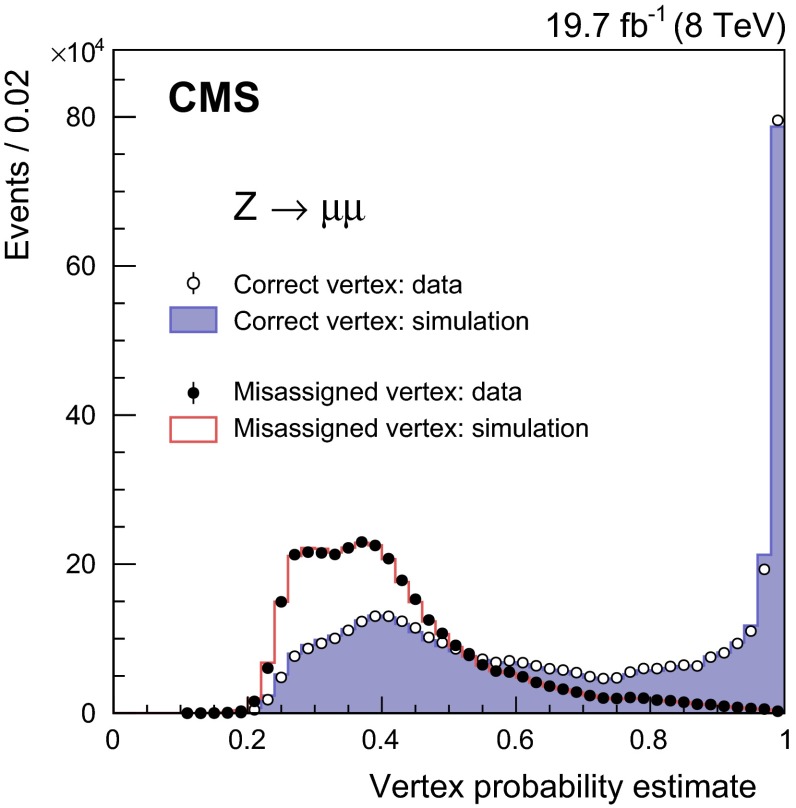

The vertex probability BDT is tested with simulated signal events, as shown in Fig. 4, and its performance in data is tested using $Z \to \mu\mu$ events. For events in which conversion tracks are present, the vertex probability BDT is validated using events in which one or more conversion tracks are reconstructed. The probability of identifying a vertex close enough to the true one (the vertex probability) depends linearly on the vertex probability BDT score; the parameters of this relationship are obtained from a fit to a sample of simulated signal events. Figure 5 shows the distribution of the vertex probability estimate, obtained from the BDT score, in $Z \to \mu\mu$ events. The charged-particle tracks belonging to the muon pair are used to identify the vertex, and are then removed from the event before the vertices are re-reconstructed and passed to the vertex identification and vertex probability BDTs. The $p_T$ of the dimuon pair is used in the BDT calculation in place of $p_T^{\gamma\gamma}$. The vertex identified by the muons is taken to be the true vertex: if the vertex assignment BDT chooses that vertex, the assignment is counted as right; otherwise it is counted as wrong. The vertex probability estimates in data (points) are compared with MC simulation (histograms), separately for events in which the vertex assignment BDT assigns the right vertex and for those in which it assigns a wrong vertex.
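The linear mapping from BDT score to vertex probability can be sketched as: bin simulated signal events in score, measure the fraction with the right vertex in each bin, and fit a straight line. This is a minimal sketch under stated assumptions: the unweighted least-squares fit on bin fractions and the clipping of the result to $[0, 1]$ are illustrative choices, not the documented CMS fitting procedure.

```python
def binned_fraction(scores, correct, edges):
    """Fraction of events with the right vertex in each score bin [lo, hi); empty bins skipped."""
    centres, fractions = [], []
    for lo, hi in zip(edges[:-1], edges[1:]):
        sel = [c for s, c in zip(scores, correct) if lo <= s < hi]
        if sel:
            centres.append(0.5 * (lo + hi))
            fractions.append(sum(sel) / len(sel))
    return centres, fractions


def fit_line(xs, ys):
    """Unweighted least-squares straight line y = slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def vertex_probability(score, slope, intercept):
    """Map a vertex-probability BDT score to a probability, clipped to [0, 1]."""
    return min(1.0, max(0.0, slope * score + intercept))


centres, fractions = binned_fraction([-0.5, -0.4, 0.3, 0.6], [0, 1, 1, 1], [-1.0, 0.0, 1.0])
slope, intercept = fit_line(centres, fractions)
print(slope, intercept, vertex_probability(0.0, slope, intercept))
```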

Fig. 5.

Distribution of the vertex probability estimate in $Z \to \mu\mu$ events. The vertex probability estimates in 8 TeV data (points) are compared with the estimates in MC simulation (histograms). The comparison is made separately for events in which the vertex is assigned to the same vertex (open circles and filled histogram), or to a different vertex (filled circles and outlined histogram), as that identified by the muons

Event classification

The analysis uses events with two photon candidates satisfying the preselection requirements (described in Sect. 4.3), with an invariant mass, $m_{\gamma\gamma}$, within the analysis mass range, and with the transverse momenta of the leading and subleading photons above thresholds that scale with $m_{\gamma\gamma}$. In the rare case of multiple diphoton candidates, only the highest-ranked candidate is selected. Scaling the thresholds with $m_{\gamma\gamma}$ prevents the distortion of the low end of the $m_{\gamma\gamma}$ spectrum that a fixed threshold would cause. In addition, the photon identification BDT scores of both photons are required to exceed a minimum value (see Fig. 2). This requirement retains more than 99 % of simulated signal events satisfying the other analysis selection requirements, while removing about 24 % of events in data. The requirements listed above are referred to as the "full diphoton preselection".
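The full diphoton preselection combines a mass window, photon $p_T$ thresholds expressed as fractions of $m_{\gamma\gamma}$, and a minimum photon identification score. The sketch below shows that logic only; every numerical cut value in it is a hypothetical placeholder, not an analysis value. Expressing the thresholds as $p_T/m_{\gamma\gamma}$ means that the same fraction of the decay kinematics is accepted at every mass, which is why no artificial turn-on appears at the low end of the spectrum.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DiphotonCuts:
    """Hypothetical placeholder cut values for illustration; NOT the analysis values."""
    m_lo: float          # lower edge of the m_gg window (GeV)
    m_hi: float          # upper edge of the m_gg window (GeV)
    lead_frac: float     # leading photon: pT / m_gg must exceed this
    sublead_frac: float  # subleading photon: pT / m_gg must exceed this
    id_min: float        # both photon-ID BDT scores must exceed this


def passes_full_preselection(pt1, pt2, id1, id2, m_gg, cuts):
    """Mass window, m_gg-scaled pT thresholds, and photon-ID requirement on both photons."""
    lead, sublead = max(pt1, pt2), min(pt1, pt2)
    return (cuts.m_lo < m_gg < cuts.m_hi
            and lead / m_gg > cuts.lead_frac
            and sublead / m_gg > cuts.sublead_frac
            and min(id1, id2) > cuts.id_min)


placeholder = DiphotonCuts(m_lo=110.0, m_hi=170.0, lead_frac=0.3, sublead_frac=0.2, id_min=-0.5)
print(passes_full_preselection(60.0, 40.0, 0.1, 0.0, 125.0, placeholder))
```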

To achieve the best analysis performance, the events are separated into classes based on both their mass resolution and their relative probability to be due to signal rather than background. The first step in the classification of the events involves the extraction of those tagged by the presence of objects in the final state, in addition to the photon pair, that give the event a signature characteristic of one of the production processes. The remaining untagged events, which constitute the majority (99 %) of the events used in the analysis, are classified according to a variable constructed using multivariate techniques.

The classification procedure, described in detail below, results in 11 event classes for the 7 TeV dataset and 14 for the 8 TeV dataset. Table 3 lists the event classes, the expected number of SM Higgs boson events and the estimated background in each, the composition of the expected SM Higgs boson signal in terms of the production processes, and the diphoton mass resolution expected for the signal in each class. To ensure that the classes are mutually exclusive, events are tested against the class selection requirements in a fixed order, as described in Sect. 6.4.
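Mutual exclusivity through a fixed testing order can be sketched as a first-match rule: each event goes to the first class whose selection it passes. The ordering and the selection lambdas below are hypothetical stand-ins; the actual order is the one described in Sect. 6.4, and the real selections are those given in the following subsections.

```python
from typing import Callable, Optional, Sequence, Tuple

# (class name, selection function)
Selector = Tuple[str, Callable[[dict], bool]]


def assign_class(event: dict, ordered_selectors: Sequence[Selector]) -> Optional[str]:
    """Return the first class whose selection the event passes, or None if it fails all.

    Testing in a fixed order guarantees that each event lands in at most one class.
    """
    for name, selects in ordered_selectors:
        if selects(event):
            return name
    return None


# Hypothetical priority order and cuts, for illustration only.
EXAMPLE_ORDER: Sequence[Selector] = [
    ("ttH lepton", lambda e: e.get("n_bjets", 0) >= 1 and e.get("n_leptons", 0) >= 1),
    ("VBF dijet", lambda e: e.get("combined_bdt", -1.0) > 0.5),
    ("untagged", lambda e: e.get("diphoton_bdt", -1.0) > 0.0),
]

print(assign_class({"combined_bdt": 0.9, "diphoton_bdt": 0.8}, EXAMPLE_ORDER))
```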

Table 3.

Expected number of SM Higgs boson events ($m_H = 125$ GeV) and estimated background ("Bkg.") for all event classes of the 7 and 8 TeV datasets. The composition of the SM Higgs boson signal in terms of the production processes and its mass resolution are also given. The number corresponding to the production process making the largest contribution to each event class is highlighted in boldface. Numbers are omitted for production processes representing less than 0.05 % of the total signal. The two variables used to characterize the resolution are defined in the text

| Dataset | Event class | Expected signal, total ($m_H = 125$ GeV) | ggH (%) | VBF (%) | WH (%) | ZH (%) | ttH (%) | $\sigma_{\text{eff}}$ (GeV) | $\sigma_{\text{HM}}$ (GeV) | Bkg. |
|---|---|---|---|---|---|---|---|---|---|---|
| 7 TeV, 5.1 fb$^{-1}$ | Untagged 0 | 5.8 | **79.8** | 9.9 | 6.0 | 3.5 | 0.8 | 1.11 | 0.98 | 11.0 |
| | Untagged 1 | 22.7 | **91.9** | 4.2 | 2.4 | 1.3 | 0.2 | 1.27 | 1.09 | 69.5 |
| | Untagged 2 | 27.1 | **91.9** | 4.1 | 2.4 | 1.4 | 0.2 | 1.78 | 1.40 | 135 |
| | Untagged 3 | 34.1 | **92.1** | 4.0 | 2.4 | 1.3 | 0.2 | 2.36 | 2.01 | 312 |
| | VBF dijet 0 | 1.6 | 19.3 | **80.1** | 0.3 | 0.2 | 0.1 | 1.41 | 1.17 | 0.5 |
| | VBF dijet 1 | 3.0 | 38.1 | **59.5** | 1.2 | 0.7 | 0.4 | 1.65 | 1.32 | 3.5 |
| | VH tight ℓ | 0.3 | – | – | **77.2** | 20.6 | 2.2 | 1.61 | 1.31 | 0.1 |
| | VH loose ℓ | 0.2 | 3.6 | 1.1 | **79.1** | 15.2 | 1.0 | 1.63 | 1.32 | 0.2 |
| | VH $E_T^{\text{miss}}$ | 0.3 | 4.5 | 1.1 | 41.5 | **44.6** | 8.2 | 1.60 | 1.14 | 0.2 |
| | VH dijet | 0.4 | 27.1 | 2.8 | **43.7** | 24.3 | 2.1 | 1.54 | 1.24 | 0.5 |
| | ttH tags | 0.2 | 3.1 | 1.1 | 2.2 | 1.3 | **92.3** | 1.40 | 1.13 | 0.2 |
| 8 TeV, 19.7 fb$^{-1}$ | Untagged 0 | 6.0 | **75.7** | 11.9 | 6.9 | 3.6 | 1.9 | 1.05 | 0.79 | 4.7 |
| | Untagged 1 | 50.8 | **85.2** | 7.9 | 4.0 | 2.4 | 0.6 | 1.19 | 1.00 | 120. |
| | Untagged 2 | 117. | **91.1** | 4.7 | 2.5 | 1.4 | 0.3 | 1.46 | 1.15 | 418 |
| | Untagged 3 | 153. | **91.6** | 4.4 | 2.4 | 1.4 | 0.3 | 2.04 | 1.56 | 870 |
| | Untagged 4 | 121. | **93.1** | 3.6 | 2.0 | 1.1 | 0.2 | 2.62 | 2.14 | 1,400 |
| | VBF dijet 0 | 4.5 | 17.8 | **81.8** | 0.2 | 0.1 | 0.1 | 1.30 | 0.94 | 0.8 |
| | VBF dijet 1 | 5.6 | 28.5 | **70.5** | 0.6 | 0.2 | 0.2 | 1.43 | 1.07 | 2.7 |
| | VBF dijet 2 | 13.7 | 43.8 | **53.2** | 1.4 | 0.8 | 0.8 | 1.59 | 1.24 | 22.1 |
| | VH tight ℓ | 1.4 | 0.2 | 0.2 | **76.9** | 19.0 | 3.7 | 1.63 | 1.24 | 0.4 |
| | VH loose ℓ | 0.9 | 2.6 | 1.1 | **77.9** | 16.8 | 1.5 | 1.60 | 1.16 | 1.2 |
| | VH $E_T^{\text{miss}}$ | 1.8 | 16.3 | 2.7 | 34.4 | **35.4** | 11.1 | 1.68 | 1.17 | 1.3 |
| | VH dijet | 1.6 | 30.3 | 3.1 | **40.6** | 23.4 | 2.6 | 1.31 | 1.06 | 1.0 |
| | ttH lepton | 0.5 | – | – | 1.6 | 1.6 | **96.8** | 1.34 | 1.03 | 0.2 |
| | ttH multijet | 0.6 | 4.1 | 0.9 | 0.8 | 0.9 | **93.3** | 1.34 | 1.03 | 0.6 |

Multivariate event classifier

A multivariate event classifier, the diphoton BDT, is constructed to satisfy the following criteria:

- The diphoton BDT should assign a high score to events that have
  - good diphoton mass resolution,
  - high probability of being signal rather than background.
- The classifier should not select events according to the mass of the diphoton system relative to the particular mass of the Higgs boson signal used for training.

The classifier incorporates a per-event estimate of the diphoton mass resolution, the identification BDT scores of both photons, and the kinematic properties of the diphoton system, except for $m_{\gamma\gamma}$. To avoid any dependence on $m_{\gamma\gamma}$, the photon transverse momenta and the mass resolutions are divided by $m_{\gamma\gamma}$.

The complete list of variables used in the BDT is the same as in previous versions of the analysis [28]: the scaled photon transverse momenta ($p_T^{\gamma 1}/m_{\gamma\gamma}$ and $p_T^{\gamma 2}/m_{\gamma\gamma}$), the pseudorapidities of both photons, the photon identification BDT scores of both photons, the cosine of the angle between the two photons in the transverse plane, the expected relative diphoton mass resolutions under the hypotheses of selecting the correct or a wrong interaction vertex, and the probability of selecting the correct vertex.
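Since $m_{\gamma\gamma} \propto \sqrt{E_1 E_2}$, each photon's relative energy uncertainty enters the relative mass resolution with half its size. The sketch below shows this error propagation for the correct-vertex hypothesis. The optional `angular_term`, added in quadrature to represent the opening-angle uncertainty under a wrong-vertex hypothesis, is a hypothetical simplification; the exact form used in the analysis is not given here.

```python
import math


def relative_mass_resolution(sigma_e1, e1, sigma_e2, e2, angular_term=0.0):
    """sigma_m / m for m^2 = 2 E1 E2 (1 - cos(alpha)).

    Each energy enters m with power 1/2, so its relative error contributes half its size.
    `angular_term` is a hypothetical extra relative contribution from the opening-angle
    uncertainty (for example under a wrong-vertex hypothesis), added in quadrature.
    """
    energy_term = 0.5 * math.hypot(sigma_e1 / e1, sigma_e2 / e2)
    return math.hypot(energy_term, angular_term)


right_vertex = relative_mass_resolution(1.0, 100.0, 1.0, 100.0)
wrong_vertex = relative_mass_resolution(1.0, 100.0, 1.0, 100.0, angular_term=0.01)
print(right_vertex, wrong_vertex)
```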

The diphoton mass resolution depends on several factors: the location of the associated energy deposits in the calorimeter; whether one or both photons converted in the detector volume in front of the calorimeter; and the probability that the true diphoton vertex has been identified. Events in which one of the photons has a low identification BDT score are more likely to be due to background processes. The Higgs signal-to-background ratio, $S/B$, varies with the kinematic properties of the diphoton system mainly through the pseudorapidities of the photons (highest when both are in the barrel) and through the diphoton transverse momentum (highest at large values). The BDT is trained using a simulated signal sample with a mass, $m_H$, near the centre of the mass range of the analysis. The relative abundance of events from the different production processes in the sample is set according to the expectations for a SM Higgs boson of that mass.

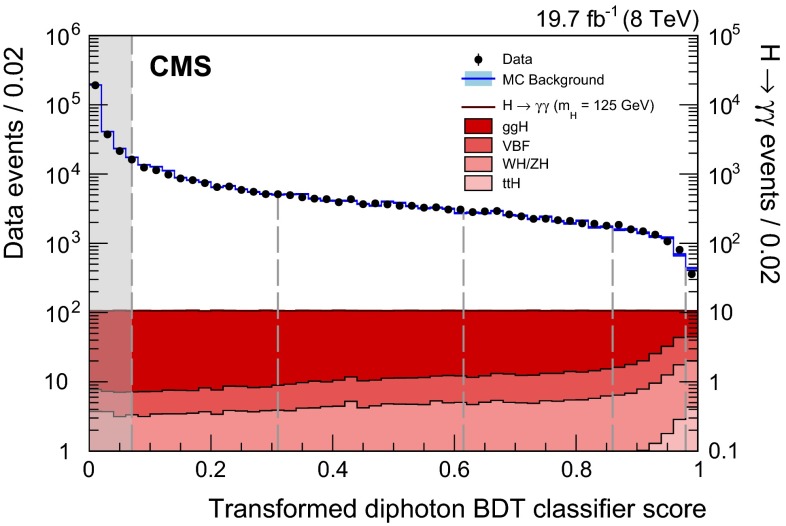

The multivariate classifier assigns a score to each event. It has been verified that selecting simulated background events with a high diphoton BDT score does not produce a peak in the diphoton invariant mass distribution of the selected events. Figure 6 shows, for the 8 TeV dataset, how the BDT performs on simulated SM signal events and on data satisfying the full diphoton preselection. The classifier score has been transformed such that the sum of signal events from all processes has a uniform (flat) distribution; this transformation aids visualization of the BDT performance. The outlined histogram, following the data points, is for simulated background events. The vertical dashed lines indicate the boundaries of the untagged event classes, whose determination is described in Sect. 6.3. Since the data are completely dominated by background events, it can be seen that the signal-to-background ratio increases substantially with the classifier score, and that the VBF, VH, and ttH processes tend to achieve high scores, owing to their significantly harder $p_T$ spectrum [66, 67].
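A transformation that makes the summed signal flat is the (weighted) cumulative distribution function of the signal scores: mapping each score to the fraction of signal with a lower or equal score gives a uniform distribution for the signal itself. The sketch below builds such a transform from an empirical signal sample; the per-event weights, standing in for the relative yields of the production processes, are an assumption of the sketch. Because the map is monotonic, it preserves the ordering of events, so class boundaries can be expressed on either scale.

```python
from bisect import bisect_right


def make_flattening(signal_scores, signal_weights=None):
    """Return t(x) = weighted fraction of signal with score <= x (the signal CDF).

    Applied to the signal sample itself, t(score) is uniform on (0, 1]; the weights let
    production processes enter in proportion to their expected yields.
    """
    if signal_weights is None:
        signal_weights = [1.0] * len(signal_scores)
    pairs = sorted(zip(signal_scores, signal_weights))
    xs = [s for s, _ in pairs]
    cumulative, running = [], 0.0
    for _, w in pairs:
        running += w
        cumulative.append(running)
    total = running

    def transform(score):
        i = bisect_right(xs, score)
        return cumulative[i - 1] / total if i > 0 else 0.0

    return transform


t = make_flattening([0.4, 0.1, 0.3, 0.2])
print([t(x) for x in (0.05, 0.2, 0.25, 0.4)])
```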

Fig. 6.

Transformed diphoton BDT classifier score for events satisfying the full diphoton preselection in the 8 TeV data (points with error bars, left axis), and for simulated signal events from the four production processes (solid filled histograms, right axis). The outlined histogram, following the data points, is for simulated background events. The vertical dashed lines show the boundaries of the untagged event classes, with the leftmost dashed line representing the score below which events are discarded and not used in the final analysis (described in Sect. 6.3)

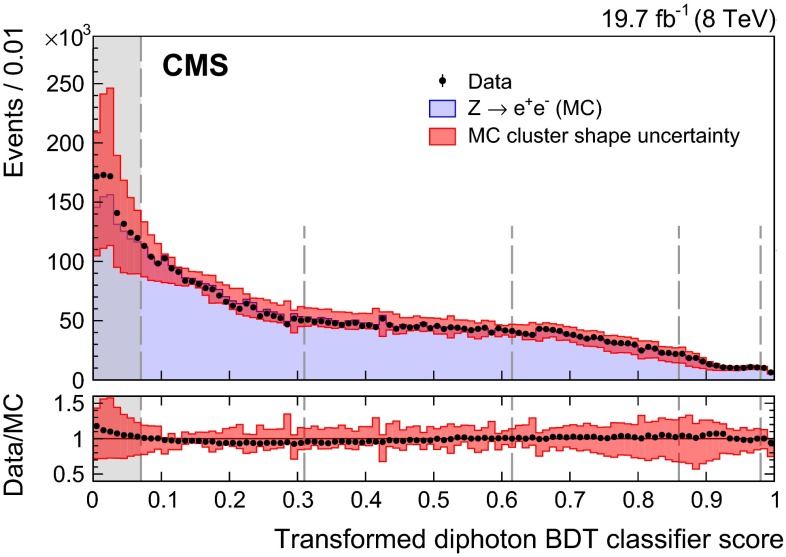

Figure 7 compares the transformed classifier score in data and in MC simulated $Z \to ee$ events, in both of which the electrons are reconstructed as photons. The electron showers satisfy the full diphoton preselection requirements with the electron veto condition inverted. The classifier score has been subjected to the same transformation as in Fig. 6. The score for $Z \to ee$ events peaks at low values, whereas Higgs boson signal events have a flat distribution, reflecting the differences between the two types of event; nevertheless, enough $Z \to ee$ events are present even at high classifier scores to test the agreement between data and MC simulation adequately there. The good agreement between MC simulation and data for $Z \to ee$ events is an important check that the simulation of the Higgs boson signal accurately models the BDT input variables and their correlations. The simulated events have been weighted so that the Z-boson $p_T$ distribution matches that observed in data. The band indicates the systematic uncertainty obtained by propagating to the diphoton BDT event classifier both the uncertainty in the photon identification BDT score (a shift of 0.01 in the score) and the uncertainty in the per-photon estimate of the energy resolution (a scaling of its value by 10 %). Since the magnitudes of these two uncertainties were chosen to cover the discrepancies between data and simulation in the tails of the distributions of the two variables, the resulting uncertainty in the diphoton BDT event classifier appears to be slightly overestimated.

Fig. 7.

Transformed diphoton BDT classifier score for $Z \to ee$ events in 8 TeV data and in MC simulation, in which the electrons are reconstructed as photons. The distribution of simulated events is represented by a histogram, and the data by points with error bars. For each bin, the ratio of the number of events in data to the number of simulated events is shown in the lower plot. The bands in the two plots indicate the systematic uncertainty related to the MC cluster shape uncertainty (see text). The vertical dashed lines show the boundaries of the untagged event classes, with the leftmost dashed line representing the score below which events are discarded and not used in the final analysis (described in Sect. 6.3)

Events tagged by exclusive signatures

Selections enriched in Higgs boson production mechanisms other than ggH can be made by requiring, in addition to the photon pair, the presence of other objects that provide signatures of the production mechanism. Higgs bosons produced by VBF are accompanied by a pair of jets separated by a large rapidity gap. Those resulting from the VH production mechanism may be accompanied by one or more charged leptons, large missing transverse energy ($E_T^{\text{miss}}$), or jets from the decay of the W or Z boson. Those resulting from ttH production are accompanied by b quarks from the decay of the top quarks, and may be accompanied by charged leptons or additional jets.

Tagging dijet events, targeting VBF production, significantly increases the overall sensitivity of the analysis and the precision of the measured signal strength, as well as the sensitivity to deviations of the Higgs boson couplings from their expected values. The tagging aimed at the VH process increases the sensitivity to deviations of the couplings, and the ttH tagging further probes the compatibility of the observed signal with a SM Higgs boson.

The $p_T$ spectrum of Higgs bosons produced by the VBF, VH, and ttH processes is significantly harder than that of Higgs bosons produced by ggH, or of background diphotons, which results in a harder leading-photon $p_T$ spectrum. The tagged-class selections exploit this difference by raising the $p_T$ requirement on the leading photon.

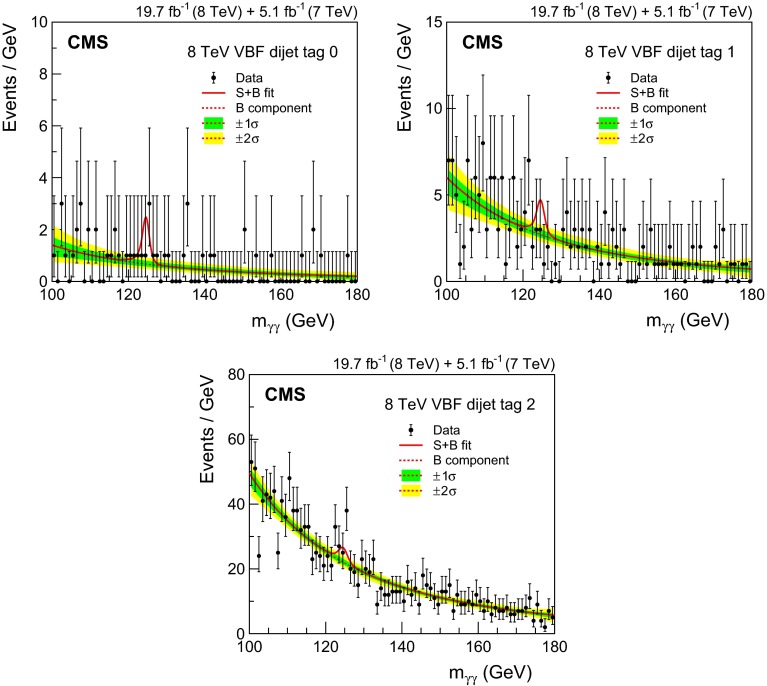

Dijet-tagged event selection and BDT classifiers for VBF production

Vector boson fusion production results in two forward jets, originating from the two scattered quarks. Separating events tagged by the presence of dijets compatible with the VBF process into dedicated event classes increases not only the separation between signal and background but also the separation between signal production processes: in the purest VBF dijet-tagged class, only 18 % of the expected signal comes from ggH production. A loose dijet preselection is defined, and a dijet BDT is trained to separate VBF signal from diphoton background using samples of MC events satisfying this preselection. Signal events from ggH satisfying the dijet preselection are included as background in the training. Details of the dijet preselection and the BDT input variables are given below. A further, "combined", BDT is then trained with only three input variables: the score of the dijet BDT, the score of the diphoton BDT, and the transverse momentum of the diphoton system divided by its mass, $p_T^{\gamma\gamma}/m_{\gamma\gamma}$. Events for the VBF dijet-tagged classes are selected from those satisfying the loose dijet preselection by placing a minimum requirement on their combined BDT score, and the selected events are then classified using that score.

The dijet preselection is applied to diphoton events satisfying the full diphoton preselection. It requires the leading (in $p_T$) and subleading jets in the event, within a restricted pseudorapidity range, to exceed their respective $p_T$ thresholds (20 GeV for the subleading jet), and the pair to have an invariant mass above a minimum value. The pseudorapidity requirement is more restrictive than the full detector acceptance, to avoid using jets for which the energy corrections are large and less reliable, and is found to decrease the signal acceptance by 2 %. In addition, the $p_T$ threshold on the leading photon is raised for VBF dijet-tagged events.

The jet energy measurement is calibrated to correct for detector effects using dijet and other control samples [39]. The reconstructed jets also include energy from pileup interactions and from the underlying event. This energy is subtracted using a transverse momentum density calculated with the jet areas technique [63, 68, 69], evaluated event by event. Particles produced in pileup interactions may be clustered into jets of relatively large $p_T$, referred to as pileup jets. These pileup jets are largely removed using selection criteria based on the width of the jet or on the compatibility of the tracks in a jet with the primary vertex [70]. Finally, jets within a small angular distance $\Delta R$ of either photon are rejected, to exclude the possibility that a photon has been included in the reconstruction of the jet.

The variables used in the dijet BDT are: the scaled transverse momenta of the photons, $p_T^{\gamma 1}/m_{\gamma\gamma}$ and $p_T^{\gamma 2}/m_{\gamma\gamma}$; the transverse momenta of the leading and subleading jets, $p_T^{j1}$ and $p_T^{j2}$; the dijet invariant mass, $m_{jj}$; the difference between the pseudorapidities of the jets, $\Delta\eta_{jj}$; the difference between the average pseudorapidity of the two jets and the pseudorapidity of the diphoton system [71]; and the absolute difference in azimuthal angle between the diphoton system and the dijet system, $\Delta\phi_{jj,\gamma\gamma}$. Because of the large theoretical uncertainty in the cross section arising from higher-order contributions to the ggH process accompanied by two jets in the region where $\Delta\phi_{jj,\gamma\gamma}$ is very close to $\pi$ [54, 72], the value of $\Delta\phi_{jj,\gamma\gamma}$ is capped at a maximum value; events above the cap are treated as if they had the capped value.
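The angular dijet inputs can be sketched as below: the jet pseudorapidity separation, the offset of the diphoton pseudorapidity from the dijet average (taken in the order stated in the text, jet average minus diphoton), and the azimuthal separation folded into $[0, \pi]$ and then capped. The cap value is not given in this section, so `dphi_cap` is a hypothetical parameter.

```python
import math


def delta_phi(phi1, phi2):
    """Absolute azimuthal separation folded into [0, pi]."""
    d = abs(phi1 - phi2) % (2.0 * math.pi)
    return 2.0 * math.pi - d if d > math.pi else d


def dijet_angular_variables(eta_j1, eta_j2, eta_gg, phi_jj, phi_gg, dphi_cap):
    """Return (delta_eta_jj, jet-average eta minus diphoton eta, capped delta_phi)."""
    delta_eta_jj = abs(eta_j1 - eta_j2)
    # Centrality-type variable: average jet pseudorapidity relative to the diphoton.
    eta_offset = 0.5 * (eta_j1 + eta_j2) - eta_gg
    # Values above the (hypothetical) cap are treated as if they were exactly the cap.
    dphi = min(delta_phi(phi_jj, phi_gg), dphi_cap)
    return delta_eta_jj, eta_offset, dphi


print(dijet_angular_variables(3.0, -2.0, 0.5, 0.0, math.pi, dphi_cap=2.9))
```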

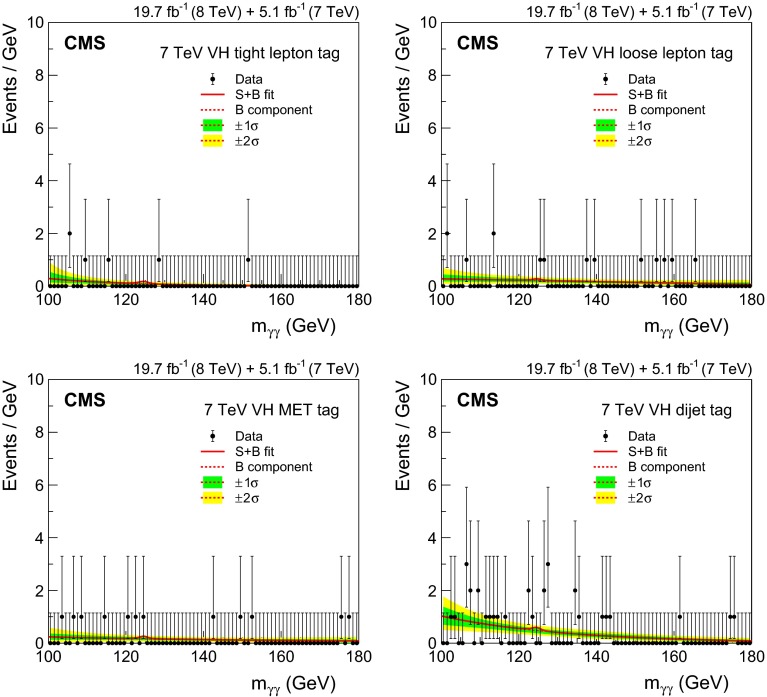

Lepton-, dijet-, and -tagged event classes for VH production

The selection requirements for the classes targeting events produced by the VH process have been obtained by minimizing the expected uncertainty in the measurement of the signal strength of the process, using data in control regions to estimate the background and MC signal samples to estimate the signal efficiency. Four classes are defined: events with a muon or an electron are divided into two classes, according to whether or not there is significant $E_T^{\text{miss}}$ or another lepton in the event; a third class selects events with two or more jets; and the fourth class consists of events with large $E_T^{\text{miss}}$. The leading photon in the events selected for the lepton classes and for the $E_T^{\text{miss}}$-tagged class is required to satisfy a raised $p_T$ threshold; for the dijet-tagged VH class the requirement is tighter.

Muons are reconstructed with the particle-flow algorithm and are required to lie within a fixed pseudorapidity range. A tight selection is applied, based on the quality of the track and the number of hits in the tracker and muon spectrometer. A strict match between the tracker and muon spectrometer segments is also required, to reduce contamination from muons produced in decays of hadrons and from beam halo interactions. Finally, a loose particle-flow isolation requirement is applied.

Electrons are identified as clusters of energy deposited in the ECAL matched to tracks. Electron candidates are required to have an ECAL supercluster within the same fiducial region as for photons. Electron identification is based on a multivariate technique [14]. The electron track has to fulfil requirements on the transverse and longitudinal impact parameter with respect to the electron vertex and cannot have more than one missing hit in the innermost layers of the tracker. Electrons from conversions are excluded as described in Ref. [73] and a loose particle-flow isolation requirement is applied.

The tightly selected lepton class ("VH tight ℓ") is characterised by the full signature of a leptonically decaying W or Z boson: in addition to the electron or muon, it requires either $E_T^{\text{miss}}$ or another lepton of the same flavour and opposite charge. For the lepton plus $E_T^{\text{miss}}$ signature, the lepton $p_T$ is required to be greater than 20 GeV. For the dilepton signature, the lepton $p_T$ requirement is relaxed, but the invariant mass of the lepton pair is required to be between 70 and 110 GeV. For the loose lepton class ("VH loose ℓ") only a single electron or muon is required, but additional requirements are made to reduce background from leptonic decays of Z bosons with initial- or final-state radiation: muons and electrons are required to be separated from the closest photon by a minimum angular distance $\Delta R$, and the invariant mass of electron-photon pairs is required to be more than 10 GeV away from the Z-boson mass. In addition, a conversion veto is applied to the electrons to reduce the number of electrons originating from photon conversions.

Events selected for the dijet-tagged VH class are required to have a pair of jets satisfying minimum $p_T$ and pseudorapidity requirements, with a dijet invariant mass within a fixed window; additional jets may also be present. The $p_T$ of the diphoton system is also required to exceed a threshold. The selection further exploits the expected angular distribution of the diphoton pair with respect to the dijet pair from the vector boson decay. The angle $\theta^*$ that the diphoton system makes, in the diphoton-dijet centre-of-mass frame, with the direction of motion of the diphoton-dijet system in the lab frame is computed. The distribution of $\cos\theta^*$ for signal events from VH production is rather flat, whereas background and signal events from ggH production give distributions strongly peaked at large $|\cos\theta^*|$. Consequently, an upper bound is placed on $|\cos\theta^*|$.
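The angle defined above can be computed by boosting the diphoton four-momentum into the rest frame of the diphoton-dijet system and comparing its direction with the lab-frame direction of motion of that system. The sketch below implements this with a general Lorentz boost; the `(E, px, py, pz)` tuple convention and the function names are assumptions of the sketch, and any consistent energy units work.

```python
import math


def boost_to_rest_frame(p, beta):
    """Boost four-vector p = (E, px, py, pz) into a frame moving with velocity beta (|beta| < 1)."""
    bx, by, bz = beta
    b2 = bx * bx + by * by + bz * bz
    if b2 == 0.0:
        return tuple(p)
    gamma = 1.0 / math.sqrt(1.0 - b2)
    bp = bx * p[1] + by * p[2] + bz * p[3]
    # p' = p + [((gamma - 1) / beta^2)(beta . p) - gamma E] beta
    k = (gamma - 1.0) * bp / b2 - gamma * p[0]
    return (gamma * (p[0] - bp), p[1] + k * bx, p[2] + k * by, p[3] + k * bz)


def cos_theta_star(p_gg, p_jj):
    """cos of the angle between the diphoton momentum in the diphoton+dijet rest frame
    and the lab-frame direction of motion of the diphoton+dijet system."""
    total = [a + b for a, b in zip(p_gg, p_jj)]
    p_mag = math.sqrt(total[1] ** 2 + total[2] ** 2 + total[3] ** 2)
    if p_mag == 0.0:
        raise ValueError("system at rest in the lab: boost axis undefined")
    beta = [total[i] / total[0] for i in (1, 2, 3)]
    axis = [total[i] / p_mag for i in (1, 2, 3)]
    p_star = boost_to_rest_frame(p_gg, beta)[1:]
    return sum(a * b for a, b in zip(p_star, axis)) / math.sqrt(sum(a * a for a in p_star))


# System moving along +z with beta = 0.6; in its rest frame the diphoton moves along +x.
print(cos_theta_star((6.25, 3.0, 0.0, 3.75), (6.25, -3.0, 0.0, 3.75)))
```

In the example, the diphoton is emitted perpendicular to the boost axis in the rest frame, so $\cos\theta^*$ is zero up to rounding.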

For the $E_T^{\text{miss}}$ tag, additional selection criteria are applied to the azimuthal angular separation between the diphoton system and the $\vec{p}_T^{\text{miss}}$ direction, and between the diphoton system and the leading jet in the event. Discrepancies between data and simulated events in the direction and magnitude of the $\vec{p}_T^{\text{miss}}$ vector have been studied in detail and a set of corrections derived, some of which are applied to simulated events and others to data. The corrected $E_T^{\text{miss}}$ is required to exceed a minimum value.

In addition to the requirements described above, a minimum diphoton BDT classifier score is required for entry into the event classes tagging VH production. The requirement is optimized separately for each class: 0.17 for the two lepton-tagged classes, 0.62 for the $E_T^{\text{miss}}$-tagged class, and 0.76 for the VH dijet-tagged class, where the numerical scale is that of the classifier score shown in Figs. 6 and 7.

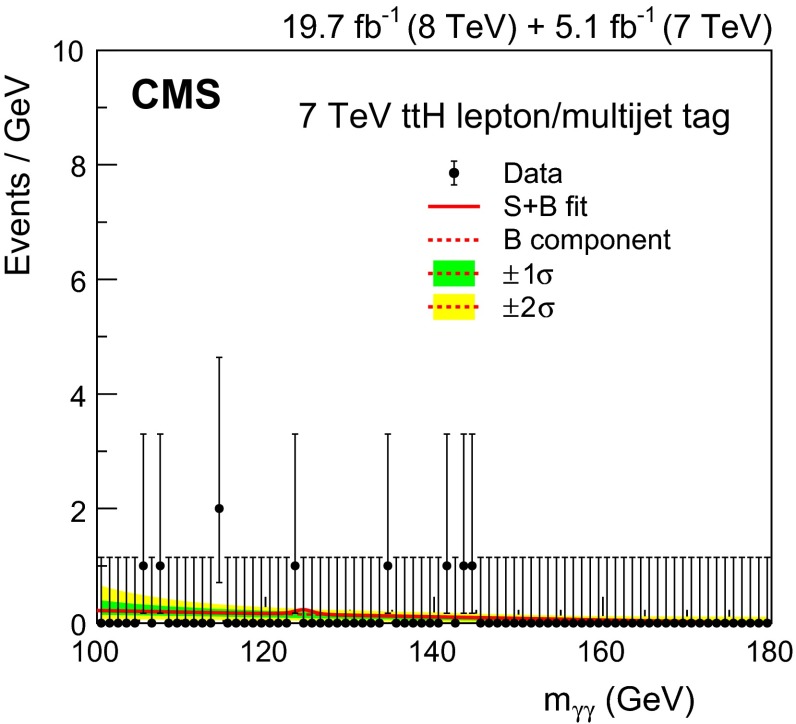

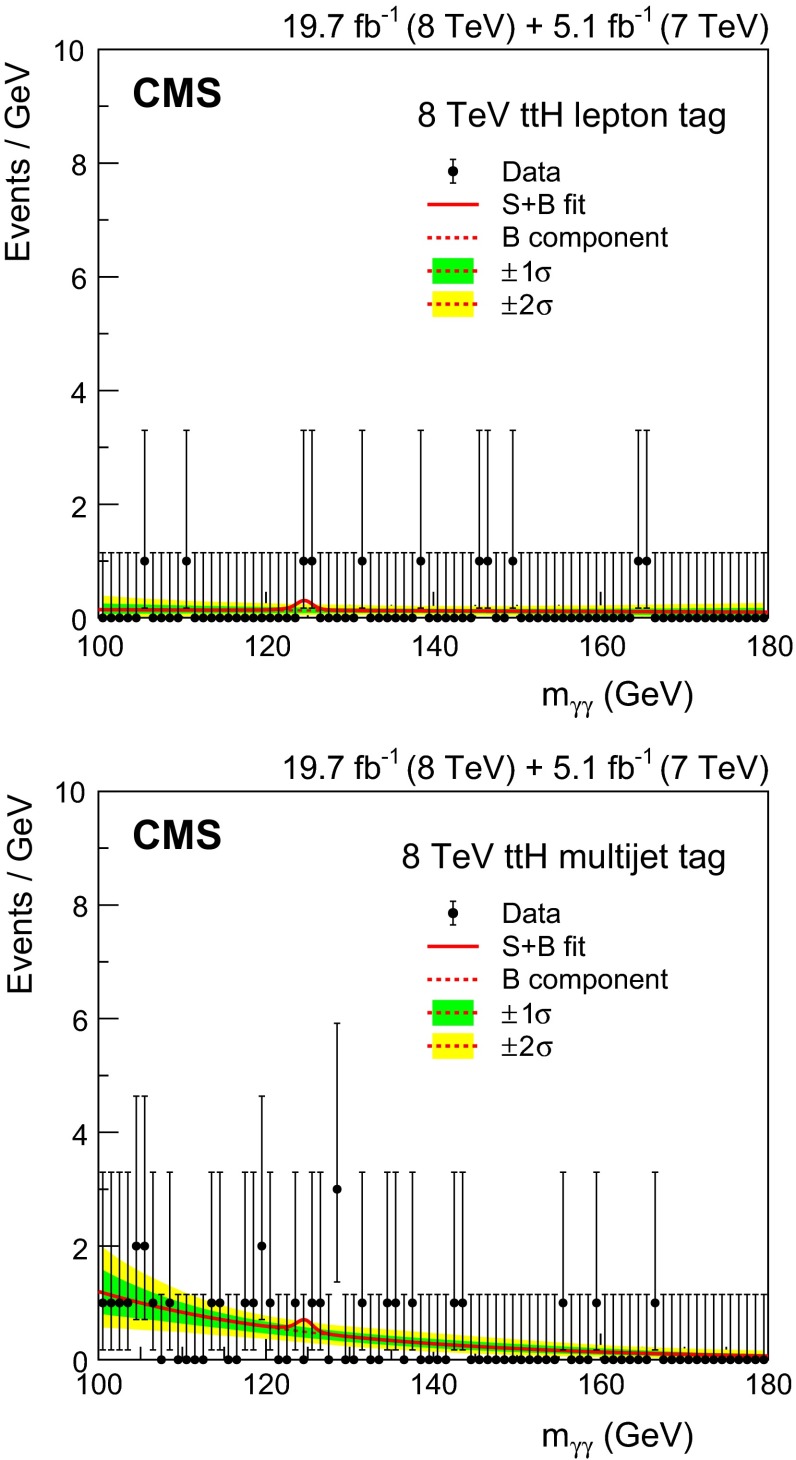

Event classes tagged for ttH production

The production of Higgs bosons in association with top quarks has a small cross section, so the overall cross section times branching fraction for the decay to photons is only 0.3 fb at NLO. Therefore only a handful of events are expected in the full dataset. To maximize signal efficiency we devise event selections that collect both leptonic and hadronic decays of the top quarks, defining a lepton-tagged and a multijet-tagged event class.

As for the VH event classes, the selection requirements for the classes targeting the ttH process have been obtained by minimizing the expected uncertainty in the measurement of the signal strength of the process, using data in control regions to estimate the background and MC signal samples to estimate the signal efficiency. The leading photon is required to satisfy a raised $p_T$ threshold. Jets are required to satisfy a minimum $p_T$ requirement, and both classes require the presence of at least one b-tagged jet. The ttH lepton tag is then defined by requiring at least one more jet in the event and at least one electron or muon, and the ttH multijet tag by requiring at least four more jets in the event and no lepton. Minimum diphoton BDT classifier scores are also required for entry into the two classes tagging ttH: 0.17 for the lepton class and 0.48 for the multijet class, where the numerical scale is that of the classifier score shown in Figs. 6 and 7. For the 7 TeV dataset the events in the two classes are combined after selection to form a single event class.
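The ttH tagging logic can be sketched as a small decision function on object counts. Assumptions: the inputs are counts of jets, b-tagged jets, and leptons that already pass their own $p_T$ requirements (whose values are not given here); "more jets" is read as jets in addition to one b-tagged jet; and the thresholds 0.17 and 0.48 are taken from the text, on the transformed diphoton BDT scale. The leading-photon requirement is omitted.

```python
def tth_class(n_bjets, n_jets, n_leptons, diphoton_bdt):
    """Return 'ttH lepton', 'ttH multijet', or None.

    n_jets counts all selected jets, including the b-tagged one. "More jets" is read
    here as jets beyond one b-tagged jet (an interpretation, not a quoted rule).
    """
    if n_bjets < 1:
        return None
    extra_jets = n_jets - 1
    if n_leptons >= 1 and extra_jets >= 1 and diphoton_bdt >= 0.17:
        return "ttH lepton"
    if n_leptons == 0 and extra_jets >= 4 and diphoton_bdt >= 0.48:
        return "ttH multijet"
    return None


print(tth_class(n_bjets=1, n_jets=5, n_leptons=0, diphoton_bdt=0.5))
```

Because the multijet tag requires no lepton, the two classes are mutually exclusive regardless of the order in which they are tested.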

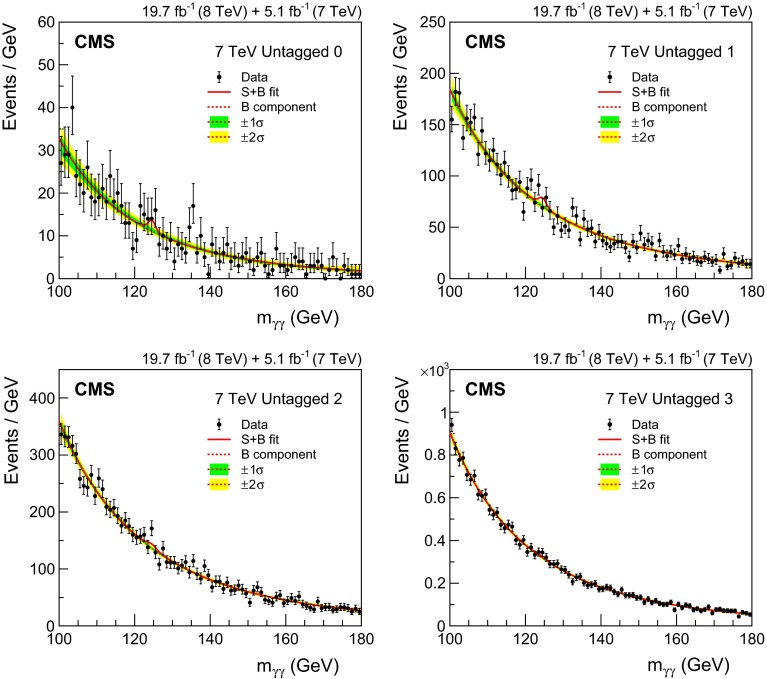

Classification of VBF dijet-tagged and untagged events

Classes for the VBF dijet-tagged events and the untagged events are defined using the scores of the classification BDTs: the combined dijet-diphoton BDT score is used to select and define the dijet-tagged classes, and the diphoton BDT score determines the untagged class into which each untagged event is placed. The BDT score requirements that constitute the event class boundaries are set by an optimization procedure, using simulated event samples, aimed at minimizing the expected uncertainty in the signal strength. To avoid biases, the simulated events are divided into three non-overlapping sets, each used for only one purpose: training the BDTs, optimizing the event class boundaries, or modeling the signal in the extraction of the final results. The number of available simulated events limits the statistical precision of the optimization procedure. For some background processes in which one or more of the photon candidates result from misidentified jet fragments, the small number of simulated events produces a very uneven, spiky distribution of the event classifier scores for the simulated background, in the score range where these processes contribute, albeit rarely. Therefore, for the event class boundary optimization, the event classifier BDT scores are smoothed using an adaptive-width Gaussian smoothing in the RooFit package [74]. Differences in performance of less than about 2 % are indistinguishable from statistical fluctuations and are regarded as insignificant.
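The idea of adaptive-width Gaussian smoothing can be illustrated with a generic adaptive kernel density estimate: a fixed-width pilot estimate is computed first, then each event gets its own Gaussian width, wider where the pilot density is low, so sparse tails are smoothed more than dense regions. This sketch uses Silverman's rule for the pilot width and Abramson's square-root law for the local widths; it illustrates the technique and is not a reimplementation of RooFit's algorithm.

```python
import math


def _gauss(u):
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)


def adaptive_bandwidths(samples, weights=None):
    """Per-sample Gaussian widths: wide where the pilot density is low, narrow where high."""
    w = [1.0] * len(samples) if weights is None else list(weights)
    wsum = sum(w)
    mean = sum(wi * x for wi, x in zip(w, samples)) / wsum
    var = sum(wi * (x - mean) ** 2 for wi, x in zip(w, samples)) / wsum
    h0 = 1.06 * math.sqrt(var) * len(samples) ** -0.2  # Silverman's rule-of-thumb pilot width
    pilot = [sum(wj * _gauss((xi - xj) / h0) for wj, xj in zip(w, samples)) / (wsum * h0)
             for xi in samples]
    # The geometric mean of the pilot density sets the overall scale (Abramson's law).
    g = math.exp(sum(wi * math.log(fi) for wi, fi in zip(w, pilot)) / wsum)
    return [h0 * math.sqrt(g / fi) for fi in pilot]


def adaptive_kde(samples, weights=None):
    """Return a normalized, weighted adaptive-width Gaussian density estimate."""
    w = [1.0] * len(samples) if weights is None else list(weights)
    wsum = sum(w)
    h = adaptive_bandwidths(samples, w)

    def density(x):
        return sum(wi * _gauss((x - xi) / hi) / hi for wi, xi, hi in zip(w, samples, h)) / wsum

    return density


dens = adaptive_kde([0.0, 0.1, 0.2, 0.5, 0.55, 0.9])
print(dens(0.1), dens(0.9))
```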

As a result of the optimization procedure, four untagged event classes and two VBF dijet-tagged classes are defined for the 7 TeV dataset, and five untagged and three dijet-tagged classes for the 8 TeV dataset. Events that fail the requirement on the combined dijet-diphoton BDT score for the VBF dijet-tagged classes may enter other event classes. Untagged events with a diphoton BDT score below the lower boundary of the untagged classes in the two datasets are not used in the final statistical analysis. The optimization of the diphoton BDT score boundaries, which define the untagged classes, aims to minimize the expected uncertainty in the overall signal strength measurement. The optimization of the combined dijet-diphoton BDT score boundaries, which define the VBF dijet-tagged classes, aims to minimize the expected uncertainty in the signal strength associated with the VBF production mechanism. When optimizing the boundaries for the 7 TeV dataset, for which the number of available MC background events is particularly limited, the number of dijet-tagged classes is restricted to two, and the lower boundary of the lowest dijet-tagged class is fixed so that the same efficiency times acceptance is obtained for VBF signal events as in the 8 TeV dataset.
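A simplified version of the boundary optimization is sketched below as a brute-force scan over candidate boundaries. As a swapped-in approximation to the expected signal-strength uncertainty from the full fit, it treats each class as a counting experiment and uses $\sigma_\mu \approx \left(\sum_i s_i^2/(s_i+b_i)\right)^{-1/2}$, where $s_i$ and $b_i$ are the expected signal and background in class $i$. Events below the lowest boundary are discarded, as for the untagged classes. The grid and the toy inputs are hypothetical.

```python
import math
from itertools import combinations


def class_yield(scores, weights, lo, hi):
    """Weighted number of events with lo <= score < hi."""
    return sum(w for s, w in zip(scores, weights) if lo <= s < hi)


def expected_sigma_mu(sig, bkg, boundaries):
    """Approximate expected uncertainty in the signal strength mu for a set of classes.

    sig, bkg   -- (scores, weights) for simulated signal and background
    boundaries -- ascending lower edges; the last class is open-ended, and events
                  below the first edge are discarded.
    """
    edges = list(boundaries) + [math.inf]
    info = 0.0
    for lo, hi in zip(edges[:-1], edges[1:]):
        s = class_yield(sig[0], sig[1], lo, hi)
        b = class_yield(bkg[0], bkg[1], lo, hi)
        if s + b > 0.0:
            info += s * s / (s + b)  # counting-experiment Fisher information at mu = 1
    return math.inf if info == 0.0 else 1.0 / math.sqrt(info)


def optimise_boundaries(sig, bkg, grid, n_classes):
    """Brute-force scan of all ascending n_classes-tuples of boundaries drawn from grid."""
    best = None
    for bounds in combinations(sorted(grid), n_classes):
        sigma = expected_sigma_mu(sig, bkg, bounds)
        if best is None or sigma < best[0]:
            best = (sigma, bounds)
    return best


toy_sig = ([0.9, 0.8, 0.7], [1.0, 1.0, 1.0])
toy_bkg = ([0.1, 0.2, 0.75], [10.0, 10.0, 1.0])
print(optimise_boundaries(toy_sig, toy_bkg, [0.0, 0.5, 0.85], n_classes=2))
```

A brute-force scan is only practical for a few classes and a coarse grid; it is used here to make the objective explicit rather than to suggest how the analysis performed the search.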

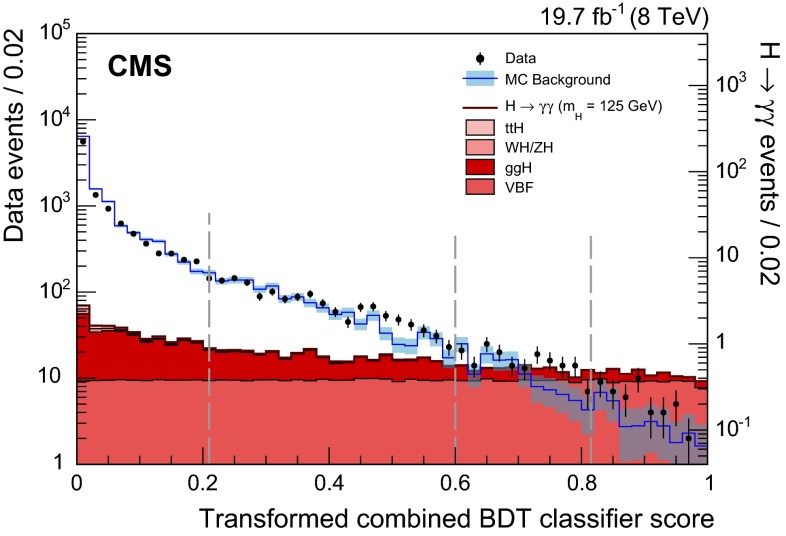

Figure 8 shows the combined dijet-diphoton BDT score for events satisfying the dijet preselection in 8 TeV data, and for simulated signal events from the four production processes. The outlined histogram is for simulated background events; the shaded error bands on the histogram show the statistical uncertainty in the simulation. The VBF dijet-tagged class boundaries used for the 8 TeV dataset are shown by vertical dashed lines. The classifier score is transformed such that signal events produced by the VBF process have a uniform (flat) distribution across the full range of the score. This shows directly how strongly signal events produced by the VBF process are favoured over the background (which dominates the data) and over signal events produced by other processes. Events with scores below the lower boundary fail the VBF dijet-tagged selection, but remain candidates for inclusion in other classes.

Fig. 8.

Score of the combined dijet-diphoton BDT for events satisfying the dijet preselection in 8 TeV data (points with error bars, left axis) and for simulated signal events from the four production processes (histograms, right axis). The outlined histogram is for simulated background events; the shaded error bands on the histogram show the statistical uncertainty in the simulation. The vertical dashed lines show the boundaries of the event classes, with the leftmost dashed line marking the score below which events are not included in the VBF dijet-tagged classes, but remain candidates for inclusion in other classes. The classifier score is transformed such that signal events produced by the VBF process have a uniform (flat) distribution across the full range of the score.
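A transformation that makes the VBF signal distribution flat is, in essence, the cumulative distribution function of the VBF signal score. The minimal Python sketch below uses the empirical CDF of a reference sample of simulated VBF signal scores; the function name is hypothetical, and a real implementation might use a smoothed CDF instead.

```python
import bisect
from typing import Callable, Sequence


def make_flattening_transform(reference_scores: Sequence[float]) -> Callable[[float], float]:
    """Return the empirical CDF of the reference (VBF signal) scores.

    Applied to events drawn from the reference distribution, the output is
    approximately uniform on [0, 1]. The map is monotonic, so other samples
    keep their ordering, but they pile up wherever the reference is sparse."""
    ref = sorted(reference_scores)
    n = len(ref)

    def transform(score: float) -> float:
        # fraction of reference events with a score <= this score
        return bisect.bisect_right(ref, score) / n

    return transform
```

Because the transform is monotonic, class boundaries defined on the transformed score correspond one-to-one to boundaries on the raw score.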

The lower boundary on the untagged event class with the lowest signal-to-background ratio controls the total number of events used in the analysis and the overall signal efficiency times acceptance of the analysis (see Fig. 6). The boundary excludes events with very low score in the diphoton BDT for which the background is poorly modelled by MC simulation. Exclusion of these events has the advantage of allowing a better assessment of the expected sensitivity of the analysis, but the exact placement of the boundary is of little consequence.

It is found that, within the statistical uncertainty described above, it makes no difference if the optimization goal is the expected overall uncertainty in signal strength, the expected significance of the signal, or the expected uncertainty in the measured signal strength associated with the VBF production mechanism. It is also found that the performance maxima that fix the event class boundaries are rather shallow, so that the boundaries can be moved without significantly changing the expected performance. Adding further event classes for either the untagged or the VBF dijet-tagged events does not significantly improve the expected performance.

The overall efficiency times acceptance for SM Higgs boson events with $m_\mathrm{H} = 125$ GeV is 49.3 % (48.6 %) in the 8 (7) TeV analysis. As expected, the properties of the simulated signal events in the untagged classes show that the best untagged class ("untagged 0") contains events in which the diphoton system has high transverse momentum (almost all events have ), while the second-best class ("untagged 1") is dominated by events in which both photons are unconverted and located in the central barrel region of the ECAL.

Procedure of classification

In total there are 14 event classes for the analysis of the 8 TeV dataset and 11 for the analysis of the 7 TeV dataset. To ensure that the classes are mutually exclusive, events are tested against the class selection requirements in a fixed order: first the production-signature-tagged classes, ranked by expected signal-to-background ratio, then the untagged classes. Once selected, an event is no longer a candidate for inclusion in other classes. The ordering is that shown in Table 2, which lists the classes together with their key selection requirements.

Table 2.

Event classes for the 7 and 8 TeV datasets and some of their main selection requirements

| Label | No. of classes (7 TeV) | No. of classes (8 TeV) | Main requirements |
|---|---|---|---|
| lepton tag | 1ᵃ | 1 | 1 b-tagged jet + 1 electron or muon |
| VH tight tag | 1 | 1 | [ or , , and ] or [ or , ; ] |
| VH loose tag | 1 | 1 | or , |
| VBF dijet tag 0–2 | 2 | 3 | 2 jets; classified using combined diphoton-dijet BDT |
| VH tag | 1 | 1 | |
| multijet tag | ᵃ | 1 | 1 b-tagged jet + 4 more jets |
| VH dijet tag | 1 | 1 | jet pair, and |
| Untagged 0–4 | 4 | 5 | The remaining events, classified using diphoton BDT |

ᵃ For the 7 TeV dataset, events in the lepton tag and multijet tag classes are selected first and combined to form a single event class.
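The fixed-order, first-match assignment described above can be sketched as follows. The rule list mirrors the ordering idea of Table 2, but every field name (`n_btag`, `n_lep`, `n_jets`, `dijet_bdt`, `diphoton_bdt`) and every cut value is a hypothetical placeholder, not an analysis requirement, and only a few classes are shown.

```python
from typing import Callable, Dict, List, Optional, Tuple

Event = Dict[str, float]
Rule = Tuple[str, Callable[[Event], bool]]


def classify(event: Event, rules: List[Rule]) -> Optional[str]:
    """Return the label of the first class whose requirement the event passes.

    Rules are tried in a fixed order and the loop stops at the first match,
    so every event lands in at most one class."""
    for label, passes in rules:
        if passes(event):
            return label
    return None  # fails every class: not used in the statistical analysis


# Hypothetical placeholders, not the analysis requirements.
VBF_CUT = 0.5
UNTAGGED_EDGES = [0.9, 0.7, 0.5, 0.3]  # lower edges, best class first

rules: List[Rule] = [
    ("lepton tag", lambda e: e["n_btag"] >= 1 and e["n_lep"] >= 1),
    ("VBF dijet tag", lambda e: e["n_jets"] >= 2 and e["dijet_bdt"] > VBF_CUT),
]
for k, edge in enumerate(UNTAGGED_EDGES):
    # bind edge now; a bare closure would only see the last loop value
    rules.append((f"untagged {k}", lambda e, edge=edge: e["diphoton_bdt"] > edge))
```

An event with a high diphoton BDT score but also a b-tagged jet and a lepton ends up in the lepton-tag class, because that rule is tested first.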

Signal model

A parametric signal model is constructed separately for each event class and for each production mechanism from a fit of the simulated invariant mass shape, after applying the corrections determined from comparisons of data and simulation for $\mathrm{Z}\to\mathrm{ee}$ and $\mathrm{Z}\to\mu\mu\gamma$ events, for nine values of $m_\mathrm{H}$ in the range $110 \le m_\mathrm{H} \le 150$ GeV, at 5 GeV intervals. The two possible cases regarding diphoton vertex identification, correct vertex and wrong (misidentified) vertex, are fitted separately. Good descriptions of the $m_{\gamma\gamma}$ distributions, including the tails, can be achieved using a sum of Gaussian functions whose means are not required to be identical. The fits are first performed for the $m_\mathrm{H} = 125$ GeV MC sample to determine the number of Gaussian functions to be used and the starting values of their parameters for the subsequent fits to the other eight samples. As many as five Gaussian functions are used, although in most cases two or three give a good fit. Signal models for intermediate values of $m_\mathrm{H}$ are obtained by linear interpolation of the fitted parameters.
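The signal parametrization can be illustrated with a minimal Python sketch: a normalized sum of Gaussians with independent means, and linear interpolation of each component's parameters between neighbouring simulated mass points. The component values in the usage example are invented for illustration, the function names are hypothetical, and the sketch ignores the separate correct-vertex and wrong-vertex fits.

```python
import math
from typing import Dict, List, Tuple

Component = Tuple[float, float, float]  # (fraction, mean, sigma)


def gauss_sum_pdf(x: float, comps: List[Component]) -> float:
    """Sum of Gaussians with independent means; fractions must sum to 1."""
    return sum(f * math.exp(-0.5 * ((x - m) / s) ** 2) / (s * math.sqrt(2.0 * math.pi))
               for f, m, s in comps)


def interpolate_params(mh: float, grid: Dict[float, List[Component]]) -> List[Component]:
    """Linearly interpolate each component's (fraction, mean, sigma) between
    the two neighbouring simulated mass points. All grid entries must have
    the same number of components, in a consistent order; interpolated
    fractions still sum to 1 because both endpoints do."""
    if mh in grid:
        return list(grid[mh])
    masses = sorted(grid)
    for lo, hi in zip(masses, masses[1:]):
        if lo < mh < hi:
            w = (mh - lo) / (hi - lo)
            return [tuple((1.0 - w) * a + w * b for a, b in zip(ca, cb))
                    for ca, cb in zip(grid[lo], grid[hi])]
    raise ValueError(f"mH = {mh} is outside the simulated range")


# Usage with invented parameters at two simulated mass points (GeV).
grid = {120.0: [(0.7, 119.8, 1.5), (0.3, 119.0, 3.0)],
        125.0: [(0.7, 124.8, 1.6), (0.3, 124.0, 3.2)]}
params_122p5 = interpolate_params(122.5, grid)
```

Interpolating parameters rather than shapes keeps the model smooth in $m_\mathrm{H}$, which is what allows $m_\mathrm{H}$ to float continuously in the fit.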

Table 3 shows the number of expected signal events from a SM Higgs boson with $m_\mathrm{H} = 125$ GeV, as well as the background density at that mass, for each of the event classes in the 7 and 8 TeV datasets. The background estimate is obtained from a fit to the data, as described in Sect. 8, and is given as the differential rate, $\mathrm{d}N/\mathrm{d}m_{\gamma\gamma}$ (events/GeV), at $m_{\gamma\gamma} = 125$ GeV. The table also shows the fraction of each Higgs boson production process (as predicted by MC simulation) and the mass resolution, measured both by $\sigma_\text{eff}$, half the width of the narrowest interval containing 68.3 % of the invariant mass distribution, and by $\sigma_\text{HM}$, the full width at half maximum of the distribution divided by 2.35.

It can be seen that $\sigma_\text{eff} > \sigma_\text{HM}$ in all classes, since the tails of the signal mass distribution are always somewhat larger, relative to the width of its core, than they would be for a Gaussian distribution. Untagged events with the best mass resolution are assigned to the best event classes. However, even ignoring the improvement in mass resolution, and considering a window wide enough to include all the signal events, the signal-to-background ratio improves by an order of magnitude from the worst to the best untagged class, a much larger variation than the change in resolution. The highest signal-to-background ratios are achieved in the tagged classes, many of which also achieve high purity with respect to contamination from the ggH process.
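Both resolution measures can be computed from any tabulated mass shape: $\sigma_\text{eff}$ is half the width of the narrowest interval containing 68.3 % of the probability, and $\sigma_\text{HM}$ is the full width at half maximum divided by 2.35. The Python sketch below (function name hypothetical) finds $\sigma_\text{eff}$ with a two-pointer scan and $\sigma_\text{HM}$ from the half-maximum crossings, assuming a single-peaked shape. For a pure Gaussian both are close to its $\sigma$, whereas a core-plus-tail shape gives $\sigma_\text{eff} > \sigma_\text{HM}$, the pattern described in the text for the event classes.

```python
import math
from typing import Callable, Tuple


def sigma_eff_and_hm(pdf: Callable[[float], float], lo: float, hi: float,
                     n: int = 20000) -> Tuple[float, float]:
    """Return (sigma_eff, sigma_HM) for a single-peaked shape on [lo, hi].

    The pdf need not be normalized; it is tabulated at n bin centres."""
    dx = (hi - lo) / n
    xs = [lo + (i + 0.5) * dx for i in range(n)]
    ps = [pdf(x) for x in xs]
    total = sum(ps)
    ps = [p / total for p in ps]
    # Two-pointer scan: for each left edge i, grow the window [i, j) until it
    # holds at least 68.3 % of the probability, then record its width.
    best, j, acc = math.inf, 0, 0.0
    for i in range(n):
        while j < n and acc < 0.683:
            acc += ps[j]
            j += 1
        if acc < 0.683:
            break
        best = min(best, (j - i) * dx)
        acc -= ps[i]
    peak = max(ps)
    above = [x for x, p in zip(xs, ps) if p >= 0.5 * peak]
    fwhm = above[-1] - above[0]  # assumes one contiguous region above half max
    return best / 2.0, fwhm / 2.35
```

The factor 2.35 is approximately $2\sqrt{2\ln 2}$, the ratio of FWHM to standard deviation for a Gaussian.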

The mass resolution achieved has improved significantly with respect to analyses of this decay mode previously reported by CMS [28], owing to improved intercalibration of the ECAL, complemented by the improved supercluster energy correction regression described in Sect. 4.1. For events in which both photons are in the barrel, $\sigma_\text{eff}$ has been reduced by around 5 % in 7 TeV data and by more than 20 % in 8 TeV data. When at least one photon is in the endcap region, $\sigma_\text{eff}$ has been reduced by around 20 % in 7 TeV data and by more than 30 % in 8 TeV data. The reduction in $\sigma_\text{HM}$, which represents the core of the distribution, is slightly larger than that in $\sigma_\text{eff}$, generally by an additional 5 %.

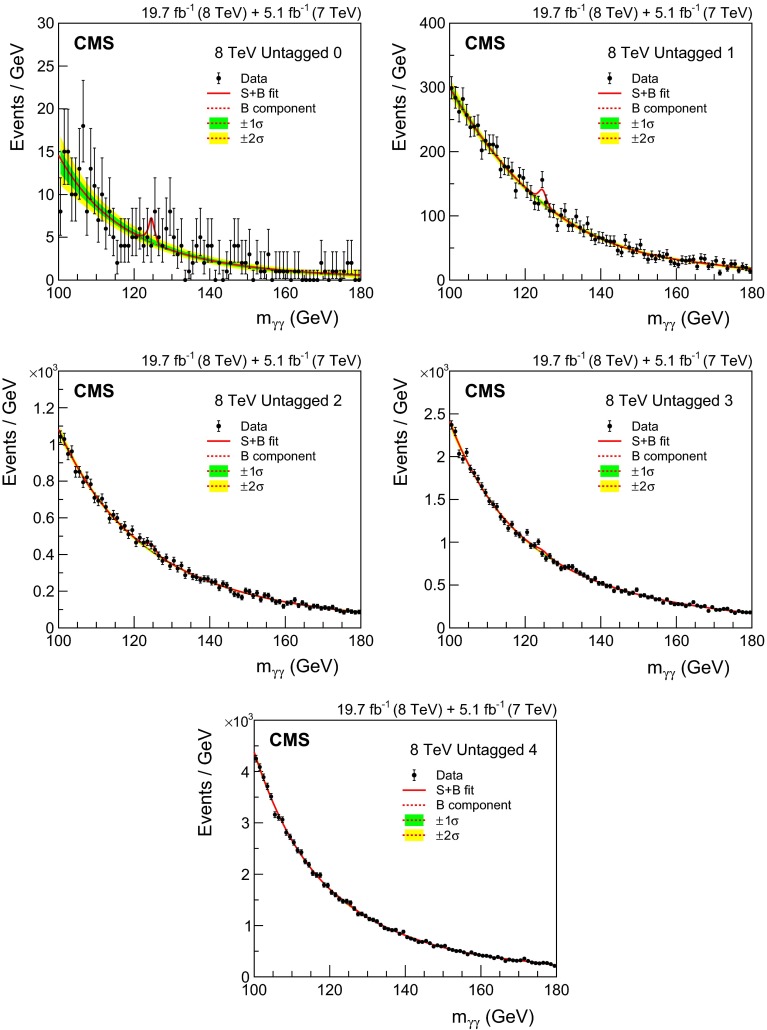

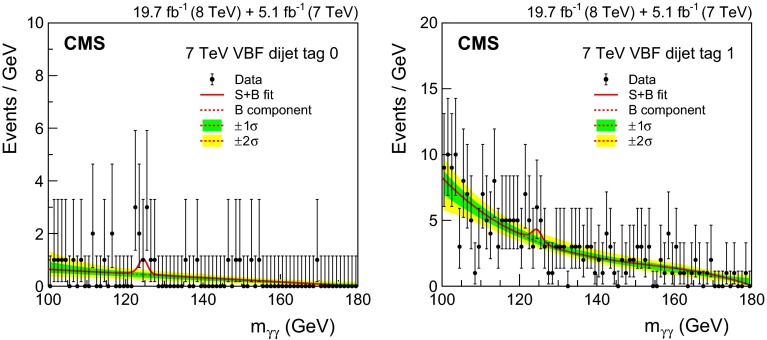

Statistical methodology

To extract a result or measurement, a simultaneous binned maximum-likelihood fit to the diphoton invariant mass distributions in all the event classes is performed over a fixed $m_{\gamma\gamma}$ range. Binned fits are used for speed of computation, and the chosen bin size, 250 MeV, is sufficiently small compared with the mass resolution that no information is lost. It has been verified that a binned fit with this bin size gives the same result as an unbinned fit. The signal model is derived from MC simulation after applying the corrections determined from data/MC comparisons of $\mathrm{Z}\to\mathrm{ee}$ and $\mathrm{Z}\to\mu\mu\gamma$ events, as described in the previous section. The background is evaluated by fitting the $m_{\gamma\gamma}$ distribution in data, without reference to the MC simulation. Thus the likelihood to be evaluated in a signal-plus-background fit is

$$\mathcal{L}\left(\text{data} \mid s(\vec{\theta}_s) + b(\vec{\theta}_b)\right), \tag{1}$$

where $\vec{\theta}_s$ comprises those parameters of the signal, such as $m_\mathrm{H}$ or the signal strength, that are allowed to vary in the fit, $s(\vec{\theta}_s)$ is the parametric signal model, and $b(\vec{\theta}_b)$ is the background fit function with parameters $\vec{\theta}_b$.
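In its simplest form, the binned likelihood of Eq. (1) is a product of Poisson probabilities over all bins of all event classes. The Python sketch below evaluates $-\ln\mathcal{L}$ up to a constant for a signal strength $\mu$, taking the expected content of each bin as $\mu s_i + b_i$. Unlike the analysis, it keeps the background shape fixed rather than fitting it; the yields in the usage example are invented, and the names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# One event class: (observed counts, expected signal, expected background) per bin.
EventClass = Tuple[Sequence[float], Sequence[float], Sequence[float]]


def binned_nll(mu: float, classes: List[EventClass]) -> float:
    """-ln L, up to a constant, for independent Poisson bins in all classes,
    with expected content nu = mu * s + b in each bin."""
    nll = 0.0
    for counts, sig, bkg in classes:
        for n, s, b in zip(counts, sig, bkg):
            nu = mu * s + b
            nll += nu - n * math.log(nu)  # ln(n!) dropped: independent of mu
    return nll


# Usage with invented yields; observed counts are set to s + b ("Asimov"
# data), so a scan over mu should find its minimum at mu = 1.
classes = [([50.5, 50.0, 46.5], [0.5, 2.0, 0.5], [50.0, 48.0, 46.0]),
           ([21.0, 13.0], [1.0, 3.0], [20.0, 10.0])]
mus = [i / 100 for i in range(201)]
mu_hat = min(mus, key=lambda m: binned_nll(m, classes))
```

A full treatment would also minimize over the background-function parameters $\vec{\theta}_b$ at each value of $\mu$.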

The chosen test statistic, used to determine how signal- or background-like the data are, is based on the profile likelihood ratio. Systematic uncertainties are incorporated into the analysis via nuisance parameters and treated according to the frequentist paradigm. A description of the general methodology can be found in Refs. [75, 76]. Unless stated otherwise, the results presented here are obtained using asymptotic formulae [77], including updates introduced in the RooStats package [78].
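In the asymptotic regime referred to above, the profile-likelihood test statistic has a simple closed form for a toy case. As a minimal illustration (a single counting experiment with known background and no nuisance parameters, far simpler than the analysis), the discovery statistic $q_0 = -2\ln[\mathcal{L}(\mu=0)/\mathcal{L}(\hat\mu)]$ reduces to $2[n\ln(n/b) - (n - b)]$ for $n > b$, and the local significance is $Z = \sqrt{q_0}$. Setting $n = s + b$ gives the expected significance. The function names are hypothetical.

```python
import math


def q0_counting(n: float, b: float) -> float:
    """Discovery statistic q0 = -2 ln[L(mu=0) / L(mu_hat)] for one Poisson
    count n with known background b; q0 = 0 when n <= b (no excess)."""
    if n <= b:
        return 0.0
    return 2.0 * (n * math.log(n / b) - (n - b))


def asymptotic_significance(n: float, b: float) -> float:
    """Local significance Z = sqrt(q0) in the asymptotic approximation."""
    return math.sqrt(q0_counting(n, b))


def one_sided_p_value(z: float) -> float:
    """Upper-tail probability of a standard normal variable beyond z."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))
```

For example, `asymptotic_significance(110.0, 100.0)` (expected for $s = 10$, $b = 100$) is about 0.984, slightly below the naive estimate $s/\sqrt{b} = 1$.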

It is important that the choice of background fit function does not bias the estimate of background obtained from the fit for any signal mass hypothesis, $m_\mathrm{H}$, in the range of the search.

A change has been made with respect to the method used to obtain previous results, which is described in Ref. [28]. Previously, a single fit function was chosen for each class after a study of the potential bias on the estimated background. The potential bias using the chosen function was required to be negligible: the number of degrees of freedom of the fit was increased until the bias became at least five times smaller than the statistical uncertainty in the number of fitted events in a mass window corresponding to the full width at half maximum of the corresponding signal model, for any $m_\mathrm{H}$ in the search range.

For the results reported in this paper, a new method, the discrete profiling method, has been developed [79] to treat the uncertainty associated with the choice of the function used to fit the background in a similar way to systematic uncertainties associated with the measurements. The choice of the function used to fit the background, in any particular event class, is included as a discrete nuisance parameter in the likelihood function used to extract the result. All reasonable families of functions should be considered, although in practice it is found that the choice needs to be made only among functions from the same families as were considered previously: exponentials, power-law functions, polynomials in the Bernstein basis, and Laurent series. When performing either a background-only fit or a signal-plus-background fit by minimizing twice the negative logarithm of the likelihood, all functions in these families are tried, with a penalty term added to account for the number of free parameters in the fitting function.

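The penalized likelihood function, $\tilde{\mathcal{L}}_f$, for a single fixed background fitting function, $f$, is defined as

$$-2\ln\tilde{\mathcal{L}}_f = -2\ln\mathcal{L}_f + c\,N_f, \tag{2}$$

where $\mathcal{L}_f$ is the unpenalized likelihood function, $N_f$ is the number of free parameters in $f$, and $c$ is a constant. When measuring a quantity, $x$, the likelihood ratio, $\Lambda$, is used:

$$\Lambda = \frac{\tilde{\mathcal{L}}(x \mid \hat{\vec{\theta}}_x, \hat{f}_x)}{\tilde{\mathcal{L}}(\hat{x}, \hat{\vec{\theta}}, \hat{f})}, \tag{3}$$

where the numerator represents the maximum of $\tilde{\mathcal{L}}$ for a given $x$, achieved for the best-fit values of the nuisance parameters, $\vec{\theta} = \hat{\vec{\theta}}_x$, and a particular background function, $f = \hat{f}_x$. The denominator corresponds to the global maximum of $\tilde{\mathcal{L}}$, where $x = \hat{x}$, $\vec{\theta} = \hat{\vec{\theta}}$, and $f = \hat{f}$. Choosing the functional form of the background that maximizes $\tilde{\mathcal{L}}$ for each value of $x$ yields confidence intervals on $x$ that can only be wider than those obtained using the single fixed functional form from the global best fit, $\hat{f}$.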
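Given likelihood scans already profiled over the continuous nuisance parameters of each candidate background function, Eqs. (2) and (3) reduce to a lower envelope. The Python sketch below (names hypothetical, input curves invented) adds the penalty $c N_f$, with $N_f$ the number of free parameters of function $f$, to each function's $-2\ln\mathcal{L}$ curve, takes the minimum over functions at every scan point, and subtracts the global minimum to obtain $-2\ln\Lambda$. It also records which function is chosen at each point.

```python
from typing import Dict, List, Tuple


def discrete_profile(curves: Dict[str, List[float]], n_params: Dict[str, int],
                     c: float = 1.0) -> Tuple[List[float], List[str]]:
    """curves[f][i] = -2 ln L_f at scan point x_i, already minimized over
    the continuous nuisance parameters for background function f.
    Returns (-2 ln Lambda at each x_i, best function at each x_i)."""
    penalized = {f: [v + c * n_params[f] for v in vals]
                 for f, vals in curves.items()}
    n_points = len(next(iter(curves.values())))
    envelope: List[float] = []
    best_f: List[str] = []
    for i in range(n_points):
        f_best = min(penalized, key=lambda f: penalized[f][i])
        best_f.append(f_best)
        envelope.append(penalized[f_best][i])
    global_min = min(envelope)
    return [v - global_min for v in envelope], best_f


# Usage with two invented scans over x = 0..4.
xs = [0, 1, 2, 3, 4]
curves = {"A": [(x - 2) ** 2 + 10.0 for x in xs],
          "B": [0.5 * (x - 1) ** 2 + 9.5 for x in xs]}
n_params = {"A": 2, "B": 3}
nll_envelope, chosen = discrete_profile(curves, n_params, c=1.0)
```

Because the envelope is a pointwise minimum, it never lies above the curve of the globally preferred function alone, which is why the resulting intervals can only be wider.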

Two values of $c$, which sets the magnitude of the penalty for increasing the number of free parameters in the fit, have been tested in detail. The values $c = 1$ and $c = 2$ can be justified, respectively, by the $\chi^2$ p-value and the Akaike information criterion [80]. Tests made with pseudo-experiments show that with $c = 1$ the method gives consistently good coverage and negligible bias.
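A heuristic reading of these two values, offered as an aid to intuition rather than as the justification used in the analysis, follows from comparing the penalized form $-2\ln\tilde{\mathcal{L}}_f = -2\ln\mathcal{L}_f + c\,N_f$ with standard criteria. The Akaike information criterion is

$$\mathrm{AIC}_f = 2N_f - 2\ln\mathcal{L}_f \quad\Longrightarrow\quad c = 2.$$

For $c = 1$, assume that $-2\ln\mathcal{L}_f \approx \chi^2_f + \text{const}$ and that an adequate function fitted to $N_\text{bins}$ bins gives $\langle\chi^2_f\rangle \approx N_\text{bins} - N_f$. Then

$$\left\langle -2\ln\tilde{\mathcal{L}}_f \right\rangle \approx \langle\chi^2_f\rangle + N_f + \text{const} = N_\text{bins} + \text{const},$$

so with $c = 1$ an adequate function gains nothing, on average, from additional free parameters, in line with the goodness-of-fit expectation that underlies a $\chi^2$ p-value.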

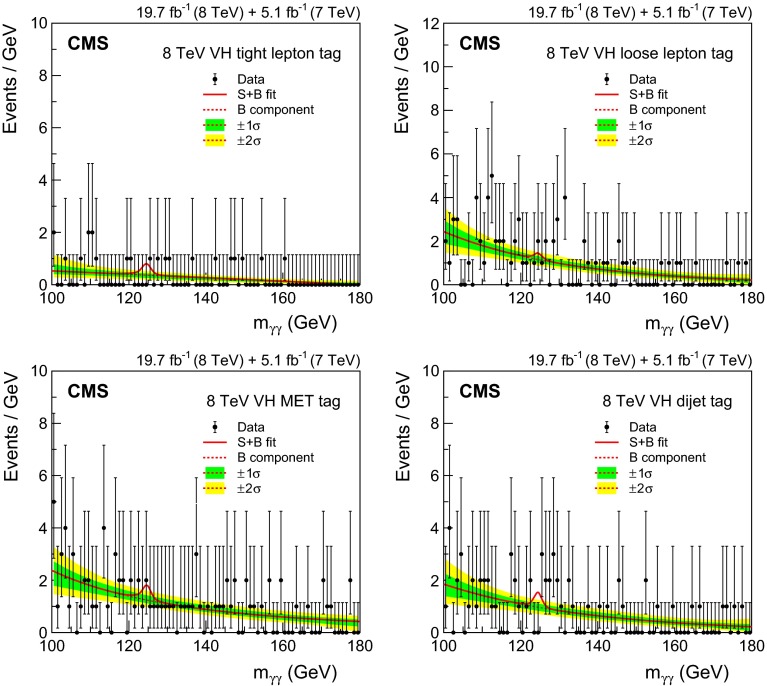

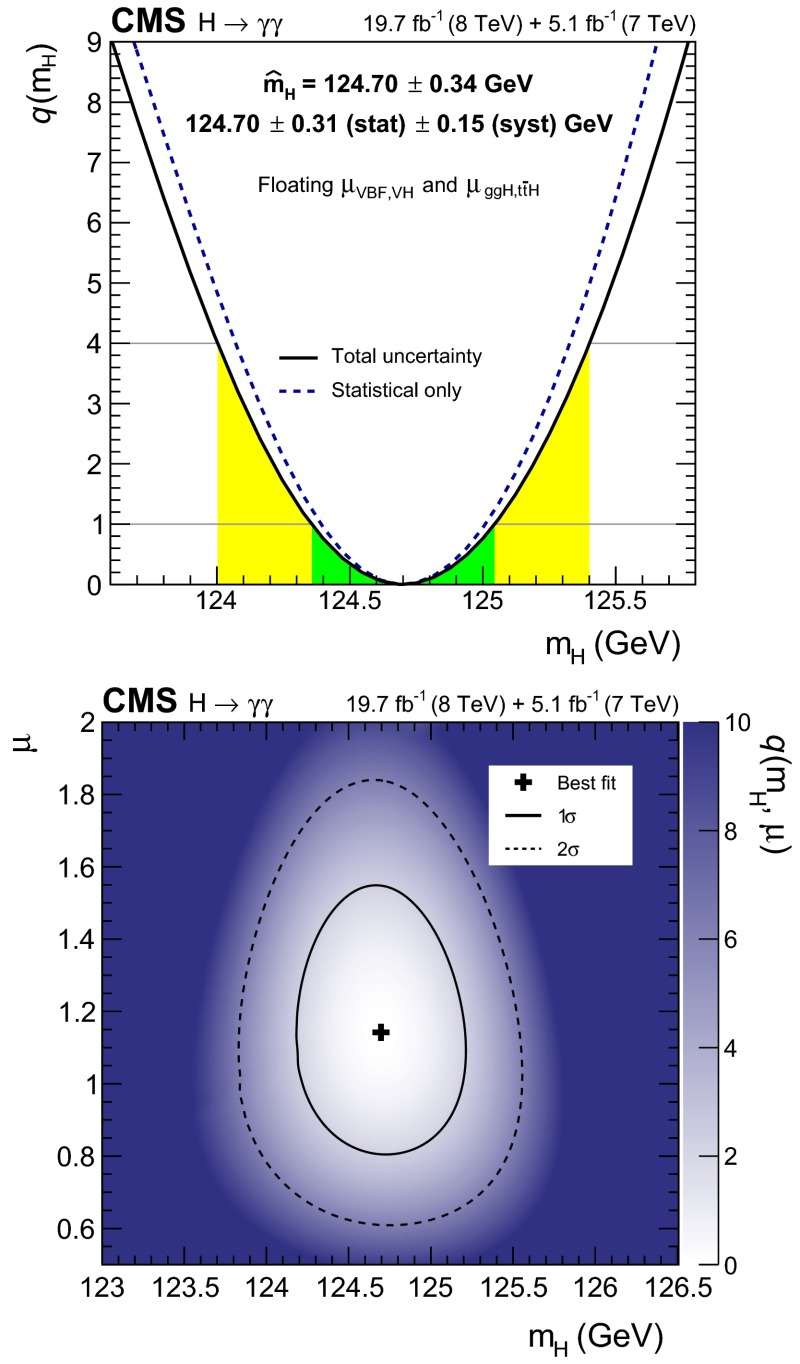

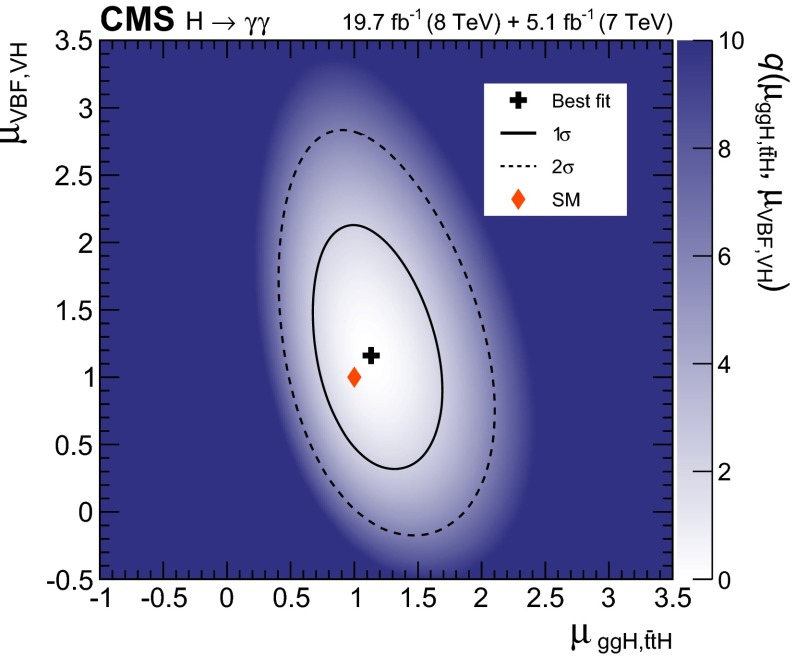

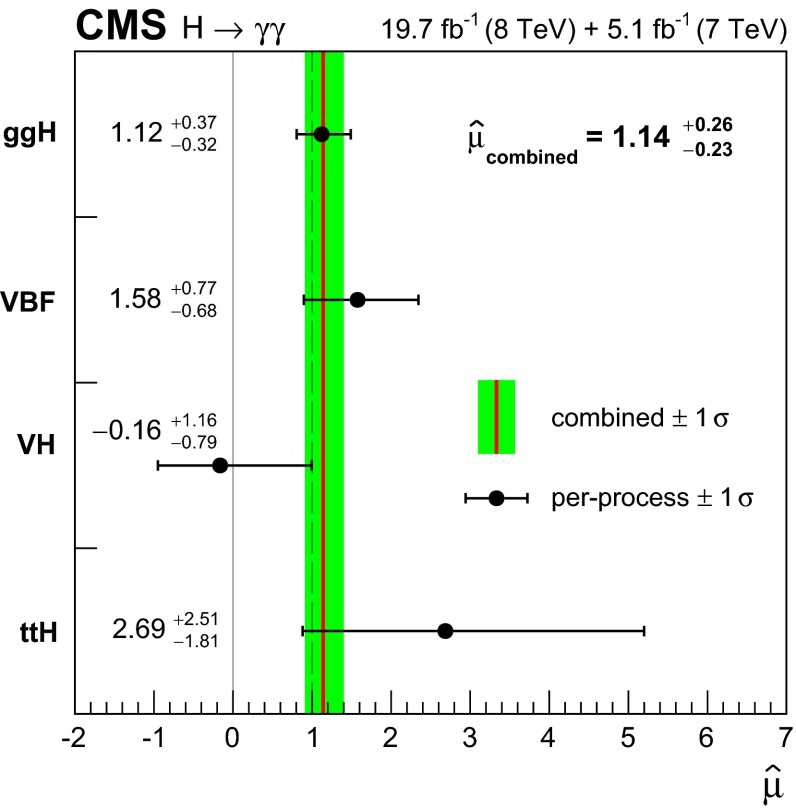

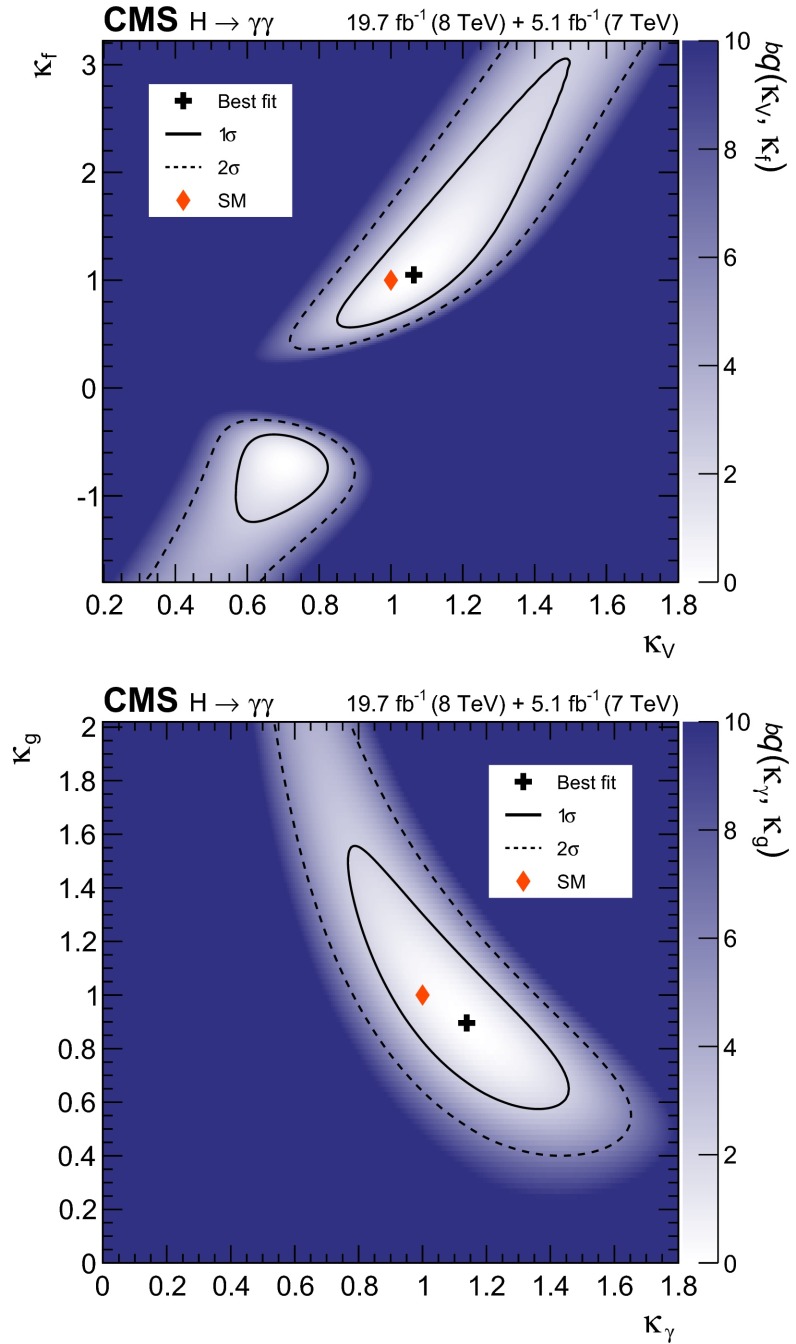

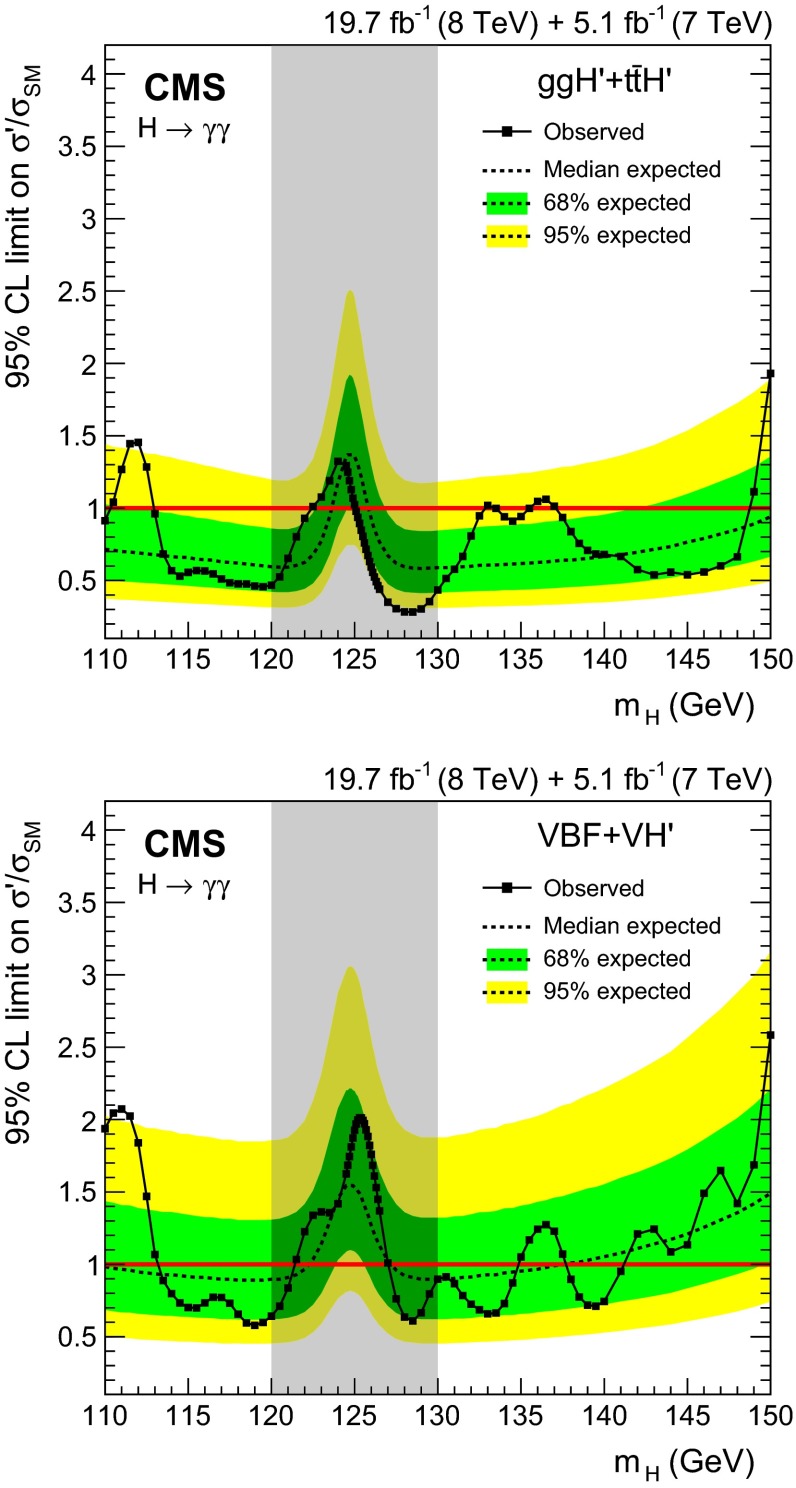

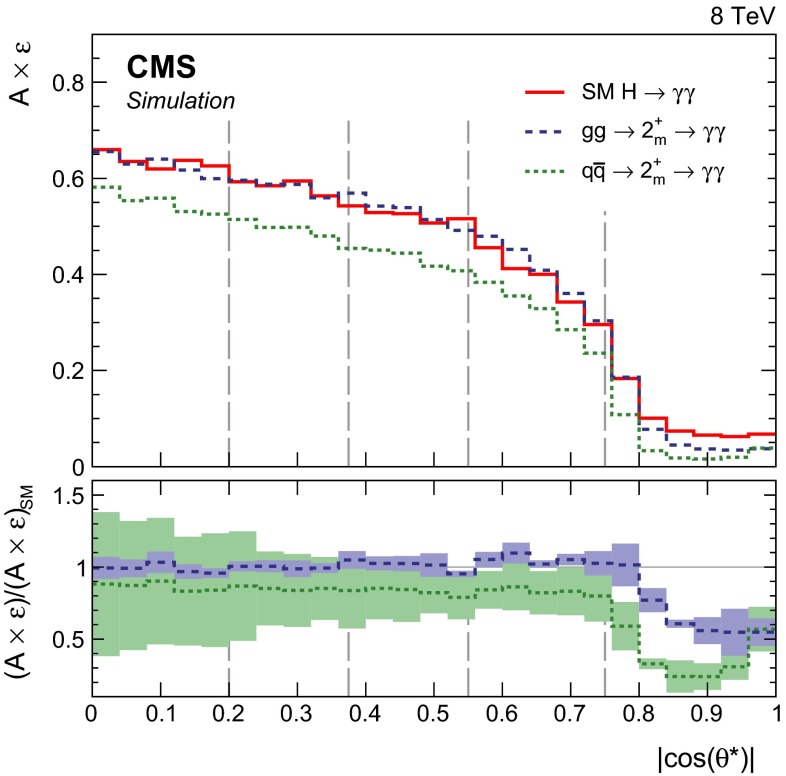

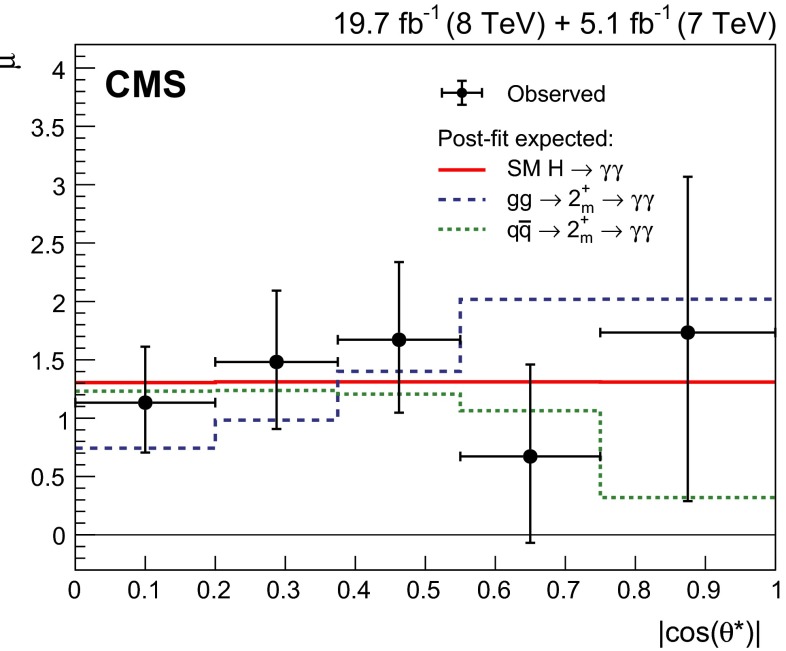

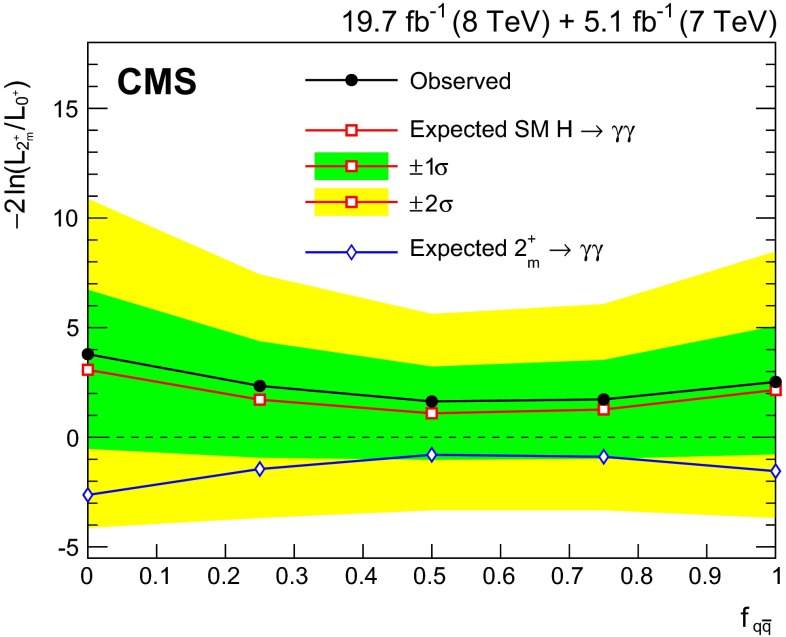

To test coverage and bias, we generate pseudo-data. To do so, we first need to fit the data, a problem similar to, but not to be confused with, the original problem of choosing the function used to model the background in the analysis. The pseudo-data are generated as follows. For each event class in turn, functions from each of the families used in the discrete profiling method, listed above, are fitted to the data. In each family, the number of degrees of freedom (number of exponentials, number of terms in the series, degree of the polynomial, etc.) is increased until going from N to N+1 degrees of freedom gives no significant improvement in the $\chi^2$ of the fit to data (p-value obtained from the F-distribution [81]). At that point the function with N degrees of freedom is retained as representative of that family of functions. For each event class, the fits to the data with the retained representative functions for that class are used to generate pseudo-background distributions.
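The order-selection step can be sketched in Python as follows. The text specifies a p-value from the F-distribution; to stay within the standard library, this sketch swaps in Wilks' asymptotic $\chi^2$ test on the decrease of $-2\ln\mathcal{L}$ between successive orders, with degrees of freedom equal to the number of added parameters, and an assumed threshold of 0.05. Both the substitution and the threshold are assumptions, and the function names are hypothetical.

```python
import math
from typing import Sequence


def chi2_sf(x: float, k: int) -> float:
    """Upper-tail probability of a chi-squared variable with k degrees of
    freedom, via the power series for the regularized lower incomplete
    gamma function P(k/2, x/2)."""
    if x <= 0.0:
        return 1.0
    a, z = k / 2.0, x / 2.0
    term = total = 1.0 / a
    i = 1
    while term > total * 1e-15:
        term *= z / (a + i)
        total += term
        i += 1
    p_lower = total * math.exp(-z + a * math.log(z) - math.lgamma(a))
    return max(0.0, 1.0 - p_lower)


def choose_order(nll2: Sequence[float], n_params: Sequence[int],
                 alpha: float = 0.05) -> int:
    """nll2[i] is -2 ln L of the fit with the i-th (increasing) order of one
    function family. Return the index of the first order whose extension to
    the next order gives no significant improvement (p-value > alpha)."""
    for i in range(len(nll2) - 1):
        delta = nll2[i] - nll2[i + 1]
        dof = n_params[i + 1] - n_params[i]
        if chi2_sf(delta, dof) > alpha:
            return i
    return len(nll2) - 1
```

For instance, with invented values `choose_order([150.0, 120.0, 118.5, 118.0], [1, 2, 3, 4])` keeps the second order (index 1): the first extension improves $-2\ln\mathcal{L}$ by 30, the next by only 1.5.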