Abstract

Purpose:

As multi-institutional research networks assume a central role in clinical research, they must address the challenge of sustainability. Despite its importance, the concept of network sustainability has received little attention in the literature, and the sustainability strategies of durable scientific networks have not been described.

Innovation:

The Health Maintenance Organization Research Network (HMORN) is a consortium of 18 research departments in integrated health care delivery systems with over 15 million members in the United States and Israel. The HMORN has coordinated federally funded scientific networks and studies since 1994. This case study describes the HMORN approach to sustainability, proposes an operational definition of network sustainability, and identifies 10 essential elements that can enhance sustainability.

Credibility:

The sustainability framework proposed here is drawn from prior publications on organizational issues by HMORN investigators and from the experience of recent HMORN leaders and senior staff.

Conclusion and Discussion:

Network sustainability can be defined as (1) the development and enhancement of shared research assets to facilitate a sequence of research studies in a specific content area or multiple areas, and (2) a community of researchers and other stakeholders who reuse and develop those assets. Essential elements needed to develop the shared assets of a network include: network governance; trustworthy data and processes for sharing data; shared knowledge about research tools; administrative efficiency; physical infrastructure; and infrastructure funding. The community of researchers within a network is enhanced by: a clearly defined mission, vision and values; protection of human subjects; a culture of collaboration; and strong relationships with host organizations. While the importance of these elements varies based on the membership and goals of a network, this framework for sustainability can enhance strategic planning within the network and can guide relationships with external stakeholders.

Keywords: Clinical research, comparative effectiveness research, health maintenance organization, organizational change, program sustainability, data systems

Introduction

Since 1994, the Health Maintenance Organization Research Network (HMORN) has grown to include 18 clinical research centers in the United States and Israel that are embedded within integrated health care delivery systems. HMORN-based investigators have conducted multi-institutional epidemiological studies, comparative effectiveness research, randomized clinical trials, and health services research. Because of its longevity and productivity, the HMORN has been proposed as a model for other multi-institutional networks.1 Drawing on this 20-year experience, we propose a definition of sustainability for multi-institutional scientific networks and present a case study of the HMORN to illustrate 10 essential elements that can contribute to network sustainability.

Background and Context

Multi-institutional research networks are assuming a central role in clinical research in the United States.2,3 Studies from a single site are generally too small to identify differences in outcome across sociodemographic or clinical subgroups. The findings of single-site studies can also be affected by contextual factors such as the organization of care delivery, the characteristics of clinicians, or the quality and completeness of data. As a result, single-site clinical studies are increasingly viewed as pilot investigations to inform the design of definitive, multi-institutional research.

Academic researchers have developed multi-institutional scientific networks to recruit large cohorts of subjects and collect primary data for observational studies and intervention trials.4,5 Investigators in these networks typically share interest in a single disease or expertise in the design of epidemiological studies or Phase 3 clinical trials. Many of these networks lack organizational or financial expertise, particularly early in their development. As a result, essential research processes such as institutional review board (IRB) review, contracting, data sharing, and quality assurance often remain dependent on local norms. This lack of coordination too often leads to slow initiation of projects, failure to achieve timely recruitment targets, and prohibitive expense.1,2,4–6 Physical facilities, data infrastructure, and staff expertise are too seldom preserved or shared with other networks.

In response, scientific leaders have called for community-based, multi-institutional research networks that can study a broad array of health conditions, use electronic health records (EHRs) to identify and recruit subjects, incorporate data from clinical practice, and coordinate regulatory and administrative processes.4,7–9 Federally funded programs such as the Clinical and Translational Science Award (CTSA) program,3 the Electronic Data Methods initiative from the Agency for Healthcare Research and Quality (AHRQ),10 the National Institutes of Health (NIH)-sponsored Health Care Systems Pragmatic Clinical Trials Collaboratory,9 and the National Patient-Centered Clinical Research Network (PCORnet)11 have supported this transformation.

These funders also encourage scientific networks to diversify their sources of support to enhance sustainability.12 Few case studies have described durable multi-institutional scientific networks or identified characteristics that enhance their sustainability, however. In this paper, we draw on the 20-year experience of the HMORN to propose an operational definition of sustainability and identify 10 essential elements that can contribute to network sustainability.

The HMORN: A Case Study of a Sustainable Research Network

The HMORN was founded in 1994 by 10 research departments within integrated delivery systems in the United States.13 Currently, the HMORN includes community-based research departments or institutes in 18 integrated delivery systems in the United States and Israel (Table 1). In 2012, these delivery systems maintained EHRs and administrative information for 15.7 million members, including large cohorts of individuals with common and rare diseases. For example, the HMORN-based SUPREME-DM network has access to EHRs and other data for 1.1 million individuals with diabetes.14 These systems are able to identify members who are eligible to receive services in the delivery system, and not just patients, the subset of members who use clinical services. This denominator strengthens epidemiological studies, clinical interventions, and health services research within the HMORN.

Table 1.

HMO Research Network Members - Health Systems and Affiliated Research Centers

| Health System | Year Founded | Research Center | Year Founded |
|---|---|---|---|
| Essentia Health | 1997 | Essentia Institute of Rural Health | 2010 |
| Geisinger Health System | 1915 | Geisinger Center for Health Research | 2003 |
| Group Health Cooperative | 1954 | Group Health Research Institute | 1983 |
| Harvard Pilgrim Health Care | 1969 | Harvard Pilgrim Health Care Institute | 1993 |
| HealthPartners | 1957 | HealthPartners Institute for Education and Research | 1990 |
| Henry Ford Health System / Health Alliance Plan | 1915 | Henry Ford Health System Research Centers | Varies |
| Kaiser Foundation Hospitals – Hawaii | 1958 | The Center for Health Research – Hawaii | 1999 |
| Kaiser Permanente Colorado | 1968 | Institute for Health Research | 1992 |
| Kaiser Permanente Georgia | 1985 | The Center for Health Research – Southeast | 1998 |
| Kaiser Permanente Northern California | 1945 | Division of Research | 1961 |
| Kaiser Permanente Northwest | 1945 | The Center for Health Research – Northwest | 1964 |
| Kaiser Permanente Southern California | 1953 | Department of Research & Evaluation | 1963 |
| Maccabi Healthcare Services | 1941 | Maccabi Institute for Health Services Research | 2004 |
| Marshfield Clinic / Security Health Plan of Wisconsin | 1916 | Marshfield Clinic Research Foundation | 1959 |
| Mid-Atlantic Permanente Medical Group | 1980 | Mid-Atlantic Permanente Research Institute | 1999 |
| Palo Alto Medical Foundation | 1930 | Palo Alto Medical Foundation Research Institute | 1950 |
| Reliant Medical Group / Fallon Community Health Plan | 1929 | Meyers Primary Care Institute | 1996 |
| Scott & White Healthcare / Scott & White Health Plan | 1897 | Scott & White Center for Applied Health Research | 2010 |

The HMORN has assessed modest membership dues since 2009, based on the size of the research department and its activity in the HMORN. These resources support part-time staff who manage network operations, finances, and communications, coordinate volunteer working groups that develop new data resources, and plan an annual HMORN national conference that convenes the Governing Board, scientific networks, programmers, research administrators, and IRB leadership.15 HMORN staff is distributed among member sites, and financial management is provided by the HealthPartners Institute for Education and Research. All HMORN members are expected to develop a virtual data warehouse (VDW) to facilitate collaboration across institutions, and to participate in network governance and scientific activities.

In aggregate, HMORN research centers employ over 1,450 scientists and staff. In 2012 these centers received over $340 million from federal agencies and other extramural sources, although not all that funding supported multi-institutional research. While the HMORN does not track the publications of all its members, investigators from Kaiser Permanente published over 1,000 scientific papers in peer-reviewed journals in 2012,16 and investigators from the Group Health Research Institute published over 350 papers in 2013.

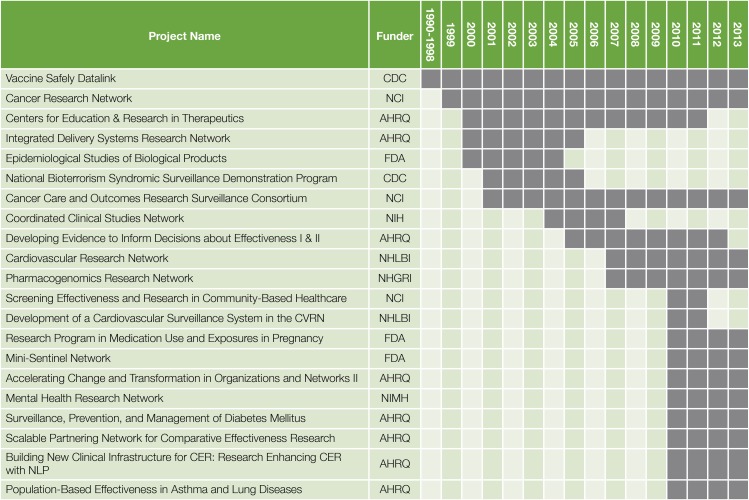

Over its 20-year history, the HMORN has received substantial federal funding to support its scientific networks (Figure 1). Initial support was provided by the Vaccine Safety Datalink, funded by the Centers for Disease Control and Prevention in 1990.17 The Cancer Research Network (CRN), funded by the National Cancer Institute in 1999,18 and the Centers for Education and Research in Therapeutics (CERTs), funded by AHRQ in 2000,19 refined data models and governance approaches, and established new relationships among HMORN sites. Support from the National Cancer Institute has also allowed the CRN to maintain a secure document repository, to maintain data dictionaries, and to establish an “issue tracker” tool to chronicle data problems. Funding from the NIH Roadmap Initiative in 2004 helped the HMORN refine its approach to data governance, contracting and IRB review.20,21 Based on this infrastructure, HMORN investigators led 3 of 11 projects funded by AHRQ under its electronic data infrastructure and methods initiative from 2010–2013,14,22,23 and are leading or participating in 5 of 7 pragmatic clinical trials in the NIH Health Systems Collaboratory.9 A network of 9 HMORN sites (along with one safety-net delivery system) is also participating in PCORnet.

Figure 1.

Select Multi-institutional Research Projects of the HMO Research Network

- AHRQ = Agency for Healthcare Research & Quality

- CDC = Centers for Disease Control and Prevention

- FDA = U.S. Food and Drug Administration

- NHGRI = National Human Genome Research Institute

- NHLBI = National Heart, Lung, and Blood Institute

- NIH = National Institutes of Health

- NIMH = National Institute of Mental Health

HMORN members have collaborated extensively with academic investigators. In 2012, 9 of the 17 HMORN sites in the United States partnered with academic centers through CTSA awards.24 HMORN investigators have participated in federally funded randomized clinical trials such as the Diabetes Prevention Program Research Group,25 the ACCORD Study Group,26 and the ALLHAT Trial.27 Several HMORN sites have developed biobanking resources that enhance collaboration with academic partners in genomics research.28,29

A Definition of Sustainability for Scientific Networks

Over this 20-year period, HMORN investigators have published papers that describe many aspects of network organization. To develop a definition of sustainability for scientific networks and identify essential components of these networks that might enhance sustainability, we reviewed these papers from a publicly available bibliography compiled by HMORN staff through 2012.30

Our operational definition of sustainability has two parts. First, sustainability requires the development and enhancement of shared research assets to facilitate a sequence of research studies in a specific content area or multiple areas. Second, sustainability requires a community of researchers and other stakeholders who reuse and develop those assets.

The first part of the definition focuses on the technical infrastructure of a scientific network, while the second part emphasizes its community of scientists and stakeholders.

Elements of Sustainability for Scientific Networks

Sustaining a robust multi-institutional research network requires attention to 10 specific areas that align with our definition of sustainability (Table 2): fair and transparent network governance; trustworthy data and strategies for data sharing; shared knowledge about research tools and approaches; administrative efficiency; adequate physical infrastructure; predictable infrastructure funding; a clearly defined mission, vision and values; protection of human subjects; a culture of collaboration; and strong relationships with host organizations.

Table 2.

A Definition of Sustainability for Multi-Institutional Research Networks and the Essential Elements to Achieve It

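| Definition/Essential Element |
|---|
| Definition: Development and enhancement of shared research assets to facilitate a sequence of research studies in a specific content area or in multiple areas. |
| Essential Elements: network governance; trustworthy data and strategies for data sharing; shared knowledge about research tools and approaches; administrative efficiency; physical infrastructure; infrastructure funding. |
| Definition: A group of researchers and other stakeholders willing to reuse and further develop those assets. |
| Essential Elements: a clearly defined mission, vision, and values; protection of human subjects; a culture of collaboration; strong relationships with host organizations. |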

Shared Research Assets

Network governance

The HMORN is a “network of networks,” with a central governance structure that supports topic-specific scientific networks. Each scientific network adapts the HMORN governance model to meet its internal needs.19,21,31–34 As stated in its bylaws, the HMORN is led by a Governing Board (GB) that comprises the director of each research center or their representative. The GB elects a chair, vice-chair, and Executive Committee to serve two-year terms. The GB is responsible for HMORN strategic planning, management of member dues, membership applications and evaluation, and internal and external communications. The GB may assist in the selection of leaders for scientific networks, but final authority resides with the investigators in that network. The GB delegates oversight of data resources, administrative and research tools, and approaches to human subjects review to an Asset Stewardship Committee that includes directors of scientific networks and other senior investigators and staff.

Data and data sharing

The data infrastructure of the HMORN is based on a common data model, standardized processes for improving data quality and validity, and governance of data sharing.

Data models from early scientific networks were unified into the HMORN virtual data warehouse (VDW) in 2004.31 Each HMORN site imports information from EHRs and other clinical and administrative sources into a set of VDW data tables with standardized variable names, labels, definitions, and coding. The VDW provides a common backbone for diverse studies, freeing individual projects to develop new variables to address specific scientific questions.32,34 As a result, the content and organization of the VDW continue to evolve.35
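The idea of a common data model can be illustrated with a minimal sketch. It assumes a single site whose local field names and codes are mapped into a shared layout. The field names, code values, and the `to_standard_row` helper are all hypothetical and are not taken from the actual VDW specification.

```python
# Hypothetical mapping from one site's local field names and codes to a
# shared, VDW-style layout. None of these names come from the real VDW.
SITE_A_FIELD_MAP = {"pt_id": "member_id", "sex_cd": "sex", "dob": "birth_date"}
SITE_A_SEX_CODES = {"1": "M", "2": "F"}

STANDARD_COLUMNS = ("member_id", "sex", "birth_date")


def to_standard_row(local_row, field_map, sex_codes):
    """Rename local fields and recode values into the shared layout."""
    row = {field_map[name]: value for name, value in local_row.items() if name in field_map}
    # Unknown local codes are mapped to "U" rather than guessed.
    row["sex"] = sex_codes.get(row.get("sex"), "U")
    # Every standardized row carries the same columns, in the same order.
    return {column: row.get(column) for column in STANDARD_COLUMNS}


local_rows = [
    {"pt_id": "A001", "sex_cd": "2", "dob": "1950-03-14", "clinic": "North"},
    {"pt_id": "A002", "sex_cd": "9", "dob": "1962-11-02", "clinic": "South"},
]
standard_rows = [to_standard_row(r, SITE_A_FIELD_MAP, SITE_A_SEX_CODES) for r in local_rows]
```

In this sketch, site-specific fields such as `clinic` stay in the local system, while a project that needs them could define a new variable on top of the shared columns.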

Since most data used in HMORN studies are derived from routine patient care and operations, researchers have little ability to improve data quality at the point of collection. In response to concerns about the validity of clinically derived data,36 the HMORN has developed approaches for understanding data provenance37 and improving data quality within the VDW.17,19,31,38–40 These approaches informed a recent conceptual model of iterative data quality assessment.41 Each HMORN organization first assesses the quality and completeness of its data using standardized approaches. When data from multiple sites are aggregated, site-level discrepancies that cannot be attributed to clinical variation prompt additional data quality improvement within sites. Data quality problems at either step can lead to a review of the processes for extracting, transforming, and loading (ETL) data into the research data set. Where necessary, data are also compared with external sources such as medical records or tumor registries to assess the validity of specific data elements42–52 and to validate clinical phenotypes defined by combinations of administrative codes, laboratory findings, and medication fills.14,44,50,51,53
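The two-step assessment described above can be sketched as follows. This is an illustrative simplification, not the published model: the `completeness` and `flag_outlier_sites` functions, the 50 percent tolerance, and the prevalence figures are all assumptions chosen for the example.

```python
from statistics import median


def completeness(rows, field):
    """Step 1, within a site: share of rows with a non-missing value for `field`."""
    if not rows:
        return 0.0
    return sum(1 for row in rows if row.get(field) not in (None, "")) / len(rows)


def flag_outlier_sites(site_rates, tolerance=0.5):
    """Step 2, across sites: flag sites whose rate differs from the
    cross-site median by more than `tolerance` (as a fraction of the median)."""
    mid = median(site_rates.values())
    if mid == 0:
        return sorted(site for site, rate in site_rates.items() if rate != 0)
    return sorted(
        site for site, rate in site_rates.items()
        if abs(rate - mid) / mid > tolerance
    )


# Illustrative numbers only: diabetes prevalence per 100 members by site.
prevalence = {"site_a": 8.1, "site_b": 7.6, "site_c": 2.9, "site_d": 8.4}
flagged = flag_outlier_sites(prevalence)

# Illustrative within-site check: half of these rows have a usable value.
lab_rows = [{"hba1c": 7.2}, {"hba1c": None}, {"hba1c": ""}, {"hba1c": 6.5}]
hba1c_completeness = completeness(lab_rows, "hba1c")
```

A flag here only prompts review. Deciding whether a discrepancy reflects true clinical variation or a data problem still requires local knowledge at the site.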

The HMORN uses a distributed model of data sharing in which primary data are retained within the host organizations. Only study-specific, de-identified, or limited data sets are shared for analysis.22,33,54–57 This distributed model protects data privacy, reduces proprietary concerns of host organizations, permits sites to authorize data queries, and engages local knowledge to facilitate data interpretation.58 In recent years, funding from AHRQ and the U.S. Food and Drug Administration (FDA) supported the development of query tools to facilitate study planning and data sharing for research and public health surveillance.22,34,56,57 Extensive tests of PopMedNet, the query tool for the FDA Mini-Sentinel network, have shown that data queries received accurate responses within two days.57
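A minimal sketch of the distributed pattern follows, under the assumption that each site evaluates a query against its own rows and returns only an aggregate count. The `Site` class and `distributed_count` function are hypothetical and do not represent the PopMedNet interface.

```python
from dataclasses import dataclass, field


@dataclass
class Site:
    name: str
    rows: list = field(repr=False)   # row-level data never leaves the site
    approves_queries: bool = True    # stands in for local authorization

    def run_count(self, predicate):
        """Run the query locally and return only an aggregate count."""
        if not self.approves_queries:
            return None
        return sum(1 for row in self.rows if predicate(row))


def distributed_count(sites, predicate):
    """Collect per-site counts; sites that decline are reported separately."""
    counts, declined = {}, []
    for site in sites:
        result = site.run_count(predicate)
        if result is None:
            declined.append(site.name)
        else:
            counts[site.name] = result
    return {"total": sum(counts.values()), "by_site": counts, "declined": declined}


# Illustrative data only.
sites = [
    Site("site_a", [{"age": 67, "dx": "diabetes"}, {"age": 45, "dx": "asthma"}]),
    Site("site_b", [{"age": 70, "dx": "diabetes"}, {"age": 72, "dx": "diabetes"}]),
    Site("site_c", [{"age": 80, "dx": "diabetes"}], approves_queries=False),
]
summary = distributed_count(sites, lambda r: r["dx"] == "diabetes" and r["age"] >= 65)
```

The coordinating function sees counts and site names but never the underlying rows, which mirrors the privacy and authorization properties attributed to the distributed model.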

Knowledge about research tools and approaches

The HMORN has developed useful research tools such as recruitment materials, computer code, surveys, and dissemination strategies.59–63 The HMORN has not developed a consistent strategy for sharing this knowledge, however. One group of HMORN investigators developed a web-based compendium of research resources in collaboration with several academic centers through the CTSA program, but dissemination was limited and the project was not sustained.59,64,65

Communication tools that connect researchers and disseminate opportunities to participate in new projects are another aspect of knowledge management. HMORN researchers are linked through a directory of HMORN investigators and scientific interest groups. External investigators can seek collaboration with HMORN scientists through the HMORN website. The HMORN website, monthly newsletter, listservs, and committees also disseminate knowledge and opportunities.

Administrative efficiency

Historically, business processes such as contracting, data sharing agreements, and financial closeout of studies differed between research institutes. Inefficiencies at the local level were compounded when these administrative activities were carried out across multiple organizations.34,59,64 In recent years, HMORN administrators have developed standard templates for contracts and data use agreements. As a result of these efforts, the time needed to execute contracts within Kaiser Permanente Colorado, for example, has decreased by almost 90 percent since 2010. Although metrics for assessing administrative processes such as contracting are increasingly used, such information is not yet consistently collected or reported across the network.

Physical infrastructure

Observational epidemiology, comparative effectiveness and patient-centered outcomes research, pragmatic clinical trials, and health services research require little physical infrastructure beyond office space and computing resources. Some HMORN sites also have research clinics and staff to conduct Phase 3 clinical trials of new drugs and devices. Several HMORN sites have established biorepositories, but these efforts have not been coordinated across HMORN members to date.28,29

Infrastructure funding

Most HMORN assets were initially developed for studies in a specific content area. In recent years, member dues have enabled the HMORN to curate these assets and adapt them into broadly useful tools. Even though funding devoted to the HMORN is much less than the direct and indirect research funding of the individual research centers, these funds have proven essential to coordinate network growth and collaboration. Figure 1 shows that external support for the HMORN can be characterized as a “relay race,” in which different funders have supported scientific networks for variable periods of time. Even these network grants and the indirect costs associated with individual research awards such as R01s have not provided sufficient funding to sustain the Network, however. All HMORN sites receive variable amounts of direct or in-kind support from their host health systems, which underwrites activities such as management of tumor registries that satisfy regulatory requirements while providing high-quality data for research. Most HMORN members also evaluate internally funded programs developed by operational or clinical leaders to improve care delivery.66

Relationships Among Researchers and Stakeholders

The scientific community of the HMORN has been sustained through a shared mission, vision and values, common strategies to protect the privacy and security of members and their data, relationship-building among researchers, and engagement with the host delivery systems.

Mission, vision, and values

The 2012 update of the mission, vision and values of the HMORN is shown in Table 3. These core elements emphasize the importance of conducting public-domain research to benefit both the health plans and the broader community.

Table 3.

HMORN Mission, Vision, and Values

| Element | Statement |
|---|---|
| Mission | To improve individual and population health through research that connects the resources and capabilities of learning health care systems. |
| Vision | The HMO Research Network is the nation’s preeminent source of population-based research that measurably improves health and health care. |
| Values | |

Protection of human subjects

Efficient and consistent IRB review across a network is an essential component of sustainability. A decade ago, HMORN investigators described problems in human subjects review that were similar to other multi-institutional studies or consortia. For example, Greene and colleagues described the variability in IRB reviews across six HMORN sites for a low-risk, mailed survey on cancer survivorship.67 Two to eight revisions were necessary to finalize the protocol, delaying initiation of the study and increasing costs. Although the HMORN does not use a central IRB, local IRB administrators convene on a regular basis to develop common approaches to oversight of multi-institutional studies. In general, the IRB for the lead research site serves as the primary IRB for data-only studies, and other participating sites cede oversight to that IRB. Local IRB oversight remains the norm for studies that involve patient contact, even if minimal risk.14,68,69 Differences in IRB management software across organizations continue to hamper efforts to develop common metrics to assess improvement in IRB efficiency.

A culture of collaboration

Although the importance of organizational culture is widely acknowledged, neither the HMORN nor other multi-institutional networks have systematically described their cultures or the effects of these on scientific productivity or administrative efficiency. The HMORN is a loose federation, since it is unincorporated and has no financial resources beyond membership dues. The leadership structure is consensus-based and lacks formal authority over the decisions of individual scientists or research centers. As in most organizations, many critical aspects of the culture of the HMORN are independent of its governance. For example, an important bond between HMORN investigators is their decision to base research careers within community-based delivery systems rather than academic medical centers. Collaboration among the highly trained and dedicated project managers, programmers, statisticians, and administrators in the HMORN is also critical to sustainability, since these individuals maintain the institutional memory of that network across projects and over time.

Relationships with host organizations

The health systems that host HMORN research departments differ substantially in their organizational support for research. Many HMORN-based researchers maintain clinical practices within their systems, allowing them to align research with the needs of the broader organization. Clinical trial units in many HMORN research departments offer opportunities for full-time clinicians to participate in research.

Surveys of primary care physicians provide one method for assessing organizational priorities, and have achieved response rates up to 91 percent.70 Four studies have assessed the interest and concerns of HMO leaders, clinicians, and members about traditional or cluster-randomized trials within the health plan.71–74 Clinicians were enthusiastic about participation as long as their time demands were not excessive and research staff could complete IRB applications and manage timelines and budgets on their behalf.71,73 Organizational leaders raised concerns about the financial impact of clinical trials, the alignment of trials with their organizational mission, and the impact on member satisfaction of informing members about their eligibility for trials. Health plan members worried that participating in trials might jeopardize their care, although these concerns could be alleviated by a trusted physician.74 In response to such operational concerns, successful clinical trials within the HMORN generally are designed to minimize the impact on routine clinical workflow.75

The conceptual model of the Learning Healthcare System emphasizes that ongoing organizational input promotes rapid dissemination of research findings into practice.76 Although examples of successful organizationally driven research within the HMORN have been published,66,77,78 the Network has not developed a systematic approach to identifying and reporting collaborative projects between researchers and clinical/operational leaders.

Discussion

Our proposed definition and essential elements of sustainability demonstrate that sustaining the HMORN has required attention to a broad array of technical and cultural issues. Even after 20 years, the HMORN has developed only provisional solutions to some of these concerns. We recognize that networks based in academic medical centers or community practices that have different organizational structures and incentives may need to address different elements of sustainability. Regardless of the structure of the network, the rapid pace of change in science and technology will require constant reevaluation of these fundamental issues.

A lack of information-sharing between networks is a barrier to sustainability in its own right. Few of the thousands of scientific papers published by HMORN investigators have addressed organizational issues.30 Other practice-based research networks, cooperative clinical trial groups, and academic medical centers (through Clinical and Translational Science Awards) have also reported strategies to improve efficiency and governance.3,21,79–84 Areas of current emphasis in these settings include data models,85,86 approaches to querying multiple sites in planning for research studies,87 and strategies for accelerating human subjects review.88 The literature on network sustainability is limited in part because few journals solicit papers that describe the organization and management of research. Substantial publication bias is also likely, since unsustainable networks and ineffective strategies are unlikely to be reported. Finally, project managers, administrators, and IRB leaders who develop innovative solutions to network problems lack incentives to publish their work.

Metrics to assess the efficiency of conducting research are needed. For example, a common definition of the time necessary to complete an IRB review could generate measures that can be used internally to improve efficiency while providing external accountability to funders and other stakeholders. Measurement strategies for improving business processes in other fields have rarely been applied to multi-institutional health care research, but can also enhance internal and external credibility.81
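As an illustration of what a common definition might look like, the sketch below treats IRB review time as the number of calendar days from submission to final approval and summarizes it with a median. This definition, the function names, and the dates are assumptions made for the example, not an HMORN standard.

```python
from datetime import date
from statistics import median


def review_days(submitted, approved):
    """Calendar days from submission to final approval (one possible definition)."""
    return (approved - submitted).days


def median_review_days(reviews):
    """Median turnaround across reviews, given (submitted, approved) date pairs."""
    return median(review_days(s, a) for s, a in reviews)


# Illustrative dates only.
reviews = [
    (date(2013, 1, 7), date(2013, 2, 4)),
    (date(2013, 3, 1), date(2013, 3, 15)),
    (date(2013, 5, 6), date(2013, 7, 1)),
]
turnaround = median_review_days(reviews)
```

Other definitions are equally plausible, such as counting only business days or excluding time spent waiting for investigator responses. The point is that sites would need to agree on one before results can be compared.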

The Patient-Centered Outcomes Research Institute (PCORI) and other funding agencies are demanding that researchers involve patients, clinicians, operational leaders, and community members in designing, conducting, interpreting, and disseminating research. Such stakeholders may also contribute to long-term network sustainability. Including a token number of patients on network advisory committees may no longer suffice in an era when delivery systems can rapidly conduct large surveys of their members, and virtual communities of patients and caregivers share information and identify topics for research.89 Closer relationships between research and operational activities such as quality improvement or business analytics may also help improve the sustainability of these networks. Ideally, the relationship between networks and their stakeholders can become a “virtuous circle” in which stakeholder input into research enhances the value of research findings, which promotes further stakeholder trust and engagement.

Conclusions and Next Steps

Ultimately, the decision to conduct research within a multi-institutional network is based on both pragmatism and trust. Pragmatically, researchers will join networks that meet their scientific needs and improve the efficiency of research. To do so, they must develop trust in the quality of data within and between sites, the fairness and transparency of network policies and dispute resolution, the willingness of other researchers to share resources and scientific credit, and the efficiency of scientific and regulatory activities. In a recent paper, Holve proposed four “pillars of sustainability” for networks conducting research and quality improvement.12 Drawing on the experience of networks funded through the AHRQ Electronic Data Methods Forum, she identified these foundational elements: trust and value, governance, management, and financial and administrative support. Her conceptual model and our operational view of sustainability are well aligned. Case studies of other scientific networks will be essential to confirm these models of sustainability and assess their applicability to networks that vary in size or membership. By developing a strategy for sustainability, multi-institutional scientific networks can assure that they operate efficiently, remain relevant to the needs of their stakeholders, and promote scientific excellence.

Acknowledgments

This work was supported by the AcademyHealth Electronic Data Methods (EDM) Forum, a project supported by the Agency for Healthcare Research and Quality (AHRQ) through the American Recovery & Reinvestment Act of 2009, Grant U13 HS19564-01. Additional support was provided by Grants R01HS019859 and R01HS022963 from the Agency for Healthcare Research and Quality. The content is solely the responsibility of the authors and does not necessarily represent the official views of the Agency for Healthcare Research and Quality.

References

- 1. Tunis SR, Stryer DB, Clancy CM. Practical clinical trials: increasing the value of clinical research for decision making in clinical and health policy. JAMA. 2003;290:1624–1632. doi: 10.1001/jama.290.12.1624. PMID: 14506122.

- 2. Zerhouni EA. Translational and clinical science--time for a new vision. N Engl J Med. 2005;353:1621–1623. doi: 10.1056/NEJMsb053723. PMID: 16221788.

- 3. Rosenblum D, Alving B. The role of the clinical and translational science awards program in improving the quality and efficiency of clinical research. Chest. 2011;140:764–767. doi: 10.1378/chest.11-0710. PMID: 21896519.

- 4. Manolio TA, Weis BK, Cowie CC, et al. New models for large prospective studies: is there a better way? Am J Epidemiol. 2012;175:859–866. doi: 10.1093/aje/kwr453. PMID: 22411865.

- 5. Szefler SJ, Chinchilli VM, Israel E, et al. Key observations from the NHLBI Asthma Clinical Research Network. Thorax. 2012;67:450–455. doi: 10.1136/thoraxjnl-2012-201876. PMID: 22514237.

- 6. Moses H III, Dorsey ER. Biomedical research in an age of austerity. JAMA. 2012;308:2341–2342. doi: 10.1001/jama.2012.14846. PMID: 23240142.

- 7. Darbyshire J, Sitzia J, Cameron D, et al. Extending the clinical research network approach to all of healthcare. Ann Oncol. 2011;22(Suppl 7):vii36–vii43. doi: 10.1093/annonc/mdr424. PMID: 22039143.

- 8. Anderson N, Abend A, Mandel A, et al. Implementation of a deidentified federated data network for population-based cohort discovery. J Am Med Inform Assoc. 2012;19:e60–e67. doi: 10.1136/amiajnl-2011-000133.

- 9. Califf RM, Platt R. Embedding cardiovascular research into practice. JAMA. 2013;310:2037–2038. doi: 10.1001/jama.2013.282771. PMID: 24240926.

- 10. Holve E, Calonge N. Lessons from the Electronic Data Methods Forum: collaboration at the frontier of comparative effectiveness research, patient-centered outcomes research, and quality improvement. Med Care. 2013;51:S1–S3. doi: 10.1097/MLR.0b013e31829c518f. PMID: 23793048.

- 11. Patient-Centered Outcomes Research Institute (PCORI). 2014. [Cited 2014 February 25]. Available from: http://www.pcori.org/2013/pcori-awards-93-5-million-to-develop-national-network-to-support-more-efficient-patient-centered-research/

- 12.Holve E. Ensuring support for research and quality improvement (QI) networks: four pillars of sustainability - an emerging framework. eGEMS (Generating Evidence & Methods to IMprove Patient Outcomes) 2013;1 doi: 10.13063/2327-9214.1005. Article 5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Vogt TM, Elston-Lafata J, Tolsma D, Greene SM. The role of research in integrated healthcare systems: the HMO Research Network. Am J Manag Care. 2004;10:643–648. PM:15515997. [PubMed] [Google Scholar]

- 14.Nichols GA, Desai J, Elston LJ, et al. Construction of a multisite DataLink using electronic health records for the identification, surveillance, prevention, and management of diabetes mellitus: the SUPREME-DM project. Prev Chronic Dis. 2012;9:E110. doi: 10.5888/pcd9.110311. PM:22677160. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Copeland LA, Zeber JE. Advancing research in the era of healthcare reform: the 19th annual HMO Research Network Conference, April 16–18, 2013, San Francisco, California. Clin Med Res. 2013;11:120–122. doi: 10.3121/cmr.2013.1175. PM:24085855. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Kaiser Permanente KP Publications Library. 2014. [Cited 2014 February 25]. Available from: https://kpresearchpublications.kp.org/

- 17.Chen RT, Glasser JW, Rhodes PH, et al. Vaccine Safety Datalink project: a new tool for improving vaccine safety monitoring in the United States. The Vaccine Safety Datalink Team. Pediatrics. 1997;99:765–773. doi: 10.1542/peds.99.6.765. PM:9164767. [DOI] [PubMed] [Google Scholar]

- 18.Wagner EH, Greene SM, Hart G, et al. Building a research consortium of large health systems: the Cancer Research Network. J Natl Cancer Inst Monogr. 2005:3–11. doi: 10.1093/jncimonographs/lgi032. PM:16287880. [DOI] [PubMed] [Google Scholar]

- 19.Platt R, Davis R, Finkelstein J, et al. Multicenter epidemiologic and health services research on therapeutics in the HMO Research Network Center for Education and Research on Therapeutics. Pharmacoepidemiol Drug Saf. 2001;10:373–377. doi: 10.1002/pds.607. PM:11802579. [DOI] [PubMed] [Google Scholar]

- 20.Greene SM, Larson EB, Boudreau DM, et al. The coordinated clinical studies network: a multidisciplinary alliance to facilitate research and improve care. Perm J. 2005;9:33–35. doi: 10.7812/tpp/05-100. PM:22811643. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Williams RL, Johnson SB, Greene SM, et al. Signposts along the NIH roadmap for reengineering clinical research: lessons from the Clinical Research Networks initiative. Arch Intern Med. 2008;168:1919–1925. doi: 10.1001/archinte.168.17.1919. PM:18809820. [DOI] [PubMed] [Google Scholar]

- 22.Toh S, Platt R, Steiner JF, Brown JS. Comparative-effectiveness research in distributed health data networks. Clin Pharmacol Ther. 2011;90:883–887. doi: 10.1038/clpt.2011.236. PM:22030567. [DOI] [PubMed] [Google Scholar]

- 23.Randhawa GS, Slutsky JR. Building sustainable multi-functional prospective electronic clinical data systems. Med Care. 2012;50(Suppl):S3–S6. doi: 10.1097/MLR.0b013e3182588ed1. PM:22692255. [DOI] [PubMed] [Google Scholar]

- 24.Institute of Medicine of the National Academies. The CTSA Program at NIH: Opportunities for advancing clinical and translational research. 2013 Jun 25. [Cited 2014 February 25]. Available from: http://www.iom.edu/~/media/Files/Report%20Files/2013/CTSA-Review/CTSA-Review-RB.pdf. [PubMed]

- 25.Knowler WC, Barrett-Connor E, Fowler SE, et al. Reduction in the incidence of type 2 diabetes with lifestyle intervention or metformin. N Engl J Med. 2002;346:393–403. doi: 10.1056/NEJMoa012512. PM:11832527. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Gerstein HC, Miller ME, Genuth S, et al. Long-term effects of intensive glucose lowering on cardiovascular outcomes. N Engl J Med. 2011;364:818–828. doi: 10.1056/NEJMoa1006524. PM:21366473. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.ALLHAT Collaborative Research Group. Major outcomes in high-risk hypertensive patients randomized to angiotensin converting enzyme inhibitor or calcium channel blocker vs diuretic: The Antihypertensive and Lipid-lowering Treatment to Prevent Heart Attack Trial (ALLHAT). JAMA. 2002 Dec 18;288:2981. doi: 10.1001/jama.288.23.2981. [DOI] [PubMed] [Google Scholar]

- 28.Schaefer C, RPGEH GO Project Collaboration. C-A3-04: The Kaiser Permanente research program on genes, environment and health: A resource for genetic epidemiology in adult health and aging. Clin Med Res. 2011;9:177–178. [Google Scholar]

- 29.McCarty CA, Chisholm RL, Chute CG, et al. The eMERGE Network: a consortium of biorepositories linked to electronic medical records data for conducting genomic studies. BMC Med Genomics. 2011;4:13. doi: 10.1186/1755-8794-4-13. PM:21269473. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.HMO Research Network HMORN: Infrastructure bibliography and website materials. 2014. [Cited 2014 March 4]. Available from: http://www.hmoresearchnetwork.org/en/Tools%20&%20Materials/Communications/HMORN_Infrastructure_Bibliography-with-Abstracts.docx.

- 31.Hornbrook MC, Hart G, Ellis JL, et al. Building a virtual cancer research organization. J Natl Cancer Inst Monogr. 2005:12–25. doi: 10.1093/jncimonographs/lgi033. PM:16287881. [DOI] [PubMed] [Google Scholar]

- 32.Magid DJ, Gurwitz JH, Rumsfeld JS, Go AS. Creating a research data network for cardiovascular disease: the CVRN. Expert Rev Cardiovasc Ther. 2008;6:1043–1045. doi: 10.1586/14779072.6.8.1043. PM:18793105. [DOI] [PubMed] [Google Scholar]

- 33.Baggs J, Gee J, Lewis E, et al. The Vaccine Safety Datalink: a model for monitoring immunization safety. Pediatrics. 2011;127(Suppl 1):S45–S53. doi: 10.1542/peds.2010-1722H. PM:21502240. [DOI] [PubMed] [Google Scholar]

- 34.Sittig DF, Hazlehurst BL, Brown J, et al. A survey of informatics platforms that enable distributed comparative effectiveness research using multi-institutional heterogenous clinical data. Med Care. 2012;50(Suppl):S49–S59. doi: 10.1097/MLR.0b013e318259c02b. PM:22692259. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Ross TR, Ng D, Brown JS, et al. The HMO Research Network Virtual Data Warehouse: A Public Data Model to Support Collaboration. eGEMS (Generating Evidence & Methods to IMprove Patient Outcomes) 2014;2 doi: 10.13063/2327-9214.1049. Article 2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Hersh WR, Weiner MG, Embi PJ, et al. Caveats for the use of operational electronic health record data in comparative effectiveness research. Med Care. 2013;51:S30–S37. doi: 10.1097/MLR.0b013e31829b1dbd. PM:23774517. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Johnson KE, Kamineni A, Fuller S, Olmstead D, Wernli KJ. How the Provenance of Electronic Health Record Data Matters for Research: A Case Example Using System Mapping. eGEMS (Generating Evidence & Methods to IMprove Patient Outcomes) 2014;2 doi: 10.13063/2327-9214.1058. Article 4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Ford ME, Hill DD, Nerenz D, et al. Categorizing race and ethnicity in the HMO Cancer Research Network. Ethn Dis. 2002;12:135–140. PM:11913601. [PubMed] [Google Scholar]

- 39.Smith N, Iyer RL, Langer-Gould A, et al. Health plan administrative records versus birth certificate records: quality of race and ethnicity information in children. BMC Health Serv Res. 2010;10:316. doi: 10.1186/1472-6963-10-316. PM:21092309. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.McCarthy NL, Gee J, Weintraub E, et al. Monitoring vaccine safety using the Vaccine Safety Datalink: utilizing immunization registries for pandemic influenza. Vaccine. 2011;29:4891–4896. doi: 10.1016/j.vaccine.2011.05.003. PM:21596088. [DOI] [PubMed] [Google Scholar]

- 41.Kahn MG, Raebel MA, Glanz JM, Riedlinger K, Steiner JF. A pragmatic framework for single-site and multisite data quality assessment in electronic health record-based clinical research. Med Care. 2012;50(Suppl):S21–S29. doi: 10.1097/MLR.0b013e318257dd67. PM:22692254. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Mullooly J, Drew L, DeStefano F, et al. Quality of HMO vaccination databases used to monitor childhood vaccine safety. Vaccine Safety DataLink Team. Am J Epidemiol. 1999;149:186–194. doi: 10.1093/oxfordjournals.aje.a009785. PM:9921964. [DOI] [PubMed] [Google Scholar]

- 43.Raebel MA, Ellis JL, Andrade SE. Evaluation of gestational age and admission date assumptions used to determine prenatal drug exposure from administrative data. Pharmacoepidemiol Drug Saf. 2005;14:829–836. doi: 10.1002/pds.1100. PM:15800957. [DOI] [PubMed] [Google Scholar]

- 44.Herrinton LJ, Liu L, Lafata JE, et al. Estimation of the period prevalence of inflammatory bowel disease among nine health plans using computerized diagnoses and outpatient pharmacy dispensings. Inflamm Bowel Dis. 2007;13:451–461. doi: 10.1002/ibd.20021. PM:17219403. [DOI] [PubMed] [Google Scholar]

- 45.Thwin SS, Clough-Gorr KM, McCarty MC, et al. Automated inter-rater reliability assessment and electronic data collection in a multi-center breast cancer study. BMC Med Res Methodol. 2007;7:23. doi: 10.1186/1471-2288-7-23. PM:17577410. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Aiello Bowles EJ, Tuzzio L, Ritzwoller DP, et al. Accuracy and complexities of using automated clinical data for capturing chemotherapy administrations: implications for future research. Med Care. 2009;47:1091–1097. doi: 10.1097/MLR.0b013e3181a7e569. PM:19648826. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Shui IM, Shi P, Dutta-Linn MM, et al. Predictive value of seizure ICD-9 codes for vaccine safety research. Vaccine. 2009;27:5307–5312. doi: 10.1016/j.vaccine.2009.06.092. PM:19616500. [DOI] [PubMed] [Google Scholar]

- 48.Greene SK, Shi P, Dutta-Linn MM, et al. Accuracy of data on influenza vaccination status at four Vaccine Safety Datalink sites. Am J Prev Med. 2009;37:552–555. doi: 10.1016/j.amepre.2009.08.022. PM:19944924. [DOI] [PubMed] [Google Scholar]

- 49.Arterburn DE, Alexander GL, Calvi J, et al. Body mass index measurement and obesity prevalence in ten U.S. health plans. Clin Med Res. 2010;8:126–130. doi: 10.3121/cmr.2010.880. PM:20682758. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Andrade SE, Moore Simas TA, Boudreau D, et al. Validation of algorithms to ascertain clinical conditions and medical procedures used during pregnancy. Pharmacoepidemiol Drug Saf. 2011;20:1168–1176. doi: 10.1002/pds.2217. PM:22020902. [DOI] [PubMed] [Google Scholar]

- 51.Scholes D, Yu O, Raebel MA, Trabert B, Holt VL. Improving automated case finding for ectopic pregnancy using a classification algorithm. Hum Reprod. 2011;26:3163–3168. doi: 10.1093/humrep/der299. PM:21911435. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Ritzwoller DP, Carroll N, Delate T, et al. Validation of Electronic Data on Chemotherapy and Hormone Therapy Use in HMOs. Med Care. 2012 Oct;51:e67–e73. doi: 10.1097/MLR.0b013e31824def85. PM:22531648. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53.Desai JR, Wu P, Nichols GA, Lieu TA, O’Connor PJ. Diabetes and asthma case identification, validation, and representativeness when using electronic health data to construct registries for comparative effectiveness and epidemiologic research. Med Care. 2012;50(Suppl):S30–S35. doi: 10.1097/MLR.0b013e318259c011. PM:22692256. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 54.Lazarus R, Yih K, Platt R. Distributed data processing for public health surveillance. BMC Public Health. 2006;6:235. doi: 10.1186/1471-2458-6-235. PM:16984658. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55.Maro JC, Platt R, Holmes JH, et al. Design of a national distributed health data network. Ann Intern Med. 2009;151:341–344. doi: 10.7326/0003-4819-151-5-200909010-00139. PM:19638403. [DOI] [PubMed] [Google Scholar]

- 56.Brown JS, Holmes JH, Shah K, Hall K, Lazarus R, Platt R. Distributed health data networks: a practical and preferred approach to multi-institutional evaluations of comparative effectiveness, safety, and quality of care. Med Care. 2010;48:S45–S51. doi: 10.1097/MLR.0b013e3181d9919f. PM:20473204. [DOI] [PubMed] [Google Scholar]

- 57.Curtis LH, Weiner MG, Boudreau DM, et al. Design considerations, architecture, and use of the Mini-Sentinel distributed data system. Pharmacoepidemiol Drug Saf. 2012;21(Suppl 1):23–31. doi: 10.1002/pds.2336. PM:22262590. [DOI] [PubMed] [Google Scholar]

- 58.Ray WA. Improving automated database studies. Epidemiology. 2011;22:302–304. doi: 10.1097/EDE.0b013e31820f31e1. PM:21464650. [DOI] [PubMed] [Google Scholar]

- 59.Greene SM, Baldwin LM, Dolor RJ, Thompson E, Neale AV. Streamlining research by using existing tools. Clin Transl Sci. 2011;4:266–267. doi: 10.1111/j.1752-8062.2011.00296.x. PM:21884513. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 60.The CCSN Survey and Recruitment Committee Recruitment Best Practices Guide: A guide to optimizing recruitment and data collection in multi-site studies. 2006. [Cited 2014 May 13]. Available from: http://www.hmoresearchnetwork.org/en/Tools%20&%20Materials/Plan_Field/HMORN_Recruitment&DataCollection.pdf.

- 61.Recommendations for improving provider participation in trials. 2012. [Cited 2014 February 25]. Available from: http://www.hmoresearchnetwork.org/en/Tools%20&%20Materials/Plan_Field/HMORN_ProviderParticipation_Recs.pdf.

- 62.HMORN Asset Stewardship Committee. HMORN Project Policy Best Practices: Publications & Authorship. 2011. [Cited 2014 February 25]. Available from: http://www.hmoresearchnetwork.org/en/Tools%20&%20Materials/ManagingResearch/HMORN-Project-Policy-Best-Practices_Publications.pdf.

- 63.Griffith JR, Fear KM, Lammers E, Banaszak-Holl J, Lemak CH, Zheng K. A positive deviance perspective on hospital knowledge management: analysis of Baldrige Award recipients 2002–2008. J Healthc Manag. 2013;58:187–203. PM:23821898. [PubMed] [Google Scholar]

- 64.Dolor RJ, Greene SM, Thompson E, Baldwin LM, Neale AV. Partnership-driven Resources to Improve and Enhance Research (PRIMER): a survey of community-engaged researchers and creation of an online toolkit. Clin Transl Sci. 2011;4:259–265. doi: 10.1111/j.1752-8062.2011.00310.x. PM:21884512. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 65.Clinical & Translational Science Awards (CTSA). Research toolkit: A toolkit for research in partnership with practices and communities. 2014. [Cited 2014 February 25]. Available from: www.researchtoolkit.org.

- 66.Reid RJ, Coleman K, Johnson EA, et al. The Group Health medical home at year two: cost savings, higher patient satisfaction, and less burnout for providers. Health Aff (Millwood) 2010;29:835–843. doi: 10.1377/hlthaff.2010.0158. PM:20439869. [DOI] [PubMed] [Google Scholar]

- 67.Greene SM, Geiger AM, Harris EL, et al. Impact of IRB requirements on a multicenter survey of prophylactic mastectomy outcomes. Ann Epidemiol. 2006;16:275–278. doi: 10.1016/j.annepidem.2005.02.016. PM:16005245. [DOI] [PubMed] [Google Scholar]

- 68.Greene SM, Braff J, Nelson A, Reid RJ. The process is the product: a new model for multisite IRB review of data-only studies. IRB. 2010;32:1–6. PM:20590050. [PubMed] [Google Scholar]

- 69.Marsolo K. Approaches to facilitate institutional review board approval of multicenter research studies. Med Care. 2012;50(Suppl):S77–S81. doi: 10.1097/MLR.0b013e31825a76eb. PM:22692264. [DOI] [PubMed] [Google Scholar]

- 70.Puleo E, Zapka J, White MJ, Mouchawar J, Somkin C, Taplin S. Caffeine, cajoling, and other strategies to maximize clinician survey response rates. Eval Health Prof. 2002;25:169–184. doi: 10.1177/016327870202500203. PM:12026751. [DOI] [PubMed] [Google Scholar]

- 71.Somkin CP, Altschuler A, Ackerson L, et al. Organizational barriers to physician participation in cancer clinical trials. Am J Manag Care. 2005;11:413–421. PM:16044978. [PubMed] [Google Scholar]

- 72.Mazor KM, Sabin JE, Boudreau D, et al. Cluster randomized trials: opportunities and barriers identified by leaders of eight health plans. Med Care. 2007;45:S29–S37. doi: 10.1097/MLR.0b013e31806728c4. PM:17909379. [DOI] [PubMed] [Google Scholar]

- 73.Somkin CP, Altschuler A, Ackerson L, et al. Cardiology clinical trial participation in community-based healthcare systems: obstacles and opportunities. Contemp Clin Trials. 2008;29:646–653. doi: 10.1016/j.cct.2008.02.003. PM:18397842. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 74.Mazor KM, Sabin JE, Goff SL, et al. Cluster randomized trials to study the comparative effectiveness of therapeutics: stakeholders’ concerns and recommendations. Pharmacoepidemiol Drug Saf. 2009;18:554–561. doi: 10.1002/pds.1754. PM:19402030. [DOI] [PubMed] [Google Scholar]

- 75.Magid DJ, Olson KL, Billups SJ, Wagner NM, Lyons EE, Kroner BA. A pharmacist-led, American Heart Association Heart360 Web-enabled home blood pressure monitoring program. Circ Cardiovasc Qual Outcomes. 2013;6:157–163. doi: 10.1161/CIRCOUTCOMES.112.968172. PM:23463811. [DOI] [PubMed] [Google Scholar]

- 76.Etheredge LM. A rapid-learning health system. Health Aff (Millwood) 2007;26:w107–w118. doi: 10.1377/hlthaff.26.2.w107. PM:17259191. [DOI] [PubMed] [Google Scholar]

- 77.Garrido T, Barbeau R. The Northern California perinatal research unit: a hybrid model bridging research, quality improvement and clinical practice. Perm J. 2010;14:51–56. doi: 10.7812/tpp/10-014. PM:20844705. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 78.Turner JA, Saunders K, Shortreed SM, et al. Chronic opioid therapy risk reduction initiative: impact on urine drug testing rates and results. J Gen Intern Med. 2014;29:305–311. doi: 10.1007/s11606-013-2651-6. PM:24142119. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 79.Hagen NA, Stiles CR, Biondo PD, et al. Establishing a multi-centre clinical research network: lessons learned. Curr Oncol. 2011;18:e243–e249. doi: 10.3747/co.v18i5.814. PM:21980256. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 80.Psaty BM, Larson EB. Investments in infrastructure for diverse research resources and the health of the public. JAMA. 2013;309:1895–1896. doi: 10.1001/jama.2013.3445. PM:23652519. [DOI] [PubMed] [Google Scholar]

- 81.Schweikhart SA, Dembe AE. The applicability of Lean and Six Sigma techniques to clinical and translational research. J Investig Med. 2009;57:748–755. doi: 10.2310/JIM.0b013e3181b91b3a. PM:19730130. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 82.Carpenter WR, Fortune-Greeley AK, Zullig LL, Lee SY, Weiner BJ. Sustainability and performance of the National Cancer Institute’s Community Clinical Oncology Program. Contemp Clin Trials. 2012;33:46–54. doi: 10.1016/j.cct.2011.09.007. PM:21986391. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 83.Mold JW, Lipman PD, Durako SJ. Coordinating centers and multi-practice-based research network (PBRN) research. J Am Board Fam Med. 2012;25:577–581. doi: 10.3122/jabfm.2012.05.110302. PM:22956693. [DOI] [PubMed] [Google Scholar]

- 84.MacKenzie SL, Wyatt MC, Schuff R, Tennenbaum JD, Anderson N. Practices and perspectives on building integrated data repositories: Results from a 2010 CTSA survey. J Am Med Inform Assoc. 2012;19:e124. doi: 10.1136/amiajnl-2011-000508. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 85.Murphy SN, Weber G, Mendis M, et al. Serving the enterprise and beyond with informatics for integrating biology and the bedside (i2b2) J Am Med Inform Assoc. 2010;17:124–130. doi: 10.1136/jamia.2009.000893. PM:20190053. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 86.Overhage JM, Ryan PB, Reich CG, Hartzema AG, Stang PE. Validation of a common data model for active safety surveillance research. J Am Med Inform Assoc. 2012;19:54–60. doi: 10.1136/amiajnl-2011-000376. PM:22037893. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 87.Weber GM, Murphy SN, McMurry AJ, et al. The Shared Health Research Information Network (SHRINE): a prototype federated query tool for clinical data repositories. J Am Med Inform Assoc. 2009;16:624–630. doi: 10.1197/jamia.M3191. PM:19567788. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 88.Mascette AM, Bernard GR, Dimichele D, et al. Are central institutional review boards the solution? The National Heart, Lung, and Blood Institute Working Group’s report on optimizing the IRB process. Acad Med. 2012;87:1710–1714. doi: 10.1097/ACM.0b013e3182720859. PM:23095928. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 89.Wicks P, Vaughan T, Heywood J. Subjects no more: what happens when trial participants realize they hold the power? BMJ. 2014;348:g368. doi: 10.1136/bmj.g368. PM:24472779. [DOI] [PMC free article] [PubMed] [Google Scholar]