Abstract

Introduction:

To date, little research has been published on the impact that the transition from paper-based record keeping to the use of electronic health records (EHR) has on performance on clinical quality measures. This study examines whether small, independent medical practices improved in their performance on nine clinical quality measures soon after adopting EHRs.

Methods:

Data abstracted by manual review of paper and electronic charts for 6,007 patients across 35 small primary care practices were used to calculate rates of nine clinical quality measures from two years before to up to two years after EHR adoption.

Results:

For seven measures, population-level performance rates did not change before EHR adoption. Rates of antithrombotic therapy and smoking status recorded increased soon after EHR adoption; increases in blood pressure control occurred later. Rates of hemoglobin A1c testing, BMI recorded, and cholesterol testing decreased before rebounding; smoking cessation intervention, hemoglobin A1c control and cholesterol control did not significantly change.

Discussion:

The effect of EHR adoption on performance on clinical quality measures is mixed. To improve performance, practices may need to develop new workflows and adapt to different documentation methods after EHR adoption.

Conclusions:

In the short term, EHRs may facilitate documentation of information needed for improving the delivery of clinical preventive services. When developing timelines, policies and incentive programs intended to drive improvement should consider the complexity of the clinical tasks and documentation needed to capture performance on measures, and should also include assistance with workflow redesign to fully integrate EHRs into medical practice.

Keywords: Quality Improvement, Health Information Technology, Health Policy

Introduction

Outside of large integrated health systems,1 little information has been published on the immediate impact of electronic health record (EHR) adoption on performance on clinical quality measures. As more clinical information becomes digitized, EHRs offer the potential for more efficient and rapid measurement of services delivered, and the ability to identify groups of patients to target for specific interventions, such as preventive services. Several studies examine the association between quality of care and use of EHRs after implementation2–7 and highlight the positive impact of EHRs for improving clinical quality.

At the same time, as with any new technology, the introduction of EHRs can be disruptive to both small, independent medical practices8,9 and large, integrated health systems.10 Many studies have described the challenges of EHR implementation, including the financial costs;11–13 the added work burden for physicians, nurses, and office staff during the transition period;14,15 and changes to physician and practice productivity in the months leading up to and following implementation.16,17 Overall, the implementation process requires physicians and practice staff to learn new ways to incorporate patient information into electronic forms and to document properly in hundreds of text fields. These changes are complex, and practices require lengthy periods to complete the transition and return to their pre-implementation productivity levels.18–21

This study examines performance on nine clinical quality measures by small, independent medical practices during the transition from paper record keeping to EHR use. The main objective is to understand whether practices improved in their performance on clinical quality measures after EHR implementation and, if they did not, how long it took practices to achieve equal or higher levels of performance.

Methods

Primary Care Information Project

The Primary Care Information Project (PCIP), a bureau of the New York City Department of Health and Mental Hygiene, subsidized EHR implementation for over 3,200 providers and currently assists nearly 16,000 providers in New York City in adopting information systems that measurably improve health. PCIP provided an array of technical assistance services to participating providers, including hardware and network needs assessments and project management support during implementation. After implementation, practices were offered onsite quality improvement (QI) coaching, revenue cycle optimization consulting, privacy and security assessments, additional training on the use of the EHR, and regular feedback on practice performance.22

In addition to QI support, PCIP and its software partner, eClinicalWorks, codeveloped a clinical decision support system (CDSS), which was implemented through a software upgrade in mid-2009; initial versions of the EHR software did not include CDSS. The upgraded software incorporates patient-specific, point-of-care reminders that align with key quality measures. Reminders are displayed unobtrusively to avoid interrupting workflow, and they facilitate ordering diagnostic tests, prescribing recommended medications, and providing relevant counseling.23

Practice Selection

Practices were invited to participate in the study if they had implemented the eClinicalWorks EHR software at least six months before January 2009, had signed an agreement to share aggregate clinical data and receive quality reports, and served primarily adult populations. Of the 82 eligible practices, 35 provided consent for independent medical reviewers to access, review, and collect data from electronic records, as well as from paper records used before EHR adoption. Each practice received an honorarium of US$1,000 for participating in the chart review. The study was approved by the Department of Health and Mental Hygiene Institutional Review Board (number 09–067).

Data Collection

To compare performance on quality measures before and after EHR adoption, we collected data through manual review of both electronic (e-chart) and paper-based patient charts. Chart review is considered the gold standard in clinical quality measurement, and in this study it also allowed us to collect data from paper-based and electronic charts in a consistent manner.

Data collection began in 2009; e-chart reviews were completed in 2010, and paper chart reviews in 2011. To calculate performance trends before and after EHR implementation, we divided patient visits to each practice into four measurement periods. Two 12-month periods were designated as pre-EHR: “p1” represents the 13 to 24 months before EHR go-live, and “p2” represents the 12 months before EHR go-live. The remaining two periods were designated as post-EHR: “e1” represents the period from the completion of EHR implementation until the upgrade to incorporate CDSS, and “e2” represents the 6 months after the CDSS upgrade. Table 1b gives further details on the length of each period.
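As an illustration only, the period definitions above can be expressed as a small classification function. This sketch assumes that e1 begins at EHR go-live (consistent with the note to Table 2, which measures the end of e1 from go-live), approximates a month as 30.4375 days, and uses hypothetical function names and example dates; it is not the procedure the study team used.

```python
from datetime import date
from typing import Optional

DAYS_PER_MONTH = 30.4375  # assumption: average Gregorian month length


def months_between(start: date, end: date) -> float:
    """Approximate number of months from start to end."""
    return (end - start).days / DAYS_PER_MONTH


def assign_period(visit: date, go_live: date, cdss_upgrade: date) -> Optional[str]:
    """Return 'p1', 'p2', 'e1', or 'e2' for a visit date, or None if outside the study window.

    Hypothetical helper: e1 is assumed to start at EHR go-live.
    """
    if visit < go_live:
        months_before = months_between(visit, go_live)
        if months_before <= 12:
            return "p2"  # the 12 months before go-live
        if months_before <= 24:
            return "p1"  # 13 to 24 months before go-live
        return None      # earlier than the pre-EHR window
    if visit < cdss_upgrade:
        return "e1"      # go-live until the CDSS upgrade; length varies by practice
    if months_between(cdss_upgrade, visit) <= 6:
        return "e2"      # the 6 months after the CDSS upgrade
    return None          # after the study window


# Example with made-up dates for a single practice
go_live, upgrade = date(2008, 6, 1), date(2009, 6, 1)
for visit in (date(2007, 3, 1), date(2008, 1, 1), date(2008, 12, 1), date(2009, 9, 1)):
    print(visit, assign_period(visit, go_live, upgrade))
```

Because e1 ends at each practice's own CDSS upgrade date, its length varies across practices while the other three periods have fixed lengths, which matches the pattern in Table 1b.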

Table 1b.

Characteristics of Patients Whose Charts Were Reviewed for the Study

| Characteristic (unique patients = 6,007) | p1 (pre-EHR) | p2 (pre-EHR) | e1 (post-EHR) | e2 (post-EHR) |
|---|---|---|---|---|
| Number of months in period | 12.0 (0.0) | 12.0 (0.0) | 11.0 (4.3) | 6.0 (0.0) |
| Total number of patient charts reviewed | 1,405 | 2,342 | 3,225 | 2,514 |
| Number of charts reviewed per practice | 54.0 (36.4) | 66.9 (39.4) | 94.9 (17.1) | 73.9 (14.4) |
| Patient age (years) (a) | 51.5 (4.3) | 51.8 (4.5) | 47.3 (5.3) | 49.0 (5.8) |
| Number of visits in the reporting period (b) | 3.8 (1.7) | 3.9 (1.5) | 3.4 (1.4) | 2.7 (1.1) |
| Percent female | 55.0 (21.1) | 56.1 (16.2) | 60.5 (10.8) | 60.7 (11.3) |
| Number of patients per practice with the following diagnosis: | | | | |
| Diabetes | 14.1 (12.2) | 17.1 (13.1) | 14.7 (8.0) | 12.9 (7.7) |
| Hyperlipidemia | 22.7 (19.4) | 26.9 (23.0) | 29.9 (17.3) | 26.4 (15.8) |
| Hypertension | 34.1 (25.8) | 40.3 (29.2) | 37.1 (16.4) | 32.1 (13.8) |
| Ischemic vascular disease | 2.7 (2.8) | 3.8 (5.2) | 4.9 (7.2) | 4.7 (6.2) |
| Current smoker (c) | 13.7 (12.6) | 16.4 (15.4) | 9.4 (5.9) | 7.6 (4.7) |
| Two or more conditions | 27.2 (20.8) | 32.3 (25.6) | 28.2 (15.0) | 25.0 (14.0) |

Notes:

Values are mean (standard deviation) at each participating practice, except for the total number of patient charts reviewed.

(a) Analysis of variance (ANOVA) p-value = 0.0009. Duncan multiple range tests indicate that, of the charts sampled at each practice, mean age was significantly higher in patients with office visits in p1 and p2 than in those with visits in e1 and e2.

(b) ANOVA p-value = 0.0038. Duncan multiple range tests indicate that, of the charts sampled, the mean number of office visits per patient per practice was significantly lower in period e2 than in all other periods.

(c) ANOVA p-value = 0.0028. Duncan multiple range tests indicate that, of the charts sampled, the mean number of current smokers per practice was significantly greater in period p2 than in periods e1 and e2.

Patient samples were constructed separately for the e-chart and the paper record reviews but followed nearly identical protocols to identify and randomize patients. For the e-chart reviews, we used the EHR registry function to generate a random sample of 120 patients ages 18–75 years who had at least one office visit between the beginning of e1 and the end of e2. For the paper chart reviews, we again used the EHR registry function to randomly sample patients ages 18–75 years with office visits between the beginning of e1 and the end of e2, but before randomizing, we limited the pool of eligible patients to those who were current smokers or had a diagnosis of diabetes, hyperlipidemia, hypertension, or ischemic vascular disease (IVD). Because paper charts from two or more years earlier might no longer be available for review, we deliberately restricted the paper chart sample to patients with documented health conditions to ensure that a sufficient sample would be available to calculate performance on chronic disease quality measures.
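The two sampling protocols differ only in the eligibility filter applied before randomization. The sketch below illustrates that logic with Python's standard `random` module; it is a hypothetical stand-in, not the eClinicalWorks registry function, and it assumes each patient's age has already been computed and that diagnoses are stored as simple lowercase labels. The default sample size of 120 comes from the e-chart protocol; the text does not state the paper chart sample size, so `n` is left as a parameter.

```python
import random
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional, Set

# Conditions used to restrict the paper-chart sampling pool (hypothetical labels)
CHRONIC_CONDITIONS = {"diabetes", "hyperlipidemia", "hypertension", "ivd"}


@dataclass
class Patient:
    patient_id: str
    age: int                      # assumption: age already computed for the window
    visit_dates: List[date]
    diagnoses: Set[str] = field(default_factory=set)
    current_smoker: bool = False


def is_eligible(p: Patient, start: date, end: date, chronic_only: bool) -> bool:
    """Ages 18-75 with a visit in [start, end]; optionally smokers or chronic conditions only."""
    if not 18 <= p.age <= 75:
        return False
    if not any(start <= d <= end for d in p.visit_dates):
        return False
    if chronic_only:
        return p.current_smoker or bool(p.diagnoses & CHRONIC_CONDITIONS)
    return True


def draw_sample(patients: List[Patient], start: date, end: date,
                chronic_only: bool, n: int = 120,
                seed: Optional[int] = None) -> List[Patient]:
    """Simple random sample without replacement from the eligible pool."""
    pool = [p for p in patients if is_eligible(p, start, end, chronic_only)]
    rng = random.Random(seed)
    return rng.sample(pool, min(n, len(pool)))
```

Setting `chronic_only=True` reproduces the restriction described for the paper chart reviews; the seed parameter only makes an illustrative run repeatable.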

During the manual review of patient charts, data abstracted from both electronic and paper charts included patient age and gender, the number of office visits per period, vitals, diagnoses, lab results, and medications. For the e-chart reviews, data were abstracted from predefined structured locations (e.g., laboratory test results, vital signs, medication lists) within the EHR and free-text areas such as history of present illness and social history. For paper chart reviews, reviewers searched predefined sections, such as freestanding problem and medication lists, progress notes, lab results and initial visit intake forms.

We contracted with Island Peer Review Organization (IPRO) to conduct all chart reviews. To ensure high inter-rater reliability, each of the eight chart reviewers received standardized training from IPRO and was required to pass an IPRO-designed test before going into the field. When a chart reviewer was uncertain whether a particular data element or observation met predefined study criteria, a senior independent reviewer from IPRO and a PCIP staff member determined whether to include the observation.

When they joined PCIP, practices self-reported their characteristics in a survey, including number of providers, number of full-time equivalent (FTE) positions, estimated number of patients seen per year, and percentage of Medicaid or uninsured patients. Practice milestones (e.g., dates of EHR implementation and of the upgrade to CDSS functionality) were obtained from an operations database maintained by PCIP staff.

Clinical Quality Measures

Nine clinical quality measures were calculated using data abstracted from electronic and paper charts. These measures include both process and outcome measures: antithrombotic therapy, body mass index (BMI) recorded, smoking status recorded, smoking cessation intervention offered, hemoglobin A1c (HbA1c) testing and control, cholesterol testing and control, and blood pressure control. Detailed measure descriptions, including patient eligibility criteria, are available in Appendix A.

Data Analysis

Frequencies and descriptive statistics were generated to describe practice and patient characteristics (Tables 1a and 1b). We used one-way ANOVA with Duncan multiple range tests to compare patient characteristics across periods. All statistical tests were conducted using SAS 9.2 software,24 and two-tailed p-values below 0.05 were considered statistically significant.

Table 1a.

Practice and Patient Panel Characteristics

| Characteristic of practices and patient panels | Participating practices, mean | Participating practices, range (min–max) | Nonparticipating practices, mean | Nonparticipating practices, range (min–max) |
|---|---|---|---|---|
| Number of practices | 35 | - | 47 | - |
| Percent of practices with one site | 85.7 | - | 79.1 | - |
| Number of providers | 3.0 | 1–40 | 3.5 | 1–21 |
| Full-time equivalent (FTE) positions | 3.0 | 0.7–44.8 | 4.1 | 1–63 |
| Months using EHR, as of 1/1/2010 | 20.5 | 15.2–28.5 | 19.2 | 11.1–28.4 |
| Percent Medicaid patients | 33.4 | 0–85 | 36.6 | 0–86 |
| Percent uninsured patients | 3.6 | 0–15 | 4.7 | 0–24.3 |
| Adult patient panel size | 1,000 | 105–4,273 | - | - |
| Percent female | 60.3 | 47.7–81.1 | - | - |
| Percent of adult patients by age (a): | | | | |
| 18–44 | 41.5 | 15.3–66.9 | - | - |
| 45–64 | 42.7 | 26.9–58.6 | - | - |
| 65–75 | 16.1 | 4.03–38.4 | - | - |
| Percent of panel with the following diagnosis: | | | | |
| Diabetes | 13.4 | 0–38.4 | - | - |
| Hyperlipidemia | 21.4 | 0–64.9 | - | - |
| Hypertension | 32.7 | 3.4–76.5 | - | - |
| Ischemic vascular disease | 6.9 | 0–29.6 | - | - |
| Current smoker | 8.1 | 0.3–21.7 | - | - |

Note:

(a) Percentages do not add up to 100 because of rounding.

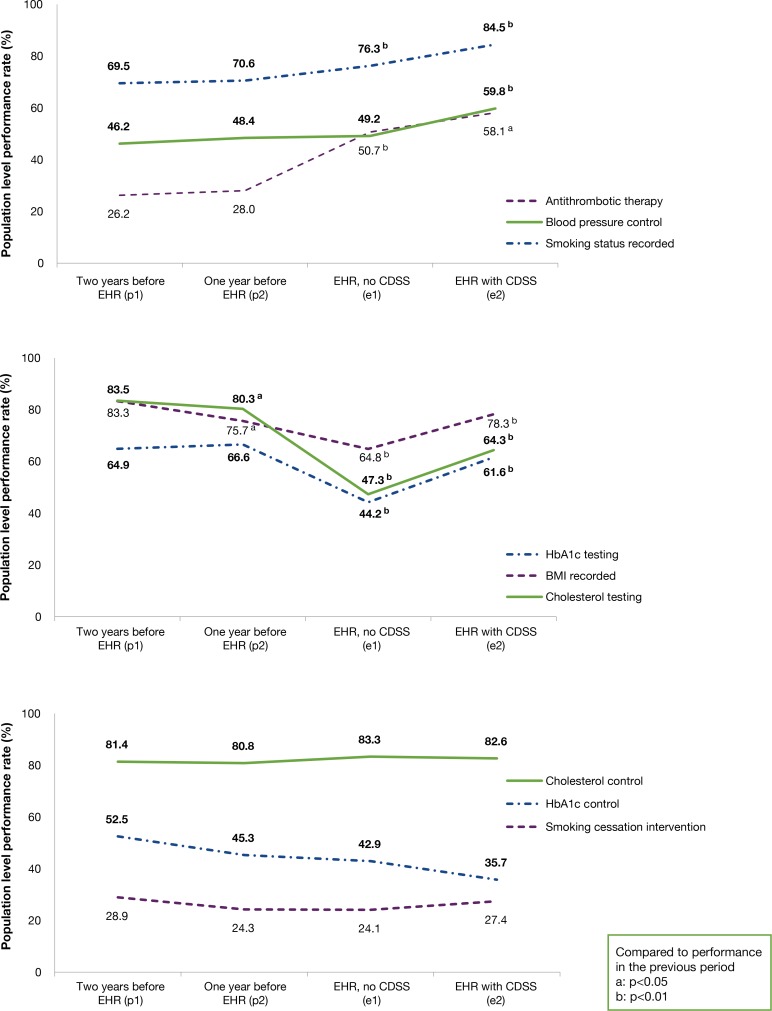

For each clinical quality measure, the performance rate was defined as the proportion of eligible patients who received a particular preventive service or met a target threshold. To estimate the overall trend in performance before and after EHR adoption, we calculated population-level rates for each quality measure and each period (i.e., the sum of numerators across all practices divided by the sum of denominators across all practices). We used chi-square tests to compare rates across periods and plotted population-level performance rates for each measure (Figure 1). Performance rates were calculated using Structured Query Language (SQL) queries in Microsoft Access, and graphs were created in Microsoft Excel.

Figure 1.

Change in Population Level Performance Rates on Clinical Quality Measures
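The population-level rate described above pools counts across practices rather than averaging practice-level rates, so larger practices carry more weight. The minimal sketch below shows that calculation and a Pearson chi-square test comparing two periods, using only the Python standard library. It assumes pairwise 2×2 comparisons without a continuity correction; the text does not state which chi-square variant the study used, and the function names and counts are hypothetical.

```python
import math
from typing import Iterable, Tuple


def population_rate(counts: Iterable[Tuple[int, int]]) -> float:
    """Pooled rate: sum of numerators divided by sum of denominators across practices."""
    counts = list(counts)
    numerator = sum(n for n, _ in counts)
    denominator = sum(d for _, d in counts)
    return numerator / denominator


def chi_square_2x2(num_a: int, den_a: int, num_b: int, den_b: int) -> Tuple[float, float]:
    """Pearson chi-square test (no continuity correction) for two proportions.

    Returns (statistic, p_value) with 1 degree of freedom.
    """
    observed = [[num_a, den_a - num_a], [num_b, den_b - num_b]]
    row_totals = [den_a, den_b]
    col_totals = [num_a + num_b, (den_a - num_a) + (den_b - num_b)]
    total = den_a + den_b
    if 0 in row_totals or 0 in col_totals:
        raise ValueError("test is undefined when a row or column total is zero")
    statistic = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / total
            statistic += (observed[i][j] - expected) ** 2 / expected
    # With 1 degree of freedom, P(X > x) = erfc(sqrt(x / 2)).
    return statistic, math.erfc(math.sqrt(statistic / 2))


# Hypothetical counts for two practices: (numerator, denominator)
print(population_rate([(10, 20), (30, 40)]))  # pooled: 40 / 60
print(chi_square_2x2(30, 100, 50, 100))       # e.g., a p2 versus e1 comparison
```

With these made-up counts the pooled rate is 40/60 (about 0.667), whereas the unweighted mean of the two practice rates would be 0.625; the 30/100 versus 50/100 comparison gives a statistic of about 8.33 and p ≈ 0.004.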

We calculated practice performance rates for each measure and period for practices with a minimum of 10 observations per measure per period. We used chi-square tests to compare practice performance across periods (Appendix B). For each practice, we compared performance in each post-EHR period to performance in the second pre-EHR period (p2) for each measure. Table 2 shows a count of the practices able to meet or exceed p2 performance for each measure at the end of each post-EHR period. Table 3 shows a within-practice count of the number of measures for which each practice was able to meet or exceed its p2 performance at the end of each post-EHR period.
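The practice-level comparisons summarized in Tables 2 and 3 can be sketched as follows. This is a minimal illustration under stated assumptions, not the study's code: the nested-dictionary data layout, measure names, and function names are hypothetical; "meeting or exceeding" is taken to mean a post-EHR rate greater than or equal to the p2 rate, compared exactly by cross-multiplication; and each Table 3 row k is read as counting practices that could be tracked on at least k measures and, among them, those that met or exceeded p2 performance on at least k measures. That reading is inferred from the table's structure and the text describing it.

```python
from typing import Dict, List, Tuple

Counts = Tuple[int, int]               # (numerator, denominator)
MeasureData = Dict[str, Counts]        # period -> counts
PracticeData = Dict[str, MeasureData]  # measure -> period -> counts

PERIODS = ("p1", "p2", "e1", "e2")
MIN_ELIGIBLE = 10  # minimum eligible patients per measure in every period


def qualifies(measure: MeasureData) -> bool:
    """True if the measure has at least MIN_ELIGIBLE eligible patients in all four periods."""
    return all(p in measure and measure[p][1] >= MIN_ELIGIBLE for p in PERIODS)


def meets_or_exceeds_p2(measure: MeasureData, post: str) -> bool:
    """Compare the post-EHR rate with the p2 rate exactly, via cross-multiplication."""
    n_post, d_post = measure[post]
    n_p2, d_p2 = measure["p2"]
    return n_post * d_p2 >= n_p2 * d_post


def summarize(practice: PracticeData) -> Tuple[int, Dict[str, int]]:
    """Number of qualifying measures and, per post-EHR period, how many met or exceeded p2."""
    qualifying = [m for m in practice.values() if qualifies(m)]
    met = {post: sum(meets_or_exceeds_p2(m, post) for m in qualifying)
           for post in ("e1", "e2")}
    return len(qualifying), met


def table3_rows(practices: List[PracticeData],
                max_measures: int = 9) -> List[Tuple[int, int, int, int]]:
    """Rows of (k, practices tracked on >= k measures, of those meeting p2 on >= k in e1, in e2)."""
    summaries = [summarize(p) for p in practices]
    rows = []
    for k in range(1, max_measures + 1):
        tracked = [met for n, met in summaries if n >= k]
        rows.append((k, len(tracked),
                     sum(met["e1"] >= k for met in tracked),
                     sum(met["e2"] >= k for met in tracked)))
    return rows
```

Cross-multiplication avoids floating-point ties when a practice's post-EHR rate exactly equals its p2 rate, which matters because equaling p2 performance counts as success in both tables.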

Table 2.

Comparison of Practice Performance Rates During the Second Pre-EHR Period (p2) and Each Post-EHR Period

| Measure | Mean p2 performance rate, percent (SD), for practices with 10 or more eligible patients in all measurement periods | Number of practices (of 35) with 10 or more eligible patients in all measurement periods | Number (percent) of practices meeting or exceeding p2 performance in e1* | Number (percent) of practices meeting or exceeding p2 performance in e2* |
|---|---|---|---|---|
| Antithrombotic therapy | 25.6 (17.3) | 24 | 23 (96%) | 23 (96%) |
| Blood pressure control | 48.3 (15.4) | 27 | 23 (85%) | 27 (100%) |
| Body mass index recorded | 65.0 (42.0) | 31 | 18 (58%) | 23 (74%) |
| Cholesterol testing | 78.9 (20.5) | 30 | 10 (33%) | 16 (53%) |
| Cholesterol control | 80.3 (8.6) | 26 | 24 (92%) | 26 (100%) |
| HbA1c testing | 67.4 (17.7) | 23 | 14 (61%) | 20 (87%) |
| HbA1c control | 45.2 (15.0) | 4 | 4 (100%) | 4 (100%) |
| Smoking status recorded | 62.3 (34.5) | 31 | 23 (74%) | 25 (81%) |
| Smoking cessation intervention | 20.4 (19.8) | 10 | 7 (70%) | 7 (70%) |

Notes:

* On average, the end of e1 was 11 months after EHR go-live, and the end of e2 was 17 months after EHR go-live.

BP: Blood pressure; DM: Diabetes Mellitus; GP: General population; HbA1c: Hemoglobin A1c; HLP: Hyperlipidemia; HTN: Hypertension; IVD: Ischemic vascular disease; LDL: Low density lipoprotein

Table 3.

Number of Quality Measures for Which Qualified Practices Equaled or Exceeded Their Performance in the Second Pre-EHR Period (p2), by Post-EHR Period

| Number of measures | Number of practices (of 35) with 10 or more eligible patients in all measurement periods | Number (percent) of practices equaling or exceeding p2 performance in e1 (a) | Number (percent) of practices equaling or exceeding p2 performance in e2 (a) |
|---|---|---|---|
| 1 | 31 | 31 (100%) | 31 (100%) |
| 2 | 31 | 31 (100%) | 31 (100%) |
| 3 | 30 | 29 (97%) | 30 (100%) |
| 4 | 30 | 25 (83%) | 28 (93%) |
| 5 | 26 | 15 (58%) | 22 (85%) |
| 6 | 25 | 10 (40%) | 18 (72%) |
| 7 | 22 | 5 (23%) | 9 (41%) |
| 8 | 10 | 1 (10%) | 3 (30%) |
| 9 | 2 | 0 (0%) | 0 (0%) |

Note:

(a) On average, the end of the first post-EHR period (e1) was 11 months after EHR go-live, and the end of the second post-EHR period (e2) was 17 months after EHR go-live.

Results

Of the 82 practices invited, 35 participated in the study. Of the 47 practices that did not participate, 26 declined any chart review, and 21 did not have paper records reviewed because paper charts were archived and not accessible (14 practices), the practice refused access to paper charts (6 practices), or the practice had never used paper charts (1 practice). Participating practices did not significantly differ from nonparticipating practices on practice characteristics, including months using EHR, number of providers, patient volume, and percent of patients uninsured or with Medicaid insurance (Table 1a).

Participating practices had an average of three providers (median = one provider) and an average panel of 1,000 adult patients per year. Most practices had one clinic site (85.7 percent), and about a third of patients were insured by Medicaid (Table 1a). The majority (89.9 percent) of participating providers were primary care providers (i.e., internal medicine, family medicine, obstetrics and gynecology, or pediatrics); the remaining providers specialized in cardiology, endocrinology, allergy, or gastroenterology, or did not specify a specialty (data not shown). Of the patients who had ever received care in the practices, 13.4 percent had a documented diagnosis of diabetes (range 0–38.4 percent), 32.7 percent had hypertension (range 3.4–76.5 percent), and 8.1 percent were documented as current smokers (range 0.3–21.7 percent) (Table 1a).

We reviewed charts for 6,007 unique patients over the four periods (Table 1b). We reviewed 1,405 patient charts in the first pre-EHR period (p1) and 3,225 in the first post-EHR period (e1). Unlike all other periods, the first post-EHR period (e1) varied in length by practice because of differences in the time between EHR implementation and the upgrade to CDSS; the average length was 11.0 months (minimum 1.9 months, maximum 22.1 months). Within the sampled charts, the number of patients with diabetes, hyperlipidemia, hypertension, IVD, or two or more chronic conditions did not differ significantly across periods. The number of patients identified as current smokers was significantly larger in the second pre-EHR period (p2) than in either post-EHR period; patients in the pre-EHR periods were significantly older than those sampled in the post-EHR periods; and the number of office visits per patient was significantly lower in the second post-EHR period (e2) than in all other periods.

During the pre-EHR periods, population-level performance rates did not significantly change, with the exception of BMI recorded (a decline of 7.6 percentage points [pp]) and cholesterol testing (a decline of 3.2 pp) (Figure 1). Between the second pre-EHR period (p2) and the first post-EHR period (e1), population-level rates increased significantly for antithrombotic therapy (22.7 pp) and smoking status recorded (5.7 pp); the rate of blood pressure control also increased, but not significantly. Population-level rates for these three measures increased significantly during the second post-EHR period (e2). In contrast, rates of HbA1c testing (−22.3 pp), BMI recorded (−10.8 pp), and cholesterol testing (−33.0 pp) decreased significantly from p2 to e1; all three rebounded in e2, with significant increases, although not necessarily to the levels observed in p2. HbA1c control rates decreased consistently, although not significantly, and did not rebound. Population-level rates for the other measures did not change significantly.

Practice-level subanalysis was possible for 31 of the 35 practices; only 2 practices had enough patients in their samples in every period to estimate performance on all nine measures. Performance rates for each measure varied across practices and periods. Detailed practice-level performance trends are available in Appendix B.

Table 2 summarizes practice performance changes after EHR adoption. For each practice, rates from the second pre-EHR period (p2) were compared with rates from the two post-EHR periods. Comparing e1 with p2, more than two-thirds of practices equaled or exceeded their p2 performance on antithrombotic therapy (96 percent), blood pressure control (85 percent), cholesterol control (92 percent), and smoking status recorded (74 percent). In e1, fewer practices did so for BMI recorded (58 percent), cholesterol testing (33 percent), and HbA1c testing (61 percent). By period e2, the percentage of practices equaling or exceeding p2 performance increased for blood pressure control (100 percent), BMI recorded (74 percent), cholesterol control (100 percent), cholesterol testing (53 percent), HbA1c testing (87 percent), and smoking status recorded (81 percent).

Table 3 summarizes within-practice performance changes and tracks, by post-EHR period, the number of measures for which a practice was able to meet or exceed its performance in the final pre-EHR period (p2). For example, the second row of Table 3 indicates that 31 of the 35 practices in the study had enough patients to reliably track performance over time on at least two quality measures; comparing e1 with p2, all 31 practices equaled or exceeded their p2 performance on two measures. Fewer practices (26) had enough patients to reliably track performance on five measures; 58 percent of these practices met or exceeded their p2 performance on all five measures in e1. By period e2, all 30 qualifying practices met or exceeded p2 performance on at least three measures, and 85 percent of the 26 qualifying practices did so on five measures; only 3 of the 10 qualifying practices did so on eight measures. Of the two practices with enough patients to reliably track performance on all nine measures, neither met or exceeded p2 performance on all nine in either e1 or e2.

Discussion

Manual reviews of both paper and electronic records were used to compare performance on clinical quality measures before and after EHR adoption. Performance did not change significantly before EHR adoption, whereas performance patterns after EHR adoption varied by measure. All of the selected measures reflect well-known, validated care recommendations that are monitored by payers and purchasers of health care, and small improvements on them have been observed both in national trends25 and locally in New York City.26 Much of the recent literature has highlighted the overwhelmingly positive effect of EHR adoption on primary and secondary preventive services;27,28 this study highlights the specific changes observed after the conversion to EHRs and the time it may take for those changes to occur.

The complexity of the new workflows needed to document preventive services after EHR implementation may offer insight into the patterns of quality measure performance we observed. Diversity in practice workflow styles and preferences may also explain the lack of consensus regarding how long to wait after EHR implementation to examine its effect.29 The three trends observed (improvement, decline followed by rebound, and no change) illustrate different ways in which EHR adoption can affect practice workflow.

Measures for which performance increased soon after EHR adoption were associated with medical record elements that can be documented entirely within a single office visit. For example, during a brief visit a provider can record a patient’s smoking status and vital signs and can prescribe or continue a medication. Capturing these elements requires no further coordination or outside resources.

In contrast, measures that did not improve soon after EHR adoption were associated with medical record elements tied to clinical documentation, tasks, or follow-up that involved coordinating information generated outside a single office visit, such as the ordering and return of laboratory test results or return office visits by patients. With electronic ordering, practices need to establish new workflows to ensure that specimens and accompanying order forms are sent together. In addition, returned test results need to be incorporated into the EHR as structured data, which can be facilitated by an electronic laboratory interface. However, laboratory interfaces can create new problems, including misrouted results, mismatched test codes, and EHR software settings that interfere with receipt of results.30 Furthermore, not all test results may be available through an electronic interface: practices may not have interfaces with every laboratory they use (particularly hospital laboratories), and some practices lack an interface altogether. In these situations, practices need a workaround, because results returned by paper, fax, or phone must be entered into the EHR manually and linked to the original electronic order.31

Measure specifications with longer look-back periods may also explain the lower post-EHR performance on certain measures. For example, if a patient has not been diagnosed with hyperlipidemia, diabetes, or IVD, a cholesterol test is valid for up to five years. Because the study period covered only the first two years of EHR use, providers may have been following care guidelines and appropriately testing patients’ cholesterol while pre-EHR test results from within the relevant time frame were not incorporated into the EHR. This is consistent with comments from providers during the study; many mentioned that they were not able to migrate their preimplementation data into the EHR.
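A small sketch can make this look-back effect concrete: the same patient satisfies a five-year cholesterol testing numerator when a paper-chart result is visible, but not when only EHR data are available. The helper name, the dates, and the treatment of a year as 365 days are hypothetical simplifications; the actual measure specifications are in Appendix A.

```python
from datetime import date, timedelta
from typing import Iterable


def has_test_in_lookback(test_dates: Iterable[date], period_end: date,
                         lookback_years: int = 5) -> bool:
    """True if any recorded test falls within the look-back window ending at period_end."""
    # Simplification: a year is treated as 365 days.
    window_start = period_end - timedelta(days=365 * lookback_years)
    return any(window_start <= d <= period_end for d in test_dates)


paper_chart_tests = [date(2006, 3, 15)]  # hypothetical result documented only on paper
ehr_tests = []                           # the same result was never migrated into the EHR
period_end = date(2009, 12, 31)
print(has_test_in_lookback(paper_chart_tests, period_end))  # True
print(has_test_in_lookback(ehr_tests, period_end))          # False
```

Under this sketch, a measure computed from EHR data alone would count the patient as untested until a new test is ordered and its result recorded electronically.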

Specifications defining the eligible population for some measures may also explain the observed performance patterns. Examples include smoking cessation intervention and cholesterol control, for which there were no observable changes, and HbA1c control, which showed a consistent, although not statistically significant, decrease over the study period. Denominator eligibility for these measures depended on screening or test results from a prior visit. Practices that improved smoking status documentation, or that overcame electronic ordering and documentation challenges to improve performance on the cholesterol and HbA1c testing measures, thereby enlarged the denominators of the corresponding intervention or control measures. Once testing levels improved, practices needed more time for patients to return for follow-up, retesting for HbA1c and cholesterol control, and delivery of smoking cessation interventions.

Many practices did not match their pre-EHR quality performance on one or more measures soon after EHR implementation. However, by the end of the study period, an average of 17 months after EHR implementation, performance was equivalent to or higher than pre-EHR performance for six of the nine measures at more than two-thirds of the practices with available data. Nearly half of the practices (14 of 30) did not rebound to their pre-EHR level on cholesterol testing. We were unable to draw conclusions about practice performance patterns on HbA1c control and smoking cessation intervention, as few practices had sufficient sample sizes across the periods.

All practices with sufficient data equaled or surpassed their pre-EHR performance on at least three measures by the end of the study period, but none did so on all nine measures. These results are consistent with an analysis of performance on Meaningful Use metrics by the Office of the National Coordinator for Health Information Technology, which indicated that although performance on individual measures can improve greatly in a relatively short period,32 providers might need substantially more time to complete the transition and equal pre-EHR performance on all measures. Another study found that, in addition to time, many practices may also require extensive technical assistance to improve performance on quality measures.33

There are several limitations to the study. All of the participating practices used the eClinicalWorks EHR system and implemented it in 2008 or 2009. The study did not account for differences among EHR software systems or among the versions of eClinicalWorks software that practices implemented. For instance, not all practices received exactly the same set of clinical decision support prompts at e2, although this issue was resolved over time. In addition, even though CDSS prompts were made available to all practices, we were unable to track their use.

This study also did not measure other potential factors that may affect performance on quality measures, such as previous experience with quality measurement or reporting, provider motivation and comfort with computers, organizational culture at the practice, the availability of resources, and the ability to support practice changes. Also not considered were variations in the technical assistance that practices received from PCIP, or any financial incentives tied to participation in PCIP's pay-for-performance programs.

The study was also limited to a select number of quality measures, which were chosen because of their association with chronic conditions that contribute to a great deal of morbidity, mortality, and health care costs in New York City. As such, this study focuses on patients who are likely to be sicker than the general population, and it is unclear whether the performance patterns we observed in chronic disease care would extend to other quality measures or to healthier populations.

Another limitation was the availability of paper charts. In a pilot of the paper chart review, we used our original EHR sampling strategy to select patients for paper chart review but found it difficult to locate enough paper charts for patients diagnosed with the chronic conditions of interest. At the time of our data collection, many practices had been using their EHR systems for two years or longer; we therefore changed our sampling strategy to randomly select patients with at least one of the chronic conditions of interest, in order to find enough patients to generate stable estimates of quality measure performance in the pre-EHR periods. One result of this methodological decision was that patients whose charts were sampled in the pre-EHR periods were slightly older and more likely to be current smokers than those in the post-EHR periods. These differences do not reflect the age or diagnosis distributions at the practices (Table 1a). Because patient inclusion in the denominator was based on the presence or absence of a specific diagnosis, the difference in sampling strategy should not affect performance rates for seven of the nine quality measures. For the remaining two measures, BMI recorded and smoking status recorded, providers may have been more likely to record BMI or smoking status for older patients, since those patients may be sicker or at greater risk of having a chronic condition; this may have led to an upward bias in performance on those two measures in the pre-EHR periods.

Conclusion

Policymakers, stakeholders, and providers undergoing EHR implementation, or planning to do so in the near future, can anticipate that a successful transition from paper to electronic records may take 18 months or more. During that time, performance on clinical quality measures may stagnate, decline, or improve, and assessments of provider performance and patient care should account for this. The transition requires providers to adopt new ways of interacting with patients and documenting clinical information. Timelines for policies and incentive programs intended to drive improvement should take into account the complexity of the clinical tasks and of the documentation needed to capture performance on each measure, and these programs should also include assistance with workflow redesign to fully integrate EHRs into medical practice. For example, because many practices are unable to migrate paper chart information into structured fields of the EHR, it may not be desirable for pay-for-performance programs to include measures with a five-year look-back until practices have been using their EHRs for at least five years. Furthermore, it may make sense to first incentivize measures that encourage processes and follow-up care for patients with chronic disease, and to incorporate incentives for specific health outcomes later.
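
To make the look-back concern concrete, the Python sketch below computes what fraction of a measure's look-back window falls after EHR adoption. It assumes, hypothetically, that no paper-chart data were migrated into structured EHR fields, and it approximates a year as 365.25 days; the function name and the dates are illustrative and are not part of the study.

```python
from datetime import date


def ehr_lookback_coverage(measure_date: date, ehr_adoption: date, lookback_years: float) -> float:
    """Return the fraction (0.0 to 1.0) of a look-back window covered by EHR data.

    Hypothetical helper: assumes nothing from paper charts was migrated into
    structured EHR fields, so only days after adoption are visible.
    """
    window_days = lookback_years * 365.25  # approximate length of a year
    days_on_ehr = (measure_date - ehr_adoption).days
    covered_days = min(max(days_on_ehr, 0), window_days)  # clamp to [0, window]
    return covered_days / window_days


# Two years after adoption, a 5-year look-back is only partly visible,
# while a 12-month look-back is fully covered.
adopted = date(2008, 1, 1)
measured = date(2010, 1, 1)
print(round(ehr_lookback_coverage(measured, adopted, 5), 2))  # 0.4
print(ehr_lookback_coverage(measured, adopted, 1))            # 1.0
```

Under these assumptions, a practice two years into EHR use would have structured data for only about 40% of a five-year window, whereas a 12-month measure would be fully covered.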

Studies have shown that once practices become accustomed to the EHR and the new workflows, HIT-enabled interventions, including quality improvement, performance feedback, and pay-for-performance, can all contribute to sustained increases in the delivery of care beyond pre-EHR performance. Our results are consistent with these findings and suggest that, alongside the interventions listed above, EHR implementation can facilitate long-term improvement in the delivery of some clinical preventive services.

Acknowledgments

This work was partially funded by the Agency for Healthcare Research and Quality (R18HS018275, R18HS019164) and the Centers for Disease Control and Prevention Center of Excellence in Public Health Infrastructure (PO1HK000029). The authors acknowledge Drs. Farzad Mostashari and Lawrence Casalino for their input on the initial design of the study and feedback on manuscript drafts. We also thank Dr. Alan Silver and Veronica Prior of IPRO, as well as Samantha Catlett, Zeenath Rehana, Dr. Sheryl Silfen and Chloe Winther of PCIP, for their tireless efforts and assistance with data collection and study administration, and Dr. Jesse Singer for his guidance and support from PCIP.

Appendix A.

Table A1.

Measure Specifications

| Measure | Eligible patients (denominator) | Patient goal (numerator) | Similar to |
|---|---|---|---|
| Antithrombotic therapy | Patients 18+ years with ischemic vascular disease (IVD), or 40+ years with diabetes | Taking aspirin or another antithrombotic therapy | National Quality Forum (NQF) 0631 |
| Blood pressure control | Patients 18–75 years with hypertension, with or without diabetes | Without diabetes: systolic <140 mmHg and diastolic <90 mmHg; with diabetes: systolic <130 mmHg and diastolic <80 mmHg | NQF 0018; NQF 0013 |
| Body mass index recorded | Patients 18+ years | BMI recorded in the past 24 months | NQF 1690 |
| Cholesterol testing | General population: male (35+ years) or female (45+ years) patients with no prior diagnosis; high risk: patients 18–75 years with dyslipidemia and (IVD or diabetes) | General population: total cholesterol and/or low-density lipoprotein (LDL) tested in the past 5 years; high risk: LDL tested in the past 12 months | NQF 0064 (DM); 0074 (IVD/CAD) |
| Cholesterol control | General population: male (35+ years) or female (45+ years) patients with no prior diagnosis and total cholesterol and/or LDL tested in the past 5 years; high risk: patients 18–75 years with dyslipidemia and (IVD or diabetes) and LDL tested in the past 12 months | General population: LDL <160 mg/dL or total cholesterol <240 mg/dL; high risk: LDL <100 mg/dL | NQF 0064 (DM); 0074 (IVD/CAD) |
| HbA1c control | Patients 18–75 years with diabetes and hemoglobin A1c tested in the past 6 months | HbA1c level <7% | |
| HbA1c testing | Patients 18–75 years with diabetes | HbA1c test recorded in the past 6 months | NQF 0057 |
| Smoking cessation intervention | Patients 18+ years with a “current smoker” smoking status | Smoking cessation intervention (Rx or counseling) received in the past 12 months | NQF 0028B |
| Smoking status recorded | Patients 18+ years | Smoking status recorded in the past 12 months | NQF 0028A |
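
As an illustration of how a Table A1 specification becomes a rate, the Python sketch below computes blood pressure control: the denominator is patients 18–75 years with hypertension, and the numerator applies the stricter threshold for patients with diabetes. The `Patient` record, the use of a single most recent reading, and counting a missing reading as not meeting the goal are assumptions made for this example; the table does not specify them.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class Patient:
    """Hypothetical abstracted-chart record used only for this sketch."""
    age: int
    hypertension: bool
    diabetes: bool
    systolic: Optional[float] = None   # most recent reading, mmHg (assumption)
    diastolic: Optional[float] = None  # most recent reading, mmHg (assumption)


def in_denominator(p: Patient) -> bool:
    # Eligible patients: 18-75 years with hypertension, with or without diabetes.
    return 18 <= p.age <= 75 and p.hypertension


def meets_goal(p: Patient) -> bool:
    # Assumption: a missing reading counts as not meeting the goal.
    if p.systolic is None or p.diastolic is None:
        return False
    if p.diabetes:
        return p.systolic < 130 and p.diastolic < 80
    return p.systolic < 140 and p.diastolic < 90


def bp_control_rate(patients: Iterable[Patient]) -> Optional[float]:
    """Share of eligible patients meeting the goal; None if nobody is eligible."""
    eligible = [p for p in patients if in_denominator(p)]
    if not eligible:
        return None
    return sum(meets_goal(p) for p in eligible) / len(eligible)


sample = [
    Patient(60, True, False, 135, 85),   # eligible, controlled (<140/<90)
    Patient(60, True, True, 135, 78),    # eligible, not controlled (diabetes: needs <130)
    Patient(45, True, False),            # eligible, no reading recorded
    Patient(80, True, False, 120, 70),   # excluded: older than 75
    Patient(50, False, False, 150, 95),  # excluded: no hypertension
]
print(bp_control_rate(sample))  # 1 of 3 eligible patients meet the goal
```

The same denominator/numerator split applies to the other measures; only the eligibility rule, the goal, and the look-back window change.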

Appendix B. Detailed Analysis of Practice-Level Performance

This table shows how groups of practices performed throughout the study period, relative to the second pre-EHR time period (p2). For example, the first row of the antithrombotic therapy section shows that the 6 practices whose performance improved significantly in the first post-EHR time period (e1) averaged 18.1% during p2 and 63.9% during e1. In the second post-EHR time period (e2), all 6 of those practices maintained a significant improvement over p2, with average performance of 69.4%.

Table B1.

Mean practice performance rate (%) during each time period, stratified by whether practice performance improved (+), did not change (0), or declined (−) compared with the second pre-EHR time period (p2). Each e2 category is nested within the e1 category in the same row or the nearest row above it.

| Measure | Total practices* | p2 overall mean (SD), % | e1 category | Practices (n) | p2 mean (SD), % | e1 mean (SD), % | e2 category | Practices (n) | e2 mean (SD), % |
|---|---|---|---|---|---|---|---|---|---|
| Antithrombotic therapy | 24 | 25.63 (17.3) | (+) | 6 | 18.1 (11.8) | 63.9 (16.5) | (+) | 6 | 69.4 (11.2) |
| | | | (0) | 17 | 25.4 (14.6) | 41.9 (16.4) | (+) | 4 | 66.7 (23.6) |
| | | | | | | | (0) | 13 | 48.1 (18.0) |
| | | | (−) | 1 | 75.5 (N/A) | 42.9 (N/A) | (−) | 1 | 45.0 (N/A) |
| Blood pressure control | 27 | 48.33 (15.4) | (+) | 3 | 34.7 (30.0) | 62.0 (17.8) | (+) | 2 | 63.8 (19.5) |
| | | | | | | | (0) | 1 | 65.5 (N/A) |
| | | | (0) | 20 | 47.1 (11.0) | 47.7 (10.9) | (+) | 6 | 69.1 (11.6) |
| | | | | | | | (0) | 14 | 53.2 (7.5) |
| | | | (−) | 4 | 64.6 (12.1) | 28.2 (12.8) | (0) | 4 | 50.5 (14.0) |
| Body mass index recorded | 31 | 64.97 (42.0) | (+) | 8 | 9.8 (19.0) | 72.7 (21.9) | (+) | 8 | 85.3 (13.4) |
| | | | (0) | 10 | 71.9 (39.7) | 74.6 (34.0) | (+) | 4 | 75.3 (28.8) |
| | | | | | | | (0) | 6 | 97.3 (2.5) |
| | | | (−) | 13 | 93.6 (6.8) | 47.9 (30.5) | (+) | 1 | 94.2 (N/A) |
| | | | | | | | (0) | 4 | 85.5 (6.6) |
| | | | | | | | (−) | 8 | 48.2 (35.6) |
| Cholesterol testing | 30 | 78.87 (20.5) | (0) | 10 | 60.4 (22.0) | 55.1 (19.4) | (+) | 3 | 58.6 (38.2) |
| | | | | | | | (0) | 7 | 68.7 (6.2) |
| | | | (−) | 20 | 88.1 (12.0) | 41.9 (22.9) | (+) | 1 | 68.4 (N/A) |
| | | | | | | | (0) | 5 | 82.0 (10.6) |
| | | | | | | | (−) | 14 | 54.8 (24.0) |
| Cholesterol control | 26 | 80.32 (8.6) | (+) | 2 | 64.3 (2.6) | 86.3 (2.9) | (+) | 1 | 89.7 (N/A) |
| | | | | | | | (0) | 1 | 77.3 (N/A) |
| | | | (0) | 22 | 81.3 (7.7) | 83.9 (6.9) | (0) | 22 | 82.4 (6.8) |
| | | | (−) | 2 | 85.4 (2.3) | 64.6 (3.0) | (0) | 2 | 74.7 (2.5) |
| Hemoglobin A1c testing | 23 | 67.43 (17.7) | (+) | 1 | 30.8 (N/A) | 76.9 (N/A) | (+) | 1 | 80.0 (N/A) |
| | | | (0) | 13 | 62.9 (14.8) | 53.8 (17.0) | (0) | 13 | 71.5 (14.1) |
| | | | (−) | 9 | 78.0 (14.2) | 31.1 (19.8) | (0) | 6 | 74.8 (10.6) |
| | | | | | | | (−) | 3 | 42.4 (27.2) |
| Hemoglobin A1c control | 4 | 45.21 (15.0) | (0) | 4 | 45.2 (15.0) | 43.3 (15.8) | (0) | 4 | 32.3 (13.5) |
| Smoking status recorded | 31 | 62.27 (34.5) | (+) | 16 | 40.9 (33.3) | 86.8 (13.6) | (+) | 13 | 90.1 (10.5) |
| | | | | | | | (0) | 3 | 82.4 (16.1) |
| | | | (0) | 7 | 83.6 (20.0) | 85.1 (14.8) | (+) | 1 | 68.8 (N/A) |
| | | | | | | | (0) | 6 | 93.0 (9.0) |
| | | | (−) | 8 | 86.5 (14.3) | 55.9 (17.6) | (+) | 1 | 97.5 (N/A) |
| | | | | | | | (0) | 1 | 96.5 (N/A) |
| | | | | | | | (−) | | 61.7 (14.7) |
| Smoking cessation intervention | 10 | 20.36 (19.8) | (+) | 1 | 15.4 (N/A) | 89.5 (N/A) | (+) | 1 | 90.0 (N/A) |
| | | | (0) | 6 | 9.2 (12.1) | 3.2 (7.1) | (+) | 1 | 81.8 (N/A) |
| | | | | | | | (0) | | 6.1 (10.5) |
| | | | | | | | (−) | 1 | 0.0 (N/A) |
| | | | (−) | 3 | 44.4 (12.2) | 1.5 (2.5) | (0) | 1 | 14.3 (N/A) |
| | | | | | | | (−) | 2 | 0.0 (0.0) |

*To be included in this analysis, practices needed at least 10 patients qualifying for an individual measure in each of the three time periods.

(+): practice performance significantly (p<0.05) improved compared to p2

(0): practice performance did not significantly change compared to p2

(−): practice performance significantly (p<0.05) declined compared to p2
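
The (+), (0), and (−) categories compare a practice's rate in a post-EHR period with its own p2 rate at p<0.05. The notes above do not name the statistical test, so the Python sketch below substitutes a pooled two-proportion z-test purely for illustration; the test choice and the function names are assumptions, not the study's method. It also applies the 10-patient minimum, though here only to the two periods being compared rather than to all three.

```python
import math
from typing import Optional

MIN_PATIENTS = 10  # minimum qualifying patients per period, per the table note
ALPHA = 0.05       # significance threshold used for the (+)/(−) categories


def two_proportion_p_value(hits_a: int, n_a: int, hits_b: int, n_b: int) -> float:
    """Two-sided p-value from a pooled two-proportion z-test (assumed test)."""
    pooled = (hits_a + hits_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # Both periods at 0% or both at 100%: no difference to test.
        return 1.0
    z = (hits_b / n_b - hits_a / n_a) / se
    # P(|Z| >= |z|) for a standard normal Z equals erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


def categorize(hits_p2: int, n_p2: int, hits_post: int, n_post: int) -> Optional[str]:
    """Label a post-EHR period relative to p2 as (+), (0), or (−).

    Returns None when either period has fewer than MIN_PATIENTS patients.
    """
    if min(n_p2, n_post) < MIN_PATIENTS:
        return None
    if two_proportion_p_value(hits_p2, n_p2, hits_post, n_post) >= ALPHA:
        return "(0)"
    return "(+)" if hits_post / n_post > hits_p2 / n_p2 else "(−)"


# Hypothetical counts for one practice: 2 of 20 patients met a measure in p2.
print(categorize(2, 20, 15, 20))  # (+)
print(categorize(2, 20, 3, 20))   # (0)
```

Because each practice serves as its own baseline, a practice with a high p2 rate can only be labeled (0) or (−) unless it improves further, which is consistent with the pattern of high p2 means among the (−) groups in Table B1.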

References

1. Jha AK, Perlin JB, Kizer KW, et al. Effect of the Transformation of the VA Health Care System on the Quality of Care. New Engl J Med. 2003;348(22):2218–2227. doi: 10.1056/NEJMsa021899.

2. Garrido T, Jamieson L, Zhou Y, et al. Effect of Electronic Health Records in Ambulatory Care: Retrospective, Serial, Cross Sectional Study. Brit Med J. 2005;330:581. doi: 10.1136/bmj.330.7491.581.

3. Kern LM, Barrón Y, Dhopeshwarkar RA, et al. Electronic Health Records and Ambulatory Quality of Care. J Gen Intern Med. 2013;28(4):496–503. doi: 10.1007/s11606-012-2237-8.

4. Cebul RD, Love TE, Jain AK, et al. Electronic Health Records and Quality of Diabetes Care. New Engl J Med. 2011;365(9):825–833. doi: 10.1056/NEJMsa1102519.

5. Shih SC, McCullough CM, Wang JJ, et al. Health Information Systems in Small Practices: Improving the Delivery of Clinical Preventive Services. Am J Prev Med. 2011;41(6):603–609. doi: 10.1016/j.amepre.2011.07.024.

6. De Leon SF, Shih SC. Tracking the Delivery of Prevention-Oriented Care Among Primary Care Providers Who Have Adopted Electronic Health Records. J Am Med Inform Assoc. 2011;18(Suppl 1):i91–95. doi: 10.1136/amiajnl-2011-000219.

7. Herrin J, da Graca B, Nicewander D, et al. The Effectiveness of Implementing an Electronic Health Record on Diabetes Care and Outcomes. Health Serv Res. 2012;47(4):1522–1540. doi: 10.1111/j.1475-6773.2011.01370.x.

8. Baron RJ, Fabens EL, Schiffman M, et al. Electronic Health Records: Just Around the Corner? Or Over the Cliff? Ann Intern Med. 2005;143(3):222–226. doi: 10.7326/0003-4819-143-3-200508020-00008.

9. Goldberg DG, Kuzel AJ, Feng LB, et al. EHRs in Primary Care Practices: Benefits, Challenges and Successful Strategies. Am J Manag Care. 2012;18(2):e48–e54.

10. Scott JT, Rundall TG, Vogt TM, et al. Kaiser Permanente’s Experience of Implementing an Electronic Medical Record: A Qualitative Study. Brit Med J. 2005;331:1313–1316. doi: 10.1136/bmj.38638.497477.68.

11. McBride M. Understanding the True Costs of an EHR Implementation. Med Econ. 2012;89(14).

12. Fleming NS, Culler SD, McCorkle R, et al. The Financial and Non-Financial Costs of Implementing Electronic Health Records in Primary Care Practices. Health Aff (Millwood). 2011;30(3):481–489. doi: 10.1377/hlthaff.2010.0768.

13. Adler-Milstein J, Green CE, Bates DW. A Survey Analysis Suggests that Electronic Health Records Will Yield Revenue Gains for Some Practices and Losses for Many. Health Aff (Millwood). 2013;32(3):562–570. doi: 10.1377/hlthaff.2012.0306.

14. Poissant L, Tamblyn R, Kawasumi Y. The Impact of Electronic Health Records on Time Efficiency of Physicians and Nurses: A Systematic Review. J Am Med Inform Assoc. 2005;12(5):505–516. doi: 10.1197/jamia.M1700.

15. Howard J, Clark EC, Friedman A, et al. Electronic Health Record Impact on Work Burden in Small, Unaffiliated, Community-Based Primary Care Practices. J Gen Intern Med. 2013;28(1):107–113. doi: 10.1007/s11606-012-2192-4.

16. De Leon S, Connelly-Flores A, Mostashari F, et al. The Business End of Health Information Technology: Can a Fully Integrated Electronic Health Record Increase Provider Productivity in a Large Community Practice? J Med Pract Manage. 2010;25(6):342–349.

17. Cheriff AD, Kapur AG, Qiu M, et al. Physician Productivity and the Ambulatory EHR in a Large Academic Multi-Specialty Physician Group. Int J Med Inform. 2010;79:492–500. doi: 10.1016/j.ijmedinf.2010.04.006.

18. Jones SS, Heaton PS, Rudin RS, et al. Unraveling the IT Productivity Paradox – Lessons for Health Care. New Engl J Med. 2012;366(24):2243–2245. doi: 10.1056/NEJMp1204980.

19. Box T, McDonell M, Helfrich CD, et al. Strategies From a Nationwide Health Information Technology Implementation: The VA CART Story. J Gen Intern Med. 2010;25(Suppl 1):72–76. doi: 10.1007/s11606-009-1130-6.

20. Bowens FM, Frye PA, Jones WA. Health Information Technology: Integration of Clinical Workflow into Meaningful Use of Electronic Health Records. Perspect Health Inf Manag. 2010;7:1d.

21. Ramaiah M, Subrahmanian E, Sriram RD, et al. Workflow and Electronic Health Records in Small Medical Practices. Perspect Health Inf Manag. 2012;9:1d.

22. Mostashari F, Tripathi M, Kendall M. A Tale of Two Large Community Electronic Health Record Extension Projects. Health Aff (Millwood). 2009;28(2):345–356. doi: 10.1377/hlthaff.28.2.345.

23. Shih SC, McCullough CM, Wang JJ, et al. Health Information Systems in Small Practices: Improving the Delivery of Clinical Preventive Services. Am J Prev Med. 2011;41(6):603–609. doi: 10.1016/j.amepre.2011.07.024.

24. SAS Software version 9.2. Copyright 2002–2008 by SAS Institute Inc., Cary, NC, USA.

25. The National Committee for Quality Assurance. The State of Healthcare Quality 2012: Focus on Obesity and Medicare Plan Improvement. http://www.ncqa.org/Portals/0/State%20of%20Health%20Care/2012/SOHC%20Report%20Web.pdf. Published 2012. Accessed March 13, 2013.

26. Wang JJ, Sebek KM, McCullough CM, et al. Sustained Improvement in Clinical Preventive Service Delivery Among Independent Primary Care Practices After Implementing Electronic Health Records. Prev Chronic Dis. 2013;10:120341. doi: 10.5888/pcd10.120341.

27. Chaudhry B, Wang J, Wu S, et al. Systematic Review: Impact of Health Information Technology on Quality, Efficiency, and Costs of Medical Care. Ann Intern Med. 2006;144:742–752. doi: 10.7326/0003-4819-144-10-200605160-00125.

28. Buntin MB, Burke MF, Hoaglin MC, et al. The Benefits of Health Information Technology: A Review of the Recent Literature Shows Predominantly Positive Results. Health Aff (Millwood). 2011;30(3):464–471. doi: 10.1377/hlthaff.2011.0178.

29. Zheng K, Guo MH, Hanauer DA. Using Time and Motion Method to Study Clinical Work Processes and Workflow: Methodological Inconsistencies and a Call for Standardized Research. J Am Med Inform Assoc. 2011;18(5):704–710. doi: 10.1136/amiajnl-2011-000083.

30. Yackel TR, Embi PJ. Unintended Errors with EHR-Based Result Management: A Case Series. J Am Med Inform Assoc. 2010;17(1):104–107. doi: 10.1197/jamia.M3294.

31. Parsons A, McCullough C, Wang J, et al. Validity of Electronic Health Record-Derived Quality Measurement for Performance Monitoring. J Am Med Inform Assoc. 2012;19(4):604–609. doi: 10.1136/amiajnl-2011-000557.

32. King J, Patel V, Furukawa M. Physician Adoption of Electronic Health Record Technology to Meet Meaningful Use Objectives: 2009–2012. ONC Data Brief #7; December 2012.

33. Ryan AM, Bishop TF, Shih S, et al. Small Physician Practices in New York Needed Sustained Help to Realize Gains in Quality from Use of Electronic Health Records. Health Aff (Millwood). 2013;32(1):53–62. doi: 10.1377/hlthaff.2012.0742.