Short abstract

Complex interventions are more than the sum of their parts, and interventions need to be better theorised to reflect this

Many people think that standardisation and randomised controlled trials go hand in hand. Making an intervention look as similar as possible in different places is thought to be paramount. But this may be why some community interventions have had weak effects. We propose a radical departure from the way large scale interventions are typically conceptualised. This could free interventions to be responsive to local context, and potentially more effective, while still allowing meaningful evaluation in controlled designs. The key lies in looking past the simple elements of a system to embrace complex system functions and processes.

Divergent views

The suitability of cluster randomised trials for evaluating interventions directed at whole communities or organisations remains vexed.1 It need not be.2 Some health promotion advocates (including the WHO European working group on health promotion evaluation) believe randomised controlled trials are inappropriate because of the perceived requirement for interventions in different sites to be standardised or look the same.1,3,4 They have abandoned randomised trials because they think context level adaptation, which is essential for interventions to work, is precluded by trial designs. An example of context level adaptation might be adjusting educational materials to suit various local learning styles and literacy levels.

Leading bodies in the field of complex interventions, such as the UK's Medical Research Council (MRC), also think that trials of complex interventions must “consistently provide as close to the same intervention as possible” by “standardising the content and delivery of the intervention.”5 Unlike these health promotion advocates, however, they do not see this as a reason to reject randomised controlled trials.

These divergent views have created problems on two fronts. Firstly, the field of health promotion is being turned away from randomised controlled trials.1,3,4 This could have serious consequences for the future accumulation of high quality evidence about prevention. Secondly, when trials with organisations and whole communities do go ahead, the story is increasingly one of expensive failure: weak or non-significant findings at huge cost.6-8 Could one reason these interventions have not worked be that their components have been overly standardised?

Something has to change. The current view about standardisation is at odds with the notion of complex systems. We believe that an alternative way to view standardisation could allow state of the art interventions (and ones that might look different in different sites) to be more effective and to be meaningfully evaluated in a randomised controlled trial. First, however, we have to re-examine our understanding of the term complex intervention.

What is a complex intervention?

The MRC document A Framework for the Development and Evaluation of Randomised Controlled Trials for Complex Interventions to Improve Health argues that “the greater the difficulty in defining precisely what exactly are the ‘active ingredients’ of an intervention and how they relate to each other, the greater the likelihood that you are dealing with a complex intervention.”5 The document gives examples of complex interventions ranging from the setting up of new healthcare teams, to interventions to get treatment guidelines adopted, to whole community education interventions. Setting aside the problem that this definition is also consistent with a poorly thought through intervention, we believe that the field could benefit from delving further into complexity science.

Complexity is defined as “a scientific theory which asserts that some systems display behavioral phenomena that are completely inexplicable by any conventional analysis of the systems' constituent parts.”9 Reducing a complex system to its component parts amounts to “irretrievable loss of what makes it a system.”9 Those of us who have decomposed interventions into components for process evaluation might feel uncomfortable at this point. We may, for example, have described an intervention simply in terms of the percentage of general practitioners who attended the training workshops and the percentage of patients who reported having read the leaflets. Thinking about process evaluation in this way is the norm.10,11 But in doing so, have we really captured the essence of the intervention? We have, if we think our intervention is no more than the sum of its parts. But that is not, by definition, a complex intervention. It remains a simple one.

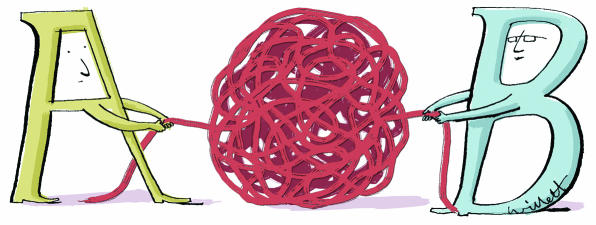

Figure 1.

Standardising complex interventions

So, could a controlled trial design (which requires something to be replicable and recognisable as the intervention in each site) ever be appropriate to evaluate a (truly) complex intervention? The answer is yes. The crucial point lies in “what” is standardised. Rather than defining the components of the intervention as standard (for example, the information kit, the counselling intervention, the workshops), what should be defined as standard are the steps in the change process that the elements are intended to facilitate, or the key functions that they are meant to have. For example, “workshops for general practitioners” are better regarded as mechanisms to engage general practitioners in organisational change or to train them in a particular skill. These mechanisms could then take on different forms according to local context, while achieving the same objective (table).12

Table 1.

Example of alternative ways to standardise a whole community intervention to prevent depression in a cluster trial*

| Principle of intervention | Type of standardisation: by form | Type of standardisation: by function |
|---|---|---|
| To educate patients about depression | All sites distribute the same written patient information kit | All sites devise ways to distribute information tailored to local literacy, language, culture, and learning styles |
| To improve detection, management, and referral of patients in primary care | All sites hold a series of three in-service training workshops for general practitioners with preset curriculums | Local health authorities are provided with materials and resources to devise in-service training tailored to local schedules, venues, and preferred learning methods |
| To involve local residents and decision makers in order to increase uptake, effectiveness, and sustainability of the intervention | A local intervention steering committee is convened in each site with representatives of pre-specified organisations | Mechanisms are devised to engage local key agencies and consumers in decision making about the intervention. Suggested options: steering committee, consultations, surveys, website, phone-ins |
| To harness and facilitate material, emotional, informational, and affirmational support across social networks of people in particular life stages | All mothers of new babies are invited to join discussion and mutual support groups. People moving into nursing homes receive three friendly visits from a designated resident | Methods to alter network size, network diversity, contact frequency, reciprocity, or types of exchanges are tailored to subgroup preferences |

Defining integrity of interventions

With most (simple) interventions, integrity is defined as having the “dose” delivered at an optimal level and in the same way in each site.10 Complex intervention thinking defines integrity differently: the aim is to allow the form to be adapted while standardising the process and function. There are some precedents for this. For example, Mullen and colleagues conducted a meta-analysis of 500 patient education trials and showed that interventions were more likely to be effective if they met particular criteria consistent with behavioural change theory, for example being tailored to the patient's individual learning needs or being set up to provide feedback about the patient's progress.17 These indicators of quality were driven by theory and concerned the functions provided by the key elements of the intervention rather than the elements themselves (such as a video).

Context level adaptation does not have to mean that the integrity of what is being evaluated across multiple sites is lost. Integrity defined functionally, rather than compositionally, is the key.
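As a purely illustrative sketch (not a method used in any of the trials cited here), the difference between compositional and functional integrity can be expressed as two checks applied to the same record of what each site delivered. The site names, activity labels, and function labels below are hypothetical, loosely based on the depression prevention example in the table. In practice, judging whether a function was actually achieved would rest on theory and process evaluation, not on a simple checklist.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical labels, loosely based on the depression prevention example.
# Standardisation by form: every site must deliver these exact activities.
STANDARD_FORMS = {"written_kit", "three_gp_workshops", "steering_committee"}

# Standardisation by function: every site must achieve these change-process
# functions, but may choose any form to do so.
STANDARD_FUNCTIONS = {
    "educate_patients",
    "improve_gp_detection_and_referral",
    "engage_local_decision_makers",
}


@dataclass
class SiteDelivery:
    """What one (hypothetical) site actually delivered."""
    site: str
    forms: set[str]      # concrete activities used at this site
    functions: set[str]  # functions those activities were judged to serve


def integrity_by_form(d: SiteDelivery) -> bool:
    # Compositional integrity: every prescribed activity is present.
    return STANDARD_FORMS <= d.forms


def integrity_by_function(d: SiteDelivery) -> bool:
    # Functional integrity: every prescribed function is achieved, in any form.
    return STANDARD_FUNCTIONS <= d.functions


sites = [
    SiteDelivery(
        "A",  # delivers the prescribed activities exactly
        forms={"written_kit", "three_gp_workshops", "steering_committee"},
        functions=set(STANDARD_FUNCTIONS),
    ),
    SiteDelivery(
        "B",  # different activities, tailored to local context
        forms={"community_radio_phone_in", "practice_lunchtime_sessions", "resident_survey"},
        functions=set(STANDARD_FUNCTIONS),
    ),
]

for d in sites:
    print(f"{d.site}: form={integrity_by_form(d)}, function={integrity_by_function(d)}")
# A: form=True, function=True
# B: form=False, function=True
```

Under the form-based check, site B would be counted as a deviation from protocol; under the function-based check, both sites deliver the same intervention. This is the sense in which a trial could remain controlled while allowing local adaptation.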

Real world contexts

We are not the first to think this way. In school health, Durlak discussed non-standard interventions that “cannot be compartmentalised into a predetermined number and sequence of activities.”18 This sounds very much like a complex intervention. Such interventions, characterised by activities like capacity building and organisational change, have specific, theory driven principles that ensure that non-standard interventions (different forms in different contexts) conform to standard processes. They can still be evaluated in randomised controlled trials. Indeed, a randomised controlled trial of such an intervention (which is “out of control” to some ways of thinking) might be exactly what is required to provide more convincing evidence that community development interventions are effective.

More studies of this type would help to reverse the current evidence imbalance when policy makers weigh up “best buys” in health promotion. At present they often have to compare traditional areas like asthma education (which usually come with randomised controlled trial evidence) with community development (which is usually supported only by case study evidence).19 The more conservative, patient targeted interventions backed by randomised controlled trials generally win hands down.19

Rethinking ways to use the intervention-context interaction to maximum effect may make complex interventions stronger. The MRC document on complex intervention trials calls for standardisation but also recognises the need in the exploratory phase to “describe the constant and variable components of a replicable intervention.”5 But it does not say how to make this distinction.

An alternative way of thinking about standardisation may help. The fixed aspects of the intervention are its essential functions; the variable aspect is their form in different contexts. In this way an intervention evaluated in a pragmatic, effectiveness, or real world trial would no longer be defined haphazardly, as it sometimes is now,20 as the fallback whenever researchers were unable to deliver the standardised components they had idealised. Instead, with lateral thinking, theorising about the real world context would become the ideal,21,22 reversing current custom.23 That is, instead of mimicking trial phases which assume that the “best” or the “ideal” comes from the laboratory and is progressively compromised in real world applications, community trial design would start by trying to understand communities themselves as complex systems, and how the health problem or phenomenon of interest is recurrently produced by that system.

Summary points

Standardisation has been taken to mean that all the components of an intervention are the same in different sites

This definition treats a potentially complex intervention as a simple one

In complex interventions, the function and process of the intervention should be standardised, not the components themselves

This allows the form to be tailored to local conditions and could improve effectiveness

Intervention integrity would be defined as evidence of fit with the theory or principles of the hypothesised change process

Conclusion

The shackles of simple intervention thinking may prove hard to throw off. Even when an intervention is described as complex, signs of simple intervention thinking will be apparent in how it is described and in whether integrity is tied to the presence of certain standardised forms. Investigators should justify the approach they take, that is, whether their interventions are theorised as simple or complex. Complex systems rhetoric should not become an excuse for “anything goes.” More critical interrogation of intervention logic may build stronger, more effective interventions.

Contributors and sources: All authors were collaborators in a cluster randomised intervention trial in maternal health promotion.14 All are participating in a new international collaboration on complex interventions funded by the Canadian Institutes of Health Research. PH drafted the original idea for the paper based on experience and conversations with TR and AS. All contributed to developing the idea and writing the paper.

Funding: PH and AS are senior scholars of the Alberta Heritage Foundation for Medical Research. PH is also supported by an endowment as Markin Chair in Health and Society at the University of Calgary.

Competing interests: None declared.

References

1. Nutbeam D. Evaluating health promotion: progress, problems and solutions. Health Promot Int 1998;13:27-44.

2. Oakley A. Experiments in knowing. Cambridge: Polity Press, 2000.

3. Tones K. Evaluating health promotion: a tale of three errors. Patient Educ Couns 2000;39:227-36.

4. World Health Organisation Europe. Health promotion evaluation: recommendations for policy makers. Copenhagen: WHO Working Group on Health Promotion Evaluation, 1999.

5. Medical Research Council. A framework for the development and evaluation of randomised controlled trials for complex interventions to improve health. London: MRC, 2000.

6. Secker-Walker RH, Gnich W, Platt S, Lancaster T. Community interventions for reducing smoking among adults. Cochrane Database Syst Rev 2002;(3):CD001745.

7. Susser M. The tribulations of trials. Am J Public Health 1995;85:156-8.

8. Thompson B, Coronado G, Snipes SA, Puschel K. Methodological advances and ongoing challenges in designing community based health promotion interventions. Annu Rev Public Health 2003;24:315-40.

9. Casti JL. Would-be worlds: how simulation is changing the frontiers of science. New York: John Wiley, 1997.

10. Flora JA, Lefebvre RC, Murray DM, Stone EJ, Assaf A, Mittelmark MB, et al. A community education monitoring system: methods from the Stanford Five-City Project, the Minnesota Heart Health Intervention and the Pawtucket Heart Health Intervention. Health Educ Res 1993;8:81-95.

11. Hawe P, Degeling D, Hall J. Evaluating health promotion: a health worker's guide. Sydney: MacLennan and Petty, 1990.

12. Castro FG, Barrera M, Martinez CR. The cultural adaptation of preventive interventions: resolving tensions between fidelity and fit. Prev Sci 2004;5:41-5.

13. Llewellyn-Jones RH, Baikie KA, Smithers H, Cohen J, Snowden J, Tennant CC. Multifaceted shared care intervention for later life depression in residential care: a randomised trial. BMJ 1999;319:677-82.

14. Lumley J, Small R, Brown S, Watson L, Gunn J, Mitchell C, Dawson W. PRISM (program of resources, information and support for mothers): protocol for a community-randomised trial. BMC Public Health 2003;3:36.

15. Paton J, Jenkins R, Scott J. Collective approaches for the control of depression in England. Soc Psychiatry Psychiatr Epidemiol 2001;36:423-8.

16. Israel BA. Social networks and social support: implications for natural helping and community level interventions. Health Educ Q 1985;12:65-80.

17. Mullen PD, Green LW, Persinger GS. Clinical trials of patient education for chronic disease: a comparative meta-analysis of intervention types. Prev Med 1985;14:735-81.

18. Durlak JA. Why intervention implementation is important. J Prev Intervent Community 1998;17(2):5-18.

19. Hawe P, Shiell A. Preserving innovation under increasing accountability pressures: the health promotion investment portfolio approach. Health Promot J Aust 1995;5(2):4-9.

20. McMahon AD. Study control, violators, inclusion criteria and defining explanatory and pragmatic trials. Stat Med 2002;21:1365-76.

21. Bauman LJ, Stein REK, Ireys HT. Reinventing fidelity: the transfer of social technology among settings. Am J Community Psychol 1991;19:619-39.

22. Ottoson JM, Green LW. Reconciling concept and context: theory of implementation. Adv Health Educ Promot 1987;2:353-82.

23. Flay BR. Efficacy and effectiveness trials (and other phases of research) in the development of health promotion interventions. Prev Med 1986;15:451-74.