Abstract

High-dose-rate (HDR) brachytherapy has become a popular treatment modality for localized prostate cancer. Prostate HDR treatment involves placing 10 to 20 catheters (needles) into the prostate gland and then delivering radiation dose to the cancerous regions through these catheters. The catheters are often inserted under transrectal ultrasound (TRUS) guidance, while the HDR treatment plan is based on CT images. The main challenge for CT-based HDR planning is accurate segmentation of the prostate volume in CT images, which is hampered by poor soft-tissue contrast and by additional artifacts introduced by the catheters. To overcome these limitations, we propose a novel approach that segments the prostate in CT images through TRUS-CT deformable registration based on the catheter locations. In this approach, the HDR catheters are reconstructed from the intra-operative TRUS and planning CT images and then used as landmarks for the TRUS-CT image registration. The prostate contour generated from the TRUS images captured during the ultrasound-guided HDR procedure is then used to segment the prostate on the CT images through deformable registration. We conducted two studies. A prostate-phantom study demonstrated submillimeter accuracy of our method. A pilot study of 5 prostate-cancer patients was conducted to further test its clinical feasibility. All patients had 3 gold markers implanted in the prostate, which were used to evaluate the registration accuracy, as well as previous diagnostic MR images, which were used as the gold standard to assess the prostate segmentation. For the 5 patients, the mean gold-marker displacement was 1.2 mm, the prostate volume difference between our approach and MRI was 7.2%, and the Dice volume overlap was over 91%. Our proposed method could improve prostate delineation, enable accurate dose planning and delivery, and potentially enhance prostate HDR treatment outcome.

Keywords: Prostate, CT, segmentation, transrectal ultrasound (TRUS), ultrasound-guided, HDR, brachytherapy

1. INTRODUCTION

In recent years, an increasing number of men, many of them younger, have been undergoing prostate high-dose-rate (HDR) brachytherapy instead of radical prostatectomy for localized prostate cancer [1, 2]. Prostate HDR treatment involves placing 10 to 20 catheters (needles) into the prostate gland and then delivering radiation dose to the cancerous regions through these catheters. In CT-based prostate HDR brachytherapy, catheter insertion is commonly performed under the guidance of intra-operative transrectal ultrasound (TRUS), while treatment planning is based on post-operative CT images.

HDR prostate brachytherapy depends greatly on the precise delineation of the prostate gland on CT images. As is well known, it is challenging to define the prostate volume in CT images due to the poor soft-tissue contrast between the prostate and its surrounding tissue (background). This problem worsens in the HDR procedure, because the 10 to 20 HDR catheters inserted inside the prostate introduce significant artifacts to the prostate CT images.

Many segmentation methods have been proposed for prostate delineation in CT images. Chowdhury et al. proposed a linked statistical shape model (LSSM) that links the shape variation of a structure of interest across MR and CT imaging modalities to concurrently segment the prostate on pelvic CT images [3]. Feng et al. presented a deformable-model-based segmentation method that uses both shape and appearance information learned from previous images to guide automatic segmentation of a new set of images [4]. Ghosh et al. combined high-level texture features and prostate-shape information with a genetic algorithm to identify the best matching region in the new, to-be-segmented prostate CT image [5]. Li et al. presented an online-learning, patient-specific classification method based on location-adaptive image context to segment the prostate in CT images [6]. Liao et al. used a patch-based representation in the discriminative feature space with logistic sparse LASSO as the anatomical signature to deal with low-contrast problems in prostate CT images, and designed a multi-atlas label fusion method formulated under a sparse representation framework to segment the prostate [7]. Chen et al. proposed a Bayesian framework that considers anatomical constraints from bones and learned appearance information to construct the deformable model [8]. These previous methods are all based on the appearance and texture of the prostate in CT images, and therefore may not work well in the HDR procedure, where strong artifacts generated by the HDR catheters smear the boundary and texture of the prostate on CT images.

In this study, we propose a novel approach that deforms intra-operative TRUS-based prostate contours into the CT images for prostate segmentation through TRUS-CT registration using the catheter locations. Our segmentation approach was evaluated through two studies: a prostate-phantom study and a clinical study of 5 patients undergoing HDR brachytherapy for prostate cancer.

2. METHODS

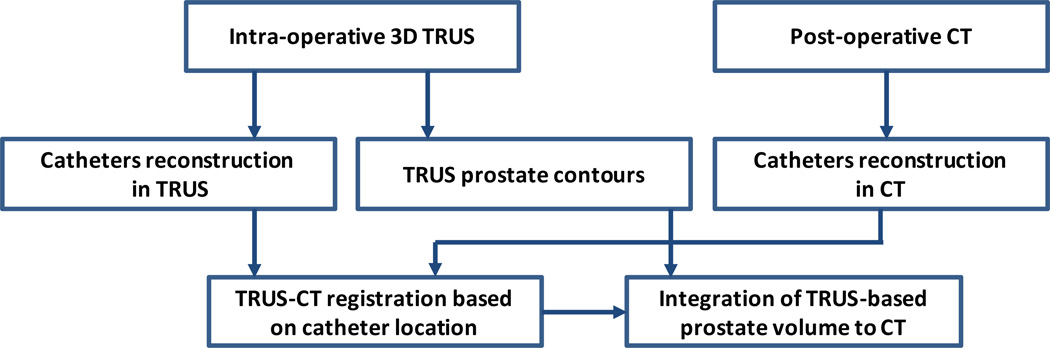

Our prostate segmentation method for the HDR prostate brachytherapy consists of 5 major steps (Fig. 1).

Step 1: 3D TRUS prostate images are captured after catheter insertion during the HDR procedure.

Step 2: After the catheter insertion, each patient receives a post-operative CT scan.

Step 3: The prostate volume is contoured in the 3D TRUS images.

Step 4: A TRUS-CT deformable registration is performed using the catheters as landmarks.

Step 5: The TRUS-based prostate contour is integrated into the treatment planning CT images to segment the prostate for the HDR brachytherapy treatment.

Fig. 1. Flow chart of prostate segmentation in TRUS-guided CT-based HDR brachytherapy.

Our approach requires acquisition of 3D TRUS prostate images in the operating room (OR) right after the HDR catheters are inserted, which takes 1–3 minutes. These TRUS images are then used to create prostate contours. The HDR catheters reconstructed from the intra-operative TRUS and post-operative CT images are used as landmarks for the TRUS-CT deformable registration, which is based on a robust point matching algorithm.
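How the reconstructed catheters are turned into discrete landmarks is not detailed here. As a minimal sketch of one plausible option, assuming each catheter is available as a 3D polyline in millimetres, the centerline can be resampled at equal arc-length steps. The function name `resample_catheter`, the 5 mm spacing and the example coordinates are hypothetical and chosen only for illustration.

```python
import numpy as np

def resample_catheter(points_mm, spacing_mm):
    """Resample a catheter centerline (N x 3 polyline, in mm) at equal arc-length steps."""
    points_mm = np.asarray(points_mm, dtype=float)
    seg = np.linalg.norm(np.diff(points_mm, axis=0), axis=1)  # length of each segment
    arc = np.concatenate([[0.0], np.cumsum(seg)])             # cumulative arc length
    targets = np.arange(0.0, arc[-1] + 1e-9, spacing_mm)      # positions to sample
    # Interpolate each coordinate independently along the arc length.
    return np.column_stack(
        [np.interp(targets, arc, points_mm[:, k]) for k in range(3)]
    )

# Hypothetical catheter digitised at three points (illustrative values only).
catheter = [[0.0, 0.0, 0.0], [0.0, 0.0, 10.0], [0.0, 3.0, 14.0]]
landmarks = resample_catheter(catheter, spacing_mm=5.0)
print(landmarks)
```

Sampling both the TRUS and the CT reconstruction of each catheter in this way would give two point sets of comparable density, which is the kind of input a point matching algorithm expects.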

The correspondences between the catheter landmarks in the two images are described by a fuzzy correspondence matrix P. The matrix P = {pij} consists of two parts. The I × J inner submatrix defines the correspondences between the landmark sets X and Y. It is worth noting that the pij take real values between 0 and 1, which denote the fuzzy correspondences between landmarks [9, 10]. To perform the TRUS-CT deformable registration, we design an overall similarity function that integrates the similarities between catheter-type landmarks and smoothness constraints on the estimated transformation between the catheters in the CT and TRUS images.
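The expression for the overall similarity function does not appear in this text. As a hedged sketch only, the four terms described in the next paragraph are consistent with the robust point matching energy of Chui and Rangarajan [9]. Under that assumption, and writing x_i and y_j for the landmarks in X and Y and L for a smoothness operator (these three symbols are not taken from the text), it would take the form:

$$
E(P, f) = \sum_{i=1}^{I}\sum_{j=1}^{J} p_{ij}\,\lVert x_i - f(y_j)\rVert^{2}
\;+\; \alpha \sum_{i=1}^{I}\sum_{j=1}^{J} p_{ij}\log p_{ij}
\;-\; \beta \sum_{i=1}^{I}\sum_{j=1}^{J} p_{ij}
\;+\; \lambda\,\lVert L f\rVert^{2}
$$

In the formulation of [9], P is additionally augmented with one extra row and one extra column that absorb outlier landmarks. That may be the second part of P mentioned above, but this reading is an assumption.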

α, β and λ are the weights of the energy terms. The first, second and third terms measure the similarity of the catheter landmarks, and the fourth term is the smoothness constraint. α is called the temperature parameter, and the term it weights is an entropy term that comes from the deterministic annealing technique [11]. β is the weight of the outlier rejection term. f denotes the transformation between the CT and TRUS images. The overall similarity function can be minimized by an alternating optimization algorithm that successively updates the correspondence matrix P and the transformation function f. After the TRUS-CT registration, the TRUS-based prostate volume is deformed onto the CT images to complete the CT prostate segmentation for treatment planning.
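The correspondence-update half of such an alternating scheme can be sketched as follows. This is a minimal illustration in the style of the softassign step of [9], not the implementation used in this work: the outlier row and column, their constant weight `1e-3`, the iteration count and the example coordinates are all assumptions, and the update of the transformation f is omitted.

```python
import numpy as np

def fuzzy_correspondence(X, Y, temperature, n_iter=50):
    """Return an (I+1) x (J+1) fuzzy correspondence matrix for X (I x 3) and Y (J x 3).

    The last row and column are outlier slots (an assumption borrowed from [9]).
    """
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    I, J = len(X), len(Y)
    # Squared distance between every landmark pair.
    d2 = ((X[:, None, :] - Y[None, :, :]) ** 2).sum(axis=2)
    P = np.full((I + 1, J + 1), 1e-3)       # small constant outlier weight (assumed)
    P[:I, :J] = np.exp(-d2 / temperature)   # closer pairs get larger weights
    # Alternate row and column normalisation over the real landmarks only.
    for _ in range(n_iter):
        P[:I, :] /= P[:I, :].sum(axis=1, keepdims=True)
        P[:, :J] /= P[:, :J].sum(axis=0, keepdims=True)
    return P

# Two hypothetical landmarks per image, 0.5 mm apart after an initial alignment.
X = [[0.0, 0.0, 0.0], [10.0, 0.0, 0.0]]
Y = [[0.5, 0.0, 0.0], [9.5, 0.0, 0.0]]
P = fuzzy_correspondence(X, Y, temperature=1.0)
print(np.round(P[:2, :2], 3))
```

At a high temperature the inner entries are spread almost evenly, and as the temperature is lowered they approach a binary assignment, which is the behaviour deterministic annealing relies on.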

3. RESULTS

3.1 Phantom study

Our prostate segmentation method was first tested with a prostate phantom (CIRS Model 053). In this phantom, a tissue-mimicking prostate, along with structures simulating the rectal wall, seminal vesicles and urethra, is contained within an 11.5×7.0×9.5 cm³ clear plastic container. To mimic a prostate HDR procedure, 14 catheters were implanted into the prostate gland under ultrasound (US) guidance. In addition, three gold markers were implanted at the base, mid or apex of the prostate. After the prostate segmentation, the displacements of these gold markers between the CT and post-registration TRUS images were used to test the accuracy of our registration. Because the phantom prostate boundary is clear on the CT images, we used the manually segmented CT prostate volume as the gold standard to evaluate the performance of our prostate segmentation.

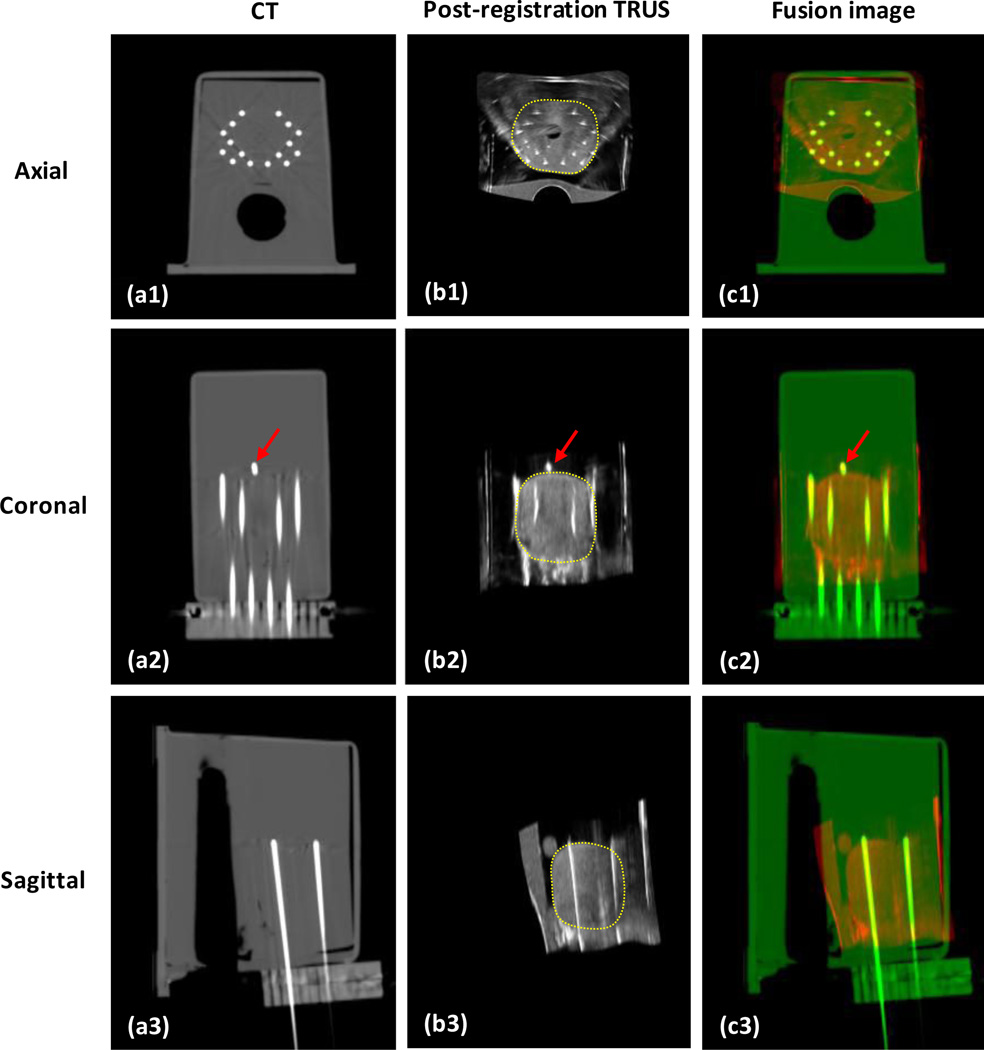

Figure 2 shows the integration of the TRUS-based prostate volume into the CT images. The phantom TRUS scan was captured with a transverse (axial) pixel size of 0.08×0.08 mm² and a step size (slice thickness) of 0.5 mm. The phantom CT was scanned with a voxel size of 0.29×0.29×1.00 mm³. The catheter artifacts are clearly displayed on the axial CT image. On the coronal images, the close match of a gold marker location between the post-registration US and CT is shown. The yellow dotted contour is the prostate transferred from the TRUS images after deformable registration between the TRUS and CT images.

Fig. 2. Integration of TRUS-based prostate volume into CT images.

a1-a3 are CT images of the prostate phantom; b1-b3 are the post-registration TRUS images; c1-c3 are the fusion images between CT and post-registration TRUS images. The close match between the gold markers (red arrows) in the CT and TRUS demonstrated the accuracy of our method.

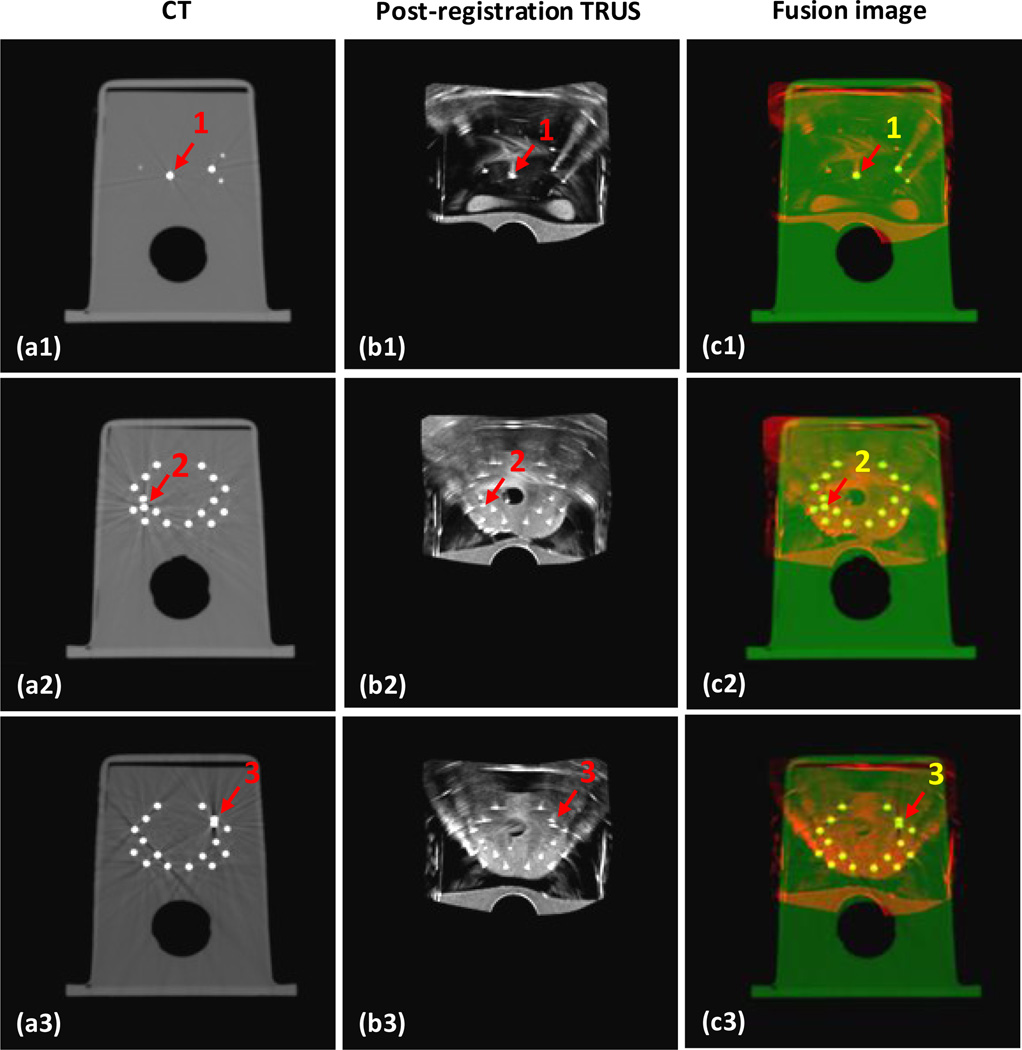

Figure 3 shows the 3 gold markers on the CT, fusion and post-registration TRUS images. The 3 gold markers are located at the base, left mid and right mid of the prostate gland. Visually, the locations of the gold markers on the post-registration TRUS and CT images are very close. Quantitatively, the displacements of the three gold markers between the post-registration TRUS and CT images were 0.51, 0.29 and 0.42 mm, respectively. The mean displacement of the 3 gold markers was 0.41 mm; our registration between the CT and TRUS therefore achieved sub-millimeter accuracy.
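The arithmetic behind this summary can be checked with a short sketch. The three displacements are the values reported above; the helper `displacement_mm` and the pair of marker coordinates passed to it are hypothetical, included only to show how a single displacement would be computed from two marker positions in millimetres.

```python
import math

def displacement_mm(p, q):
    """Euclidean distance between two 3D marker positions given in millimetres."""
    return math.dist(p, q)

# Displacements reported for the three phantom gold markers (mm).
reported_mm = [0.51, 0.29, 0.42]
mean_mm = sum(reported_mm) / len(reported_mm)
print(f"mean displacement: {mean_mm:.2f} mm")  # prints "mean displacement: 0.41 mm"

# Hypothetical marker positions in CT and post-registration TRUS (illustrative only).
example = displacement_mm((10.0, 20.0, 30.0), (10.3, 20.4, 30.0))
print(f"example displacement: {example:.2f} mm")
```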

Fig. 3. Segmentation accuracy is demonstrated through 3 gold markers in the prostate phantom.

a1-a3 are axial CT images of the prostate phantom; b1-b3 are its post-registration TRUS images; c1-c3 are the fusion images between the CT and post-registration TRUS images; and d1-d3 are the TRUS-CT fusion images, where the prostate volume is integrated. The close match between the 3 gold markers (red arrows) in the CT and TRUS demonstrated the accuracy of our registration.

To evaluate the accuracy of our prostate segmentation, we compared our segmented prostate with the prostate volume manually contoured on the CT images. Volume difference is an essential measurement in the morphometric assessment of anatomical structures, so we report both the absolute volume difference and the Dice volume overlap between the two contours. In this phantom study, they were 1.65% and 97.84%, respectively. This small volume difference and large volume overlap between the contour generated by the proposed method and the gold standard demonstrate the segmentation accuracy.
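The exact formulas for the two metrics are not spelled out here. A minimal sketch, assuming the commonly used definitions (Dice = 2|A∩B| / (|A| + |B|), and absolute volume difference taken relative to the gold-standard volume) and binary masks sampled on the same CT grid, could look like this. The function names and the toy masks are illustrative and do not reproduce the phantom data.

```python
import numpy as np

def dice_percent(seg, ref):
    """Dice volume overlap (in %) between two binary masks on the same grid."""
    seg = np.asarray(seg, dtype=bool)
    ref = np.asarray(ref, dtype=bool)
    inter = np.logical_and(seg, ref).sum()
    return 200.0 * inter / (seg.sum() + ref.sum())

def abs_volume_difference_percent(seg, ref):
    """Absolute volume difference (in %) relative to the reference mask."""
    seg_voxels = int(np.asarray(seg, dtype=bool).sum())
    ref_voxels = int(np.asarray(ref, dtype=bool).sum())
    return 100.0 * abs(seg_voxels - ref_voxels) / ref_voxels

# Toy masks: a 6x6x6 reference cube and a segmentation missing one slice.
ref = np.zeros((10, 10, 10), dtype=bool)
ref[2:8, 2:8, 2:8] = True          # 216 voxels
seg = np.zeros_like(ref)
seg[2:8, 2:8, 3:8] = True          # 180 voxels, all inside the reference

print(f"Dice: {dice_percent(seg, ref):.2f}%")
print(f"Volume difference: {abs_volume_difference_percent(seg, ref):.2f}%")
```

Because both masks share one voxel grid, the voxel volume cancels out of both percentages, so voxel counts can be used directly.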

3.2 In vivo patient study

We conducted a retrospective clinical study of 5 patients who had received HDR brachytherapy for localized prostate cancer. All patients received diagnostic magnetic resonance imaging (MRI) scans before the HDR brachytherapy treatment. For the HDR brachytherapy, 14–18 catheters and 3 gold markers were implanted in each patient under TRUS guidance, with the gold markers placed at the base, middle and apex of the prostate. Each patient therefore had a diagnostic MR scan, an intra-operative 3D ultrasound scan and a post-operative CT scan. The TRUS prostate images were acquired with a 2 mm step size and a 0.07×0.07 mm² transverse pixel size. The CT images were acquired with a voxel size of 0.68×0.68×1.00 mm³, and the MR images with a voxel size of 1.0×1.0×2.0 mm³.

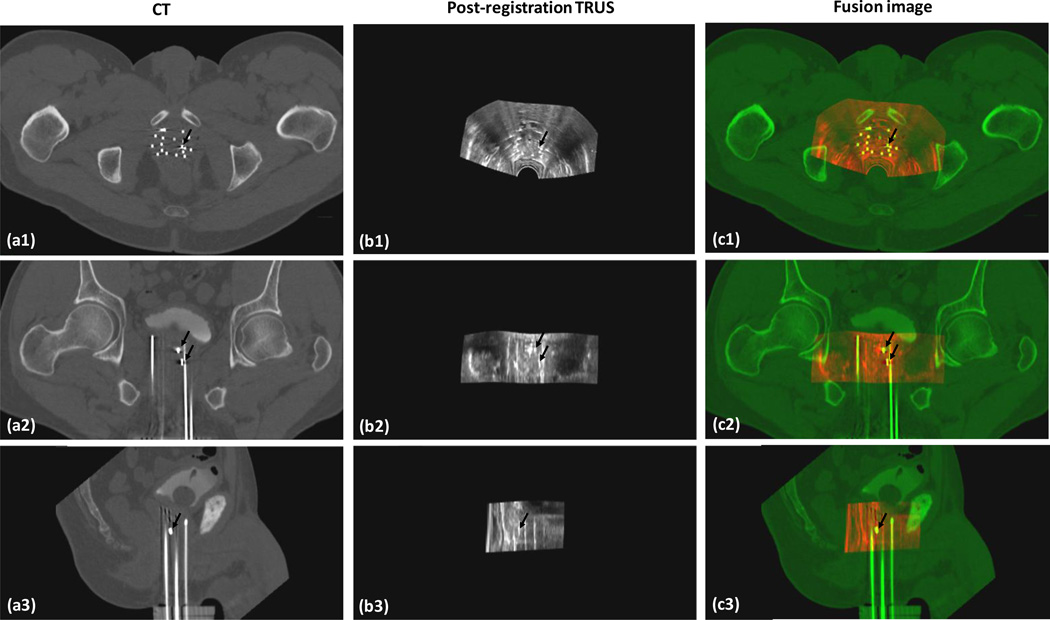

In this clinical study, the prostate segmentation was successfully performed for all 5 patients. Figure 4 shows the prostate registration results between the CT and TRUS images of a 58-year-old patient. The accuracy of the CT-TRUS image registration was evaluated by the displacement of each gold marker between the CT and post-registration TRUS images. The per-patient mean displacement of the gold markers between the CT and registered TRUS images ranged from 1.1 to 1.6 mm. Overall, the mean displacement of the 3 gold markers across all patients was 1.2±0.3 mm. The registration of the proposed method therefore achieved millimeter-level accuracy.

Fig. 4. TRUS-CT registration results.

a1-a3 are CT images of a 58-year-old prostate-cancer patient; b1-b3 are his post-registration TRUS images; and c1-c3 are the fusion images between the CT and post-registration TRUS images. The close match between the gold markers (dark arrows) on the CT and TRUS demonstrated the accuracy of our registration method.

To evaluate the accuracy of our prostate segmentation, we used MRI-defined prostate volumes. Studies have shown that MRI has high soft-tissue contrast and can provide accurate prostate delineation [12, 13]. We therefore used the prostate manually contoured on the MR images as the gold standard to evaluate our prostate segmentation. Because the patient's position varies between the CT and MR scans, the prostate shape can differ between CT and MRI. We therefore transformed the MRI-defined prostate volumes onto the CT images by MR-CT deformable registration [14–17]. Finally, our segmented prostate volumes were compared with those defined from the MR images.

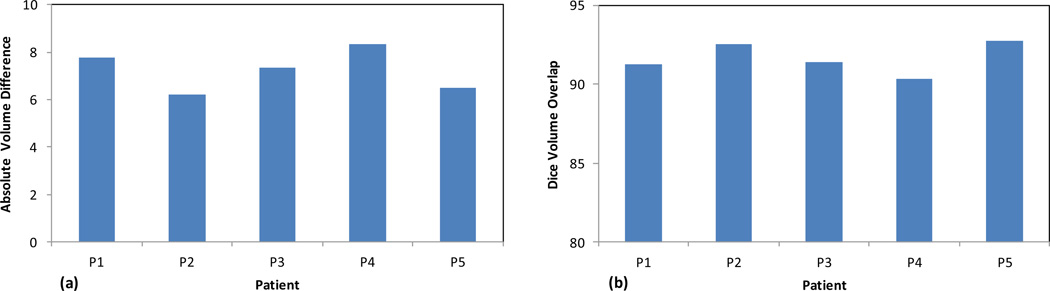

Figure 5 shows the absolute volume difference and Dice volume overlap between the MRI-defined prostate contours and our prostate segmentation for all 5 patients. The average absolute prostate-volume difference between our approach and the corresponding MRI was 7.2±0.9%, and the average Dice volume overlap was 91.6±1.3%. The small prostate volume difference and large volume overlap demonstrated the robustness of our prostate segmentation method.

Fig. 5. Evaluation of our prostate segmentation accuracy.

(a) Absolute volume difference and (b) Dice volume overlap between the TRUS-based and MRI-defined prostate volumes of the 5 prostate-cancer patients.

4. CONCLUSION

In this study, we have proposed a novel CT prostate segmentation method for prostate HDR brachytherapy that uses TRUS-CT deformable registration based on the catheter locations. While it is well known that accurate CT prostate segmentation is challenging, the 10–20 HDR catheters inserted inside the prostate worsen the problem by introducing significant artifacts in the CT images. In our approach, we rely on the 3D TRUS images to provide accurate prostate delineation, and then use the HDR catheters as landmarks to register the 3D TRUS prostate images to the CT images. Our approach is therefore not affected by the catheter artifacts and can accurately segment the prostate volume in CT images. The proposed approach was evaluated through a prostate phantom study and a pilot clinical study of 5 patients. In future work, we will incorporate automatic prostate segmentation methods into our 3D TRUS prostate segmentation to speed up the process [18–23]. Our prostate segmentation technology could improve prostate delineation, enable accurate dose planning and delivery, and potentially enhance prostate HDR treatment outcomes.

ACKNOWLEDGEMENTS

This research is supported in part by the Department of Defense (DoD) Prostate Cancer Research Program (PCRP) Award W81XWH-13-1-0269 and National Cancer Institute (NCI) Grant CA114313.

REFERENCES

1. Mettlin CJ, Murphy GP, McDonald CJ, et al. The National Cancer Data Base report on increased use of brachytherapy for the treatment of patients with prostate carcinoma in the U.S. Cancer. 1999;86(9):1877–1882.

2. Cooperberg MR, Lubeck DP, Meng MV, et al. The changing face of low-risk prostate cancer: trends in clinical presentation and primary management. J Clin Oncol. 2004;22(11):2141–2149. doi: 10.1200/JCO.2004.10.062.

3. Chowdhury N, Toth R, Chappelow J, et al. Concurrent segmentation of the prostate on MRI and CT via linked statistical shape models for radiotherapy planning. Medical Physics. 2012;39(4):2214–2228. doi: 10.1118/1.3696376.

4. Feng QJ, Foskey M, Chen WF, et al. Segmenting CT prostate images using population and patient-specific statistics for radiotherapy. Medical Physics. 2010;37(8):4121–4132. doi: 10.1118/1.3464799.

5. Ghosh P, Mitchell M. Prostate segmentation on pelvic CT images using a genetic algorithm. Medical Imaging 2008: Image Processing, Pts 1–3. 2008;6914:91442–91442.

6. Li W, Liao S, Feng QJ, et al. Learning image context for segmentation of the prostate in CT-guided radiotherapy. Physics in Medicine and Biology. 2012;57(5):1283–1308. doi: 10.1088/0031-9155/57/5/1283.

7. Liao S, Gao YZ, Lian J, et al. Sparse patch-based label propagation for accurate prostate localization in CT images. IEEE Transactions on Medical Imaging. 2013;32(2):419–434. doi: 10.1109/TMI.2012.2230018.

8. Chen SQ, Lovelock DM, Radke RJ. Segmenting the prostate and rectum in CT imagery using anatomical constraints. Medical Image Analysis. 2011;15(1):1–11. doi: 10.1016/j.media.2010.06.004.

9. Chui H, Rangarajan A. A new point matching algorithm for non-rigid registration. Computer Vision and Image Understanding. 2002;89(2–3):114–141.

10. Yang XF, Akbari H, Halig L, et al. 3D non-rigid registration using surface and local salient features for transrectal ultrasound image-guided prostate biopsy. Medical Imaging 2011: Visualization, Image-Guided Procedures, and Modeling. 2011;7964. doi: 10.1117/12.878153.

11. Yuille AL, Kosowsky JJ. Statistical physics algorithms that converge. Neural Computation. 1994;6(3):341–356.

12. Lee JS, Chung BH. Transrectal ultrasound versus magnetic resonance imaging in the estimation of prostate volume as compared with radical prostatectomy specimens. Urologia Internationalis. 2007;78(4):323–327. doi: 10.1159/000100836.

13. Weiss BE, Wein AJ, Malkowicz SB, et al. Comparison of prostate volume measured by transrectal ultrasound and magnetic resonance imaging: Is transrectal ultrasound suitable to determine which patients should undergo active surveillance? Urologic Oncology: Seminars and Original Investigations. 2013;31(8):1436–1440. doi: 10.1016/j.urolonc.2012.03.002.

14. Cheng G, Yang X, Wu N, et al. Multi-atlas-based segmentation of the parotid glands of MR images in patients following head-and-neck cancer radiotherapy. Proc. SPIE. 8670:86702Q. doi: 10.1117/12.2007783.

15. Dean CJ, Sykes JR, Cooper RA, et al. An evaluation of four CT-MRI co-registration techniques for radiotherapy treatment planning of prone rectal cancer patients. British Journal of Radiology. 2012;85(1009):61–68. doi: 10.1259/bjr/11855927.

16. Betgen A, Pos F, Schneider C, et al. Automatic registration of the prostate on MRI scans to CT scans for radiotherapy target delineation. Radiotherapy and Oncology. 2007;84:S119–S119.

17. Levy D, Schreibmann E, Thorndyke B, et al. Registration of prostate MRI/MRSI and CT studies using the narrow band approach. Medical Physics. 2005;32(6):1895–1895.

18. Yang X, Fei B. 3D prostate segmentation of ultrasound images combining longitudinal image registration and machine learning. Proc. SPIE. 2012;8316:83162O. doi: 10.1117/12.912188.

19. Yang X, Schuster D, Master V, et al. Automatic 3D segmentation of ultrasound images using atlas registration and statistical texture prior. Proc. SPIE. 2011;7964:796432. doi: 10.1117/12.877888.

20. Yan P, Xu S, Turkbey B, et al. Adaptively learning local shape statistics for prostate segmentation in ultrasound. IEEE Trans. Biomed. Eng. 2011;58(3):633–641. doi: 10.1109/TBME.2010.2094195.

21. Zhan Y, Shen D. Deformable segmentation of 3-D ultrasound prostate images using statistical texture matching method. IEEE Trans. Med. Imaging. 2006;25(3):256–272. doi: 10.1109/TMI.2005.862744.

22. Noble JA, Boukerroui D. Ultrasound image segmentation: a survey. IEEE Trans. Med. Imaging. 2006;25:987–1010. doi: 10.1109/tmi.2006.877092.

23. Shen D, Zhan Y, Davatzikos C. Segmentation of prostate boundaries from ultrasound images using statistical shape model. IEEE Trans. Med. Imaging. 2003;22(4):539–551. doi: 10.1109/TMI.2003.809057.