Abstract

Neurobiological memory models assume memory traces are stored in neocortex, with pointers in the hippocampus, and are then reactivated during retrieval, yielding the experience of remembering. Whereas most prior neuroimaging studies on reactivation have focused on the reactivation of sets or categories of items, the current study sought to identify cortical patterns pertaining to memory for individual scenes. During encoding, participants viewed pictures of scenes paired with matching labels (e.g., “barn,” “tunnel”), and, during retrieval, they recalled the scenes in response to the labels and rated the quality of their visual memories. Using representational similarity analyses, we interrogated the similarity between activation patterns during encoding and retrieval both at the item level (individual scenes) and the set level (all scenes). The study yielded four main findings. First, in occipitotemporal cortex, memory success increased with encoding-retrieval similarity (ERS) at the item level but not at the set level, indicating the reactivation of individual scenes. Second, in ventrolateral pFC, memory increased with ERS for both item and set levels, indicating the recapitulation of memory processes that benefit encoding and retrieval of all scenes. Third, in retrosplenial/posterior cingulate cortex, ERS was sensitive to individual scene information irrespective of memory success, suggesting automatic activation of scene contexts. Finally, consistent with neurobiological models, hippocampal activity during encoding predicted the subsequent reactivation of individual items. These findings show the promise of studying memory with greater specificity by isolating individual mnemonic representations and determining their relationship to factors like the detail with which past events are remembered.

INTRODUCTION

Episodic memory allows us to remember past events with a striking level of detail and specificity. Even for types of events we have experienced hundreds of times (e.g., parking our car), we can often vividly remember an individual event within the series (e.g., today’s parking location). How does the brain accomplish this amazing feat? According to current neurobiological models (e.g., Norman & O’Reilly, 2003; Alvarez & Squire, 1994), during encoding, memory traces are stored in various cortical regions and pointers to these cortical locations are stored in the hippocampus. During retrieval, the same cortical regions are reactivated, leading to the conscious experience of remembering the original event. In typical neuroimaging designs investigating reactivation, sets of items are presented in two different contexts during encoding (e.g., words paired with pictures or with sounds) but without these contexts during retrieval (e.g., words alone), so that differences in retrieval activity can be attributed to the reactivation of the encoding contexts. Although the standard reactivation paradigm has generated many important findings (for reviews, see Danker & Anderson, 2010; Rugg, Johnson, Park, & Uncapher, 2008), it has one limitation: It focuses on the reinstatement of sets of items (e.g., words paired with pictures) rather than on the reinstatement of individual items (e.g., a particular word–picture pair). To address this limitation, we used a novel paradigm that allowed us to measure cortical reactivation not only at the set level but also at the item level.

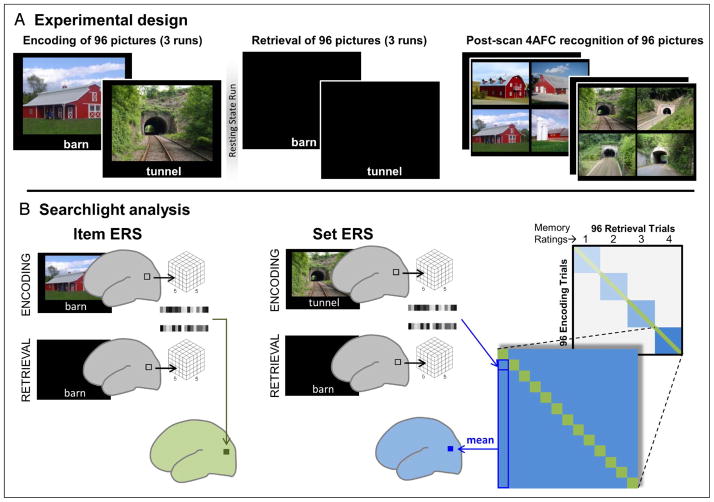

The paradigm is depicted in Figure 1A. During encoding, participants encoded 96 pictures of scenes, each paired with a descriptive verbal label (e.g., barn, tunnel), and during retrieval, they recalled the pictures in response to the labels and rated the quality of their memories on a 4-point scale. A diverse set of complex scenes was used so that scene retrieval would necessitate selecting details of a specific image from among numerous overlapping images. The validity of in-scan memory ratings was confirmed by a forced-choice recognition test after the scan, in which participants selected initially encoded scenes from among three highly similar distractors. Using representational similarity analysis, reactivation was measured as the similarity between distributed activation patterns during encoding and during retrieval (encoding-retrieval similarity [ERS]). ERS was calculated for individual items (e.g., ERS between barn during encoding and barn during retrieval; Figure 1B) and for all items in the same set, where “set” refers to all pictures with the same in-scan memory rating (e.g., ERS between tunnel during encoding and barn during retrieval). Whereas item-ERS measures the reactivation of individual pictures, set-ERS measures general reactivation of picture information. ERS results were analyzed with a 4 (in-scan memory rating: 1–4) × 2 (ERS level: item vs. set) factorial design and confirmed with a regression analysis in which the impact of ERS on memory was measured after controlling for the effects of univariate activity.

Figure 1.

(A) Experimental design. During encoding, scene pictures were presented with a descriptive label while participants judged image composition. At retrieval, descriptive labels for previously encoded scenes were presented. Participants rated how detailed their memory was for the corresponding picture on a 4-point scale. After the scan, all scenes from encoding were presented in a forced-choice recognition task that included three similar scene exemplars. Participants chose the specific image they believed was presented at encoding and then rated their confidence on a 4-point scale. (B) Overview of searchlight analysis. For item-ERS, ERS was calculated between each retrieval trial and that item’s corresponding encoding trial to produce an ERS volume for each trial. For set-ERS, ERS was calculated in the same way between each retrieval trial and all encoding trials sharing the same subsequent memory rating. These ERS values were then averaged at the voxel level to produce a single set-ERS volume for each retrieval trial that contained the mean similarity between that trial and the other encoding trials of the same memory set.

The study had four main goals. Our first goal was to investigate the neural mechanisms whereby the reactivation of individual items leads to successful memory retrieval. Using the paradigm in Figure 1, we searched for regions where ERS increased as a function of memory ratings (1–4) to a greater extent for item-ERS than for set-ERS, that is, a Memory × ERS level interaction. In addition to the aforementioned neurobiological models, such an interaction would be consistent with the encoding specificity (Tulving & Thomson, 1973) and transfer-appropriate processing (Morris, Bransford, & Franks, 1977) principles, which assume that memory success depends on ERS. In a previous fMRI study that compared patterns of brain activity between encoding and picture recognition (Ritchey, Wing, LaBar, & Cabeza, 2013), we found a Memory × ERS level interaction in bilateral occipitotemporal cortex (OTC). This finding is consistent with evidence that OTC is sensitive to the specific contents of visual scenes (MacEvoy & Epstein, 2009, 2011). However, because that study used a recognition test at retrieval, this finding may have been related either to memory reactivation or to the reprocessing of scenes during test. To better isolate neural patterns related to memory reactivation, the current study employed a cued recall test in which only verbal labels are presented during retrieval. Additionally, we used a parametric measure of recall success to index the detail with which encoded scenes were recalled, which improved our ability to assess fine-grained changes in memory vividness relative to the dichotomous measure of memory success used in our previous study. Moreover, to better localize the ERS effects, here we used a voxel-wise searchlight procedure (Figure 1B).

Our second goal was to identify regions where reactivation predicted memory success but was not sensitive to differences among pictures. In our paradigm, these are regions where ERS increases as a function of memory ratings (1–4) similarly for item-ERS and set-ERS, that is, a main effect of memory on ERS. In our previous study (Ritchey et al., 2013), we found a main effect of memory on ERS in several regions including inferior parietal cortex and ventrolateral pFC (VLPFC). These regions are likely to mediate cognitive processes that enhance scene memory when applied in a similar way during encoding and retrieval (i.e., ERS), such as control mechanisms guiding encoding and retrieval operations. Alternatively, such regions could mediate processes associated with the perception of scene pictures, which in our previous study were presented both during encoding and retrieval. Given that in the current study only verbal labels are presented during retrieval, a main effect of memory in regions associated with memory success more broadly would be more consistent with cognitive control than perceptual operations.

Our third goal was to identify regions sensitive to individual items irrespective of memory success. In our paradigm, these are regions where item-ERS is greater than set-ERS regardless of memory ratings, that is, a main effect of ERS level. Early visual cortex showed this pattern most strongly in our previous recognition study (Ritchey et al., 2013), consistent with the overlap of visual features between encoding and retrieval. In the present cued recall study, however, because the scenes are not re-presented during test, it is possible that item-level overlap will be observed only for successful memory trials, thus resulting in no memory-independent item-ERS effects. Alternatively, because the retrieval trials are cued with scene labels, it is possible that item-level overlap could be triggered by the automatic generation of contextual information relating to scene concepts (contextual information for a barn, a tunnel, etc.). Whereas the anterior temporal cortex is known to subserve processing of semantic or conceptual information (Peelen & Caramazza, 2012; Patterson, Nestor, & Rogers, 2007), other research has identified a set of regions, including the retrosplenial cortex (RSC) and parahippocampal cortex (PHC), as integral to the processing of contextual associations (Ranganath & Ritchey, 2012; Kveraga et al., 2011; Bar, 2004; Bar & Aminoff, 2003). Given that the retrieval cues that we used are likely to directly elicit information about a broader semantic context (whether or not they lead to recovery of episodic details of the specific image from encoding), areas generally involved in semantic or contextual associations might exhibit conceptually driven item-specificity in the present design.

Finally, our fourth goal was to investigate the core assumption of neurobiological memory models (Norman & O’Reilly, 2003; Alvarez & Squire, 1994) that successful encoding involves not only the storage of distributed cortical traces but also the storage of pointers to these traces in the hippocampus. This assumption implies that the reactivation of cortical memory traces during retrieval, and hence, the item-level similarity between encoding and retrieval cortical activation, depends on the engagement of the hippocampus during encoding. Accordingly, we tested the prediction that hippocampal activity during encoding would track memory-related cortical ERS, particularly for item-ERS. To obtain estimates of item-ERS (relative to set-ERS) for each individual trial, we standardized (z-scored) item-ERS in relation to the distribution of set-ERS for each scene.

In summary, by exploring how the reinstatement of distributed patterns varies as a function of ERS level and memory rating, we sought to clarify how different brain regions are involved in both the specificity and degree of detail that attend the retrieval of visual memories. The interaction of memory and ERS level should identify regions in which item-specific information is successfully recovered, with OTC emerging as a prime candidate given the findings of our previous study and its general role in processing higher-level visual information. By contrast, the main effect of Memory should identify regions that support memory success in general, whereas the main effect of ERS level might identify regions that are sensitive to semantic or contextual similarities between a scene and its label. Combined, this approach will enable us to map out the disparate processes contributing to successful memory reinstatement.

METHODS

Experimental Design

The experiment was divided into three phases: encoding, retrieval, and postscan recognition (see Figure 1A). Before beginning the scan, participants completed a short practice session so that they were familiar with the instructions at each phase of the study.

The scan session contained three encoding runs, a resting state run, and three retrieval runs. Stimuli consisted of 96 scene images, which were presented for 4 sec each across the three encoding runs (32 images per run, order randomized within run). At encoding, each scene was accompanied by a short descriptive label that appeared below the image (e.g., “island” or “concert hall”). Participants were asked to rate the representativeness of the image for the given label (e.g., “is this specific picture a good picture of an island”). Encoding trials were separated by an active baseline interval of 8 sec, during which participants made even/odd judgments in response to a series of digits ranging from 1 to 9.

Retrieval scans were identical in format to encoding scans, with the exception of scene presentation. On each retrieval trial, the descriptive label accompanying a previously presented scene was shown, and participants were asked to recall the corresponding image from encoding in as much detail as possible. Participants then rated the amount of detail with which they could remember the specific picture (1 = least amount of detail, 4 = highly detailed memory). Participants were instructed to distribute their responses across all four memory ratings.

Immediately following the scan session, participants completed a four-alternative forced-choice recognition test that covered all 96 pictures presented during encoding. During the first phase of each recognition trial, the target picture and three distractor pictures for the same label were presented in different quadrants of the computer screen. Participants selected the picture they believed they saw in the scanner. During the second phase of the trial, participants used a 4-point scale to rate how confident they were in the preceding recognition decision (1 = guess, 4 = very confident).

Participants

Twenty-two participants completed the experiment. One participant who lacked functional data for one encoding run because of a technical error was excluded from analysis. All analyses were performed with the remaining 21 participants (12 women, age range = 18–30 years, M = 23.5, SD = 3.0). Participants were healthy, right-handed, fluent English speakers with normal or corrected-to-normal vision. Written informed consent was obtained from each participant in accordance with a protocol approved by the Duke University institutional review board.

fMRI Acquisition and Preprocessing

Imaging data were collected using a 3T GE scanner. Following a localizer scan, functional images were acquired using a SENSE spiral-in sequence (repetition time = 2000 msec, echo time = 30 msec, field of view = 24 cm, 34 oblique slices with voxel dimensions of 3.75 × 3.75 × 3.8 mm). Functional data were collected during six task runs of equal length, with a resting state scan following the third run. Stimuli were projected onto a mirror at the back of the scanner bore, and responses were recorded using a four-button fiber-optic response box. Scanner noise was reduced with ear plugs, and head motion was minimized with foam pads. A high-resolution anatomical image (96 axial slices parallel to the AC–PC plane with voxel dimensions of 0.9 × 0.9 × 1.9 mm) was collected following functional scanning.

Preprocessing and data analysis were performed using SPM5 (Wellcome Department of Cognitive Neurology, London, UK) and custom MATLAB (The MathWorks, Natick, MA) scripts. After discarding the initial six volumes of each run to allow for scanner stabilization, images were corrected for slice acquisition timing and head motion and normalized to the Montreal Neurological Institute template. Searchlight analyses described below were performed on unsmoothed data.

fMRI Data Analysis

For each participant, a general linear model was constructed that included separate regressors for each trial (Rissman, Gazzaley, & D’Esposito, 2004), along with regressors corresponding to head motion and scan session. Each trial was modeled as a 0-duration event and was convolved with a canonical hemodynamic response function. The resulting beta estimates for each trial were then used for subsequent multivariate searchlight analyses.
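The single-trial model described above can be sketched in Python. This is a minimal illustration, not the authors’ SPM5 code: it assumes a double-gamma HRF with SPM-like default shape parameters and the 2-sec repetition time reported above, and it omits the motion and session regressors for brevity. The function names `canonical_hrf`, `single_trial_design`, and `trial_betas` are hypothetical.

```python
import math
import numpy as np


def canonical_hrf(dt, length=32.0):
    """Double-gamma HRF sampled every dt seconds.

    Assumed SPM-like shape: gamma(6) response minus gamma(16)/6 undershoot,
    normalized to unit sum.
    """
    t = np.arange(0.0, length, dt)

    def gamma_pdf(x, shape):
        # Unit-scale gamma density: x^(k-1) * exp(-x) / Gamma(k)
        return x ** (shape - 1) * np.exp(-x) / math.gamma(shape)

    hrf = gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0
    return hrf / hrf.sum()


def single_trial_design(onsets, n_scans, tr=2.0, dt=0.1):
    """One column per trial (0-duration stick convolved with the HRF),
    plus a final constant column for the run mean."""
    n_hi = int(round(n_scans * tr / dt))                 # high-resolution time grid
    scan_idx = np.round(np.arange(n_scans) * tr / dt).astype(int)
    hrf = canonical_hrf(dt)
    X = np.ones((n_scans, len(onsets) + 1))              # last column stays constant
    for j, onset in enumerate(onsets):
        stick = np.zeros(n_hi)
        stick[int(round(onset / dt))] = 1.0              # 0-duration event
        X[:, j] = np.convolve(stick, hrf)[:n_hi][scan_idx]
    return X


def trial_betas(X, Y):
    """Least-squares betas (trials x voxels), with the constant dropped."""
    betas, *_ = np.linalg.lstsq(X, Y, rcond=None)
    return betas[:-1]
```

With 4-sec trials separated by 8-sec baseline intervals, onsets fall roughly every 12 sec; each column of `X` then carries one trial, so the fit returns one beta per trial per voxel, which is the input the searchlight analyses need.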

Searchlight Analysis Overview

As illustrated by Figure 1 (bottom), a whole-brain searchlight approach (Kriegeskorte, Mur, & Bandettini, 2008; Kriegeskorte, Goebel, & Bandettini, 2006) was used to calculate ERS at the item level, where encoding and retrieval trials involved the same item (e.g., a picture of a barn at encoding, the label “barn” at retrieval), and the set level, where encoding and retrieval trials belonged to the same set (pictures with the same memory rating) but involved different items (e.g., a picture of a tunnel at encoding, the label “barn” at retrieval).

Item-ERS and set-ERS were calculated for each voxel using a searchlight procedure (Figure 1B). For item-ERS, a 5 × 5 × 5 voxel cube around each voxel was extracted and vectorized (searchlight results obtained with a smaller 3 × 3 × 3 voxel cube were similar to those reported here). Then, the encoding and retrieval vectors were correlated and the resulting correlation value (Fisher-transformed Pearson’s r, our ERS measure) was placed in the original voxel location. This process was repeated for all voxels in the brain to produce a single similarity volume for a given pair. In this fashion, a similarity volume was ultimately generated for every item-level pair, excluding those pairs where no response was made during retrieval.
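The item-level searchlight step can be illustrated as follows. This is a sketch under stated assumptions: patterns are 3-D arrays of single-trial betas, the cube is clipped at the volume edge and restricted to in-mask voxels, and correlations are clipped just below ±1 before the Fisher transform so that identical patterns do not yield infinite values. The name `searchlight_ers` is hypothetical.

```python
import numpy as np


def searchlight_ers(enc, ret, mask, radius=2):
    """Item-level ERS map for one encoding/retrieval pair.

    enc, ret : 3-D arrays of single-trial betas (same shape).
    mask     : boolean 3-D brain mask.
    radius=2 gives the 5 x 5 x 5 cube; radius=1 gives 3 x 3 x 3.
    Each in-mask voxel receives the Fisher-z Pearson correlation computed
    over the in-mask voxels of the cube centred on it.
    """
    out = np.full(enc.shape, np.nan)
    for x, y, z in zip(*np.nonzero(mask)):
        cube = tuple(slice(max(c - radius, 0), c + radius + 1) for c in (x, y, z))
        inside = mask[cube]
        a, b = enc[cube][inside], ret[cube][inside]
        if a.size < 3 or a.std() == 0 or b.std() == 0:
            continue  # correlation undefined; leave NaN
        r = np.corrcoef(a, b)[0, 1]
        out[x, y, z] = np.arctanh(np.clip(r, -0.999999, 0.999999))
    return out
```

Calling this once per item-level pair (encoding beta map for “barn,” retrieval beta map for “barn”) yields one similarity volume per retrieval trial.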

For the set-ERS, the procedure was similar except that the ERS value at each voxel was the average of many set-level pairs. Specifically, for each retrieval trial (e.g., barn), ERS was calculated separately for all set-level encoding trials (tunnel–barn, bowling alley–barn, etc.) that yielded the same memory rating at retrieval (e.g., 4, or highly detailed), and these different ERS values were averaged for each voxel to create the whole-brain similarity volume for that retrieval trial. The calculation of the average set-ERS was restricted to trials with the same memory rating (1, 2, 3, or 4) so that the item-level and set-level comparisons would be similarly matched on factors like top–down attention and differ only in the content of image memory.
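Within a single searchlight, item-ERS and set-ERS can both be read off one encoding-by-retrieval similarity matrix. The sketch below assumes that row i of the encoding and retrieval arrays refers to the same scene and that trials without a retrieval response have already been removed; it also assumes each rating contains at least two trials (otherwise the set-level mean is undefined). The names `ers_matrix` and `item_and_set_ers` are hypothetical.

```python
import numpy as np


def ers_matrix(enc, ret):
    """Fisher-z Pearson correlations within one searchlight.

    enc, ret : (n_trials, n_voxels) patterns; row i of each is the same item.
    Returns S with S[j, i] = ERS(encoding trial j, retrieval trial i).
    """
    ez = (enc - enc.mean(axis=1, keepdims=True)) / enc.std(axis=1, keepdims=True)
    rz = (ret - ret.mean(axis=1, keepdims=True)) / ret.std(axis=1, keepdims=True)
    r = ez @ rz.T / enc.shape[1]          # Pearson r for every encoding/retrieval pair
    return np.arctanh(np.clip(r, -0.999999, 0.999999))


def item_and_set_ers(enc, ret, ratings):
    """Item-ERS (diagonal of S) and set-ERS (mean over other items with the same rating)."""
    ratings = np.asarray(ratings)
    S = ers_matrix(enc, ret)
    item = np.diag(S).copy()
    set_mean = np.empty(len(ratings))
    for i, rating in enumerate(ratings):
        same = ratings == rating
        same[i] = False                   # drop the item-level pair itself
        set_mean[i] = S[same, i].mean()
    return item, set_mean
```

Restricting the set-level average to the same rating mirrors the matching rationale given above: item-level and set-level pairs then differ in image content rather than in overall memory strength.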

After ERS volumes had been calculated for each retrieval trial, fixed-effect contrasts were generated separately for item-ERS and set-ERS by averaging together all ERS volumes within each of the four memory ratings, yielding eight mean ERS images per subject (four set-level blue squares plus four item-level green diagonals in Figure 1B). Because decisions about retrieval ratings may have differed across participants, the final contrasts of interest were also calculated within subjects before being submitted to random-effects analyses. This involved a weighted combination of the eight mean images to produce contrasts for the interaction of increasing memory with ERS level (item-ERS: −1.5 −0.5 0.5 1.5; set-ERS: 1.5 0.5 −0.5 −1.5), the main effect of increasing memory (item-ERS: −1.5 −0.5 0.5 1.5; set-ERS: −1.5 −0.5 0.5 1.5), and the main effect of item-ERS greater than set-ERS (item-ERS: 1 1 1 1; set-ERS: −1 −1 −1 −1). Random effects were then examined by submitting each of these three contrasts to a separate one-sample t test (reported effects at p < .001, cluster extent: 10; see Table 1 and Figure 3, top). Group contrasts additionally masked out white matter/CSF (SPM gray matter template values < 0.1), and each main effect map was also exclusively masked with the interaction and opposite main effect contrasts, both thresholded at a liberal value of p < .05. To visualize change in ERS across memory ratings, similarity volumes were averaged across subjects and within rating level, yielding separate plots for item-ERS and set-ERS (see Figure 3, bottom).
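The contrast weights listed above can be applied to the eight mean ERS images as a single weighted sum per effect. The sketch below assumes the images are stacked as an array of shape (2, 4, ...), with item-ERS first and ratings 1–4 in order; the gray-matter masking, exclusive masking, and cluster thresholding are not reproduced. The names `subject_contrasts` and `one_sample_t` are hypothetical.

```python
import numpy as np

LINEAR = np.array([-1.5, -0.5, 0.5, 1.5])  # ratings 1-4
# Weight order: item-ERS ratings 1-4, then set-ERS ratings 1-4.
CONTRASTS = {
    "memory_x_level": np.concatenate([LINEAR, -LINEAR]),
    "memory_main": np.concatenate([LINEAR, LINEAR]),
    "level_main": np.concatenate([np.ones(4), -np.ones(4)]),
}


def subject_contrasts(mean_ers):
    """mean_ers: (2, 4, *volume) mean ERS images, [item, set] x ratings 1-4.
    Returns one contrast image per effect for this subject."""
    mean_ers = np.asarray(mean_ers, dtype=float)
    flat = mean_ers.reshape(8, -1)  # rows: item r1-r4, then set r1-r4
    return {name: (w @ flat).reshape(mean_ers.shape[2:])
            for name, w in CONTRASTS.items()}


def one_sample_t(contrast_images):
    """Voxelwise one-sample t across subjects (subjects on axis 0)."""
    c = np.asarray(contrast_images, dtype=float)
    return c.mean(axis=0) / (c.std(axis=0, ddof=1) / np.sqrt(c.shape[0]))
```

For instance, a voxel whose item-ERS rises from 0.1 to 0.4 across ratings while set-ERS stays flat at 0.1 receives a positive interaction value (0.5) and an identical memory main-effect value, which is why the main-effect maps were exclusively masked with the interaction.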

Table 1.

Searchlight Similarity Effects

| Region | Hem | BA | x | y | z | Vox | t Test | Regr |
|---|---|---|---|---|---|---|---|---|
| Memory × ERS Level Interaction | | | | | | | | |
| OTC | L | 37 | −56 | −53 | −8 | 16 | 4.64 | 6.96 |
| | R | 19 | 41 | −86 | 19 | 39 | 6.81 | 3.84 |
| Supplementary eye field | L | 6 | −4 | 19 | 68 | 20 | 5.21 | 3.68 |
| Main Effect of Memory (Linear Memory Increase) | | | | | | | | |
| VLPFC | L | 44/46 | −41 | 15 | 23 | 67 | 5.13 | 6.08 |
| | R | 44/9 | 41 | 4 | 34 | 224 | 5.66 | 5.11 |
| Ventral parietal cortex | R | 39 | 30 | −56 | 30 | 54 | 4.79 | 4.68 |
| PHC | L | | −30 | −41 | −4 | 23 | 5.36 | 4.43 |
| Main Effect of ERS Level (Item-ERS > Set-ERS) | | | | | | | | |
| RSC/PCC | L | 30/31 | −8 | −45 | 34 | 14 | 4.47 | NA |

Coordinates in MNI; cluster maxima showing strongest effect after controlling for univariate activity (interaction and main effect of Memory). NA = not applicable because the regression analysis used memory as a dependent variable.

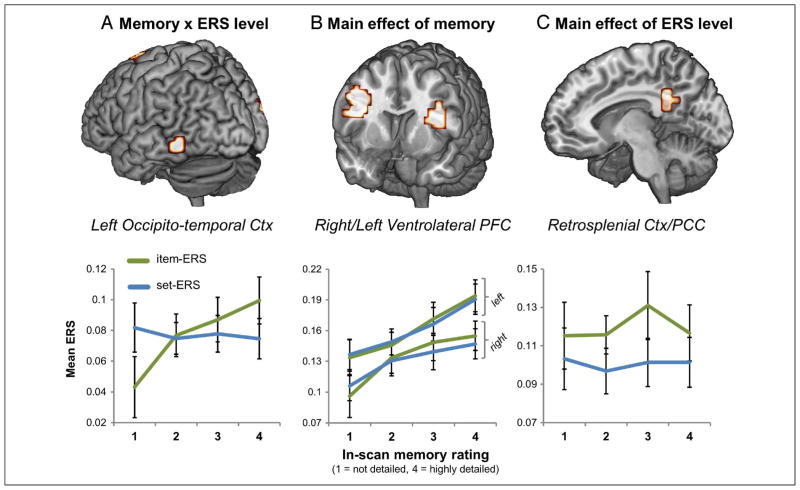

Figure 3.

Regions showing main findings of the factorial design. (A) Left OTC showed a Memory × ERS level interaction with a stronger impact on memory ratings for item-ERS than set-ERS. (B) Bilateral VLPFC showed a main effect of memory where both item-ERS and set-ERS had a similar impact on memory ratings. (C) RSC/PCC showed a main effect of ERS level; in this region item-ERS was greater than set-ERS, but they did not vary with memory scores. Line graphs below each brain image plot the mean item/set ERS value across memory ratings for the corresponding cluster and illustrate the specific nature of the effect within the cluster.

Trialwise Item-specific ERS Estimates (Standardized Item-ERS)

In addition to calculating the interaction between memory and ERS level within each subject, several pattern analyses also required estimates of ERS that reflected the difference between item-ERS and set-ERS at each trial. This was done by transforming the item-ERS value for a given trial into a z score based on that trial’s set-ERS distribution (standardized item-ERS), thus producing a series of images where positive values signaled greater similarity for item-level pairs. For example, if a voxel in the standardized item-ERS image for the “barn” trial had a z score of 1, the ERS for barn–barn was 1 standard deviation above the mean of the ERS distribution of all set-level pairs for “barn” (tunnel–barn, bowling alley–barn, ocean wave–barn, etc.). This series of standardized item-ERS images was used in the two analyses incorporating trialwise univariate activity estimates (single-trial betas) described in the following sections.
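The standardization can be written in a few lines. The sketch assumes the set-level ERS values for one retrieval trial are stacked along the first axis and uses the sample standard deviation (ddof=1); the text does not specify which estimator was used, so that choice is an assumption. The name `standardized_item_ers` is hypothetical.

```python
import numpy as np


def standardized_item_ers(item_ers, set_ers_dist, ddof=1):
    """z score of item-ERS relative to the trial's own set-level distribution.

    item_ers     : scalar or (...) array, ERS for the item-level pair (e.g., barn-barn).
    set_ers_dist : (n_pairs, ...) array, ERS for every set-level pair of that
                   retrieval trial (tunnel-barn, bowling alley-barn, ...).
    ddof=1 (sample SD) is an assumption, not taken from the original methods.
    """
    set_ers_dist = np.asarray(set_ers_dist, dtype=float)
    mu = set_ers_dist.mean(axis=0)
    sd = set_ers_dist.std(axis=0, ddof=ddof)
    return (np.asarray(item_ers, dtype=float) - mu) / sd
```

With set-level values of 1, 2, and 3 and an item-level value of 3, this returns 1.0, matching the “1 standard deviation above the mean” reading in the example above.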

Controlling for Differences in Univariate Activity (Voxelwise Regression)

Because ERS was calculated using a Pearson’s r, the results of the ERS contrasts can be assumed to be largely independent of the absolute magnitude of univariate activity during encoding or retrieval (i.e., the mean of the input vectors for each correlation value). However, activation magnitude and multivariate patterns might not be entirely orthogonal, given that signal-to-noise and other factors may increase with univariate activity. To clarify whether the ERS effects shown here provide new information about multivariate patterns, beyond what would be predicted by univariate activation alone, we conducted a confirmatory within-subject regression at peak voxels in regions showing significant memory-related similarity effects. In this regression analysis, memory ratings were the dependent variable and there were four independent variables (IVs): (1) ERS, (2) univariate encoding activity, (3) univariate retrieval activity, and (4) Encoding × Retrieval (the univariate activity interaction). A test on the parameter estimates corresponding to the ERS regressor (see Table 1, rightmost column) indicated whether similarity measures uniquely predicted behavioral memory while accounting for the effects of univariate activity. To confirm the Memory × ERS level interaction, we used the standardized item-ERS estimates described above, which capture the difference between item-ERS and set-ERS on a single-trial basis. To confirm the main effect of memory, the ERS independent variable in the regression was the average of item-ERS and set-ERS values.
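A per-subject version of this regression can be sketched with ordinary least squares. The sketch treats the 1–4 memory ratings as a continuous outcome and does not rescale the predictors; both are assumptions, as is the idea that the ERS coefficient from each subject would then be tested at the group level (for example, with a one-sample t test). The name `ers_regression` is hypothetical.

```python
import numpy as np


def ers_regression(memory, ers, enc_act, ret_act):
    """Within-subject OLS: memory ~ ERS + encoding + retrieval + encoding x retrieval.

    All inputs are per-trial vectors from one peak voxel.
    Returns coefficients [intercept, ERS, encoding, retrieval, enc x ret].
    """
    memory, ers, enc_act, ret_act = (
        np.asarray(v, dtype=float) for v in (memory, ers, enc_act, ret_act)
    )
    X = np.column_stack([
        np.ones_like(ers),    # intercept
        ers,                  # standardized item-ERS, or mean of item- and set-ERS
        enc_act,              # univariate encoding activity
        ret_act,              # univariate retrieval activity
        enc_act * ret_act,    # univariate Encoding x Retrieval interaction
    ])
    beta, *_ = np.linalg.lstsq(X, memory, rcond=None)
    return beta
```

Because ERS enters alongside the three univariate terms, its coefficient reflects only the variance in memory ratings that the univariate measures do not already explain.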

Identifying Encoding Activity that Predicted ERS

The standardized item-ERS values were also used to identify univariate activity during encoding that predicted memory-related item-ERS. For this analysis, we used the Memory × ERS level interaction contrast to identify the functional ROI (left OTC) where ERS had the greatest predictive weight after accounting for univariate activity. Within this ROI, the mean standardized item-ERS value was extracted for every volume in the series. These values were correlated with the univariate activity at each voxel in the corresponding encoding trials (single-trial betas, smoothed at 8 mm) across all trials, producing a single image capturing the trial-by-trial relationship between encoding activity and item-ERS. Each participant’s cross-trial correlation volume was then submitted to a one-sample t test to evaluate group effects (p < .001, cluster extent: 10; see Table 2). Finally, to assess how encoding activity in these regions related directly to memory scores, a standard univariate general linear model was run on the encoding data, with separate regressors for each of the four subsequent retrieval ratings (along with regressors for missing responses, motion parameters, and run means). Within the two regions identified in the whole-brain correlation analysis between encoding activity and item-ERS, single-subject contrast images of encoding activity for the four retrieval ratings were then examined.
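The cross-trial correlation can be computed for all voxels at once. The sketch below assumes the ROI series and the encoding betas are already aligned trial by trial, with voxels flattened into columns; whether the r values were Fisher-transformed before the group t test is not stated, so that step is left out. The name `cross_trial_correlation` is hypothetical.

```python
import numpy as np


def cross_trial_correlation(roi_series, enc_betas):
    """Pearson r, computed across trials, between one ROI series and every voxel.

    roi_series : (n_trials,) mean standardized item-ERS in the left OTC ROI.
    enc_betas  : (n_trials, n_voxels) single-trial encoding betas.
    Returns an (n_voxels,) array of correlations (one correlation image).
    """
    x = np.asarray(roi_series, dtype=float)
    Y = np.asarray(enc_betas, dtype=float)
    xc = x - x.mean()                 # centre the ROI series
    Yc = Y - Y.mean(axis=0)           # centre each voxel across trials
    num = xc @ Yc
    den = np.sqrt((xc ** 2).sum() * (Yc ** 2).sum(axis=0))
    return num / den
```

Centring both sides and dividing by the product of their norms is the textbook Pearson formula, vectorized so that one matrix product covers every voxel.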

Table 2.

Encoding Activity Correlated with Memory-related Item-ERS

| Region with Subpeaks | Hem | BA | x | y | z | t | Vox | Lin (F) |
|---|---|---|---|---|---|---|---|---|
| Anterior MTL | | | | | | | 17 | |
| Hippocampus | L | | −15 | −8 | −19 | 4.75 | | 10.56 |
| Parahippocampal/Amygdala | L | | −15 | 0 | −30 | 5.03 | | 0.07a |
| Occipital cortex | | | | | | | 30 | |
| Cuneus | L | 18 | −15 | −101 | 4 | 4.53 | | 2.09a |
| Cuneus | L | 18 | −15 | −90 | 11 | 3.99 | | 0.29a |

Subpeak coordinates in MNI; rightmost column displays F value for within-subject contrast of linear relationship between encoding activity and retrieval score.

a = Linear effect not significant.

RESULTS

Behavioral Results

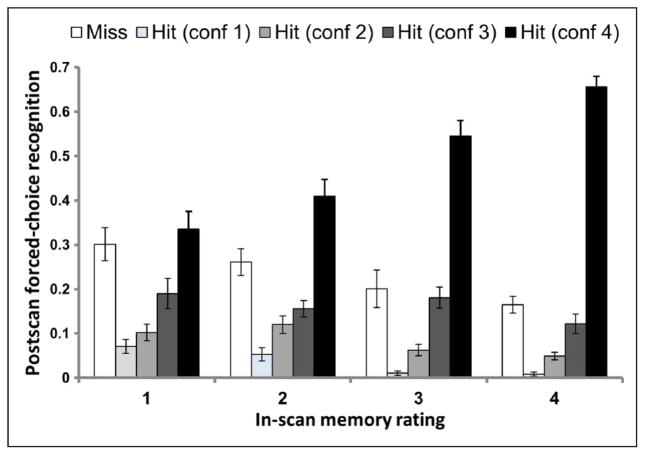

During the retrieval phase, participants distributed their responses across all four memory ratings (mean proportions of responses for ratings 1–4 were 19.9%, 22.6%, 28.8%, and 28.7%, respectively). Overall postscan recognition performance was very good, with a successful recognition rate of 77.4% (SD = 11.0%, chance = 25%). Critically, postscan recognition performance confirmed the validity of the subjective in-scan memory ratings. As illustrated by Figure 2, as in-scan memory ratings increased from 1 to 4, postscan high-confidence hits increased linearly while misses decreased linearly. Confirming these impressions, separate one-way ANOVAs showed a significant linear effect of in-scan ratings on high-confidence hits (linear component: F(1, 20) = 53.24; p < .001) and misses (linear component: F(1, 20) = 16.60; p < .001). Given the strong relationship between in-scan memory ratings and postscan recognition performance, either of these measures could have been used in fMRI analyses; we decided to use in-scan ratings because they are not affected by the particularities of the picture foils used in the postscan forced-choice recognition test.
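The linear component of a one-way repeated-measures ANOVA, with its own contrast-specific error term, reduces to a one-sample t test on each participant’s linear contrast score, with F(1, n − 1) = t². The sketch below assumes one value per participant per rating (for example, the proportion of high-confidence hits) and is not necessarily the software route the authors took; with 21 participants it yields the F(1, 20) degrees of freedom reported above. The name `linear_trend_F` is hypothetical.

```python
import numpy as np


def linear_trend_F(cond_values):
    """F for the within-subject linear trend across the four rating levels.

    cond_values : (n_subjects, 4) per-participant values for ratings 1-4.
    Returns (F, error_df), where F(1, n - 1) equals the squared one-sample t
    of the per-participant linear contrast scores.
    """
    w = np.array([-3.0, -1.0, 1.0, 3.0])              # linear contrast weights
    scores = np.asarray(cond_values, dtype=float) @ w  # one score per participant
    n = scores.size
    t = scores.mean() / (scores.std(ddof=1) / np.sqrt(n))
    return t ** 2, n - 1
```

Any rescaling of the weights (for example, −1.5, −0.5, 0.5, 1.5) scales every score by the same factor and leaves t, and hence F, unchanged.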

Figure 2.

Behavioral performance. Postscan forced-choice recognition performance shown as a function of cued-recall ratings given during the scanned retrieval phase. Increases in both recognition accuracy and confidence are evident at each successive cued-recall memory level.

fMRI Results: Factorial Analysis of Memory and ERS Level

Table 1 lists brain regions showing a Memory × ERS level interaction, in addition to those showing main effects of Memory (across the four memory ratings) and ERS level (item vs. set).

Memory × ERS Level Interaction

Our first goal concerned identifying regions where item-ERS had a stronger impact on memory ratings than set-ERS, that is, regions showing a Memory × ERS level interaction. As described in the Methods section, to control for potential effects of univariate activity on memory ratings, we confirmed the results of the factorial analysis with a regression analysis in which item-ERS competed with univariate measures of encoding activity, retrieval activity, and Encoding × Retrieval interactions. To isolate item-ERS, we standardized (z-scored) the item-ERS measure based on the set-ERS distribution for the corresponding trial. The rightmost column in Table 1 shows the impact of item-ERS on memory ratings after accounting for any effect of univariate activity.

Consistent with our prediction, an interaction between Memory and ERS level was found in OTC (Table 1, top). The OTC region showing the strongest effect on memory performance after accounting for univariate activity was left lateral OTC (BA 37). As illustrated by Figure 3A, item-ERS in this region increased with memory ratings whereas set-ERS did not, a pattern consistent with remembering individual event information. This result extends a similar finding from recognition (Ritchey et al., 2013) to cued recall, confirming that the effect reflects true memory reactivation rather than the processing of scenes during retrieval.

In addition to OTC, a Memory × ERS level interaction (and a significant item-ERS effect in the regression analysis) was also found in the vicinity of the supplementary eye fields. As discussed later, this unexpected finding could reflect ERS in eye movement patterns (Holm & Mäntylä, 2007; Laeng & Teodorescu, 2002).

Main Effect of Memory

The main effect of Memory identified brain regions where both item-ERS and set-ERS predicted memory ratings (see middle panel of Table 1). This main effect of Memory on ERS appeared most strongly in bilateral VLPFC. As illustrated by Figure 3B, ERS in VLPFC increased monotonically as a function of memory for both item-ERS and set-ERS. Although the VLPFC was also found to show a main effect of Memory in our previous recognition study (Ritchey et al., 2013), the current result indicates that this effect is not dependent on the perception of visual scenes during retrieval. Given that the memory effect applies to both item-ERS and set-ERS, this region is likely to mediate processes that enhance the encoding and retrieval of all pictures, such as cognitive control processes (Badre & Wagner, 2007).

Main Effect of ERS Level

The main effect of ERS level identified regions where item-ERS was greater than set-ERS, irrespective of memory (see bottom panel of Table 1). Unlike contrasts involving the memory factor, here we did not need to conduct a regression analysis to control for univariate activation differences across memory levels. A main effect of ERS level was evident in the posterior midline, including RSC and extending into adjacent posterior cingulate cortex (PCC). As illustrated by Figure 3C, item-ERS in this region was greater than set-ERS regardless of memory ratings. The absence of overlap in early sensory cortex is unsurprising given the nature of retrieval trials; however, the finding of a main effect within RSC/PCC is consistent with evidence linking RSC to the automatic activation of contextual associations (Ranganath & Ritchey, 2012; Kveraga et al., 2011; Bar, 2004; Bar & Aminoff, 2003), as considered further in the Discussion section.

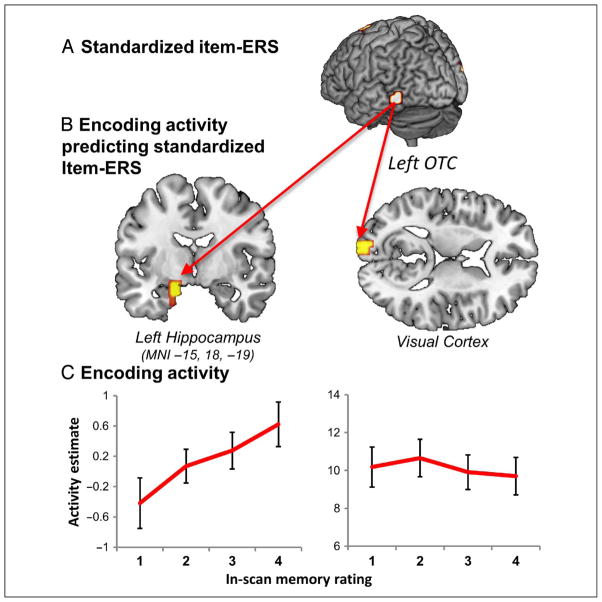

fMRI Results: Effects of Encoding Activity on ERS

Our fourth goal was to identify how the engagement of various regions at encoding related to fluctuations in item-specific reinstatement. Standardized item-ERS in left OTC was found to be correlated with encoding activity in two areas: the left anterior medial-temporal lobe, spanning the hippocampus and amygdala, and visual cortex (Table 2; Figure 4B). In both regions, increased activity during encoding was associated with higher standardized item-ERS in left OTC. The finding that hippocampal activity during encoding predicted item-ERS associated with memory success is consistent with neurobiological models that postulate that the hippocampus stores indexes for the location of cortical memory traces. These models predict that the engagement of the hippocampus during encoding is critical for the reactivation of item-specific memory traces during retrieval, and our finding supports this idea.
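The trialwise analysis described in Figure 4A can be illustrated with a short sketch. We assume here (hypothetically) that, for each participant, encoding activity at one voxel or ROI is correlated across trials with standardized item-ERS in left OTC, that the resulting r is Fisher z-transformed, and that the z values are tested against zero across participants with a one-sample t statistic. This is a common way to run such an analysis, not a description of the exact model used in the study; the data are invented.

```python
import math
from statistics import fmean, stdev


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = fmean(x), fmean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def subject_coupling(encoding_activity, std_item_ers):
    """Fisher z of the across-trial correlation for one participant.

    encoding_activity: one univariate encoding estimate per trial.
    std_item_ers: standardized item-ERS for the same trials, in the same order.
    math.atanh raises ValueError if r is exactly +1 or -1.
    """
    return math.atanh(pearson(encoding_activity, std_item_ers))


def one_sample_t(values):
    """t statistic for H0: mean(values) == 0, with len(values) - 1 df."""
    return fmean(values) / (stdev(values) / math.sqrt(len(values)))


# Hypothetical data: three participants, four trials each (encoding, std item-ERS).
participants = [
    ([0.2, 0.5, 0.9, 1.1], [-0.3, 0.4, 0.8, 1.5]),
    ([0.1, 0.4, 0.3, 0.8], [0.0, 0.9, 0.2, 1.1]),
    ([0.6, 0.2, 0.9, 0.7], [0.5, -0.2, 1.0, 0.3]),
]
zs = [subject_coupling(enc, ers) for enc, ers in participants]
print("group t =", round(one_sample_t(zs), 2))
```

Repeating this computation at every voxel would give a map in which positive t values mark regions where trials with stronger encoding activity tended to show higher standardized item-ERS.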

Figure 4.

Relationship between univariate encoding activity and item-ERS. (A) Trialwise item-ERS in left OTC was correlated with encoding activity across the whole brain to identify regions where encoding activity predicted item-ERS. (B) In two regions, the anterior MTL and early visual cortex, trial-to-trial variability in encoding activity predicted the degree of memory-related item-ERS. (C) These two regions showed different relationships between encoding activity and subsequent memory, with typical subsequent memory effects (i.e., memory-related increases in univariate activity) evident in hippocampus (graph shows effects at the hippocampal peak, MNI −15, −8, −19) but not in early visual cortex (ROI mean; see Table 2 for subpeaks).

We next examined the relationship between univariate encoding activity in MTL and visual cortex and subsequent retrieval ratings using single-subject activity contrasts (Table 2, rightmost column). As illustrated by Figure 4C (left), as hippocampal activity (MNI: −15, −8, −19) increased during encoding, so too did memory ratings during retrieval, F(1, 20) = 10.56; p = .004. By contrast, no such linear relationship was evident across the visual cortex ROI, F(1, 20) = 3.07; p = .095 (Figure 4C, right). Thus, whereas encoding activity in the hippocampus had an impact on both item-ERS and memory ratings, encoding activity in visual cortex correlated with item-ERS but not with memory. These findings provide further support for the idea that hippocampal responses during encoding are important for storing information that is later reactivated during retrieval.
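The linear test reported above can be sketched as a one-degree-of-freedom within-subject contrast. Assuming (hypothetically) that each participant contributes a mean encoding estimate for each memory-rating level, ordered from lowest to highest, each participant's linear-trend score is the weighted sum of those means with centered weights, and testing the scores against zero gives F(1, n − 1) = t². Under this assumption, F(1, 20) would correspond to 21 participants; the number of rating levels and the data below are illustrative only.

```python
import math
from statistics import fmean, stdev


def linear_weights(k):
    """Centered linear contrast weights for k ordered levels (k=4 -> [-1.5, -0.5, 0.5, 1.5])."""
    mid = (k - 1) / 2
    return [i - mid for i in range(k)]


def linear_trend_F(subject_means):
    """One-df linear trend test across ordered conditions (hypothetical layout).

    subject_means: one list per participant of mean activity at each
    memory-rating level, ordered from lowest to highest rating.
    Returns (F, df1, df2), where F is the square of the one-sample t
    computed on the participants' contrast scores.
    """
    w = linear_weights(len(subject_means[0]))
    scores = [sum(wi * m for wi, m in zip(w, means)) for means in subject_means]
    n = len(scores)
    t = fmean(scores) / (stdev(scores) / math.sqrt(n))
    return t * t, 1, n - 1


# Invented encoding means for three participants and four rating levels.
F, df1, df2 = linear_trend_F([[1, 2, 3, 4], [1, 2, 2, 4], [0, 1, 3, 3]])
print(f"F({df1}, {df2}) = {F:.1f}")
```

Centering the weights makes each contrast score insensitive to a participant's overall activation level, so only the ordered change across ratings contributes.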

DISCUSSION

Access to the specific details of previous experience is among the most vital and fundamental components of episodic memory function. In the current study, we sought to address the specificity of mnemonic representations during covert recall by comparing the cortical patterns associated with retrieval of individual items to those present in a large set of similarly recalled items. The study yielded four main findings. First, a Memory × ERS level interaction signaling the reactivation of item-level information was evident in OTC. Second, a main effect of Memory on ERS was found in VLPFC, a region often associated with control processes involved in successful memory. Third, a main effect of ERS level suggestive of automatic contextual associations was found in RSC/PCC. Finally, consistent with neurobiological memory models, increases in item-ERS within left OTC were predicted by encoding activity within the hippocampus. These four findings are discussed in separate sections below.
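The three ERS effects summarized above can be related to one another with a small sketch. Assuming (hypothetically) that each participant has a mean item-ERS and a mean set-ERS at each memory-rating level, and that memory effects are expressed as linear trends over ratings, the main effect of Memory is the trend in the average of the two ERS measures, the main effect of ERS level is the average item-ERS minus set-ERS difference, and the Memory × ERS level interaction is the trend in that difference. The toy profiles mimic the qualitative patterns described for OTC, VLPFC, and RSC/PCC; the numbers are invented.

```python
from statistics import fmean


def linear_weights(k):
    """Centered linear contrast weights for k ordered rating levels."""
    mid = (k - 1) / 2
    return [i - mid for i in range(k)]


def ers_effects(item_means, set_means):
    """Per-participant effect scores for a Memory x ERS level design (hypothetical).

    item_means, set_means: mean item-ERS and set-ERS at each memory-rating
    level, ordered from lowest to highest rating.
    """
    w = linear_weights(len(item_means))
    diff = [i - s for i, s in zip(item_means, set_means)]
    avg = [(i + s) / 2 for i, s in zip(item_means, set_means)]
    return {
        # linear memory trend averaged over both ERS levels
        "memory": sum(wi * a for wi, a in zip(w, avg)),
        # overall item-ERS minus set-ERS difference
        "ers_level": fmean(diff),
        # linear memory trend in the item-minus-set difference
        "memory_x_ers_level": sum(wi * d for wi, d in zip(w, diff)),
    }


rising = [0.10, 0.12, 0.14, 0.16]
profiles = {
    "OTC-like (item-ERS rises, set-ERS flat)": (rising, [0.10] * 4),
    "VLPFC-like (both rise together)": (rising, rising),
    "RSC/PCC-like (item > set, no memory effect)": ([0.20] * 4, [0.10] * 4),
}
for name, (item, set_) in profiles.items():
    scores = ers_effects(item, set_)
    print(name, {k: round(v, 3) for k, v in scores.items()})
```

In a group analysis, each score would be tested against zero across participants (for example, with a one-sample t test). The OTC-like profile also produces nonzero main effects, which is why the interaction, rather than either main effect alone, is the diagnostic pattern for item-specific reactivation.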

Memory-related Increases in Item-specific Reinstatement

Our first goal was to investigate the neural mechanisms whereby the reactivation of individual items leads to successful memory retrieval. Using a searchlight procedure, we looked for regions where ERS increased as a function of memory ratings to a greater extent for item-ERS than set-ERS. On the basis of our previous study (Ritchey et al., 2013) and the nature of the stimuli used, we reasoned that this Memory × ERS level interaction should appear in OTC. Consistent with this idea, we found that within OTC, item-ERS, but not set-ERS, increased as a function of memory success (see Figure 3A). Moreover, a regression analysis showed that item-ERS for each scene (z-scored within the set-ERS distribution for the scene) significantly predicted memory after accounting for differences in univariate activity. This is exactly the pattern one would expect from a region mediating the reactivation of individual events.
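The standardization step can be written out directly. This sketch assumes (hypothetically) a table of encoding-by-retrieval similarities for one region, indexed by scene label, in which the diagonal entry for a scene is its item-ERS and the entries pairing that scene's retrieval pattern with every other scene's encoding pattern form its set-ERS distribution; item-ERS is then expressed as a z score relative to that distribution. The direction of pairing and the use of the sample standard deviation are assumptions, and the similarity values are invented.

```python
from statistics import fmean, stdev


def standardized_item_ers(sim, scene):
    """z-score a scene's item-ERS against its own set-ERS distribution.

    sim[e][r] is the similarity between the encoding pattern of scene e and
    the retrieval pattern of scene r (hypothetical layout). Requires at least
    three scenes so that the set-ERS distribution has two or more values.
    """
    item = sim[scene][scene]
    set_values = [sim[other][scene] for other in sim if other != scene]
    return (item - fmean(set_values)) / stdev(set_values)


# Invented similarity values for three scenes.
sim = {
    "barn":   {"barn": 0.5, "tunnel": 0.1, "beach": 0.0},
    "tunnel": {"barn": 0.1, "tunnel": 0.4, "beach": 0.1},
    "beach":  {"barn": 0.3, "tunnel": 0.0, "beach": 0.6},
}
for scene in sim:
    print(scene, round(standardized_item_ers(sim, scene), 2))
```

Because each scene is scaled by its own distribution, a z score of 2 means that the matching scene was about two standard deviations more similar than a typical mismatching scene, which puts scenes with different baseline similarity on a common footing before they enter a regression.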

The localization of the interaction effects to late visual regions (e.g., left OTC; Figure 3A) suggests the recapitulation of higher-level features as participants attempted to recall the details of individual scenes. Given the large set of scenes from which items were recalled and the brief exposure to any one image, it appears that such higher-level features are nonetheless quite specific. The OTC finding replicates and extends a similar finding observed in our previous ERS study (Ritchey et al., 2013), which also used a large set of complex pictures as stimuli. As noted in the Introduction, the interpretation of the OTC interaction in this previous study was complicated by the use of a recognition test because ERS measures could partly reflect the presentation of scenes during retrieval. The current study addressed this issue by using verbal labels as retrieval cues.
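Because these effects were localized with a searchlight procedure, a schematic of that procedure may help. The sketch below is a generic illustration in the spirit of Kriegeskorte, Goebel, and Bandettini (2006), not the study's pipeline: it visits every voxel in a brain mask, gathers the in-mask voxels within a sphere around it, and applies a caller-supplied statistic (for example, a Memory × ERS level score computed from the patterns in that sphere). The radius and the function names are hypothetical.

```python
from itertools import product


def sphere_offsets(radius):
    """Integer voxel offsets lying within a sphere of the given radius (in voxels)."""
    span = range(-radius, radius + 1)
    return [(dx, dy, dz) for dx, dy, dz in product(span, span, span)
            if dx * dx + dy * dy + dz * dz <= radius * radius]


def searchlight(mask, stat_fn, radius=2):
    """Map a statistic over spherical neighborhoods of every in-mask voxel.

    mask: set of (x, y, z) coordinates inside the brain.
    stat_fn: hypothetical callable receiving the list of in-mask coordinates
             in one sphere and returning a number (e.g. an ERS effect score).
    Returns {center_voxel: statistic}; each value is assigned to the center.
    """
    offsets = sphere_offsets(radius)
    result = {}
    for x, y, z in mask:
        sphere = [(x + dx, y + dy, z + dz) for dx, dy, dz in offsets
                  if (x + dx, y + dy, z + dz) in mask]
        result[(x, y, z)] = stat_fn(sphere)
    return result


# Toy 3 x 3 x 3 mask; here the statistic simply counts the voxels in each sphere.
mask = set(product(range(3), repeat=3))
sizes = searchlight(mask, len, radius=1)
print(sizes[(1, 1, 1)], sizes[(0, 0, 0)])
```

The sphere radius trades spatial specificity against the number of voxels available for each pattern comparison.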

In addition to our previous study using recognition (Ritchey et al., 2013), past memory-related findings appear consistent with a role for OTC in the conscious recovery of item details, as in studies where left OTC (e.g., fusiform gyrus/BA 37) indexed the amount of information recollected (Vilberg & Rugg, 2007) or the recovery of details allowing fine-grained discrimination (Kensinger & Schacter, 2007). In another study, distributed patterns in OTC during cued recall both distinguished stimulus category and tracked behavioral memory measures on a postscan test (Kuhl, Rissman, Chun, & Wagner, 2011). Studies comparing internally generated representations (e.g., during mental imagery or memory retrieval) to those present during active visual perception have shown distinct but partially overlapping profiles, with responses to the former often appearing in more anterior or higher-level regions of the ventral visual pathway (Johnson & Johnson, 2014; Buchsbaum, Lemire-Rodger, Fang, & Abdi, 2012; Cichy, Heinzle, & Haynes, 2012; Johnson, Mitchell, Raye, D’Esposito, & Johnson, 2007; O’Craven & Kanwisher, 2000).

It is important to emphasize that the circumscribed OTC regions we found in the current study (Table 1, top) are likely to be only a subset of many cortical regions storing memory traces for individual scenes. One possible explanation of why a significant Memory × ERS level interaction was found in relatively limited cortical areas is that our method emphasized regions showing consistent ERS level differences across all 96 scenes investigated. These scenes encompassed a multitude of objects and semantic features, which likely have diverse representations across ventral cortex (Stansbury, Naselaris, & Gallant, 2013; Huth, Nishimoto, Vu, & Gallant, 2012). Nonetheless, a recent study examining memory for short movie clips found reactivation effects in lateral OTC (Buchsbaum et al., 2012), consistent with the present findings. In a related study where multiple unique cue words were paired with one of four scenes (Staresina, Henson, Kriegeskorte, & Alink, 2012), successful memory for specific word–scene pairs was instead reflected in distributed patterns across PHC, consistent with the role of this region in processing scenes and spatial information more broadly (Epstein, 2008; Epstein, Graham, & Downing, 2003; Epstein & Kanwisher, 1998). This last finding suggests that the distribution of cortical reactivation may vary depending on how memory is tested and what aspects of stimuli (even within the same stimulus type) are emphasized at encoding. For example, in Staresina et al. (2012), the reappearance of scenes across unique pairs may have allowed for a more detailed encoding of associative linkages between words and scenes or of the spatial relationships within each scene. By contrast, brief exposure to a wider array of scenes, as in this study, may instead shift processing toward specific objects and other scene components useful for individuation, leading to the dissociation between item-ERS and set-ERS in OTC regions.

In addition to OTC, a Memory × ERS level interaction was also found near the supplementary eye field, a region linked to the control of saccadic eye movements (Grosbras, Lobel, Van de Moortele, LeBihan, & Berthoz, 1999; Schlag & Schlag-Rey, 1987, 1992) and to memory-guided saccades (Anderson et al., 1994). One possible explanation is that item-ERS in this region reflects ERS in eye movements and that this similarity enhanced memory. Consistent with this idea, eye movement studies have shown that ERS in eye movement patterns is associated with better memory (Holm & Mäntylä, 2007) and that participants reinstate encoding eye movements during retrieval, even during free recall (Laeng & Teodorescu, 2002). If confirmed, this effect would add to growing evidence for the role of eye movements in visual memory (for a review, see Hannula et al., 2010).

ERS Associated with General Increases in Memory Detail

Our second goal was to identify regions where reactivation predicted memory success but was not sensitive to differences among pictures. Accordingly, we searched for regions where ERS increased as a function of memory ratings similarly for item-ERS and set-ERS. We found that in bilateral VLPFC, ERS increased monotonically as a function of memory for both item-ERS and set-ERS, with effects extending somewhat more dorsally in the left hemisphere (see Figure 3B).

The finding that VLPFC similarity during encoding and retrieval phases was associated with increasing memory ratings is consistent with evidence from fMRI studies examining memory success at both phases (for meta-analyses, see Kim, 2011; Spaniol et al., 2009). Memory-related increases in VLPFC similarity also fit well with prior fMRI evidence that the contribution of this region to memory success is very broad. For example, one study found that the VLPFC showed successful memory activity for both encoding and retrieval and for both faces and scenes (Prince, Dennis, & Cabeza, 2009). Another study using multivariate pattern classification to determine the previous encoding condition of retrieval trials (Johnson, McDuff, Rugg, & Norman, 2009) found that left VLPFC reactivation was associated with better memory but did not distinguish between recollection and familiarity. Thus, VLPFC seems to mediate a mechanism that enhances both encoding and retrieval but does not express stimulus-specific encoding details during retrieval. One likely candidate is the cognitive control of encoding and retrieval operations, which has been consistently linked to VLPFC in univariate fMRI studies of episodic and semantic memory (Badre & Wagner, 2007).

Importantly, the present findings suggest that memory performance may depend on the pattern of activity across regions like VLPFC (as captured by ERS) in addition to the overall level of activation in such a region. In fact, a regression analysis in this region showed that ERS predicted memory ratings even after controlling for the differences in univariate activity (during encoding, retrieval, or both). This finding is intriguing given that the impact of ERS on memory did not differ for item-ERS versus set-ERS, suggesting that this region was not sensitive to differences across individual scenes. One possible explanation of this pattern is that the memory-enhancing effect of ERS in this region did not reflect a similarity in item-specific representations but a similarity in processes engaged across all items. Future studies may help to better characterize dimensions of cognitive control that facilitate memory across both phase and stimulus type, and multivariate analyses may prove particularly useful in this endeavor given that they are less sensitive to subject-level variability in activity patterns (Davis et al., 2014), a factor that may reduce the ability to detect commonalities in univariate analyses.
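The logic of controlling for univariate activity can be illustrated with ordinary least squares. The sketch assumes (hypothetically) a trialwise model for one participant in which the memory rating is regressed on standardized ERS together with encoding and retrieval activity; the ERS coefficient then estimates the relationship between pattern similarity and memory with overall activation held constant. The exact model specification, and how coefficients were combined across participants, are assumptions here, and in practice a statistics library would normally replace the hand-written solver.

```python
def ols(X, y):
    """Least-squares coefficients b minimizing ||y - X b||.

    X: list of rows, each already containing a leading 1.0 for the intercept.
    Solves the normal equations (X'X) b = X'y by Gauss-Jordan elimination
    with partial pivoting; assumes X has full column rank.
    """
    p = len(X[0])
    A = [[sum(row[i] * row[j] for row in X) for j in range(p)] for i in range(p)]
    b = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(p)]
    for col in range(p):
        pivot = max(range(col, p), key=lambda k: abs(A[k][col]))
        A[col], A[pivot] = A[pivot], A[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(p):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * c for a, c in zip(A[r], A[col])]
                b[r] -= factor * b[col]
    return [b[i] / A[i][i] for i in range(p)]


def ers_coefficient(ratings, ers, enc_activity, ret_activity):
    """ERS coefficient in the hypothetical model: rating ~ 1 + ERS + encoding + retrieval."""
    X = [[1.0, s, e, r] for s, e, r in zip(ers, enc_activity, ret_activity)]
    return ols(X, ratings)[1]


# Invented trials in which ratings depend on ERS and on univariate activity.
ers = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
enc = [1.0, 0.0, 2.0, 1.0, 3.0, 2.0]
ret = [2.0, 1.0, 0.0, 1.0, 0.0, 3.0]
ratings = [1 + 2 * s + 0.5 * e - 0.3 * r for s, e, r in zip(ers, enc, ret)]
print(round(ers_coefficient(ratings, ers, enc, ret), 3))
```

Because the invented ratings were generated with a known ERS weight of 2, recovering that value shows that the ERS coefficient is estimated separately from the encoding and retrieval activity terms.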

Item-specific Similarity Independent of Memory

Our third goal was to identify any regions sensitive to individual items irrespective of memory success, which in our paradigm corresponded to the main effect of ERS level (item-ERS > set-ERS). Early visual cortex showed this most clearly in our previous recognition study (Ritchey et al., 2013), which is not surprising given that scenes were presented during both encoding and retrieval phases. In the current study, by contrast, only verbal labels for the scenes (e.g., “barn,” “tunnel”) were presented at test, and hence, the match between encoding and retrieval was more abstract (e.g., the concept of a barn). Therefore, to the extent that any memory-insensitive match effects appeared in this study, we reasoned they should reflect access to preexistent semantic knowledge about typical scenes. The existence of such “scene schema” (Biederman, Mezzanotte, & Rabinowitz, 1982; Palmer, 1975) or context frames (Bar & Ullman, 1996) is supported by the cognitive neuroscience literature (Aminoff, Schacter, & Bar, 2008; Bar, 2004; Bar & Aminoff, 2003), which has linked context frames to univariate activity in RSC and PHC, two candidate regions for the current main effect of ERS.

As illustrated by Figure 3C, RSC/PCC showed a main effect of ERS level, with greater item-ERS than set-ERS independent of the memory for the specific pictures encoded. This pattern fits well with the idea that RSC supports context frames for typical scenes (beach, bowling alley, etc.), which are automatically activated either by a picture or by a verbal label. The context frame reflects knowledge for the scene (e.g., what a bowling alley is), and hence, it does not depend on episodic memory for a particular picture during encoding (captured by the memory ratings) or the recapitulation of the specific visual elements contained in each scene. Previous work on context frames has found that RSC shows greater activity for objects strongly associated with one specific context (e.g., supermarket cart, roulette wheel, microscope) than for objects weakly associated with many different contexts (e.g., rope, camera, basket; Kveraga et al., 2011; Bar & Aminoff, 2003). Related research has also shown that RSC activity during the encoding of object pairs belonging to the same context frame (e.g., baby bottle and teddy bear) predicts false memories to objects from the same context frame (e.g., “crib”; Aminoff et al., 2008).

The notion of abstract context frames provides a parsimonious account of the main effect of ERS level: Each scene automatically activated the corresponding context frame during encoding (e.g., beach, bowling alley) and the verbal labels during retrieval activated the same context frames, driving a similar response in posterior midline activation patterns. In contrast to RSC/PCC, no main effect of ERS level was found in PHC. One explanation for this difference between RSC and PHC concerns the hypothesis that RSC holds more abstract representations than PHC (Bar, Aminoff, & Schacter, 2008; Bar, 2007; Bar & Aminoff, 2003). In fact, in the aforementioned fMRI study on the strength of contextual associations (Bar & Aminoff, 2003), both RSC and PHC showed greater activity for strong than weak contextual associations but only RSC was insensitive to the format of the stimuli, whereas analogous findings have been shown for changes in visual context (Park & Chun, 2009). Thus, it is possible that only the more abstract contextual representations stored in RSC/PCC were similar across the two phases of this study, in which perceptual format varied. Yet, this interpretation is speculative and must be confirmed by future research on the differential contributions of RSC and PHC to the storage and processing of context frame representations. In any event, the current finding for the main effect of ERS level extends past research, suggesting not only that RSC is activated when context frames are accessed but also that activation patterns within this region are sensitive to differences among context frames.

Trial-to-Trial Encoding Activity Related to Item-specific Memory Reinstatement

Our final goal was to test the hypothesis that the hippocampus both binds disparate pieces of information into integrated representations (Eichenbaum, 2004; Eichenbaum, Otto, & Cohen, 1994) and stores pointers to cortical traces for those individual representations (Norman & O’Reilly, 2003; Alvarez & Squire, 1994). To test this hypothesis, we identified regions where trial-to-trial variability in encoding activity tracked item-specific ERS (standardized item-ERS). Two areas showed this relationship: the anterior MTL/hippocampus and the occipital cortex (cuneus and lingual gyrus).

The anterior MTL finding makes sense in the context of the present design, where the formation of an integrated memory representation for each individual scene (e.g., specific red barn/green front lawn/trees in background/blue sky) during encoding was critical for reconstructing a very similar mental scene in the scanner and for identifying target scenes from similar distractors outside the scanner. In fact, recent research has shown that encoding-phase hippocampal activity rises along with the discriminability of category-related patterns across ventral OTC, a relationship also tied to subsequent recollection (Gordon, Rissman, Kiani, & Wagner, 2013). The anterior MTL effects found in the current study also extend into the amygdala. Although the scene images used were not chosen to be deliberately emotional in nature, it is possible that some scenes nonetheless elicited affective responses (e.g., aesthetically pleasing landscape pictures), triggering amygdala-dependent encoding processes. In our previous study, which used a mixture of emotionally salient and neutral images, amygdala activity during recognition was correlated with the fidelity of cortical reinstatement for emotional images (Ritchey et al., 2013). In general, amygdala interactions with occipitotemporal regions have been shown to support the visual specificity of affective memories (Kensinger, Garoff-Eaton, & Schacter, 2007), and thus, the involvement of this region at encoding or retrieval may result in perceptually vivid memories. The current results are therefore consistent with the well-established finding that the hippocampus facilitates relational and recollection-based memory as well as the idea that the amygdala supports visually specific memories. In both cases, it is the binding of item-specific details that gives rise to a visually rich retrieval experience.

The visual cortex finding was not predicted, but it is reasonable given that visual perception of the scene is a precondition for successful encoding and hence later retrieval. The posterior (early) location of the univariate encoding effect (BA 18, y = −101) contrasts with the more anterior location of the item-ERS effect (BA 37, y = −53) and could reflect a difference between the higher resolution of information during visual perception versus memory-based visual imagery. Interestingly, although the early visual cortex activation was correlated with standardized item-ERS in left OTC, which showed the strongest Memory × ERS level interaction, early visual activity did not vary with subsequent memory ratings (see Figure 4C). One possible explanation is that early visual cortex contributes to the formation of visually detailed representations but does not itself predict subsequent memory, which depends on the reinstatement of higher-level information in later OTC regions. At any rate, further research in understanding the role of early and late visual processing in memory-based imagery and reactivation is warranted.

Conclusion

In summary, we investigated reactivation at the item and set levels using representational similarity analysis in a novel scene recall design. The study yielded four main findings. First, in OTC, ERS at the item level predicted memory ratings. This result indicates that OTC is one of the cortical areas where memories for individual visual events are stored during encoding and reactivated during retrieval. Second, in VLPFC, ERS in activation patterns predicted memory ratings but did not differ across scenes. This result suggests that one of the cognitive control processes mediated by this region benefits both the encoding and the retrieval of diverse scene stimuli. Third, activation patterns in RSC/PCC were sensitive to individual items but did not vary as a function of memory ratings. This finding aligns with past research linking the RSC to abstract representations of scenes known as context frames, which may be automatically activated during the two phases independently of episodic memory. Finally, hippocampal activity during encoding predicted item-level reactivation in the left OTC region showing the strongest interaction effect, consistent with neurobiological models that postulate that the hippocampus stores pointers to the location of cortical memory traces.

Our findings demonstrate the ability to detect the reinstatement of individual items during covert recall by comparing distributed retrieval patterns specific to a given scene to those of many similarly remembered items. The link between level of detail at retrieval and item-specific cortical reinstatement underscores the importance of differentiating similar items from one another, even when behavioral memory measures do not differ across items. At a more general level, the present findings contribute to the wider effort of characterizing the structure of mnemonic information in the context of basic memory operations. Continued study of individual memory representations may help address longstanding questions of memory function and allow future research to delve into the idiosyncrasies of remembering individual events from amongst a lifetime of memories.

Acknowledgments

We thank Karl Eklund for assistance with data analysis. This research was supported by National Institutes of Health grant AG19731 (R. C.).

References

- Alvarez P, Squire LR. Memory consolidation and the medial temporal lobe: A simple network model. Proceedings of the National Academy of Sciences, USA. 1994;91:7041–7045. doi: 10.1073/pnas.91.15.7041. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Aminoff E, Schacter DL, Bar M. The cortical underpinnings of context-based memory distortion. Journal of Cognitive Neuroscience. 2008;20:2226–2237. doi: 10.1162/jocn.2008.20156. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Anderson TJ, Jenkins IH, Brooks DJ, Hawken MB, Frackowiak RS, Kennard C. Cortical control of saccades and fixation in man. A PET study. Brain. 1994;117:1073–1084. doi: 10.1093/brain/117.5.1073. [DOI] [PubMed] [Google Scholar]

- Badre D, Wagner AD. Left ventrolateral prefrontal cortex and the cognitive control of memory. Neuropsychologia. 2007;45:2883–2901. doi: 10.1016/j.neuropsychologia.2007.06.015. [DOI] [PubMed] [Google Scholar]

- Bar M. Visual objects in context. Nature Reviews Neuroscience. 2004;5:617–629. doi: 10.1038/nrn1476. [DOI] [PubMed] [Google Scholar]

- Bar M. The proactive brain: Using analogies and associations to generate predictions. Trends in Cognitive Sciences. 2007;11:280–289. doi: 10.1016/j.tics.2007.05.005. [DOI] [PubMed] [Google Scholar]

- Bar M, Aminoff E. Cortical analysis of visual context. Neuron. 2003;38:347–358. doi: 10.1016/s0896-6273(03)00167-3. [DOI] [PubMed] [Google Scholar]

- Bar M, Aminoff E, Schacter DL. Scenes unseen: The parahippocampal cortex intrinsically subserves contextual associations, not scenes or places per se. The Journal of Neuroscience. 2008;28:8539–8544. doi: 10.1523/JNEUROSCI.0987-08.2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bar M, Ullman S. Spatial context in recognition. Perception. 1996;25:343–352. doi: 10.1068/p250343. [DOI] [PubMed] [Google Scholar]

- Biederman I, Mezzanotte RJ, Rabinowitz JC. Scene perception: Detecting and judging objects undergoing relational violations. Cognitive Psychology. 1982;14:143–177. doi: 10.1016/0010-0285(82)90007-x. [DOI] [PubMed] [Google Scholar]

- Buchsbaum BR, Lemire-Rodger S, Fang C, Abdi H. The neural basis of vivid memory is patterned on perception. Journal of Cognitive Neuroscience. 2012;24:1867–1883. doi: 10.1162/jocn_a_00253. [DOI] [PubMed] [Google Scholar]

- Cichy RM, Heinzle J, Haynes JD. Imagery and perception share cortical representations of content and location. Cerebral Cortex. 2012;22:372–380. doi: 10.1093/cercor/bhr106. [DOI] [PubMed] [Google Scholar]

- Danker JF, Anderson JR. The ghosts of brain states past: Remembering reactivates the brain regions engaged during encoding. Psychological Bulletin. 2010;136:87–102. doi: 10.1037/a0017937. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Davis T, LaRocque KF, Mumford J, Norman KA, Wagner AD, Poldrack RA. What do differences between multi-voxel and univariate analysis mean? How subject-, voxel-, and trial-level variance impact fMRI analysis. Neuroimage. 2014;97:271–283. doi: 10.1016/j.neuroimage.2014.04.037. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Eichenbaum HB. Hippocampus: Cognitive processes and neural representations that underlie declarative memory. Neuron. 2004;44:109–120. doi: 10.1016/j.neuron.2004.08.028. [DOI] [PubMed] [Google Scholar]

- Eichenbaum HB, Otto T, Cohen NJ. Two functional components of the hippocampal memory system. Behavioral and Brain Sciences. 1994;17:449–472. [Google Scholar]

- Epstein R, Graham KS, Downing PE. Viewpoint-specific scene representations in human parahippocampal cortex. Neuron. 2003;37:865–876. doi: 10.1016/s0896-6273(03)00117-x. [DOI] [PubMed] [Google Scholar]

- Epstein R, Kanwisher N. A cortical representation of the local visual environment. Nature. 1998;392:598–601. doi: 10.1038/33402. [DOI] [PubMed] [Google Scholar]

- Epstein RA. Parahippocampal and retrosplenial contributions to human spatial navigation. Trends in Cognitive Sciences. 2008;12:388–396. doi: 10.1016/j.tics.2008.07.004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gordon AM, Rissman J, Kiani R, Wagner AD. Cortical reinstatement mediates the relationship between content-specific encoding activity and subsequent recollection decisions. Cerebral Cortex. 2013 doi: 10.1093/cercor/bht194. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Grosbras MH, Lobel E, Van de Moortele PF, LeBihan D, Berthoz A. An anatomical landmark for the supplementary eye fields in human revealed with functional magnetic resonance imaging. Cerebral Cortex. 1999;9:705–711. doi: 10.1093/cercor/9.7.705. [DOI] [PubMed] [Google Scholar]

- Hannula DE, Althoff RR, Warren DE, Riggs L, Cohen NJ, Ryan JD. Worth a glance: Using eye movements to investigate the cognitive neuroscience of memory. Frontiers in Human Neuroscience. 2010;4:166. doi: 10.3389/fnhum.2010.00166. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Holm L, Mäntylä T. Memory for scenes: Refixations reflect retrieval. Memory & Cognition. 2007;35:1664–1674. doi: 10.3758/bf03193500. [DOI] [PubMed] [Google Scholar]

- Huth AG, Nishimoto S, Vu AT, Gallant JL. A continuous semantic space describes the representation of thousands of object and action categories across the human brain. Neuron. 2012;76:1210–1224. doi: 10.1016/j.neuron.2012.10.014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Johnson JD, McDuff SGR, Rugg MD, Norman KA. Recollection, familiarity, and cortical reinstatement: A multivoxel pattern analysis. Neuron. 2009;63:697–708. doi: 10.1016/j.neuron.2009.08.011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Johnson MR, Johnson MK. Decoding individual natural scene representations during perception and imagery. Frontiers in Human Neuroscience. 2014;8:59. doi: 10.3389/fnhum.2014.00059. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Johnson MR, Mitchell KJ, Raye CL, D’Esposito M, Johnson MK. A brief thought can modulate activity in extrastriate visual areas: Top–down effects of refreshing just-seen visual stimuli. Neuroimage. 2007;37:290–299. doi: 10.1016/j.neuroimage.2007.05.017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kensinger EA, Garoff-Eaton RJ, Schacter DL. How negative emotion enhances the visual specificity of a memory. Journal of Cognitive Neuroscience. 2007;19:1872–1887. doi: 10.1162/jocn.2007.19.11.1872. [DOI] [PubMed] [Google Scholar]

- Kensinger EA, Schacter DL. Remembering the specific visual details of presented objects: Neuroimaging evidence for effects of emotion. Neuropsychologia. 2007;45:2951–2962. doi: 10.1016/j.neuropsychologia.2007.05.024. [DOI] [PubMed] [Google Scholar]

- Kim H. Neural activity that predicts subsequent memory and forgetting: A meta-analysis of 74 fMRI studies. Neuroimage. 2011;54:2446–2461. doi: 10.1016/j.neuroimage.2010.09.045. [DOI] [PubMed] [Google Scholar]

- Kriegeskorte N, Goebel R, Bandettini P. Information-based functional brain mapping. Proceedings of the National Academy of Sciences, USA. 2006;103:3863–3868. doi: 10.1073/pnas.0600244103. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kriegeskorte N, Mur M, Bandettini P. Representational similarity analysis—Connecting the branches of systems neuroscience. Frontiers in Systems Neuroscience. 2008;2:1–28. doi: 10.3389/neuro.06.004.2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kuhl BA, Rissman J, Chun MM, Wagner AD. Fidelity of neural reactivation reveals competition between memories. Proceedings of the National Academy of Sciences, USA. 2011;108:5903–5908. doi: 10.1073/pnas.1016939108. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kveraga K, Ghuman AS, Kassam KS, Aminoff EA, Hämäläinen MS, Chaumon M, et al. Early onset of neural synchronization in the contextual associations network. Proceedings of the National Academy of Sciences, USA. 2011;108:3389–3394. doi: 10.1073/pnas.1013760108. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Laeng B, Teodorescu DS. Eye scanpaths during visual imagery reenact those of perception of the same visual scene. Cognitive Science. 2002;26:207–231. [Google Scholar]

- MacEvoy SP, Epstein RA. Decoding the representation of multiple simultaneous objects in human occipitotemporal cortex. Current Biology. 2009;19:943–947. doi: 10.1016/j.cub.2009.04.020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- MacEvoy SP, Epstein RA. Constructing scenes from objects in human occipitotemporal cortex. Nature Neuroscience. 2011;14:1323–1329. doi: 10.1038/nn.2903. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Morris CD, Bransford JD, Franks JJ. Levels of processing versus transfer appropriate processing. Journal of Verbal Learning and Verbal Behavior. 1977;16:519–533. [Google Scholar]

- Norman KA, O’Reilly RC. Modeling hippocampal and neocortical contributions to recognition memory: A complementary-learning-systems approach. Psychological Review. 2003;110:611–646. doi: 10.1037/0033-295X.110.4.611. [DOI] [PubMed] [Google Scholar]

- O’Craven KM, Kanwisher N. Mental imagery of faces and places activates corresponding stimulus-specific brain regions. Journal of Cognitive Neuroscience. 2000;12:1013–1023. doi: 10.1162/08989290051137549. [DOI] [PubMed] [Google Scholar]

- Palmer TE. The effects of contextual scenes on the identification of objects. Memory & Cognition. 1975;3:519–526. doi: 10.3758/BF03197524. [DOI] [PubMed] [Google Scholar]

- Park S, Chun MM. Different roles of the parahippocampal place area (PPA) and retrosplenial cortex (RSC) in panoramic scene perception. Neuroimage. 2009;47:1747–1756. doi: 10.1016/j.neuroimage.2009.04.058. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Patterson K, Nestor PJ, Rogers TT. Where do you know what you know? The representation of semantic knowledge in the human brain. Nature Reviews Neuroscience. 2007;8:976–987. doi: 10.1038/nrn2277.

- Peelen MV, Caramazza A. Conceptual object representations in human anterior temporal cortex. The Journal of Neuroscience. 2012;32:15728–15736. doi: 10.1523/JNEUROSCI.1953-12.2012.

- Prince SE, Dennis NA, Cabeza R. Encoding and retrieving faces and places: Distinguishing process- and stimulus-specific differences in brain activity. Neuropsychologia. 2009;47:2282–2289. doi: 10.1016/j.neuropsychologia.2009.01.021.

- Ranganath C, Ritchey M. Two cortical systems for memory-guided behaviour. Nature Reviews Neuroscience. 2012;13:713–726. doi: 10.1038/nrn3338.

- Rissman J, Gazzaley A, D’Esposito M. Measuring functional connectivity during distinct stages of a cognitive task. Neuroimage. 2004;23:752–763. doi: 10.1016/j.neuroimage.2004.06.035.

- Ritchey M, Wing EA, LaBar KS, Cabeza R. Neural similarity between encoding and retrieval is related to memory via hippocampal interactions. Cerebral Cortex. 2013;23:2818–2828. doi: 10.1093/cercor/bhs258.

- Rugg MD, Johnson JD, Park H, Uncapher MR. Encoding-retrieval overlap in human episodic memory: A functional neuroimaging perspective. Progress in Brain Research. 2008;169:339–352. doi: 10.1016/S0079-6123(07)00021-0.

- Schlag J, Schlag-Rey M. Evidence for a supplementary eye field. Journal of Neurophysiology. 1987;57:179–200. doi: 10.1152/jn.1987.57.1.179.

- Schlag J, Schlag-Rey M. Neurophysiology of eye movements. In: Chauvel P, Delgado-Escueta A, editors. Advances in Neurology. Vol. 57. New York: Raven Press; 1992. pp. 135–155.

- Spaniol J, Davidson PSR, Kim ASN, Han H, Moscovitch M, Grady CL. Event-related fMRI studies of episodic encoding and retrieval: Meta-analyses using activation likelihood estimation. Neuropsychologia. 2009;47:1765–1779. doi: 10.1016/j.neuropsychologia.2009.02.028.

- Stansbury DE, Naselaris T, Gallant JL. Natural scene statistics account for the representation of scene categories in human visual cortex. Neuron. 2013;79:1025–1034. doi: 10.1016/j.neuron.2013.06.034.

- Staresina BP, Henson RNA, Kriegeskorte N, Alink A. Episodic reinstatement in the medial temporal lobe. The Journal of Neuroscience. 2012;32:18150–18156. doi: 10.1523/JNEUROSCI.4156-12.2012.

- Tulving E, Thomson DM. Encoding specificity and retrieval processes in episodic memory. Psychological Review. 1973;80:352–373.

- Vilberg KL, Rugg MD. Dissociation of the neural correlates of recognition memory according to familiarity, recollection, and amount of recollected information. Neuropsychologia. 2007;45:2216–2225. doi: 10.1016/j.neuropsychologia.2007.02.027.