Summary

Background

Hospital length of stay and discharge destination are important outcome measures in evaluating effectiveness and efficiency of health services. Although hospital administrative data are readily used as a data collection source in health services research, no research has assessed this data collection method against other commonly used methods.

Objective

Determine if administrative data from electronic patient management programs are an effective data collection method for key hospital outcome measures when compared with alternative hospital data collection methods.

Method

Prospective observational study comparing the completeness of data capture and level of agreement between three data collection methods for hospital length of stay and discharge destination: manual data collection from ward-based sources, administrative data from an electronic patient management program (i.PM), and inpatient medical record review (gold standard).

Results

Manual data collection from ward-based sources captured only 376 (69%) of the 542 inpatient episodes captured from the hospital administrative electronic patient management program. Administrative data from the electronic patient management program had the highest levels of agreement with inpatient medical record review for both length of stay (93.4%) and discharge destination (91%) data.

Conclusion

This is the first paper to demonstrate differences between data collection methods for hospital length of stay and discharge destination. Administrative data from an electronic patient management program showed the highest level of completeness of capture and level of agreement with the gold standard of inpatient medical record review for both length of stay and discharge destination, and therefore may be an acceptable data collection method for these measures.

Keywords: Data collection, health services research, length of stay, discharge destination, research methodology

1. Background

Hospital length of stay and discharge destination are important outcome measures used in health services research. Length of stay is often used as a measure of healthcare efficiency by researchers, clinicians, administrators, and policy makers in planning the delivery of health services [1–4]. Hospital discharge destination influences length of stay [3] and provides a means of quantifying measures such as requirements for sub-acute inpatient care, changes in level of care, requirements for community services following discharge, and hospital death. Due to their importance, researchers use these measures as key indicators of effectiveness and efficiency when evaluating hospital service provision.

There are numerous methods by which data may be collected for research and hospital administrative purposes. Observational length of stay and discharge destination data can be manually collected from ward-based sources including nursing handover records, paper-based ward discharge/transfer records, paper-based inpatient medical records, direct observation by experienced personnel, and 24-hour recall of key hospital personnel (e.g. Nurse Unit Manager). However, this is a time-intensive data collection method that is difficult to fund in the current environment, where research funding is increasingly competitive. Retrospective data may be collected via review of scanned inpatient medical records after hospital discharge. While this approach has previously been used as a gold standard measure for multiple outcomes [5–11], transforming medical records into research data is resource intensive and requires exceptional knowledge and skill in medical context and research [12]. An alternative to these traditional methods of hospital data collection is to extract electronic administrative data. Retrospective hospital administrative data have become a commonly used source of inexpensive and readily available information. However, administrative data are not normally entered specifically for research purposes, and previous literature indicates that the use of administrative data for adverse events and coding for billing purposes may result in inaccurate data [12–23].

Despite the importance and frequent reporting of hospital length of stay and discharge destination measures, we were unable to identify any published empirical research comparing methods of data collection for these outcome measures. With this range of potential data sources and data collection approaches, it is important to consider the relative completeness of, and agreement between, different data extraction methods. This research is therefore essential to ensure the validity of data collected for research that is used to inform decision making around health policy and clinical care.

2. Objectives

The purpose of this study was therefore to determine the completeness of capture and level of agreement between three different data collection approaches in measuring length of stay and discharge destination. These were:

- Observational data manually collected from ward-based sources by a research assistant,
- Retrospective administrative data extraction from an electronic patient management program (i.PM), and
- Retrospective review of scanned inpatient medical records post discharge from hospital (gold standard).

3. Methods

3.1 Study setting

This study was performed in conjunction with a larger stepped-wedge randomised controlled trial examining the effectiveness of acute weekend allied health services [24] and was approved by Monash Health Human Ethics Committee (Reference Number 13327B). The study was conducted at a major 520-bed public hospital providing acute and sub-acute services in urban South-East Melbourne, Australia, during the first two weeks of February 2014. The study period and wards were selected in accordance with the stepped-wedge randomised controlled trial and included the acute assessment unit, neurosciences, plastics, surgical, orthopaedic, and two general medical wards. As there were no exclusion criteria, the study cohort included the total sample of consecutive individuals discharged from the study wards during the study period.

3.2 Outcome measures

Two outcome measures from the stepped-wedge randomised controlled trial were used in this analysis. These were selected as they are outcome measures in the larger project that were extracted from multiple sources and are key indicators of inpatient hospital effectiveness and efficiency.

Hospital length of stay. Hospital length of stay was reported in days and was determined by subtracting the date of hospital admission from the date of hospital discharge.

Discharge destination. Discharge destination is the location the patient is residing immediately post hospital discharge and can include: home, other hospital, rehabilitation facility, other supported residential facility (including retirement villages, supported residential services, respite and transitional care), low level care (hostel), high level care (nursing home) or death.
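The length of stay definition above amounts to a date subtraction. The following is a minimal Python sketch of that calculation, not the authors' procedure; the function name `length_of_stay_days` and the example dates are hypothetical, and same-day discharges are assumed to count as zero days.

```python
from datetime import date


def length_of_stay_days(admission: date, discharge: date) -> int:
    """Length of stay in days: discharge date minus admission date."""
    if discharge < admission:
        # A discharge before admission indicates a data entry error.
        raise ValueError("discharge date precedes admission date")
    return (discharge - admission).days


# Hypothetical episode within the study period (February 2014).
los = length_of_stay_days(date(2014, 2, 3), date(2014, 2, 7))
print(los)  # 4
```

Raising an error on a negative interval, rather than returning a negative number, makes typing errors in either date visible during data cleaning.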

3.3 Data collection approaches

Five research assistants from allied health backgrounds collected hospital admission date, hospital discharge date and discharge destination via three methods. All research assistants received on-site training from a hospital researcher prior to collecting data.

Observational data manually collected by four research assistants from ward-based sources including nursing handover records, paper-based inpatient medical records, paper-based ward discharge/transfer records and verbal handover from ward staff based on the previous 24 hours. This data collection method was a pragmatic approach intended to replicate how these data would be collected in a large clinical trial with limited resources. Nursing handover records were updated daily by the nurse in charge. Ward transfer records were updated continuously by ward administrative staff between 0730 and 2000 hours Monday to Friday and between 0730 and 1300 hours on Saturdays, and by nursing staff during all other hours.

Retrospective data extraction by one research assistant using administrative data from an electronic patient management program (i.Patient Manager, CSC, Falls Church, Virginia, USA). i.Patient Manager (i.PM) is the most widely used Patient Administrative System in Australian and New Zealand public hospitals. i.PM allows all administrative aspects of an inpatient episode to be securely tracked within a centralised database accessible by healthcare staff from multiple sites within the same health service [25]. Admission and discharge information is entered into i.PM by administrative staff between 0730 and 2000 hours Monday to Friday and between 0730 and 1300 hours on Saturdays, and by nursing staff during all other hours. This information includes date and time of hospital admission and hospital discharge, in addition to discharge destination.

Retrospective review of scanned inpatient medical records by two research assistants following patient discharge. All paper-based inpatient medical records are routinely scanned by health record administrative staff to form an integrated digital record within 48 hours of patient discharge. This record can then be reviewed electronically. For the purposes of this study, the retrospective review of scanned inpatient medical records was considered the gold standard data collection method. This justification is founded in the consideration of the medico-legal record of the patient admission as the primary source of information [26] and has previously been used as a gold standard measure when assessing multiple other outcomes including diagnostic accuracy and rates of adverse events but not for hospital length of stay or discharge destination [5–11, 27].

3.4 Procedure

Wards were attended daily by a research assistant between 0800 and 1200 hours. Observational data collected manually from ward-based sources were entered directly into a survey tool (SurveyMonkey Inc., Palo Alto, California, USA) via an electronic tablet device (iPad, Apple Inc., Cupertino, CA, USA) at the time of daily collection. Hospital admission and discharge data were collected from nursing handover records, paper-based inpatient medical records and ward admission and discharge records. If any data were unavailable or there were any discrepancies between data sources, research assistants clarified data through discussions with the nurse in charge or ward administrative staff. Data were exported from the survey tool into a Microsoft Office Excel spreadsheet (Microsoft, Redmond, WA, USA).

Retrospective administrative data extracted from i.PM was entered into a separate Excel spreadsheet after patients had been discharged from hospital to ensure full availability and capture of inpatient episode data.

Similarly, research assistants independently extracted hospital admission date, hospital discharge date and discharge destination from the scanned inpatient medical records and entered data into a separate Excel spreadsheet. Inter-rater reliability analysis using Cohen’s Kappa coefficient was performed to determine consistency among the two research assistants, finding 93.8% agreement (Kappa=0.92) for length of stay and 100% agreement (Kappa=1.00) for discharge destination.
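For readers unfamiliar with Cohen's Kappa, the sketch below shows how percentage agreement and Kappa can be computed for two raters who each assign one category per record. It is an illustrative Python implementation, not the analysis code used in this study; the function name `cohens_kappa` and the six example records are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Return (observed agreement, kappa) for two equal-length label sequences."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: product of the raters' marginal proportions, summed over labels.
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return observed, 1.0  # both raters used one identical label throughout
    return observed, (observed - expected) / (1 - expected)


# Hypothetical discharge destinations recorded by two reviewers.
reviewer_1 = ["home", "home", "rehab", "home", "death", "rehab"]
reviewer_2 = ["home", "home", "rehab", "rehab", "death", "rehab"]
agreement, kappa = cohens_kappa(reviewer_1, reviewer_2)
print(f"agreement={agreement:.3f}, kappa={kappa:.3f}")
```

Kappa is lower than raw agreement because it discounts the agreement expected by chance given how often each rater uses each category.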

3.5 Analysis

3.5.1 Completeness of data capture

The computer program used to retrospectively review scanned medical records post discharge from hospital did not have a method for calculating total hospital admissions and hospital discharges between set dates. Therefore, for the purpose of this project, the researchers deemed a comparison between retrospective administrative data collected from i.PM and prospective data collected from ward-based sources to be the most suitable assessment of the completeness of data capture. The number and percentage of data sets captured via each data collection method were presented using descriptive statistics.

3.5.2 Level of Agreement

Investigators compared level of agreement for the 376 admission and discharge data sets captured completely by all three data collection methods. Level of agreement between data collected from scanned medical record review, the electronic patient management program (i.PM), and ward-based sources was analysed using a Bland-Altman comparison for length of stay and Cohen’s Kappa for discharge destination. To determine whether agreement between data collection methods was related to the day of week of discharge, each day of the week was entered into univariate logistic regression analysis as an independent variable, with agreement with inpatient medical record review as the dependent variable.

Statistical analyses were performed using Stata (Version 13, StataCorp, College Station, Texas, USA).
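To illustrate the day-of-week analysis, note that for a single binary predictor (discharged on a given day versus any other day) the odds ratio from a univariate logistic regression equals the cross-product ratio of the corresponding 2×2 table. The Python sketch below is a simplified illustration and not the Stata analysis used in this study: the function name `odds_ratio_wald` is hypothetical, and it uses a conventional Wald confidence interval, which is an assumption and may differ slightly from the robust intervals reported in the tables. The Monday counts are taken from Table 3 and the totals from Table 2.

```python
import math


def odds_ratio_wald(agree_day, total_day, agree_all, total_all, z=1.96):
    """Odds ratio of agreement for one day versus all other days, with Wald CI."""
    a = agree_day                      # agreed, discharged on the day
    b = total_day - agree_day          # disagreed, discharged on the day
    c = agree_all - agree_day          # agreed, discharged on other days
    d = (total_all - total_day) - c    # disagreed, discharged on other days
    if min(a, b, c, d) <= 0:
        raise ValueError("every cell of the 2x2 table must be positive")
    odds_ratio = (a * d) / (b * c)
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper


# Ward-based length of stay data, Monday discharges: 25 of 38 agreed;
# across all days, 282 of 376 agreed.
or_monday, ci_low, ci_high = odds_ratio_wald(25, 38, 282, 376)
print(f"OR={or_monday:.2f} (Wald 95% CI {ci_low:.2f} to {ci_high:.2f})")
```

An odds ratio below 1 indicates that data for discharges on that day were less likely to agree with the medical record than data for discharges on other days.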

4. Results

4.1 Completeness of data capture

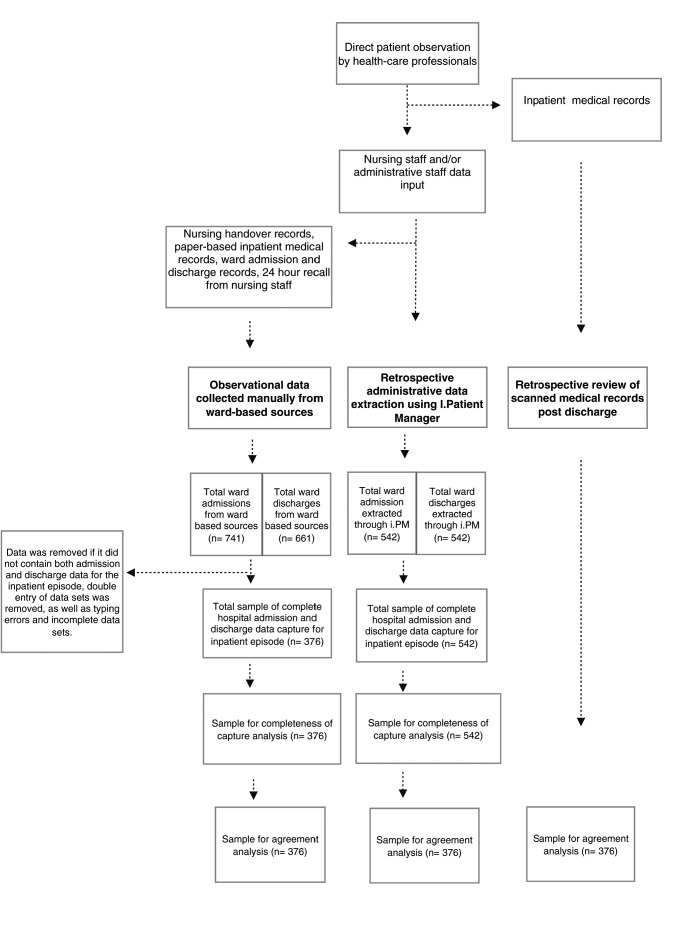

▶ Figure 1 outlines the data collection and analysis process, while ▶ Table 1 outlines the data cleaning process resulting in the identification of 376 complete hospital admission and hospital discharge data sets captured by all three data collection methods. This data set contained 178 (47%) females and 198 (53%) males with an average age of 59 ± 21 years.

Fig. 1. Data collection and analysis process flowchart

Table 1. Data cleaning process

| | Admission data sets | Discharge data sets |
|---|---|---|
| Original data sample | 741 | 661 |
| Double entries, incomplete data, typing errors | 365 | 285 |
| Cleaned data sample | 376 | 376 |

Of the 542 complete sets of hospital admission and hospital discharge data captured retrospectively by i.PM, only 376 (69%) were also captured via manual data collection from ward-based sources by a research assistant. Data collection from ward-based sources did not produce any unique data set that was not captured via i.PM.
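The completeness comparison can be expressed as a set operation on episode identifiers. The following Python sketch is illustrative only: the function name `capture_completeness` is hypothetical, and the identifiers are placeholder integers chosen to reproduce the reported counts, not study data.

```python
def capture_completeness(reference_ids, candidate_ids):
    """Summarise how many reference episodes a candidate method also captured."""
    reference, candidate = set(reference_ids), set(candidate_ids)
    if not reference:
        raise ValueError("reference set must not be empty")
    captured = reference & candidate
    return {
        "captured": len(captured),
        "reference_total": len(reference),
        "percent": 100 * len(captured) / len(reference),
        # Episodes found only by the candidate method (none in this study).
        "unique_to_candidate": len(candidate - reference),
    }


# Placeholder identifiers: 542 episodes in i.PM, 376 of them found on the wards.
ipm_episodes = range(542)
ward_episodes = range(376)
summary = capture_completeness(ipm_episodes, ward_episodes)
print(f"{summary['captured']}/{summary['reference_total']} = {summary['percent']:.0f}%")
```

Reporting the episodes unique to the candidate method alongside the percentage shows whether the smaller data set is a strict subset of the larger one, as was the case here.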

4.2 Level of agreement

Descriptive data describing the level of agreement between data collection methods for length of stay and discharge destination are displayed in ▶ Table 2.

Table 2. Agreement between ward-based sources, electronic patient management program and scanned inpatient medical records

| Agreement with inpatient medical records | Length of stay: ward sources | Length of stay: administrative data | Discharge destination: ward sources | Discharge destination: administrative data |
|---|---|---|---|---|
| Yes | 282 (75.0%) | 351 (93.4%) | 295 (78.5%) | 342 (91.0%) |
| No | 94 (25.0%) | 25 (6.6%) | 81 (21.5%) | 34 (9.0%) |

4.2.1 Length of Stay

Bland-Altman comparison between length of stay data collected via scanned inpatient medical records and from ward-based sources by a research assistant resulted in limits of agreement of –5.323 to 5.637 days with a mean difference of 0.157 days (–0.121 to 0.435). Pitman’s test of difference in variance resulted in r=0.186 (p=0.00).

Bland-Altman comparison between length of stay data collected via scanned inpatient medical records and retrospective administrative data from the electronic patient management program (i.PM) resulted in limits of agreement of –1.564 to 1.415 days with a mean difference of –0.074 days (–0.150 to 0.001). Pitman’s test of difference in variance resulted in r=0.026 (p=0.613).
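As a reminder of how these quantities are derived, a Bland-Altman comparison takes the paired differences between two methods, reports their mean (the bias), and places the limits of agreement at the mean plus or minus 1.96 standard deviations. The Python sketch below is a minimal illustration under stated assumptions, not the Stata analysis used here: the function name `bland_altman`, the direction of subtraction (first method minus second) and the six paired values are all hypothetical.

```python
from statistics import mean, stdev


def bland_altman(method_a, method_b):
    """Return (mean difference, lower limit, upper limit) for paired measurements."""
    if len(method_a) != len(method_b) or len(method_a) < 2:
        raise ValueError("need at least two paired measurements")
    diffs = [a - b for a, b in zip(method_a, method_b)]
    bias = mean(diffs)
    sd = stdev(diffs)  # sample standard deviation of the differences
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


# Hypothetical length of stay (days) for the same six episodes.
medical_record = [3, 5, 2, 10, 7, 1]
ward_sources = [3, 4, 2, 12, 7, 1]
bias, lower, upper = bland_altman(medical_record, ward_sources)
print(f"mean difference={bias:.3f}, limits of agreement {lower:.3f} to {upper:.3f}")
```

Narrower limits of agreement indicate that individual differences between the two methods are smaller, which is the pattern reported above for the administrative data compared with the ward-based data.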

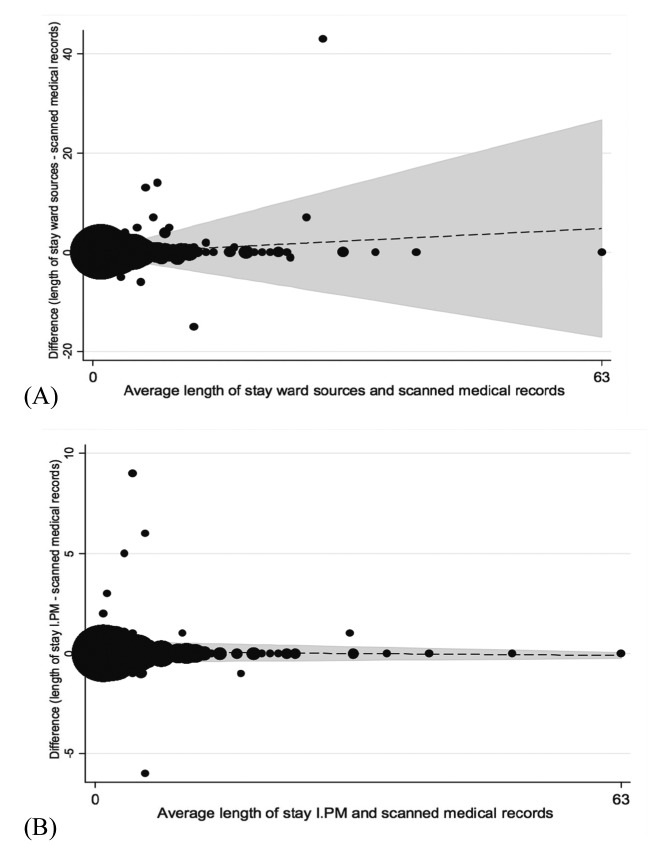

Agreement between data collection methods for hospital length of stay are displayed as Bland-Altman plots (▶ Figure 2), with differences in agreement based on day of week of discharge displayed in ▶ Table 3.

Fig. 2. Bland-Altman plots comparing length of stay using different data collection methods.

Table 3. Agreement between ward-based sources, electronic patient management program (IPM) and scanned medical record (SMR) review for length of stay based on day of week of discharge

| Day of week | Agreement between SMR and ward sources, n (%) | Odds ratio (robust 95% confidence interval) | P-value | Agreement between SMR and IPM, n (%) | Odds ratio (robust 95% confidence interval) | P-value |
|---|---|---|---|---|---|---|
| Monday | 25 (65.8) | 0.61 (0.29–1.25) | 0.17 | 35 (92.1) | 0.81 (0.24–2.74) | 0.74 |
| Tuesday | 41 (73.2) | 0.90 (0.47–1.71) | 0.74 | 54 (96.4) | 2.10 (0.48–9.18) | 0.33 |
| Wednesday | 49 (76.6) | 1.11 (0.59–2.09) | 0.75 | 61 (95.3) | 1.54 (0.44–5.36) | 0.49 |
| Thursday | 36 (69.2) | 0.71 (0.38–1.35) | 0.30 | 49 (94.2) | 1.19 (0.34–4.16) | 0.79 |
| Friday | 39 (75.0) | 1.00 (0.51–1.97) | 1.00 | 48 (92.3) | 0.83 (0.27–2.55) | 0.75 |
| Saturday | 63 (80.8) | 1.52 (0.81–2.83) | 0.19 | 71 (91.0) | 0.65 (0.27–1.57) | 0.34 |
| Sunday | 29 (80.6) | 1.42 (0.60–3.37) | 0.42 | 33 (91.7) | 0.76 (0.21–2.70) | 0.67 |

4.2.2 Discharge Destination

Comparison of discharge destination data collected via scanned inpatient medical records and from ward-based sources by a research assistant showed 83.1% agreement, with a Cohen’s Kappa coefficient of κ=0.63 (p=0.00).

Comparison of discharge destination data collected via scanned inpatient medical records and retrospective administrative data from the electronic patient management program (i.PM) showed 92.0% agreement, with a Cohen’s Kappa coefficient of κ=0.81 (p=0.00).

Differences in agreement based on day of week of discharge are displayed in ▶ Table 4.

Table 4. Agreement between ward-based sources, electronic patient management program (IPM) and scanned medical record (SMR) review for discharge destination based on day of week of discharge

| Day of week | Agreement between SMR and ward sources, n (%) | Odds ratio (robust 95% confidence interval) | P-value | Agreement between SMR and IPM, n (%) | Odds ratio (robust 95% confidence interval) | P-value |
|---|---|---|---|---|---|---|
| Monday | 24 (63.2) | 0.42 (0.21–0.87) | 0.02 | 36 (94.7) | 1.88 (0.43–8.26) | 0.40 |
| Tuesday | 48 (85.7) | 1.77 (0.81–3.89) | 0.15 | 51 (91.1) | 1.02 (0.39–2.68) | 0.97 |
| Wednesday | 51 (79.7) | 1.09 (0.57–2.11) | 0.79 | 60 (93.6) | 1.60 (0.54–4.72) | 0.40 |
| Thursday | 39 (75.0) | 0.80 (0.40–1.58) | 0.52 | 48 (92.3) | 1.22 (0.41–3.65) | 0.72 |
| Friday | 37 (71.2) | 0.63 (0.33–1.22) | 0.17 | 47 (90.4) | 0.92 (0.35–2.44) | 0.87 |
| Saturday | 64 (82.1) | 1.33 (0.70–2.53) | 0.39 | 67 (86.0) | 0.51 (0.23–1.11) | 0.09 |
| Sunday | 32 (88.9) | 2.34 (0.80–6.84) | 0.12 | 33 (91.7) | 1.10 (0.32–3.82) | 0.88 |

5. Discussion

Data accuracy has previously been examined for electronic patient medical records and hospital administration data for multiple clinical outcome measures including diagnostic accuracy and rates of adverse events [5–11, 27]. However, to the authors’ knowledge, this is the first investigation to compare and demonstrate differences in completeness of capture and agreement between hospital data collection methods for length of stay and discharge destination.

Our results demonstrate that the administrative data-set extracted from i.PM captured a larger set of complete hospital admission and hospital discharge data than the ward-based data-set. Importantly there were no unique data captured from ward-based sources that were not captured via i.PM. Therefore, researchers, hospital administrators and clinicians may be able to rely on electronic patient management programs as an effective method of capturing occasions of inpatient episodes of care.

There are many potential contributing factors to the discrepancy in completeness of capture between observational data collected from ward-based sources, and retrospective administrative data extraction from i.PM. Research assistants cannot be present on all wards at all times of the day, so it is possible that data may be missed for patients whose ward admission and discharge occurred when research assistants were not present on the ward, highlighting a limitation of this data collection method. There may have also been limited opportunity for research staff to access all ward resources for all patients on the ward. For example, if a patient was in the operating room, having a procedure or in radiology, their inpatient medical records are not located in the ward but are with the patient. Prospective observational data collection has been used in research examining hospital adverse events [29, 30] where data collectors were integrated into the ward environment and attended regular meetings. This method of data collection is difficult to fund in the current environment where competition for research funding is increasingly fierce. With increasing use of health information technology globally [31, 32] traditional research methodology must evolve to embrace opportunities to collect reliable retrospective data from electronic sources. Our results support the use of retrospective administrative data collection from electronic patient management programs to capture inpatient episodes of care.

Using a Bland-Altman comparison, strong levels of agreement in length of stay were observed between all three investigated data collection methods, with a slightly larger mean difference observed in data from ward-based sources. The less significant p-value in Pitman’s test of variance for the electronic administrative data may reflect the high level of agreement in these data. There was no significant difference in agreement between different days of the week, with weekend data from ward-based sources demonstrating a slight trend towards improved agreement. Although this may appear to indicate that any method of data collection could be used in the hospital setting, the Bland-Altman plots show that data from ward-based sources had lower levels of agreement with inpatient medical record review as length of stay increased (Figure 2A). As can be seen in Figure 2A, one patient had a recorded hospital length of stay in the ward-based data that differed greatly from the other two methods. On further examination, this patient was an inpatient in the health service’s “Hospital in the Home Program” prior to being admitted to the ward. The length of stay collected from the ward-based source represented only a portion of the patient’s total length of stay; however, this was not clear to research assistants when collecting this patient’s data from ward-based sources. This may indicate that for patients with long “outlier” hospital lengths of stay, data collected from ward-based sources are less likely to be correct. These findings have further relevance in the funding of clinical trials where resources may be sought for staffing to manually collect data. This study suggests that retrospective data collection via electronic patient management programs can improve data completeness and agreement compared to manual ward-based data collection when measuring hospital length of stay.

Using Cohen’s Kappa coefficient, the electronic patient management program (i.PM) demonstrated the highest levels of agreement with the gold standard retrospective review of scanned medical records for discharge destination data. Discharge destination data collected from ward-based sources on a Monday were significantly less likely to agree with review of inpatient medical records. This may be due to the change from weekend to weekday staff, or because wards may have been busier on a Monday morning. Lower levels of agreement in data from ward-based sources generally may be due to inaccuracy in recording of discharge destination by ward staff, as these data are not usually recorded for research purposes and there appeared to be a perception among ward staff that accurate recording of discharge destination was of lower organisational importance.

Knowledge of agreement between different hospital data collection methods remains limited despite the importance of data accuracy for research validity and health services quality management. This study was performed in seven wards in a single acute urban Melbourne hospital, which limits the external generalisability of the findings. In addition, as the study measured only a narrow scope of outcome measures using a single program, conclusions should be drawn cautiously for other electronic patient management programs and outcome measures. While inter-rater reliability was tested between research assistants reviewing inpatient medical records, this analysis was not performed for research assistants collecting data from ward-based sources. Due to the simplicity of the task of transcribing (collecting) data from one source to another, it was deemed unlikely that there would be systematic bias between research assistants; however, the authors recognise this as a limitation of this study. Future research should continue to examine accuracy in methods of hospital data collection for the purposes of research and health services management. Due to the limitations of this research, the authors recommend future investigation of the cost effectiveness of data collection methods, as well as a broader scope of outcome measures, for example unplanned hospital readmissions, rapid response teams, and patient adverse events (falls, pressure injuries, and deep vein thrombosis). Inclusion of cost effectiveness of data collection methods using time-intensive comparisons in future research could help to identify what level of investment is essential for the valid and accurate conduct of clinical trials in the hospital setting. Evaluation across multiple hospital sites using different electronic management programs in future research will improve the ability to generalise results across health services.

6. Conclusion

Although previous research has sourced data from electronic patient management programs, this is the first paper to demonstrate differences in completeness of data capture and level of agreement between data collection methods for hospital length of stay and discharge destination. Administrative data from an electronic patient management program showed the highest level of completeness of capture and level of agreement with the gold standard of inpatient medical record review for both length of stay and discharge destination, and therefore may be an acceptable data collection method for these measures.

Acknowledgments

The authors thank staff from Monash Health, Western Health, Melbourne Health, and Monash University, who contributed their time and effort to this investigation, particularly Monash Health ward staff, Allied Health WISER Unit, Health Information Services, and Monash University Allied Health Research Unit.

Footnotes

Clinical Relevance Statement

Electronic administrative data have become a key source of inexpensive and readily available information for hospital outcome measures such as hospital length of stay and discharge destination. While previous studies have shown that the use of administrative data for adverse events and coding for billing purposes may result in inaccurate data, no such studies were identified examining the use of administrative data for key outcome measures such as length of stay and discharge destination. This is the first paper to demonstrate that administrative data from electronic patient management programs are an effective data collection method for key hospital outcome measures (length of stay and discharge destination). Clinicians and hospital administrators can utilise opportunities to collect reliable hospital length of stay and discharge destination data from electronic sources, while researchers can improve data collection methodology when using length of stay and discharge destination as research outcome measures.

Conflict Of Interest

This study was performed in conjunction with a larger stepped-wedge randomised controlled trial examining the effectiveness of acute weekend allied health services. The results reported in this manuscript helped shape the data collection methodology for that trial. All investigators involved in this manuscript are involved in the stepped-wedge randomised controlled trial. This research was funded by a Partnership Grant from the Australian National Health and Medical Research Council (NHMRC). Professor Terry Haines is supported by a Career Development Fellowship (Level 2) from the National Health and Medical Research Council. The NHMRC has had no direct role in the writing of the manuscript or the decision to submit it for publication.

Protection Of Human Subjects

This paper was an observational study only collecting routinely available data from the hospital systems and did not involve any direct intervention to participants. This study was approved by Monash Health Human Ethics Committee (Reference number: 13327B).

References

- 1.Morgan M, Beech R.Variations in lengths of stay and rates of day case surgery: implications for the efficiency of surgical management. Journal of Epidemiology and Community Health 1990; 44(2):90–105. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Shojania KG, Showstack J, Wachter RM. Assessing Hospital Quality: A Review for Clinicians. Effective Clinical Practice 2001; 4(2):82–90. [PubMed] [Google Scholar]

- 3.Brasel KJ, Lim HJ, Nirula R, Weigelt JA. Length of stay: an appropriate quality measure? Archives of Surgery 2007; 142(5):461–466. [DOI] [PubMed] [Google Scholar]

- 4.Faulkner MP, Walsh J, Filby D, Reid M, Stokes B, Torzillo P, Jackson C. National Health Performance Authority Hospital Performance: Length of stay in public hospitals in 2011–2012. 2013; Nov:1–56. [Google Scholar]

- 5.Maresh M, Dawson AM, Beard RW. Assessment of an on line computerized perinatal data collection and information system. British Journal of Obstetrics and Gynecology 1986; 93(12):1239–1245. [DOI] [PubMed] [Google Scholar]

- 6.Ricketts D, Newey M, Patterson M, Hitchin D, Fowler S.Markers of data quality in computer audit: the Manchester Orthopaedic Database. Annals of the Royal College of Surgeons of England 1993; 75(6):393–396. [PMC free article] [PubMed] [Google Scholar]

- 7.Wilton R, Pennisi AJ. Evaluating the accuracy of transcribed computer-stored immunization data. Pediatrics 1994; 94(6):902–906. [PubMed] [Google Scholar]

- 8.Hogan WR, Wagner MM. Accuracy of data in computer-based patient records. Journal of the American Medical Informatics Association 1997; 4(5):342–355. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Tirschwell DL, Longstreth WT. Validating administrative data in stroke research. Stroke 2002; 33(10):2465–2470. [DOI] [PubMed] [Google Scholar]

- 10.Michel P, Quenon JL, de Saraaqueta AM, Scemama Comparison of three methods for estimating rates of adverse events and rates of preventable adverse events in acute care hospitals. British Medical Journal 2004; 328(7433): 199. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Bullano MF, Kamat S, Willey VJ, Barlas S, Watson DJ, Brenneman SK. Agreement between administrative claims and the medical record in identifying patients with a diagnosis of hypertension. Medical care 2006; 44(5):486–490. [DOI] [PubMed] [Google Scholar]

- 12.Zhan C, Miller M.Administrative data based patient safety research: a critical review. Quality and Safety in Health Care 2003; 12(suppl. 2): ii58-ii63. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Wennberg J, Gittelsohn A.Small Area Variations in Health Care Delivery A population-based health information system can guide planning and regulatory decision-making. Science. American Association for the Advancement of Science 1973; 182(4117):1102–1108. [DOI] [PubMed] [Google Scholar]

- 14.Roos LL, Jr, Roos NP, Cageorge SM, Nicol JP. How Good Are the Data?: Reliability of One Health Care Data Bank. Medical Care 1982; 20(3):266–276. [DOI] [PubMed] [Google Scholar]

- 15.May DS, Kelly JJ, Mendlein JM, Garbe PL. Surveillance of major causes of hospitalization among the elderly, 1988. MMWR. CDC surveillance summaries: Morbidity and mortality weekly report. CDC surveillance summaries/Centers for Disease Control 1991; 40(1):7–21. [PubMed] [Google Scholar]

- 16.Rosenthal GE, Harper DL, Quinn LM, Cooper GS. Severity-adjusted mortality and length of stay in teaching and nonteaching hospitals: results of a regional study. Journal of the American Medical Association 1997; 278(6):485–490. [PubMed] [Google Scholar]

- 17. Bernstein CN, Blanchard JF, Rawsthorne P, Wajda A. Epidemiology of Crohn’s disease and ulcerative colitis in a central Canadian province: a population-based study. American Journal of Epidemiology 1999; 149(10):916–924.

- 18. Asch SM, Sloss EM, Hogan C, Brook RH, Kravitz RL. Measuring underuse of necessary care among elderly Medicare beneficiaries using inpatient and outpatient claims. Journal of the American Medical Association 2000; 284(18):2325–2333.

- 19. Goff DC, Jr, Pandey DK, Chan FA, Ortiz C, Nichaman MZ. Congestive heart failure in the United States: is there more than meets the I (CD code)? The Corpus Christi Heart Project. Archives of Internal Medicine 2000; 160(2):197–202.

- 20. Magid DJ, Calonge BN, Rumsfeld JS, Canto JG, Frederick PD, Every NR, Barron HV; National Registry of Myocardial Infarction 2 and 3 Investigators. Relation between hospital primary angioplasty volume and mortality for patients with acute MI treated with primary angioplasty vs thrombolytic therapy. Journal of the American Medical Association 2000; 284(24):3131–3138.

- 21. Schrag D, Cramer LD, Bach PB, Cohen AM, Warren JL, Begg CB. Influence of hospital procedure volume on outcomes following surgery for colon cancer. Journal of the American Medical Association 2000; 284(23):3028–3035.

- 22. Virnig BA, McBean M. Administrative data for public health surveillance and planning. Annual Review of Public Health 2001; 22(1):213–230.

- 23. Hamilton WT, Round AP, Sharp D, Peters TJ. The quality of record keeping in primary care: a comparison of computerised, paper and hybrid systems. British Journal of General Practice 2003; 53(497):929–933.

- 24. Haines T, Skinner E, Mitchell D, O’Brien L, Bowles K, Markham D, Plumb S, Chui T, May K, Haas R, Lescai D, Philip K, McDermott F. Application of a novel disinvestment research design to the use of weekend allied health services on acute medical and surgical wards: randomised trial and economic evaluation protocol. BMC Health Services Research 2014; 14(Suppl. 2):53.

- 25. isofthealth.com. Australia: Computer Sciences Corporation Patient Management (i.PM and webPAS), Inc; Copyright 2014 [cited 2014 Aug 15]. Available from: http://www.isofthealth.com/en-AU/Products/Hospital/Patient%20Management.aspx.

- 26. Feather H, Morgan N. Risk management: role of the medical record department. Topics in Health Record Management 1991; 12(2):40–48.

- 27. Fortinsky R, Gutman JD. A two-phase study of the reliability of computerized morbidity data. The Journal of Family Practice 1981; 13(2):229–235.

- 28. Krippendorff K. Reliability in content analysis. Human Communication Research 2004; 30(3):411–433.

- 29. Andrews LB, Stocking C, Krizek T, Gottlieb L, Krizek C, Vargish T, Siegler M. An alternative strategy for studying adverse events in medical care. The Lancet 1997; 349(9048):309–313.

- 30. Hill AM, Hoffmann T, Hill K, Oliver D, Beer C, McPhail S, Brauner S, Haines TP. Measuring falls events in acute hospitals: a comparison of three reporting methods to identify missing data in the hospital reporting system. Journal of the American Geriatrics Society 2010; 58(7):1347–1352.

- 31. Gerber T, Olazabal V, Brown K, Pablos-Mendez A. An agenda for action on global e-health. Health Affairs 2010; 29(2):233–236.

- 32. Schweitzer J, Synowiec C. The economics of eHealth and mHealth. Journal of Health Communication 2012; 17(suppl. 1):73–81.

- 33. safetyandquality.gov.au. Sydney: Australian Commission on Safety and Quality in Health Care [cited 2014 Aug 11]. Available from: http://www.safetyandquality.gov.au/our-work/clinical-communications/clinical-handover/