Significance

When making decisions together, we tend to give everyone an equal chance to voice their opinion. To make the best decisions, however, each opinion must be scaled according to its reliability. Using behavioral experiments and computational modelling, we tested (in Denmark, Iran, and China) the extent to which people follow this latter, normative strategy. We found that people show a strong equality bias: they weight each other’s opinion equally regardless of differences in their reliability, even when this strategy was at odds with explicit feedback or monetary incentives.

Keywords: social cognition, joint decision-making, bias, equality

Abstract

We tend to think that everyone deserves an equal say in a debate. This seemingly innocuous assumption can be damaging when we make decisions together as part of a group. To make optimal decisions, group members should weight their differing opinions according to how competent they are relative to one another; whenever they differ in competence, an equal weighting is suboptimal. Here, we asked how people deal with individual differences in competence in the context of a collective perceptual decision-making task. We developed a metric for estimating how participants weight their partner’s opinion relative to their own and compared this weighting to an optimal benchmark. Replicated across three countries (Denmark, Iran, and China), we show that participants assigned nearly equal weights to each other’s opinions regardless of true differences in their competence—even when informed by explicit feedback about their competence gap or under monetary incentives to maximize collective accuracy. This equality bias, whereby people behave as if they are as good or as bad as their partner, is particularly costly for a group when a competence gap separates its members.

When it comes to making decisions together, we tend to give everyone an equal chance to voice their opinion. This is not just good manners but reflects a long-standing consensus (“two heads are better than one”; Ecclesiastes 4:9–10) on the “wisdom of the crowd” (1–3). However, a question much contested (4, 5) is, once we have heard everyone’s opinion, how should they be combined so as to make the most of them? One recent suggestion is to weight each opinion by the confidence with which it is expressed (6, 7). However, this strategy may fail dramatically (8, 9) when “the fool who thinks he is wise” is paired with “the wise who [thinks] himself to be a fool” (10). In the face of such a competence gap, knowing whose confidence to lean on is critical (11).

A wealth of research suggests that people are poor judges of their own competence—not only when judged in isolation but also when judged relative to others. For example, people tend to overestimate their own performance on hard tasks; paradoxically, when given an easy task, they tend to underestimate their own performance (the hard-easy effect) (12, 13). Relatedly, when comparing themselves to others, people with low competence tend to think they are as good as everyone else, whereas people with high competence tend to think they are as bad as everyone else (the Dunning–Kruger effect) (14–16). In addition, when presented with expert advice, people tend to insist on their own opinion, even though they would have benefitted from following the advisor’s recommendation (egocentric advice discounting) (17–19). These and similar findings suggest that individual differences in competence may not feature saliently in social interaction. However, it remains unknown whether—and to what extent—people take into account such individual differences in collective decision-making.

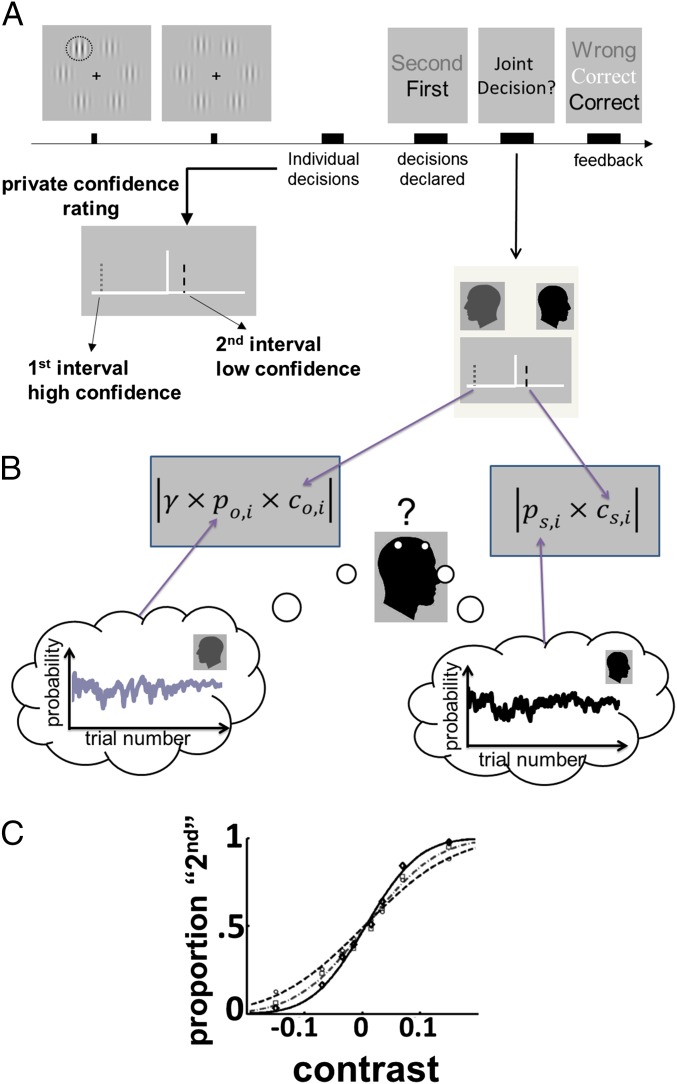

To address this issue, we developed a computational framework inspired by recent work on how people learn the reliability of social advice (20). We used this framework to (i) quantify how members of a group weight each other’s opinions and (ii) compare this weighting to that of an optimal model in the context of a simple perceptual task. On each trial, two participants (a dyad) viewed two brief intervals, with a target in either the first or the second one (Fig. 1A). They privately indicated which interval they thought contained the target, and how confident they felt about this decision. In the case of disagreement (i.e., they privately selected different intervals), one of the two participants (the arbitrator) was asked to make a joint decision on behalf of the dyad, having access to their own and their partner’s responses. Last, participants received feedback about the accuracy of each decision before continuing to the next trial. Previous work predicts that participants would be able to use the trial-by-trial feedback to track the probability that their own decision is correct and that of their partner (21). We hypothesized that the arbitrator would make a joint decision by scaling these probabilities (pself and pother) by the expressed levels of confidence (pself × cself and pother × cother), thus making full use of the information available on a given trial, and then combining these scaled probabilities into a decision criterion (DC = pself × cself + pother × cother). In addition, to capture any bias in how the arbitrator weighted their partner’s opinion, we included a free parameter (γ) that modulated the influence of the partner in the decision process (DC = pself × cself + γ × pother × cother).

Fig. 1.

(A) The schematic shows an example trial. Dyad members observed two consecutive intervals, with a target appearing in either the first or the second one (here indicated by the dotted outline). They then privately decided which interval they thought contained the target and indicated how confident they felt about this decision. Next, their individual decisions were shared, and in the case of disagreement (i.e., they privately selected different intervals), one of the two dyad members (the arbitrator) was randomly prompted to make a joint decision on behalf of the dyad. Feedback about the accuracy of each decision was provided at the end of the trial. (B) We assumed that each participant tracked the probability of being correct given their own decision (thought bubble on the right) and that of their partner (thought bubble on the left). See main text and Methods for details. (C) A psychometric function showing the proportion of trials in which the target was reported to be in the second interval given the contrast difference between the second and the first interval at the target location. Circles, performance of the less sensitive dyad member averaged across dyads; gray squares, performance of the more sensitive dyad member averaged across dyads; and black squares, average performance of the dyad.

In four experiments, we tested whether and to what extent advice taking (γ) varied with differences in competence between dyad members. To anticipate our findings, we found that the worse members of each dyad underweighted their partner’s opinion (i.e., assigned less weight to their partner’s opinion than recommended by the optimal model), whereas the better members of each dyad overweighted their partner’s opinion (i.e., assigned more weight to their partner’s opinion than recommended by the optimal model). Remarkably, dyad members exhibited this “equality bias”—behaving as if they were as good as or as bad as their partner—even when they (i) received a running score of their own and their partner’s performance, (ii) differed dramatically in terms of their individual performance, and (iii) had a monetary incentive to assign the appropriate weight to their partner’s opinion.

Recently, psychological phenomena previously believed to be universal have been shown to vary across cultures—where culture is understood as a set of behavioral practices specific to a particular population (22)—calling the generalizability of studies based on Western, Educated, Industrialized, Rich, and Democratic (WEIRD) participants into question (23, 24). To test whether the pattern of advice taking observed here was culture specific, we conducted our experiments in Denmark, China, and Iran. Broadly speaking, we take these three countries to represent Northern European (Denmark), East Asian (China), and Middle Eastern (Iran) cultures. According to the latest World Values Survey,* 71.1% of Northern European respondents (data from Norway and Sweden) and 52.3% of Chinese respondents, but only 10.6% of Iranian respondents, favored the sentence “most people can be trusted” over “you can never be too careful when dealing with people.” Reflecting this pattern of responses, research using public goods games has shown that Danish participants contribute more than Chinese and that Chinese participants in turn contribute more than Middle Eastern (data available from Turkey, Saudi Arabia, and Oman) (25, 26). Our sample should thus be sensitive to any cultural commonalities or differences in advice taking.

Results

Experiment 1.

A total of 98 healthy adult males (15 dyads in Denmark, 15 dyads in Iran, and 19 dyads in China) were recruited for experiment 1. Each dyad completed 256 trials (Fig. 1A). Participants were separated by a screen and not allowed to communicate verbally with each other. The overall pattern of results was consistent between countries; we have included separate analyses for each country in SI Results. We quantified performance—that is, sensitivity to the contrast difference between the two intervals—as the slope of the psychometric function relating choices (i.e., individual choices and those made by participants on behalf of dyad) to the stimulus values (Fig. 1C; SI Methods).

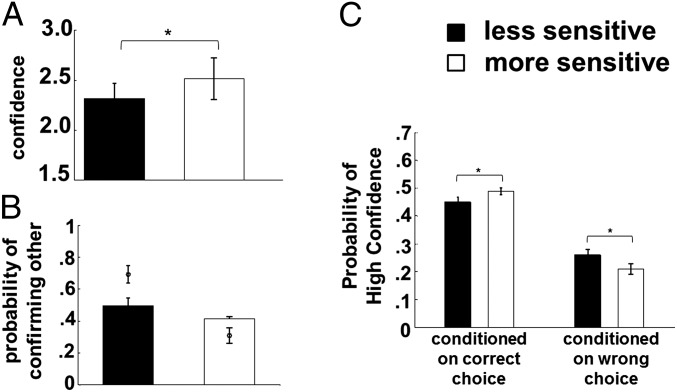

To address the question of how individual differences in competence affect joint decisions, we first asked if the performance of the dyad depended on who arbitrated the trial. More precisely, we calculated dyadic sensitivity (SI Methods) separately for the trials arbitrated by the less and the more sensitive member of each dyad. We defined collective benefit as the ratio of the sensitivity of the dyad to that of the more sensitive dyad member (i.e., sdyad/smax). Collective benefit was significantly higher in trials arbitrated by the more sensitive dyad members [t(46) = −4.18, P < 0.0004; paired t test]. We then asked if participants’ confidence reflected their individual differences in performance. Indeed, the more sensitive dyad members were, on average, more confident [Fig. 2A; t(46) = 2.42, P < 0.02, paired t test].

Fig. 2.

(A) Average confidence. Black bars, less sensitive dyad member; white bars, more sensitive dyad member. (B) Probability of confirming partner’s decision. Circles indicate the recommendation of the optimal model. (C) Probability of high confidence given a correct (left bars) and a wrong (right bars) decision. *P < 0.05. All error bars are 1 SEM.

When collapsed across dyad members, participants showed a small egocentric bias, confirming their partner’s decision in 45 ± 16% (mean ± SD) of disagreement trials. This result is consistent with previous work on egocentric advice discounting (13, 17). However, splitting the data as above showed that the less sensitive dyad members followed their partner’s advice as often as they confirmed their own decision (i.e., 50 ± 20%; mean ± SD). In contrast, the more sensitive dyad members followed their partner’s advice on 41 ± 11% (mean ± SD) of the disagreement trials (Fig. 2B). This difference in advice taking was significant [Fig. 2B; t(46) = −2.35, P < 0.03, paired t test]. Taken together, the results showed that the more sensitive dyad members had a larger—more positive—influence on the joint decisions.

A critical insight, however, came from assessing participants' confidence in their correct and wrong individual decisions. For each participant, we calculated the probability that a participant expressed high confidence in a correct decision (Phigh|correct) and in an incorrect decision (Phigh|error) separately. We defined high confidence trials as those in which the participant’s confidence was higher than their own average confidence. A 2 (dyad member: less vs. more sensitive) × 2 (accuracy: wrong vs. correct decision) mixed ANOVA (dependent variable: Phigh) showed an expected significant main effect of accuracy [Fig. 2C; F(1, 92) = 491.2, P < 0.0001] but, importantly, no main effect of dyad member [Fig. 2C; F(1, 92) = 0.001, P > 0.96]. However, there was a significant interaction between dyad member and accuracy [Fig. 2C; F(1, 92) = 18.31, P < 0.0001]. In particular, the less sensitive dyad members were more likely (compared with their partner) to report high confidence in their incorrect decisions [Fig. 2C; t(46) = −2.15, P < 0.04, paired t test]. Conversely, the more sensitive members were more likely (compared with their partner) to report high confidence in their correct decision [Fig. 2C; t(46) = 2.39, P < 0.03, paired t test]. The interaction suggests that the less sensitive dyad members were more likely (than their partner) to lead the group astray by expressing high confidence in their errors; in contrast, the more sensitive dyad members were more likely, compared to their partner, to lead the group to the right answer by expressing high confidence when they were correct (see SI Methods for details).
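The two conditional probabilities described above can be sketched as follows. This is a minimal illustration rather than the analysis code used in the study: the function name `p_high_given_outcome` and the example data are hypothetical, and confidence is assumed to be an unsigned rating per trial.

```python
from statistics import mean


def p_high_given_outcome(confidences, correct):
    """Return (P(high | correct), P(high | error)) for one participant.

    A trial counts as high confidence when its rating exceeds the
    participant's own mean rating, as described in the text.
    """
    threshold = mean(confidences)
    high = [c > threshold for c in confidences]
    high_on_correct = [h for h, ok in zip(high, correct) if ok]
    high_on_error = [h for h, ok in zip(high, correct) if not ok]
    # With no correct (or no wrong) trials the proportion is undefined.
    p_hc = sum(high_on_correct) / len(high_on_correct) if high_on_correct else float("nan")
    p_he = sum(high_on_error) / len(high_on_error) if high_on_error else float("nan")
    return p_hc, p_he


# Hypothetical data: six trials for one participant.
confidences = [1, 5, 4, 2, 5, 1]
correct = [False, True, True, False, True, True]
print(p_high_given_outcome(confidences, correct))  # (0.75, 0.0)
```

Because the threshold is each participant's own mean, the measure does not depend on how liberally a given participant uses the confidence scale.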

To directly estimate how dyad members weighted each other’s opinions, we adopted a recent computational model (21). When resolving a disagreement, the arbitrator would have to compare their own decision and confidence with those of their partner. Importantly, to make the most accurate judgments, the weight assigned to each member should be informed by the reliability of their individual responses (27, 28). To learn this information, the arbitrator must dynamically track the history of trial outcomes (29). The model solves this task using Bayesian reinforcement learning (21) (SI Methods). In particular, it learns the probability that a given dyad member has made a correct decision on the current trial given their accuracy on previous trials (Fig. 1B). On the first trial, the prediction of probability correct is set to 50%. The model then, trial by trial, updates this prediction according to the discrepancy between the prediction of probability correct and the observed accuracy (i.e., the prediction error). If this prediction error is negative (i.e., the participant was wrong), the prediction of probability correct is decreased, but if this prediction error is positive (i.e., the participant was correct), then the prediction of probability correct is increased. Critically, the magnitude of this update (i.e., the learning rate) varies according to the volatility of each dyad member’s performance. Possible sources of volatility are lapses of attention, changes of arousal level, and/or perceptual learning. If performance is stable, then the trial-by-trial update is small (small learning rate), but if performance is volatile, then the trial-by-trial update is large (high learning rate).
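The prediction-error update described above can be illustrated with a deliberately simplified sketch. Note the swap: the model in the text is Bayesian and adjusts its learning rate to the volatility of each member's performance, whereas the code below uses a plain delta rule with a fixed learning rate. The function name and the `learning_rate` value are hypothetical.

```python
def track_probability_correct(outcomes, learning_rate=0.1, prior=0.5):
    """Return the prediction of probability correct held before each trial.

    Simplification (assumption): a fixed learning rate stands in for the
    volatility-dependent learning rate of the Bayesian model in the text.
    """
    prediction = prior  # the prediction on the first trial is 50%
    history = []
    for correct in outcomes:
        history.append(prediction)
        # Positive when the decision was correct, negative when it was wrong.
        prediction_error = (1.0 if correct else 0.0) - prediction
        prediction += learning_rate * prediction_error
    return history


# A correct trial raises the prediction that is carried into the next trial.
print(track_probability_correct([True, False], learning_rate=0.5))  # [0.5, 0.75]
```

A larger `learning_rate` mimics the volatile case, in which each outcome moves the prediction substantially; a smaller one mimics stable performance.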

In line with previous studies (30, 31), we assumed that the arbitrator, on a given trial, based their decision on their own predicted probability correct, ps,i and their partner’s predicted probability correct, po,i, where s denotes self, o denotes other, and i denotes the trial number (Fig. 1B). Further, we hypothesized that the arbitrator scaled these predictions by the expressed levels of confidence, ps,i × cs,i and po,i × co,i, where the sign of c indicates the participant’s individual choice: negative if a participant chose the first interval and positive for the second interval. Last, we introduced a free parameter, γ, which captured the influence of the partner in the decision process. This gave us the following decision criterion (DC)

$$\mathrm{DC} = p_{s,i} \times c_{s,i} + \gamma \times p_{o,i} \times c_{o,i} \qquad [1]$$

where the arbitrator would respond first interval if DC < 0 and second interval if DC > 0 (SI Methods). As such, γ > 1 indicates that the arbitrator is strongly influenced by their partner, whereas γ < 1 indicates that the arbitrator is weakly influenced by their partner. We estimated this social influence (γ) under two constraints: (i) the parameter value that maximized the fit between the model-derived decisions and the empirically observed decisions (γfit) and (ii) the parameter value that maximized the accuracy of the model-derived decisions (γopt). Critically, by comparing γfit with γopt for each participant, we could judge whether the participant overweighted (γfit > γopt) or underweighted (γfit < γopt) their partner’s opinion relative to their own.
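The following sketch shows how the decision criterion and the two estimates of γ relate. It is an illustration under stated assumptions, not the fitting procedure of the study (see SI Methods for that): the grid search, the helper names, the mapping of DC = 0 to the first interval, and the example trials are all hypothetical.

```python
def decision_criterion(p_self, c_self, p_other, c_other, gamma):
    """DC = p_self * c_self + gamma * p_other * c_other (signed confidence)."""
    return p_self * c_self + gamma * p_other * c_other


def joint_choice(dc):
    """Return 2 (second interval) if DC > 0, otherwise 1 (first interval).

    Assumption: DC == 0, which the text leaves undefined, maps to 1.
    """
    return 2 if dc > 0 else 1


def best_gamma(trials, targets, grid):
    """Return the gamma in `grid` whose model decisions match `targets` most often.

    Pass the arbitrator's observed decisions to obtain a gamma_fit-like value,
    or the true target intervals to obtain a gamma_opt-like value.
    Ties are resolved in favor of the earliest grid value.
    """
    def score(gamma):
        return sum(
            joint_choice(decision_criterion(*trial, gamma)) == target
            for trial, target in zip(trials, targets)
        )
    return max(grid, key=score)


# Hypothetical disagreement trials: (p_self, c_self, p_other, c_other).
trials = [(0.6, 2, 0.8, -3), (0.6, -4, 0.8, 1), (0.6, 1, 0.8, -1)]
observed = [2, 1, 2]  # the arbitrator kept their own choice every time
truth = [1, 1, 1]     # interval that actually contained the target
grid = [0.25, 1.0, 2.0, 4.0]

gamma_fit = best_gamma(trials, observed, grid)
gamma_opt = best_gamma(trials, truth, grid)
print(gamma_fit, gamma_opt)  # 0.25 1.0
```

In this toy example `gamma_fit < gamma_opt`, the pattern the text labels underweighting of the partner's opinion.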

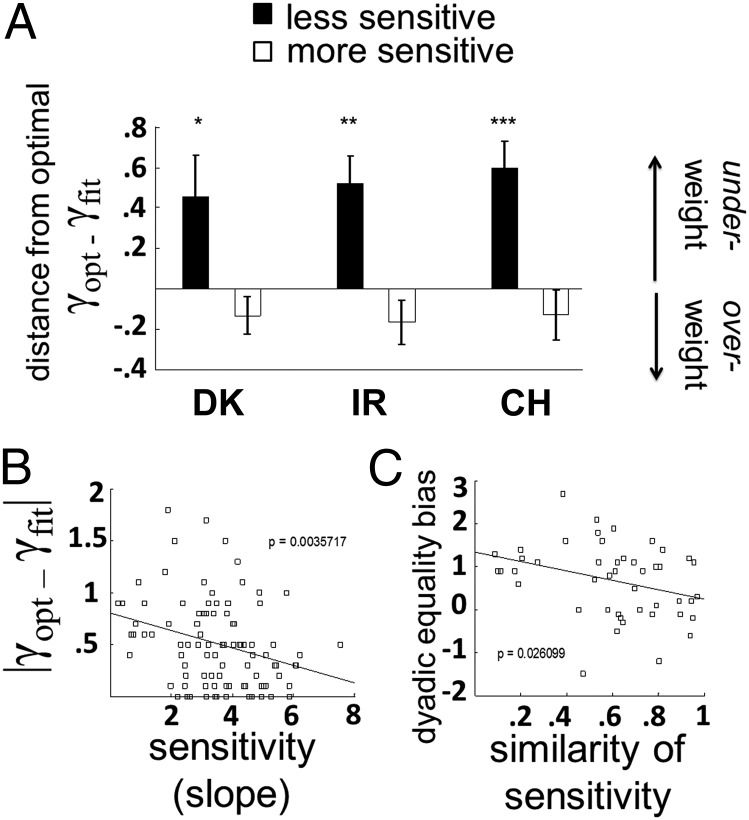

We first tested if the less and the more sensitive dyad members differed in how they weighted their partner’s opinion relative to their own (γfit smaller or larger than γopt). Interestingly, the less sensitive dyad members (smin) underweighted (i.e., γfit < γopt) the opinion of their better-performing partner [Fig. 3A, black bars; t(46) = −5.78, P < , paired t test]. In contrast, the more sensitive dyad members (smax) overweighted (i.e., γfit > γopt) the opinion of their poorer-performing partner [Fig. 3A, white bars; t(46) = 2.27, P < 0.03, paired t test]. Critically, the optimal model (γopt) allowed us to calculate the proportion of trials in which the arbitrator should have taken their partner’s advice. Echoing the previous results, the less sensitive dyad members took their partner’s advice significantly less often than they should have [compare the black bar (empirical data) and the circle above it (optimal model) in Fig. 2B; t(46) = −4.96, P < , paired t test]. In contrast, the more sensitive dyad members took their partner’s advice significantly more often than they should have [compare white bar and corresponding circle in Fig. 2B; t(46) = −3.66, P < 0.007, paired t test]. We return to the implication of these findings for egocentric advice discounting in Discussion.

Fig. 3.

(A) Deviation from optimal performance. Data plotted separately for each country. Positive and negative values indicate over- and underweighting, respectively. Black bars, less sensitive dyad member; white bars, more sensitive dyad member. *P < 0.05; **P < 0.003; ***P < 0.001. Error bars are 1 SEM. (B) Absolute deviation from optimal weighting, plotted against sensitivity (slope of psychometric function). Each data point represents one participant. (C) Combined equality bias is plotted against similarity of sensitivity. Each data point represents a dyad. For B and C, data are collapsed across cultures, and the lines show the least-square linear regression.

We then tested if the less and the more sensitive dyad members differed in terms of how effectively they weighted their partner’s opinion relative to their own (the absolute difference between the optimal and the empirical weight, |γfit − γopt|). Interestingly, the less sensitive dyad members were significantly worse at weighting their partner’s opinion [|γfit − γopt| larger for smin vs. smax; t(46) = 4.58, P < 10⁻⁴, paired t test]. This result is in line with an earlier finding (15) that misjudgment of one’s own competence is more severe in individuals with low competence than in those with high competence (the Dunning–Kruger effect). However, our results go beyond those earlier findings by probing people’s implicit beliefs about relative competence as revealed through their decisions rather than a direct, one-shot report. Moreover, we found that, across participants, the absolute difference between the optimal and the empirical weight was negatively correlated with individual sensitivity (Fig. 3B; r = −0.29, P < 0.004, Pearson’s correlation). In other words, the more sensitive dyad members were better at judging their own competence relative to their partner.

The above results can be described as an equality bias for social influence—that is, participants appeared to arbitrate the disagreement trials as if they were as good as or as bad as their partner. In the context of our model, such an equality bias would entail that the empirical weights, γfit, were clustered around 1, whereas the optimal weights, γopt, were scattered away from 1. Indeed, we found that the empirical weights were significantly closer to 1 [i.e., |1 − γfit| < |1 − γopt|; t(93) = −4.19, , paired t test]. Critically, an equality bias should be useful when true. More precisely, if dyad members are indeed equally sensitive, then the empirical weights and the optimal weight should be close to 1. However, for dyad members with different levels of sensitivity, the empirical and the optimal weight should diverge dramatically as equality bias would be counterproductive. This hypothesis entails that the similarity of dyad members’ sensitivity, smin/smax, should be negatively correlated with combined equality bias (i.e., the summed equality bias of both dyad members), which we defined as (γopt,smin – γfit,smin) – (γopt,smax – γfit,smax). More positive values indicate a higher equality bias (see SI Methods for details). Indeed, this prediction was borne out by the data (Fig. 3C; r = −0.32, P < 0.03, Pearson’s correlation).
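The quantities used in this paragraph are simple to compute once γfit and γopt are available for both members of a dyad. The sketch below restates them in code; the function names and the example weights are hypothetical and serve only to show the sign conventions.

```python
def distance_from_equality(gamma):
    """|1 - gamma|: how far a weight lies from equal weighting."""
    return abs(1.0 - gamma)


def combined_equality_bias(opt_min, fit_min, opt_max, fit_max):
    """(gamma_opt - gamma_fit) for s_min minus the same difference for s_max."""
    return (opt_min - fit_min) - (opt_max - fit_max)


def similarity(s_min, s_max):
    """Ratio of the lower to the higher sensitivity in a dyad (at most 1)."""
    return s_min / s_max


# Hypothetical dyad: both members weight their partner equally (gamma_fit = 1),
# although the less sensitive member should weight the partner more (1.5)
# and the more sensitive member should weight the partner less (0.5).
print(distance_from_equality(1.0) < distance_from_equality(1.5))  # True
print(combined_equality_bias(1.5, 1.0, 0.5, 1.0))  # 1.0
```

With these conventions, underweighting by the less sensitive member and overweighting by the more sensitive member both push the combined measure in the positive direction.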

It is important to note that only the positive values of γopt – γfit indicate egocentric advice discounting. Indeed, when collapsed across all participants, γopt – γfit was significantly positive [one-sample t test comparing γopt – γfit (mean ± SD = 0.19 ± 0.63) to zero; t(93) = 2.95, P < 0.004]. Had we followed the convention established by previous studies on advice taking and investigated the overall average (rather than splitting smin and smax) of γopt – γfit, we might have interpreted our results purely in terms of egocentric advice discounting, whereas our results described above indicate that both types of advice discounting, egocentric and self-discarding, may coexist in social interaction.

Experiment 2.

One critique of the results may be that limitations on memory capacity may have made it too difficult for participants to track the history of trial outcomes (ps,i and po,i), leading to their inability to decide whose opinion is more accurate. We tested this hypothesis in a second study in which participants performed the same task as in experiment 1, except that they were informed about their own and their partner’s cumulative (as well as trial-by-trial) accuracy at the end of each trial. A total of 22 healthy adult males (11 dyads in Iran) were recruited for experiment 2 (see SI Methods for details). Despite the change in feedback, the results were similar to those obtained in experiment 1. In particular, the less sensitive dyad members underweighted (i.e., γfit < γopt) the opinion of their better-performing partner [Fig. S1, black bar; t(10) = −4.69, P < , paired t test]. In contrast, the more sensitive dyad members overweighted (i.e., γfit > γopt) the opinion of their poorer-performing partner [Fig. S1, white bar; t(10) = 3.73, P < 0.004, paired t test]. With cumulative feedback, holding previous trial outcomes in memory was not necessary. However, dyad members still exhibited an equality bias, ruling out limitations on memory capacity as an explanation of the above results.

Experiment 3.

Another possible interpretation of our results may be that dyad members exhibited an equality bias because their individual differences in sensitivity were, at least in some cases, relatively small or accidental. We tested this hypothesis in experiment 3 in which participants performed the same task as in experiment 1, except that we now covertly made the task much harder for one of the two participants. A total of 46 healthy adult males (13 dyads in Denmark and 10 dyads in Iran) were recruited for experiment 3 (see SI Methods for details). Briefly, we first ran a practice session (128 trials), without any social interaction (joint decisions and joint feedback), in which we estimated the sensitivity of each participant. We then ran the experimental session (128 trials), with social interaction as in the previous experiments, in which we covertly introduced noise to the visual stimulus presented to the less sensitive member of each dyad. Critically, this manipulation ensured that dyad members’ performance differed significantly [Fig. S2; smin vs. smax t(22) = 8.85, P < , paired t test]. Despite the conspicuous difference in performance, dyad members still exhibited an equality bias. The less sensitive dyad members underweighted (i.e., γfit < γopt) the opinion of their better-performing partners [Fig. S3, black bars; t(22) = −3.38, P < 0.003, paired t test]. In contrast, the more sensitive dyad members overweighted (i.e., γfit > γopt) the opinion of their poorer-performing partners [Fig. S3, white bars; t(22) = 2.11, P < 0.05, paired t test].

Experiment 4.

Finally, we tested whether monetary incentives would reduce—or even eradicate—the equality bias. We conducted a version of experiment 1 in which participants first bet on their choice individually and then collectively, with the payoff (correct: reward; error: cost) determined by the sum of bets (see SI Methods for details). Replicating our previous results, the less sensitive dyad members underweighted (i.e., γfit < γopt) their partner’s opinion [Fig. S4, black bar; t(16) = −3.91, P < 0.002, paired t test]. Conversely, the more sensitive dyad members overweighted (i.e., γfit > γopt) their partner’s opinion [Fig. S4, white bar; t(16) = 5.04, P < 0.001, paired t test]. The addition of monetary incentives did not affect the equality bias.

Discussion

An important challenge for group decision-making (e.g., jury voting, managerial boards, medical diagnosis teams) is to take into account individual differences in competence among group members. Although theory tells us that the opinion of each group member should be weighted by its reliability (11, 32), empirical research cautions that this is easier said than done. To start with, we tend to grossly misestimate our own competence—not only when judged in isolation but also relative to others (14–16). This raises the question of whether—and to what extent—people take into account individual differences in competence when they engage in collective decision-making.

To address this question, we developed a computational framework inspired by previous work on how people learn the reliability of social advice (21). We quantified how members of a group weighted each other’s opinion in the context of a visual perceptual task. Research on advice taking (18, 19, 33) predicts that group members (irrespective of their relative competence) would insist on their own opinion more often than following their partner. Looking only at the raw behavioral results (Fig. 2B and Fig. S5, bars), one may have concluded that only the better-performing members of each group displayed egocentric discounting and insisted on following their own opinion more often than they followed that of their partner. However, the model-based analysis (Fig. 2B, circles) showed that the better-performing group members should (optimally) have followed their partner’s opinion even less often than they actually did (compare white bar and circle). Conversely, their poorer-performing counterparts should have followed their partner’s opinion even more often than they actually did (compare black bar and circle). Thus, thinking of advice taking as monolithically egocentric may miss the nuances that arise from individual differences in competence in social interaction. Importantly, this divergence between the conclusions from the raw behavioral data and the model-based analysis underscores the power of the latter approach for understanding social behavior.

Our participants exhibited an equality bias—behaving as if they were as good or as bad as their partner. We excluded a number of alternative explanations. We replicated the equality bias in experiment 2 in which participants were presented with a running score of each other’s performance. Therefore, it is unlikely that the bias reflects a previously reported self-enhancement or self-superiority bias (34) as these were (at least for the less sensitive group members) explicitly contradicted by the running score. In addition, experiment 2 ruled out poor memory for partner’s recent performance as an alternative explanation. Moreover, in experiment 3, we replicated the equality bias when participants’ performance was (covertly) manipulated to differ conspicuously from that of their partner. It is therefore unlikely that our findings are an artifact of small, accidental differences in performance (i.e., due to noise and not competence per se) as this manipulation ensured that all differences in competence were large. Additionally, the results of experiments 2 and 3 also rule out ignorance of competence (15) as an explanation of the bias. We note that our results cannot be explained as a statistical artifact of regression to the mean (see SI Methods for detailed discussion of this issue). Finally, we observed the equality bias across three cultures (Denmark, Iran, and China) that differ widely in terms of their norms for interpersonal trust (see Introduction), suggesting that this bias may reflect a general strategy for collective decision-making rather than a culturally specific norm.

So, why do people display an equality bias? People may try to diffuse the responsibility for difficult group decisions (35). In this view, participants are more likely to alternate between their own opinion and that of their partner when the decision is particularly difficult (high uncertainty), thus sharing the responsibility for potential errors. To test the shared responsibility hypothesis directly, one could vary the magnitude of the outcome of the group decisions and quantify the equality bias when (i) the reward is small and the cost of an error is high vs. (ii) the reward is large and the cost is low. The shared responsibility hypothesis would predict a stronger equality bias under condition i. Alternatively, the equality bias may arise from group members’ aversion to social exclusion (36). Social exclusion invokes strong aversive emotions that—some have even argued—may resemble actual physical pain (37). By confirming themselves more often than they should have, the inferior member of each dyad may have tried to stay relevant and socially included. Conversely, the better performing member may have been trying to avoid ignoring their partner. Finally, the equality bias may arise because people “normalize” their partner’s confidence to their own. More precisely, although the better-performing members of each group were more confident (Fig. 2A and Fig. S6), they also overweighted the opinion of their respective partners and vice versa. This suggests that participants may have rescaled their partners’ confidence to their own and made the joint decisions on the basis of these rescaled values.

It is also constructive to consider what cognitive purpose equality bias might serve. Cognitive biases often serve as shortcut solutions to complex problems (38). When group members are similar in terms of their competence, the equality bias simplifies the decision process (and lowers cognitive load) by reducing it to a direct comparison of confidence. Similarly, equality bias may facilitate social coordination, with participants trying to get the task done with minimal effort but inadvertently at the expense of their joint accuracy. Indeed, it has been argued (38) that interacting agents quickly converge on social norms (i.e., coordination devices) to reduce disharmony and chaos in joint tasks. Lastly, a wealth of research on “homophily” in human social networks (39) suggests that our tendency for associating and bonding with similar others may exploit the benefits of an equality bias. However, when a wide competence gap separates group members, the normative strategy requires that each opinion is weighted by its reliability (28). In such situations, an equality bias can be damaging for the group. Indeed, previous research has shown that group performance in the task described here depends critically on how similar group members are in terms of their competence (6, 8, 9). Future research could refine our understanding of this bias by assessing the actual real-life probability of the breakdown of the similarity assumption.

Previous research suggests that mixed-sex dyads are particularly likely to elicit task-irrelevant sex-stereotypical behavior (40, 41). We therefore chose to test male participants only. Our choice leaves open the possibility that the equality bias may be different in female-only or male-female dyads. However, previous studies (42, 43) have shown that women follow an equality norm more often than men when allocating rewards, suggesting that the equality bias may be found for female dyads too. Future studies can use our framework to explore the equality bias in male-female and female-female dyads.

An important question arising from our approach is the extent to which the model parameters are biologically interpretable at the neural level. A number of recent studies have identified the neural correlates of confidence in perceptual and economic decision-making (44, 45). Importantly, these studies focused on single participants, leaving open the possibility that confidence as communicated in social interaction may have a different set of neural correlates, or, at least, involve other stages of processing (46). Indeed, it has been suggested that limitations on the processes underlying communicated confidence may explain why groups sometimes fail to outperform their members (8). Future research should test whether the neural substrates that give rise to confidence for communication differ from those engaged when confidence guides individual behavior (wagering, slowing down, etc.), and, if so, at what stages of processing. Future research could also identify the neural correlates of the arbitration process that ensues once confidence has been expressed.

In the early years of the 20th century, Marcel Proust, a sick man in bed but armed with a keen observer’s vision, wrote “Inexactitude, incompetence do not modify their assurance, quite the contrary” (47). Indeed, our results show that, when making decisions together, we do not seem to take into account each other’s inexactitudes. However, are people able to learn how they should weight each other’s opinions or are they, as implied by Monsieur Proust’s melancholic tone, forever trapped in their incompetence?†

Methods

In four experiments, pairs of participants made individual and joint decisions in a perceptual task (6). We implemented a visual search task with a two-interval forced choice design. Discrimination sensitivity for individuals and dyads was assessed using the method of constant stimuli. Social influence was assessed by using and adapting a previously published computational model of social learning (30). See SI Methods for details.
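As a rough illustration of how discrimination sensitivity can be read off data collected with the method of constant stimuli, the sketch below fits a cumulative Gaussian psychometric function by maximum likelihood over a coarse parameter grid and reports its maximum slope. This is a minimal sketch under stated assumptions, not the procedure documented in SI Methods: the signed stimulus levels, the grid values, the function names, and the use of the maximum slope 1/(σ√(2π)) as the sensitivity measure are all assumptions made here for illustration.

```python
from math import erf, log, pi, sqrt


def cumulative_gaussian(x, bias, sigma):
    """Assumed probability of reporting 'second interval' at signed level x."""
    return 0.5 * (1.0 + erf((x + bias) / (sigma * sqrt(2.0))))


def log_likelihood(levels, n_second, n_trials, bias, sigma):
    """Binomial log-likelihood of the response counts (up to a constant)."""
    total = 0.0
    for x, k, n in zip(levels, n_second, n_trials):
        p = cumulative_gaussian(x, bias, sigma)
        p = min(max(p, 1e-9), 1.0 - 1e-9)  # keep log() finite
        total += k * log(p) + (n - k) * log(1.0 - p)
    return total


def fit_psychometric(levels, n_second, n_trials, biases, sigmas):
    """Grid-search maximum likelihood; returns the best (bias, sigma) pair."""
    candidates = ((b, s) for b in biases for s in sigmas)
    return max(
        candidates,
        key=lambda bs: log_likelihood(levels, n_second, n_trials, bs[0], bs[1]),
    )


def slope(sigma):
    """Maximum slope of the cumulative Gaussian, used here as sensitivity."""
    return 1.0 / (sigma * sqrt(2.0 * pi))
```

A steeper fitted function (smaller σ) then corresponds to higher sensitivity, so the same fit applied to individual and to joint responses would give comparable slopes for each member and for the dyad.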

Acknowledgments

We thank Tim Behrens for sharing the code for implementing the computational model and Christos Sideras for helping collect the data for experiment 4. This work was supported by European Research Council Starting Grant “NeuroCoDec #309865” (to B.B.), the British Academy (B.B.), the Calleva Research Centre for Evolution and Human Sciences (D.B.), the European Union Mind Bridge Project (D.B., K.O., A.R., C.D.F., and B.B.), and the Wellcome Trust (G.R.).

Footnotes

The authors declare no conflict of interest.

*A cross-country project coordinated by the Institute for Social Research of the University of Michigan: www.worldvaluessurvey.org/.

†We addressed this question by splitting our data (experiment 1) into two sessions to test whether participants moved closer to the optimal weight over time. However, we found no statistically reliable difference between the two sessions. This could be because participants’ behavior was stationary or because our data and analysis did not have sufficient power to address the issue of learning.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1421692112/-/DCSupplemental.

References

- 1. Condorcet M. 1785. Essai sur l'application de l'analyse à la probabilité des décisions rendues à la pluralité des voix (de l'Impr. Royale, Paris, France)

- 2. Galton F. Vox populi. Nature. 1907;75(1949):450–451.

- 3. Surowiecki J. The Wisdom of Crowds. Doubleday; Anchor, New York: 2005.

- 4. Galton F. One vote, one value. Nature. 1907;75(1948):414–414.

- 5. Perry-Coste FH. The ballot-box. Nature. 1907;75(1952):509–509.

- 6. Bahrami B, et al. Optimally interacting minds. Science. 2010;329(5995):1081–1085. doi: 10.1126/science.1185718.

- 7. Koriat A. When are two heads better than one and why? Science. 2012;336(6079):360–362. doi: 10.1126/science.1216549.

- 8. Bahrami B, et al. What failure in collective decision-making tells us about metacognition. Philos Trans R Soc Lond B Biol Sci. 2012;367(1594):1350–1365. doi: 10.1098/rstb.2011.0420.

- 9. Bang D, et al. Does interaction matter? Testing whether a confidence heuristic can replace interaction in collective decision-making. Conscious Cogn. 2014;26:13–23. doi: 10.1016/j.concog.2014.02.002.

- 10. Shakespeare W. As You Like It. Wordsworth Editions; London: 1993.

- 11. Nitzan S, Paroush J. Collective Decision Making: An Economic Outlook. Cambridge Univ Press; Cambridge, UK: 1985.

- 12. Gigerenzer G, Hoffrage U, Kleinbölting H. Probabilistic mental models: A Brunswikian theory of confidence. Psychol Rev. 1991;98(4):506–528. doi: 10.1037/0033-295x.98.4.506.

- 13. Soll JB. Determinants of overconfidence and miscalibration: The roles of random error and ecological structure. Organ Behav Hum Dec. 1996;65(2):117–137.

- 14. Falchikov N, Boud D. Student self-assessment in higher-education: A metaanalysis. Rev Educ Res. 1989;59(4):395–430.

- 15. Kruger J, Dunning D. Unskilled and unaware of it: How difficulties in recognizing one’s own incompetence lead to inflated self-assessments. J Pers Soc Psychol. 1999;77(6):1121–1134. doi: 10.1037//0022-3514.77.6.1121.

- 16. Metcalfe J. Cognitive optimism: Self-deception or memory-based processing heuristics? Pers Soc Psychol Rev. 1998;2(2):100–110. doi: 10.1207/s15327957pspr0202_3.

- 17. Yaniv I, Kleinberger E. Advice taking in decision making. Organ Behav Hum Decis Process. 2000;83(2):260–281. doi: 10.1006/obhd.2000.2909.

- 18. Yaniv I. Receiving other people's advice: Influence and benefit. Organ Behav Hum Dec. 2004;93(1):1–13.

- 19. Yaniv I, Kleinberger E. Advice taking in decision making: Egocentric discounting and reputation formation. Organ Behav Hum Dec. 2000;83(2):260–281. doi: 10.1006/obhd.2000.2909.

- 20. Behrens TE, Hunt LT, Rushworth MF. The computation of social behavior. Science. 2009;324(5931):1160–1164. doi: 10.1126/science.1169694.

- 21. Behrens TE, Hunt LT, Woolrich MW, Rushworth MF. Associative learning of social value. Nature. 2008;456(7219):245–249. doi: 10.1038/nature07538.

- 22. Roepstorff A, Niewöhner J, Beck S. Enculturing brains through patterned practices. Neural Netw. 2010;23(8-9):1051–1059. doi: 10.1016/j.neunet.2010.08.002.

- 23. Henrich J, Heine SJ, Norenzayan A. Most people are not WEIRD. Nature. 2010;466(7302):29. doi: 10.1038/466029a.

- 24. Henrich J, Heine SJ, Norenzayan A. The weirdest people in the world? Behav Brain Sci. 2010;33(2-3):61–83, discussion 83–135. doi: 10.1017/S0140525X0999152X.

- 25. Gächter S, Herrmann B, Thöni C. Culture and cooperation. Philos Trans R Soc Lond B Biol Sci. 2010;365(1553):2651–2661. doi: 10.1098/rstb.2010.0135.

- 26. Herrmann B, Thöni C, Gächter S. Antisocial punishment across societies. Science. 2008;319(5868):1362–1367. doi: 10.1126/science.1153808.

- 27. Mannes AE, Soll JB, Larrick RP. The wisdom of select crowds. J Pers Soc Psychol. 2014;107(2):276–299. doi: 10.1037/a0036677.

- 28. Nitzan S, Paroush J. Optimal decision rules in uncertain dichotomous choice situations. Int Econ Rev. 1982;23(2):289–297.

- 29. Daw ND, Doya K. The computational neurobiology of learning and reward. Curr Opin Neurobiol. 2006;16(2):199–204. doi: 10.1016/j.conb.2006.03.006.

- 30. Behrens TEJ, Woolrich MW, Walton ME, Rushworth MFS. Learning the value of information in an uncertain world. Nat Neurosci. 2007;10(9):1214–1221. doi: 10.1038/nn1954.

- 31. Yu AJ, Dayan P. Uncertainty, neuromodulation, and attention. Neuron. 2005;46(4):681–692. doi: 10.1016/j.neuron.2005.04.026.

- 32. Bovens L, Hartmann S. Bayesian Epistemology. Oxford Univ Press; Oxford, UK: 2004.

- 33. Bonaccio S, Dalal RS. Advice taking and decision-making: An integrative literature review, and implications for the organizational sciences. Organ Behav Hum Dec. 2006;101(2):127–151.

- 34. Hoorens V. Self-enhancement and superiority biases in social comparison. Eur Rev Soc Psychol. 1993;4(1):113–139.

- 35. Harvey N, Fischer I. Taking advice: Accepting help, improving judgment, and sharing responsibility. Organ Behav Hum Dec. 1997;70(2):117–133.

- 36. Williams KD. Ostracism. Annu Rev Psychol. 2007;58:425–452. doi: 10.1146/annurev.psych.58.110405.085641.

- 37. Eisenberger NI, Lieberman MD, Williams KD. Does rejection hurt? An FMRI study of social exclusion. Science. 2003;302(5643):290–292. doi: 10.1126/science.1089134.

- 38. Schelling TC. The Strategy of Conflict. Harvard Univ Press; Cambridge, MA: 1980.

- 39. McPherson M, Smith-Lovin L, Cook JM. Birds of a feather: Homophily in social networks. Annu Rev Sociol. 2001;27:415–444.

- 40. Buchan NR, Croson RTA, Solnick S. Trust and gender: An examination of behavior and beliefs in the Investment Game. J Econ Behav Organ. 2008;68(3-4):466–476.

- 41. Diaconescu AO, et al. Inferring on the intentions of others by hierarchical Bayesian learning. PLOS Comput Biol. 2014;10(9):e1003810. doi: 10.1371/journal.pcbi.1003810.

- 42. Kahn A, Krulewitz JE, O'Leary VE, Lamm H. Equity and equality: Male and female means to a just end. Basic Appl Soc Psych. 1980;1(2):173–197.

- 43. Mikula G. Nationality, performance, and sex as determinants of reward allocation. J Pers Soc Psychol. 1974;29(4):435–440.

- 44. De Martino B, Fleming SM, Garrett N, Dolan RJ. Confidence in value-based choice. Nat Neurosci. 2013;16(1):105–110. doi: 10.1038/nn.3279.

- 45. Fleming SM, Huijgen J, Dolan RJ. Prefrontal contributions to metacognition in perceptual decision making. J Neurosci. 2012;32(18):6117–6125. doi: 10.1523/JNEUROSCI.6489-11.2012.

- 46. Shea N, et al. Supra-personal cognitive control and metacognition. Trends Cogn Sci. 2014;18(4):186–193. doi: 10.1016/j.tics.2014.01.006.

- 47. Proust M. Remembrance of Things Past. Wordsworth Editions Ltd.; Ware, UK: 2006.
