Abstract

This paper investigates drive-response synchronization for a class of neural networks with time-varying discrete and distributed delays (mixed delays) as well as discontinuous activations. A rigorous mathematical proof establishes the global existence of Filippov solutions to neural networks with discontinuous activation functions and mixed delays. A state feedback controller and an impulsive controller are designed, respectively, to guarantee global exponential synchronization of the neural networks. By using Lyapunov functions and new analysis techniques, several new synchronization criteria are obtained. Moreover, a lower bound on the convergence rate is explicitly estimated when the state feedback controller is used. The results of this paper are new, and some existing ones are extended and improved. Finally, numerical simulations are given to verify the effectiveness of the theoretical results.

Keywords: Neural networks, Discontinuous activations, Exponential synchronization, Filippov solutions, State feedback control, Impulsive control

Introduction

Although neural networks with piecewise-linear neuron activations or continuously differentiable, strictly increasing sigmoid activations have important applications in signal and image processing (Di Marco et al. 2010, 2005; Kamel and Xia 2009; Cao and Wan 2014; Zhang et al. 2014), it has been reported that neural networks with discontinuous (or non-Lipschitz) neuron activations are an ideal model for neuron amplifiers with very high gain (Forti et al. 2005). For example, the sigmoidal neuron activations of the classical Hopfield network with high-gain amplifiers approach a discontinuous hard-comparator function (Li et al. 1989). The high-gain hypothesis is crucial to make negligible the contribution to the network energy function of the term depending on the neuron self-inhibitions, and to favor binary output formation (Li et al. 1989; Forti and Nistri 2003). When dealing with neural networks possessing high-slope nonlinear activations, it is often advantageous to model them with a system of differential equations with discontinuous neuron activations, rather than studying the case where the slope is high but finite. Since the global convergence of this class of neural networks was first studied in Forti and Nistri (2003), many results have been published on the convergence of periodic (or almost periodic) solutions or equilibrium points of neural networks with discontinuous activations (Forti et al. 2005, 2006; Liu et al. 2011, 2012, 2014; Lu and Chen 2008, 2006; Wang et al. 2009; Cai and Huang 2011; Haddad 1981). Nevertheless, results on the synchronization of this class of neural networks are scarce.

Since the pioneering work of Pecora and Carroll (1990), synchronization has attracted much attention due to its wide applications in engineering, such as secure communication, biological systems, and information processing (Yan and Wang 2014; Yang et al. 2011b, 2014; Liao and Huang 1999; Cao et al. 2013; Li et al. 2013; Rigatos 2014; Xu 2014). In Pecora and Carroll (1990), complete synchronization (synchronization for short) was proposed. Its mechanism is as follows: a chaotic system, called the driver (or master), generates a signal that is sent over a channel to a responder (or slave) identical to the driver; the responder then uses this signal to control itself so that it oscillates in synchrony with the driver. To date, many effective control methods have been proposed to realize synchronization, such as state feedback control, intermittent control, and impulsive control. Recently, by using the state feedback control technique, several quasi-synchronization criteria were obtained in Liu et al. (2011) for neural networks with discontinuous activations and parameter mismatches. The results in Liu et al. (2011) show that, under the usual linear state feedback controller, complete synchronization cannot be realized even without parameter mismatches between the drive and response systems, owing to the discontinuity of the activation function. Afterwards, by using a class of discontinuous state feedback controllers, the authors of Yang and Cao (2013) considered exponential synchronization of neural networks with time-varying delays and discontinuous activations. However, they did not consider distributed delays.

As a matter of fact, a realistic neural network should involve both discrete and distributed delays (Liao and Lu 2011). It is well known that discrete time delays arise in neural networks because of the finite switching speed of the amplifiers and the finite speed of signal transmission in the network, while distributed delays correspond to the presence of a number of parallel pathways with a variety of axon sizes and lengths. The distributed delay is usually bounded since the signal propagation is distributed over a certain time period. An application of distributed delay can be found in Tank and Hopfield (1987), where a neural circuit with bounded distributed delays was designed to solve a general problem of recognizing patterns in a time-dependent signal. Although there are many results on synchronization of continuous neural networks with discrete and distributed delays (mixed delays) (Cao et al. 2007; Yang et al. 2013; Wang et al. 2010; Yang et al. 2011a), as far as we know, there are few results on synchronization of neural networks with discontinuous activations and mixed delays.

On the other hand, the impulsive control technique has found favor with many researchers because it needs small control gains and acts only at discrete time instants. These special characteristics of impulsive control can reduce the control cost drastically (Yang et al. 2011b). However, results on synchronization of neural networks with discontinuous activations under impulsive control have not yet been reported in the literature.

Motivated by the above analysis, this paper aims to investigate global exponential synchronization of neural networks with discontinuous activation functions and time-varying mixed delays via two kinds of control techniques: state feedback control and impulsive control. The bounded distributed delay is related to a delay kernel, which makes the considered model more general. Unlike Lu and Chen (2008), Liu et al. (2012), Lu and Chen (2006), and Haddad (1981), in which a constructive method was used to obtain approximate Filippov solutions of neural networks with discontinuous activations and mixed delays, we show the global existence of Filippov solutions through a rigorous mathematical proof. Then, by using the sign function, a novel state feedback controller and a novel impulsive controller are designed to achieve synchronization. Moreover, a lower bound on the convergence rate is explicitly estimated when the state feedback controller is used. Numerical simulations show the effectiveness of the theoretical results.

Notations

In the sequel, if not explicitly stated, matrices are assumed to have compatible dimensions. is the set of positive integers, denotes the identity matrix of -dimension. is the space of real number. The Euclidean norm in is denoted as , accordingly, for vector , , where denotes transposition. represents each component of is zero. denotes a matrix of -dimension, . stands for the closure of the convex hull of the set .

The rest of this paper is organized as follows. In section “Model description and some preliminaries”, the model of discontinuous neural networks with mixed delays is described. Some necessary assumptions, definitions and lemmas are also given in this section. Exponential synchronization of the considered model under state feedback control and impulsive control is studied in sections “Synchronization under state feedback control” and “Synchronization under impulsive control”, respectively. In section “Examples and simulations”, two examples with their numerical simulations verify the effectiveness of our results. Conclusions and future research interests are given in section “Conclusion”. Finally, acknowledgments are presented.

Model description and some preliminaries

In this paper, we consider the neural network with time-varying discrete and distributed delays which is described as follows:

| 1 |

where is the state vector; , in which , are the neuron self-inhibitions; , and are the connection weight matrices; the activation function represents the output of the network; is the external input vector; the bounded functions and (They can be different) represent unknown time-varying discrete and distributed delays, respectively; is a non-negative bounded scalar function for describing the delay kernel.

The trajectory of the solution of neural network (1) can be any desired state: an equilibrium point, a nontrivial periodic or almost periodic orbit, or even a chaotic orbit. In this paper, we suppose that the activation function is not continuous on . Hence, the system (1) becomes a differential equation with a discontinuous right-hand side. In this case, the uniqueness of the solution of (1) might be lost, and in the worst case, one cannot define a solution in the conventional sense.

In order to study the dynamics of a system of differential equations with a discontinuous right-hand side, we first transform it into a differential inclusion (Filippov 1960) by using Filippov regularization; then, by the measurable selection theorem in Aubin and Cellina (1984), we reach an uncertain differential equation. Thus, studying the dynamics of the system of differential equations with a discontinuous right-hand side is transformed into considering the same problem for the uncertain differential equation. The Filippov regularization is defined as follows.

Definition 1

Filippov (1960) (Filippov regularization). The Filippov set-valued map of at is defined as follows:

where , and is the Lebesgue measure of set .

By Definition 1, at a discontinuity point the Filippov set-valued map gives the convex hull of the limiting values of (ignoring sets of measure zero), while at a continuity point it coincides with .

For example, consider the following initial value problem (IVP):

| 2 |

where

| 3 |

According to Definition 1, the differential inclusion of the system (2) is as follows:

| 4 |

Function in (3) and its convex hull are shown in Fig. 1. Note that convex hull is not related to the values of at the discontinuous points.

Fig. 1.

Function in (3) (left) and convex hull for the (right)
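The regularization in Definition 1 can be illustrated numerically for a scalar activation with an isolated jump. The following is only a minimal sketch under stated assumptions: the function names `filippov_interval` and `hard_comparator` and the offset `delta` are hypothetical and do not come from the paper, and the one-sided limits are approximated by sampling just left and right of the point. The value of the function at the jump is never used, in line with the remark that the convex hull does not depend on it.

```python
from typing import Callable, Tuple


def filippov_interval(f: Callable[[float], float], x: float,
                      delta: float = 1e-8) -> Tuple[float, float]:
    """Approximate the Filippov set-valued map of a scalar function f at x.

    Assumption: f is continuous except at isolated jump points, so the
    one-sided limits can be approximated by sampling at x - delta and
    x + delta. The value f(x) itself is deliberately ignored.
    """
    left = f(x - delta)
    right = f(x + delta)
    # In one dimension the closed convex hull of two values is an interval.
    return (min(left, right), max(left, right))


def hard_comparator(x: float) -> float:
    """Illustrative discontinuous activation (hypothetical example)."""
    if x > 0:
        return 1.0
    if x < 0:
        return -1.0
    return 0.37  # arbitrary value at the jump; the regularization ignores it


print(filippov_interval(hard_comparator, 0.0))  # (-1.0, 1.0)
print(filippov_interval(hard_comparator, 2.0))  # (1.0, 1.0)
```

At a continuity point the interval degenerates to a single value, which matches the statement that the set-valued map coincides with the function away from its discontinuities.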

A vector-value function defined on the interval is called a Filippov solution of (2) if it is absolutely continuous on and satisfies the differential inclusion for . By the measurable selection theorem in Aubin and Cellina (1984), we can find a measurable function such that for almost all (a.a.) and for a.a. .

The following properties hold (Forti and Nistri 2003): for any and ; for any . A set-valued map with nonempty values is said to be upper semicontinuous at if, for any open set containing , there exists a neighborhood of such that . If is closed, has nonempty closed values, and is bounded in a neighborhood of each point , then is upper semicontinuous on if and only if its graph is closed (Forti and Nistri 2003).

From the above discussion, we give the following definition, which specifies what a Filippov solution of the system (1) is.

Definition 2

Cai and Huang (2011). A function , , is a solution (in the sense of Filippov) of the discontinuous system (1) on if:

(i) is continuous on and absolutely continuous on ;

(ii) satisfies

| 5 |

or equivalently, by the measurable selection theorem in Aubin and Cellina (1984),

(ii’) There exists a measurable function , such that for a.a. and

| 6 |

The next definition is the initial value problem (IVP) associated to system (1).

Definition 3

(IVP) Forti et al. (2005). For any continuous function and measurable selection such that for a.a. by an initial value problem associated to (6) with initial condition , we mean the following problem: find a couple of functions , such that is a solution of (6) on for some , is an output associated to , and

| 7 |

Throughout this paper, we assume that

- ()

For every , is continuous except on a countable set of isolated points , where there exist finite right and left limits and , respectively. Moreover, has at most a finite number of jump discontinuities in every compact interval of ;

- ()

There exist nonnegative constants and such that

| 8 |

where , , for ;

- ()

There exist nonnegative constants and such that

| 9 |

where , ;

- ()

There exist a constant and positive constants and such that , , and . Let .

- ()

There exist a positive function and a positive constant such that and .

Remark 1

If there exists even one discontinuous point defined in (), the constant in (9) is positive. When the function is continuous on , then . Hence, the above assumptions include continuous activation function as a special case. This implies that results of this paper are also applicable to corresponding models with continuous function.

Remark 2

Generally, it is difficult to determine the exact value of at the discontinuous points of . What we only know is that is a bounded measurable function from the condition (). In order to realize complete synchronization of neural networks with discontinuous activations, the effects of the uncertainties of the measurable function must be surmounted. Obviously, the paper Liu et al. (2011) did not solve this problem.

In the literature, there are many results on the existence of solutions of differential inclusions with and without delays (Benchohra and Ntouyas 2000; Balasubramaniam et al. 2005). The main tool for proving the existence of solutions of differential inclusions is a fixed point theorem for condensing maps due to Martelli (1975). Inspired by Benchohra and Ntouyas (2000) and Balasubramaniam et al. (2005), we shall prove that, under the above conditions, the neural network (1) admits global solutions in the sense of Filippov. Before starting our proof, we first introduce the fixed point theorem for condensing maps developed in Martelli (1975).

Lemma 1

Martelli (1975). Let be a Banach space and a condensing map. If the set is bounded, then has a fixed point, where denotes the set of all nonempty bounded closed and convex subsets of .

By using Lemma 1, we get the following Lemma 2. The analysis techniques are similar to those used in Benchohra and Ntouyas (2000) and Balasubramaniam et al. (2005). To deal with the distributed delay, the order of integration is exchanged in (14).

Lemma 2

Suppose that – are satisfied. Then, there exists at least one solution of discontinuous neural network (1) on in the sense of Eq. (7).

Proof

Transform the problem in (7) into a fixed point problem. Consider the multi-valued map defined by

| 10 |

where is the Banach space of continuous functions from into normed by .

It is clear that the fixed points of are solutions to the IVP of (7).

It should be remarked that a completely continuous multi-valued map is the simplest example of a condensing map. By a process similar to steps 1–4 in the proof of Theorem 3.2 in Balasubramaniam et al. (2005) and steps 1–4 in the proof of Theorem 3.1 in Benchohra and Ntouyas (2000), it is easy to show that, under the assumptions –, is a completely continuous multi-valued map that is upper semicontinuous with convex closed values.

Now we prove that the set is bounded.

Let , then for some . Thus there exists such that

| 11 |

Denote . For any , one has from and (11) that

| 12 |

It is easy to get that

| 13 |

where is the inverse function of .

Since is a non-negative bounded scalar function, there exists a positive constant such that for . It can be derived from and that

| 14 |

Therefore, it can be obtained from , (12), (13), and (14) that

where , .

Utilizing the Gronwall inequality yields:

| 15 |

It is obvious that for . Considering the inequality (15), we have , which implies that is bounded. As a consequence of Lemma 1, we deduce that has a fixed point which is a solution of (7).

From the above derivation process we also know that is bounded for any positive time, and hence it is defined on . This completes the proof.
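For readers who want the inequality behind (15) spelled out, one standard integral form of the Gronwall inequality is recalled below. The symbols $u$, $\alpha$, $\beta$, $a$ and $b$ are generic placeholders chosen here, not the paper's notation, and the exact variant applied in the proof may differ.

```latex
% Gronwall inequality (integral form), generic notation:
% u is continuous on [a,b], \beta \ge 0 is continuous on [a,b],
% and \alpha is a constant.
u(t) \le \alpha + \int_a^t \beta(s)\,u(s)\,\mathrm{d}s
  \quad \text{for all } t \in [a,b]
\;\Longrightarrow\;
u(t) \le \alpha \exp\!\Big(\int_a^t \beta(s)\,\mathrm{d}s\Big)
  \quad \text{for all } t \in [a,b].
```

The conclusion bounds the function on every finite interval, which is the kind of a priori bound needed to apply Lemma 1.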

Remark 3

By exchanging the order of integration and using integration by substitution, Lemma 2 gives sufficient conditions for the existence of Filippov solutions to the neural network with time-varying mixed delays. It is easy to prove the existence of Filippov solutions to the neural network with time-varying discrete delay and unbounded distributed delay by using the same analysis technique as in the proof of Lemma 2. As far as we know, no published paper gives a rigorous mathematical proof of the existence of Filippov solutions to neural networks with discontinuous activation functions and mixed delays. Although researchers have made many attempts, to the best of our knowledge this problem has not been solved in the literature so far. Recently, the authors of Cai and Huang (2011) had to treat the distributed delay as a discrete delay [see the proof of Lemma 3.1 in Cai and Huang (2011)], while the authors of Lu and Chen (2008), Liu et al. (2012), Lu and Chen (2006), and Haddad (1981) only obtained approximate Filippov solutions to such discontinuous neural networks with mixed delays by constructing a sequence of continuous delay differential equations with high-slope right-hand sides. Moreover, the conditions on the discontinuous activation functions in Lemma 2 are very general, whereas the discontinuous activation functions in Lu and Chen (2008), Liu et al. (2012), Lu and Chen (2006), and Haddad (1981) were monotonically nondecreasing or uniformly locally bounded. This is another reason for giving Lemma 2.

Based on the drive-response concept for synchronization proposed by Pecora and Carroll (1990), we consider the neural network model (1) as the drive system; the controlled response system is given in the following form:

| 16 |

where is the state of the response system, is the controller to be designed, the other parameters are the same as those defined in system (1).

In view of Definitions 2, 3 and Lemma 2, the initial value problem of system (16) is

| 17 |

According to Pecora and Carroll (1990), if , then (17) is said to be synchronized with (1) under the controller .

Remark 4

Since the measurable functions and are uncertain at the discontinuous points of the activation function , the usual state feedback and impulsive controllers, such as those in Liu et al. (2011) and Yang et al. (2011b), cannot realize synchronization between systems (1) and (17). The results of Liu et al. (2011) show that only quasi-synchronization can be achieved when the usual linear state feedback controllers are added to systems with discontinuous right-hand sides and discrete time delays. As for synchronization of neural networks with discontinuous activations or other systems with discontinuous right-hand sides, we have not found any similar result in the literature, let alone results on synchronization of neural networks with time-varying mixed delays via impulsive control. This implies that realizing synchronization of neural networks with discontinuous activations and time-varying mixed delays is not an easy task.

Remark 5

Although achieving synchronization of neural networks with discontinuous activations under control is difficult, the stability of neural networks with discontinuous activations such as (1) without control can be ensured by designing special connection weight matrices; for instance, the matrices should satisfy the Lyapunov Diagonal Stable (LDS) condition (Forti et al. 2006) or other linear matrix inequalities (LMIs) (Lu and Chen 2008; Wang et al. 2009; Liu et al. 2012; Cai and Huang 2011). In this paper, we shall study the exponential synchronization of (16) and (1) without using these special and strict conditions.

In this paper, we study exponential synchronization between the neural networks (16) and (1), i.e., by adding a suitable controller to (16), the state of (16) can be exponentially synchronized onto the state of (1). Based on the discussion above, if the state of (17) is exponentially synchronized onto the state of (7), then our synchronization goal is realized. Let . Subtracting (7) from (17) yields the following error system:

| 18 |

where .

Now we introduce the definition of exponential synchronization, which is used in this paper.

Definition 4

The controlled neural network (16) with discontinuous activations is said to be exponentially synchronized with system (1) if there exist positive constants such that

hold for .

Obviously, is the equilibrium point of the error system (18) when . If system (18) realizes global exponential stability at the origin for any given initial condition, then the global exponential synchronization between (16) and (1) [or (17) and (7)] is achieved.

Let be a locally Lipschitz continuous function. Clarke's generalized gradient of at (Clarke 1983) is defined by , where is the set of Lebesgue measure zero where does not exist, and is an arbitrary set with measure zero.

The next lemma will be useful to compute the time derivative along solutions (18) of the Lyapunov function designed in the later sections.

Lemma 3

(Chain rule) Clarke (1983). If is C-regular (Clarke 1983) and is absolutely continuous on any compact subinterval of , then and are differentiable for a.a. and

| 19 |

where is the Clarke generalized gradient of at .
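As a simple illustration of the generalized gradient and the chain rule, consider the scalar absolute-value function, which is convex and hence C-regular. This worked example uses generic symbols ($V$, $x$, $\zeta$) chosen here for illustration; it is not claimed to be the Lyapunov function used in the later sections.

```latex
% Clarke generalized gradient of V(x) = |x|, x \in \mathbb{R}:
\partial V(x) =
\begin{cases}
\{1\},    & x > 0,\\
[-1,\,1], & x = 0,\\
\{-1\},   & x < 0.
\end{cases}
% Chain rule along an absolutely continuous x(t), for a.a. t:
\frac{\mathrm{d}}{\mathrm{d}t}\,|x(t)| = \zeta\,\dot{x}(t)
\quad \text{for every } \zeta \in \partial V(x(t)).
```

Away from the origin this reduces to the familiar derivative $\operatorname{sign}(x(t))\,\dot{x}(t)$; at the origin the gradient is the whole interval $[-1, 1]$, the same convexification that appears in the Filippov regularization.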

The next two lemmas will be utilized in this paper.

Lemma 4

Wang et al. (1992). If are real matrices with appropriate dimensions, then there exist number such that

Lemma 5

Yang et al. (2011a). Suppose is a non-negative bounded scalar function defined on and . For any constant matrix , , and vector function for , one has

provided the integrals are all well defined.

Synchronization under state feedback control

In the literature, there are many results concerning exponential synchronization of continuous neural networks with discrete and/or distributed delays. However, few results have been published on exponential synchronization of discontinuous neural networks with discrete and/or distributed delays. The main difficulty in studying exponential synchronization comes from the discontinuous activations, which result in non-zero uncertain measurable selections and in the drive and response systems. To overcome this difficulty, in this section we design a novel state feedback controller such that the uncertainty can be handled and the controlled neural network (16) can realize global exponential synchronization with system (1).

The following Theorem 1 is our first result.

Theorem 1

Suppose that the assumptions – are satisfied. Then the neural networks (1) and (16) can achieve global exponential synchronization under the following controller:

| 20 |

where , , is a positive constant, is a constant satisfying

| 21 |

, is the sign function. Moreover, the lower bound on the convergence rate is .

Proof

Define the following Lyapunov functional candidate:

In view of , one has . Computing the derivative of along trajectories of error system (18), we get from , Lemma 3, and the calculus for differential inclusions in Paden and Sastry (1987) that:

| 22 |

It follows from Lemma 3 that

| 23 |

and

| 24 |

By virtue of Lemma 4, it can be obtained from that

| 25 |

On the other hand, it is easy to get that

| 26 |

Therefore, it follows from (21) and (26) that

| 27 |

Substituting (23–27) into (22) produces that

| 28 |

where .

By virtue of , one gets that

| 29 |

Hence, it follows from (22) and (29) that

| 30 |

According to Gronwall inequality, one derives from (30) that

which implies the following inequality:

where .

According to Definition 4, the neural networks (1) and (16) achieve global exponential synchronization. This completes the proof.

Remark 6

According to Theorem 1, the designed state feedback controller (20) can realize global exponential synchronization between the discontinuous neural networks (1) and (16). According to the definition of the sign function ( if , if , and if ), the controller (20) becomes if , if , and if , . Hence, the role of the controller is as follows: it decreases when , it increases when , and the controller is not needed when . From the inequality (27) one can see that the term in the controller (20) handles the uncertainties of the measurable selections. To the best of our knowledge, no result concerning complete synchronization of discontinuous neural networks with time-varying mixed delays has been published in the literature, let alone exponential synchronization of such networks. Recently, in Liu et al. (2011), the synchronization of coupled discontinuous neural networks and other chaotic systems was investigated by using the classical state feedback controller, i.e., controller (20) without the term . However, only quasi-synchronization criteria were derived, because that controller cannot cope with the uncertainties of the measurable selections in the drive and response systems. Moreover, the authors of Liu et al. (2011) did not consider distributed delays. Therefore, Theorem 1 in this paper is new and improves the corresponding results in Liu et al. (2011).
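The case analysis in Remark 6 can be made concrete with a small sketch. It assumes that the controller acts componentwise as a linear feedback term plus a sign term, u_i = -k_i e_i - eta_i sign(e_i). This form is inferred from the remark and is an assumption, since the displayed equation (20) is not reproduced here. The names `state_feedback`, `k` and `eta` are hypothetical.

```python
from typing import List, Sequence


def sign(v: float) -> float:
    """Sign function: 1 for v > 0, -1 for v < 0, and 0 for v == 0."""
    if v > 0:
        return 1.0
    if v < 0:
        return -1.0
    return 0.0


def state_feedback(e: Sequence[float], k: Sequence[float],
                   eta: Sequence[float]) -> List[float]:
    """Assumed controller form: u_i = -k_i * e_i - eta_i * sign(e_i).

    e   : synchronization error (response state minus drive state)
    k   : linear feedback gains (hypothetical name)
    eta : gains of the discontinuous sign term (hypothetical name)
    """
    return [-k_i * e_i - eta_i * sign(e_i)
            for e_i, k_i, eta_i in zip(e, k, eta)]


# Positive error -> negative control, negative error -> positive control.
u = state_feedback([2.0, -1.0], k=[3.0, 3.0], eta=[0.5, 0.5])
print(u)  # [-6.5, 3.5]
```

Setting `eta` to zero recovers a classical linear state feedback controller, which is the controller the remark describes as yielding only quasi-synchronization.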

Remark 7

In the proof of Theorem 1, we do not use the well-known Halanay inequality. One advantage of the method used in the proof is that the relationship between the control gain and the convergence rate is explicit, which is less apparent when the Halanay inequality is used. Moreover, the Lyapunov functional (22) used here is simple and does not include the exponential function . In sum, by using a simple Lyapunov functional and a new proof method, we simplify the proof.

Synchronization under impulsive control

In the above section, the designed state feedback controller leads to global exponential synchronization between (1) and (16). However, the corresponding control cost may be high. A well-designed controller should not only realize the synchronization goal but also reduce the control cost. Impulsive control, as an effective control technique, is activated only at isolated time instants. Obviously, the control cost can be drastically reduced if the response system can be synchronized with the drive system under impulsive control. Impulsive control has been extensively used to study synchronization (Yang et al. 2011b), but results on synchronization of neural networks with discontinuous activations via impulsive control are scarce, let alone results for discontinuous neural networks with mixed delays. Hence, in the present section, a novel impulsive controller is designed such that the system (16) globally exponentially synchronizes with system (1). Furthermore, a useful corollary is given that reduces the conservativeness of the synchronization criterion as much as possible.

The impulsive controller is designed as

| 31 |

where , are constants to be determined, and are defined in Theorem 1, the time sequence satisfies , and , is the Dirac impulsive function.

With the impulsive controller (31), the synchronization error system (18) becomes the following hybrid impulsive system:

| 32 |

where , , .
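The hybrid structure (continuous flow between impulse instants, jumps at the impulse instants) can be sketched on a toy problem. Everything in the block below is an assumption made for illustration: a scalar system without delays, a hypothetical activation with a unit jump at zero, equally spaced impulses, a jump rule of the form e(t_k^+) = (1 + mu) e(t_k^-), and a sign term acting between impulses. None of the parameter values come from the paper.

```python
import math
from typing import List


def sign(v: float) -> float:
    """Sign function: 1 for v > 0, -1 for v < 0, and 0 for v == 0."""
    if v > 0:
        return 1.0
    if v < 0:
        return -1.0
    return 0.0


def activation(v: float) -> float:
    """Hypothetical discontinuous activation with a jump of size 1 at 0."""
    return math.tanh(v) + 0.5 * sign(v)


def simulate_impulsive(mu: float, eta: float, period: float,
                       h: float = 0.0005, t_end: float = 5.0,
                       x0: float = 0.5, y0: float = -1.5,
                       c: float = 1.0, a: float = 3.0) -> List[float]:
    """Forward Euler simulation of a toy drive-response pair.

    Drive:    x' = -c x + a f(x) + sin(t)
    Response: y' = -c y + a f(y) + sin(t) - eta * sign(y - x)
    Impulse:  every `period` time units the error is rescaled by (1 + mu).
    Returns the absolute error |y - x| after each Euler step.
    """
    steps = int(round(t_end / h))
    steps_per_impulse = int(round(period / h))
    x, y = x0, y0
    errors = []
    for n in range(steps):
        t = n * h
        if n > 0 and n % steps_per_impulse == 0:
            y += mu * (y - x)  # jump: e(t_k^+) = (1 + mu) * e(t_k^-)
        e = y - x
        dx = -c * x + a * activation(x) + math.sin(t)
        dy = -c * y + a * activation(y) + math.sin(t) - eta * sign(e)
        x += h * dx
        y += h * dy
        errors.append(abs(y - x))
    return errors


errors = simulate_impulsive(mu=-0.8, eta=3.5, period=0.1)
print(f"initial |e| = {errors[0]:.3f}, final |e| = {errors[-1]:.2e}")
```

In this toy setting the flow between impulses may let a large error grow, while each impulse shrinks it by the factor |1 + mu|; the error decays when the shrinkage per impulse outweighs the growth over one impulse interval, which is the kind of trade-off that conditions such as (33)–(35) quantify for the full model.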

The following Theorem 2 states sufficient conditions guaranteeing global exponential synchronization between (1) and (16) under the impulsive controller (31).

Theorem 2

Suppose that the assumptions – are satisfied. Then the neural networks (1) and (16) can achieve global exponential synchronization under the impulsive controller (31), if there exist positive constants such that the following inequalities hold:

| 33 |

| 34 |

| 35 |

where , .

Proof

Define the following Lyapunov functional candidate:

| 36 |

Computing the derivative of along trajectories of error system (32) for yields:

| 37 |

For constants , one has from Lemma 3 that

| 38 |

and

| 39 |

Substituting (25), (27), (38) and (39) into (37) produces that

| 40 |

On the other hand, when , it is obtained from inequality (34) and the second equation of (32) that

| 41 |

For any , let be the unique solution of the following impulsive delay system:

| 42 |

where , , .

For , using the ordinary differential equation theory, one gets

| 43 |

For , one obtains from (43) and the second equation of (42) that

| 44 |

and

| 45 |

It follows that

By induction, we can derive that, for ,

| 46 |

It is derived from that

| 47 |

where .

It follows from (46) and (47) that

| 48 |

where . Now define

It can be derived from and (35) that

Since and is continuous on , there exists a unique constant such that

| 49 |

It is obvious that

We claim that the inequality

| 50 |

holds for . If it is not true, there exists a point such that

| 51 |

and

| 52 |

Then one has from (48) and (52) that

| 53 |

By simple computation, one can get

| 54 |

Noticing , one derives from (49), (53), and (54) that

which contradicts (51). So (50) holds. Letting , one gets from the lemma 3 in Yang et al. (2011a) that

which implies for . According to Definition 4, the neural networks (1) and (16) realize global exponential synchronization under the impulsive controller (31). This completes the proof.

In Theorem 2, for given and , different values of lead to different values of . It is well known that a larger can further reduce the control cost. To enlarge the value of , appropriate values of and in (35) should be taken. The following Corollary 1 solves this problem.

Corollary 1

Suppose that the assumptions - are satisfied. Then the neural networks (1) and (16) can achieve global exponential synchronization under the impulsive controller (31), if the inequalities (33), (34) and the following inequality hold:

| 55 |

where and are defined in Theorem 2.

Proof

Define the function with variables as

To reduce the conservativeness of (35), we only need to find a point such that takes its minimum value and . By simple computation, one has and . Letting , one gets . Since and , takes its minimum value at the point . Letting , one derives (55). This completes the proof.

Remark 8

When the activation function is continuous, the constant becomes zero. In this case, the state feedback controller (20) and impulsive controller (31) are also effective, and the constant can be chosen as . When , the controllers (20) and (31) become the classical state feedback (Liu et al. 2011) and impulsive (Yang et al. 2011b) controllers, respectively. Moreover, the bounded distributed delay becomes the usual one when the delay kernel . It is obvious from Lemma 4 and the proofs of Theorems 1 and 2 that the distributed delay can be extended to the unbounded case. Hence, the results of this paper are general and are applicable to neural networks with both discontinuous and continuous activations.

Remark 9

In this paper, the existence of Filippov solutions to a class of discontinuous neural networks with time-varying mixed delays is established, and the analysis technique differs from those in Liu et al. (2012) and Cai and Huang (2011). One may ask whether the existence of the solution of (17) is guaranteed when the controller is added to the system. This question can also be answered here. When is the state feedback controller (20), the existence of the solution of (17) is guaranteed by the same analysis technique as in the proof of Lemma 2. In the case of the impulsive controller (31), one can first translate (17) into the form of (46) by a method similar to that in (43–46), and then apply the same analysis technique as in the proof of Lemma 2. To avoid tedious repetition, we do not give all the proofs in this paper.

Examples and simulations

In this section, numerical examples and figures are given to show the theoretical results obtained above. Consider the following delayed neural networks:

| 56 |

where , , , , , , and

the activation function is with

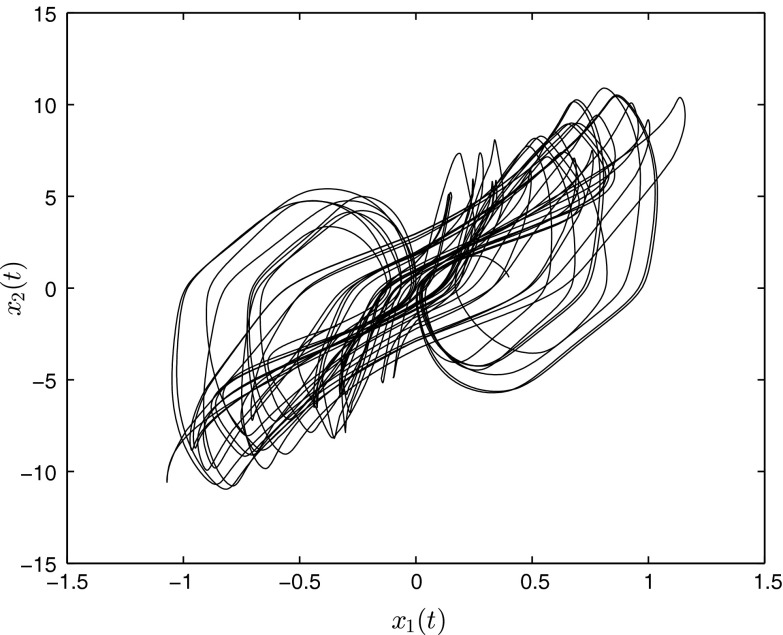

Figure 2 shows the trajectory of (56) with initial condition .

Fig. 2.

Trajectory of system (56) with initial value

Obviously, neural network (56) satisfies – with , , , , . Now consider the following controlled response system of system (56):

| 57 |

When no controller is added to the response system (57), systems (56) and (57) cannot achieve synchronization; see Fig. 3.

Fig. 3.

Time response of the trajectory error between systems (56) and (57) without control

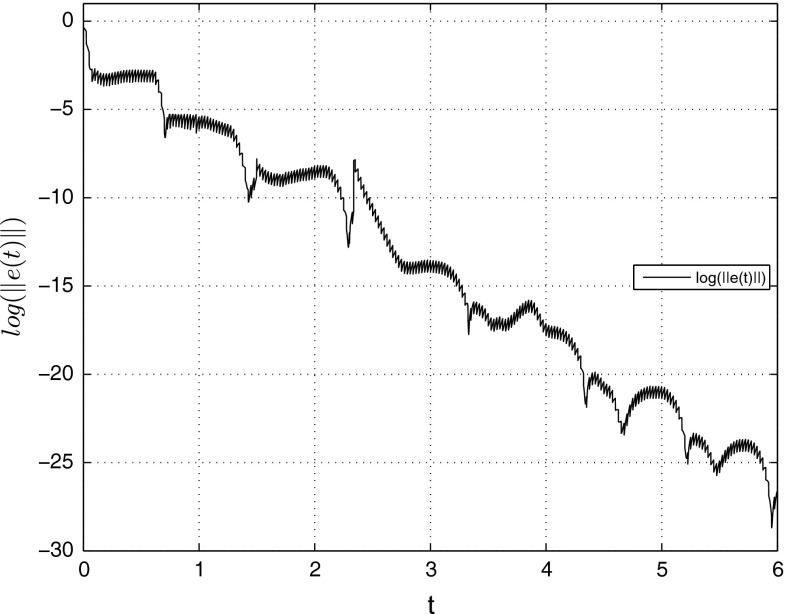

In the first case, we use the state feedback controller (20). Take . By simple computation, we get , , . Take , , . According to Theorem 1, system (57) can be exponentially synchronized with (56) under the state feedback controller (20) and the convergence rate is .

In the numerical simulations, we use the forward Euler method, which was used in Danca (2004) to obtain numerical solutions of differential inclusions. The simulation parameters are as follows: the step length is 0.0005, and for . The trajectory of is presented in Fig. 4, from which one can see that , so the convergence rate of is larger than 4.9, which in turn is larger than . Therefore, the results of Theorem 1 are verified.
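The forward Euler scheme described above can be sketched as follows. This is only an illustration under stated assumptions: the weight matrices, the activation `f`, the constant delays `tau` and `theta`, the initial histories, and the gains `k` and `rho` are all hypothetical and are not the values of systems (56) and (57). The controller is assumed to combine a linear feedback term with a sign term, which is our reading of Remark 10 and not a reproduction of (20).

```python
import numpy as np

def f(x):
    # Hypothetical discontinuous activation: smooth part plus a unit jump at 0.
    return np.tanh(x) + 0.5 * np.sign(x)

def simulate_state_feedback(k=20.0, rho=12.0, h=0.0005, T=3.0,
                            tau=0.5, theta=0.25):
    # Illustrative weights (assumptions, not the parameters of system (56)).
    C = np.eye(2)
    A = np.array([[2.0, -0.1], [-5.0, 3.0]])
    B = np.array([[-1.5, -0.1], [-0.2, -2.5]])
    D = np.array([[-0.3, 0.1], [0.1, -0.2]])
    n_tau = int(round(tau / h))      # discrete delay in steps
    n_theta = int(round(theta / h))  # distributed-delay window in steps
    n_hist = max(n_tau, n_theta)
    n_steps = int(round(T / h))
    x = np.zeros((n_hist + n_steps + 1, 2))  # drive state, history included
    y = np.zeros_like(x)                     # response state
    x[: n_hist + 1] = [0.4, 0.6]             # constant initial histories
    y[: n_hist + 1] = [-1.0, 2.0]

    def rhs(z, i):
        # Rectangle rule for the distributed-delay integral over [t - theta, t).
        dist = h * f(z[i - n_theta:i]).sum(axis=0)
        return -C @ z[i] + A @ f(z[i]) + B @ f(z[i - n_tau]) + D @ dist

    for i in range(n_hist, n_hist + n_steps):
        e = y[i] - x[i]
        u = -k * e - rho * np.sign(e)        # assumed feedback-plus-sign control
        x[i + 1] = x[i] + h * rhs(x, i)      # forward Euler step, drive
        y[i + 1] = y[i] + h * (rhs(y, i) + u)  # forward Euler step, response
    t = h * np.arange(n_steps + 1)
    err = np.abs(y[n_hist:] - x[n_hist:]).sum(axis=1)  # 1-norm of the error
    return t, err

t, err = simulate_state_feedback()
print(f"initial error {err[0]:.3f}, final error {err[-1]:.2e}")
```

Because `np.sign` switches at every step near the origin, the discrete error does not reach zero exactly but chatters in a band whose width scales with the step length `h`; this is a property of the explicit scheme and not of the continuous-time controller.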

Fig. 4.

Time response of between systems (56) and (57) via state feedback controller (20)
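The comparison between the observed convergence rate and the theoretical lower bound can also be made numerically. One possible way, sketched below, is a least-squares fit of the logarithm of the error norm against time; the fitting procedure, the function name `estimate_decay_rate`, and the synthetic data are assumptions for illustration, since the paper reads the rate from the figure.

```python
import numpy as np

def estimate_decay_rate(t, err, floor=1e-8):
    """Fit log(err) ~ intercept - rate * t and return the rate.

    Samples at or below `floor` are discarded so that numerical noise
    near zero does not dominate the fit.
    """
    mask = err > floor
    slope, _intercept = np.polyfit(t[mask], np.log(err[mask]), 1)
    return -slope

# Synthetic error curve with a known decay rate of 5 (illustrative data only).
t = np.linspace(0.0, 2.0, 401)
err = 3.0 * np.exp(-5.0 * t)
rate = estimate_decay_rate(t, err)
print(f"estimated rate: {rate:.4f}")
```

An estimate obtained this way is an empirical rate for one trajectory, so it can only be checked against the lower bound of Theorem 1; it does not replace that bound.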

Now we construct impulsive controllers. Taking , , , it is obtained that and

According to the above computation in the first case, we still take . Then the inequalities (33), (34) and (55) are satisfied. According to Corollary 1, system (57) can be exponentially synchronized with (56) under the impulsive controller (31).

In the numerical simulations, the other parameters and the initial values of are the same as those in the first case. The trajectory of is presented in Fig. 5, which verifies the effectiveness of Corollary 1 and hence of Theorem 2.

Fig. 5.

Time response of between systems (56) and (57) via impulsive controller (31)
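To make the mechanism of impulsive control concrete, the sketch below simulates a generic impulsive scheme with the forward Euler method. Everything specific in it is an assumption: the network weights, the activation `f`, the constant delay `tau`, the impulse strength `mu`, the equally spaced impulse instants with spacing `period`, and the continuous sign term with gain `rho` between impulses. It illustrates the general idea of shrinking the error at discrete instants and should not be read as controller (31).

```python
import numpy as np

def f(x):
    # Hypothetical discontinuous activation with a unit jump at the origin.
    return np.tanh(x) + 0.5 * np.sign(x)

def simulate_impulsive(mu=0.5, period=0.02, rho=12.0, h=0.0005,
                       T=3.0, tau=0.5):
    # Illustrative weights (assumptions, not the parameters of system (56)).
    C = np.eye(2)
    A = np.array([[2.0, -0.1], [-5.0, 3.0]])
    B = np.array([[-1.5, -0.1], [-0.2, -2.5]])
    n_tau = int(round(tau / h))      # delay in Euler steps
    n_imp = int(round(period / h))   # Euler steps between two impulses
    n_steps = int(round(T / h))
    x = np.zeros((n_tau + n_steps + 1, 2))
    y = np.zeros_like(x)
    x[: n_tau + 1] = [0.4, 0.6]      # constant initial histories
    y[: n_tau + 1] = [-1.0, 2.0]
    for i in range(n_tau, n_tau + n_steps):
        e = y[i] - x[i]
        dx = -C @ x[i] + A @ f(x[i]) + B @ f(x[i - n_tau])
        dy = (-C @ y[i] + A @ f(y[i]) + B @ f(y[i - n_tau])
              - rho * np.sign(e))    # assumed sign term between impulses
        x[i + 1] = x[i] + h * dx
        y[i + 1] = y[i] + h * dy
        if (i + 1 - n_tau) % n_imp == 0:
            # Impulse at t_k = k * period: the error e jumps to mu * e.
            y[i + 1] = x[i + 1] + mu * (y[i + 1] - x[i + 1])
    t = h * np.arange(n_steps + 1)
    err = np.abs(y[n_tau:] - x[n_tau:]).sum(axis=1)
    return t, err

t_imp, err_imp = simulate_impulsive()
print(f"initial error {err_imp[0]:.3f}, final error {err_imp[-1]:.2e}")
```

In this sketch the impulses counteract the growth of the error between impulse instants, so a shorter `period` or a smaller `mu` tolerates faster growth; the actual admissible ranges in the paper are those given by inequalities (33), (34) and (55).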

Remark 10

The numerical examples and simulations demonstrate the effectiveness of the designed controllers and of the obtained results. In contrast, the results and numerical simulations in Liu et al. (2011) achieve only quasi-synchronization for systems with discontinuous right-hand sides under the linear state feedback controller (see, for instance, Remark 6, Corollary 2, and Figs. 8–10 in Example 3 of Liu et al. (2011)). From the proofs of Theorems 1–2 and Corollary 1 and from the numerical simulations, one can see that the term in the controllers plays a key role in dealing with the uncertain measurable functions and between the drive and response systems. Therefore, the theoretical results and numerical simulations in this paper improve those in Liu et al. (2011).

Conclusion

The controlled synchronization of neural networks with discontinuous activations has attracted increasing interest from researchers in different fields. However, few published papers have considered complete synchronization control of such systems with mixed delays. Hence, this paper studies the exponential synchronization of neural networks with discontinuous activations and mixed delays. A strict mathematical proof guaranteeing the global existence of Filippov solutions to neural networks with discontinuous activation functions and mixed delays has been given. Both state feedback control and impulsive control techniques have been considered. The designed controllers are simple and can be applied to neural networks with discontinuous or continuous activations. Compared with existing results, in which only quasi-synchronization can be realized, the results obtained in this paper achieve complete exponential synchronization. Numerical simulations show that the theoretical results are effective.

It is well known that finite-time synchronization means optimality in convergence time (Yang and Cao 2010). Recently, the authors of Forti et al. (2005) investigated global convergence in finite time for a subclass of neural networks with discontinuous activations and constant delay. However, finite-time synchronization in an array of coupled general neural networks with discontinuous activations and time-varying mixed delays has not been considered in the literature. This is an interesting and challenging problem for our future research.

Acknowledgments

This work was jointly supported by the National Natural Science Foundation of China (NSFC) under Grants Nos. 61263020, 11101053, 61272530, and 11072059, CityU Grants 7008188 and 7002868, the Program of Chongqing Innovation Team Project in University under Grant No. KJTD201308, and the Natural Science Foundation of Jiangsu Province of China under Grant BK2012741.

Contributor Information

Xinsong Yang, Email: xinsongyang@163.com.

Jinde Cao, Email: jdcao@seu.edu.cn.

Daniel W. C. Ho, Email: madaniel@cityu.edu.hk

References

- Aubin J, Cellina A. Differential inclusions: set-valued maps and viability theory. New York: Springer; 1984.

- Balasubramaniam P, Ntouyas SK, Vinayagam D. Existence of solutions of semilinear stochastic delay evolution inclusions in a Hilbert space. J Math Anal Appl. 2005;305(2):438–451. doi: 10.1016/j.jmaa.2004.10.063.

- Benchohra M, Ntouyas SK. Existence of mild solutions of semilinear evolution inclusions with nonlocal conditions. Georgian Math J. 2000;7(2):221–230.

- Cai Z, Huang L. Existence and global asymptotic stability of periodic solution for discrete and distributed time-varying delayed neural networks with discontinuous activations. Neurocomputing. 2011;74:3170–3179. doi: 10.1016/j.neucom.2011.04.027.

- Cao J, Alofi A, Al-Mazrooei A, Elaiw A. Synchronization of switched interval networks and applications to chaotic neural networks. Abstr Appl Anal. 2013; Article ID 940573, 11 p.

- Cao J, Wan Y. Matrix measure strategies for stability and synchronization of inertial BAM neural network with time delays. Neural Netw. 2014;53:165–172. doi: 10.1016/j.neunet.2014.02.003.

- Cao J, Wang Z, Sun Y. Synchronization in an array of linearly stochastically coupled networks with time delays. Physica A. 2007;385(2):718–728. doi: 10.1016/j.physa.2007.06.043.

- Clarke F. Optimization and nonsmooth analysis. New York: Wiley; 1983.

- Danca M. Controlling chaos in discontinuous dynamical systems. Chaos Solitons Fractals. 2004;22:605–612. doi: 10.1016/j.chaos.2004.02.032.

- Di Marco M, Forti M, Grazzini M, Pancioni L. Fourth-order nearly-symmetric CNNs exhibiting complex dynamics. Int J Bifurc Chaos. 2005;15(5):1579–1587. doi: 10.1142/S0218127405012867.

- Di Marco M, Forti M, Grazzini M, Pancioni L. Limit set dichotomy and convergence of semiflows defined by cooperative standard CNNs. Int J Bifurc Chaos. 2010;20(11):3549–3563. doi: 10.1142/S0218127410027891.

- Filippov A. Differential equations with discontinuous right-hand side. Matematicheskii Sb. 1960;93(1):99–128.

- Forti M, Nistri P. Global convergence of neural networks with discontinuous neuron activations. IEEE Trans Circuits Syst I. 2003;50(11):1421–1435. doi: 10.1109/TCSI.2003.818614.

- Forti M, Grazzini M, Nistri P, Pancioni L. Generalized Lyapunov approach for convergence of neural networks with discontinuous or non-Lipschitz activations. Physica D. 2006;214(1):88–99. doi: 10.1016/j.physd.2005.12.006.

- Forti M, Nistri P, Papini D. Global exponential stability and global convergence in finite time of delayed neural networks with infinite gain. IEEE Trans Neural Netw. 2005;16(6):1449–1463. doi: 10.1109/TNN.2005.852862.

- Haddad G. Monotone viable trajectories for functional differential inclusions. J Differ Eq. 1981;42(1):1–24. doi: 10.1016/0022-0396(81)90031-0.

- Kamel MS, Xia Y. Cooperative recurrent modular neural networks for constrained optimization: a survey of models and applications. Cogn Neurodyn. 2009;3(1):47–81. doi: 10.1007/s11571-008-9036-2.

- Li JH, Michel AN, Porod W. Analysis and synthesis of a class of neural networks: variable structure systems with infinite gain. IEEE Trans Circuits Syst. 1989;36(5):713–731. doi: 10.1109/31.31320.

- Li Y, Liu Z, Luo J, Wu H. Coupling-induced synchronization in multicellular circadian oscillators of mammals. Cogn Neurodyn. 2013;7(1):59–65. doi: 10.1007/s11571-012-9218-9.

- Liao CW, Lu CY. Design of delay-dependent state estimator for discrete-time recurrent neural networks with interval discrete and infinite-distributed time-varying delays. Cogn Neurodyn. 2011;5(2):133–143. doi: 10.1007/s11571-010-9135-8.

- Liao T, Huang NS. An observer-based approach for chaotic synchronization with applications to secure communications. IEEE Trans Circuits Syst I. 1999;46(9):1144–1150. doi: 10.1109/81.788817.

- Liu J, Liu X, Xie W. Global convergence of neural networks with mixed time-varying delays and discontinuous neuron activations. Inf Sci. 2012;183:92–105. doi: 10.1016/j.ins.2011.08.021.

- Liu X, Park JH, Jiang N, Cao J. Nonsmooth finite-time stabilization of neural networks with discontinuous activations. Neural Netw. 2014;52:25–32. doi: 10.1016/j.neunet.2014.01.004.

- Liu X, Chen T, Cao J, Lu W. Dissipativity and quasi-synchronization for neural networks with discontinuous activations and parameter mismatches. Neural Netw. 2011;24(10):1013–1021. doi: 10.1016/j.neunet.2011.06.005.

- Lu W, Chen T. Almost periodic dynamics of a class of delayed neural networks with discontinuous activations. Neural Comput. 2008;20(4):1065–1090. doi: 10.1162/neco.2008.10-06-364.

- Lu W, Chen T. Dynamical behaviors of delayed neural network systems with discontinuous activation functions. Neural Comput. 2006;18(3):683–708. doi: 10.1162/neco.2006.18.3.683.

- Martelli M. A Rothe’s type theorem for non compact acyclic-valued maps. Boll Unione Mat Ital. 1975;4(3):70–76.

- Paden B, Sastry S. A calculus for computing Filippov’s differential inclusion with application to the variable structure control of robot manipulators. IEEE Trans Circuits Syst. 1987;34(1):73–82. doi: 10.1109/TCS.1987.1086038.

- Pecora L, Carroll TL. Synchronization in chaotic systems. Phys Rev Lett. 1990;64(8):821–824. doi: 10.1103/PhysRevLett.64.821.

- Rigatos G. Robust synchronization of coupled neural oscillators using the derivative-free nonlinear Kalman filter. Cogn Neurodyn. 2014. doi: 10.1007/s11571-014-9299-8.

- Tank D, Hopfield JJ. Neural computation by concentrating information in time. Proc Natl Acad Sci. 1987;84(7):1896–1900. doi: 10.1073/pnas.84.7.1896.

- Wang J, Huang L, Guo Z. Global asymptotic stability of neural networks with discontinuous activations. Neural Netw. 2009;22(7):931–937. doi: 10.1016/j.neunet.2009.04.004.

- Wang T, Xie L, de Souza CE. Robust control of a class of uncertain nonlinear systems. Syst Control Lett. 1992;19(2):139–149. doi: 10.1016/0167-6911(92)90097-C.

- Wang Y, Wang Z, Liang J, Li Y, Du M. Synchronization of stochastic genetic oscillator networks with time delays and Markovian jumping parameters. Neurocomputing. 2010;73(13–15):2532–2539. doi: 10.1016/j.neucom.2010.06.006.

- Xu A, Du Y, Wang R. Interaction between different cells in olfactory bulb and synchronous kinematic analysis. Discrete Dyn Nat Soc. 2014; Article ID 808792.

- Yan C, Wang R. Asymmetric neural network synchronization and dynamics based on an adaptive learning rule of synapses. Neurocomputing. 2014;125:41–45. doi: 10.1016/j.neucom.2012.07.045.

- Yang X, Cao J. Finite-time stochastic synchronization of complex networks. Appl Math Model. 2010;34(11):3631–3641. doi: 10.1016/j.apm.2010.03.012.

- Yang X, Cao J. Exponential synchronization of delayed neural networks with discontinuous activations. IEEE Trans Circuits Syst I. 2013;60(9):2431–2439.

- Yang X, Huang C, Zhu Q. Synchronization of switched neural networks with mixed delays via impulsive control. Chaos Solitons Fractals. 2011;44(10):817–826. doi: 10.1016/j.chaos.2011.06.006.

- Yang X, Cao J, Lu J. Synchronization of delayed complex dynamical networks with impulsive and stochastic effects. Nonlinear Anal Real World Appl. 2011;12:2252–2266. doi: 10.1016/j.nonrwa.2011.01.007.

- Yang X, Cao J, Lu J. Synchronization of coupled neural networks with random coupling strengths and mixed probabilistic time-varying delays. Int J Robust Nonlinear Control. 2013;23(18):2060–2081. doi: 10.1002/rnc.2868.

- Yang X, Cao J, Yu W. Exponential synchronization of memristive Cohen–Grossberg neural networks with mixed delays. Cogn Neurodyn. 2014;8(3):239–249. doi: 10.1007/s11571-013-9277-6.

- Zhang Z, Cao J, Zhou D. Novel LMI-based condition on global asymptotic stability for a class of Cohen–Grossberg BAM networks with extended activation functions. IEEE Trans Neural Netw Learn Syst. 2014;25(6):1161–1172. doi: 10.1109/TNNLS.2013.2289855.