Abstract

It remains unclear how single neurons in the human brain represent whole-object visual stimuli. While recordings in both human and nonhuman primates have shown distributed representations of objects (many neurons encoding multiple objects), recordings of single neurons in the human medial temporal lobe, taken as subjects discriminated objects during multiple presentations, have shown gnostic representations (single neurons encoding one object). Because some studies suggest that repeated viewing may enhance neural selectivity for objects, we had human subjects discriminate objects in a single, more naturalistic viewing session. We found that, across 432 well isolated neurons recorded in the hippocampus and amygdala, the average fraction of objects encoded was 26%. We also found that more neurons encoded several objects than only one object in the hippocampus (28 vs 18%, p < 0.001) and in the amygdala (30 vs 19%, p < 0.001). Thus, during realistic viewing experiences, typical neurons in the human medial temporal lobe code for a considerable range of objects, across multiple semantic categories.

Keywords: distributed representation, human single neuron, medial temporal lobe, object representation

Introduction

A key question in neuroscience is whether neuronal representation is distributed across populations of neurons or more localized to stimulus-selective neurons (Bowers, 2009). Distributed coding (i.e., individual neurons encoding multiple stimuli; Thorpe, 1995) may offer many advantages, such as high coding capacity, resistance to noise, and generalization to similar stimuli (Rolls and Treves, 1998; Rolls and Deco, 2002). In contrast, localized (or gnostic) coding (i.e., individual neurons encoding singular stimuli unequivocally; Barlow, 1972; Thorpe, 1989) may provide metabolic efficiency and a simple relation between single-neuron activity and different instantiations of objects (Hummel, 2000; Lennie, 2003). While the question of distributed object representation has been thoroughly studied in nonhuman primates (Baylis et al., 1985; Rolls and Tovee, 1995; Baddeley et al., 1997; Treves et al., 1999; Franco et al., 2007), there is less consensus among the few human studies that have been performed. Due to clinical constraints, these studies have been largely confined to recordings from medial temporal lobe (MTL) brain areas (i.e., hippocampus and amygdala).

Several studies, wherein images were shown to epilepsy patients, have revealed neural selectivity for individual objects, consistent with distributed representation (Kawasaki et al., 2005; Rutishauser et al., 2006; Viskontas et al., 2006; Steinmetz et al., 2011). Because these experiments did not show multiple views of each object, they are limited to explaining the neural representation of the specific exemplars shown. To date, only one series of studies has explicitly tested object encoding by single neurons in the human MTL (Quiroga et al., 2005; Quian Quiroga et al., 2009). In these experiments, subjects viewed multiple views of objects with multiple presentations of the same view, yielding results suggesting that individual neurons in the MTL are strongly selective for a small number of individual or related objects.

Given the contrast between these results, an obvious question remains: What is the neural representation of objects when multiple views are shown in one viewing session with limited presentations? This distinction is critical because it is well established that the MTL is involved in both recognition and recollection of prior experiences (Scoville and Milner, 1957; Squire et al., 2004). Many imaging studies reveal hemodynamic changes in the MTL with greater memory strength specifically after stimulus repetition (Law et al., 2005; Daselaar et al., 2006; for review, see Gonsalves et al., 2005; Yassa and Stark, 2008); some have even theorized that repetition contributes to increased representational sparsity (Desimone, 1996; Wiggs and Martin, 1998).

Thus, to better understand single-neuron responses to objects as people might encounter them naturally, and as a first step in understanding the effects of initial and multiple viewing, we had human epilepsy patients discriminate objects in a single session of an oft-used visual discrimination task (Kreiman et al., 2000), in which each view of an object was presented only six times. In contrast to findings with higher numbers of presentations, our results reveal that object representation in the human MTL during initial viewing is notably distributed, with the activity of many recorded neurons predicting the presence of multiple, unrelated objects.

Materials and Methods

Subjects.

We recorded single-neuron activity from 21 patients at the Barrow Neurological Institute (14 female, 18 right-handed, ages 20–56, mean age = 40). All patients had drug-resistant epilepsy and were evaluated for possible resection of an epileptogenic focus. Each patient granted his/her consent to participate in the experiments per a protocol approved by the Institutional Review Board of Saint Joseph's Hospital and Medical Center. Data were recorded from clinically mandated brain areas, including the hippocampus, amygdala, ACC, and vPFC.

Microwire bundles and implantation.

The extracellular action potentials corresponding to single-neuron activity and local field potentials were recorded from the tips of 38 μm diameter platinum-iridium microwires implanted along with depth electrodes used to record clinical field potentials (Dymond et al., 1972; Fried et al., 1999). Each bundle of nine identical microwires was manufactured in the laboratory and implanted using previously described techniques (Thorp and Steinmetz, 2009; Wixted et al., 2014, their supplement), and typically had an impedance of 450 kΩ at 1000 Hz. Each anatomical recording site received one bundle of nine microwires; with eight sites typically implanted per patient, this yielded 72 microwires per patient. Electrodes were placed through a skull bolt with a custom frame to align the depth electrode along the chosen trajectory. The error in tip placement using this technique is estimated to be ±2 mm (Mehta et al., 2005). Note that this resolution is insufficient to determine subfields within the hippocampus or nuclei within the amygdala.

Amplification and digitization of microwire signals.

After the patient recovered from surgery (typically within 6 h), the microwire bundles were connected to the headstage amplifiers and the amplification and digitization system as previously described (Steinmetz et al., 2013; Wixted et al., 2014, their supplement). The complete recording system has a 4.1 μV RMS noise floor that permits recovery of single-neuron activity signals on the order of 20 μV (Thorp and Steinmetz, 2009).

Filtering and event detection.

Spike sorting was performed using methods previously described (Valdez et al., 2013). In brief, possible action potentials (events) were detected by bandpass filtering (300–3000 Hz) followed by a two-sided threshold detector (threshold = 2.8 times each channel's SD) to identify event times. The signal was then high-pass filtered (100 Hz, single-pole Butterworth) to capture waveform shape, with the event time aligned at the ninth of 32 samples (Viskontas et al., 2006; Thorp and Steinmetz, 2009). All events captured from a particular channel were then separated into groups of similar waveform shape (clusters) using the open-source clustering program KlustaKwik (klustakwik.sourceforge.net), a modified version of the Govaert–Celeux expectation maximization algorithm (Celeux and Govaert, 1992, 1995). The first principal component of all event shapes recorded from a channel was the waveform feature used for sorting. After sorting, each cluster was graded as noise, multi-unit activity (MUA), or single-unit activity (SUA) using the criteria described previously (Valdez et al., 2013, their Table 2). Figure 1 shows an example of a cluster representing SUA. We recorded from a total of 3239 neurons (SUA and MUA) during these experiments (Table 1). The average number of clusters per recording channel was 0.55 for SUA and 1.3 for MUA, which is larger than the 0.4 SUA per channel recently reported by Misra et al. (2014). Based on our prior work (Thorp and Steinmetz, 2009), we would expect such differences to be due to different noise characteristics of the clinical recording environment, though they could also be due to different spike-sorting techniques, as Misra et al. (2014) used a manual spike-sorting process. In our experience, the technique reported in Valdez et al. (2013) produces results comparable to prior reports from other laboratories (Viskontas et al., 2006) in terms of recorded waveform shapes, interspike intervals, and firing rates (see Wild et al., 2012, regarding variability in spike sorting depending on the particular waveform shapes being detected). While these and other reports of human single-unit recordings (Kreiman et al., 2000; Steinmetz, 2009) do not achieve the quality of unit separation achievable in animal recordings (Hill et al., 2011), they nonetheless represent neural activity at a much finer spatial and temporal scale than is otherwise achievable.
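The detection steps above can be sketched in Python with NumPy and SciPy. This is a minimal illustration under stated assumptions, not the Valdez et al. (2013) pipeline: the text specifies the 300–3000 Hz passband, the two-sided 2.8 SD threshold, the 100 Hz single-pole high-pass, and the 32-sample window with the event at the ninth sample, but the 4th-order zero-phase bandpass, the causal high-pass, and the choice of the largest-amplitude sample in each supra-threshold run as the "event time" are assumptions. `detect_events` is a hypothetical helper name.

```python
import numpy as np
from scipy.signal import butter, sosfilt, sosfiltfilt


def detect_events(raw, fs, thresh_sd=2.8, n_samples=32, align_index=8):
    """Detect putative spikes and cut aligned waveform snippets (sketch)."""
    # Detection band 300-3000 Hz; order 4 and zero-phase filtering are assumptions.
    sos_bp = butter(4, [300.0, 3000.0], btype="bandpass", fs=fs, output="sos")
    bp = sosfiltfilt(sos_bp, raw)

    # Two-sided threshold at thresh_sd times this channel's SD.
    above = np.abs(bp) > thresh_sd * np.std(bp)

    # Split supra-threshold samples into contiguous runs.
    edges = np.diff(above.astype(np.int8))
    starts = np.flatnonzero(edges == 1) + 1
    ends = np.flatnonzero(edges == -1) + 1
    if above[0]:
        starts = np.r_[0, starts]
    if above[-1]:
        ends = np.r_[ends, above.size]

    # Assumed event time: sample of largest |amplitude| within each run.
    events = np.array(
        [s + int(np.argmax(np.abs(bp[s:e]))) for s, e in zip(starts, ends)],
        dtype=int,
    )

    # Waveform shape: 100 Hz first-order (single-pole) Butterworth high-pass,
    # applied causally here (an assumption).
    sos_hp = butter(1, 100.0, btype="highpass", fs=fs, output="sos")
    hp = sosfilt(sos_hp, raw)

    # 32-sample snippets with the event at the ninth sample (index 8);
    # events too close to either end of the record are dropped.
    keep = (events >= align_index) & (events - align_index + n_samples <= raw.size)
    events = events[keep]
    idx = events[:, None] - align_index + np.arange(n_samples)[None, :]
    return events, hp[idx]
```

The snippet matrix returned here is what a feature step such as projection onto the first principal component would operate on before clustering; KlustaKwik itself is not reproduced.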

Table 2.

Summary of results from the linear models applied to response counts

| Brain area and side | Number of neurons | Number of p values < 0.05 | % of neurons with significant responses to objects* | P value under the binomial distribution |
|---|---|---|---|---|
| Left amygdala | 134 | 32 | 24 | 1.2 × 10⁻¹³** |
| Right amygdala | 100 | 23 | 23 | 6.9 × 10⁻¹⁰** |
| Left hippocampus | 103 | 18 | 17 | 3.5 × 10⁻⁶** |
| Right hippocampus | 95 | 24 | 25 | 3.6 × 10⁻¹¹** |

*p < 0.05 and **p < 0.001.

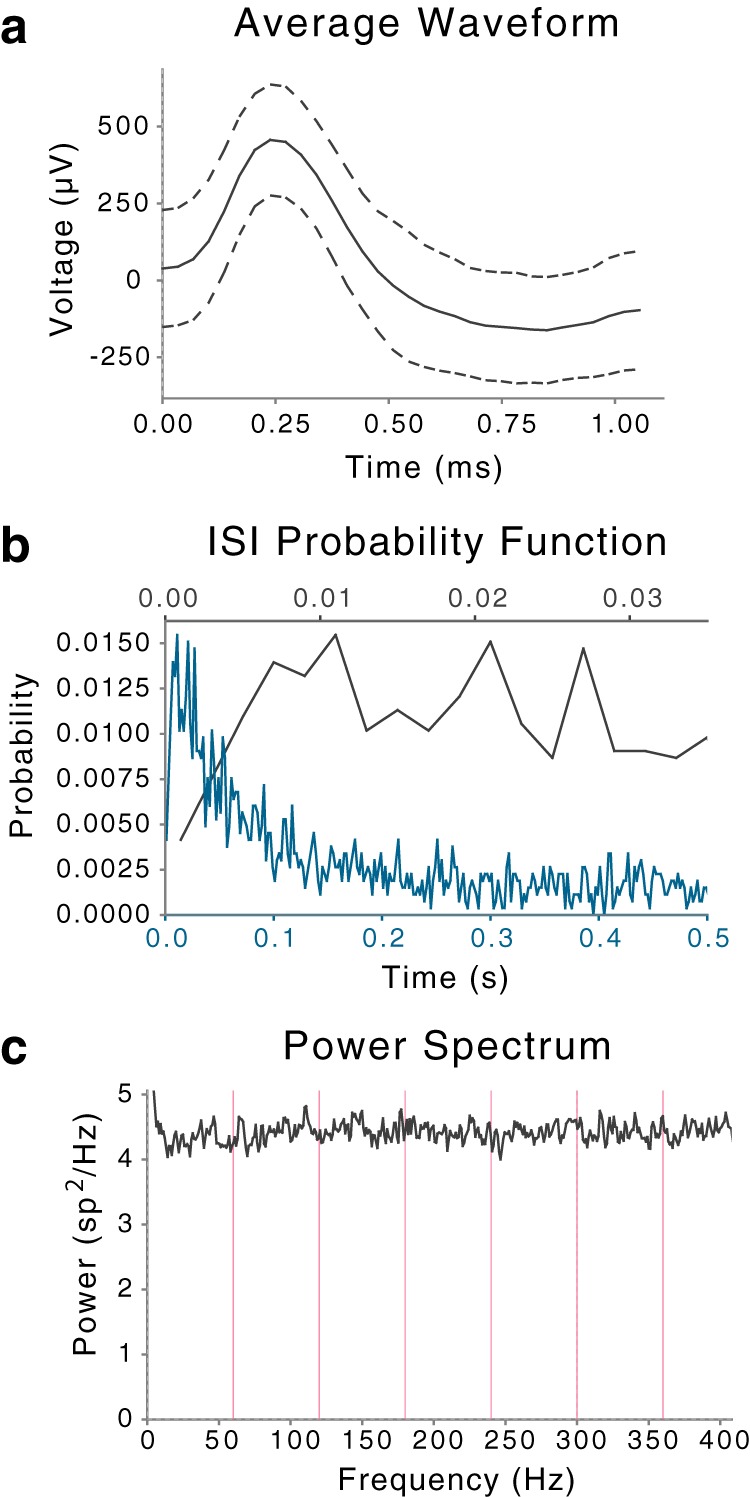

Figure 1.

Events in a cluster identified as SUA after sorting; channel recorded from the left amygdala. a, Average waveform shape of events in the cluster. y-axis: waveform shape, with dashed lines indicating ±1 SD at each sample point. b, Distribution of interspike intervals (ISIs) on two duration scales. y-axis: probability of interval; x-axis: duration of interval, shown in blue for the broader range 0–0.5 s (bottom axis) and in black for the narrower range 0–0.035 s (top axis). c, Power spectral density of event times. y-axis: power spectral density in events²/Hz; x-axis: frequency in Hz, with magenta lines indicating the fundamental (60 Hz) and harmonics of the power line frequency.

Table 1.

Number of recorded neurons by brain area

| Brain area | SUA | MUA | Total |
|---|---|---|---|
| Amygdala | 234 | 578 | 812 |
| Hippocampus | 198 | 563 | 761 |

The results reported here exclude neurons from areas other than the hippocampus and amygdala and from recording sessions in which the subject did not complete sufficient trials to test for object-selective neural responses. We focus this report on 432 clusters of SUA. Results for primary effects of object selectivity on MUA in the hippocampus and amygdala were in all cases similar and statistically significant, though with a smaller fraction of neurons showing significant effects. This is consistent with MUA consisting of the same activity reported as SUA, mixed with noise.

Experimental stimuli and task.

Subjects viewed images of 11 objects from each of three categories (animals, landmarks, and people) during each experimental session. The objects were chosen to match those used by Quiroga et al. (2005), with the exception of several images of laboratory personnel in the people category. None of the images were personally significant to the subjects (as in Viskontas et al., 2006). All images were chosen to have approximately similar illuminance and contrast to reduce potential confounds of these factors (Steinmetz et al., 2011). We showed the images in random order, each appearing individually in the center of a computer screen (subtending ∼9.5° of visual angle) for 1 s. During each session, we showed four representations of each object (three color pictures and the printed name) six times each, for a total of 792 trials per experimental session (33 objects × 4 representations × 6 presentations). Subjects who participated in more than one session did so on different days with different stimulus sets, and any neurons recorded on the same channels during different sessions were regarded as independent. We downloaded the pictures from the World Wide Web (Fig. 2); the printed words were in English, in 30-point Helvetica font. A total of 33 objects were depicted in each of two stimulus sets. The task was to press one button on a trackball (Kensington Expert Mouse) when an image (or name) represented a person and a different button when it represented a landmark or animal. Button assignment was randomized across experiments.
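The session structure above (33 objects × 4 representations × 6 presentations = 792 trials in random order) can be generated with a few lines of standard-library Python. This sketch assumes a uniform random permutation of all trials; any further ordering constraints used in the actual experiment are not described in the text, and `make_trial_sequence` is a hypothetical name.

```python
import itertools
import random


def make_trial_sequence(n_objects=33, n_views=4, n_repeats=6, seed=None):
    """Return a shuffled list of (object, view) trials (illustrative sketch)."""
    # Views 0-2 stand for the three color pictures; view 3 for the printed name.
    trials = [
        (obj, view)
        for obj, view in itertools.product(range(n_objects), range(n_views))
        for _ in range(n_repeats)
    ]
    random.Random(seed).shuffle(trials)  # uniform random order (assumption)
    return trials


trials = make_trial_sequence(seed=0)
print(len(trials))  # 792
```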

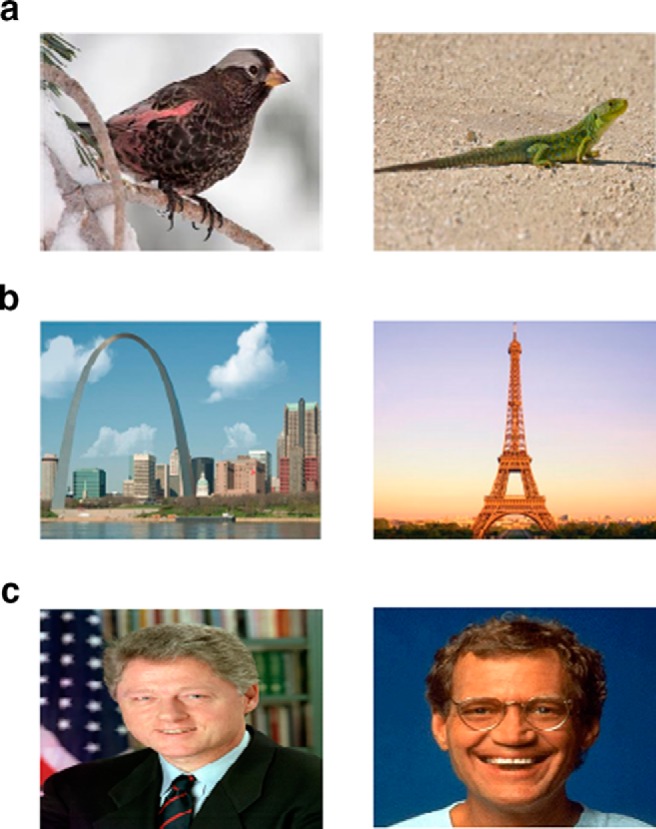

Figure 2.

Sample images from our stimulus sets. Subjects viewed three color images of each object. Objects were clearly visible in each image, with variation in object position and background: bird and lizard (a), Gateway Arch and Eiffel Tower (b), Bill Clinton and David Letterman (c). Readers interested in requesting images in our sets should contact the corresponding author.

Data analysis.

We initially analyzed the influence of several factors (object identity, object luminance, and object contrast) on neuronal responses. We included the latter two factors to account for their recently demonstrated effects on neuronal responses in the human hippocampus and amygdala (Steinmetz et al., 2011). We constructed a set of nested generalized linear models for each neuron (Maindonald and Braun, 2003, their Chap. 8), with these factors as independent variables and the firing rate of a single neuron (in a window from 200 to 1000 ms after stimulus onset) as the dependent variable. Specifically, model 1 contained only a constant term; model 2, constant + luminance terms; model 3, constant + luminance + contrast + luminance × contrast interaction terms; and model 4, constant + luminance + contrast + luminance × contrast interaction + object identity terms. There were 10 indicator variables for object identity in the model for a single experiment. We computed the improvement of fit for each successive model using the χ² statistic (Maindonald and Braun, 2003). The comparison of model 4 with model 3 thus identifies neurons whose responses distinguish among the different objects presented after accounting for differences in image luminance and contrast.
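The nested-model comparison can be sketched as a sequence of likelihood-ratio (deviance) tests. The text does not state the GLM family or link, so this sketch assumes a Poisson GLM with log link fitted to spike counts in the 200–1000 ms window, fitted by iteratively reweighted least squares (IRLS) in NumPy; each drop in deviance is referred to a χ² distribution with degrees of freedom equal to the number of added terms. Function names are hypothetical.

```python
import numpy as np
from scipy.stats import chi2


def fit_poisson_deviance(X, y, n_iter=100, tol=1e-10):
    """Fit a Poisson GLM (log link) by IRLS and return its deviance.

    Assumes column 0 of X is the constant term and y.mean() > 0.
    """
    beta = np.zeros(X.shape[1])
    beta[0] = np.log(y.mean())
    for _ in range(n_iter):
        eta = X @ beta
        mu = np.exp(eta)
        z = eta + (y - mu) / mu          # working response
        XtW = X.T * mu                   # X^T W with W = diag(mu)
        new_beta = np.linalg.solve(XtW @ X, XtW @ z)
        converged = np.max(np.abs(new_beta - beta)) < tol
        beta = new_beta
        if converged:
            break
    mu = np.exp(X @ beta)
    # Poisson deviance; y * log(y / mu) is taken as 0 where y == 0.
    with np.errstate(divide="ignore", invalid="ignore"):
        ylogy = np.where(y > 0, y * np.log(y / mu), 0.0)
    return 2.0 * np.sum(ylogy - (y - mu))


def nested_model_tests(counts, luminance, contrast, object_id):
    """Return chi-square p values for models 2 vs 1, 3 vs 2, and 4 vs 3."""
    y = np.asarray(counts, dtype=float)
    const = np.ones((y.size, 1))
    lum = np.asarray(luminance, dtype=float)[:, None]
    con = np.asarray(contrast, dtype=float)[:, None]
    objects = np.unique(object_id)
    # Indicator coding with the first object as the reference level.
    dummies = (np.asarray(object_id)[:, None] == objects[None, 1:]).astype(float)
    designs = [
        const,                                              # model 1
        np.hstack([const, lum]),                            # model 2
        np.hstack([const, lum, con, lum * con]),            # model 3
        np.hstack([const, lum, con, lum * con, dummies]),   # model 4
    ]
    dev = [fit_poisson_deviance(X, y) for X in designs]
    pvals = []
    for k in range(1, len(designs)):
        df = designs[k].shape[1] - designs[k - 1].shape[1]
        pvals.append(float(chi2.sf(dev[k - 1] - dev[k], df)))
    return pvals
```

The last p value (model 4 vs model 3) is the one that flags a neuron as object selective after luminance and contrast are accounted for.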

While prior studies have often restricted analysis of the effects of independent factors, such as object identity, to neurons with responses that differ from background firing, we do not do so, because this form of preselection can lead to erroneous conclusions (Steinmetz and Thorp, 2013). To study changes from background firing, we used two techniques: multinomial logistic regression and a bootstrapped test for changes from background firing.

Multinomial logistic regression predicted the presence of objects from our stimulus sets, based on the firing rates of neurons compared with their background firing rates. In logistic models, the “input” consists of a set of theoretically meaningful predictors, while the “output” is a predicted grouping (Maindonald and Braun, 2003, their Chap. 8; Dobson and Barnett, 2008, their Chap. 7), in our case extended to multiple categorical outcomes (Hosmer and Lemeshow, 2006). More specifically, the ratio of firing during presentation of images of an object relative to background firing is used to predict the odds that each object may have been presented, by determining the best-fitting coefficient in the logistic function. A coefficient of zero implies no change in odds due to changes in neuronal firing, whereas nonzero coefficients signal different odds of one object being present versus no object being present (i.e., background neuronal activity). Statistically reliable deviations of coefficient values from zero were determined using multivariate t tests (Hosmer and Lemeshow, 2006, their Chap. 2), one for each neuron. This approach is similar to a simplified version of the point-process framework proposed by Truccolo et al. (2005). The α-level was 0.05 in all t tests for nonzero coefficients.
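A minimal sketch of a multinomial logistic model of this kind, assuming one predictor per trial (firing rate divided by the mean background rate), background periods as the reference class 0, and objects as classes 1..K. Coefficients are fitted by Newton–Raphson, and, as a stand-in for the multivariate t tests named above, each object's slope is tested with a two-sided Wald z test from the inverse Fisher information. This is not the authors' code; `multinomial_logit_wald` is a hypothetical name.

```python
import math

import numpy as np


def _probs_and_info(theta, Phi):
    """Non-reference class probabilities and Fisher information matrix."""
    n, K = Phi.shape[0], theta.shape[0]
    eta = np.column_stack([np.zeros(n), Phi @ theta.T])
    eta -= eta.max(axis=1, keepdims=True)          # numerical stability
    P = np.exp(eta)
    P /= P.sum(axis=1, keepdims=True)
    P = P[:, 1:]                                   # drop reference class 0
    info = np.zeros((2 * K, 2 * K))
    for k in range(K):
        for l in range(K):
            w = P[:, k] * (float(k == l) - P[:, l])
            info[2 * k:2 * k + 2, 2 * l:2 * l + 2] = (Phi.T * w) @ Phi
    return P, info


def multinomial_logit_wald(x, labels, n_classes, n_iter=100, tol=1e-10):
    """Fit P(class k | x) with class 0 as reference; return slopes, p values."""
    n, K = x.size, n_classes - 1
    Phi = np.column_stack([np.ones(n), x])         # intercept + predictor
    Y = (labels[:, None] == np.arange(1, n_classes)[None, :]).astype(float)
    theta = np.zeros((K, 2))                       # rows: (intercept_k, slope_k)
    for _ in range(n_iter):
        P, info = _probs_and_info(theta, Phi)
        grad = ((Y - P).T @ Phi).ravel()           # score vector
        step = np.linalg.solve(info, grad)         # Newton step
        theta += step.reshape(K, 2)
        if np.max(np.abs(step)) < tol:
            break
    _, info = _probs_and_info(theta, Phi)
    se = np.sqrt(np.diag(np.linalg.inv(info)))[1::2]   # slope standard errors
    slopes = theta[:, 1]
    pvals = np.array([math.erfc(abs(s / e) / math.sqrt(2.0)) for s, e in zip(slopes, se)])
    return slopes, pvals
```

Objects whose slope p value falls below 0.05 would count as "encoded" by that neuron in this sketch.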

As an independent test of whether neuronal firing is different from background firing, we used the bootstrapped changes from background test (CBT) described in detail by Steinmetz and Thorp (2013). In brief, this test determines whether the observed responses, grouped by object, were likely to have arisen from the observed background firing. Together, our analyses first determined whether responses of the neurons distinguished between different objects presented (linear models), then determined whether particular objects could be predicted based on neural firing relative to background firing (multinomial logistic regression), and finally, as an additional check, tested whether firing in response to different objects differed from background (CBT).
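The CBT itself is specified in Steinmetz and Thorp (2013) and is not reproduced here. As a loose illustration of the underlying idea only, the generic bootstrap below asks whether object-grouped responses could plausibly have been drawn from the background distribution. The statistic (a size-weighted sum of squared deviations of object means from the background mean), the resampling scheme, and the add-one p value correction are all assumptions of this sketch; `bootstrap_background_test` is a hypothetical name.

```python
import numpy as np


def bootstrap_background_test(background, responses_by_object, n_boot=2000, seed=0):
    """Generic bootstrap test against background firing (NOT the published CBT)."""
    rng = np.random.default_rng(seed)
    background = np.asarray(background, dtype=float)
    mu_bg = background.mean()

    def stat(groups):
        # Size-weighted squared deviation of each group mean from background.
        return sum(len(g) * (np.mean(g) - mu_bg) ** 2 for g in groups)

    observed = stat(responses_by_object)
    sizes = [len(g) for g in responses_by_object]
    # Null: groups of the same sizes resampled from background with replacement.
    null = np.array([
        stat([rng.choice(background, size=s, replace=True) for s in sizes])
        for _ in range(n_boot)
    ])
    # Add-one correction keeps the p value strictly positive.
    return (1 + np.sum(null >= observed)) / (1 + n_boot)
```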

Results

The linear models applied to response counts showed that object identity reliably affected firing rates (p < 0.05) in many MTL neurons: 17% in the left hippocampus (LH), 25% in the right hippocampus (RH), 24% in the left amygdala (LA), and 23% in the right amygdala (RA). Table 2 shows the proportions of object-selective neurons. Neither luminance nor contrast reliably influenced firing rates (p > 0.05).
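The last column of Table 2 appears to be a one-sided binomial tail probability: the chance of observing at least k neurons with p < 0.05 out of n if every neuron were a false positive at α = 0.05. Under that interpretation (an assumption, since the test is not spelled out in the text), the pure-Python sketch below gives approximately 1.2 × 10⁻¹³ for the left amygdala (32 of 134) and 6.9 × 10⁻¹⁰ for the right amygdala (23 of 100), consistent with the tabulated values. `binomial_tail_p` is a hypothetical name.

```python
from math import comb


def binomial_tail_p(k, n, alpha=0.05):
    """P(X >= k) for X ~ Binomial(n, alpha)."""
    return sum(comb(n, i) * alpha**i * (1 - alpha) ** (n - i) for i in range(k, n + 1))


print(binomial_tail_p(32, 134))  # roughly 1.2e-13 (left amygdala)
print(binomial_tail_p(23, 100))  # roughly 6.9e-10 (right amygdala)
```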

The multinomial logistic regression models were used to determine whether the firing rates of single neurons could predict the presence of particular objects. These revealed that, in many object-selective neurons in each MTL area and hemisphere, firing rates predicted the presence of more than one object (Table 3). Bilaterally, more neurons encoded several objects than only one object in the hippocampus (28 vs 18%, p < 0.001) and in the amygdala (30 vs 19%, p < 0.001). Of the neurons coding for two or more objects in this analysis, 49% coded for objects drawn from two or three categories. Figures 3 and 4 illustrate the distributed response of an object-selective neuron in the right hippocampus.

Table 3.

Summary of results from the regression models used to predict the presence of objects

| Brain area and side | Mean fraction of objects encoded (%)* | % of neurons encoding only one object | % of neurons encoding more than one object | P valueᵃ |
|---|---|---|---|---|
| Left amygdala | 25 | 19 | 33 | 2.0 × 10⁻⁵*** |
| Right amygdala | 28 | 19 | 30 | 0.001** |
| Left hippocampus | 22 | 20 | 28 | 0.01* |
| Right hippocampus | 27 | 17 | 28 | 0.001** |

ᵃχ² test.

*p < 0.05, **p < 0.01, and ***p < 0.001.

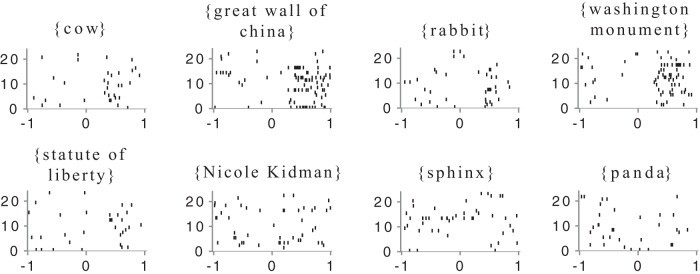

Figure 3.

Raster plots of responses of a neuron in the right hippocampus to the presentation of eight objects. Each line shows the responses on one trial, where an image of the object was presented at time 0. x-axis: time in seconds relative to stimulus onset; y-axis: index of object presentation in random order of appearance in experiment. Objects in the top row are those for which multinomial logistic regression, based on the firing rate relative to background firing, permits prediction of the object at a level above chance (p < 0.05). Objects in the bottom row could not be so predicted.

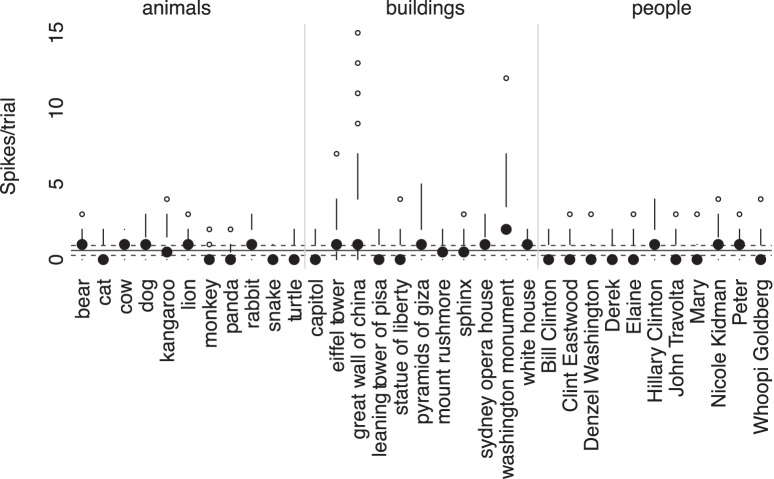

Figure 4.

Modified box plot of the distribution of responses to all objects for the same right hippocampal neuron shown in Figure 3. For each object, the solid dot shows the median response to presentation of an image of the object. Vertical lines extend from median ± 1.58 × IQR/√n (where IQR is the interquartile range, n is the number of observations, and 1.58 provides the equivalent of a 95% confidence interval for differences between medians; Chambers et al., 1983, their p. 62) to the data point furthest from the median that lies no more than 1.5 × IQR beyond the first or third quartile. Open circles show responses outside that range. The solid gray line shows the mean of background firing; dashed gray lines lie at ±1.58 × IQR/√n̄ of the background firing (n̄ = mean number of presentations of an object), representing a 95% confidence interval for the median of background firing. Thus, median values above the upper dashed line indicate strong responses relative to background firing for that specific object.
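The interval used for the whiskers in Figure 4 follows the notched-box-plot rule median ± 1.58 × IQR/√n. The sketch below computes it; quartiles use NumPy's default linear interpolation, which is an assumption because quartile conventions differ across software. `median_notch` is a hypothetical name.

```python
import numpy as np


def median_notch(values):
    """Approximate 95% interval for comparing medians: median +/- 1.58*IQR/sqrt(n)."""
    v = np.asarray(values, dtype=float)
    q1, med, q3 = np.percentile(v, [25, 50, 75])
    half_width = 1.58 * (q3 - q1) / np.sqrt(v.size)
    return med - half_width, med + half_width


lo, hi = median_notch(np.arange(1, 101))
print(round(lo, 3), round(hi, 3))  # 42.679 58.321
```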

On average, the proportion of objects whose presence was predicted by single-neuron firing rates was 22% in the LH, 27% in the RH, 25% in the LA, and 28% in the RA (Table 3). That is, the tuning of these neurons was much broader, and the sparsity of their responses much lower (Rolls, 2007), than in previous reports, in which only ∼1–3% of stimuli elicited statistically reliable single-neuron responses, albeit at a stricter level of statistical significance (Quiroga et al., 2005; Mormann et al., 2008). Note that we refer here to lifetime sparsity, that is, the proportion of stimuli that evoke statistically reliable neuronal responses (Bowers, 2009). Our results thus suggest that many human MTL neurons become active during the initial session of a visual discrimination task and that many respond to a range of stimuli from different semantic categories. A post hoc power analysis further showed that our sample of 432 well isolated MTL neurons provided 95% power to detect a change of at least 5% in the fraction of objects that these neurons encoded (Erdfelder et al., 1996), or a difference of approximately two objects (5% of 33 objects ≈ 1.7), reflecting the sensitivity of our models.
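Given a per-neuron, per-object significance matrix from the regression analysis, lifetime sparsity as defined above and summaries of the kind reported in Table 3 reduce to simple row counts. Which neurons enter the average (all recorded SUA or only object-selective neurons) is an assumption left to the caller; `summarize_selectivity` is a hypothetical helper.

```python
import numpy as np


def summarize_selectivity(sig):
    """sig: boolean array (neurons x objects), True where a neuron's firing
    predicts that object. Returns (mean fraction of objects encoded,
    fraction of neurons encoding exactly one object,
    fraction of neurons encoding more than one object)."""
    sig = np.asarray(sig, dtype=bool)
    n_encoded = sig.sum(axis=1)                       # objects per neuron
    mean_fraction = float(np.mean(n_encoded / sig.shape[1]))
    only_one = float(np.mean(n_encoded == 1))
    several = float(np.mean(n_encoded > 1))
    return mean_fraction, only_one, several


example = [[1, 0, 0, 0], [1, 1, 0, 0], [0, 0, 0, 0], [1, 1, 1, 1]]
print(summarize_selectivity(example))  # (0.4375, 0.25, 0.5)
```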

While the multinomial logistic regression models show that differences of neural firing from background reliably predict the presence of different objects, we also separately tested whether these responses differ reliably from background firing, to confirm these results. Such differences can be difficult to observe when visually comparing responses to presentation of a single object to the immediately preceding background activity, so we used the recently described changes from background test (Steinmetz and Thorp, 2013), which provides a single test for each neuron.

Table 4 summarizes the number of neurons in each brain area that had responses differing reliably from background (p < 0.05), as well as the number of those neurons that also had reliable responses to different objects (Table 2). As shown in Table 4, a substantial proportion of neurons in both the hippocampus and amygdala (19–33%) responded to the presentation of objects at a rate that differed reliably from background, and ∼3/4 of those (64–77%) also had responses that differed depending on the particular objects shown.

Table 4.

Summary of results of CBT

| Brain area and side | Number of neurons | Number of p values < 0.05 | % of neurons with responses different from background | P value under the binomial distribution | Number of neurons with responses to objects and different from background | % of neurons with responses different from background that also had significant responses to objects |
|---|---|---|---|---|---|---|
| Left amygdala | 134 | 44 | 33 | 3.2 × 10⁻²⁴ | 28 | 64 |
| Right amygdala | 100 | 28 | 28 | 5.3 × 10⁻¹⁴ | 21 | 75 |
| Left hippocampus | 103 | 20 | 19 | 1.7 × 10⁻⁷ | 15 | 75 |
| Right hippocampus | 95 | 30 | 32 | 1.8 × 10⁻¹⁶ | 23 | 77 |

Last, to assess whether other neurons or brain areas could in principle decode the observed neuronal responses, we calculated the amount of information that neuronal firing rates provided about the presented objects. This is the mutual information (MI) between firing rate and the presented object, I(X; Y) = H(X) − H(X|Y), where X is the object presented, Y is the firing rate, H(X) is the entropy of X, and H(X|Y) is the conditional entropy of X given Y (Cover and Thomas, 2006, their Chap. 2). In MTL neurons classified as object selective, firing rates contained on average 0.16 bits of information distinguishing the presented object, lower than but generally congruent with estimates in nonhuman primates (e.g., 0.30 bits in monkey hippocampal neurons; Abbott et al., 1996). Table 5 shows MI values for the MTL (values in parentheses indicate 95% confidence intervals). Note that distinguishing among the 33 objects in our stimulus set would require log2(33) ≈ 5.04 bits, indicating that the firing rates of ∼30 average MTL neurons (5.04/0.16 ≈ 31), if their firing is independent of one another, could provide full information about the presence of any given object.
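The mutual information defined above can be estimated with a plug-in (histogram) estimator, sketched below for discrete object labels and discretized firing rates such as spike counts. The estimator choice is an assumption: plug-in estimates are biased upward for small samples, and the text does not state whether or how bias was corrected. `plugin_mutual_information` is a hypothetical name.

```python
import numpy as np


def plugin_mutual_information(objects, rates):
    """I(X;Y) = H(X) - H(X|Y) in bits from the empirical joint histogram."""
    _, x = np.unique(objects, return_inverse=True)
    _, y = np.unique(rates, return_inverse=True)
    joint = np.zeros((x.max() + 1, y.max() + 1))
    np.add.at(joint, (x, y), 1.0)                 # joint counts
    joint /= joint.sum()
    px = joint.sum(axis=1)
    py = joint.sum(axis=0)

    def entropy(p):
        p = p[p > 0]
        return float(-np.sum(p * np.log2(p)))

    h_x = entropy(px)
    # H(X|Y) = sum over y of p(y) * H(X | Y = y)
    h_x_given_y = sum(py[j] * entropy(joint[:, j] / py[j]) for j in range(joint.shape[1]))
    return h_x - h_x_given_y


objects = np.repeat(np.arange(4), 10)
print(plugin_mutual_information(objects, objects * 2.0))  # 2.0 (rate fully determines object)
```

For reference, identifying one of 33 equiprobable objects requires log2(33) ≈ 5.04 bits.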

Table 5.

Average mutual information (encoded per neuron) by brain area

| Brain area | Mean MI in bits (95% CI) |
|---|---|
| Amygdala | 0.17 (0.03–0.64) |
| Hippocampus | 0.15 (0.02–0.83) |

Discussion

The present observations cast new light on the debate regarding distributed versus localized representation of objects by single neurons in the human brain. In the first session of a visual discrimination task with only six presentations of each image, a design that emphasizes initial object encoding, many neurons recorded in the MTL encoded the identities of multiple objects. We also found a smaller proportion of neurons that encoded only one object, supporting the existence of distinct encoding populations (one distributed and the other gnostic) and confirming the plausibility of long-held notions about dual representation schemes (Konorski, 1967, his p. 200). Our results, however, differ from prior models that sought to explicitly decode object identity from a few invariant MTL neurons (Quiroga et al., 2008). In such models, the number of neuronal spikes within certain time intervals (300–600, 300–1000, and 300–2000 ms) per trial was used as input to a decoding algorithm that predicted object identity, given the spike distributions of excluded trials. Compared with our statistical models, which predicted the identities of specific stimuli among many encoded by a single neuron, the leave-one-out decoding algorithm of Quiroga et al. (2007) would potentially confuse multiple objects whenever neurons encoded more than one stimulus, an ambiguity in prediction we acknowledge.

While the single-unit activity reported here is not as well isolated as that achieved in animal recordings (Hill et al., 2011), these techniques provide the highest spatial resolution yet achievable in the conscious human brain. Even if the SUA reported here is contaminated by noise, it is difficult to see how such contamination could create the appearance of a distributed code across a larger fraction of neurons; one would expect noise to decrease the number of neurons with apparent responses.

We note that the gnostic neurons described here are distinct from previously described neurons with invariant representations (Quiroga et al., 2005; Quian Quiroga et al., 2009). Although gnostic neurons encoded information about single objects in our linear models, they failed to meet the previously applied criteria for invariant responses, namely responses to a single object that far exceed baseline (Quiroga et al., 2005). Applying those criteria to both single- and multi-unit activity, as combined in prior reports (Quiroga et al., 2005; Quian Quiroga et al., 2009), we found only one cluster of multi-unit activity (out of a grand total of 1573, or 0.06%) that met the criteria for invariant single-neuron representations, whereas these same prior reports found 5% of neurons with invariant representations (Quiroga et al., 2005; Quian Quiroga et al., 2009). What is the cause of this 78-fold difference in the frequency of single-neuron invariant representations?

One possibility is that a greater number of separate objects was shown in the screening sessions of prior work, 71–114 (mean = 93.9; Quiroga et al., 2005), compared with the 33 objects shown in our single-session design. Assuming, however, that the objects for which recorded neurons are selective would be drawn randomly from a set of possible objects with which the subject is familiar, this hypothesis accounts for only a factor of 93.9/33 ≈ 3. An additional factor of ∼26 (78/3) remains.

While there are several possible technical and experimental factors that could explain this remaining difference (among them a higher percentage of faces in prior experiments [Mormann et al., 2011] or differences in the fraction of principal cells recorded at different medical centers [Ison et al., 2011]), one intriguing hypothesis is that it may reflect differences in how many times objects were recently viewed. The present study involved recently unseen views of objects (i.e., initial encoding during six presentations of each image), whereas prior human experiments included higher presentation counts across several sessions (Quiroga et al., 2005; Quian Quiroga et al., 2009; at least 12 presentations, 6 in screening and 6 in test, and often up to 50 presentations when the same stimuli were used in other experiments in the same subjects) that may have enhanced a more sustained neuronal selectivity for frequently viewed images (i.e., visual learning; Logothetis et al., 1995; Freedman et al., 2006). In similar fashion, the observed image selectivity in MTL neurons likely also reflects contributions from recognition memory, as each new incarnation of a previously seen “concept” (e.g., different photographs of the same person) will likely evoke both familiarity, which has been documented in previous single-unit recording studies (Rutishauser et al., 2006; Jutras and Buffalo, 2010), and episodic memory (Wixted et al., 2014). Thus, the divergent results may reflect different stages of episodic representation of when and where an object was viewed, an assumed primary coding function of the MTL (Squire et al., 2004). Given that repetition has been reported to suppress neural responses in the amygdala (Pedreira et al., 2010), this hypothesis clearly requires further testing in experiments designed to observe changes in neural responses, and the sharpening of neural representation in particular, as objects are presented an increasing number of times.

Finally, our results broadly agree with those of nonhuman primate studies, in which a substantial body of work suggests that neurons, or groups of neurons, with diverse response profiles form the substrate of object representation (e.g., in monkey temporal cortex; Baylis et al., 1985; Rolls and Tovee, 1995; Baddeley et al., 1997; Treves et al., 1999; Franco et al., 2007). Some researchers have postulated that when such distributed responses reach a sufficient level of independence, there may be an exponential increase in representational capacity (Rolls, 2007), making it possible for a comparatively meager fraction of neurons to encode a large number of diverse stimuli. Although our findings in the hippocampus differ from those of other animal studies, e.g., those in which rat hippocampal place cells show a greater degree of sparsity during spatial encoding (O'Keefe, 1976; Wilson and McNaughton, 1993; Moser et al., 2008), our results overall support an initial object coding scheme in the human MTL that is broadly selective and reflects a lesser degree of sparsity.

Footnotes

This research was funded by National Institutes of Health Grant 1R21DC009871-0, the Barrow Neurological Foundation, and the Arizona Biomedical Research Institute (09084092). We thank the patients at the Barrow Neurological Institute who volunteered for these experiments and E. Cabrales for technical assistance. We also thank E. Niebur and S. Macknik for early comments on this manuscript.

The authors declare no competing financial interests.

References

- Abbott LF, Rolls ET, Tovee MJ. Representational capacity of face coding in monkeys. Cereb Cortex. 1996;6:498–505. doi: 10.1093/cercor/6.3.498. [DOI] [PubMed] [Google Scholar]

- Baddeley R, Abbott LF, Booth MC, Sengpiel F, Freeman T, Wakeman EA, Rolls ET. Responses of neurons in primary and inferior temporal visual cortices to natural scenes. Proc Biol Sci. 1997;264:1775–1783. doi: 10.1098/rspb.1997.0246. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Barlow HB. Single units and sensation: a neuron doctrine for perceptual psychology? Perception. 1972;1:371–394. doi: 10.1068/p010371. [DOI] [PubMed] [Google Scholar]

- Baylis GC, Rolls ET, Leonard CM. Selectivity between faces in the responses of a population of neurons in the cortex in the superior temporal sulcus of the monkey. Brain Res. 1985;342:91–102. doi: 10.1016/0006-8993(85)91356-3.

- Bowers JS. On the biological plausibility of grandmother cells: implications for neural network theories in psychology and neuroscience. Psychol Rev. 2009;116:220–251. doi: 10.1037/a0014462.

- Celeux G, Govaert G. A classification EM algorithm for clustering and two stochastic versions. Comput Stat Data Anal. 1992;14:315–332. doi: 10.1016/0167-9473(92)90042-E.

- Celeux G, Govaert G. Gaussian parsimonious clustering models. Pattern Recogn. 1995;28:781–793. doi: 10.1016/0031-3203(94)00125-6.

- Chambers JM, Cleveland WS, Kleiner B, Tukey PA. Graphical methods for data analysis. Belmont, CA: Wadsworth and Brooks; 1983.

- Cover TM, Thomas JA. Elements of information theory. Ed 2. New York: Wiley-Interscience; 2006.

- Daselaar SM, Fleck MS, Cabeza R. Triple dissociation in the medial temporal lobes: recollection, familiarity, and novelty. J Neurophysiol. 2006;96:1902–1911. doi: 10.1152/jn.01029.2005.

- Desimone R. Neural mechanisms for visual memory and their role in attention. Proc Natl Acad Sci U S A. 1996;93:13494–13499. doi: 10.1073/pnas.93.24.13494.

- Dobson AJ, Barnett AG. An introduction to generalized linear models. Boca Raton, FL: Chapman and Hall/CRC; 2008.

- Dymond AM, Babb TL, Kaechele LE, Crandall PH. Design considerations for the use of fine and ultrafine depth brain electrodes in man. Biomed Sci Instrum. 1972;9:1–5.

- Erdfelder E, Faul F, Buchner A. GPOWER: a general power analysis program. Behav Res Methods Instrum Comput. 1996;28:1–11. doi: 10.3758/BF03203630.

- Franco L, Rolls ET, Aggelopoulos NC, Jerez JM. Neuronal selectivity, population sparseness, and ergodicity in the inferior temporal visual cortex. Biol Cybern. 2007;96:547–560. doi: 10.1007/s00422-007-0149-1.

- Freedman DJ, Riesenhuber M, Poggio T, Miller EK. Experience-dependent sharpening of visual shape selectivity in inferior temporal cortex. Cereb Cortex. 2006;16:1631–1644. doi: 10.1093/cercor/bhj100.

- Fried I, Wilson CL, Maidment NT, Engel J Jr, Behnke E, Fields TA, MacDonald KA, Morrow JW, Ackerson L. Cerebral microdialysis combined with single-neuron and electroencephalographic recording in neurosurgical patients. Technical note. J Neurosurg. 1999;91:697–705. doi: 10.3171/jns.1999.91.4.0697.

- Gonsalves BD, Kahn I, Curran T, Norman KA, Wagner AD. Memory strength and repetition suppression: multimodal imaging of medial temporal cortical contributions to recognition. Neuron. 2005;47:751–761. doi: 10.1016/j.neuron.2005.07.013.

- Hill DN, Mehta SB, Kleinfeld D. Quality metrics to accompany spike sorting of extracellular signals. J Neurosci. 2011;31:8699–8705. doi: 10.1523/JNEUROSCI.0971-11.2011.

- Hosmer DW, Lemeshow S. Applied logistic regression. New York: Wiley; 2006.

- Hummel JE. Localism as a first step toward symbolic representation. Behav Brain Sci. 2000;23:480–481. doi: 10.1017/S0140525X0036335X.

- Ison MJ, Mormann F, Cerf M, Koch C, Fried I, Quiroga RQ. Selectivity of pyramidal cells and interneurons in the human medial temporal lobe. J Neurophysiol. 2011;106:1713–1721. doi: 10.1152/jn.00576.2010.

- Jutras MJ, Buffalo EA. Recognition memory signals in the macaque hippocampus. Proc Natl Acad Sci U S A. 2010;107:401–406. doi: 10.1073/pnas.0908378107.

- Kawasaki H, Adolphs R, Oya H, Kovach C, Damasio H, Kaufman O, Howard M 3rd. Analysis of single-unit responses to emotional scenes in human ventromedial prefrontal cortex. J Cogn Neurosci. 2005;17:1509–1518. doi: 10.1162/089892905774597182.

- Konorski J. Integrative activity of the brain: an interdisciplinary approach. Chicago: University of Chicago; 1967.

- Kreiman G, Koch C, Fried I. Category-specific visual responses of single neurons in the human medial temporal lobe. Nat Neurosci. 2000;3:946–953. doi: 10.1038/78868.

- Law JR, Flanery MA, Wirth S, Yanike M, Smith AC, Frank LM, Suzuki WA, Brown EN, Stark CE. fMRI activity during the gradual acquisition and expression of paired associate memory. J Neurosci. 2005;25:5720–5729. doi: 10.1523/JNEUROSCI.4935-04.2005.

- Lennie P. The cost of cortical computation. Curr Biol. 2003;13:493–497. doi: 10.1016/S0960-9822(03)00135-0.

- Logothetis NK, Pauls J, Poggio T. Shape representation in the inferior temporal cortex of monkeys. Curr Biol. 1995;5:552–563. doi: 10.1016/S0960-9822(95)00108-4.

- Maindonald JH, Braun JW. Data analysis and graphics using R: an example-based approach. New York: Cambridge UP; 2003.

- Mehta AD, Labar D, Dean A, Harden C, Hosain S, Pak J, Marks D, Schwartz TH. Frameless stereotactic placement of depth electrodes in epilepsy surgery. J Neurosurg. 2005;102:1040–1045. doi: 10.3171/jns.2005.102.6.1040.

- Misra A, Burke JF, Ramayya AG, Jacobs J, Sperling MR, Moxon KA, Kahana MJ, Evans JJ, Sharan AD. Methods for implantation of micro-wire bundles and optimization of single/multi-unit recordings from human mesial temporal lobe. J Neural Eng. 2014;11:026013. doi: 10.1088/1741-2560/11/2/026013.

- Mormann F, Kornblith S, Quiroga RQ, Kraskov A, Cerf M, Fried I, Koch C. Latency and selectivity of single neurons indicate hierarchical processing in the human medial temporal lobe. J Neurosci. 2008;28:8865–8872. doi: 10.1523/JNEUROSCI.1640-08.2008.

- Mormann F, Dubois J, Kornblith S, Milosavljevic M, Cerf M, Ison M, Tsuchiya N, Kraskov A, Quiroga RQ, Adolphs R, Fried I, Koch C. A category-specific response to animals in the right human amygdala. Nat Neurosci. 2011;14:1247–1249. doi: 10.1038/nn.2899.

- Moser EI, Kropff E, Moser MB. Place cells, grid cells, and the brain's spatial representation system. Annu Rev Neurosci. 2008;31:69–89. doi: 10.1146/annurev.neuro.31.061307.090723.

- O'Keefe J. Place units in the hippocampus of the freely moving rat. Exp Neurol. 1976;51:78–109. doi: 10.1016/0014-4886(76)90055-8.

- Pedreira C, Mormann F, Kraskov A, Cerf M, Fried I, Koch C, Quiroga RQ. Responses of human medial temporal lobe neurons are modulated by stimulus repetition. J Neurophysiol. 2010;103:97–107. doi: 10.1152/jn.91323.2008.

- Quian Quiroga R, Kraskov A, Koch C, Fried I. Explicit encoding of multimodal percepts by single neurons in the human brain. Curr Biol. 2009;19:1308–1313. doi: 10.1016/j.cub.2009.06.060.

- Quiroga RQ, Reddy L, Kreiman G, Koch C, Fried I. Invariant visual representation by single neurons in the human brain. Nature. 2005;435:1102–1107. doi: 10.1038/nature03687.

- Quiroga RQ, Reddy L, Koch C, Fried I. Decoding visual inputs from multiple neurons in the human medial temporal lobe. J Neurophysiol. 2007;98:1997–2007. doi: 10.1152/jn.00125.2007.

- Quiroga RQ, Kreiman G, Koch C, Fried I. Sparse but not ‘grandmother-cell’ coding in the medial temporal lobe. Trends Cogn Sci. 2008;12:87–91. doi: 10.1016/j.tics.2007.12.003.

- Rolls ET. The representation of information about faces in the temporal and frontal lobes. Neuropsychologia. 2007;45:124–143. doi: 10.1016/j.neuropsychologia.2006.04.019.

- Rolls ET, Deco G. Computational neuroscience of vision. New York: Oxford UP; 2002.

- Rolls ET, Tovee MJ. Sparseness of the neuronal representation of stimuli in the primate temporal visual cortex. J Neurophysiol. 1995;73:713–726. doi: 10.1152/jn.1995.73.2.713.

- Rolls ET, Treves A. Neural networks and brain function. New York: Oxford UP; 1998.

- Rutishauser U, Mamelak AN, Schuman EM. Single-trial learning of novel stimuli by individual neurons of the human hippocampus-amygdala complex. Neuron. 2006;49:805–813. doi: 10.1016/j.neuron.2006.02.015.

- Scoville WB, Milner B. Loss of recent memory after bilateral hippocampal lesions. J Neurol Neurosurg Psychiatry. 1957;20:11–21. doi: 10.1136/jnnp.20.1.11.

- Squire LR, Stark CE, Clark RE. The medial temporal lobe. Annu Rev Neurosci. 2004;27:279–306. doi: 10.1146/annurev.neuro.27.070203.144130.

- Steinmetz PN. Alternate task inhibits single neuron category selective responses in the human hippocampus while preserving selectivity in the amygdala. J Cogn Neurosci. 2009;21:347–358. doi: 10.1162/jocn.2008.21017.

- Steinmetz PN, Thorp C. Testing for effects of different stimuli on neuronal firing relative to background activity. J Neural Eng. 2013;10:056019. doi: 10.1088/1741-2560/10/5/056019.

- Steinmetz PN, Cabrales E, Wilson MS, Baker CP, Thorp CK, Smith KA, Treiman DM. Neurons in the human hippocampus and amygdala respond to both low and high level image properties. J Neurophysiol. 2011;105:2874–2884. doi: 10.1152/jn.00977.2010.

- Steinmetz PN, Wait SD, Lekovic GP, Rekate HL, Kerrigan JF. Firing behavior and network activity of single neurons in human epileptic hypothalamic hamartoma. Front Neurol. 2013;4:210. doi: 10.3389/fneur.2013.00210.

- Thorp CK, Steinmetz PN. Interference and noise in human intracranial microwire recordings. IEEE Trans Biomed Eng. 2009;56:30–36. doi: 10.1109/TBME.2008.2006009.

- Thorpe S. Local vs distributed coding. Intellectica. 1989;8:3–40.

- Thorpe S. Localized versus distributed representations. In: Arbib M, editor. The handbook of brain theory and neural networks. Cambridge, MA: MIT; 1995. p. 550.

- Treves A, Panzeri S, Rolls ET, Booth M, Wakeman EA. Firing rate distributions and efficiency of information transmission of inferior temporal cortex neurons to natural visual stimuli. Neural Comput. 1999;11:601–632. doi: 10.1162/089976699300016593.

- Truccolo W, Eden UT, Fellows MR, Donoghue JP, Brown EN. A point process framework for relating neural spiking activity to spiking history, neural ensemble, and extrinsic covariate effects. J Neurophysiol. 2005;93:1074–1089. doi: 10.1152/jn.00697.2004.

- Valdez AB, Hickman EN, Treiman DM, Smith KA, Steinmetz PN. A statistical method for predicting seizure onset zones from human single-neuron recordings. J Neural Eng. 2013;10:016001. doi: 10.1088/1741-2560/10/1/016001.

- Viskontas IV, Knowlton BJ, Steinmetz PN, Fried I. Differences in mnemonic processing by neurons in the human hippocampus and parahippocampal regions. J Cogn Neurosci. 2006;18:1654–1662. doi: 10.1162/jocn.2006.18.10.1654.

- Wiggs CL, Martin A. Properties and mechanisms of perceptual priming. Curr Opin Neurobiol. 1998;8:227–233. doi: 10.1016/S0959-4388(98)80144-X.

- Wild J, Prekopcsak Z, Sieger T, Novak D, Jech R. Performance comparison of extracellular spike sorting algorithms for single-channel recordings. J Neurosci Methods. 2012;203:369–376. doi: 10.1016/j.jneumeth.2011.10.013.

- Wilson MA, McNaughton BL. Dynamics of the hippocampal ensemble code for space. Science. 1993;261:1055–1058. doi: 10.1126/science.8351520.

- Wixted JT, Squire LR, Jang Y, Papesh MH, Goldinger SD, Kuhn JR, Smith KA, Treiman DM, Steinmetz PN. Sparse and distributed coding of episodic memory in neurons in the human hippocampus. Proc Natl Acad Sci U S A. 2014;111:9621–9626. doi: 10.1073/pnas.1408365111.

- Yassa MA, Stark CE. Multiple signals of recognition memory in the medial temporal lobe. Hippocampus. 2008;18:945–954. doi: 10.1002/hipo.20452.