Abstract

Adolescent decision-making is a topic of great public and scientific interest. However, much of the neuroimaging research in this area examines only one facet of decision-making (e.g., neural responses to anticipation or receipt of monetary rewards). Few studies have directly examined the processes that occur immediately before a decision between two options with varied and unpredictable potential rewards and penalties. Understanding adolescent decision-making from this vantage point may prove critical to reducing risky behavior and improving developmental outcomes. In this study, participants aged 14–16 years played a driving simulation game while undergoing fMRI. Results indicated that activity in ventral striatum preceded risky decisions and that activity in right inferior frontal gyrus (rIFG) preceded safe decisions. Furthermore, participants who reported higher sensation-seeking and greater sensitivity to reward and punishment showed lower rIFG activity during safe relative to risky decisions. Finally, over successive games, rIFG activity preceding risky decisions decreased, whereas thalamus and caudate activity increased during positive feedback (taking a risk without crashing). These results indicate that regions traditionally associated with reward processing and inhibition not only drive risky decision-making in the moment but also contribute to learning about risk tradeoffs during adolescence.

INTRODUCTION

The decisions adolescents face in daily life often involve some level of risk. For example, an adolescent may need to assess whether or not to binge drink at a party at the risk of negative physical and social consequences. Choices like these involve a gamble: choose the safe option with less potential for reward (e.g., less social acceptance and conformity) or the risky option with a possibility for more reward, but also with a possibility for more loss (e.g., health risks, negative legal consequences).

Decisions involving risk are made throughout the lifespan, but adolescence is a particularly interesting developmental period in which to study risky decision-making. Adolescents encounter many new high-risk contexts, such as situations involving drugs, cars, or peers, each with the potential for harmful emotional or physical outcomes, and adolescents do engage in high levels of risky behavior in situations like these (Eaton et al., 2012; Simons-Morton et al., 2011). Because of the possible emotional and physical consequences of risk-taking, it is crucial to further our understanding of how adolescents make decisions when risk is involved.

A popular hypothesis is that heightened reward sensitivity during adolescence makes teenagers more vulnerable to bad or suboptimal decisions when faced with real-life gambles, such as choosing to drink and drive, because a disproportionately developed “hot” motivational system outpaces an underdeveloped “cold” cognitive control system (Albert & Steinberg, 2011; Casey, Jones, & Somerville, 2011; Casey & Jones, 2010; Somerville, Jones, & Casey, 2010). With the exception of a few studies (Cho et al., 2012; Bjork, Smith, Chen, & Hommer, 2010; Bjork et al., 2004), most research comparing adolescents with children or adults supports the idea that adolescents are more sensitive to reward at the neural level, although this sensitivity is sometimes found during anticipation of reward (Geier, Terwilliger, Teslovich, Velanova, & Luna, 2010; Van Leijenhorst, Zanolie, et al., 2010; Galvan, Hare, Voss, Glover, & Casey, 2007) and at other times during receipt of reward (Cohen et al., 2010; Van Leijenhorst, Zanolie, et al., 2010; Ernst et al., 2005). Hoogendam and colleagues (2013) found age-related differences in both anticipation and outcome, with anticipatory activation across many regions increasing with age and outcome activation across many regions decreasing with age, supporting both sides of the hypoactivation-versus-hyperactivation debate.

Much of this previous work with implications for adolescent risk-taking has been designed to assess either passive or instrumental reward processing, but not necessarily actual decision-making behavior (Richards, Plate, & Ernst, 2013). Many adolescent studies use variants of the well-validated monetary incentive delay task (Knutson, Fong, Adams, Varner, & Hommer, 2001), which induces anticipation of and response to both gain and loss (Bjork, Chen, Smith, & Hommer, 2010; Galvan et al., 2006, 2007; Bjork et al., 2004). Although this type of task effectively characterizes the processes involved in anticipating and receiving instrumentally obtained rewards, it does not probe reward-guided decision-making, the behavior of concern that motivates much of this work. Furthermore, even in true decision-making paradigms, decisions have rarely involved a tradeoff between a risky and a safe option; instead, they have often involved two equally lucrative options or a clearly optimal one (Cohen et al., 2010; Van Leijenhorst, Crone, & Bunge, 2006; Ernst et al., 2005; May et al., 2004). One task that does include risky and safe options is the wheel-of-fortune task. However, studies using this task have analyzed only equal-probability choices (Ernst et al., 2005) or only adult participants (Ernst et al., 2004). This leaves only a few studies, reviewed in greater detail below, that examine adolescent risky decision-making more comprehensively, encompassing multiple features of real-world adolescent decision-making (Chein, Albert, O’Brien, Uckert, & Steinberg, 2011; Op de Macks et al., 2011; Van Leijenhorst, Gunther Moor, et al., 2010; Bjork, Smith, Danube, & Hommer, 2007; Eshel, Nelson, Blair, Pine, & Ernst, 2007). In this study, we extended this work by adapting the Stoplight Task, a driving simulation task used in the fMRI study by Chein and colleagues (2011).

Our first goal was thus to characterize the processes propelling an adolescent to make a particular choice during a decision involving risk. Consider two situations: one where an adolescent chooses the risky option and one where he or she chooses the safe option. In the seconds preceding a decision, the options as well as the external context may be the same, yet the adolescent behaves differently. What neural mechanisms support these differences in behavior from decision to decision? Only a few studies have begun to address this question. In one study using a monetary decision-making task, contrasting risky with safe decisions yielded greater activity in regions traditionally associated with conflict monitoring and cognitive control (dorsal anterior cingulate cortex [dACC] and ventrolateral prefrontal cortex [vlPFC]), particularly for adults versus adolescents (Eshel et al., 2007). However, in this task, the probability and magnitude of reward were known explicitly.

Previous research has demonstrated that the brain processes risk differently when outcome probabilities are ambiguous (Krain, Wilson, Arbuckle, Castellanos, & Milham, 2006), that adolescents have a higher tolerance for ambiguous probabilities (Tymula, Belmaker, Glimcher, & Levy, 2013), and that individual preferences for unknown probabilities are also reflected in brain responses to risk (Huettel, Stowe, Gordon, Warner, & Platt, 2006). Furthermore, real-world gambles tend to involve unknown probabilities; for example, an adolescent who decides to speed does not know the true likelihood of causing an accident. Although some paradigms involve unknown risk probabilities (e.g., the Balloon Analogue Risk Task; Lejuez et al., 2002; see also Tymula et al., 2013), only a few studies have investigated the brain processes underlying decision-making in these contexts of unknown probability. This work has compared brain activity across different levels of risk in financial risk-taking tasks (Van Leijenhorst, Gunther Moor, et al., 2010; Bjork et al., 2007), across social contexts during the Stoplight Task (Chein et al., 2011), between individuals with varying family obligations (Telzer, Fuligni, Lieberman, & Galvan, 2013), and between individuals with and without traumatic brain injury (Chiu et al., 2012). To extend this work, we compared decision-related brain activity across identical contexts (a yellow light) in which adolescents ultimately made different choices (stop or go), to specifically identify the brain processes leading to safe versus risky behavior when outcome probabilities were unknown. We hypothesized that response-contingent activity in ventral striatum (VS) and lateral prefrontal cortex (PFC) would distinguish between the two types of behavior, given these regions’ previous associations with reward processing and cognitive control, respectively (Aron, Robbins, & Poldrack, 2004; Knutson, Westdorp, Kaiser, & Hommer, 2000).
Although other regions of PFC may also be involved, lateral PFC in particular has been associated with response inhibition, a cognitive control process that is likely a component of inhibiting the action of driving through a yellow light (Aron et al., 2004). However, in our analyses we did not restrict our search to these hypothesized regions of interest, instead interrogating the whole brain.

A second question we aimed to answer is how these brain processes differ between individual adolescents. For example, if an adolescent tends to engage in more risky behavior in everyday life or during the task itself, does this correlate with different activity during risky and safe decisions? Only a few studies, including those we review below, have examined how individual differences relate to differential neural activity during decision-making and reward processing. First, it should be noted that a growing body of work has begun to relate reward processing to various syndromes characterized by externalizing behaviors (e.g., ADHD and risk for substance use; Cservenka & Nagel, 2012; Rubia et al., 2009; Scheres, Milham, Knutson, & Castellanos, 2007). Furthermore, hypersensitivity to rewards in the striatum has been observed in adolescents with a temperament of behavioral inhibition (Guyer et al., 2006) as well as in adolescent social phobia (Guyer, 2012). However, we sought to understand individual differences in decision-making and reward processing in typically developing adolescents. Several studies investigating these differences have demonstrated that adolescents reporting more risky behavior (Galvan et al., 2007) or externalizing behaviors (Bjork, Chen, et al., 2010) in everyday life showed more anticipatory reward-related activity during the monetary incentive delay task. In the decision-making realm, several studies have demonstrated less risk-related dACC activity for those taking more risks (Van Leijenhorst, Gunther Moor, et al., 2010; Eshel et al., 2007). In our study, we likewise investigated how individual differences in both self-reported everyday behavior and laboratory-assessed behavior correlated with differences in decision-related activity.

Our third aim was to explore how adolescents’ neural responses during decision-making and reward processing change as a function of experience. Risky decisions are rarely made only once in a lifetime; instead, one must learn to navigate risky situations that have been encountered before. Although most real-life decisions involve unknown outcome probabilities, expectations about the outcomes are adjusted as a decision is encountered more often. This updating depends on the so-called prediction error signal, and adolescents’ hyperresponsivity to reward has been explained in terms of an exaggerated neural response to such expectation violations (Cohen et al., 2010). Previous research demonstrates that adolescents’ risky behavior may be related to previously encountered outcomes (Katz, Fromme, & D’Amico, 2000), suggesting that neural responses may also change to accommodate past experiences. Even when there is no clear optimal decision, probabilistic cues provided by feedback must be integrated into the adolescent’s expectations about the task. One particularly relevant study found that the extent to which adolescents update their expectations on the basis of the information they are given appears to affect their decision-making (Van Duijvenvoorde, Jansen, Bredman, & Huizenga, 2012). Furthermore, the extent to which an individual adjusts subsequent expectations in response to negative feedback predicts connectivity between subcortical and cortical regions, further highlighting the need to consider how neural responses evolve over the course of an experiment (van den Bos, Cohen, Kahnt, & Crone, 2012). To this end, we investigated brain activity that changed as more decisions were made.
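To make the idea of prediction-error updating concrete, a minimal delta-rule (Rescorla–Wagner-style) sketch follows. It is purely illustrative and is not a model fitted in this study; the learning rate, the initial expectation, and the outcome coding (1 = crash, 0 = no crash) are hypothetical choices.

```python
def update_expectation(expected: float, outcome: float,
                       learning_rate: float = 0.2) -> tuple[float, float]:
    """One delta-rule step.

    outcome: 1.0 for a crash, 0.0 for no crash (hypothetical coding).
    Returns (prediction_error, updated_expectation).
    """
    prediction_error = outcome - expected          # the expectation violation
    updated = expected + learning_rate * prediction_error
    return prediction_error, updated


def run_updates(outcomes, initial: float = 0.5, learning_rate: float = 0.2):
    """Apply update_expectation to a sequence of outcomes; return the trajectory."""
    expected = initial
    trajectory = []
    for outcome in outcomes:
        prediction_error, expected = update_expectation(expected, outcome, learning_rate)
        trajectory.append((prediction_error, expected))
    return trajectory


# Example: a hypothetical sequence of Go outcomes (0 = no crash, 1 = crash).
for pe, expectation in run_updates([0, 0, 1, 0, 0]):
    print(f"prediction error = {pe:+.3f}, expected crash probability = {expectation:.3f}")
```

After a run of no-crash outcomes, each prediction error is negative and the expected crash probability drifts downward, which is one way a repeatedly unpunished risky choice could come to seem safer.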

Finally, it is important to note that adolescent reward processing has been assessed almost exclusively in tasks using financial incentives (for an exception, see Chein et al., 2011), which reflect only a fraction of the decisions that adolescents face in everyday life. Such differences between adolescent and adult life highlight the difficulty of comparing reward processing across age groups, because engagement with a particular experimental task may differ dramatically between age groups if the task demands are more relevant to one group than to another (Galvan, 2010). Thus, in this study, we focused on a narrow age range (ages 14–16) and used a driving simulation task (the Stoplight Task) that has previously been shown to engage this age group (Chein et al., 2011; Gardner & Steinberg, 2005). Our goal was not to compare adolescents with another age group but to characterize how the adolescent brain supports risky decision-making in an age-relevant task. Although no participants in our study held a driver’s license, so their behavior in our paradigm was unlikely to reflect previous driving experience, the results provide data on an activity in which this population will soon engage. Also, although this task was previously used in a neuroimaging study by Chein and colleagues (2011), this study examines decision-making processes in a unique manner: by directly comparing brain activity on trials where risky versus safe choices were made and examining the correlates of this activity, and by examining changes in brain activity over repeated iterations of the task.

In summary, this study used fMRI to investigate neural processes in adolescents performing a driving simulation that required them to make risky decisions. The study had three main goals. First, we wanted to characterize response-contingent neural activity during reward-guided decisions. Second, we aimed to identify which individual differences correlated with those neural responses, to better understand how brain responses relate to real-world behaviors. Finally, we explored how decision-related activity changed over the course of the task, to better understand how adolescents learn about risk tradeoffs with unknown probabilities.

METHODS

Participants

Twenty-seven adolescents (ages 14–16 years, mean age = 15.3, 13 girls) participated in the study and were compensated. Five participants were excluded from analyses due to computer malfunctions, and one was excluded due to excessive movement during the structural scan. An additional participant was excluded for choosing the risky option on only 2 of the 50 trials (i.e., for being a behavioral outlier, which is problematic for parameter estimation in neuroimaging analyses). The remaining 20 participants were included in the analyses described here (ages 14–16 years, mean age = 15.2, 12 girls). None of the participants held a driver’s license.

Behavioral Questionnaires

After scanning, all participants completed the Barratt Impulsiveness Scale-Version 11 (α = .83; Patton, Stanford, & Barratt, 1995), the Resistance to Peer Influence scale (RPI; α = .78; Steinberg & Monahan, 2007), the Sensitivity to Punishment and Sensitivity to Reward Questionnaire-Short Form (SPSRQ-S; α = .75; Torrubia, Avila, Moltó, & Caseras, 2001), and the Sensation Seeking Scale (SSS-V; α = .81; Zuckerman, 2007), as well as a composite of risk-taking (CRT) including questions about substance use, deviant peer affiliation, and antisocial behavior (αs = .94, .55, and .65 for these three subscales), developed by the Oregon Research Institute (Stormshak, Fosco, & Dishion, 2010).

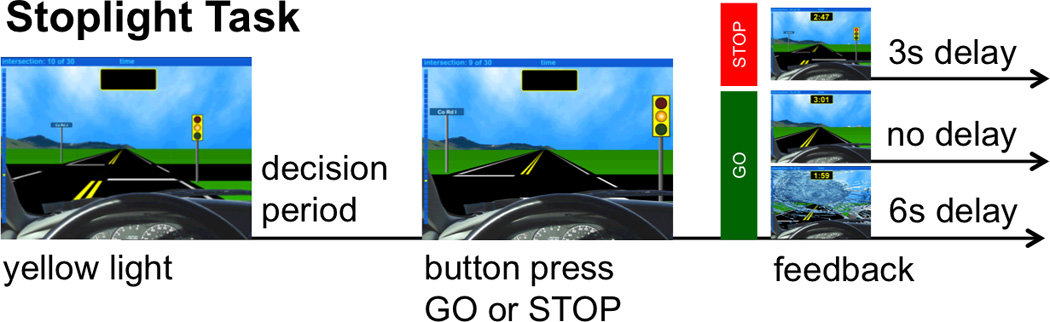

fMRI Paradigm

The behavioral task completed in the scanner was a driving simulation game called the Stoplight Task (Gardner & Steinberg, 2005). The perspective of the participant was that of a person driving a car (see Figure 1). While driving, participants encountered a series of stoplights. After each stoplight turned yellow (but before the intersection), participants had to decide whether to “stop” or “go” by pressing one of two buttons on a button box. If they chose to stop, they encountered a 3-sec delay. If they chose to go, they encountered either no delay (if they did not crash into another car) or a 6-sec delay (if they did crash into another car). The long delay was also accompanied by a simulation of a car crash, including a crashing noise and a broken windshield. If participants chose to go, they would reach the intersection between 1 and 1.5 sec after the onset of the yellow light. If they chose to go at every stoplight, they would crash 30% of the time, but they were not told this probability. They were encouraged to complete the game as quickly as possible, as if they were trying to arrive at a party before their friends left.
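The task parameters above imply a simple expected-cost calculation for each stoplight. The sketch below assumes that a Go without a crash adds no delay, a crash adds 6 sec, stopping adds 3 sec, and each Go decision crashes independently with probability .30 (the value used in the task, which participants were not told); the constant and function names are hypothetical.

```python
STOP_DELAY_S = 3.0   # delay after choosing to stop
CRASH_DELAY_S = 6.0  # delay after choosing to go and crashing
CRASH_PROB = 0.30    # crash probability per Go decision (unknown to participants)


def expected_delay(choice: str, crash_prob: float = CRASH_PROB) -> float:
    """Expected added delay (seconds) for a single stoplight decision."""
    if choice == "stop":
        return STOP_DELAY_S
    if choice == "go":
        # No delay without a crash; CRASH_DELAY_S with probability crash_prob.
        return crash_prob * CRASH_DELAY_S
    raise ValueError(f"unknown choice: {choice!r}")


# Crash probability at which going and stopping cost the same on average.
break_even_prob = STOP_DELAY_S / CRASH_DELAY_S

for choice in ("go", "stop"):
    print(choice, expected_delay(choice))
```

Under these assumptions, going is the risky option but not the costlier one on average: its expected delay (.30 × 6 = 1.8 sec) is below the 3-sec cost of stopping, and the two options break even only at a crash probability of .50. Over a 10-stoplight game, always going would average 18 sec of delay versus 30 sec for always stopping.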

Figure 1.

Participants completed five games of the Stoplight Task, each consisting of 10 stoplights. After each stoplight turned yellow, participants were instructed to press one of two buttons indicating their choice to “go” or “stop.” If they chose to “go,” they risked crashing and incurring a longer delay than stopping would.

A similar task has been used in several studies (Chein et al., 2011; Steinberg et al., 2008; Gardner & Steinberg, 2005). There were several minor differences between the paradigm in our study and the others using a version of the Stoplight Task. First, in our task, participants were instructed to always press a button to indicate whether to stop or go, whereas in the previous studies mentioned above, the choice to go through the yellow light was inferred from the lack of a button press. Second, our measure of risk was the percentage of trials during which participants chose to run the yellow light, rather than their stop latencies, a measure that was incorporated into the risk-taking measure used in at least two previous studies (Steinberg et al., 2008; Gardner & Steinberg, 2005). Finally, there was no experimental manipulation regarding social context in the data presented here, as there was in Chein and colleagues (2011).

Participants were told they were required to press a button after the yellow light and before the intersection to stop or go. However, consistent with Peake, Dishion, Stormshak, Moore, and Pfeifer (2013) and Chein et al. (2011), the experiment was programmed such that, if no button was pressed, the car would still go through the intersection; pressing nothing was thus equivalent to pressing the Go button. The 16 of 20 participants who took advantage of this design feature had a mean of 12 “passive Go” trials (out of a mean of 29 total Go trials), and across all 20 participants an average of 38% of Go trials were passive. Although a passive Go decision may differ in some ways from the more active decision of pressing the Go button, we included both passive and active Go trials in all analyses to preserve as many trials as possible and to be consistent with prior fMRI studies using this paradigm. Future studies using this paradigm should consider ways to discourage passive Go responses.
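The response-coding rule described above (no button press counts as Go) can be written as a small function. This is a sketch with hypothetical labels: each trial's response is recorded as "go", "stop", or None when no button was pressed. Averaging per-participant proportions is one possible way to summarize passive Go responding across a sample, not necessarily how the 38% figure was computed.

```python
from statistics import mean
from typing import Optional, Sequence


def code_decision(button: Optional[str]) -> tuple[str, bool]:
    """Return (decision, is_passive) for one trial.

    button is "go" or "stop" when a key was pressed and None when nothing
    was pressed (hypothetical labels). No press counts as a passive Go.
    """
    if button is None:
        return "go", True
    if button in ("go", "stop"):
        return button, False
    raise ValueError(f"unexpected response: {button!r}")


def passive_go_fraction(buttons: Sequence[Optional[str]]) -> float:
    """Proportion of one participant's Go trials that were passive."""
    passive_flags = [passive for decision, passive in map(code_decision, buttons)
                     if decision == "go"]
    return sum(passive_flags) / len(passive_flags) if passive_flags else 0.0


def mean_passive_fraction(participants: Sequence[Sequence[Optional[str]]]) -> float:
    """Average the per-participant proportions of passive Go trials."""
    return mean(passive_go_fraction(buttons) for buttons in participants)
```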

Five functional runs of data were collected, with each run as a distinct game consisting of 10 stoplights. After passing the tenth and final stoplight, a “Game Over” screen appeared, and participants were presented with the total time it took them to complete the game and how many times they crashed. The length of each game varied depending on participants’ decisions and the decision outcomes, but each run lasted approximately 2 min (M = 141.20 sec, SD = 5.20). Participants learned how to perform the task before the scanning session by watching a video of the task and being verbally instructed by the experimenter. Pilot testing demonstrated that, with a 30% probability of crashing at each stoplight, over five runs, adolescents made risky decisions approximately 50% of the time.

fMRI Data Acquisition

Data were acquired on a 3T Siemens Allegra MRI scanner at the Lewis Center for Neuroimaging at the University of Oregon. Five functional runs of T2*-weighted BOLD-EPI images were acquired using a gradient-echo sequence with repetition time (TR) = 2000 msec, echo time (TE) = 30 msec, flip angle = 80°, and 32 axial slices with interleaved acquisition, with slice thickness = 4 mm, in-plane resolution = 3.125 × 3.125 mm, matrix size = 64 × 64, field of view = 200 mm, and bandwidth = 2605 Hz/pixel. There were between 66 and 75 images per run, depending on the choices made during the task. During functional runs, acquisition was corrected for head motion using prospective acquisition correction (PACE).

High-resolution T1-weighted anatomical images were also collected with the MP-RAGE sequence, with TR = 2500 msec, inversion time = 1100 msec, TE = 4.38 msec, flip angle = 8°, 160 contiguous slices coplanar to the functional scans, with slice thickness = 1 mm, in-plane resolution = 1 × 1 mm, matrix size = 256 × 192, field of view = 256 × 192 mm, and bandwidth = 130 Hz/pixel. Field maps were also collected (same parameters as the EPIs, except TR = 500 msec, TE = 4.99 msec, flip angle = 55°, bandwidth = 1530 Hz/pixel) to later correct for inhomogeneities in the magnetic field.

fMRI Analysis

Data were skull-stripped using FSL and preprocessed using SPM8 (Wellcome Department of Cognitive Neurology, London, UK). Functional images were unwarped using the field maps, realigned to adjust for head motion, registered and warped into MNI space using DARTEL normalization procedures, and smoothed with a 6-mm FWHM Gaussian kernel. Images comprised 37,151 voxels, resliced to 3 × 3 × 3 mm. A high-pass filter with a 128-sec cutoff was applied to remove low-frequency fluctuations in the signal, and an AR(1) model was used to correct for serial autocorrelations.
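Some tools parameterize Gaussian smoothing by its standard deviation rather than its FWHM (for instance, `scipy.ndimage.gaussian_filter` takes `sigma`). The standard conversion, sigma = FWHM / (2√(2 ln 2)), is sketched below for the 6-mm kernel and 3-mm voxels reported above, along with the frequency cutoff implied by the 128-sec high-pass filter. This is orientation arithmetic only; expressing sigma in voxels assumes smoothing on the 3-mm resliced grid, and the sketch does not reproduce SPM's own implementation.

```python
import math


def fwhm_to_sigma(fwhm: float) -> float:
    """Standard deviation of a Gaussian with the given FWHM (same units)."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


SMOOTHING_FWHM_MM = 6.0
VOXEL_SIZE_MM = 3.0
HIGH_PASS_CUTOFF_S = 128.0

sigma_mm = fwhm_to_sigma(SMOOTHING_FWHM_MM)   # about 2.55 mm
sigma_vox = sigma_mm / VOXEL_SIZE_MM          # about 0.85 voxels on a 3-mm grid
high_pass_hz = 1.0 / HIGH_PASS_CUTOFF_S       # slower fluctuations (below ~0.0078 Hz) are removed

print(f"sigma = {sigma_mm:.3f} mm = {sigma_vox:.3f} voxels; cutoff = {high_pass_hz:.4f} Hz")
```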

In each participant’s fixed-effects analysis, a general linear model was created with four regressors of interest modeled as events: two decision regressors (Stop and Go) and two feedback regressors (Crash and No-Crash). The yellow light preceding a given decision (Stop or Go) served as the onset for that decision. The onset of the Crash event corresponded to the moment another car crashed into the participant’s car. No-Crash events had no clear onset time because they were, by definition, non-events; however, because actual Crash events occurred at most 2 sec after the yellow light, we modeled each No-Crash event as occurring 2 sec after the yellow light, by which point the outcome of the risky decision should have been clear. Although this onset did not correspond to a specific cue, it was the best available way to incorporate this type of feedback on participants’ risky decisions into our model. A fifth regressor modeled the Game Over period at the end of each run, from its onset until the end of the run. During this period, participants learned that the run was over and received feedback on how quickly they had completed it; this regressor was included to remove responses to this information from the implicit baseline. In addition, because we wanted to assess changes in brain activity as the games progressed, we entered functional run number (1 through 5) as a parametric modulator for each of the four conditions of interest (Stop, Go, Crash, and No-Crash). To permit parametric modulation by run number, the functional images for the five runs were concatenated and entered as a single session; four additional regressors of no interest were therefore added to account for mean-level differences across runs. Finally, six motion parameters, derived from the online acquisition correction, were included as regressors of no interest.
Only three participants had at least one pair of consecutive images between which there was more than 1.5 mm of motion, and this image-to-image motion did not exceed 5 mm. As such, no participant was excluded for excessive head motion during functional scans, and the six motion parameters accounted for any small residual variance due to head motion.
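The event model described above can be sketched with NumPy and SciPy: stick functions at each onset, an optional parametric modulator (run number), convolution with a hemodynamic response function, and sampling once per TR. This is an illustrative sketch, not the SPM8 implementation used in the study. The double-gamma HRF only approximates SPM's canonical shape; the 0.1-sec time grid and all onsets are hypothetical; the modulator is mean-centered, which we understand to be SPM's default; and the Stop, Crash, Game Over, run-constant, and motion regressors, as well as SPM's orthogonalization of modulators, are omitted for brevity.

```python
import numpy as np
from scipy.stats import gamma

TR = 2.0   # repetition time in seconds (from the acquisition parameters)
DT = 0.1   # fine time grid for building regressors (assumption of this sketch)


def canonical_hrf(dt: float = DT, length: float = 32.0) -> np.ndarray:
    """Double-gamma HRF resembling SPM's canonical shape: a gamma peak
    (shape 6) minus a 1/6-scaled gamma undershoot (shape 16)."""
    t = np.arange(0.0, length, dt)
    h = gamma.pdf(t, 6) - gamma.pdf(t, 16) / 6.0
    return h / h.sum()


def event_regressor(onsets, n_scans, modulator=None, tr=TR, dt=DT):
    """Stick functions at the event onsets (seconds), optionally weighted by a
    mean-centered parametric modulator, convolved with the HRF and sampled
    at the start of each TR."""
    n_fine = int(round(n_scans * tr / dt))
    if modulator is None:
        weights = np.ones(len(onsets))
    else:
        weights = np.asarray(modulator, dtype=float)
        weights = weights - weights.mean()  # mean-center the modulator
    sticks = np.zeros(n_fine)
    for onset, weight in zip(onsets, weights):
        idx = int(round(onset / dt))
        if 0 <= idx < n_fine:
            sticks[idx] += weight
    convolved = np.convolve(sticks, canonical_hrf(dt))[:n_fine]
    return convolved[:: int(round(tr / dt))]


# Hypothetical yellow-light onsets (seconds, concatenated timeline) for Go
# trials, and the run (game) in which each occurred.
go_onsets = [12.0, 40.0, 160.0, 300.0, 450.0, 600.0]
go_runs = [1, 1, 2, 3, 4, 5]
# Hypothetical subset of those Go trials that ended without a crash.
no_crash_yellow = [12.0, 160.0, 450.0, 600.0]
no_crash_runs = [1, 2, 4, 5]
no_crash_onsets = [t + 2.0 for t in no_crash_yellow]  # No-Crash modeled 2 s after yellow

n_scans = 360
design = np.column_stack([
    event_regressor(go_onsets, n_scans),                              # Go
    event_regressor(go_onsets, n_scans, modulator=go_runs),           # Go x run number
    event_regressor(no_crash_onsets, n_scans),                        # No-Crash
    event_regressor(no_crash_onsets, n_scans, modulator=no_crash_runs),  # No-Crash x run
])
print(design.shape)
```

Because the modulator is mean-centered, the modulated column captures only how the response changes across runs, leaving the average response to the unmodulated column.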

The model was convolved with the canonical hemodynamic response function, and the parameter estimates from the general linear model were used to create linear contrast images. These single-subject contrast images were then entered into group-level single-sample t tests. Monte Carlo simulations were conducted using AFNI AlphaSim to determine the minimum cluster size needed for a familywise error (FWE) rate of .05 given a voxelwise threshold of p = .001, and all reported results exceeded this threshold unless otherwise specified. Minimum cluster size varied slightly by analysis, ranging from 21 to 29 voxels, and specific thresholds are listed in all figures. We also used MarsBaR to extract parameter estimates from significant clusters in the group-level analyses for correlation with behavioral measures collected outside the scanner.
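The group-level logic described above (a voxelwise single-sample t test across participants, a voxelwise threshold of p = .001, then a minimum cluster size) can be sketched as follows. This is a simplified illustration, not SPM or AFNI code: the Monte Carlo step that AlphaSim uses to choose the cluster size is not reproduced, so `k_min` is simply supplied, and the face-connectivity cluster definition (SciPy's default for `ndimage.label` in 3D) is an assumption that may differ from the neighborhood used in the study.

```python
import numpy as np
from scipy import ndimage, stats


def one_sample_t_map(contrasts: np.ndarray) -> np.ndarray:
    """Voxelwise one-sample t test against zero across subjects.
    contrasts has shape (n_subjects, x, y, z)."""
    t_map, _ = stats.ttest_1samp(contrasts, popmean=0.0, axis=0)
    return t_map


def cluster_extent_threshold(t_map, df, p_voxel=0.001, k_min=29):
    """Keep suprathreshold voxels (one-tailed p < p_voxel) that belong to
    clusters of at least k_min face-connected voxels."""
    t_crit = stats.t.isf(p_voxel, df)
    supra = t_map > t_crit
    labels, n_clusters = ndimage.label(supra)  # default: 6-connectivity in 3D
    keep = np.zeros(t_map.shape, dtype=bool)
    for cluster_id in range(1, n_clusters + 1):
        cluster = labels == cluster_id
        if cluster.sum() >= k_min:
            keep |= cluster
    return keep, t_crit


# With 20 participants, df = 19 and the voxelwise threshold is t of about 3.58.
rng = np.random.default_rng(0)
t_map = one_sample_t_map(rng.normal(size=(20, 8, 8, 8)))
surviving, t_crit = cluster_extent_threshold(t_map, df=19)
print(round(float(t_crit), 3), int(surviving.sum()))
```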

RESULTS

Behavioral Results

Risk was measured as the proportion of decisions on which a participant chose the risky option (Go). The mean level of risk across runs was .56 (for Runs 1 through 5, Ms = .67, .52, .58, .52, and .53; SDs = .22, .23, .26, .24, and .22, respectively), and risk varied significantly across runs (F(4, 80) = 2.7, p = .04). Specifically, risk decreased significantly from the first run to the second run, but there were no significant differences between any other run and the runs preceding it.

The yellow light appeared between 1000 and 1500 msec before the intersection, giving participants limited time to react to the yellow light and respond with their choice of Stop or Go. Among participants who used the button to respond on Go trials, RTs for Stop trials (M = 369 msec, SD = 135) were significantly slower than RTs for Go trials (M = 295 msec, SD = 139; t(16) = 2.12, p = .05).

Among the behavioral measures (SSS, SPSRQ, RPI, CRT, impulsivity, and task riskiness), SSS correlated with SPSRQ (r(18) = .63, uncorrected p = .003), impulsivity (r(18) = .44, uncorrected p = .01), and CRT (r(18) = .46, uncorrected p = .04). SPSRQ also correlated negatively with RPI (r(18) = −.54, uncorrected p = .01), and impulsivity correlated with CRT (r(18) = .59, uncorrected p = .006). This population was particularly low in risk-taking, as evidenced by CRT responses indicating an average risk level of .26 (only 1 of 20 had smoked one cigarette in the past month; 3 of 20 had been to one or two parties). Only two participants reported a risk level greater than 1.3, and both were statistical outliers (these two participants reported more substance use, deviant peer affiliation, and antisocial behavior). With these two outliers removed, the CRT correlated with none of the other measures.

fMRI Results

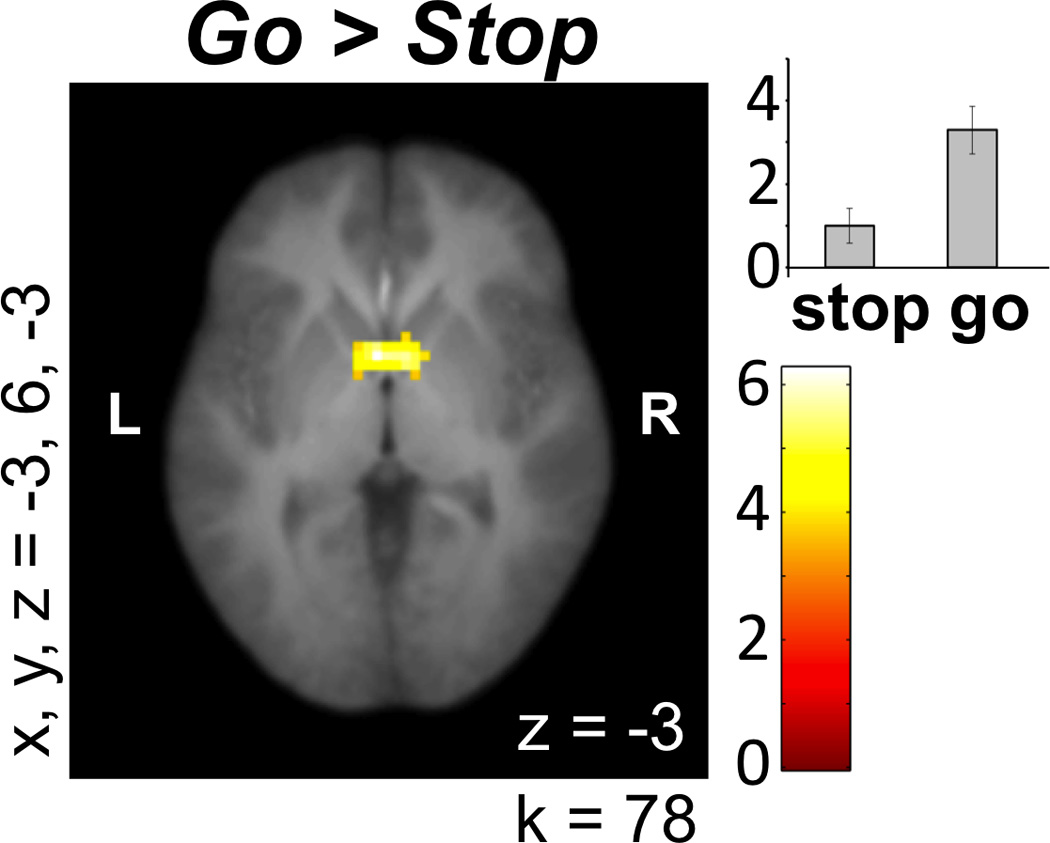

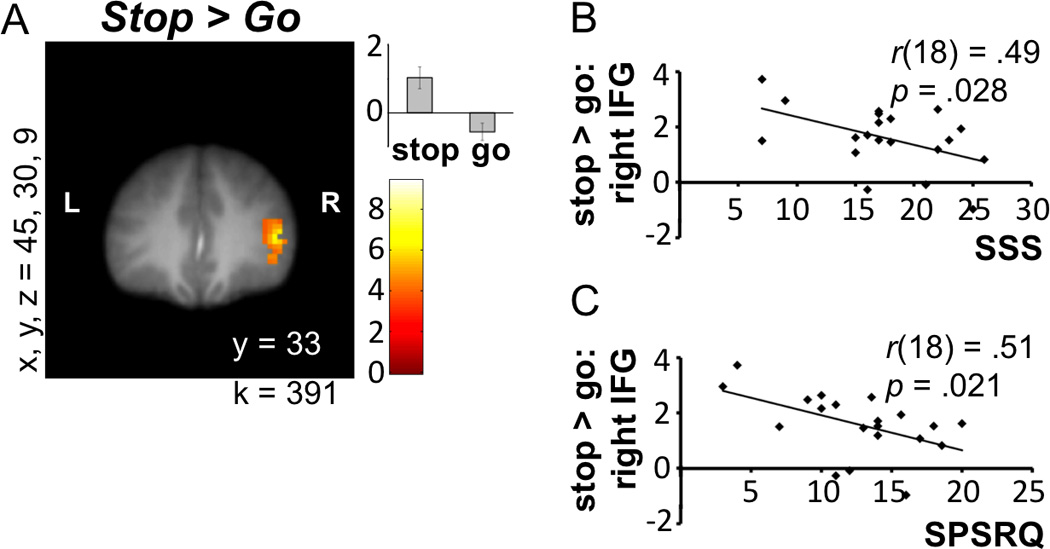

First, we contrasted the brain activity during Stop versus Go decisions using a single-sample t test. A single cluster in VS was found for the Go > Stop contrast (see Figure 2A). For the Stop > Go contrast, 10 clusters were found, including the hypothesized response in right inferior frontal gyrus (rIFG; see Figure 3A). The whole-brain results for these contrasts (Stop > Go and Go > Stop) are listed in Table 1.

Figure 2.

Coordinates for x, y, and z refer to the left–right, anterior–posterior, and inferior–superior coordinates in MNI space, and k represents cluster size. L and R refer to left and right. This figure depicts ventral striatal activity preceding Go decisions. Units in the bar graph are arbitrary.

Figure 3.

Coordinates for x, y, and z refer to the left–right, anterior–posterior, and inferior–superior coordinates in MNI space, and k represents cluster size. L and R refer to left and right. (A) rIFG activity preceding Stop decisions. (B) Correlation between rIFG activity during safe versus risky decisions and sensation-seeking (SSS). (C) Correlation between rIFG activity during safe versus risky decisions and sensitivity to reward and punishment (SPSRQ). Units in the bar graph are arbitrary.

Table 1.

Activity Preceding Go and Stop Decisions

| Contrast | Anatomical Region | x | y | z | k | t |
|---|---|---|---|---|---|---|
| Go > Stop | Bilateral ventral striatum | −3 | 6 | −3 | 78 | 6.23 |
| Stop > Go | R superior temporal gyrus | 57 | −42 | 12 | 913 | 8.95 |
| | L superior temporal gyrus | −57 | −39 | 12 | 557 | 7.60 |
| | R inferior frontal gyrus | 45 | 30 | 9 | 391 | 6.91 |
| | Bilateral cuneus | 0 | −87 | 21 | 678 | 7.85 |
| | R parahippocampal gyrus | 21 | −51 | −3 | 80 | 4.61 |
| | R lingual gyrus | 27 | −69 | −9 | 110 | 6.29 |
| | R inferior parietal lobule | 54 | −27 | 42 | 74 | 5.45 |
| | R precentral gyrus | 54 | −6 | 30 | 62 | 5.08 |
| | R precuneus | 6 | −57 | 36 | 58 | 5.35 |
| | R inferior parietal lobule | 36 | −45 | 42 | 40 | 4.82 |

Coordinates for x, y, and z refer to the left–right, anterior–posterior, and inferior–superior coordinates in MNI space, and k represents cluster size.

Voxelwise threshold: p = .001; cluster size: k > 29 contiguous voxels; L and R refer to left and right.

We conducted an analogous contrast for the Crash and No-Crash conditions. Crash > No-Crash yielded clusters in bilateral superior temporal gyrus and lingual gyrus, consistent with the Crash event being predominantly an auditory and visual event. No-Crash > Crash yielded clusters in bilateral putamen, bilateral middle frontal gyrus, and left angular gyrus. For the detailed whole-brain results of these contrasts, see Table 2.

Table 2.

Activity following Crash and No-Crash Outcomes

| Contrast | Anatomical Region | x | y | z | k | t |
|---|---|---|---|---|---|---|
| C > NC | Bilateral lingual gyrus | 6 | −84 | 0 | 785 | 8.80 |
| | R superior temporal gyrus | 54 | −18 | 3 | 211 | 6.64 |
| | L superior temporal gyrus | −51 | −18 | 0 | 85 | 4.91 |
| NC > C | R thalamus | 24 | −18 | 12 | 1411 | 7.52 |
| | L putamen | −24 | −9 | 18 | 763 | 6.53 |
| | L angular gyrus | −30 | −54 | 39 | 107 | 5.36 |
| | R middle frontal gyrus | 42 | 27 | 30 | 46 | 4.98 |
| | L cerebellum | −12 | −66 | −39 | 48 | 4.93 |
| | R posterior cingulate | 30 | −66 | 15 | 34 | 4.67 |
| | White matter | −36 | −45 | 3 | 35 | 5.18 |

Coordinates for x, y, and z refer to the left–right, anterior–posterior, and inferior–superior coordinates in MNI space, and k represents cluster size.

Voxelwise threshold: p = .001; cluster size: k > 29 contiguous voxels; L and R refer to left and right; C and NC represent Crash and No-Crash conditions.

Using MarsBaR, we extracted parameter estimates for the single ventral striatal cluster from Go > Stop (x, y, z = [−3, 6, −3]; k = 78). Because of its previous associations with self-control (Cohen, Berkman, & Lieberman, 2013; Aron et al., 2004), we also extracted the rIFG cluster from Stop > Go (x, y, z = [45, 30, 9]; k = 391). We then correlated these parameter estimates with both offline and online behavioral measures. Activity in rIFG for Stop > Go correlated negatively with SSS (r(18) = −.49, uncorrected p = .028; Figure 3B) and SPSRQ (r(18) = −.51, uncorrected p = .021; Figure 3C). There was also a marginally significant positive correlation between rIFG activity for Stop > Go and riskiness during the task (r(18) = .44, uncorrected p = .051) and a trend toward a positive correlation with RPI (r(18) = .41, uncorrected p = .07). Finally, VS activity for Go > Stop trended toward a positive correlation with SSS scores (r(18) = .41, uncorrected p = .075). None of the other survey measures correlated with VS or rIFG activity for Go versus Stop decisions. A Bonferroni-corrected threshold of p = .004 would be needed to account for multiple comparisons, but we report uncorrected p values here to include as much information as possible for this developing field.
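For readers checking the statistics above, the two-tailed p value for a Pearson correlation follows from t = r√(df / (1 − r²)) with df = n − 2. The sketch below also reconstructs the Bonferroni threshold under an assumption we infer rather than take from the text: that the family of tests is 2 clusters (VS and rIFG) × 6 behavioral measures (SSS, SPSRQ, RPI, CRT, impulsivity, and task riskiness), which gives .05 / 12 ≈ .004.

```python
import math

from scipy import stats


def pearson_p(r: float, df: int) -> float:
    """Two-tailed p value for a Pearson correlation r with df = n - 2."""
    t = r * math.sqrt(df / (1.0 - r * r))
    return 2.0 * stats.t.sf(abs(t), df)


# Assumed family of tests (inferred): 2 clusters x 6 behavioral measures.
N_CLUSTERS = 2
N_MEASURES = 6
bonferroni_alpha = 0.05 / (N_CLUSTERS * N_MEASURES)  # about 0.0042

p_rifg_sss = pearson_p(-0.49, df=18)  # reported r(18) = -.49
print(f"p = {p_rifg_sss:.3f}; Bonferroni threshold = {bonferroni_alpha:.4f}")
```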

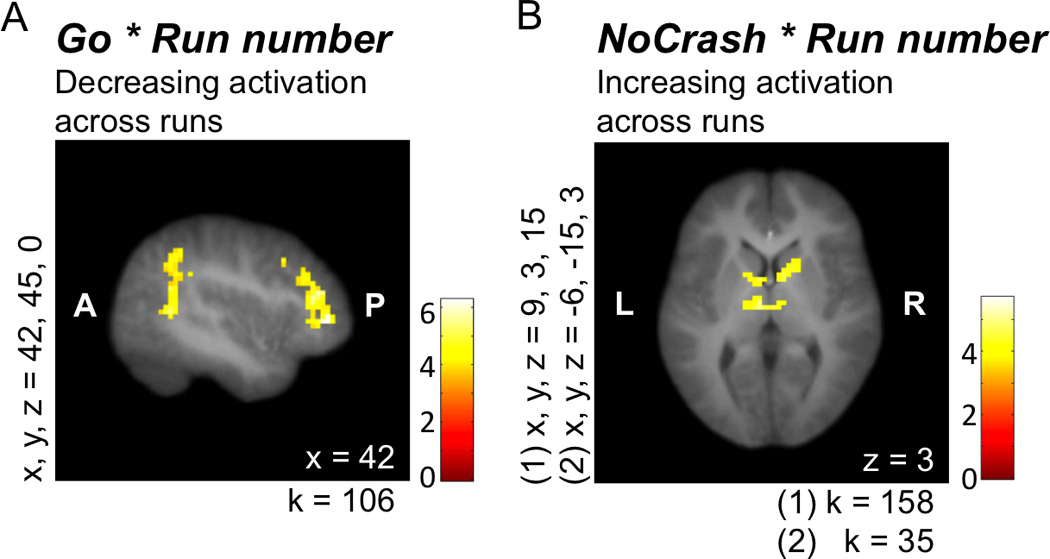

Finally, we used the parametrically modulated regressors to examine brain regions in which task-related activity increased or decreased over the course of the five functional runs. We refer to contrasts using these parametrically modulated regressors by appending INC (increase) or DEC (decrease) to indicate the direction of change (e.g., Go-INC refers to the contrast subtracting rest from the parametrically modulated Go regressor, which reflects increases over time; Go-DEC refers to the reverse of this contrast, which reflects decreases over time). For example, we brought the individual Go-INC contrasts to the group level to assess increases in Go-related brain activity over the course of the five runs. For the inverse contrast (Go-DEC), we found clusters in rIFG, right superior temporal gyrus, and left cerebellum (see Figure 4A; Table 3). In other words, activity during Go decisions decreased in these regions as the runs progressed. No regions showed statistically significant increases or decreases in activity during Stop decisions. We conducted similar analyses for the two types of feedback events (Crash and No-Crash). No changes were found for Crash events, but as runs progressed, No-Crash events were associated with increasingly greater activity in bilateral thalamus and bilateral caudate (see Figure 4B; Table 3).
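A linear parametric modulator of this kind can be sketched as follows. This is a hypothetical illustration, not the authors' pipeline: it assumes that events from all five runs enter a single model, that each event's modulator value is its run number, mean-centered (our assumption), and it omits HRF convolution. Under these assumptions, the INC and DEC contrasts are simply opposite-signed weights on the modulated column.

```python
from statistics import fmean

def run_modulator(event_runs):
    """Linear modulator over runs: each event's run number, mean-centered."""
    center = fmean(event_runs)
    return [run - center for run in event_runs]

def modulated_column(onset_scans, modulator, n_scans):
    """Stick regressor scaled by the modulator (HRF convolution omitted)."""
    column = [0.0] * n_scans
    for scan, value in zip(onset_scans, modulator):
        column[scan] += value
    return column

# Hypothetical two-column model for one condition: [Go main effect, Go x run].
CONTRASTS = {
    "Go-INC": [0, 1],   # tests activity increasing across runs
    "Go-DEC": [0, -1],  # sign-flipped: tests activity decreasing across runs
}

# Five hypothetical Go events, one per run, at hypothetical onset scans.
mod = run_modulator([1, 2, 3, 4, 5])
print(mod)
print(modulated_column([2, 10, 18, 26, 34], mod, n_scans=40))
```

Mean-centering keeps the modulated column from simply duplicating the main-effect regressor, so its weight reflects change across runs rather than overall activity.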

Figure 4.

Coordinates for x, y, and z refer to the left–right, anterior–posterior, and inferior–superior coordinates in MNI space, and k represents cluster size. A and P refer to anterior and posterior; L and R refer to left and right. (A) Regions, including lateral PFC, in which activity decreased as the runs progressed. (B) Activity in (1) bilateral caudate and (2) bilateral thalamus, which increased as the runs progressed.

Table 3.

Activity Changing as Runs Progress

| Contrast | Anatomical Region | x | y | z | k | t |
|---|---|---|---|---|---|---|
| Go-DEC | R inferior frontal gyrus | 42 | 45 | 0 | 106 | 5.99 |
| | R superior temporal gyrus | 45 | −51 | 9 | 185 | 6.01 |
| | L cerebellum | −33 | −69 | −27 | 35 | 5.80 |
| No-Crash-INC | Bilateral caudate | 9 | 3 | 15 | 158 | 5.37 |
| | Bilateral thalamus | −6 | −15 | 3 | 35 | 4.73 |

Coordinates for x, y, and z refer to the left–right, anterior–posterior, and inferior–superior coordinates in MNI space, and k represents cluster size.

Voxelwise threshold: p = .001; cluster size: k > 21 contiguous voxels; L and R refer to left and right.

We also examined whether the increases observed during No-Crash events were related to the decreases observed during Go decisions. Thalamus and caudate increases during No-Crash events correlated with rIFG decreases during Go decisions (rs(18) = −.51 and −.58, uncorrected ps = .02 and .0076, respectively). Thalamus increases also correlated with right superior temporal gyrus decreases (r(18) = −.48, uncorrected p = .03). These correlations were selective: neither of the increasing regions (thalamus or caudate) correlated with the decreases observed in cerebellum (rs(18) = .16 and .34, uncorrected ps = .49 and .14, respectively). With Bonferroni correction, p < .0083 would be necessary to account for multiple comparisons, and only the correlation between caudate increases and rIFG decreases survives correction; however, we again report the uncorrected p values to provide as much information as possible for this developing field.
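The Bonferroni threshold of .0083 equals .05 divided by 6. This matches two increasing regions (thalamus and caudate) tested against three decreasing regions (rIFG, right superior temporal gyrus, and cerebellum), although that count is our inference rather than something the text states. A minimal Python check applies the threshold to the five uncorrected p values reported above.

```python
ALPHA = 0.05
N_TESTS = 6  # inferred: 2 increasing regions x 3 decreasing regions (assumption)

# Uncorrected p values as reported in the text (caudate-STG is not reported).
reported = {
    "thalamus-rIFG": 0.02,
    "caudate-rIFG": 0.0076,
    "thalamus-STG": 0.03,
    "thalamus-cerebellum": 0.49,
    "caudate-cerebellum": 0.14,
}

threshold = ALPHA / N_TESTS
survivors = [pair for pair, p in reported.items() if p < threshold]
print(f"Bonferroni threshold: {threshold:.4f}")
print("Surviving correction:", survivors)
```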

Supplementary Exploratory Analysis

We conducted an additional exploratory analysis to determine whether activity differed during decisions following Crash events versus decisions following No-Crash events. Whereas the other models investigated activity based on the ultimate decision made, these models separated decisions based on the outcome of the previous trial. This analysis revealed significant clusters in dorsomedial thalamus (x, y, z = [−3, −6, 9], t(19) = 5.83, k = 68) and dorsolateral PFC (x, y, z = [36, 18, 39], t(19) = 5.74, k = 109).
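The relabeling used in this exploratory model can be sketched as follows. The (decision, outcome) trial encoding and the separate tag for decisions that follow a Stop trial (which has no Crash or No-Crash outcome) are our assumptions for illustration, not details from the study.

```python
def label_by_previous_outcome(trials):
    """Tag each decision (from the second trial on) with the previous trial's outcome.

    trials: list of (decision, outcome) tuples, where decision is "go" or "stop"
    and outcome is "crash" or "no_crash" for Go trials and None for Stop trials.
    """
    labels = []
    for (prev_decision, prev_outcome), (decision, _) in zip(trials, trials[1:]):
        if prev_decision == "go":
            labels.append((decision, f"after_{prev_outcome}"))
        else:
            # Hypothetical handling: a Stop has no crash outcome to condition on.
            labels.append((decision, "after_stop"))
    return labels

trials = [("go", "no_crash"), ("go", "crash"), ("stop", None), ("go", "no_crash")]
for decision, label in label_by_previous_outcome(trials):
    print(decision, label)
```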

DISCUSSION

In this study, adolescents participating in a risky driving simulation exhibited different brain activity during risky and safe decisions. Specifically, VS activity preceded Go decisions and rIFG activity preceded Stop decisions. Furthermore, the extent of rIFG activity before Stop decisions correlated negatively with self-reported sensation-seeking and sensitivity to reward and punishment, and showed a marginal positive correlation with risk-taking during the task itself. Finally, rIFG responses during Go trials decreased as the runs progressed, whereas thalamus and caudate responses during No-Crash events increased, possibly reflecting learning processes occurring throughout the task.

Risky and Safe Decisions

The above results demonstrate that adolescent neural activity differs between risky and safe decisions. To our knowledge, this is the first study to comprehensively assess, at the whole-brain level, how activity differs depending on the decision an adolescent makes when presented with a choice between a risky and a safe option.

Specifically, risky decisions were preceded by responses in VS, a region often implicated in both the anticipation and the receipt of reward. This complements findings from the same paradigm reported by Chein and colleagues (2011), who used ROIs obtained from other analyses within the same study and found that the VS was more active during risky versus safe decisions in adolescents but not in adults. Our whole-brain search replicated this finding. Consistent with our results, several studies have found ventral striatal activity during high-risk gambles in adults (Kuhnen & Knutson, 2005; Matthews, Simmons, Lane, & Paulus, 2004). Furthermore, activity in the nucleus accumbens, a portion of the VS, has been found for gain versus loss anticipation across many studies (Knutson & Greer, 2008). This suggests that, in our study, anticipating successfully passing through the yellow light may have led participants to take the risk.

However, the VS has also been shown to respond to the receipt of rewards, particularly when rewards are unpredictable or delayed, suggesting that its activity represents prediction error (Pagnoni, Zink, Montague, & Berns, 2002; Berns, McClure, Pagnoni, & Montague, 2001). Consistent with this hypothesis, the VS also tracks prediction error for negative feedback in a probabilistic classification learning task (Rodriguez, Aron, & Poldrack, 2006). Although this previous work leaves unclear whether the VS codes for reward anticipation or for prediction error, animal research suggests that separate portions of the VS (specifically, within the nucleus accumbens) may support these different components of reward processing, namely wanting versus liking, or anticipation versus receipt (Berridge, Robinson, & Aldridge, 2009). In our study, we observed VS activity only during anticipation, not during receipt of reward (i.e., not during No-Crash events). One possibility is that participants responded to luck in different ways, with only some recruiting VS (e.g., some may have experienced relief whereas others experienced excitement, and these experiences may correspond to different neural signatures). Another possibility is that because No-Crash is by definition a non-event, participants experienced the reward of successfully passing through the yellow light at slightly different times, which suggests caution in interpreting the No-Crash findings in this study.

In contrast to risky decisions, safe decisions were preceded by more activity in the rIFG. Similarly, rIFG activity has previously been found to precede safe decisions in adults performing a monetary risk-taking paradigm that compared brain activity during safe versus risky decisions (Matthews et al., 2004). Furthermore, assuming that the choice to “play it safe” involves a type of inhibition, these results are consistent with many neuroimaging and lesion studies linking the rIFG to the inhibitory component of response inhibition, task-switching, and memory suppression (Aron et al., 2004). Interestingly, Chein and colleagues (2011) did not find greater lateral PFC activity during safe versus risky decisions in either adolescents or adults performing the same task. This discrepancy may arise because they conducted an ROI analysis using clusters obtained in earlier analyses, combined with the considerable evidence that different subdivisions of lateral PFC support different aspects of executive functioning.

Considering past work on reward processing and response inhibition, these results suggest that activity supporting reward anticipation may lead an adolescent to choose the risky option over the safe one, whereas greater rIFG activity may lead the adolescent to stop and “play it safe.” In other words, the weighing of choices during a decision is reflected in activity in regions including the VS and rIFG, and, perhaps not surprisingly, the specific activity during a decision is associated with the subsequent action taken by the participant.

Individual Differences

In addition to differentiating between brain activity during risky and safe decisions, we found that the extent of this activity correlated with individual differences in task behavior and with several well-validated measures of everyday riskiness. Specifically, rIFG activity during safe decisions correlated positively, though only marginally, with overall riskiness during the task. At first glance, this might suggest that riskier participants had more activity related to self-control, a counterintuitive result; however, the design of this study suggests that inefficient rIFG processing may contribute to this relationship. More specifically, Stop trials were not trials on which participants were explicitly instructed to stop, but rather trials on which participants actually managed to stop as a result of the psychological processes leading up to the decision. Therefore, this heightened activity on Stop trials may mean that, compared with participants who behaved more cautiously, those who behaved riskily required a higher level of rIFG activity to successfully stop. With a higher threshold, individuals may reach it less often and thus behave more riskily.
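The threshold account can be illustrated with a toy simulation. It is our own hypothetical model, not one fit to these data: on each trial an inhibitory signal (a stand-in for rIFG engagement) is drawn from a standard normal distribution, and a Stop occurs only if the signal exceeds a participant-specific threshold. Raising the threshold both lowers the stop rate (more risky choices) and raises the average signal on the trials that do end in a Stop, which is the selection effect described above.

```python
import random
from statistics import fmean

def simulate(threshold, n_trials=10_000, seed=0):
    """Toy model: a trial ends in Stop only if a noisy inhibitory signal exceeds threshold.

    Returns (stop rate, mean signal on Stop trials).
    """
    rng = random.Random(seed)
    signals = [rng.gauss(0.0, 1.0) for _ in range(n_trials)]
    stop_signals = [s for s in signals if s > threshold]
    return len(stop_signals) / n_trials, fmean(stop_signals)

for label, threshold in (("cautious", 0.5), ("risky", 1.0)):
    rate, mean_on_stop = simulate(threshold)
    print(f"{label}: stops on {rate:.0%} of trials, "
          f"mean signal on Stop trials = {mean_on_stop:.2f}")
```

Using the same seed for both hypothetical participants isolates the effect of the threshold: the risky participant's Stop trials are a subset of the cautious participant's, restricted to the highest signals.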

Whereas most studies have examined decision-making under risk irrespective of the choices actually made, our design separated risky and safe choices, allowing us to probe what brain activity might drive risky behavior. Importantly, we observed no correlation between behavioral riskiness and decision-related activity collapsed across risky and safe decisions; instead, more rIFG activity specifically during safe versus risky decisions correlated with overall riskiness. This means that riskier participants did not show a profile of “more rIFG activity” overall, but rather more rIFG activity specifically when successfully stopping. This result paints a more detailed picture than the traditional view of overall “frontal cortical immaturity” often proposed to explain adolescent risky behavior (for reviews, see Crone & Dahl, 2012; Pfeifer & Allen, 2012).

A different pattern of activity emerged in relation to offline self-reported sensation-seeking (SSS) and sensitivity to reward and punishment (SPSRQ). Activity in rIFG during safe decisions correlated negatively with SSS and SPSRQ scores; in other words, participants who scored higher on the SSS and SPSRQ showed less rIFG activity during safe versus risky decisions. This implies that these self-report scales capture individual differences distinct from task riskiness, which is consistent with the fact that task riskiness did not correlate with these offline measures.

Sensation-seeking has been shown to be particularly high during adolescence, unlike impulsivity, which seems to decline steadily from childhood to adulthood (Steinberg et al., 2008; but for a discussion of nonlinear trajectories in impulsivity, see Crone & Dahl, 2012). Thus, it is not necessarily problematic that sensation-seeking, but not impulsivity, relates to rIFG engagement, because the two measures seem to tap separate facets that contribute unique variance to adolescent risky behavior. Furthermore, the SPSRQ includes two subscales, sensitivity to reward and sensitivity to punishment, and the sensitivity to reward subscale seems to drive the correlation, which is consistent with the SSS correlation.

In the study by Steinberg and colleagues (2008), sensation-seeking significantly predicted risky driving in the Stoplight game, whereas in our study, sensation-seeking did not correlate with Stoplight risk-taking. However, our study differed from theirs in several crucial ways. Besides using a very restricted age range, compared with the 20-year range in that study, we measured risk as the percentage of risky decisions made rather than as the time participants waited before stopping (Steinberg et al., 2008). Beyond risky driving in the Stoplight game, the SSS tends to correlate with substance use, risky sexual behavior, and other real-life risk behavior (Roberti, 2004). Thus, the correlation between rIFG engagement during risky decisions and self-reported sensation-seeking suggests that such real-life risky behaviors may be supported by differential engagement of the rIFG when adolescents face risky decisions.

Learning to Play the Game

Because people face risky decisions repeatedly throughout their lives, as participants did in the Stoplight game, brain activity during risky decision-making may change to accommodate past experience. Indeed, we found gradually changing brain activity during risky (Go) decisions and during No-Crash outcomes, when participants took a risk and did not crash. Specifically, rIFG activity during risky decisions decreased across runs, whereas thalamus and caudate activity during No-Crash outcomes increased across runs.

These changes in activity were likely related to learning during the task, given that, in both human and nonhuman primate studies, the caudate, and the striatum in general, has been linked to reward learning, expectation, and habit formation (for a review, see Delgado, 2007). Interestingly, caudate activity has been found to decrease across trials (Delgado, Miller, Inati, & Phelps, 2005), in contrast to our finding that caudate activity increased across runs. Delgado and colleagues suggested that this signal might reflect a learning-related prediction error that decreased as trial outcomes became more predictable and thus less informative. A similar argument could be made for our results, but because we observed increases rather than decreases in caudate activity, it would follow that adolescents were learning more on later trials than on earlier ones. This could be due to the particular paradigm or to a unique style of trial-and-error learning in adolescence. Future work should directly compare the learning processes occurring during risky decision-making in adolescents versus adults.
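The interpretation offered by Delgado and colleagues rests on a standard property of prediction-error learning: as an outcome becomes predictable, the error signal shrinks. A minimal Rescorla-Wagner sketch shows this for a reward delivered on every trial. The model and the learning rate are our choices for illustration; the present study did not fit a learning model.

```python
def rescorla_wagner(outcomes, alpha=0.2, v0=0.0):
    """Return trial-by-trial prediction errors for a Rescorla-Wagner learner.

    outcomes: sequence of rewards (e.g., 1 = reward, 0 = no reward).
    alpha: learning rate in (0, 1]; v0: initial expected value.
    """
    value = v0
    errors = []
    for reward in outcomes:
        delta = reward - value   # prediction error on this trial
        errors.append(delta)
        value += alpha * delta   # move the expectation toward the outcome
    return errors

pes = rescorla_wagner([1] * 8)
print([round(pe, 3) for pe in pes])
```

With a constant reward, each error is (1 − alpha) times the previous one, so a signal that tracks this quantity falls across trials; a rising caudate signal is the opposite of this pattern.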

Finally, our results raise the intriguing possibility that the increasing caudate and thalamus responses to “lucky” trials, on which participants took a risk and reaped the benefits, led to more disinhibition during subsequent decisions, reflected in gradually decreasing rIFG activity during Go trials. Consistent with this possibility, increases in thalamus and caudate activity during the experience of luck correlated with the experiment-wide decreases in rIFG activity during risky decisions. Testing this hypothesis directly would require modeling prediction error and subsequent decisions in a more formal trial-by-trial manner, which the design of this study did not allow. Nonetheless, the question of how adolescents learn from past outcomes to adjust their future deliberations between risky and safe options, and particularly how they react to anomalous lucky outcomes, is a fruitful area for future research and may answer important questions relevant to ongoing problems with adolescent risk-taking behavior.

Limitations and Future Directions

Although these results have important implications for adolescent risky behavior, several limitations should be noted, both for interpreting the current data and for designing future studies. In an effort to make the task as ecologically valid as possible, we made several design compromises that warrant caution. For example, scan length varied across participants because different participants made different decisions. Also, the No-Crash events included in our model did not correspond to an actual visual cue; however, in real driving, drivers likewise do not receive a concrete cue verifying that they have made it through a stoplight without crashing.

Several other limitations should be noted for the sake of future work. First, as mentioned previously, the sample included only adolescents who did not yet have a driver’s license, preventing any inference about the effects of previous driving experience. Also, with our sample size, we were not able to separate results by gender, though it may be interesting to compare risky behavior between male and female participants. Furthermore, although the driving task may engage adolescents more than a simple cognitive task, it provides only one of the many contexts in which adolescents deliberate about risk in daily life. Future work should continue to investigate risk processing for a variety of decisions involving social, emotional, and health consequences. It is also unclear whether this task actually engaged this sample more than other tasks would have, or whether boredom contributed significantly to participants’ decision-making, as no measure of boredom was collected. It is possible, for example, that some participants took risks simply to avoid boredom. A relationship between boredom and risk-taking may exist in real life as well, however, and is a possible avenue for future research. Finally, future work may benefit from collecting information about participants’ emotional reactions during the task, as emotions, too, may contribute to decision-making.

A final limitation was our inability to appropriately model prediction error and learning, given the number of trials in our experiment and the frequency of different outcomes. We nevertheless urge the field to more fully investigate prediction error and updating during adolescent risky decision-making, especially in light of the unique prediction error signal observed in adolescence (Cohen et al., 2010). Also, likely because of the length of the task, the number of trials in each run, and our online behavioral measures, we did not observe a clear pattern of behavioral change throughout the task, which makes the neural changes difficult to interpret. Arguably, this is more interesting than if behavioral changes had been observed, because in theory neural changes should always accompany behavioral changes. With enough trials and better psychometric measures (beyond the number of risky trials), future work might better characterize the neural correlates of other aspects of risk learning that have only begun to be investigated, such as the tendency to switch strategies after negative feedback (Javadi, Schmidt, & Smolka, 2014).
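As one hypothetical example of such a measure, a participant's trial sequence could be scored for lose-shift (stopping on the decision after a crash) and win-stay (going again after a No-Crash outcome). The minimal Python sketch below assumes a simple (decision, outcome) trial encoding of our own and counts only decisions that immediately follow a Go trial; it is not a measure used in this study.

```python
def feedback_rates(trials):
    """Return lose-shift and win-stay rates from an ordered list of trials.

    trials: (decision, outcome) tuples, where decision is "go" or "stop" and
    outcome is "crash" or "no_crash" for Go trials and None for Stop trials.
    Only decisions that immediately follow a Go trial are counted.
    """
    # For each previous outcome: [number of following decisions, how many were Go]
    counts = {"crash": [0, 0], "no_crash": [0, 0]}
    for (prev_decision, prev_outcome), (decision, _) in zip(trials, trials[1:]):
        if prev_decision != "go":
            continue
        counts[prev_outcome][0] += 1
        counts[prev_outcome][1] += int(decision == "go")
    n_after_crash, go_after_crash = counts["crash"]
    n_after_win, go_after_win = counts["no_crash"]
    nan = float("nan")
    return {
        # lose-shift: proportion of decisions after a crash that were Stop
        "lose_shift": (n_after_crash - go_after_crash) / n_after_crash if n_after_crash else nan,
        # win-stay: proportion of decisions after a No-Crash outcome that were Go again
        "win_stay": go_after_win / n_after_win if n_after_win else nan,
    }

trials = [("go", "no_crash"), ("go", "no_crash"), ("go", "crash"), ("stop", None),
          ("go", "crash"), ("go", "no_crash"), ("stop", None)]
print(feedback_rates(trials))
```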

Conclusion

In summary, this study elucidated differences in adolescent brain activity during risky and safe decisions, individual differences in such activity, and how decision-related and outcome-related activity changed over the course of the task. These data bridge the gap between the adolescent reward-processing literature, which has investigated differences among kinds of expectations and outcomes, and the adolescent risk and decision-making literature, which has investigated different levels of risk involved in decisions but has not extensively compared brain activity between different spontaneous choices made by participants. Furthermore, the fact that the decision-specific activity found in our analyses also correlated with questionnaire and behavioral measures is an important addition to the body of literature reporting individual differences in reward and decision-making processes in both typically and atypically developing populations (Cservenka & Nagel, 2012; Bjork, Chen, et al., 2010; Van Leijenhorst, Gunther Moor, et al., 2010; Rubia et al., 2009; Eshel et al., 2007; Galvan et al., 2007; Scheres et al., 2007). Further research should investigate whether variation in these profiles of activity can predict not just current behaviors but long-term outcomes later in adolescence and into early adulthood. We note that correlations between personality tendencies and brain activity found in the absence of behavioral differences highlight the utility of neuroimaging methods for uncovering differences in processing that may not be revealed by behavior alone.

Finally, our results regarding changes in brain activity as adolescents learned to play the game provide a starting point for an important avenue of future research. Our analyses showed coarse changes in activation during decisions and outcomes over the duration of the experiment. These analyses, however, were substantially limited by the design of the task and are thus only preliminary; future work should investigate this more rigorously by modeling prediction error to surprising outcomes and the subsequent modulation of decision-related brain activity. This is a particularly important direction given the possibility that adolescents’ prediction error response differs from that of other age groups (Cohen et al., 2010). Furthermore, understanding how adolescents learn to behave in risky situations is crucial, given that adolescents are anecdotally reported to have an abnormal and frustrating inability to learn appropriately from past experiences in risky situations, and given that the ability to learn how to navigate risk–reward tradeoffs indeed tends to be worse at younger ages (Cauffman et al., 2010; Hooper, Luciana, Conklin, & Yarger, 2004; Overman et al., 2004). Finally, understanding the specific computations involved in adolescent risk processing has important implications for those trying to change adolescents’ problematic learning patterns. With more information about the specific mechanisms supporting adolescent risk and decision processing, we will have a better framework for engaging and communicating with adolescents more effectively about risk, an essential objective for parents, teachers, and clinicians, as well as for those designing and implementing interventions for at-risk populations.

Acknowledgments

Support for this project was provided by the National Institute on Drug Abuse to the Center on Early Adolescence (DA018760, Tony Biglan, Oregon Research Institute, PI) and the NIMH Development, Emotion, Ecology, and Psychopathology Research Training grant to S. J. P. (5T32-MH20012, Elizabeth A. Stormshak, University of Oregon, Child and Family Center and College of Education, PI). J. H. P. was also supported by P50 DA035763, NIDA, U.S. PHS (PIs: Chamberlain & Fisher). Special thanks are due to the University of Oregon Developmental Social Neuroscience laboratory. MRI was performed at the Robert and Beverly Lewis Center for Neuroimaging at the University of Oregon.

REFERENCES

- Albert D, Steinberg L. Judgment and decision making in adolescence. Journal of Research on Adolescence. 2011;21:211–224.

- Aron AR, Robbins TW, Poldrack RA. Inhibition and the right inferior frontal cortex. Trends in Cognitive Sciences. 2004;8:170–177. doi: 10.1016/j.tics.2004.02.010.

- Berns GS, McClure SM, Pagnoni G, Montague PR. Predictability modulates human brain response to reward. The Journal of Neuroscience. 2001;21:2793–2798. doi: 10.1523/JNEUROSCI.21-08-02793.2001.

- Berridge KC, Robinson TE, Aldridge JW. Dissecting components of reward: “Liking”, “wanting”, and learning. Current Opinion in Pharmacology. 2009;9:65–73. doi: 10.1016/j.coph.2008.12.014.

- Bjork JM, Chen G, Smith AR, Hommer DW. Incentive-elicited mesolimbic activation and externalizing symptomatology in adolescents. Journal of Child Psychology and Psychiatry. 2010;51:827–837. doi: 10.1111/j.1469-7610.2009.02201.x.

- Bjork JM, Knutson B, Fong GW, Caggiano DM, Bennett SM, Hommer DW. Incentive-elicited brain activation in adolescents: Similarities and differences from young adults. The Journal of Neuroscience. 2004;24:1793–1802. doi: 10.1523/JNEUROSCI.4862-03.2004.

- Bjork JM, Smith AR, Chen G, Hommer DW. Adolescents, adults and rewards: Comparing motivational neurocircuitry recruitment using fMRI. PLoS One. 2010;5:e11440. doi: 10.1371/journal.pone.0011440.

- Bjork JM, Smith AR, Danube CL, Hommer DW. Developmental differences in posterior mesofrontal cortex recruitment by risky rewards. The Journal of Neuroscience. 2007;27:4839–4849. doi: 10.1523/JNEUROSCI.5469-06.2007.

- Casey BJ, Jones RM. Neurobiology of the adolescent brain and behavior: Implications for substance use disorders. Journal of the American Academy of Child and Adolescent Psychiatry. 2010;49:1189–1201; quiz 1285. doi: 10.1016/j.jaac.2010.08.017.

- Casey BJ, Jones RM, Somerville LH. Braking and accelerating of the adolescent brain. Journal of Research on Adolescence. 2011;21:21–33. doi: 10.1111/j.1532-7795.2010.00712.x.

- Cauffman E, Shulman EP, Steinberg L, Claus E, Banich MT, Graham S, et al. Age differences in affective decision making as indexed by performance on the Iowa gambling task. Developmental Psychology. 2010;46:193–207. doi: 10.1037/a0016128.

- Chein J, Albert D, O’Brien L, Uckert K, Steinberg L. Peers increase adolescent risk taking by enhancing activity in the brain’s reward circuitry. Developmental Science. 2011;14:F1–F10. doi: 10.1111/j.1467-7687.2010.01035.x.

- Chiu CY, Tlustos SJ, Walz NC, Holland SK, Eliassen JC, Bernard L, et al. Neural correlates of risky decision making in adolescents with and without traumatic brain injury using the balloon analog risk task. Developmental Neuropsychology. 2012;37:176–183. doi: 10.1080/87565641.2011.632796.

- Cho YT, Fromm S, Guyer AE, Detloff A, Pine DS, Fudge JL, et al. Nucleus accumbens, thalamus and insula connectivity during incentive anticipation in typical adults and adolescents. Neuroimage. 2012;66C:508–521. doi: 10.1016/j.neuroimage.2012.10.013.

- Cohen JR, Asarnow RF, Sabb FW, Bilder RM, Bookheimer SY, Knowlton BJ, et al. A unique adolescent response to reward prediction errors. Nature Neuroscience. 2010;13:669–671. doi: 10.1038/nn.2558.

- Cohen JR, Berkman ET, Lieberman MD. Intentional and incidental self-control in ventrolateral pFC. In: Stuss DT, Knight RT, editors. Principles of frontal lobe function. 2nd ed. New York: Oxford University Press; 2013. pp. 417–440.

- Crone EA, Dahl RE. Understanding adolescence as a period of social-affective engagement and goal flexibility. Nature Reviews Neuroscience. 2012;13:636–650. doi: 10.1038/nrn3313. [DOI] [PubMed] [Google Scholar]

- Cservenka A, Nagel BJ. Risky decision-making: An fMRI study of youth at high risk for alcoholism. Alcoholism: Clinical and Experimental Research. 2012;36:604–615. doi: 10.1111/j.1530-0277.2011.01650.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Delgado MR. Reward-related responses in the human striatum. Annals of the New York Academy of Sciences. 2007;1104:70–88. doi: 10.1196/annals.1390.002. [DOI] [PubMed] [Google Scholar]

- Delgado MR, Miller MM, Inati S, Phelps EA. An fMRI study of reward-related probability learning. Neuroimage. 2005;24:862–873. doi: 10.1016/j.neuroimage.2004.10.002. [DOI] [PubMed] [Google Scholar]

- Eaton DK, Kann L, Kinchen S, Shanklin S, Flint KH, Hawkins J, et al. Youth risk behavior surveillance-United States, 2011. MMWR Surveillance Summaries. 2012;61:1–162. [PubMed] [Google Scholar]

- Ernst M, Nelson EE, Jazbec S, McClure EB, Monk CS, Leibenluft E, et al. Amygdala and nucleus accumbens in responses to receipt and omission of gains in adults and adolescents. Neuroimage. 2005;25:1279–1291. doi: 10.1016/j.neuroimage.2004.12.038. [DOI] [PubMed] [Google Scholar]

- Ernst M, Nelson EE, McClure EB, Monk CS, Munson S, Eshel N, et al. Choice selection and reward anticipation: An fMRI study. Neuropsychologia. 2004;42:1585–1597. doi: 10.1016/j.neuropsychologia.2004.05.011. [DOI] [PubMed] [Google Scholar]

- Eshel N, Nelson EE, Blair RJ, Pine DS, Ernst M. Neural substrates of choice selection in adults and adolescents: Development of the ventrolateral prefrontal and anterior cingulate cortices. Neuropsychologia. 2007;45:1270–1279. doi: 10.1016/j.neuropsychologia.2006.10.004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Galvan A. Adolescent development of the reward system. Frontiers in Human Neuroscience. 2010;4:6. doi: 10.3389/neuro.09.006.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Galvan A, Hare TA, Parra CE, Penn J, Voss H, Glover G, et al. Earlier development of the accumbens relative to orbitofrontal cortex might underlie risk-taking behavior in adolescents. The Journal of Neuroscience: The Official Journal of the Society for Neuroscience. 2006;26:6885–6892. doi: 10.1523/JNEUROSCI.1062-06.2006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Galvan A, Hare T, Voss H, Glover G, Casey BJ. Risk-taking and the adolescent brain: Who is at risk? Developmental Science. 2007;10:F8–F14. doi: 10.1111/j.1467-7687.2006.00579.x. [DOI] [PubMed] [Google Scholar]

- Gardner M, Steinberg L. Peer influence on risk taking, risk preference, and risky decision making in adolescence and adulthood: An experimental study. Developmental Psychology. 2005;41:625–635. doi: 10.1037/0012-1649.41.4.625. [DOI] [PubMed] [Google Scholar]

- Geier CF, Terwilliger R, Teslovich T, Velanova K, Luna B. Immaturities in reward processing and its influence on inhibitory control in adolescence. Cerebral Cortex (New York, N.Y.: 1991) 2010;20:1613–1629. doi: 10.1093/cercor/bhp225. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Guyer AE. Striatal functional alteration during incentive anticipation in pediatric anxiety disorders. The American Journal of Psychiatry. 2012;169:205. doi: 10.1176/appi.ajp.2011.11010006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Guyer AE, Nelson EE, Perez-Edgar K, Hardin MG, Roberson-Nay R, Monk CS, et al. Striatal functional alteration in adolescents characterized by early childhood behavioral inhibition. The Journal of Neuroscience: The Official Journal of the Society for Neuroscience. 2006;26:6399–6405. doi: 10.1523/JNEUROSCI.0666-06.2006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hoogendam JM, Kahn RS, Hillegers MH, van Buuren M, Vink M. Different developmental trajectories for anticipation and receipt of reward during adolescence. Developmental Cognitive Neuroscience. 2013;6:113–124. doi: 10.1016/j.dcn.2013.08.004.

- Hooper CJ, Luciana M, Conklin HM, Yarger RS. Adolescents’ performance on the Iowa gambling task: Implications for the development of decision making and ventromedial prefrontal cortex. Developmental Psychology. 2004;40:1148–1158. doi: 10.1037/0012-1649.40.6.1148.

- Huettel SA, Stowe CJ, Gordon EM, Warner BT, Platt ML. Neural signatures of economic preferences for risk and ambiguity. Neuron. 2006;49:765–775. doi: 10.1016/j.neuron.2006.01.024.

- Javadi AH, Schmidt DH, Smolka MN. Differential representation of feedback and decision in adolescents and adults. Neuropsychologia. 2014;56:280–288. doi: 10.1016/j.neuropsychologia.2014.01.021.

- Katz EC, Fromme K, D’Amico EJ. Effects of outcome expectancies and personality on young adults’ illicit drug use, heavy drinking, and risky sexual behavior. Cognitive Therapy and Research. 2000;24:1–22.

- Knutson B, Fong GW, Adams CM, Varner JL, Hommer D. Dissociation of reward anticipation and outcome with event-related fMRI. NeuroReport. 2001;12:3683–3687. doi: 10.1097/00001756-200112040-00016.

- Knutson B, Greer SM. Anticipatory affect: Neural correlates and consequences for choice. Philosophical Transactions of the Royal Society of London, Series B, Biological Sciences. 2008;363:3771–3786. doi: 10.1098/rstb.2008.0155.

- Knutson B, Westdorp A, Kaiser E, Hommer D. fMRI visualization of brain activity during a monetary incentive delay task. Neuroimage. 2000;12:20–27. doi: 10.1006/nimg.2000.0593.

- Krain AL, Wilson AM, Arbuckle R, Castellanos FX, Milham MP. Distinct neural mechanisms of risk and ambiguity: A meta-analysis of decision-making. Neuroimage. 2006;32:477–484. doi: 10.1016/j.neuroimage.2006.02.047.

- Kuhnen CM, Knutson B. The neural basis of financial risk taking. Neuron. 2005;47:763–770. doi: 10.1016/j.neuron.2005.08.008.

- Lejuez CW, Read JP, Kahler CW, Richards JB, Ramsey SE, Stuart GL, et al. Evaluation of a behavioral measure of risk taking: The balloon analogue risk task (BART). Journal of Experimental Psychology: Applied. 2002;8:75. doi: 10.1037//1076-898x.8.2.75.

- Matthews SC, Simmons AN, Lane SD, Paulus MP. Selective activation of the nucleus accumbens during risk-taking decision making. NeuroReport. 2004;15:2123–2127. doi: 10.1097/00001756-200409150-00025.

- May JC, Delgado MR, Dahl RE, Stenger VA, Ryan ND, Fiez JA, et al. Event-related functional magnetic resonance imaging of reward-related brain circuitry in children and adolescents. Biological Psychiatry. 2004;55:359–366. doi: 10.1016/j.biopsych.2003.11.008.

- Op de Macks ZA, Gunther Moor B, Overgaauw S, Güroğlu B, Dahl RE, Crone EA. Testosterone levels correspond with increased ventral striatum activation in response to monetary rewards in adolescents. Developmental Cognitive Neuroscience. 2011;1:506–516. doi: 10.1016/j.dcn.2011.06.003.

- Overman WH, Frassrand K, Ansel S, Trawalter S, Bies B, Redmond A. Performance on the IOWA card task by adolescents and adults. Neuropsychologia. 2004;42:1838–1851. doi: 10.1016/j.neuropsychologia.2004.03.014.

- Pagnoni G, Zink CF, Montague PR, Berns GS. Activity in human ventral striatum locked to errors of reward prediction. Nature Neuroscience. 2002;5:97–98. doi: 10.1038/nn802.

- Patton JH, Stanford MS, Barratt ES. Factor structure of the Barratt impulsiveness scale. Journal of Clinical Psychology. 1995;51:768–774. doi: 10.1002/1097-4679(199511)51:6<768::aid-jclp2270510607>3.0.co;2-1.

- Peake SJ, Dishion TJ, Stormshak EA, Moore WE, Pfeifer JH. Risk-taking and social exclusion in adolescence: Neural mechanism underlying peer influences on decision-making. Neuroimage. 2013;82:23–34. doi: 10.1016/j.neuroimage.2013.05.061.

- Pfeifer JH, Allen NB. Arrested development? Reconsidering dual-systems models of brain function in adolescence and disorders. Trends in Cognitive Sciences. 2012;16:322–329. doi: 10.1016/j.tics.2012.04.011.

- Richards JM, Plate RC, Ernst M. A systematic review of fMRI reward paradigms in adolescents vs. adults: The impact of task design and implications for understanding neurodevelopment. Neuroscience and Biobehavioral Reviews. 2013;37:976–991. doi: 10.1016/j.neubiorev.2013.03.004.

- Roberti JW. A review of behavioral and biological correlates of sensation seeking. Journal of Research in Personality. 2004;38:256–279.

- Rodriguez PF, Aron AR, Poldrack RA. Ventral-striatal/nucleus-accumbens sensitivity to prediction errors during classification learning. Human Brain Mapping. 2006;27:306–313. doi: 10.1002/hbm.20186.

- Rubia K, Smith AB, Halari R, Matsukura F, Mohammad M, Taylor E, et al. Disorder-specific dissociation of orbitofrontal dysfunction in boys with pure conduct disorder during reward and ventrolateral prefrontal dysfunction in boys with pure ADHD during sustained attention. The American Journal of Psychiatry. 2009;166:83–94. doi: 10.1176/appi.ajp.2008.08020212.

- Scheres A, Milham MP, Knutson B, Castellanos FX. Ventral striatal hyporesponsiveness during reward anticipation in attention-deficit/hyperactivity disorder. Biological Psychiatry. 2007;61:720–724. doi: 10.1016/j.biopsych.2006.04.042.

- Simons-Morton BG, Ouimet MC, Zhang Z, Klauer SE, Lee SE, Wang J, et al. The effect of passengers and risk-taking friends on risky driving and crashes/near crashes among novice teenagers. The Journal of Adolescent Health. 2011;49:587–593. doi: 10.1016/j.jadohealth.2011.02.009.

- Somerville LH, Jones RM, Casey BJ. A time of change: Behavioral and neural correlates of adolescent sensitivity to appetitive and aversive environmental cues. Brain and Cognition. 2010;72:124–133. doi: 10.1016/j.bandc.2009.07.003.

- Steinberg L, Albert D, Cauffman E, Banich M, Graham S, Woolard J. Age differences in sensation seeking and impulsivity as indexed by behavior and self-report: Evidence for a dual systems model. Developmental Psychology. 2008;44:1764–1778. doi: 10.1037/a0012955.

- Steinberg L, Monahan KC. Age differences in resistance to peer influence. Developmental Psychology. 2007;43:1531–1543. doi: 10.1037/0012-1649.43.6.1531.

- Stormshak EA, Fosco GM, Dishion TJ. Implementing interventions with families in schools to increase youth school engagement: The family check-up model. School Mental Health. 2010;2:82–92. doi: 10.1007/s12310-009-9025-6.

- Telzer EH, Fuligni AJ, Lieberman MD, Galván A. Meaningful family relationships: Neurocognitive buffers of adolescent risk taking. Journal of Cognitive Neuroscience. 2013;25:374–387. doi: 10.1162/jocn_a_00331.

- Torrubia R, Avila C, Moltó J, Caseras X. The sensitivity to punishment and sensitivity to reward questionnaire (SPSRQ) as a measure of Gray’s anxiety and impulsivity dimensions. Personality and Individual Differences. 2001;31:837–862.

- Tymula A, Rosenberg Belmaker LA, Ruderman L, Glimcher PW, Levy I. Like cognitive function, decision making across the life span shows profound age-related changes. Proceedings of the National Academy of Sciences, U.S.A. 2013;110:17143–17148. doi: 10.1073/pnas.1309909110.

- van den Bos W, Cohen MX, Kahnt T, Crone EA. Striatum-medial prefrontal cortex connectivity predicts developmental changes in reinforcement learning. Cerebral Cortex. 2012;22:1247–1255. doi: 10.1093/cercor/bhr198.

- Van Duijvenvoorde AC, Jansen BR, Bredman JC, Huizenga HM. Age-related changes in decision making: Comparing informed and noninformed situations. Developmental Psychology. 2012;48:192–203. doi: 10.1037/a0025601.

- Van Leijenhorst L, Crone EA, Bunge SA. Neural correlates of developmental differences in risk estimation and feedback processing. Neuropsychologia. 2006;44:2158–2170. doi: 10.1016/j.neuropsychologia.2006.02.002.

- Van Leijenhorst L, Gunther Moor B, Op de Macks ZA, Rombouts SA, Westenberg PM, Crone EA. Adolescent risky decision-making: Neurocognitive development of reward and control regions. Neuroimage. 2010;51:345–355. doi: 10.1016/j.neuroimage.2010.02.038.

- Van Leijenhorst L, Zanolie K, Van Meel CS, Westenberg PM, Rombouts SA, Crone EA. What motivates the adolescent? Brain regions mediating reward sensitivity across adolescence. Cerebral Cortex. 2010;20:61–69. doi: 10.1093/cercor/bhp078.

- Zuckerman M. The sensation seeking scale V (SSS-V): Still reliable and valid. Personality and Individual Differences. 2007;43:1303–1305.