Abstract

This paper presents a systems identification approach for studying long-term synaptic plasticity using natural spiking activities. The approach consists of three modeling steps. First, a multi-input, single-output (MISO), nonlinear dynamical spiking neuron model is formulated to estimate and represent the synaptic strength in terms of the functional connectivity between input and output neurons. Second, this MISO model is extended to a nonstationary form to track the time-varying properties of the synaptic strength. Finally, a Volterra modeling method is used to extract the synaptic learning rule, e.g., spike-timing-dependent plasticity, which explains the input-output nonstationarity as the consequence of past input-output spiking patterns. This framework is developed to study the underlying mechanisms of learning and memory formation in behaving animals, and may serve as the computational basis for building next-generation adaptive cortical prostheses.

Keywords: spike, spatio-temporal pattern, learning rule, spike-timing-dependent plasticity

1. Introduction

Long-term synaptic plasticity has long been postulated as the underlying mechanism of learning and memory formation (Hebb, 1949). This hypothesis is supported by diverse lines of evidence involving various experimental approaches and animal species (Alkon et al., 1991; Barnes, 1979; Berger, 1984; Lynch and Baudry, 1984; Martin et al., 1997; McHugh et al., 1996; McNaughton et al., 1986; Morris, 1989; Nakazawa et al., 2002; Tonegawa et al., 1996). Specific forms of activity-dependent synaptic plasticity such as long-term potentiation/depression (LTP/LTD) and spike-timing-dependent plasticity (STDP) have been revealed and intensively investigated (Bi and Poo, 1998; Bliss and Lomo, 1973; Ito, 1989; Levy and Steward, 1983; Markram et al., 1997; Caporale and Dan, 2008; Feldman, 2012). However, the exact nature and functional properties of neural plasticity during learning and memory formation are less well understood, especially in the mammalian brain of behaving animals. In fact, for many researchers, whether learning and memory rely on LTP-like synaptic plasticity remains controversial (Bramham, 2010; Stevens, 1998). The aim of this paper is to formulate a computational framework for the identification of long-term synaptic plasticity and the associated learning rule from natural ensemble spiking activities recorded in animals performing learning and memory tasks. It has the potential to provide direct evidence on, and new insights into, this fundamental question of neuroscience.

Synaptic plasticity in behaving animals is difficult to study for a variety of reasons. First, brain regions underlying learning and memory, like the hippocampus, are massively parallel, point-process, multi-input, multi-output (MIMO) systems. In any given brain region, information is represented in the ensemble firing of populations of neurons (Deadwyler and Hampson, 1995; Freeman, 1999; Georgopoulos et al., 1986; Pouget et al., 2003; Salinas and Abbott, 1994). The input-output signals are stimulus- or behaviorally-driven (spontaneous) spike trains, as opposed to the artificially delivered electrical stimuli and the evoked responses typically used in in vitro studies of synaptic plasticity (Anderson et al., 1971; Berger et al., 1988a, b). Extraction of the neuron-to-neuron connection strength, e.g., synaptic weight or EPSP, and of the changes in that strength from ensemble spiking activities, which is a necessary step for studying neural plasticity, poses serious modeling and computational challenges (Berger et al., 2010; Brown et al., 2004; Song and Berger, 2009). Second, the spike train-to-spike train transformation exhibits strong nonlinear dynamical properties due to the numerous underlying nonlinear biological mechanisms and processes (Berger et al., 1994; Johnston and Wu, 1995). These nonlinear dynamical properties make the quantification of neural plasticity, which is equivalent to the identification of the nonstationarity of the nonlinear dynamics (Song and Berger, 2009), even more difficult. Third, to study neural plasticity caused by learning and memory formation, the experimental paradigm should be designed in such a way that both the behavioral performance and the changes of neural representation are shaped by the animals’ learning experience instead of incrementally forced by the training paradigm. Fourth, reliable and stable unitary recordings of multiple neuron ensembles are required during the whole learning process (Buzsaki, 2004). The scope of this study is to build mathematical methodologies to address the first two computational challenges.

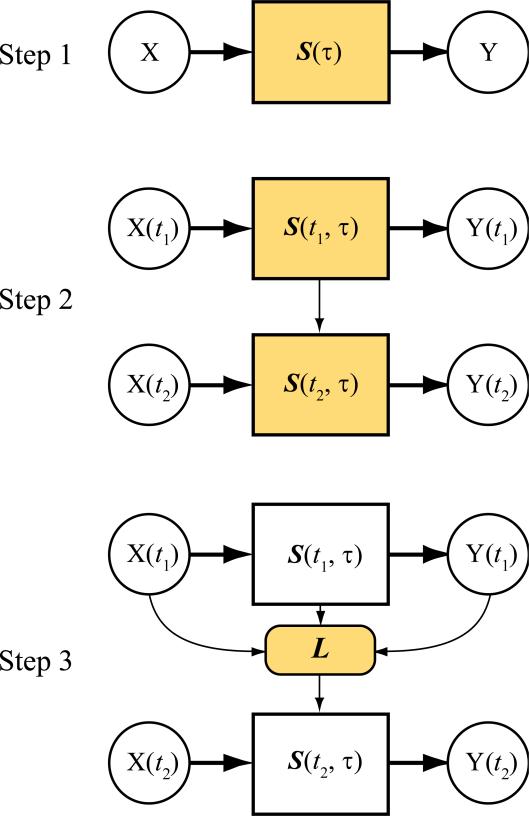

The proposed modeling strategy consists of three steps (Fig. 1). First, we formulate a nonlinear dynamical spiking neuron model to extract and represent the functional synaptic strength between the input and output neurons using spiking data. The model structure, the parameter estimation method, and the interpretation of the model variables are described. In this step, the model is stationary, i.e., it is not a function of time (Fig. 1, top). Second, we extend the model to a nonstationary nonlinear dynamical model to track the changes of the synaptic strength over time. In this step, the model becomes a function of time (Fig. 1, middle). Third, we utilize a Volterra modeling approach to identify the synaptic learning rule that can explain the changes of the synaptic strength as the consequence of the input-output spiking activities (Fig. 1, bottom). We elucidate the relationship between the Volterra kernel and the STDP learning rule, and further test the proposed modeling methods with simulations.

Figure 1.

Schematic diagram of the three modeling steps. X: input sequences; Y: output sequences; S: spiking neuron model; L: learning rule for S. In Step 1, S is not a function of time. In Steps 2 and 3, S varies with time. During learning, S evolves as the consequence of input and output activities following a learning rule. Colored boxes indicate the functions that need to be identified in each modeling step.

2. Materials and Methods

2.1. Nonlinear dynamical spiking neuron model

First, a model structure is required for the extraction of the synaptic strength from the spiking activities of the input-output neurons. In such a model, the synaptic strength, as well as other parameters, should be estimated from the input-output spiking activities. This is a non-trivial task, since both inputs and output are point-process signals containing only the timings of the spikes, and the synaptic strength thus needs to be inferred instead of directly measured. We use the nonlinear dynamical spiking neuron model we previously developed for such a task (Song and Berger, 2009; Song et al., 2007, 2009a, b; Song et al., 2013).

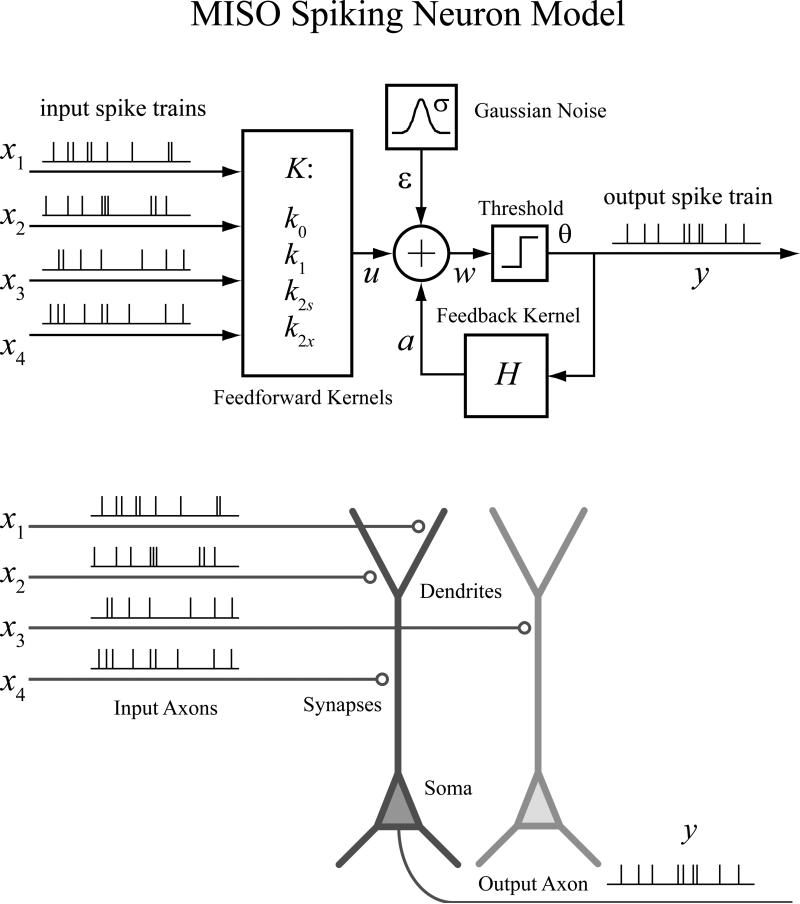

In this approach, a spiking neuron is represented as a multi-input, single-output (MISO) model with a physiologically plausible structure (Fig. 2).

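$$w = u(k, x) + a(h, y) + \varepsilon(\sigma) \tag{1}$$

$$y = \begin{cases} 0 & \text{when } w < \theta \\ 1 & \text{when } w \geq \theta \end{cases} \tag{2}$$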

Figure 2.

Multi-input, single-output (MISO) nonlinear dynamical model of spiking neuron. The MISO model takes a physiologically plausible structure. All model variables are simultaneously estimated from the input-output spiking activities.

Variable x represents the input spike trains; y represents the output spike train; w represents the pre-threshold membrane potential of the output neuron, which is expressed as the summation of the post-synaptic potential u caused by the input spike trains, the output spike-triggered after-potential a, and a Gaussian white noise ε with standard deviation σ. A threshold, θ, determines the generation of the output spike and the associated feedback after-potential (a).

The feedforward transformation from x to u and the feedback transformation from y to a take the form of a second-order Volterra model K and a first-order Volterra model H, respectively.

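$$u(t) = k_0 + \sum_{n=1}^{N} \sum_{\tau=0}^{M_k} k_1^{(n)}(\tau)\, x_n(t-\tau) + \sum_{n=1}^{N} \sum_{\tau_1=0}^{M_k} \sum_{\tau_2=0}^{M_k} k_{2s}^{(n)}(\tau_1, \tau_2)\, x_n(t-\tau_1)\, x_n(t-\tau_2) + \sum_{n_1=1}^{N} \sum_{n_2=1}^{n_1-1} \sum_{\tau_1=0}^{M_k} \sum_{\tau_2=0}^{M_k} k_{2x}^{(n_1, n_2)}(\tau_1, \tau_2)\, x_{n_1}(t-\tau_1)\, x_{n_2}(t-\tau_2) \tag{3}$$

$$a(t) = \sum_{\tau=1}^{M_h} h(\tau)\, y(t-\tau) \tag{4}$$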

The zeroth-order kernel, k0, is the value of u when there is no input. It determines the spontaneous firing rate of the output neuron. First-order kernels describe the linear relation between the nth input xn and u, as functions of the time intervals (τ) between the present time and the past time. Second-order self kernels describe the nonlinear interactions between pairs of spikes within the nth input xn as they affect u. Second-order cross kernels describe the nonlinear interactions between pairs of spikes from different inputs (xn1 and xn2) as they affect u. N is the number of inputs. Higher-order kernels are not included in this study for simplicity. h is a linear feedback kernel that transforms the output spike to the after-potential a. Mk and Mh are the memory lengths of the feedforward and feedback processes, respectively.
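The kernel definitions above can be illustrated with a minimal Python sketch that evaluates u(t) and a(t) for a discretized, second-order model. The function names, the array layout, the choice of starting feedforward lags at 0 and feedback lags at 1, and the toy kernel values are all assumptions made for illustration; they are not taken from the original implementation.

```python
import numpy as np

def lagged(signal, t, tau):
    """Return signal[t - tau]; samples before the start of the record count as 0."""
    idx = t - tau
    return float(signal[idx]) if idx >= 0 else 0.0

def synaptic_potential(x, k0, k1, k2s, k2x, t):
    """Second-order Volterra feedforward term u(t).

    x: (N, T) input spike trains; k1: (N, M); k2s: (N, M, M);
    k2x: dict mapping an input pair (n1, n2) to an (M, M) cross kernel.
    """
    N, M = k1.shape
    # past[n, tau] = x_n(t - tau) for tau = 0 .. M-1
    past = np.array([[lagged(x[n], t, tau) for tau in range(M)] for n in range(N)])
    u = float(k0)
    for n in range(N):
        u += k1[n] @ past[n]                 # first-order (linear) term
        u += past[n] @ k2s[n] @ past[n]      # second-order self term
    for (n1, n2), kernel in k2x.items():
        u += past[n1] @ kernel @ past[n2]    # second-order cross term
    return u

def after_potential(y, h, t):
    """Feedback term a(t); h[i] is the kernel value at lag i + 1."""
    return sum(h[i] * lagged(y, t, i + 1) for i in range(len(h)))

# Toy example with two inputs and a memory of three bins (hypothetical values).
x = np.array([[1, 0, 1, 0], [0, 1, 0, 0]], dtype=float)
k1 = np.array([[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]])
k2s = np.zeros((2, 3, 3))
k2s[0, 1, 1] = 0.05
cross = np.zeros((3, 3))
cross[1, 2] = -0.1
u3 = synaptic_potential(x, -1.0, k1, k2s, {(0, 1): cross}, t=3)

y = np.array([0, 1, 0, 0], dtype=float)
h = np.array([-1.0, -0.5])
a3 = after_potential(y, h, t=3)
```

In this toy case the first-order terms contribute 0.2 and 0.6, the self term 0.05, and the cross term -0.1, so u3 is -0.25 with a baseline of -1; the single past output spike at lag 2 gives a3 = -0.5.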

To reduce the number of parameters and avoid overfitting, basis functions are utilized in model estimation (Song et al., 2013). Basis functions b can take the form of a Laguerre basis (Marmarelis, 1993; Marmarelis, 2004; Song et al., 2009c; Song et al., 2009d) or a B-spline basis (de Boor, 1972). With input and output spike trains x and y convolved with b:

| (5) |

| (6) |

Equations 3 and 4 are rewritten into:

| (7) |

c1, c2s, c2x, and ch are the model coefficients of k1, k2s, k2x, and h, respectively. c0 is equal to k0. Given that the kernels are smooth and continuous functions, the number of basis functions L can be much smaller than the memory length (Mk and Mh).
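As an illustration of the basis-function expansion, the following Python sketch generates discrete-time Laguerre functions from their standard closed-form expression and convolves them with a spike train. The function names, the parameter name alpha for the Laguerre decay parameter, and the values used in the example are assumptions for illustration; the original work may use a different (e.g., recursive) implementation or a B-spline basis instead.

```python
import math
import numpy as np

def laguerre_basis(L, M, alpha):
    """Return an (L, M) array whose row j is the j-th discrete Laguerre
    function on lags 0 .. M-1; 0 < alpha < 1 controls the decay rate."""
    B = np.zeros((L, M))
    for j in range(L):
        for m in range(M):
            s = sum((-1) ** k * math.comb(m, k) * math.comb(j, k)
                    * alpha ** (j - k) * (1 - alpha) ** k
                    for k in range(j + 1))
            B[j, m] = alpha ** ((m - j) / 2) * math.sqrt(1 - alpha) * s
    return B

def convolve_with_basis(spikes, B):
    """v[j, t] = sum over tau of B[j, tau] * spikes[t - tau] (causal, zero-padded)."""
    T = len(spikes)
    return np.array([np.convolve(spikes, b)[:T] for b in B])

# Three basis functions span a 400-bin memory window (hypothetical sizes).
B = laguerre_basis(L=3, M=400, alpha=0.9)
gram = B @ B.T                      # close to the identity if the basis is orthonormal

spikes = np.zeros(10)
spikes[2] = 1.0
v = convolve_with_basis(spikes, B[:, :5])   # a single spike reproduces each basis function
```

With L = 3 functions covering 400 lags, a first-order kernel needs only three coefficients instead of 400, which is the parameter reduction described above.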

In Equations 7 and 8, v and vv can be calculated from the known x, y and b. The Volterra series essentially expresses the nonlinear relationship between u and x as a linear relationship between u and [v, vv]. The joint effect of the threshold θ and the Gaussian noise ε is equivalent to a probit link function that maps the value of u + a to the probability that y is equal to 1. The whole model thus can be expressed as a generalized linear model with the nonlinearity structured in the Volterra series. Therefore, this MISO model can be termed a generalized Volterra model (GVM) (Song et al., 2009a, b). As a special case, the first-order GVM is equivalent to the commonly used generalized linear models (Paninski et al., 2004; Okatan et al., 2005; Pillow et al., 2005; Truccolo et al., 2005; Eldawlatly et al., 2009; Chen et al., 2011; Zhao et al., 2012).

Due to the point-process nature of the input and output signals, all model variables are dimensionless. In addition, the values of k, h, u, a, w, ε, σ, and θ can be scaled, and θ and k0 can be translated, without influencing the probability of generating output spikes. In practice, k0, k1, k2s, k2x, and h are therefore first estimated with a unitary σ and a zero-valued θ using the Matlab® glmfit function, and then normalized by the absolute value of k0. In the final format, σ is equal to 1/|k0|; θ is equal to zero; the baseline value of w (i.e., k0) is -1 (Song et al., 2007, 2009a). The Laguerre parameter controlling the asymptotic decay rate of the basis functions, and the total number of basis functions L, are optimized with respect to the log-likelihood function (Song et al., 2013).
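The scale invariance described above can be checked numerically. The sketch below, in Python, writes the probit link as the probability that u + a + ε reaches θ and compares the firing probability before and after dividing all variables by |k0|. The function name and the numerical values are hypothetical and chosen only for illustration.

```python
import math

def spike_probability(u, a, sigma, theta):
    """P(y = 1) = P(u + a + eps >= theta), with eps ~ N(0, sigma^2)."""
    z = (u + a - theta) / sigma
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

# Raw estimates obtained with sigma = 1 and theta = 0 (hypothetical values).
k0_raw = -2.0
u_raw = k0_raw + 0.6        # baseline plus a synaptic contribution
a_raw = -0.4                # after-potential contribution
p_raw = spike_probability(u_raw, a_raw, sigma=1.0, theta=0.0)

# Normalized form: divide everything by |k0|, so that k0 = -1 and sigma = 1/|k0|.
s = abs(k0_raw)
p_norm = spike_probability(u_raw / s, a_raw / s, sigma=1.0 / s, theta=0.0)
```

Both parameterizations give the same probability, since only the ratio (u + a - θ)/σ enters the link function.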

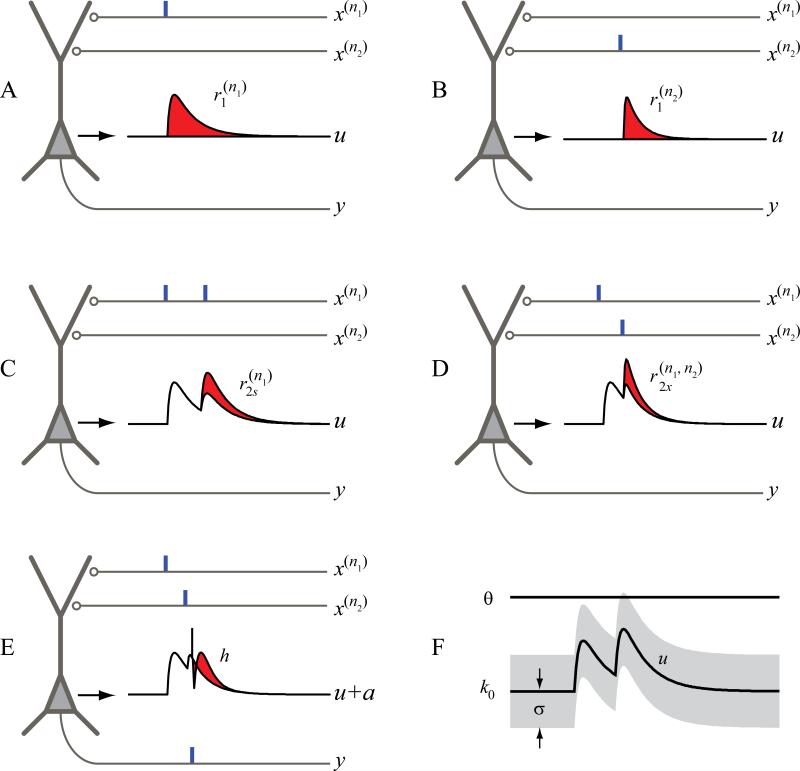

The Volterra kernels quantitatively describe the input-output nonlinear dynamics of the neuron. A more intuitive representation is the single-pulse and paired-pulse response functions (r1 and r2) derived from the kernels.

| (9) |

| (10) |

| (11) |

r1 is essentially the postsynaptic potential (PSP) elicited by a single spike from the nth input neuron; r2 describes the joint nonlinear effect of pairs of spikes from the nth input neuron in addition to the summation of their first-order responses. The corresponding cross term represents the joint nonlinear effect of pairs of spikes with one spike from neuron n1 and one spike from neuron n2 (Fig. 3).

Figure 3.

Physiological interpretation of the MISO spiking neuron model variables. k0 (r0) is resting membrane potential. r1 is the postsynaptic potential. r2 is the paired-pulse facilitation/depression function. h is the output spike-triggered after-potential. u is the synaptic potential. σ is the standard deviation of the Gaussian noise.

In this formulation, the ensemble neuronal input-output properties are represented by the GVM coefficients (S = [k, h]). All coefficients are simultaneously estimated from the input and output spike trains (x and y). Specifically, the single-pulse response function r1 can be interpreted as the PSP, which is typically used as the measure of synaptic strength in experimental studies on long-term synaptic plasticity.

2.2. Nonstationary nonlinear dynamical spiking neuron model

The nonlinear dynamical spiking neuron model provides a quantitative way to infer synaptic strength from spiking input-output activities. To study long-term synaptic plasticity, we extend the GVM to be time-varying so that it can track system nonstationarity. In this case, the GVM coefficients are estimated recursively from the input-output spikes, and the changes of the nonlinear dynamics are tracked by the temporal evolution of the kernel functions (Fig. 1, middle).

We develop the nonstationary model by combining the GVM with the point-process adaptive filtering method (Chan et al., 2008; Eden et al., 2004). In this approach, GVM coefficients (c) are taken as state variables while the input-output spikes are taken as observable variables. Using adaptive filtering methods, state variables are recursively updated as the observable variables unfold in time. The underlying change of system input-output properties then is represented by the time-varying GVM (S(t) = [k(t), h(t)]) reconstructed with the time-varying coefficients c(t).

Specifically, the probability of observing an output spike at time t, i.e., P(t), is first predicted by the GVM at time t-1 based on the inputs up to t and the outputs before t. Second, the difference between P(t) and the new observation of the output y(t) is used to update the GVM coefficients. Using the stochastic state point process filtering algorithm, the coefficient vector C(t) and its covariance matrix W(t) are both updated iteratively at each time step t as in:

| (12) |

| (13) |

| (14) |

Q is the coefficient noise covariance matrix. erf is the Gauss error function. In this method, W acts as the adaptive “learning rate” that allows reliable and rapid tracking of the model coefficients.
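The predict-and-update cycle described above can be sketched in Python as follows. This is not a transcription of Equations 12-14: it is a generic Fisher-scoring (extended-Kalman-style) update for a Bernoulli observation with a probit link, written under the assumption that the regressor vector v collects the basis-convolved inputs and outputs at time t. The function name and the numerical values are hypothetical.

```python
import math
import numpy as np

def filter_step(c, W, Q, v, y, sigma=1.0):
    """One recursive update of the coefficient vector c and its covariance W.

    v: regressor vector at time t; y: observed output (0 or 1);
    Q: coefficient noise covariance added at every step.
    """
    W_pred = W + Q                                    # one-step covariance prediction
    z = float(c @ v) / sigma
    P = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))    # predicted spike probability
    P = min(max(P, 1e-12), 1.0 - 1e-12)               # guard against division by zero
    phi = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    dP = (phi / sigma) * v                            # gradient of P with respect to c
    score = (y - P) / (P * (1.0 - P)) * dP            # gradient of the Bernoulli log-likelihood
    fisher = np.outer(dP, dP) / (P * (1.0 - P))       # expected information matrix
    W_new = np.linalg.inv(np.linalg.inv(W_pred) + fisher)
    c_new = c + W_new @ score                         # W_new acts as the adaptive learning rate
    return c_new, W_new, P

# Single-step example (hypothetical values).
c0 = np.zeros(2)
W0 = 0.1 * np.eye(2)
Q = 1e-4 * np.eye(2)
v = np.array([1.0, 0.5])
c_spike, W_spike, P_pred = filter_step(c0, W0, Q, v, y=1)
c_silent, W_silent, _ = filter_step(c0, W0, Q, v, y=0)
```

An observed spike that was predicted with probability below one pushes the coefficients along v, a missing spike pushes them in the opposite direction, and each observation shrinks the covariance relative to its predicted value W + Q.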

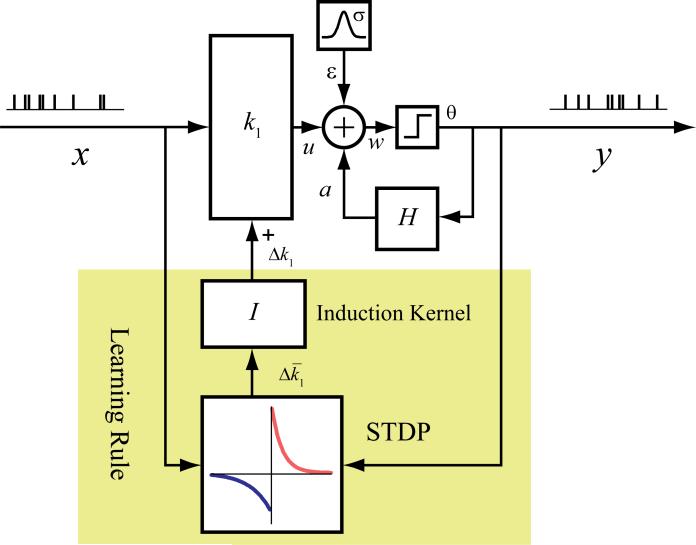

2.3. Identification of the synaptic learning rule

The nonstationary GVM above describes how the ensemble neuronal input-output properties evolve over time. In the third step, we aim to explain how the nonstationarity arises by identifying the underlying synaptic learning rule (Fig. 1, bottom).

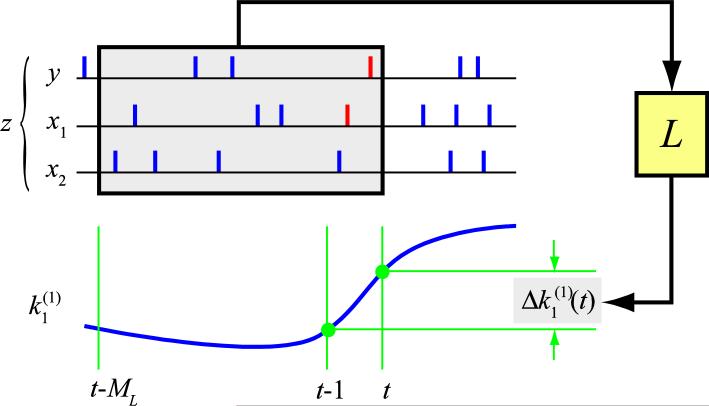

Given the estimated nonstationary model S(t), the change at time t can be calculated as ΔS(t) = S(t) - S(t-1), i.e., Δk(t) = k(t) - k(t-1), Δh(t) = h(t) - h(t-1), and ΔS(t) = [Δk(t), Δh(t)]. Given that ΔS(t) is caused by the preceding input and output activities (z = [x, y]), the sought ensemble synaptic learning rule L is essentially the causal relationship between the spatio-temporal pattern z and ΔS(t) (Fig. 4). ΔS(t) can be expressed as a Volterra functional power series of z in a finite memory window (ML) as:

| (15) |

Figure 4.

Schematic diagram showing the learning rule (L) from the ensemble spatio-temporal pattern of input/output spike trains (z: [x, y]) to the changes of the MISO GVM (Δk1). For simplicity, only two inputs and one first-order kernel (k1 between input x1 and the output y) are shown.

In this formulation, L0 is the zeroth-order learning rule describing the input/output activity-independent drift of the system; L1 is the first-order learning rule describing the linear relation between the changes of the GVM and the input or output activities; L2 is the second-order learning rule describing how the pair-wise nonlinear interactions between input/output spikes change the GVM; L3 is the third-order learning rule describing how the triplet-wise nonlinear interactions between input/output spikes change the GVM. Note that there are redundancies in L2 and L3 when n1, n2 and n3 contain the same inputs; Equation 15 is used nonetheless for its simplicity. Higher-order learning rules are not included for simplicity.

Since ΔS(t) has been estimated and x and y are known, Equation 15 is essentially a linear model in L, and the learning rule L thus can be estimated with the standard least-squares method. In practice, basis functions and penalized likelihood estimation methods can be utilized to reduce the total number of coefficients and select the significant terms in Equation 15. Compared with existing learning rules such as (a) the classical Bienenstock-Cooper-Munro (BCM) model of synaptic modification and (b) the STDP, this formulation provides a general form of ensemble learning rule defining how the changes of the GVM are determined by the spatio-temporal patterns of the input-output spikes. Linear and nonlinear interactions between multiple input/output spikes with different inter-spike intervals are explicitly included in this formulation (Fig. 4).
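Because the model is linear in the learning rule, the estimation reduces to an ordinary regression. The Python sketch below illustrates this for a deliberately truncated case that keeps only the zeroth- and first-order terms for one input x and the output y. The truncation, the choice of lags starting at 1, the function names, and the synthetic data are assumptions for illustration only; second- and third-order terms would add columns of lagged products to the same design matrix.

```python
import numpy as np

def lag_matrix(s, M):
    """Columns are s(t-1), ..., s(t-M), zero-padded at the start of the record."""
    T = len(s)
    cols = [np.concatenate([np.zeros(tau), s[:T - tau]]) for tau in range(1, M + 1)]
    return np.column_stack(cols)

def fit_first_order_rule(delta_s, x, y, M):
    """Least-squares estimate of L0 and of the first-order rules for x and y."""
    D = np.column_stack([np.ones(len(x)), lag_matrix(x, M), lag_matrix(y, M)])
    coef, *_ = np.linalg.lstsq(D, delta_s, rcond=None)
    return coef[0], coef[1:M + 1], coef[M + 1:]

# Synthetic, noise-free example with known (hypothetical) learning rule values.
rng = np.random.default_rng(0)
T, M = 2000, 3
x = (rng.random(T) < 0.2).astype(float)
y = (rng.random(T) < 0.2).astype(float)
true_L0 = 0.01
true_L1x = np.array([0.3, 0.2, 0.1])
true_L1y = np.array([-0.2, -0.1, -0.05])
delta_s = true_L0 + lag_matrix(x, M) @ true_L1x + lag_matrix(y, M) @ true_L1y

est_L0, est_L1x, est_L1y = fit_first_order_rule(delta_s, x, y, M)
```

In this noise-free setting the least-squares solution recovers the generating coefficients; with estimated, noisy ΔS(t) the same call returns the best linear fit, and penalized variants would replace the `lstsq` step.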

3. Results

3.1. Nonlinear dynamical model of the hippocampal CA3-CA1

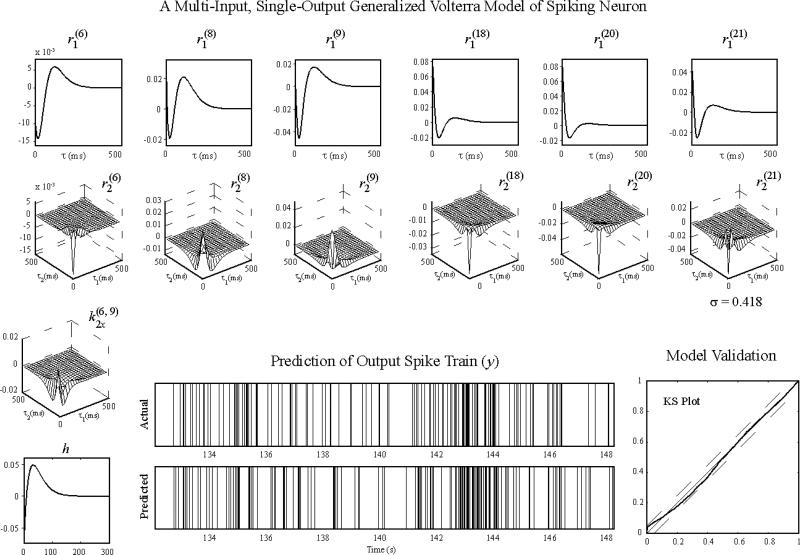

We have applied the nonlinear dynamical modeling approach to the hippocampal CA3-CA1 pathway in rodents and nonhuman primates (Berger et al., 2011; Berger et al., 2012; Hampson et al., 2012b; Hampson et al., 2012c; Hampson et al., 2013; Song et al., 2007, 2009a), and to the prefrontal cortex layer 2/3 to layer 5 pathway in nonhuman primates (Hampson et al., 2012a; Song et al., 2012). In these applications, the input-output spike trains are recorded from well-trained animals, where the input and output signals as well as the input-output transformations are stabilized, and thus sufficiently described by stationary GVMs. Figure 5 shows a GVM of a hippocampal CA1 neuron estimated from a rodent performing a delayed-nonmatch-to-sample (DNMS) task. Results show that this neuron receives inputs from 6 out of 24 CA3 neurons. The single-pulse and paired-pulse response functions (r1 and r2) are the PSPs and paired-pulse facilitation/depression functions inferred from the CA3-CA1 spiking activities. The zeroth-order kernel is -1. The threshold is 0. The standard deviation of the Gaussian noise is estimated to be 0.418. This MISO model can accurately predict the output spike train based on the input spike trains (Fig. 5, bottom-middle), as verified with the out-of-sample Kolmogorov-Smirnov test based on the time-rescaling theorem (Fig. 5, bottom-right). In this stationary model, all model variables are time-invariant.
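The validation step mentioned above can be sketched in Python as follows. The sketch assumes that the model provides a predicted spike probability for every time bin, converts it to an integrated intensity per bin, and uses the common large-sample approximation 1.36/√n for the 95% bound of the KS plot. The function name and the toy data are hypothetical, and corrections for coarse time discretization are ignored.

```python
import numpy as np

def ks_time_rescaling(p, y):
    """KS statistic and approximate 95% bound from the time-rescaling theorem.

    p: predicted spike probability per bin; y: observed spikes (0 or 1) per bin.
    """
    hazard = -np.log1p(-np.asarray(p, dtype=float))   # integrated intensity per bin
    cum = np.cumsum(hazard)
    spike_bins = np.flatnonzero(y)
    z = np.diff(cum[spike_bins])                      # rescaled inter-spike intervals
    u = np.sort(1.0 - np.exp(-z))                     # uniform on (0, 1) if the model is correct
    n = len(u)
    model_q = (np.arange(1, n + 1) - 0.5) / n         # quantiles of the uniform distribution
    ks_stat = float(np.max(np.abs(u - model_q)))
    bound = 1.36 / np.sqrt(n)
    return ks_stat, bound

# Toy example: constant predicted probability and three observed spikes.
p = np.full(100, 0.05)
y = np.zeros(100)
y[[10, 30, 60]] = 1
ks_stat, bound = ks_time_rescaling(p, y)
```

A model passes the test when the KS statistic stays below the bound, i.e., when the KS plot remains within the confidence band. The toy record is far too short for a meaningful test and only demonstrates the computation.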

Figure 5.

A stationary MISO GVM of a hippocampal CA1 neuron. r1 are the single-pulse response functions. r2 are the paired-pulse response functions for the same input neuron. k2x are cross kernels for pairs of neurons. h is the feedback kernel. The output spike train is predicted with the model and the input spike trains. The model is validated with an out-of-sample Kolmogorov-Smirnov (KS) test based on the time-rescaling theorem. In the KS plot, dashed lines are the 95% confidence bounds.

3.2. Simulation studies on nonstationary nonlinear dynamical model

We have extensively tested the nonstationary nonlinear dynamical modeling algorithm with synthetic input-output spike train data obtained through simulations. In all simulations, the inputs are Poisson random spike trains with mean firing rates ranging from 2 Hz to 6 Hz.

3.2.1. Step changes

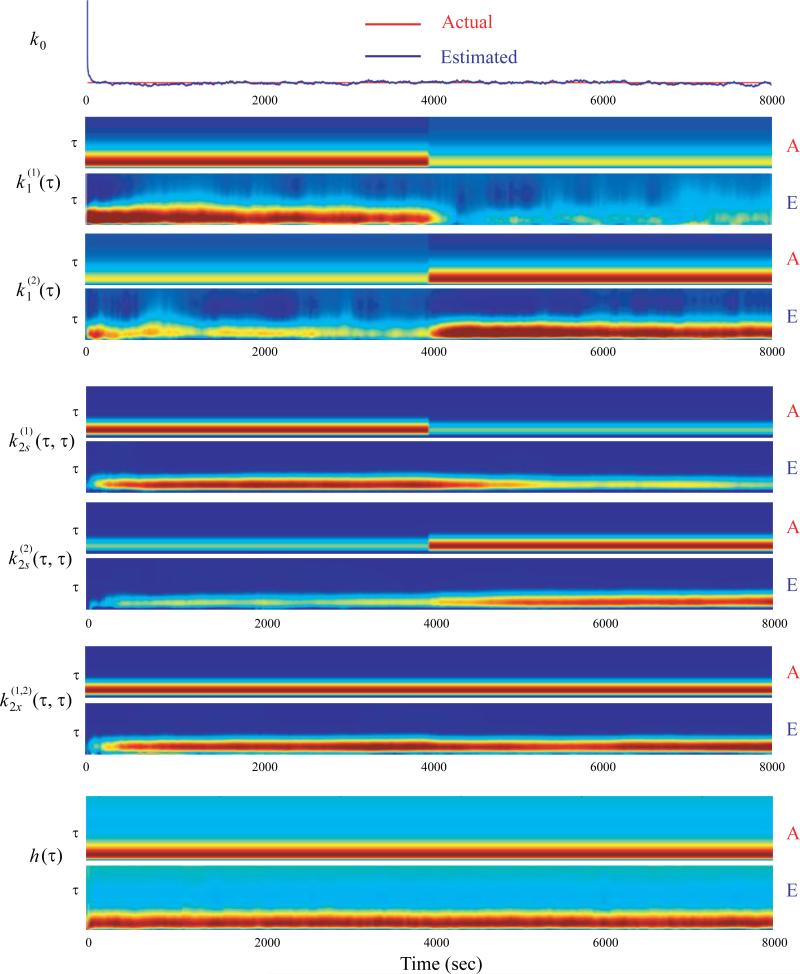

First, we simulate a second-order, 2-input, single-output spiking neuron model with step changes. The total simulation length is 8000 s. At 4000 s, the first-order kernel and the second-order self kernel of the first input (k1 and k2s for input 1) halve in amplitude while keeping the same waveforms; the first-order kernel and the second-order self kernel of the second input (k1 and k2s for input 2) double in amplitude while keeping the same waveforms. The other kernels (i.e., k0, k2x, and h) remain constant during the whole simulation. Using the simulated input-output spiking data, we apply the nonstationary nonlinear dynamical modeling algorithm to track the changes of the kernels. Results show that all kernels, as well as the changes of the kernels, can be recovered accurately from the simulated input-output spike trains (Fig. 6). In this nonstationary model, the model variables become time-varying.

Figure 6.

Tracking a second-order, 2-input, single-output system with step changes. First-order kernels (k1) and second-order self-kernels (k2s) have step changes at 4000 s. Zeroth-order kernel (k0), second-order cross-kernels (k2x) and feedback kernel (h) remain constant. Memory length is 500 ms for k1, k2s, and k2x, 1000 ms for h. The amplitude of kernel is color coded. Only diagonal values of second-order kernels are plotted for simplicity. A: actual kernels; E: estimated kernels.

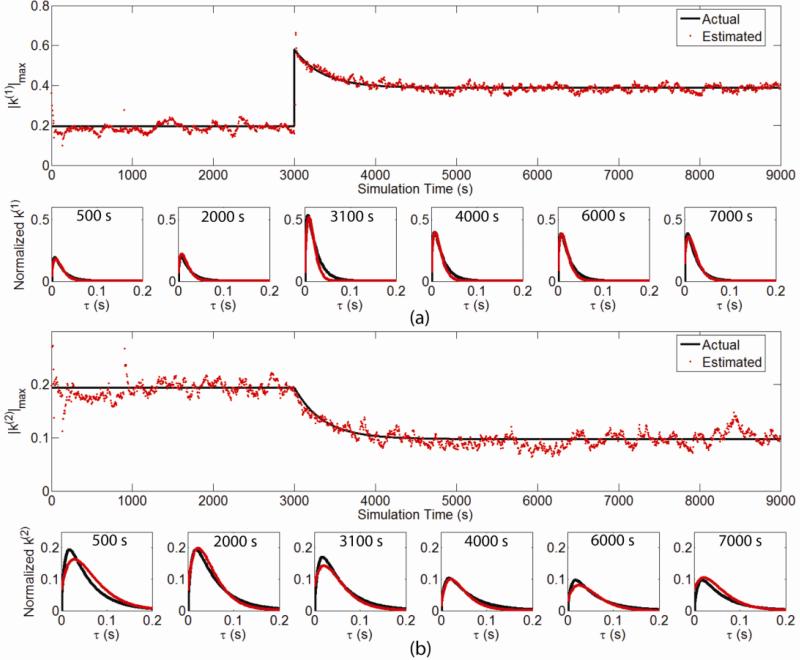

3.2.2. LTP and LTD-like changes

We further test the capability of the nonstationary modeling algorithm to track biologically plausible forms of synaptic plasticity. A first-order, 2-input system with concurrent LTP- and LTD-like changes at different inputs is simulated (Fig. 7). The total simulation length is 9000 s. At 3000 s, the kernels of the first input and the second input undergo an LTP-like change (i.e., a near-instantaneous increase followed by an exponential decay to a potentiated level double the initial amplitude) and an LTD-like change (i.e., an exponential decay to half of the initial amplitude), respectively. Results show that the estimated kernels (red) faithfully track the actual kernels (black) during the whole simulation, even though the LTP-like change is much more challenging to track than the step change.

Figure 7.

Tracking a first-order, 2-input system with LTP- and LTD-like changes. Black line: peak amplitudes of the actual kernels; red dots: peak amplitudes of the estimated kernels. Interval between successive estimation points plotted is 20 s.

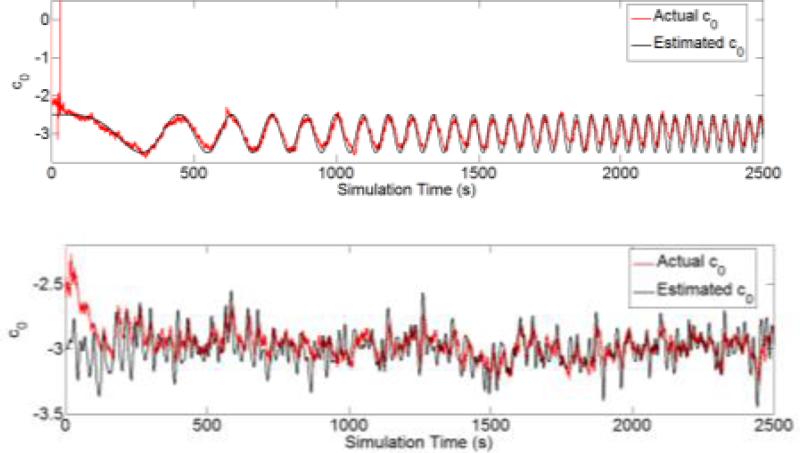

3.2.3. Input-independent changes

In our formulation of the nonstationary model, the zeroth-order time-varying kernel k0(t) (i.e., c0(t) in Equation 13) describes the input-independent nonstationarity, i.e., changes of the output firing probability caused by latent factors other than the observed input spike trains. To test whether the algorithm can gracefully handle this type of nonstationarity, we modify the step-change simulation in Section 3.2.1 by varying the input-independent, baseline firing probability following a linear chirp (Fig. 8, top) and a random noise sequence (Fig. 8, bottom). Results show that the estimated baselines (red) rapidly converge to the actual baselines (black) in both simulations without interfering with the estimation of the higher-order kernels, which represent the input-dependent nonstationarities.

Figure 8.

Tracking the input-independent baseline k0 (c0). Top panel: k0 is a linear chirp from 0 Hz at 0 s to 0.0125 Hz at 2500 s. Bottom panel: k0 is a random noise sequence with a mean of -3, low-pass filtered at 0.05 Hz.

3.2.4. Larger number of inputs

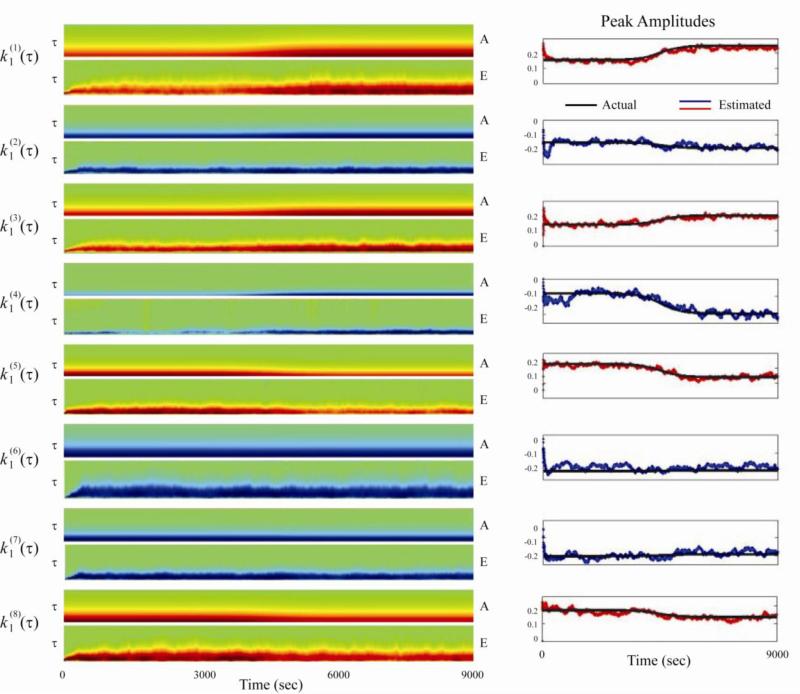

To validate the capability of the algorithm to track simultaneous gradual changes with a larger number of inputs, we design a first-order, 8-input system with sigmoidal changes. Among the eight inputs, three inputs increase in amplitude following a sigmoidal curve; three inputs decrease in amplitude following a sigmoidal curve; two inputs remain constant. The output mean firing rates before and after the sigmoidal changes are 3.677 Hz and 5.112 Hz, respectively. The total simulation length is 9000 s. Results show that all eight estimated kernels converge to the actual kernels during the simulation (Fig. 9).

Figure 9.

Tracking a first-order, 8-input system with sigmoidal changes. Left column: actual and estimated kernels across simulation time. The memory length is 200 ms. Right column: peak amplitudes of actual kernels (black) and estimated kernel (red for positive kernels and blue for negative kernels) across simulation time with a 1 s resolution.

3.3. Relationship between Volterra kernels and STDP

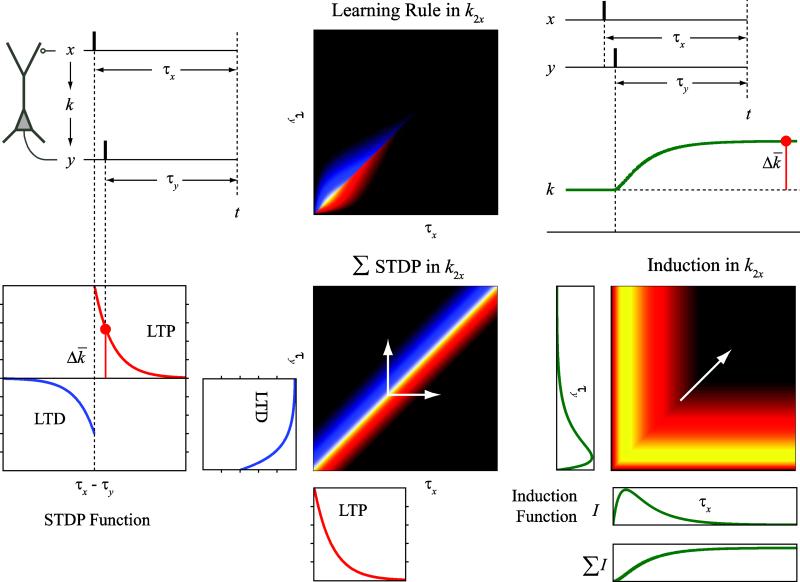

Equation 15 provides a general representation of the ensemble learning rule underlying the changes of the neuronal input-output function. It utilizes a Volterra power series to describe how the GVM variables change as the consequence of the interactions between the input-output spikes. Before testing the identification method with simulation, it is instructive to elucidate the relation between the Volterra kernels and the most accepted synaptic learning rule, i.e., STDP (Fig. 10).

Figure 10.

Relationship between the STDP function, the induction function (I), and the second-order cross kernel (k2x) of input (x) and output (y). Left column: STDP function. Center middle: the integral of the STDP function in k2x. Right column: the induction function I and its representation in k2x. The integral of I describes the STDP dynamics. The STDP function determines the steady-state change (Δk̄) of the synaptic weight k. Center top: the k2x representation of the STDP function and the induction function. This k2x is calculated as the element-wise product of the k2x of the STDP function and the k2x of I. Note that I is not plotted to scale, for better visualization. White arrows indicate the directions of the STDP and induction functions in the cross kernel.

According to the STDP rule, the change of synaptic weight between a presynaptic neuron and a postsynaptic neuron is determined by the timings of the presynaptic and postsynaptic spiking activities (τx and τy). If a presynaptic (i.e., input) spike precedes a postsynaptic spike within a short time window (i.e., τx > τy), the synaptic weight will be enhanced; if the opposite happens (i.e., τx < τy), the synaptic weight will be reduced. The relationship between the amount of synaptic weight change and the spike timings, i.e., the STDP function, can be described with two exponential curves (Fig. 10, left). Since in this STDP expression the synaptic weight change depends only on the pair-wise interaction between a presynaptic spike and a postsynaptic spike, the Volterra expression of the learning rule contains only the second-order cross term between x and y. In the case of a single-input system, Equation 15 is reduced to:

| (16) |

In this cross kernel, the STDP function describes only the steady-state level of synaptic weight change. Another contributing factor is the induction dynamics of the STDP, i.e., the synaptic weight does not change instantaneously following a delta function; instead, it builds up relatively slowly and then stabilizes following a smooth induction function I (Fig. 10, right). The STDP function and the induction function I jointly determine the shape of the cross kernel (Fig. 10, middle-top). In other words, this form of cross kernel between x and y is the Volterra representation of the STDP and induction functions.

3.4. Simulation studies on learning rule identification

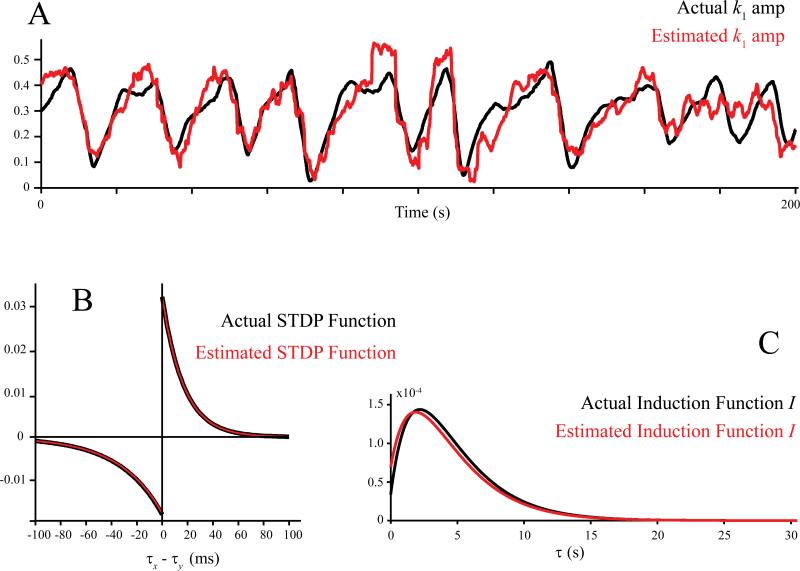

We further test the learning rule identification method with simulated input-output spiking data. A first-order, single-input, single-output spiking neuron model is built with the GVM structure (Fig. 11). In this model, the first-order feedforward kernel k1 has a typical EPSP shape determined by two exponentials with time constants of 30 ms and 150 ms, controlling the onset and decay rates, respectively. The peak amplitude is 0.3. The feedback kernel has a negative exponential shape to model the refractory period of spike generation. Its time constant and peak amplitude are 10 ms and -1. The noise standard deviation σ is 0.4. During the simulation, the input spike train x is fed into the model to generate the output spike train y. The synaptic strength between the input and output neurons, i.e., k1, is changed following the standard STDP rule and the induction function. In this simulation, the shape of k1 remains the same; only the amplitude is changed. For convenience, we use k1 to represent both the peak amplitude and the whole kernel function. The left (LTD) half of the STDP function is a single exponential with a time constant of 33.7 ms and a peak amplitude of -0.018. The right (LTP) half of the STDP function is a single exponential with a time constant of 16.8 ms and a peak amplitude of 0.032. Both sides share the same induction function determined by two exponentials with time constants of 900 ms and 4500 ms, for the onset and decay rates, respectively. Without loss of generality, the integral of the induction function is set to 1. To prevent the intrinsic instability of the simulated system, we adjust the input patterns based on the level of k1. When k1 crosses below a threshold, the input x changes from a 5 Hz Poisson random train to a 50 Hz burst to cause more potentiation and an increase of k1; when k1 is above a threshold, x is shifted to spike within 10 ms after output spikes to cause more depression and a decrease of k1. This simple method ensures that k1 fluctuates in a stable manner. The total length of the simulation is 200 s.
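The STDP and induction functions used in this simulation can be written down with a short Python sketch based on the parameter values stated above. The function names, the sign convention dt = t_post - t_pre, and the difference-of-exponentials form of the induction function are assumptions for illustration; the text specifies only the time constants, the peak amplitudes, and the unit integral.

```python
import numpy as np

def stdp(dt_ms):
    """Steady-state weight change for dt = t_post - t_pre, in ms."""
    dt_ms = np.asarray(dt_ms, dtype=float)
    ltp = 0.032 * np.exp(-dt_ms / 16.8)      # pre before post (dt > 0): potentiation
    ltd = -0.018 * np.exp(dt_ms / 33.7)      # post before pre (dt < 0): depression
    return np.where(dt_ms > 0, ltp, np.where(dt_ms < 0, ltd, 0.0))

def induction(t_ms, tau_on=900.0, tau_off=4500.0):
    """Induction function with unit integral; zero before the spike pairing."""
    t_pos = np.maximum(np.asarray(t_ms, dtype=float), 0.0)
    shape = np.exp(-t_pos / tau_off) - np.exp(-t_pos / tau_on)
    area = tau_off - tau_on                  # analytic integral of shape over [0, inf)
    return shape / area

# Evaluate both functions on illustrative grids.
dt_grid = np.array([-33.7, 0.0, 16.8])
stdp_values = stdp(dt_grid)

t_grid = np.arange(0.0, 100000.0, 1.0)       # 100 s at 1 ms resolution
induction_integral = float(induction(t_grid).sum() * 1.0)
```

At one time constant from the origin each STDP branch has decayed to 1/e of its peak amplitude, and the numerical integral of the induction function over 100 s is approximately 1, consistent with the normalization described in the text.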

Figure 11.

Simulation of a first-order, single-input, single-output spiking neuron model with the STDP learning rule. During the simulation, presynaptic spikes x and postsynaptic spikes y change the feedforward kernel k1 following the STDP and the induction functions. The STDP function determines the steady-state change Δk̄1; the induction function I defines the STDP dynamics.

Using the nonstationary GVM method, we first track the changes of the synaptic strength, i.e., k1(t), and compare them with the actual values. Results show that k1(t) can be accurately recovered from the simulated input-output spike trains (Fig. 12, top).

Figure 12.

Identification of STDP and induction functions from spiking input-output data. A: peak amplitudes of the first-order feedforward kernel k1. B: STDP functions. C: induction functions. In all plots, black: actual; red: estimated.

Given k1(t), we further estimate the learning rule, i.e., the second-order cross kernel between x and y. To reduce the number of open parameters, we expand the kernel with three sets of Laguerre basis functions as:

| (17) |

where

| (18) |

| (19) |

| (20) |

| (21) |

In the equations above, c are the sought learning rule coefficients. They are split into cxy and cyx to represent the two halves of the cross kernel for x preceding y and y preceding x, respectively. Subscript A represents the STDP amplitude. Subscript ψ represents the STDP induction. Since all v can be calculated from the predefined basis functions and the known x and y, Equation 17 is essentially linear and c can be estimated with a least-squares method. With the estimated coefficients, the cross kernel can be reconstructed as:

| (22) |

This cross kernel constitutes a general representation of synaptic learning because it does not require the STDP function and the induction function to be independent. In this study, however, since these two functions are assumed to be independent, the cross kernel is equal to the outer product of the two functions. The left (LTD) side of the STDP function can be obtained by integrating the vertical vectors in the upper half of the cross kernel along its diagonal line; similarly, the right (LTP) side of the STDP function can be obtained by integrating the horizontal vectors in the lower half of the cross kernel along its diagonal line. The induction function for LTD can be obtained by integrating the diagonal vectors in the upper half of the cross kernel along the vertical axis; similarly, the induction function for LTP can be obtained by integrating the diagonal vectors in the lower half of the cross kernel along the horizontal axis. The induction function is then normalized to have a unitary integral. The STDP functions are scaled accordingly.

Figure 12 shows the final identification results using the same 200 s input-output spiking data. It is evident that both the STDP function and the induction function are faithfully recovered with this method (Fig. 12B, C).

4. Discussion

This paper describes a systems identification approach for studying the STDP-like synaptic learning rule using spiking activities in learning animals. In this formulation, synaptic strength is represented as input-output (linear or nonlinear) dynamics between neurons; long-term synaptic plasticity is defined as a form of nonstationarity of such input-output dynamics; synaptic learning rule is essentially the function governing the formation of the long-term synaptic plasticity based on the input-output spiking patterns. As a special case, STDP is equivalent to a second-order learning rule describing the pair-wise interactions between single input spikes and single output spikes. Using simulated input-output data, we have shown that this modeling problem can be solved with a series of systems identification methods; it is possible to infer STDP-like synaptic learning rule purely from the input-output spiking activities.

The MISO model and the multi-input, multi-output (MIMO) model, which consists of a series of MISO models, have been used intensively as tools to identify neuronal functional connectivity (Song and Berger, 2009; Song et al., 2013), e.g., hippocampal CA3-CA1, and to build cortical prostheses for restoring cognitive functions, e.g., the hippocampal memory prosthesis (Berger et al., 2011; Berger et al., 2012; Deadwyler et al., 2013; Hampson et al., 2012b; Hampson et al., 2012c; Hampson et al., 2013). Previous results have shown that the MIMO model can accurately predict the output (e.g., hippocampal CA1) spike trains based on the ongoing input (e.g., hippocampal CA3) spike trains. Electrical stimulation with the spatio-temporal patterns of the predicted output spike trains can restore or even enhance memory function during a spatial delayed nonmatch-to-sample (DNMS) task in rodents. Since all these previous models are stationary, their success must be due to the fact that these applications involve only learned behaviors in well-trained animals, where the behavioral performance and the spatio-temporal pattern representations of behaviors (or memory events) are all stabilized; as a consequence, the MIMO input-output transformations can be sufficiently described by stationary MIMO models. The resulting hippocampal prostheses essentially replicate the input-output properties of the hippocampal CA3-CA1 after learning and memory formation. To build hippocampal memory prostheses capable of learning and memory formation in a self-organizing manner, on the other hand, it is necessary to identify and further mimic the nonstationary behaviors and the underlying learning rules of the MIMO input-output properties. The identification methods described in this paper provide a computational framework for studying such nonstationary properties, and the identified functional learning rule may potentially be used as the computational basis for developing the next-generation, adaptive hippocampal memory prostheses.

Our learning rule identification algorithm consists of two steps. The first step relies on accurate estimation of the kernel changes. The second step is based on the assumption that the synaptic learning rule is STDP-like, i.e., the kernel changes depend on the interactions between presynaptic and postsynaptic spikes. We have shown that the nonstationary modeling algorithm can (a) handle various forms of kernel changes with a larger number of inputs (e.g., eight), and (b) differentiate between input-dependent changes and input-independent changes. In addition, two facts suggest that these two steps can naturally work together to achieve reliable estimation of STDP-like learning rules, as shown in our simulation results: (a) existing STDP-like learning rules involve only a single input and a single output, as in our simulation study on the learning rule identification method (Section 3.4), and (b) STDP-like learning rules do not take arbitrary forms (Caporale and Dan, 2008; Feldman, 2012), i.e., they are all continuous functions with stereotypical shapes that can be easily handled by a Laguerre-Volterra model (e.g., the standard STDP function involves only a single term in the Volterra formulation and two Laguerre basis functions).

Naturally, our next step will be to apply these methods to experimental data, i.e., input-output spike trains recorded from animals performing a learning task. For example, we can record from the rat hippocampal CA3 and CA1, a well-defined pair of input-output regions, during training on the memory-dependent DNMS task. Unlike our previous studies on the hippocampal CA3-CA1, the new experiments may focus on the learning (training) phase of the behavioral task, where the animal's performance improves over time. Preliminary results have shown that the spatio-temporal pattern representations of memory events (i.e., functional cell types) do evolve over time during training on the DNMS task (Goonawardena et al., 2010). The CA3 and CA1 spike trains simultaneously recorded during the training sessions will likely be the most relevant experimental data for the proposed computational studies.

In this study, we have tested the learning rule identification algorithm with a simple form of STDP. With experimental data, we may use the full Volterra expression (Equation 15), as opposed to the reduced second-order cross kernel expression of STDP used in this study, to identify the ensemble synaptic learning rule. Previous studies have shown the existence of single-spike rules (Kempter et al., 1999; van Rossum et al., 2000), triplet rules, and quadruplet rules of STDP (Pfister and Gerstner, 2006; Wang et al., 2005). These learning rules, together with their induction functions, are equivalent to the first-order, third-order, and fourth-order learning rule kernels of the Volterra expression, respectively. A formal approach to identifying the learning rule from experimental data would be to include all possible terms (e.g., zeroth-order, first-order, second-order, third-order, and even fourth-order terms) in the model and then use statistical model estimation methods (e.g., regularized estimation) to characterize and select the significant terms (Song et al., 2013). The resulting ensemble learning rule should provide a more thorough description of long-term synaptic plasticity.
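The idea of including many candidate terms and letting a regularized estimator select the significant ones can be illustrated with a minimal sketch. Note that this is a deliberately simplified stand-in: it uses L1-penalized least squares solved by coordinate descent on synthetic Gaussian regressors, whereas estimation for spike data would rely on a penalized likelihood. The function names, the penalty value `lam`, and the synthetic coefficients are all hypothetical.

```python
import random


def soft_threshold(x, lam):
    """Shrink x toward zero by lam; values within [-lam, lam] become exactly 0."""
    if x > lam:
        return x - lam
    if x < -lam:
        return x + lam
    return 0.0


def lasso_cd(X, y, lam, n_iter=100):
    """Minimize (1/2n)||y - X b||^2 + lam * ||b||_1 by cyclic coordinate descent."""
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    col_sq = [sum(X[i][j] ** 2 for i in range(n)) / n for j in range(p)]
    resid = list(y)  # residual y - X b for the current b (b starts at zero)
    for _ in range(n_iter):
        for j in range(p):
            if col_sq[j] == 0.0:
                continue
            # Correlation of column j with the residual that excludes term j.
            rho = sum(X[i][j] * (resid[i] + X[i][j] * beta[j]) for i in range(n)) / n
            new_beta = soft_threshold(rho, lam) / col_sq[j]
            delta = new_beta - beta[j]
            if delta != 0.0:
                for i in range(n):
                    resid[i] -= X[i][j] * delta
                beta[j] = new_beta
    return beta


# Synthetic example: six candidate terms, only two of which truly contribute.
random.seed(0)
n, p = 200, 6
true_beta = [0.0, 1.5, 0.0, 0.0, -2.0, 0.0]
X = [[random.gauss(0.0, 1.0) for _ in range(p)] for _ in range(n)]
y = [sum(x * b for x, b in zip(row, true_beta)) + random.gauss(0.0, 0.05) for row in X]

beta_hat = lasso_cd(X, y, lam=0.1)
selected = [j for j, b in enumerate(beta_hat) if b != 0.0]
```

The L1 penalty sets the coefficients of non-contributing terms exactly to zero, which is what makes it usable as a term-selection device; the retained coefficients are shrunk slightly toward zero as a side effect.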

It should be noted that a spiking neuron with the standard paired-spike STDP rule is intrinsically unstable since the STDP rule is unbounded, i.e., there is no mechanism to saturate the synaptic weight or prevent it from becoming negative. In this study, we address this problem with a rather empirical method: the input patterns, rather than the neuronal input-output properties, are adjusted to keep the synaptic weight fluctuating within a certain range. The main reason behind this strategy is to use the standard STDP function in the simulation and show how it is related to the Volterra kernels. One way to implement a stable model is to add upper and lower bounds to the synaptic weight, so that the synaptic weight is nonnegative and the input cannot drive it to very large values. The caveat of this method is that the synaptic weight tends to stay at the maximal or minimal value after a certain running time and stops showing plasticity. Another way is to make the changes of synaptic weight depend not only on the input-output spike timing, as in the standard STDP learning rule, but also on the current value of the synaptic weight (van Rossum et al., 2000): a higher synaptic weight biases the system toward more depression, and a lower synaptic weight toward more potentiation. This method guarantees that the synaptic weight keeps fluctuating, although, at the same time, an ever-changing synaptic weight may make it difficult to form long-term memories. The strategy used by real neurons to maintain stability remains unclear and poses an interesting question about the exact nature of the synaptic learning rule. Performing and extending the computational studies outlined in this paper on experimental data recorded during learning and memory formation seems to be a sensible approach to answering this question.
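The two stabilization strategies discussed above can be contrasted with a minimal sketch. It is an illustration only: the exponential STDP window, its amplitudes and time constant, the bounds, and the particular weight-dependent scaling are hypothetical choices, and the weight-dependent update is a generic soft-bound form rather than the specific rule of van Rossum et al. (2000).

```python
import math


def stdp_window(dt, a_plus=0.01, a_minus=0.012, tau=20.0):
    """Pair-based STDP window; dt = t_post - t_pre in ms (hypothetical parameters)."""
    if dt > 0:
        return a_plus * math.exp(-dt / tau)
    if dt < 0:
        return -a_minus * math.exp(dt / tau)
    return 0.0


def update_hard(w, dt, w_min=0.0, w_max=1.0):
    """Additive STDP update clipped to the interval [w_min, w_max]."""
    return min(w_max, max(w_min, w + stdp_window(dt)))


def update_soft(w, dt, w_min=0.0, w_max=1.0):
    """Weight-dependent update: potentiation shrinks as w approaches w_max,
    depression shrinks as w approaches w_min."""
    dw = stdp_window(dt)
    if dw > 0:
        return w + dw * (w_max - w)
    return w + dw * (w - w_min)


# Repeated pre-before-post pairing at +10 ms: a purely potentiating input.
w_hard = w_soft = 0.5
for _ in range(200):
    w_hard = update_hard(w_hard, 10.0)
    w_soft = update_soft(w_soft, 10.0)
```

Under this purely potentiating input, the hard-bounded weight reaches the upper bound and stays there, while the weight-dependent version approaches the bound progressively more slowly and remains responsive to depressing spike pairs.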

Highlights.

Formulation of a nonstationary nonlinear dynamical modeling approach for the identification of long-term synaptic plasticity using natural spiking activities

Elucidation of the relationship between Volterra kernels and spike-timing-dependent plasticity functions

Test of nonstationary modeling and learning rule identification methods with simulated spiking input-output data

Acknowledgements

This work is partially supported by the Defense Advanced Research Projects Agency (DARPA) through the Restorative Encoding Memory Integration Neural Device (REMIND) Program, and partially supported by the NIH U01 GM104604. R. H. M. C. is partially supported by a grant from the Research Grants Council of the Hong Kong Special Administrative Region, China [Project No. CityU123312].

References

- Alkon DL, Amaral DG, Bear MF, Black J, Carew TJ, Cohen NJ, Disterhoft JF, Eichenbaum H, Golski S, Gorman LK, Lynch G, McNaughton BL, Mishkin M, Moyer JR, Olds JL, Olton DS, Otto T, Squire LR, Staubli U, Thompson LT, Wible C. Learning and memory. Brain Research Reviews. 1991;16:193–220. doi: 10.1016/0165-0173(91)90005-s.

- Anderson P, Bliss TV, Skrede KK. Lamellar organization of hippocampal pathways. Experimental brain research. 1971;13:222–38. doi: 10.1007/BF00234087.

- Barnes CA. Memory Deficits Associated with Senescence - Neurophysiological and Behavioral-Study in the Rat. J Comp Physiol Psych. 1979;93:74–104. doi: 10.1037/h0077579.

- Berger TW. Long-term potentiation of hippocampal synaptic transmission affects rate of behavioral learning. Science. 1984;224:627–30. doi: 10.1126/science.6324350.

- Berger TW, Chauvet G, Sclabassi RJ. A biological based model of functional properties of the hippocampus. Neural Networks. 1994;7:1031–64.

- Berger TW, Eriksson JL, Ciarolla DA, Sclabassi RJ. Nonlinear systems analysis of the hippocampal perforant path-dentate projection. II. Effects of random impulse train stimulation. J Neurophysiol. 1988a;60:1076–94. doi: 10.1152/jn.1988.60.3.1077.

- Berger TW, Eriksson JL, Ciarolla DA, Sclabassi RJ. Nonlinear systems analysis of the hippocampal perforant path-dentate projection. III. Comparison of random train and paired impulse stimulation. J Neurophysiol. 1988b;60:1095–109. doi: 10.1152/jn.1988.60.3.1095.

- Berger TW, Hampson RE, Song D, Goonawardena A, Marmarelis VZ, Deadwyler SA. A cortical neural prosthesis for restoring and enhancing memory. Journal of neural engineering. 2011;8:046017. doi: 10.1088/1741-2560/8/4/046017.

- Berger TW, Song D, Chan RHM, Marmarelis VZ. The Neurobiological Basis of Cognition: Identification by Multi-Input, Multioutput Nonlinear Dynamic Modeling. Proceedings of the IEEE. 2010;98:356–74. doi: 10.1109/JPROC.2009.2038804.

- Berger TW, Song D, Chan RHM, Marmarelis VZ, LaCoss J, Wills J, Hampson RE, Deadwyler SA, Granacki JJ. A Hippocampal Cognitive Prosthesis: Multi-Input, Multi-Output Nonlinear Modeling and VLSI Implementation. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2012;20:198–211. doi: 10.1109/TNSRE.2012.2189133.

- Bi GQ, Poo MM. Synaptic modifications in cultured hippocampal neurons: Dependence on spike timing, synaptic strength, and postsynaptic cell type. J Neurosci. 1998;18:10464–72. doi: 10.1523/JNEUROSCI.18-24-10464.1998.

- Bliss TV, Lomo T. Long-lasting potentiation of synaptic transmission in the dentate area of the anaesthetized rabbit following stimulation of the perforant path. Journal of Physiology. 1973;232:331–56. doi: 10.1113/jphysiol.1973.sp010273.

- Bramham CR. LTP not equal Learning: Lessons from Short-Term Plasticity. Frontiers in behavioral neuroscience. 2010;4:3. doi: 10.3389/neuro.08.003.2010.

- Brown EN, Kass RE, Mitra PP. Multiple neural spike train data analysis: state-of-the-art and future challenges. Nat Neurosci. 2004;7:456–61. doi: 10.1038/nn1228.

- Buzsaki G. Large-scale recording of neuronal ensembles. Nat Neurosci. 2004;7:446–51. doi: 10.1038/nn1233.

- Caporale N, Dan Y. Spike timing-dependent plasticity: a Hebbian learning rule. Annu Rev Neurosci. 2008;31:25–46. doi: 10.1146/annurev.neuro.31.060407.125639.

- Chan RHM, Song D, Berger TW. Tracking Temporal Evolution of Nonlinear Dynamics in Hippocampus using Time-Varying Volterra Kernels. Proceedings of the IEEE EMBS Conferen. 2008:4996–9. doi: 10.1109/IEMBS.2008.4650336.

- Chen Z, Putrino DF, Ghosh S, Barbieri R, Brown EN. Statistical inference for assessing functional connectivity of neuronal ensembles with sparse spiking data. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2011;19:121–135. doi: 10.1109/TNSRE.2010.2086079.

- Feldman DE. The spike timing dependence of plasticity. Neuron. 2012;75:556–571. doi: 10.1016/j.neuron.2012.08.001.

- de Boor C. On calculating with B-splines. Journal of Approximation Theory. 1972;6:50–62.

- Deadwyler SA, Berger TW, Sweatt AJ, Song D, Chan RH, Opris I, Gerhardt GA, Marmarelis VZ, Hampson RE. Donor/recipient enhancement of memory in rat hippocampus. Frontiers in systems neuroscience. 2013;7:120. doi: 10.3389/fnsys.2013.00120.

- Deadwyler SA, Hampson RE. Ensemble activity and behavior - Whats the code? Science. 1995;270:1316–8. doi: 10.1126/science.270.5240.1316.

- Eden UT, Frank LM, Barbieri R, Solo V, Brown EN. Dynamic analysis of neural encoding by point process adaptive filtering. Neural Comput. 2004;16:971–98. doi: 10.1162/089976604773135069.

- Eldawlatly S, Jin R, Oweiss KG. Identifying functional connectivity in large-scale neural ensemble recordings: a multiscale data mining approach. Neural Computation. 2009;21:450–477. doi: 10.1162/neco.2008.09-07-606.

- Freeman WJ. How Brains Make up Their Minds. Columbia University Press; 1999.

- Georgopoulos AP, Schwartz AB, Kettner RE. Neuronal population coding of movement direction. Science. 1986;233:1416–9. doi: 10.1126/science.3749885.

- Goonawardena AV, Robinson L, Riedel G, Hampson RE. Recruitment of Hippocampal Neurons to Encode Behavioral Events in the Rat: Alterations in Cognitive Demand and Cannabinoid Exposure. Hippocampus. 2010;20:1083–94. doi: 10.1002/hipo.20706.

- Hampson RE, Gerhardt GA, Marmarelis V, Song D, Opris I, Santos L, Berger TW, Deadwyler SA. Facilitation and restoration of cognitive function in primate prefrontal cortex by a neuroprosthesis that utilizes minicolumn-specific neural firing. Journal of neural engineering. 2012a;9:056012. doi: 10.1088/1741-2560/9/5/056012.

- Hampson RE, Song D, Chan RHM, Sweatt AJ, Riley MR, Gerhardt GA, Shin DC, Marmarelis VZ, Berger TW, Deadwyler SA. A Nonlinear Model for Hippocampal Cognitive Prosthesis: Memory Facilitation by Hippocampal Ensemble Stimulation. IEEE Trans Neur Sys Reh. 2012b;20:184–97. doi: 10.1109/TNSRE.2012.2189163.

- Hampson RE, Song D, Chan RHM, Sweatt AJ, Riley MR, Goonawardena AV, Marmarelis VZ, Gerhardt GA, Berger TW, Deadwyler SA. Closing the Loop for Memory Prosthesis: Detecting the Role of Hippocampal Neural Ensembles Using Nonlinear Models. IEEE Trans Neur Sys Reh. 2012c;20:510–25. doi: 10.1109/TNSRE.2012.2190942.

- Hampson RE, Song D, Opris I, Santos LM, Shin DC, Gerhardt GA, Marmarelis VZ, Berger TW, Deadwyler SA. Facilitation of memory encoding in primate hippocampus by a neuroprosthesis that promotes task-specific neural firing. Journal of neural engineering. 2013;10:066013. doi: 10.1088/1741-2560/10/6/066013.

- Hebb DO. The organization of behavior. Wiley & Sons; New York: 1949.

- Ito M. Long-Term Depression. Annu Rev Neurosci. 1989;12:85–102. doi: 10.1146/annurev.ne.12.030189.000505.

- Johnston D, Wu SM. Foundations of Cellular Neurophysiology. MIT Press; Cambridge, Mass.: 1995.

- Kempter R, Gerstner W, von Hemmen JL. Hebbian learning and spiking neurons. Phys Rev E. 1999;59:4498–514.

- Levy WB, Steward O. Temporal Contiguity Requirements for Long-Term Associative Potentiation Depression in the Hippocampus. Neuroscience. 1983;8:791–7. doi: 10.1016/0306-4522(83)90010-6.

- Lynch G, Baudry M. The Biochemistry of Memory - a New and Specific Hypothesis. Science. 1984;224:1057–63. doi: 10.1126/science.6144182.

- Markram H, Lubke J, Frotscher M, Sakmann B. Regulation of synaptic efficacy by coincidence of postsynaptic APs and EPSPs. Science. 1997;275:213–5. doi: 10.1126/science.275.5297.213.

- Marmarelis VZ. Identification of nonlinear biological systems using Laguerre expansions of kernels. Ann Biomed Eng. 1993;21:573–89. doi: 10.1007/BF02368639.

- Marmarelis VZ. Nonlinear Dynamic Modeling of Physiological Systems. Wiley-IEEE Press; Hoboken: 2004.

- Martin KC, Michael D, Rose JC, Barad M, Casadio A, Zhu HX, Kandel ER. MAP kinase translocates into the nucleus of the presynaptic cell and is required for long-term facilitation in Aplysia. Neuron. 1997;18:899–912. doi: 10.1016/s0896-6273(00)80330-x.

- McHugh TJ, Blum KI, Tsien JZ, Tonegawa S, Wilson MA. Impaired hippocampal representation of space in CA1-specific NMDAR1 knockout mice. Cell. 1996;87:1339–49. doi: 10.1016/s0092-8674(00)81828-0.

- McNaughton BL, Barnes CA, Rao G, Baldwin J, Rasmussen M. Long-term enhancement of hippocampal synaptic transmission and the acquisition of spatial information. J Neurosci. 1986;6:563–71. doi: 10.1523/JNEUROSCI.06-02-00563.1986.

- Morris RG. Synaptic plasticity and learning: selective impairment of learning rats and blockade of long-term potentiation in vivo by the N-methyl-D-aspartate receptor antagonist AP5. J Neurosci. 1989;9:3040–57. doi: 10.1523/JNEUROSCI.09-09-03040.1989.

- Nakazawa K, Quirk MC, Chitwood RA, Watanabe M, Yeckel MF, Sun LD, Kato A, Carr CA, Johnston D, Wilson MA, Tonegawa S. Requirement for hippocampal CA3 NMDA receptors in associative memory recall. Science. 2002;297:211–8. doi: 10.1126/science.1071795.

- Okatan M, Wilson MA, Brown EN. Analyzing functional connectivity using a network likelihood model of ensemble neural spiking activity. Neural Computation. 2005;17:1927–1961. doi: 10.1162/0899766054322973.

- Paninski L, Pillow JW, Simoncelli EP. Maximum likelihood estimation of a stochastic integrate-and-fire neural encoding model. Neural Computation. 2004;16:2533–2561. doi: 10.1162/0899766042321797.

- Pfister JP, Gerstner W. Triplets of spikes in a model of spike timing-dependent plasticity. J Neurosci. 2006;26:9673–82. doi: 10.1523/JNEUROSCI.1425-06.2006.

- Pillow JW, Paninski L, Uzzell VJ, Simoncelli EP, Chichilnisky EJ. Prediction and decoding of retinal ganglion cell responses with a probabilistic spiking model. Journal of Neuroscience. 2005;25:11003–11013. doi: 10.1523/JNEUROSCI.3305-05.2005.

- Pouget A, Dayan P, Zemel RS. Inference and computation with population codes. Annu Rev Neurosci. 2003;26:381–410. doi: 10.1146/annurev.neuro.26.041002.131112.

- Salinas E, Abbott LF. Vector reconstruction from firing rates. J Comput Neurosci. 1994;1:89–107. doi: 10.1007/BF00962720.

- Song D, Berger TW. Identification of Nonlinear Dynamics in Neural Population Activity. In: Oweiss KG, editor. Statistical Signal Processing for Neuroscience and Neurotechnology. McGraw-Hill/Irwin; Boston: 2009.

- Song D, Chan RH, Marmarelis VZ, Hampson RE, Deadwyler SA, Berger TW. Nonlinear dynamic modeling of spike train transformations for hippocampal-cortical prostheses. IEEE Trans Biomed Eng. 2007;54:1053–66. doi: 10.1109/TBME.2007.891948.

- Song D, Chan RH, Marmarelis VZ, Hampson RE, Deadwyler SA, Berger TW. Nonlinear modeling of neural population dynamics for hippocampal prostheses. Neural Networks. 2009a;22:1340–51. doi: 10.1016/j.neunet.2009.05.004.

- Song D, Chan RH, Marmarelis VZ, Hampson RE, Deadwyler SA, Berger TW. Sparse generalized Laguerre-Volterra model of neural population dynamics. Proceedings of the IEEE EMBS Conference. 2009b:4555–8. doi: 10.1109/IEMBS.2009.5332719.

- Song D, Marmarelis VZ, Berger TW. Parametric and non-parametric modeling of short-term synaptic plasticity. Part I: Computational study. J Comput Neurosci. 2009c;26:1–19. doi: 10.1007/s10827-008-0097-3.

- Song D, Opris I, Chan RH, Marmarelis VZ, Hampson RE, Deadwyler SA, Berger TW. Functional connectivity between Layer 2/3 and Layer 5 neurons in prefrontal cortex of nonhuman primates during a delayed match-to-sample task. Proceedings of the IEEE EMBS Conference. 2012:2555–8. doi: 10.1109/EMBC.2012.6346485.

- Song D, Wang H, Tu CY, Marmarelis VZ, Hampson RE, Deadwyler SA, Berger TW. Identification of sparse neural functional connectivity using penalized likelihood estimation and basis functions. J Comput Neurosci. 2013;35:335–57. doi: 10.1007/s10827-013-0455-7.

- Song D, Wang Z, Marmarelis VZ, Berger TW. Parametric and non-parametric modeling of short-term synaptic plasticity. Part II: Experimental study. J Comput Neurosci. 2009d;26:21–37. doi: 10.1007/s10827-008-0098-2.

- Stevens CF. A million dollar question: Does LTP = memory? Neuron. 1998;20:1–2. doi: 10.1016/s0896-6273(00)80426-2.

- Tonegawa S, Tsien JZ, McHugh TJ, Huerta P, Blum KI, Wilson MA. Hippocampal CA1-region-restricted knockout of NMDAR1 gene disrupts synaptic plasticity, place fields, and spatial learning. Cold Spring Harb Sym. 1996;61:225–38.

- Truccolo W, Eden UT, Fellows MR, Donoghue JP, Brown EN. A point process framework for relating neural spiking activity to spiking history, neural ensemble, and extrinsic covariate effects. Journal of Neurophysiology. 2005;93:1074–1089. doi: 10.1152/jn.00697.2004.

- van Rossum MC, Bi GQ, Turrigiano GG. Stable Hebbian learning from spike timing-dependent plasticity. J Neurosci. 2000;20:8812–21. doi: 10.1523/JNEUROSCI.20-23-08812.2000.

- Wang HX, Gerkin RC, Nauen DW, Bi GQ. Coactivation and timing-dependent integration of synaptic potentiation and depression. Nat Neurosci. 2005;8:187–93. doi: 10.1038/nn1387.

- Zhao MY, Batista A, Cunningham JP, Chestek C, Rivera-Alvidrez Z, Kalmar R. An L(1)-regularized logistic model for detecting short-term neuronal interactions. Journal of Computational Neuroscience. 2012;32:479–497. doi: 10.1007/s10827-011-0365-5.