Although every hospital has surgical complications, the quality of the response to adverse events varies widely across institutions.1 A high-quality response is essential to halt the potential transition from an initial serious complication to a progressive cascade of adverse occurrences that can lead to death, or “failure to rescue.” As most surgeons know, this tragic ending to a patient’s clinical trajectory is not abrupt or wholly unexpected. Instead, these failures are preceded by a steady accumulation of small clinical clues. In this issue of Annals, Johnston and colleagues2 provide valuable insight into the process of recognizing these clues and the subsequent “escalation of care” using a method rarely applied in surgery: Healthcare Failure Mode and Effects Analysis (HFMEA). Escalation of care (EOC) is an apt term that the authors define as “the recognition and communication of patient deterioration to a senior colleague.”

FMEA (without the H) is a technique for evaluating complex industrial or organizational processes. It derives from the engineering community, and its principles translate readily to the complex systems encountered by medical professionals. HFMEA (with the H) was born out of a need to tailor FMEA to a medical audience, and it draws on components of several process evaluation tools, including root cause analysis (RCA).

RCA has a very similar structure to HFMEA. It also involves a multi-step process that includes forming a multidisciplinary team, diagramming the general steps in a process, gathering data related to a specific series of events to identify potential contributing factors, and ultimately developing and implementing an action plan. The use of historical data and events in RCA guides the understanding and development of changes to prevent adverse events. In contrast, HFMEA does not view a system retrospectively after an event, but examines a system for potential hazards prospectively. Because HFMEA does not have an event to draw upon, the identification of hazards relies mostly on “end-user” or expert opinion to develop an understanding of potential vulnerabilities. Thus, RCA and HFMEA provide different views of how adverse events occur and how they might be prevented. Using actual historical events and decisions made at branch points in care may give RCA an advantage in the understanding and prevention of future events.

Nonetheless, HFMEA has some advantages over RCA. It is explicitly aimed at the prevention of adverse events. Because HFMEA does not require a previous bad outcome or near miss, any system can be studied. Through the evaluation process, organizations can make these systems less vulnerable to failure. Finally, the method is “fault tolerant.” In other words, HFMEA allows for minor errors or faults to occur in the system, since such events may be inevitable and difficult to eliminate completely. Rather than trying to eliminate them, it creates or reinforces a system that is responsive to them. The analysis is also less prone to recall bias and to the fear of disclosure, embarrassment, blame, and punishment that can arise in the wake of an actual event.

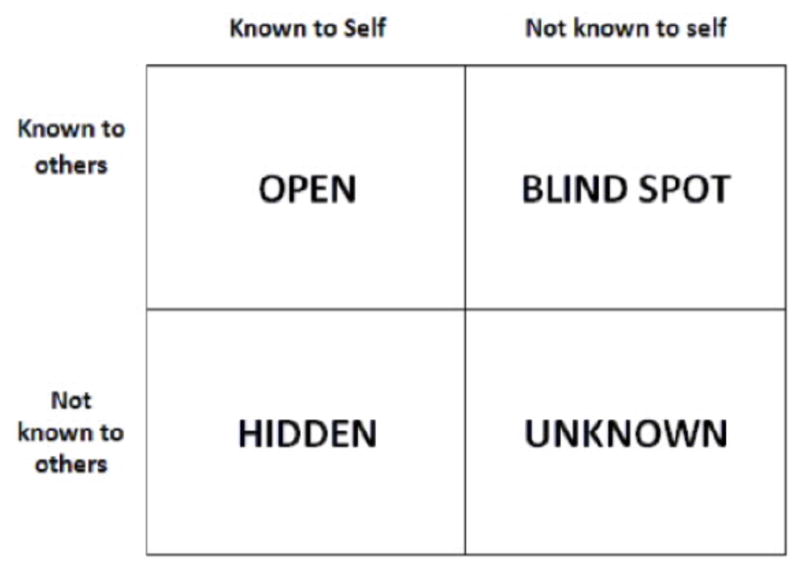

However, HFMEA clearly also has disadvantages. While this approach may prove fruitful for low-risk, low-complexity tasks such as hand hygiene, it is prone to significant blind spots. Using the Johari window cognitive psychology tool as an analogy (Fig. 1), the area of greatest concern in the rescue process may be the surgical team’s blind spot, ie, the unknown quadrant in Figure 1. Unfortunately, sometimes a failure must occur before the steps or missteps leading to an adverse event can be identified, studied, and remedied. Therefore, the use of HFMEA and RCA together can provide an organization with a more complete picture of dynamic, complex systems. While the authors present HFMEA as a prospective (and thus superior) approach to risk assessment over RCA, these methods are more accurately viewed as complementary, each with its own advantages and disadvantages.

Figure 1. The Johari Window

Johnston and colleagues present a very thorough, well-designed, and well-executed evaluation of the EOC process in surgery using HFMEA. They summarize their findings by citing communication technology, understaffing, and hierarchy as key drivers of escalation of care. As noted by the authors, these findings are well known and consistent with prior literature regarding how medical teams should respond to these events.2 We group their recommended interventions for improving escalation of care into three distinct buckets. First, there are structural components that require improvement or modification of existing systems. Examples from this study include the need for more permanent or experienced staff members on the wards, improvements in medical record technology, and methods to enhance communication (ie, mobile phones). These interventions require significant capital investment and may take time to purchase and implement. However, these are already well-known contributors to good patient care.

Second, there are several improvements to processes of care recommended by the study participants. Specific examples from this study include developing escalation protocols and establishing explicit physiologic parameter thresholds to ensure the patient is receiving the appropriate level of care. These interventions rely on some evidence but mostly on expert opinion. However, as we have seen with other process measures or interventions, such as checklists, these are prone to user fatigue as providers return to their previous habits and practices.

Third, and potentially most important, is the culture of the organization and its members. As many have said before, “culture eats strategy for breakfast.” The present study hints at several recommendations related to safety culture, but falls short of emphasizing this as the key element to achieving improvements in rescue. A safe culture is the foundation on which aspects of organizational structure and processes of care can be built to promote more effective rescue. For example, investing in education for nurses to detect deteriorating patients or disseminating escalation protocols is only as effective as the unit’s or organization’s willingness to learn, implement, and adapt.

What is culture? And what does a safe culture look like? Culture refers to the shared, often unconscious attitudes and standards that govern behavior, especially in crisis situations that lack clearly defined pathways or processes. To better understand culture, one can think of it as the forces in an organization that operate in the background. These forces are shaped by the organization’s values, beliefs, traditions, norms, and even myths. Paradoxically, an organization’s culture is nearly impossible to measure, yet understanding it is absolutely essential to changing the organization.

Traditionally, qualitative methods, such as interviews, focus groups, or ethnography, have been employed to study culture. These methods require an appreciation for the unique aspects of individual social settings and the nuances of the interactions between the levels of hierarchy over time. In order to change organizational safety culture and climate, healthcare leaders, practitioners, and researchers will need to move from the current “blame and shame” response to medical errors toward an enriched, progressive culture in which errors are recognized as learning opportunities to better the organization.

Extensive research into how to support a positive safety culture has resulted in the following key organizational commitments: to construct reliable systems and processes, to support and encourage error reporting with an open and just culture, to embrace management practices and behaviors supportive of safety, and to detect and analyze errors and adverse events with robust investigation.3–5 This final commitment lends itself to some well-established methods for quality improvement, such as FMEA or RCA, which have begun to penetrate healthcare systems.

There are only a few rigorous examples of effectively changing culture to improve practice. Probably the best-known and most studied example of cultural change improving clinical outcomes is the Johns Hopkins Comprehensive Unit Based Safety Program (CUSP). Developed and pioneered by Peter Pronovost, CUSP evolved through literature review, discussion with experts, trial, and adaptation. The aim was to create a safety program that could be implemented sequentially in work units, would improve the culture of safety, would allow staff to focus safety efforts on unit-specific problems, and would include rigorous data collection that could be disseminated beyond the study setting.6 It has since been implemented in several large-scale quality improvement initiatives. However, while CUSP has been applied broadly, its effectiveness outside the ICU, and specifically in the context of complication rescue or escalation of care, is unknown.

Ultimately, improvements in surgical safety will require significant work in measuring culture, designing cultural improvement initiatives, and implementing change through continuous and rigorous engagement. Johnston et al have provided an important first step in that direction, but significant work remains in order to identify high leverage targets and implement widespread efforts to transform units towards a culture that better supports appropriate and timely escalation of care and improves the rescue of patients from postoperative complications.

Footnotes

Conflict of Interest/Disclosures: Dr. Dimick receives grant funding from the National Institutes of Health (NIH), the Agency for Healthcare Research and Quality (AHRQ), and BlueCross BlueShield of Michigan Foundation; and is a co-founder of ArborMetrix, Inc, a company that makes software for profiling hospital quality and efficiency. Dr. Ghaferi receives grant funding from the National Institutes of Health (NIH).

References

- 1. Ghaferi AA, Birkmeyer JD, Dimick JB. Variation in hospital mortality associated with inpatient surgery. N Engl J Med. 2009;361:1368–1375. doi: 10.1056/NEJMsa0903048.

- 2. Johnston M, Arora S, Anderson O, et al. Escalation of Care in Surgery: A Systematic Risk Assessment to Prevent Avoidable Harm in Hospitalized Patients. Ann Surg. 2015. doi: 10.1097/SLA.0000000000000762.

- 3. Kohn LT, Corrigan JM, Donaldson MS. To err is human: building a safer health system. A report of the Committee on Quality of Health Care in America, Institute of Medicine. Washington, DC: National Academy Press; 2000.

- 4. Weick KE. The reduction of medical errors through mindful interdependence. Medical error: What do we know. 2002:177–199.

- 5. Page A. Keeping patients safe: Transforming the work environment of nurses. National Academies Press; 2004.

- 6. Pronovost P, Weast B, Rosenstein B, et al. Implementing and validating a comprehensive unit-based safety program. J Patient Saf. 2005;1:33–40.