Abstract

Hypoacusis is the most prevalent sensory disability in the world and can impede speech development. One of the best approaches to this problem is early and effective hearing screening using the electroencephalogram (EEG). EEG-based hearing threshold determination is most suitable for persons who cannot communicate verbally or respond behaviorally to sound stimulation. The auditory evoked potential (AEP) is a type of EEG signal recorded from the scalp in response to an acoustic stimulus, and it reflects the auditory ability of an individual. The goal of this review is to assess the current state of knowledge on estimating hearing threshold levels from the AEP response. An intelligent hearing perception level system makes it possible to examine the functional integrity of the auditory system. Systematic evaluation of EEG-based hearing perception level systems for predicting hearing loss in newborns, infants and persons with multiple handicaps should be a priority for future research.

Keywords: Auditory brainstem response, Auditory evoked potential, Electroencephalogram, Hearing loss, Hearing perception, Neural networks

1. INTRODUCTION

Hearing loss is the most prevalent sensory disability in the world. Over 275 million people worldwide live with hearing impairment. Hearing impairment surveys conducted in different countries report that 0.5 per cent of newborns have sensorineural hearing disorder from birth [1]. Consequences of hearing loss include speech and communication disorders, delayed language understanding, educational disadvantage, social isolation and stigmatization [2, 3]. Universal newborn hearing screening uses transient evoked otoacoustic emissions (TEOAE) and automated auditory brainstem response (AABR) testing. The auditory brainstem response (ABR) is an electrical potential recorded from the scalp by electroencephalography while a sound stimulus is presented, and it is used to assess the function of the auditory pathway, including auditory neuropathy [4]. EEG is a non-invasive clinical tool used for diagnosing brain diseases and is useful in both physiological research and medical applications. Vital brain activity from newborns to adults can be monitored using EEG signals. Responses evoked by sensory stimuli, such as visual and auditory stimuli, reflect sensory function; auditory responses in particular reflect the level of hearing ability [5-7]. EEG-based hearing threshold estimation is therefore particularly suitable for persons who cannot provide consistent or reliable behavioral responses to acoustic stimuli.

A dedicated intelligent hearing level assessment system is essential for determining the hearing threshold of newborns, infants and persons with multiple handicaps. Technological advances in electronics and digital signal processing enable the implementation of such a system using EEG as a reliable measurement technology.

This article reviews recent physiological experiments that examined the effectiveness of auditory evoked potentials in determining the hearing threshold level. The paper is organized as follows. Section 2 gives the fundamentals of event related potentials and evoked potentials. Section 3 reviews hearing threshold level estimation from auditory evoked potential signals. Finally, Section 4 presents the discussion, followed by the conclusion.

2. NEUROPHYSIOLOGICAL SIGNAL

Electroencephalography is a non-invasive technique widely used in diagnosing neurological diseases and problems associated with brain dynamics. The EEG signal reflects the electrical activity of the brain and contains useful information about the brain state. The brain signals relevant to this review are briefly described in the following subsections.

2.1. Event Related Potential (ERP)

ERPs are task-related potentials: spatiotemporal patterns of brain activity that occur in response to an event or task and are time-locked to the stimulus. Event related potentials arise from the transition of the brain from a disordered to an ordered state as it synchronizes to a particular task or event [8, 9]. The ERP reflects activity originating within the brain and is phase-locked to the stimulus onset [10, 11]. ERPs provide a powerful tool for objective assessment of cognitive status and for clinical studies of brain functions such as attention, memory and language.

2.2. Evoked Potential (EP)

Evoked potentials occur in response to a physical stimulus. Physical stimuli are patterns of energy received by the senses; the corresponding sensory receptors convert this energy into nerve impulses that travel to the brain, where they are interpreted in the cerebral cortex as sensations. In auditory testing, these sensations are evoked by delivering stimuli such as tone bursts or clicks. Evoked potentials comprise both exogenous and endogenous components. The two categories of evoked potential considered here are the visual evoked potential (VEP) and the auditory evoked potential (AEP).

2.3. Visual Evoked Potential

The VEP is an electrical signal recorded from the brain while a visual stimulus is presented to the subject in a time-locked manner. The VEP can be used as a diagnostic tool for ocular diseases in visually impaired patients [12]. VEP responses can also help detect disorders affecting the visual system, such as glaucoma, diabetic retinopathy, multiple sclerosis, ocular hypertension, loss of peripheral (side) vision, macular degeneration and color blindness [13, 14].

2.4. Auditory Evoked Potential

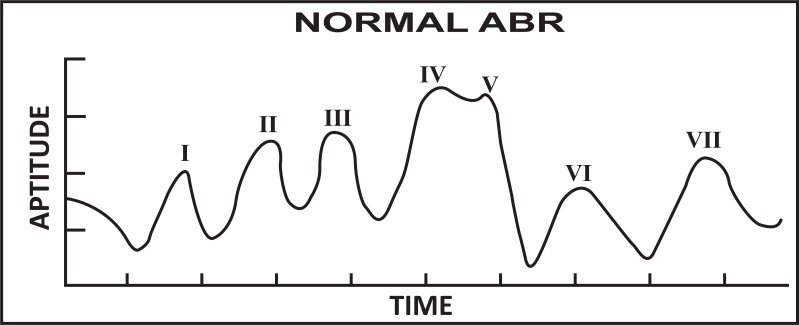

The AEP is an electrical signal elicited from the brain when an auditory stimulus is presented in a time-locked manner. The AEP waveform consists of reproducible positive and negative peaks, characterized by their latency, amplitude and behavioral correlates. AEPs are much smaller in amplitude than the ongoing EEG [15, 16]. AEP signals can be classified as either transient or steady-state. Transient AEPs are recorded when stimuli are presented at a slow rate, so that the response to one stimulus does not overlap the response to the next. Steady-state AEPs are recorded when stimuli are presented at a fast rate, so that the individual responses overlap [17]. The ABR comprises the early portion (0-12 ms) of the AEP. It is composed of several waves or peaks, known as Jewett waves, which are normally labeled with the Roman numerals I-VII. Waves I, III and V are generally considered clinically significant [18]. ABRs are used for the diagnosis and localization of pathologies affecting the brainstem pathways; they are widely employed to test auditory function and are an important diagnostic tool in infant hearing screening [19].
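Because the AEP is far smaller than the ongoing EEG, it is usually recovered by averaging many epochs time-locked to the stimulus: the phase-locked response is preserved, while background activity that is uncorrelated with the stimulus shrinks roughly in proportion to 1/√N for N epochs. The sketch below illustrates this on synthetic data; the sampling rate, the toy response shape, the number of stimuli and the noise level are assumptions chosen for illustration, not values taken from the reviewed studies.

```python
import numpy as np


def average_epochs(eeg, onsets, n_samples):
    """Average single-channel EEG epochs time-locked to stimulus onsets.

    eeg       : 1-D array holding the continuous recording
    onsets    : sample indices at which each stimulus was delivered
    n_samples : epoch length in samples (post-stimulus window)
    """
    epochs = np.stack([eeg[t0:t0 + n_samples] for t0 in onsets])
    # Activity phase-locked to the stimulus adds coherently across epochs,
    # while background EEG that is uncorrelated with the stimulus averages out.
    return epochs.mean(axis=0), epochs


rng = np.random.default_rng(0)
fs = 20_000                                   # assumed sampling rate (Hz)
n = fs * 12 // 1000                           # 12 ms post-stimulus window (ABR range)
t = np.arange(n) / fs
response = 0.5 * np.sin(2 * np.pi * 500 * t) * np.exp(-t / 0.004)  # toy AEP in microvolts
onsets = np.arange(100) * 2 * n               # 100 hypothetical, non-overlapping stimuli
eeg = rng.normal(0.0, 10.0, onsets[-1] + n)   # background EEG, 10 uV RMS
for t0 in onsets:
    eeg[t0:t0 + n] += response

avg, epochs = average_epochs(eeg, onsets, n)
noise_single = np.std(epochs[0] - response)
noise_avg = np.std(avg - response)
print(f"residual noise: single epoch {noise_single:.2f} uV, 100-epoch average {noise_avg:.2f} uV")
```

With 100 epochs the residual noise should fall to roughly one tenth of its single-epoch value, which is why clinical AEP recordings typically average hundreds or thousands of stimulus presentations.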

The middle latency auditory evoked potential (MLAEP) comprises the 8-50 ms portion of the AEP. Middle latency components are elicited even when the subject is not attending to the auditory stimulus [20]. Directing the subject's attention to the auditory stimulus has been reported to enhance the N1 (90 ms) and P2 (170 ms) components of the evoked response [21]. The MLAEP is useful in the objective assessment of auditory function in patients; its applications include monitoring the depth of anesthesia, localizing thalamocortical lesions and assessing the effectiveness of electrical stimulation in cochlear implantation [19, 22].

Mismatch negativity (MMN) occupies the 200-400 ms portion of the AEP. The MMN is elicited when a sequence of identical pure tones (standard stimuli) is interrupted by a ‘deviant’ sound. The MMN is one of the ontogenetically earliest perception-related responses that can be recorded from the scalp [23]. An MMN peaking at 200-400 ms has been elicited in newborns by changes in tone-burst frequency and by phonemic vowel changes [24]. The MMN is therefore valuable for investigating subjects whose behavioral responses are unavailable or unreliable, such as infants. As a component of the brain event related potential, the MMN helps to explain the brain processes that form the biological substrate of central auditory perception and various forms of auditory memory [25, 26]. Rita Ceponiene et al. [27] investigated the MMN component of the event related potential in newborns and its sensitivity to sound duration and frequency. Their results indicate that neonates possess effective neural mechanisms for discriminating stimulus frequency and duration, as indexed by the MMN, and they reported that the MMN can be used as an index of the hearing ability of neonates.
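The MMN is usually measured on a difference wave: the averaged response to deviant stimuli minus the averaged response to standard stimuli, searched for its most negative point in a latency window. A minimal sketch, assuming epochs whose first sample is stimulus onset, a 1 kHz sampling rate, the 200-400 ms window quoted above, and synthetic trials with a 1:5 deviant-to-standard ratio (all illustrative assumptions):

```python
import numpy as np


def mismatch_negativity(standard_epochs, deviant_epochs, fs, window_ms=(200, 400)):
    """Deviant-minus-standard difference wave and its MMN peak.

    Epochs are (n_trials, n_samples) arrays whose sample 0 is stimulus onset.
    Returns the difference wave, the most negative amplitude inside the
    search window, and that point's latency in ms.
    """
    diff = deviant_epochs.mean(axis=0) - standard_epochs.mean(axis=0)
    start, stop = (int(ms * fs / 1000) for ms in window_ms)
    idx = start + int(np.argmin(diff[start:stop]))   # the MMN is a negativity
    return diff, diff[idx], idx * 1000 / fs


rng = np.random.default_rng(1)
fs = 1000                                             # assumed 1 kHz sampling rate
t_ms = np.arange(500)                                 # 0-499 ms after onset
toy_mmn = -2.0 * np.exp(-((t_ms - 300) / 30.0) ** 2)  # synthetic negativity at 300 ms
standard = rng.normal(0, 2, (400, 500))               # 400 standard trials
deviant = rng.normal(0, 2, (80, 500)) + toy_mmn       # 80 deviant trials
diff, amp, latency = mismatch_negativity(standard, deviant, fs)
print(f"MMN amplitude {amp:.2f} uV at {latency:.0f} ms")
```

Taking the minimum inside a fixed window is the simplest peak measure; mean amplitude over the window is a common, more noise-robust alternative.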

3. HEARING THRESHOLD ESTIMATION FROM AEP

This section reviews peer-reviewed studies that estimate the hearing threshold level from recorded EEG signals. In earlier studies, normal-hearing persons were presented with acoustic stimuli at various levels and their auditory responses were recorded. The auditory evoked potential response reflects the hearing perception level of an individual. Most of the reviewed authors [28-34] determined the hearing threshold level by identifying ABR peaks, while a few authors [47-51] analyzed the entire AEP signal (see Table 1).

Table 1.

Summary of AEP studies.

| Authors (Year) | Sample Size & Stimulus Duration | Stimulus Frequency (Hz) | Stimulus Levels | Analysis Method / Feature Extraction | Reports/Results |
|---|---|---|---|---|---|
| T. W. Picton et al. (1973) | N = 12 ears, 1 s | None | 60 dB, lowered in fixed 5 dB steps | Peak amplitudes and latencies relative to baseline were determined for the attend and ignore conditions | A significant amplitude difference was detected between the attend and ignore conditions |
| E. Delgada et al. (1994) | N = 24 ears, HL = 11 ears, 10 ms | None | 10-70 dB in 10 dB steps | Peak identification combined matched filtering with a rule-based system | Precision for peak V was 96 % for normal subjects compared with 82.3 % for hearing-impaired subjects |
| Barrie W. Jervis et al. (1983) | N = 3 ears, 100 ms | 1000 | 40, 70 dB | Pre- and post-stimulus energy levels were compared using a paired t-test | The AEP was due to phase reordering and contained additive energy in the harmonic components |
| Robert Boston et al. (1981) | N = 14 ears, 10 ms | 100, 500, 1000 | 30, 50, 70, 80 dB | Bias, variance and mean square error of Hamming-window spectral estimates were determined | The peak occurs at the lowest stimulus intensities, at which wave V is clearly defined |
| J. Wilson et al. (1999) | N = 240 ears, 0.1 ms | None | 0, 10, 30, 50, 70, 90 dB | Time- and frequency-domain features of ABR waves were extracted | Both time- and frequency-domain ABR features reflect changes with subject age and gender |
| J. Wilson et al. (1999) | N = 240 ears, 0.1 ms | None | 90 dB | Daubechies-5 discrete wavelet analysis of the ABR wave | Multiresolution wavelet analysis reflects changes with subject age and gender |
| Ulrich Hoppe (2001) | N = 22 ears, 300 ms | 500, 1000, 2000, 4000 | 20, 40, 60, 80 dB | Wavelet features were extracted and a statistical test was used for classification | The automatic detector identified responses in agreement with human experts |
| Robert Boston et al. (1989) | N = 14 ears, HL = 16 ears, 1.5 ms | None | None | Rule-based expert system with heuristic criteria to identify peak V | The system identified responses when present but was less effective when no response was present |
| Walker et al. (1983) | N = 4 ears, 28 clicks/s | None | 25, 45, 65 dB | Matched filter to detect peak V | Matched filtering reduced computation time for a hearing test from 30 min to 5-10 min |
| Tapio Grönfors (1993) | N = 44 ears, 0.1 clicks/ms | None | 60, 70, 90 dB | Multifilters and an attributed automaton were used to identify peak V | The method identified 80 % of peak V instances |
| Sudirman et al. (2009) | N = 4 ears, 10 s | 40, 500, 5000, 15000 | None | AEP signal analyzed by fast Fourier transform | A feed-forward neural network was used to classify hearing level from brain signals |
| Masumi Kogure et al. (2010) | N = 10 ears, 500 ms | 440 | None | Gradient of the wave characteristics was analyzed to classify target and non-target trials | Hearing level perception was 65.4-76.3 % for targeted stimuli and 66.4-70.5 % for non-targeted stimuli |
| Maryam Ravan et al. (2011) | 58 newborns, 300 ms | 294, 784 | 70 dB | First- and second-order moment sequences of wavelet coefficients were used as features | Neurological development for hearing was not associated with age differences among the newborns |
| Sriraam (2012) | N = 16 ears, 10 s | None | None | Two time-frequency domain features were extracted and classified using a neural network | The features achieved classification accuracies of 65.3-100 % |

3.1. Potential Significance of ABR Wave

Picton et al. [18] examined the effects of attention on auditory evoked potentials in humans. When the standard auditory stimuli were attended, the N1 (90 ms) and P2 (170 ms) components increased significantly, and a further peak was evoked near 450 ms. The responses recorded under each stimulus condition were used to index the hearing perception level of a subject. A distinct series of waves (I-VI) following a click stimulus was first reported by Jewett and Williston; the waveform was consistent and detectable in all subjects. This series was initially known as the Jewett waves and later as the auditory brainstem response. Auditory brainstem responses (ABRs) comprise the early portion (0-12 ms) of the AEP, and waves I, III and V are generally considered clinically significant [28]. Barrie W. Jervis et al. [29] showed, using angular statistics, that the AEP arises from phase reordering and also contains additive energy in each harmonic component.
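A common angular statistic for evoked responses is the mean resultant length R of a harmonic's phase across trials, tested with the Rayleigh statistic Z = nR²: if stimulation reorders phases, the phases cluster and R approaches 1, whereas random phases give R near 0. The sketch below is a generic illustration under assumed parameters (1 kHz sampling, 100 ms epochs, a 50 Hz harmonic, synthetic trials); it is not a reconstruction of the exact procedure used by Jervis et al.

```python
import numpy as np


def harmonic_phase_coherence(epochs, fs, freq):
    """Angular statistics for the phase of one harmonic across trials.

    Returns the mean resultant length R (near 0 for random phases, 1 for
    identical phases) and the Rayleigh statistic Z = n * R**2.
    """
    n_trials, n_samples = epochs.shape
    spectrum = np.fft.rfft(epochs, axis=1)
    k = int(round(freq * n_samples / fs))          # FFT bin of the harmonic
    phases = np.angle(spectrum[:, k])
    R = np.abs(np.mean(np.exp(1j * phases)))
    return R, n_trials * R ** 2


rng = np.random.default_rng(2)
fs, n = 1000, 100                                  # 100 ms epochs at an assumed 1 kHz
t = np.arange(n) / fs
locked = np.array([np.sin(2 * np.pi * 50 * t) + rng.normal(0, 1, n) for _ in range(60)])
jittered = np.array([np.sin(2 * np.pi * 50 * t + rng.uniform(0, 2 * np.pi)) + rng.normal(0, 1, n)
                     for _ in range(60)])
R_locked, Z_locked = harmonic_phase_coherence(locked, fs, 50)
R_jittered, Z_jittered = harmonic_phase_coherence(jittered, fs, 50)
print(f"phase-locked R={R_locked:.2f}, random-phase R={R_jittered:.2f}")
```

Choosing the harmonic so that it falls exactly on an FFT bin (here 5 cycles in 100 samples) avoids spectral leakage distorting the phase estimate.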

The post-stimulus latencies and amplitudes of the defined peaks characterize the ABR waveform. An important advantage of the ABR is that it does not require any attention or feedback from the subject under test. ABR signals are used for the diagnosis and localization of pathologies affecting the brainstem pathways; they are widely employed to test auditory integrity and are an important diagnostic tool in infant hearing screening [30]. The amplitude and latency of the ABR waveform also vary with the age, sex, test ear and vigilance of the subject [31-33]. Delgada et al. [28] proposed a complete automated system for ABR response identification and waveform recognition. The analysis was divided into (i) peak identification and labeling and (ii) ABR interpretation. Subjects whose hearing threshold exceeded 20 dB were flagged as having a form of hearing loss, and subjects whose peak latency exceeded 4.40 ms were flagged as having hearing pathologies. Hall et al. [34] reported that the spectral composition of the ABR waveform contains valuable and unique prognostic information beyond that of its time-domain counterpart. Arnaud Jacquin et al. [35] combined a signal-adaptive denoising technique based on complex wavelets with a figure of signal quality (Fsp) to denoise brainstem auditory evoked response (BAER) signals. This technique increased the detection rate of AEP response waves and allows the presence of hearing loss to be determined in real time.
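The Fsp figure of signal quality compares the variance of the averaged waveform over time with the estimated noise variance of that average, taken as the across-sweep variance at a single time point divided by the number of sweeps. Values near 1 suggest noise only, while larger values suggest a response is present. The sketch below shows this usual formulation only; the analysis window, the single point, and the synthetic sweeps are assumptions, and the complex-wavelet denoising step of Jacquin et al. is not shown.

```python
import numpy as np


def fsp(sweeps, window, single_point):
    """Fsp quality estimate for an averaged evoked response.

    sweeps       : (n_sweeps, n_samples) array of single-sweep recordings
    window       : slice selecting the response analysis window
    single_point : sample index used to estimate the background-noise variance
    """
    n_sweeps = sweeps.shape[0]
    average = sweeps.mean(axis=0)
    var_signal = np.var(average[window], ddof=1)          # variance of the average over time
    var_point = np.var(sweeps[:, single_point], ddof=1)   # variance across sweeps at one point
    return var_signal / (var_point / n_sweeps)            # noise variance of the average = var_point / n


rng = np.random.default_rng(3)
n_sweeps, n_samples = 2000, 200
wave = np.sin(2 * np.pi * 5 * np.arange(n_samples) / n_samples)   # toy response, 1 uV peak
noise_only = rng.normal(0, 10, (n_sweeps, n_samples))             # 10 uV background EEG
with_response = rng.normal(0, 10, (n_sweeps, n_samples)) + wave
window = slice(0, n_samples)
f0 = fsp(noise_only, window, 100)
f1 = fsp(with_response, window, 100)
print(f"Fsp without response: {f0:.2f}, with response: {f1:.2f}")
```

In practice the computed value is compared with a critical value from the F distribution to decide whether a response is present; that decision step is omitted here.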

3.2. Clinical Importance of Peak V of ABR

Boston [19] evaluated alternative techniques for estimating the spectrum of the ABR signal. The mid-frequency and high-frequency components of the ABR waves are the most important for determining latencies and estimating thresholds. Peak V is of primary importance in identifying the hearing level of a person, because it is the peak that remains clearly defined at the lowest stimulus intensities. Walker et al. [36] proposed a matched filter system to estimate the peak V latency, which is significant in estimating hearing loss. Tapio et al. [37] proposed a method in which multifilters and an attributed automaton were used to identify peak V. Strauss et al. [38] proposed fast detection of wave V in the ABR using single-sweep analysis with a hybrid supervised system, reporting 100 % sensitivity and 90 % specificity in identifying peak V for normal-hearing persons.
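A matched filter slides a template of the expected waveform along the recording and reports where the correlation peaks. A minimal sketch of matched-filter peak V latency estimation, assuming a hypothetical Gaussian-shaped wave V template, a 20 kHz sampling rate and a synthetic averaged ABR; the template used by Walker et al. is not specified here.

```python
import numpy as np


def matched_filter_latency(abr, template, fs, template_peak_idx):
    """Estimate peak V latency (ms) by matched filtering.

    The averaged ABR is correlated with a zero-mean wave V template; the lag of
    maximum correlation plus the template's own peak offset gives the latency.
    """
    tpl = template - template.mean()
    score = np.correlate(abr - abr.mean(), tpl, mode="valid")   # one score per lag
    lag = int(np.argmax(score))
    return (lag + template_peak_idx) * 1000 / fs


fs = 20_000                                       # assumed sampling rate (Hz)
i = np.arange(40)                                 # 2 ms template
template = np.exp(-((i - 20) / 6.0) ** 2)         # hypothetical Gaussian wave V shape, peak at index 20
j = np.arange(240)                                # 12 ms averaged ABR
abr = np.exp(-((j - 120) / 6.0) ** 2) + np.random.default_rng(4).normal(0, 0.1, j.size)
latency = matched_filter_latency(abr, template, fs, template_peak_idx=20)
print(f"estimated peak V latency: {latency:.2f} ms")
```

Removing the template mean makes the score insensitive to slow baseline offsets, so the correlation responds to the shape of the peak rather than its DC level.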

Andrew et al. [39] noted that the ABR is one of the most widely used auditory evoked potentials for determining thresholds, and proposed a method to estimate the ABR peak counts that are directly associated with the threshold level of a patient. The algorithm finds the peaks of clinical interest, specifically peak V, which is instrumental in discriminating normal-hearing from hearing-impaired subjects, on the basis of first- and second-order derivatives. The desired ABR peaks were extracted using a narrow-band FIR filter with zero phase shift. The work also demonstrated that a neural network can be used to estimate the latency of ABR peaks [40]. Rushaidin et al. [41] used the peak instantaneous energy of peak V of the ABR wave as a feature and discriminated normal-hearing from hearing-impaired persons on the basis of the derived threshold values. A normal ABR wave is shown in Fig. (1).

Fig. (1).

Normal ABR signal (presence of peak V visible).
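The derivative-based peak search and zero-phase FIR filtering described for Andrew et al. [39] can be sketched as follows. This is a minimal illustration, assuming a Hamming-windowed sinc band-pass (100-3000 Hz, 101 taps) in place of their narrow-band filter, a synthetic ABR with toy peak latencies, and "instantaneous energy" taken as the squared filtered amplitude; the exact filter and energy definition in [39] and [41] may differ.

```python
import numpy as np


def fir_bandpass(low_hz, high_hz, fs, numtaps=101):
    """Symmetric (linear-phase) windowed-sinc band-pass FIR kernel."""
    n = np.arange(numtaps) - (numtaps - 1) / 2

    def lowpass(fc):
        return 2 * fc / fs * np.sinc(2 * fc / fs * n)

    return (lowpass(high_hz) - lowpass(low_hz)) * np.hamming(numtaps)


def zero_phase_filter(x, h):
    # Centred convolution with a symmetric, odd-length kernel adds no delay,
    # so peak latencies are not shifted by the filter.
    return np.convolve(x, h, mode="same")


def derivative_peaks(y):
    """Local maxima: first derivative changes sign from + to -, second derivative < 0."""
    d1 = np.diff(y)
    d2 = np.diff(y, n=2)
    idx = np.where((d1[:-1] > 0) & (d1[1:] <= 0))[0] + 1
    return idx[d2[idx - 1] < 0]


fs = 20_000                                          # assumed sampling rate (Hz)
t_ms = np.arange(300) * 1000 / fs                    # 0-15 ms
waves = [(1.6, 0.3), (2.8, 0.2), (3.7, 0.3), (4.8, 0.2), (5.6, 0.5)]  # toy (latency ms, amplitude uV)
abr = sum(a * np.exp(-((t_ms - lat) / 0.25) ** 2) for lat, a in waves)
abr = abr + np.random.default_rng(5).normal(0, 0.02, t_ms.size)
y = zero_phase_filter(abr, fir_bandpass(100, 3000, fs))
peaks = derivative_peaks(y)
candidates = peaks[(t_ms[peaks] > 4.5) & (t_ms[peaks] < 7.0)]
peak_v = candidates[np.argmax(y[candidates])]
energy = y[peak_v] ** 2                              # instantaneous energy taken as squared amplitude
print(f"peak V ~ {t_ms[peak_v]:.2f} ms, instantaneous energy {energy:.3f}")
```

Picking the largest maximum in a 4.5-7 ms search window is a simple heuristic for labeling peak V; real recordings usually need additional rules, for example on the following negative trough.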

3.3. Expert Rule Based System Detection of Peak V

Robert Boston et al. [30] proposed an expert decision support system for interpreting brainstem auditory evoked potential responses. The prototype system consisted of 36 rules, of which 13 were framed to determine the presence of a neural response and 10 to identify a peak as peak V. This rule-based system was less effective on ABR waveforms in which no response was present. D. Alpsan et al. [42] employed a feedforward neural network to discriminate brainstem auditory evoked potential responses from no-response signals, and reported a maximum classification accuracy of 75.6 % for the “response” and “no response” classes. R. Sanchez et al. [43] extracted individual and combined feature sets from the brainstem auditory evoked potential (BAEP) and used them as input feature vectors to linear discriminant functions and artificial neural networks, reporting maximum classification accuracies of 97.2 % and 98.85 %, respectively. Edwige Vannier et al. [44] proposed a time-domain BAEP detection method based on supervised pattern recognition, in which the normal BAEP pattern was detected by cross-correlation with a template. The BAEP pattern was detected with 90 % accuracy, and the threshold level was determined with a mean error of 5 dB. Nurettin et al. [45] proposed automated recognition of the ABR waveform to detect the hearing threshold of a person. Amplitude values, discrete cosine transform coefficients and discrete wavelet transform coefficients were extracted from the ABR waveform and classified using a support vector machine, with a reported classification accuracy of 97.7 %. Jose Antonio et al. [46] designed and developed an EEG auditory evoked potential data acquisition system (EEG-ITM03) and used it to determine hearing impairment in patients. The EEG-ITM03 can be used in environments without noise control. AEP data are acquired from the corresponding channels and processed to identify the presence or absence of hypoacusia.
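Linear discriminant functions such as those used by Sanchez et al. [43] separate "response" from "no response" records with a single weighted sum of features. A minimal sketch of a textbook two-class Fisher linear discriminant, assuming two hypothetical features per averaged record and synthetic class distributions; it does not reproduce the feature sets or the results of [43].

```python
import numpy as np


def fit_fisher_lda(X_resp, X_none):
    """Two-class Fisher linear discriminant, w = Sw^-1 (mu_resp - mu_none)."""
    mu_r, mu_n = X_resp.mean(axis=0), X_none.mean(axis=0)
    s_w = np.cov(X_resp, rowvar=False) + np.cov(X_none, rowvar=False)  # pooled within-class scatter (up to scale)
    w = np.linalg.solve(s_w, mu_r - mu_n)
    threshold = w @ (mu_r + mu_n) / 2           # midpoint of the projected class means
    return w, threshold


def predict(X, w, threshold):
    return (X @ w > threshold).astype(int)      # 1 = response present, 0 = no response


rng = np.random.default_rng(6)
# Two hypothetical features per averaged record (for example a peak amplitude and a
# signal-quality score); the values are synthetic, not taken from [43].
X_resp = rng.normal([1.0, 3.0], 0.3, (100, 2))
X_none = rng.normal([0.3, 1.0], 0.3, (100, 2))
w, thr = fit_fisher_lda(X_resp, X_none)
X = np.vstack([X_resp, X_none])
y = np.r_[np.ones(100), np.zeros(100)]
accuracy = np.mean(predict(X, w, thr) == y)
print(f"training accuracy: {accuracy:.3f}")
```

The accuracy printed here is measured on the training data; a fair comparison with reported figures would require held-out records.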

3.4. Complete Characteristic Analysis of Recorded AEP

Several authors [47-51] addressed the problem by analyzing the complete AEP waveform instead of searching for defined peaks. Interpretation of waves IV and V is complex, and even small changes in the waveform can lead to large changes in the measured latencies and amplitudes of these waves. The recorded AEP signal contains both deterministic and stochastic components, and researchers have therefore focused on changes in the structure or characteristics of the whole AEP signal to determine the hearing threshold level of a person. Shangkai et al. [47] estimated autoregressive (AR) models for the AEP records of normal-hearing and hearing-impaired persons. The model parameters revealed very different dynamic structures for the two groups, and the hearing thresholds estimated with the parametric model agreed well with the audiologist's assessment. Sudirman et al. [48] investigated EEG-based identification of hearing ability level using artificial intelligence. The AEP signal was recorded for 10 s from the temporal lobes (T3, T4, T5 and T6) and analyzed using the FFT. The extracted features were used to train a feed-forward neural network with gradient descent with momentum, and the network classified the hearing level from the brain signals. Emre et al. [8] used the continuous time wavelet entropy of auditory evoked potentials, characterized by the relative energy in the EEG frequency bands. The continuous time wavelet transform gives detailed information about the AEP response of the brain, which can be used to determine the threshold level of a person.
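Relative band energy and its entropy can be computed in a few lines. The sketch below swaps the FFT power spectrum (as used by Sudirman et al.) in for the continuous wavelet transform used by Emre et al., so it illustrates the relative-energy and entropy idea rather than their exact wavelet entropy. The band edges, the 250 Hz sampling rate and the synthetic 10 s signals are assumptions.

```python
import numpy as np

# Conventional EEG bands in Hz; the exact edges are an assumption.
BANDS = {"delta": (0.5, 4), "theta": (4, 8), "alpha": (8, 13), "beta": (13, 30), "gamma": (30, 45)}


def relative_band_energy(x, fs, bands=BANDS):
    """Fraction of FFT power falling in each band (sums to 1)."""
    power = np.abs(np.fft.rfft(x - x.mean())) ** 2
    freqs = np.fft.rfftfreq(x.size, d=1 / fs)
    energy = np.array([power[(freqs >= lo) & (freqs < hi)].sum() for lo, hi in bands.values()])
    return energy / energy.sum()


def shannon_entropy(p):
    """Entropy in bits of a relative-energy distribution."""
    p = p[p > 0]
    return float(-(p * np.log2(p)).sum())


fs = 250                                         # assumed sampling rate (Hz)
t = np.arange(10 * fs) / fs                      # 10 s record
rng = np.random.default_rng(7)
rhythmic = np.sin(2 * np.pi * 10 * t) + 0.1 * rng.normal(size=t.size)   # dominated by 10 Hz
broadband = rng.normal(size=t.size)                                        # white noise
p_rhythmic = relative_band_energy(rhythmic, fs)
p_broadband = relative_band_energy(broadband, fs)
print({band: round(float(v), 3) for band, v in zip(BANDS, p_rhythmic)})
print(f"entropy: rhythmic {shannon_entropy(p_rhythmic):.2f} bits, "
      f"broadband {shannon_entropy(p_broadband):.2f} bits")
```

Low entropy means the energy is concentrated in one band, which is how ordered, stimulus-driven activity differs from broadband background in this representation.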

Masumi Kogure et al. [49] used a sound localization system in which subjects were asked to listen to a sound cue and report its relative direction. The direction of the stimulus plays a vital role in how the subject perceives the sound intensity level. They concluded that the EEG signal can be used to identify the direction of the sound source. Maryam Ravan et al. [50] demonstrated that machine learning algorithms can classify individual subjects using auditory evoked potentials. Wavelet coefficient features of the evoked potentials were selected using a greedy algorithm and fed into various machine learning algorithms, such as a multilayer perceptron neural network, a support vector machine and fuzzy C-means clustering [52]. Sriraam extracted features from the recorded auditory evoked potential signals to differentiate hearing perception of target and non-target stimuli. Two time domain features (spike rhythmicity and autoregressive coefficients estimated with the Levinson method) and two frequency domain features (power spectral density estimated with the AR Burg method and with the Yule-Walker method) achieved classification accuracies of 65.3-100 % for normal-hearing subjects.
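The AR features mentioned above can be illustrated with the Yule-Walker equations solved by the Levinson-Durbin recursion, followed by the AR power spectral density. This is a minimal sketch only: the model order, the sampling rate and the synthetic AR(2) test signal are assumptions, and the Burg estimator is not shown.

```python
import numpy as np


def yule_walker_levinson(x, order):
    """AR coefficients for x[n] = sum_k a[k] x[n-k] + e[n], and the noise variance,
    from the biased autocorrelation via the Levinson-Durbin recursion."""
    x = x - x.mean()
    n = x.size
    r = np.array([x[: n - k] @ x[k:] / n for k in range(order + 1)])
    a = np.zeros(order + 1)                          # a[0] unused
    err = r[0]
    for m in range(1, order + 1):
        k = (r[m] - a[1:m] @ r[m - 1:0:-1]) / err    # reflection coefficient
        prev = a.copy()
        a[m] = k
        a[1:m] = prev[1:m] - k * prev[m - 1:0:-1]
        err *= 1 - k ** 2
    return a[1:], err


def ar_psd(a, sigma2, fs, freqs):
    """Power spectral density of the fitted AR model at the given frequencies."""
    lags = np.arange(1, a.size + 1)
    response = 1 - np.exp(-2j * np.pi * np.outer(freqs, lags) / fs) @ a
    return sigma2 / (fs * np.abs(response) ** 2)


rng = np.random.default_rng(8)
e = rng.normal(0, 1, 20_000)
x = np.zeros_like(e)
for i in range(2, e.size):                           # simulate a known AR(2) process
    x[i] = 0.75 * x[i - 1] - 0.5 * x[i - 2] + e[i]
a, sigma2 = yule_walker_levinson(x, order=2)
freqs = np.linspace(0, 125, 126)
psd = ar_psd(a, sigma2, fs=250, freqs=freqs)
print(a.round(3), round(float(sigma2), 3))
```

Fitting a process with known coefficients (0.75 and -0.5) is a quick check that the recursion is implemented correctly before it is applied to AEP records.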

4. DISCUSSION

The reviewed research papers illustrate that the hearing threshold of a person can be estimated by analyzing different types of AEP signals. An EEG-based hearing threshold determination system is suitable for persons who lack verbal communication (newborns, infants) or voluntary muscle control (neuromuscular disorders).

4.1. Hearing Perception Loss in Newborns

Many countries have recently adopted universal newborn hearing screening. The screening tests for newborns are usually conducted using transient evoked otoacoustic emissions and the auditory brainstem response. AEPs are much smaller in amplitude than the ongoing EEG. The ABR comprises the early portion (0-12 ms) of the AEP, and its first seven peaks are labeled I-VII. Peaks I, III and V are generally considered clinically significant. The main characteristics of the ABR are the inter-peak latencies and the peak amplitudes. Each identification of peaks I, III and V in response to sound stimulation carries an associated level of confidence. Peak V is mainly used to identify the hearing threshold level of newborns.
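Once peaks I, III and V have been labeled, the inter-peak latencies and amplitude measures follow directly. A minimal sketch, assuming the peaks have already been identified by an expert or an automatic detector and using hypothetical peak values for one ear; the V/I amplitude ratio is included as one commonly reported amplitude measure.

```python
def abr_measures(peaks):
    """Inter-peak latencies (ms) and the V/I amplitude ratio.

    peaks maps a wave label to (latency_ms, amplitude_uV).
    """
    lat = {label: value[0] for label, value in peaks.items()}
    return {
        "I-III": lat["III"] - lat["I"],
        "III-V": lat["V"] - lat["III"],
        "I-V": lat["V"] - lat["I"],
        "V/I amplitude ratio": peaks["V"][1] / peaks["I"][1],
    }


# Hypothetical peak values for one ear, for illustration only.
measures = abr_measures({"I": (1.6, 0.25), "III": (3.7, 0.30), "V": (5.6, 0.50)})
for name, value in measures.items():
    print(f"{name}: {value:.2f}")
```

Inter-peak latencies are less affected by conductive (middle-ear) problems than absolute latencies, which is one reason they are reported alongside peak V latency.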

4.2. Issues with Existing ABR Methods in Clinics

The existing methods used in clinics to test the hearing threshold of newborns do not examine the detection of hearing responses at threshold levels across different frequencies and sound pressure levels. Human expert interpretation is still required in difficult cases. Further, the accuracy of threshold determination depends on the choice of stimulation intensities. Boo et al. [53] compared the sensitivity and specificity of AABR and otoacoustic emission (OAE) testing for detecting sensorineural hearing loss in newborns. They reported that AABR had an advantage in detecting sensorineural hearing loss in newborns, but that neither method was optimal for detecting hearing loss in mass screening of newborns.

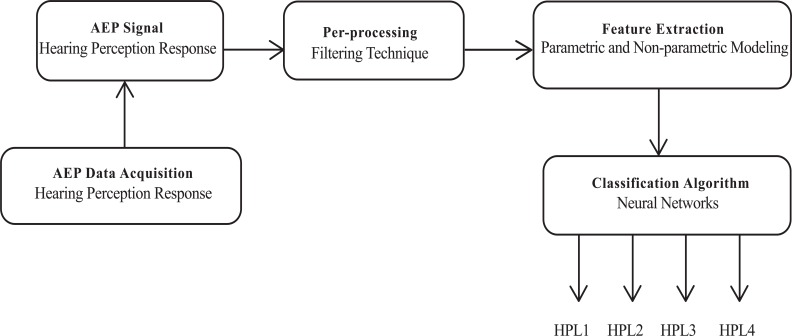

4.3. AEP Hearing Perception Level Estimation

The AEP response reflects the auditory ability of an individual. Presenting a repetitive click stimulus at different intensity levels (20, 25, 30, 40, 50 and 70 dB) makes it possible to determine the hearing perception level of a person. The hearing perception level of a subject can be determined by selecting suitable features from the AEP response signal. With further investigation and interpretation of the AEP signal, it should be feasible to discriminate the different hearing perception levels of a person. The block diagram of the proposed AEP-based hearing perception detection system is shown in Fig. (2).

Fig. (2).

AEP based hearing perception level detection system.

HPL- Hearing perception level.
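Once a detector has decided, at each stimulus intensity level, whether a response is present, the hearing perception level can be summarized as the lowest level that still elicits a response. In this sketch the descending-scan rule (stop at the first level without a response) and the 20 dB flag, borrowed from the criterion reported for Delgada et al. [28], are assumptions, and the detector decisions at the intensity levels listed in this section are hypothetical.

```python
def estimate_threshold(detected_by_level):
    """Lowest stimulus level (dB) with a detected response, scanning downward
    from the highest level and stopping at the first level without a response."""
    threshold = None
    for level in sorted(detected_by_level, reverse=True):
        if not detected_by_level[level]:
            break
        threshold = level
    return threshold


# Hypothetical detector decisions at the tested intensity levels (dB).
decisions = {20: False, 25: False, 30: True, 40: True, 50: True, 70: True}
threshold = estimate_threshold(decisions)
flagged = threshold is None or threshold > 20     # no response at any level also counts as loss
print(f"estimated threshold: {threshold} dB, flagged for hearing loss: {flagged}")
```

Stopping at the first missing response prevents an isolated false detection at a low level (for example, 20 dB detected but 25 dB not) from lowering the estimate.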

4.4. AEP-Based Frequency-Specific Hearing Estimation

The AEP response reflects the auditory ability of an individual with respect to both frequency and sound pressure level. Presenting repetitive stimuli at different frequencies (for example, tone bursts rather than broadband clicks) at a fixed stimulus intensity level makes it possible to determine the frequency-specific hearing perception level of a person. The proposed method is intended to detect low-frequency, mid-frequency and high-frequency hearing loss.
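Given a threshold estimate at each stimulus frequency, the low-, mid- and high-frequency categories can be assigned by comparing each region against a criterion. A minimal sketch, assuming a hypothetical grouping of octave audiometric frequencies into regions, a 20 dB criterion and hypothetical per-frequency thresholds:

```python
# Octave audiometric frequencies grouped into regions; this split is an assumption.
REGIONS = {"low": (250, 500), "mid": (1000, 2000), "high": (4000, 8000)}


def frequency_regions_with_loss(thresholds_db, criterion_db=20):
    """Regions in which any tested frequency has a threshold above the criterion."""
    return [
        region
        for region, freqs in REGIONS.items()
        if any(thresholds_db[f] > criterion_db for f in freqs if f in thresholds_db)
    ]


# Hypothetical AEP-estimated thresholds (dB) per stimulus frequency (Hz).
estimated = {500: 15, 1000: 20, 2000: 25, 4000: 50}
print(frequency_regions_with_loss(estimated))   # -> ['mid', 'high']
```

Untested frequencies are simply skipped rather than assumed normal, so a region with no measurements is never reported as impaired.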

To develop a successful common method for determining auditory loss in all persons, including infants and persons with multiple handicaps, a number of issues need to be addressed:

(i) establishing a standard hearing threshold protocol; and (ii) setting an appropriate hearing threshold criterion. Each of these issues, discussed below, presents an opportunity for further research in the area.

A standard procedure for recording auditory evoked potentials should be formulated. Such a procedure would give researchers a common platform for comparing AEP processing techniques and research findings. The important factors to consider when recording AEP signals are: 1) electrodes and electrode placement; 2) type of stimulus and stimulus levels; 3) stimulus frequency range; 4) a soundproof room; 5) artifacts and noise; 6) selection of suitable features and machine learning algorithms. The literature review shows that most researchers have employed a two-channel EEG data acquisition system. This leaves an opportunity to explore which electrode locations record AEP signals most effectively; a minimum number of electrode channels can then be selected based on these locations.

4.5. Hearing Threshold Criterion Setting

To distinguish normal hearing from hearing impairment, one or more criteria are required, and these criteria should yield both high sensitivity and high specificity. The selected parameters should indicate the degree of hearing impairment, from no impairment to profound impairment. The stimulus frequency, together with the sound intensity level, determines the objective threshold level. Sex differences were neglected in almost all studies, because the recorded AEPs did not show marked differences between males and females. Multiple criteria may be necessary to predict the specific type of hearing impairment of an individual from AEPs. A standalone intelligent EEG-based hearing system incorporating octave-band frequencies could be designed and developed to determine the hearing threshold of a person. An efficient intelligent hearing level system may require multiple criteria and multiple measures.
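Whether a candidate criterion meets the sensitivity and specificity requirement can be checked against reference labels, for example from behavioral audiometry, and sweeping the criterion shows the trade-off between the two. A minimal sketch with hypothetical detector scores and labels:

```python
def sensitivity_specificity(y_true, y_pred):
    """y_true and y_pred use 1 for hearing impaired and 0 for normal hearing."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    return tp / (tp + fn), tn / (tn + fp)


def criterion_sweep(scores, y_true, criteria):
    """Sensitivity and specificity when 'impaired' means score >= criterion."""
    return {c: sensitivity_specificity(y_true, [int(s >= c) for s in scores]) for c in criteria}


# Hypothetical detector scores (higher = more likely impaired) and true labels.
scores = [0.9, 0.8, 0.7, 0.4, 0.3, 0.2, 0.6, 0.1, 0.5, 0.2]
labels = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
sweep = criterion_sweep(scores, labels, [0.3, 0.5, 0.7])
for c, (sens, spec) in sweep.items():
    print(f"criterion {c}: sensitivity {sens:.2f}, specificity {spec:.2f}")
```

Lowering the criterion raises sensitivity at the cost of specificity; a screening programme would typically favour sensitivity so that few impaired infants are missed.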

CONCLUSION

In this paper, we have reviewed the current state of knowledge on auditory evoked potentials as a tool for determining the hearing threshold level of a person. An AEP test does not require special attention or cooperation from the patient. AEPs are reliable indicators of a person's hearing level and can be used to detect and estimate hearing levels ranging from severe to profound hearing impairment. The AEP hearing test may also be used to cross-check conventional pure-tone testing and to identify persons who misrepresent their hearing in that test for social or economic benefit. AEP signals can be analyzed in the time-frequency domain to extract detailed information about the auditory pathway. Ultimately, a standalone EEG-based hearing level system that can detect hearing loss in everyone, including neonates, infants and persons with multiple handicaps, is needed to help improve their quality of life.

ACKNOWLEDGEMENTS

The authors would like to thank Brigedier Jeneral Dato' Professor Dr. Kamarudin Hussin, the Vice Chancellor, University of Malaysia Perlis for his continuous support and encouragement. The authors are thankful and would like to acknowledge the Fundamental Research Grant Scheme (FRGS) grant: 9003-00278 by Ministry of Higher Education, Malaysia.

CONFLICT OF INTEREST

The authors confirm that this article content has no conflict of interest.

References

- 1.WHO International Day for Ear and Hearing. 2012.

- 2.Mather C., Smith A., Concha M. Global burden of hearing loss. In: Mather C., Doris M.F., editors. Global Burden of Diseases: 2004 Update. WHO Press Geneva; 2008. pp. 1–30. [Google Scholar]

- 3.Rao R.S., Subramanyam M.A., Nair N.S., Rajashekhar B. Hearing impairment and ear diseases among children of school entry age in rural South India. Int. J. Pediatr. Otorhinolaryngol. 2002;64(2):105–110. doi: 10.1016/S0165-5876(02)00032-0. [DOI] [PubMed] [Google Scholar]

- 4.Olusanya B.O., Luxon L.M., Wirz S.L. Benefits and challenges of newborn hearing screening for developing countries. Int. J. Pediatr. Otorhinolaryngol. 2004;68(3):287–305. doi: 10.1016/j.ijporl.2003.10.015. [DOI] [PubMed] [Google Scholar]

- 5.Preissl H., Lowery C.L., Eswaran H. Fetal magnetoencephalography: current progress and trends. Exp. Neurol. 2004;190(Suppl. 1):S28–S36. doi: 10.1016/j.expneurol.2004.06.016. [DOI] [PubMed] [Google Scholar]

- 6.Vanhatalo S., Kaila K. Development of neonatal EEG activity: from phenomenology to physiology. Semin. Fetal Neonatal Med. 2006;11(6):471–478. doi: 10.1016/j.siny.2006.07.008. [DOI] [PubMed] [Google Scholar]

- 7.Cummins T.D., Finnigan S., Ros J. Theta power is reduced in healthy cognitive aging. Int. J. Psychophysiol. 2007;66(1):10–17. doi: 10.1016/j.ijpsycho.2007.05.008. [DOI] [PubMed] [Google Scholar]

- 8.Cek M.E., Ozgoren M., Savaci F.A. Continuous time wavelet entropy of auditory evoked potentials. Comput. Biol. Med. 2010;40(1):90–96. doi: 10.1016/j.compbiomed.2009.11.005. [DOI] [PubMed] [Google Scholar]

- 9.Subha D.P., Joseph P.K., Acharya U R., Lim C.M. EEG signal analysis: a survey. J. Med. Syst. 2010;34(2):195–212. doi: 10.1007/s10916-008-9231-z. [DOI] [PubMed] [Google Scholar]

- 10.Luck K.S. Introduction to the Event Related Potential Technique. 2005. [Google Scholar]

- 11. Başar-Eroglu C., Kolev V., Ritter B., Aksu F., Başar E. EEG, auditory evoked potentials and evoked rhythmicities in three-year-old children. Int. J. Neurosci. 1994;75(3-4):239–255. doi: 10.3109/00207459408986307.

- 12. Sokol S. Visually evoked potentials: theory, techniques and clinical applications. Surv. Ophthalmol. 1976;21(1):18–44. doi: 10.1016/0039-6257(76)90046-1.

- 13. Shih Y.H., Huang Z.J., Chang C.E. Color pattern-reversal visual evoked potential in eyes with ocular hypertension and primary open-angle glaucoma. Doc. Ophthalmol. 1991;77(3):193–200. doi: 10.1007/BF00161367.

- 14. Regan D., Neima D. Visual fatigue and visual evoked potentials in multiple sclerosis, glaucoma, ocular hypertension and Parkinson’s disease. J. Neurol. Neurosurg. Psychiatry. 1984;47(7):673–678. doi: 10.1136/jnnp.47.7.673.

- 15. Picton T.W. Human Auditory Evoked Potentials. USA: Plural Publishing; 2010.

- 16. Picton T.W., John M.S., Purcell D.W., Plourde G. Human auditory steady-state responses: the effects of recording technique and state of arousal. Anesth. Analg. 2003;97(5):1396–1402. doi: 10.1213/01.ANE.0000082994.22466.DD.

- 17. Plourde G. Auditory evoked potentials. Best Pract. Res. Clin. Anaesthesiol. 2006;20(1):129–139. doi: 10.1016/j.bpa.2005.07.012.

- 18. Picton T.W., Hillyard S.A. Human auditory evoked potentials. II. Effects of attention. Electroencephalogr. Clin. Neurophysiol. 1974;36(2):191–199. doi: 10.1016/0013-4694(74)90156-4.

- 19. Boston J.R. Spectra of auditory brainstem responses and spontaneous EEG. IEEE Trans. Biomed. Eng. 1981;28(4):334–341. doi: 10.1109/TBME.1981.324801.

- 20. Zhang X.S., Roy R.J., Schwender D., Daunderer M. Discrimination of anesthetic states using midlatency auditory evoked potential and artificial neural networks; Proceedings of the IEEE International Conference on Engineering in Medicine and Biology Society; 2000. pp. 1383–1386.

- 21. Mendel M.I., Goldstein R. Stability of the early components of the averaged electroencephalic response. J. Speech Hear. Res. 1969;12(2):351–361. doi: 10.1044/jshr.1202.351.

- 22. Wilkinson R.T., Morlock H.C. Auditory evoked response and reaction time. Electroencephalogr. Clin. Neurophysiol. 1967;23(1):50–56. doi: 10.1016/0013-4694(67)90140-X.

- 23. Näätänen R., Paavilainen P., Rinne T., Alho K. The mismatch negativity (MMN) in basic research of central auditory processing: a review. Clin. Neurophysiol. 2007;118(12):2544–2590. doi: 10.1016/j.clinph.2007.04.026.

- 24. Näätänen R., Winkler I. The concept of auditory stimulus representation in cognitive neuroscience. Psychol. Bull. 1999;125(6):826–859. doi: 10.1037/0033-2909.125.6.826.

- 25. Korpilahti P. Electrophysiological correlates of auditory perception in normal and language impaired children. 1996.

- 26. Leppänen P.H., Eklund K., Lyytinen M. Event related brain potentials to change in rapidly presented acoustic stimuli in newborns. Dev. Neuropsychol. 1997;13:175–204.

- 27. Čeponienė R., Kushnerenko E., Fellman V., Renlund M., Suominen K., Näätänen R. Event related potential features indexing central auditory discrimination by newborns. Cogn. Brain Res. 2002;13:107–113. doi: 10.1016/s0926-6410(01)00093-3.

- 28. Delgado R.E., Özdamar Ö. Automated auditory brainstem response interpretation. IEEE Eng. Med. Biol. Mag. 1994;13:227–237. doi: 10.1109/51.281682.

- 29. Jervis B.W., Nichols M.J., Johnson T.E., Allen E., Hudson N.R. A fundamental investigation of the composition of auditory evoked potentials. IEEE Trans. Biomed. Eng. 1983;30(1):43–50. doi: 10.1109/TBME.1983.325165.

- 30. Boston J.R. Automated interpretation of brainstem auditory evoked potentials: a prototype system. IEEE Trans. Biomed. Eng. 1989;36(5):528–532. doi: 10.1109/10.24254.

- 31. Wilson W.J., Aghdasi F. Fast Fourier transform analysis of the auditory brainstem response: effects of stimulus intensity and subject age, gender, test ear; Proceedings of IEEE AFRICON; 1999. pp. 285–290.

- 32. Wilson W.J., Aghdasi F. Discrete wavelet transform analysis of the auditory brainstem response: effects of stimulus intensity and subject age, gender, test ear; Proceedings of IEEE AFRICON; 1999. pp. 291–296.

- 33. Hoppe U., Weiss S., Stewart R.W., Eysholdt U. An automatic sequential recognition method for cortical auditory evoked potentials. IEEE Trans. Biomed. Eng. 2001;48(2):154–164. doi: 10.1109/10.909636.

- 34. Hall J.W. III. Auditory brain stem response spectral content in comatose head-injured patients. Ear Hear. 1986;7(6):383–389. doi: 10.1097/00003446-198612000-00007.

- 35. Jacquin E.C., John E.R. Optimal denoising of brainstem auditory evoked response for automatic peak identification and brainstem assessment; Proceedings of the IEEE International Conference on Engineering in Medicine and Biology Society; 2006. pp. 1723–1726.

- 36. Woodworth W., Reisman S., Fontaine A.B. The detection of auditory evoked responses using a matched filter. IEEE Trans. Biomed. Eng. 1983;30(7):369–376. doi: 10.1109/TBME.1983.325036.

- 37. Grönfors T. Peak identification of auditory brainstem responses with multi-filters and attributed automaton. Comput. Methods Programs Biomed. 1993;40(2):83–87. doi: 10.1016/0169-2607(93)90002-3.

- 38. Strauss D.J., Delb W., Plinkert P.K., Schmidt H. Fast detection of wave V in ABRs using a smart single sweep analysis system; Proceedings of the IEEE International Conference on Engineering in Medicine and Biology Society; 2004. pp. 458–461.

- 39. Bradley P., Wilson W.J. Automated analysis of the auditory brainstem response; Proceedings of the IEEE International Conference on Intelligent Sensors, Sensor Networks and Information Processing; 2004. pp. 541–545.

- 40. Tian J., Juhola M., Grönfors T. Latency estimation of auditory brainstem response by neural networks. Artif. Intell. Med. 1997;10(2):115–128. doi: 10.1016/S0933-3657(97)00389-8.

- 41. Rushaidin M.M., Salleh S.H., Tan T.S., Najeb J.M., Arooj A. Wave V detection using instantaneous energy of auditory brainstem response signal. Am. J. Appl. Sci. 2009;6:1669–1674. doi: 10.3844/ajassp.2009.1669.1674.

- 42. Alpsan D., Ozdamar O. Brainstem auditory evoked potential classification by backpropagation networks; Proceedings of the IEEE International Joint Conference on Neural Networks; 1991. pp. 1266–1271.

- 43. Sánchez R., Riquenes A., Pérez-Abalo M. Automatic detection of auditory brainstem responses using feature vectors. Int. J. Biomed. Comput. 1995;39(3):287–297. doi: 10.1016/0020-7101(95)01110-Z.

- 44. Vannier E., Adam O., Motsch J.F. Objective detection of brainstem auditory evoked potentials with a priori information from higher presentation levels. Artif. Intell. Med. 2002;25(3):283–301. doi: 10.1016/S0933-3657(02)00029-5.

- 45. Acir N., Ozdamar O., Guzelis C. Automatic classification of auditory brainstem responses using SVM-based feature selection algorithm for threshold detection. Eng. Appl. Artif. Intell. 2006;19:209–218. doi: 10.1016/j.engappai.2005.08.004.

- 46. Gnecchi J.A., Lara L.R. Design and construction of an EEG data acquisition system for measurement of auditory evoked potential; Proceedings of the IEEE International Conference on Electronics, Robotics and Automotive Mechanics; 2008. pp. 547–552.

- 47. Gao S.K., Loew M.H. An autoregressive model of the BAEP signal for hearing-threshold testing. IEEE Trans. Biomed. Eng. 1986;33(6):560–565. doi: 10.1109/TBME.1986.325820.

- 48. Sudirman R., Seow S.C. Electroencephalographic based hearing identification using back propagation algorithm; Proceedings of the IEEE International Conference on Science and Technology for Humanity; 2009. pp. 991–995.

- 49. Kogure M., Matszaki S., Wada Y. Investigation of brain computer interface that apply sound evoked event related potentials. 2010.

- 50. Ravan M., Reilly J.P., Trainor L.J., Khodayari-Rostamabad A. A machine learning approach for distinguishing age of infants using auditory evoked potentials. Clin. Neurophysiol. 2011;122(11):2139–2150. doi: 10.1016/j.clinph.2011.04.002.

- 51. Sriraam N. EEG based automated detection of auditory loss: A pilot study. Expert Syst. Appl. 2012;39:723–731. doi: 10.1016/j.eswa.2011.07.064.

- 52. Boo N.Y., Rohani A.J., Asma A. Detection of sensorineural hearing loss using automated auditory brainstem-evoked response and transient-evoked otoacoustic emission in term neonates with severe hyperbilirubinaemia. Singapore Med. J. 2008;49(3):209–214.