Abstract

Objective

Intracortical brain-computer interface (BCI) decoders are typically retrained daily to maintain stable performance. Self-recalibrating decoders aim to remove the burden this may present in the clinic by training themselves autonomously during normal use but have only been developed for continuous control. Here we address the problem for discrete decoding (classifiers).

Approach

We recorded threshold crossings from 96-electrode arrays implanted in the motor cortex of two rhesus macaques performing center-out reaches in 7 directions over 41 and 36 separate days spanning 48 and 58 days in total for offline analysis.

Main results

We show that for the purposes of developing a self-recalibrating classifier, tuning parameters can be considered as fixed within days and that parameters on the same electrode move up and down together between days. Further, drift is constrained across time, which is reflected in the performance of a standard classifier which does not progressively worsen if it is not retrained daily, though overall performance is reduced by more than 10% compared to a daily retrained classifier. Two novel self-recalibrating classifiers produce a ~15% increase in classification accuracy over that achieved by the non-retrained classifier to nearly recover the performance of the daily retrained classifier.

Significance

We believe that the development of classifiers that require no daily retraining will accelerate the clinical translation of BCI systems. Future work should test these results in a closed loop setting.

1. Introduction

Brain-computer interfaces (BCIs) aim to assist disabled patients by translating recorded neural activity into control signals for assistive devices. Intracortical BCI systems rely upon arrays of chronically implanted microelectrodes which penetrate into the cortex to record the activity of tens to hundreds of neurons. The last decade has witnessed a substantial investment of resources by the community into developing decoders for intracortical BCI which are more accurate, both in offline simulations with prerecorded data and in online, closed-loop settings. However, in almost all cases these decoders are retrained daily in a supervised manner to maintain their accuracy (e.g., Musallam et al. (2004); Santhanam et al. (2006); Velliste et al. (2008); Li et al. (2009); Hochberg et al. (2012); Gilja et al. (2012); Collinger et al. (2012)). This retraining typically requires that the subject perform a series of trials where the goal of each trial, such as desired reach target, is known so labelled data for training can be collected.

While retraining procedures may only require minutes of a subject’s time, future users may find daily repetition of a task which stands between them and device use burdensome. Indeed, imagine the annoyance modern cell phone users would experience if they had to calibrate the touch screens of their devices everyday before use. A few minutes of calibration would be a small price to pay for the connectivity these devices provide, but it is difficult to imagine the widespread adoption of modern phones under these conditions. In the same way, we suggest that modern BCI systems should aim to achieve predictable performance each day without undue burden to users.

An alternative to retraining in a supervised manner is to employ self-recalibrating neural decoders which can simultaneously estimate a user’s intent along with changes in neural tuning parameters. These decoders, generally referred to as adaptive decoders, continuously train themselves during normal BCI use without requiring knowledge of a user’s true intended actions. In this way, the retraining period at the beginning of each day can be eliminated. There have been relatively few studies on self-recalibrating decoders for intracortical BCI, and the work that does exist has focused on BCI decoders which decode a continuously valued control signal, such as the position of a computer cursor or prosthetic limb (Eden et al., 2004a,b; Srinivasan et al., 2007; Li et al., 2011).

While continuous decoders have many important applications, classifiers of discrete actions are an important part of existing real-world BCI systems (Ohnishi et al., 2007). Potential clinical applications for BCI classifiers include making selections from a menu interface or virtual keyboard (e.g., Scherer et al. (2004); Santhanam et al. (2006); Brunner et al. (2011)), selecting among grasp types for a prosthetic hand (e.g., Pistohl et al. (2012); Chestek et al. (2013)), classifying finger movements (e.g., Aggarwal et al. (2008); Wang et al. (2009)), identifying periods of planning and movement (e.g., Shenoy et al. (2003); Achtman et al. (2007); Kemere et al. (2008); Wang et al. (2012)) and providing discrete control signals for a wheelchair (e.g., Leeb et al. (2007); Galán et al. (2008)). In some settings it may be possible to interface with a device using either a continuous or discrete control signal. For example, when interfacing with a menu, a cursor can be moved over items to be selected or a discrete decoder can be used to make a direct menu selection. In certain cases, the use of a classifier may provide a substantial performance improvement to an end user. Indeed, we have demonstrated a discrete decoder which transmits the user’s intent at a rate of up to 6.5 bits per second (~15 words per minute), which is faster than continuously guiding a cursor to make discrete selections (Santhanam et al., 2006).

Despite the potential clinical benefit that intracortical BCI classifiers may provide, the subject of self-recalibrating classifiers has received little attention in the intracortical community. In contrast, there is previous work in the EEG community which characterizes signal drift (e.g., Shenoy et al. (2006); Pascual et al. (2011)), and proposes self-recalibrating classifiers. Example algorithms include approaches based on variational Kalman filtering (Sykacek and Roberts, 2002; Sykacek et al., 2004), expectation maximization (Blumberg et al., 2007) and a variety of other methods to update the parameters of an LDA classifier (Vidaurre et al., 2008; Vidaurre and Blankertz, 2010). However, many of these algorithms consider only binary classification, and it is not clear the complexity of some (e.g., Sykacek and Roberts (2002); Sykacek et al. (2004); Blumberg et al. (2007)) is required for BCI application.

In the present work, we seek to develop self-recalibrating classifiers for intracortical BCI. We note this differs from the previous work of Santhanam et al. (Santhanam et al., 2009). Santhanam et al. develop classifiers with improved within-day accuracy by accounting for unobserved influences on neural activity, while we focus on achieving stable across-day performance through self-recalibration. We chose to forgo spike sorting in favor of the use of threshold crossings. That is we mark any voltage event which crosses a set threshold on each electrode as a spike. In one monkey, we also enforce that threshold events must pass a shape heuristic. Previous work has shown only minor reductions in performance when threshold crossings are used in place of spike sorting (Fraser et al., 2009; Chestek et al., 2011), and this removes the need to track and identify units across days.

Having made this design decision, we study how firing rates drift within and between recording sessions on different electrodes. This enables us to then develop self-recalibrating classifiers which take advantage of structure we find in this drift. Along the way, we also examine the performance of a standard BCI classifier that undergoes no daily retraining. In addition to being a novel result in itself, this provides a baseline when assessing the performance of the self-recalibrating classifiers presented in this work.

2. METHODS

Reach task and neural recordings

Animal protocols were approved by the Stanford University Institutional Animal Care and Use Committee. We trained two adult male monkeys (Macaca mulatta, monkeys I and L) to perform center-out reaches for liquid rewards. As illustrated in Fig. 1, visual targets were back-projected onto a vertical screen in front of the monkey. A trial began when the monkey touched a central yellow square. Following a randomized (300 – 500 ms) hold period, a visual reach target appeared. After a brief (35 ms) delay, the animal was permitted to reach. Reach targets could appear at one of twenty-eight locations. Each of the twenty-eight targets was typically located at a distance of 4.5 cm, 7.0 cm, 9.5 cm, or 12 cm from the central target and at an angle of 40°, 85°, 130°, 175°, 220°, 310°, or 355° from horizontal so that the targets were arranged in 4 concentric rings of 7 targets each. For all analyses in this paper, we collapse across reach distance and only consider the direction of reach on each trial. After the reach target was held for 200 ms for monkey L and 300 ms for monkey I, a liquid reward was delivered.

Figure 1.

A representative trial of the center out reaching task (Monkey L, trial ID: R,2008-12-26,mm, 109). The top row is the behavioral task. The middle row contains spike rasters across 96 electrodes and the bottom row presents the timecourse of the hand position. Full range of scale for the hand position is 15 cm.

Monkeys sat in a custom chair (Crist Instruments, Hagerstown, MD) with the head braced and nonreaching arm comfortably restrained. A 96-channel silicon electrode array (Blackrock Microsystems, Salt Lake City, UT) was implanted in motor cortex contralateral to the reaching arm. When identifying neural spikes on each electrode, threshold crossings (Chestek et al., 2009; Fraser et al., 2009) were used in place of spike sorting. For monkey L, we also required voltage waveforms to pass a shape heuristic. Threshold levels for each electrode were set at the beginning of each recording day with an automated method and then, with possible rare exception, held fixed for the remainder of data collection. The automated method of setting threshold levels consisted of collecting 2 seconds of filtered voltage data sampled at 30-kHz from each electrode and calculating a root-mean-square (RMS) like value from this data. Thresholds levels were then set at three times this value for each electrode.

Recordings were made on a total of 41 (monkey L) and 36 (monkey I) days spanning a period of 48 (monkey L) and 58 (monkey I) days in total. In all of the work that follows, we limit ourselves to only analyzing successful trials. In the data analyzed, there were (mean ± s.d.) 1737 ± 482 (monkey L) and 1688 ± 368 (monkey I) successful trials per day spanning 53 ± 16 (monkey L) and 88 ± 16 (monkey I) minutes per day.

2.1. Mathematical Notation

We aim to develop BCI classifiers which maintain stable day-to-day performance without any daily supervised retraining. For clarity, we will speak of developing classifiers within the context of the datasets used in this study, but the techniques presented here should generalize to other intracortical BCI classification scenarios.

Throughout this work, we rely on probabilistic methods to infer the intended reach direction from neural data. For trial t on day d, we form a feature vector, xd,t ∈ ℕE×1, from our neural data by counting the number of threshold crossings on each of E electrodes of the recording array. The notation xd,e,t will refer to the specific spike count for electrode e‡. Similarly, yd,t ∈{1, … , J} indicates which one of J directions a reach is made in for a specific trial and day. Throughout this work we follow the convention of using bold script exclusively to denote vectors. The symbol P will be used to indicate probability distributions.

On any given trial there is a probabilistic model relating observed spike counts to reach direction. We model this with the distribution P(xd,t|yd,t; Θd,t), where Θd,t is a set of parameters characterizing the distribution. In this work for electrode e, these parameters consist of the means μe,j and variances ve,j of spike counts when reaches are made in each direction j. We will refer to this set of parameters as tuning parameters. While both means and variances can drift in principle, we focus on achieving stable classification through tracking changes in mean spike counts. We term the set of μe,j parameters for a fixed reach direction j as class means, as they are mean counts conditioned on a class of movement. We can index class means across days and trials as μd,e,j,t, where d indexes day and t indexes a trial within a day. In some of the work that follows, we will speak of class means across all trials of a day, which will we denote as μd,e,j.

2.2. Tracking Drifting Tuning Parameters Across Time

Movements of the recording array relative to the brain (Santhanam et al., 2007), scar tissue buildup (Polikov et al., 2005), behavioral changes (Chestek et al., 2007) and neural plasticity (Taylor et al., 2002; Jarosiewicz et al., 2008; Ganguly and Carmena, 2009; Chase et al., 2012) may all cause tuning parameters to drift. In this section, we track drift in class means across days and time by convolving spike counts for all reach directions in a particular direction with a Gaussian kernel. This is a simple way of smoothing spike counts to estimate tuning parameters when true reach direction is known on each trial. This is an appropriate assumption for this analysis which aims to establish basic properties of tuning parameter drift. However, the techniques introduced in this section should not be confused with the methods that are introduced later to track drifting tuning parameters by our self-recalibrating classifiers when knowledge of true reach direction is not assumed known. Mathematically, estimates of tuning parameters are formed through convolution with a kernel as

| (1) |

where K(t − s; w) is Gaussian density function with 0 mean and standard deviation w and C = Σs:yd,s=j K(t−s; w) is a normalizing constant. Mathematically, the function K indexes over trial number, and therefore, t−s in the argument is the distance in number of trials between two trials and not time. There remains the challenge of selecting the proper value of the width, w, of the Gaussian density function. For the purposes of this paper, we will present results for a single value of w and then show in Supplementary Results that the qualitative results we obtain are robust as the value of w is varied.

We are interested in comparing the amount of drift between and within days. If we smooth only within days, we enforce smoothing within but not across days and we may bias our results to show larger between than within day drift. To account for this, we ignore day boundaries and smooth across all trials for a given electrode and reach direction as if they were on the same day. This may tend to “over-smooth” between days, but to foreshadow what is to come, we would like to argue that within-day variation in tuning parameters is small relative to between-day variation. Therefore, this procedure is conservative for our purposes.

2.3. A Standard BCI Classifier

We desire to examine the impact of tuning parameter drift on a standard BCI classifier. We select a Gaussian, naïve Bayes decoder as our standard classifier, which has been used for BCI classification before (Santhanam et al., 2006; Maynard et al., 1999). Briefly, this classifier models spike counts on electrodes as independent Gaussian distributions conditioned on reach direction. The notation μe,j and ve,j refer to the mean and variance of the distribution for electrode e and reach direction j. For notational convenience, we will group all the means and variances for a single electrode e into the vectors μe ∈ ℝJ×1 and ve ∈ ℝJ×1, respectively. The joint probability of observing spike counts for all electrodes can be computed as the product of probabilities for individual electrodes. Formally, we write this as

| (2) |

A uniform prior probability of reaching in any direction, P(yd,t) = 1/J, is employed and Bayes’ rule can then be used to find the posterior probability of reaching in direction j given neural data, P(yd,t = j|xd,t). This posterior is calculated for each reach direction, and the direction with the highest probability is selected as the decoded direction for each trial.

In this work we will examine the performance of two versions of this standard classifier, which differ only in the way they are trained. The first version, referred to as the standard retrained classifier, learns the μe and ve parameters fresh with trials from the beginning of each day on which classification will be performed. This emulates standard practice in the BCI field. The second version, referred to as the standard non-retrained classifier, is trained initially on all trials collected on a small number of days in which neural data and desired reach direction is known. After this training, the parameters of the standard non-retrained classifier are held fixed, and it is then used to predict reach direction for trials on subsequent days without any type of retraining or recalibration.

2.4. A Probabilistic Self-Recalibrating Classifier

We now describe the intuition and probabilistic model behind our two self-retraining classifiers. The first version, which we refer to as the SR classifier, takes a principled probabilistic approach which provides insight into the simplified self-recalibrating (SRS) classifier we subsequently present. Readers interested only in the SRS classifier may skip to section 2.5 after reading the part of this section outlining the probabilistic model common to both classifiers without loss of understanding.

The SR classifier can be considered an augmented standard classifier. It continues to assume tuning parameters are fixed within a day, but it no longer assumes class means are known at the beginning of each decoding day. Mathematically, it achieves this by introducing a distribution over class mean values that a decoding day begins with. As a decoding day progresses, the SR classifier produces refined distributions over class means while simultaneously estimating reach direction on a trial-by-trial basis.

A formal description of probabilistic model for the SR classifier follows. When explicitly referring to the class mean for electrode e, reach direction j on day d, we will use the notation μd,e,j. In results we show that the μd,e,j parameters for the same electrode drift from day to day in a highly correlated manner. Intuitively, this means that the average spike counts observed for two different reach directions on the same electrode tend to move up and down together across days. We capture this intuition by referencing all class means for electrode e to the same base value bd,e for day d, and we allow this base value to be drawn from a Gaussian distribution independently on each day. Mathematically,

| (3) |

where me is the mean and se is the variance of the distribution from which base values are drawn. For notational convenience we will group all the base values for all electrodes for day d into a vector be ∈ ℝE×1. We model the base values for different electrodes as independent, and the prior probability for the set of base values for all electrodes is therefore given by

| (4) |

We relate the class mean for reach direction j for an electrode to its base value through an offset, oe,j, so that

| (5) |

The offset, oe,j, is fixed across days. Class means for a single electrode can then vary from day to day but they must move up and down together. Having defined a probabilistic model for bd,e, we specify an observation model for a single electrode by defining the probability of a spike count on electrode e for trial t on day d given bd,e and reach direction j as

| (6) |

This observation model is almost identical to that of the standard classifier with one important exception: spike counts now depend on the base value, which is a random variable that can vary from day to day. To specify the complete observation model, we again model electrodes as conditionally independent given reaching direction so that

| (7) |

To complete the specification of the probabilistic model for the self-recalibrating classifier, we define the prior probability of reaching in any direction as uniform, exactly as specified for the standard model.

2.4.1. Decoding with the Self-Recalibrating Classifier

We now show how to decode reach direction while simultaneously refining knowledge of class means with the SR classifier. For each trial, we seek to find two distributions. The first is , the posterior distribution over reach directions. The most likely direction from this distribution is selected as the decoded reach direction on each trial. The second distribution is , which embodies the decoder’s knowledge of the base values for a day given the neural activity that it has seen.

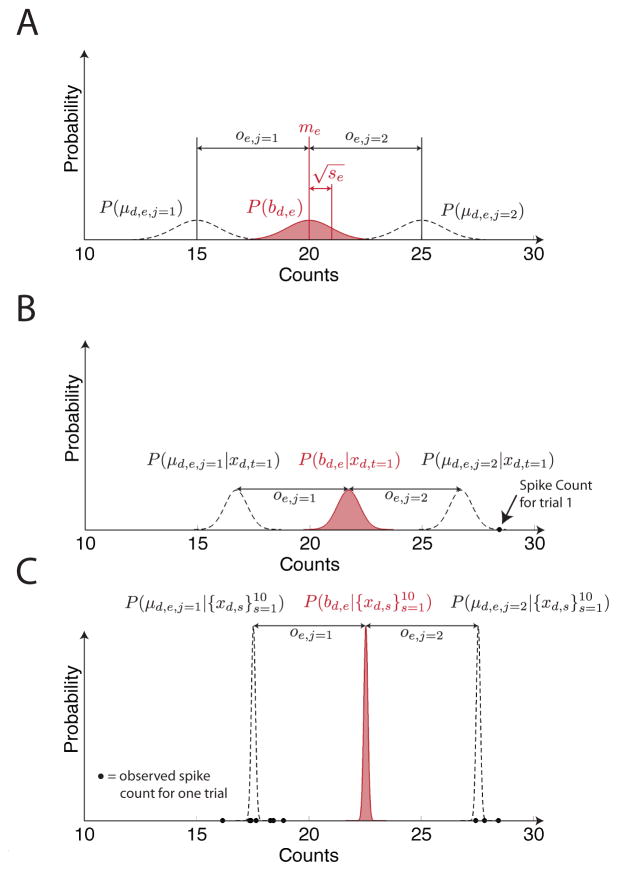

As a decoding day progresses, the decoder’s knowledge of the true base values for a day, as represented in the distribution , becomes more refined. This is possible even though the decoder is never provided with knowledge of the user’s true reach directions. The key intuition is that spike counts will tend to cluster around class means for a day, and this structure can be exploited by our classifier, as illustrated for a one electrode and two reach direction decoding scenario in Fig. 2.

Figure 2.

An illustration of the probabilistic distribution over tuning parameters for the self-recalibrating classifier at three different points in time during a decoding session on day d for an example where spike counts recorded on one electrode are used to predict reaches in one of two directions. Panel A shows the distribution over the base value for the day, bd,e, for the electrode at the start of the decoding day before any neural activity is available. This distribution is a Gaussian distribution with mean me and variance se. In all panels, distributions over bd,e are shown in red to indicate that this is the distribution the self-recalibrating classifier explicitly represents. Panel A also shows the distributions over μd,e,j=1 and μd,e,j=2, which are the mean number of spike counts conditioned on reaching in directions 1 and 2. The classifier does not explicitly represent these distributions, but they can be recovered from the distribution over bd,e with the formula μd,e,j = bd,e + oe,j . Panel B shows the distributions over bd,e, μd,e,j=1 and μd,e,j=2 after spike counts for one trial have been observed. Importantly, note that the true reach direction associated with this spike count is not made available to the classifier. Panel C shows distributions over the same quantities after 10 trials have been observed. The solid dots in panels B and C represent spike counts observed on individual trials.

We now describe the formal decoding procedure for arriving at the distributions and for each trial. The algorithm is recursive and will use the results from the previous trial, the distributions and , as its starting point in its calculations for trial t. When decoding the first trial, the prior distribution on reach directions, P(yd,t), is used in place of and the prior distribution on base values, P (bd), is used in place of .

Calculating . For trial t, we calculate using Bayes’ rule as

| (8) |

where in moving to the second line we have defined , made use of the fact that reach direction is independent of neural activity so that , and recognized that the denominator of the first line is a normalizing constant calculated as .

The scalar ct|j is the probability of the neural activity for trial t given all past neural activity seen during the day if the subject reaches in direction j. We will soon describe a means of calculating its value, but first note that it appears in the calculations for another distribution, , which we will need shortly. This distribution gives the probability of base values conditioned on all observed neural activity if the subject were to reach in direction j for the current trial. To calculate it, we again use Bayes’ rule to write

| (9) |

where P(xd,t|bd, yd,t = j) is given by Eq. 7 and is the posterior over base values obtained when decoding the previous trial. In forming the numerator of Eq. 9, we have used the fact that xd,t conditioned on bd is independent of all previous neural observations and that base value is independent of reach direction. We see that the numerator on the right hand side of Eq. 9 is in the form of the product of two Gaussians. is defined to be a Gaussian distribution with mean μt−1 and covariance matrix . Viewed as a function of bd, P (xd,t|bd, yd,t = j) is Gaussian with mean xd,t − oj, where oj ∈ ℝE×1 is a vector of offsets for each electrode for reach direction j and covariance matrix Vj ∈ ℝE×E, where Vj is a diagonal matrix with the values of ve,j along it’s diagonal. We can then calculate the mean, mt|j, and covariance t matrix, Σt|j, of of the left hand side of Eq. 7 as

| (10) |

| (11) |

where the notation ·−1 indicates matrix inversion. The scalar ct|j is then the integral over the numerator of Eq. 9 and can be calculates as

| (12) |

where P(xd,t|bd = mt|j, yt,d = j) is obtained by evaluating Eq. 7 for the current observation at bd = mt|j and is obtained by evaluating the posterior over base values from the last trial at the same point, bd = mt|j.

Calculating . Finally, we compute , the posterior distribution over the base values for all electrodes. An analytical expression for this can be written as

| (13) |

where the two terms on the right hand side of Eq. 13 are obtained from Eqs. 8 and 9. The right hand side of equation Eq. 13 is a mixture of multivariate Gaussians. This will be problematic as in general there will be an exponentially increasing number of components in the representation of as t increases. Therefore, we approximate the right hand side of Eq. 13 with mean mt and covariance matrix Σt using moment matching as has been done in previous hypothesis merging algorithms (Blom and Bar-Shalom, 1988). We use the standard formulas for moment matching with two multivariate Gaussians to write

| (14) |

| (15) |

where is calculated from Eq. 8. This completes the decoding procedure for trial t. For each new trial, this set of calculations is repeated in an iterative manner.

2.4.2. Learning the Parameters for the Self-Recalibrating Classifier

While the self-recalibrating classifier is designed to produce

stable day-to-day classification without daily retraining, it must be

initially trained once. To perform this initial training there must exist a

small number of days for which neural activity,

xd,t, has been

recorded along with associated reach directions,

yd,t. We will use the notation

to refer to this set of

days. To avoid any possible confusion, we stress that after the classifier

has been trained on this initial set of days, it requires no further

supervised retraining and will be equipped to autonomously calibrate itself

on new data that it encounters during normal decoding use.

to refer to this set of

days. To avoid any possible confusion, we stress that after the classifier

has been trained on this initial set of days, it requires no further

supervised retraining and will be equipped to autonomously calibrate itself

on new data that it encounters during normal decoding use.

The purpose of the initial training is to learn the mean, me, and variance, se, characterizing the distribution of base values for each electrode from Eq. 3 and the offset, oe,j, and variance, ve,j, characterizing the observation model in Eq. 6 for each electrode-class pair. Fitting the self-recalibrating classifier is more difficult than fitting the standard classifier because the daily base values for each electrode, bd,e, cannot be directly observed. Instead, these values are randomly drawn each day from the distribution specified by Eq. 3, and after the base values are drawn on each day, they specify the mean of the Gaussian distributions in Eq. 6 from which neural spike counts are drawn. Thus, the neural data we directly observe is essentially one step removed from the distribution that is characterized by the parameters we desire to estimate. Fig. 3 illustrates the conceptual difference between the standard and self-recalibrating classifier models.

Figure 3.

Graphical models for the generation of a single spike count on electrode e for trial t on day d for the standard (A) and self-recalibrating (B) classifiers. Circles denote random variables and dots indicate model parameters. In the standard classifier, the electrode-class means, μe,j, and variances, ve,j, for all reach directions directly characterize the distribution over observed spike counts, xd,e,t. For the self-recalibrating classifier, the base value for electrode e, bd,e, is randomly generated each day from a Gaussian distribution with mean me and variance se. For a given base value, spike counts are produced according to a distribution with electrode-class means μe,j = bd,e + oe,j and variances ve,j . In this figure, the notation and indicates the set of variances and means, respectively, for all reach directions for electrode e.

The expectation-maximization (EM) algorithm (Dempster et al., 1977) is a general procedure for finding the maximum likelihood model parameters in the presence of unobserved variables, in this case bd,e. The EM algorithm is an iterative procedure which is initialized with an initial guess for the model parameters. In this case, these are the me, se, oe,j and ve,j parameters. Each iteration of the algorithm produces new estimates for these parameters through a two step procedure. In the first step (referred to as the E-step) of each iteration, the latest parameter estimates and observed data are used to form a posterior distribution over the unobserved variables conditioned on the observed data. For the self-recalibrating classifier, the unobserved variables are the daily base values for each electrode, bd,e, and the observed data are the neural observations, xd,t, and reach directions, yd,t, for all trials on all days in the training set. In the second step of each iteration (referred to as the M-step), parameter values are updated so that the expectation of the log likelihood of the observed and unobserved data, computed using the posterior distribution found in the first step of each iteration, is maximized. This algorithm is known to converge to a local optimum. See Supplementary Methods for specific details of the EM algorithm used in this work.

2.4.3. Robust Classification Through Outlier Detection

The classification procedure described above can be modified to improve robustness on real world data. Key to the probabilistic model underlying the self-recalibrating classifier is the specification that firing rates can change between but not within days. While minor changes in firing rate throughout a day are tolerable, large changes, which may be observed in real data, can be problematic.

In particular, the self-recalibrating classifier represents knowledge of the base values for a day obtained from classifying trials 1 through t with the distribution . This distribution is refined for each trial that is classified, and over time the uncertainty for the base values for a day, represented in the covariance matrix Σt, is reduced. This presents no problem in normal operation. However, if the firing rate of an electrode suddenly changes after the uncertainty in base values for a day is reduced, spike counts recorded on that electrode will strongly and erroneously affect classification.

The classification procedure can be modified by adding an additional step to be performed before any of the steps already described when classifying reach direction for trial t. In this step, electrodes with large firing rate changes are flagged, and the uncertainty in the estimate for the base value for those electrodes is increased to a predetermined value. This prevents those electrodes from dominating when determining reach direction probability and maintains classification accuracy. As classification continues, uncertainty around the base value estimates for the flagged electrodes is reduced starting from the predetermined value as the self-recalibrating classifier refines the distribution .

To flag erratically behaving electrodes, a distribution which predicts the probability of the spike count on trial t for electrode e given all previous neural data, , is formed for each electrode as shown in Supplementary Methods. This can be used as a gauge for determining if the observed spike count, xd,e,t, for a trial and an electrode is anomalous by setting upper and lower thresholds on the value of xd,e,t so that a normally behaving electrode will only exceed these values approximately 1% of the time. Whenever an electrode does exceed these values, it is flagged as behaving erratically. As with any anomaly detection procedure, thresholds have to be set to balance true and false positive rates, and a false positive rate of 1% should not significantly affect classification performance.

After one or more electrodes are flagged as behaving erratically for trial t, uncertainty around the estimates for their associated base values in the distribution are increased. For ease of notation, we will momentarily switch from representing the set of base values in vector notation to set notation, so that each entry in the vector bd will be represented by a corresponding member in the set . We will refer to the set of flagged electrodes for trial t as Et, flagged and the set of non-flagged electrodes as Et,non-flagged. The uncertainty for the base values of erratically behaving electrodes represented in the distribution for is reset in a two step procedure. In the first step, the distribution over only non-flagged electrodes, , is formed. Formally, this is accomplished by forming the marginal distribution over the electrodes in Et,non-flagged from and is easily accomplished since is a Gaussian distribution. In the second step, a new distribution over the base values for all electrodes, which we refer to as , is formed as

| (16) |

where P′(bd,e) are Gaussian distributions. The mean of the distribution P′(bd,e) is set to, me,t−1, which is the element for electrode e of the mean vector for the distribution . This ensures that the mean of the final distribution, , is the same as that of original distribution . The variance of the distribution P′(bd,e) is set to se, the variance of the distribution that base values are modeled as being drawn from at the start of each decoding day. Computationally, these two steps can be carried out through a series of simple manipulations of the covariance matrix for and increase the uncertainty around base values for suspect electrodes in . After the distribution for is formed, classification, as described by the algorithm above, is performed where is replaced with as a starting point when decoding trial t.

2.5. A Simplified Self-Recalibrating Classifier

The SR classifier addresses the problem of base values, bd, which can drift from day to day by starting with a prior distribution, P(bd), over these values and then forming refined distributions, , over base values based on neural activity seen throughout the decoding day. When predicting reach direction for a trial, the SR classifier then considers the probability of reach directions under all possible values for bd and weights its estimate according to how likely each value of bd is, given by .

Thus, the SR classifier never assumes any single value

of bd is correct, but

decodes by considering an infinite number

bd values,

each weighted by some probability. This represent a fully probabilistic means of

decoding. However, it is not the only possible means of accounting for drifting

tuning parameters. In particular, we can forego modeling uncertainty in

bd and instead

decode with a single point estimate for

bd formed for

each trial. For the simplified classifier,

bd is defined

so that bd,e is the average number of spike counts

on electrode e for any trial on day d. We can

express this in mathematical notation as bd,e

=  [xd,e,t].

[xd,e,t].

An estimate for bd,e on trial t, which we refer to as , can be formed by taking the empirical average of all of the observed spike counts for electrode e on day d up to and including trial t. This estimate can be recursively formed as

| (17) |

| (18) |

We must select initial values for nd,0 and bd,e,0, where the hat has been dropped on bd,e,0 to indicate this is a parameter of the model specifying a value with which to initialize Eq. 18. If we select nd,0 = 0, will be the true empirical average for trials 1 through t. However, this has one disadvantage. Because of the very fact that Eq. 18 forms estimates as the empirical average of spike counts, at the start of the day there will be no previous trials with which to average spike counts for initial trials with and hence values for will be strongly influenced by the spike counts of each trial. This will result in large fluctuations in for the early trials of a decoding session. An alternative is to select bd,e,0 so that it represents the expected average spike count on electrode e for any given day and assign this some initial weight by setting nd,0 > 0. Intuitively, we can think of starting each day with an initial estimate of the average spike count for the electrode that was obtained as the empirical average of nd,0 “virtual samples.” This initial estimate is analogous to the prior distribution for the fully probabilistic classifier specified in Eq. 3. Importantly, as shown in Supplementary Methods, as long as nd,0 and bd,e,0 are chosen to be finite, approaches the true mean firing spike count for electrode e as the number of trials observed during a day grows. While this is reassuring, there are still values of these parameters that will result in optimal decoding, and below we discuss how values for nd,0 and bd,e,0 can be learned from the training data.

After an estimate for is formed for each trial, an estimate for the class means, μd,e,j, can be obtained with Eq. 5, and a classification of reach direction can be made using the standard classifier described in section 2.3 with the updated class means. The SRS classifier can be conceptualized as consisting of two algorithms acting together. The first algorithm tracks base values, and hence tuning parameters, using an accumulated average of spike counts. This simple first algorithm is independent of and provides input in the form of updated tuning parameters to the second algorithm, which serves as the decoding core and is nothing more than the standard classifier. Fig. 4 presents a schematic depiction of how the SRS classifier decodes a single trial and depicts the separation of the self-recalibrating and classification portions of the SRS decoding algorithm. See Supplementary Methods for a more formal description of the relationship between the SR and SRS classifiers. We note this method of tracking baseline firing rates is similar to the work of Li et al. (Li et al., 2011), who in their work on adaptive decoders for continuous control signals experimented with tracking changes in baseline firing rates by measuring the empirical average of spike counts. However, since their algorithm runs in a batch mode and does not update parameters on a single trial basis as our does, they do not introduce the concept of selecting initial values and weights to begin the empirical average for a day with.

Figure 4.

An illustration of how the SRS classifier predicts reach direction for a single trial. Starting from the top, spike counts, xd,t, for trial t are recorded and the SRS decoder updates its estimate for the day’s base values, b̂d,e,t, using a simple accumulated average. Updated estimates for tuning parameters, μ̂d,e,j, are then calculated from the updated estimates of the base values. Finally, the standard classifier is used with the updated tuning parameters to predict reach direction.

2.5.1. Learning Model Parameters for the Simplified Self-Recalibrating Classifier

As with the SR classifier, the SRS classifier must also be trained once on an initial set of training days. The goal of this training is to learn the electrode-class variances, ve,j, and the electrode-class offsets, oe,j, for all electrodes and reach directions. In addition, values for bd,e,0, must be learned for each electrode, and the initial weight, n0, which will be assigned to these means must be determined.

Given a collection of days,  , we estimate values for

bd,e,0,

oe,j and

ve,j as follows. First, for each day in

, we estimate values for

bd,e,0,

oe,j and

ve,j as follows. First, for each day in

we estimate the average

spike counts on each electrode over all reach directions,

μ̂d,e, and the average

spike counts on each electrode when the subject made reaches in direction

j, μ̂d,e,j.

We calculate

we estimate the average

spike counts on each electrode over all reach directions,

μ̂d,e, and the average

spike counts on each electrode when the subject made reaches in direction

j, μ̂d,e,j.

We calculate

| (19) |

and

| (20) |

where Td is the number of trials on day d, and Td,j is the total number of trials with reaches in direction j on day d. Having calculated these intermediate values, an estimate of bd,e,0 is then calculated as the average of the daily average spike counts as

| (21) |

where |  | is the number of days in

| is the number of days in  . Estimates for the class-electrode

offsets, oe,j, are calculated as the average of

the difference between daily class-electrode mean spike counts and the daily

average spike counts. We write this as

. Estimates for the class-electrode

offsets, oe,j, are calculated as the average of

the difference between daily class-electrode mean spike counts and the daily

average spike counts. We write this as

| (22) |

Finally, the electrode-class variances, ve,j, are estimated as

| (23) |

which can be understood as a generalization of the standard formula for the variance of a sample generalized to account for values of μd,e,j which may change between days.

Learning the value of n0 The equations above are used to estimate values for the bd,e,0, oe,j and ve,j parameters of the model. We turn to cross-validation to learn a value for n0, which is the amount of weight or number of virtual samples that should be assigned to the initial estimate of marginal spike counts for a day, bd,e,0.

In the cross-validation procedure, we seek the value of

n0 which will produce the highest

classification accuracy on novel data. To do this, multiple rounds of

training and validation are performed on the data that is present in

. In each round, one day in

. In each round, one day in

is set aside as a

validation day. On the remaining days, the

bd,e,0,

oe,j and ve,j

parameters of the model are learned. Importantly, these values are learned

on all days in

is set aside as a

validation day. On the remaining days, the

bd,e,0,

oe,j and ve,j

parameters of the model are learned. Importantly, these values are learned

on all days in  except the

validation day. This is critical because cross-validation uses the

validation day to simulate classification on novel data, and it is therefore

important that none of the trials in the validation day are used for

learning any part of the model for a round of cross-validation. After

learning these parameters, a value of n0 is

selected and the classifier is used to predict reach direction for each

trial in the validation day. This is repeated for a range of

n0 values. For a single round of

cross-validation, one or more values of n0 will

produce the highest classification accuracy on the validation day. To select

the final value of n0 for use in the trained

classifier, a round of cross validation is performed for each day in

except the

validation day. This is critical because cross-validation uses the

validation day to simulate classification on novel data, and it is therefore

important that none of the trials in the validation day are used for

learning any part of the model for a round of cross-validation. After

learning these parameters, a value of n0 is

selected and the classifier is used to predict reach direction for each

trial in the validation day. This is repeated for a range of

n0 values. For a single round of

cross-validation, one or more values of n0 will

produce the highest classification accuracy on the validation day. To select

the final value of n0 for use in the trained

classifier, a round of cross validation is performed for each day in

, and the value of

n0 which produces the highest average

accuracy across all rounds is chosen.

, and the value of

n0 which produces the highest average

accuracy across all rounds is chosen.

3. RESULTS

3.1. Tracking Tuning Parameter Drift Across Time

Figs. 5 and 6 show the results of the analysis of drifting tuning parameters for monkeys L and I, respectively. Throughout this work threshold crossings were collected in a single window per trial. In this analysis and in all of the work that follows we count spikes falling in a single 250 millisecond long window for each trial starting 150 milliseconds after the go cue. As described in section 2.2, spike counts from each electrode were then convolved with a Gaussian kernel to produce smoothed estimates of tuning parameters across trials. The results presented here were obtained with a kernel width of 100 trials. Results obtained with kernel widths of 25, 50 and 200 trials were qualitatively similar (see Supplementary Results).

Figure 5.

Statistical structure of changes in tuning parameters across days for monkey L. Panel A: Example traces of μ̂d,e,j,t for two representative tuning parameters on the same electrode across the first 25 days of data. Background shading represents day boundaries. Panel B: Distribution of the between (se,j for all e and j) and within-day (sd,e,j for all d, e, and j) standard deviations of tuning parameters. A small number (.08%) of within-day standard deviations are outside the range of this plot. Panel C: Daily means (black dots) and associated ellipses which indicate regions in which tuning parameters for each day fall with approximately 95% probability for the same μ̂d,e,j,t parameters shown in panel A on each of the 41 days of recorded data. Panel D: Distribution of correlation coefficients computed across and within electrode pairs. To relate panel C to D, the correlation of the cloud of 41 black points in (C) is one of the pairs of correlations that was used to construct the “same electrode” distribution in panel D. Panel E: Average values with 95% confidence intervals of unsigned percent change of mean tuning parameter values on days 2–41 from their corresponding value on day 1. Three electrodes were withheld form this analysis due to the small value of the tuning parameter for one or more reach directions on day 1. See text for details.

Figure 6.

Summary of the statistical structure of changes in tuning parameters across days for monkey I. Panels A, B and C correspond to panels B, D and E in Fig. 5. No electrodes were withheld when calculating the average shown in panel C. For space reasons, panels showing representative parameters traces and the spread of values for a pair of tuning parameters are not shown for monkey I.

Panel A in Fig. 5 shows the traces of two tuning parameters for one electrode for monkey L. Traces for monkey I were similar but are not shown. Three important properties of tuning parameter drift are apparent in this plot. First, tuning parameters change much more between than within days. Second, tuning parameters for the same electrode tend to move up and down together across days. Finally, tuning parameters do not tend to drift progressively further from their values on day 1 over time.

Fig. 5B and Fig. 6A provide a quantitative measurement of the observation that tuning parameters appear to drift more between than within days. The drift of a tuning parameter within a single day is quantified as the standard deviation of its estimated values over all of the trials within a day, σd,e,j. This can be measured for all days, electrodes and reach directions. The distribution of all σd,e,j values is plotted as the dashed line in each figure. Similarly, drift between days is quantified as the standard deviation of mean tuning parameter values for each day, σe,j. The distribution of σe,j parameters is plotted as the solid line in both figures. The mean of the between-day standard deviation values is significantly larger than that of the within-day standard deviation values (permutation test, p < .01). This finding motivates the decision to simplify the self-recalibrating classifiers so that they ignore within-day drift but compensate for between-day drift in tuning parameters.

Fig. 5D and Fig. 6B provide evidence that tuning parameters on the same electrode drift in a correlated manner. Correlation coefficients for all pairs of daily mean tuning parameters (the black dots for the example pair of tuning parameters in Fig. 5C) on the same electrode were calculated for each electrode. We also examined correlation across electrodes by measuring the correlation of daily mean values for pairs of tuning parameters for the same reach direction across all pairs of electrodes. The distribution of these two sets of correlation values is shown in Fig. 5D and Fig. 6B. For both subjects, correlation values for tuning parameters on the same electrode concentrate near 1. This insight has important practical implications for the design of self-recalibrating classifiers which will be detailed in Discussion.

While tuning parameters drift in a correlated manner on the same electrode, it is important to point out that the distributions of correlation coefficients in Fig. 5D and Fig. 6B leave room for tuning parameters to move in a limited manner relative to one another while still moving up and down together. Indeed, this is visible in Fig. 5A where traces of the two tuning parameters move up and down together between days but are closer to one another on days 1–4 than they are for days 7–10, for example. We will show below that our decoders, which use a simplified design that models parameters on the same electrode as moving in perfect lockstep, achieve good performance, but future decoders could be developed to model additional aspects of tuning parameter drift. Interested readers may refer to Supplementary Results, where we quantify changes in modulation depth across time.

Fig. 5E and Fig. 6C measure the tendency of tuning parameters to progressively drift from their value on day 1. The percent change of the mean tuning parameter values from day 1, Δd,e,j, for days 2 and on for all tuning parameters was calculated. The average of the absolute value of the percent change in tuning parameter values across all electrodes and reach directions for each day was then plotted in these figures. When performing this analysis, for numerical reasons we removed any electrodes which had a value of less than .001 spikes/window for any reach direction. The results are shown in Fig. 5E and Fig. 6C. If tuning parameters drift in an unconstrained manner, the average of the unsigned Δd,e,j values should monotonically increase with time. However, average values do not appear to systematically increase with time, supporting the conclusion that tuning parameters can drift from day to day but do so in a constrained manner.

3.2. Evaluation of Classifier Stability with Prerecorded Data

We compare the performance of the SR, SRS, standard retrained and standard non-retrained classifiers on the two prerecorded datasets described in section 2. The large number of days in each makes these ideal datasets for evaluating classifier stability.

The standard retrained classifier is trained fresh each day using the first 400 trials of the day. After training, the classifier is used to predict reach direction on each of the remaining trials of the day. Importantly, while true reach direction is known for each trial of the datasets and is used for training, this information is not made available to the classifier when predicting reach direction (testing) and is only used after a prediction is made to evaluate classifier accuracy.

The SR, SRS and standard non-retrained classifiers must be initially trained on a small number of days where neural activity is recorded and the desired reach direction for trials is known. After this they require no further training when classifying reach direction on days when only neural activity is available. For this analysis, all trials on the first 10 days of each dataset are used for training these classifiers. After this training, the classifiers are used to classify reach direction for trials on the remaining days of each dataset. To allow a fair head-to-head comparison with the standard retrained classifier, classification on each test day for these classifiers begins at trial 401 of each day. In this way, the set of trials that each classifier predicts reach direction for is the same on any given day. The breakup of data for training and testing each of the classifiers is shown in Fig. 7B.

Figure 7.

Partitioning of data when testing classifiers with (A) and without (B) daily retraining as described in Methods. Each column of rectangles represents an experimental day of collected data, and each rectangle represents a trial.

When training all classifiers, any electrode with an average of less than two spike counts per trial on the training data (the first 400 trials of each day for the standard retrained classifier and all trials on days 1–10 for the standard non-retrained, SR and SRS classifiers) is not used when subsequently decoding reach direction on test data. This step prevents these electrodes from causing problems if their average spike counts were to rise at a later point in time when performing classification. Critically, this can be done in clinical practice as the criterion for exclusion is based only on training data.

3.3. Performance of the Standard Classifier without Daily Retraining

We now examine the implications of tuning parameter drift on the standard non-retrained classifier. The performance of this classifier indicates what might happen if we simply used the standard classifier without retraining and did nothing to account for drifting tuning parameters. Of course, this is an offline analysis and subjects may be able to adapt their neural signals through cognitive strategies in a closed loop setting to improve BCI performance (Chase et al., 2009), but the results presented here may still be considered as a type of lower bound on performance without retraining.

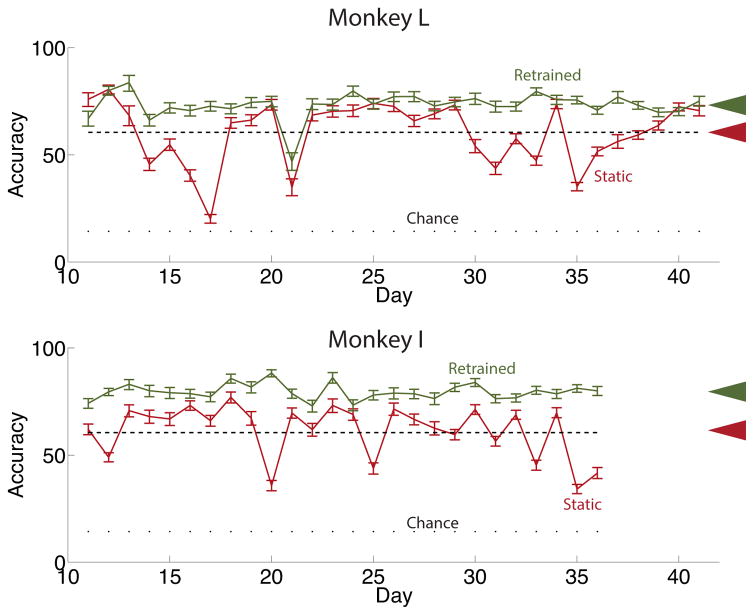

Examination of Fig. 8, which displays the average daily classification accuracy of the standard non-retrained classifier for both subjects, reveals two important findings. First, performance of the standard non-retrained classifier is substantially lower than that of the daily retrained classifier on most days. This motivates the development of classifiers which account for drifting tuning parameters. However, classification accuracy of the standard non-retrained classifier does not significantly decline with time, which constitutes the second finding. To quantify this claim, the slope of a linear fit to daily classification accuracy is not significantly different than zero for both subjects. Specifically, the 95% confidence interval for the slope, measured in units of percent accuracy per day, is (−.006, .006) for monkey L and (−.012, .001) for monkey I, which contains zero for both monkeys. While this finding might at first seem suprising, it is consistent with the finding above that tuning parameters do not show a systematic tendency to progressively wander from their values on day 1 but seem to vary within a relatively limited range.

Figure 8.

Daily accuracy values for the standard non-retrained and retrained classifiers for both subjects with 95% confidence intervals. The retrained classifier was retrained each day while the non-retrained classifier was trained on days preceding those decoded here and then held fixed. See Fig. 7 for a depiction of the partitioning of data when training and testing each classifier. The dashed line in each panel shows a linear fit of daily accuracy of the non-retrained classifier with test day. The slope of this fit is not significantly different from zero for either subject. Arrows on the right show overall accuracies of each classifier.

Performance of the Self-Recalibrating Classifiers

Figs. 9 and 10 show the daily classification accuracies of the SR and SRS classifiers for each subject. We emphasize that after these classifiers were trained on days 1–10 for a dataset, they were not provided with any information about the true reach direction of trials with which to retrain themselves when predicting reach direction on the following days. Instead, using the techniques described in section 2, the SR and SRS classifiers simultaneously predicted reach direction and the daily value of tuning parameters from neural data alone. Therefore, the classification accuracies reported here are indicative of what might be achieved in the clinic without any further daily supervised retraining or recalibration after the system is initially trained.

Figure 9.

Daily performance of the self-recalibrating (top panel) and simplified self-recalibrating (bottom panel) classifiers with 95% confidence intervals for data from monkey L. Arrows on the right show overall accuracies of each classifier. Results for the standard classifier with and without daily retraining are reproduced from Fig. 8 for reference.

Figure 10.

Daily performance of the self-recalibrating (top panel) and simplified self-recalibrating (bottom panel) classifiers with 95% confidence intervals for data from monkey I. Arrows on the right show overall accuracies of each classifier. Results for the standard classifier with and without daily retraining are reproduced from Fig. 8 for reference.

Table 1 compares the overall accuracies, calculated as the mean of the average daily accuracies, for all four classifiers considered in this work. The overall accuracies of the SR and SRS classifiers were both 14% higher than that of the standard non-retrained classifier for monkey L and 13% and 16%, respectively, higher for monkey I. These performance improvements were statistically significant for both self-recalibrating classifiers and both subjects (One Sided Wilcoxon Signed-Rank Test, p < 10−6 for both classifiers for monkey L, p < 10−5 for both classifiers for monkey I). Further, both self-recalibrating classifiers nearly recovered the accuracy achieved by the standard retrained classifier. The overall accuracy of the SR and the SRS classifiers were both 1% higher than that of the retrained classifier for monkey L and only 5% and 3% lower for monkey I. These differences were not statistically significant for either self-recalibrating classifier and monkey L (One Sided Wilcoxon Signed-Rank Test, p > 0.1 for SR classifier and p > .05 for the SRS classifier), while the small decrease in accuracies observed on the data for monkey I were (One Sided Wilcoxon Signed-Rank Test, p < 10−4 for SR classifier and p < 10−2 for the SRS classifier). To be clear, the self-recalibrating classifiers decoded the exact same trials, trials 401 and on for each test day, as the retrained classifier and achieved this performance without the benefit that the retrained classifier had of being retrained on the fully labeled data of the first 400 trials of each day.

Table 1.

Average daily accuracy with 95% confidence intervals for the standard non-retrained, retrained and both self-recalibrating classifiers for both subjects.

| Classifier | Monkey L | Monkey I |

|---|---|---|

| Non-Retrained | 60 ± 5% | 62 ± 5% |

| Retrained | 73 ± 2% | 80 ± 2% |

| Self-Recalibrating | 74 ± 2% | 75 ± 1% |

| Simplified Self-Recalibrating | 74 ± 2% | 77 ± 1% |

An important consideration when evaluating the performance of a self-recalibrating classifier is the number of trials required to learn tuning parameter values. A self-recalibrating classifier is of little use if its performance starts near chance each day and then takes hundreds of trials to reach acceptable prediction accuracy. Fig. 11 shows the average classification accuracy achieved by each of the self-recalibrating classifiers throughout the course of a decoding day for both subjects. These accuracies were calculated by dividing all of the trials decoded during a test day into sequential bins of twenty trials each and then calculating the average prediction accuracy in each of these bins over all days of data for a subject. The number of trials in each day varied, and the number of bins that accuracies could be calculated over was limited by the day with the fewest number of trials for each subject. Monkey L had at least 386 trials for classification on each test day and monkey I had at least 752. Examination of the plots in Fig. 11 reveal that both classifiers start a decoding day well above chance levels of performance. Further, the SR classifier shows little evidence of a “burn-in” period, where accuracy might be lower as tuning parameter values are being learned. It is likely analysis, a burn-in period of less than 20 trials might become apparent, but in any case the SR classifier rapidly achieves steady state performance. The SRS classifier shows evidence of slightly decreased performance in the first bin of 20 trials for both subjects, and performance appears to be at asymptotic levels by trials 21–40.

Figure 11.

Average accuracy throughout a decoding session for the SR and SRS classifiers for both subjects with 95% confidence intervals. Accuracy values are calculated as the average classification accuracy in sequential bins of 20 trials over all test days for a subject. There were 386 or more trials for classification on each test day for monkey L and 752 or more on each test day for monkey I. Panels A and C show results for monkey L, while panels B and D show results for monkey I.

Fig. 12 displays tuning parameter estimates on two electrodes produced by both self-recalibrating classifiers on the first five days of test data for monkey L. Results for monkey I were similar. For this analysis, estimates for true tuning parameters were obtained by convolved spikes with a Gaussian kernel with a standard deviation of 100 trials as described in Methods with one exception: days were processed separately and not concatenated together. The SR classifier produces a distribution over tuning parameter values on each trial (shown as the distributions over μd,e,j=1 and μd,e,j=2 in Fig. 2), and the mean of these distributions, , was used to plot the traces in Fig. 12. Even though there are seven tuning parameters per electrode, traces for only two tuning parameters per electrode are shown for visual clarity. Characteristics of the way both classifiers predict tuning parameters become apparent with inspection of the plots in Fig. 12. First, on all days estimates for tuning parameters on the same electrode move up and down together. This immediately follows from the modeling assumption that tuning parameters on a given electrode are yoked to a single base value, bd,e, in Eq. 5. Second, tuning parameter estimates vary more strongly at the beginning than at the end of a decoding session. This can be observed in the steep change in tuning parameter values at the beginning of certain days, such as day 14 for electrode 17 in Fig. 12. Intuitively, the reason for the reduced variability in tuning parameter estimates for both decoders is that confidence in estimates of base values increases as more neural activity is observed. Mathematically, this corresponds to less uncertainty in the distribution of base values, , for the SR classifier and an increasing value of nd,t for the SRS classifier.

Figure 12.

Estimates of tuning parameters on two representative electrodes (left and right columns) produced by the SR (top) and SRS (bottom) classifiers on the first five days of test data for monkey L compared to smoothed estimates obtained by convolving spikes with a Gaussian kernel. The “spikes” in the tuning parameter estimate of the SR classifier occur when the mechanism for detecting anomalous spike counts is triggered and the uncertainty around the parameter estimates for an electrode is reset. The false positive rate for detecting anomalous spikes was set to approximately 1%, and the rate of spikes seen in this plot should not be understood as the true rate of anomalous events.

The “spikes” in the parameter estimates for an electrode produced by SR classifier visible in both figures occur when that electrode is flagged as behaving erratically by the mechanism for detecting anomalous spike counts, which may occur after the threshold for an electrode is reset, as described in section 2.4.3. When this occurs, the uncertainty around the base value for that electrode is increased. This allows spike counts collected immediately after an electrode is flagged to strongly influence parameter estimates, and just as at the beginning of a day, multiple trials have to pass before the uncertainty for the base value of a flagged electrode is reduced and parameter estimates vary less. The reader may notice that the plotted electrodes are flagged as behaving erratically multiple times within the same day. As explained in section 2.4.3, the false positive rate of the flagging mechanism was set to 1%; therefore, the frequency of parameter resets seen in Fig. 12 should not be understood to indicate the true frequency of anomalous events. While we do not show results for this, in practice the SR classifier appears to be reasonably robust without this mechanism to detect and compensate for electrodes with anomalous spike counts. In fact, classification accuracy of the SR classifier was markedly improved on only three days for monkey L and on no days for monkey I by its inclusion.

4. Discussion

The development of self-recalibrating classifiers for intracortical BCI has received little previous attention. In this work, we first characterized properties of tuning parameter drift which can be leveraged for the design of self-recalibrating classifiers. We provided evidence that between-day tuning parameter drift is substantially larger than within-day drift and that tuning parameters on the same electrode drift in a highly correlated manner. Further, tuning parameter drift appears constrained across time. This is consistent with the performance of a non-retrained classifier, which is unstable from day-to-day but does not tend to decline with time. We developed two novel, self-recalibrating classifiers which leverage the insights gained into tuning parameter drift to maintain stable performance over periods of 31 and 26 days of prerecorded data. This results in an approximate 15% improvement in overall accuracy compared to the standard classifier without daily retraining. This improvement was large enough that both self-recalibrating classifiers nearly recovered the accuracy achieved by the standard classifier when it was retrained daily.

The self-recalibrating classifiers presented in this work train themselves daily without knowledge of true reach directions. This is the first report of such classifiers for intracortical BCI to the authors’ knowledge. While these results must be verified in a closed-loop setting, they may represent a significant step towards the clinical viability of intracortical BCI systems. Indeed, future systems patterned off of the ideas in this paper would be ready for use almost immediately after being powered on each day as threshold levels can be set via an automated method and the classifiers update their parameters autonomously in the background during normal BCI use.

The classifiers presented here require foreknowledge of the window in time during which a user is making a decision. Previous work has demonstrated the utility of BCI systems subject to this constraint (Vaughan et al., 2006). Further, self-paced BCI systems can be developed by combining the self-recalibrating classifiers presented here with previously published algorithms to determine at each point in time whether the user is intending to make a decision or not (Achtman et al., 2007; Kemere et al., 2008).

The two self-recalibrating classifiers performed very similarly. Is one to be preferred over the other for clinical application? The SRS classifier adds little computational cost to the standard BCI classifier. This has important implications for resource constrained systems, such as implantable medical devices. Computational hardware which supports the standard classifier will also likely support an upgrade to the simplified self-recalibrating classifier. However, the SR classifier may still be preferred in two scenarios. First, as shown in Fig. 11, the SR classifier requires fewer trials to reach stable levels of performance at the beginning of a decoding day. Second, the SRS classifier implicitly assumes that a user makes reaches in all directions with equal frequency. If this assumption is broken, which may occur if a user reaches predominantly in one direction, tuning parameter estimation will suffer. The SR classifier bypasses this shortcoming by calculating the probability of reaching in each direction while estimating tuning parameters for each trial. In addition to these considerations, the SR classifier makes modeling assumptions explicit. Changes to these assumptions can be carried out in a straight forward manner with the SR classifier, and it not obvious how this might be done with the SRS classifier.

Tuning parameter estimates produced by both self-recalibrating classifiers could differ significantly from those estimated as ground truth. However, this did not prevent either classifier from nearly reproducing the decoding accuracy of the daily retrained standard classifier. While this may seem surprising, it is likely classification accuracy is robust to small deviations in estimated tuning parameters as long as their relative ordering is maintained. Our models ensure this by referencing all tuning parameters on an electrode to a single base value. Preliminary analyses with more complicated models which did not force tuning parameters on the same electrode to move in perfect lockstep and also modeled drift in tuning parameters within days did not reveal markedly improved decoding performance (data not shown). Therefore, as the ultimate goal of this work is stable classification and not tuning parameter estimation, we believe the simpler models are to be preferred.

Our decoders also do not carry information about tuning parameters from one decoding day to the next. However, this does not significantly hinder decoding performance. This may seem surprising at first as Fig. 5A indicates that on some pairs of days the previous day’s tuning parameter values may be predictive of the next day’s values. However, we note that this is not the case for all days. In Fig. 5A, for example, there is a large jump in the value of parameters between days 4 and 5. Thus, on same days using information about the decoding parameters from the previous day would likely help the decoder learn the correct tuning parameters for the present day more quickly, and on some others it could hinder learning. Additionally, as shown in Figs. 11B and 11D, both decoders learn tuning parameter values quickly enough to allow average decoding performance to reach asymptotic levels in tens of trials. Thus, the improvement in decoding accuracy that could be realized by learning tuning parameters more quickly is fairly minimal. Finally, formally modeling a time series of base values between days would make our EM algorithm for the SR classifier more complicated as the distribution of base values conditioned on observed data would no longer factor as a product of the distribution of base values on individual days (see Eq. S1 of the Supplementary Methods), and additional parameters for a model of transitions in base values between days would need to be learned. Though these reasons motivate the simplification to not carry tuning parameter information between days, we acknowledge this may be interesting future work.

An important aim of this work was to identify properties of the drift in intracortical BCI signals that could be exploited for the development of simplified classifiers. The finding that tuning parameters on the same electrode drift in a highly correlated manner reduces the dimensionality of the space that tuning parameters must be tracked in as algorithms can track a single base value instead all individual parameters on an electrode. High correlations in the drift of tuning parameters on the same electrode may be consistent with changes in baseline firing rates. Changes in baseline could be driven by changes in recorded action potential amplitude (Chestek et al., 2011), micromovements of the recording array relative to cortical tissue (Santhanam et al., 2007) and daily fluctuations in the value of the threshold that was used for spike detection. Whatever the mechanisms which underlie this behavior, robust classification was achieved by the relatively simple SRS classifier. The success of this simple approach is tantalizing because it suggests that any BCI algorithm which can be parameterized by variables which are referenced to the average spike counts of electrodes could be made self-recalibrating by following a recipe similar to that presented in section 2.5 for the SRS classifier. While we caution below results that pertain to adaptive classifiers should not be trivially generalized to that of regressors, it could be productive to apply this same modification to standard algorithms for regression, such as the Kalman filter (Wu et al., 2006; Wu and Hatsopoulos, 2008) or population vector algorithm (Georgopoulos et al., 1986, 1988) to render them self-recalibrating.

Another important finding was that tuning parameters drift much more between than within days. This suggests that self-recalibrating classifiers can be simplified to capture only between-day changes, as was done in this work. Recently Perge et al. investigated intracortical BCI signal stability within the context of decoding continuous control signals (i.e., regression) in closed loop human experiments (Perge et al., 2013). While they do not explicitly compare within-day changes to between-day changes in BCI signals, they do report reduced decoding accuracy due to within-day changes in neural spike rates. The differing conclusions may be explained by the relative sensitivity of regressors and classifiers to changes in BCI signals. Even small signal changes in BCI signals will likely be reflected in the decoded output of a regressor, whereas the performance of a classifier will only be affected if the relative ordering of the probabilities assigned to each class (reach direction in this work) are altered. This should lend caution when applying our findings on tuning parameter drift to the continuous decoding scenario. While tuning parameters do move up and down together between days on the same electrode, other aspects of tuning, such as modulation depth, as shown in Supplementary Results, can also change. For the purposes of developing self-recalibrating classifiers for our datasets, we were able to simplify our classifiers and ignore these other aspects of drift. However, it may be that these simplifications would be more detrimental in the context of regression. This is not to degrade the importance of the present study. As discussed in the introduction, BCI classifiers offer unique and important clinical promise, and insights that allow for the unique simplification of BCI classifiers may facilitate their use in non-laboratory settings.

Recently Flint et al. examined BCI performance of a non-retrained continuous decoder of arm movements in a closed loop setting (Flint et al., 2013) when either local field potentials or threshold crossings were used for control signals. We focus on their results for threshold crossings. Two findings of their work are of direct relevance to the present study. First, in an offline analysis of hand-control data, they report highly variable day-to-day performance but little change in average performance with time as decoders age after the initial day they were trained on. This is consistent with our similar finding for a discrete decoder. Second, when they measure correlation coefficients between a variety of measures of closed loop decoder performance and time, they show non-degrading performance over time for one subject and a mild degradation of performance for a second. Beyond these overall trends, day-to-day variability in performance is still apparent. While this may be partly due to variability in subject motivation, it is also quite possible that the use of self-recalibrating classifiers could have provided reduced day-to-day variability in performance in this closed loop setting.

There are at least two possible reasons tuning parameter drift is constrained across time, as we observed in this work. First, it may be the case that tuning parameters are fixed across days but our estimates varied from day to day due to fluctuations in threshold settings based on a finite sample (two seconds) of data. However, this likely does not entirely explain the changes in tuning parameters that we observe because the relationship between multiunit activity and arm kinematics can change even when threshold settings are held fixed across days (Flint et al., 2013). A second tantalizing possibility is that micromovements of the recording array might underlie such tuning parameter changes so that electrodes are in a random position within a relatively stable neural environment. If this is the case, the development of recording arrays that maintain a more fixed position relative to brain tissue may hold promise for increasing the fundamental stability of signals for intracortical BCI use.

Supplementary Material

Acknowledgments