Abstract

Objective

This study aims to investigate the effect of the interaction between response rate and class size on students’ evaluation of instructors and of the courses offered at health science colleges in Saudi Arabia.

Methodology

A retrospective study design was adopted to examine the Course Evaluation Surveys (CES) conducted at the health science colleges of the University of Dammam [UOD] in the academic year 2013–2014. The CES data, downloaded from an exclusive online application, ‘UDQUEST’, cover 337 different courses and 15,264 surveys. Two-way analysis of variance was used to test whether there is a significant interaction between class size and response rate on the students’ evaluation of courses and instructors.

Results

The study showed that a high response rate is required for students’ evaluation of instructors at health science colleges when the class size is small, whereas a medium response rate is required for students’ evaluation of courses. On the other hand, when the class size is medium, a medium or high response rate is needed for students’ evaluation of both instructors and courses.

Conclusions

The results of this study suggest that administrators of the health science colleges should take care when interpreting students’ evaluations of courses and instructors. The study also suggests that the interaction between response rate and class size is an important factor that needs to be taken into consideration when interpreting the findings of the students’ evaluation of instructors and courses.

Keywords: Health Colleges, Student evaluation of teaching, Response Rate, Class size

Introduction

Higher Education Institutions (HEIs) in the Kingdom of Saudi Arabia (KSA) are becoming increasingly aware of the importance of quality in the delivery of the teaching and learning process, due to the increasing number of students entering the educational system. Medical training all over the world is becoming more student-centered, with an emphasis on active learning rather than on passive attainment of knowledge.(1)

Teaching in higher education institutions involves interaction with students; therefore, considerable emphasis has been placed on student evaluations, ratings, feedback, and performance.(2) Since students are the individuals most exposed to and most affected by the teacher’s teaching, their input on the evaluation of the teaching process is paramount. Research also indicates that students are the most qualified sources to report on the extent to which the learning experience was productive, informative, satisfying or worthwhile. (3) Accordingly, academic institutions also rely on students’ ratings of different components of their core functions, such as courses, teaching skills and academic programs. (4) Moreover, students’ evaluation of teaching and learning effectiveness in HEIs has gained tremendous attention in the fields of psychology and quality control and assurance in the last few decades, (5) and it is used in almost every university in the world. (6) Thus, the assessment of educational quality under an academic program, through students’ satisfaction, is one of the important aspects of quality management in higher education. (7)

Brockx, Spooren and Mortelmans (2011) pointed out several reasons why higher education institutions use these evaluations and assessments, viz. (i) quick feedback, assuming that instructors make changes based on students’ evaluation; (ii) the use of students’ evaluation for critical decisions such as promotion and tenure; and (iii) the requirement of such evaluations by accreditation and governmental agencies.(8) Other benefits of students’ ratings of teaching quality are that instructors value the students’ input and make improvements in their teaching; instructors are rewarded for having excellent ratings; instructors with very low ratings are encouraged to seek help; students perceive and use ratings as a way to suggest improvements in teaching; students have more information on which to base their course selections; ratings motivate instructors to improve teaching; and students see ratings as a vehicle for change.(9, 10, 11)

The University of Dammam [UOD] currently carries out several student evaluations as required for academic accreditation by the National Commission for Academic Accreditation and Assessment [NCAAA].(12) Among these, the first deals with courses, viz. the Course Evaluation Survey (CES), and the second deals with programs, viz. the Students Experience Survey (SES) and the Program Evaluation Survey (PES). The educational literature has also emphasized student ratings of teaching effectiveness because such evaluations have contributed to improving the quality of the educational process, especially if proper reliability coefficients are used to assess the psychometric properties of the instruments. (13, 14) The most important part of these evaluations is the communication of rating results in a way that allows for fair and meaningful interpretations and comparisons by a wide range of stakeholders. (9, 15)

Several studies have been conducted to explore the factors that affect students’ evaluation of instructors and courses in higher education. However, very few studies have addressed the impact of class size on students’ evaluation of teaching. (16, 28) Also, the interaction between class size and the response rate of students in these surveys has received little attention in the literature. Moreover, from a strategic point of view, it is essential for the administrators of HEIs to understand how these factors influence the outcome of evaluations.

Thus, the present study is conducted with an objective to ascertain the interaction between response rate and class size (total number of students on the course) and its effect on students’ evaluation of instructors and the courses offered at selected health science colleges in UOD.

Methods

Study Design

A retrospective study design was adopted to examine the CES surveys conducted at all the health science colleges [N=5] of UOD in the academic year 2013–2014.

The Questionnaire tool

The Course Evaluation Survey (CES) tool was used to collect the data. It contains 14 five-point Likert scale items divided into two sub-scales (instructor-related and course-related items). The CES tool has been approved by UOD and adopted by NCAAA for accreditation purposes. It was developed by a panel of experts in higher education quality management, and several studies have investigated its psychometric properties and usefulness.(30, 31, 32) In addition, the Corrected Item-to-Total Correlation and Cronbach’s Alpha were calculated using random samples (n=50) selected from the current data. Cronbach’s Alpha was 0.963 and the Corrected Item-to-Total Correlation ranged from 0.568 to 0.888, which adds evidence for the reliability and validity of the CES.
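The two reliability indices named above can be illustrated with a minimal sketch. It is not the SPSS procedure used in the study: the response matrix `demo` is hypothetical (6 respondents, 4 items rather than the 14 CES items), and the function names are illustrative. Cronbach’s Alpha is computed from the item variances and the variance of the summed scale, and the corrected item-to-total correlation correlates each item with the sum of the remaining items.

```python
from statistics import mean, variance


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def cronbach_alpha(rows):
    """rows: one list of k item scores per respondent."""
    k = len(rows[0])
    items = list(zip(*rows))                       # one tuple of scores per item
    item_var_sum = sum(variance(col) for col in items)
    total_var = variance([sum(r) for r in rows])   # variance of the summed scale
    return k / (k - 1) * (1 - item_var_sum / total_var)


def corrected_item_total(rows):
    """Correlate each item with the total of the *other* items."""
    items = list(zip(*rows))
    totals = [sum(r) for r in rows]
    return [
        pearson(col, [t - v for t, v in zip(totals, col)])
        for col in items
    ]


# Hypothetical five-point Likert responses (not study data).
demo = [
    [5, 4, 5, 4],
    [4, 4, 4, 3],
    [3, 3, 2, 3],
    [2, 2, 3, 2],
    [4, 5, 4, 4],
    [1, 2, 1, 2],
]
alpha = cronbach_alpha(demo)
item_total = corrected_item_total(demo)
print(f"alpha = {alpha:.3f}")
print("corrected item-total:", [round(r, 3) for r in item_total])
```

Removing the item from the total before correlating is what makes the coefficient "corrected": it avoids inflating the correlation by counting the item on both sides.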

Selection of Data

The data used in this study are part of the data collected by UOD during the academic year 2013–2014 as a routine procedure for academic accreditation and monitoring purposes, and are submitted to NCAAA. UOD, through its Deanship for Quality and Academic Accreditation, has developed a special online application called “UDQUEST”, which is used to collect data electronically on many different issues at the University. Among the surveys included in UDQUEST, the CES, which is used to evaluate instructors and courses, was targeted in this study. The CES data downloaded from UDQUEST for all the health science programs offered at UOD were used for analysis, viz. Medicine, Nursing, Dentistry, Applied Medical Science and Clinical Pharmacy. These five health science colleges offer 337 different courses, all of which were considered. Accordingly, 15,264 surveys were analyzed in this study.

Categorization of the Independent variables

This study has two independent variables, response rate and class size, each categorized into three levels: (1) low [class size less than 60 and response rate less than 73%]; (2) medium [class size 61–200 and response rate 74–91%]; and (3) high [class size over 200 and response rate over 92%] (Nulty, 2008).(29) The large class size level is not applicable to the current data. The above classification was designed assuming a 3% sampling error and a 95% confidence level.
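The categorization above can be sketched as two small functions. This is an illustration only, with several assumptions: the function names are hypothetical, the response rate is taken to be responses divided by enrolled students, and the boundary values that the stated ranges leave open (a class of exactly 60, and rates between 73–74% and 91–92%) are assigned to the lower level.

```python
def class_size_level(n_students):
    """Map course enrolment to the class-size levels quoted in the text."""
    if n_students <= 60:      # assumption: a class of exactly 60 counts as small
        return "small"
    if n_students <= 200:
        return "medium"
    return "large"            # level not observed in the study data


def response_rate_level(rate_percent):
    """Map a response rate (in percent) to low / medium / high."""
    if rate_percent < 74:     # assumption: rates between 73 and 74 count as low
        return "low"
    if rate_percent < 92:     # assumption: rates between 91 and 92 count as medium
        return "medium"
    return "high"


def response_rate(n_responses, n_students):
    """Assumed definition: completed surveys as a percentage of enrolment."""
    return 100.0 * n_responses / n_students


# Hypothetical course: 45 enrolled students, 38 completed surveys.
rate = response_rate(38, 45)
level = (class_size_level(45), response_rate_level(rate))
print(f"{rate:.1f}% -> {level}")
```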

Statistical Analysis

To achieve the main purposes of this study, the following statistical procedures were used: (i) descriptive statistics; (ii) a full-model two-way analysis of variance, in which the dependent variables are the students’ evaluations of instructors and of courses and the independent variables are class size and response rate; and (iii) Tukey post hoc tests for pairwise and follow-up comparisons.

Results

The distribution of the samples according to the cross tabulation of response rate and class size is shown in Table 1. Since the main purpose of this study is to explore the effect of the interaction between response rate and class size on students’ evaluation of instructors and courses, descriptive statistics of the two independent variables (response rate and class size) with respect to the dependent variables, i.e. the mean scores of the two sub-scales (effectiveness of the instructors and of the courses), were computed using SPSS version 19.

Table 1.

Descriptive statistics of response rate and class size by rating of students’ evaluation of instructors at Health Science colleges

| Response Rate | Class Size* | Mean | Std. Deviation | N |
|---|---|---|---|---|
| Low Response Rate | Small Class Size | 3.72 | 1.00 | 1036 |
| | Medium Class Size | 3.59 | 1.00 | 3593 |
| | Total | 3.62 | 1.00 | 4629 |
| Medium Response Rate | Small Class Size | 3.86 | 0.95 | 2842 |
| | Medium Class Size | 3.61 | 0.92 | 2333 |
| | Total | 3.75 | 0.94 | 5175 |
| High Response Rate | Small Class Size | 3.96 | 0.94 | 5197 |
| | Medium Class Size | 4.21 | 0.71 | 263 |
| | Total | 3.98 | 0.93 | 5460 |
| Total | Small Class Size | 3.90 | 0.95 | 9075 |
| | Medium Class Size | 3.62 | 0.97 | 6189 |
| | Total | 3.79 | 0.97 | 15264 |

*None of the evaluated courses fall in the large class size level according to Nulty (2008).

Table 1 shows that the mean rating of students’ evaluation of instructors at health science colleges, cross-tabulated by response rate and class size, ranges from 3.59 to 4.21. The lowest mean rating (3.59) is recorded when the response rate is low and the class size is medium, while the highest (4.21) is recorded when the response rate is high and the class size is medium. Further, a two-way ANOVA was used to test the differences between these mean ratings, as shown in Table 2.

Table 2.

Two Way ANOVA for students’ evaluation of instructors by response rate and class size at Health Science colleges

| Source | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Response Rate | 143.767 | 2 | 71.884 | 79.137 | <0.001 |
| Class Size | 2.817 | 1 | 2.817 | 3.101 | 0.078 |
| Response Rate × Class Size | 52.410 | 2 | 26.205 | 28.849 | <0.001 |
| Error | 13859.573 | 15258 | 0.908 | | |
| Total | 233576.309 | 15264 | | | |

Table 2 shows that the response rate and the interaction are statistically significant (p<0.001), while the main effect of class size is not (p=0.078). In other words, ratings of instructors do not differ significantly between small and medium class sizes. This result is in agreement with the findings of several other studies which demonstrated that class size has no statistically significant effect on students’ evaluation of instructors.(16, 28)
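As a reader’s check on Table 2, the F statistics can be recomputed from the reported sums of squares and degrees of freedom, since each F is the effect mean square (SS/df) divided by the error mean square. The sketch below uses only the values printed in Table 2; the variable names are illustrative, and it does not reproduce the p-values, which would need the F distribution.

```python
# Sums of squares and degrees of freedom as reported in Table 2.
table2 = {
    "Response Rate": (143.767, 2),
    "Class Size": (2.817, 1),
    "Response Rate x Class Size": (52.410, 2),
}
ss_error, df_error = 13859.573, 15258

ms_error = ss_error / df_error          # error mean square

# F = (SS_effect / df_effect) / MS_error for each source of variation.
f_ratios = {
    source: (ss / df) / ms_error
    for source, (ss, df) in table2.items()
}

for source, f in f_ratios.items():
    print(f"{source}: F = {f:.3f}")
```

The recomputed ratios agree with the F column of Table 2 to within rounding.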

Table 3 shows the pairwise comparisons of students’ evaluation of instructors by response rate. The largest mean difference between response rate levels is 0.432, between the high and low response rates. All the mean differences are statistically significant, and a higher response rate results in higher ratings of students’ evaluation of instructors at health science colleges.

Table 3.

Pairwise Comparisons of response rate by rating of students’ evaluation of instructors

| (I) Response Rate | (J) Response Rate | Mean Difference (I–J) | Std. Error | Sig. |
|---|---|---|---|---|
| Low Response Rate | Medium Response Rate | −0.081* | 0.021 | <0.001 |
| | High Response Rate | −0.432* | 0.034 | <0.001 |
| Medium Response Rate | Low Response Rate | 0.081* | 0.021 | <0.001 |
| | High Response Rate | −0.352* | 0.033 | <0.001 |
| High Response Rate | Low Response Rate | 0.432* | 0.034 | <0.001 |
| | Medium Response Rate | 0.352* | 0.033 | <0.001 |

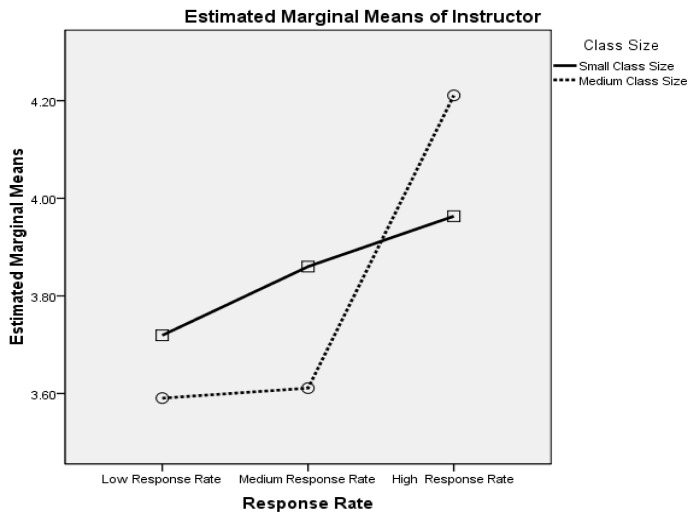

Figure 1 shows that, for both small and medium class sizes, ratings of students’ evaluation of instructors at health science colleges tend to increase as the response rate increases. When the class size is medium, the rating is stable as the response rate moves from low to medium and then increases as it moves to a high response rate. When the class size is small, the ratings are almost stable across all levels of response rate. This suggests that when the class size is small, the response rate needs to be at least at the medium level to obtain a stable students’ evaluation of instructors, and that a medium response rate is sufficient when the class size is medium.

Figure 1.

The interaction between Response Rate and Class Size on rating of students’ evaluation of instructors
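The pattern plotted in Figure 1 can be read directly from the cell means in Table 1. The following sketch, using illustrative variable names, computes (a) the gap between medium and small classes at each response-rate level and (b) the step change in rating between adjacent response-rate levels within each class size. A gap that changes sign across levels is what a crossing of the two lines in an interaction plot corresponds to.

```python
# Cell means from Table 1, keyed by (response rate level, class size level).
cell_means = {
    ("low", "small"): 3.72,    ("low", "medium"): 3.59,
    ("medium", "small"): 3.86, ("medium", "medium"): 3.61,
    ("high", "small"): 3.96,   ("high", "medium"): 4.21,
}
rr_levels = ["low", "medium", "high"]

# (a) Medium-minus-small gap at each response-rate level.
gap = {
    rr: round(cell_means[(rr, "medium")] - cell_means[(rr, "small")], 2)
    for rr in rr_levels
}

# (b) Change in mean rating between adjacent response-rate levels.
steps = {
    size: [
        round(cell_means[(hi, size)] - cell_means[(lo, size)], 2)
        for lo, hi in zip(rr_levels, rr_levels[1:])
    ]
    for size in ("small", "medium")
}

print("medium - small gap:", gap)
print("step changes:", steps)
```

With these values the gap is negative at low and medium response rates and positive at the high response rate, and almost all of the increase for medium classes occurs in the step from medium to high response rate.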

Table 4 shows that the mean ratings of students’ evaluation of health science courses, cross-tabulated by response rate and class size, range from 3.41 to 4.19. The lowest mean rating (3.41) is reported when the response rate is low and the class size is medium, while the highest (4.19) is reported when the response rate is high and the class size is medium. From these findings, the students’ evaluations of both instructors and courses have the highest ratings when the class size is medium and the response rate is high. Further, a two-way ANOVA was used to test the differences between these mean ratings (Table 5).

Table 4.

Descriptive statistics of response rate and class size by rating of students’ evaluation of Courses at Health Science colleges

| Response Rate | Class Size | Mean | Std. Deviation | N |
|---|---|---|---|---|
| Low Response Rate | Small Class Size | 3.5463 | 1.00082 | 1036 |
| | Medium Class Size | 3.4067 | 1.03567 | 3593 |
| | Total | 3.4379 | 1.02951 | 4629 |
| Medium Response Rate | Small Class Size | 3.6336 | 1.00554 | 2842 |
| | Medium Class Size | 3.4417 | 0.95719 | 2333 |
| | Total | 3.5471 | 0.98857 | 5175 |
| High Response Rate | Small Class Size | 3.6769 | 1.00050 | 5197 |
| | Medium Class Size | 4.1901 | 0.70205 | 263 |
| | Total | 3.7016 | 0.99424 | 5460 |
| Total | Small Class Size | 3.6484 | 1.00286 | 9075 |
| | Medium Class Size | 3.4532 | 1.00656 | 6189 |
| | Total | 3.5693 | 1.00890 | 15264 |

Table 5.

Two Way ANOVA for Health science students’ evaluation of courses by response rate and class size.

| Source | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Response Rate | 164.663 | 2 | 82.332 | 82.489 | <0.001 |
| Class Size | 5.479 | 1 | 5.479 | 5.489 | 0.019 |
| Response Rate × Class Size | 106.232 | 2 | 53.116 | 53.217 | <0.001 |
| Error | 15228.959 | 15258 | 0.998 | | |
| Total | 209993.440 | 15264 | | | |

From the above table, it is found that both main effects and the interaction are statistically significant: response rate and the interaction at P<0.001, and class size at P=0.019. Since statistical significance was established, the data were further subjected to pairwise comparisons (Table 6).

Table 6.

Pairwise Comparisons between response rate and the rating of students’ evaluation of Courses

| (I) Response Rate | (J) Response Rate | Mean Difference (I–J) | Std. Error | Sig. |
|---|---|---|---|---|
| Low Response Rate | Medium Response Rate | −0.061* | 0.022 | 0.007 |
| | High Response Rate | −0.457* | 0.036 | <0.001 |
| Medium Response Rate | Low Response Rate | 0.061* | 0.022 | 0.007 |
| | High Response Rate | −0.396* | 0.035 | <0.001 |
| High Response Rate | Low Response Rate | 0.457* | 0.036 | <0.001 |
| | Medium Response Rate | 0.396* | 0.035 | <0.001 |

Table 6 shows that the largest mean difference between response rate levels is 0.457, between the high and low response rates, and that all the mean differences are statistically significant.

Table 7 shows that the mean difference between small and medium class sizes is statistically significant (P=0.019).

Table 7.

Pairwise Comparisons between Class size and the rating of students’ evaluation of Courses

| (I) Class Size | (J) Class Size | Mean Difference (I–J) | Std. Error | Sig. |
|---|---|---|---|---|
| Small Class Size | Medium Class Size | −0.061* | 0.026 | 0.019 |
| Medium Class Size | Small Class Size | 0.061* | 0.026 | 0.019 |
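The mean differences in Tables 6 and 7 can be compared with the cell means of Table 4. The sketch below is a reader’s check rather than the original SPSS procedure. It assumes that the reported differences are differences between unweighted averages of the cell means (as estimated marginal means are in a full factorial model), and contrasts them with the N-weighted means shown in the "Total" rows of Table 4. All names in the code are illustrative.

```python
# (response rate, class size): (mean, N), taken from Table 4.
cells = {
    ("low", "small"): (3.5463, 1036),
    ("low", "medium"): (3.4067, 3593),
    ("medium", "small"): (3.6336, 2842),
    ("medium", "medium"): (3.4417, 2333),
    ("high", "small"): (3.6769, 5197),
    ("high", "medium"): (4.1901, 263),
}


def weighted_mean(keys):
    """N-weighted mean over cells (matches the 'Total' rows of Table 4)."""
    n = sum(cells[k][1] for k in keys)
    return sum(cells[k][0] * cells[k][1] for k in keys) / n


def unweighted_mean(keys):
    """Plain average of cell means, ignoring cell sizes (assumed basis of Tables 6-7)."""
    return sum(cells[k][0] for k in keys) / len(keys)


small = [k for k in cells if k[1] == "small"]
medium = [k for k in cells if k[1] == "medium"]
raw_diff = weighted_mean(small) - weighted_mean(medium)
emm_diff = unweighted_mean(small) - unweighted_mean(medium)

by_rr = {
    rr: unweighted_mean([k for k in cells if k[0] == rr])
    for rr in ("low", "medium", "high")
}
rr_diffs = {
    "low-medium": by_rr["low"] - by_rr["medium"],
    "low-high": by_rr["low"] - by_rr["high"],
    "medium-high": by_rr["medium"] - by_rr["high"],
}

print(f"small - medium, weighted:   {raw_diff:+.3f}")
print(f"small - medium, unweighted: {emm_diff:+.3f}")
print({k: round(v, 3) for k, v in rr_diffs.items()})
```

Under this assumption the unweighted small-minus-medium difference is negative, as in Table 7, even though the weighted "Total" rows of Table 4 show a higher mean for small classes. The sign differs because the high-rated cell (high response rate, medium class size) contains only 263 of the 6,189 surveys from medium classes, so it counts for little in the weighted mean but for one third of the unweighted one.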

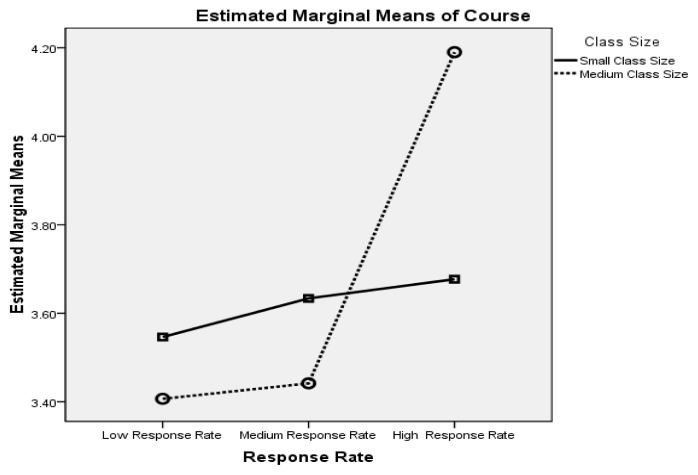

Figure 2 shows that students’ evaluation of courses is highest when the class size is medium and the response rate is high. Also, ratings of students’ evaluation of courses rise as the response rate rises and become relatively stable on moving to a high response rate. This suggests that when the class size is small, the response rate needs to be at least at the medium level to obtain a stable students’ evaluation of courses. Moreover, Figure 2 shows that when the class size is medium, ratings of students’ evaluation of courses increase as the response rate increases.

Figure 2.

The interaction between Response Rate and Class Size on rating of students’ evaluation of Courses

Discussion

This study documents the effect of the interaction between response rate and class size on students’ evaluation of courses and instructors at the health science colleges of UOD. It focused only on the health science colleges offering programs in Applied Medical Sciences, Dentistry, Clinical Pharmacy, Medicine, and Nursing. To the best of our knowledge, this is the first Saudi Arabia-based study to document the interactive effect of class size and response rate on students’ ratings of instructors and courses offered in the health science colleges’ domain.

The results of this study clearly demonstrated that there is an interaction between response rate and class size on the ratings of students’ evaluations of instructors and courses at health science colleges. When the class size is medium, the ratings of instructors and courses increase as the response rate increases. In contrast, when the class size is small, at least a medium response rate is required to obtain stable ratings of students’ evaluation of both instructors and courses. Therefore, health science college administrators are strongly advised to take care when interpreting these ratings.

The frequent use of students’ evaluation of courses is largely due to the ease of collecting the data and of presenting and interpreting the results.(33) Although primarily intended for formative purposes, such evaluations came into use for faculty personnel decisions. (34) The interpretation of these evaluation results is more complicated than it looks, and it entails a risk of inappropriate use by both teachers and administrators for both formative and summative purposes. (35) The results of this study point to an important source of misuse and misinterpretation that could arise when dealing with students’ evaluation of courses and instructors at health science colleges. More precisely, if students’ evaluations of courses and instructors are conducted using a small sample size (i.e. class size) and the response rate is low, this may lead to misuse and misinterpretation of the findings. Such findings may also not be suitable for formative and faculty decision purposes. Thus, the findings of this study indicate that a small class size combined with a low response rate produces unstable students’ evaluations of courses and instructors.

Penny (2003) stated that the ways in which administrators engage with students’ evaluation of teaching effectiveness constitute one of the greatest threats to the purposes of these evaluations. (33) Although guidelines for the collection and interpretation of such evaluation data are available, many users are not sufficiently trained to handle these data, and they may even be unaware of their own ignorance.(36) Therefore, the misuse of these evaluations might have consequences for both the improvement of teaching and the careers of the teachers. (37)

This study adds a new concern (i.e. the interaction between class size and response rate) as a source of misinterpretation of the data obtained from students’ evaluation of courses and instructors in health science colleges.

Utmost caution needs to be taken to ascertain the characteristics of the participants in these students’ evaluation surveys of courses and instructors if the class size is small and the response rate is low. In such cases, it is paramount to explore the perceptions of both those who participated and those who did not participate in the surveys in order to avoid misinterpretation of the findings.

Limitations

There are a few limitations to this study that need to be addressed. First, the results are derived from a self-report survey of students’ evaluation of courses and instructors, and independent verification of the data was not possible. Further, utmost caution needs to be taken when interpreting the results of this study, since there are other factors besides class size and response rate that might influence students’ evaluation of teaching. These factors are grouped under three categories, viz. student-centered, faculty-centered and course-centered factors. The student-centered factors include gender,(16) cultural background of the students,(17) domain-specific vocational interests (18) and psychosocial dynamics such as instructors’ attractiveness. (19) Faculty-centered factors include gender (16) and teachers’ characteristics. (18, 20, 21, 22) The course-centered factors include grades or expected grades,(8, 16, 23) course level (24) and course difficulty.(25, 26, 27)

Conclusion

This study concluded that a high response rate is required for students’ evaluation of instructors at health science colleges when the class size is small, whereas at least a medium response rate is required for students’ evaluation of courses. On the other hand, when the class size is medium, a medium or high response rate is needed for both instructors and courses.

This study will help academic developers to decide on the response rate required for different class sizes when evaluating courses and instructors at the health science colleges. Future work should study the effect of class size and response rate on students’ evaluation of programs at health science colleges. Furthermore, a similar survey of students in other educational domains such as Engineering, Management, Arts and Humanities would be highly informative, especially regarding the impact of class size and response rate on students’ evaluation of courses and instructors.

Acknowledgements

The author expresses his thanks to all the Academic Units at the University of Dammam, which provided the opportunity to complete this research work. The author also expresses his gratitude to Dr Mahmoud Faisal Al-Quraan, Director of the Performance Measurement Unit of the Deanship of Quality and Academic Accreditation (DQAA), for providing technical input in completing this research work, and his special thanks to Dr. Arun Vijay Subbarayalu, Director of the Quality Studies and Research Unit of DQAA, for his editorial help in bringing this article to its final form.

References

- 1. Jones R, Higgs R, de Angelis C, Prideaux D. Changing face of medical curricula. Lancet. 2001;357(9257):699–703. doi: 10.1016/S0140-6736(00)04134-9.

- 2. Apodaca P, Grad H. The dimensionality of students’ ratings of teaching: integration of uni and multidimensional models. Studies in Higher Education. 2005;30(6):723–748.

- 3. Archibong IA, Nja ME. Towards Improved Teaching Effectiveness in Nigerian Public Universities: Instrument Design and Validation. Higher Education Studies. 2011;1(2):78–91.

- 4. Aultman LP. An Expected Benefit of Formative Student Evaluations. College Teaching. 2006;54(3):251.

- 5. Ginns P, Prosser M, Barrie S. Students’ perceptions of teaching quality in higher education: the perspective of currently enrolled students. Studies in Higher Education. 2007;32(5):603–15.

- 6. Zabaleta F. The use and misuse of student evaluations of teaching. Teaching in Higher Education. 2007;12(1):55–76.

- 7. Al-Rubaish A. On the Contribution of Student Experience Survey Regarding Quality Management in Higher Education: An Institutional Study in Saudi Arabia. Journal of Service Science and Management. 2010;3(4):464–469.

- 8. Brockx B, Spooren P, Mortelmans D. Taking the grading leniency story to the edge. The influence of student, teacher, and course characteristics on student evaluations of teaching in higher education. Educational Assessment, Evaluation and Accountability. 2011;23(4):289–306.

- 9. Neumann R. Communicating student evaluation of teaching results: rating interpretation guides (RIGs). Assessment & Evaluation in Higher Education. 2000;25(2):121–134.

- 10. Ory JC, Ryan K. How do student ratings measure up to a new validity framework? New Directions for Institutional Research. 2001;(109):27–44.

- 11. Archibong IA, Nja ME. Towards Improved Teaching Effectiveness in Nigerian Public Universities: Instrument Design and Validation. Higher Education Studies. 2011;1(2):78–91.

- 12. National Commission for Higher Education Institutions (NACCC). Self Evaluation scales for Higher Education Institutions. Feb, 2010. Retrieved from: www.ncaaa.org.sa.

- 13. Morley D. Assessing the reliability of student evaluations of teaching: choosing the right coefficient. Assessment & Evaluation in Higher Education. 2014;39(2):127–139.

- 14. Al-Kuwaiti A. Students evaluating teaching effectiveness process in Saudi Arabian medical colleges: A comparative study of students’ and faculty members perception. Saudi Journal of Medicine and Medical Sciences. 2014;2(3):166–172.

- 15. Kuzmanovic M, Savic G, Andric Gusavac B, Makajic-Nikolic D, Panic B. A Conjoint-based approach to student evaluations of teaching performance. Expert Systems with Applications. 2013;40(10):4083–4089.

- 16. Badri MA, Abdulla M, Kamali MA, Dodeen H. Identifying potential biasing variables in student evaluation of teaching in a newly accredited business program in the UAE. International Journal of Educational Management. 2006;20(1):43–59.

- 17. Davies Martin, Hirschberg Joe, Lye Jenny, Johnston Carol, McDonald Ian. The research report form University of Adelaide and Flinders University. Journal compilation © 2007 Blackwell Publishing Ltd; Systematic Influences on teaching evaluations: The Case for Caution.

- 18. Greimel-Fuhrmann B, Geyer A. Students’ evaluation of teachers and instructional quality – analysis of relevant factors based on empirical evaluation research. Assessment & Evaluation in Higher Education. 2003;28(3):229–238.

- 19. Freng S, Webber D. Turning up the heat on online teaching evaluations: Does “hotness” matter? Teaching of Psychology. 2009;36(3):189–193.

- 20. Clayson DE, Sheffet MJ. Personality and the student evaluation of teaching. Journal of Marketing Education. 2006;28(2):149–160.

- 21. Patrick CL. Student evaluations of teaching: effects of the Big Five personality traits, grades and the validity hypothesis. Assessment & Evaluation in Higher Education. 2011;36(2):239–249.

- 22. Shevlin M, Banyard P, Davies M, Griffiths M. The validity of student evaluation of teaching in higher education: love me, love my lectures? Assessment & Evaluation in Higher Education. 2000;25(4):397–405.

- 23. Griffin TJ, Hilton J, III, Plummer K, Barret D. Correlation between grade point averages and student evaluation of teaching scores: taking a closer look. Assessment & Evaluation in Higher Education. 2014;39(3):339–348.

- 24. Alauddin M, Kifle T. Does the student evaluation of teaching instrument really measure instructors’ teaching effectiveness? An econometric analysis of students’ perceptions in economics courses. Economic Analysis and Policy. 2014;44(2):156–168.

- 25. Addison WE, Best J, Warrington JD. Students’ Perceptions of Course Difficulty and Their Ratings of the Instructor. College Student Journal. 2006;40(2):409–416.

- 26. Neumann R. Disciplinary differences and university teaching. Studies in Higher Education. 2001;26(2):135–146.

- 27. Chen GH, Watkins D. Stability and correlates of student evaluations of teaching at a Chinese university. Assessment & Evaluation in Higher Education. 2010;35(6):675–685.

- 28. Koh HC, Tan TM. Empirical investigation of the factors affecting SET results. International Journal of Educational Management. 1997;11(4):170–178.

- 29. Nulty DD. The adequacy of response rates to online and paper surveys: what can be done? Assessment & Evaluation in Higher Education. 2008;33(3):301–314.

- 30. Al-Rubaish A, Wosornu L, Dwivedi SN. Appraisal of Using Global Student Rating Items in Quality Management of Higher Education in Saudi Arabian University. iBusiness. 2012;4(1):1–9.

- 31. Al-Rubaish A, Wosornu L, Dwivedi SN. Using Deductions from Assessment Studies towards Furtherance of the Academic Program: An Empirical Appraisal of Institutional Student Course Evaluation. iBusiness. 2011;3(2):220–228.

- 32. Al-Kuwaiti A, Maruthamuthu T. Factors influencing student’s overall satisfaction in Course Evaluation Surveys: An exploratory study. International Journal of Education and Research. 2014;2(7):661–674.

- 33. Penny AR. Changing the agenda for research into students’ views about university teaching: Four shortcomings of SRT research. Teaching in Higher Education. 2003;8(3):399–411.

- 34. Galbraith CS, Merrill GB, Kline DM. Are Student Evaluations of Teaching Effectiveness Valid for Measuring Student Learning Outcomes in Business Related Classes? A Neural Network and Bayesian Analyses. Research in Higher Education. 2012;53(3):353–374.

- 35. Franklin J. Interpreting the numbers: Using a narrative to help others read student evaluations of your teaching accurately. New Directions for Teaching and Learning. 2001;(87):85–100.

- 36. Spooren P, Brockx B, Mortelmans D. On the Validity of Student Evaluation of Teaching: The State of the Art. Review of Educational Research. 2013;83(4):598–642.

- 37. Boysen GA, Kelly TJ, Raesly HN, Casner RW. The (mis) interpretation of teaching evaluations by college faculty and administrators. Assessment & Evaluation in Higher Education. 2013:1–16. (ahead-of-print)