Abstract

Here we focus on the mechanisms behind information aggregation and decision making, a basic step toward understanding emergent phenomena in society, such as trends, information spreading, or the wisdom of crowds. In many situations, agents choose between discrete options. We analyze experimental data on binary opinion choices in humans. The data consist of two separate experiments in which humans answer questions with a binary response, where one option is correct and the other is incorrect. The questions are answered without and with information on the answers of some previous participants. We find that a Bayesian approach captures the probability of choosing one of the answers. The influence of peers is uncorrelated with the difficulty of the question. The data are inconsistent with Weber’s law, which states that the probability of choosing an option depends on the proportion of previous answers choosing that option and not on the total number of those answers. Last, the present Bayesian model fits the data reasonably well compared with some previously proposed functions, although the latter sometimes perform slightly better than the Bayesian model. The asset of the present model is its simplicity and its mechanistic explanation of the behavior.

Introduction

The process of information aggregation in social systems gives rise to emergent phenomena like the wisdom of crowds [1, 2]. To understand such phenomena, we need a quantitative understanding of the mechanisms by which information is aggregated and used in opinion formation and decision making. The wisdom of crowds refers to obtaining a better estimate of the solution to a question when the opinions of multiple heterogeneous agents are aggregated; in this case, it has been shown that social interaction can lead to misleading estimations [3]. Information aggregation is a topic of intense current interest and is expected to give insights into the solution of many societal problems. For example, the 2014 meeting of the World Economic Forum was titled “Leveraging collective intelligence for unprecedented challenges”.

Models of opinion dynamics are based on assumptions about the decision making process of interacting individuals. Simple decision making rules employed in these models include proportional imitation (i.e., the rate of opinion conversion is proportional to the number of peers holding the different opinion), employed in the voter model, majority rules (i.e., the same rate is a superlinear function), thresholding rules (i.e., a thresholding function), reinforcement rules (i.e., an adaptive function depending on the experiences of agents), and homophily rules (i.e., similar individuals are more likely to interact) [4–6]. The type of decision making rule employed affects the possibility, final state, speed, and other dynamical properties of collective opinion formation. However, in the physics and even the social sciences literature, justification of these different types of models is at best based on a qualitative assessment of human behavior. Beyond opinion dynamics, social dilemmas, which in many cases are based on binary decision making, also offer an opportunity to bridge theory and experiments [7, 8].

For animals in groups, recent work in this direction has identified Bayesian inference as a mechanism behind their collective behavior [9–21]. In humans, experimental evidence of Bayesian inference has been provided in perceptual and cognitive domains [22, 23]. Effects of Bayesian types of inference on collective behavior have been investigated with the use of mathematical and individual-based models [16, 24–29]. Toward a quantitative understanding of social decision making in humans, the seminal experiment by Milgram and colleagues [30], designed to assess the probability that passersby stop in response to a group of bystanders, has recently been reproduced [31], and its results were fitted by a heuristic function. There are also other recent studies attempting to fit Bayesian (see the references above), evolutionary dynamical [32], and other [33, 34] models to behavioral data. The wisdom of crowds when interaction among participants is allowed is also a target of recent experimental studies [3, 35, 36]. However, a unifying quantitative framework to infer models of social decision making on the basis of human behavioral data is still lacking and lags far behind the accumulating modeling frameworks for social animals [11, 15, 17].

In the present study, we address the potential of the Bayesian approach to explain human decision making under social interaction. We focus on subjects answering questions with binary options, one of which is correct. This situation contrasts with that of the previous studies on the wisdom of crowds, which allowed answers taking virtually any real value [3, 35, 36]. We examine binary choices because many options in nature are discrete, as exemplified by voting, purchasing, and deciding where to live. In many such situations, extrapolation from continuous settings is not obvious. We use previously published data sets in which the participants first answer in the absence of social information and later with information about the answers submitted by the r previous respondents; r gradually increases for the same question [33, 34]. The participants answer in a sequence, a situation akin to that of previous Bayesian models of the emergence of herd behavior [24, 37]. We show that simple Bayesian models reasonably explain the behavioral data.

Materials and Methods

Model

We denote the two options of a question by A and B. Without loss of generality, we assume that A and B are the correct and wrong answers of the question q, respectively. We label the N agents 1, …, N and denote the option that agent i (i = 1, …, N) selects in question q by x i(q) ∈ {A, B}. We denote by P[x i(q) = A] the strength of the belief (hereafter, simply the belief), with which agent i believes in A. A parallel definition is applied to P[x i(q) = B]. Note that P[x i(q) = A], P[x i(q) = B] ≥ 0, and P[x i(q) = A] + P[x i(q) = B] = 1.

We update agent i’s belief as follows. We assume that the answer of the previous respondent j, i.e., x j(q), is generated according to the probability specified by the belief of agent j, i.e., P[x j(q) = A], which equals 1 − P[x j(q) = B]. Then, by using Bayes’ theorem, agent i is assumed to update the belief on the basis of the old belief and x j(q). The posterior belief of agent i is given by

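$$
P[x_i(q) = A]_{\rm post} = \frac{P[x_j(q) \mid x_i(q) = A]\, P[x_i(q) = A]_{\rm pre}}{P[x_j(q) \mid x_i(q) = A]\, P[x_i(q) = A]_{\rm pre} + P[x_j(q) \mid x_i(q) = B]\, P[x_i(q) = B]_{\rm pre}}, \tag{1}
$$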

where P[x i(q) = A]pre and P[x i(q) = B]pre are prior beliefs summing up to unity. Parameter c ≡ P[x j(q) = A∣x i(q) = A] (1/2 ≤ c < 1) represents the flexibility of agent i in response to agent j’s answer. If c is close to unity, P[x j(q) = B∣x i(q) = A] = 1 − c is small such that 1 − P[x i(q) = A]post, i.e., P[x i(q) = B]post is large once agent i observes x j(q) = B for a given P[x i(q) = A]pre. If c is close to 1/2, P[x i(q) = A]post is insensitive to x j(q). By symmetry, we assumed that P[x j(q) = B∣x i(q) = B] = c such that P[x j(q) = A∣x i(q) = B] = 1 − P[x j(q) = B∣x i(q) = B] = 1 − c.

Iterative application of Equation (1) leads to

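$$
P[x_i(q) = A] = \frac{c^{n_A} (1 - c)^{n_B}\, P_0[x_i(q) = A]}{c^{n_A} (1 - c)^{n_B}\, P_0[x_i(q) = A] + (1 - c)^{n_A} c^{n_B}\, \{1 - P_0[x_i(q) = A]\}} \tag{2}
$$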

and P[x i(q) = B] = 1 − P[x i(q) = A], where n A and n B are the accumulated numbers of A and B responses of the previous respondents observed by agent i, respectively. The initial belief of agent i in option A is denoted by P 0[x i(q) = A]. It should be noted that the order in which the previous responses are observed does not affect i’s behavior. The belief of each agent i is uniquely determined by n A − n B and the initial belief. We can rewrite Equation (2) as

$$
P[x_i(q) = A] = \frac{1}{1 + p\, s^{\,n_A - n_B}}, \tag{3}
$$

where p = {1 − P 0[x i(q) = A]}/P 0[x i(q) = A] and s = (1 − c)/c. Previous studies used Equation (3) to account for consensus decision making by fish [38, 39].
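As a numerical check of the derivation above, the following minimal Python sketch applies the single-observation update of Equation (1) repeatedly and compares the outcome with the closed form of Equation (3). The function names `bayes_update` and `closed_form`, as well as the values of c, the prior, and the answer sequence, are illustrative assumptions and are not taken from the data.

```python
def bayes_update(prior_a, observed, c):
    """One application of Equation (1): posterior belief in A after
    observing a single previous answer ('A' or 'B')."""
    like_a = c if observed == "A" else 1.0 - c   # P[x_j | x_i = A]
    like_b = 1.0 - c if observed == "A" else c   # P[x_j | x_i = B]
    num = like_a * prior_a
    return num / (num + like_b * (1.0 - prior_a))


def closed_form(prior_a, n_a, n_b, c):
    """Equation (3): P[A] = 1 / (1 + p * s**(n_A - n_B))."""
    p = (1.0 - prior_a) / prior_a
    s = (1.0 - c) / c
    return 1.0 / (1.0 + p * s ** (n_a - n_b))


# Illustrative values (assumptions, not fitted parameters).
c, prior = 0.57, 0.55
answers = ["A", "B", "A", "A", "B", "A"]

belief = prior
for ans in answers:
    belief = bayes_update(belief, ans, c)

# The same answers observed in reverse order.
belief_reversed = prior
for ans in reversed(answers):
    belief_reversed = bayes_update(belief_reversed, ans, c)

n_a, n_b = answers.count("A"), answers.count("B")
print(belief, belief_reversed, closed_form(prior, n_a, n_b, c))
```

The three printed numbers agree up to floating-point rounding, which illustrates that the belief depends on the observed answers only through n A − n B and not on their order.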

Data set

In the present study, we use the two data sets collected in Refs. [33, 34]. The first data set, which we denote by D 1, consists of two sets of face-to-face experiments [33]. Data set D 1 consists of the results obtained from two populations of subjects each of which contains N = 31 subjects (KUE-A and KUE-B in Ref. [33]). Each subject went through 100 questions. Each question allowed binary options, one being correct and the other being incorrect. Generally speaking, the subjects were asked to answer each question more than once under different information conditions. We refer to a sequence of answering sessions under a given question q (1 ≤ q ≤ 100) and information condition parameterized by r as a round. Subjects went through several rounds for each question in general.

The number of rounds that a subject experienced for each question depends on the subject. The N subjects in a population were randomly assigned labels 1, 2, …, N. In the first round, all subjects answered the question without referring to others’ responses. This is the memoryless condition (r = 0). If everybody answered within the allocated time, there were N data points for each population and question.

The second round was implemented as follows. First, subject 1 left this question without participating in the second and following rounds. Second, subject 2 observed the answer of subject 1 in the first round and possibly updated the private answer. Similarly, subject i observed subject (i − 1)’s answer in the first round and possibly updated the answer, where i runs from i = 3 to i = N in an ascending order. In the best case whereby everybody answered, N − 1 data points were collected in the second round. The collected data correspond to information condition r = 1.

The third round, corresponding to r = 2, was implemented as follows. First, subject 2 left without participating in the third and further rounds. Second, subject 3 observed the number of answers (n A, n B) submitted most recently by the previous r = 2 respondents and answered the question again. It should be noted that (n A, n B) = (2, 0), (1, 1), or (0, 2). To calculate (n A, n B), the answer of subject 1 in the first round and that of subject 2 in the second round were used. This is because subject 1 already left the question before the second round. In other words, the answer of subject 1 is assumed to be quenched to that made in the first round in the subsequent (i.e., second and later) rounds. Third, subject i answered after observing (n A, n B) calculated on the basis of the most recent choice of subjects i − 1 and i − 2, where i runs from 4 to N. There are at most N − 2 answers obtained from the third round.

After the third round was completed, further rounds were carried out with r = 3, 5, 7, 9, and ∞ in this order, where r = ∞ implies that the subjects can refer to the most recent answers of all the preceding respondents. Subject 3 had left before the fourth round, corresponding to r = 3, started. Subjects 4 and 5 had left before the fifth round, corresponding to r = 5, started. There are eight rounds in total. The labels of the subjects were fixed throughout the 100 questions.
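The bookkeeping of the rounds described above can be summarized in a short Python sketch. It only shows how the pair (n A, n B) presented to subject i in a round with window r would be computed from the most recent answers of the preceding respondents. The function name `observed_counts` and the example answer list are hypothetical and serve illustration only.

```python
import math


def observed_counts(latest, i, r):
    """Counts (n_A, n_B) shown to subject i (1-based) in a round with window r.

    `latest[k - 1]` holds the most recent answer of subject k. Subject i sees
    the answers of subjects i-1, ..., i-r, or of all preceding subjects when
    r is infinite.
    """
    start = 0 if math.isinf(r) else max(0, i - 1 - r)
    window = latest[start:i - 1]
    return window.count("A"), window.count("B")


# Hypothetical most recent answers of subjects 1..5.
latest = ["A", "B", "B", "A", "A"]

print(observed_counts(latest, 3, 2))         # subject 3 sees subjects 1 and 2
print(observed_counts(latest, 4, 2))         # subject 4 sees subjects 2 and 3
print(observed_counts(latest, 5, math.inf))  # subject 5 sees subjects 1 to 4
```

In a full round, `latest` would be updated after each subject answers, so that later subjects always see the most recent choice of their predecessors.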

The second data set, which we denote by D 2, consists of two sets of web-based experiments. They are denoted by HUE-A and HUE-B in Ref. [33] and the O and C treatments, corresponding to r = 0 and r > 0, respectively, in Exp-II in Ref. [34]. Data set D 2 consists of the results obtained from two subject populations each of which contains N = 52 subjects. Each population of subjects went through 120 questions. In D 2, each subject experienced up to six rounds, i.e., r = 0, 1, 5, 11, 21, and ∞ for each question. The labels of the subjects were randomly shuffled at the beginning of each question.

Results

Let us first consider the aggregate results for each experiment. As described previously, a subject answers a question after observing the number of correct answers, n A, and that of incorrect answers, n B, among the last r = n A + n B respondents. By aggregate results we mean that we pool the answers across questions for the same condition (n A, n B). We denote by R(n A, n B) the number of answers obtained under condition (n A, n B), summed over respondents i and questions q. Out of these answers, the number of answers A, denoted by N A(n A, n B), is given by

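$$
N_A(n_A, n_B) = \sum_{q} \sum_{i} \delta_{x_i(q),\, A}, \tag{4}
$$

where the sums run over the answers given under condition (n A, n B) and δ denotes the Kronecker delta.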

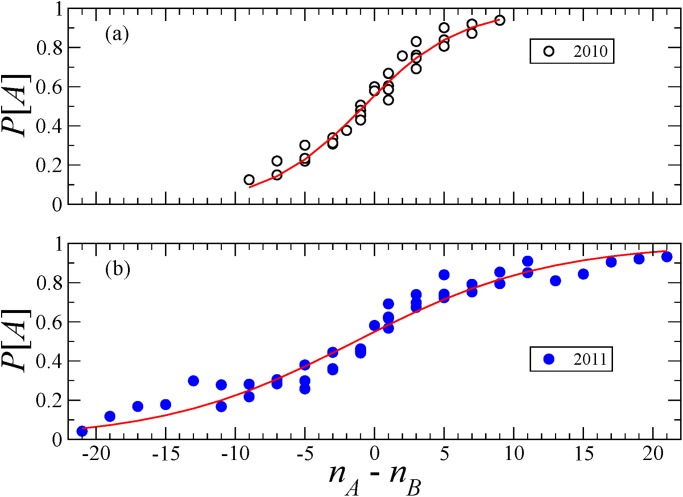

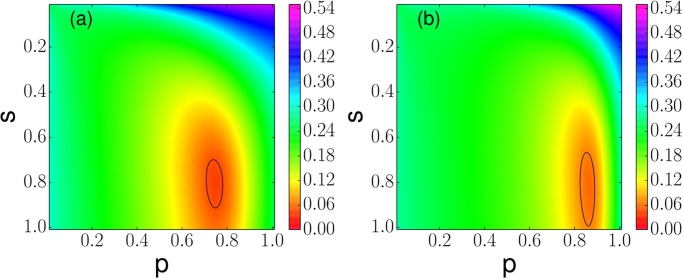

The fraction of A answers under condition (n A, n B) is given by N A(n A, n B)/R(n A, n B). This fraction for various (n A, n B) pairs is plotted in Fig. 1(a) and (b) for D 1 and D 2, respectively. We fit P[x i = A] given by Equation (3) to the experimental data, where we suppress q in the argument of x i because we have aggregated the data over the questions. We estimate the values of p and s by an exhaustive sampling in the parameter space. For each sampled (p, s) pair, we calculate the error by the total square distance between Equation (3) and the empirical values summed over the available (n A, n B) pairs. The parameter values yielding the smallest error are adopted. The results of the best fitting are shown by the solid curves in Fig. 1(a) and (b) for D 1 and D 2, respectively. For D 1, the best fit is obtained for p = 0.81, s = 0.75 which lead to a root mean squared error RMSE ≈ 0.042. For D 2, we obtain p = 0.82, s = 0.87 leading to RMSE ≈ 0.059. Fig. 1 indicates that Equation (3) fits both data sets reasonably well. The value of the RMSE as a function of both parameters is shown in Fig. 2.
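The exhaustive parameter search described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the code used for the analysis: the data points are synthetic (generated from Equation (3) itself rather than taken from D 1 or D 2), and the grid ranges, the grid step, and the names `model` and `grid_fit` are hypothetical.

```python
import numpy as np


def model(delta_n, p, s):
    """Equation (3) evaluated at delta_n = n_A - n_B."""
    return 1.0 / (1.0 + p * s ** delta_n)


def grid_fit(delta_n, frac_a, p_grid, s_grid):
    """Return the (p, s) pair on the grid that minimizes the total squared
    distance to the empirical fractions, together with the resulting RMSE."""
    best_p, best_s, best_err = None, None, np.inf
    for p in p_grid:
        for s in s_grid:
            err = np.sum((model(delta_n, p, s) - frac_a) ** 2)
            if err < best_err:
                best_p, best_s, best_err = p, s, err
    rmse = np.sqrt(best_err / len(frac_a))
    return best_p, best_s, rmse


# Synthetic, noise-free "data" generated with known parameters (assumption).
delta_n = np.arange(-9, 10)
frac_a = model(delta_n, 0.81, 0.75)

# Hypothetical search grid with step 0.01.
p_grid = np.round(np.arange(0.50, 1.201, 0.01), 2)
s_grid = np.round(np.arange(0.50, 1.001, 0.01), 2)

p_hat, s_hat, rmse = grid_fit(delta_n, frac_a, p_grid, s_grid)
print(p_hat, s_hat, rmse)
```

Because the synthetic points lie exactly on the curve, the search recovers the generating parameters with a vanishing error. With real fractions N A(n A, n B)/R(n A, n B), the same loop would return the best-fitting pair and a nonzero RMSE.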

Fig 1. Bayesian inference and experimental data.

We plot the probability of reporting the correct answer A as a function of n A − n B for various (n A, n B) pairs: (a) Data set D 1, (b) Data set D 2. The circles correspond to the data. The solid curves indicate the best fits of Equation (3): (p, s) = (0.81, 0.75) in (a) and (0.82, 0.87) in (b).

Fig 2. Parameter estimation.

Root mean squared error associated with the fit of the model given by Equation (3) to data sets (a) D 1 and (b) D 2. The contour lines show a level of 0.05 in (a) and 0.07 in (b).

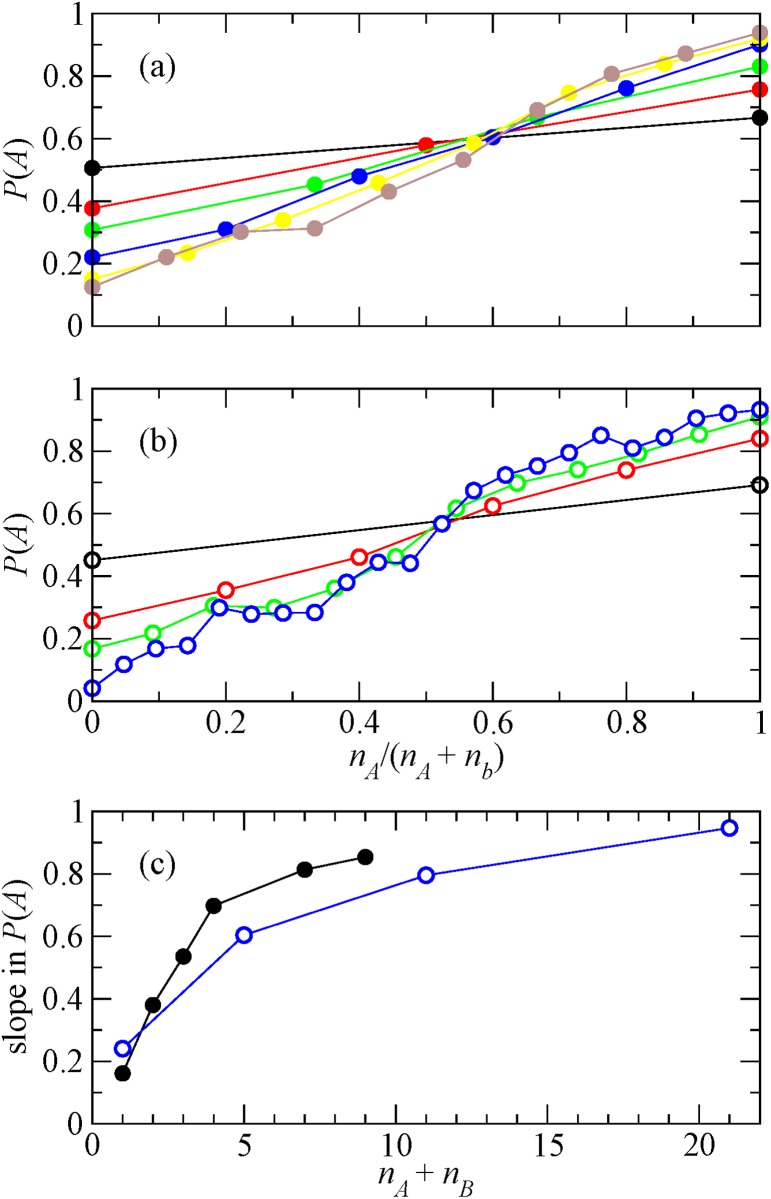

An alternative hypothesis of collective decision making is that P[x i = A] obeys Weber’s law, i.e., that it depends only on (n A − n B)/(n A + n B), or equivalently, on n A/(n A + n B) [15, 17]. To test this hypothesis, we aggregate the data over q and i in the same way as in Fig. 1, but separately for each r, and plot P[x i = A] as a function of n A/(n A + n B). The results are shown in Fig. 3(a) and (b) for D 1 and D 2, respectively. Each color corresponds to a value of r = n A + n B. If Weber’s law held, all curves would collapse onto a single curve. Fig. 3 indicates that this is not the case. To be more quantitative, in Fig. 3(c) we plot the slope of each curve, obtained by applying the least-squares method to the data shown in Fig. 3(a) and (b). The slope increases with r (= n A + n B) and seems to saturate. Saturation would mean that Weber’s law holds for sufficiently large r. Nevertheless, for the r values accessed by the experiments, Weber’s law does not hold. Data for larger r values would be needed to assess whether Weber’s law holds and, if so, from which r value onward.
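For comparison, the following sketch computes what Equation (3) itself predicts for this test. For a fixed r, it evaluates the model on all pairs with n A + n B = r and fits a straight line to P[x i = A] as a function of n A/(n A + n B). It uses the aggregate parameter values reported above for D 1 and does not reproduce Fig. 3(c), which is obtained from the data. The function name `slope_vs_fraction` is hypothetical.

```python
import numpy as np


def slope_vs_fraction(r, p=0.81, s=0.75):
    """Least-squares slope of Equation (3) plotted against n_A / r,
    using all pairs (n_A, n_B) with n_A + n_B = r."""
    n_a = np.arange(r + 1)
    frac = n_a / r
    prob = 1.0 / (1.0 + p * s ** (2 * n_a - r))   # n_A - n_B = 2 n_A - r
    slope, _intercept = np.polyfit(frac, prob, 1)
    return slope


slopes = {r: slope_vs_fraction(r) for r in (1, 2, 3, 5, 7, 9)}
for r, value in slopes.items():
    print(r, round(float(value), 3))
```

Under Equation (3) the slope grows with r, because the model depends on n A − n B rather than on the fraction of A answers. A model obeying Weber’s law would instead yield the same curve for every r.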

Fig 3. Dependence on the fraction of correct answers.

(a) Probability of answering correctly as a function of the fraction of correct answers of the previous respondents for data set D 1. Black r = 1, red r = 2, green r = 3, blue r = 5, yellow r = 7, and brown r = 9. (b) Same results for data set D 2. Black r = 1, red r = 5, green r = 11, and blue r = 21. (c) Slope as a function of r obtained by the least-squares method applied to the plots in panels (a) and (b). The closed and open circles correspond to D 1 and D 2, respectively.

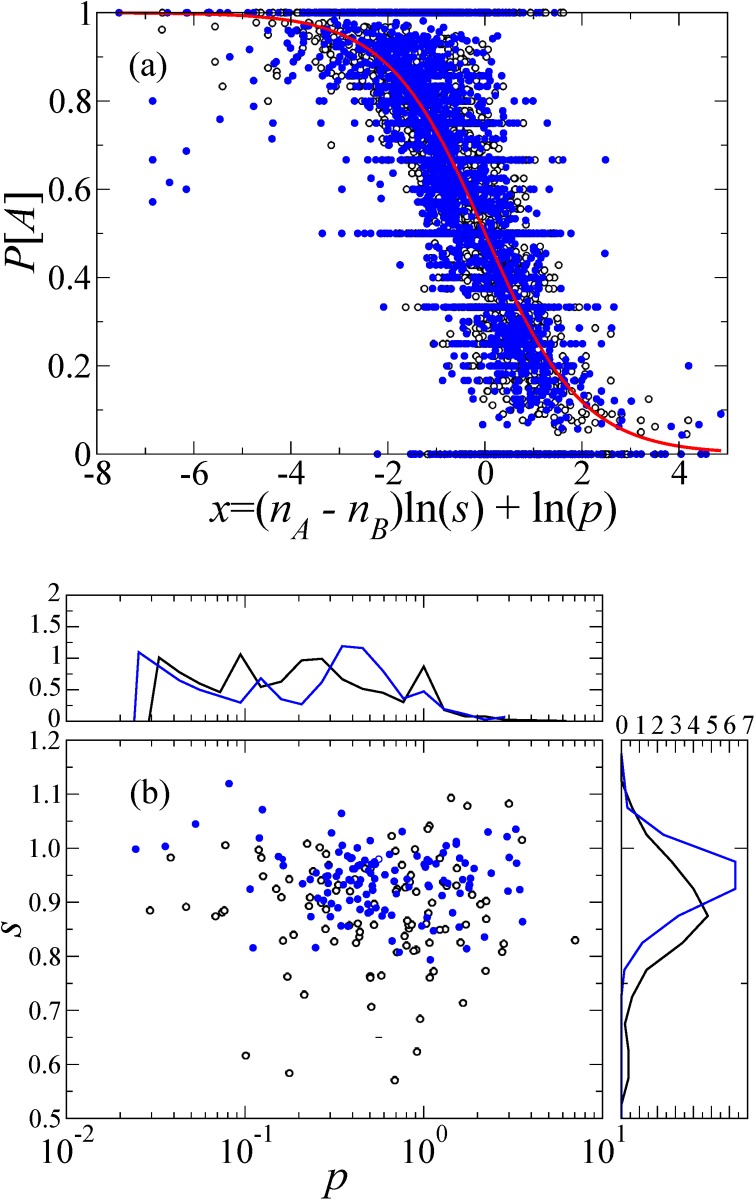

We obtain a reasonable fit of the data to Equation (3) even without aggregation over the questions. To show this, for a given question q, we calculate the fraction of correct answers N A,q(n A, n B)/R q(n A, n B), where R q(n A, n B) is the number of answers to question q obtained under condition (n A, n B), and N A,q(n A, n B) is the corresponding number of answers A. The relationship between P[x i(q) = A] and z = (n A − n B) ln s q + ln p q for different questions is plotted in Fig. 4(a). If Equation (3) holds true, the results for different questions should collapse on a single curve P[x i(q) = A] = 1/[1 + exp(z)], shown by the solid line. The results for the different questions do roughly collapse on this curve. The estimated values of p q and s q for individual questions are shown in Fig. 4(b). As before, we obtained the parameter values p q and s q by sampling the parameter space and finding the values giving the smallest error. Fig. 4(b) shows that the estimated parameter values depend on the question to a large extent. For some questions, p > 1, implying that the initial belief in the correct answer is worse than a random coin flip, i.e., P 0[x i(q) = A] < 0.5. For a majority of questions, however, the initial belief is better than a random coin flip, and for some questions, it is quite accurate (for example, p = 0.1 corresponds to P 0[x i(q) = A] = 0.91). Another remark is that p and s are apparently uncorrelated. This implies that the flexibility of the opinion change does not depend on the difficulty of the question.
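The rescaling used for the collapse, and the conversion between p and the initial belief, amount to a few lines of arithmetic. The sketch below is illustrative only: the function names are hypothetical and the per-question parameter values in the example are made up.

```python
import math


def initial_belief(p):
    """Invert p = (1 - P0) / P0 to obtain P0 = 1 / (1 + p)."""
    return 1.0 / (1.0 + p)


def rescaled_z(n_a, n_b, p_q, s_q):
    """z = (n_A - n_B) ln(s_q) + ln(p_q)."""
    return (n_a - n_b) * math.log(s_q) + math.log(p_q)


def master_curve(z):
    """Single curve on which all questions should collapse: 1 / (1 + exp(z))."""
    return 1.0 / (1.0 + math.exp(z))


# p = 0.1 corresponds to an initial belief of about 0.91, p > 1 to less than 0.5.
print(round(initial_belief(0.1), 2), initial_belief(1.5) < 0.5)

# Made-up parameters for one question: the master curve evaluated at z
# coincides with Equation (3) evaluated directly.
p_q, s_q, n_a, n_b = 0.6, 0.8, 4, 1
direct = 1.0 / (1.0 + p_q * s_q ** (n_a - n_b))
print(master_curve(rescaled_z(n_a, n_b, p_q, s_q)), direct)
```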

Fig 4. Dependence on the question.

(a) Probability of correct answers as function of rescaled accumulated answers of previous respondents (n A − n B) ln (s) + ln (p). Each symbol represents a question. Black, open symbols correspond to D 1, and blue filled circles to D 2. We estimated the s and p values for each question by applying the least square method to the data for the corresponding question. (b) Estimated p and s values for different questions. The top and side panels show the distributions of p and s, respectively.

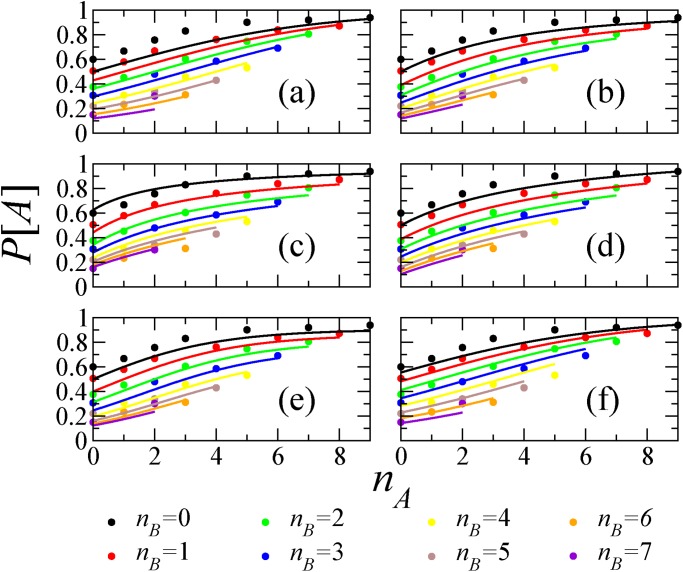

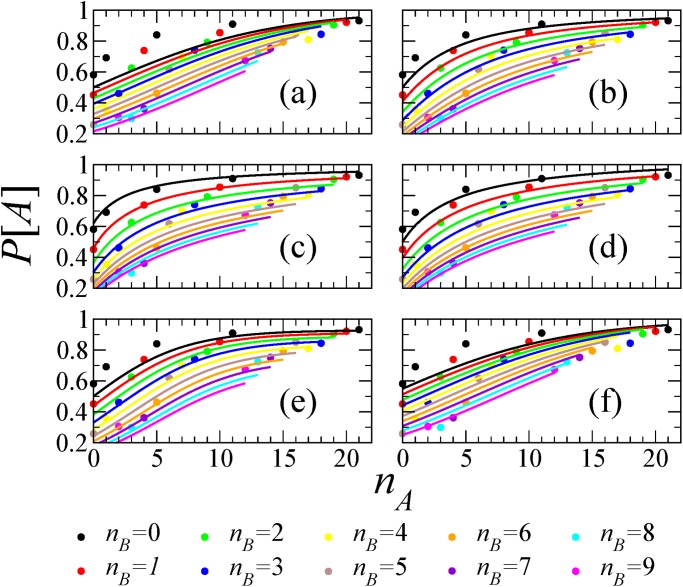

The literature offers several models that propose different functional forms for P[A∣n A, n B]. Following Ref. [17], we fitted several of them [17, 39–42] to the current data. The quality of the fits is shown in Figs. 5 and 6 for data sets D 1 and D 2, respectively, in different colors for different values of n B. For D 1, the best fit is produced by the model in Ref. [42] with an RMSE ≈ 0.035, followed closely by the model presented in this paper (RMSE ≈ 0.042). For D 2, the best fit (RMSE ≈ 0.046) is again produced by the model in Ref. [42], followed closely by the models in Refs. [40, 41] (RMSE ≈ 0.053) and Ref. [17] (RMSE ≈ 0.054); the present model ranks below these (RMSE ≈ 0.059). See Table 1 for more information.

Fig 5. Testing different models for data set D 1.

(a) $P[A] = [1 + \delta^{-(n_A - n_B)}]^{-1}$, δ = 1.33, RMSE = 0.06, (b) $P[A] = (\delta + n_A)^{\epsilon}/[(\delta + n_A)^{\epsilon} + (\delta + n_B)^{\epsilon}]$, δ = 3.90, ϵ = 1.95, RMSE = 0.05, (c) $P[A] = (\delta + \epsilon n_A)/[1 + \epsilon(n_A + n_B)]$, δ = 0.63, ϵ = 0.41, RMSE = 0.03, (d) $P[A] = 1/2 + \delta(n_A - n_B)/(n_A + n_B + \epsilon)$, δ = 0.68, ϵ = 5.06, RMSE = 0.06, (e) $P[A] = [1 + (1 + \delta\epsilon^{-n_A})/(1 + \delta\epsilon^{-n_B})]^{-1}$, δ = 8.32, ϵ = 1.60, RMSE = 0.05, (f) $P[A] = (1 + p s^{n_A - n_B})^{-1}$, p = 0.81, s = 0.75, RMSE = 0.04. The different colors correspond to n B = 0 (black), 1 (red), 2 (green), 3 (blue), 4 (yellow), 5 (brown), 6 (grey), 7 (violet).

Fig 6. Testing different models for data set D 2.

(a) $P[A] = [1 + \delta^{-(n_A - n_B)}]^{-1}$, δ = 1.15, RMSE = 0.07, (b) $P[A] = (\delta + n_A)^{\epsilon}/[(\delta + n_A)^{\epsilon} + (\delta + n_B)^{\epsilon}]$, δ = 3.66, ϵ = 1.52, RMSE = 0.05, (c) $P[A] = (\delta + \epsilon n_A)/[1 + \epsilon(n_A + n_B)]$, δ = 0.62, ϵ = 0.35, RMSE = 0.05, (d) $P[A] = 1/2 + \delta(n_A - n_B)/(n_A + n_B + \epsilon)$, δ = 0.57, ϵ = 4.39, RMSE = 0.06, (e) $P[A] = [1 + (1 + \delta\epsilon^{-n_A})/(1 + \delta\epsilon^{-n_B})]^{-1}$, δ = 12.01, ϵ = 1.30, RMSE = 0.05, (f) $P[A] = (1 + p s^{n_A - n_B})^{-1}$, p = 0.82, s = 0.87, RMSE = 0.06. The different colors correspond to n B = 0 (black), 1 (red), 2 (green), 3 (blue), 4 (yellow), 5 (brown), 6 (grey), 7 (violet), 8 (cyan), 9 (pink).

Table 1. Fitting results for different models.

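| Model P[A] | D 1: fitted parameters | D 1: RMSE | D 2: fitted parameters | D 2: RMSE | Ref. |
|---|---|---|---|---|---|
| $[1 + \delta^{-(n_A - n_B)}]^{-1}$ | δ = 1.33 | 0.061 | δ = 1.15 | 0.070 | [39] |
| $(\delta + n_A)^{\epsilon}/[(\delta + n_A)^{\epsilon} + (\delta + n_B)^{\epsilon}]$ | δ = 3.90, ϵ = 1.95 | 0.054 | δ = 3.66, ϵ = 1.52 | 0.053 | [40, 41] |
| $(\delta + \epsilon n_A)/[1 + \epsilon(n_A + n_B)]$ | δ = 0.63, ϵ = 0.41 | 0.035 | δ = 0.62, ϵ = 0.35 | 0.046 | [42] |
| $1/2 + \delta(n_A - n_B)/(n_A + n_B + \epsilon)$ | δ = 0.68, ϵ = 5.06 | 0.056 | δ = 0.57, ϵ = 4.39 | 0.057 | [15] |
| $[1 + (1 + \delta\epsilon^{-n_A})/(1 + \delta\epsilon^{-n_B})]^{-1}$ | δ = 8.32, ϵ = 1.60 | 0.054 | δ = 12.01, ϵ = 1.30 | 0.054 | [17] |
| $(1 + p s^{n_A - n_B})^{-1}$ | p = 0.81, s = 0.75 | 0.042 | p = 0.82, s = 0.87 | 0.059 | [here] |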

Results of fitting different models for P[A] to data sets D 1 and D 2.

It should be noted that the first model in Table 1 is equivalent to a special case of our model (i.e., p = 1). Therefore, its fit cannot be better than that of the present model. Note also that we fitted the model in Ref. [17] with k = 0. Fitting with k as a free parameter yields very small values of k, of the order of $10^{-2}$ (k = 0.04 for D 1 and k = 0.065 for D 2). Parameter ϵ is insensitive to this small departure of k from 0 (ϵ = 1.60 for D 1 and ϵ = 1.30 for D 2), whereas parameter δ is much more sensitive (δ = 7.04 for D 1 and δ = 8.72 for D 2; compare with the results in Table 1), because the minimum in the optimization is flatter in the direction of δ, as also happens for parameter p in the present model (see Fig. 2). The quality of the fits is of the same order as that obtained with k = 0 (RMSE = 0.054 for both D 1 and D 2). This also happens for the zebrafish data in Ref. [17].

Discussion

We showed that the simple Bayesian model provides a quantitative agreement with behavioral data of humans sequentially answering questions with binary options. At least two other studies fitted the same model as ours to data in different contexts. In Ref. [39], sequential choices by fish between two refugia are modeled. Depending on whether the two refugia are identical or nonidentical (i.e., only one arm was with a replica predator), the unbiased prior (p = 1 in our notation) or a biased one (p ≠ 1) is used, respectively. In both the unbiased and biased prior cases, the authors concluded s ≈ 0.4 (and the results are robust for 0.25 ≤ s ≤ 0.5), translating into c = 1/(s + 1) ≈ 0.7 in our notation. In another experiment with a different fish species, where individual fish chose one of the two arms of a maze to avoid replica predators, Ward and colleagues [38] estimated s ≈ $1/e^{0.478}$ ≈ 0.62, translating into c ≈ 0.62. In contrast, our results indicate s ≈ 0.7 − 0.8 and hence c ≈ 0.56 − 0.59. This difference may result from the different species; humans may have lower responsiveness to social stimuli (i.e., a c value closer to 0.5) than fish (see Ref. [32] for related experiments). The type of task may also contribute to this difference. In the current study, the data sets come from quizzes testing the general knowledge of the participants. By contrast, in the fish experiments, each fish chose between two pathways that were identical except for the possible presence of a replica predator.
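The conversion between s and c used in this comparison follows from inverting s = (1 − c)/c, which gives c = 1/(1 + s). The short sketch below evaluates it for the s values quoted in the paragraph above; the function name `flexibility_from_s` is hypothetical.

```python
import math


def flexibility_from_s(s):
    """c = 1 / (1 + s), obtained by inverting s = (1 - c) / c."""
    return 1.0 / (1.0 + s)


quoted_s = {
    "Ref. [39], s = 0.4": 0.4,
    "Ref. [38], s = 1/e^0.478": math.exp(-0.478),
    "present study, s = 0.7": 0.7,
    "present study, s = 0.8": 0.8,
}

c_values = {label: flexibility_from_s(s) for label, s in quoted_s.items()}
for label, c in c_values.items():
    print(label, round(c, 2))
```

Since c decreases monotonically from 1 toward 1/2 as s increases from 0 to 1, a larger s corresponds to a weaker response to the observed answers.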

Quantitatively, some models fit our data better than the present model does, in particular for data set D 2 (Table 1). However, it should be noted that some of these previous models were proposed as fits, without a particular mechanistic derivation [40–42]. Another model, i.e., the fourth model in Table 1 [17], which results from the Taylor expansion of the model proposed in Ref. [15], has a mechanistic underpinning. However, the model is derived from an ant’s random walk on a specific arena [15]. In particular, the exit point that corresponds to the decision for one of the two alternatives is literally the spatial exit point of the animal. That may be why this model [15, 17] does not fit the present data well. Compared with Arganda’s model [17] (fifth model in Table 1), the present model fits data set D 1 better and data set D 2 worse.

A way to differentiate between models is to have data on the behavior for a large number of information sources (large r). In the limit r → ∞, the models in Table 1 yield different functional forms for P[x = A]. The first and the last one (the model used in this paper) give rise to a step function. The second model converges to $x^{\epsilon}/[x^{\epsilon} + (1 - x)^{\epsilon}]$, where x is the fraction of A responses, which coincides with Weber’s law for ϵ = 1. However, the values of ϵ estimated for our data are much larger than unity. The third function approximates the fraction of A responses for large r. The fourth function gives 1/2 + δ(2x − 1), which is a good approximation of the previous model given that the fitting parameter δ ≃ 1/2 for our data sets. The fifth model gives a constant value P[x = A] = 1/2 in the limit r → ∞. More experimental data for large r would enable further validation of the models.
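The limits listed above can be checked numerically. The following sketch codes the second to sixth functional forms of Table 1, evaluates them with the parameter values fitted to D 1 at a large r and a fixed fraction x of A responses, and prints the limiting expressions alongside. The function names, the choice r = 1000, and the fraction x = 0.7 are illustrative assumptions. The first model is the p = 1 special case of the last one according to the text, so it is not coded separately.

```python
def model_b(n_a, n_b, delta, eps):
    a = (delta + n_a) ** eps
    b = (delta + n_b) ** eps
    return a / (a + b)


def model_c(n_a, n_b, delta, eps):
    return (delta + eps * n_a) / (1.0 + eps * (n_a + n_b))


def model_d(n_a, n_b, delta, eps):
    return 0.5 + delta * (n_a - n_b) / (n_a + n_b + eps)


def model_e(n_a, n_b, delta, eps):
    ratio = (1.0 + delta * eps ** (-n_a)) / (1.0 + delta * eps ** (-n_b))
    return 1.0 / (1.0 + ratio)


def model_f(n_a, n_b, p, s):
    return 1.0 / (1.0 + p * s ** (n_a - n_b))


# Large number of observed answers with a fixed majority for A (assumption).
n_a, n_b = 700, 300
x = n_a / (n_a + n_b)

large_r = {
    "b": model_b(n_a, n_b, 3.90, 1.95),
    "c": model_c(n_a, n_b, 0.63, 0.41),
    "d": model_d(n_a, n_b, 0.68, 5.06),
    "e": model_e(n_a, n_b, 8.32, 1.60),
    "f": model_f(n_a, n_b, 0.81, 0.75),
}
limits = {
    "b": x ** 1.95 / (x ** 1.95 + (1.0 - x) ** 1.95),
    "c": x,
    "d": 0.5 + 0.68 * (2.0 * x - 1.0),
    "e": 0.5,
    "f": 1.0,  # step function: 1 when A is in the majority
}
for key in large_r:
    print(key, round(large_r[key], 4), round(limits[key], 4))
```

At this r the five forms already give clearly different values for the same majority, which is why data at large r would help to discriminate between them.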

There are some limitations of the present study. First, we ignored the individuality of the respondents. In fact, for each question, there should be those who know the correct answer and those who do not. Such personal knowledge can be incorporated into models for sequential answering [24, 33]. Clarifying this issue warrants future work. Second, we tried to incorporate the information about the previous responses into our model. However, the design of the experiment makes it difficult to cope with this issue. The answers offered to subject i in each round are not a random sample from the pool of responses in the previous round, but are the responses of the previous respondents i − 1, i − 2, …, i − r as initially labeled, which represents a biased sampling. Together with the influence of the history of a subject’s own responses on the new decision, these features affect the decision making process of the subjects and thus the evolution of the fraction of correct answers. Indeed, in many situations individuals do not make decisions from a tabula rasa but shape them continuously through social interactions and external signals. Future developments of the theory are expected to incorporate these ingredients to deal with more realistic situations. In addition, large-scale experiments taking advantage of newly available technologies would be welcome as tests of decision making theories.

Acknowledgments

We thank the authors of Refs. [33, 34] for making their data publicly available. We also thank Shintaro Mori for discussions and for providing information about the detailed procedure of their data acquisition. We also acknowledge insightful discussions with Gonzalo G. de Polavieja and Konstantin Klemm.

Data Availability

Data are from the study Mori S, Hisakado M, Takahashi T (2012) Phase transition to a two-peak phase in an information cascade voting experiment. Phys Rev E 86: 026109 whose authors may be contacted at mori@sci.kitasato-u.ac.jp.

Funding Statement

Project (FIS2011-24785) from the Ministerio de Economía y Competitividad (Spain) and FEDER (EU) supported VME and JFG. CREST, Japan Science and Technology Agency and CREST program supported NM. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Galton F. Vox populi. Nature 1907;75: 450–451. doi: 10.1038/075450a0

- 2. Surowiecki J. The Wisdom of Crowds. New York NY: Anchor Books; 2005.

- 3. Lorenz J, Rauhut H, Schweitzer F, Helbing D. How social influence can undermine the wisdom of crowd effect. Proc Natl Acad Sci USA 2011;108: 9020–9025. doi: 10.1073/pnas.1008636108

- 4. Axelrod R. The Complexity of Cooperation. Princeton NJ: Princeton University Press; 1997.

- 5. Castellano C, Fortunato S, Loreto V. Statistical physics of social dynamics. Rev Mod Phys 2009;81: 591–646. doi: 10.1103/RevModPhys.81.591

- 6. Galam S. Sociophysics: A Physicist’s Modeling of Psycho-political Phenomena. New York NY: Springer; 2012.

- 7. Helbing D, Yu W. The future of social experimenting. Proc Natl Acad Sci USA 2010;107: 5265–5266. doi: 10.1073/pnas.1000140107

- 8. Szolnoki A, Wang Z, Perc M. Wisdom of groups promotes cooperation on evolutionary social dilemmas. Sci Rep 2012;2: 576. doi: 10.1038/srep00576

- 9. McNamara JM, Houston AI. The application of statistical decision theory to animal behaviour. J Theor Biol 1980;85: 673–690. doi: 10.1016/0022-5193(80)90265-9

- 10. Kahneman D, Tversky A. Subjective probability: a judgment of representativeness. In: Kahneman D, Slovic P, Tversky A, editors. Judgment under Uncertainty: Heuristics and Biases. Cambridge: Cambridge University Press; 1982. p. 32–47.

- 11. McNamara JM, Green RF, Olsson O. Bayes’ theorem and its applications in animal behaviour. Oikos 2006;112: 243–251. doi: 10.1111/j.0030-1299.2006.14228.x

- 12. Martins ACR, de B Pereira C, Vicente R. An opinion dynamics model for the diffusion of innovations. Physica A 2009;388: 3225–3232. doi: 10.1016/j.physa.2009.04.007

- 13. McKay R, Efferson C. The subtleties of error management. Evol Hum Behav 2010;31: 309–319. doi: 10.1016/j.evolhumbehav.2010.04.005

- 14. Trimmer PC, Houston AI, Marshall JAR, Mendl MT, Paul ES, McNamara JM. Decision-making under uncertainty: biases and bayesians. Anim Cogn 2011;14: 465–476. doi: 10.1007/s10071-011-0387-4

- 15. Perna A, Granovskiy B, Garnier S, Nicolis SC, Labédan M, Theraulaz G, et al. Individual rules for trail pattern formation in Argentine ants (Linepithema humile). PLoS Comput Biol 2012;8: e1002592. doi: 10.1371/journal.pcbi.1002592

- 16. Andreoni J, Mylovanov T. Diverging opinions. Am Econ J Microecon 2012;4: 209–232. doi: 10.1257/mic.4.1.209

- 17. Arganda S, Pérez-Escudero A, de Polavieja GG. A common rule for decision making in animal collectives across species. Proc Natl Acad Sci USA 2012;109: 20508–20513. doi: 10.1073/pnas.1210664109

- 18. Johnson DD, Blumstein DT, Fowler JH, Haselton MG. The evolution of error: error management, cognitive constraints, and adaptive decision-making biases. Trends Ecol Evol 2013;28: 474–481. doi: 10.1016/j.tree.2013.05.014

- 19. Marshall HH, Carter AJ, Ashford A, Rowcliffe JM, Cowlishaw G. How do foragers decide when to leave a patch? a test of alternative models under natural and experimental conditions. J Anim Ecol 2013;82: 894–902. doi: 10.1111/1365-2656.12089

- 20. Marshall JAR, Trimmer PC, Houston AI, McNamara JM. On evolutionary explanations of cognitive biases. Trends Ecol Evol 2013;28: 469–473. doi: 10.1016/j.tree.2013.05.013

- 21. Pérez-Escudero A, Miller N, Hartnett AT, Garnier S, Couzin ID, de Polavieja GG. Estimation models describe well collective decisions among three options. Proc Natl Acad Sci USA 2013;110: E3466–E3467. doi: 10.1073/pnas.1309867110

- 22. Knill DC, Pouget A. The Bayesian brain: the role of uncertainty in neural coding and computation. Trends Neurosci 2004;27: 712–719. doi: 10.1016/j.tins.2004.10.007

- 23. Tenenbaum JB, Kemp C, Griffiths TL, Goodman ND. How to grow a mind: statistics, structure, and abstraction. Science 2011;331: 1279–1285. doi: 10.1126/science.1192788

- 24. Banerjee AV. A simple model of herd behavior. Q J Econ 1992;107: 797–817. doi: 10.2307/2118364

- 25. Orléan A. Bayesian interactions and collective dynamics of opinion: Herd behavior and mimetic contagion. J Econ Behav Organ 1995;28: 257–274. doi: 10.1016/0167-2681(95)00035-6

- 26. Martins ACR. Continuous opinions and discrete actions in opinion dynamics problems. Int J Mod Phys C 2008;19: 617–624. doi: 10.1142/S0129183108012339

- 27. Binmore K. Rational Decisions. Princeton NJ: Princeton University Press; 2008.

- 28. Acemoglu D, Ozdaglar A. Opinion dynamics and learning in social networks. Dyn Games Appl 2011;1: 3–49. doi: 10.1007/s13235-010-0004-1

- 29. Nishi R, Masuda N. Collective opinion formation model under bayesian updating and confirmation bias. Phys Rev E 2013;87: 062123. doi: 10.1103/PhysRevE.87.062123

- 30. Milgram S, Bickman L, Berkowitz L. J Pers Soc Psychol 1969;13: 79. doi: 10.1037/h0028070

- 31. Gallup AC, Hale JJ, Sumpter DJT, Garnier S, Kacelnik A, Krebs JR, et al. Proc Natl Acad Sci USA 2012;109: 7245. doi: 10.1073/pnas.1116141109

- 32. Traulsen A, Semmann D, Sommerfeld RD, Krambeck HJ, Milinski M. Human strategy updating in evolutionary games. Proc Natl Acad Sci USA 2010;107: 2962–2966. doi: 10.1073/pnas.0912515107

- 33. Mori S, Hisakado M, Takahashi T. Phase transition to a two-peak phase in an information-cascade voting experiment. Phys Rev E 2012;86: 026109. doi: 10.1103/PhysRevE.86.026109

- 34. Mori S, Hisakado M, Takahashi T. Collective adoption of max–min strategy in an information cascade voting experiment. J Phys Soc Japan 2013;82: 084004. doi: 10.7566/JPSJ.82.084004

- 35. King AJ, Cheng L, Starke SD, Myatt JP. Is the true ‘wisdom of the crowd’ to copy successful individuals? Biol Lett 2012;8: 197–200. doi: 10.1098/rsbl.2011.0795

- 36. Moussaïd M, Kämmer JE, Analytis PP, Neth H. Social influence and the collective dynamics of opinion formation. PLoS ONE 2013;8: e78433. doi: 10.1371/journal.pone.0078433

- 37. Bikhchandani S, Hirshleifer D, Welch I. A theory of fads, fashion, custom, and cultural change as informational cascades. J Polit Econ 1992;100: 992–1026. doi: 10.1086/261849

- 38. Ward AJW, Herbert-Read JE, Sumpter DJT, Krause J. Fast and accurate decisions through collective vigilance in fish shoals. Proc Natl Acad Sci USA 2011;108: 2312–2315. doi: 10.1073/pnas.1007102108

- 39. Pérez-Escudero A, de Polavieja GG. Collective animal behavior from bayesian estimation and probability matching. PLoS Comput Biol 2011;7: e1002282. doi: 10.1371/journal.pcbi.1002282

- 40. Goss S, Aron S, Deneubourg JL, Pasteels JM. Self-organized shortcuts in the Argentine ant. Naturwissenschaften 1989;76: 579–581. doi: 10.1007/BF00462870

- 41. Deneubourg JL, Aron S, Goss S, Pasteels JM. The self-organizing exploratory pattern of the Argentine ant. J Insect Behav 1990;3: 159–168. doi: 10.1007/BF01417909

- 42. Meunier H, Leca JB, Deneubourg JL, Petit O. Group movement decisions in capuchin monkeys: the utility of an experimental study and a mathematical model to explore the relationship between individual and collective behaviours. Behaviour 2006;143: 1511–1527. doi: 10.1163/156853906779366982
