Abstract

Bilingual infants succeed at learning their first two languages. What adaptive processes enable them to master the more complex nature of bilingual input? One possibility is that bilingual infants take greater advantage of the redundancy of the audiovisual speech that they usually experience during social interactions. Thus, we investigated whether bilinguals’ need to keep languages apart increases their attention to the mouth as a source of redundant and reliable speech cues. We measured selective attention to talking faces in 4-, 8-, and 12-month-old monolingual (Catalan- or Spanish-learning) and bilingual (Spanish-Catalan) infants. Monolingual data paralleled previous findings, whereas bilingual data suggested an emerging move away from the eyes beginning earlier in development, followed by increasing attention to the mouth from 8 to 12 months of age. Thus, bilingual infants exploit the greater perceptual salience of redundant audiovisual speech cues earlier and longer than monolinguals to support their dual language acquisition processes.

Keywords: Bilingualism, Human Infants, Language Development, Audiovisual Speech, Multisensory Perception, Selective Attention

Introduction

Infants growing up bilingual appear to acquire two first languages as easily as monolingual infants acquire a single language. This is remarkable because the bilinguals’ task is far more difficult. Nonetheless, they learn the basic properties of their two input languages rapidly and manage to functionally separate their linguistic systems. What mechanisms enable bilingual infants to acquire two languages?

Existing data provide insights into some of these mechanisms. Some evidence indicates that monolingual and bilingual infants (a) acquire canonical babbling skills at the same time (Oller, Eilers, Urbano, & Cobo-Lewis, 1997), and that monolinguals and bilinguals can (b) distinguish between different languages at birth (Byers-Heinlein, Burns, & Werker, 2010), (c) differentiate phonologically close languages (Bosch & Sebastián-Gallés, 2001b; Molnar, Gervain, & Carreiras, 2014), and (d) discriminate consonantal phonetic contrasts (Burns, Yoshida, Hill, & Werker, 2007; Sundara, Polka, & Molnar, 2008). In contrast, other evidence suggests that bilingual infants develop some adaptive processes that permit them to deal with the more complex nature of dual language input (Byers-Heinlein & Fennell, 2014). For example, bilinguals maintain their sensitivity to lexical stress (Bijeljac-Babic, Serres, Höhle, & Nazzi, 2012) and differ from monolinguals in the time-course of building some specific contrastive phonetic categories (Bosch & Sebastián-Gallés, 2003; Garcia-Sierra et al., 2011; Sebastián-Gallés & Bosch, 2009). Moreover, bilinguals can distinguish between a native and a non-native language or between two non-native languages based on visual attributes alone at an age when monolingual infants no longer do (Sebastián-Gallés, Albareda-Castellot, Weikum, & Werker, 2012; Weikum et al., 2007).

Although research exploring infants’ ability to process language has been done mostly in the auditory domain, typical social interactions involve exposure to audiovisual speech. Moreover, infants become interested in audiovisual speech as they grow and acquire experience (Lewkowicz & Hansen-Tift, 2012; Tenenbaum, Shah, Sobel, Malle, & Morgan, 2013). For example, at four months American-English monolingual infants attend to a talker's eyes but by 8 months they shift their attention to her mouth (Lewkowicz & Hansen-Tift, 2012). This attentional shift gives infants access to highly salient redundant audiovisual speech cues just as they begin to babble and is likely to facilitate acquisition of speech perception and production.

To profit from the greater salience of redundant audiovisual speech, infants must be able to integrate multisensory information. Indeed, studies show that this general ability emerges in infancy (Kuhl & Meltzoff, 1982; Lewkowicz, 2010; Lewkowicz & Ghazanfar, 2006; Lewkowicz & Pons, 2013; Patterson & Werker, 1999; Pons, Lewkowicz, Soto-Faraco, & Sebastián-Gallés, 2009; Rosenblum, Schmuckler, & Johnson, 1997; Teinonen, Aslin, Alku, & Csibra, 2008). Studies also show that once reliance on redundant multisensory input begins in infancy, it becomes the default mode of perceptual functioning (Rosenblum, 2008; Stein, 2012). For example, congenitally deaf children who are fitted with cochlear implants exhibit enhanced responsiveness to audiovisual as opposed to auditory or visual speech (Bergeson, Pisoni, & Davis, 2005), people who are deprived of vision during infancy due to congenital cataracts exhibit deficits in audiovisual speech integration as adults (Putzar, Goerendt, Lange, Rösler, & Röder, 2007), and adults’ comprehension of audiovisual speech is better than of auditory speech (Sumby & Pollack, 1954).

If selective attention to redundant audiovisual speech cues facilitates acquisition of speech in monolingual infants, might this be even greater in bilingual infants? Studies have found that even though 4.5- and 6-month-old bilingual infants learning two rhythmically close languages can distinguish between such languages in an auditory-only task (Bosch & Sebastián-Gallés, 2001b), they exhibit longer latencies to orient to native auditory-only utterances than monolinguals when they have to discriminate one of their two familiar languages from a non-familiar language (Bosch & Sebastián-Gallés, 1997; Costa & Sebastián-Gallés, 2014). This suggests that bilinguals’ recognition of their native languages can be challenging in the absence of concurrent and redundant visual speech cues. Because bilingual infants need to unequivocally recognize both of their native languages and simultaneously keep them apart, they may exploit audiovisual speech cues more than monolinguals. Specifically, bilinguals may begin to attend to a talker's mouth earlier and more during the initial stage of dual language acquisition to learn the specific properties of each language. Moreover, bilingual infants may continue to take greater advantage of the redundancy of audiovisual speech cues located in a talker's mouth given that only 8-month-old bilingual infants can discriminate two non-native languages based on visual cues alone (Sebastián-Gallés et al., 2012).

We tested our predictions by examining selective attention in monolingual infants (Catalan- or Spanish-learning; Experiment 1) and in bilingual infants (Experiment 2) to the eyes and mouth of a talker producing native (or dominant) or non-native audiovisual speech. This enabled us to determine whether the previous results from American-English learning monolingual infants generalize to a different monolingual population and whether bilingual infants take greater advantage of audiovisual redundancy.

Experiment 1

Here, we attempted to corroborate and extend previous findings of developmental changes in monolingual infants’ relative deployment of selective attention to the eyes and mouth of a talker (Lewkowicz & Hansen-Tift, 2012) to infants from a different cultural background learning different languages (either Catalan or Spanish).

Method

Participants

A total of 60 infants, consisting of separate groups of 4-, 8-, and 12-month-old infants, were tested. All infants were raised in a monolingual environment and were exposed to Catalan or Spanish at least 90% of the time. The linguistic status of the infants’ environment was carefully assessed by means of a language questionnaire (Bosch & Sebastián-Gallés, 2001b). The first age group consisted of 20 monolingual 4-month-old infants (8 from Catalan-speaking families and 12 from Spanish-speaking families; mean daily exposure to Catalan or Spanish: 95.3%, SD: 3.9; 10 males; M age: 4 months, range: 3 months 28 days – 4 months 7 days). The second age group consisted of 20 monolingual 8-month-old infants (9 from Catalan-speaking families and 11 from Spanish-speaking families; mean daily exposure to Catalan or Spanish: 95%, SD: 4.4; 12 males; M age: 8 months, range: 7 months 29 days – 8 months 15 days). The third age group consisted of 20 monolingual 12-month-old infants (11 from Catalan-speaking families and 9 from Spanish-speaking families; mean daily exposure to Catalan or Spanish: 95.3%, SD: 3.9; 12 males; M age: 12 months, range: 11 months 24 days – 12 months 14 days). Thirty-one additional infants were tested but did not contribute any usable data because of fussing or crying (7), failure to calibrate properly that was attributable either to the infant being uncooperative or to the eye tracker not being able to find the pupil (22), parental interference (1), and experimental error (1). The sample size in this study is consistent with the typical sample sizes used in other infant perception studies; thus, our data-collection stopping rule was based on common practices in the infant development field.

Apparatus and Stimuli

Infants were seated in an infant seat in a sound-attenuated and dimly illuminated room, approximately 60 cm in front of a 17” computer monitor. Stimuli were presented on the computer monitor using Tobii Studio software and eye movements were recorded by a Tobii X120 standalone eye tracker at a sampling rate of 60 Hz. The stimuli consisted of 45 s multimedia movies of one of two female actors who were native speakers in their respective languages reciting a prepared monologue. One of the actors recited a Spanish or a Catalan version of the monologue, whereas the other recited an English version of the monologue. Thus, each infant watched two videos, one in her native language and the other in a non-native language. The order of the videos was counterbalanced across infants. To recruit maximal attention, the monologues were recited in an infant-directed (ID) manner (Fernald, 1985).

Procedure

We used the Tobii eye tracker's 5-point calibration routine to calibrate each infant's gaze behavior. As soon as the calibration routine was completed, we presented the two videos to each infant. While the infants watched the videos, we monitored their looking at two areas of interest (AOI) with the eye tracker. One AOI was the area around the talker's eyes while the other was the area around the talker's mouth.
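The AOI-based measurement described above can be illustrated with a minimal Python sketch. It is not the authors' analysis pipeline: the rectangular AOI shapes, the pixel coordinates, and the names `Rect` and `looking_times` are all hypothetical, and the only value taken from the text is the 60 Hz sampling rate. The sketch counts gaze samples that fall on the face, the eyes, or the mouth and converts the counts to seconds.

```python
from dataclasses import dataclass

SAMPLE_RATE_HZ = 60  # sampling rate reported for the eye tracker


@dataclass(frozen=True)
class Rect:
    """Axis-aligned rectangle in screen pixels (hypothetical AOI shape)."""
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x: float, y: float) -> bool:
        return self.left <= x <= self.right and self.top <= y <= self.bottom


# Hypothetical pixel coordinates; the real AOI boundaries are not given in the text.
FACE = Rect(300, 100, 700, 650)
EYES = Rect(360, 220, 640, 330)
MOUTH = Rect(410, 460, 590, 570)


def looking_times(samples):
    """Return seconds spent on the eyes, the mouth, and anywhere on the face.

    `samples` is an iterable of (x, y) gaze positions, one per tracker sample.
    Samples that fall outside the face are ignored.
    """
    counts = {"eyes": 0, "mouth": 0, "face": 0}
    for x, y in samples:
        if not FACE.contains(x, y):
            continue
        counts["face"] += 1
        if EYES.contains(x, y):
            counts["eyes"] += 1
        elif MOUTH.contains(x, y):
            counts["mouth"] += 1
    return {name: n / SAMPLE_RATE_HZ for name, n in counts.items()}
```

Each sample contributes 1/60 s, so the three totals can be passed directly to a proportion-of-looking-time computation.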

Results

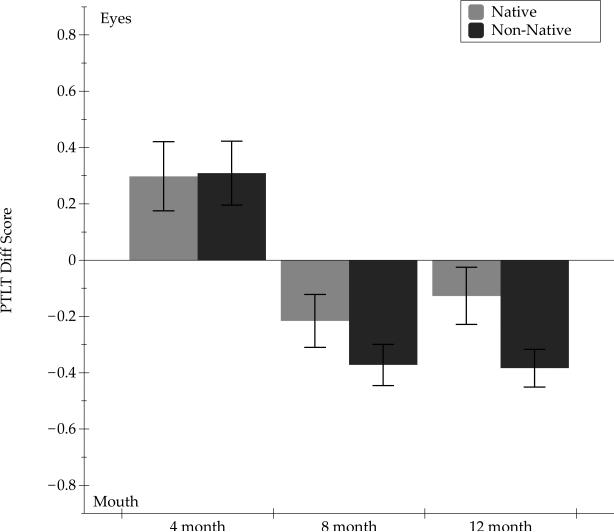

To determine the relative amount of time infants attended to the talker's eyes and mouth, we computed a proportion-of-total-looking-time (PTLT) score for each AOI by dividing the total amount of time infants looked at that AOI by the time they spent looking at any part of the face. We then analyzed the PTLT scores with a mixed, repeated-measures analysis of variance (ANOVA), with AOI (eyes, mouth) and Language (native, non-native) as within-subjects factors and Language-Presentation Order, Age (4, 8, and 12 months), and Linguistic Background (Catalan, Spanish) as between-subjects factors. The analysis yielded a significant AOI × Age interaction [F(2, 54) = 13.58, p < .01, η2 = .339], indicating that looking at the two areas of the face differed as a function of age. The analysis also yielded a significant AOI × Language interaction [F(1, 54) = 8.88, p < .01, η2 = .125], indicating that looking at the two areas of the face differed as a function of the language spoken in the video. Finally, the analysis yielded an AOI × Language × Age interaction [F(2, 54) = 3.04, p = .05, η2 = .108], indicating that attention to the two areas of the talking face varied as a function of age and the language spoken in the video. Figure 1 shows the three-way interaction in the form of mean PTLT difference scores. These scores were obtained by subtracting the mouth-PTLT score from the eye-PTLT score for each participant and then averaging the individual PTLT difference scores at each age (a score above 0 signifies greater looking at the eyes, whereas a score below 0 signifies greater looking at the mouth).
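The PTLT and difference-score arithmetic can be sketched as follows. This is an illustrative Python example, not the authors' code: the function names and the three sets of looking times are hypothetical values chosen only to show the computation.

```python
from statistics import mean


def ptlt(eyes_s: float, mouth_s: float, face_s: float):
    """Proportion of total face-looking time spent on the eyes and on the mouth."""
    if face_s <= 0:
        raise ValueError("total looking time at the face must be positive")
    return eyes_s / face_s, mouth_s / face_s


def ptlt_difference(eyes_s: float, mouth_s: float, face_s: float) -> float:
    """Eye-PTLT minus mouth-PTLT: above 0 favors the eyes, below 0 favors the mouth."""
    eyes_ptlt, mouth_ptlt = ptlt(eyes_s, mouth_s, face_s)
    return eyes_ptlt - mouth_ptlt


# Hypothetical looking times in seconds (eyes, mouth, whole face) for three infants.
infants = [(12.0, 6.0, 24.0), (5.0, 15.0, 25.0), (8.0, 8.0, 20.0)]

diffs = [ptlt_difference(*times) for times in infants]
group_mean = mean(diffs)  # mean PTLT difference score for this made-up group
```

With these made-up values, the first infant favors the eyes, the second favors the mouth, and the third looks equally at both, so the group mean falls slightly below 0.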

Fig. 1.

PTLT difference scores as a function of age in response to a native (either Spanish or Catalan) and a non-native (English) monologue in monolingual infants. Error bars represent standard errors of the mean.

To determine the source of the AOI × Language × Age interaction, we conducted planned comparison tests of looking at the two respective AOIs at each age. The first such test examined responsiveness to the native language. It revealed that the 4-month-old infants looked longer at the eyes [F(1, 19) = 5.93, p < .05, η2 = .229], that the 8-month-old infants looked longer at the mouth [F(1, 19) = 4.88, p < .05, η2 = .196], and that the 12-month-old infants looked equally at the eyes and the mouth [F(1, 19) = 1.55, ns, η2 = .067]. The second planned comparison test examined responsiveness to the non-native language. Once again, this comparison revealed that the 4- and the 8-month-old infants looked longer at the eyes and mouth, respectively [F(1, 19) = 7.41, p < .01, η2 = .272; F(1, 19) = 25.88, p < .01, η2 = .576] and that, this time, the 12-month-old infants looked longer at the mouth [F(1, 19) = 32.47, p < .01, η2 = .630].

Discussion

We found that 4-month-old infants attended longer to the talker's eyes and that 8-month-old infants attended longer to the talker's mouth regardless of which language she was speaking. In contrast, we found that 12-month-old infants attended equally to the talker's eyes and mouth when she spoke in the native language but that they attended longer to the talker's mouth when she spoke in a non-native language. These results replicate Lewkowicz & Hansen-Tift's (2012) findings from their study of monolingual, English-learning, American infants’ response to native and non-native (Spanish) audiovisual speech. Thus, our findings demonstrate that the developmental pattern of shifting attention generalizes to monolingual infants acquiring Catalan or Spanish in Spain.

Experiment 2

As previously suggested, bilingual infants may begin to attend more to a talker's mouth earlier in development and may continue to do so throughout the first year of life to deal with the greater challenge of processing two languages and keeping them apart. To test this prediction, we examined 4-, 8-, and 12-month-old Spanish-Catalan bilingual infants’ response to the same two videos presented in Experiment 1.

Method

Participants

A total of 63 bilingual infants, consisting of separate groups of 4-, 8-, and 12-month-old infants, were tested. These infants were raised in a bilingual environment where, in addition to being exposed to their native language, they were exposed to another language (i.e., either Spanish or Catalan) at least 25% of the time. The Bosch & Sebastián-Gallés (2001b) language exposure questionnaire was administered to establish each infant's language environment. The first age group consisted of 21 bilingual 4-month-old infants. Eight infants were Catalan-dominant and 13 were Spanish-dominant (M daily exposure to Catalan or Spanish: 62.7%, SD: 8.2; 13 males; M age: 4 months, range: 3 months 29 days – 4 months 9 days). The second age group consisted of 21 bilingual 8-month-old infants. Seven were Catalan-dominant and 14 were Spanish-dominant (M daily exposure to Catalan or Spanish: 65.6%, SD: 6.4; 12 males; M age: 8 months, range: 7 months 26 days – 8 months 14 days). The third age group consisted of 21 bilingual 12-month-old infants. Nine were Catalan-dominant and 12 were Spanish-dominant (M daily exposure to Catalan or Spanish: 66.4%, SD: 8.0; 10 males; M age: 12 months, range: 11 months 20 days – 12 months 17 days). Twenty-four additional infants were tested but did not contribute any usable data because of fussing or crying (4), failure to calibrate that was attributable either to the infant being uncooperative or to the eye tracker not being able to find the pupil (19), and experimental error (1).

We used two measures to ensure that the bilingual infants were equivalent to their monolingual peers in linguistic achievements. The first measure compared babbling at eight months using the initial mean babbling levels (MBLs) questionnaire (Morris, 2010), which measures the phonological diversity in babbling sounds. The second measure was derived from the MacArthur-Communicative Development Inventory (MCDI) of receptive and productive vocabularies (Fenson et al., 1993) given at 12 months of age. The babbling scores indicated that the 8-month-old monolingual infants from Experiment 1 and the 8-month-old bilingual infants from this experiment did not differ in their babbling activity [monolingual mean = 2.05, SD = 0.39; bilingual mean = 1.90, SD = 0.44]. A Mann-Whitney test comparing these two sets of scores indicated no difference, Z = 1.11, ns. The MCDI vocabulary measures from the monolingual infants yielded a comprehension vocabulary that ranged from 15 to 268 words (M = 83.3, SD = 68.3) and the reported production measures ranged from 0 to 19 words (M = 6.8, SD = 6.2). To obtain comparable MCDI measures for the bilingual infants in the current experiment, we used the Bosch and Ramon-Casas (2014) method to measure their total receptive and expressive vocabulary size (Spanish + Catalan, correcting for the presence of form-similar cross-language synonyms). These combined scores yielded a comprehension vocabulary that ranged from 9 to 155 words (M = 65.4, SD = 46.1) and the reported production measures ranged from 0 to 16 words (M = 6.0, SD = 4.2). A comparison of these vocabulary measures by way of a Mann-Whitney test indicated that the two groups of infants did not differ in terms of either their receptive [Mann-Whitney Z = 0.39, ns] or their productive [Mann-Whitney Z = 0.81, ns] lexicons. Thus, our samples of monolingual and bilingual infants did not differ in babbling or in vocabulary size.
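For readers unfamiliar with how a Mann-Whitney Z value of the kind reported above is obtained, the following Python sketch computes it from two independent samples. It is an illustration under stated assumptions rather than the authors' procedure: it uses the normal approximation with a tie correction and no continuity correction, the function name `mann_whitney_z` is hypothetical, and the example scores are invented, so the result is not expected to match the reported values.

```python
from math import sqrt


def mann_whitney_z(a, b):
    """Z statistic for the Mann-Whitney U test (normal approximation, tie-corrected).

    No continuity correction is applied. Not defined if every value is identical.
    """
    n1, n2 = len(a), len(b)
    n = n1 + n2
    # Pool both samples, remembering group membership (0 = a, 1 = b).
    pooled = sorted([(v, 0) for v in a] + [(v, 1) for v in b])

    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j < n and pooled[j][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + 1 + j) / 2  # mean of the 1-based ranks i+1 .. j
        for k in range(i, j):
            ranks[k] = avg_rank
        t = j - i
        tie_term += t ** 3 - t
        i = j

    rank_sum_a = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    u_a = rank_sum_a - n1 * (n1 + 1) / 2
    mu = n1 * n2 / 2
    sigma = sqrt(n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1))))
    return (u_a - mu) / sigma


# Invented babbling-level scores for two small groups (illustration only).
group_a = [2.0, 2.5, 1.5, 2.0, 2.5]
group_b = [2.0, 1.5, 1.5, 2.0, 2.5]
z = mann_whitney_z(group_a, group_b)
```

The sign of Z depends on which group is passed first; swapping the arguments flips the sign but not the magnitude.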

Apparatus, stimuli, and procedure

The apparatus, stimuli, and procedure were the same as those in Experiment 1. Please note, however, that the native-language video in this experiment was in the infants’ dominant language.

Results

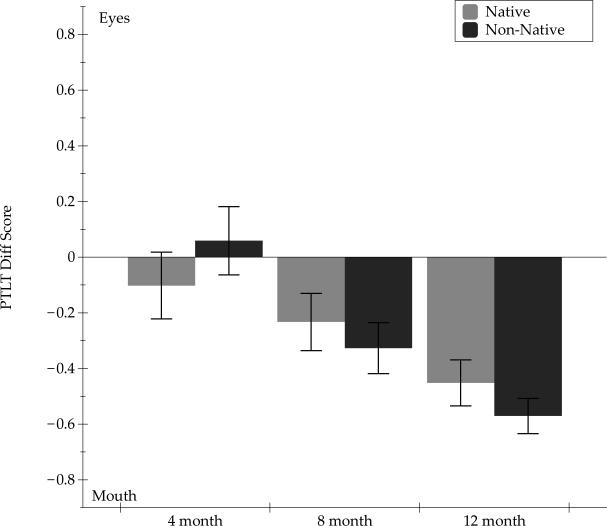

We used the same mixed, repeated-measures ANOVA that we used in Experiment 1 to analyze the PTLT scores from this experiment, except that here the between-subjects factor Linguistic Background was replaced by Language Dominance (Spanish-dominant vs. Catalan-dominant). Results indicated that there was a main effect of AOI [F(1, 57) = 25.82, p < .01, η2 = .312], a significant AOI × Age interaction [F(2, 57) = 6.12, p < .01, η2 = .177], and an AOI × Language × Age interaction [F(2, 57) = 6.32, p < .01, η2 = .182]. The AOI effect indicated that there was an overall preference for the mouth, while the AOI × Age interaction reflected differences in looking at the eyes and mouth as a function of age. The most interesting finding from the standpoint of our predictions was the AOI × Language × Age interaction. This interaction is depicted in Figure 2 and, as can be seen, looking at the eyes and mouth varied as a function of age and language.

Fig. 2.

PTLT difference scores as a function of age in response to a dominant and, thus, native monologue (either Spanish or Catalan) and a non-native (English) monologue in bilingual infants. Error bars represent standard errors of the mean.

Planned comparison tests of the triple interaction indicated that, for the native-language monologue, the 4-month-old infants looked equally at the eyes and the mouth [F(1, 20) = 0.69, ns, η2 = .034] and that both the 8- and the 12-month-old infants looked longer at the mouth [F(1, 20) = 5.15, p < .05, η2 = .205; F(1, 20) = 30.37, p < .01, η2 = .603, respectively]. The same developmental pattern held for the non-native language monologue. That is, the 4-month-old infants looked equally at the eyes and the mouth [F(1, 20) = 0.24, ns, η2 = .011], while the 8- and the 12-month-old infants looked longer at the mouth [F(1, 20) = 11.24, p < .01, η2 = .361; F(1, 20) = 81.26, p < .01, η2 = .802, respectively].

Discussion

In this experiment, we found that bilingual 4-month-olds looked equally long at the eyes and mouth and that they did so regardless of the language spoken by the talker, that bilingual 8-month-olds looked longer at the mouth and that they did so regardless of language spoken, and that bilingual 12-month-olds also looked longer at the mouth regardless of language spoken.

Results of a Monolingual-Bilingual Comparison

We compared the data from Experiments 1 and 2 to determine whether the changing patterns of selective attention differed as a function of language environment (i.e., monolingual vs. bilingual). For this comparison, we examined responsiveness to each test language separately because responsiveness to native versus non-native languages begins to differ early in infancy (Lewkowicz, 2014; Lewkowicz & Hansen-Tift, 2012; Pons et al., 2009; Werker & Tees, 2005).

For the analysis of responsiveness to native speech, we used a mixed, repeated-measures, ANOVA, with AOI (eyes, mouth) as a within-subjects factor and Language Environment (bilingual, monolingual) and Age (4, 8, and 12 months) as between-subjects factors. This analysis yielded a main effect of AOI [F(1, 117) = 9.04, p < .01, η2 = .072], an AOI × Age interaction [F(2, 117) = 7.40, p < .01, η2 = .112], an AOI × Language Environment interaction [F(1, 117) = 7.20, p < .025, η2 = .057], and a marginal AOI × Language Environment × Age interaction [F(2, 117) = 2.48, p = .08, η2 = .041].

To determine the source of the interactions, we performed planned comparison analyses of looking at the eyes and mouth, separately at each age, to determine whether monolingual and bilingual infants differed in their response profiles. These comparisons indicated that at four months of age the bilingual infants looked equally at the eyes and mouth [t(20) = 0.84, ns] but that the monolingual infants looked longer at the eyes [t(19) = 2.41, p < .05]. Furthermore, a direct comparison of the data from the two groups of 4-month-olds indicated that the bilingual infants looked longer at the mouth than did the monolingual infants [t(39) = 2.31, p < .05]. At eight months of age, both groups of infants looked longer at the mouth [bilingual: t(20) = 2.26, p < .05; monolingual: t(19) = 2.28, p < .05]. A direct comparison of the two groups indicated that they did not differ in their looking at the mouth [t(39) = 0.57, ns]. Finally, at 12 months of age, the bilingual infants looked longer at the mouth [t(20) = 5.51, p < .01], whereas the monolingual infants did not [t(19) = 1.25, ns]. A direct comparison of the data from the two groups indicated that the bilingual infants looked longer at the mouth than did the monolingual infants [t(39) = 2.50, p < .025].
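The degrees of freedom in these comparisons follow from the group sizes: 21 bilingual infants give t(20), 20 monolingual infants give t(19), and a between-group comparison gives t(21 + 20 − 2) = t(39). The Python sketch below illustrates both kinds of test under assumptions that the text does not state explicitly: the within-group test is written as a one-sample t-test of eye-minus-mouth PTLT difference scores against 0, and the between-group test as a pooled-variance independent-samples t-test. Function names and data are hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def one_sample_t(diffs):
    """t statistic and df for testing whether the mean difference score is 0."""
    n = len(diffs)
    t = mean(diffs) / sqrt(variance(diffs) / n)
    return t, n - 1


def independent_t(x, y):
    """Pooled-variance t statistic and df for comparing two independent groups."""
    n1, n2 = len(x), len(y)
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * variance(x) + (n2 - 1) * variance(y)) / df
    t = (mean(x) - mean(y)) / sqrt(pooled_var * (1 / n1 + 1 / n2))
    return t, df


# Placeholder difference scores with the group sizes used at each age
# (21 bilingual, 20 monolingual); the values themselves are invented.
bilingual = [(-1) ** i * 0.01 * i - 0.10 for i in range(21)]
monolingual = [(-1) ** i * 0.01 * i + 0.05 for i in range(20)]

_, df_bilingual = one_sample_t(bilingual)        # df = 20
_, df_monolingual = one_sample_t(monolingual)    # df = 19
_, df_between = independent_t(bilingual, monolingual)  # df = 39
```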

For the analysis of responsiveness to non-native speech, we used the same mixed, repeated-measures ANOVA that we used for the analysis of responsiveness to native speech. This analysis yielded a main effect of AOI [F(1, 117) = 28.15, p < .01, η2 = .194] and an AOI × Age interaction [F(2, 117) = 26.78, p < .001, η2 = .314]. The planned comparison tests indicated that at four months bilingual infants looked equally at the eyes and mouth [t(20) = 0.12, ns] whereas monolingual infants looked longer at the eyes [t(19) = 2.70, p < .025]. A direct comparison of the two groups did not yield a significant difference. At eight months, both groups looked longer at the mouth [bilingual: t(20) = 3.35, p < .01; monolingual: t(19) = 5.80, p < .01], and a direct comparison indicated that the two groups did not differ in their looking at the mouth [t(39) = 1.26, ns]. Finally, at 12 months, both groups looked more at the mouth [bilingual: t(20) = 12.06, p < .01; monolingual: t(19) = 5.69, p < .01]. Crucially, however, the bilingual infants looked more at the mouth than did the monolingual infants [t(39) = 2.03, p < .05].

Discussion

Overall, we observed some similarities and some key differences between the bilingual and monolingual infants. First, at four months of age, monolingual infants looked longer at the eyes whereas the bilingual infants looked equally long at the eyes and mouth and they did so regardless of the language spoken by the talker. Second, at eight months of age both monolingual and bilingual infants looked longer at the mouth and they did so regardless of language spoken. Finally, at 12 months, the monolingual infants looked equally at the eyes and mouth in response to native speech and longer at the mouth in response to non-native speech, whereas the bilingual infants looked longer at the mouth in response to native and non-native speech.

General Discussion

Lewkowicz & Hansen-Tift (2012) found that English-learning American infants exhibit two shifts in the relative amount of selective attention that they devote to the eyes and mouth of a talker during the first year of life. The first shift, from the talker's eyes to her mouth, was found between four and eight months of age. The second shift, away from her mouth, was found by 12 months. We replicated these findings in a sample of monolingual infants growing up in a different cultural milieu and learning languages that are rhythmically different from English. Moreover, and consistent with our hypothesis, we found that bilingual infants generally took greater advantage of the redundancy of audiovisual speech cues than did the monolingual infants.

The main differences between the bilingual and monolingual infants were at four and 12 months. At four months, monolingual infants looked more at the eyes whereas the bilinguals did not, suggesting an earlier start of an attentional shift to the mouth in the bilinguals. At 12 months, the monolinguals looked equally at the eyes and mouth in response to native speech but more at the mouth in response to non-native speech. In contrast, the bilinguals looked longer at the mouth in response to both native and non-native speech, and longer than the monolinguals in both cases. At eight months, both monolingual and bilingual infants looked longer at the mouth. Overall, these findings support our prediction that bilingual infants are likely to maximally and efficiently exploit the highly salient audiovisual speech cues located in a talker's mouth. Presumably, this facilitates the bilinguals’ recognition and differentiation of both languages and, thus, the successful acquisition of both.

Certainly, the language recognition and differentiation problem becomes less pronounced with extended linguistic exposure. Initially in development, however, language differentiation in bilingual infants, especially in those exposed to rhythmically or phonologically close languages, is constrained by limited experience with linguistic input. Consequently, bilinguals must rely on available perceptual cues to build two distinct language systems and, as found here, bilinguals rely on the audiovisual speech located in a talker's mouth up through 12 months of age.

Our results provide the first evidence of a modulation of attention to the eyes and mouth of a talking face driven by differences in infants’ linguistic background (single vs. dual language input). Bilinguals' attention to audiovisual speech cues extends previous findings showing better discrimination of silent talking faces by bilingual than monolingual infants (Sebastián-Gallés et al., 2012; Weikum et al., 2007). Of course, our bilingual infants were learning two rhythmically proximal languages. This raises the question of whether bilingual infants learning more distant languages might also take greater advantage of redundant audiovisual cues. One possible answer is that rhythmical and phonological similarity/distance of two specific languages may modulate the degree to which infants take advantage of redundant audiovisual cues.

How might greater attention to a talker's mouth facilitate language acquisition in bilingual infants? Like their monolingual counterparts, bilingual infants show language-general phonetic discrimination abilities at four months of age (Bosch & Sebastián-Gallés, 2003), but unlike monolinguals, they find it challenging to recognize one of the two native languages purely on the basis of auditory input (Bosch & Sebastián-Gallés, 1997; Bosch & Sebastián-Gallés, 2001a). Our finding that bilingual infants begin to shift their attention to the redundant audiovisual speech cues earlier than monolinguals suggests that the greater perceptual salience of such cues probably helps bilinguals identify distinct language-specific features which help them keep the languages apart. Continued attention to redundant audiovisual speech cues through 12 months of age suggests that audiovisual information is still useful at that age not only for the acquisition of the perceptual attributes of the two languages but also for further gains in language acquisition.

Might redundant audiovisual speech continue to enhance language acquisition into later development and might it continue to play a different role in bilinguals than in monolinguals? Extant studies support both possibilities. For example, studies have shown that monolingual adults comprehend audiovisual speech better than auditory speech (Sumby & Pollack, 1954) and that adults rely on redundant visual speech when presented either with an ambiguous soundtrack (Lansing & McConkie, 2003) or with speech in noise (Vatikiotis-Bateson, Eigsti, Yano, & Munhall, 1998). Similarly, studies have found that bilingual adults find it easier to discriminate difficult-to-discriminate audible phonemes with the aid of concurrent visible articulations (Navarra & Soto-Faraco, 2007).

Acknowledgements

Supported by the Spanish Ministerio de Ciencia e Innovación (Grant PSI2010-20294 to F.P. and PSI2011-25376 to L.B.) and by the Eunice Kennedy Shriver National Institute of Child Health & Human Development (Grant R01HD057116 to D.J.L.). We thank Maria Teixidó for her assistance.

Footnotes

Author Contributions

Pons developed the study concept. Lewkowicz contributed to study design. Pons and Bosch collected the data. Pons performed data analyses. Pons, Bosch, and Lewkowicz wrote and approved the final manuscript.

References

- Bergeson TR, Pisoni DB, Davis RAO. Development of Audiovisual Comprehension Skills in Prelingually Deaf Children With Cochlear Implants. Ear and Hearing. 2005;26:149. doi: 10.1097/00003446-200504000-00004.

- Bijeljac-Babic R, Serres J, Höhle B, Nazzi T. Effect of Bilingualism on Lexical Stress Pattern Discrimination in French-Learning Infants. PLoS ONE. 2012;7:e30843. doi: 10.1371/journal.pone.0030843.

- Bosch L, Ramon-Casas M. First translation equivalents in bilingual toddlers’ expressive vocabulary: Does form similarity matter? International Journal of Behavioral Development. 2014;38:317–322.

- Bosch L, Sebastián-Gallés N. Native language recognition abilities in 4-month-old infants from monolingual and bilingual environments. Cognition. 1997;65:33–69. doi: 10.1016/s0010-0277(97)00040-1.

- Bosch L, Sebastián-Gallés N. Early language differentiation in bilingual infants. In: Cenoz J, Genesee F, editors. Trends in bilingual acquisition. John Benjamins Publishing Company; Amsterdam: 2001a. pp. 71–93.

- Bosch L, Sebastián-Gallés N. Evidence of early language discrimination abilities in infants from bilingual environments. Infancy. 2001b;2:29–49. doi: 10.1207/S15327078IN0201_3.

- Bosch L, Sebastián-Gallés N. Simultaneous bilingualism and the perception of a language-specific vowel contrast in the first year of life. Language and Speech. 2003;46:217–243. doi: 10.1177/00238309030460020801.

- Burns TC, Yoshida KA, Hill K, Werker JF. The development of phonetic representation in bilingual and monolingual infants. Applied Psycholinguistics. 2007;28:455–474.

- Byers-Heinlein K, Burns TC, Werker JF. The roots of bilingualism in newborns. Psychological Science. 2010;21:343–348. doi: 10.1177/0956797609360758.

- Byers-Heinlein K, Fennell CT. Perceptual narrowing in the context of increased variation: insights from bilingual infants. Developmental Psychobiology. 2014;56:274–291. doi: 10.1002/dev.21167.

- Costa A, Sebastián-Gallés N. How does the bilingual experience sculpt the brain? Nature Reviews Neuroscience. 2014;15:336–345. doi: 10.1038/nrn3709.

- Fenson L, Dale PS, Reznick JS, Thal D, Bates E, Hartung JP, Reilly JS. The MacArthur Communicative Development Inventories: User's Guide and Technical Manual. Paul H. Brookes Publishing Co.; Baltimore: 1993.

- Fernald A. Five-month-old infants prefer to listen to motherese. Infant Behavior and Development. 1985;8:181–195.

- Garcia-Sierra A, Rivera-Gaxiola M, Percaccio CR, Conboy BT, Romo H, Klarman L, Kuhl PK. Bilingual language learning: An ERP study relating early brain responses to speech, language input, and later word production. Journal of Phonetics. 2011;39:546–557.

- Kuhl PK, Meltzoff AN. The bimodal perception of speech in infancy. Science. 1982;218:1138–1141. doi: 10.1126/science.7146899.

- Lansing CR, McConkie GW. Word identification and eye fixation locations in visual and visual-plus-auditory presentations of spoken sentences. Perception & Psychophysics. 2003;65:536–552. doi: 10.3758/bf03194581.

- Lewkowicz DJ. Infant perception of audio-visual speech synchrony. Developmental Psychology. 2010;46:66–77. doi: 10.1037/a0015579.

- Lewkowicz DJ. Early experience and multisensory perceptual narrowing. Developmental Psychobiology. 2014;56:292–315. doi: 10.1002/dev.21197.

- Lewkowicz DJ, Ghazanfar AA. The decline of cross-species intersensory perception in human infants. Proceedings of the National Academy of Sciences USA. 2006;103:6771–6774. doi: 10.1073/pnas.0602027103.

- Lewkowicz DJ, Hansen-Tift AM. Infants deploy selective attention to the mouth of a talking face when learning speech. Proceedings of the National Academy of Sciences USA. 2012;109:1431–1436. doi: 10.1073/pnas.1114783109.

- Lewkowicz DJ, Pons F. Recognition of amodal language identity emerges in infancy. International Journal of Behavioral Development. 2013;37(2):90–94. doi: 10.1177/0165025412467582.

- Molnar M, Gervain J, Carreiras M. Within-rhythm class native language discrimination abilities of Basque-Spanish monolingual and bilingual infants at 3.5 months of age. Infancy. 2014;19:326–337.

- Morris SR. Clinical application of the mean babbling level and syllable structure level. Language, Speech, and Hearing Services in Schools. 2010;41:223. doi: 10.1044/0161-1461(2009/08-0076).

- Navarra J, Soto-Faraco S. Hearing lips in a second language: visual articulatory information enables the perception of second language sounds. Psychological Research. 2007;71:4–12. doi: 10.1007/s00426-005-0031-5.

- Oller DK, Eilers RE, Urbano R, Cobo-Lewis AB. Development of precursors to speech in infants exposed to two languages. Journal of Child Language. 1997;24:407–426. doi: 10.1017/s0305000997003097.

- Patterson ML, Werker JF. Matching phonetic information in lips and voice is robust in 4.5-month-old infants. Infant Behavior & Development. 1999;22:237–247.

- Pons F, Lewkowicz DJ, Soto-Faraco S, Sebastián-Gallés N. Narrowing of intersensory speech perception in infancy. Proceedings of the National Academy of Sciences USA. 2009;106:10598–10602. doi: 10.1073/pnas.0904134106.

- Putzar L, Goerendt I, Lange K, Rösler F, Röder B. Early visual deprivation impairs multisensory interactions in humans. Nature Neuroscience. 2007;10:1243–1245. doi: 10.1038/nn1978.

- Rosenblum LD. Speech perception as a multimodal phenomenon. Current Directions in Psychological Science. 2008;17:405. doi: 10.1111/j.1467-8721.2008.00615.x.

- Rosenblum LD, Schmuckler MA, Johnson JA. The McGurk effect in infants. Perception & Psychophysics. 1997;59:347–357. doi: 10.3758/bf03211902.

- Sebastián-Gallés N, Albareda-Castellot B, Weikum WM, Werker JF. A bilingual advantage in visual language discrimination in infancy. Psychological Science. 2012;23:994–999. doi: 10.1177/0956797612436817.

- Sebastián-Gallés N, Bosch L. Developmental shift in the discrimination of vowel contrasts in bilingual infants: is the distributional account all there is to it? Developmental Science. 2009;12:874–887. doi: 10.1111/j.1467-7687.2009.00829.x.

- Stein BE. The new handbook of multisensory processing. MIT Press; 2012.

- Sumby WH, Pollack I. Visual contribution to speech intelligibility in noise. Journal of the Acoustical Society of America. 1954;26:212–215.

- Sundara M, Polka L, Molnar M. Development of coronal stop perception: Bilingual infants keep pace with their monolingual peers. Cognition. 2008;108:232. doi: 10.1016/j.cognition.2007.12.013.

- Teinonen T, Aslin RN, Alku P, Csibra G. Visual speech contributes to phonetic learning in 6-month-old infants. Cognition. 2008;108:850–855. doi: 10.1016/j.cognition.2008.05.009.

- Tenenbaum EJ, Shah RJ, Sobel DM, Malle BF, Morgan JL. Increased Focus on the Mouth Among Infants in the First Year of Life: A Longitudinal Eye-Tracking Study. Infancy. 2013;18:534–553. doi: 10.1111/j.1532-7078.2012.00135.x.

- Vatikiotis-Bateson E, Eigsti I-M, Yano S, Munhall KG. Eye movement of perceivers during audiovisual speech perception. Perception & Psychophysics. 1998;60:926–940. doi: 10.3758/bf03211929.

- Weikum WM, Vouloumanos A, Navarra J, Soto-Faraco S, Sebastián-Gallés N, Werker JF. Visual language discrimination in infancy. Science. 2007;316:1159. doi: 10.1126/science.1137686.

- Werker JF, Tees RC. Speech perception as a window for understanding plasticity and commitment in language systems of the brain. Developmental Psychobiology. Special Issue: Critical Periods Re-examined: Evidence from Human Sensory Development. 2005;46:233–234. doi: 10.1002/dev.20060.