Abstract

Most approaches to visual prostheses have focused on the retina, and for good reasons. The earlier that one introduces signals into the visual system, the more one can take advantage of its prodigious computational abilities. For methods that make use of microelectrodes to introduce electrical signals, however, the limited density and volume-occupying nature of the electrodes place severe limits on the image resolution that can be provided to the brain. In this regard, non-retinal areas in general, and the primary visual cortex in particular, possess one large advantage: “magnification factor” (MF), a value that represents the distance across a sheet of neurons that represents a given angle of the visual field. In the foveal representation of primate primary visual cortex, the MF is enormous, on the order of 15–20 mm/deg in monkeys and humans, whereas on the retina, the MF is limited by the optical design of the eye to around 0.3 mm/deg. This means that, for an electrode array of a given density, a much higher-resolution image can be introduced into V1 than onto the retina (or any other visual structure). In addition to this tremendous advantage in resolution, visual cortex is plastic at many different levels, ranging from a very local ability to learn to better detect electrical stimulation to higher levels of learning that permit human observers to adapt to radical changes to their visual inputs. We argue that the combination of the large magnification factor and the impressive ability of the cerebral cortex to learn to recognize arbitrary patterns might outweigh the disadvantages of bypassing earlier processing stages and make V1 a viable option for the restoration of vision.

Keywords: primary visual cortex, V1, magnification factor, vision restoration, visual prosthesis, plasticity

1. The problem of resolution

It is a remarkable fact that so vital a sensory modality to us primates is dominated by such a tiny sensory epithelium. In fact, most of what the average person thinks of when she thinks of “seeing” is foveal vision, subserved by only about one percent of the retina’s total area1. One manifestation of this fact is the great surprise evoked in observers when the extremely poor acuity of their extra-foveal retina is demonstrated to them: more than a few degrees away from the fovea we are all legally blind, meaning our acuity falls past 20/200. The bottom line is that when we want to see something, we point our foveae at it2.

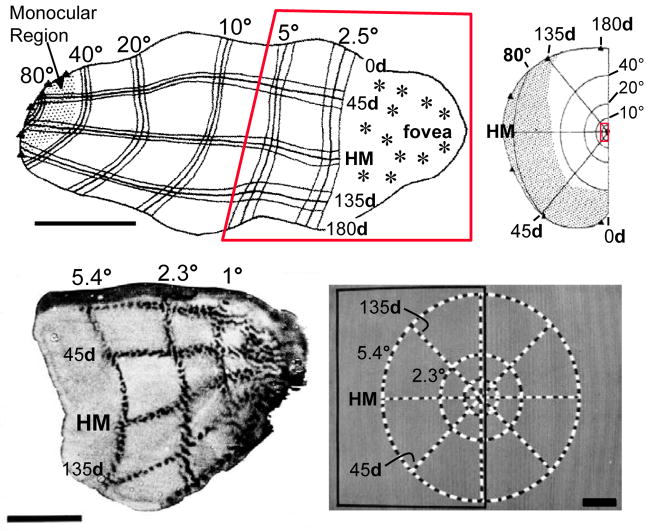

The basis for foveal acuity3 begins with the high density of cone photoreceptors in this region of the retina (1.6 × 10⁵ cones/mm² in the fovea; Wandell, 1995) and is preserved by the unique circuitry between the photoreceptors and the so-called “midget” retinal ganglion cells (Kolb & Dekorver, 1991; Kolb & Marshak, 2003). In the lateral geniculate nucleus (LGN), the next stage of visual processing, we already begin to see the over-representation of central vision. For example, in layer 6 of the monkey LGN, 20% of the retinotopic map is devoted to the central 5 degrees (0.5% of the visual field; Connolly & Van Essen, 1984; Malpeli & Baker, 1975). This trend continues and is greatly amplified in the projection from the LGN to V1, where nearly half (42%) of the entire surface of V1 is devoted to the central 5 degrees and about half of this half represents the fovea (figure 1; Tootell, Switkes, Silverman, & Hamilton, 1988; Van Essen, Newsome, & Maunsell, 1984).

Figure 1.

Retinotopic organization of macaque primary visual cortex. Top. Topography of V1 mapped with microelectrode recordings, from Van Essen et al. 1984. Bottom. Topography of peri-foveal V1 mapped using 2-deoxyglucose functional labeling, from Tootell et al. 1988. Scale bars: top left, 1 cm.; bottom left, 1 cm.; bottom right, 2°. For clarity, polar angles in degrees use the “d” symbol, whereas distances in the visual field in degrees use “°”.

For a visual prosthesis, the most relevant consideration determining the necessary electrode density is the distance that one must travel along neural tissue to cover one degree of the visual field, the “magnification factor” (MF). While there is variability in the literature, the most reliable consensus for the maximum MF in monkey V1 is 15–20 mm of cortex per degree of visual field, based on both electrophysiological recordings (Dow, Snyder, Vautin, & Bauer, 1981; Van Essen et al., 1984; fig. 1, top) and 2-deoxyglucose functional labeling (Tootell et al., 1988; Tootell, Silverman, Switkes, & De Valois, 1982; fig. 1, bottom). Roughly similar values have been reported in humans using the mapping of phosphenes produced by direct stimulation of visual cortex (Cowey & Rolls, 1974, based on data from Brindley & Lewin, 1968), the mapping of migraine-induced phosphenes (Grüsser, 1995) or functional magnetic resonance imaging (Duncan & Boynton, 2003; Engel et al., 1994; Sereno et al., 1995).

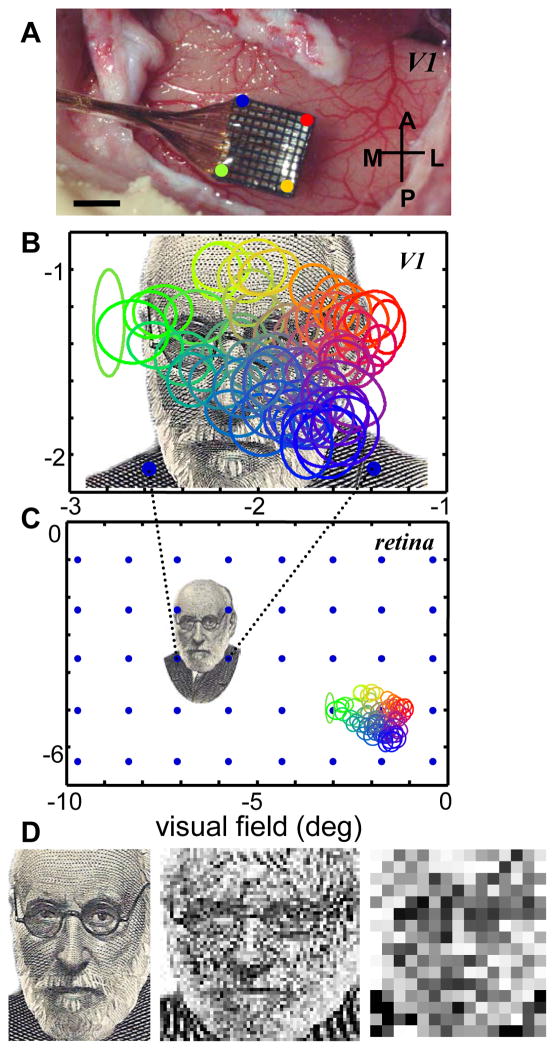

The enormous MF of foveal V1 means that it is possible to introduce a very high resolution representation of an image even with relatively coarse microelectrode arrays (MEAs)4. This is demonstrated graphically in figure 2. Panel A shows a conventional MEA, with a spacing between electrodes of 0.4 mm, that has been implanted in the perifoveal representation of V1 in a macaque monkey. Figure 2B shows the actual receptive fields mapped to each one of the 100 electrodes in the 10 x 10 MEA. That is, each small ellipse depicts the tiny part of the visual world represented by a particular neuron (or small cluster of neurons). This map is superimposed upon an image of Ramon y Cajal that subtends approximately 2 degrees of the visual field5, a size at which a human observer would easily be able to recognize the image. This image could be well sampled by a collection of such MEAs (see below), or, in the case of a visual prosthesis, the electrical representation of the image could be introduced into V1 with good spatial fidelity. Compare this with the same electrode array if it were implanted directly onto the retina (figure 2C). Here the interelectrode spacing would correspond to more than one degree in the visual field, and our image of Ramon y Cajal would be represented very coarsely indeed: at any given time, only about 4 small spots (blue dots in figure 2, bottom panel) would be sampled.

Figure 2.

Cortical versus retinal magnification factors. A. A 10 x 10 multi-electrode array (MEA) implanted in primary visual cortex of a macaque monkey. Scale bar, 2 mm. B. Receptive field (RF) map of the MEA shown in the top panel. Each ellipse is the 2-standard deviation size of a 2-dimensional Gaussian fit to the response profile for the multi-unit activity on each electrode. The ellipses are color coded to indicate which electrode on the MEA they were recorded from. The 100 electrodes densely tile an area of the visual field that is roughly 1.5 x 2 degrees. The two blue dots at the bottom indicate the distance between two electrodes on the MEA in retinal coordinates (below). C. The electrode spacing of the MEA projected onto the retina. Each blue dot would approximate the location of a retinal ganglion cell’s receptive field if the same MEA shown at top were implanted in the retina. The images of Ramon y Cajal and the MEA RF map are shown at the same size, in degrees of visual field, in the middle and bottom panels to highlight the large difference in spatial scales. D. Three images of Ramon y Cajal: the original image of Cajal (left, 406 x 300 pixels) and the same image down-sampled to either 58 x 60 pixels (middle) or 16 x 15 pixels (right). Panels A and B are unpublished data from the Born lab.
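The comparison between cortical and retinal sampling can be made concrete with a short calculation. The following is a minimal sketch that uses only numbers quoted in the text (a 0.4 mm electrode pitch, a maximum foveal V1 MF of 15–20 mm/deg and a retinal MF of about 0.3 mm/deg); the function and variable names are illustrative and do not come from any published analysis code.

```python
# Visual angle spanned by one inter-electrode gap for a given magnification factor.
# Pitch and MF values are those quoted in the text; all names are illustrative.

def degrees_per_electrode(pitch_mm: float, mf_mm_per_deg: float) -> float:
    """Return the visual angle (deg) covered by one electrode spacing."""
    if mf_mm_per_deg <= 0:
        raise ValueError("magnification factor must be positive")
    return pitch_mm / mf_mm_per_deg

PITCH_MM = 0.4  # spacing of the MEA in figure 2

SITES = {
    "foveal V1 (MF 15 mm/deg)": 15.0,
    "foveal V1 (MF 20 mm/deg)": 20.0,
    "retina (MF 0.3 mm/deg)": 0.3,
}

for site, mf in SITES.items():
    angle = degrees_per_electrode(PITCH_MM, mf)
    print(f"{site}: {angle:.3f} deg per electrode")
```

With these inputs the spacing corresponds to roughly 0.02 to 0.03 degrees in foveal V1 and about 1.3 degrees on the retina, consistent with the statement that the retinal spacing exceeds one degree. Note that the array in figure 2 sits in perifoveal V1, where the MF is lower than the foveal maximum, so its 100 electrodes cover about 1.5 x 2 degrees rather than a fraction of a degree.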

To get a better idea of the amount of information that could potentially be introduced into primary visual cortex, consider that the size of V1 in the macaque monkey is roughly 1200 mm² (Felleman & Van Essen, 1991) and human V1 is roughly twice this size (Sereno et al., 1995). The MEA pictured in figure 2 occupies only about 16 mm², or just over 1% of the available surface area in the monkey. Even if only 50% of the V1 surface area could be successfully implanted with electrodes, it could accommodate 37 such MEAs or 3700 electrodes, roughly equivalent to a 60 x 60 pixel image. For comparison, MEAs with the highest electrode density for retinal prostheses currently allow for an image that is about 15 x 15 pixels. To give the reader a sense of the corresponding degradation of image quality, in figure 2D we show the original image of Ramon y Cajal, first at its original resolution of 406 x 300 pixels, followed by down-sampled versions at 58 x 60 pixels and 16 x 15 pixels to simulate the original image as sampled by a cortical vs. retinal array.
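The electrode budget in this paragraph is simple arithmetic, sketched below for readers who want to vary the assumptions. The inputs (1200 mm² of macaque V1, 50% usable, 16 mm² per MEA, 100 electrodes per MEA) are the figures given in the text; the function name and the choice to round down to whole arrays and to a square image are illustrative assumptions.

```python
import math

def electrode_budget(v1_area_mm2, usable_fraction, mea_area_mm2, electrodes_per_mea):
    """Return (number of MEAs, number of electrodes, side of equivalent square image)."""
    usable_area = v1_area_mm2 * usable_fraction
    n_meas = math.floor(usable_area / mea_area_mm2)  # only whole arrays can be implanted
    n_electrodes = n_meas * electrodes_per_mea
    side = math.isqrt(n_electrodes)                  # largest square image that fits
    return n_meas, n_electrodes, side

# Values quoted in the text for macaque V1 and the MEA in figure 2.
n_meas, n_electrodes, side = electrode_budget(1200, 0.5, 16, 100)
print(n_meas, n_electrodes, side)  # 37 3700 60
```

Doubling the area for human V1, or changing the usable fraction, only requires changing the first two arguments.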

The above paragraph makes certain simplifying assumptions, such as the spatial regularity of sampling by a cortical array (see fig. 2B) and the use of a particular MEA geometry (see footnote #4), that will definitely not be achievable with a real cortical visual prosthesis. While this will present challenges for designers of such prostheses, we do not believe them to be insurmountable given the potential post-V1, pattern-recognition plasticity that we describe in section 5. Further, the point of this exercise is not to argue the merits of a particular MEA; closer electrode spacing will be achievable with improvements in manufacturing techniques. The point is that high resolution sampling is a serious problem that limits the usefulness of MEA-based retinal prostheses (e.g. Jepson et al., 2014; but see also Zrenner 2013; Loudin et al. 2011 for approaches to high-resolution retinal prostheses), whereas it is not a challenge for an MEA-based V1 prosthesis.

As should be clear from the above discussion, the LGN also possesses a large advantage in magnification factor over that of the retina (Connolly & Van Essen, 1984; Malpeli & Baker, 1975). Some have argued that its MF would be sufficient for a viable visual prosthesis (Pezaris & Reid 2007), and that the LGN’s location and topography may make it more readily targetable with depth electrodes as compared to V1, much of whose surface is convoluted and buried within sulci. (For a detailed discussion of these issues, see Pezaris & Eskandar, 2009).

Given the fact that parts of human V1 lie within sulci, one might wonder about using visual cortex beyond V1 as a more accessible entry point for visual signals. Though it is clear that visual sensations can be evoked by electrically stimulating extrastriate cortex (Brindley & Lewin 1968; Murphey et al. 2009) and some early areas have rather large perifoveal MFs (Schira et al. 2009), we think that such an approach is unlikely to succeed for a variety of reasons. First, receptive fields are much larger and cortical topography much coarser, resulting in a loss of effective sampling precision. Second, any given extrastriate area is much smaller in overall surface area than V1. Third, there is a tremendous fan-out of visual information after V1 with a resulting much greater degree of specialization of visual response properties, thus making it difficult to imagine how to activate an ensemble of neurons appropriate to a given desired percept. And finally, there is a steep decline in subjects’ ability to detect electrical stimulation of visual cortex as one moves anteriorly from the foveal representation in V1 (Murphey et al. 2009). All of these issues notwithstanding, it remains possible that extrastriate visual cortex is much more plastic than V1 and thus may be able to learn to extract structure from completely arbitrary patterns of inputs as long as they are lawfully related to the visual world. We address this issue of plasticity below, and the reader should keep in mind that all of these arguments may also pertain to cortex beyond V1, perhaps even more so. Only experiments using chronically implanted electrode arrays in extrastriate cortex of nonhuman primates will indicate whether this is a fruitful direction to pursue.

2. The problem of computational complexity

By the time normal visual signals reach V1, they have already undergone an enormous amount of processing, largely within the retina. The image input has already been filtered, in parallel, by over 20 separate channels (Masland & Raviola, 2000) each of which is computing some unique and complementary aspect of the visual scene (Werblin, Roska, & Balya, 2001)—presumably all of these are important for brain function at some level, though not all of them are critical for conscious vision. For example, one set of intrinsically photosensitive RGCs projects to hypothalamic areas that control circadian rhythms, and other RGCs are dedicated to measuring overall illumination levels that control pupil size. While these are important functions of the retina, they would not comprise an essential part of a visual prosthesis designed to restore form vision.

Of course, a retinal prosthesis has to deal with this same level of complexity. Even treatments that attempt to create directly light-sensitive bipolar cells or RGCs would suffer from this problem, albeit to a lesser degree. So, in this important sense, any visual prosthesis will sacrifice some or all of the retina’s computational power. The good news is that we already possess a sophisticated understanding of retinal circuitry, and modern approaches using dense electrode arrays combined with optogenetic tools promise to complete that understanding in the near future (e.g. Field et al., 2010). This means that much of what the retina does can be replaced with smart, front-end processing in the prosthetic camera. However, even if all of the retina’s parallel circuits could be faithfully reproduced in silico, the problem of interfacing the different outputs with the appropriate output channels (i.e. RGCs) would remain daunting. As we will argue below, though, it may be possible for the more plastic cortex to make better use of such pre-processed information.

A further difficulty, however, with introducing signals directly into V1, is that V1 itself is frighteningly complex. It is beyond the scope of this review to detail what is known about the nature of V1 circuitry—this has been done previously (e.g. Callaway, 1998; Nassi & Callaway, 2009; Sincich & Horton, 2005). A brief glance makes it plain that a huge number and variety of computations are taking place within V1. Within an approximately 2 x 2 mm area of cortex (including the full thickness across cortical layers) of a monkey is contained a complete range of orientation columns, combinations of inputs from the two eyes and other important visual features such as binocular disparity (an important cue for depth), color, direction of motion and spatial frequency for some small neighborhood of the visual field. This unit, named a “hypercolumn” by Hubel and Wiesel, is then repeated in a quasi-crystalline array to tile the entire visual field (Hubel & Wiesel, 1974). While a number of these computations are also reasonably well understood and could thus be reproduced, appropriately inserting such highly processed information into the appropriate micro-domains (i.e. the proper layers and columns) of the cortex would be impossible both practically and in principle, since this fine-scale organization is not sufficiently stereotyped across individuals. Thus, conceptually, cortical prosthesis designs ignore all of the beautifully detailed circuitry of V1 and essentially treat each hypercolumn as a pixel. We note, however, that even with a coarse electrode array (fig. 2, top), many electrodes within each hypercolumn would potentially permit both improved spatial representation (fig. 2, middle) and perhaps allow different electrodes to represent different features of the image—this latter possibility will be expanded upon in section 6 below.

3. Proof of concept: V1-evoked phosphenes in humans and monkeys

Intracortical microstimulation with currents as low as a few microamperes has been shown to elicit small, retinotopically localized visual percepts called “phosphenes” in humans (Schmidt et al., 1996). Similar stimulation protocols in V1 of monkeys can reliably elicit saccades to the region of the visual field corresponding to the stimulated neurons’ receptive fields (fig. 3; Bradley et al., 2005; Tehovnik, Slocum, Carvey, & Schiller, 2005). Coincidentally, in humans the minimal interelectrode spacing that reliably produced two distinct phosphenes was on the order of 0.5 mm (Schmidt et al., 1996), just slightly larger than the spacing on the MEA shown in figure 2. Microstimulation with pairs of electrodes in V1 of monkeys also suggests that sites within approximately 1 mm of each other can be integrated for enhanced detection, whereas sites separated by greater distances are treated as independent events that can be compared, but not integrated (Ghose & Maunsell, 2012). Taken together, these results suggest that the dimensions of the hypercolumn place strong constraints on the design of MEAs suitable for a V1 prosthetic device.

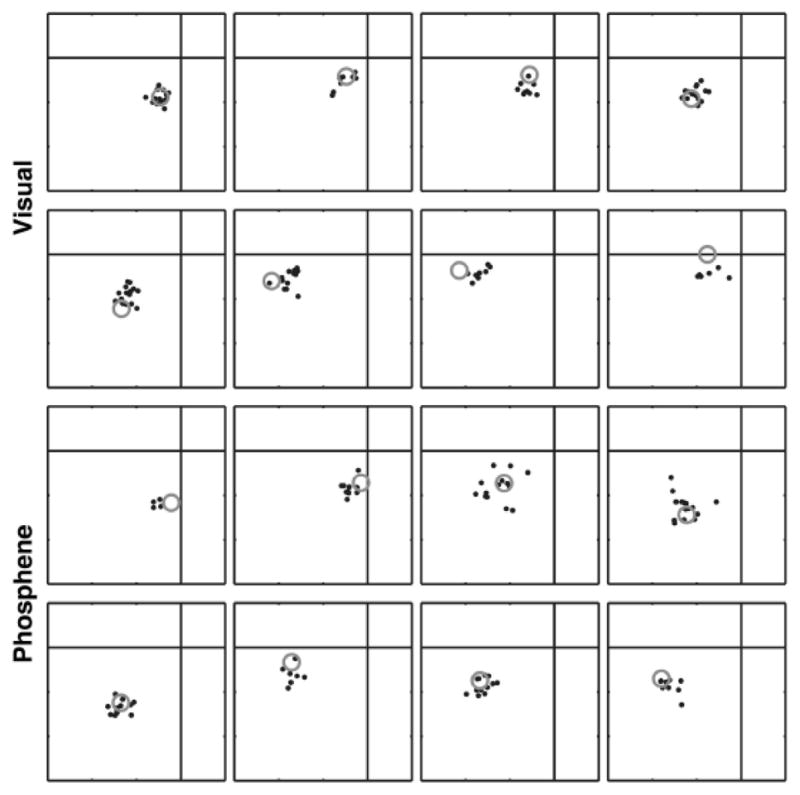

Figure 3.

A monkey makes memory-guided saccades to both visual targets (top two rows) and to phosphenes elicited by microstimulation of electrodes implanted in V1 (bottom two rows). Saccades to phosphenes are less accurate than those to visual targets, yet they still reliably track the retinotopic locations of the electrodes. The gray circles represent either the location at which the visual target was flashed (top) or the receptive field location of the neurons at the electrode that was stimulated. Small black dots depict the saccade end-point on each trial. Used with permission from Bradley et al. 2005.

There are a number of other important factors that affect the percept of phosphenes elicited by stimulation of the early visual pathways, such as the fact that they move with the eyes and are scaled in apparent size according to the vergence posture and accommodation of the subject. In addition, one might also think that it would be necessary to account for geometric distortions introduced by the retinotopic organization of V1 (fig. 1), integration of information from the two eyes and the appropriate dynamics of cortical stimulation. These issues have been treated in depth in previous publications (Schiller & Tehovnik, 2008; Schmidt et al., 1996; Tehovnik & Slocum, 2007; Tehovnik et al. 2009; Schiller et al. 2011; Tehovnik & Slocum 2013), and we will not elaborate upon them here.

Our point of departure for what follows is that all previous approaches have been based on the premise that the functional circuitry of the visual system is rather fixed in the adult. However, recent work has indicated a certain degree of plasticity of adult cortex, even in primary sensory areas. In the following section, we briefly review the literature on cortical plasticity with a special emphasis on that demonstrated in sensory cortex of adult animals.

4. Local cortical plasticity: teaching an old dog to recognize new patterns

Early in postnatal development, the cortex is remarkably plastic. A number of studies document the ability of cortical networks to profoundly and adaptively re-wire following changes in sensory input patterns—most noteworthy are those that show development of novel pattern selectivity in auditory cortex when visual inputs are re-routed to the auditory thalamus (reviewed in O’Leary 1989; Horng & Sur, 2006). However, there is a limited developmental window in which this plasticity is observed, giving rise to the concept of a “critical period” (Hensch, 2005; Hubel, 1988). Understanding this form of plasticity is crucial for the treatment of childhood visual disorders, but it is of limited relevance to the topic of visual restoration in blind adults, since the critical period has long since ended. However, these studies imply that cortical prostheses are likely to be ineffective in patients who have not had normal vision during the critical period. Moreover, the possibility that visual cortex can be recruited to perform other functions, such as Braille reading in early blind patients (Cohen et al. 1997), suggests that any visual prosthesis is likely to be most effective in people who have more recently lost visual function.

The topic of adult cortical plasticity is more controversial and has been reviewed extensively (Wandell & Smirnakis 2009). Early studies indicated that following small retinal lesions, V1 neurons whose receptive fields fell within the induced scotoma6 became responsive to adjacent regions of the spared visual field (C. I. Baker, Peli, Knouf, & Kanwisher, 2005; Gilbert & Li 2012). However, subsequent experiments (e.g. Smirnakis et al. 2005) have challenged the interpretation of this finding as true plasticity. It remains possible that some of the apparent plasticity could have been due to the existence of rare neurons with large receptive fields that spanned the border of the scotoma prior to the retinal lesion. Nevertheless, more recent experiments in which local inhibitory circuits were optogenetically mapped in somatosensory cortex of adult mice before, during and after recovery from sensory deprivation (whisker trimming) have revealed an impressive degree of plasticity in this circuitry (Kätzel & Miesenböck, 2014). Conversely, and perhaps more relevant to a cortical prosthesis, over-activation of local patches of cortex using microstimulation results in an expanded representation of the feature preferred by the stimulated site (reviewed in Histed, Ni, & Maunsell, 2012; see also Talwar & Gerstein, 2001). The examples provided by deprivation and over-stimulation likely represent different sides of the same coin, showing that experience-dependent plasticity exists to some degree in the adult brain.

The above studies indicate that local connections in the adult cortex may be at least partially modifiable, but can such mechanisms actually support learning? Two sets of studies suggest that they can. The first set involves the use of spike-triggered microstimulation in which the spiking activity of a nearby reference neuron (measured with a separate, non-stimulating electrode) controls the timing of the stimulation. Thus the artificially stimulated neurons are activated contingent upon activity in the reference neuron—a condition that might be expected to produce learning (Rescorla, 1968). This manipulation does in fact produce enhanced correlations between the reference and target neurons (Jackson, Mavoori, & Fetz, 2006; Rebesco & Miller, 2011; Rebesco, Stevenson, Körding, Solla, & Miller, 2010; Song, Kerr, Lytton, & Francis, 2013) suggesting that cortical plasticity is sensitive to the statistical dependencies between the activities of nearby neurons even if those dependencies are entirely arbitrary.

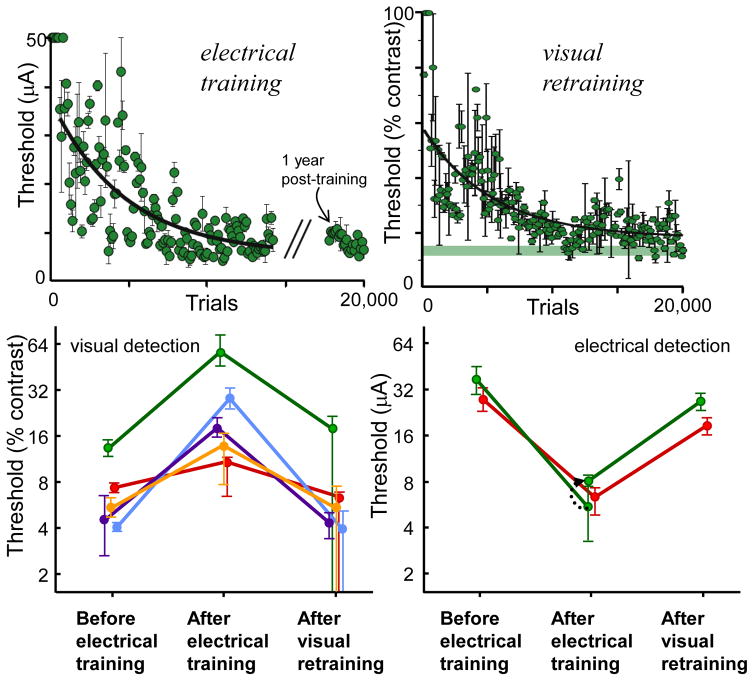

A second set of experiments, performed by Ni and Maunsell (2010), further demonstrates the ability of adult primary sensory cortex to learn. In a two-interval forced-choice task, monkeys were required to detect either a small visual stimulus in a given region of the visual field or microstimulation in the retinotopically corresponding region of V1. For either cue, prolonged training substantially lowered detection thresholds (fig. 4, top). The critical observation was that learning to detect the microstimulation disrupted the monkeys’ ability to detect the visual stimulus and vice versa (fig. 4, bottom). These changes were reversible and confined to roughly the same V1 hypercolumn (i.e. sites more than 2 or 3 mm away were unaffected by training), indicating that the local circuitry had somehow reconfigured to better detect the particular patterns of activity that were behaviorally relevant. This strongly suggests that the circuitry of cortex can adapt to consistent input patterns, even if those input patterns are distinctly unnatural, as is the case with microstimulation. We believe that this result bodes well for visual prostheses in general, but is particularly relevant for those relying on cortical microstimulation to introduce visual signals.

Figure 4.

Macaque monkeys learn to better detect microstimulation in V1. Top left. Threshold currents for detection of an electrical stimulus during a two-interval forced-choice task. The monkey’s performance improved exponentially with a τ of 4700 trials and an asymptote of 5.4 μA. Even after one year of not performing the task, the monkey’s current detection thresholds remained low. Error bars indicate 67% confidence intervals. Top right. After electrical training, visual thresholds at the same V1 site were dramatically increased, but returned to normal after visual retraining (τ of 5300 trials and an asymptote of 18% contrast). Each point is the threshold determined from 100 trials. Bottom. Reciprocal nature of changes in detection thresholds for visual (left) and electrical (right) stimuli at different V1 sites (colors). Error bars indicate 95% confidence intervals.

Used with permission from Ni & Maunsell 2010
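The exponential improvement described in the caption can be written as threshold(n) = asymptote + (start − asymptote) · exp(−n/τ). The sketch below evaluates this form using the τ of 4700 trials and the 5.4 μA asymptote reported in the caption. The starting threshold is not given in the text, so the value used here is a purely hypothetical placeholder, and the function names are illustrative.

```python
import math

def excess_fraction(trials: float, tau_trials: float) -> float:
    """Fraction of the initial excess over the asymptote that remains after `trials`."""
    return math.exp(-trials / tau_trials)

def threshold_ua(trials, start_ua, asymptote_ua=5.4, tau_trials=4700):
    """Exponential approach of the detection threshold (in microamperes) to its asymptote."""
    return asymptote_ua + (start_ua - asymptote_ua) * excess_fraction(trials, tau_trials)

TAU = 4700                    # from the figure caption
HYPOTHETICAL_START_UA = 50.0  # placeholder only; the initial threshold is not stated in the text

for multiple in (0, 1, 2, 3):
    n = multiple * TAU
    print(n, f"{excess_fraction(n, TAU):.2f}", f"{threshold_ua(n, HYPOTHETICAL_START_UA):.1f}")
```

Whatever the true starting value, this form implies that about 37% of the initial excess over the asymptote remains after one τ and about 5% after three τ, which conveys how much training such a learning curve demands.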

Of course, plasticity in response to microstimulation could conceivably also produce long-term effects that are maladaptive, as is seen, for example, with the phenomenon of kindling (McNamara et al. 1980). While primary visual cortex does not appear to be as susceptible to this pathological form of plasticity, it serves to remind us of the necessity of performing additional long-term microstimulation experiments in animals to verify that introducing signals to the visual system in this way is both safe and effective. It will be especially important to demonstrate that the electrically induced phosphenes can be not only detected, as demonstrated by Ni and Maunsell (2010), but also serve as the basis for the recognition of objects. The human subject studied by Schmidt et al. (1996) was able to group multiple phosphenes into the representation of a vertical line (p. 518), but this is still a long way from true object recognition.

In sum, there is evidence that some forms of experience-dependent plasticity continue to exist into adulthood and that they can mediate a form of learning that would be conducive to a V1 visual prosthesis. However, these are purely local mechanisms. The process of seeing requires the recovery of structure based on the detection of regularities at higher levels of the visual hierarchy and on the integration of this information with exploratory movements (so-called “active sensing,” discussed below) in order to guide behavior. In the next section, we explore results indicating that not only can cortex adapt to detect non-natural inputs but that adaptation also occurs at higher levels of the visual system and is complete enough to inform perception and guide behavior.

5. High-level plasticity: “experiments with goggles” and the like

Beginning in the late 1920s, psychologists examined the ability of human subjects to adapt to various distortions of the visual world (reviewed in Kohler, 1962; for earlier work with inverting prisms, see Stratton, 1897). Many of their results are nothing short of astonishing. For example, simple prisms displace the image on the retina, convert straight edges to curved ones and create rainbow fringes along contrast borders. All of these distortions are completely eliminated over the course of several days (Gibson, 1933; Kohler, 1962; R. T. Born, unpublished observations). It is not simply that subjects learn to ignore the distortions and/or behaviorally compensate for them—they actually disappear, giving way to normal perception and, when the prisms are removed, the subject experiences perceptual distortions in the opposite direction (i.e. the fringes are of the complementary color and the direction of curvature reverses). Even the dynamic distortions created by the prism goggles—such as expansions and contractions along the horizontal meridian produced by combinations of head and eye movements—disappear over the course of several weeks, again to return in opposing directions when the goggles are removed. In the most extreme versions of these experiments, subjects adapted to conditions in which their visual world was inverted or right-left reversed.7 The lesson we take from these experiments is that the brains of adults who have grown up with normal vision have built a powerful internal model of the external world (Barlow, 1994) and that distortions that are prolonged and consistent can be corrected. Again, this sort of result appears to be good news in terms of restoring vision to adults who have become blind after the critical period. It is currently impossible to say at which level of the nervous system this plasticity occurs, but from the perspective of a satisfactory restoration of vision, it would hardly seem to matter.

Additional insights concerning high-level plasticity, and the nature of perception in general, have been learned from efforts dedicated to replacing one sensory modality, such as vision, with another modality, most commonly touch (Bach-y-Rita, Collins, Saunders, White, & Scadden, 1969; Maidenbaum, Abboud, & Amedi, 2014) or audition (Auvray, Hanneton, & O’Regan, 2007; Meijer, 1992). We should first point out that such devices appear to have been a practical failure: despite promising early results in laboratory settings and a long history of development, these devices are currently not in wide use among the blind (Lenay, Gapenne, Hanneton, Marque, & Genoulle, 2003; though, see Maidenbaum et al., 2014). Even so, we believe these efforts have taught us much about the brain’s ability to extract meaningful information from unusual, and in some cases highly abstract, sensory data.

In the 1960s Paul Bach-y-Rita and colleagues modified a dental chair to accommodate 400 solenoid stimulators in a 20 x 20 grid so that images from a camera could be converted into a tactile matrix applied to the subject’s back. After extensive practice, this device allowed blind users to distinguish large letters and objects (Bach-y-Rita et al., 1969). Since then multiple versions of similar devices have been built and tested, projecting an image as tactile information onto the chest, back, fingertips, thigh or tongue (for review, see Lenay et al., 2003).

The first major lesson that these devices have taught us is the critical nature of active sensation. If the subjects were presented with a static form on the tactile matrix, no sensation other than a vague tickling was experienced. It was only when the subjects could manipulate the camera that a sense of objects in an external space could develop. This sense of space could be quite vivid—for example, when the lens of the camera was zoomed in (unbeknownst to the subjects), the subjects took evasive action appropriate for a looming object (Lenay et al., 2003), and they did this regardless of whether the tactile stimulation itself was applied to the chest or the back. Another interesting feature of these experiments is that the subjects could extract spatial information regardless of how they moved the camera, whether mounted on the head via spectacles or held in the hand. This suggests that the brain is capable of making complex spatial transformations through the learning of correlations between movements and their sensory consequences.

A second lesson, learned especially from vision-to-audition substitution systems8, is that subjects can learn to extract spatial information from codes that are fundamentally non-spatial. For tactile devices, there is no problem with spatial dimensions: a two-dimensional image is translated onto a two-dimensional tactile array. Auditory signals, by contrast, do not map directly onto two spatial dimensions, so a new code had to be created. One system, known as “vOICe” (Meijer, 1992), maps the rows of a 64 x 64 image to different frequencies: higher frequencies for the top and lower frequencies for the bottom of the image9. Each frequency is modulated in loudness according to the brightness of the corresponding pixel, and the image is presented as a left-to-right scan over time, typically requiring one second per full image. Both sighted and blind users can learn to localize and recognize objects after prolonged training periods, though such functionality is typically demonstrated in unnaturally easy conditions, where objects are presented in the absence of clutter and occlusion, and even under these conditions performance is slow and not completely reliable (Auvray et al., 2007). Nevertheless, such results are promising because they illustrate the brain’s ability to learn new, abstract codes even in adults.
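To illustrate the kind of code described above, here is a minimal sketch of an image-to-sound mapping in which rows set frequency, brightness sets loudness, and columns are played left to right over one second. It is not the actual vOICe implementation: the frequency range, the logarithmic spacing of frequencies, the sample rate and the use of summed sine waves are all assumptions made for this example.

```python
import math

def row_frequencies(n_rows, f_low_hz=500.0, f_high_hz=5000.0):
    """Assign one frequency per image row: top row highest, bottom row lowest.
    The range and the log spacing are assumptions for this sketch."""
    if n_rows == 1:
        return [f_high_hz]
    ratio = f_low_hz / f_high_hz
    return [f_high_hz * ratio ** (r / (n_rows - 1)) for r in range(n_rows)]

def encode_image(image, scan_seconds=1.0, sample_rate_hz=16000):
    """Convert a brightness image (rows of values in 0..1, row 0 at the top)
    into audio samples by scanning the columns from left to right."""
    n_rows, n_cols = len(image), len(image[0])
    freqs = row_frequencies(n_rows)
    samples_per_col = int(scan_seconds * sample_rate_hz / n_cols)
    samples = []
    for col in range(n_cols):                      # left-to-right scan over time
        for i in range(samples_per_col):
            t = (col * samples_per_col + i) / sample_rate_hz
            # Each row contributes a sine wave whose loudness follows pixel brightness.
            value = sum(image[row][col] * math.sin(2 * math.pi * freqs[row] * t)
                        for row in range(n_rows))
            samples.append(value / n_rows)         # keep the sum within [-1, 1]
    return samples

# A tiny 4 x 4 test image with a single bright pixel in the second column.
image = [[0, 0, 0, 0],
         [0, 1, 0, 0],
         [0, 0, 0, 0],
         [0, 0, 0, 0]]
audio = encode_image(image)
print(len(audio))
```

In this toy example the sound is silent during the first quarter of the scan and contains a single tone during the second quarter, so horizontal position is carried by time and vertical position by pitch. The listener must learn this non-spatial code in order to recover spatial layout.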

Finally, experiments with so-called “brain-computer interfaces” (BCIs) have revealed the ability of cortical motor networks to learn new codes with which to produce “movements.” In these experiments, electrical signals from a population of neurons in motor cortex of alert monkeys are mapped, via a decoding algorithm, to produce movement of a cursor on a computer screen in a virtual space of either two (Ganguly & Carmena 2009; Chase et al. 2012) or three (Jarosiewicz et al. 2008) dimensions. The experimenter has complete control over the mapping between neural activity and cursor movement, allowing arbitrary perturbations to be introduced. Remarkably, the animal’s performance and the underlying neural tuning curves showed partial adaptation to such perturbations in just a few hundred trials (Jarosiewicz et al. 2008; Chase et al. 2012), and near perfect performance was attained after several days, even when the perturbation consisted of a completely random re-mapping (Ganguly & Carmena 2009). We do not know whether such results will generalize to visual cortex; however, the finding that normal human visual cortex that has been visually deprived for as little as five days can be recruited for improved somatosensory discrimination (Merabet et al. 2008) suggests a similar degree of high-level plasticity. Moreover, such results provide additional motivation to explore the possibility that new, more abstract and more efficient codes might improve the ultimate functionality of visual prostheses.
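To illustrate what it means for the experimenter to control the mapping, the sketch below uses a population-vector decoder with cosine-tuned units. This is one commonly used decoding scheme, chosen here for simplicity; it is an assumption and not a description of the decoders in the cited studies. All names and parameter values (`cosine_rates`, `population_vector`, `baseline`, `depth`) are hypothetical.

```python
import math


def cosine_rates(move_deg, preferred_deg, baseline=10.0, depth=8.0):
    """Firing rate of each unit for an intended movement direction (cosine tuning)."""
    return [baseline + depth * math.cos(math.radians(move_deg - p))
            for p in preferred_deg]


def population_vector(rates, decode_deg, baseline=10.0):
    """Decoded cursor direction: rate-weighted sum of each unit's assigned direction."""
    x = sum((r - baseline) * math.cos(math.radians(d))
            for r, d in zip(rates, decode_deg))
    y = sum((r - baseline) * math.sin(math.radians(d))
            for r, d in zip(rates, decode_deg))
    return math.degrees(math.atan2(y, x)) % 360.0


preferred = [k * 45.0 for k in range(8)]   # eight units, evenly spaced tuning
rates = cosine_rates(90.0, preferred)      # the animal intends to move "up"

# Intact mapping: the decoder uses each unit's true preferred direction.
intact_deg = population_vector(rates, preferred)

# Perturbed mapping: the decoder's directions for the first four units are
# rotated by 90 degrees, so the same neural activity moves the cursor elsewhere.
perturbed_map = [p + 90.0 if i < 4 else p for i, p in enumerate(preferred)]
perturbed_deg = population_vector(rates, perturbed_map)
```

With the intact mapping the decoded direction matches the intended one (90 degrees). With the perturbed mapping the same activity yields a cursor direction of about 135 degrees, an error that can only be removed if the neural activity itself changes.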

6. Final speculations: Can V1 learn a new code?

The question of plasticity is particularly relevant when considering the code by which signals are introduced into V1, where “code” refers to how the visual inputs are pre-processed before being converted into electrical stimulation. Above, we mention the possibility of treating each electrode like a pixel. This approach seems reasonably conservative: the retinotopic arrangement of V1 roughly parallels the pixel-by-pixel sampling of the interfacing camera, and treating each electrode like a pixel preserves this relationship. It is a natural code in the sense that it is one that V1 already uses.

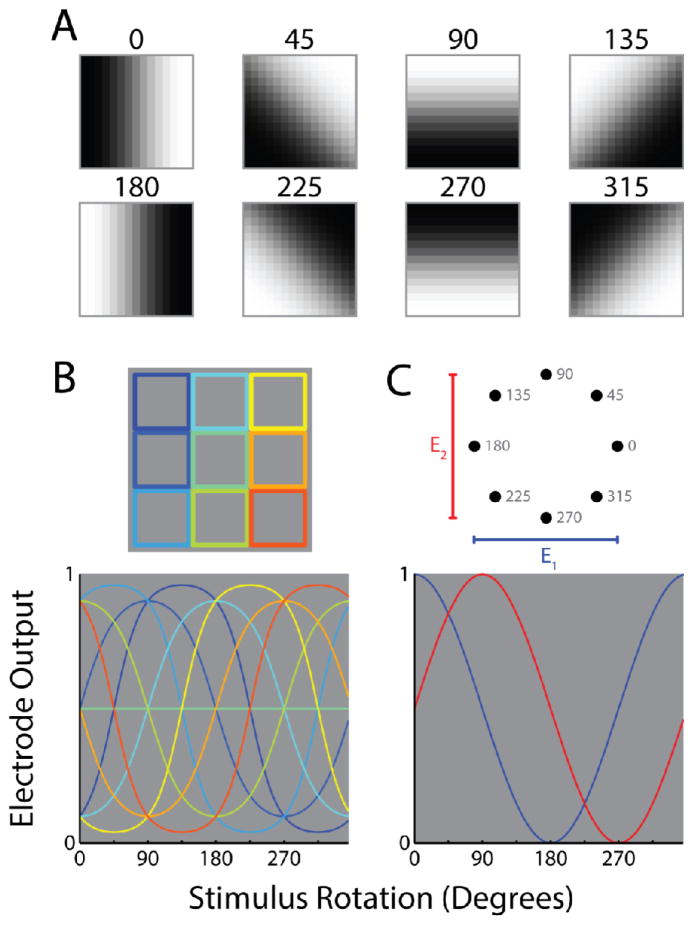

This makes the reasonable assumption that the best code is the one that V1 will best “understand.” This would certainly entail pre-processing of the image to instantiate retinal operations, such as center-surround opponency, as described in Section 2. But given the plasticity of cortex documented in the sections above, we speculate that V1 (and the rest of the visual system) might be able to learn new codes. And there certainly exist codes that represent visual information in sparser and more efficient ways, which may offer a considerable practical advantage in allowing fewer electrodes to deliver the same amount of visual information. Figure 5 demonstrates this idea for a simple “quadrature code” that is well established in engineering disciplines. The top row depicts eight possible visual stimuli, each of which could be effectively summarized by the orientation and sign of the contrast edge (the stimuli rotated by 0° and 180° have identical orientations but opposite signs of contrast). An inefficient pixel-to-electrode code (fig. 5B) maps each pixel of the downsampled 3 x 3 stimulus to a separate electrode, thus requiring nine electrodes. A more efficient way to communicate the same information (fig. 5C) would be to first pre-process the stimulus to extract the dominant orientation and sign of contrast, and then use this information to vary the stimulation intensity at each of only two electrodes. This essentially produces outputs proportional to the cosine and sine of the underlying rotation, respectively, and would increase coding efficiency by a factor of 9/2.

Figure 5.

A new quadrature code for vision. A. Eight rotations of a contrast edge symbolizing an image fragment from an external stimulus. B. The default, “pixel-to-electrode” code. In this example the image fragments in A are downsampled into a 3 pixel by 3 pixel representation. The intensity of each pixel maps onto a distinct electrode. Top, the portion of each image fragment that feeds into each pixel/electrode. Bottom, the corresponding electrode outputs over the full range of stimulus rotations. C. A hypothetical, “non-natural” code. This code calculates the dominant orientation and sign of the stimulus and represents this information using 2 electrodes. The first electrode transmits a signal proportional to the cosine of the calculated rotation and the second transmits a signal proportional to its sine; such signals are said to be in quadrature, as they are out of phase by 90 degrees. Top, the “coordinates” of the 2 electrode outputs at each of the rotations shown in A. Bottom, the corresponding electrode output over the full range of stimulus rotations.
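The two-electrode code of Figure 5C can be sketched in a few lines. The function names and the `gain` parameter are assumptions made for illustration. The signals here are signed, whereas a real device would presumably have to map them onto a permissible range of stimulation intensities; that step is omitted.

```python
import math


def quadrature_encode(rotation_deg, gain=1.0):
    """Return the two electrode signals for a given stimulus rotation.

    Electrode 1 is proportional to the cosine of the rotation, electrode 2
    to its sine, so the two signals are 90 degrees out of phase.
    """
    theta = math.radians(rotation_deg)
    return gain * math.cos(theta), gain * math.sin(theta)


def quadrature_decode(e1, e2):
    """Recover the rotation, in degrees within [0, 360), from the two signals."""
    return math.degrees(math.atan2(e2, e1)) % 360.0


# The eight rotations of Figure 5A, in 45-degree steps.
rotations = [k * 45 for k in range(8)]
recovered = [quadrature_decode(*quadrature_encode(r)) for r in rotations]

# Nine electrodes for the 3 x 3 pixel code versus two for the quadrature code.
efficiency_gain = 9 / 2
```

Because rotations of 0° and 180° produce signals of opposite sign on the first electrode, the code preserves the sign of contrast as well as the orientation. Each of the eight stimuli therefore remains distinguishable with only two electrodes.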

This thought experiment leaves us with two questions. Which codes make the best use of the fewest electrodes? And which codes will V1 understand? The relative importance of these two questions, however, is determined by the answer to the question we have been discussing: how plastic are the cortical and perceptual mechanisms within and beyond V1? Given sufficient plasticity, prostheses using V1 as an entry point could potentially overcome the limitation of low electrode density by preprocessing and condensing the visual information before converting it to electrical stimulation.

We have reviewed studies suggesting that, over time, the adult brain may be capable of extracting information provided through a condensed, non-natural code. The upshot of these studies is that plasticity exists into adulthood with regard to both low-level, local mechanisms (Section 4) and higher-level perception and action (Section 5). In the cortex, these plasticity mechanisms appear to depend on the temporal coincidence between the activities of neighboring neurons (Jackson et al., 2006; Rebesco et al., 2010; Rebesco & Miller, 2011). A prosthesis that faithfully translates the external environment into a cortical input will impose patterns of coincidence, and with sufficient experience, the patterns most fundamentally related to the structure of the external environment should occur often enough to meaningfully drive the reorganization of cortical circuits in the vicinity of the stimulating array. Provided the external sources of the signals are stable, a lawful relationship will exist between the active manipulations of the viewing device (i.e. head movements that change the viewpoint of the camera) and the resulting transformations of the signals across the array. In the case of a pixel-to-electrode code, the relationship is straightforward. For example, if the user turns her head to the right slightly, the signals on the electrode array will shift some number of electrodes to the left. Any single snapshot of the signals on the array may fail to resolve key descriptions of the visual environment because many possible stimuli could give rise to the same input. However, the number of possible stimuli that could give rise to the sequence of snapshots strung together by active sensing is comparatively small. The important point is that, as has been demonstrated through experiments in sensory substitution (Lenay et al., 2003), the brain is well equipped to use active sensing to extract the structure of the signals that impinge upon it.
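A toy one-dimensional example makes both points explicit: the lawful shift of signals under a change of viewpoint, and the way a sequence of snapshots narrows down the possible stimuli. The "worlds", the four-electrode array, and the function names are hypothetical and chosen only for illustration.

```python
def snapshot(world, gaze, n_electrodes=4):
    """Pixel-to-electrode code: electrode i carries the pixel at position gaze + i."""
    return world[gaze:gaze + n_electrodes]


def scan(world, gazes, n_electrodes=4):
    """Sequence of snapshots produced by moving the camera through several gazes."""
    return [snapshot(world, g, n_electrodes) for g in gazes]


world_a = [0, 1, 1, 0, 1, 0, 0, 1]
world_b = [0, 1, 1, 0, 1, 0, 1, 1]   # differs from world_a only at position 6

# Turning the camera one pixel to the right shifts the pattern one electrode left.
first = snapshot(world_a, 1)    # [1, 1, 0, 1]
second = snapshot(world_a, 2)   # [1, 0, 1, 0]
shifted_left = second[:-1] == first[1:]

# A single snapshot cannot tell the two worlds apart, but a scan can.
same_single_view = snapshot(world_a, 1) == snapshot(world_b, 1)
different_sequences = scan(world_a, [1, 2, 3]) != scan(world_b, [1, 2, 3])
```

Both worlds produce identical input at the first viewpoint, yet the three-snapshot scan differs between them. In this sense the set of stimuli consistent with an actively acquired sequence is smaller than the set consistent with any one snapshot.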

The intersection between the type of learning acquired through active sensing and the type of learning permitted through plasticity within V1 is not at all clear. The alternative code used as an example above would require that the brain learn to appropriately combine the signals between the pair of electrodes that encode the same region of visual space. This could be facilitated both by the appropriate electrode spacing (Ghose & Maunsell, 2012) and by active sensing, which would enforce the tendency for head movements to result in translations of related pairs of signals. Alternatively, the structure of the inputs themselves (and the patterns they create across the input array) may drive local plasticity towards the extraction of those patterns, which may in turn facilitate higher-order pattern learning, such as that developed through active sensing.

To our knowledge, no studies have specifically asked whether plasticity mechanisms in the adult brain support the learning of a new code if normal sensory inputs are replaced with visually derived inputs through a cortical prosthesis. As such, the questions raised in this section await empirical answers and the viewpoints offered above are intended purely as provocative speculation that we hope will stimulate future research. Studies that directly investigate the conditions under which adult cortex adaptively reorganizes in response to arbitrarily patterned input will go a long way towards resolving the question of whether a cortical entry point for visual restoration makes sense. These studies will need to show that any reorganization is specific to the patterns that drive it and that this reorganization improves the representation of whatever information those patterns contain.

Highlights.

V1 has a large magnification factor, allowing for a high-resolution visual prosthesis.

V1 of adult monkeys can “learn” to better detect electrical signals over time.

Cortical plasticity may allow more efficient and abstract visual codes to be used.

Acknowledgments

We are grateful to John Maunsell for helpful comments on the manuscript. This work was supported by NIH EY11379 to RTB.

Footnotes

1. This is a rough estimate. There are a number of different definitions of the fovea based on factors ranging from anatomical features, such as the “foveal pit” (Polyak, 1941; Schein, 1988), to psychophysical measurements of absent rod vision or the subject's preferred locus of fixation (Putnam et al., 2005).

2. The peripheral retina obviously plays a critical role in alerting the observer to potential threats, usually involving moving objects. We largely ignore this function here, but suggest that a visual prosthesis might provide this function through some other means such as an audible warning when out-of-view motion is detected.

3. Acuity can be measured in a variety of ways including with sinusoidal gratings, letters of various sizes (e.g. Snellen) and a vernier task. Each of these yields slightly different measures of acuity. See Westheimer (1992) for an excellent treatment of this topic.

4. We use square MEAs with regularly spaced electrodes in this and other examples solely because these are the types of arrays for which we have experimental data in monkeys. There is no reason that a cortical visual prosthesis would need to adhere to this design. In fact, because of the irregular, folded nature of much of human V1, a more flexible, irregular design would likely be required.

5. This is approximately the angle of the visual field subtended by the width of your thumb held at arm's length.

6. The “scotoma” is defined as the region of cortex corresponding to the lesion in the retina. For lesions of the early visual pathways, the scotoma is manifest as a localized region of blindness in the visual field.

7. An original movie of one of Erismann’s subjects adapting to inverting goggles can be viewed at: http://www.awz.uni-wuerzburg.de/archiv/film_foto_tonarchiv/filmdokumente/th_erismann_ikohler/die_umkehrbrille_und_das_aufrechte_sehen/

8. Such systems should not be confused with the ability of some blind humans to echolocate using the reflected sounds from clicks that they produce with their tongues. This remarkable feat is beyond the scope of this article. (For reviews see Schenkman & Nilsson, 2010; Stoffregen & Pittenger, 1995; Teng & Whitney, 2011).

9. Interestingly, the mapping of high pitched sounds to the top and low to the bottom is not completely arbitrary. Subjects were faster at identifying the elevation of frequency-encoded objects for the “natural” cross-modal mapping (high-top, low-bottom) than for the opposite mapping (Melara & O'Brien 1987).

Contributor Information

Richard T. Born, Email: rborn@hms.harvard.edu, Dept. of Neurobiology, Harvard Medical School; Center for Brain Science, Harvard University

Alexander Trott, Email: atrott@fas.harvard.edu, Dept. of Neurobiology, Harvard Medical School and Harvard PhD Program in Neuroscience.

Till Hartmann, Email: till@hms.harvard.edu, Dept. of Neurobiology, Harvard Medical School.

References

- Auvray M, Hanneton S, O’Regan JK. Learning to perceive with a visuo-auditory substitution system: localisation and object recognition with ‘the vOICe’. Perception. 2007;36(3):416–430. doi: 10.1068/p5631.

- Bach-y-Rita P, Collins CC, Saunders FA, White B, Scadden L. Vision substitution by tactile image projection. Nature. 1969;221(5184):963–964. doi: 10.1038/221963a0.

- Baker CI, Peli E, Knouf N, Kanwisher NG. Reorganization of visual processing in macular degeneration. Journal of Neuroscience. 2005;25(3):614–618. doi: 10.1523/JNEUROSCI.3476-04.2005.

- Barlow H. What is the Computational Goal of the Neocortex? In: Koch C, Davis JL, editors. Large-Scale Neuronal Theories of the Brain. MIT Press; 1994. pp. 1–22.

- Bradley DC, Troyk PR, Berg JA, Bak M, Cogan S, Erickson R, et al. Visuotopic mapping through a multichannel stimulating implant in primate V1. Journal of Neurophysiology. 2005;93(3):1659–1670. doi: 10.1152/jn.01213.2003.

- Brindley GS, Lewin WS. The sensations produced by electrical stimulation of the visual cortex. The Journal of Physiology. 1968;196(2):479–493. doi: 10.1113/jphysiol.1968.sp008519.

- Callaway EM. Local circuits in primary visual cortex of the macaque monkey. Annual Review of Neuroscience. 1998;21:47–74. doi: 10.1146/annurev.neuro.21.1.47.

- Chase SM, Kass RE, Schwartz AB. Behavioral and neural correlates of visuomotor adaptation observed through a brain-computer interface in primary motor cortex. J Neurophysiol. 2012;108(2):624–44. doi: 10.1152/jn.00371.2011.

- Cohen LG, Celnik P, Pascual-Leone A, Corwell B, Falz L, Dambrosia J, Honda M, Sadato N, Gerloff C, Catalá MD, Hallett M. Functional relevance of cross-modal plasticity in blind humans. Nature. 1997;389(6647):180–3. doi: 10.1038/38278.

- Connolly M, Van Essen D. The representation of the visual field in parvicellular and magnocellular layers of the lateral geniculate nucleus in the macaque monkey. The Journal of Comparative Neurology. 1984;226(4):544–564. doi: 10.1002/cne.902260408.

- Cowey A, Rolls ET. Human cortical magnification factor and its relation to visual acuity. Experimental Brain Research. Experimentelle Hirnforschung Expérimentation Cérébrale. 1974;21(5):447–454. doi: 10.1007/BF00237163.

- Dow BM, Snyder AZ, Vautin RG, Bauer R. Magnification factor and receptive field size in foveal striate cortex of the monkey. Experimental Brain Research. Experimentelle Hirnforschung Expérimentation Cérébrale. 1981;44(2):213–228. doi: 10.1007/BF00237343.

- Duncan RO, Boynton GM. Cortical magnification within human primary visual cortex correlates with acuity thresholds. Neuron. 2003;38(4):659–671. doi: 10.1016/s0896-6273(03)00265-4.

- Engel SA, Rumelhart DE, Wandell BA, Lee AT, Glover GH, Chichilnisky EJ, Shadlen MN. fMRI of human visual cortex. Nature. 1994;369(6481):525. doi: 10.1038/369525a0.

- Felleman DJ, Van Essen DC. Distributed hierarchical processing in the primate cerebral cortex. Cerebral Cortex (New York, NY 1991) 1991;1(1):1–47. doi: 10.1093/cercor/1.1.1-a.

- Field GD, Gauthier JL, Sher A, Greschner M, Machado TA, Jepson LH, et al. Functional connectivity in the retina at the resolution of photoreceptors. Nature. 2010;467(7316):673–677. doi: 10.1038/nature09424.

- Ganguly K, Dimitrov DF, Wallis JD, Carmena JM. Reversible large-scale modification of cortical networks during neuroprosthetic control. Nat Neurosci. 2011;14(5):662–7. doi: 10.1038/nn.2797.

- Ganguly K, Carmena JM. Emergence of a stable cortical map for neuroprosthetic control. PLoS Biol. 2009;7(7):e1000153. doi: 10.1371/journal.pbio.1000153.

- Ghose K, Maunsell JHR. A strong constraint to the joint processing of pairs of cortical signals. Journal of Neuroscience. 2012;32(45):15922–15933. doi: 10.1523/JNEUROSCI.2186-12.2012.

- Gibson JJ. Adaptation, after-effect and contrast in the perception of curved lines. J Exp Psychol. 1933;16(1):1–31.

- Gilbert CD, Li W. Adult visual cortical plasticity. Neuron. 2012;75(2):250–264. doi: 10.1016/j.neuron.2012.06.030.

- Grüsser OJ. Migraine phosphenes and the retino-cortical magnification factor. Vision Research. 1995;35(8):1125–1134. doi: 10.1016/0042-6989(94)00187-q.

- Hensch TK. Critical period plasticity in local cortical circuits. Nature Reviews Neuroscience. 2005;6(11):877–888. doi: 10.1038/nrn1787.

- Histed MH, Ni AM, Maunsell JHR. Insights into cortical mechanisms of behavior from microstimulation experiments. Progress in Neurobiology. 2012:1–16. doi: 10.1016/j.pneurobio.2012.01.006.

- Horng SH, Sur M. Visual activity and cortical rewiring: activity-dependent plasticity of cortical networks. Progress in Brain Research. 2006;157:3–11. doi: 10.1016/s0079-6123(06)57001-3.

- Hubel DH. Eye, Brain, and Vision. Scientific American Library Series; 1988. Deprivation and Development.

- Hubel DH, Wiesel TN. Uniformity of monkey striate cortex: a parallel relationship between field size, scatter, and magnification factor. The Journal of Comparative Neurology. 1974;158(3):295–305. doi: 10.1002/cne.901580305.

- Jackson A, Mavoori J, Fetz EE. Long-term motor cortex plasticity induced by an electronic neural implant. Nature. 2006;444(7115):56–60. doi: 10.1038/nature05226.

- Jarosiewicz B, Chase SM, Fraser GW, Velliste M, Kass RE, Schwartz AB. Functional network reorganization during learning in a brain-computer interface paradigm. Proc Natl Acad Sci U S A. 2008;105(49):19486–91. doi: 10.1073/pnas.0808113105.

- Jepson LH, Hottowy P, Mathieson K, Gunning DE, Dąbrowski W, Litke AM, Chichilnisky EJ. Spatially patterned electrical stimulation to enhance resolution of retinal prostheses. Journal of Neuroscience. 2014;34(14):4871–4881. doi: 10.1523/JNEUROSCI.2882-13.2014.

- Kätzel D, Miesenböck G. Experience-dependent rewiring of specific inhibitory connections in adult neocortex. PLoS Biology. 2014;12(2):e1001798. doi: 10.1371/journal.pbio.1001798.

- Kohler I. Experiments with Goggles. Scientific American. 1962. doi: 10.1038/scientificamerican0562-62.

- Kolb H, Dekorver L. Midget ganglion cells of the parafovea of the human retina: a study by electron microscopy and serial section reconstructions. The Journal of Comparative Neurology. 1991;303(4):617–636. doi: 10.1002/cne.903030408.

- Kolb H, Marshak D. The midget pathways of the primate retina. Documenta Ophthalmologica Advances in Ophthalmology. 2003;106(1):67–81. doi: 10.1023/a:1022469002511.

- Lenay C, Gapenne O, Hanneton S, Marque C, Genoulle C. Sensory substitution: Limits and perspectives. In: Hatwell Y, Streri A, Gentaz E, editors. Touching for Knowing: Cognitive psychology of haptic manual perception. Philadelphia, PA: John Benjamins Publishing Company; 2003. pp. 275–292.

- Loudin JD, Cogan SF, Mathieson K, Sher A, Palanker DV. Photodiode circuits for retinal prostheses. IEEE Trans Biomed Circuits Syst. 2011;5(5):468–80. doi: 10.1109/TBCAS.2011.2144980.

- Maidenbaum S, Abboud S, Amedi A. Sensory substitution: closing the gap between basic research and widespread practical visual rehabilitation. Neuroscience and Biobehavioral Reviews. 2014;41:3–15. doi: 10.1016/j.neubiorev.2013.11.007.

- Malpeli JG, Baker FH. The representation of the visual field in the lateral geniculate nucleus of Macaca mulatta. The Journal of Comparative Neurology. 1975;161(4):569–594. doi: 10.1002/cne.901610407.

- Masland RH, Raviola E. Confronting complexity: strategies for understanding the microcircuitry of the retina. Annual Review of Neuroscience. 2000;23:249–284. doi: 10.1146/annurev.neuro.23.1.249.

- McNamara JO, Byrne MC, Dasheiff RM, Fitz JG. The kindling model of epilepsy: a review. Prog Neurobiol. 1980;15(2):139–59. doi: 10.1016/0301-0082(80)90006-4.

- Meijer PB. An experimental system for auditory image representations. IEEE Transactions on Bio-Medical Engineering. 1992;39(2):112–121. doi: 10.1109/10.121642.

- Merabet LB, Hamilton R, Schlaug G, Swisher JD, Kiriakopoulos ET, Pitskel NB, Kauffman T, Pascual-Leone A. Rapid and reversible recruitment of early visual cortex for touch. PLoS One. 2008;3(8):e3046. doi: 10.1371/journal.pone.0003046.

- Murphey DK, Maunsell JH, Beauchamp MS, Yoshor D. Perceiving electrical stimulation of identified human visual areas. Proc Natl Acad Sci U S A. 2009;106(13):5389–93. doi: 10.1073/pnas.0804998106.

- Nassi JJ, Callaway EM. Parallel processing strategies of the primate visual system. Nature Reviews Neuroscience. 2009;10(5):360–372. doi: 10.1038/nrn2619.

- Ni AM, Maunsell JHR. Microstimulation reveals limits in detecting different signals from a local cortical region. Current Biology : CB. 2010;20(9):824–828. doi: 10.1016/j.cub.2010.02.065.

- O’Leary DD. Do cortical areas emerge from a protocortex? Trends Neurosci. 1989;12(10):400–6. doi: 10.1016/0166-2236(89)90080-5.

- Pezaris JS, Reid RC. Demonstration of artificial visual percepts generated through thalamic microstimulation. Proc Natl Acad Sci U S A. 2007;104(18):7670–5. doi: 10.1073/pnas.0608563104.

- Pezaris JS, Eskandar EN. Getting signals into the brain: visual prosthetics through thalamic microstimulation. Neurosurgical Focus. 2009;27(1):E6. doi: 10.3171/2009.4.FOCUS0986.

- Polyak SL. The Retina. University of Chicago Press; 1941.

- Putnam NM, Hofer HJ, Doble N, Chen L, Carroll J, Williams DR. The locus of fixation and the foveal cone mosaic. Journal of Vision. 2005;5(7):632–639. doi: 10.1167/5.7.3.

- Rebesco JM, Miller LE. Enhanced detection threshold for in vivo cortical stimulation produced by Hebbian conditioning. Journal of Neural Engineering. 2011;8(1):016011. doi: 10.1088/1741-2560/8/1/016011.

- Rebesco JM, Stevenson IH, Körding KP, Solla SA, Miller LE. Rewiring neural interactions by micro-stimulation. Frontiers in Systems Neuroscience. 2010:4. doi: 10.3389/fnsys.2010.00039.

- Rescorla RA. Probability of shock in the presence and absence of CS in fear conditioning. Journal of Comparative and Physiological Psychology. 1968;66(1):1–5. doi: 10.1037/h0025984.

- Schein SJ. Anatomy of macaque fovea and spatial densities of neurons in foveal representation. The Journal of Comparative Neurology. 1988;269(4):479–505. doi: 10.1002/cne.902690403.

- Schenkman BN, Nilsson ME. Human echolocation: Blind and sighted persons’ ability to detect sounds recorded in the presence of a reflecting object. Perception. 2010;39(4):483–501. doi: 10.1068/p6473.

- Schiller PH, Tehovnik EJ. Visual prosthesis. Perception. 2008;37(10):1529–1559. doi: 10.1068/p6100.

- Schiller PH, Slocum WM, Kwak MC, Kendall GL, Tehovnik EJ. New methods devised specify the size and color of the spots monkeys see when striate cortex (area V1) is electrically stimulated. Proceedings of the National Academy of Sciences USA. 2011;108:17809–17814. doi: 10.1073/pnas.1108337108.

- Schira MM, Tyler CW, Breakspear M, Spehar B. The foveal confluence in human visual cortex. J Neurosci. 2009;29(28):9050–8. doi: 10.1523/JNEUROSCI.1760-09.2009.

- Schmidt EM, Bak MJ, Hambrecht FT, Kufta CV, O’Rourke DK, Vallabhanath P. Feasibility of a visual prosthesis for the blind based on intracortical microstimulation of the visual cortex. Brain : a Journal of Neurology. 1996;119:507–522. doi: 10.1093/brain/119.2.507.

- Sereno MI, Dale AM, Reppas JB, Kwong KK, Belliveau JW, Brady TJ, et al. Borders of multiple visual areas in humans revealed by functional magnetic resonance imaging. Science (New York, NY) 1995;268(5212):889–893. doi: 10.1126/science.7754376.

- Sincich LC, Horton JC. The circuitry of V1 and V2: integration of color, form, and motion. Annual Review of Neuroscience. 2005;28:303–326. doi: 10.1146/annurev.neuro.28.061604.135731.

- Song W, Kerr CC, Lytton WW, Francis JT. Cortical plasticity induced by spike-triggered microstimulation in primate somatosensory cortex. PloS One. 2013;8(3):e57453. doi: 10.1371/journal.pone.0057453.

- Stoffregen TA, Pittenger JB. Human echolocation as a basic form of perception and action. Ecol Psychol. 1995;7:181–216.

- Stratton GM. Vision without inversion of the retinal image. Psych Rev. 1897;4:341–360, 463–481.

- Talwar SK, Gerstein GL. Reorganization in awake rat auditory cortex by local microstimulation and its effect on frequency-discrimination behavior. Journal of Neurophysiology. 2001;86(4):1555–1572. doi: 10.1152/jn.2001.86.4.1555.

- Tehovnik EJ, Slocum WM. Phosphene induction by microstimulation of macaque V1. Brain Research Reviews. 2007;53(2):337–343. doi: 10.1016/j.brainresrev.2006.11.001.

- Tehovnik EJ, Slocum WM, Carvey CE, Schiller PH. Phosphene induction and the generation of saccadic eye movements by striate cortex. Journal of Neurophysiology. 2005;93(1):1–19. doi: 10.1152/jn.00736.2004.

- Tehovnik EJ, Slocum WM, Smirnakis SM, Tolias AS. Microstimulation of visual cortex to restore vision. Progress in Brain Research. 2009;175:347–375. doi: 10.1016/S0079-6123(09)17524-6.

- Tehovnik EJ, Slocum WM. Electrical induction of vision. Neuroscience and Biobehavioral Reviews. 2013;37:803–818. doi: 10.1016/j.neubiorev.2013.03.012.

- Teng S, Whitney D. The acuity of echolocation: Spatial resolution in the sighted compared to expert performance. Journal of Visual Impairment & Blindness. 2011;105(1):20–32.

- Tootell RB, Silverman MS, Switkes E, De Valois RL. Deoxyglucose analysis of retinotopic organization in primate striate cortex. Science (New York, NY) 1982;218(4575):902–904. doi: 10.1126/science.7134981.

- Tootell RB, Switkes E, Silverman MS, Hamilton SL. Functional anatomy of macaque striate cortex. II. Retinotopic organization. The Journal of Neuroscience : the Official Journal of the Society for Neuroscience. 1988;8(5):1531–1568. doi: 10.1523/JNEUROSCI.08-05-01531.1988.

- Van Essen DC, Newsome WT, Maunsell JH. The visual field representation in striate cortex of the macaque monkey: asymmetries, anisotropies, and individual variability. Vision Research. 1984;24(5):429–448. doi: 10.1016/0042-6989(84)90041-5.

- Wandell BA. Foundations of Vision. Sunderland, MA: Sinauer Associates, Inc; 1995.

- Wandell BA, Smirnakis SM. Plasticity and stability of visual field maps in adult primary visual cortex. Nat Rev Neurosci. 2009;10(12):873–84. doi: 10.1038/nrn2741.

- Werblin F, Roska B, Balya D. Parallel processing in the mammalian retina: lateral and vertical interactions across stacked representations. Progress in Brain Research. 2001;131:229–238. doi: 10.1016/s0079-6123(01)31019-1.

- Westheimer G. Visual Acuity. In: Hart WM Jr, editor. Adler’s Physiology of the Eye. 9th ed. St. Louis, MO: Mosby; 1992. pp. 531–547.

- Zrenner E. Fighting blindness with microelectronics. Sci Transl Med. 2013;5(210):210ps16. doi: 10.1126/scitranslmed.3007399.