Abstract

Frontotemporal dementia is an important neurodegenerative disorder of younger life led by profound emotional and social dysfunction. Here we used fMRI to assess brain mechanisms of music emotion processing in a cohort of patients with frontotemporal dementia (n = 15) in relation to healthy age-matched individuals (n = 11). In a passive-listening paradigm, we manipulated levels of emotion processing in simple arpeggio chords (mode versus dissonance) and emotion modality (music versus human emotional vocalizations). A complex profile of disease-associated functional alterations was identified with separable signatures of musical mode, emotion level, and emotion modality within a common, distributed brain network, including posterior and anterior superior temporal and inferior frontal cortices and dorsal brainstem effector nuclei. Separable functional signatures were identified post-hoc in patients with and without abnormal craving for music (musicophilia): a model for specific abnormal emotional behaviors in frontotemporal dementia. Our findings indicate the potential of music to delineate neural mechanisms of altered emotion processing in dementias, with implications for future disease tracking and therapeutic strategies.

Keywords: music, voice, emotion, fMRI, bvFTD, musicophilia

Introduction

Music is an exceptionally emotionally rich and engaging sensory stimulus. Cognitive neuropsychology and functional neuroimaging studies in the healthy brain have shown that the neural mechanisms involved in analyzing music are intimately linked to the machinery of pleasure and reward.1,2 Emotion in music is processed by a complex distributed brain network architecture, including salience and evaluation systems in the insula, amygdala, and orbitofrontal cortex projecting to mesolimbic and subcortical dopaminergic pathways.1–6 More elemental affective attributes of dissonance and pleasantness, cognitive labeling of musical emotions, attribution of mental states to music, and appreciation of musical structure are likely to represent at least partially separable dimensions of music emotion coding.2–5,7,8 Taken together, this evidence suggests that the essentially abstract phenomenon of music may have had a neurobiological role during human evolution, perhaps by engaging a neural puzzle-solving algorithm that facilitates decoding of emotional mental states.9 This formulation underlines the social function of music, a key theme in contemporary neuroscience accounts.2,9

Various brain disorders have been found to impair music processing and, more particularly, perception and understanding of emotion in music.9–14 Within the neurodegenerative disease spectrum, the behavioral variant of frontotemporal dementia (bvFTD) produces striking deficits of music emotion processing as part of a wider syndrome of selective brain atrophy (chiefly affecting the frontal and temporal lobes) with impaired emotional understanding and responsiveness and profound disruption of interpersonal conduct.15–19 These patients exhibit a paradigmatic acquired disorder of social cognition that frequently spares general intellect; this creates challenges as well as opportunities. Clinically, bvFTD is liable to be misdiagnosed as a psychiatric disorder, and objective tools for characterizing abnormalities of emotional behavior remain very limited, although neurobiologically, bvFTD offers a unique window on critical brain network processes that support complex (particularly social) behaviors. Music is therefore especially attractive as a novel probe of disordered emotional and social signal processing in bvFTD: it promises to illuminate dysfunctional brain architecture (indeed, the study of bvFTD has already informed theoretical models of music biology9) and could potentially provide sensitive markers of brain dysfunction to aid clinical diagnosis and monitoring. Previous work on music emotion processing in bvFTD has focused largely on assessing recognition of emotions in musical pieces and correlation with structural neuroimaging of regional brain atrophy.16–18 Such approaches have several important limitations. The use of familiar music as an emotion carrier and procedures that depend on verbal labeling are potentially confounded by semantic impairment, which frequently accompanies bvFTD; meanwhile, structural neuroimaging techniques are essentially associational and cannot examine underlying dysfunctional brain mechanisms.

Here, we assessed brain mechanisms of music emotion processing in bvFTD directly, using functional MRI (fMRI) and a passive listening paradigm that manipulated two levels of emotion analysis—musical mode and dissonance—in simple chord sequences. This paradigm was well suited to address music emotion processing in patients with bvFTD on both behavioral and neuroanatomical grounds. Although musical modes, chords, and dissonance have well-established emotional resonances,20–23 these features lack the specific semantic associations of familiar melodies, particularly for nonmusicians.

The dimensions of consonance–dissonance and major–minor mode are likely to access different levels of music emotion representation. Although dissonance is a fundamentally aversive feature even for normal human infants,24 the processing of musical mode is likely to be strongly influenced by musical acculturation and cognitive set.23 However, these musical attributes have been shown to have robust brain substrates in normal human functional imaging and electrophysiological studies25–27 and differential vulnerability to focal brain insults10,13 in nonmusicians as well as trained musicians. Processing of musical mode has been shown to engage a distributed brain network, including prefrontal, anteromedial temporal, limbic, and brainstem areas,20,25,28,29 whereas processing of dissonance engages an overlapping anteromedial temporal, limbic, retrosplenial, and brainstem network.4,25 In this study, we compared music emotion processing with another domain of auditory emotion processing—human vocalizations—which is also vulnerable in bvFTD.16,30,31 We hypothesized that patients with bvFTD would show functional alterations of brain networks mediating musical mode and dissonance and vocal emotion processing relative to healthy older individuals and further that these dimensions of auditory emotion coding would have separable disease signatures within core corticolimbic circuitry that is targeted by the pathological process in bvFTD.32

Methods

Participants

Fifteen consecutive patients (three female; mean age 64 ± 8.2 (SD) years) fulfilling consensus diagnostic criteria for bvFTD15 and 11 healthy age-matched individuals (three female; mean age 64.0 ± 7.7 years) with no history of neurological or psychiatric disorders participated. No participant had a clinical history of hearing loss; none was a professional musician, and the patient and healthy control groups had similar musical backgrounds (years of formal musical training). Participant group demographic, clinical, and general neuropsychological data are summarized in Table S1. All patients had general neuropsychological assessments in keeping with their syndromic diagnosis and supportive structural brain MRI evidence of frontal and/or temporal lobe atrophy with no significant cerebrovascular burden. The bvFTD cohort was stratified post-hoc according to whether patients did or did not exhibit abnormal craving for music (musicophilia). This was defined operationally on the basis of a structured questionnaire33 as compulsive listening to music (typically, a small fixed repertoire of popular songs or classical pieces) for >10 h per week, where this represented a definite increase compared with premorbid levels. The patient subgroup with musicophilia (n = 6) had a mean age of 63 ± 8.1 years and symptom duration of 8.3 ± 5.2 years, similar to the patient subgroup without musicophilia (n = 9; age 64 ± 8.7 years; symptoms 8.9 ± 6.1 years). All participants gave informed consent in accordance with the Declaration of Helsinki.

Experimental stimuli and conditions

In creating the fMRI paradigm, we adopted a subtractive design intended to isolate the effects of variation in emotional attributes of interest against matched baseline conditions with fixed attributes and thereby to identify brain regions specifically processing the relevant attribute without requiring an in-scanner task. Musical stimuli were based on four-note arpeggio chords (C major, G major, A minor, E minor) and their dissonant versions, synthesized as digital wave files with string instrument timbre using Sibelius® software (http://www.sibelius.com). Chords were concatenated to create sequences in which the identity of adjacent chords always varied but the sequence as a whole could be either constant in mode (major or minor) or interleaving major and minor modes and either uniformly consonant or dissonant or interleaving consonant (minor mode) and dissonant chords. In addition, wave files of human male and female nonverbal emotional vocalizations (laughter or crying34) were concatenated to create sequences in which the gender for consecutive vocal segments always varied but the sequence as a whole could be either constant in emotion (laughter or crying) or interleave laughter and crying. All individual sequence elements (chords or vocalizations) were 1.5 seconds in duration with fixed mean intensity; each sequence comprised five elements (overall duration 7.5 seconds) in ABABA configuration. This experimental design yielded six conditions (music fixed mode consonant, MFC; music fixed dissonant, MFD; music changing mode, MCM; music changing dissonance, MCD; vocal fixed emotion, VF; vocal changing emotion, VC) plus an additional silence (rest) condition. Further details about stimuli and conditions are in Table S2.
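The sequence construction described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the stimulus-generation code used in the study: the function names, the text labels standing in for wave files, and the choice of which chords supply the dissonant versions in each condition are all assumptions introduced here.

```python
import random

ELEMENT_DURATION_S = 1.5  # duration of each chord, as stated in the text
MAJOR_CHORDS = ["C major", "G major"]
MINOR_CHORDS = ["A minor", "E minor"]


def dissonant(chord):
    """Label the dissonant version of a chord (hypothetical naming scheme)."""
    return f"{chord} (dissonant)"


def ababa(a, b):
    """Arrange two distinct elements in the five-element ABABA configuration."""
    if a == b:
        raise ValueError("adjacent elements must differ")
    return [a, b, a, b, a]


def make_music_sequence(condition, rng):
    """Return one five-chord sequence for a music condition label."""
    if condition == "MFC":    # fixed mode, consonant: two chords of the same mode
        a, b = rng.sample(rng.choice([MAJOR_CHORDS, MINOR_CHORDS]), 2)
    elif condition == "MFD":  # fixed dissonant (assumed: dissonant versions of a same-mode pair)
        pair = rng.sample(rng.choice([MAJOR_CHORDS, MINOR_CHORDS]), 2)
        a, b = dissonant(pair[0]), dissonant(pair[1])
    elif condition == "MCM":  # changing mode: major and minor chords interleaved
        a, b = rng.sample([rng.choice(MAJOR_CHORDS), rng.choice(MINOR_CHORDS)], 2)
    elif condition == "MCD":  # changing dissonance: consonant minor and dissonant interleaved
        first, second = rng.sample(MINOR_CHORDS, 2)
        a, b = rng.sample([first, dissonant(second)], 2)
    else:
        raise ValueError(f"unknown music condition: {condition}")
    return ababa(a, b)


rng = random.Random(0)  # fixed seed so the sketch is repeatable
sequences = {c: make_music_sequence(c, rng) for c in ("MFC", "MFD", "MCM", "MCD")}
for name, seq in sequences.items():
    print(name, seq, f"{len(seq) * ELEMENT_DURATION_S} s")
```

Each generated sequence has five elements of 1.5 seconds, giving the 7.5-second overall duration stated above. Vocal sequences could be built the same way by alternating speaker gender while holding or interleaving the emotion.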

Scanning protocol

During scanning, 16 trials in each fixed emotion condition, 32 trials in each changing emotion condition, and 10 silence trials (186 trials in total, in two consecutive runs) were presented in pseudorandomized, miniblocked order at a comfortable listening level (at least 70 dB) binaurally via pneumatic headphones embedded in ear-defenders (Esys fMRI system, Invivo Corporation, Orlando, FL, USA). Participants were asked to listen to the sounds with their eyes lightly closed, with no output task. In-house software written in Python (http://www.python.org) was used to integrate stimulus delivery with the scanner controls.

Brain images were acquired on a 3T TIM Trio whole-body MRI scanner (Siemens Healthcare, Erlangen, Germany). Functional echoplanar images were obtained in a sparse-sampling protocol with 8-second interscan pauses during which auditory stimuli were delivered; a B0 field-map for subsequent inhomogeneity correction and a volumetric MPRAGE structural sequence were also acquired for each participant. Further details about image acquisition can be found in Supporting Information.

Postscan behavioral assessments

After scanning, participants performed a two-alternative forced choice (same/different) 1-back psychophysical task to assess their perception of the musical stimuli (further details in Supporting Information). Results were analyzed using Stata 12.1® (StataCorp LP, College Station, TX, USA): a linear-regression model incorporated accuracy scores for all participants with between-group effects of interest and nuisance covariates of age, gender, and reverse digit span (a standard index of auditory working memory) thresholded at P < 0.05.

Analysis of fMRI data

The fMRI data were analyzed using statistical parametric mapping software (SPM8; http://www.fil.ion.ucl.ac.uk/spm). Functional scans for each participant were realigned to the first image, unwarped using the field map to correct distortions, and coregistered with the structural brain image. Structural images for all participants were then segmented into component tissue types and entered into the DARTEL toolbox35 to create a study-specific group mean template brain image for rendering statistical parametric maps. Functional and structural images were normalized to the structural template image aligned in MNI standard stereotactic space and smoothed with a 6-mm full-width-at-half-maximum Gaussian kernel.
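For readers more used to thinking of a Gaussian kernel in terms of its standard deviation, the relation FWHM = 2·sqrt(2·ln 2)·σ converts the 6-mm figure quoted above. The short Python sketch below applies this standard identity; the function name is introduced here for illustration and is not part of SPM.

```python
import math


def fwhm_to_sigma(fwhm_mm):
    """Convert a Gaussian kernel's FWHM to its standard deviation (same units)."""
    return fwhm_mm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


sigma_mm = fwhm_to_sigma(6.0)
print(f"6 mm FWHM corresponds to sigma = {sigma_mm:.2f} mm")
```

A 6-mm kernel therefore corresponds to a standard deviation of roughly 2.5 mm.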

Processed fMRI data from both scanning runs were entered separately for each participant into first-level design matrices that modeled auditory conditions and rest as separate regressors comprising boxcars of one trial duration convolved with the canonical hemodynamic response function; six regressors of noninterest modeled head movements extracted during realignment. Experimental contrasts were constructed to assess the effects of auditory stimulation (all auditory conditions > rest), musical mode variation (MCM > MFC), musical dissonance variation (MCD > (MFC + MFD)), vocal emotion variation (VC > VF), music emotion level (the interaction of changing musical mode and changing musical dissonance: (MCM > MFC) > (MCD > MFD)); and music-specific emotion (the interaction of changing musical mode and changing vocal emotion: (MCM > MFC) > (VC > VF)). First-level contrast images of interest for each participant were then entered into second-level between-group two-sample t-tests (controls versus bvFTD) with covariates of age and gender; a similar between-subgroup second-level analysis was conducted post-hoc to compare patients with and without musicophilia. Contrasts were thresholded at peak statistical significance criterion P < 0.05 after family-wise error (FWE) correction for multiple voxel-wise comparisons over the whole brain (FWE whole brain) or within the prespecified anatomical region of interest (FWE small volume). Anatomical small volumes were derived from previous functional neuroimaging and lesion work4,10,13,20,25,28,29 and defined using the Harvard brain maps (http://www.fmrib.ox.ac.uk/fsl), comprising auditory association cortex in planum temporale and posterior superior temporal gyrus, anteromedial temporal lobe including amygdala and hippocampus, and inferior frontal lobe. 
To better interpret significant interactions, peak voxel β parameter estimates were extracted for each component condition and participant and analyzed post-hoc using paired within-group t-tests in Stata 12.1®.
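The experimental contrasts listed above can be written as weight vectors over the condition regressors. The Python sketch below is illustrative only: the column order, helper names, and the 0.5/0.5 scaling of the dissonance baseline are assumptions made here, and the exact weights entered in SPM8 are not stated in the text.

```python
# Assumed column order of the first-level regressors of interest.
CONDITIONS = ["MFC", "MFD", "MCM", "MCD", "VF", "VC", "rest"]


def contrast(weights):
    """Build a weight vector over CONDITIONS from a {condition: weight} mapping."""
    unknown = set(weights) - set(CONDITIONS)
    if unknown:
        raise ValueError(f"unknown conditions: {sorted(unknown)}")
    return [float(weights.get(name, 0.0)) for name in CONDITIONS]


def subtract(a, b):
    """Element-wise difference of two contrasts (an interaction of simple effects)."""
    return [x - y for x, y in zip(a, b)]


def contrast_value(weights, betas):
    """Weighted sum of per-condition parameter estimates for one participant."""
    return sum(w * b for w, b in zip(weights, betas))


# Simple effects.
auditory = contrast({"MFC": 1, "MFD": 1, "MCM": 1, "MCD": 1, "VF": 1, "VC": 1, "rest": -6})
mode = contrast({"MCM": 1, "MFC": -1})                        # MCM > MFC
dissonance = contrast({"MCD": 1, "MFC": -0.5, "MFD": -0.5})   # MCD > (MFC + MFD), assumed scaling
vocal = contrast({"VC": 1, "VF": -1})                         # VC > VF

# Interactions.
emotion_level = subtract(mode, contrast({"MCD": 1, "MFD": -1}))  # (MCM > MFC) > (MCD > MFD)
music_specific = subtract(mode, vocal)                           # (MCM > MFC) > (VC > VF)

for name, vec in [("mode", mode), ("emotion level", emotion_level),
                  ("music-specific", music_specific)]:
    print(f"{name:15s}", vec)
```

Applying `contrast_value` to each participant's parameter estimates gives one number per participant and contrast; in a summary-statistics approach of this kind, those per-participant values are what the second-level between-group test compares.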

Results

Functional MRI findings

Significant activations for the experimental contrasts of interest are summarized in Table 1, and statistical parametric maps and condition mean parameter estimates are displayed in Figure 1. Auditory stimulation per se in both patients and healthy older individuals produced bilateral activation of auditory cortex including Heschl's gyrus, planum temporale, and superior temporal gyrus (P < 0.05, FWE whole brain), with no significant between-group differences.

Table 1.

Summary of significant contrasts and regions of activation for patients vs. healthy controls^a

| Contrast | Direction | Area | Side | Cluster size (voxels) | Peak x (mm) | Peak y (mm) | Peak z (mm) | P value | Z-score |
|---|---|---|---|---|---|---|---|---|---|
| **Musical mode** | Controls > bvFTD | Dorsal brainstem | – | 306 | 1 | −29 | −24 | 0.037 | 5.12 |
| Music emotion level | Controls > bvFTD | PT | L | 51 | −65 | −29 | 0 | 0.013 | 3.55 |
| | bvFTD > controls | IFG | R | 90 | 55 | 12 | 11 | 0.028 | 3.77 |
| Vocal emotion | Controls > bvFTD | Anterior STG | L | 20 | −52 | −10 | −11 | 0.021 | 3.53 |
| | | Posterior STG | L | 100 | −59 | −33 | 1 | 0.024 | 3.63 |
| Music-specific emotion | bvFTD > controls | Posterior STG | L | 292 | −61 | −31 | 0 | 0.001 | 4.67 |

^a Significant interactions of key emotion-processing contrasts with group are shown; boldface indicates contrast significant at P < 0.05 after FWE correction for multiple voxel-wise comparisons over the whole brain; other contrasts significant at P < 0.05 after FWE correction for multiple comparisons over prespecified anatomical regions of interest. Statistics and coordinates (in MNI space) for local maxima of activation are shown. Condition contrasts were defined as follows (see text for condition labels): musical mode (MCM > MFC); music emotion level ((MCM > MFC) > (MCD > MFD)); vocal emotion (VC > VF); music-specific emotion ((MCM > MFC) > (VC > VF)). bvFTD, behavioral variant of frontotemporal dementia; IFG, inferior frontal gyrus; PT, planum temporale; STG, superior temporal gyrus.

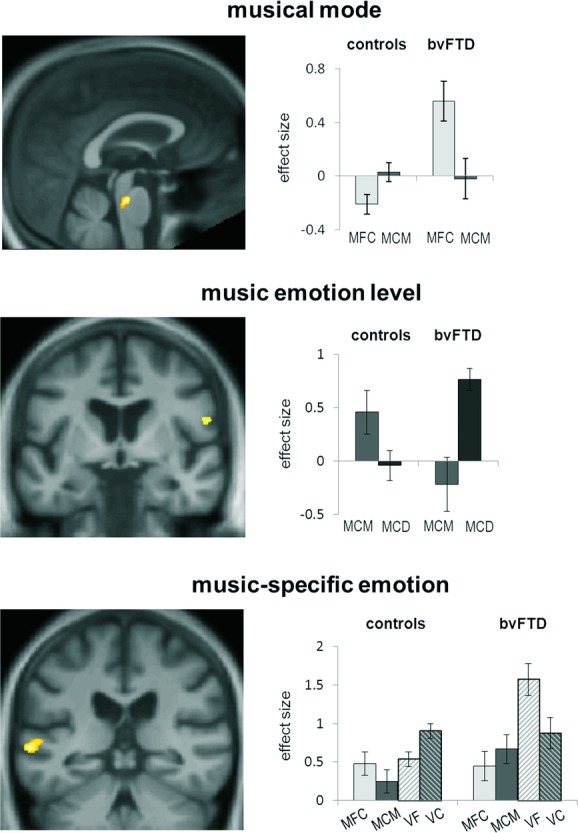

Figure 1.

Statistical parametric maps (SPMs; left panels) of significant between-group contrasts and effect sizes (group mean ± SD peak voxel β parameter estimates; right panels) in key music emotion conditions for behavioral variant frontotemporal dementia (bvFTD) and healthy control groups. SPMs are rendered on a study-specific group anatomical image in MNI space (threshold P < 0.001 uncorrected over whole brain for display; see also Table 1). Contrasts were based on interactions as follows: musical mode, ((MCM > MFC) × (controls > bvFTD)); music emotion level, ((MCD > MCM) × (control < bvFTD)); music-specific emotion, ((MCM > MFC) × (VC > VF) × (controls < bvFTD)). MFC, music fixed mode consonant; MCM, music changing mode; MCD, music changing dissonance; VC, vocal changing emotion; VF, vocal fixed emotion.

Musical mode variation produced greater activation in dorsal brainstem (in the region of the raphe nuclei and locus coeruleus) in the healthy control group than in the bvFTD group ((MCM > MFC) × (controls > bvFTD), P < 0.05, FWE whole brain); post-hoc analysis of β parameter estimates showed that this effect was driven by a crossover interaction, whereby changing mode enhanced activity in controls (t10 = −3.31, P = 0.008) and reduced activity in patients (t14 = 6.68, P < 0.001) compared to fixed mode. Although musical dissonance variation produced significant activation in the left amygdala and right inferior frontal cortex in the bvFTD group, no significant differences with respect to healthy controls were observed for this contrast. However, there was a significant effect of music emotion level in the left planum temporale and right inferior frontal gyrus in the bvFTD group compared to the healthy control group ((MCD > MCM) × (controls < bvFTD), P < 0.05, FWE small volume); post-hoc analysis of β parameter estimates revealed that this interaction was driven by patient group effects of greater responses to musical mode than dissonance variation in left planum temporale (t14 = −2.22, P = 0.04) with no effect in controls, and the reverse pattern in right inferior frontal gyrus (t14 = 4.24, P < 0.001) with the opposite effect in controls (t10 = −2.34, P = 0.035).

Vocal emotion variation produced greater activation in the left anterior and posterior superior temporal sulcus in the healthy control group than in the bvFTD group ((VC > VF) × (controls > bvFTD), P < 0.05, FWE small volume); post-hoc analysis of β parameter estimates showed that this effect was driven by a crossover interaction whereby changing vocal emotion enhanced activity in controls and reduced activity in patients compared to fixed vocal emotion for both anterior (controls: t10 = 2.58, P = 0.027; bvFTD: t14 = −2.69, P = 0.018) and posterior (controls: t10 = 2.44, P = 0.035; bvFTD: t14 = −3.72, P = 0.002) areas in superior temporal sulcus. In addition, music-specific emotional responses in an overlapping region of left posterior superior temporal sulcus were greater in the bvFTD group than in the healthy control group ((MCM > MFC) × (VC > VF) × (controls < bvFTD), P < 0.05, FWE small volume); post-hoc analysis of β parameter estimates confirmed that this three-way interaction effect was driven by attenuated responses to musical mode variation (t10 = −2.43, P = 0.035) and enhanced responses to vocal emotion variation (t10 = 2.40, P = 0.037) in controls and the reverse response pattern in patients. No other significant effects were found at the prescribed threshold.

The post-hoc analysis comparing patient subgroups with and without musicophilia (Table S3) revealed separable activation profiles: the musicophilic subgroup showed greater activation of anterior superior temporal cortex for the effect of auditory stimulation and planum temporale for musical dissonance variation, whereas the nonmusicophilic subgroup showed greater activation of the temporal pole for the effect of musical dissonance variation and orbitofrontal cortex and amygdala for music-specific emotional responses (all P < 0.05, FWE small volume).

Postscan behavioral findings

Both bvFTD patients and healthy control participants performed significantly better than chance (proportion correct 0.5) in the postscan task assessing their discrimination of the musical stimuli (t-test results on proportion correct: controls, mean ± SD = 0.90 ± 0.10, t10 = 13.44, P < 0.001; bvFTD, mean ± SD = 0.78 ± 0.13, t12 = 7.68, P < 0.001). Performance accuracy on the task did not significantly differ between the patient and healthy control groups (P = 0.2).
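The comparison against chance reported above is a one-sample t-test, which can be approximated from the summary statistics alone. The Python sketch below is illustrative rather than the Stata analysis used in the study; the function name is introduced here, and the patient sample size of 13 is inferred from the reported 12 degrees of freedom rather than stated in the text.

```python
import math


def one_sample_t(mean, sd, n, reference):
    """Return the t statistic and degrees of freedom for a mean against a reference."""
    standard_error = sd / math.sqrt(n)
    return (mean - reference) / standard_error, n - 1


CHANCE = 0.5  # proportion correct expected by guessing in a two-alternative task

t_controls, df_controls = one_sample_t(0.90, 0.10, 11, CHANCE)
t_patients, df_patients = one_sample_t(0.78, 0.13, 13, CHANCE)  # n = 13 is an inference

print(f"controls: t({df_controls}) = {t_controls:.2f}")
print(f"bvFTD:    t({df_patients}) = {t_patients:.2f}")
```

With the rounded means and SDs, this gives t values of about 13.3 and 7.8, close to the reported 13.44 and 7.68; the small differences are consistent with rounding of the published summary values.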

Discussion

Here we have demonstrated functional neuroanatomical signatures of disease-associated alterations of musical and vocal emotion coding in a canonical dementia syndrome, bvFTD, relative to healthy older individuals. The present findings are in line with previous behavioral and structural neuroanatomical evidence for altered processing of emotion in music and other modalities in bvFTD,16–18 but in contrast to that previous work, we delineate functional brain network changes directly. The brain regions identified here as loci of altered processing in bvFTD include hubs within a distributed neural network that has been previously implicated both in the pathogenesis of bvFTD19,36 and in the analysis of music and other emotional sounds.1,2,9 The planum temporale and the auditory association cortex in the posterior superior temporal lobe are likely to mediate an early parsing of the auditory scene that disambiguates salient auditory sources from the acoustic background and initiates the process of auditory object identification,37 processes relevant to the analysis of both melodies and voices. Furthermore, these areas communicate with cross-modal and reward circuitry involved in evaluating the emotional significance of sounds.38–40 Inferior frontal cortex has been implicated in processing mode and tonality in music,28,41 and anterior superior temporal cortex has been implicated in processing emotional and other attributes of human vocalizations.42

Processing of musical mode here produced robust differential activation of dorsal brainstem in the region of the midline raphe nuclei and locus coeruleus in patients with bvFTD relative to healthy older individuals. A broadly similar association has been reported previously in the healthy brain during passive listening to musical chord changes25 and underpinning the modulatory effect of music on pain perception.43 These brainstem nuclei are major effector hubs in coordinating primitive arousal and emotional responses to sensory stimulation via widespread ascending and descending serotonergic and noradrenergic pathways. Furthermore, they have been proposed as key output sites mediating dysfunctional large-scale network responses to salient stimuli in bvFTD.36

Although care is needed in interpreting the post-hoc analysis comparing patients with and without musicophilia based on small case numbers, there was evidence of a separation of music emotion-processing mechanisms underpinning these music behavior phenotypes. Musicophilia was associated with relatively enhanced activation of auditory association cortex, whereas the absence of musicophilia was associated with relatively enhanced activation of orbitofrontal and anteromesial temporal lobe, including the amygdala. This differentiation might reflect relative processing biases toward musical pattern analysis versus evaluation (and, potentially, censoring) of musical behavioral responses, respectively, a formulation in line with recent general models of music emotion analysis.1,2,9 However, further work is required to substantiate this conjecture.

Taken together, the present findings reveal a complex profile of functional alterations linked to this neurodegenerative syndrome. Relative to healthy individuals, bvFTD was associated with bidirectional activity shifts and separable regional functional signatures of emotion modality (music versus nonverbal vocal) and processing level (musical mode versus dissonance) within a common distributed frontotemporosubcortical network. This complexity is in line with functional signatures demonstrated previously for semantic analysis of nonverbal sounds in another canonical neurodegenerative syndrome, semantic dementia.44 It is of interest that neither auditory stimulation per se nor the processing of musical dissonance showed a significant disease effect here. This suggests that the coding of more complex emotion information (such as musical mode) may be relatively more vulnerable to the neurodegenerative process in bvFTD.

This study has several limitations that suggest directions for future work. Case numbers were relatively small, and findings require corroboration in larger cohorts; this is an issue of particular relevance in bvFTD, which is a highly pathologically heterogeneous syndrome underpinned by accumulation of diverse pathogenic proteins with potentially distinct patterns of network disintegration.45 It will also be important to assess bvFTD alongside Alzheimer's disease and other dementia syndromes in order to further identify the disease specificity of the signatures identified. Our paradigm was based on passive listening to generic emotional stimuli with relatively simple structure. In daily life, however, much of the emotional impact of music is carried by more complex structures such as melodies and rhythms, often with specific semantic associations. The effects of neurodegenerative disease on the brain mechanisms that process these more familiar musical entities remain to be defined. Furthermore, although postscan behavioral testing here established that patients were able to perceive stimulus changes comparably to healthy individuals, the extent to which task demands may modulate the functional neuroanatomy of music emotion processing remains unclear. Notwithstanding these caveats, the present findings provide a prima facie case for a more comprehensive functional neuroanatomical analysis of emotion processing in the dementias. 
It will be of particular interest to determine whether musical and social signal processing share functional brain circuitry in these diseases and whether, indeed, music might serve as a model for dysfunctional social brain mechanisms in bvFTD and other neurodegenerative syndromes9,18 and, furthermore, to identify substrates for specific musical behavior phenotypes.33 In addition to revealing disease mechanisms, music may constitute a sensitive biomarker of emotional dysfunction in neurodegenerative disease with implications for diagnosis and monitoring. More speculatively, the powerful neuromodulatory effect of music on behavior in brain disorders33,43 might have therapeutic potential in dementia syndromes if interventions are informed by valid pathophysiological models.

Acknowledgments

We thank all patients and healthy volunteers for their participation. The Dementia Research Centre is supported by Alzheimer's Research UK, the Brain Research Trust, and the Wolfson Foundation. This work was funded by the Wellcome Trust, the UK Medical Research Council, and the NIHR Queen Square Dementia Biomedical Research Unit. J.D.W. is supported by a Wellcome Trust Senior Clinical Fellowship (Grant No. 091673/Z/10/Z).

Conflicts of interest

The authors declare no conflicts of interest.

Supporting Information

Additional supporting information may be found in the online version of this article.

Table S1. Demographic and general neuropsychological data for participant groups

Table S2. Experimental auditory stimuli and conditions

Table S3. Significant contrasts and regions of activation for musicophilic versus nonmusicophilic patients

References

- 1.Zatorre RJ. Salimpoor VN. From perception to pleasure: music and its neural substrates. Proc. Natl. Acad. Sci. U.S.A. 2013;110(Suppl. 2):10430–10437. doi: 10.1073/pnas.1301228110. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Koelsch S. Brain correlates of music-evoked emotions. Nat. Rev. Neurosci. 2014;15:170–180. doi: 10.1038/nrn3666. [DOI] [PubMed] [Google Scholar]

- 3.Blood AJ. Zatorre RJ. Intensely pleasurable responses to music correlate with activity in brain regions implicated in reward and emotion. Proc. Natl. Acad. Sci. U.S.A. 2001;98:11818–11823. doi: 10.1073/pnas.191355898. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Koelsch S, Fritz T, von Cramon Y, et al. 2006. Investigating emotion with music: an fMRI study. Hum. Brain Mapp. 27:239–250. doi: 10.1002/hbm.20180. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Koelsch S, Skouras S, Fritz T, et al. The roles of superficial amygdala and auditory cortex in music-evoked fear and joy. Neuroimage. 2013;81:49–60. doi: 10.1016/j.neuroimage.2013.05.008. [DOI] [PubMed] [Google Scholar]

- 6.Salimpoor VN, van den Bosch I, Kovacevic N, et al. Interactions between the nucleus accumbens and auditory cortices predict music reward value. Science. 2013;340:216–219. doi: 10.1126/science.1231059. [DOI] [PubMed] [Google Scholar]

- 7.Pereira CS, Teixeira J, Figueiredo P, et al. Music and emotions in the brain: familiarity matters. PLoS One. 2011;6:e27241. doi: 10.1371/journal.pone.0027241. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Lehne M, Rohrmeier M. Koelsch S. Tension-related activity in the orbitofrontal cortex and amygdala: an fMRI study with music. Soc. Cogn. Affect. Neurosci. 2014;9:1515–1523. doi: 10.1093/scan/nst141. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Clark CN, Downey LE. Warren JD. Brain disorders and the biological role of music. Soc. Cogn. Affect. Neurosci. 2014 doi: 10.1093/scan/nsu079. & pii: nsu079. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Peretz I, Blood AJ, Penhune V, et al. Cortical deafness to dissonance. Brain. 2001;124:928–940. doi: 10.1093/brain/124.5.928. [DOI] [PubMed] [Google Scholar]

- 11.Griffiths TD, Warren JD, Dean JL. Howard D. “When the feeling's gone”: a selective loss of musical emotion. J Neurol. Neurosurg. Psychiatry. 2004;75:344–345. [PMC free article] [PubMed] [Google Scholar]

- 12.Stewart L, von Kriegstein K, Warren JD, et al. Music and the brain: disorders of musical listening. Brain. 2006;129:2533–2553. doi: 10.1093/brain/awl171. [DOI] [PubMed] [Google Scholar]

- 13.Gosselin N, Samson S, Adolphs R, et al. Emotional responses to unpleasant music correlates with damage to the parahippocampal cortex. Brain. 2006;129:2585–2592. doi: 10.1093/brain/awl240. [DOI] [PubMed] [Google Scholar]

- 14.Satoh M, Nakase T, Nagata K, et al. Musical anhedonia: selective loss of emotional experience in listening to music. Neurocase. 2011;17:410–417. doi: 10.1080/13554794.2010.532139. [DOI] [PubMed] [Google Scholar]

- 15.Rascovsky K, Hodges JR, Knopman D, et al. Sensitivity of revised diagnostic criteria for the behavioural variant of frontotemporal dementia. Brain. 2011;134:2456–2477. doi: 10.1093/brain/awr179. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Omar R, Henley SM, Bartlett JW, et al. The structural neuroanatomy of music emotion recognition: evidence from frontotemporal lobar degeneration. Neuroimage. 2011;56:1814–1821. doi: 10.1016/j.neuroimage.2011.03.002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Hsieh S, Hornberger M, Piguet O, et al. Brain correlates of musical and facial emotion recognition: evidence from the dementias. Neuropsychologia. 2012;50:1814–1822. doi: 10.1016/j.neuropsychologia.2012.04.006. [DOI] [PubMed] [Google Scholar]

- 18.Downey LE, Blezat A, Nicholas J, et al. Mentalising music in frontotemporal dementia. Cortex. 2013;49:1844–1855. doi: 10.1016/j.cortex.2012.09.011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Warren JD, Rohrer JD, Rossor MN. Clinical review: frontotemporal dementia. BMJ. 2013;347:f4827. doi: 10.1136/bmj.f4827.

- 20.Green AC, Baerentsen KB, Stødkilde-Jørgensen H, et al. Music in minor activates limbic structures: a relationship with dissonance? Neuroreport. 2008;19:711–715. doi: 10.1097/WNR.0b013e3282fd0dd8.

- 21.Halpern AR. Perception of structure in novel music. Mem. Cogn. 1984;12:163–170. doi: 10.3758/bf03198430.

- 22.Leaver AM, Halpern AR. Effects of training and melodic features on mode perception. Mus. Percept. 2004;22:117–143.

- 23.Halpern AR, Martin JS, Reed TD. An ERP study of major–minor classification in melodies. Mus. Percept. 2008;25:181–191.

- 24.Trainor LJ, Tsang CD, Cheung VH. Preference for sensory consonance in 2- and 4-month-old infants. Mus. Percept. 2002;20:187–194.

- 25.Pallesen KJ, Brattico E, Bailey C, et al. Emotion processing of major, minor, and dissonant chords: a functional magnetic resonance imaging study. Ann. N.Y. Acad. Sci. 2005;1060:450–453. doi: 10.1196/annals.1360.047.

- 26.Brattico E, Pallesen KJ, Varyagina O, et al. Neural discrimination of nonprototypical chords in music experts and laymen: a MEG study. J. Cogn. Neurosci. 2009;21:2230–2240. doi: 10.1162/jocn.2008.21144.

- 27.Virtala P, Berg V, Kivioja M, et al. The preattentive processing of major vs. minor chords in the human brain: an event-related potential study. Neurosci. Lett. 2011;487:406–410. doi: 10.1016/j.neulet.2010.10.066.

- 28.Khalfa S, Schön D, Anton JL, et al. Brain regions involved in the recognition of happiness and sadness in music. Neuroreport. 2005;16:1981–1984. doi: 10.1097/00001756-200512190-00002.

- 29.Fujisawa TX, Cook ND. The perception of harmonic triads: an fMRI study. Brain Imaging Behav. 2011;5:109–125. doi: 10.1007/s11682-011-9116-5.

- 30.Keane J, Calder AJ, Hodges JR, et al. Face and emotion processing in variant frontotemporal dementia. Neuropsychologia. 2002;40:655–665. doi: 10.1016/s0028-3932(01)00156-7.

- 31.Dara C, Kirsch-Darrow L, Ochfeld E, et al. Impaired emotion processing from vocal and facial cues in frontotemporal dementia compared to right hemisphere stroke. Neurocase. 2013;19:521–529. doi: 10.1080/13554794.2012.701641.

- 32.Fletcher PD, Clark CN, Warren JD. Music, reward and frontotemporal dementia. Brain. 2014;137:e300. doi: 10.1093/brain/awu145.

- 33.Fletcher PD, Downey LE, Witoonpanich P, et al. The brain basis of musicophilia: evidence from frontotemporal lobar degeneration. Front. Psychol. 2013;4:347. doi: 10.3389/fpsyg.2013.00347.

- 34.Sauter DA, Calder AJ, Eisner F, et al. Perceptual cues in non-verbal vocal expressions of emotion. Q. J. Exp. Psychol. 2010;63:2251–2272. doi: 10.1080/17470211003721642.

- 35.Ashburner J. A fast diffeomorphic image registration algorithm. Neuroimage. 2007;38:95–113. doi: 10.1016/j.neuroimage.2007.07.007.

- 36.Zhou J, Seeley WW. Network dysfunction in Alzheimer's disease and frontotemporal dementia: implications for psychiatry. Biol. Psychiatry. 2014;75:565–573. doi: 10.1016/j.biopsych.2014.01.020.

- 37.Griffiths TD, Warren JD. The planum temporale as a computational hub. Trends Neurosci. 2002;25:348–353. doi: 10.1016/s0166-2236(02)02191-4.

- 38.Peelen MV, Atkinson AP, Vuilleumier P. Supramodal representations of perceived emotions in the human brain. J. Neurosci. 2010;30:10127–10134. doi: 10.1523/JNEUROSCI.2161-10.2010.

- 39.Abrams DA, Lynch CJ, Cheng KM, et al. Underconnectivity between voice-selective cortex and reward circuitry in children with autism. Proc. Natl. Acad. Sci. U.S.A. 2013;110:12060–12065. doi: 10.1073/pnas.1302982110.

- 40.Watson R, Latinus M, Noguchi T, et al. Crossmodal adaptation in right posterior superior temporal sulcus during face–voice emotional integration. J. Neurosci. 2014;34:6813–6821. doi: 10.1523/JNEUROSCI.4478-13.2014.

- 41.Mizuno T, Sugishita M. Neural correlates underlying perception of tonality-related emotional contents. Neuroreport. 2007;18:1651–1655. doi: 10.1097/WNR.0b013e3282f0b787.

- 42.Kreifelts B, Ethofer T, Shiozawa T, et al. Cerebral representation of non-verbal emotional perception: fMRI reveals audiovisual integration area between voice- and face-sensitive regions in the superior temporal sulcus. Neuropsychologia. 2009;47:3059–3066. doi: 10.1016/j.neuropsychologia.2009.07.001.

- 43.Dobek CE, Beynon ME, Bosma RL, et al. Music modulation of pain perception and pain-related activity in the brain, brain stem, and spinal cord: a functional magnetic resonance imaging study. J. Pain. 2014;15:1057–1068. doi: 10.1016/j.jpain.2014.07.006.

- 44.Goll JC, Ridgway GR, Crutch SJ, et al. Nonverbal sound processing in semantic dementia: a functional MRI study. Neuroimage. 2012;61:170–180. doi: 10.1016/j.neuroimage.2012.02.045.

- 45.Warren JD, Rohrer JD, Schott JM, et al. Molecular nexopathies: a new paradigm of neurodegenerative disease. Trends Neurosci. 2013;36:561–569. doi: 10.1016/j.tins.2013.06.007.

Associated Data

Supplementary Materials

Table S1. Demographic and general neuropsychological data for participant groups

Table S2. Experimental auditory stimuli and conditions

Table S3. Significant contrasts and regions of activation for musicophilic versus nonmusicophilic patients