Abstract

Introduction

We sought to create a valid framework for detecting Adverse Events (AEs) in the high-risk setting of Helicopter Emergency Medical Services (HEMS).

Methods

We assembled a panel of 10 expert clinicians (n=6 emergency medicine physicians and n=4 prehospital nurses and flight paramedics) affiliated with a large multi-state HEMS organization in the Northeast U.S. We used a modified Delphi technique to develop a framework for detecting AEs associated with the treatment of critically ill or injured patients. We used a widely applied measure, the Content Validity Index (CVI), to quantify the validity of the framework’s content.

Results

The expert panel of 10 clinicians reached consensus on a common AE definition and a four-step process for AE detection in HEMS. The consensus-based framework has three main components: 1) a trigger tool, 2) a method for rating proximal cause, and 3) a method for rating AE severity. The CVI findings identify which components of the framework met criteria for content validity.

Conclusions

We demonstrate a standardized process for developing a content-valid framework for AE detection. The framework may serve as a model for developing AE identification methods in other settings, including ground-based EMS.

Keywords: EMS, Safety, Adverse Events, Error, Validity, Measurement

INTRODUCTION

Emergency Medical Services (EMS) providers give care to over 36 million patients annually.1 Patients often have traumatic injury or undifferentiated acute illness. EMS providers respond rapidly, make prompt decisions with limited data, and deliver care in diverse settings such as a roadside, a public place, or a patient’s home. The time from initial patient contact to diagnosis, care delivery, and rapid transportation to a hospital is measured in minutes, not hours. EMS providers work under stressful conditions with limited resources, facing external factors that can impede the delivery of safe, lifesaving care.

Adverse events (AEs) have been defined as unintended injuries caused by medical management (rather than by underlying disease) that result in measurable disability.2 The risk of an AE in the EMS environment is high, yet methods to detect AEs are underdeveloped and research on safety outcomes is sparse. Common AEs in EMS include patient injury from a stretcher drop, morbidity or mortality due to an improperly managed airway, missed diagnosis, and negative effects following a medication error.3–5 EMS providers may admit to near misses, errors, and AEs, but they underreport them, often out of fear of punishment or retaliation.6 Underreporting can impede the creation of a safety culture; a weak safety culture is common in many EMS organizations and is a precursor to frequent AEs, errors, and safety-compromising behavior.7,8

Detection of AEs in the EMS setting is complicated by a lack of consensus on appropriate sources of data for different clinical environments. Many analysts believe that the best way to detect AEs is through direct observation of patient care and continuous clinical surveillance.9 However, these two approaches are expensive and rarely used. Other data sources include malpractice claims, administrative databases, anonymous reporting, and reviews of patient care reports/charts.3,9–11 Anonymous reporting is the least costly method, but the breadth and depth of the data collected are limited and represent what many may consider the “tip of the AE iceberg.”10 Patient care reports (PCRs) are the primary source of AE data and are considered a mainstay of patient safety and quality assurance research.9,12

Since the publication of the Institute of Medicine’s 1999 report To Err Is Human,13 which called for improved methods to detect AEs, investigators in all sectors of medicine have struggled to develop a reliable and valid method for AE detection.9,14 The Harvard Medical Practice (HMP) Study is the most commonly cited framework for AE identification. It used a two-step procedure in which nurses or administrators screened PCRs for possible errors or AEs and forwarded flagged records to two physicians, who assessed harm using a six-point harm scale.2 Variations of the HMP method include the use of multiple teams to screen PCRs,15 defined screening criteria and group consensus instead of independent nurse review,16 and review by a team of physicians after initial screening. Although research supports the use of multiple reviewers and raters during PCR review,17,18 the optimal process is undefined.19

The Institute for Healthcare Improvement (IHI) seeks to improve the process, and increase the efficiency, of identifying healthcare AEs by developing trigger tools that are applied to PCRs and other electronic patient records.20 Triggers are a way to operationalize AE detection. They are actions (e.g., endotracheal intubation) or key words or phrases (e.g., infiltrated, deteriorated, medication names) in a PCR that have a high probability of being linked to patient harm.21 Trigger tools may limit the time required to review a chart (e.g., to 20 minutes or less) and produce a collection of PCRs with a high probability of containing an AE.21 Investigators report that reliability testing supports the IHI Global Trigger Tool’s utility as an efficient and reliable technique for inclusion in AE detection methodologies.20
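To make the trigger concept concrete, the Python sketch below shows keyword-style triggers flagging PCR narratives for full review. It assumes PCRs are available as free text keyed by a chart ID; the trigger names and phrases are hypothetical illustrations, not the IHI Global Trigger Tool or the triggers developed in this study, and real trigger tools also rely on structured fields (e.g., vital sign trends) that simple phrase matching cannot capture.

```python
import re
from dataclasses import dataclass

# Hypothetical triggers for illustration only: each maps a trigger name to
# phrases whose presence in a PCR narrative "fires" that trigger.
TRIGGERS: dict[str, list[str]] = {
    "airway_intervention": ["endotracheal intubation", "intubated", "surgical airway"],
    "deterioration": ["deteriorated", "worsening", "decreased gcs"],
    "medication": ["naloxone", "succinylcholine", "dopamine"],
}


@dataclass
class ScreenResult:
    pcr_id: str
    fired: list[str]  # names of triggers whose phrases appear in the narrative


def screen_pcr(pcr_id: str, narrative: str) -> ScreenResult:
    """Case-insensitive, whole-word phrase matching against one PCR narrative."""
    text = narrative.lower()
    fired = [
        name
        for name, phrases in TRIGGERS.items()
        if any(re.search(r"\b" + re.escape(p) + r"\b", text) for p in phrases)
    ]
    return ScreenResult(pcr_id, fired)


def select_for_review(pcrs: dict[str, str]) -> list[ScreenResult]:
    """Keep only charts with at least one fired trigger; these go to full clinician review."""
    results = (screen_pcr(pcr_id, text) for pcr_id, text in pcrs.items())
    return [r for r in results if r.fired]


pcrs = {
    "PCR-001": "Patient intubated on scene; GCS deteriorated en route.",
    "PCR-002": "Stable vital signs, uneventful transport.",
}
for result in select_for_review(pcrs):
    print(result.pcr_id, result.fired)  # prints: PCR-001 ['airway_intervention', 'deterioration']
```

Phrase matching of this kind favors sensitivity over specificity: every flagged chart still needs clinician review to decide whether an AE actually occurred.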

Multiple studies have considered adverse outcomes of critically ill patients transferred within hospitals or between intensive care facilities.22–38 A common limitation of these studies is a lack of attention to the content validity and reproducibility of the chosen method for AE detection in the EMS setting. Three studies sought to define and identify assorted AEs in the EMS environment. Seymour and colleagues reviewed Helicopter EMS (HEMS) charts for major or minor AEs involving mechanically ventilated patients.39 They defined major AEs as events that included death, cardiac/respiratory arrest, pneumothorax, and seizure. Minor AEs were based on physiologic changes in respiratory status, cardiovascular status, and other vital signs. The authors did not assess the reliability or validity of the AE detection method. MacDonald and associates reviewed PCRs combined with anonymous reports from a large Canadian HEMS system.40 Two clinicians classified AEs based on a two-level measure of harm adapted from the taxonomy proposed by Chang et al. (2005).40,41 MacDonald and colleagues did not evaluate the content validity of their tool, and agreement between clinician raters was moderate. In the third study, five emergency medicine physicians developed a consensus-based AE definition and a seven-level measure of AE severity.42 The measure is anchored from 1 = “AE with harm as a result of commission” to 7 = “No AE identified.”42 Agreement between clinician raters was fair to poor, and the validity of the tool was not assessed.

The lack of a reliable and valid AE measure threatens confidence in current AE evidence and inhibits safety improvement. We sought to establish a uniform framework for AE detection in the HEMS setting, a component of prehospital care that serves critically ill and injured patients.

METHODS

In the first phase, called the “development phase,”43 we assembled a panel of experts to agree on the parameters of a draft framework. During this phase, we used a modified Delphi technique that included a) assembling experts and b) repeated “polling” of the experts with Internet-based surveys, complemented by c) conferences or face-to-face meetings to clarify and establish consensus on a uniform structure (framework) of steps for identifying AEs.44 Our panelists proposed draft descriptions and example indicators for each component of the framework, which we considered essential to operationalizing use of the framework by clinician reviewers. We did not predefine or limit the total number of surveys or face-to-face meetings. We concluded the development phase when the experts reached consensus on the structure of the framework and on the draft descriptions and example indicators of its components.

The second phase was the “quantification phase,” in which the experts independently reviewed the draft descriptions and draft example indicators of framework components proposed in the development phase. The experts recorded a rating of “relevance” for each draft description and example indicator using a secure Internet-based survey. We used a 4-point scale for these ratings, anchored as prescribed in the literature from “not relevant” to “highly relevant.”43 These ratings were then used to quantify content validity with the Content Validity Index (CVI).43 Content validity refers to how well a measurement’s components represent the full domain or range of content being measured.45 Judgment of a measure’s content validity is performed by individuals with expertise in a particular field (e.g., clinicians with deep knowledge and experience in prehospital emergency medicine).43,45,46 The University of Pittsburgh Institutional Review Board approved this study.

Study sample of experts

Our panel of experts included ten clinicians experienced in both emergency medicine and HEMS. The clinicians were a convenience sample recruited from the lead investigator’s home academic medical center and affiliated clinical divisions. Following the Harvard Medical Practice Study and other efforts, we stratified our ten experts into three groups.17,47 The senior physician group included three board-certified emergency physicians with >20 years of experience as emergency physicians and experience providing medical direction to EMS workers. The mid-career physician group included three board-certified emergency physicians, all with >15 years of EMS-related experience as a medical director or as an EMS worker before becoming a physician. The Quality Assurance (QA) officer group included four individuals certified as a flight paramedic (FP-C) or flight nurse (CFRN) who served as quality assurance officers in HEMS.

Study protocol

First, a member of our study team (PDP) began the study’s development phase by educating the expert panel about the literature on AEs. Education involved a brief summary of: a) current statistics of AEs in healthcare;48 b) known literature of AE investigations in EMS settings;3,4,40,42 c) findings from our previous experience with AE detection;42 d) commonly used methods and data sources for AE detection (i.e., trigger tools and chart review);12,20,48 and e) perceived ambiguity in AE definitions.49,50 A discussion about the importance of AE definitions followed. PDP emphasized that a common definition is an essential starting point for accurate and reliable AE measurement.41,50–53 The panel discussed current research, such as variation in the sensitivity and specificity of AE definitions.17,42,49,50 Some definitions include the presence of serious injury or disability leading to additional care or hospitalization, whereas others include only the “potential” for a negative outcome.50

One of the discussed definitions read: “An adverse event in EMS is a harmful or potentially harmful event occurring during the continuum of EMS care that is potentially preventable and thus independent of the progression of the patient’s condition.”42 The committee agreed to adopt this definition because it included the term “potentially harmful,” which the panel believed would lead to a broader, more sensitive approach to AE detection rather than a conservative, more specific one. This emphasis on sensitivity may reflect a perception among emergency physicians that AEs can occur as a result of EMS care but not be recognized until later in the hospital course.

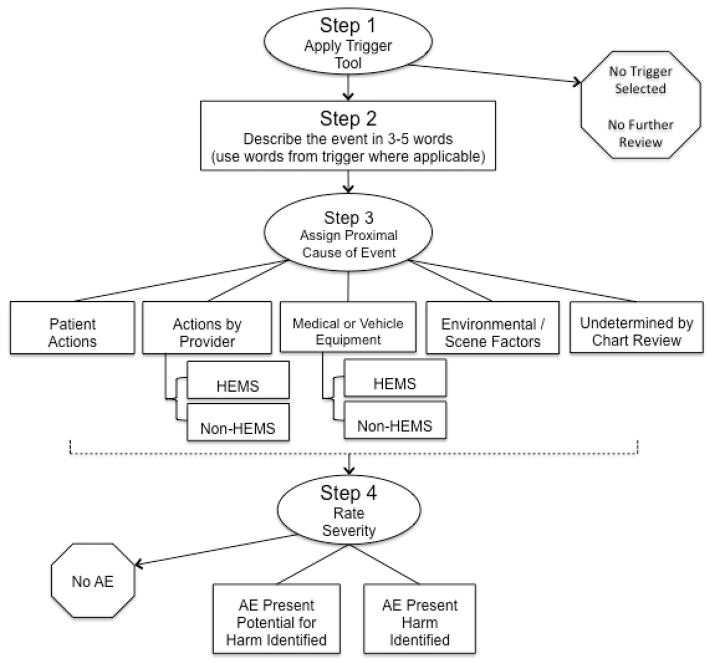

Second, PDP continued the development phase by establishing a draft AE detection framework based on previous research, including the IHI’s trigger tool methodology and the Joint Commission’s Safety Event Taxonomy.20,41 The draft framework was organized into a three-step process: Step 1 – Apply Trigger Tool, Step 2 – Rate AE Severity, and Step 3 – Assign Proximal Cause (Figure 1). The draft framework was presented to the panel of experts, who then convened in multiple face-to-face group meetings to discuss how the framework’s structure, components, and content could be improved to support reliable and accurate AE detection. We concluded the development phase once the expert panel reached consensus on the overall structure of the framework and on each of its components.

Figure 1.

Initial draft of the framework for detecting AEs in HEMS

We then moved to the “quantification phase,” in which experts reviewed the content of the prepared framework individually rather than as a group. Each expert rated the “relevancy” of each prepared description and indicator of the framework on a 4-point scale: 1=not relevant, 2=somewhat relevant, 3=quite relevant, and 4=highly relevant.43 The ten experts provided a rating for each of the following: 1] the 14 draft triggers; 2] each prepared description and draft indicator of the five draft components of proximal cause; 3] each prepared description and draft indicator of the three draft components of AE severity; and 4] the prepared description(s) and draft indicator(s) for each proximal cause component. Before rating relevance, PDP presented the panel with a sample of PCRs selected at random from a local air-medical agency and used these sample PCRs to illustrate a step-by-step process for applying the framework.

We then collated expert ratings of framework components and used them to calculate standard measures of the framework’s content validity: the Item and Scale Content Validity Indices (I-CVI and S-CVI), based on techniques developed by Lynn, Waltz and Bausell.43 The I-CVI is the proportion of raters assigning a rating of 3 (quite relevant) or 4 (highly relevant).43 An item with an I-CVI of 0.78 or greater is considered content valid.43,54 We calculated S-CVI scores for each component (triggers, proximal cause, and AE severity) and for the entire framework (an overall S-CVI). The S-CVI average (S-CVI/Ave) is the sum of the I-CVI scores for all items divided by the total number of items.43 An S-CVI/Ave of 0.90 or greater implies high scale-level content validity.43,54
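The I-CVI and S-CVI/Ave definitions above reduce to simple arithmetic. The Python sketch below assumes each item's ratings are stored as a list of integers on the 1 to 4 relevance scale; the item names and ratings are hypothetical, not data from this study.

```python
from statistics import mean

RELEVANT = {3, 4}      # 3 = quite relevant, 4 = highly relevant
I_CVI_CUTOFF = 0.78    # item-level threshold used in the text
S_CVI_CUTOFF = 0.90    # scale-level (S-CVI/Ave) threshold used in the text


def i_cvi(ratings: list[int]) -> float:
    """Item CVI: proportion of experts who rated the item 3 or 4."""
    if not ratings or any(r not in (1, 2, 3, 4) for r in ratings):
        raise ValueError("need at least one rating on the 1-4 relevance scale")
    return sum(r in RELEVANT for r in ratings) / len(ratings)


def s_cvi_ave(items: dict[str, list[int]]) -> float:
    """Scale CVI (averaging approach): mean of the item I-CVIs."""
    return mean(i_cvi(r) for r in items.values())


# Hypothetical ratings from 10 experts for two illustrative items.
ratings = {
    "item_a": [4, 4, 3, 4, 3, 4, 4, 3, 4, 2],  # 9 of 10 rated 3 or 4
    "item_b": [2, 3, 1, 4, 2, 3, 2, 4, 3, 2],  # 5 of 10 rated 3 or 4
}
for name, r in ratings.items():
    score = i_cvi(r)
    print(name, score, "valid" if score >= I_CVI_CUTOFF else "below cutoff")

scale = s_cvi_ave(ratings)
print(f"S-CVI/Ave = {scale:.2f}", "meets 0.90" if scale >= S_CVI_CUTOFF else "below 0.90")
```

With ten raters, an item needs at least 8 of 10 ratings of 3 or 4 (I-CVI = 0.80) to clear the 0.78 cut point.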

RESULTS

We used 8 face-to-face meetings and 11 online surveys to complete the development phase of the AE framework. The panel of experts unanimously adopted a previously developed AE definition: “An Adverse Event is a harmful or potentially harmful event occurring during the continuum of EMS care that is potentially preventable and thus independent of the progression of the patient’s condition.”42 The experts considered this definition appropriate for the HEMS setting because it was sensitive to the possibility that an AE may occur but not manifest until after transfer of care from EMS personnel to in-hospital providers. The panel agreed that patients in the prehospital setting display ambiguous features and that access to patient history is limited. They believed that EMS providers must integrate conflicting information during the short but care-intensive prehospital interval and are often unaware of the patient’s response to their interventions. They noted that an AE could occur at any moment, with risks multiplied in austere environments, during encounters with violent patients, or during rapid transport using lights and sirens.

Consensus on framework structure

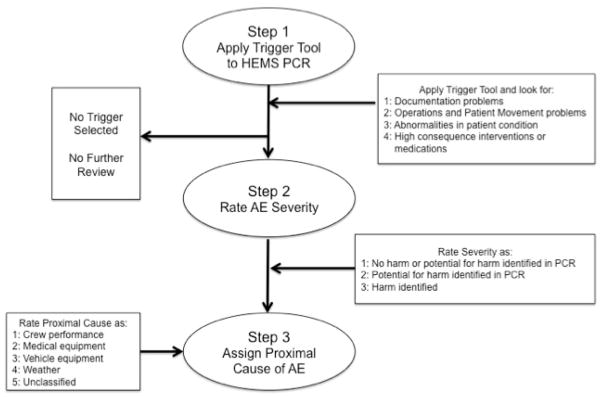

The panel reached consensus on a four-step framework structure, shown in Figure 2 and hereafter referred to as the PittAETool. The chart reviewer (rater) first applies a trigger tool (step 1) and then composes a description of the event that prompted selection of the triggers (step 2). The rater then assigns a proximal cause (step 3), followed by a rating of AE severity (step 4). The panel also reached consensus on five categories of proximal cause, each with a varying number of examples: 1) Patient Actions – The AE was the result of action(s) by the patient; 2) Actions by Provider – The AE was the result of action(s) or inaction(s) by the crew [stratified into two sub-categories: HEMS crew versus non-HEMS crew]; 3) Medical or Vehicle Equipment – [stratified into two sub-categories: HEMS crew versus non-HEMS crew]; 4) Environmental / Scene Factors – Factors that may result from weather conditions or factors on the ground/scene or other, including temperature, light, and scene safety; 5) Undetermined by Chart Review – The proximal cause of the AE (regardless of severity) cannot be determined by the information available in the chart. See Appendix A for a full description of each category, its definition, and a listing of all examples.

Figure 2.

Consensus based framework for identifying AEs in HEMS: The PittAETool

The experts experimented with applying the framework by posing example patient encounters. For example, one expert posited the following scenario: “If a patient presents with a GCS of 3, the medical crew is required to restrain the patient as part of a protocol involving medication-assisted intubation. Does an AE exist if a crew failed to restrain the patient’s arms per protocol but the patient was delivered to the hospital without incident? Does an AE exist if a crew failed to restrain the patient per protocol, and the patient woke up, used his or her unrestrained hand to disrupt care, and died? In both scenarios, the crew failed to restrain the patient per protocol, but one event was harmful.” These example applications helped the expert panel revise the structure of the draft framework so that its step 2 (AE severity rating) and step 3 (proximal cause rating) were reversed. This reversal allows a rater to assign proximal cause before rating AE severity. The panel commented that the reversal allowed a reviewer to consider more information before deciding on AE severity.

The panel reached consensus on a structure for rating AE severity that included three categories: 1) No AE – A case where a trigger was selected, but no AE was identified after full review; 2) AE Present with Potential for Harm – An action that may lead to injury or harm, but there is no evidence that an injury or harm occurred; 3) AE Present and Harm Identified – An action or omission that led to injury or harm regardless of severity.
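One way to picture the consensus four-step sequence, including the panel's decision to assign proximal cause before rating severity, is as a chart-review record that enforces step order. The sketch below is a hypothetical Python representation, not software described by the study; the class, field, and enum names are assumptions. It also requires a proximal cause for every reviewed chart, a point (for example, for "No AE" charts) that the text above does not settle.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class ProximalCause(Enum):
    # The five consensus categories; provider and equipment causes are split
    # into HEMS and non-HEMS sub-categories, as in Table 1.
    PATIENT_ACTIONS = "Actions by patient"
    PROVIDER_HEMS = "Actions by provider: HEMS crew"
    PROVIDER_NON_HEMS = "Actions by provider: non-HEMS"
    EQUIPMENT_HEMS = "Medical or vehicle equipment: HEMS crew"
    EQUIPMENT_NON_HEMS = "Medical or vehicle equipment: non-HEMS"
    ENVIRONMENT_SCENE = "Environmental / scene factors"
    UNDETERMINED = "Undetermined by chart review"


class Severity(Enum):
    NO_AE = "No AE"
    AE_POTENTIAL_HARM = "AE present: potential for harm"
    AE_HARM_IDENTIFIED = "AE present: harm identified"


@dataclass
class ChartReview:
    """Hypothetical record of one rater's PittAETool review of a single PCR."""
    pcr_id: str
    triggers: list[str] = field(default_factory=list)   # step 1: triggers selected
    description: str = ""                               # step 2: event narrative
    proximal_cause: Optional[ProximalCause] = None      # step 3
    severity: Optional[Severity] = None                 # step 4

    def describe(self, text: str) -> None:
        if not self.triggers:
            raise ValueError("Step 1 first: no trigger selected for this chart")
        self.description = text

    def assign_cause(self, cause: ProximalCause) -> None:
        if not self.description:
            raise ValueError("Step 2 first: describe the event that prompted the triggers")
        self.proximal_cause = cause

    def rate_severity(self, severity: Severity) -> None:
        # The panel moved cause assignment ahead of severity rating so the rater
        # has more information before judging severity; enforce that order here.
        if self.proximal_cause is None:
            raise ValueError("Step 3 first: assign a proximal cause before rating severity")
        self.severity = severity


# Usage, modeled on the Table 1 example "Stretcher drop with injury to patient."
review = ChartReview(
    "PCR-001",
    triggers=["Injury to patient or team member during patient encounter / transport"],
)
review.describe("Stretcher dropped during loading; patient sustained an injury.")
review.assign_cause(ProximalCause.EQUIPMENT_HEMS)
review.rate_severity(Severity.AE_HARM_IDENTIFIED)
print(review.severity.value)  # prints: AE present: harm identified
```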

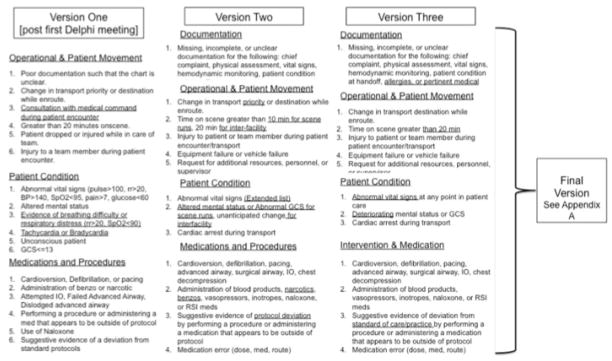

The trigger tool component is a key element of the overall framework. Our experts sought a comprehensive list of triggers sensitive enough to detect minor events, such as minor documentation errors, yet specific enough to detect events with a high probability of patient harm or potential for harm. For example, during earlier meetings, the expert panel considered the following trigger appropriate: “Abnormal vital signs at any point in patient care.” However, the experts agreed that a large proportion of prehospital patients have abnormal vital signs, so this trigger would have flagged numerous charts with a low probability of harm (Figure 3). In the trigger’s final version, experts agreed on the following: “A worsening trend (deterioration) in patient hemodynamic or mental status indicators such as vital signs, level of consciousness, or Glasgow Coma Score (GCS).”

Figure 3.

Development of the Trigger Tool component

Another trigger, “Time on scene greater than 20 minutes,” generated debate. This trigger is tied to a common time-based performance measure in prehospital EMS. Experts expressed concern that on-scene times longer than 20 minutes could be a marker of possible error or patient harm in select conditions such as stroke, multi-system trauma, and myocardial infarction. Although all ten panelists saw this trigger as important during the development phase and Delphi process, it was rated as having low relevance during the quantification phase, suggesting unacceptably low specificity as a trigger.

Content validity scores

We used I-CVI and S-CVI scores to summarize our 10 experts’ ratings of the content validity of the draft framework. Eleven of the 14 draft triggers met the cut point for acceptable content validity (I-CVI ≥0.78; Table 1). For the proximal cause component, 23 of the 38 descriptions and example indicators across the five categories met the 0.78 cut point (Table 1). For AE severity, the S-CVI for the definition and indicators of the “No Adverse Event” category fell below the 0.90 cut point (0.80 with the grey-highlighted items removed; Table 1). The S-CVIs for the remaining two severity categories, “AE Present – Potential for Harm” and “AE Present – Harm Identified,” were acceptable at 0.92 and 0.97, respectively.
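The trigger results can be checked against Table 1 directly. The sketch below transcribes the 14 trigger I-CVIs from Table 1 and recomputes the number of items meeting the 0.78 cut point and the S-CVI/Ave. It assumes, as our reading of the table footnote, that a starred S-CVI is the average over items at or above 0.78 only.

```python
# I-CVI values for the 14 draft triggers, transcribed from Table 1 in table order.
TRIGGER_I_CVI = [0.80, 0.70, 0.50, 0.90, 1.00, 1.00, 1.00,
                 1.00, 0.80, 1.00, 0.80, 1.00, 1.00, 0.70]


def summarize(i_cvis: list[float], cutoff: float = 0.78) -> tuple[int, float, float]:
    """Return (items meeting cutoff, S-CVI/Ave over all items, S-CVI/Ave over retained items)."""
    retained = [v for v in i_cvis if v >= cutoff]
    if not retained:
        raise ValueError("no items meet the cutoff")
    s_cvi_all = sum(i_cvis) / len(i_cvis)
    s_cvi_retained = sum(retained) / len(retained)
    return len(retained), s_cvi_all, s_cvi_retained


n, all_items, retained_only = summarize(TRIGGER_I_CVI)
print(n, round(all_items, 2), round(retained_only, 2))  # prints: 11 0.87 0.94
```

Under that assumption, 11 of 14 triggers are retained, matching the text, and the recomputed value matches the starred 0.94 reported for the trigger component; the all-item average (about 0.87) is shown only for comparison.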

Table 1.

Content validity findings

| Trigger Tool Items | I-CVI | S-CVI |
|---|---|---|
| Documentation Triggers | | |
| Missing, incomplete, or unclear documentation for the following: chief complaint, physical assessment, vital signs, hemodynamic monitoring (e.g., ETCO2), allergies, pertinent history or medications, patient condition at handoff to facility. | 0.80 | |
| Operational & Patient Movement Triggers | | |
| Change in transport mode or destination due to change in weather or patient condition. | 0.70 | |
| Time from dispatch to initial patient contact exceeds accepted standards. | 0.50 | |
| Time from initial patient contact to transfer of care exceeds accepted standards. | 0.90 | |
| Injury to patient or team member during patient encounter / transport (e.g., stretcher drop, needle stick, or other). | 1.00 | |
| Request for additional resources, personnel, or supervisor due to change in patient condition. | 1.00 | |
| Patient Condition Triggers | | |
| A worsening trend (deterioration) in patient hemodynamic or mental status indicators (e.g., vital signs, LOC, GCS score). | 1.00 | |
| Cardiac arrest during transport. | 1.00 | |
| Intervention and Medication Triggers | | |
| Use of any of the following interventions during patient care: (cardioversion, defibrillation, transcutaneous pacing, advanced airway attempt, surgical airway, Intraosseous (IO), chest decompression, chest tube). | 0.80 | |
| Failure of any intervention or procedure during patient care (some examples include: inability to obtain vascular access after a reasonable amount of time or number of attempts, failed IO, failed Nasogastric Tube (NG) placement, failed Foley placement, failed cardioversion, failed defibrillation, failed transcutaneous pacing, failed advanced airway or rescue airway, failed surgical airway, failed chest decompression). | 1.00 | |
| Use of the following medications or fluids: (blood products, vasopressors or inotropes [e.g., dobutamine, dopamine], naloxone, RSI medications [e.g., succinylcholine]). | 0.80 | |
| Suggestive evidence of deviation from standard of care by performing an intervention or administering a medication that appears to be outside of protocol, or failure to perform an intervention or provide a medication that is within the standard of care. | 1.00 | |
| Medication error (e.g., administering wrong or unapproved dose, administering wrong or unapproved medication, administering medication via wrong or unapproved route). | 1.00 | |
| Other | | |
| No trigger directly applies, but I have suspicion that an AE may be present. | 0.70 | |
| Trigger S-CVI (the average I-CVI for trigger items) | | 0.94* |

| Determination of Proximal Cause | I-CVI | S-CVI |
|---|---|---|
| Proximal Cause – Actions by Patient | | |
| Definition: The AE was the result of action(s) by the patient. | 1.00 | |
| (A) Patient with capacity refuses transport to a specialty care facility. | 0.80 | 0.94 |
| (B) Patient with capacity refuses treatment specified in protocol. | 0.90 | |
| (C) Patient with capacity discontinues ongoing therapy. | 1.00 | |
| (D) Patient takes action that results or may result in harm to themselves or others. | 1.00 | |
| Proximal Cause – Actions by Provider | | |
| Definition: The AE was the result of action(s) or inaction(s) by the crew. | 1.00 | |
| HEMS Crew | 0.93 | |
| (A) Medication errors | 1.00 | |
| (B) Procedural errors (e.g., esophageal intubation) | 1.00 | |
| (C) Failure to zero the A-line prior to vehicle / aircraft moving. | 0.70 | |
| (D) Failure to confirm orders from medical command. | 0.80 | |
| (E) Failure to activate cath-lab for STEMI patient | 0.90 | |
| (F) Failure to treat pain (e.g., extremity pain or treatment as indicated by protocol or medical oversight) | 0.90 | |
| (G) Documentation error | 0.40 | |
| (H) Inability to establish vascular access after a reasonable amount of time or number of attempts | 0.50 | |
| (I) Failure to administer O2 to a hypoxic patient | 1.00 | |
| (J) Failure to physically or chemically restrain a patient that is perceived to be at risk of harm to themselves or the crew | 0.90 | |
| Non-HEMS | | |
| (A) Delay in patient care due to delays by the referring or receiving facility (e.g., patient in CT scanner, patient receiving dialysis). | 0.60 | |
| Proximal Cause – Medical or Vehicle Equipment | | |
| Definition: Failure of the equipment, failure to troubleshoot and correct common problems with the equipment, or failure to remove defective equipment from service. | 1.00 | |
| HEMS Crew | | |
| (A) Suctioning device malfunctioned during use. | 0.90 | 0.98* |
| (B) An unanticipated malfunction with transport vehicle. | 0.60 | |
| (C) Nasal capnography not available or O2 supply diminished during transport. | 0.90 | |
| (D) All or any stretcher drop, tip, or malfunction. | 1.00 | |
| (E) Malfunction of laryngoscope or ET tube (e.g., bulb failure or balloon failure) | 1.00 | |
| (F) Fluid or medication pump failure or malfunction. | 1.00 | |
| (G) Ventilator malfunction | 1.00 | |
| (H) Failure of cardiac monitor | 1.00 | |
| (I) Missing equipment that is needed for use. | 1.00 | |
| Non-HEMS | | |
| (A) Delay in transport due to patient’s weight | 0.40 | |
| (B) Delay in transport due to lack of appropriate equipment (non-HEMS) | 0.40 | |
| Proximal Cause – Environmental/Scene Factors | | |
| Definition: Factors that may result from weather conditions or factors on the ground/scene (or other). This includes temperature, light, and scene safety. | 0.60 | |
| (A) Cold causing fogging of optics on a video laryngoscope system. | 0.40 | 0.80* |
| (B) Freezing of fluids or drugs (e.g., mannitol). | 0.70 | |
| (C) Diversion to a non-trauma center due to weather conditions for a trauma patient. | 0.60 | |
| (D) Scene not safe delaying landing or take-off, prolonging on-scene time or pre-arrival (e.g., a remote landing zone). | 0.60 | |
| (E) Delay in managing patient’s airway due to prolonged extrication. | 0.80 | |
| (F) Delay due to prolonged arrival by ground or intercepting crew. | 0.70 | |
| (G) A delay in patient packaging and transport due to weather. | 0.30 | |
| Proximal Cause – Undetermined by Chart Review | | |
| Definition: The proximal cause of the AE (regardless of severity) cannot be determined by the information available in the chart. | 0.60 | |
| Proximal Cause S-CVI (the average I-CVI for proximal cause items) | | 0.95* |

| Judgment of Severity | I-CVI | S-CVI |

|---|---|---|

| No Adverse Event | ||

| Definition: A case where a Trigger was selected (e.g., cardiac arrest during transport), but no AE identified after full review. | 0.70 | |

| (A) Cardiac arrest during transport, but all documentation supports the crews committed no error and followed protocol(s) as prescribed. | 0.70 | 0.80* |

| (B) Trigger selected due to administration of a medication (e.g., naloxone). However, the use of naloxone was indicated for patient overdose of opiate and is not related to delivery of care (e.g., the crewmembers did not overdose the patient). | 0.60 | |

| (C) The time to transport the patient was delayed for necessary care, diagnostic procedures, or interventions outside control of medical crew (e.g. the patient was in the CT scanner at the referring facility or the patient requires a balloon pump, which will require longer time at bedside during the transition of care). | 0.80 | |

| (D) Missing, incomplete, or unclear documentation. | 0.60 | |

| AE Present – Potential for Harm | ||

| Definition: An action that may lead to injury or harm but there is NO evidence that an injury or harm occurred. | 0.89 | |

| (A) A medication error in which one type of medication (e.g., ketamine) was administered to an intubated patient for sedation in place of more appropriate medication or medication listed as the standard of care (off- line protocols e.g., Versed). | 1.00 | 0.92 |

| (B) A 22kg pediatric burn patient received 0.2mg/kg of morphine (4.4mg total) for pain rather than 0.1mg/kg (2.2mg total). The pediatric patient may exhibit no adverse reactions (i.e. hypotension and reduced respirations). | 1.00 | |

| (C) Administration of 500cc of saline when 300cc bolus was ordered by medical oversight. | 0.78 | |

| (D) Patient received 4mg of Midazolam when the oversight physician ordered 2mg. | 0.89 | |

| (E) Failure to administer ASA for a patient with chest pain for suspected cardiac etiology. | 1.00 | |

| (F) Failure to check vital signs before and after medication administration (i.e., Nitroglycerin). | 0.89 | |

| (G) Not adequately protecting patient’s airway. For example, no airway adjunct utilized for an unconscious patient with normal vital signs and no evidence of desaturation. | 1.00 | |

| (H) Stretcher dropped or tipped, but no evidence the patient or crew member(s) were injured. | 1.00 | |

| (I) Failure to immobilize a fracture in a trauma patient. | 0.78 | |

| (J) Failure to control hemorrhage in a hemodynamically stable patient. | 1.00 | |

| (K) Administration of Nitroglycerin to patient with documented use of phosphodiesterase inhibitors (e.g., Viagra). No evidence of hypotension is found. | 0.89 | |

| (L) Immediately recognized and quickly corrected missed intubation with no evidence of patient deterioration. | 0.78 | |

| AE Present – Harm Identified | ||

| Definition: An action or omission that led to injury or harm regardless of severity. | 1.00 | |

| (A) Delay in recognition of missed intubation with evidence of patient deterioration; or failure to control airway within a reasonable amount of time or number of attempts with evidence of patient deterioration. | 1.00 | 0.97 |

| (B) A seizure patient administered 4mg Midazolam when medical oversight gave orders for 2mg. The patient lost spontaneous respirations. Crewmembers responded by use of BVM ventilations and intubation. | 1.00 | |

| (C) Crew member intends to administer amiodarone for treatment of wide complex tachycardia at rate of 160. Crew member administers 10 mg Diltiazem and patient suffers a v-fib arrest. | 1.00 | |

| (D) Stretcher drop with injury to patient. | 1.00 | |

| (E) Failure to administer eclamptic patient Magnesium Sulfate and then seizes for a period of time. | 1.00 | |

| (F) Patient with a GCS ≤8 and evidence of respiratory compromise (i.e., SPO2 <90) but no airway intervention by crewmembers. | 1.00 | |

| (G) Crewmembers fail to activate specialty team where indicated (i.e. trauma team, cath lab, stroke alert). | 0.89 | |

| (H) Failure to decompress a tension-pneumothorax | 1.00 | |

| (I) Failure to adequately sedate a patient that was intubated as evidenced by follow-up from receiving facility that documented patient recall of events. | 0.89 | |

| (J) Administration of Nitroglycerin to patient with documented use of phosphodiesterase inhibitors (e.g., Viagra). Hypotension results. | 1.00 | |

| (K) A patient suffering from an anaphylactic reaction is administered 0.3 mg of Epinephrine 1:1000 intravenously (IV) instead of intramuscularly (IM) and develops acute chest pain and changes on the EKG monitor. | 1.00 | |

| (L) Use of unsynchronized cardioversion in a patient with unstable Atrial Fibrillation (Afib) that results in Ventricular Fibrillation (Vfib) or asystole. | 0.89 | |

| Severity S-CVI (The average I-CVI for severity items) | 0.95* | |

| Overall S-CVI (The average I-CVI for all items in the tool) | 0.95* | |

*S-CVI calculated with the items highlighted in grey removed.
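The item- and scale-level indices in the table can be reproduced with simple arithmetic. The sketch below is a minimal illustration, assuming the conventional 4-point relevance scale on which an item counts as relevant when an expert rates it 3 or 4 (Lynn; Polit, Beck, and Owen): the I-CVI is the proportion of experts giving such a rating, and the S-CVI/Ave is the mean of the I-CVIs. The ratings, item names, and the 0.78 cut point used to mimic removing the grey-highlighted items are hypothetical, not study data.

```python
from statistics import mean


def item_cvi(ratings: list[int]) -> float:
    """I-CVI: proportion of experts rating an item 3 or 4 on a 4-point scale."""
    if not ratings:
        raise ValueError("an item needs at least one expert rating")
    relevant = sum(1 for r in ratings if r >= 3)
    return relevant / len(ratings)


def scale_cvi_ave(i_cvis: list[float]) -> float:
    """S-CVI/Ave: the average of the item-level CVIs."""
    return mean(i_cvis)


# Hypothetical ratings from nine experts for three items (not study data).
ratings = {
    "item_a": [4, 4, 3, 4, 3, 4, 4, 3, 4],  # 9 of 9 relevant
    "item_b": [4, 3, 2, 4, 4, 3, 4, 4, 3],  # 8 of 9 relevant
    "item_c": [2, 3, 4, 2, 4, 3, 1, 3, 4],  # 6 of 9 relevant
}
CUT_POINT = 0.78  # assumed threshold for retaining an item

i_cvis = {name: item_cvi(r) for name, r in ratings.items()}
s_cvi_all = scale_cvi_ave(list(i_cvis.values()))

# Compare the two-decimal values as reported, so 7/9 (0.777...) counts as 0.78.
retained = [v for v in i_cvis.values() if round(v, 2) >= CUT_POINT]
s_cvi_retained = scale_cvi_ave(retained)

print(f"S-CVI/Ave, all items: {s_cvi_all:.2f}")            # 0.85
print(f"S-CVI/Ave, retained items: {s_cvi_retained:.2f}")  # 0.94
```

Dropping a low-scoring item raises the scale average, which is why the asterisked values should be read together with the footnote describing which items were removed.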

DISCUSSION

Quality measurement begins with validation of a measurement tool’s content.43 However, evidence of content validation is missing for the vast majority of existing methods and tools widely used for AE detection. Our expert panel adhered to a rigorous procedure for measurement development and produced a draft framework to organize the process of AE identification. Content validity scores indicate that the overall framework (the PittAETool) is comprehensive and offers a basis from which to standardize AE detection in HEMS.

Standardization of an AE definition and of a framework for detecting and rating the severity and proximal cause of AEs is needed. Routine investigations by physician medical directors or quality officers often follow unclear procedures and do not allow for tests of reliability or validity. The detection process can be time-consuming, leading many high-risk, low-resource organizations to apply vague techniques to a limited set of high-consequence events. This selectivity may leave many potential AEs undetected and the circumstances that contributed to an AE or potential AE uncorrected. Data from disparate methods are not easily compared, and benchmarking is tenuous. Use of an untested AE detection method can also have indirect effects on organizational culture. Trust and confidence in management may suffer when frontline workers perceive that evaluation of their clinical care is based on inaccurate and unreliable tools or techniques.55 This may be prevented by using measurement techniques shown to have some level of reliability or validity, such as our framework.

The high visibility and popularity of trigger tools suggest that this component of our framework may be the most useful and attractive of all components. However, it is unclear whether a trigger tool should be considered a beneficial or a potentially capricious instrument for AE detection in the HEMS setting, or in healthcare in general. There are limited data supporting the reliability and validity of existing trigger tools. One study of a 28-item pediatric trigger tool showed a mean positive predictive value of 3.7% (range 0.00%–20.00%).56 A study based in Sweden tasked five teams of reviewers with using the Global Trigger Tool to review patient care charts and identify AEs in five hospitals.15 The study showed wide variation between the five teams in the total number of triggers utilized (29 to 95 triggers selected) and fair-to-moderate agreement on selected triggers (kappa for dyadic team comparisons ranged from 0.32 to 0.60).15 Initial tests of the IHI’s Global Trigger Tool showed a mean agreement between clinician reviewers of only 0.50 after training.20 Is this level of agreement a benchmark for reliability? Future studies should focus on the sensitivity, specificity, and predictive validity of the trigger tool component.
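Sensitivity, specificity, and positive predictive value all come from the same 2×2 cross-tabulation of trigger-tool results against a reference standard. The sketch below assumes, hypothetically, that a chart counts as trigger-positive when any trigger fires and that a full clinician review serves as the reference standard; the counts are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScreeningCounts:
    tp: int  # trigger fired and the reference review confirmed an AE
    fp: int  # trigger fired but the reference review found no AE
    fn: int  # no trigger fired but the reference review found an AE
    tn: int  # no trigger fired and no AE was found


def _proportion(numerator: int, denominator: int) -> float:
    if denominator == 0:
        raise ValueError("metric is undefined: denominator is zero")
    return numerator / denominator


def sensitivity(c: ScreeningCounts) -> float:
    return _proportion(c.tp, c.tp + c.fn)


def specificity(c: ScreeningCounts) -> float:
    return _proportion(c.tn, c.tn + c.fp)


def positive_predictive_value(c: ScreeningCounts) -> float:
    return _proportion(c.tp, c.tp + c.fp)


# Hypothetical results for 200 charts (not study data).
counts = ScreeningCounts(tp=12, fp=48, fn=3, tn=137)
print(f"sensitivity {sensitivity(counts):.2f}")        # 0.80
print(f"specificity {specificity(counts):.2f}")        # 0.74
print(f"PPV {positive_predictive_value(counts):.2f}")  # 0.20
```

A low PPV, as in the pediatric example, means that reviewers spend most of their effort on trigger-positive charts that contain no AE.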

The next step is to determine how to apply the PittAETool. Should a multi-step process analogous to the Harvard Medical Practice Studies be used, with one group of nurses or paramedics screening charts followed by a selective review by physician medical directors? Should the process involve independent reviews by two, three, four, or more clinicians, followed by a meeting at which the clinicians reach consensus? There is increasing support for including multiple reviewers and raters for accurate AE detection,17,18 yet previous research leaves the question open. Schildmeijer and colleagues created teams of three clinicians, in which two nurses performed initial chart screening followed by a final review by a single physician.15 Baker and colleagues also used a two-step process; the first step was led by a group of three nurses who applied 18 screening criteria to patient care charts.16 In contrast to the study by Schildmeijer and colleagues, the Baker et al. study did not require independent nurse reviews prior to reaching a consensus decision. Step two of the Baker study used a different configuration of physician reviewers, requiring a team of three generalist physicians to review and rate the charts forwarded by the nurses. Should application consider the experience of raters? Studies show that experience, training, and the number of raters can affect agreement, a widely used marker of reliability in patient safety research.15,17,52
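Agreement between two reviewers, or two review teams, is commonly summarized with a kappa statistic, as in the dyadic team comparisons cited earlier. The sketch below computes Cohen's kappa for two raters making yes/no judgements (for example, AE present or absent) on the same charts. The ratings are hypothetical, and designs with three or more raters would need a multi-rater statistic such as Fleiss' kappa instead.

```python
def cohens_kappa(rater_a: list[bool], rater_b: list[bool]) -> float:
    """Cohen's kappa for two raters making yes/no judgements on the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must judge the same non-empty set of items")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    p_a = sum(rater_a) / n  # share of items rater A judged "yes"
    p_b = sum(rater_b) / n  # share of items rater B judged "yes"
    expected = p_a * p_b + (1 - p_a) * (1 - p_b)  # agreement expected by chance
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1 - expected)


# Hypothetical AE present/absent judgements on ten charts (not study data).
reviewer_1 = [True, True, False, False, True, False, True, False, False, False]
reviewer_2 = [True, False, False, False, True, False, True, True, False, False]
kappa = cohens_kappa(reviewer_1, reviewer_2)
print(round(kappa, 2))  # 0.58: 80% raw agreement, 52% expected by chance
```

Raw agreement of 80% shrinks to a kappa of about 0.58 once chance agreement is removed, which is why kappa, rather than percent agreement, is the usual reliability marker.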

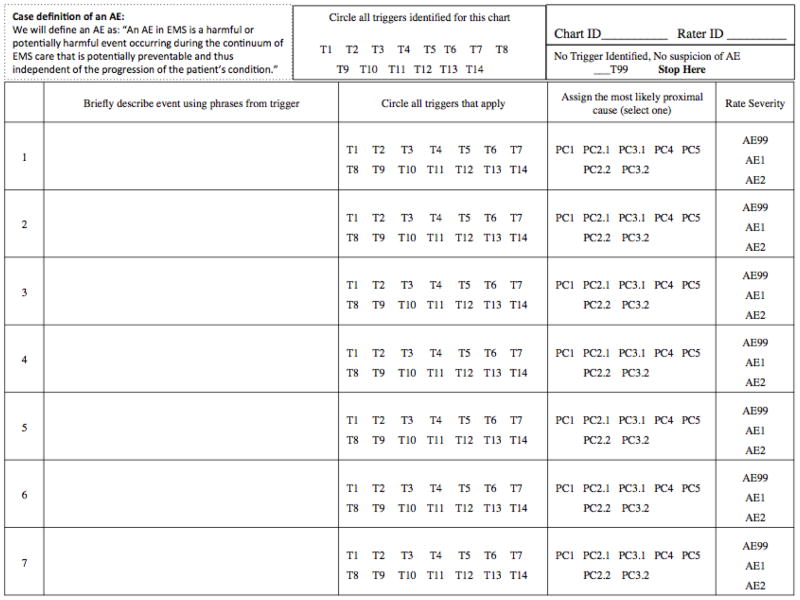

This draft framework, presented in full in Appendix A, represents a step forward in the development and testing of AE detection in high-risk settings such as EMS. Our framework is intended to provide a common “frame of reference” for EMS organizations that seek evidence-based tools for quality and safety assurance and improvement. Research is needed to determine whether appropriate use of the PittAETool requires multiple groups of raters (e.g., paramedics, physicians, nurses) and multiple individuals per group. A draft data collection form to begin this effort is included in Appendix B.
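One way to frame the open question about rater groups is as a decision rule that can be varied and tested against a reference standard. The sketch below encodes a single hypothetical configuration, loosely modeled on two-step screening-then-physician-review designs: a chart is escalated if any screener (for example, a paramedic or nurse) flags it, or alternatively only if a majority of screeners do, and an AE is recorded when a majority of physician reviewers agree. The class, function names, and escalation and majority rules are assumptions for illustration, not a recommended protocol.

```python
from dataclasses import dataclass


def majority(votes: list[bool]) -> bool:
    """True when more than half of the raters judged an AE present."""
    if not votes:
        raise ValueError("at least one rater is required")
    return sum(votes) * 2 > len(votes)


@dataclass
class ChartRatings:
    chart_id: str
    screeners: list[bool]   # stage 1: e.g., paramedic or nurse screeners
    physicians: list[bool]  # stage 2: e.g., physician reviewers


def two_stage_ae(chart: ChartRatings, escalate_on_any: bool = True) -> bool:
    """Stage 1 decides whether physicians review; a stage 2 majority decides."""
    if escalate_on_any:
        escalate = any(chart.screeners)
    else:
        escalate = majority(chart.screeners)
    if not escalate:
        return False
    return majority(chart.physicians)


# Hypothetical chart (not study data).
chart = ChartRatings("chart-001", screeners=[False, True], physicians=[True, True, False])
print(two_stage_ae(chart))                         # True
print(two_stage_ae(chart, escalate_on_any=False))  # False: 1 of 2 is not a majority
```

Running the same charts through different rules (any versus majority escalation, two versus three physicians) and comparing each against a reference review is one way these questions could be studied empirically.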

Improving safety requires multiple data sources.57 Identifying potential AEs plays a key role in implementing “just culture” principles that aim to improve patient safety via feedback and prevention.58,59 The PittAETool offers a systematic method for AE detection via chart review, aiding the capture of AEs with potential for harm and reducing bias toward only those events that result in harm. Implementation of the PittAETool may be most successful if it is launched in concert with a multi-pronged safety program (e.g., anonymous reporting, fatigue management, team training). Immediate uses of PittAETool findings may include: a) quantifying the magnitude and trends of safety outcomes; b) isolating common characteristics and predictors of AEs; and c) evaluating the impact of a safety improvement program with a before/after design. Once AE trends are identified, future research may focus on developing educational programs informed by findings from the PittAETool’s implementation, such as programs targeting the most commonly selected proximal cause of AEs. A program informed by more reliable and accurate methods for AE detection may 1) have higher odds of acceptance by individual prehospital clinicians; and 2) be more effective in reducing the incidence of AEs.
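Quantifying trends and running a before/after comparison both reduce to rate arithmetic once each reviewed event carries a PittAETool severity code. The sketch below tallies hypothetical severity codes for two periods, expresses AEs (AE 1 plus AE 2, or harm-only AE 2) per 1,000 transports, and forms a crude rate ratio. The data, the function name, and the per-1,000-transports denominator are assumptions; a real evaluation would also need confidence intervals and adjustment for secular trends.

```python
from collections import Counter


def ae_rate_per_1000(codes: list[str], transports: int,
                     counted: tuple[str, ...] = ("AE 1", "AE 2")) -> float:
    """Events per 1,000 transports for the severity codes listed in `counted`.

    The default excludes AE 99 (trigger selected, no AE found on review).
    """
    if transports <= 0:
        raise ValueError("transports must be positive")
    tally = Counter(codes)
    events = sum(tally[code] for code in counted)
    return 1000 * events / transports


# Hypothetical severity codes from trigger-positive charts (not study data).
before = ["AE 99"] * 30 + ["AE 1"] * 12 + ["AE 2"] * 6
after = ["AE 99"] * 28 + ["AE 1"] * 8 + ["AE 2"] * 3

rate_before = ae_rate_per_1000(before, transports=1200)          # 15.0
rate_after = ae_rate_per_1000(after, transports=1100)            # 10.0
harm_before = ae_rate_per_1000(before, 1200, counted=("AE 2",))  # 5.0
print(f"crude rate ratio: {rate_after / rate_before:.2f}")       # 0.67
```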

LIMITATIONS

Our principal limitation is the selection of ten experts from a single region of the U.S. A different group of experts could lead to a different framework and content. Several of the prepared descriptions and draft indicators fell below the prescribed cut point for the CVI measures of content validity. Research is needed to: 1) improve descriptions and draft indicators of the framework’s components; and 2) evaluate application of the framework to PCRs and assess its utility with standard tests of reliability and validity (e.g., sensitivity and specificity).

CONCLUSIONS

We developed a content valid framework for identifying and rating the severity of adverse events in the high-risk helicopter EMS setting. The PittAETool is a model for the development of techniques for AE identification in other settings, including ground-based EMS.

Acknowledgments

Research reported in this publication was supported by the National Center For Advancing Translational Sciences of the National Institutes of Health under Award Number KL2TR000146.

Appendix A: The Pittsburgh AE Detection and Classification Tool (PittAETool)

Trigger Tool:

Step 1

4 Categories/11 Triggers

| Documentation Triggers | |
|---|---|
| 1 | Missing, incomplete, or unclear documentation for the following: chief complaint, physical assessment, vital signs, hemodynamic monitoring (e.g., ETCO2), allergies, pertinent history or medications, patient condition at handoff to the receiving facility. |
| Operational & Patient Movement Triggers | |
| 2 | Time from initial patient contact to transfer of care exceeds accepted standards. |
| 3 | Injury to patient or team member during patient encounter / transport (e.g., stretcher drop, needle stick, or other). |
| 4 | Request for additional resources, personnel, or supervisor due to change in patient condition. |
| Patient Condition Triggers | |
| 5 | A worsening trend (deterioration) in patient hemodynamic or mental status indicators (e.g., vital signs, LOC, GCS score). |
| 6 | Cardiac arrest during transport. |
| Intervention & Medication Triggers | |
| 7 | Use of any of the following interventions during patient care: cardioversion, defibrillation, transcutaneous pacing, advanced airway attempt, surgical airway, intraosseous (IO) access, chest decompression, chest tube. |
| 8 | Failure of any intervention or procedure during patient care (some examples include: inability to obtain vascular access after a reasonable amount of time or number of attempts, failed IO, failed Nasogastric Tube (NG) placement, failed Foley placement, failed cardioversion, failed defibrillation, failed transcutaneous pacing, failed advanced airway or rescue airway, failed surgical airway, failed chest decompression). |
| 9 | Use of the following medications or fluids: blood products, vasopressors or inotropes (e.g., dobutamine, dopamine), naloxone, RSI medications (e.g., succinylcholine). |
| 10 | Suggestive evidence of deviation from standard of care by performing an intervention or administering a medication that appears to be outside of protocol, or failure to perform an intervention or provide a medication that is within the standard of care. |
| 11 | Medication error (e.g., administering wrong or unapproved dose, administering wrong or unapproved medication, administering medication via wrong or unapproved route). |

Step 2

Describe

DIRECTIONS – Describe the event in 3–5 words. Use words from the trigger where applicable.

Step 3

Assign Proximal Cause of Event

5 Categories of Proximal Cause

| Category 1 | PC 1 |
|---|---|
| Description: Actions By Patient | |
| Definition: The AE was the result of action(s) by the patient. | |

Example(s):

| Category 2 | PC 2 |
|---|---|
| Description: Actions By Provider | |
| Definition: The AE was the result of action(s) or inaction(s) by the crew. | |

Category 2.1: HEMS Crew (PC 2.1) Example(s):

Category 2.2: Non-HEMS (PC 2.2) Example(s):

NOTE: Do not assign issues related to equipment (e.g., stretcher drops) or failure to stock equipment to this category. Assign them to Category 3: Medical or Vehicle Equipment.

| Category 3 | PC 3 |
|---|---|
| Description: Medical or Vehicle Equipment | |
| Definition: Failure of the equipment, failure to troubleshoot and correct common problems with the equipment, or failure to remove defective equipment from service. | |

Category 3.1: HEMS Crew (PC 3.1) Example(s):

Category 3.2: Non-HEMS (PC 3.2) Example(s):

| Category 4 | PC 4 |
|---|---|
| Description: Environmental / Scene Factors | |
| Definition: Factors that may result from weather conditions or from conditions on the ground/scene (or other), including temperature, light, and scene safety. | |

Example(s):

| Category 5 | PC 5 |
|---|---|
| Description: Undetermined by Chart Review | |
| Definition: The proximal cause of the AE (regardless of severity) cannot be determined from the information available in the chart. | |

Step 4

Rate Severity

| AE 99 |
|---|
| Description: NO AE |
| Definition: A case where a trigger was selected (e.g., cardiac arrest during transport) but no AE was identified after full review. |

Example(s):

2 Categories of AE Severity

| Category 1 |
|---|
| Description: AE present – Potential for Harm (AE 1) |
| Definition: An action that may lead to injury or harm but there is NO evidence that an injury or harm occurred. |

Example(s):

| Category 2 |
|---|
| Description: AE present – Harm Identified (AE 2) |
| Definition: An action or omission that led to injury or harm regardless of severity. |

Example(s):

Frequently asked questions of raters

- Can I select more than one trigger for the same event?

  Yes, please select all applicable triggers.

- Can I select more than one proximal cause for each event?

  Please select the single, most likely proximal cause for each event.

- Is it acceptable to have more than one adverse event with the same trigger(s) as other events?

  Yes. The triggers are broad and may encompass multiple events. Do not try to match each trigger with one unique event.
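The four steps and the rater FAQ imply a small set of record-keeping rules: any number of triggers per event, a short description, a single proximal cause, and one severity code. The sketch below encodes those rules for a hypothetical electronic data collection form. The code strings mirror Appendix A, while the class and field names, the word-count check, and the assumption that a proximal cause is recorded only when an AE (AE 1 or AE 2) is found are illustrative choices, not part of the published tool.

```python
from dataclasses import dataclass
from typing import Optional

# Codes taken from Appendix A; the encoding itself is hypothetical.
VALID_TRIGGERS = frozenset(range(1, 12))  # Step 1: triggers 1-11
VALID_CAUSES = frozenset({"PC 1", "PC 2.1", "PC 2.2", "PC 3.1", "PC 3.2", "PC 4", "PC 5"})
VALID_SEVERITIES = frozenset({"AE 99", "AE 1", "AE 2"})


@dataclass(frozen=True)
class EventReview:
    triggers: frozenset[int]       # Step 1: every applicable trigger
    description: str               # Step 2: event described in 3-5 words
    proximal_cause: Optional[str]  # Step 3: one code, or None if no AE (assumed)
    severity: str                  # Step 4: AE 99, AE 1, or AE 2

    def problems(self) -> list[str]:
        """List rule violations; an empty list means the record passes."""
        found = []
        if not self.triggers:
            found.append("no trigger selected")
        elif not self.triggers <= VALID_TRIGGERS:
            found.append("unknown trigger number")
        if not 3 <= len(self.description.split()) <= 5:
            found.append("description should be 3-5 words")
        if self.severity not in VALID_SEVERITIES:
            found.append("unknown severity code")
        elif self.severity == "AE 99" and self.proximal_cause is not None:
            found.append("no proximal cause expected when no AE is found")
        elif self.severity != "AE 99" and self.proximal_cause not in VALID_CAUSES:
            found.append("an AE needs one valid proximal cause")
        return found


# Two events from one hypothetical chart share trigger 8.
first = EventReview(frozenset({7, 8}), "Failed advanced airway attempt", "PC 2.1", "AE 1")
second = EventReview(frozenset({8}), "Failed IO access", None, "AE 99")
print(first.problems(), second.problems())  # [] []
```

Because each event is its own record, two events on the same chart can list the same trigger, consistent with the guidance that triggers need not map one-to-one onto events.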

Appendix B: Example data collection instrument

Footnotes

PDP, CMG, MDW, RJW, RNR, VNM, FXG, and JCR developed the study design and collected study data. PDP and MDW analyzed study data. PDP, DMY, CMG, MDW, RJW, RNR, VNM, FXG, JCR, RMA, and JRL conceived the study and developed the manuscript.

PRESENTATIONS: Portions of this research were presented as a poster at the National Association of EMS Physicians (NAEMSP) Annual Meeting, Bonita Springs, FL, January 11, 2013.

The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

References

1. FICEMS, NHTSA, NASEMSO. National EMS Assessment. Washington, DC: Funded by the National Highway Traffic Safety Administration; 2011.

2. Brennan TA, Leape LL, Laird NM, Hebert L, Localio AR, Lawthers AG, et al. Incidence of adverse events and negligence in hospitalized patients. Results of the Harvard Medical Practice Study I. N Engl J Med. 1991;324(6):370–6. doi: 10.1056/NEJM199102073240604.

3. Wang HE, Weaver MD, Abo BN, Kaliappan R, Fairbanks RJ. Ambulance stretcher adverse events. Qual Saf Health Care. 2009;18(3):213–16. doi: 10.1136/qshc.2007.024562.

4. Wang HE, Lave JR, Sirio CA, Yealy DM. Paramedic intubation errors: isolated events or symptoms of a larger problem? Health Aff (Millwood). 2006;25(2):501–09. doi: 10.1377/hlthaff.25.2.501.

5. Vilke GM, Tornabene SV, Stepanski B, Shipp HE, Ray LU, Metz MA, et al. Paramedic self-reported medication errors. Prehosp Emerg Care. 2007;11(1):80–84. doi: 10.1080/10903120601021358.

6. Fairbanks RJ, Crittenden CN, O’Gara KG, Wilson MA, Pennington EC, Chin NP, et al. Emergency medical services provider perceptions of the nature of adverse events and near misses in out of hospital care: an ethnographic view. Acad Emerg Med. 2008;15(7):633–40. doi: 10.1111/j.1553-2712.2008.00147.x.

7. Weaver MD, Wang HE, Fairbanks RJ, Patterson PD. Association between EMS workplace safety culture and safety outcomes. Prehosp Emerg Care. 2012;16(1):43–52. doi: 10.3109/10903127.2011.614048.

8. Patterson PD, Huang DT, Fairbanks RJ, Simeone SJ, Weaver MD, Wang HE. Variation in emergency medical services workplace safety culture. Prehosp Emerg Care. 2010;14(4):448–60. doi: 10.3109/10903127.2010.497900.

9. Thomas EJ, Petersen LA. Measuring errors and adverse events in health care. J Gen Intern Med. 2003;18(1):61–67. doi: 10.1046/j.1525-1497.2003.20147.x.

10. Gallagher JM, Kupas DF. Experience with an anonymous web-based state EMS safety incident reporting system. Prehosp Emerg Care. 2012;16(1):36–42. doi: 10.3109/10903127.2011.626105.

11. Brennan TA, Localio AR, Leape LL, Laird NM, Peterson L, Hiatt HH, et al. Identification of adverse events occurring during hospitalization. A cross-sectional study of litigation, quality assurance, and medical records at two teaching hospitals. Ann Intern Med. 1990;112(3):221–6. doi: 10.7326/0003-4819-112-3-221.

12. Weingart SN, Wilson RM, Gibberd RW, Harrison B. Epidemiology of medical error. BMJ. 2000;320(7237):4. doi: 10.1136/bmj.320.7237.774.

13. Kohn LT, Corrigan JM, Donaldson MS. To Err is Human: Building a Safer Health System. Washington, DC: National Academies of Science; 2000.

14. Pronovost PJ, Thompson DA, Holzmueller CG, Lubomski LH, Morlock LL. Defining and measuring patient safety. Crit Care Clin. 2005;21(1):1–19. doi: 10.1016/j.ccc.2004.07.006.

15. Schildmeijer K, Nilsson L, Arestedt K, Perk J. Assessment of adverse events in medical care: lack of consistency between experienced teams using the global trigger tool. BMJ Qual Saf. 2012;21(4):307–14. doi: 10.1136/bmjqs-2011-000279.

16. Baker GR, Norton PG, Flintoft V, Blais R, Brown A, Cox J, et al. The Canadian Adverse Events Study: the incidence of adverse events among hospital patients in Canada. CMAJ. 2004;170(11):1678–86. doi: 10.1503/cmaj.1040498.

17. Forster AJ, Taljaard M, Bennett C, van Walraven C. Reliability of the peer-review process for adverse event rating. PLoS One. 2012;7(7):e41239. doi: 10.1371/journal.pone.0041239.

18. Forster AJ, O’Rourke K, Shojania KG, van Walraven C. Combining ratings from multiple physician reviewers helped to overcome the uncertainty associated with adverse event classification. J Clin Epidemiol. 2007;60(9):892–901. doi: 10.1016/j.jclinepi.2006.11.019.

19. Pronovost PJ, Goeschel CA, Marsteller JA, Sexton JB, Pham JC, Berenholtz SM. Framework for patient safety research and improvement. Circulation. 2009;119(2):330–37. doi: 10.1161/CIRCULATIONAHA.107.729848.

20. Classen DC, Lloyd RC, Provost L, Griffin FA, Resar R. Development and evaluation of the Institute for Healthcare Improvement Global Trigger Tool. J Patient Saf. 2008;4(3):169–77.

21. Resar RK, Rozich JD, Classen DC. Methodology and rationale for the measurement of harm with trigger tools. Qual Saf Health Care. 2003;12(Suppl 2):ii39–45. doi: 10.1136/qhc.12.suppl_2.ii39.

22. Wiegersma JS, Droogh JM, Zijlstra JG, Fokkema J, Ligtenberg JJ. Quality of interhospital transport of the critically ill: impact of a mobile intensive care unit with a specialized retrieval team. Crit Care. 2011;15(1):R75. doi: 10.1186/cc10064.

23. Ligtenberg JJ, Arnold LG, Stienstra Y, van der Werf TS, Meertens JH, Tulleken JE, et al. Quality of interhospital transport of critically ill patients: a prospective audit. Crit Care. 2005;9(4):R446–R51. doi: 10.1186/cc3749.

24. Fromm RE, Dellinger RP. Transport of critically ill patients. J Intensive Care Med. 1992;7(5):223–33. doi: 10.1177/088506669200700503.

25. Barton AC, Tuttle-Newhall JE, Szalados JE. Portable power supply for continuous mechanical ventilation during intrahospital transport of critically ill patients with ARDS. Chest. 1997;112(2):560–63. doi: 10.1378/chest.112.2.560.

26. Braman SS, Dunn SM, Amico CA, Millman RP. Complications of intrahospital transport in critically ill patients. Ann Intern Med. 1987;107(4):469–73. doi: 10.7326/0003-4819-107-4-469.

27. Brokalaki HJ, Brokalakis JD, Digenis GE, Baltopoulos G, Anthopoulos L, Karvountzis G. Intrahospital transportation: monitoring and risks. Intensive Crit Care Nurs. 1996;12(3):183–86. doi: 10.1016/s0964-3397(96)80554-4.

28. Carson KJ, Drew BJ. Electrocardiographic changes in critically ill adults during intrahospital transport. Prog Cardiovasc Nurs. 1994;9(4):4–12.

29. Caruana M, Culp K. Intrahospital transport of the critically ill adult: a research review and implications. Dimens Crit Care Nurs. 1998;17(3):146–56. doi: 10.1097/00003465-199805000-00005.

30. De Cosmo G, Primieri P, Mascia A, Gualtieri E, Bonomo V, Villani A. Intra-hospital transport of the anaesthetized patient. Eur J Anaesthesiol. 1993;10(3):231–34.

31. Evans A, Winslow EH. Oxygen saturation and hemodynamic response in critically ill, mechanically ventilated adults during intrahospital transport. Am J Crit Care. 1995;4(2):106–11.

32. Hurst JM, Davis K, Johnson DJ, Branson RD, Campbell RS, Branson PS. Cost and complications during in-hospital transport of critically ill patients: a prospective cohort study. J Trauma. 1992;33(4):582–85. doi: 10.1097/00005373-199210000-00015.

33. Insel J, Weissman C, Kemper M, Askanazi J, Hyman AL. Cardiovascular changes during transport of critically ill and postoperative patients. Crit Care Med. 1986;14(6):539–42. doi: 10.1097/00003246-198606000-00004.

34. Kalisch BJ, Kalisch PA, Burns SM, Kocan MJ, Prendergast V. Intrahospital transport of neuro ICU patients. J Neurosci Nurs. 1995;27(2):69–77. doi: 10.1097/01376517-199504000-00003.

35. Link J, Krause H, Wagner W, Papadopoulos G. Intrahospital transport of critically ill patients. Crit Care Med. 1990;18(12):1427–29. doi: 10.1097/00003246-199012000-00024.

36. Smith I, Fleming S, Cernaianu A. Mishaps during transport from the intensive care unit. Crit Care Med. 1990;18(3):278–81. doi: 10.1097/00003246-199003000-00006.

37. Stearley HE. Patient’s outcomes: intrahospital transportation and monitoring of critically ill patients by a specially trained ICU nursing staff. Am J Crit Care. 1998;7(4):282–87.

38. Szem JW, Hydo LJ, Fischer E, Kapur S, Klemperer J, Barie PS. High-risk intrahospital transport of critically ill patients: safety and outcome of the necessary “road trip”. Crit Care Med. 1995;23(10):1660–66. doi: 10.1097/00003246-199510000-00009.

39. Seymour CW, Kahn JM, Schwab CW, Fuchs BD. Adverse events during rotary-wing transport of mechanically ventilated patients: a retrospective cohort study. Crit Care. 2008;12(3):R71. doi: 10.1186/cc6909.

40. MacDonald RD, Banks BA, Morrison M. Epidemiology of adverse events in air medical transport. Acad Emerg Med. 2008;15(10):923–31. doi: 10.1111/j.1553-2712.2008.00241.x.

41. Chang A, Schyve PM, Croteau RJ, O’Leary DS, Loeb JM. The JCAHO patient safety event taxonomy: a standardized terminology and classification schema for near misses and adverse events. Int J Qual Health Care. 2005;17(2):95–105. doi: 10.1093/intqhc/mzi021.

42. Patterson PD, Weaver MD, Abebe K, Martin-Gill C, Roth RN, Suyama J, et al. Identification of adverse events in ground transport Emergency Medical Services. Am J Med Qual. 2012;27(2):139–46. doi: 10.1177/1062860611415515.

43. Lynn MR. Determination and quantification of content validity. Nurs Res. 1986;35(6):382–86.

44. Linstone HA, Turoff M. The Delphi Method: Techniques and Applications. Reading, Massachusetts: Addison-Wesley; 1975.

45. Nevo B. Face validity revisited. J Educ Meas. 1985;22(4):287–93.

46. Wynd CA, Schmidt B, Schaefer MA. Two quantitative approaches for estimating content validity. West J Nurs Res. 2003;25(5):508–18. doi: 10.1177/0193945903252998.

47. Brennan TA, Localio AR, Laird NL. Reliability and validity of judgments concerning adverse events suffered by hospitalized patients. Med Care. 1989;27(12):1148–58. doi: 10.1097/00005650-198912000-00006.

48. Classen DC, Resar R, Griffin F, Federico F, Frankel T, Kimmel N, et al. ‘Global trigger tool’ shows that adverse events in hospitals may be ten times greater than previously measured. Health Aff (Millwood). 2011;30(4):581–89. doi: 10.1377/hlthaff.2011.0190.

49. Michel P. Strengths and weaknesses of available methods for assessing the nature and scale of harm caused by the health system: literature review. Geneva, Switzerland: World Health Organization; 2003.

50. Walshe K. Adverse events in health care: issues in measurement. Qual Health Care. 2000;9(1):47–52. doi: 10.1136/qhc.9.1.47.

51. Young GP, Lowe RA. Adverse outcomes of managed care gatekeeping. Acad Emerg Med. 1997;4(12):1129–36. doi: 10.1111/j.1553-2712.1997.tb03695.x.

52. Localio AR, Weaver SL, Landis JR, Lawthers AG, Brennan TA, Hebert L, et al. Identifying adverse events caused by medical care: degree of physician agreement in a retrospective chart review. Ann Intern Med. 1996;125(6):457–64. doi: 10.7326/0003-4819-125-6-199609150-00005.

53. Weingart SN. Finding common ground in the measurement of adverse events. Int J Qual Health Care. 2000;12(5):3. doi: 10.1093/intqhc/12.5.363.

54. Polit DF, Beck CT, Owen SV. Is the CVI an acceptable indicator of content validity? Appraisal and recommendations. Res Nurs Health. 2007;30(4):459–67. doi: 10.1002/nur.20199.

55. Burt CDB, Stevenson RJ. The relationship between recruitment processes, familiarity, trust, perceived risk and safety. J Safety Res. 2009;40(5):365–69. doi: 10.1016/j.jsr.2009.08.002.

56. Takata GS, Mason W, Taketomo C, Logsdon T, Sharek PJ. Development, testing, and findings of a pediatric-focused trigger tool to identify medication-related harm in US children’s hospitals. Pediatrics. 2008;121(4):e927–e35. doi: 10.1542/peds.2007-1779.

57. Parry G, Cline A, Goldmann D. Deciphering harm measurement. JAMA. 2012;307(20):2155–56. doi: 10.1001/jama.2012.3649.

58. Ruchlin HS, Dubbs NL, Callahan MA. The role of leadership in instilling a culture of safety: lessons from the literature. J Healthcare Manag. 2004;49(1):47–59.

59. Frankel AS, Leonard MW, Denham CR. Fair and just culture, team behavior, and leadership engagement: The tools to achieve high reliability. Health Serv Res. 2006;41(4 Pt 2):1690–709. doi: 10.1111/j.1475-6773.2006.00572.x.