ABSTRACT

Purpose: To describe and compare the type and quality of evidence published in physical therapy (PT) journals during two time periods (2000–2002 vs. 2010–2012) and to explore scientific editors' opinions on changes in the types and quality of articles. Methods: A quantitative, longitudinal, retrospective journal review was used to categorize and assess the methodological rigour of items published in four PT journals using the Hedges Project. A quantitative, descriptive, cross-sectional survey explored the opinions of scientific editors. Percentages and frequencies of article types (as defined by the Hedges Project criteria), items passing rigour, and editor responses were calculated. Statistical significance of differences in article type and rigour between the two time periods was determined using Fisher's Exact Test. Results: There was a significant increase in original studies and review articles from 2000–2002 to 2010–2012 (p<0.001, p=0.002, respectively). The overall pass rate for rigour was 33.3% in 2000–2002 and 42.5% in 2010–2012, showing a significant increase (p=0.019). The majority of editors reported an increase in systematic reviews, qualitative designs, and randomized controlled trials and believed that quality had improved by 2010–2012. Conclusion: From 2000–2002 to 2010–2012, the quality of articles published in PT journals improved and the proportion of original studies and review articles increased.

Key Words: research, evidence-based practice, research design, publications

RÉSUMÉ

Objectif : Décrire et comparer le type et la qualité des données probantes publiées dans les revues de physiothérapie de deux périodes (2010–2012 et 2000–2002) et explorer les opinions des rédacteurs scientifiques sur l'évolution du type et de la qualité des articles. Méthodes : Un examen rétrospectif quantitatif et longitudinal des revues a permis de classer par catégorie les articles publiés dans quatre revues de physiothérapie et d'évaluer leur rigueur méthodologique, grâce au projet Hedges. Une étude transversale descriptive quantitative a permis d'explorer les opinions des rédacteurs scientifiques. Les pourcentages et les fréquences des types d'article (selon la définition des critères du projet Hedges), les articles d'une rigueur suffisante et les réponses des rédacteurs ont été calculés. La signification statistique des différences dans le type et la rigueur des articles entre les deux périodes a été déterminée à l'aide de la méthode exacte de Fisher. Résultats : Il y a eu une importante augmentation dans le nombre d'études originales et d'exposés de synthèse de 2000–2002 à 2010–2012 (p<0,001, p=0,002, respectivement). Le taux de réussite global pour ce qui est de la rigueur était de 33,3% en 2000–2002 et de 42,5% en 2010–2012, ce qui constitue une augmentation importante (p=0,019). La majorité des rédacteurs ont signalé une augmentation des examens systématiques, des conceptions qualitatives, et des essais contrôlés randomisés, et ils étaient d'avis que la qualité s'était améliorée. Conclusion : De 2000–2002 à 2010–2012, la qualité des articles publiés dans les revues de physiothérapie s'est améliorée et la proportion d'études originales et d'exposés de synthèses a augmenté.

Mots clés : médecine physique et réadaptation, conception de recherche, recherche clinique, pratique factuelle

Physical therapists need high-quality scientific literature to inform their own evidence-based practice (EBP).1 Various study designs can contribute to providing evidence for EBP, including randomized controlled trials (RCTs),2–5 single-subject designs and case series,6 cohort and observational studies,5 and qualitative methodologies.7,8 While RCTs have been viewed as the “gold standard” for quality in research design,9 they cannot always be applied to physical therapy (PT) practice;6 furthermore, some questions are better answered using other designs. For example, qualitative methodologies may be more appropriate for obtaining information on clients' experiences and feelings.7

The amount of published PT literature has increased significantly over the years:10–14 Wiles and colleagues12 found that the number of articles published in Physical Therapy doubled from 1945 to 2010, Fell and colleagues13 found that the number of articles indexed in Medline more than doubled between 2000 and 2009, and Kumar and colleagues14 found a sharp increase in PT publications in PubMed from 2000 to 2010.

Trends in types of PT literature have also been evaluated. Wiles and colleagues12 found that systematic reviews, cohort studies, and non-randomized controlled trials in Physical Therapy increased in prevalence from 1945 to 2010; Coronado and colleagues10 found an increased percentage of research reports, systematic reviews, and qualitative studies, but a decrease in topical reviews and non-systematic reports, from 1980 to 2009, with no change in case reports or RCTs. More recently, Kumar and colleagues14 examined PT literature in PubMed between 1970 and 2010; they found that clinical trials, RCTs, and review articles were most prevalent, with a rapid increase after 1995.

It is not clear whether the growth in PT literature has corresponded to an increase in quality. A variety of tools have been used to assess quality in PT and rehabilitation journals, including quantitative content analyses driven by hierarchical levels of evidence1,5 and citation-driven bibliometric analyses.10,12,13,15,16 However, these measures have shortcomings: not all research questions are appropriately answered by RCT methodology,6,17 and bibliometric analyses measure trends and interest in published reports rather than the quality of the literature.

Miller and colleagues18 have assessed quality using a unique standard, the Hedges Project, which uses rigour criteria as a measure of quality for different study designs. The Hedges Project classifies publications by format (original study, review article, case report, or general and miscellaneous), interest to human health care (of interest or not of interest), purpose (etiology; prognosis; diagnosis; treatment, prevention, continuing medical education, or quality improvement; economics; clinical prediction guide; qualitative study; or something else), and rigour. The rigour criteria correspond to each purpose of publication (Box 1; see Miller and colleagues18 for complete Hedges Project criteria). Miller and colleagues18 assessed 179 articles from core PT journals published from 2000 to 2001 and found that approximately 11% of those articles passed the assessment for rigour.

Box 1. Rigour Criteria for Each Purpose Category as Defined in the Hedges Project from Miller and colleagues18(p.131).

| Purpose category | Rigour criteria |
|---|---|
| Etiology | Prospective standardized data collection, a clearly identified comparison group for those at risk for, or having, the outcome of interest, and blinding of observers to outcome of exposure |
| Prognosis | An inception cohort of individuals, all initially free of the outcome of interest, and follow-up of at least 80% of patients until a major study end point occurs or the study ends |
| Diagnosis | Clearly identified comparison groups, at least one of which is free of the disorder of interest, interpretation of a diagnostic (“gold”) standard without knowledge of the test results, interpretation of test without knowledge of the diagnostic standard result |
| Treatment and prevention | Random allocation of participants to comparison groups, follow-up of at least 80% of the participants entering the investigation |
| Continuing medical education and quality improvement | Random allocation of participants or units to comparison groups and follow-up of at least 80% of the participants |
| Economics | The economic question addressed must be based on comparison of alternatives. Alternative diagnostic or therapeutic services are compared on the basis of both the outcome produced (effectiveness) and the resources consumed (cost) |
| Clinical prediction guide | Generation of the guide in one or more sets of patients (training set), validation in another set of patients (test sets) |
| Reviews | Explicit statement of the inclusion and exclusion criteria applied for selecting articles, a description of the methods indicating the specific database sources, and at least one of the articles must meet the above noted criteria for treatment, diagnosis, prognosis, clinical prediction, etiology, quality improvement, or economics of health care |
| Qualitative study | The content relates to how people feel or experience certain situations; collection methods and analyses are appropriate for qualitative data |

Another way to assess the quality of evidence is to seek the opinions of experts in PT literature: the editors of PT journals. Editors' opinions on the quality of literature have not been evaluated in rehabilitation or PT; previous surveys have mainly evaluated editors' practices and behaviours in medical and nursing journals19,20 and the issue of excessive publication in scientific literature.21,22

To capture the trends in publication of PT literature, we need a measure of quality that encompasses more than the hierarchy of evidence or bibliometrics, as well as the opinions of leaders in the field. The purpose of our study, therefore, is to describe the types and quality of evidence published in PT journals and to explore the future of those journals. By applying the Hedges Project criteria and exploring editors' opinions, the study describes the current types and quality of research in PT journals. The specific objectives of this study are (1) to describe the types and quality of evidence published in core PT journals, (2) to compare the types and quality of evidence published in core PT journals (2010–2012 vs. 2000–2002), and (3) to explore the opinions of scientific editors of international peer-reviewed PT journals about changes in types and quality of articles published during their tenure and about future trends in these publications.

Methods

Our study had two parts. The first part was a quantitative, longitudinal, retrospective journal review designed to compare the types and quality of evidence published in PT journals in two time periods: 2010–2012 (to provide a benchmark of quality at the time of the study) and 2000–2002 (a decade earlier, which we considered sufficient time to be able to observe a change). The second part was a quantitative, descriptive, cross-sectional survey that explored the opinions of editors of international PT journals regarding the changes in types and quality of articles during their tenure and future trends in these publications. The University of Toronto Ethics Review Board approved this study and the research conforms to the Human and Animal Rights requirements of the February 2006 International Committee of Medical Journal Editors' Uniform Requirements for Manuscripts Submitted to Biomedical Journals.

Study sample

For part one, we chose four PT journals—the Journal of Physiotherapy, Physical Therapy, Physiotherapy, and Physiotherapy Canada—based on the following inclusion criteria: ranked on the 2011 ISI Journal Citation Report23, peer reviewed, includes “Physical Therapy” or “Physiotherapy” in the journal title, circulates internationally, and published in an English-speaking country.

For part two, we included all known scientific or lead editors of peer-reviewed journals published in English and indexed in CINAHL and Scopus with “Physical Therapy,” “Physical Therapists,” “Physiotherapy,” or “Physiotherapists” in the title who had a valid email address and Internet access. We contacted eligible editors via email and sent our online questionnaire (see Appendix 1 online) using LimeSurvey (Lime Survey Project Team, Hamburg, Germany).

Protocol

Evaluation of journal articles

For part one, we accessed all indexed items in all issues of the four journals in both time periods (2000–2002 and 2010–2012) via the University of Toronto Library database. Print issues of Physiotherapy Canada from 2000–2002 were used for our analysis, as these issues were not available online. All items were evaluated using the criteria of the Hedges Project, an instrument designed to assess the clinical applicability and methodological quality of health research articles without grading their quality based on research design alone.18,24 For the purposes of this article, the term item refers to any published material in a journal, including book reviews and editorials, as well as articles.

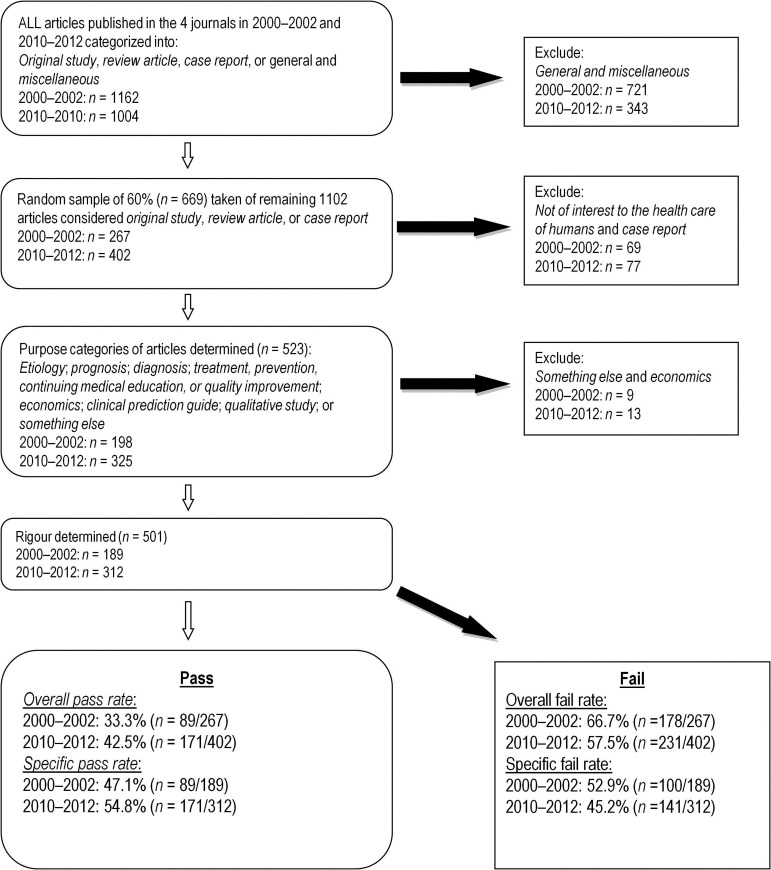

Our evaluation protocol is set out in Figure 1 and was carried out separately for each time period studied. Each of the five evaluators assessed approximately the same number of articles. Before evaluation began, we trained in the use of the Hedges Project by contacting Miller and colleagues18 for direction on applying the tool and by evaluating 20 articles as a group to increase our collective consistency of assessment. Following the training period, consensus was reached through group evaluation for any articles that an individual evaluator found difficult to assess. A total of 2,166 items (n=1,162 for 2000–2002, n=1,004 for 2010–2012) were first classified into four format categories: (1) original study, (2) review article, (3) case report, and (4) general and miscellaneous. General and miscellaneous items, such as editorials and book reviews, were excluded from further quality assessment. For feasibility purposes, a sample of approximately 60% of the remaining items was randomly chosen using the random number generator function in Microsoft Excel 2007 (Microsoft Corp., Redmond, WA), yielding a total of 669 articles. These 669 articles were then assessed for relevance to human health care. Articles of interest to human health care, excluding case reports, were then evaluated further and placed in the appropriate purpose category: etiology; prognosis; diagnosis; treatment, prevention, continuing medical education, or quality improvement; economics; clinical prediction guide; qualitative study; or something else. Articles in the economics and something else categories were subsequently excluded. The Hedges Project has criteria for each purpose category listing specific requirements needed to receive a “pass” for methodological rigour. As such, the remaining articles were assessed for rigour, receiving either a “pass” or “fail” status. Further information regarding criteria for format categories, purpose categories, and rigour can be found in the study conducted by Miller and colleagues.18
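The random sampling step can be sketched as follows. This is an illustration in Python, not the Excel 2007 procedure used in the study: the helper name `draw_sample`, the seed, and the placeholder item identifiers are all assumptions made for the example. The pool sizes are derived from the format category counts reported in Figure 2, and the sample sizes are those reported in the Results.

```python
import random


def draw_sample(item_ids, sample_size, seed):
    """Return a simple random sample without replacement (hypothetical helper)."""
    rng = random.Random(seed)  # fixed seed only so the sketch is reproducible
    return rng.sample(item_ids, sample_size)


# Items left after removing general and miscellaneous items,
# derived from Figure 2 (1,162 - 721 and 1,004 - 343).
pools = {"2000-2002": 1162 - 721, "2010-2012": 1004 - 343}
# Sample sizes reported in the Results (267 and 402 articles).
sample_sizes = {"2000-2002": 267, "2010-2012": 402}

samples = {}
for period, pool_size in pools.items():
    item_ids = list(range(1, pool_size + 1))  # placeholder identifiers, not real article IDs
    samples[period] = draw_sample(item_ids, sample_sizes[period], seed=2014)
    fraction = len(samples[period]) / pool_size
    print(f"{period}: {len(samples[period])} of {pool_size} ({fraction:.1%})")
```

Sampling within each time period mirrors the statement that the protocol was carried out separately for each period; together the two samples give the 669 articles described above.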

Figure 1.

Evaluation process for journal articles

We made minor assumptions and modifications when applying the Hedges Project tool to article evaluation to reduce both open-ended interpretation of criteria and subjective evaluation of format category, purpose category, and methodological rigour (Box 2).

Box 2. Assumptions and Modifications Applied to Hedges Project Criteria.

| Hedges Project criteria | Assumption | Modification |
|---|---|---|
| Format category | | |
| General and miscellaneous articles | • Includes all items (not only articles) listed in a journal that are not original study, review article, or case report (e.g. editorials, book reviews) | |
| Case report | • Considered original study if has fewer than 10 subjects but performs analysis/analyses | |
| Of interest to the health care of humans | | |
| Yes | • The study must have direct applicability to some aspect of health care or clinical practice | |
| | • Studies may still influence health care without using subjects with known impairments or disabilities | |
| Purpose of original study or review article and rigour | | |
| Etiology | • The term “exposure” can also refer to a disease or condition | |
| Prognosis | • Study participants are free of the outcome of interest at the beginning of the study | |
| Diagnosis | • Diagnostic “gold” standard is not essential criterion | |
| Clinical prediction guide | • Includes measurement studies other than validity (e.g., reliability, minimal detectable change) | • Training set or tool development allowed to be carried out in a previous study rather than in the article being evaluated; however, previous study must be cited |
| | • Study results have no impact on methodological rigor (e.g., the results of tool's validity or reliability) | • Measurement property and subjects in current study do not have to match previous work (e.g., reliability vs. validity; COPD vs. geriatric) |
| | • Measurement property can be measured in the same population at two time frames (does not have to be different subjects) | |
| Qualitative study | • Research group has developed themes and codes | |
| | • Question development via literature search or clinical expertise | |
| Studies with more than one purpose category | • Study must meet rigour criteria for both purpose categories to pass | |

COPD=chronic obstructive pulmonary disease.

Survey of editors

For part two, we recruited editors using a modified Dillman approach:25 potential participants were contacted on three separate occasions with an invitation to participate in the study by completing a questionnaire. Participation was anonymous, and responses were not linked to the participant in any way. We initially piloted our 33-item questionnaire with two retired editors and then made revisions based on their feedback and on further critique among the authors.

Analysis

For part one, we defined two different pass rates (see Figure 1): overall pass rate, defined as the proportion of articles in the ∼60% random sample for each time period that passed, and specific pass rate, defined as the proportion of articles evaluated for methodological rigour (the final step of the Hedges Project criteria) that passed for that time period. Descriptive and inferential statistics were used to evaluate and compare the types and quality of literature published in PT journals between the two time periods using SPSS version 20 (IBM Corp., Armonk, NY). Nominal scale data were calculated as percentages and frequencies for format category, purpose category, and pass rate for each time period. We used Fisher's Exact Test (2-tailed), with α set at 0.05 and β at 0.2, to determine the statistical significance of differences in format categories and methodological rigour between the two time periods; to compare purpose categories, α was set at 0.01.
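As an illustration of the test described above, the following is a minimal sketch of a two-tailed Fisher's Exact Test for a 2×2 table, written with the Python standard library rather than SPSS. The function name `fisher_exact_two_sided` is hypothetical, and the two-tailed rule used here (summing the probabilities of all tables no more likely than the observed one) is an assumption about how the two-tailed p value is defined; the input counts are the overall pass counts reported in the Results.

```python
from fractions import Fraction
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of every table with the same
    margins that is no more likely than the observed table.
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    denom = comb(row1 + row2, col1)

    def prob(x):
        # Exact probability of x in the top-left cell with all margins fixed.
        return Fraction(comb(row1, x) * comb(row2, col1 - x), denom)

    observed = prob(a)
    lowest, highest = max(0, col1 - row2), min(row1, col1)
    p_value = sum(p for p in (prob(x) for x in range(lowest, highest + 1)) if p <= observed)
    return float(p_value)


# Rows: time period; columns: passed, did not pass.
# Counts from the Results: 89 of 267 (2000-2002) and 171 of 402 (2010-2012).
p_overall = fisher_exact_two_sided(89, 267 - 89, 171, 402 - 171)
alpha = 0.05
print(f"p = {p_overall:.3f}; significant at alpha = {alpha}: {p_overall < alpha}")
```

Exact fractions are used so that the comparison against the observed table's probability is not affected by floating-point rounding; the printed value can be set against the p value reported for the overall pass rate.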

For part two, editor responses were evaluated using descriptive statistics. Nominal and ordinal data were calculated as percentages and frequencies.

Results

Format categories and interest to human health care

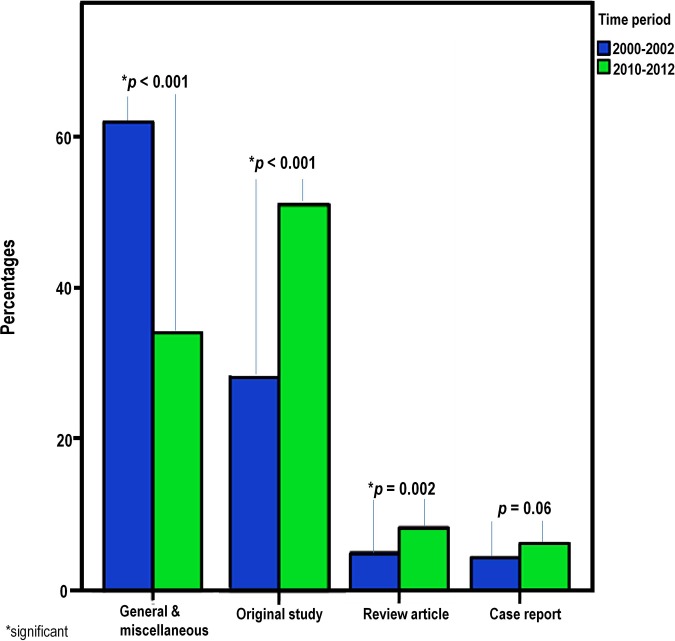

Table 1 presents the number of items classified per time period by journal title. Figure 2 shows the subsequent division of these items into the format categories. In 2000–2002, the highest proportion of items fell into the general and miscellaneous category, with original study the second most frequent; in contrast, these two categories were reversed in 2010–2012. Comparison of format categories between the two time periods revealed a significant increase in the proportion of both original studies (p<0.001) and review articles (p=0.002) and a significant decrease in the proportion of general and miscellaneous items (p<0.001) in 2010–2012 (Figure 2). The majority of our random sample of articles was of interest to human health care (2000–2002: 232/267 [86.9%]; 2010–2012: 362/402 [90.0%]); the difference between time periods was not significant (p=0.21).

Table 1.

Number of Items Classified by Journals Studied (n=2,166).

| Journal | 2000–2002, no. (%) | 2010–2012, no. (%) |
|---|---|---|
| Journal of Physiotherapy | 182 (15.66) | 199 (19.82) |
| Physical Therapy | 286 (24.61) | 429 (42.73) |
| Physiotherapy | 559 (48.11) | 179 (17.83) |
| Physiotherapy Canada | 135 (11.62) | 197 (19.62) |
| Total | 1,162 | 1,004 |

Figure 2.

Comparison of format category proportions between the time periods studied (n=2,166). There was a significant increase in the proportion of original studies (2000–2002: 330/1162 [28.4%]; 2010–2012: 513/1004 [51.1%]) and review articles (2000–2002: 60/1162 [5.2%]; 2010–2012: 85/1004 [8.5%]) and a significant decrease in the proportion of general and miscellaneous items in 2010–2012 (2000–2002: 721/1162 [62.0%]; 2010–2012: 343/1004 [34.2%]).
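The proportions in this caption can be recomputed from the reported counts, as in the short sketch below. The case report counts are not stated in the caption; the values computed here are inferred on the assumption that the four format categories are exhaustive, and the variable names are illustrative only.

```python
totals = {"2000-2002": 1162, "2010-2012": 1004}
reported_counts = {
    "original study": {"2000-2002": 330, "2010-2012": 513},
    "review article": {"2000-2002": 60, "2010-2012": 85},
    "general and miscellaneous": {"2000-2002": 721, "2010-2012": 343},
}

implied_case_reports = {}
for period, total in totals.items():
    print(period)
    accounted_for = 0
    for category, by_period in reported_counts.items():
        count = by_period[period]
        accounted_for += count
        print(f"  {category}: {count}/{total} ({100 * count / total:.1f}%)")
    # Inferred, not reported: whatever is left must be case reports
    # if the four format categories cover every item.
    implied_case_reports[period] = total - accounted_for
    remainder = implied_case_reports[period]
    print(f"  case report (inferred): {remainder}/{total} ({100 * remainder / total:.1f}%)")
```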

Purpose categories

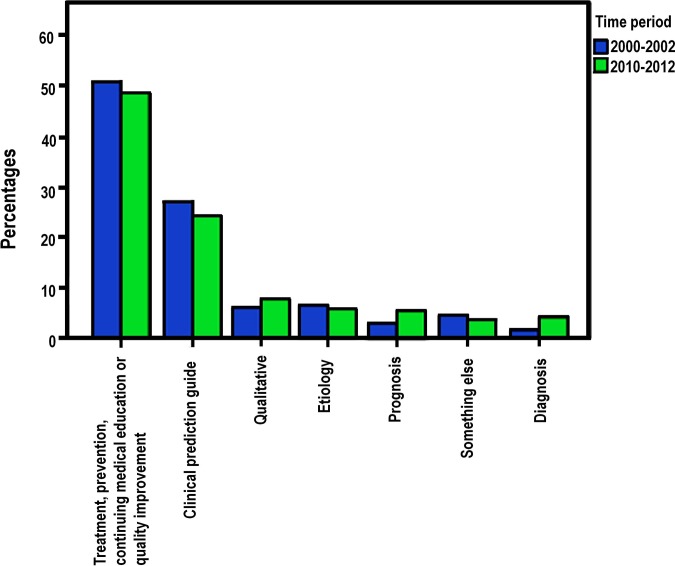

Figure 3 shows the articles of interest to human health care divided into purpose categories. In both time periods, the most frequent purpose category was treatment, prevention, continuing medical education, or quality improvement, followed by clinical prediction guide. There was only one item in the economics category, which was therefore excluded as an outlier. Comparison between the two time periods found no significant difference in the proportion of any purpose category (see Figure 3; all p values >0.10).

Figure 3.

Comparison of Purpose Category Proportions Between the Studied Time Periods (n=522). No differences in proportions were found for any purpose category when compared between the two time periods (all p values>0.10).

Pass rates

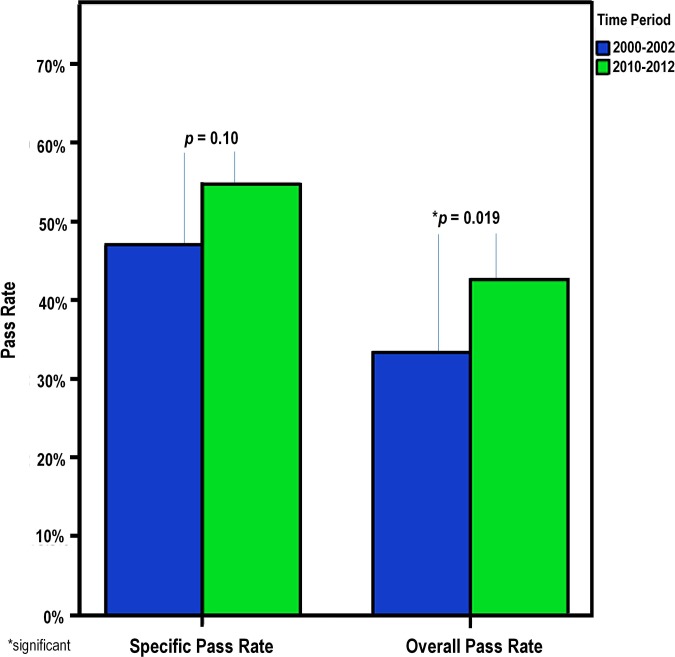

For all purpose category articles that we evaluated for rigour, the specific pass rate was 47.1% (89/189) for 2000–2002 and 54.8% (171/312) for 2010–2012 (see Figure 4; p=0.10). A more detailed analysis found no difference between time periods in the specific pass rate for any of the purpose categories (all p values >0.30). In contrast, the overall pass rate was 33.3% in 2000–2002 and 42.5% in 2010–2012, representing a significant increase over time (Figure 4; p=0.019). Further analysis showed that the increase in overall pass rate of review articles was significant (2000–2002: 7/267 [2.6%]; 2010–2012: 28/402 [7.0%]; p=0.013). However, the overall pass rate of original studies was not significantly different between time periods (2000–2002: 82/267 [30.7%]; 2010–2012: 143/402 [35.6%]; p=0.21).
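The two pass rates differ only in their denominator, which the following sketch makes explicit using the counts reported above. The helper name `pass_rate` and the dictionary keys are illustrative assumptions, not terms from the Hedges Project.

```python
def pass_rate(passed, denominator):
    """Return a pass rate as a percentage."""
    return 100 * passed / denominator


counts = {
    "2000-2002": {"passed": 89, "assessed_for_rigour": 189, "random_sample": 267},
    "2010-2012": {"passed": 171, "assessed_for_rigour": 312, "random_sample": 402},
}

for period, c in counts.items():
    # Overall: passes out of every article in the ~60% random sample.
    overall = pass_rate(c["passed"], c["random_sample"])
    # Specific: passes out of only those articles that reached the rigour step.
    specific = pass_rate(c["passed"], c["assessed_for_rigour"])
    print(f"{period}: overall {overall:.1f}%, specific {specific:.1f}%")
```

Because articles that were not of interest to human health care, case reports, and excluded purpose categories remain in the overall denominator, the overall pass rate is always the lower of the two.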

Figure 4.

Overall Pass Rate (n=669) and Specific Pass Rate (n=501) Compared Between the Two Time Periods.

Survey results

Of the 36 questionnaires sent, 15 were returned; data from one questionnaire were not usable, leaving 14 responses for analysis. All editors were trained physical therapists. The majority had at least 4 years' experience as an editor (n=9, 64%) and a current education level of “PhD” or “post-doctoral” (n=12, 86%).

All editors (n=14, 100%) reported that the number of submissions to their journal had increased during their appointment. The majority believed that there had been a change in the types of manuscripts submitted (n=9, 64%), that the number of studies published had increased during their tenure (n=11, 79%), and that the quality of publications had improved (n=13, 93%). Editors believed systematic reviews (n=5, 36%), “other” designs (including qualitative) (n=3, 21%), and RCTs (n=2, 14%) had increased the most, while narrative reviews (n=7, 50%) had decreased the most.

Editors identified inadequate funding for PT research (n=13, 93%) and researchers' preference for submitting higher quality studies to non-PT journals (n=11, 71%) as barriers to improving the quality of publications.

The majority of editors predicted a continuing increase in the number of PT journals (n=9, 64%) and the number of submissions to PT journals (n=14, 100%) in the future. They identified systematic reviews (n=14, 100%) and RCTs (n=12, 86%) as the two research designs most likely to increase in the future. Finally, editors considered systematic reviews (n=14, 100%) and RCTs (n=12, 86%) as most beneficial to future clinical decision-making.

Discussion

This was the first study to describe the types and quality of evidence published in PT journals and to compare two time periods by applying the Hedges Project criteria and seeking the opinions of editors. We found that the overall rigour of articles, assessed by the criteria of the Hedges Project, was greater in 2010–2012 than in 2000–2002; that a greater proportion of original studies and review articles were published in 2010–2012; and that almost all editors considered the quality of publications to have increased in recent years.

There has recently been greater emphasis on EBP in the PT profession, with the research community providing more robust evidence to support professional practice. This shift toward EBP has likely produced stronger research designs, increased adherence to scientifically sound criteria, and emphasized knowledge translation. These factors, in addition to an increase in the proportion of original studies and review articles and a decrease in general and miscellaneous items from 2000–2002 to 2010–2012, may have contributed to the increase in overall pass rate in 2010–2012.

The pass rate calculation of Miller and colleagues18 is comparable to our specific pass rate rather than our overall pass rate. For the articles they evaluated for rigour, Miller and colleagues18 reported a 66% pass rate, higher than the 47% and 55% pass rates we found for articles published in 2000–2002 and 2010–2012, respectively. These rates cannot be directly compared because of several differences between the methodology employed by Miller and colleagues18 and that of our study. First, Miller and colleagues18 analyzed articles published in 2000–2001; our study included articles from 2000–2002, and our random sample of approximately 60% of items yielded a larger number of articles for evaluation using the Hedges Project (n=267 vs. n=179). Second, we made assumptions and modified the Hedges Project criteria to reduce ambiguity in format and purpose categories and rigour criteria; thus, the Hedges Project tool we used differed slightly from the one used by Miller and colleagues.18 Third, Miller and colleagues18 took consecutive issues of the four chosen PT journals for analysis, whereas we used a random sample from all issues published in 2000–2002. Finally, Miller and colleagues18 used only one reviewer to identify the format and purpose categories and assess for rigour, whereas we used five evaluators.

In addition to using a quantitative measure that considered quality, we supplemented our results by seeking the opinions of editors. The majority of editors reported that the quality of articles has increased in recent years, which supports our findings regarding publication quality improvement as defined by the Hedges Project criteria. Editors also reported an increase in the number of submissions and predicted an increase in the number of journals and article submissions in the future. Similarly, Frontera and colleagues,26 reporting on editors' opinions in the field of physical and rehabilitation medicine, stated that both the amount of research and the number of articles submitted for review are increasing and that there will be a need for more journals in the future.

In terms of study design, the editors surveyed in our study saw the greatest increase in systematic reviews, RCTs, and “other” methodologies, including qualitative studies. This finding cannot be directly compared with our findings using the Hedges Project criteria, as the Hedges Project did not allow us to look specifically at different study designs. In agreement with our findings, an editorial by Durward27 regarding the journal Physiotherapy Research International reported that several qualitative studies have been published since the journal's first publication in 1996. In 2002, Refshauge,17 also discussing Physiotherapy Research International, noted that more RCTs have been included since the journal's first publication. In accordance with our findings, Crosbie28 suggested that there are now more systematic reviews published; he also suggested that future PT literature will include more RCTs and that systematic reviews will replace literature reviews.28

Future studies including rehabilitation journals in addition to PT journals would improve power and sensitivity in evaluating article quality in rehabilitation. It may also be worthwhile to explore possible reasons behind the change observed in types and quality of articles.

This study has several limitations. While the Hedges Project is a useful tool in that it is standardized and allows for evaluation of a variety of research designs, some of its criteria are vague and less applicable to PT than to other health care professions. In addition, articles from only four journals were evaluated for rigour. This decreases our ability to generalize our results to other PT and rehabilitation journals. Furthermore, five evaluators were used to apply the Hedges Project criteria; while a large number of evaluators can leave room for discrepancy and variance, we minimized this possibility by training with the Hedges Project criteria before assessment and by consulting members of the group to reach a consensus in cases of uncertainty.

Conclusion

There was an improvement in the quality of articles from 2000–2002 to 2010–2012. There was an increase in the proportion of original studies and review articles between those time periods. According to editors, this reflects an increase in RCTs and systematic reviews. Collectively, these findings imply a shift in PT journals toward higher quality, evidence-based research. Physical therapists can rely on PT journals as one source to inform their clinical practice but must still evaluate each article to determine its efficacy for clinical decision-making.

Key messages

What is already known on this topic

There has been an increase in published PT literature over the years, as well as a change in the types of publications. A variety of tools have been used to assess quality of literature in PT and rehabilitation journals including the Hedges Project.

What this study adds

The growth in PT literature over the years has been associated with an increase in quality over the last decade. There was an increase in the proportion of original studies and review articles in that time period.

Physiotherapy Canada 2014;66(4):382–391; doi:10.3138/ptc.2013-67

References

- 1. Paci M, Cigna C, Baccini M, et al. Types of article published in physiotherapy journals: a quantitative analysis. Physiother Res Int. 2009;14(4):203–12. http://dx.doi.org/10.1002/pri.447. Medline:19746407.

- 2. Costa LOP, Moseley AM, Sherrington C, et al. Core journals that publish clinical trials of physical therapy interventions. Phys Ther. 2010;90(11):1631–40. http://dx.doi.org/10.2522/ptj.20090419. Medline:20724420.

- 3. Maher C, Moseley A, Sherrington C, et al. Core journals of evidence-based physiotherapy practice. Physiother Theory Pract. 2001;17(3):143–51. http://dx.doi.org/10.1080/095939801317077605.

- 4. Maher CG, Sherrington C, Elkins M, et al. Challenges for evidence-based physical therapy: accessing and interpreting high-quality evidence on therapy. Phys Ther. 2004;84(7):644–54. Medline:15225083.

- 5. Kocak FU, Unver B, Karatosun V. Level of evidence in four selected rehabilitation journals. Arch Phys Med Rehabil. 2011;92(2):299–303. http://dx.doi.org/10.1016/j.apmr.2010.07.233. Medline:21272728.

- 6. Schreiber J, Stern P. A review of the literature on evidence-based practice in physical therapy. The Internet Journal of Allied Health Sciences and Practice [serial on the Internet]. 2005;3(4) [cited 2013 July 16]; [about 21 screens]. Available from: http://ijahsp.nova.edu/articles/vol3num4/schreiber.pdf.

- 7. Gibson BE, Martin DK. Qualitative research and evidence-based physiotherapy practice. Physiotherapy. 2003;89(6):350–8. http://dx.doi.org/10.1016/S0031-9406(05)60027-2.

- 8. Jones M, Grimmer K, Edwards I, et al. Challenges in applying best evidence to physiotherapy. The Internet Journal of Allied Health Sciences and Practice [serial on the Internet]. 2006;4(3) [cited 2013 July 16]; [about 17 screens]. Available from: http://ijahsp.nova.edu/articles/vol4num3/jones.pdf.

- 9. Sackett DL, Rosenberg WM, Gray JA, et al. Evidence based medicine: what it is and what it isn't. BMJ. 1996;312(7023):71–2. http://dx.doi.org/10.1136/bmj.312.7023.71. Medline:8555924.

- 10. Coronado RA, Riddle DL, Wurtzel WA, et al. Bibliometric analysis of articles published from 1980 to 2009 in Physical Therapy, journal of the American Physical Therapy Association. Phys Ther. 2011;91(5):642–55. http://dx.doi.org/10.2522/ptj.20100267. Medline:21372202.

- 11. Robertson VJ. A quantitative analysis of research in Physical Therapy. Phys Ther. 1995;75(4):313–22, discussion 322–7. http://dx.doi.org/10.1093/ptj/75.4.313. Medline:7899489.

- 12. Wiles L, Matricciani L, Williams M, et al. Sixty-five years of Physical Therapy: bibliometric analysis of research publications from 1945 through 2010. Phys Ther. 2012;92(4):493–506. http://dx.doi.org/10.2522/ptj.20110013. Medline:22194279.

- 13. Fell DW, Burnham JF, Buchanan MJ, et al. Mapping the core journals of the physical therapy literature. J Med Libr Assoc. 2011;99(3):202–7. http://dx.doi.org/10.3163/1536-5050.99.3.007. Medline:21753912.

- 14. Kumar SP, Sisodia V, Kumar A. Evidence base for physiotherapy/physical therapy: A specialty-based quantitative trend analysis of articles. Saudi J Health Sci. 2013;2(1):23–30. http://dx.doi.org/10.4103/2278-0521.112627.

- 15. Wakiji EM. Mapping the literature of physical therapy. Bull Med Libr Assoc. 1997;85(3):284–8. Medline:9285129.

- 16. Bohannon RW. Core journals of physiotherapy. Physiotherapy. 1999;85(6):317–21. http://dx.doi.org/10.1016/S0031-9406(05)67135-0.

- 17. Refshauge K. Reflections on the direction of research and PRI. Physiother Res Int. 2002;7(2):iii–v. http://dx.doi.org/10.1002/pri.241. Medline:12109239.

- 18.Miller PA, McKibbon KA, Haynes RB. A quantitative analysis of research publications in physical therapy journals. Phys Ther. 2003;83(2):123–31. Medline:12564948. [PubMed] [Google Scholar]

- 19.Freda MC, Kearney M. An international survey of nurse editors' roles and practices. J Nurs Scholarsh. 2005;37(1):87–94. doi: 10.1111/j.1547-5069.2005.00006.x. http://dx.doi.org/10.1111/j.1547-5069.2005.00006.x. Medline:15813592. [DOI] [PubMed] [Google Scholar]

- 20.Wilkes MS, Kravitz RL. Policies, practices, and attitudes of North American medical journal editors. J Gen Intern Med. 1995;10(8):443–50. doi: 10.1007/BF02599916. http://dx.doi.org/10.1007/BF02599916. Medline:7472701. [DOI] [PubMed] [Google Scholar]

- 21.Yank V, Barnes D. Consensus and contention regarding redundant publications in clinical research: cross-sectional survey of editors and authors. J Med Ethics. 2003;29(2):109–14. doi: 10.1136/jme.29.2.109. http://dx.doi.org/10.1136/jme.29.2.109. Medline:12672892. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Anderson P. ‘Gatekeepers’ and the quality of the journal literature: findings from a survey of journal editors into the issue of alleged excessive publication in scholarly and scientific journals. Ser Rev. 1997;23(2):45–57. http://dx.doi.org/10.1016/S0098-7913(97)90050-2. [Google Scholar]

- 23.Thomson Reuters. ISI Web of Knowledge: Journal Citation Reports 2011 [Internet] 2012. [cited 2012 Sep 26]. Available from: http://thomsonreuters.com/journal-citation-reports/.

- 24.Lai TY, Leung GM, Wong VW, et al. How evidence-based are publications in clinical ophthalmic journals? Invest Ophthalmol Vis Sci. 2006;47(5):1831–8. doi: 10.1167/iovs.05-0915. http://dx.doi.org/10.1167/iovs.05-0915. Medline:16638988. [DOI] [PubMed] [Google Scholar]

- 25.Dillman DA. Mail and Internet surveys: the tailored design method. 2nd ed. Hoboken (NJ): Wiley; 2007. [Google Scholar]

- 26.Frontera WR, Grimby G, Basford J, et al. Publishing in physical and rehabilitation medicine. Am J Phys Med Rehabil. 2008;87(3):215–20. doi: 10.1097/PHM.0b013e3181664f7f. http://dx.doi.org/10.1097/PHM.0b013e3181664f7f. Medline:18287818. [DOI] [PubMed] [Google Scholar]

- 27.Durward B. Progress by design. Physiother Res Int. 2001;6(2):v–vi. doi: 10.1002/pri.214. http://dx.doi.org/10.1002/pri.214. Medline:11436676. [DOI] [PubMed] [Google Scholar]

- 28.Crosbie J. Physiotherapy research: A retrospective look at the future. Aust J Physiother. 2000;46(3):159–64. doi: 10.1016/s0004-9514(14)60325-1. http://dx.doi.org/10.1016/S0004-9514(14)60325-1. Medline:11676800. [DOI] [PubMed] [Google Scholar]
