Primary care practices are turning toward team-based strategies such as panel management, in which nonclinicians address routine preventive and chronic disease care tasks for a group of patients. No known validated instruments have been published for measuring panel management implementation. The authors developed the 12-item Panel Management Questionnaire (PMQ) measuring 4 domains. Data were assembled from self-administered cross-sectional surveys of 136 staff and 204 clinicians in 9 county and 5 university adult primary care clinics. Staff and clinician PMQ scores in each clinic were correlated. The clinic-level median PMQ score was positively associated with a composite clinic quality measure.

Abstract

Context:

To meet demands for evidence-based chronic and preventive services and to improve performance, primary care practices are turning toward team-based strategies such as panel management, in which nonclinicians address routine preventive and chronic disease care tasks for a group of patients. No known validated instruments have been published for measuring panel management implementation.

Objective:

To describe development and evaluation of the Panel Management Questionnaire (PMQ), a self-report tool measuring panel management capability in primary care.

Design:

Cross-sectional study for questionnaire validation.

Main Outcome Measures:

We developed the 12-item PMQ from a conceptual framework to measure 4 domains of panel management and tested the questionnaire for internal and external validity. Data were assembled from self-administered cross-sectional surveys that included the PMQ instrument sent from February 2012 through May 2012. We surveyed 136 staff and 204 clinicians in 9 county and 5 university adult primary care clinics. Additional data came from clinic quality measures routinely collected the quarter before the survey.

Results:

The PMQ scale demonstrated good internal consistency (Cronbach α = 0.92 and 0.84 for staff and clinicians, respectively). Staff and clinician PMQ scores in each clinic were correlated (Kendall τ = 0.45, p < 0.05). The clinic-level median PMQ score was positively associated with a composite clinic quality measure (Kendall τ = 0.42 for staff, 0.28 for clinicians).

Conclusions:

The PMQ measures self-reported panel management capability and may be a useful assessment and research tool for panel management implementation in primary care practice.

INTRODUCTION

Despite widely accepted evidence-based guidelines in the US for chronic and preventive services, patients receive only 55% of recommended care. 1 To bridge this gap between demand and capacity, the concept of primary care has evolved to include population management, which requires clinicians to assume responsibility for the health of all patients assigned to their panel. 2 However, estimates suggest that a primary care physician working in a traditional practice model would need 21.7 hours per day to provide recommended services for a panel of 2500 patients. 3 – 5 A team-based care strategy that delegates routine preventive and chronic care tasks to allied health personnel can increase capacity in primary care, 6 – 9 and it has been proposed as a way to address the demands for evidence-based chronic and preventive services. 10 , 11

Panel management refers to the tools and processes for population care applied at the level of a primary care panel. It involves identifying patients who have unmet preventive and chronic care needs and reaching out to them during or outside a clinic visit. 12 In the panel management model, a team made up of a primary care clinician and designated staff, often medical assistants, is collectively accountable for a defined panel of patients, and the nonclinician staff share increased responsibility for routine preventive and chronic care. Implementation of panel management has been linked to improvements across multiple quality measures, including rates of cancer screening, immunizations, diabetes care, cardiovascular disease care, blood pressure control, and smoking cessation counseling. 13 – 20 Panel management is emerging as a prominent component of transformed models of high-performing primary care 21 and is a requirement for achieving recognition as a patient-centered medical home. 22 In this environment, it is important to define and measure how clinics are achieving new care models.

The process of transitioning a practice to a team-based model can be challenging and time-intensive. The effectiveness of the team can vary, 23 and complex interventions have variable success rates with multiple determinants. 24 As clinics adopt panel management, they require validated instruments to measure practice capability, both as a tool for quality-improvement cycles and for research aimed at investigating how panel management affects clinical outcomes. We could find no validated instruments for measuring facets of panel management implementation. However, several approaches may be considered. One could examine clinic quality measures for preventive and chronic care services to evaluate whether panel management is effectively closing care gaps. However, if quality measures are faltering, this approach does not provide information about which upstream panel management components are not functioning well, limiting targeted improvements. Moving upstream, one could measure actual panel management practices using direct observation, but this is time- and resource-intensive, limiting practical clinical application. A third approach is to assess the capability of the practice for panel management on the basis of a conceptual model of its key components, which could then facilitate improvement in effectiveness.

We developed and tested the Panel Management Questionnaire (PMQ), a self-report instrument for primary care staff and clinicians to assess capability in panel management. The objective of this study was to assess the psychometric properties and internal validity of the PMQ instrument as well as its external validity by investigating its association with clinic performance in closing care gaps.

METHODS

Participants and Setting

We conducted a cross-sectional survey of staff and clinicians in primary care practices using a self-administered questionnaire between February 2012 and May 2012. The study included nine of ten clinics providing comprehensive adult primary care services in a county health department-administered system (one clinic not providing staff respondents was excluded) and all five comprehensive adult primary care practices in a university-administered system. At the time, most clinics had been oriented to the principles of panel management and encouraged to implement this care model. All practices were located in San Francisco, CA. All staff and clinicians at the clinics were invited to participate in the survey.

Panel Management Questionnaire Instrument

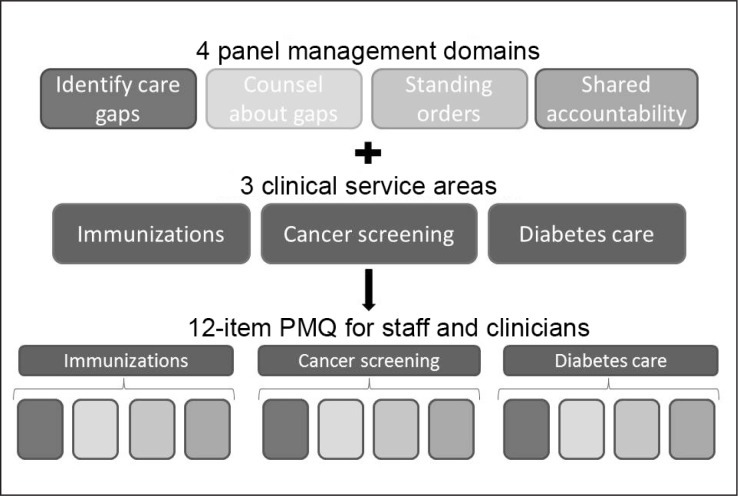

Guided by the literature and our own experiences facilitating the adoption of panel management, we developed the PMQ on the basis of a conceptual model of 4 domains of panel management. The domains were as follows: 1) Nonclinician staff assigned to panel management must have the ability to use patient registries and protocols to properly identify care gaps (patients who are not up to date on evidence-based preventive and chronic care services); 2) Once the care gaps are identified, the staff must be able to educate and counsel patients about these care gaps; 3) A practice must have standing orders authorizing staff to deliver or to place pending orders for services without waiting for the clinician to initiate an individual order for each patient; and 4) There must be a sense of shared accountability among staff and clinicians for quality of care, requiring both empowerment of the staff to fulfill this role and the clinicians’ trust in the staff to take responsibility for these tasks. The 12-item PMQ includes 1 item to represent each of these 4 domains, with each item applied to 3 representative service areas: immunizations, cancer screening, and diabetes care (Figure 1). For the domains of identifying gaps and educating patients, the staff items asked about confidence in performing these tasks, and the clinician items asked about confidence in staff members’ competence. For the other 2 domains, items asked about staff members’ and clinicians’ endorsement of standing orders and shared responsibility. The 2 clinician items for these domains used reverse wording to guard against acquiescence bias 25 and to assess more clearly clinician trust in the staff and acceptance of the shift in staff role.

Figure 1.

Development process for 12-item Panel Management Questionnaire (PMQ).

We developed survey questions through consultation with Medical Directors, clinical leaders, and practice facilitators from both systems. Four staff and 4 clinicians provided feedback on readability and face validity of the PMQ. A 10-point Likert scale was used for each item, with 10 indicating the highest level of agreement. We calculated a PMQ subscale score for each service type (eg, immunizations) by averaging the scores of the 4 domains for that service type, and a total PMQ score as the mean of all 12 items. Each question was weighted equally to calculate means. A score of 10 represented the greatest degree of panel management capability. Through review by staff and clinicians, and testing for face validity, the PMQ went through 9 iterations before reaching its final form. The final PMQ is available online at: www.thepermanentejournal.org/doi/10.7812/TPP/14-170#supplementary-materials.
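The scoring rule above can be expressed compactly. The following is a minimal Python sketch, not the authors' scoring code: the domain and service keys (`identify`, `counsel`, and so on) and the helper names are hypothetical, and reverse coding on the 1-to-10 scale is assumed to map a raw rating x to 11 − x for the two negatively worded clinician items.

```python
from statistics import mean

# Hypothetical keys for the 4 PMQ domains and 3 service areas.
DOMAINS = ("identify", "counsel", "standing_orders", "shared_accountability")
SERVICES = ("immunizations", "cancer", "diabetes")
# Clinician items in these 2 domains were negatively worded (reverse coded).
CLINICIAN_REVERSED = {"standing_orders", "shared_accountability"}
LOW, HIGH = 1, 10  # Likert range used by every PMQ item


def reverse_code(score):
    """Flip a 1-10 rating so that 10 always means the most capability."""
    return LOW + HIGH - score


def pmq_scores(responses, clinician):
    """Return (subscale means by service, total mean) for one respondent.

    responses maps (service, domain) -> raw rating for all 12 items.
    """
    expected = {(s, d) for s in SERVICES for d in DOMAINS}
    missing = expected - set(responses)
    if missing:
        raise ValueError(f"missing items: {sorted(missing)}")
    items = {}
    for key in expected:
        raw = responses[key]
        if not LOW <= raw <= HIGH:
            raise ValueError(f"{key} out of range: {raw}")
        _, domain = key
        if clinician and domain in CLINICIAN_REVERSED:
            raw = reverse_code(raw)
        items[key] = raw
    # Equal weights: subscale = mean of its 4 domain items,
    # total = mean of all 12 items.
    subscales = {s: mean([items[(s, d)] for d in DOMAINS]) for s in SERVICES}
    total = mean(list(items.values()))
    return subscales, total
```

Because every subscale has exactly 4 items, the total score under this sketch equals the mean of the three subscale scores whenever all 12 items are answered.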

Respondent Measures

The survey included items on respondent characteristics, including hours or shifts worked per week and tenure. Clinicians were categorized as resident physicians, attending physicians, or nurse practitioners/physician assistants. Staff were grouped into two categories to differentiate those working in medical assistant roles from those working in other roles (eg, front desk staff) using the question, “Do you room patients, take vitals, or contact patients between visits about their routine chronic and preventive care tests?” Only staff answering affirmatively were instructed to complete the PMQ portion of the survey because these are the staff responsible in these systems for panel management tasks. The survey was offered in both Web-based and paper form. Respondents were entered into a raffle for $25 gift cards.

Clinic Quality Measures

Clinic quality measures for the three service areas included in the PMQ were collected from routinely reported clinic data. The measures were rates of patients with up-to-date pneumococcal and tetanus, diphtheria, and acellular pertussis vaccinations; breast cancer, colon cancer, and cervical cancer screening; and for patients with diabetes, testing of hemoglobin A1c and low-density-lipoprotein cholesterol. A composite measure of clinic quality was calculated as the mean of all the rates. These measures were available only for the nine county-operated clinics because of an interruption in routine collection of quality measures amid electronic health record transitions at the university-based clinics during the study period. All clinic quality measures were reported in December 2011, two months before the first survey responses.
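A composite built this way is an unweighted average of rates, so each measure counts equally regardless of how many patients are eligible for it. The minimal sketch below assumes each rate is expressed as a proportion between 0 and 1; the function name, measure keys, and example values are hypothetical and are not study data.

```python
from statistics import mean


def composite_quality(rates):
    """Unweighted mean of a clinic's quality rates (proportions 0-1).

    rates maps a measure name to the proportion of eligible patients
    who are up to date on that measure.
    """
    if not rates:
        raise ValueError("at least one quality rate is required")
    for name, rate in rates.items():
        if not 0.0 <= rate <= 1.0:
            raise ValueError(f"{name} is not a proportion: {rate}")
    return mean(list(rates.values()))


# Hypothetical clinic covering the 7 measures named in the text.
example = {
    "pneumococcal": 0.70, "tdap": 0.50, "breast_screening": 0.65,
    "colon_screening": 0.55, "cervical_screening": 0.60,
    "hba1c_tested": 0.85, "ldl_tested": 0.80,
}
print(round(composite_quality(example), 3))
```

An alternative design would weight each rate by its denominator; the text describes a simple mean, which is what this sketch computes.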

Data Analysis

We examined internal validity of the PMQ using individual respondents as the unit of analysis. Descriptive statistics of median and interquartile range were used to document ranges and distributions. We conducted an exploratory factor analysis for the PMQ items stratified by employment group (staff or clinician) and PMQ service type (immunization, cancer screening, and diabetes). Internal consistency for each PMQ service area subscale and the total PMQ scale was examined with Cronbach α. We considered an α of 0.60 as the minimum acceptable level of internal reliability.
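Cronbach α compares the sum of the item variances with the variance of respondents' total scores. The pure-Python sketch below follows the standard formula with sample variances throughout; it illustrates the statistic rather than reproducing the SPSS or Stata routines used in the study, and it assumes complete responses (no missing items).

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for k items, each a list of n respondents' scores.

    alpha = k/(k-1) * (1 - sum(item variances) / variance(total scores)),
    using sample variances. Assumes complete data for every respondent.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least 2 items")
    n = len(items[0])
    if n < 2 or any(len(col) != n for col in items):
        raise ValueError("items must have equal length >= 2")
    # Each respondent's total score across the k items.
    totals = [sum(col[i] for col in items) for i in range(n)]
    total_var = variance(totals)
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    item_var = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - item_var / total_var)


MIN_ACCEPTABLE_ALPHA = 0.60  # threshold stated in the text
```

For a PMQ subscale, `items` would hold the 4 domain columns for one service area; for the total scale, all 12 columns.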

We used Kendall τ to assess external validity, both to measure the degree of agreement between clinician and staff PMQ scores in each clinic and to measure the association between PMQ scores and related clinical quality measures for each clinic. Both used the clinic as the unit of analysis.
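Both clinic-level analyses reduce individual responses to one value per clinic before correlating. The sketch below assumes the clinic value is the median score (as reported in the Results) and uses Kendall τ-b, which adjusts the denominator for tied values; the text does not state which τ variant was reported, so τ-b is an assumption, and the helper names are hypothetical.

```python
from math import sqrt
from statistics import median


def kendall_tau_b(x, y):
    """Kendall tau-b, which corrects the denominator for tied values."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must be equal-length sequences (n >= 2)")
    n0 = n * (n - 1) // 2  # total number of pairs
    concordant = discordant = tied_x = tied_y = 0
    for i in range(n):
        for j in range(i + 1, n):
            dx = x[i] - x[j]
            dy = y[i] - y[j]
            if dx == 0:
                tied_x += 1
            if dy == 0:
                tied_y += 1
            if dx * dy > 0:
                concordant += 1
            elif dx * dy < 0:
                discordant += 1
    denom = sqrt((n0 - tied_x) * (n0 - tied_y))
    if denom == 0:
        raise ValueError("tau-b is undefined when a variable is constant")
    return (concordant - discordant) / denom


def clinic_medians(records):
    """Collapse (clinic_id, score) records to one median score per clinic."""
    by_clinic = {}
    for clinic, score in records:
        by_clinic.setdefault(clinic, []).append(score)
    return {clinic: median(scores) for clinic, scores in by_clinic.items()}


def clinic_level_tau(records_a, records_b):
    """tau-b between two groups' clinic medians, over clinics in both."""
    a, b = clinic_medians(records_a), clinic_medians(records_b)
    shared = sorted(set(a) & set(b))
    return kendall_tau_b([a[c] for c in shared], [b[c] for c in shared])
```

The same `kendall_tau_b` function would serve for the quality analysis, pairing each county clinic's median PMQ score with its composite quality rate.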

Data analysis was conducted using SPSS Version 20 (IBM, Armonk, NY) and Stata Version 12 (StataCorp, College Station, TX). The University of California, San Francisco Committee on Human Research approved the protocol. All individual-level responses were kept confidential. Clinic administrators received results aggregated at the level of their own clinic along with combined results for all clinics in their system for comparison.

RESULTS

Two hundred fifty of 398 staff (63%) and 204 of 359 clinicians (57%) responded to the survey. Of the 250 staff respondents, 136 reported working in direct patient care roles and were eligible for the PMQ. At the clinic level, the response rate ranged from 38% to 93% for staff and 43% to 100% for clinicians. The minimum number of respondents per clinic was 6 staff members and 2 clinicians, with smaller clinics having the highest response rates. Most of the staff worked 20 hours per week or more, whereas most of the clinicians worked 5 or fewer clinic sessions per week (Table 1). Table 2 shows clinic characteristics.

Table 1.

Participants’ characteristics

| Characteristic | Clinicians (n = 204) | Staff (n = 136) |
|---|---|---|
| Number of half-days of patient care/week, no. (%) | | |
| 1–2 half-days | 101 (49) | — |
| 3–5 half-days | 69 (34) | — |
| ≥ 6 half-days | 34 (17) | — |
| Work hours, no. (%) | | |
| < 20 hours/week | — | 14 (10) |
| ≥ 20 hours/week | — | 122 (90) |
| Tenure, no. (%) | | |
| < 1 year | 34 (17) | 28 (21) |
| 1–5 years | 89 (43) | 55 (40) |
| > 5 years | 81 (40) | 53 (39) |
| System, no. (%) | | |
| Public system | 133 (65) | 102 (75) |
| University system | 71 (35) | 34 (25) |
| EHR in transition (± 6 months), no. (%) | | |
| No | 190 (93) | 118 (87) |
| Yes | 14 (7) | 18 (13) |
| Clinician type, no. (%) | | |
| Attending physician | 101 (49) | NA |
| Nurse practitioner/physician assistant | 29 (14) | NA |
| Resident | 74 (37) | NA |

Staff were asked only about work hours, not about the number of half-days of patient care.

Clinicians were asked only about the number of half-days of patient care, not about work hours.

EHR = electronic health record; NA = not applicable

Table 2.

Clinic characteristics (n = 14)

| Characteristic | Value |
|---|---|
| County system, no. (%) | 9 (64) |
| University system, no. (%) | 5 (36) |
| Number of clinicians, mean (range) | 26 (2–120) |
| Number of clinician FTEs, mean (range) | 5.1 (1.7–15.1) |
| Number of staff, mean (range) | 28 (8–61) |
| Serves as resident teaching clinic, no. (%) | 3 (21) |
| Annual visit volume, mean (SD) | 19,649 (13,784) |

Number of clinicians includes residents from the teaching clinics, who contribute, on average, 51 clinicians to each of the 3 largest clinics.

Number of clinician FTEs represents 11 clinics because the number of full-time equivalents was unavailable for 3 of the clinics, including 2 resident teaching clinics that were among the top 3 clinics by visit volume.

FTE = full-time equivalent; SD = standard deviation.

Descriptive data on PMQ items and factor-loading results for PMQ service-type subscales are shown in Table 3. Item scores tended to be higher among staff than among clinicians, with the exception of the standing order items. In the exploratory factor analysis, the eigenvalue-greater-than-1.00 criterion and scree plots consistently suggested the presence of 1 factor in each service-type subscale. For staff, the PMQ subscale factor loadings were high, ranging from 0.76 to 0.92. For clinicians, factor loadings were 0.64 or higher with the exception of the standing order items, which ranged from 0.25 to 0.34, and the diabetes shared accountability item (0.33).
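The eigenvalue screen described above can be sketched with NumPy. This assumes a principal-component style extraction on the item correlation matrix (the text does not state which extraction method was used), so loadings from this sketch need not match Table 3 exactly; the function name is hypothetical.

```python
import numpy as np


def one_factor_check(items):
    """Eigenvalue screen for a single-factor structure.

    items: array-like of shape (k_items, n_respondents).
    Returns eigenvalues (descending), how many exceed 1.0 (Kaiser
    criterion), and loadings on the first principal component.
    """
    data = np.asarray(items, dtype=float)
    corr = np.corrcoef(data)                 # k x k item correlation matrix
    eigvals, eigvecs = np.linalg.eigh(corr)  # eigh returns ascending order
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    n_above_one = int(np.sum(eigvals > 1.0))
    # Loading = eigenvector element scaled by sqrt(eigenvalue).
    loadings = eigvecs[:, 0] * np.sqrt(eigvals[0])
    if loadings.sum() < 0:                   # eigenvector sign is arbitrary
        loadings = -loadings
    return eigvals, n_above_one, loadings
```

A single eigenvalue above 1.0 is consistent with one factor; a scree plot of the returned eigenvalues gives the second check mentioned in the text.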

Table 3.

Panel Management Questionnaire subscale descriptive statistics and factor loadings for staff and clinicians

| Survey respondent category | Immunizations, median (IQR) | Immunizations, factor loading | Cancer screening, median (IQR) | Cancer screening, factor loading | Diabetes, median (IQR) | Diabetes, factor loading |
|---|---|---|---|---|---|---|
| Staff | | | | | | |
| Identification of care gaps | 10.00 (2.00) | 0.86 | 9.00 (5.00) | 0.92 | 8.00 (5.75) | 0.92 |
| Counseling regarding gaps | 9.00 (2.00) | 0.87 | 8.50 (5.00) | 0.90 | 8.00 (5.00) | 0.89 |
| Standing orders to close gaps | 9.00 (6.00) | 0.78 | 9.00 (5.00) | 0.89 | 5.00 (8.00) | 0.76 |
| Shared accountability | 10.00 (4.00) | 0.86 | 9.00 (5.00) | 0.86 | 7.00 (8.00) | 0.88 |
| Clinicians | | | | | | |
| Identification of care gaps | 7.00 (5.00) | 0.86 | 7.00 (4.00) | 0.86 | 6.00 (5.50) | 0.85 |
| Counseling regarding gaps | 7.00 (4.00) | 0.80 | 5.00 (4.00) | 0.84 | 5.00 (4.00) | 0.84 |
| Standing orders to close gaps† | 10.00 (2.00) | 0.25 | 9.00 (2.00) | 0.29 | 9.00 (3.00) | 0.34 |
| Shared accountability† | 4.00 (7.00) | 0.66 | 3.00 (5.00) | 0.64 | 3.00 (5.00) | 0.33 |

Items measured using a Likert scale of 1 to 10, with higher scores indicating greater agreement.

† Clinician questions used negative wording and were reverse coded.

IQR = interquartile range.

The PMQ demonstrated good internal consistency. Cronbach α for the PMQ total scale was 0.92 and 0.84 for staff and clinicians, respectively. Subscale score α ranged from 0.86 to 0.91 for staff and from 0.63 to 0.68 for clinicians (Table 4). Because the Cronbach α results were acceptable and because all 4 domains of panel management are theoretically important in both the staff and clinician PMQ scales, we retained the standing order items in the clinician PMQ scales despite their lower factor loadings.

Table 4.

Panel Management Questionnaire descriptive statistics and internal consistency

| Survey respondent category | Questionnaire scale or subscale | Median (IQR) | Cronbach α |
|---|---|---|---|
| Staff | Total scale | 9.00 (4.00) | 0.92 |
| | Immunization subscale | 9.00 (3.00) | 0.86 |
| | Cancer subscale | 9.00 (4.50) | 0.91 |
| | Diabetes subscale | 7.00 (6.50) | 0.88 |
| Clinicians | Total scale | 6.00 (3.88) | 0.84 |
| | Immunization subscale | 7.00 (3.88) | 0.67 |
| | Cancer subscale | 6.00 (3.50) | 0.68 |
| | Diabetes subscale | 5.50 (4.50) | 0.63 |

Items measured using a Likert scale of 1 to 10, with higher scores indicating greater agreement.

IQR = interquartile range.

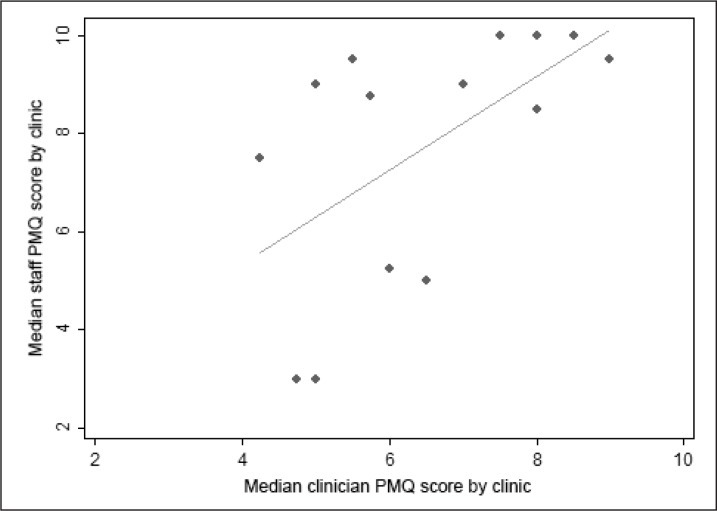

The median score for the overall 12-item PMQ was 9.0 (interquartile range = 4.00) for staff and 6.0 (interquartile range = 3.88) for clinicians (Table 4). Median service-type PMQ subscale scores were lowest for diabetes care among both staff and clinicians; among clinicians, medians were highest for immunizations, followed by cancer screening, whereas among staff the immunization and cancer screening medians were equal (9.00). In analysis of results at the clinic level, the median of staff total PMQ scores ranged from 3.0 to 10.0, and the median clinician PMQ scores ranged from 4.3 to 8.5 (Figure 2). Staff and clinician total PMQ scores in each clinic were moderately correlated (Kendall τ = 0.45, p < .05). In the county clinics, we found support for external validity, with a trend for higher staff and clinician median PMQ scores at the clinic level to be associated with better clinic quality of preventive and chronic care (Kendall τ = 0.42 and 0.28, respectively, for correlation of median staff and clinician PMQ scores with composite clinic quality measures).

Figure 2.

Median Panel Management Questionnaire (PMQ) total score for staff and clinicians across clinics.

Diagonal line = fitted values; dot = individual clinic.

DISCUSSION

Our study supports the internal and external validity of the PMQ as an instrument to measure self-reported degree of panel management capability in primary care practices. Factor analysis indicated that items generally loaded well on subscales, and internal reliability was good to excellent for the staff scales and acceptable to good for the clinician scales. The correlation between staff and clinician scores at the clinic level indicates convergence between clinician and staff perspectives. This convergence among workers in different clinic roles supports the notion that the PMQ is measuring perceptions of a shared construct of panel management capability. The positive, though not statistically significant, association with clinic quality measures previously shown to be improved by panel management lends further support to the external validity of the PMQ.

The PMQ for clinicians appears to have less robust internal and external validity than the PMQ for staff. One reason may be that staff are better able to assess their own ability to carry out panel management, whereas clinicians, one step removed from the process, may assess staff less accurately or simply be unaware of what occurs when they are not in the room. In addition, the clinician PMQ included two negatively worded items to minimize inattention and acquiescence by respondents. Reverse coding can adversely affect reliability 26 and may have contributed to the low factor loadings for the clinician standing orders item in the exploratory factor analysis. The low loadings may also reflect the complexity of the question: clinicians are asked whether the staff should be using standing orders, which is less concrete than the other three questions and may assess the ideal of what panel management could be rather than the reality of what it is. This hypothesis is consistent with the clinician standing order item scoring higher than the other three clinician items in every service area (Table 3), possibly reflecting clinician acceptance of panel management rather than the current status of standing order utilization. We elected to retain the “standing orders” question as part of the clinician PMQ both to maintain parallel questions between the staff and clinician surveys and because of the question’s usefulness as an assessment of clinician buy-in.

Practices and investigators could consider surveying only staff when assessing panel management capability. However, we believed it important to include the clinician PMQ, even though it is less psychometrically robust, because it may still be useful for practice coaching. Practicewide acceptance of a new team structure is necessary for successful implementation, and both staff and clinician perspectives may give insight into areas amenable to focused training in the practice. For example, in one of the surveyed practices, the disparity between staff and clinician assessments sparked an important conversation among clinicians about how they can be assured that the staff are capable in their role as panel managers.

Subscale scores were generally highest for immunizations, intermediate for cancer screening, and lowest for diabetes care. This pattern has face validity because, of the three, panel management of diabetes care is the most complex to master. In our experience, most clinics begin panel management work with immunizations, where care gap identification is dependent primarily on age and previous vaccination, and then proceed to cancer screening, which adds duration since last screening and knowledge of previous positive or negative test result to the algorithm. For diabetes care, in which care gap identification requires an understanding of target hemoglobin A1c and low-density-lipoprotein cholesterol levels, knowledge of past laboratory values, and duration since last laboratory testing, the algorithm is more complex. Because of this, as well as the need for additional monitoring of blood pressure, foot care, and ophthalmologic evaluation, clinics often reach this stage of panel management later.
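The increasing complexity of care gap identification across the three service areas can be illustrated with simplified rules. The sketch below is hypothetical: the function names are invented, each service is reduced to a single rule, and every threshold (ages, intervals, laboratory targets) is a placeholder supplied by the caller rather than a value from the study or any guideline. What it shows is only how the inputs grow from age and vaccination history, to screening dates and results, to target levels and past values.

```python
from datetime import date

DAYS_PER_YEAR = 365.25


def years_between(earlier, later):
    """Approximate elapsed time in years between two dates."""
    return (later - earlier).days / DAYS_PER_YEAR


def immunization_gap(age, already_vaccinated, min_age):
    # Inputs: age and vaccination history only.
    return age >= min_age and not already_vaccinated


def screening_gap(age, last_screen, last_abnormal, today,
                  age_range, routine_interval, followup_interval):
    # Adds: time since the last screen and the result of that screen.
    low, high = age_range
    if not low <= age <= high:
        return False
    if last_screen is None:
        return True
    interval = followup_interval if last_abnormal else routine_interval
    return years_between(last_screen, today) >= interval


def diabetes_lab_gap(last_test, last_value, today, target,
                     interval_at_target, interval_above_target):
    # Adds: a target level and the last measured value, which together
    # decide how soon the next test is due.
    if last_test is None or last_value is None:
        return True
    if last_value <= target:
        interval = interval_at_target
    else:
        interval = interval_above_target
    return years_between(last_test, today) >= interval


# Example call with placeholder thresholds (illustrative only):
print(screening_gap(55, date(2011, 6, 1), True, date(2012, 7, 1),
                    age_range=(50, 75), routine_interval=10,
                    followup_interval=1))
```

A real registry would also handle multiple vaccines, several screening tests per cancer type, and the additional diabetes measures mentioned above.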

The PMQ holds promise as a tool both for the pragmatic assessment and facilitation of panel management implementation and for research to better understand the process and outcomes of panel management. As a measure of practice capability, the PMQ may have particular application to efforts to redesign primary care practice. Practices could use the PMQ to measure baseline capability for panel management, identifying elements that are least developed and most likely to benefit from focused training and facilitation. The PMQ could also be used to track progress in developing capability for panel management in response to implementation efforts. For researchers, the PMQ could serve as a time- and cost-efficient tool to further our understanding of predictors and outcomes of panel management capability. Finally, a potential strength of the PMQ is its association at the clinic level with quality measures, which are often routinely reported for performance assessments. The positive trends for this association in our study suggest that practices with higher PMQ scores may be doing better at actual panel management, which could be one reason they achieve better quality scores. These correlations were not statistically significant, however, and the clinic-level analysis had low statistical power (n = 9).
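To see why correlations of this size are hard to distinguish from chance with only 9 clinics, one can enumerate the exact null distribution of Kendall τ. The sketch below counts orderings by their number of inversions (concordant minus discordant pairs equals n(n − 1)/2 − 2 × inversions) and assumes no tied values, which real clinic medians may not satisfy. It is an illustration of small-sample power, not a re-analysis of the study data, and the function names are hypothetical.

```python
from math import factorial


def inversion_counts(n):
    """counts[k] = number of orderings of n items with exactly k inversions."""
    counts = [1]  # a single item has 0 inversions
    for m in range(2, n + 1):
        # Inserting the m-th item can create 0..m-1 new inversions.
        new = [0] * (len(counts) + m - 1)
        for k, c in enumerate(counts):
            for j in range(m):
                new[k + j] += c
        counts = new
    return counts


def exact_tau_pvalue(tau_observed, n):
    """Two-sided exact p-value for Kendall tau under independence, no ties."""
    if n < 2:
        raise ValueError("need at least 2 paired observations")
    n0 = n * (n - 1) // 2  # number of pairs
    extreme = 0
    for inversions, c in enumerate(inversion_counts(n)):
        s = n0 - 2 * inversions  # concordant minus discordant pairs
        if abs(s) / n0 >= abs(tau_observed) - 1e-12:
            extreme += c
    return extreme / factorial(n)
```

Under these assumptions, `exact_tau_pvalue(0.42, 9)` is above 0.05, whereas `exact_tau_pvalue(0.45, 14)`, matching the 14-clinic staff-clinician comparison, falls below 0.05.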

LIMITATIONS

A limitation of our study is that we did not directly observe panel management practices to determine whether PMQ scores correlate at the individual, team, or clinic level with objective measures of staff practices. However, direct observation methods have their own limitations. There is no validated instrument for quantitatively scoring direct observation of panel management. We performed our study in a real-world context of facilitating adoption of panel management. Although we conducted direct observation in a limited manner to spot-check panel management implementation, the observations needed to create stable quantitative scores at the team or clinic level would require resources beyond the capabilities of most practice-based groups striving to evaluate primary care improvements. Moreover, some of the elements measured by the PMQ cannot be assessed through direct observation. In addition to correlating scores with clinic quality measures, one could consider a measure to assess patient experience of proactive care as a way to verify penetration of implementation.

Additional limitations of this study include respondents representing primary care clinics in only one city, which may limit generalizability. However, the sample was heterogeneous in that it included two different health systems and clinics of various sizes and in various stages of panel management adoption. Future directions can include verification of PMQ validity in additional patient populations and clinic types. The survey response rate was as low as 38% for staff and 43% for clinicians by clinic, which may have limited the precision of clinic-level estimates for testing external validity and introduced an element of nonresponse bias. However, overall response rates were comparable to or higher than response rates in other published surveys of clinicians. 27 The moderate agreement between staff and clinician scores across clinics was reassuring. A final limitation was the lack of routinely available quality measures for the university system at the time the study was conducted.

CONCLUSION

These results suggest that the PMQ is a valid tool to measure self-reported panel management capability, including the knowledge, system design, and philosophical acceptance of staff performing panel management. The PMQ may serve as a measure for research and evaluation that can be linked to downstream clinical quality measures. It can also be applied to efforts to redesign primary care practices by focusing on facilitation of and tracking progress in the adoption of panel management as one of the building blocks of high-performing primary care.

Supplementary Material

Panel Management Questionnaires

Acknowledgments

Without the close partnership of the leadership and staff of these 14 primary care clinics across San Francisco, this research would not have been possible. The wisdom, expertise, and candid feedback of their clinic leadership, clinicians, and front-line staff were critical to the development of the Panel Management Questionnaire. The study team thanks the San Francisco Department of Public Health and the University of California San Francisco Medical Center for their support of this project.

Kathleen Louden, ELS, of Louden Health Communications provided editorial assistance.

Footnotes

Disclosure Statement

The author(s) have no conflicts of interest to disclose. The survey for this project was done in conjunction with a primary care transformation initiative supported by the Centers for Medicare and Medicaid Services Incentive Program under the Section 1115 California Medicaid Waiver. Dr Rogers was a primary care research fellow funded by NRSA grant T32 HP19025.

References

- 1. McGlynn EA, Asch SM, Adams J, et al. The quality of health care delivered to adults in the United States. N Engl J Med. 2003 Jun 26;348(26):2635-45. DOI: 10.1056/NEJMsa022615.

- 2. Chen EH, Bodenheimer T. Improving population health through team-based panel management: comment on “Electronic medical record reminders and panel management to improve primary care of elderly patients”. Arch Intern Med. 2011 Sep 26;171(17):1558-9. DOI: 10.1001/archinternmed.2011.395.

- 3. Yarnall KS, Pollak KI, Østbye T, Krause KM, Michener JL. Primary care: is there enough time for prevention? Am J Public Health. 2003 Apr;93(4):635-41. DOI: 10.2105/AJPH.93.4.635.

- 4. Yarnall KS, Østbye T, Krause KM, Pollak KI, Gradison M, Michener JL. Family physicians as team leaders: “time” to share the care. Prev Chronic Dis. 2009 Apr;6(2):A59.

- 5. Østbye T, Yarnall KS, Krause KM, Pollak KI, Gradison M, Michener JL. Is there time for management of patients with chronic diseases in primary care? Ann Fam Med. 2005 May-Jun;3(3):209-14. DOI: 10.1370/afm.310.

- 6. Bodenheimer T. Helping patients improve their health-related behaviors: what system changes do we need? Dis Manag. 2005 Oct;8(5):319-30. DOI: 10.1089/dis.2005.8.319.

- 7. Bodenheimer T, Pham HH. Primary care: current problems and proposed solutions. Health Aff (Millwood). 2010 May;29(5):799-805. DOI: 10.1377/hlthaff.2010.0026.

- 8. Ghorob A, Bodenheimer T. Sharing the care to improve access to primary care. N Engl J Med. 2012 May;366(21):1955-7. DOI: 10.1056/NEJMp1202775.

- 9. Altschuler J, Margolius D, Bodenheimer T, Grumbach K. Estimating a reasonable patient panel size for primary care physicians with team-based task delegation. Ann Fam Med. 2012 Sep-Oct;10(5):396-400. DOI: 10.1370/afm.1400.

- 10. Barnes KA, Kroening-Roche JC, Comfort BW. The developing vision of primary care. N Engl J Med. 2012 Sep 6;367(10):891-3. DOI: 10.1056/NEJMp1204487.

- 11. Ghorob A, Bodenheimer T. Share the Care™: building teams in primary care practices. J Am Board Fam Med. 2012 Mar-Apr;25(2):143-5. DOI: 10.3122/jabfm.2012.02.120007.

- 12. Neuwirth EE, Schmittdiel JA, Tallman K, Bellows J. Understanding panel management: a comparative study of an emerging approach to population care. Perm J. 2007 Summer;11(3):12-20. DOI: 10.7812/TPP/07-040.

- 13. Kanter M, Martinez O, Lindsay G, Andrews K, Denver C. Proactive office encounter: a systematic approach to preventive and chronic care at every patient encounter. Perm J. 2010 Fall;14(3):38-43. DOI: 10.7812/TPP/10-036.

- 14. Lasser KE, Murillo J, Lisboa S, et al. Colorectal cancer screening among ethnically diverse, low-income patients: a randomized controlled trial. Arch Intern Med. 2011 May 23;171(10):906-12. DOI: 10.1001/archinternmed.2011.201.

- 15. Hoffman RM, Steel SR, Yee EF, et al. A system-based intervention to improve colorectal cancer screening uptake. Am J Manag Care. 2011 Jan;17(1):49-55.

- 16. Jean-Jacques M, Kaleba EO, Gatta JL, Gracia G, Ryan ER, Choucair BN. Program to improve colorectal cancer screening in a low-income, racially diverse population: a randomized controlled trial. Ann Fam Med. 2012 Sep-Oct;10(5):412-7. DOI: 10.1370/afm.1381.

- 17. Baker AN, Parsons M, Donnelly SM, et al. Improving colon cancer screening rates in primary care: a pilot study emphasizing the role of the medical assistant. Qual Saf Health Care. 2009 Oct;18(5):355-9. DOI: 10.1136/qshc.2008.027649.

- 18. Feldstein AC, Perrin NA, Unitan R, et al. Effect of a patient panel-support tool on care delivery. Am J Manag Care. 2010 Oct 1;16(10):e256-66.

- 19. Loo TS, Davis RB, Lipsitz LA, et al. Electronic medical record reminders and panel management to improve primary care of elderly patients. Arch Intern Med. 2011 Sep 26;171(17):1552-8. DOI: 10.1001/archinternmed.2011.394.

- 20. Chuang E, Ganti V, Alvi A, Yandrapu H, Dalal M. Implementing panel management for hypertension in a low-income, urban, primary care setting. J Prim Care Community Health. 2014 Jan 1;5(1):61-6. DOI: 10.1177/2150131913516497.

- 21. Willard R, Bodenheimer T. The building blocks of high-performing primary care: lessons from the field [Internet]. Oakland, CA: California HealthCare Foundation; 2012 Apr [cited 2014 Dec 10]. Available from: www.chcf.org/publications/2012/04/building-blocks-primary-care.

- 22. Standards for patient-centered medical home (PCMH) [Internet]. Washington, DC: National Committee for Quality Assurance; 2011 [cited 2013 Apr 2]. Available from: www.iafp.com/pcmh/ncqa2011.pdf.

- 23. Shortell SM, Marsteller JA, Lin M, et al. The role of perceived team effectiveness in improving chronic illness care. Med Care. 2004 Nov;42(11):1040-8. DOI: 10.1097/00005650-200411000-00002.

- 24. Helfrich CD, Weiner BJ, McKinney MM, Minasian L. Determinants of implementation effectiveness: adapting a framework for complex innovations. Med Care Res Rev. 2007 Jun;64(3):279-303. DOI: 10.1177/1077558707299887.

- 25. Cronbach LJ. Further evidence on response sets and test design. Educational and Psychological Measurement. 1950 Apr;10(1):3-31. DOI: 10.1177/001316445001000101.

- 26. Weems GH, Onwuegbuzie AJ. The impact of midpoint responses and reverse coding on survey data. Measurement and Evaluation in Counseling and Development. 2001 Oct;34(3):166-76.

- 27. Asch DA, Jedrziewski MK, Christakis NA. Response rates to mail surveys published in medical journals. J Clin Epidemiol. 1997 Oct;50(10):1129-36. DOI: 10.1016/S0895-4356(97)00126-1.
