Abstract

Background

Survey research in healthcare is an important tool to collect information about healthcare delivery, service use and overall issues relating to quality of care. Unfortunately, physicians are often a group with low survey response rates and little research has looked at response rates among physician specialists. For these reasons, the purpose of this project was to explore survey response rates among physician specialists in a large metropolitan Canadian city.

Methods

As part of a larger project examining physician payment plans, an online survey about medical billing practices was distributed to 904 physicians from various medical specialties. The primary method for physicians to complete the survey was via the Internet using a well-known and established survey company (www.surveymonkey.com). Multiple methods were used to encourage survey response, including individual personalized email invitations, multiple reminders, and a draw for three gift certificate prizes. Descriptive statistics were used to assess response rates and reasons for non-response.

Results

Overall survey response rate was 35.0%. Response rates varied by specialty: Neurology/neurosurgery (46.6%); internal medicine (42.9%); general surgery (29.6%); pediatrics (29.2%); and psychiatry (27.1%). Non-respondents listed lack of time/survey burden as the main reason for not responding to our survey.

Conclusions

Our survey results provide a look into the challenges of collecting healthcare research data where response rates to surveys are often low. The findings presented here should help researchers in planning future survey-based studies. Findings from this study and others suggest smaller monetary incentives for each individual may be a more appropriate way to increase response rates.

Keywords: Survey methodologies, Healthcare, Response rate, Specialists, Physicians

Background

With the rise of the Internet and email in recent decades, online and web-based tools offer promising advances for healthcare survey research methods [1,2]. Immediate survey delivery, real-time data tracking and inexpensive costs are selling points of email or web-based surveys [2]. Electronic surveys may also increase response rates through ease of access, as well as greater individual anonymity compared to face-to-face or telephone interviews [2,3]. Despite the increased use of web-based surveys, considerable debate about the success and usefulness of this type of survey mode exists [4-6]. Studies using both email and mail paper surveys demonstrate conflicting evidence as to whether email surpasses mail as a delivery modality [6]. Survey response rates have also in general been on the decline for the past decade in the field of health related research [1,6-8].

Despite declining response rates, survey research remains an important way to gather information about physicians’ knowledge and attitudes, and to evaluate the impact of clinical research on practice [9]. Soliciting physician input is also essential when existing healthcare policies are being updated or new policies are being developed [10]. Unfortunately, physicians are a professional group with low survey response rates in general [11,12]. While family doctors have typically had low survey response rates [12,13], specialist physicians have historically demonstrated variability in response rates [14-17]. Kellerman and Herold [8] reviewed the effect of physician demographic characteristics on survey response and found that medical specialty type was not associated with response rates. It remains disputed in the literature whether survey recruitment methods that are successful with general practitioners are also successful with other physician specialties. It is also uncommon in the survey methodology literature to see surveys conducted across multiple physician specialties. Our study allowed us to look at variations in response rate across multiple specialties; in addition, our recruitment methodology was unique in that individualized, personalized emails were sent to each physician. As survey research with specialist physician groups is on the rise [18-20], more survey research involving physician specialists is needed to understand the reasons for differences in response rates within this medical group. Specifically, there is a need to identify new recruitment methods that may be uniquely suited to physician specialist groups.

Differential effects by medical specialty may be the result of several factors including preference for survey mode, survey design, survey length and potential confounding factors such as the gender of respondents [6,8,21]. For example, in one survey study of family and specialist physicians, pediatricians not only had higher response rates overall and within the promised-incentive group but were also the least sensitive to the timing of the incentive. One possible reason for this is that the response rate may be confounded by gender; women may be more likely to respond to surveys than men and women make up a larger proportion of pediatricians [21].

Response rate can be further affected by the survey topic. When the topic is of high interest, potential respondents are more likely to respond to the survey [6,22-24]. In addition, whether survey topics are sensitive or non-sensitive, or concern attitude or fact, is likely to affect response rates in web surveys [6,22,24]. For example, obtaining data on physicians’ billing practices is often challenging due to the sensitive nature of the topic [25]. Only a handful of studies have examined and compared survey response rates among physician specialists, in whom different survey methodologies may be necessary to achieve an acceptable response rate [14,26].

As part of a larger project assessing the impact of physician billing practices on the completeness of administrative data, we sought to examine response rates to a web-based survey developed for medical and surgical specialists. The survey was designed to gather demographic and billing information from physician specialists in Fee-for-Service (FFS) and Alternative Payment Plans (APP). The recent introduction of Alternative Payment Plans across Canada has changed the way that many physicians are reimbursed and, subsequently, the process of physician billing [27]. Physicians on APPs are encouraged to submit claims, called “shadow bills”, for the services they provide, for administrative purposes. Unfortunately, under these new APPs, physicians are not compensated for the time spent recording the services they deliver (i.e. shadow billing).

The objective of our study was to assess billing practices amongst a large group of medical and surgical specialists using a modified version of the Dillman method [23,28]. Our hypothesis was that the response rate to our web-based survey would be low due to the sensitive nature of the topic under investigation (i.e. physician billing practices). Here we discuss our study findings, including survey methodology and response rates, and we explore reasons for non-response.

Methods

Physicians’ survey

Survey design: The initial three-page survey was reviewed and refined for content validity by a working group of eight senior researchers and practicing physicians from the various relevant specialties [i.e. intensive care unit (ICU), internal medicine, neurology and neurosurgery, pediatrics, psychiatry and general surgery]. The refinement process included a content review from all stakeholders listed as co-investigators for the project.

The final survey gathered information regarding physicians’ billing status (FFS versus APP), whether they are obligated to shadow bill as part of their APP contract (if applicable), whether incentives are provided to them to shadow bill (if applicable) and demographic information. The email survey design and layout included two pages of questions. The primary method of accessing and completing the survey was via the Internet using a well-known and established survey company (www.surveymonkey.com). The survey company hosted and collected the survey data, and only participants who were sent the email could connect to the hyperlink and respond to the questionnaire. However, if physicians had trouble accessing or completing the online survey, a paper version could be requested by mail or fax. Ethical approval for the study was obtained from the Office of Medical Bioethics at the university where the study took place.

Survey participants: The following physician specialties were targeted: ICU, internal medicine, neurology and neurosurgery, pediatrics, psychiatry and general surgery. We restricted our sample to these specialties because they had established APP and FFS programs, because specialty is a potential confounding factor for shadow billing behaviour, and because these specialty groups had a large number of registered physicians in the large Canadian city where the study took place.

Inclusion criteria were: 1) physicians employed and practicing in 2009; 2) physicians on an APP or FFS payment plan; and 3) physicians providing inpatient or outpatient (i.e. clinic) services based at one of the four acute care hospitals in the city where the study took place. Exclusion criteria were: 1) general practitioners (as the majority are remunerated by the FFS system and did not fit into the scope or budget of our study); and 2) medical trainees (i.e. medical students, residents, and fellows), as the majority of them do not submit billings.

Respondent Sampling: The survey sampling frame was generated using a list of physicians from the 2008 Canadian Medical Directory. The original list included 1012 physicians, their clinic/hospital appointment, specialty, as well as contact information. Because the contact information on the list is not updated regularly, the contact information (i.e. phone, address, email) and specialty were further verified through the latest faculty/department contact lists, and physician contact directories posted on the websites of Alberta Health Services, hospitals and the College of Physicians of Alberta. After the verification of contact information, 108 physicians were excluded due to incorrect or unavailable contact information. The final population of physicians targeted included 904 physicians (324 internists, 58 neurologists/neurosurgeons, 171 pediatricians, 118 psychiatrists and 233 general surgeons).

Survey administration and recruitment strategy

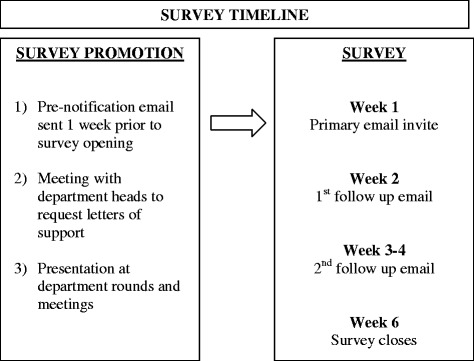

Survey promotion and process: Figure 1 outlines the survey invitation process and timeline. A website containing the project information, investigators’ contact information, and a link to the survey was developed. Meetings were held with department heads for each medical/surgical group to discuss the study and obtain letters of support. Meetings and presentations promoting the survey were also organized with the various medical departments. All the initial email invitations contained a link to the study website which provided further information regarding the study and research team, and a link to access the survey. Additionally, all emails contained the eligibility criteria for participating physicians, the opening and closing dates for access to the survey, and a unique identification number for each participant.

Figure 1.

Methodology of survey invitation.

The main strategy to promote the website and survey was to involve key individuals (i.e. influential physicians from each medical group included in the study) to facilitate, encourage, and support their colleagues, department heads, and other physicians to complete the survey. The emails were addressed and sent individually to each physician by name, thereby avoiding any issues with confidentiality which can be a challenge with mass emailing lists. Emails were also sent using personalized subject headings. The key physicians who were supporting the promotion of the survey authorized their names to be used in the subject heading (i.e. Dr. X is asking for your help). Supporting physicians’ names used in the subject heading were representative of all the medical departments/specialties involved in the study.

The goal of using a familiar physician’s name to personalize the invitation was to add peer influence and to increase the likelihood of physicians’ reading the email and consenting to participate. A generic subject heading listing the funding body (i.e. xxx funded research project) was also used in cases where the use of an individual physician’s name was not deemed appropriate. All responding inquiries or comments were directed primarily to the lead research coordinator and physician co-investigators. Physicians were given the opportunity to submit their unique study ID number with the submission of their survey, and by doing so their names were entered into a one-time draw for three $200.00 (Canadian) gift certificates to a local bookstore.

Finally, after the allotted time period passed to respond to the initial survey invite, a secondary survey was sent to all eligible non-respondents asking them to identify the reasons for not participating in the original survey. The actual question that was sent via email was “In a few words or sentences, please indicate why you were unable or unwilling to complete the survey.” Physician specialist responses were gathered qualitatively.

Statistical analysis

Descriptive statistics were used to describe specialty response rates and reasons for non-response in the current study. Chi-square analysis was used to examine differences between specialists by demographic characteristics or payment plan group. We compiled results from a secondary survey examining physicians’ reasons for non-response and categorized comments into five broader themes based on degrees of commonality within responses. All statistical analyses were conducted using Stata IC, Version 12 (StataCorp LP, College Station, TX).
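To make the chi-square comparison concrete, the following is a minimal sketch of how a Pearson chi-square statistic is computed from a contingency table. It is not the authors' Stata code: the function name `chi_square_statistic` and the toy counts are hypothetical and are used for illustration only, and the sketch assumes every row and column of the table has a non-zero total.

```python
from typing import List, Tuple


def chi_square_statistic(table: List[List[float]]) -> Tuple[float, int]:
    """Return the Pearson chi-square statistic and degrees of freedom
    for an r x c contingency table of observed counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    if grand_total == 0 or 0 in row_totals or 0 in col_totals:
        raise ValueError("every row and column must have a non-zero total")

    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of rows and columns.
            expected = row_totals[i] * col_totals[j] / grand_total
            stat += (observed - expected) ** 2 / expected

    dof = (len(row_totals) - 1) * (len(col_totals) - 1)
    return stat, dof


# Hypothetical counts for illustration only (NOT data from this study):
# rows = two respondent groups, columns = two payment plan groups.
toy_table = [[10, 20], [20, 10]]
stat, dof = chi_square_statistic(toy_table)
print(f"chi-square = {stat:.3f}, degrees of freedom = {dof}")
```

The p-value is then obtained from the chi-square distribution with the computed degrees of freedom, which statistical packages such as Stata report directly.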

Results

Initial physician survey

Of the 904 eligible physicians contacted, 317 responded to the survey, for an overall response rate of 35.0%. Table 1 outlines the baseline demographic characteristics of the survey respondents. Most respondents were male (55.1%), and about half were between the ages of 40 and 59 (51%). A large proportion of physicians had been in practice for 15 or more years (44.6%), with only 18.3% in practice less than 5 years. The largest share of physicians (47.7%) were remunerated on a full-time APP plan, and 38.1% were paid on a FFS plan (see Table 1).

Table 1.

Demographic characteristics of survey respondents

| Characteristic | Physicians (% of 312) |
|---|---|
| Physician specialty | |
| Surgery | 28.2 |
| Internal medicine | 44.6 |
| Paediatrics | 18.0 |
| Psychiatry | 9.3 |
| Age* | |
| 30-39 years | 22.1 |
| 40-59 years | 51.0 |
| More than 59 years | 9.6 |
| Missing data | 17.3 |
| Sex* | |
| Male | 55.1 |
| Female | 27.6 |
| Missing data | 17.3 |
| Length of practice | |
| <5 years | 18.3 |
| 5-14 years | 37.2 |
| ≥15 years | 44.6 |
| Payment plan | |
| Fee-for-Service | 38.1 |
| Alternative Payment Plan (Part)* | 14.1 |
| Alternative Payment Plan (Full)* | 47.7 |

*Note: Alternative Payment Program (APP), Fee-For-Service Payment Program (FFS).

*Age percentage calculated among individuals with non-missing data; 54 individuals (17.3% of total sample) did not provide information.

*Sex percentage calculated among individuals with non-missing data; 54 individuals (17.3% of total sample) did not provide information.

Table 2 outlines the characteristics of physician survey respondents by type of payment plan. Internal medicine specialists on a full-time APP plan were most likely to respond (54%), followed by internal medicine specialists on a part-time APP plan (38.6%) and surgery (35.3%) and internal medicine (35.3%) specialists on a FFS plan. Psychiatrists on both full-time APP (8.7%) and FFS (9.2%) plans were least likely to respond to the survey. Physician specialists aged 40–59 years on both part-time APP (63.3%) and full-time APP (51%) plans were more likely to respond to the survey than those in other age categories. FFS physicians in the 40–59 years age category (46.2%) were also more likely to respond than those in other age categories. Male physician specialists had statistically significantly higher levels of response across all payment plans (full-time APP 51.7%; part-time APP 54.5%; FFS 59.7%) compared to their female counterparts. Finally, physician specialists with 15 or more years in practice were more likely to respond: part-time APP physicians had the highest response rate (50%), followed by FFS (47%) and full-time APP physicians (41%).

Table 2.

Characteristics of physician specialists by payment program

| Characteristic | FFS* (% of 119) | Part APP* (% of 49) | Full APP* (% of 149) | P-value |
|---|---|---|---|---|
| Proportion of physicians | 38.1 | 14.1 | 47.7 | |
| Physician specialty | | | | |
| Surgery | 35.3 | 27.3 | 22.8 | |
| Internal medicine | 35.3 | 38.6 | 54.0 | |
| Paediatrics | 20.2 | 22.7 | 14.8 | |
| Psychiatry | 9.2 | 11.4 | 8.7 | 0.217 |
| Age* | | | | |
| 30-39 years | 22.7 | 15.9 | 23.5 | 0.174 |
| 40-59 years | 46.2 | 63.3 | 51.0 | |
| More than 59 years | 9.2 | 2.2 | 12.1 | |
| Missing data | 21.8 | 18.2 | 13.4 | |
| Sex* | | | | |
| Male | 59.7 | 54.5 | 51.7 | <0.05 |
| Female | 18.5 | 27.3 | 34.9 | |
| Missing data | 21.8 | 18.2 | 13.4 | |
| Length of practice | | | | |
| <5 years | 37.0 | 38.6 | 36.9 | 0.456 |
| 5-14 years | 16.0 | 11.4 | 22.1 | |
| ≥15 years | 47.0 | 50.0 | 41.0 | |

*Note: Alternative Payment Program (APP), Fee-For-Service Payment Program (FFS).

*Age percentage calculated among individuals with non-missing data; 54 individuals (17.3% of total sample) did not provide information.

*Sex percentage calculated among individuals with non-missing data; 54 individuals (17.3% of total sample) did not provide information.

The response rates by timing of reminders for the medical specialty groups are shown in Table 3. Internal medicine (33.6%) and neurology/neurosurgery (34.5%) had the highest response rates following the 1st follow-up/reminder email. Following the 2nd follow-up/reminder email, general surgery and pediatrics response rates increased the most. Overall response rates obtained by specialties were: Neurology/neurosurgery (46.6%), internal medicine (42.9%), general surgery (29.6%), pediatrics (29.2%) and psychiatry (27.1%).

Table 3.

Shadow billing survey response rates per medical specialty

| | Internal medicine (total n = 324), N (%) | Neurology/neurosurgery (total n = 58), N (%) | Pediatrics (total n = 171), N (%) | Psychiatry (total n = 118), N (%) | General surgery (total n = 233), N (%) |
|---|---|---|---|---|---|
| *Response rate after 1st email follow-up (1 week) | 109 (33.6%) | 20 (34.5%) | 12 (7.0%) | 9 (7.6%) | 31 (13.3%) |
| Response rate after 2nd follow-up (3–4 weeks) | 134 (41.4%) | 25 (43.1%) | 50 (29.2%) | 22 (18.6%) | 66 (28.3%) |
| Total response rate | 139 (42.9%) | 27 (46.6%) | 50 (29.2%) | 32 (27.1%) | 69 (29.6%) |

*Overall response rate was 35.0% (317/904), total response rate (%) row is a result of additive responses from first and second email follow up reminders.
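The response rates in Table 3 follow directly from the invited and responding counts. As a minimal sketch (the variable and function names are our own, chosen for illustration), the per-specialty and overall rates can be recomputed as follows, using only the counts transcribed from Table 3:

```python
# Counts transcribed from Table 3: (physicians invited, total respondents).
TABLE_3 = {
    "Internal medicine": (324, 139),
    "Neurology/neurosurgery": (58, 27),
    "Pediatrics": (171, 50),
    "Psychiatry": (118, 32),
    "General surgery": (233, 69),
}


def response_rate(responded: int, invited: int) -> float:
    """Return the response rate as a percentage of those invited."""
    if invited <= 0:
        raise ValueError("invited must be positive")
    return 100.0 * responded / invited


rates = {name: response_rate(resp, inv) for name, (inv, resp) in TABLE_3.items()}
total_invited = sum(inv for inv, _ in TABLE_3.values())
total_responded = sum(resp for _, resp in TABLE_3.values())
overall = response_rate(total_responded, total_invited)

for name, rate in rates.items():
    print(f"{name}: {rate:.1f}%")
print(f"Overall: {total_responded}/{total_invited} = {overall:.2f}%")
```

The specialty denominators sum to the 904 physicians targeted and the respondents sum to 317, giving an overall rate of roughly 35%.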

Of the physicians who responded to the initial survey, 82.6% (262/317) provided their unique identification number to be entered into the lottery draw.

Follow up survey of non-respondents

Sixty-three physicians responded to the secondary survey aimed at exploring reasons for non-response, for a response rate of 11.8% (n = 63/533). Of those who responded to the follow-up survey (n = 63), 70.5% were male and 29.5% were female. Respondents to this follow-up survey were from the following specialties: internal medicine (34.2%), general surgery (27.8%), neurology/neurosurgery (0.03%), pediatrics (18.2%) and psychiatry (16.9%). The main reason for non-response was survey burden, with 60.3% of respondents reporting that there were too many survey requests and they lacked the time to complete them. A further 15.9% believed they were not eligible; 12.7% had no interest or saw no benefit to completing the survey; 7.9% felt the survey was asking for information that was too private; and 3.2% did not know their billing mechanism and so could not complete the survey. It should be noted that in the current study, a handful of physicians (n = 5) responded unfavorably to the offer of a lottery draw incentive, finding it offensive and unethical.
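As a rough check on these figures, the sketch below recomputes the follow-up response rate and back-calculates approximate respondent counts for each reason from the reported percentages. The counts are inferred by rounding and are an assumption of this sketch; only the percentages, the 63 respondents and the denominator of 533 are reported above. The reason labels are shortened paraphrases.

```python
FOLLOW_UP_RESPONDENTS = 63
FOLLOW_UP_INVITED = 533

# Percentages as reported in the text; labels are shortened paraphrases.
REASON_PERCENTAGES = {
    "Survey burden / lack of time": 60.3,
    "Believed they were not eligible": 15.9,
    "No interest or no perceived benefit": 12.7,
    "Information requested was too private": 7.9,
    "Did not know their billing mechanism": 3.2,
}

follow_up_rate = round(100 * FOLLOW_UP_RESPONDENTS / FOLLOW_UP_INVITED, 1)

# Back-calculated (approximate) counts; inferred here, not reported in the study.
approx_counts = {
    reason: round(pct / 100 * FOLLOW_UP_RESPONDENTS)
    for reason, pct in REASON_PERCENTAGES.items()
}

print(f"Follow-up response rate: {follow_up_rate}%")
for reason, count in approx_counts.items():
    print(f"{reason}: ~{count} of {FOLLOW_UP_RESPONDENTS}")
```

Under this rounding assumption the inferred counts add up to the 63 follow-up respondents, which is consistent with the five themes being mutually exclusive.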

Discussion

We conducted an online survey using a personalized invitation email strategy (with a web-based survey) in addition to various other recruitment methods (multiple follow-ups/reminders, lottery draw). These strategies have been used in prior survey studies to increase physician response rates [29,30]. The 35.0% response rate for our own survey was lower than anticipated, but in view of the sensitive nature of the topic under investigation, it was not unexpected. Our response rate is still higher than or comparable to those of similar studies using email as a distribution mode among physician specialists [31,32].

The sensitive nature of our topic (i.e. physician billing practices) and the time period during which our survey was conducted most likely contributed to a lower response rate. Survey research shows that whether a survey topic is sensitive or non-sensitive, and whether it concerns attitude or fact, is likely to affect response rates in web surveys [6,24]. According to several meta-analyses, the salience of a topic is one of the most important factors that influence response rates in both mail and web surveys [6,22,23,33,34]. The contract renewal period for all physicians on APPs occurred during the months in which our survey was open. This may have affected response rates, as physicians may have been cautious about consenting to have their billing practices reviewed for the purposes of our study while the contract renewal process was directly examining the quality of physicians’ billing submissions. Despite this challenge, many of the responding physicians contacted us to ask questions about our study after the initial survey invitation, indicating that they were interested in our study topic, and requested that the results be sent to them. In one case, a face-to-face interview was set up with a physician to discuss the details of the study. Given our project resources, email was the most efficient, inexpensive and timely manner of contacting survey participants. Tracking, managing, and organizing the incoming data was simple and was ideal for the short project timeframe.

Results from studies of previous web-based surveys indicate similar trends in response rates to the current study. In one meta-analysis, the mean response rate for 68 web-based surveys reported in 49 studies was 39.6% [22], similar to our current findings. In Kellerman and Herold [8], the response rates for general practitioners and specialists were 40.1% (186/464) and 49.6% (235/474), respectively. Response rates among specialist physicians vary within the literature [21]. In one study, a mail survey, pediatricians had higher response rates compared to general practitioners, internists and obstetrics-gynecology physicians and were also the least sensitive to the timing of the incentive [21]. In the current study, neurologists and internists had the highest levels of response. This may also be due to gender differences, in that a large proportion of women in those specialties responded to the questionnaire compared to the other specialties. Our findings are in concordance with previous literature which suggests women physicians may be more likely to respond to surveys than male physicians [6,21]. Additionally, in the current study, internal medicine and pediatrics had some of the most longstanding Alternative Payment Programs (i.e. established in 2003–2005), which may have lessened physicians’ concerns about responding to a survey about billing behaviors, making them more likely to respond compared to the other specialties.

Among published studies involving physicians as respondents, survey response rates seem to be most influenced by the use of individual monetary incentives [35-37]. For example, a US study found that the provision of a small ($2.00) monetary incentive sent to each physician invited to participate yielded a substantially greater response rate (56.0% vs. 44.0%) than the lottery draw of a larger, one-time cash incentive [38]. It has been proposed that in order to achieve the response rates needed to validate health care policy-related research using survey methodology, the offer of monetary incentives may become a necessary part of the research process [39,40]. A recent Canadian study examining the use of a substantial monetary lottery incentive among physicians did not find that financial incentives improved response rate. In fact, response rates were lower in the later survey (35.9% in 2004, 31.6% in 2007) [41]. In a study examining prepaid incentives to physician specialists, the response rate was 52.1% for physicians who received a $20 check versus 67.8% for physicians who received a $50 check (P < 0.001) [42]. As physicians become increasingly burdened with surveys, studies suggest larger incentives may be necessary to engage potential respondents and thus maximize response rate [42].

Based on these findings and our own survey findings, smaller individual financial incentives for each respondent may increase initial buy-in from participants, and may be superior to large, one-time lottery draws. However, since we did not include a comparison group offered small individual financial incentives, this conclusion is somewhat limited. Additionally, it is important to note that a handful of physicians (n = 5) responded unfavorably to the offer of a lottery draw incentive. Similar studies have found negative responses to incentives [26,43], although not to the point of withdrawing from the study. In the case of the current study, the physicians declined to participate as a result of being offered a personal incentive.

The timing of follow-up reminders has also been shown to affect response rates. However, recommendations regarding the timing and frequency of follow-up reminders vary substantially in the literature [13]. In our study, response rates by medical or surgical specialty increased after each follow-up/reminder; however, no clear pattern surfaced as to which timeframe (1st week, 3rd week) is most ideal to increase response rates. Our results suggest that at least one follow-up reminder may prove beneficial in increasing response rates [4,44]. More recently, research suggests too many reminders may be viewed as possible harassment of potential respondents [45]. Future research should focus on the ideal number and nature of reminders and, specifically, how much is too much.

Researchers must always explore and address the bias associated with non-response. Physicians who responded to our follow-up survey (11.8%) displayed similar patterns or characteristics of response across gender and specialty. However, beyond these two characteristics, we were not able to compare or contrast other factors that may have influenced certain physicians to respond while others did not. Kellerman and Herold [8] outline the reasons why responding and non-responding physicians tend to share similar characteristics. Physicians as a group are more homogeneous regarding knowledge, training, attitudes, and behavior, and variations that do exist among physicians may not be as associated with willingness to respond or survey content [8].

In the current study, it is important to recognize that non-respondents may differ from participating physicians in ways we were unable to assess, which is a possible source of non-response bias. The main reason for initial non-response in our study was survey burden, with respondents reporting too many survey requests and a lack of time to complete them (60.3%). Physicians commonly acknowledge that too many survey requests and growing constraints on their time limit their ability to participate in multiple, concurrent survey-based studies [46]. Given the demands on their time, the survey topic must be relevant and the survey must present a benefit to physicians in order for them to participate. Studies show physicians are interested in endorsing certain aspects of research where the opportunity to enact quality improvement and contribute to clinical knowledge is evident [47].

It was hypothesized that using personalized email subject headings with the names of key physicians who were supporting the promotion of the survey (i.e. Dr. X is asking for your help) would help bolster participation. As with mail surveys, previous literature indicates that personalized correspondence is associated with higher response rates for electronic surveys [22]. However, this may also have introduced response bias by increasing participation among physicians in certain specialties (e.g. if the respondent was familiar with the physician who was promoting the study). A generic subject heading listing the funding body (i.e. xxx funded research project) was also used in cases where the use of an individual physician’s name was not deemed appropriate. The authors feel this bias would not have affected the results in a significant fashion, especially given the low response rate.

It is important to discuss the limitations of our study. First, 108 physicians were excluded due to incorrect or unavailable contact information, resulting in possible selection bias. Second, there is always the possibility that an email will be identified as “spam mail” when using email as a contact method, possibly further reducing the response rate. Third, we only used one survey mode (i.e. email), which may have limited our response rate. Other limitations included the lack of a comparison group to establish whether our personalized survey method enhanced response rates. As there were no control groups to compare various interventions that may be associated with improved response rate, it is not possible to firmly establish definite drivers of the degree of response observed in this study. However, we attempted to explore the different reasons for non-response among physicians in our survey. Finally, the survey was limited to one large metropolitan city in Canada; thus, the findings may not be generalizable to other geographical locations, or to general practitioners or physicians in training.

Conclusions

In conclusion, our online survey response rate of 35.0% remains comparable to response rates from previously published physician specialist survey-based studies. Variations across medical specialties may have been influenced by gender, as women in certain specialties (i.e. internal medicine and neurology) were more likely to respond than their male counterparts. The response rate in the current study was likely influenced by the sensitive survey topic, but it is likely that specialties (i.e. pediatrics and internal medicine) with longstanding APP programs were more likely to respond as they had more experience with billing within that program. Future survey studies are needed to determine the ideal methodology based on survey topics for physician specialists. This study shares some of the challenges and successes of conducting survey research among multiple physician specialties, where advancement in successful survey recruitment methods is necessary.

Acknowledgements

Data used in the analysis was collected through a project funded by the Canadian Institutes of Health Research (CIHR). H. Quan, N. Jetté, and W. A. Ghali are supported by Alberta Innovates Health Solutions (AIHS) Population Health Investigators Salary Awards. N. Jetté and W. A. Ghali are Canada Research Chair Tier 2 holders. Lindsay L. Sykes was funded by a Summer Studentship from AIHS.

Abbreviations

- APP: Alternative Payment Plans

- FFS: Fee-for-Service

- ICU: Intensive Care Unit

Footnotes

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

Please note that all authors have agreed to the conditions noted on the authorship agreement form and take full responsibility for the data, the analyses and the interpretation. HQ and NJ conceived the study idea, and HQ, BH, TN, CAB, ED, SS, WAG and NJ secured funding for the project by writing and submitting the grant application. CTC, HQ, BH, TN, CAB, ED, SS, WAG, LLS and NJ all took part in the development and refinement of the questionnaire. CTC, HQ and NJ carried out the survey, and CTC and LLS gathered and analyzed the results. CTC, HQ, BH, TN, CAB, ED, SS, WAG, LLS and NJ all took part in the development and editing of the manuscript. All authors read and approved the final manuscript.

Contributor Information

Ceara Tess Cunningham, Email: ceara.cunningham@albertahealthservices.ca.

Hude Quan, Email: hquan@ucalgary.ca.

Brenda Hemmelgarn, Email: Brenda.Hemmelgarn@albertahealthservices.ca.

Tom Noseworthy, Email: tnosewor@ucalgary.ca.

Cynthia A Beck, Email: Cindy.Beck@albertahealthservices.ca.

Elijah Dixon, Email: Elijah.Dixon@albertahealthservices.ca.

Susan Samuel, Email: S.Samuel@albertahealthservices.ca.

William A Ghali, Email: wghali@ucalgary.ca.

Lindsay L Sykes, Email: lindsay.sykes@hotmail.com.

Nathalie Jetté, Email: Nathalie.Jette@albertahealthservices.ca.

References

- 1. Sheehan KB. E-mail Survey Response Rates: A Review. J Computer-Mediated Comm. 2001;6(2):0. doi: 10.1111/j.1083-6101.2001.tb00117.x.

- 2. Watt JH. Internet systems for evaluation research. N Dir Eval. 1999;1999(84):23–43. doi: 10.1002/ev.1151.

- 3. Paolo AM, Bonaminio GA, Gibson C, Partridge T, Kallail K. Response Rate Comparisons of E-Mail- and Mail-Distributed Student Evaluations. Teach Learn Med. 2000;12(2):81–4. doi: 10.1207/S15328015TLM1202_4.

- 4. Braithwaite D, Emery J, De Lusignan S, Sutton S. Using the Internet to conduct surveys of health professionals: a valid alternative? Fam Pract. 2003;20(5):545–51. doi: 10.1093/fampra/cmg509.

- 5. Beebe TJ, Locke GR, 3rd, Barnes SA, Davern ME, Anderson KJ. Mixing web and mail methods in a survey of physicians. Health Serv Res. 2007;42(3 Pt 1):1219–34. doi: 10.1111/j.1475-6773.2006.00652.x.

- 6. Fan W, Yen Z. Factors affecting response rates of the web survey: A systematic review. Comput Hum Behav. 2009;26(2):132–9. doi: 10.1016/j.chb.2009.10.015.

- 7. Cummings SM, Savitz LA, Konrad TR. Reported response rates to mailed physician questionnaires. Health Serv Res. 2001;35(6):1347–55.

- 8. Kellerman SE, Herold J. Physician response to surveys? A review of the literature. Am J Prev Med. 2001;20(1):61–7. doi: 10.1016/S0749-3797(00)00258-0.

- 9. Burns KE, Duffett M, Kho ME, Meade MO, Adhikari NK, Sinuff T, et al. A guide for the design and conduct of self-administered surveys of clinicians. CMAJ. 2008;179(3):245–52. doi: 10.1503/cmaj.080372.

- 10. Asch S, Connor SE, Hamilton EG, Fox SA. Problems in recruiting community-based physicians for health services research. J Gen Intern Med. 2000;15(8):591–9. doi: 10.1046/j.1525-1497.2000.02329.x.

- 11. Tambor SE, Chase GA, Faden RR, Geller G, Hofman KJ, Holtzman NA. Improving response rate through incentive and follow-up: The Effect on a Survey of Physicians’ Knowledge of Genetics. Am J Public Health. 1993;83(11):1599–603. doi: 10.2105/AJPH.83.11.1599.

- 12. Sibbald B, Addington J, Brenneman D, Freeling P. Telephone versus postal surveys of general practitioners: Methodological considerations. Br J Gen Pract. 1994;44:297–300.

- 13. Barclay S, Todd C, Finlay I, Grande G, Wyatt P. Not another questionnaire! Maximizing the response rate, predicting non-response and assessing non-response bias in postal questionnaire studies of GPs. Fam Pract. 2002;19(1):105–11. doi: 10.1093/fampra/19.1.105.

- 14. Maheux B, Legault C, Lambert J. Increasing response rates in physicians' mail surveys: an experimental study. Am J Public Health. 1989;79(5):638–9. doi: 10.2105/AJPH.79.5.638.

- 15. Kline MW, O’Connor KG. Disparity between pediatricians’ knowledge and practices regarding perinatal human immunodeficiency virus counseling and testing. Pediatrics. 2003;112(5):e367. doi: 10.1542/peds.112.5.e367.

- 16. Asch DA, Jedrziewski MK, Christakis NA. Response rates to mail surveys published in medical journals. J Clin Epidemiol. 1997;50(10):1129–36. doi: 10.1016/S0895-4356(97)00126-1.

- 17. Grunfeld E, Mant D, Vessey MP, Fitzpatrick R. Specialist and general practice views on routine follow-up of breast cancer patients in general practice. Fam Pract. 1995;12(1):60–5. doi: 10.1093/fampra/12.1.60.

- 18. Frey MK, Taylor JS, Pauk SJ, Hughes D, Turbendian HK, Sapra KJ, et al. Knowledge of Lynch syndrome among obstetrician/gynecologists and general surgeons. Int J Gynaecol Obstet. 2014;126(2):161–4. doi: 10.1016/j.ijgo.2014.02.024.

- 19. Siddiqui ZK, Wu AW, Kurbanova N, Qayyum R. Comparison of Hospital Consumer Assessment of Healthcare Providers and Systems patient satisfaction scores for specialty hospitals and general medical hospitals: confounding effect of survey response rate. J Hosp Med. 2014;9(9):590–3. doi: 10.1002/jhm.2225.

- 20. Suh E, Daugherty CK, Wroblewski K, Lee H, Kigin ML, Rasinski KA, et al. General internists’ preferences and knowledge about the care of adult survivors of childhood cancer: a cross-sectional survey. Ann Intern Med. 2014;160(1):11–7. doi: 10.7326/M13-1941.

- 21. Delnevo CD, Abatemarco DJ, Steinberg MB. Physician response rates to a mail survey by specialty and timing of incentive. Am J Prev Med. 2004;26(3):234–6. doi: 10.1016/j.amepre.2003.12.013.

- 22. Cook C, Heath F, Thompson R. A Meta-Analysis of Response Rates in Web- or Internet-Based Surveys. Educ Psychol Measurement. 2000;60:821–36. doi: 10.1177/00131640021970934.

- 23. Dillman D. Mail and Telephone surveys. The total design method. 2. Hoboken, NJ: John Wiley & Sons, Inc.; 2000.

- 24. Edwards P, Roberts I, Clarke M, DiGuiseppi C, Pratap S, Wentz R, et al. Increasing response rates to postal questionnaires: systematic review. BMJ. 2002;324(7347):1183. doi: 10.1136/bmj.324.7347.1183.

- 25. Gosden T, Forland F, Kristiansen IS, Sutton M, Leese B, Giuffrida A, et al. Capitation, salary, fee-for-service and mixed systems of payment: effects on the behaviour of primary care physicians. Cochrane Database Syst Rev. 2000;3. doi: 10.1002/14651858.CD002215.

- 26. Robertson J, Walkom EJ, McGettigan P. Response rates and representativeness: a lottery incentive improves physician survey return rates. Pharmacoepidemiol Drug Saf. 2005;14(8):571–7. doi: 10.1002/pds.1126.

- 27. Canadian Institute for Health Information. Alternative payments and the national physician database (NPDB): The status of alternative payment programs for physicians in Canada, 2004–2005 and the preliminary information for 2005–2006. Ottawa: CIHI; 2007.

- 28. Schaefer D, Dillman DA. Development of a standard e-mail methodology: results of an experiment. Public Opin Q. 1998;62(3):378–97. doi: 10.1086/297851.

- 29. Raza M, Bernstein CN, Ilnyckyj A. Canadian physicians' choices for their own colon cancer screening. Can J Gastroenterol. 2006;20(4):281–4. doi: 10.1155/2006/969832.

- 30. Henry-Reid LM, O'Connor KG, Klein JD, Cooper E, Flynn P, Futterman DC. Current pediatrician practices in identifying high-risk behaviors of adolescents. Pediatrics. 2010;125(4):e741–7. doi: 10.1542/peds.2009-0271.

- 31. Afghani B, Kong V, Wu FL. What would pediatric infectious disease consultants recommend for management of culture-negative acute hematogenous osteomyelitis? J Pediatr Orthop. 2007;27(7):805–9. doi: 10.1097/BPO/0b013e3181558981.

- 32. Rice HE, Frush DP, Harker MJ, Farmer D, Waldhausen JH. Peer assessment of pediatric surgeons for potential risks of radiation exposure from computed tomography scans. J Pediatr Surg. 2007;42(7):1157–64. doi: 10.1016/j.jpedsurg.2007.02.007.

- 33. Field TS, Cadoret CA, Brown ML, Ford M, Greene SM, Hill D, et al. Surveying physicians: do components of the “Total Design Approach” to optimizing survey response rates apply to physicians? Med Care. 2002;40(7):596–605. doi: 10.1097/00005650-200207000-00006.

- 34. Thorpe C, Ryan B, McLean SL, Burt A, Stewart M, Brown JB, et al. How to obtain excellent response rates when surveying physicians. Fam Pract. 2009;26(1):65–8. doi: 10.1093/fampra/cmn097.

- 35. Clark SJ, Adolphe S, Davis MM, Cowan AE, Kretsinger K. Attitudes of US obstetricians toward a combined tetanus-diphtheria-acellular pertussis vaccine for adults. Infect Dis Obstet Gynecol. 2006;2006:87040. doi: 10.1155/IDOG/2006/87040.

- 36. Stankovic C, Mahajan P, Ye H, Dunne RB, Knazik SR. Bioterrorism: Evaluating the preparedness of pediatricians in Michigan. Pediatr Emerg Care. 2009;25(2):88–92. doi: 10.1097/PEC.0b013e318196ea81.

- 37. Keating NL, Zaslavsky AM, Goldstein J, West DW, Ayanian JZ. Randomized trial of $20 versus $50 incentives to increase physician survey response rates. Med Care. 2008;46(8):878–81. doi: 10.1097/MLR.0b013e318178eb1d.

- 38. Tamayo-Sarver JH, Baker DW. Comparison of responses to a US 2 dollar bill versus a chance to win 250 US dollars in a mail survey of emergency physicians. Acad Emerg Med. 2004;11(8):888–91. doi: 10.1111/j.1553-2712.2004.tb00776.x.

- 39. Kahn JM, Asch RJ, Iwashyna TJ, Haynes K, Rubenfeld GD, Angus DC, et al. Physician attitudes toward regionalization of adult critical care: a national survey. Crit Care Med. 2009;37(7):2149–54. doi: 10.1097/CCM.0b013e3181a009d0.

- 40. James KM, Ziegenfuss JY, Tilburt JC, Harris AM, Beebe TJ. Getting physicians to respond: the impact of incentive type and timing on physician survey response rates. Health Serv Res. 2011;46(1 Pt 1):232–42. doi: 10.1111/j.1475-6773.2010.01181.x.

- 41. Grava-Gubins I, Scott S. Effects of various methodologic strategies: survey response rates among Canadian physicians and physicians-in-training. Can Fam Physician. 2008;54(10):1424–30.

- 42. Keating NL, Stoeckert KA, Regan MM, DiGianni L, Garber JE. Physicians’ experiences with BRCA1/2 testing in community settings. J Clin Oncol. 2008;26(35):5789–96. doi: 10.1200/JCO.2008.17.8053.

- 43. Tanasiuk EaI S. Do people always respond to incentives? Experience in data gathering through face to face interviews. American Society of Business and Behavioral Sciences. 2012;19:890–8.

- 44. McMahon SR, Iwamoto M, Massoudi MS, Yusuf HR, Stevenson JM, David F, et al. Comparison of e-mail, fax, and postal surveys of pediatricians. Pediatrics. 2003;111(4 Pt 1):e299–303. doi: 10.1542/peds.111.4.e299.

- 45. Howell SC, Quine S, Talley NJ. Ethics review and use of reminder letters in postal surveys: are current practices compromising an evidence-based approach? Med J Aust. 2003;178(1):43. doi: 10.5694/j.1326-5377.2003.tb05043.x.

- 46. Nakash RA, Hutton JL, Jorstad-Stein EC, Gates S, Lamb SE. Maximising response to postal questionnaires–a systematic review of randomised trials in health research. BMC Med Res Methodol. 2006;6:5. doi: 10.1186/1471-2288-6-5.

- 47. Bailey P, Warren S, Buske L. Highlights of the 2002 Canadian Neurological Society (CNS) manpower survey. Can J Neurol Sci. 2005;32(4):425–32. doi: 10.1017/S0317167100004406.