Abstract

Children living in rural and underserved areas experience decreased access to health care services and are often diagnosed with autism at a later age compared to those living in urban or suburban areas. This study examines the utility and validity of an ASD assessment protocol conducted via video conferencing (VC). Participants (n = 17) included families with young children (2.5–6 years) requesting an evaluation for ASD in an interdisciplinary clinic. We randomly assigned families to complete an additional evaluation either in-person or via VC prior to their clinic appointment and compared diagnostic impressions to their interdisciplinary clinic evaluation. Results demonstrate excellent inter-rater agreement on diagnoses between clinicians in the VC setting and the interdisciplinary team, which suggests VC may be a viable method to increase access to autism diagnostic services, and ultimately early intervention, for families in rural and underserved areas.

Keywords: autism, telemedicine, underserved populations, assessment, evaluation

Autism Spectrum Disorder (ASD) affects 1 in 68 children across all racial, ethnic, and socioeconomic groups and is four to five times more likely to occur in boys than girls (Centers for Disease Control and Prevention [CDC], 2014). However, there is variability in diagnostic procedures and age of diagnosis for these disorders within and among states. Delays in assessment and diagnosis result in postponed early intervention services critical for improved outcomes and, with an increase in the prevalence of ASD, a potential drain on limited financial resources if diagnosis is past the age when interventions can have the most impact. Great improvement across multiple outcomes (e.g., IQ, adaptive behavior, autism characteristics) has been documented when treatment is started prior to 30 months of age (Dawson et al. 2008).

The American Academy of Pediatrics (AAP) recommends screening for ASD at 18 and 24 months as a part of medical surveillance in a medical home (AAP 2006) and timely, interdisciplinary evaluation for any child with a positive screen or who is otherwise suspected of having an ASD. Although early detection and intervention are critical, availability of and access to professionals who provide diagnostic and clinical services for children with ASD are limited (Newschaffer, Falb, & Gurney 2005), particularly in rural areas (Carbone, Farley, & Davis 2010). Children living in rural and underserved areas are diagnosed at a later age compared to those in urban or suburban areas (Lauritsen et al. 2014) and have decreased access to health care services (Mandell et al. 2005). The 2013 Interagency Autism Coordinating Committee (IACC) recently shifted their attention to removing barriers to accessing services, reducing inequities, and promoting earlier detection and intervention for individuals in rural and underserved areas. The American Academy of Neurology (2000) and the AAP (2006) recommend using structured and validated observation measures, a parent interview for autism, and the integration of medical history, record review, developmental assessments, and clinical observation. These guidelines promote quality and consistency in diagnosis but limit the availability of qualified professionals in community settings. This is particularly true when children live in rural areas at significant geographic distance from university medical centers that often provide such models, which makes time, travel, and expense critical barriers to service access.

In order to support improved outcomes for children with ASD, families should be afforded equal opportunities to access high quality health care and education. Changes in clinical practice are needed to address this inequity and promote earlier detection and intervention for these families. One possible solution is using video conferencing (VC) to aid in assessment and diagnostic procedures, which provides a means to reach families in rural and underserved areas. Several studies (Marcin et al. 2004; Farmer & Muhlenbruck 2001) demonstrated that the use of VC technology improved access to pediatric subspecialty care for children living in rural areas and suggest telemedicine (i.e., use of VC in health care) may be a viable means to provide services for children with ASD living in rural and underserved areas (Marcin et al. 2004; Nesbitt et al. 2006). Recent research has investigated the use of VC in providing services to children with ASD, including ABA, parent training, and behavior assessment and treatment (Heitzman-Powell et al. 2013; Barretto et al. 2006), as well as inter-rater agreement on autism measures over VC (Reese et al. 2012). The goal of this paper is to present preliminary findings from a larger randomized, controlled study examining the utility and validity of VC as a means to evaluate children for ASD, providing empirical evidence for alternative procedures and technology that would address the need outlined by the IACC. We hypothesized that 1) we can coach families via VC to correctly complete assessment activities with their child, and 2) clinicians can make an accurate diagnosis of ASD in young children.

Methods

Participants and Recruitment

We recruited families with children between 2.5 and 6 years old requesting an evaluation for an ASD through one of our interdisciplinary evaluation clinics (see Table 1). Participants met the following inclusion criteria: 1) sought an evaluation for possible ASD through our clinic, 2) spoke English as primary language with no interpreter services required, and 3) agreed to complete the experimental evaluation in addition to their scheduled visit. We contacted 105 potential participants in a one-year period. We spoke with 68 families (65%) in detail about the study; 38% of these (n = 26) scheduled a study visit, and 9 of those who scheduled ultimately canceled. Of the 62% that declined participation, the most common reasons (see Table 2) reflected barriers related to time (e.g., missed work/school) or accessing needed supports that would allow participation (e.g., transportation, child care for other children). Participants traveled an average of 67.2 miles (range = 11.2 – 202 miles) in our current study. We present preliminary findings for the 17 participants who have completed all study procedures to date.

Table 1.

Participant Demographic Information: Total and by Condition

| Condition | Age (Years): Average | Age (Years): Range | Sex: Male | Sex: Female | Race/Ethnicity: White | Race/Ethnicity: African-American | Race/Ethnicity: Hispanic | Time Between Visits (Days): Average | Time Between Visits (Days): Range |
|---|---|---|---|---|---|---|---|---|---|
| InP & VC | 4.47 | 2.4 – 5.8 | 70.6% | 29.4% | 88.2% | 5.9% | 5.9% | 24.6 | 1 – 47 |
| InP (n = 10) | 4.12 | 2.4 – 5.3 | 60.0% | 40.0% | 90% | 10% | 0% | 21.2 | 1 – 47 |
| VC (n = 7) | 4.96 | 2.7 – 5.8 | 85.7% | 14.3% | 85.7% | 14.3% | 0% | 29.6 | 12 – 42 |

Table 2.

Reasons given by those who declined to participate in the study

| Reason | Percentage (n = 37) |
|---|---|
| “Busy” or “Not interested” | 30% |
| No reason given | 30% |
| Cannot take time away from school and/or work | 19% |
| Transportation/Accessibility issues (i.e. too far, no ride) | 27% |
| Relocation or custody issues (i.e. divorce, foster care) | 14% |
| Need to care for other children or family members | 19% |

Note: Some families provided multiple reasons for their inability to participate, which are counted in multiple categories.

Procedures and Protocol Development

Formative procedures

We completed study procedures as formative assessment with five “practice” families prior to beginning the study. Practice families provided feedback on study procedures, including feedback on the content, length, and utility of watching a video model of activities they would do with their child in the course of their visit. We adapted the protocol procedures based on their feedback. Research clinicians completed the parent integrity checklist (Appendix available upon request) for each family to determine fidelity of completing the modified Autism Diagnostic Observation Schedule, Second Edition (ADOS-2; Lord et al. 2012) activities with their child. The parent integrity checklist serves an important dual function in that it demonstrates some standardization of play-based activities and elicits conditions under which social and communicative behaviors may be observed. This was not a measure of fidelity for ADOS-2 administration. Research clinicians achieved greater than 85% agreement (range = 89 – 92%; M = 90.7%) on two of three practice family study visits prior to beginning evaluations with actual participants.

Inter-rater Agreement Training

All members of the research team completed reliability practice training sessions and achieved adequate inter-rater agreement for all measures prior to evaluating study participants. Inter-rater agreement training addressed consistent scoring of ASD measures (algorithm items of the Autism Diagnostic Interview–Revised [ADI-R; Le Couteur, Lord, & Rutter 2003] and ADOS-2 Modules 1 and 2), rating ASD symptoms on the DSM-5, and determining diagnoses of ASD and/or any co-occurring disorders (if applicable). Each research team (i.e., pair of research clinicians in the same setting) made independent ratings on DSM-5 criteria and diagnosis. Training procedures included team ratings and multiple practice sessions and concluded with group discussions to improve the reliability of symptom and diagnosis ratings. Research clinicians trained with the goal of at least two of three consecutive sessions at 80% or above agreement on all DSM-5 criteria and diagnosis, resulting in a mean of 90.7% agreement (range = 89 – 92%) on two of three practice sessions. We monitored inter-rater agreement throughout the study by examining agreement between clinicians in the same setting (InP or VC) and continued training through group discussions of measures and criteria following each participant.

Study Procedures

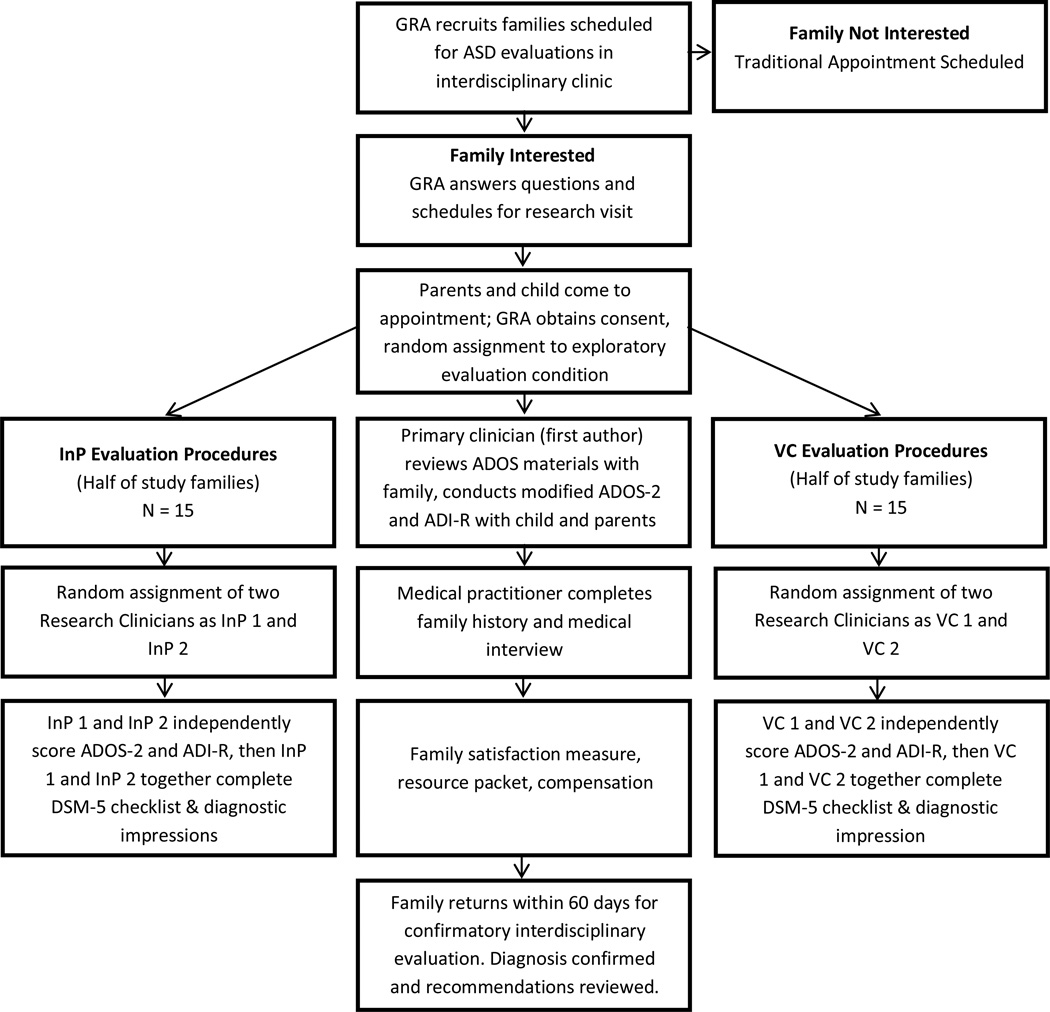

All families completed the experimental study evaluation prior and in addition to their scheduled clinic visit. We utilized simple randomization procedures and a random number generator to assign an equal number of families to in-person administration (InP) of assessments or administration of assessment procedures through the use of video conferencing (VC). All families completed identical study visit procedures (see Figure 1) and assessment protocol in their assigned condition with a pair of research clinicians observing and scoring measures in each setting for all participants. To minimize variability in test administration, the first author directed all families through evaluation activities, resulting in a total of four research clinicians (two VC, two InP), including the first author, observing and scoring assessments for each family during experimental study visits. To limit possible observer effects, we randomly assigned families to a pair of research clinician observers, resulting in equal opportunities for clinician pairs within the InP and VC conditions.
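The allocation step described above can be illustrated with a minimal sketch. It assumes a shuffled, balanced allocation, which is only one reading of "assign an equal number of families"; the text does not specify the procedure further. The function name `assign_conditions`, the family IDs, and the seed are hypothetical.

```python
import random


def assign_conditions(family_ids, seed=None):
    """Randomly split families into InP and VC groups of (near-)equal size.

    Illustrative only: the shuffle-and-split scheme is an assumption,
    not the procedure documented by the study.
    """
    rng = random.Random(seed)  # a seeded generator keeps the allocation reproducible
    ids = list(family_ids)
    rng.shuffle(ids)
    half = len(ids) // 2
    # The first half of the shuffled list goes to InP, the remainder to VC.
    return {fid: ("InP" if i < half else "VC") for i, fid in enumerate(ids)}


# Hypothetical IDs for a planned sample of 30 families.
assignments = assign_conditions(range(1, 31), seed=2013)
```

With an odd number of families, this sketch places the extra family in the VC group.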

Figure 1.

Recruitment, Randomization, and Evaluation Procedures.

Following informed consent, study families completed a behavioral screening measure (e.g., Behavior Assessment System for Children – 2nd Edition (BASC-2)) and a developmental screening tool (e.g., Ages and Stages Questionnaire (ASQ)) prior to or at the start of their study visit. Families also provided available school records and medical histories for review by all research clinicians. The first author coached caregivers on completing modified ADOS-2 activities with their child. Research clinicians selected the appropriate module based on screening interviews, measures (e.g. ASQ), observations, and discussions with families upon arrival. Coaching procedures included a five- to ten-minute description of the rationale and purpose of the play-based assessment (e.g., “to elicit behaviors often related to ASD”), discussion of the hierarchy of presses (e.g., less to more specific prompts or presses), and viewing a sixteen-minute video modeling item-by-item instructions. The first author also instructed families to expect specific guidance and feedback throughout the assessment as to how to engage the child in the activities, and followed a script similar to the parent integrity checklist in order to provide consistent and reliable coaching. Our protocol is modeled on a published study (Reese et al. 2012) to accurately reflect evaluation procedures conducted by on-site interdisciplinary autism clinics and to provide adequate direction for families completing study activities. All research families completed assessments in the same room, which was connected through VC to another observation room within the same building. Conducting on-site simulations allowed for greater control than a community-based setting, which was important to establish this protocol’s efficacy. Videoconferencing equipment included high-definition monitors and cameras to provide the highest quality VC experience. 
Research clinicians in the VC setting controlled the camera throughout the experimental evaluation for optimal viewing angles during observations and activities.

Evaluation Procedures

The ASD evaluation consisted of several components, including a) a 20-minute observation of the child in an unstructured play setting with age-appropriate toys, b) coaching by the first author to facilitate modified ADOS-2 activities, c) structured interview using only the ADI-R algorithm items, d) medical and family history by a medical provider, e) feedback to families with a brief summary of observations and recommendations, and f) a satisfaction survey. We included the ADI-R in our protocol to obtain greater detail during the parent interview, which is a critical piece for evaluations conducted without face-to-face interactions with the child. To minimize interview time, we completed only the interview questions included in the algorithm. Although diagnostic impressions from the experimental procedures (InP or VC) were not shared with families, we provided a summary of the child’s strengths, areas to improve, brief recommendations, and information about infant, toddler, or early childhood services available in their area. The evaluation protocol and measures were identical for each research study participant. In the in-person (InP) condition, the research clinician directed participants through evaluation activities while in the same room as the families, and in the VC condition, families were directed through video-conferencing technology. Research clinicians independently scored ASD measures (ADOS-2 and ADI-R) for inter-rater item comparisons within and between settings. Then, each research clinician pair discussed measures and other available information and used clinical judgment to identify the DSM-5 criteria for ASD met by the child, primary diagnosis (ASD or no ASD), and any potential co-existing conditions.
We focused on DSM-5 criteria for diagnosis rather than cut scores on ASD measures for two reasons: 1) our primary research question is to examine inter-rater agreement with diagnoses made in clinical settings, and 2) we are examining the reliability of diagnostic evaluations for ASD through video-conferencing, which inherently limits the utility and social validity of relying on standardized assessments and strict cut scores. We compared diagnostic impressions and DSM-5 criteria for each family between research conditions (VC clinician ratings vs InP clinician ratings) and to the corresponding interdisciplinary confirmatory evaluation team when families returned for their regularly scheduled clinic visit (i.e., standard of care for ASD evaluations). This design allowed for randomization of participants to condition of administration (InP versus VC) yet permitted diagnostic ratings to be made in both VC and InP settings for all participants, resulting in data on diagnoses made in all settings (VC, InP, and confirmatory interdisciplinary clinic team) for the entire sample. The design also provides comparisons in DSM-5 criteria and diagnosis between InP and VC settings for individuals under the same evaluation conditions, which allows us to examine variability introduced by differences in day of visit and related environmental factors (e.g., parent response to measures, child behavior, number of clinicians present).

Interdisciplinary Confirmatory Evaluation

All families returned within 60 days (range = 1 – 47 days; M = 24.6 days) for their regularly scheduled evaluation in an autism clinic at the university medical center. The independent interdisciplinary team consisted of a psychologist, speech language pathologist, occupational therapist or physical therapist, and a medical professional who was not present during the study visit. The interdisciplinary team was blind to experimental results and used the standard-of-care evaluation procedures typically provided in our autism evaluation clinics. Evaluation procedures included the use of ASD interview measures (ADI-R or Childhood Autism Rating Scale [CARS]), play-based measures (ADOS, free play), developmental measures, medical history, and review of existing information (e.g., school records, previous evaluations). The team provided diagnostic impressions and a brief list of recommendations the day of the visit, with a descriptive report following three to four weeks later. The confirmatory evaluation team identified the DSM-5 criteria for ASD met by the child, primary diagnosis (ASD or no ASD), and any potential co-existing conditions so comparisons could be made with the experimental study visit.

Outcome Measures and Statistical Analyses

DSM-5 Symptoms

Research clinicians paired in the same setting (InP or VC) discussed all available assessment information to make consensus ratings about DSM-5 symptoms for ASD met by each child (see Table 3 for a summary of individual criteria). Clinicians rated each criterion as “yes” or “no” to indicate the symptom’s presence and used clinical judgment if caregiver interview and child observations resulted in contradictory information.

Table 3.

Percent Agreement across DSM-5 Criteria

| DSM Area | Criterion Description | InP v. VC (n = 14) | VC v. Clinic (n = 14) | InP v. Clinic (n = 17) |
|---|---|---|---|---|
| A | Social-emotional reciprocity | 85.7% | 64.3% | 70.6% |
| A | Nonverbal communication | 92.9% | 85.7% | 94.0% |
| A | Developing relationships | 85.7% | 78.6% | 76.5% |
| B | Stereotyped/repetitive speech, motor movements, or use of objects | 92.7% | 64.3% | 64.7% |
| B | Routines, rituals, resistance to change | 85.7% | 64.3% | 64.7% |
| B | Restricted, fixated interests | 78.6% | 57.1% | 52.9% |
| B | Hyper/hypo-reactivity to sensory input | 64.3% | 71.4% | 52.9% |
| Diagnosis | | 78.6% | 85.7% | 82.4% |

Note: Sample sizes vary due to missing data from technical difficulties for three participants.

Primary diagnosis

Research clinician pairs formulated a primary diagnosis for each child utilizing all information from the evaluation study visit (i.e., medical history, school records, BASC-2, developmental measure, ADOS-2, and ADI-R). Clinicians determined if the child met the DSM-5 diagnostic criteria for an ASD and identified potential co-existing conditions. Clinicians at the confirmation visit used independently obtained evaluation data to make ratings on DSM-5 symptoms and diagnosis. For DSM-5, primary diagnosis results in dichotomous data as “ASD” or “no ASD”.

Parent integrity for play-based activities

Research clinicians completed the parent integrity checklist (Appendix available upon request) for each family to determine fidelity of completing the modified ADOS-2 activities with their child (see description under Formative procedures). The research team created this measure based on administration guidelines in the ADOS-2 manual as a means to structure play activities and monitor variables that could contribute to variability in diagnostic reliability but not as a measure of standardized administration. The checklists are specific to the module and include a hierarchy of presses within each item on the ADOS-2. Raters determine whether families correctly complete critical steps, or presses, within each activity (yes or no), resulting in a summary item for each activity (e.g., Response to Name). Summary items reflect overall integrity (% correctly completed) of specific activities, including categories of No (≤ 50%), Mostly (51–79%), and Yes (≥ 80%). Integrity data include total steps completed with integrity as well as a summary of activities completed with integrity.
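The scoring of the parent integrity checklist can be sketched as follows. The function names and the example counts are hypothetical, and the handling of values that fall between the stated bands (e.g., 50.5% or 79.5%) is an assumption, since the categories above are given only as whole-number ranges.

```python
def press_integrity(correct, incorrect):
    """Percent of individual presses completed correctly."""
    total = correct + incorrect
    if total == 0:
        raise ValueError("no presses recorded for this activity")
    return 100.0 * correct / total


def activity_category(percent_correct):
    """Map an activity's percent-correct score to the summary label.

    Assumption: anything above 50% and below 80% is treated as "Mostly".
    """
    if percent_correct >= 80:
        return "Yes"
    if percent_correct > 50:
        return "Mostly"
    return "No"


# Hypothetical family: 40 presses completed correctly, 4 incorrectly.
overall = press_integrity(correct=40, incorrect=4)
label = activity_category(overall)
print(f"{overall:.1f}% -> {label}")
```

Because the number of required presses depends on the child's responses, the denominator is computed per family and is not a fixed checklist length.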

Data Analysis

We computed descriptive statistics to examine preliminary data on the utility and reliability of our VC-based protocol for completing ASD evaluations. To determine diagnostic accuracy, we compared the diagnosis of the experimental research team for each participant in both research conditions (InP and VC) to the diagnosis of the confirmatory interdisciplinary evaluation team for agreement or disagreement. We summarized the agreement/disagreement scores across the participants to yield average percent agreement scores between each pair of settings (VC-interdisciplinary confirmatory team, InP-interdisciplinary confirmatory team, and VC-InP). Percent agreement scores equal to or greater than 80% are considered “excellent,” scores between 70% and 80% are considered “adequate,” and scores under 70% are considered “inadequate.” We computed specificity and sensitivity for each experimental condition with respect to the standard of care (confirmatory interdisciplinary evaluation clinic team) and present raw data in Table 4. We also computed average inter-rater agreement for individual DSM-5 ASD criteria between each pair of conditions. We examined initial evidence for fidelity of our protocol by collecting data on parents’ integrity when completing the play-based activities with their child. Parent integrity is calculated as the percent of individual presses correctly completed (i.e., correctly completed presses divided by total number of presses) and by the extent to which parents completed specific activities (e.g., percentage of items for which the correct hierarchy of presses was followed vs. mostly followed).
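As an illustration of the percent agreement calculation and the interpretive bands described above, here is a minimal sketch. The function names are hypothetical, and the two rating lists are constructed only to match the VC cell counts reported in Table 4; the per-child ordering is invented for the example.

```python
def percent_agreement(ratings_a, ratings_b):
    """Percent of cases on which two sets of ratings match."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("rating lists must be non-empty and of equal length")
    matches = sum(a == b for a, b in zip(ratings_a, ratings_b))
    return 100.0 * matches / len(ratings_a)


def agreement_label(pct):
    """Apply the bands used in this paper: >= 80 excellent, 70-80 adequate, < 70 inadequate."""
    if pct >= 80:
        return "excellent"
    if pct >= 70:
        return "adequate"
    return "inadequate"


# 14 children: 5 agreed ASD, 1 VC-only ASD, 1 clinic-only ASD, 7 agreed no ASD.
vc_team = ["ASD"] * 5 + ["ASD"] + ["no ASD"] + ["no ASD"] * 7
clinic = ["ASD"] * 5 + ["no ASD"] + ["ASD"] + ["no ASD"] * 7

pct = percent_agreement(vc_team, clinic)
print(f"{pct:.1f}% ({agreement_label(pct)})")
```

The same two functions apply unchanged to the yes/no ratings for each individual DSM-5 criterion.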

Table 4.

Diagnostic Accuracy, Sensitivity, and Specificity.

| Experimental Team ASD Rating | Confirmatory Evaluation Team Actual Diagnosis: ASD | Confirmatory Evaluation Team Actual Diagnosis: No ASD | Accuracy | Specificity | Sensitivity |
|---|---|---|---|---|---|
| In-Person (n = 17) | | | 82% | 78% | 88% |
| Yes | 7 | 2 | | | |
| No | 1 | 7 | | | |
| VC (n = 14) | | | 86% | 88% | 83% |
| Yes | 5 | 1 | | | |
| No | 1 | 7 | | | |

Base rate of ASD diagnosis in sample (n = 17) = 47%.

Note: Sample sizes vary due to missing data from technical difficulties for three participants.
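The summary statistics in Table 4 follow from the raw cell counts, as the short sketch below shows. The function name `diagnostic_metrics` is hypothetical; the counts are those in Table 4, with the confirmatory team's diagnosis treated as the reference standard.

```python
def diagnostic_metrics(tp, fp, fn, tn):
    """Accuracy, sensitivity, and specificity from a 2x2 table.

    tp: both teams rate ASD          fp: experimental ASD, clinic no ASD
    fn: experimental no ASD, clinic ASD   tn: both teams rate no ASD
    """
    total = tp + fp + fn + tn
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }


in_person = diagnostic_metrics(tp=7, fp=2, fn=1, tn=7)
vc = diagnostic_metrics(tp=5, fp=1, fn=1, tn=7)

for name, result in (("InP", in_person), ("VC", vc)):
    print(name, {k: f"{v:.1%}" for k, v in result.items()})
```

For the VC condition, sensitivity works out to 5/6, or about 83.3%, which matches the 83% given in the Results text.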

Results

Diagnostic and Criteria Agreement

Table 4 shows the accuracy, sensitivity, and specificity for each research condition as compared to the confirmation clinic team. Research clinicians observing and rating in the in-person (InP) condition demonstrated excellent accuracy in diagnosis (82%), with good specificity (78%) and excellent sensitivity (88%). Research clinicians observing and rating in the video conference (VC) condition demonstrated excellent accuracy (86%), specificity (88%), and sensitivity (83%) for diagnosis. VC accuracy and specificity were equal to or better than the results comparing the InP condition to the confirmatory interdisciplinary evaluation clinic, while VC sensitivity was slightly lower (83% vs. 88%). Table 4 includes raw data in each cell to further examine accuracy. Data in the InP condition show one false negative (miss) and two false positives, while data in the VC condition show one false negative (miss) and one false positive. In this sample, the base rate for ASD diagnosis was 47%, meaning the confirmatory interdisciplinary evaluation team made an ASD diagnosis in 8 out of 17 participants. The proportion of participants rated as ASD by both the experimental and confirmatory teams was somewhat similar in the InP (7/17 = 41%) and VC (5/14 = 36%) conditions. When evaluating agreement on individual diagnostic criteria, agreement ranged from 52.9% to 92.9% (see Table 3). Generally, both InP and VC conditions demonstrated similar agreement with the confirmatory interdisciplinary evaluation clinic; however, agreement between the experimental evaluation conditions (InP and VC groups) on individual diagnostic criteria was often higher than agreement between the research conditions and the interdisciplinary confirmation clinic.

Parent Integrity

Families in both conditions showed adequate fidelity of completing activities with their child (see Table 5), correctly performing a similar percentage of presses in both the VC (90.9%) and InP (91.0%) conditions. We examined the integrity of parents completing the modified ADOS-2 activities with their child in two ways. First, we calculated the percent of individual presses correctly completed (i.e., correctly completed presses divided by the total number of presses [correct + incorrect]). The number of presses varies across participants, as the number and type of presses required depend on the child’s response to initial presses. For example, in the Response to Name item, children who respond immediately to the first press do not require further presses. The number of presses also varies based on the module completed with each child. Parents completing activities from Module 1 (n = 5) have a maximum of 57 presses across 10 items, whereas those completing activities from Module 2 (n = 10) have a maximum of 56 presses across 12 items. Therefore, the number of required presses (M = 43.7; SD = 6.30) differed for each child. There was little difference in the number of presses that should have been administered between Module 1 (M = 44.2; SD = 6.10) and Module 2 (M = 43.3; SD = 6.58) and a small difference in the percentage of correctly administered presses between Module 1 (M = 89.4%) and Module 2 (M = 91.8%). Next, we examined parent integrity by computing the percentage of items (activities) in which parents completed or mostly completed the correct hierarchy of presses. These calculations resulted in similar percentages in the VC and InP conditions (see Table 5). Again, results indicate slightly better integrity on ADOS-2 Module 2 items as compared to Module 1.

Table 5.

Parent Integrity of Activities with Child

| Group | Total Correct Individual Presses: Percent | Total Correct Individual Presses: SD | Correct Hierarchy of Presses: Percent | Correct Hierarchy of Presses: SD | Correct and Mostly Correct Hierarchy of Presses: Percent | Correct and Mostly Correct Hierarchy of Presses: SD |
|---|---|---|---|---|---|---|
| Condition | | | | | | |
| Live (InP; n = 10) | 91.0% | 5.80 | 77.9% | 15.03 | 94.6% | 7.14 |
| ITV (VC; n = 5) | 90.9% | 10.90 | 80.4% | 18.15 | 93.5% | 10.85 |
| Live & ITV | 91.0% | 7.45 | 78.6% | 15.50 | 93.5% | 8.10 |
| Modified ADOS-2 | | | | | | |
| Module 1 | 89.4% | 3.23 | 73.6% | 8.39 | 81.3% | 17.9 |
| Module 2 | 91.8% | 8.90 | 90.6% | 8.40 | 95.0% | 8.10 |

Note: The total reflects correctly completed individual items or presses, while correct hierarchy reflects integrity of completing activities (summary of individual presses required per activity).

Discussion

Efforts targeting screening and diagnosis are supported by improved outcomes following early intervention for children with ASD (Dawson et al. 2008), but families living in rural or underserved areas experience difficulties accessing qualified professionals to make diagnoses and provide adequate intervention services (Carbone et al. 2010; Newschaffer, Falb, & Gurney 2005). Thus, we are charged to challenge existing clinical practices and identify alternative procedures to provide families in rural and underserved areas quality health care and equitable opportunities for improved outcomes. Cost-effective, readily available technologies used in VC provide a venue for delivering quality health care services to these families and are increasingly used for health and mental health services (e.g., telepsychiatry). Our preliminary data support our hypothesis that we can coach families via VC to correctly complete assessment activities with their child and provide preliminary evidence that clinicians can make an accurate diagnosis of ASD in young children when evaluation procedures are completed through the use of VC.

Parents correctly completed the play-based activities with their child with coaching from the first author prior to and during the activities. Additional participants are needed to determine what factors contribute to the fidelity of completing these activities. Initial analyses suggest possible differences between the Module 1 and Module 2 activities. However, these differences could be explained by variables related to selecting the module (e.g., age, language level). Anecdotal observations suggest attending to the video model is an important component of coaching families. We noticed that parents who paid close attention to examples in the video required fewer prompts or repeated trials during the activities, while parents who appeared distracted (e.g., asked unrelated questions, looked away from screen) needed more coaching throughout activities. Research clinicians in the VC condition demonstrated excellent inter-rater agreement on diagnosis (85.7%) when compared to the confirmatory evaluation team. We found more variability for inter-rater agreement on specific ASD symptoms in the DSM-5 criteria. Although inter-rater agreement between the VC condition and the confirmatory evaluation clinic would be considered inadequate on four of the seven specific DSM-5 criteria, disagreement did not impact agreement on overall diagnosis. For a diagnosis of ASD, clinicians must endorse all symptoms in category A and at least two symptoms from category B (see Table 3). We note better inter-rater agreement for symptoms in category A (64.3–85.7%) as compared to category B (57.1–71.4%); therefore, differences in category B are less likely to impact overall diagnosis. Future analyses with the final sample will examine differences in inter-rater agreement between individual criteria and variables that potentially contribute to overall agreement on diagnosis, including measures of parent integrity.
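The decision rule stated above (all category A symptoms plus at least two category B symptoms) can be written as a small check. This is a sketch of the symptom-count rule only; it does not model clinical judgment or any other DSM-5 requirement, and the function name and example ratings are hypothetical.

```python
def meets_symptom_rule(category_a, category_b):
    """Return True when all 3 category A criteria and at least 2 of the
    4 category B criteria are endorsed (lists of True/False ratings)."""
    if len(category_a) != 3 or len(category_b) != 4:
        raise ValueError("expected 3 category A and 4 category B ratings")
    return all(category_a) and sum(category_b) >= 2


# Two hypothetical rating teams disagree on one category B symptom
# yet reach the same overall outcome.
team_1 = meets_symptom_rule([True, True, True], [True, True, True, False])
team_2 = meets_symptom_rule([True, True, True], [True, True, False, False])
print(team_1, team_2)
```

The example shows why a single disagreement on a category B criterion need not change the diagnosis, whereas any disagreement on a category A criterion can.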

Limitations and Future Directions

We acknowledge limitations in our study and in the interpretation of these data. As these are preliminary data from a larger study, our sample size is small and does not allow for statistical analyses that could provide more information about variables that may impact the reliability and validity of our protocol. Stronger agreement between clinicians in the exploratory conditions (InP vs VC) for DSM-5 criteria is difficult to interpret and could be attributed to variability in child behavior between visits or to how the research clinicians and confirmatory clinician teams interpret and endorse DSM-5 symptoms. Although we did complete initial training on DSM-5 criteria and diagnosis and inter-rater agreement, it is still possible clinician teams interpret information differently. Lastly, all families completed exploratory evaluation procedures within the university-based setting under controlled conditions. Future studies should evaluate the integrity of completing the protocol within rural, community-based VC settings and compare accuracy of diagnosis with the in-person, interdisciplinary confirmation clinic.

The results of this paper are preliminary and focus on the parent integrity of completing the play-based activities with their child and on diagnostic agreement between raters in the VC condition compared to the confirmatory interdisciplinary evaluation clinic (standard of care). We will conduct additional analyses with our final sample (n = 30), including binomial tests to determine if agreement is significantly different from .80 within each of the conditions, kappa coefficients to illustrate strength of agreement in another metric, and exploratory analyses to identify potential variables that influence both parent fidelity and diagnostic accuracy. These findings will inform refinement of our protocol and future studies evaluating VC as a means to conduct ASD evaluations. We are optimistic about the preliminary findings in this paper and see VC as a promising practice for evaluating children suspected of ASD in rural and underserved areas to promote early identification and intervention.
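For readers unfamiliar with the planned metrics, the sketch below shows how Cohen's kappa and an exact binomial test against .80 could be computed from a 2x2 agreement table. It is an illustration, not the authors' analysis: the function names are hypothetical, the two-sided p-value uses the "outcomes no more likely than the observed one" convention (one of several in use), and the example counts are the preliminary VC cells from Table 4, not the final sample.

```python
from math import comb


def cohens_kappa(tp, fp, fn, tn):
    """Chance-corrected agreement for two raters on a yes/no diagnosis."""
    n = tp + fp + fn + tn
    observed = (tp + tn) / n
    # Chance agreement expected from each rater's marginal totals.
    expected = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n**2
    return (observed - expected) / (1 - expected)


def binomial_two_sided_p(k, n, p0=0.80):
    """Exact two-sided p-value for k agreements out of n under H0: p = p0."""
    pmf = [comb(n, i) * p0**i * (1 - p0) ** (n - i) for i in range(n + 1)]
    cutoff = pmf[k] * (1 + 1e-9)  # small tolerance for floating-point ties
    return min(1.0, sum(pr for pr in pmf if pr <= cutoff))


# Preliminary VC v. clinic counts: 12 agreements out of 14 cases.
kappa_vc = cohens_kappa(tp=5, fp=1, fn=1, tn=7)
p_vc = binomial_two_sided_p(k=12, n=14, p0=0.80)
print(f"kappa = {kappa_vc:.2f}, binomial p = {p_vc:.3f}")
```

With samples this small, any such test has limited power, which is consistent with deferring these analyses to the final sample.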

Acknowledgments

Research reported in this publication was supported by the National Institute of Mental Health of the National Institutes of Health under Award Number R21MH098133. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. This study is based on pilot data from a previous study (Reese, Jamison, Wendland, Fleming, Braun, Schuttler, & Turek 2013) supported by a Department of Defense Autism Concept grant (number W81XWH-08-1-0233).

Contributor Information

R. Matthew Reese, Center for Child Health and Development, University of Kansas Medical Center

T. Rene Jamison, Center for Child Health and Development, University of Kansas Medical Center

Matt Braun, Center for Child Health and Development, University of Kansas Medical Center

Maura Wendland, Center for Child Health and Development, University of Kansas Medical Center

William Black, University of Missouri—Kansas City

Megan Hadorn, University of Kansas

Eve-Lynn Nelson, Center for Telemedicine and Telehealth, University of Kansas Medical Center

Carole Prather, Center for Child Health and Development, University of Kansas Medical Center

References

- American Academy of Neurology. Practice parameter: Screening and diagnosis of autism: Report of the Quality Standards Subcommittee of the American Academy of Neurology and the Child Neurology Society. Neurology. 2000;55(4):468–479. doi: 10.1212/wnl.55.4.468.

- American Academy of Pediatrics. The AAP Autism Screening Guidelines: Integrating Screening Guidelines in Primary Care Practice [PowerPoint slides]. 2006. Retrieved from www2.aap.org/qualityimprovement/quiin/PreSIP/Autism.pptx.

- American Academy of Pediatrics. Developmental Surveillance and Screening. Centers for Disease Control and Prevention Recommendations and Guidelines. 2006. Retrieved from http://www.cdc.gov/ncbddd/autism/hcp-recommendations.html.

- Barretto A, Wacker DP, Harding J, Lee J, Berg WK. Using telemedicine to conduct behavioral assessments. Journal of Applied Behavior Analysis. 2006;39(3):333–340. doi: 10.1901/jaba.2006.173-04.

- Carbone PS, Farley M, Davis T. Primary care for children with autism. American Family Physician. 2010;81(4):453–460.

- Centers for Disease Control and Prevention, Morbidity and Mortality Weekly Report (MMWR). Prevalence of autism spectrum disorder among children aged 8 years—Autism and Developmental Disabilities Monitoring Network, 11 sites, United States, 2010. Surveillance Summaries. 2014;63(2):1–21. Retrieved from http://www.cdc.gov/mmwr/preview/mmwrhtml/ss6302a1.htm.

- Dawson G. Early behavioral intervention, brain plasticity, and the prevention of autism spectrum disorder. Development and Psychopathology. 2008;20(3):775–803. doi: 10.1017/S0954579408000370.

- Interagency Autism Coordinating Committee (IACC) Interagency Autism Coordinating Committee Strategic Plan for Autism Spectrum Disorder Research—2013 Update. 2013 Retrieved from http://iacc.hhs.gov/strategic-plan/2013/index.shtml.

- Farmer JE, Muhlenbruck L. Telehealth for children with special health care needs: Promoting comprehensive systems of care. Clinical Pediatrics. 2001;40(2):93–98. doi: 10.1177/000992280104000205.

- Heitzman-Powell LS, Buzhardt J, Rusinko LC, Miller TM. Formative evaluation of an ABA outreach training program for parents of children with autism in remote areas. Focus on Autism and Other Developmental Disabilities. 2013.

- Lauritsen MB, Astrup A, Pedersen CB, Obel C, Schendel DE, Schieve L, Yeargin-Allsopp M, Parner ET. Urbanicity and autism spectrum disorders. Journal of Autism and Developmental Disorders. 2014;44:394–404. doi: 10.1007/s10803-013-1875-y.

- Le Couteur A, Lord C, Rutter M. The Autism Diagnostic Interview-Revised (ADI-R). Los Angeles, CA: Western Psychological Services; 2003.

- Lord C, Rutter M, DiLavore PC, Risi S, Gotham K, Bishop S. Autism Diagnostic Observation Schedule, second edition (ADOS-2) manual (Part 1): Modules 1–4. Torrance, CA: Western Psychological Services; 2012.

- Marcin JP, Ellis J, Mawis R, Nesbitt TS, Dimand RJ. Using telemedicine to provide pediatric subspecialty care to children with special health care needs in an underserved rural community. Pediatrics. 2004;113:1–6. doi: 10.1542/peds.113.1.1.

- Mandell DS, Ittenbach RF, Levy SE, Pinto-Martin JA. Disparities in diagnoses received prior to a diagnosis of autism spectrum disorder. Journal of Autism & Developmental Disorders. 2007;37:1795–1802. doi: 10.1007/s10803-006-0314-8.

- Nesbitt TS, Rogers SJ, Rich BA, Anders TF, Yellowlees PM, Brown JR, Keast PR. Enhancing mental health services to children with autism in rural communities: Guidelines for using telehealth to extend autism outreach. Sacramento, CA: MIND Institute, UC Davis; 2006.

- Newschaffer CJ, Falb MD, Gurney JG. National autism prevalence trends from United States special education data. Pediatrics. 2005;115(3):e277–e282. doi: 10.1542/peds.2004-1958.

- Reese RM, Jamison R, Wendland M, Fleming K, Braun MJ, Oeth Schuttler J, Turek J. Evaluating interactive videoconferencing for assessing symptoms of autism. Telemedicine & e-Health. 2013;19(9):671–677. doi: 10.1089/tmj.2012.0312.